Abstract

Pediatric brain tumors are the second most common type of childhood cancer, accounting for one in four childhood cancers. Surgical resection remains the most common treatment for brain tumors. To assess tumor margins intraoperatively, surgeons must send tissue samples for biopsy, which is time-consuming and not always accurate or informative. Snapshot hyperspectral imaging (sHSI) cameras capture scenes beyond the human visual spectrum and can provide real-time guidance; here, we aim to use sHSI to segment healthy brain tissue from lesions in pediatric patients undergoing brain tumor resection. With institutional review board approval (Pro00011028), 139 red-green-blue (RGB), 279 visible, and 85 infrared sHSI images were collected from four subjects using a system integrated into an operating microscope. A random forest classifier was used for data analysis. The RGB, infrared sHSI, and visible sHSI models achieved average intersection over union (IoU) values of 0.76, 0.59, and 0.57, respectively. The visible sHSI tumor segmentation model achieved the highest specificity (0.996), followed by the infrared and visible sHSI tissue models (0.93 and 0.91, respectively). Despite the small dataset, which reflects the limited number of pediatric cases, our study used sHSI technology to successfully segment healthy brain tissue from lesions with high specificity during pediatric brain tumor resection.

Keywords: pediatric brain tumor, neurosurgery, snapshot hyperspectral imaging, random forest, segmentation

1. Introduction

Brain tumors are the most common solid tumors in children and the leading cause of cancer-related death in children worldwide [1]. The main symptoms include headaches, seizures, nausea, drowsiness, and macrocephaly. When left untreated, they can lead to coma or death. When planning treatment, neurosurgeons need to know the tumor type, grade, category, and location. There are two types of tumors, benign and malignant [2]: benign tumors are non-cancerous and grow slowly, whereas malignant tumors are aggressive. The grade refers to the aggressiveness of the tumor cells; a higher grade indicates a more malignant tumor [2]. Because tumors may progress to higher grades, early treatment is critical. Tumors are also categorized as primary or secondary (metastatic): primary tumors originate in the brain, whereas metastatic tumors originate elsewhere in the body and spread to the brain [2]. Finally, the tumor location, which is determined by brain imaging (CT or MRI) [3], is important for planning the type of surgery required for removal or biopsy.

The current intraoperative standard for evaluating tumor margins is to send small tissue samples to the pathology department for biopsy. This is time-consuming and prolongs surgery, because the results are not available in real time and are often not definitive. As a result, surgeons usually have to make real-time decisions based on the appearance of the tissues, including judging what is likely tumor versus normal brain tissue. Tumors, especially low-grade tumors, often appear indistinguishable from normal brain tissue. Snapshot hyperspectral imaging (sHSI) can potentially identify tumor margins in real time and could thus help achieve a greater extent of tumor resection while minimizing morbidity. In addition, it could be used to teach less-experienced surgeons to distinguish tumors from normal brain tissue [4].

Hyperspectral imaging (HSI) is an imaging technique that captures and processes light across a wide spectral range spanning visible and infrared wavelengths. Unlike a conventional RGB image, which records only three color channels (red, green, and blue), HSI captures a spectrum at each pixel of the image. These data form a three-dimensional hyperspectral data cube containing both spatial and spectral information [5]. This rich spectral information allows HSI to identify materials and objects with distinctive, high-resolution spectral properties [6,7], because different materials exhibit different light reflection, absorption, and scattering responses [8]. Although HSI was originally developed for remote sensing [9,10], it is now widely used in medical imaging because it is a noninvasive modality that collects spatial and spectral information from tissues [6].
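As a minimal illustration of the data cube described above, the sketch below stores a hyperspectral image as a NumPy array indexed by (row, column, band). The 4 x 5 x 16 size and the random values are arbitrary assumptions chosen only for demonstration; they are not the dimensions of our camera's output.

```python
import numpy as np

# Hypothetical dimensions for illustration only: a 4 x 5 pixel scene with 16 bands.
height, width, n_bands = 4, 5, 16

rng = np.random.default_rng(0)
# A hyperspectral cube stores one intensity value per (row, column, band).
cube = rng.random((height, width, n_bands))

# A conventional RGB image is the special case with only 3 bands.
rgb = rng.random((height, width, 3))

# The full spectrum of a single pixel is a 1-D vector with one value per band.
pixel_spectrum = cube[2, 3, :]

# A single band, in contrast, is an ordinary 2-D grayscale image.
band_image = cube[:, :, 0]

print(pixel_spectrum.shape)  # (16,)
print(band_image.shape)      # (4, 5)
```

Spectral analysis works along the last axis (one pixel, many bands), whereas spatial analysis works on the first two axes (one band, many pixels).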

Common uses of HSI in medical imaging include identifying different types of tissues and cancers [11,12,13], monitoring treatment responses [6], and providing surgical guidance [14]. This is possible because HSI provides detailed information on tissue properties and biochemical processes that cannot be visualized using traditional imaging or at visible wavelengths. More specifically, in cancer, HSI can detect biochemical and morphological changes in tissues, which aids diagnosis [14]. Additionally, HSI spectral signatures help differentiate cancerous from normal tissues [13].

Furthermore, HSI can be used intraoperatively for real-time guidance in identifying tumor margins, enabling more accurate and complete tumor removal [13]. Significant advances have also been made in machine learning-based medical image analysis, including tumor diagnosis. Shokouhifar et al. [15] embedded a three-stage deep learning ensemble model in a camera scanning tool to measure arm volume in patients with lymphedema; the model performed very well and allowed patients to be measured inexpensively and noninvasively. Another use of machine learning in this area is classification: Veeraiah et al. [16] combined mayfly optimization with a generative adversarial network to classify different types of leukemia from blood smear images. Other advances in machine learning-based tumor segmentation have also been reported [17,18], including brain tumor segmentation [19,20,21,22]. Kalaivani et al. [23] used machine learning to segment brain tumors in MRI images. The images were first denoised to remove irrelevant information and improve image quality; features were then extracted, and three methods, fuzzy C-means clustering (FCM), K-nearest neighbors (KNN), and K-means clustering, were used to classify regions of the MRI images as tumor or non-tumor. These methods achieved segmentation accuracies of 98.97%, 89.96%, and 79.95% for FCM, KNN, and K-means, respectively. Combining segmentation and classification, Eder et al. [24] used segmented MRI images of patients with brain tumors to predict patient survival.

By combining HSI with machine learning classifiers, Ruiz et al. [25] classified regions of HSI images from four patients with grade IV glioblastoma. Random forest and support vector machine (SVM) models were trained to assign each region of the image to one of five classes: healthy tissue, tumor, venous blood vessel, arterial blood vessel, and dura mater. Two experiments were conducted: in the first, 80% of each patient's images were used for training and the remaining 20% for testing; in the second, three patients were used for training and one for testing. In the first experiment, random forest slightly outperformed SVM, with near-perfect accuracy; in the second experiment, however, SVM achieved substantially better accuracy.
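The difference between the two evaluation protocols described above can be made concrete with a short sketch. The function names and the example of 4 patients with 5 images each are hypothetical; the code only illustrates splitting within each patient (first experiment) versus holding out whole patients (second experiment) and is not the code used by Ruiz et al.

```python
import random
from collections import defaultdict

def per_patient_split(patient_of, test_fraction=0.2, seed=0):
    """First-experiment style: split each patient's images 80/20, so every
    patient contributes images to both the training and the test set."""
    by_patient = defaultdict(list)
    for image_id, patient in patient_of.items():
        by_patient[patient].append(image_id)
    rng = random.Random(seed)
    train, test = [], []
    for patient in sorted(by_patient):
        ids = sorted(by_patient[patient])
        rng.shuffle(ids)
        n_test = round(len(ids) * test_fraction)
        test += ids[:n_test]
        train += ids[n_test:]
    return train, test

def leave_one_patient_out(patient_of):
    """Second-experiment style: train on all patients but one and test on
    the held-out patient; yields one fold per patient."""
    for held_out in sorted(set(patient_of.values())):
        train = [i for i, p in patient_of.items() if p != held_out]
        test = [i for i, p in patient_of.items() if p == held_out]
        yield held_out, train, test

# Hypothetical example: 4 patients with 5 images each.
patient_of = {f"P{p}_img{k}": f"P{p}" for p in range(1, 5) for k in range(5)}

train, test = per_patient_split(patient_of)
print(len(train), len(test))  # 16 4
```

Holding out entire patients is the stricter test, because the model never sees any image from the test patient during training.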

In another study, Ma et al. [26] used a hyperspectral microscopic imaging system to detect head and neck cancer nuclei on histological slides. The HSI and co-registered RGB images were used to train convolutional neural networks (CNNs) for nuclear classification. The HSI CNN achieved a test accuracy of 0.89, considerably higher than the 0.74 achieved by the RGB CNN, because the HSI CNN exploits both spatial and spectral information, whereas the RGB CNN relies mainly on spatial information.

Building on these studies, we introduce a new snapshot hyperspectral camera combined with a random forest classifier. Snapshot HSI captures a hyperspectral image in a single exposure. Although this technique is used extensively in astronomy [27], it is rarely used in the medical domain. Snapshot HSI sensors are well suited to medical imaging because they are noninvasive and nonionizing and can acquire large datasets in real time. There is currently an unmet need to examine anatomical structures beyond the visible spectrum in a manner that does not disrupt the surgical workflow. Although some brain tumors have clear margins and are easily excised, others are diffuse or located in critical brain structures. Using our HSI device, we can differentiate tissue types by their characteristic spectra and train a machine learning classifier, such as a random forest, to perform pixel-level segmentation.

Key Achievements:

We developed a compact sHSI camera designed for seamless integration with an existing surgical microscope, enabling remote control for the simultaneous acquisition of both color and hyperspectral data.

Our study harnessed sHSI technology to capture real-time images extending beyond the visible spectrum, effectively distinguishing healthy brain tissues from lesions in surgical scenarios.

We trained machine learning models on data from pediatric patients and evaluated their performance.

2. Materials and Methods

2.1. Data Collection

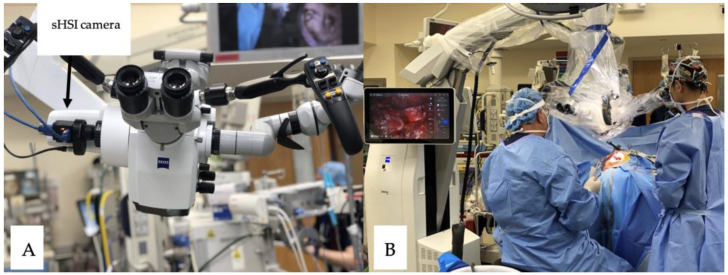

We constructed a camera comprising a single housing unit with visible and infrared sensors linked via a beam splitter. A Bayer-like filter array was placed in front of each sensor, with 16 visible (482, 493, 469, 462, 570, 581, 555, 543, 615, 622, 603, 592, 530, 540, 516, 503) and 25 infrared (613, 621, 605, 601, 685, 813, 825, 801, 789, 698, 764, 776, 750, 737, 712, 652, 660, 643, 635, 677, 865, 869, 854, 843, 668) wavelength (unit: nm) filters arranged in grid patterns. Images were collected from pediatric patients undergoing open brain surgery at Children’s National Medical Center (IRB protocol number Pro00011028). Eligible subjects were under 18 years of age, had been diagnosed with epilepsy or a malignant neoplasm, were scheduled to undergo surgical resection of pathological tissue, and consented to participate. Subjects were recruited from the physicians’ patient pool; for each participant, an sHSI camera (BaySpec OCI™-D-2000 Ultra-Compact Hyperspectral Imager) was attached to the operative microscope before surgery (Figure 1A). During surgery, study staff periodically captured HSI and RGB images of the pathological brain tissue (Figure 1B), which could later be classified by other study staff. The images were then uploaded to a computer for processing.
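Because the filters are arranged in a grid, each raw snapshot frame is a mosaic in which neighboring pixels sample different wavelengths. The sketch below shows one way to rearrange such a frame into a spectral cube. It assumes, hypothetically, that the 16 visible filters form a 4 x 4 tile (and the 25 infrared filters a 5 x 5 tile) and that the wavelengths listed above are given in row-major tile order; the actual layout must come from the manufacturer's calibration data, and vendor software may also apply interpolation or spectral correction that is not shown here.

```python
import numpy as np

# Visible filter wavelengths (nm) as listed in the text. ASSUMPTION: this is the
# row-major order of the filters within one 4 x 4 mosaic tile (hypothetical layout).
VISIBLE_NM = [482, 493, 469, 462, 570, 581, 555, 543,
              615, 622, 603, 592, 530, 540, 516, 503]

def mosaic_to_cube(raw, wavelengths):
    """Rearrange a raw k x k mosaic frame into an (H/k, W/k, k*k) cube whose
    bands are sorted by increasing wavelength (no interpolation is applied)."""
    n = len(wavelengths)
    k = int(round(n ** 0.5))
    if k * k != n:
        raise ValueError("expected a square k x k filter tile")
    h, w = raw.shape
    raw = raw[: h - h % k, : w - w % k]  # crop to a whole number of tiles
    # Pixel (r, c) within every tile samples the filter with index r * k + c.
    bands = [raw[r::k, c::k] for r in range(k) for c in range(k)]
    order = np.argsort(wavelengths)      # band indices sorted by wavelength
    cube = np.stack([bands[i] for i in order], axis=-1)
    return cube, [wavelengths[i] for i in order]

# Hypothetical 8 x 12 raw frame, i.e., 2 x 3 tiles of 4 x 4 filters.
raw = np.arange(8 * 12, dtype=float).reshape(8, 12)
cube, sorted_nm = mosaic_to_cube(raw, VISIBLE_NM)
print(cube.shape)     # (2, 3, 16)
print(sorted_nm[:3])  # [462, 469, 482]
```

The same function would apply to the infrared sensor by passing the 25 infrared wavelengths. Each band image has 1/k of the raw frame's resolution along each axis, which is the spatial cost of capturing all bands in a single exposure.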

Figure 1.

Surgical setup: (A) hyperspectral camera attached to the operative microscope (Zeiss surgical microscope); (B) HSI image displayed on the screen during surgery.

In all cases, there was generally no interference with the patients’ circulatory conditions. We collected data from four patients: a visible HSI camera was used in three cases, RGB images were collected in two cases, and an infrared HSI camera was used in only one case.

2.2. Data Preprocessing

Images were collected using an RGB camera and two types of hyperspectral cameras, one covering the visible spectrum and the other the infrared spectrum: 136 RGB images, 279 visible hyperspectral images, and 85 infrared hyperspectral images. After excluding images that did not contain brain tissue, 60 RGB images, 234 visible HSI images, and 60 infrared HSI images remained. A fourth dataset was created from the visible HSI images that contained tumor (47 images).

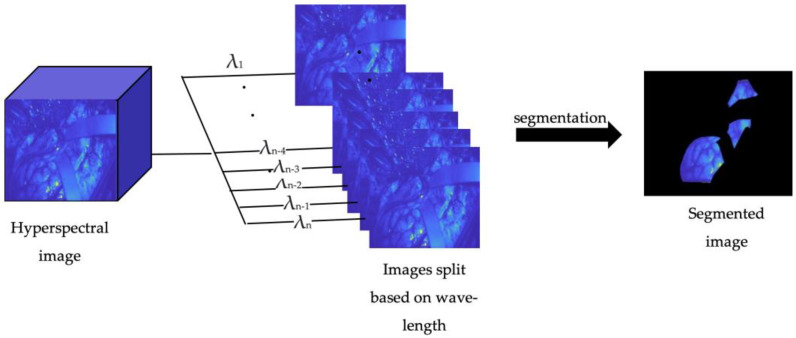

Using MATLAB (R2023a, MathWorks, Natick, MA, USA), each hyperspectral image was separated by wavelength, yielding 16 single-band images for the visible spectrum and 25 for the infrared spectrum. This simplified the ground-truth segmentation and produced a more detailed training dataset.

Finally, ground-truth segmentations were created manually using the Image Segmenter app in MATLAB. For the first three datasets, healthy tissue was delineated and separated from the background, as depicted in Figure 2; for the fourth dataset, the tumor was separated from the background.

Figure 2.

Preprocessing steps: original HSI image split based on wavelength and segmented.

2.3. Machine Learning

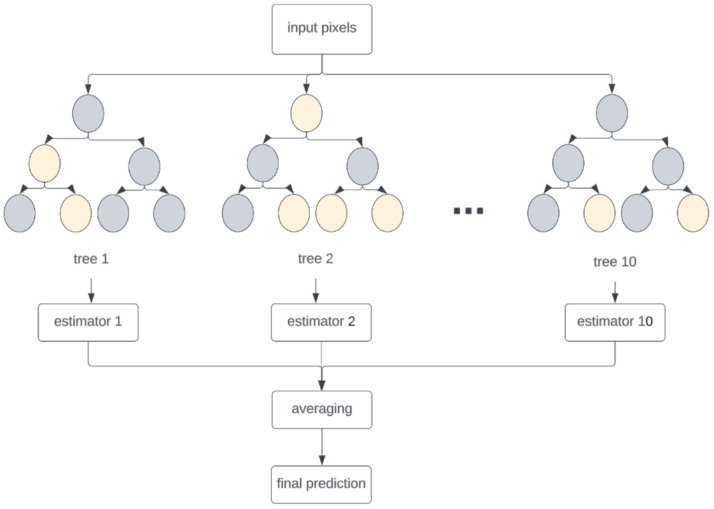

Random forest is a machine learning model commonly used for classification and regression problems. It comprises multiple decision trees whose outputs are combined into a single prediction. A decision tree is a machine learning algorithm consisting of nodes and branches: the nodes are decision points, and the branches are the possible outcomes. At each node, a decision determines which branch to follow, and the goal of the tree is to assign inputs to distinct categories. However, individual decision trees are prone to overfitting. To mitigate this, the random forest algorithm uses an ensemble of decision trees [28].
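To illustrate the ensemble idea (bootstrap sampling, random feature subsets, and majority voting), the sketch below builds a toy random forest whose trees are depth-one decision stumps. This is a simplified teaching example under our own assumptions, not the implementation used in this study; in practice, a library implementation with deeper trees, such as scikit-learn's `RandomForestClassifier`, would normally be used. The function names and synthetic data are hypothetical.

```python
import numpy as np

def fit_stump(X, y, feature_ids):
    """Find the single (feature, threshold) split among feature_ids that
    minimizes misclassifications; each leaf predicts its majority label."""
    best = None
    for f in feature_ids:
        for t in np.unique(X[:, f]):
            left = X[:, f] <= t
            # Majority label on each side of the split (0 if a side is empty).
            left_label = int(y[left].mean() >= 0.5) if left.any() else 0
            right_label = int(y[~left].mean() >= 0.5) if (~left).any() else 0
            errors = np.sum(y[left] != left_label) + np.sum(y[~left] != right_label)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    _, f, t, left_label, right_label = best
    return f, t, left_label, right_label

def fit_forest(X, y, n_trees=10, max_features=None, seed=0):
    """Train n_trees stumps, each on a bootstrap sample of rows and a
    random subset of features (sqrt(d) features by default)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    m = max_features or max(1, int(np.sqrt(d)))
    forest = []
    for _ in range(n_trees):
        rows = rng.integers(0, n, size=n)             # bootstrap sample (with replacement)
        feats = rng.choice(d, size=m, replace=False)  # random feature subset
        forest.append(fit_stump(X[rows], y[rows], feats))
    return forest

def predict_forest(forest, X):
    """Each tree votes 0 or 1 per sample; the majority wins (ties go to 1)."""
    votes = np.stack([np.where(X[:, f] <= t, l, r) for f, t, l, r in forest])
    return (votes.mean(axis=0) >= 0.5).astype(int)

# Synthetic example: 200 samples with 4 noisy features that all track the label.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, size=200)
X = y[:, None] + 0.1 * rng.normal(size=(200, 4))
forest = fit_forest(X, y, n_trees=10)
accuracy = (predict_forest(forest, X) == y).mean()
```

Because each stump has only one split, drawing the feature subset per tree is equivalent to drawing it per split, as in the standard algorithm; deeper trees would redraw the subset at every node.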

We used random forest for the segmentation problem, as illustrated in Figure 3, by treating each pixel as a data point with a label (0 for background and 1 for tissue). Pixel features can be derived from an image via edge detection, pixel intensity evaluation, or texture analysis; in our models, the features were the pixel intensities at each wavelength. Using these features and the ground-truth labels, the random forest was trained as an ensemble of decision trees, each built using a random subset of the features. Once trained, every decision tree predicted a label for each pixel, and a single label per pixel was obtained by majority voting across the trees. We selected random forest because of its ability to handle large datasets, its speed, and its robustness to noise and outliers [29], which set it apart from many other machine learning algorithms.
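Treating each pixel as a data point amounts to flattening each image into a table with one row per pixel and one column per wavelength. The minimal NumPy sketch below shows this reshaping, together with the inverse step that turns per-pixel predictions back into a segmentation map. The function names and the 3 x 4 x 16 example are illustrative assumptions.

```python
import numpy as np

def cube_to_pixels(cube, mask=None):
    """Flatten an (H, W, B) cube into an (H*W, B) feature matrix: each row is
    one pixel, each column one wavelength. An optional (H, W) ground-truth
    mask becomes an (H*W,) label vector (0 = background, 1 = tissue)."""
    h, w, b = cube.shape
    X = cube.reshape(h * w, b)
    y = None if mask is None else mask.reshape(h * w).astype(int)
    return X, y

def labels_to_image(labels, shape):
    """Reshape per-pixel predictions back into an (H, W) segmentation map."""
    return np.asarray(labels).reshape(shape)

# Hypothetical 3 x 4 image with 16 bands and a hand-drawn ground-truth mask.
rng = np.random.default_rng(0)
cube = rng.random((3, 4, 16))
mask = np.zeros((3, 4), dtype=bool)
mask[1:, 1:3] = True                  # a 2 x 2 "tissue" region

X, y = cube_to_pixels(cube, mask)
print(X.shape, y.shape, y.sum())      # (12, 16) (12,) 4

# Stand-in for classifier output: reuse the labels to rebuild the map.
seg = labels_to_image(y, mask.shape)
```

Because NumPy reshapes in row-major order, row `i * W + j` of the feature matrix always corresponds to pixel `(i, j)`, so predictions map back to their original locations without any bookkeeping.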

Figure 3.

Random forest block diagram. Pixels are labeled 0 (gray circles) or 1 (yellow circles).

A random forest with ten estimators (decision trees) was trained on each dataset. This number was chosen after comparing the average accuracy of the visible sHSI tissue segmentation model with 2, 5, 10, and 15 estimators. Each input column of the model represented one wavelength. For each model, the data were split 70:30 into training and testing sets.
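A random 70:30 split can be sketched as follows. The text does not state whether the split was made over images or over pixels, so this hypothetical helper simply splits sample indices and could be applied to either. The 60-sample example matches the number of RGB images that remained after excluding images without brain tissue; the random seed is an arbitrary assumption.

```python
import numpy as np

def train_test_split_70_30(n_samples, train_fraction=0.7, seed=0):
    """Return disjoint index arrays for a random train/test split."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)        # shuffled sample indices
    n_train = int(round(train_fraction * n_samples))
    return order[:n_train], order[n_train:]

# e.g., the 60 RGB images that contained brain tissue
train_idx, test_idx = train_test_split_70_30(60)
print(len(train_idx), len(test_idx))  # 42 18
```

When the indices refer to images, all pixels of a given image stay on the same side of the split.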

2.4. Evaluation

To evaluate model performance, we calculated the average intersection over union (IoU) and its standard deviation, as well as the sensitivity and specificity.
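For reference, the standard definitions of these metrics for a binary mask, in terms of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN), are IoU = TP / (TP + FP + FN), sensitivity = TP / (TP + FN), and specificity = TN / (TN + FP). The sketch below computes them with NumPy. The text does not specify whether the metrics were computed per image and then averaged, or pooled over all test pixels; the sketch assumes per-image computation followed by averaging, and the toy masks are hypothetical.

```python
import numpy as np

def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for binary masks (1 = region of interest)."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    tn = np.sum(~pred & ~truth)
    return tp, fp, fn, tn

def iou(pred, truth):
    tp, fp, fn, _ = confusion_counts(pred, truth)
    denom = tp + fp + fn
    return tp / denom if denom else 1.0   # both masks empty: treat as a perfect match

def sensitivity(pred, truth):
    tp, _, fn, _ = confusion_counts(pred, truth)
    return tp / (tp + fn)

def specificity(pred, truth):
    _, fp, _, tn = confusion_counts(pred, truth)
    return tn / (tn + fp)

# Toy 3 x 3 masks: TP = 3, FP = 1, FN = 1, TN = 4.
truth = np.array([[0, 1, 1],
                  [0, 1, 1],
                  [0, 0, 0]])
pred = np.array([[0, 1, 0],
                 [0, 1, 1],
                 [1, 0, 0]])
print(iou(pred, truth), sensitivity(pred, truth), specificity(pred, truth))  # 0.6 0.75 0.8

# Average and standard deviation over test images (population std, ddof=0, is an
# assumption; the text does not state which estimator was used).
per_image = [iou(pred, truth), iou(truth, truth)]   # second "image" is a perfect match
mean_iou, std_iou = np.mean(per_image), np.std(per_image)
```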

2.5. Bench Top Testing

To assess the segmentation potential of random forest with an HSI camera, we first tested it on a 24-colormap card; 43 images of the card were captured using the same HSI camera at different angles and under various lighting conditions, including images with other objects next to or partially on top of the card. The images were preprocessed using the same steps and used to train a random forest. Training was performed twice, first on 30 randomly selected images (test 1) and then on 40 images (test 2), with the remaining 13 and 3 images, respectively, used for testing. Model performance was evaluated using the average IoU.

We also compared random forest with another machine learning classifier, SVM, by calculating the average accuracy of each model trained on the test 2 split.

3. Results

As summarized in Table 1, the average IoU for the colormap card images was 0.54 for test 1 and 0.71 for test 2, with standard deviations of 0.1 and 0.01, respectively. Additional training data thus considerably improved the model’s performance (an increase of 0.17 in average IoU, from 0.54 to 0.71). This observation was taken into account when training on the brain images.

Table 1.

Segmentation performance for the bench top test.

| | Average IoU | Standard Deviation |
|---|---|---|
| Test 1 | 0.54 | 0.1 |
| Test 2 | 0.71 | 0.01 |

Figure 4 shows the 24-colormap images in black and white, overlaid with the random forest segmentation. Almost every small box was segmented, the black lines between the boxes were never segmented, and no background areas were segmented. Therefore, although some boxes were not entirely segmented, the model could distinguish the background from the region of interest.

Figure 4.

The 24-colormap card captured using the hyperspectral camera. The three top images show the card at different angles as false-color RGB renderings for better visibility. The three bottom images show the original black-and-white hyperspectral images overlaid with the segmentation results in red.

As shown in Table 2, the average accuracy of random forest was 0.07 higher than that of SVM. Furthermore, random forest is generally less computationally expensive and less prone to overfitting noise. Given these advantages and the low resolution of sHSI images, we selected random forest.

Table 2.

Average accuracy result of different machine learning classifiers.

| Classifier | Average Accuracy |
|---|---|
| Random forest | 0.84 |
| SVM | 0.77 |

Table 3 shows that the average accuracy increased by 0.01 at each step from 2 to 5 to 10 estimators, peaking at 0.854 with 10 estimators. Between 10 and 15 estimators, the average accuracy remained unchanged, while training with 15 estimators was more computationally expensive and time-consuming. The segmentation models were therefore trained with 10 estimators.

Table 3.

Average accuracy score of visible HSI model trained with different numbers of estimators.

| Number of Estimators | Average Accuracy |
|---|---|
| 2 | 0.834 |
| 5 | 0.844 |
| 10 | 0.854 |
| 15 | 0.854 |
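The selection rule used for the number of estimators, picking the fewest trees that reach the best observed accuracy, can be written in a few lines using the values in Table 3. The `tolerance` parameter and the function name are our own illustrative additions; the study used the exact peak, which corresponds to a tolerance of zero.

```python
# Average accuracies of the visible sHSI tissue model, copied from Table 3.
accuracy_by_estimators = {2: 0.834, 5: 0.844, 10: 0.854, 15: 0.854}

def smallest_sufficient(scores, tolerance=0.0):
    """Return the smallest estimator count whose score is within `tolerance`
    of the best score; fewer trees mean cheaper training and inference."""
    best = max(scores.values())
    return min(n for n, s in scores.items() if s >= best - tolerance)

print(smallest_sufficient(accuracy_by_estimators))         # 10
print(smallest_sufficient(accuracy_by_estimators, 0.015))  # 5
```

A nonzero tolerance makes the accuracy-versus-cost trade-off explicit: accepting a loss of up to 0.015 in accuracy would, on these numbers, halve the number of trees.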

Table 4 lists the average IoU for each of the four datasets. The highest average IoU (0.76) was achieved for tissue segmentation using RGB images, followed by infrared HSI (0.59) and visible HSI (0.57). The lowest average IoU (0.10) was obtained for tumor segmentation using visible HSI. Although visible HSI produced slightly lower values than infrared HSI, the visible HSI dataset included images from three patients as opposed to one for the infrared dataset, which suggests that the visible HSI segmentation may be more robust. Furthermore, contrary to our initial expectations, RGB segmentation outperformed the other models.

Table 4.

Segmentation performance of the four datasets using IoU.

| Dataset | Average IoU | Standard Deviation |
|---|---|---|
| Tissue (RGB images) | 0.76 | 0.10 |
| Tissue (visible HSI) | 0.57 | 0.16 |
| Tissue (infrared HSI) | 0.59 | 0.20 |
| Tumor (visible HSI) | 0.10 | 0.09 |

Table 5 lists the average specificity and sensitivity of each model. The RGB model achieved the highest sensitivity (0.81), followed by visible HSI (0.50), infrared HSI (0.45), and tumor segmentation (0.09). The opposite trend was observed for specificity: tumor segmentation achieved the highest specificity (0.996), followed by infrared HSI (0.93) and visible HSI (0.91), with the lowest score (0.72) for the RGB images. Thus, specificity was relatively high for all models, whereas sensitivity was not, especially for tumor segmentation. This is most likely because the tumor occupies a small region compared with healthy brain tissue.

Table 5.

Average specificity and sensitivity of each dataset.

| Dataset | Specificity | Sensitivity |
|---|---|---|
| Tissue (RGB images) | 0.72 | 0.81 |
| Tissue (visible HSI) | 0.91 | 0.50 |
| Tissue (infrared HSI) | 0.93 | 0.45 |
| Tumor (visible HSI) | 0.996 | 0.09 |

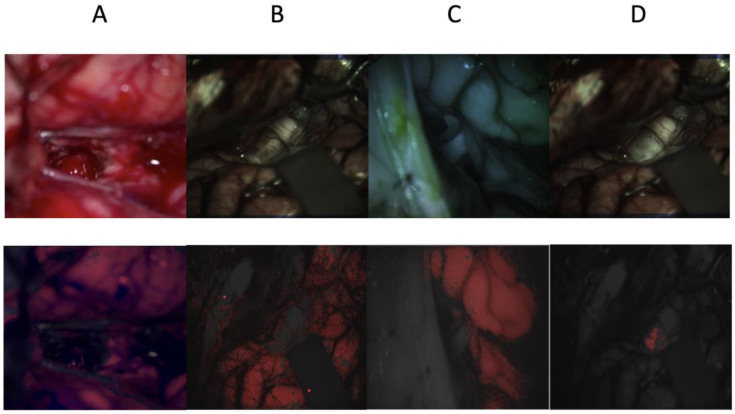

Figure 5 shows an example from each dataset: the top images are the original RGB image or the artificially colored hyperspectral images, and the bottom images are the same images in black and white with the segmentation overlaid in red. Image A illustrates the strong performance of the RGB model: the tumor had been resected from the unsegmented central area, and the surrounding tissue was healthy. The model correctly segmented the area of interest, consistent with its sensitivity of 0.81, the highest among the models. Image B shows the visible HSI segmentation of healthy tissue: random forest successfully excluded the surgical tools and skull, but the margin of the healthy tissue was not clear. A similar but less pronounced effect can be seen in image C (infrared HSI), where the healthy tissue was segmented more consistently. Finally, in image D, which shows visible HSI tumor segmentation, the segmented area lies only within the tumor, but not all of the tumor was segmented. This is consistent with the high average specificity (0.996) and low sensitivity (0.09) of the tumor model.

Figure 5.

Pediatric brain images captured using RGB, visible HSI, and infrared HSI cameras. The four top images are the original RGB and artificially colored hyperspectral images; the four bottom images show the corresponding segmentations overlaid in red. Images (A–C) were collected from the RGB, visible HSI, and infrared HSI datasets, respectively, for healthy tissue segmentation, and image (D) was obtained from the visible HSI dataset for tumor segmentation.

4. Discussion

The RGB images yielded the highest overall average IoU (0.76), and the infrared and visible HSI tissue segmentation models achieved average IoUs of 0.59 and 0.57, respectively. These results were substantially higher than that of tumor segmentation, which achieved an average IoU of only 0.10. However, tumor segmentation achieved the highest average specificity (0.996); together with the example image, this shows that the model did not confuse the background with the tumor, whereas the low sensitivity indicates that the model failed to segment the entire tumor. The specificity scores of the other models were also relatively high, ranging from 0.72 to 0.93, indicating that the models can distinguish the background; however, the moderate sensitivity scores of the HSI models indicate that not all of the tissue was segmented, which is a problem, particularly around the margins. Finally, the RGB model yielded a higher sensitivity of 0.81 and performed well across all metrics.

Leon et al. [30] used a hyperspectral camera to collect images from patients with brain tumors undergoing brain surgery. As in our dataset, the HSI spectra covered the visible and infrared regions. Using these images and machine learning classifiers, they segmented normal brain tissue and blood vessels with unsupervised and supervised algorithms based on random forest. To train the models, they used the visible and infrared images as well as the fusion of the two. Random forest achieved accuracies of up to 93.10% and 82.93% for the infrared and visible spectra, respectively.

Their results were considerably better than ours [30]; however, we could not compare dataset sizes owing to limited information. We hypothesize that the difference in performance is attributable to differences in dataset size, which motivates us to study a larger dataset in future work.

5. Conclusions

In conclusion, our initial findings are promising: in healthy tissue segmentation, we achieved an average IoU of 0.76 for the RGB dataset and 0.59 and 0.57 for the infrared and visible HSI datasets, respectively. Although the average IoU for tumor segmentation was lower (0.10), its specificity of 0.996 indicates that background pixels were rarely misclassified as tumor. The relatively high specificity of the other models likewise indicates that the background was rarely mislabeled as tissue. Notably, our models had difficulty segmenting tumor margins, an issue we aim to address by expanding the dataset. The limitation of training and testing on a small number of patients also deserves consideration, as variations in tumor size and location across patients may affect model performance; this underscores the critical need for robust data collection and algorithm development. Additionally, non-surface brain tumors are difficult to visualize, an obstacle we plan to overcome by integrating our sHSI camera with a laparoscope to capture multi-angle data. Future work includes developing a model capable of distinguishing between different brain regions (healthy tissue, tumor, and skull) and training a deep learning model on an expanded dataset.

Acknowledgments

The authors would like to thank Jeremy Kang, Ava Jiao, and Ashley Yoo for data annotation. We also thank Deki Tserling and Tiffany Nguyen Phan for administrative support.

Author Contributions

Conceptualization, R.K. and R.J.C.; Methodology, N.K., B.N., I.K. and R.J.C.; Software, N.K.; Validation, N.K.; Investigation, S.T. and D.A.D.; Resources, R.J.C.; Data curation, N.K. and S.T.; Writing—original draft, N.K. and R.J.C.; Writing—review and editing, B.N., D.A.D., R.K., and R.J.C.; Supervision, R.K. and R.J.C.; Project administration, S.T. and R.J.C.; Funding acquisition, R.K. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Children’s Research Institute (Protocol number: Pro00011028 and date of approval: 24 December 2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data is unavailable due to privacy or ethical restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by Intramural Funding at Children’s National Hospital, grant number SPF44265-20190311 (R.K.) and National Institute of Biomedical Imaging and Bioengineering, grant numbers K23EB034110 (D.A.D.) and R44EB030874 (R.J.C.).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

1. Hwang E.I., Sayour E.J., Flores C.T., Grant G., Wechsler-Reya R., Hoang-Minh L.B., Kieran M.W., Salcido J., Prins R.M., Figg J.W., et al. The current landscape of immunotherapy for pediatric brain tumors. Nat. Cancer. 2022;3:11–24. doi: 10.1038/s43018-021-00319-0.

2. Iqbal S., Khan M.U.G., Saba T., Rehman A. Computer-assisted brain tumor type discrimination using magnetic resonance imaging features. Biomed. Eng. Lett. 2018;8:5–28. doi: 10.1007/s13534-017-0050-3.

3. Biratu E.S., Schwenker F., Ayano Y.M., Debelee T.G. A Survey of Brain Tumor Segmentation and Classification Algorithms. J. Imaging. 2021;7:179. doi: 10.3390/jimaging7090179.

4. Pertzborn D., Nguyen H.N., Huttmann K., Prengel J., Ernst G., Guntinas-Lichius O., von Eggeling F., Hoffmann F. Intraoperative Assessment of Tumor Margins in Tissue Sections with Hyperspectral Imaging and Machine Learning. Cancers. 2022;15:213. doi: 10.3390/cancers15010213.

5. Yoon J. Hyperspectral Imaging for Clinical Applications. BioChip J. 2022;16:1–12. doi: 10.1007/s13206-021-00041-0.

6. Zhang Y., Wu X., He L., Meng C., Du S., Bao J., Zheng Y. Applications of hyperspectral imaging in the detection and diagnosis of solid tumors. Transl. Cancer Res. 2020;9:1265–1277. doi: 10.21037/tcr.2019.12.53.

7. Ortega S., Fabelo H., Camacho R., de la Luz Plaza M., Callico G.M., Sarmiento R. Detecting brain tumor in pathological slides using hyperspectral imaging. Biomed. Opt. Express. 2018;9:818–831. doi: 10.1364/BOE.9.000818.

8. Halicek M., Fabelo H., Ortega S., Callico G.M., Fei B. In-Vivo and Ex-Vivo Tissue Analysis through Hyperspectral Imaging Techniques: Revealing the Invisible Features of Cancer. Cancers. 2019;11:756. doi: 10.3390/cancers11060756.

9. Bioucas-Dias J.M., Plaza A., Camps-Valls G., Scheunders P., Nasrabadi N., Chanussot J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013;1:6–36. doi: 10.1109/MGRS.2013.2244672.

10. Plaza A., Benediktsson J.A., Boardman J.W., Brazile J., Bruzzone L., Camps-Valls G., Chanussot J., Fauvel M., Gamba P., Gualtieri A., et al. Recent advances in techniques for hyperspectral image processing. Remote Sens. Environ. 2009;113:S110–S122. doi: 10.1016/j.rse.2007.07.028.

11. Ogihara H., Hamamoto Y., Fujita Y., Goto A., Nishikawa J., Sakaida I. Development of a Gastric Cancer Diagnostic Support System with a Pattern Recognition Method Using a Hyperspectral Camera. J. Sens. 2016;2016:1803501. doi: 10.1155/2016/1803501.

12. Liu Z., Wang H., Li Q. Tongue tumor detection in medical hyperspectral images. Sensors. 2012;12:162–174. doi: 10.3390/s120100162.

13. Akbari H., Halig L.V., Schuster D.M., Osunkoya A., Master V., Nieh P.T., Chen G.Z., Fei B. Hyperspectral imaging and quantitative analysis for prostate cancer detection. J. Biomed. Opt. 2012;17:076005. doi: 10.1117/1.JBO.17.7.076005.

14. Lu G., Fei B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 2014;19:10901. doi: 10.1117/1.JBO.19.1.010901.

15. Shokouhifar A., Shokouhifar M., Sabbaghian M., Soltanian-Zadeh H. Swarm intelligence empowered three-stage ensemble deep learning for arm volume measurement in patients with lymphedema. Biomed. Signal Process. Control. 2023;85:105027. doi: 10.1016/j.bspc.2023.105027.

16. Veeraiah N., Alotaibi Y., Subahi A.-F. MayGAN: Mayfly Optimization with Generative Adversarial Network-Based Deep Learning Method to Classify Leukemia Form Blood Smear Images. Comput. Syst. Sci. Eng. 2023;46:2039–2058. doi: 10.32604/csse.2023.036985.

17. Klimont M., Oronowicz-Jaskowiak A., Flieger M., Rzeszutek J., Juszkat R., Jonczyk-Potoczna K. Deep Learning-Based Segmentation and Volume Calculation of Pediatric Lymphoma on Contrast-Enhanced Computed Tomographies. J. Pers. Med. 2023;13:184. doi: 10.3390/jpm13020184.

18. Rahman H., Bukht T.F.N., Imran A., Tariq J., Tu S., Alzahrani A. A Deep Learning Approach for Liver and Tumor Segmentation in CT Images Using ResUNet. Bioengineering. 2022;9:368. doi: 10.3390/bioengineering9080368.

19. Malathi M., Sinthia P. Brain Tumour Segmentation Using Convolutional Neural Network with Tensor Flow. Asian Pac. J. Cancer Prev. 2019;20:2095–2101. doi: 10.31557/APJCP.2019.20.7.2095.

20. Xiao Z., Huang R., Ding Y., Lan T., Dong R., Qin Z., Zhang X., Wang W. A deep learning-based segmentation method for brain tumor in MR images; Proceedings of the 2016 IEEE 6th International Conference on Computational Advances in Bio and Medical Sciences (ICCABS); Atlanta, GA, USA. 13–15 October 2016; pp. 1–6.

21. Selvakumar J., Lakshmi A., Arivoli T. Brain tumor segmentation and its area calculation in brain MR images using K-mean clustering and Fuzzy C-mean algorithm; Proceedings of the IEEE-International Conference On Advances In Engineering, Science And Management (ICAESM-2012); Nagapattinam, India. 30–31 March 2012; pp. 186–190.

22. Ahmed M., Mohamad D. Segmentation of Brain MR Images for Tumor Extraction by Combining Kmeans Clustering and Perona-Malik Anisotropic Diffusion Model. Int. J. Image Process. 2008;2:27–34.

23. Kalaivani I., Oliver A.S., Pugalenthi R., Jeipratha P.N., Jeena A.A.S., Saranya G. Brain Tumor Segmentation Using Machine Learning Classifier; Proceedings of the 2019 Fifth International Conference on Science Technology Engineering and Mathematics (ICONSTEM); Chennai, India. 14–15 March 2019; pp. 85–90.

24. Eder M., Moser E., Holzinger A., Jean-Quartier C., Jeanquartier F. Interpretable Machine Learning with Brain Image and Survival Data. BioMedInformatics. 2022;2:492–510. doi: 10.3390/biomedinformatics2030031.

25. Ruiz L., Martín-Pérez A., Urbanos G., Villanueva M., Sancho J., Rosa G., Villa M., Chavarrías M., Perez A., Juarez E., et al. Multiclass Brain Tumor Classification Using Hyperspectral Imaging and Supervised Machine Learning; Proceedings of the 2020 XXXV Conference on Design of Circuits and Integrated Systems (DCIS); Segovia, Spain. 18–20 November 2020.

26. Ma L., Little J.V., Chen A.Y., Myers L., Sumer B.D., Fei B. Automatic detection of head and neck squamous cell carcinoma on histologic slides using hyperspectral microscopic imaging. J. Biomed. Opt. 2022;27:046501. doi: 10.1117/1.JBO.27.4.046501.

27. Goetz A.F., Vane G., Solomon J.E., Rock B.N. Imaging spectrometry for Earth remote sensing. Science. 1985;228:1147–1153. doi: 10.1126/science.228.4704.1147.

28. Breiman L. Random Forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

29. Chan J.C.-W., Paelinckx D. Evaluation of Random Forest and Adaboost tree-based ensemble classification and spectral band selection for ecotope mapping using airborne hyperspectral imagery. Remote Sens. Environ. 2008;112:2999–3011. doi: 10.1016/j.rse.2008.02.011.

30. Leon R., Fabelo H., Ortega S., Pineiro J.F., Szolna A., Hernandez M., Espino C., O’Shanahan A.J., Carrera D., Bisshopp S., et al. VNIR-NIR hyperspectral imaging fusion targeting intraoperative brain cancer detection. Sci. Rep. 2021;11:19696. doi: 10.1038/s41598-021-99220-0.
