Abstract

In the last decade there has been a proliferation of research on misinformation. One important aspect of this work that receives less attention than it should is exactly why misinformation is a problem. To adequately address this question, we must first look to its speculated causes and effects. We examined different disciplines (computer science, economics, history, information science, journalism, law, media, politics, philosophy, psychology, sociology) that investigate misinformation. The consensus view points to advancements in information technology (e.g., the Internet, social media) as a main cause of the proliferation and increasing impact of misinformation, with a variety of illustrations of the effects. We critically analyzed both issues. As to the effects, misbehaviors are not yet reliably demonstrated empirically to be the outcome of misinformation; mistaking correlation for causation may have a hand in that perception. As to the cause, advancements in information technologies enable, as well as reveal, multitudes of interactions that represent significant deviations from ground truths through people’s new way of knowing (intersubjectivity). This, we argue, is illusory when understood in light of historical epistemology. Both doubts we raise are used to consider the cost to established norms of liberal democracy that come from efforts to target the problem of misinformation.

Keywords: misinformation and disinformation, intersubjectivity, correlation versus causation, free speech

The aim of this review is to answer the question, (Why) is misinformation a problem? We begin the main review with a discussion of definitions of “misinformation” because this, in part, motivated our pursuit to answer this question. Incorporating evidence from many disciplines helps us to examine the speculated effects and causes of misinformation, which give some indication of why it might be a problem. Answers in the literature reveal that advancements in information technology are the commonly suspected primary cause of misinformation. However, the reviewed literature shows considerable divergence regarding the assumed outcomes of misinformation. This may not be surprising given the breadth of disciplines involved; researchers in different fields observe effects from different perspectives. The fact that so many effects of misinformation are reported is not a concern as long as the direct causal link between misinformation and the aberrant behaviors it generates is clear. We emphasize that the evidence provided by studies investigating this relationship is weak. This exposes two issues: one that is empirical, as to the effects of misinformation, and one that is conceptual, as to the cause of the problem of misinformation. We argue that the latter issue has been oversimplified. Uniting the two issues, we propose that the alarm regarding the speculated relationship between misinformation and aberrant societal behaviors appears to be rooted in the increased opportunities through advancements in information technology for people to “go it alone,” that is, to establish their own ways of knowing that increase deviations from ground truths.

We therefore propose our own conceptual lens from which to understand the cause of concern that misinformation poses. It acknowledges modern information technology (e.g., Internet, social media) but goes beyond it to understand the roots of knowledge through historical epistemology, the study of (primarily scientific) knowledge, specifically concepts (e.g., objectivity, belief), objects (e.g., statistics, DNA), and the development of science (Feest & Sturm, 2011). The current situation is triggering alarm because it appears that the process of knowing is undergoing a transition whereby objectivity is second to intersubjectivity. Intersubjectivity, as we define it, is a coordination effort by two or more people to interpret entities in the world through social interaction. Owing to progress in information technologies, intersubjectivity is seen as being in opposition to the way ground truths are established by traditional institutions. However, this view faces two problems. First, the two processes are not necessarily at odds because, for instance, the scientific endeavor is not itself devoid of intersubjective mechanisms. Second, there is no clear evidence that intersubjectivity as a means for establishing truth is on the rise outside of the fact that the Internet facilitates and exposes more clearly the interactions people are having. Critically, the causal connection between beliefs established in an intersubjective manner and aberrant behavior is also not established. In our concluding section, we discuss whether the efforts to reduce misinformation are proportionate to the actual or rather the perceived scale of the problem of misinformation. We also propose possible methodological avenues for future exploration that might help to better expose causal relations between misinformation and behavior.

Defining Misinformation and Other Associated Terms

What is misinformation? Several definitions of “misinformation” refer to it as “false,” “inaccurate,” or “incorrect” information (e.g., Qazvinian et al., 2011; Tan et al., 2015; Van der Meer & Jin, 2019) and as the antonym to information. By contrast, disinformation is false information that is also described as being intentionally shared (e.g., Levi, 2018). Information shared for malicious ends (to cause harm to an individual, organization, or country; Wardle, 2020) is malinformation and can be either true (e.g., as with doxing, when private information is publicly shared) or false. A close cousin of the term disinformation is fake news (Anderau, 2021; Zhou & Zafarani, 2021), a term made popular by the former U.S. President Donald Trump, for which there are a variety of examples that fall under this catchall term (Tandoc et al., 2018). Similar to disinformation, “fake news” is defined as information presented as news that is intentionally and verifiably false (Allcott & Gentzkow, 2017; Anderau, 2021). This is different from satire, parody, and propaganda. However, rumor, often discussed alongside hearsay, gossip, or word of mouth, is perhaps the oldest relative in the misinformation family, with research dating back decades (for discussion, see Allport & Postman’s The Psychology of Rumor, 1947). A companion of humankind for millennia, a rumor is commonly defined as “unverified and instrumentally relevant information statements in circulation” (DiFonzo & Bordia, 2007, p. 31).

Yet these terms are used differently (e.g., Habgood-Coote, 2019; Karlova & Lee, 2011; Scheufele & Krause, 2019). For example, Wu et al. (2019) employed “misinformation” as an umbrella term to include all false or inaccurate information (unintentionally spread misinformation, intentionally spread misinformation, fake news, urban legends, rumor). This is arguably why Krause et al. (2022) concluded that misinformation has become a catchall term with little meaning. It is perhaps not surprising that these definitions are also contentious and that debates continue among scholars. For example, a point of discussion is the demarcation between information and misinformation, with some scholars arguing that this is a false dichotomy (e.g., Ferreira et al., 2020; Haiden & Althuis, 2018; Marwick, 2018; Tandoc et al., 2018). The problem with drawing a line between the two is that it ignores what it means to ascertain the truth. Krause et al. (2020) illustrated this in their real-world example of COVID-19, in which the efficacy of measures such as masks was initially unknown. Osman et al. (2022) demonstrated this with reference to the origins of the COVID-19 virus and the problems with prematurely labeling what was conspiracy and what was a viable scientific hypothesis. A continuum approach is an alternative to reductionist classifications into true or false information (e.g., Hameleers et al., 2021; Tandoc et al., 2018; Vraga & Bode, 2020), but the problem of how exactly truth is or ought to be established remains.

Some define truth by what it is not, rather than what it is. Paskin (2018) argued that fake news has no factual basis, which implies that truth is equated simply to facts. Other scholars make reference to ideas such as accuracy and objectivity (Tandoc et al., 2018) as well as to evidence and expert opinion (Nyhan & Reifler, 2010). Regarding what is false, many scholars define “disinformation” as intentional sharing of false information (e.g., Fallis, 2015; Hernon, 1995; Shin et al., 2018; Søe, 2017), but the problem is how to determine intent (e.g., Karlova & Lee, 2011; Shu et al., 2017). Given how fundamental the conceptual problems are, some have proposed that it is too early to investigate misinformation (Avital et al., 2020; Habgood-Coote, 2019).

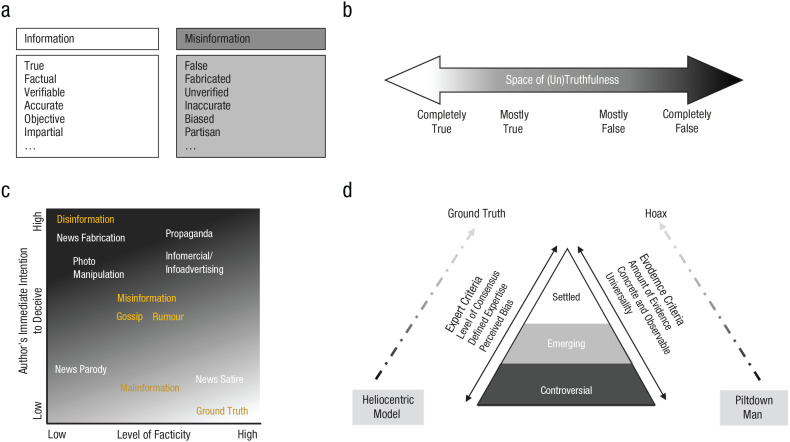

For the purposes of this review, a commonly agreed-upon definition is not necessary (or likely possible). Instead, what we have done here is graphically represent various conceptions of information, misinformation, and other related phenomena (Fig. 1) with reference to some examples. We also distill what we view as a shared essential property of most of the definitions we have discussed here: information that departs from established ground truths. Three things of note: First, we make no assertion about whether the information is unintentionally or deliberately designed to depart from ground truth; we note only that it does. Second, criteria for determining ground truth are evidentially problematic given the conceptual obstacles already mentioned, so we do not attempt this. Rather, we return to discussing the issues around this later in the review. Third, in our view the essential property described is dynamic—what we refer to as dynamic lensing of information. This is necessary to reflect that, just as lensing is an optical property that can distort and magnify light, the status of information interpreted through various means (lenses) is liable to shifts and over time diverges from, or converges to, ground truth (for an example, see Fig. 1d).

Fig. 1.

Different ways of conceptualizing and contextualizing information and misinformation. In (a), we show dichotomous distinctions between information and misinformation using commonly discussed criteria from the literature (e.g., Levi, 2018; Qazvinian et al., 2011; Tan et al., 2015; Van der Meer & Jin, 2019). In (b) we show Hameleers, van der Meer, and Vliegenthart’s (2021) continuum “space of (un)truthfulness” that characterizes mis- or disinformation by degrees of truth and falsehood. In (c) we illustrate Tandoc et al.’s (2018) two-dimensional space, which is used to map what the authors refer to as examples of fake news, with additional examples of terms (in yellow) that we have attempted to map onto the described dimensions. In (d), Vraga and Bode’s (2020) expertise and evidence are used as criteria for contextualizing misinformation, with two examples to illustrate claims that were controversial at one time but over time became settled (either because they were verifiably true or verifiably false): Galileo’s 1632 challenge of the geocentric astronomical model in favor of the heliocentric model (the Catholic Church did not officially pardon him for heresy until 1992), and Charles Dawson’s (1913) discovery of the “missing link” from jaw and tooth remains in Piltdown (England) that were shown by Weiner, Oakley, and Clark (1953) to have been fabricated to simulate a fossilized skull. “Piltdown Man” was deemed a hoax by the scientific community.

The “Problem” of Misinformation

A review of the kind presented here has not yet been conducted, and so we present this as a starting point for future interdisciplinary reviews and meta-analyses of the causes and effects of misinformation that could extend or challenge the claims we are making here. To address the title question, we concentrated on theoretical and empirical articles that explicitly reference the effects of misinformation. We have tried to be comprehensive (if not exhaustive) by drawing on and synthesizing research across a wide range of disciplines (computer science, economics, history, information science, journalism, law, media, politics, philosophy, psychology, sociology). Our strategy involved searching for articles on Google Scholar containing the terms “misinformation” and “fake news.” However, many of these articles contained related terms such as “disinformation,” “rumor,” “posttruth,” “hoax,” “satire,” and “parody.” Because the effects of “misinformation” and related terms were rarely referred to as a problem explicitly, we initially did not add “problem” or its synonyms to our search terms, and instead selected the most cited articles. To capture any specific references to the problem of misinformation, however, we examined in the second step both “misinformation” (and related terms) and “problem” (and related terms, such as “crisis,” “trouble,” “obstacle,” “dilemma,” and “challenge”).

The time frame chosen depended on the term. Interest in “fake news” boomed after 2016. Articles with more than 50 citations served as a starting point (albeit an arbitrary one) for our search between 2016 and 2022 (from a total of 25,300 search results); we allowed some exceptions, such as the highly cited Conroy et al. (2015) article on automatic detection of fake news. Research on the term “misinformation” traditionally focused on memory (e.g., Ayers & Reder, 1998; Frost, 2000) with applications to legal settings, specifically eyewitness studies (Wright et al., 2000). There are instances of misinformation in the more general sense, as applied to the news and the Internet, as early as Hernon’s (1995) exploratory study. To make things manageable, we limited our search by examining the most cited articles between 2000 and 2022 (from a total of 116,000 search results). Most studies made reference to the effects of misinformation or fake news in their introduction as motivation for their research, or in the conclusion to explain why their work has important implications. For others, the aim of the research is to precisely examine the effects of misinformation. In total, we carefully inspected 149 articles because they made references either to the causes or to the effects of misinformation.

We observed that scholars broadly characterize the effects of misinformation in two ways: societal-level effects, which we group into four domains (media, politics, science, economics), and individual-level effects, which are psychologically informed (cognitive, behavioral). We reserve critical appraisals for the literature on the psychological effects of misinformation because they are fundamental to, and have direct implications for, societal-level effects.

Societal-level effects of misinformation

We will begin with those articles in which the effects of misinformation can be classified as general topical areas that impact society: media, politics, science, and economics.

Media

Determining the impact of misinformation for media, particularly news media, depends on whether readers can reliably distinguish between true and false news and between biased (left- or right-leaning) and objective content. Although some empirical studies show that readers can distinguish between true and false news (Barthel et al., 2016; Burkhardt, 2017; De Beer & Matthee, 2021; Posetti & Matthews, 2018; Shu et al., 2018; Waldman, 2018), Bryanov and Vziatysheva’s (2021) review indicates that overall the evidence is mixed. Luo et al. (2022) showed that news classified as misinformation can garner increased attention, gauged by the number of social media “likes” a post receives. This does not in turn imply that the false content is judged to be credible; in fact, there is a tendency to disbelieve both fake and true messages (Luo et al., 2022). Possible spillover effects such as this one have been used to explain disengagement with news media in general and distrust in traditional news institutions (Altay et al., 2020; Axt et al., 2020; Fisher et al., 2021; Greifeneder et al., 2021; Habgood-Coote, 2019; Levi, 2018; Lewis, 2019; Robertson & Mourão, 2020; Shao et al., 2018; Shu et al., 2018; Steensen, 2019; Tandoc et al., 2021; Tornberg, 2018; Van Heekeren, 2019; Waldman, 2018; Wasserman, 2020). For example, Axios, an American news website, reported that between 2021 and 2022 there was a 50% drop in social-media interactions with news articles, an 18% drop in unique visits to the five top news sites, and a 19% drop in cable news prime-time viewing (Rothschild & Fischer, 2022). Survey work, such as the Edelman Trust Barometer (2019) and Gallup’s Confidence in Institutions survey (2018), has shown declining trust in news media and journalists. Consistent with this, Wagner and Boczkowski’s (2019) in-depth interviews indicated negative perceptions of the quality of current news and distrust of news circulated on social media. Duffy et al. (2019) suggested a positive outcome may be that mistrusting news on social media will drive the public back to traditional news sources. Along similar lines, Wasserman (2020) claimed that misinformation provides traditional news institutions with an opportunity to rebuild their position as authoritative by emphasizing verification of claims communicated to the public.

In short, declining trust in media, doubtful source credibility, and the blurring of the dichotomy between false and true news are suspected effects of misinformation that worry some (Kaul, 2012; Posetti & Matthews, 2018; Steensen, 2019). A remedy for these effects is a more nuanced appreciation of truth and a greater sense of how claims are communicated in ways that allow for healthy skepticism (Godler, 2020).

Politics

The overarching negative effect of misinformation comes from the argument that without an accurately informed public, democracy cannot function (Kuklinski et al., 2000), although there is some discussion about whether this falls squarely back on the shoulders of news media. Much of the evidence base is designed to show how beliefs in misinformed claims impact the evaluation of and support for particular policies (e.g., Allcott & Gentzkow, 2017; Benkler et al., 2018; Dan et al., 2021; Flynn et al., 2021; Fowler & Margolis, 2013; Garrett et al., 2013; Greifeneder et al., 2020; Levi, 2018; Lewandowsky & van der Linden, 2021; Marwick et al., 2022; Metzger et al., 2021; Monti et al., 2019; Shao et al., 2018; Waldman, 2018). However, there is as yet no consensus on how to precisely measure misinformation to determine its direct effects on democratic processes (e.g., election voting, public discourse on policies; Watts et al., 2021).

One of the strongest claimed effects of misinformation is that it leads voters toward advocating policies that are counter to their own interests, for example, the 2016 U.S. election and the Brexit vote in the United Kingdom (Bastos & Mercea, 2017; Cooke, 2017; Humprecht et al., 2020; Levi, 2018; Monti et al., 2019; A. S. Ross & Rivers, 2018; Shu et al., 2018; Wagner & Boczkowski, 2019; Weidner et al., 2019). Silverman & Alexander (2016) found that the 20 top fake election-news stories generated more engagement on Facebook than the 20 top election stories from 19 major news outlets combined. Participants in Wagner and Boczkowski’s (2019) study indicated how the reporting of the 2016 U.S. election reduced their trust in media because of false news stories associated with both political parties. Political polarization is also affected because false stories further divisions between parties as well as reinforce support for one’s own party (e.g., Axt et al., 2020; European Commission, 2018; Ribeiro et al., 2017; Sunstein, 2017; Vargo et al., 2017; Waldman, 2018). However, evidence showing a direct causal relationship between viewing fake news and switching positions—and in turn changing the outcome of elections and referenda—is lacking, and consequently these claims have also been questioned (Allcott & Gentzkow, 2017; Grinberg et al., 2019; Guess et al., 2019). For the same reasons, drawing connections between misinformation and political polarization has been difficult, and similar challenges emerge for a variety of other alternative explanations that have been proposed (Canen et al., 2021; Hebenstreit, 2022).

Although there currently is a strong interest in the effects of misinformation on political issues, debates surrounding the effects of misinformation are certainly not new. There are documented examples as far back as ancient Rome of how misinformation as rumor can impact the reputations of political figures. As a result, misinformation has been used as a form of social control to produce political outcomes, albeit with varying success (e.g., Chirovici, 2014; Guastella, 2017). For example, when Nicolae Ceaușescu led Romania in the 1960s and 1970s, positive rumors were used to help establish support, but negative rumors (for example, that Ceaușescu had periodic blood transfusions from children) later had the opposite effect. Attempts by mass media to censor such negative rumors failed, and as resistance intensified, the government’s response was harsh: a rumored genocide of 60,000 civilians in Timișoara. The executions of Ceaușescu and his wife in 1989 were based on charges of genocide, among other things (Chirovici, 2014).

As well as rumor, there are several examples of state propaganda (e.g., Pomerantsev, 2015; Snyder, 2021), alternatively referred to as disinformation campaigns. These are also designed as a form of social control. This runs counter to the “Millian” market of ideas, based on Mill’s (1859) work proposing that only in a free market of ideas are we able to arrive at the truth (Cohen-Almagor, 1997). In fact, these examples suggest that deliberate as well as inadvertent processes disrupt the possibility of the best ideas surfacing to the point of consensus (Cantril, 1938). State propaganda and rumors attempt to suppress public discourse, and this has been shown to negatively impact the populace, particularly through connections drawn to the instigation of violence, and to influence voting behavior (e.g., Posetti & Matthews, 2018).

Thus far, it is not clear what the remedies are for addressing the effects of misinformation in the domain of politics. What is clear is that there are strong claims regarding the effects (e.g., polarization, disengagement with democratic processes, hostility to political figures, and violence) that underscore why misinformation is perceived as a threat to democracy.

Science

Misinformation in science communication (Kahan, 2017) and around science policymaking (Farrell et al., 2019) is purported to have an effect on public understanding of science (Lewandowsky et al., 2017). Two topics that are frequently brought up in connection with the negative effects of misinformation are health and anthropogenic climate change.

COVID-19 has been at the epicenter of many misinformation studies examining how it has impacted attitudes, beliefs, intentions, and behavior (Ecker et al., 2022; Kouzy et al., 2020; Pennycook et al., 2020; Roozenbeek et al., 2020; Vraga et al., 2020). For instance, Tasnim et al. (2020) referred to reported chloroquine overdoses in Nigeria following claims on the news that it effectively treats the virus (chloroquine is a pharmacological treatment for malaria). Misinformation was also claimed to have resulted in hoarding and panic buying (G. Chua et al., 2021) as well as avoiding nonpharmacological measures (e.g., handwashing, social distancing; Shiina et al., 2020).

Researchers have also considered the effect of misinformation on the Zika (Ghenai & Mejova, 2017; Valecha et al., 2020) and Ebola viruses (Jin et al., 2014). Negative health behaviors include vaccine hesitancy, which has been most notably related to associations between the measles, mumps, and rubella (MMR) vaccine and autism (Dixon et al., 2015; Flynn et al., 2021; Kahan, 2017; Kirkpatrick, 2020; Lewandowsky et al., 2012; Pluviano et al., 2022). The actual effects that are measured vary from self-reported behaviors in response to inaccurate health claims to views on trust in the news media, politics, and general damage to democracy. Studies examining whether changes are possible suggest that belief updating can be achieved when contrary information is presented in textual form (Desai & Reimers, 2018) or through an interactive game (Roozenbeek & van der Linden, 2019).

The effects of misinformation also extend to the topic of anthropogenic climate change. It has been proposed that misinformation causes differences of opinion on the urgency of the issue (Bolsen & Shapiro, 2016; Cook, 2019; Farrell, 2019; McCright & Dunlap, 2011). Misinformation is also used to explain the stalling of political action. This is either because of a lack of public consensus on the issue (claimed to be informed by misinformation), or because of resistance to addressing it, again because of apparent false claims (Benegal & Scruggs, 2018; Conway & Oreskes, 2012; Cook et al., 2018; Flynn et al., 2021; Lewandowsky et al., 2017; Maertens et al., 2020; van der Linden et al., 2017; Y. Zhou & Shen, 2021). Moreover, the effects of misinformation do not have an equal impact on recipients because anthropogenic climate change has been perceived to be connected with particular ideological and political positions (Cook et al., 2018; Elsasser & Dunlap, 2013; Farrell et al., 2019; Kormann, 2018; McCright et al., 2016).

If misinformation is indeed the sole contributor to these effects, rather than one of several factors, then fears around the impact of misinformation on generating false beliefs and motivating aberrant behaviors are justified given the potential impact on human well-being. Some of the empirical findings presented here have also included interventions designed to address the effects of misinformation, primarily centered on refocusing the way people encounter false claims and improving their ability to scrutinize claims to determine their truth status.

Economics

For some researchers, the economic cost of misinformation is considered significant enough to warrant analysis (e.g., Howell et al., 2013). Financial implications are also considered, such as how misinformation can disrupt the stability of markets (Kogan et al., 2021; Levi, 2018; Petratos, 2021), the cost of attempting to debunk misinformation (Southwell & Thorson, 2015), and the cost of policing misinformation online in order to develop measures to limit public exposure (Gradón, 2020). For example, Canada has spent CAD$7 million to increase public awareness of misinformation (Funke, 2021) in an attempt, among other issues, to stem the economic impact. Burkhardt (2017) focuses on consumer behavior and how brands inadvertently propagate misinformation and how that can present opportunities to increase profits. Because a main goal is to get consumers’ attention, Burkhardt proposes that advertisements appearing alongside a piece of information, whether true or false, can impact purchasing behavior. Given the appeal of gossip and scandal, as illustrated by media outlets such as the National Enquirer or Access Hollywood on TV, advertisers can thus profit off sensationalized and potentially misinformed claims (e.g., Han et al., 2022). Another fundamental concern is that advertisements themselves contain misinformation (Baker, 2018; Braun & Loftus, 1998; Glaeser & Ujhelyi, 2010; Hattori & Higashida, 2014; Rao, 2022; Zeng et al., 2020, 2021).

There are also real-world examples of the economic effects of misinformation, such as how brands fall victim to unsubstantiated claims. Berthon et al. (2018) discuss how Pepsi’s stock fell by about 4% because a story went viral about Pepsi’s CEO, Indra Nooyi, allegedly telling Trump supporters to “take their business elsewhere.” This story has been cited by other marketing researchers to emphasize the adverse effects of misinformation (e.g., Talwar et al., 2019) on reputation management—an industry estimated to be worth $9.5 billion alone (Cavazos, 2019), excluding indirect costs for increasing trust and transparency. Another popular example, which aligns with Levi’s (2018) observation on market stability, is a tweet broadcast by the Associated Press in 2013. It claimed President Obama had been injured in an explosion, which reportedly caused the Dow Jones stock market index to drop 140 points in 6 min (e.g., Liu & Wu, 2020; Zhou & Zafarani, 2021). Further examples can be found in Cavazos’s (2019) report for software company Cheq, which concluded that misinformation is a $78 billion problem.

In combination, these examples often tie in with effects reported elsewhere: For instance, increased reputation management is a response to the fragile trust that we noted in relation to the media. Thus, misinformation has been claimed to impact market behavior, consumer behavior, and brand reputation, which in turn has economic and financial effects on business. When advertising sits alongside misinformation in news stories, or when misinformation is embedded in advertisements, both are claimed to facilitate profits, but it is also possible that such a juxtaposition limits profits because of the reputational damage to businesses.

Individual-level effects of misinformation

Establishing the fundamental effects of the way misinformation is processed psychologically and establishing its influence on behavior have core implications for research proposing how the general effects are expressed in society (e.g., engagement with scientific concepts, democratic processes, trust in news media, and economic factors). Therefore, we critically consider the evidential support for the effects of misinformation on cognition and behavior. We review these effects separately also because we think that a tacit inference underlying much of the current debate seems to go like this: 1) Experimental evidence shows that misinformation affects beliefs in various ways. 2) While there are few experimental studies examining the direct behavioral consequences of beliefs influenced by misinformation, we generally “know” that beliefs affect behavior. Hence, we can conclude that misinformation is a cause of aberrant behavior. We are of the opinion that this line of reasoning is an oversimplification that does not do justice to the complexity of the problem and its serious implications for policymaking. The presumed causal chain is also at odds with research on the more complicated relations between beliefs and behavior and different cognitive factors (e.g., Ajzen, 1991), which we discuss below in more detail.

Cognitive effects of misinformation

A large proportion of research on misinformation has been dedicated to examining the effects on cognition. One example is the difficulty in revising beliefs when false claims are retracted (debunking or continued influence effect; Chan et al., 2017; Desai et al., 2020; Desai & Reimers, 2018; Ecker et al., 2011; Garrett et al., 2013; Lewandowsky et al., 2012; Newport, 2015; Nyhan & Reifler, 2010; Southwell & Thorson, 2015; Walter & Murphy, 2018). Findings show that presenting counterexamples (e.g., via causal explanations) corrects false beliefs (e.g., Desai & Reimers, 2018; Guess & Coppock, 2018; Wood & Porter, 2018), irrespective of group differences (e.g., age, gender, education level, political affiliation; Roozenbeek & van der Linden, 2019). Another option is to “inoculate” people against misinformation (Lewandowsky & van der Linden, 2021; Roozenbeek & van der Linden, 2019). This involves warning people in advance that they might be exposed to misinformation and giving them a “weak dose,” allowing people to produce their own cognitive “antibodies.” However, for this and other debunking efforts, there is also evidence of backfiring (increased skepticism to all claims that are presented, or increased acceptance and sharing of misinformation, as well as reduced scrutiny and correction; e.g., Courchesne et al., 2021; Nyhan & Reifler, 2010; Trevors & Duffy, 2020). In short, intervention attempts to reduce belief in misinformed claims, as defined by the researchers, can have unintended perverse effects that spill over in all manner of directions. This likely indicates problems to do with the interventions themselves as well as the nature of the claims that are the subject of interventions.

Misinformation is claimed to have a competitive advantage over accurate information in the attention economy because it is not constrained by truth (Acerbi, 2019; Hills, 2019), so it is framed in sensationalist ways to maximally capture attention (Acerbi, 2019). Hills (2019) emphasized that the unprecedented quantities of information available today require increased cognitive selection, which in turn can lead to adverse outcomes. Because people’s information acquisition is constrained by their selection processes, they tend to seek out information that is belief-consistent, negative, social, and predictive. Selecting and sharing information in such a manner can in turn lead to adverse effects. For example, preferentially seeking out and sharing negative information can lead to the social amplification of risks, and belief-consistent selection of information can lead to polarization. These processes in turn shape the information ecosystem, leading, for instance, to a proliferation of misinformation.

In addition, frequent exposure to misinformation is claimed to hinder people’s general ability to distinguish between true and false information (Barthel et al., 2016; Burkhardt, 2017; Grant, 2004; Newman et al., 2019; Shu et al., 2018; Tandoc et al., 2018). However, methodological concerns have been raised because of overinterpretation of questionnaire responses as indicators of stable beliefs informed by misinformation, as well as the likelihood of pseudo-opinions (Bishop et al., 1980)—especially as responses can reflect bad guesses by participants in response to unfamiliar content (e.g., Pasek et al., 2015).

Truth, lies, and objectivity

Studies of deception and lie detection provide important insights into the relationship between truth and misinformation, as well as people’s ability to detect the difference. Meta-analyses show that people’s accuracy in lie detection is barely above chance level (Bond & DePaulo, 2006; Hartwig & Bond, 2011, 2014). Even if people can use the appropriate behavioral markers to make judgments about what is true, objective relations between deception and behavior tend to be weak. In other words, catching liars is hard because the validity of behavioral cues to lying is so low.

Not only are people bad at telling the difference between truth and lies in others, they also have a distorted sense of their own immunity to bias and falsehoods, referred to as the objectivity illusion (Robinson et al., 1995; L. Ross, 2018). Essentially the illusion is expressed in such a way that if “I” take a particular stance on a topic (including beliefs, preferences, choices), I will view this position as one that is objective. I can then appeal to objectivity as a persuasive mechanism to convince others of my position, along with supporting evidence that is supposedly unbiased. If disagreement with me ensues, and my proposed position is rejected, the rationalization is that the other side is both unreasonable and irrational. This is a powerful expression of a bias that is centered on justifying a position with reference to objectivity without accepting that it may also be liable to bias. Pronin et al. (2002) called this a “bias blind spot.” Regardless of political affiliation (e.g., Schwalbe et al., 2020), and even one’s profession (e.g., scientists expert in reasoning from evidence; Ceci & Williams, 2018), no one is immune from the objectivity illusion.

Attributing distortions of cognition to misinformation comes with a problem. The fundamental issue is how to establish a normative rule to define criteria that distinguish reliably between truth and falsehood, to determine in turn whether or not people can adequately distinguish between the two. But in a world in which no obvious diagnostic truth criteria exist, the clichés of how truth and deception or objectivity and bias can be discriminated are far stronger than any normative rule.

Behavioral effects of misinformation

The most commonly referenced behavioral effects pertain to health behaviors in response to false claims (e.g., antivaccine movements, speculated vaccine-autism link, genetically modified mosquitos and the Zika virus, COVID-19; Bode & Vraga, 2017; Bronstein et al., 2021; Galanis et al., 2021; Gangarosa et al., 1998; Greene & Murphy, 2021; Joslyn et al., 2021; Kadenko et al., 2021; Loomba et al., 2021; Muğaloğlu et al., 2022; van der Linden et al., 2020; Van Prooijen et al., 2021; Xiao & Wong, 2020). The same association has also been made between misinformation associated with anthropogenic climate change and resistance to adopting proenvironmental behaviors (Gimpel et al., 2020; Soutter et al., 2020). The effects of misinformation on behavior extend to the rise in far-right platforms (Z. Chen et al., 2021), religious extremism impacting voting behavior (Das & Schroeder, 2021), disengagement in political voting (Drucker & Barreras, 2005; Finetti et al., 2020; Galeotti, 2020), intended voting behavior (Pantazi et al., 2021), and advertising aligned with fake news reports leading to increased consumer spending (Di Domenico et al., 2021; Di Domenico & Visentin, 2020). A further study examined the unconscious influences of misinformation (specifically fake news) in a priming study, demonstrating direct effects on the speed of tapping responses (Bastick, 2021).

Another behavioral effect of misinformation is more interpersonal and centers on information sharing. First, there are those studies that examine the extent of sharing behavior. Some researchers propose that this is highly prevalent: Chadwick et al. (2018) found that 67.7% of respondents admitted sharing problematic news on social media during the general election campaign in the United Kingdom in June 2017. However, others argue against claims that sharing is a cause for concern (Altay et al., 2020; Grinberg et al., 2019; Guess et al., 2019; Nelson & Taneja, 2018). Grinberg et al. (2019) found that just 0.1% of Twitter users shared 80% of misinformation during the 2016 U.S. election, whereas Allen et al. (2020) estimated that misinformation comprises only 0.15% of Americans’ daily media diet. Such estimates should be taken with a grain of salt, because of the problematic basis on which these estimates are made, but they do nevertheless imply that for many citizens the signal-to-noise ratio is fairly high.

But why would people not share misinformation? Altay et al. (2020) showed that people do not share misinformation because it hurts their reputation and that they would do so only if they were paid. This aligns with the work of Duffy et al. (2019): They found that respondents expressed regret when they shared news that later turned out to be misinformation. In explaining sharing behavior, studies suggest there are message-based characteristics, such as its ability to spark discussion (X. Chen et al., 2015), emotional appeal (Berger & Milkman, 2012; Valenzuela et al., 2019), and thematic relevance to the recipient (Berger & Milkman, 2012). Researchers have also argued for social reasons to share misinformation, that is, to entertain or please others (Chadwick et al., 2018; X. Chen et al., 2015; Duffy et al., 2019; Pennycook et al., 2021c), to express oneself (X. Chen et al., 2015), to inform or help others (Duffy et al., 2019; Herrero-Diz et al., 2020), to signal group membership (Osmundsen et al., 2021), to achieve social validation (Waruwu et al., 2021), and to address a fear of missing out (Talwar et al., 2019). Studies show that these motivations can lead people to pay less attention to the accuracy of information because other factors play more of a salient role in the sharing process beyond the content itself (Pennycook et al., 2021). This reaffirms the fundamental misinformation/disinformation distinction: Sharing misinformation involves unintentional deception driven by interactional motivations, whereas disinformation stems from intentional deception. Research indicates that there are several motivations for sharing misinformation beyond the goal of deliberately spreading false information to influence others.

Last, there are individual differences that increase the likelihood of sharing behavior and therefore lead to adverse effects of misinformation. For instance, those who believe that knowledge is stable and easy to acquire (i.e., epistemologically naive) are more likely to share online health rumors than those who believe knowledge is fluid and hard to acquire (i.e., epistemologically robust; Chua & Banerjee, 2017). Another factor that impacts one’s likelihood of sharing misinformation is the need to instill chaos, which arises from social marginalization and an antisocial disposition (Arcenaux et al., 2021). From an ideological and age perspective, conservatives are more likely to share misinformation than liberals, and older generations are more likely to share misinformation than younger age groups (Grinberg et al., 2019; Guess et al., 2019).

Beliefs, attitudes, intentions, and behavior

The logic behind studies drawing a causal connection between misinformation and behavior is that misinformation is pivotal to motivating negative behaviors. In other words, if claims that were factually inaccurate had not been encountered, then the harmful behaviors would not have occurred. There are two ways in which this presumed causal relationship between misinformation and behavior can be theorized. Either misinformation introduces new false beliefs and attitudes, and these in turn motivate a particular aberrant behavior, or misinformation reinforces preexisting false beliefs and attitudes and strengthens them enough to motivate a particular aberrant behavior (Imhoff et al., 2022; Pennycook & Rand, 2021b; Van Bavel et al., 2021).

Both of these hypotheses rest on a reliable relationship between beliefs and attitudes and behavior. Since Fishbein and Ajzen’s (1975) seminal work, psychologists have been interested in belief and attitude formation and in showing how these are associated with intention and behavior. According to Ajzen’s theory of planned behavior (Ajzen, 1991, 2012, 2020), the principle of compatibility (Ajzen, 1988) requires an explicit definition of the behavior, the target, the context in which the behavior appears, and the time frame. From this, it is then possible to apply an analysis that determines how each factor (behavior, target, context, time frame) contributes to the target of interest. If this approach is applied to the problem of misinformation, we can examine its influence on a behavior of interest. For example, after people encounter some misinformation on social media regarding climate change, when it comes to food consumption (behavior), we could predict that more meat is eaten (target), in a lunchtime canteen (context), and observed within a few days of encountering the misinformation (time frame). The determinants of the intention to act in accordance with the consumptive behavior involve beliefs and attitudes, which in this case are negatively valenced. One can then derive a belief index through the application of an expectancy-value model to calculate the strength of the belief (e.g., climate-change denial) multiplied by the subjective evaluation (e.g., negative attitudes to eating sustainably) and the outcome (e.g., not eating sustainably in a lunchtime canteen setting). Critically, there is a requirement to show how misinformation is instrumental in generating the beliefs and attitudes that can then lead to misbehaviors.
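As a rough illustration of the expectancy-value calculation mentioned above, the following Python sketch computes a belief index as the sum, across outcomes, of belief strength multiplied by the subjective evaluation of that outcome. This is the commonly used summative form of the expectancy-value model; the outcome statements, rating scales, and numbers are hypothetical and are not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """One behavioral belief about eating sustainably (hypothetical example)."""
    outcome: str       # outcome the person associates with the behavior
    strength: float    # b_i: subjective probability of the outcome, 0 to 1
    evaluation: float  # e_i: how good or bad the outcome is judged, -3 to +3


def belief_index(beliefs):
    """Expectancy-value index: sum of strength * evaluation over all beliefs."""
    return sum(b.strength * b.evaluation for b in beliefs)


# Hypothetical respondent who doubts climate change and dislikes sustainable meals.
beliefs = [
    Belief("eating sustainably helps slow climate change", 0.2, 2.0),
    Belief("sustainable meals are less satisfying", 0.8, -2.0),
    Belief("sustainable meals cost more", 0.5, -1.0),
]

index = belief_index(beliefs)
print(f"belief index: {index:.2f}")  # a negative value indicates an unfavorable attitude
```

A negative index of this kind would predict a weaker intention to eat sustainably, but by itself it says nothing about whether misinformation produced the underlying beliefs, which is the link that still has to be demonstrated.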

With the exception of the unconscious priming study (Bastick, 2021), none of the cited work examining the association between misinformation (and fake news) and behavior shows a causal link between the two. None of the evidence as yet has been able to reveal the kind of relationships needed to reliably establish the cause of behavior via changes in belief. Why are we making this strong critique? Much of the empirical work relies on self-reports of intentions or on judged willingness or judged resistance to behave in particular ways, or else demonstrates correlations between the circulation of misinformation and aberrant behaviors. In other words, they are subjective judgments about behavior, not actual direct indicators of behavior. There are some recent meta-analyses that have examined the impact of misinformation on sharing intentions (Pennycook & Rand, 2021b), people’s beliefs (Walter & Murphy, 2018), and people’s worldviews (Walter & Tukachinsky, 2020), as well as the impact of fact-checking on political beliefs (Walter et al., 2020) and people’s misunderstanding and behavioral intentions regarding health misinformation (Walter et al., 2021). Again, as yet, none of this work has been able to draw a direct connection between misinformation and specific measurable behavioral effects, aside from intentions to and judgments about willingness to behave in a particular way.

More generally, several meta-analytic studies examined the relationship between different types of beliefs, attitudes, and intentions and behavior (e.g., Glasman & Albarracín, 2006; Kim & Hunter, 1993; Kraus, 1995; Sheeran et al., 2016; Webb & Sheeran, 2006; Zebregs et al., 2015). On the whole, the reported effects of belief on behavior suggest that there is a relationship, but many authors note that their analyses are limited by the fact that they measured behavioral intentions, not behavior itself (Gimpel et al., 2020; Kim & Hunter, 1993; Xiao & Wong, 2020). In addition, there are weak relationships between beliefs and intentions (Zebregs et al., 2015), and when intentions and behaviors are examined the effects can also be weak, with many other moderating and intervening factors (e.g., personality, incentives, goals, persuasiveness of communication) explaining this weakness (Soutter et al., 2020; Webb & Sheeran, 2006). The observed weak relations are consistent with the results of research on more applied issues. For instance, in health and risk communication it is widely accepted that the mere provision of accurate information is typically not sufficient to induce behavioral change, which raises the question of why perceiving false information should be sufficient to induce aberrant behavior.

We return to the issue regarding evidence for the association between misinformation and aberrant behaviors in the concluding section, and we will address how combinations of experimental methods could be used to better locate potential directional relationships between misinformation and behavior.

Causes of the Problem of Misinformation

The digital age seems rife with misinformation, which in turn is alleged to lead to several profound societal and individual problems. A comprehensive approach to understanding the causes of these reported effects is therefore required. Why exactly is misinformation a problem, given all these apparent effects?

One of the most common explanations of the causes of misinformation in its various forms relates to the advances in technologies that produce and distribute information. The information ecosystem (i.e., the technological infrastructure that enables the flow of information across individuals and groups) is assumed to be driving the problem of misinformation, because it is the critical means by which people now source information, and it has itself been contaminated by misinformation (Pennycook & Rand, 2021b; Shin et al., 2018; Shu et al., 2016). There is nothing like the digital landscape for quick and wide dissemination of misinformation (Celliers & Hattingh, 2020; Lazer et al., 2018; Moravec et al., 2018; Tambuscio et al., 2015), and it is said to have transformed consumers into producers of information (Ciampaglia et al., 2015; Greifeneder et al., 2020; Kaul, 2012; Marwick, 2018), and misinformation (Bufacchi, 2020; Levi, 2018). Others emphasize that the sheer volume of information that is now available encourages sharing behavior through online networks (Bessi et al., 2015) and leads to biased information-selection processes with potentially adverse consequences (Hills, 2019).

Also seen as facilitating the proliferation of misinformation are technological tools such as recommender systems (Fernandez & Bellogín, 2020, 2021), Web platforms (e.g., Han et al., 2022), and social media (Allcott et al., 2019; Chowdhury et al., 2021; Durodolu & Ibenne, 2020; Pennycook & Rand, 2021a, 2021b; Valenzuela, Halpern, et al., 2019). Also, social-media environments allow swarms of bots to disseminate or obscure information (Bradshaw & Howard, 2017). Moreover, these can also be used to generate Sybil attacks (Asadian & Javadi, 2018), when a single entity emulates the behaviors of multiple users and attempts to create problems both for other users and the network itself. In some cases, sybils are used to make individuals appear affluent to give the impression that their opinions are highly endorsed by other social-media users, when in fact this is artificially generated (Ferrara et al., 2016). Thus, not only is the content artificially generated, but features used to judge its reliability, popularity, and interest from others are also artificially manipulated.

The digitization of media also enables producers of (mis)information to access sophisticated and extremely convincing tools of digital forgery, such as deepfakes, the digital alteration of an image or video that convincingly replaces the voice, the face, and the body of one individual with those of another (Levi, 2018; Steensen, 2019; Whyte, 2020). Even accurate news of actual events can be distorted as successive users add their own contexts and interpretations (Peck, 2020). Opaque algorithmic curation that takes humans out of the loop aims to maximize consumption and interactions, possibly causing viral appeal to take precedence over truthfulness (Rader & Gray, 2015). However, technology also offers ways to tackle misinformation. Bode and Vraga (2017) showed that comments on social media are as effective as algorithms in correcting misperceptions, and technology has also contributed to the development of tools to track misinformation (Shao et al., 2016).

The media landscape has certainly been fundamentally changed by the Internet and social media, but historians have also argued that although gossip is a permanent and widespread feature of social exchanges online, its transmission and diffusion still retain the same core characteristics as pre-Internet, pretelecommunications, prewriting press (Darnton, 2009; Guastella, 2017; Shibutani, 1966). For instance, Guastella (2017) argued that information has always been diffused through open-ended, chainlike transmission. This hinders the ability to verify any single item of information, because the source responsible is by its very nature obscured. In other words, communication is frequently disorderly and untraceable, whether the issue at hand is a rumor in antiquity or in the present day. Cultural historian Darnton (2009) offered a different perspective but drew similar conclusions regarding the role of technology in misinformation. He claimed that we are not witnessing a change in the information landscape but a continuation of the long-standing instability of texts. Information is no more unreliable today than in the past, because news sources have never corresponded to actual events.

According to these views, although new channels for misinformation have emerged, the conditions in which it is created and spread have not changed as much as one might think. In fact, viewing misinformation through a historical as well as a philosophical lens not only sheds light on shifts regarding the medium of information transmission but also informs how to view the problem of misinformation in the main. We now turn our attention to this research that explores why misinformation may not be cause for alarm and the scholars from different disciplines who share this perspective.

Misinformation: unsounding the alarm?

Misinformation is not a new phenomenon (Allcott & Gentzkow, 2017; De Beer & Matthee, 2021; Kopp et al., 2018; Scheufele & Krause, 2019; Waldman, 2018). For instance, fake news was recorded and disseminated through early forms of writing on clay, stone, and papyrus so that leaders could maintain power and control (Burkhardt, 2017).

Altay et al. (2021) deconstruct several alarmist misconceptions about the problem of misinformation. One is that misinformation engulfs the Internet, specifically social media, and as a result falsehoods spread faster than the truth. For example, according to a BuzzFeed report (2016), the top 20 fake news stories on Facebook resulted in nine million instances of engagement (likes, comments, shares) between August and November 2016. However, if all 1.5 billion Internet users engaged with one piece of content a week, these 9 million instances would represent only 0.042% of all engagements (Watts & Rothschild, 2017). Also, fact-checking and science-based evidence were found to be retweeted more than false information during the pandemic (Pulido et al., 2020). If, as some have claimed, misinformation has always existed as an inherent feature of human society (Acerbi, 2019; Allcott & Gentzkow, 2017; Nyhan, 2020; Pettegree, 2014), then the current focus on its presence online may reflect methodological convenience (i.e., it can be measured more easily; Tufekci, 2014), overlooking misinformation on television, newspapers, and the radio.
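The proportion quoted above can be checked with a short back-of-the-envelope calculation. The Python sketch below is illustrative only: the length of the observation window is not stated in the text, so the figure of roughly 100 days (about 14 weeks between August and November 2016) is an assumption, chosen because it reproduces the reported percentage.

```python
# Figures quoted in the text
FAKE_NEWS_ENGAGEMENTS = 9_000_000     # engagements with the top 20 fake news stories
USERS = 1_500_000_000                 # users assumed to be active
ENGAGEMENTS_PER_USER_PER_WEEK = 1     # deliberately conservative activity rate

# Assumption (not stated in the text): the window spans roughly 100 days
WEEKS = 100 / 7

total_engagements = USERS * ENGAGEMENTS_PER_USER_PER_WEEK * WEEKS
share_percent = 100 * FAKE_NEWS_ENGAGEMENTS / total_engagements

print(f"total engagements in window: {total_engagements:.3g}")
print(f"fake news share of engagements: {share_percent:.3f}%")
```

Under these assumptions the share comes out at about 0.042%, matching the figure attributed to Watts and Rothschild (2017). A higher assumed activity rate per user would make the share smaller still.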

Another misconception is conflating the volume of content engagement with content belief. Reasons for engaging with misinformation are numerous, from expressing sarcasm (Metzger et al., 2021) to informing others (Duffy et al., 2019). Thus, inferring acceptance from consumption can exaggerate the negative effects of misinformation (Wagner & Boczkowski, 2019). Consumption of information is informed by prior beliefs (Guess et al., 2019, 2021), which suggests that it is not strictly instrumental in the generation of new false beliefs. People are not necessarily misinformed but may simply be uninformed; this is also the point at which pseudo-opinions can emerge (Bishop et al., 1980), so this is an important distinction (Scheufele & Krause, 2019). Luskin and Bullock (2011) observed that 90% of surveys lack a “don’t know” response, which increases the likelihood of an incorrect answer by 9%.

These misperceptions suggest that concerns around misinformation exceed what can be inferred from the available evidence, and that the causal link between misinformation and behaviors may be exaggerated (Scheufele et al., 2021). This is also why, as Krause et al. (2022) argued, we are not in an “infodemic.” This term, frequently used during the COVID-19 pandemic, refers to an alleged surplus of false information (World Health Organization, 2022). Our complex information ecology presents a challenge for disentangling the relationship between misinformation and behavior, and science itself grapples with the volatility of the evidence base as it develops (Osman et al., 2022); determining what constitutes misinformation is therefore frequently akin to shifting sand.

From a more historical perspective, Scheufele et al. (2021) showed that the worry regarding the current circulation of misinformation neglects examples predating the advent of digitization of information. The emergence of communication studies in the United States in the 1920s is perceived to be the result of concerns over the aberrant influence of the media (Wilson, 2015). New media technologies were seen as responsible for the growing disconnect between what people believed and the real world in that era (Lippman, 1922). Panics also arose in reaction to the arrival of telegraphy in the early 19th century (Van Heekeren, 2019). Before that, after the invention of the printing press in 1440 granted open access to knowledge, concerns from the Catholic Church resulted in the 1560 publication of the Index of Forbidden Books. In all such attempts to sound the alarm, and then to address the alarm around technologies offering greater access of information to the populace, failure ensued, because ideas continue to circulate even once speech is restricted (Berkowitz, 2021). Only when we consider misinformation through a historical lens can we learn that the current situation is arguably preferable, although admittedly still problematic, compared with previous information eras (Van Heekeren, 2019), because, as explained earlier, traditional media can learn from the past and focus on verification and fact-checking as a way to reassert their authority. Furthermore, digital media has devised means of increasing the likelihood of self-correction (e.g., debunking and fact-checking websites).

The discussion in this section presents obstacles for the argument that technological advances lead to misinformation which, in turn, has a range of negative ramifications. Nonetheless, if this position is pursued, one might ask how, then, can we make sense of why misinformation is perceived to be a problem? We address this question with reference to historical epistemology, which is concerned with the process of knowing. We argue that knowledge is currently guided by the concept of objectivity and its associated objects, such as statistics and DNA, but that this is a recent development; knowledge has previously been guided by other concepts, such as subjectivity relying on rhetoric. Thus, how people arrive at ground truth is not as consistent as one might assume. This suggests that the worries around misinformation are rooted in a perceived shifting away from norms of objectivity and empirical facts.

It is helpful to begin with the emergence of rhetorike—the art of public speaking (Sloane, 2001). As Aristotle’s Art of Rhetoric dictates, rhetoric was believed to communicate, rather than discover, truth through ethos (trustworthiness), logos (logic), and pathos (emotion). Thus, the primary vehicle for both ancient philosophy and rhetoric was orality which, according to Guastella (2017), meant that information was engulfed by the disorderly and unstable flow of time. Chirovici’s (2014) work on rumor throughout the ages also revealed people’s tendency to favor stirring the listener’s imagination over veracity. This is exemplified in Grant’s (2004) research, which showed how historical writing in antiquity contained numerous instances of misinformation. For example, Roman historian Titus Livy was known for embellishing his accounts to such an extent that scholars debated whether he should be regarded as a novelist or a historian. According to Grant, Titus Livy was motivated to build his depictions of events on blatantly fictitious legends. The upshot of the oral tradition was a series of historical accounts that were incomplete, untrustworthy, or entirely false.

During the Middle Ages, the process of establishing knowledge transformed with the emergence of the modern fact (e.g., Shapiro, 2000; Poovey, 1998; Wootton, 2015). Before the 13th century, written records regarding one’s financial affairs were kept secret and stored away in locked chests with other important documents such as heirlooms, prayers, and IOUs (Poovey, 1998). However, Poovey (1998) observed that double-entry bookkeeping by merchants was a catalyst for the development of a new “epistemological unit,” otherwise known as the modern fact. This had two repercussions: (a) Private information became a vehicle for public knowledge, and (b) the status of numbers was elevated such that they were now positively associated with accuracy and precision, rather than negatively associated with supernaturalism and symbolism. Wootton (2015) also referred to the critical role of double-entry bookkeeping in the mathematization of the world. The value of numerical descriptions produced new standards of evidence that focused on accurately measuring natural phenomena and precisely characterizing objects for practical use (Winchester, 2018).

The arrival of the printing press solidified a shift in the meaning of knowing (Wootton, 2015): Facts occupied the new cornerstone of knowledge and became synonymous with truth. The assumption that facts have always been integral to knowledge is evidenced by their use as a reference point for ground truth among those attempting to manage and detect misinformation (e.g., Hui et al., 2018; Rashkin et al., 2017; Shao et al., 2016; Tambuscio et al., 2015). Once facts became embedded in public discourse, the role of rhetoric faded. This is best reflected in the Royal Society’s new motto in 1663 (nullius in verba, meaning “take nobody’s word for it”) (Wootton, 2015). As Bender and Wellberry (1990) succinctly observed, “Rhetoric drowned in a sea of ink.” By the 18th century, knowledge stemmed from an objective relationship between an individual and the natural world in the form of measurements and facts. Crucially, knowledge could now be widely communicated to an increasingly literate public.

This historical and philosophical research on misinformation, as well as studies by researchers from other disciplines (e.g., Altay et al., 2021; Berkowitz, 2021; Krause et al., 2022; Van Heekeren, 2019), is distinct from the work reviewed earlier. This alternative position regards misinformation as neither new nor especially concerning; it is treated as part and parcel of a variety of linguistic and communication styles that impact every aspect of the way people encounter information. So if we take the position that the problem is not novel and the way we use information to establish ground truth is never entirely stable, then what else could be causing the current concern over misinformation? The work of those reviewed in this section (particularly Altay et al., 2021; Krause et al., 2022) lays the foundations for us to propose why we believe misinformation is perceived to be a problem.

Intersubjectivity

The heart of our argument

The critical issues of misinformation can be condensed as follows: Academic literature, traditional news media (e.g., BBC, Reuters, CNN), and public policy (e.g., the European Commission, the World Health Organization, the World Economic Forum) are sounding the alarm because the digital information ecosystem allows everyone to generate and distribute misinformation. Technological advances have not only increased access to better quality information but also created a corrupt ecosystem that enables a greater supply of poor information, which in turn has even greater potential for negative impact on behavior offline.

We pose the following argument: Advances in information technology over the past few decades have increased the transparency of interactions between people. Simply observing people interact with each other online is not an issue in and of itself. However, by exposing these interactions, current information systems pave the way for a simple and erroneous inference: The vast volume of interactions must also mean there are more opportunities for people to deviate from objectivity, because they can more easily coordinate beliefs about the world with one another (intersubjectively). In other words, there is a perception that intersubjectivity is emerging as a new way of knowing, and this threatens established (though historically fairly young) norms of objectivity. This leads to a tension between ground truths that are established through traditional institutions and agents that are typically considered authorities presiding over ground truths, and the processes (e.g., via social media) used to establish knowledge outside of these institutions. The problem of misinformation as conceptualized by those sounding the alarm is therefore the perceived misalignment between laypeople’s coordination efforts for interpreting entities and mechanisms for objectively establishing these entities.

We argue that the currently available evidence does not support a clear link between beliefs generated or reinforced through misinformation and aberrant behavior. The dynamics of belief formation are far more complicated. In addition, even if intersubjectivity does replace objectivity as the primary way of knowing, research demonstrates that our relationship with epistemic concepts (e.g., objectivity, belief, probability) and objects (e.g., metrology, statistics, rhetoric) has been dynamic throughout history. Longing for a pre-Internet era implies a return from such current shifts in knowing to some point in time when misinformation was less of a problem, but when was that? Last, some coordination within the scientific community is required to determine what evidence meets the criteria of objectivity, because it does not happen on its own outside of group consensus. In the next section we expand on what we mean by intersubjectivity and how it can be a useful conceptual device for understanding why there is such alarm associated with misinformation.

Tensions between intersubjectivity and objectivity

We define intersubjectivity as a coordination effort between two or more people to interpret entities in the world (ideas, events, people, observations) through social interaction. Our definition of intersubjectivity is informed by prior definitions proposed in philosophy (Schuetz, 1942), economics (Kaufmann, 1934), psychology (W. James, 1908), psychoanalysis (Bernfeld, 1941), psycholinguistics (Rommetveit, 1979), and rhetoric (Brummett, 1976). The core idea of intersubjectivity, according to Brummett (1976), is that meaning arises from our interactions. Specifically, he argued that there is no objective reality, in that sensations, perceptions, beliefs, and experiences are meaningless on their own. Rather, it is our experiences with other people that imbue these sensations, perceptions, beliefs, and other constructs of knowing with meaning, namely emotional valence and moral judgments.

How does this contrast with objectivity? According to Reiss and Sprenger (2020), observations are objective if they are (a) based on publicly observable phenomena, (b) free from bias, and (c) accurate representations of the world. There is not only consensus on how these observations should be made and interpreted, but this information and the ways by which it is obtained are also made visible for scrutiny (e.g., in the form of academic publications). This signals that the information has met the necessary standards while simultaneously reinforcing them. Crucially, if these observations do meet the criteria, they are regarded as contributing to knowledge. Objectivity converts observations into facts, which have become synonymous with ground truth, and this functions to reduce uncertainty about the world. Indeed, the fact-as-truth sense developed from the fact-as-occurrence sense (Poovey, 1998).

Intersubjectivity as a candidate for why misinformation is a big problem

So, why is intersubjectivity supposedly replacing objectivity, and why is this a cause for concern with respect to misinformation? Regarding the first question, social media is inherently about connecting people, so the number of visible interactions is potentially vast. Regarding the second question, the concern is that intersubjectivity is free from the formal rules of generating objective truths. In short, it looks as though more people are going it alone in establishing knowledge, and this has now been better exposed through advanced information technologies. Anyone can now participate because the affordances encourage intersubjective knowledge production and sharing. On this view, intersubjectivity hampers the influence of facts in helping people accurately understand the world, leading to an uncontrollable breeding ground for misinformation, which then produces more aberrant behaviors.

In addition, intersubjectivity can appear to be a good candidate for generating misinformation, and there is research suggesting that social relationships and interactions, rather than objective methods, are at the foreground of knowledge. Kahan (2017) drew on Sherman and Cohen’s (2002) identity-protective cognition, which argues that culture is both cognitively and normatively prior to fact. Kahan used this theory to explain his findings that people are more likely to hold misconceptions if those misconceptions are consistent with their values. Similarly, Oyserman and Dawson (2020) argued that during the United Kingdom’s 2016 referendum on European Union membership, people used simple identity-based reasoning rather than complex information-based reasoning to inform their voting decision. According to Margolin’s (2021) theory of informative fictions, there are two types of information in any exchange: property information (object-focused) and character information (agent-focused). Misinformation therefore occurs when people prioritize character information despite the negative impact on property information. These ideas are also reflected in Pennycook et al.’s (2021c) study, which found that although people care about the accuracy of the information that they share with others, signaling political affiliation is more important because of the social-media context.

Perceived intersubjectivity as a candidate for why misinformation is a big problem

We each have a vast network of social ties, each with its own strength (strong/weak) and valence (positive/negative). This complicates which claims are believed and for how long, for two reasons. First, our relationships to others are not static but are susceptible to transformation during an interaction. Second, if a claim is circulating widely, whether it is in a community or on a news website, then it becomes available for negotiation across a multitude of interactions with individuals. There are two consequences: First, an individual’s likelihood of believing a claim is an aggregate of their interactions; and second, the interpretation of an entity (e.g., observation of the world) is constantly in flux. These consequences make intersubjectivity a candidate for explaining why there is so much alarm about the proliferation and consequences of misinformation. On the other hand, intersubjectivity also helps to expose why this perception is potentially illusory.

Knowledge can emerge from an interaction, but an interaction or sharing of false information does not equate to evidence that knowledge has been negatively impacted in a fundamental way. Conflating the diffusion of information with its adoption is problematic because engagement, through likes or shares, does not automatically mean belief (Altay et al., 2021). The billions of interactions we see online (Boyd & Crawford, 2012), whether they contain misinformation or not, may seem concerning but do not necessarily reflect all agents’ inner state of mind (Bryanov & Vziatysheva, 2021; Fletcher & Nielsen, 2019; Wagner & Boczkowski, 2019).

Intersubjectivity does not subscribe to the transparency of objectivity. Although scientific methods are explicitly designed to distinguish between competing hypotheses and, ideally, between truth and falsehood, intersubjectivity lacks this normative appeal. However, this does not mean that social-negotiation processes of generation and transmission cannot have their own corrective mechanisms, and they may draw attention to consequential, and potentially false, claims that require scrutiny. An example of this is the Reddit community (subreddit) ChangeMyView, where individuals post their argument about a claim and ask other users to change their mind (e.g., “Influencers are not only pointless, but causing active harm to society”; “Statistics is much more valuable than trigonometry and should be the focus in schools”). On occasion, this seems to be successful, as indicated by the individual’s responses to the proposed counterarguments, and research has been conducted into the language of persuasion in this community (e.g., Musi, 2018; Priniski & Horne, 2018; Wei et al., 2016).

Intersubjectivity in public debate, especially in the absence of simple ground truths, can resemble features of scientific discourse (Brummett, 1976; Trafimow & Osman, 2022). Statistics, which are widely regarded today as a window to ground truth, were initially doubted in the early 19th century precisely because they were treated as another tool of rhetoric masquerading as irrefutable, legitimate evidence (Coleman, 2018). Thus, the emergence and eventual domination of statistics incorporated an element of coordination and negotiation among scientists to establish statistics as a valid representation of, and inference mechanism for, observations of the world.

Although one might be tempted to consider objectivity and intersubjectivity as mutually exclusive, they both feature in the scientific selection of ideas (Heylighen, 1997). A recent example occurred when various hypotheses regarding the origins of the COVID-19 pandemic were ruled out because they were initially regarded by many in the scientific community as conspiratorial; these theories were later recognized as valid hypotheses (Osman et al., 2022). This demonstrates how knowledge and claims can be in flux when they are interpreted by different agents. It also highlights the difficulties of being objective on a topic when the evidence is accumulating in real time and when political factors try to steer what constitutes legitimate and illegitimate investigation. Moreover, there is a historical precedent for censorship when authorities or experts (scholars, journalists, politicians) assert themselves in order to address the destabilization of epistemological foundations, especially when it presents an existential as well as a political threat (Berkowitz, 2021). Such strategies therefore serve to maintain not just power but perceived order in the world. Intersubjectivity and objectivity, although different, can coexist and have coexisted in the past, which offers reassurance to those who are concerned that intersubjectivity is causing chaos in the form of misinformation (Krause et al., 2022).

Last, there needs to be a consistent way in which deviations from ground truth are managed. If researchers see the present situation as more problematic than ever before, this indicates that to them pre-Internet society was preferable and had recovered from past shifts in the value of epistemic concepts. In other words, if the present is contrasted with the past to highlight the current problem of misinformation, then there needs to be an acknowledgment that past shifts, which caused great concern at the time, were not as problematic as those of the present day. That past shifts have not been problematic does not mean that future shifts will also be nonproblematic. Rather, there is currently a lack of evidence that objectivity has been displaced by intersubjectivity to the extent that it is the default mode of knowing, and that misinformation arising from this way of knowing impacts behavior. Although we do not deny the possibility that misinformation may be a serious problem at some point, it seems premature to reach this diagnosis at the moment.

Conclusion

In reviewing the perceived problem of misinformation, we found that many disciplines agree that the issue is not novel, but that modern technology generates unprecedented quantities of misinformation. This exacerbates its potential to cause harm both on the individual level and the societal level. The hyperconnectivity of today’s information and (social) media landscape is seen as facilitating the generation and distribution of misinformation. In turn, this leads to a perceived increase in establishing worldviews intersubjectively, which deviates from the epistemic objectivity heralded by traditional institutions and gatekeepers of knowledge and truth. We have proposed our own analysis on the fundamentals of the problem of misinformation, and through the lens of intersubjectivity we have provided a conceptual framework to argue two essential points.

First, today’s informational ecosystem is the mechanism by which we can observe transparently the multitude of interactions that it hosts. Seeing the volume of daily interactions in, for instance, social-media networks leads to the inference that more people are deviating from truth, because they can better coordinate their own subjective knowledge of the world outside of established facts, that is, intersubjectively. We argue that the fact that technology is increasing the number of visible interactions does not necessarily suggest that there is anything profoundly new about the status of epistemic objects. The interactions people have when sharing what they think and feel are not equivalent to evidence that epistemic objects in and of themselves have changed. This, we propose, explains why misinformation is viewed as an existential threat. Further, in our view, the current evidence is also not sufficient to justify this conclusion because correlation is often viewed as causation.

Second, historical epistemology exposes what is often ignored: The generation of knowledge is a process of establishing conventions for the best way to arrive at ground truths. We make this point from a realist, not a relativist, perspective. There are objective facts. Nonetheless, historical epistemology shows that whereas there is an absence of stable diagnostic criteria for ground truth, the mechanism by which objective facts are acquired, characterized, and communicated has changed over the course of human history. This, we propose, explains why misinformation cannot be examined without the recognition that distortion affects any act of communicating truth.

What does future work on misinformation need to consider?

Future work needs to address three key issues. First, it must show that misinformation in a particular context of interest has widespread potential to establish or considerably strengthen related beliefs. This is important because the Internet and social media may seem rife with misinformation, but reliable estimates of its prevalence and impact on recipients are hard to come by (e.g., Allen et al., 2020; Altay et al., 2021). Thus, precisely mapping the (social) media landscape to gauge the extent of the problem is an important first step in understanding its potential impact on individuals’ beliefs and public discourse. Of course, even if the sheer amount of misinformation is small compared with legitimate information, it might still have a severe impact on people’s beliefs and attitudes, as well as on their evaluation of evidence. Again, however, it can be debated to what extent misinformation influences the public’s beliefs on a large scale (e.g., British Royal Society, 2022). Thus, the jury is still out regarding the severity of the problem.