Abstract

Birefringence, an inherent characteristic of optically anisotropic materials, is widely utilized in imaging applications ranging from material characterization to clinical diagnosis. Polarized light microscopy enables high-resolution, high-contrast imaging of optically anisotropic specimens, but it requires mechanical rotation of the polarizer/analyzer and relatively complex optical designs. Here, we present a form of lens-less polarization-sensitive microscopy capable of complex-field and birefringence imaging of transparent objects without any optical lens or moving parts. Our method exploits an optical mask-modulated polarization image sensor and a single-input-state LED illumination design to obtain complex and birefringence images of the object via ptychographic phase retrieval. Using a camera with a pixel size of 3.45 μm, the method achieves birefringence imaging with a half-pitch resolution of 2.46 μm over a 59.74 mm² field-of-view, which corresponds to a space-bandwidth product of 9.9 megapixels. We demonstrate the high-resolution, large-area phase and birefringence imaging capability of our method by presenting phase and birefringence images of various anisotropic objects, including a monosodium urate crystal and excised mouse eye and heart tissues.

Subject terms: Polarization microscopy; Lasers, LEDs and light sources

Introduction

Since the invention of the first compound microscope, numerous hardware improvements, including better optical components and image sensors, have been made. However, its basic design, consisting of multiple lenses that form an optical image of an object, has not changed significantly. This conventional imaging system is beset by an inherent trade-off between spatial resolution and field-of-view (FoV), which limits its space–bandwidth product (SBP), calculated as the FoV divided by the square of the spatial resolution1. In other words, the fine details of an object can be imaged with high resolution only over a small region. Because many applications demand a large SBP, conventional imaging designs rely on complicated optical and mechanical architectures to increase it, resulting in bulky and expensive imaging setups.
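As a quick worked example of this definition, the short sketch below plugs in the FoV (7.07 mm × 8.45 mm) and half-pitch resolution (2.46 μm) reported later for PS-PtychoLM. The function name is ours, chosen only for illustration.

```python
def space_bandwidth_product(fov_mm2, resolution_um):
    """SBP = FoV / (resolution)^2, i.e. the number of resolvable spots."""
    fov_um2 = fov_mm2 * 1e6  # 1 mm^2 = 10^6 um^2
    return fov_um2 / resolution_um ** 2


# Figures reported for PS-PtychoLM: 7.07 mm x 8.45 mm FoV, 2.46 um half-pitch resolution.
sbp = space_bandwidth_product(7.07 * 8.45, 2.46)
print(f"SBP = {sbp / 1e6:.1f} megapixels")  # SBP = 9.9 megapixels
```

Because resolution enters squared, halving the resolvable feature size at a fixed FoV quadruples the SBP, which is why conventional objectives trade FoV for resolution so steeply.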

In recent years, as computational algorithms have advanced and computing resources have become more powerful, various computational microscopy techniques have been developed to overcome the SBP limitation of conventional imaging systems2–5. Lens-less microscopy is one of the representative computational imaging platforms developed to address the SBP limitation while enabling a “lean” optical architecture6–9. Because the final image is obtained at unit magnification by placing the object directly on the image sensor, the imaging FoV is limited only by the size of the image sensor, typically a few millimeters, and the resolution is determined by the pixel size of the image sensor9. Moreover, various pixel super-resolution methods can be employed to further enhance the resolution, using measurement diversity based on multiple object heights10,11, object/sensor translation12,13, multiple wavelengths14,15, etc. Synthetic aperture-based lens-less imaging also enables high-resolution imaging by enlarging the effective numerical aperture (NA) using angle-varied illumination16. Lens-less microscopes can also retrieve the complex information of the object from intensity-only measurements via back projection and phase retrieval algorithms. Taking advantage of these features, namely high information throughput, small form factor, and cost-effectiveness, various imaging modalities such as phase and fluorescence imaging have been demonstrated on lens-less platforms17,18, and numerous applications have been proposed, including cell observation19, disease diagnosis6, and air quality monitoring20.

Birefringence refers to the polarization-dependent refractive index of optically anisotropic materials, which results from the ordered arrangement of microstructures within the materials21,22. Birefringence is found in a myriad of natural and engineered materials, including collagen, reticulin23, and various synthetic two-dimensional materials24. This intrinsic property has thus been broadly studied for applications such as material inspection25–27 and biomedical diagnosis28–30. Polarized light microscopy (PLM) enables assessment of anisotropy properties such as phase retardation and optic-axis orientation in transparent materials. PLM operation entails capturing multiple images while mechanically rotating the polarizer and analyzer of a conventional optical microscope, which is a relatively complex process. Furthermore, PLM, like other conventional microscopes, suffers from a limited SBP. To address these limitations, various imaging methods such as digital holographic microscopy31, ptychography32, single-pixel imaging33, differential phase-contrast microscopy34, and lens-less holographic microscopy35,36 have been explored for birefringence imaging. Among them, lens-free holographic polarization microscopy enables large-area birefringence imaging in a lens-free manner35, but two sets of raw holograms must be recorded under illumination in two different polarization states, which requires precise alignment of the recorded images. Recently, a promising approach combining deep learning with lens-free holographic polarization microscopy has been proposed to achieve single-shot birefringence imaging, although it requires training on a large dataset37.

Here, we present a form of lens-less polarization-sensitive (PS) microscopy that allows for inertia-free, large-area, complex and birefringence imaging. Our polarization-sensitive ptychographic lens-less microscope (PS-PtychoLM) adopts the high-resolution, large-FoV imaging capability of a mask-modulated ptychographic imager38,39, while quantitatively measuring the birefringence properties of transparent samples with single-input-state illumination and a polarization-diverse imaging system. Compared with conventional polarization imaging techniques, PS-PtychoLM involves no mechanical rotation of the polarizer/analyzer and no translation of the object or light source, which makes the system more robust and easier to operate. Bai et al.40 previously demonstrated a computational PLM capable of inertia-free birefringence imaging of transparent anisotropic crystals using a polarization image sensor. However, that method is built on an optical microscope with bulky optics and does not provide complex information (i.e., amplitude and phase). In contrast, our method is lens-less and provides both complex and birefringence information of objects over a large FoV. PS Fourier ptychographic microscopes have also been introduced41,42, but they were demonstrated on optical microscope platforms, which is distinct from our lens-free implementation.

We employ a Jones-matrix analysis to formulate the PS-PtychoLM image reconstruction process and evaluate various pixel demosaicing methods to identify the optimal demosaicing scheme for the mask-modulated ptychographic platform. We demonstrate high-accuracy PS imaging over a large FoV by presenting birefringence images of various anisotropic objects, including a monosodium urate (MSU) crystal and mouse eye and heart tissue sections.

Results

PS-PtychoLM optical setup

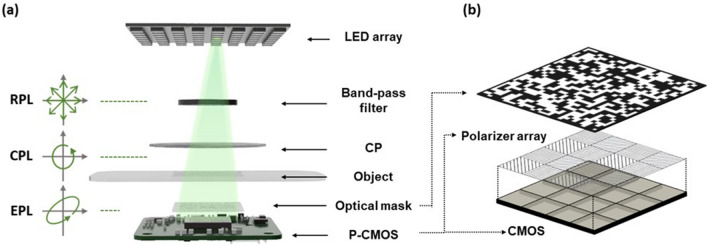

Our PS-PtychoLM was built on a mask-modulated ptychographic imager38,39, which provides inertia-free complex image reconstruction with a programmable LED array. A schematic of the PS-PtychoLM is depicted in Fig. 1. We employed a custom-built programmable LED array for angle-varied illumination. The LED array was composed of high-power LEDs (Shenzhen LED color, APA102-202 Super-LED, center wavelength = 527 nm, full-width at half-maximum bandwidth ~ 30 nm) and featured a 4 mm pitch with 9 × 9 independently controllable elements. The central 9 × 9 elements of the LED array were used, and a total of 81 raw intensity images were captured, one for each LED illumination. The distance from the LED array to the object was set to ~ 400 mm, providing illumination angles of −2.29° to 2.29°. Before illuminating the object, the LED light passed through a band-pass filter (Thorlabs, FL514.5-10, center wavelength = 514.5 nm, full-width at half-maximum bandwidth = 10 nm) to increase temporal coherence, after which it was circularly polarized using a zero-order circular polarizer (Edmund Optics, CP42HE). If the object is optically anisotropic, the light passing through it becomes elliptically polarized. The transmitted light was then intensity-modulated by a binary amplitude mask (Fig. 1b). The mask was fabricated by coating a 1.5-mm-thick soda-lime glass slab with chromium in the random binary pattern employed in Refs. 38,39. The transparent-to-opaque area ratio of the mask was designed to be 1:1, and the pattern had a feature size of 27.6 μm. The modulated light was then captured using a board-level polarization-sensitive complementary metal–oxide–semiconductor image sensor (P-CMOS, Lucid Vision Labs, PHX050S1-PNL) with 2048 × 2448 pixels and a pixel size of 3.45 μm, providing a FoV of 7.07 mm × 8.45 mm.
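The illumination-angle range and the FoV quoted above follow from simple geometry. The sketch below reproduces them, assuming the LEDs lie on a flat square grid centered on the optical axis (our assumption; the text states only the pitch, element count, and distance). Angles are computed along one axis; the corner LEDs of the grid have a somewhat larger polar angle.

```python
import math

# Values stated in the text.
PITCH_MM = 4.0        # LED pitch
N_PER_AXIS = 9        # LEDs used along each axis
DISTANCE_MM = 400.0   # LED array to object (approximate)
PIXEL_UM = 3.45       # sensor pixel size
ROWS, COLS = 2048, 2448

# Transverse LED positions relative to the central LED (assumed centered on axis).
positions_mm = [(k - (N_PER_AXIS - 1) / 2) * PITCH_MM for k in range(N_PER_AXIS)]
angles_deg = [math.degrees(math.atan(x / DISTANCE_MM)) for x in positions_mm]

# Lens-less imaging at unit magnification: the FoV equals the active sensor area.
fov_mm = (ROWS * PIXEL_UM / 1000.0, COLS * PIXEL_UM / 1000.0)

print(f"illumination angles: {angles_deg[0]:.2f} to {angles_deg[-1]:.2f} deg")  # -2.29 to 2.29 deg
print(f"FoV: {fov_mm[0]:.2f} mm x {fov_mm[1]:.2f} mm")  # 7.07 mm x 8.45 mm
```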
The camera was equipped with a pixelated array of linear polarization filters oriented at 0°, 45°, 90°, and 135°, one orientation per pixel within each group of four pixels, allowing the information of each polarization state to be captured simultaneously (Fig. 1b). PS-PtychoLM could thus acquire the polarization-resolved images required for computing the birefringence distribution without mechanical rotation of polarizers or a variable retarder.
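Separating a raw frame into its four polarization channels amounts to strided slicing of the 2 × 2 filter mosaic. A minimal NumPy sketch follows; the angle-to-position assignment in `LAYOUT` is hypothetical and should be taken from the sensor datasheet. For a 2048 × 2448 frame, each channel image is 1024 × 1224 pixels.

```python
import numpy as np

# Hypothetical 2 x 2 superpixel layout: polarizer angle (deg) -> (row, col) offset.
# Check the actual assignment in your sensor's datasheet before using this.
LAYOUT = {90: (0, 0), 45: (0, 1), 135: (1, 0), 0: (1, 1)}


def split_channels(raw):
    """Split a polarization mosaic into four quarter-resolution channel images."""
    if raw.shape[0] % 2 or raw.shape[1] % 2:
        raise ValueError("mosaic dimensions must be even")
    return {angle: raw[r::2, c::2] for angle, (r, c) in LAYOUT.items()}


# Toy 4 x 4 frame standing in for a 2048 x 2448 raw image.
raw = np.arange(16, dtype=float).reshape(4, 4)
channels = split_channels(raw)
print(channels[0])  # samples at odd rows and odd columns: 5, 7, 13, 15
```

Each channel then has missing values at three out of every four pixel positions, which is what the demosaicing step must fill in.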

Figure 1.

PS-PtychoLM. (a) Schematic of the polarization-sensitive ptychographic lens-less microscope (PS-PtychoLM). A programmable light-emitting diode (LED) array is turned on sequentially to generate angle-varied illumination. Unpolarized LED light passes through a band-pass filter and a circular polarizer (CP), becoming circularly polarized before it illuminates the object. (b) The light transmitted through the object is intensity-modulated by an optical mask and then recorded through the four polarization channels of a polarization camera (P-CMOS). (RPL: randomly polarized light, CPL: circularly polarized light, EPL: elliptically polarized light).

Numerical simulation

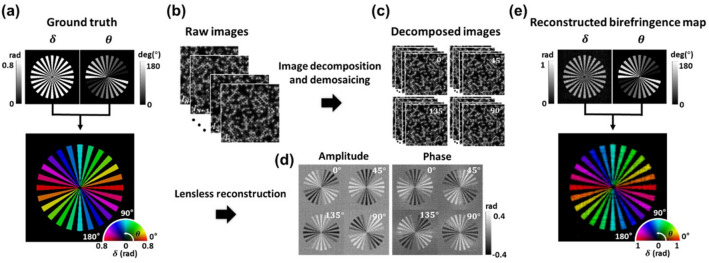

Figure 2 outlines the PS-PtychoLM image reconstruction procedure and numerical simulation results for a simulated birefringent object. We considered a birefringent Siemens star target, in which each sector had a phase retardation of π/4 and an optic axis oriented along its longer sides. The corresponding birefringence map is presented as a pseudo-color map in which color and intensity denote the optic-axis orientation (θ) and retardation (δ), respectively (Fig. 2a). The PS-PtychoLM measurements were then simulated numerically (Fig. 2b). The images recorded by the P-CMOS under angle-varied LED illumination were first decomposed into four images corresponding to the different polarization channels. The resulting images were then processed by polarization demosaicing, which estimates the polarization information missing at each pixel from neighboring pixels (Fig. 2c). For demosaicing, we used Newton's polynomial interpolation model43 because it recovered images with higher fidelity in both low- and high-frequency features than the other schemes (see Methods). The amplitude and phase images of each polarization channel were then reconstructed using a ptychographic reconstruction algorithm44 (Fig. 2d) and used to obtain the phase retardation and optic-axis orientation of the birefringent object based on the Jones-matrix analysis. The reconstructed birefringence information, presented in Fig. 2e, agrees with the ground-truth map (Fig. 2a). Details of the forward imaging model and reconstruction algorithm are provided in Supplementary Information Sects. 1 and 2.
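The demosaicing step can be illustrated with its simplest variant, bilinear interpolation (the BI baseline compared in Methods), rather than the Newton's-polynomial scheme selected here. The NumPy sketch below fills each channel by normalized convolution of the sparse samples with a 3 × 3 bilinear kernel; the 2 × 2 `LAYOUT` is a hypothetical assignment, and the function names are ours.

```python
import numpy as np

# Hypothetical 2 x 2 layout: polarizer angle (deg) -> (row, col) offset in the mosaic.
LAYOUT = {90: (0, 0), 45: (0, 1), 135: (1, 0), 0: (1, 1)}

# Bilinear kernel; on a lattice sampled every 2 pixels it averages the nearest
# same-channel neighbours with bilinear weights.
KERNEL = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 4.0


def filter3x3(img, kernel):
    """Zero-padded 3 x 3 filtering (the kernel is symmetric, so no flip is needed)."""
    h, w = img.shape
    padded = np.pad(img, 1)
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def bilinear_demosaic(raw):
    """Full-resolution estimate of every polarization channel (BI baseline)."""
    full = {}
    for angle, (r, c) in LAYOUT.items():
        mask = np.zeros(raw.shape)
        mask[r::2, c::2] = 1.0
        num = filter3x3(raw * mask, KERNEL)  # weighted sum of the known samples
        den = filter3x3(mask, KERNEL)        # sum of the weights actually present (> 0)
        full[angle] = num / den
    return full
```

Because the filtered samples are normalized by the filtered sampling mask, measured pixels are returned unchanged, and each missing pixel becomes the average of its nearest same-channel neighbors. Such local averaging blurs fine, mask-modulated fringes, which is consistent with the lower SSIM of BI reported in Methods.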

Figure 2.

PS-PtychoLM birefringence map reconstruction procedure and simulation results. (a) Ground-truth birefringence information of a simulation target. (b, c) PS-PtychoLM captures raw images under angle-varied LED illumination, and each recorded image is decomposed into images in four polarization channels. The missing pixel information in each image is interpolated using a polarization demosaicing scheme. (d) The amplitude and phase information of each polarization channel is then reconstructed using a ptychographic reconstruction algorithm and used to obtain phase retardation and optic-axis orientation maps of the object based on Jones-matrix analysis. (e) The obtained birefringence information can be jointly presented using a pseudo-color map that encodes retardation and optic-axis orientation with intensity and color, respectively.

Experimental demonstration: MSU crystals

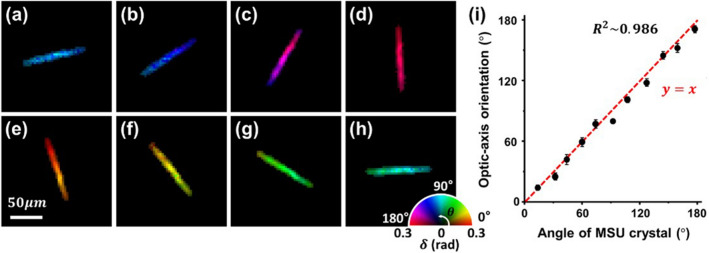

We then experimentally imaged monosodium urate (MSU) crystals (InvivoGen Co., tlrl-MSU) immersed in phosphate-buffered saline to validate the birefringence imaging capability of PS-PtychoLM. MSU crystals are needle-shaped crystals with strong negative birefringence, i.e., the fast axis is oriented along the long axis of the crystal35. Following PS-PtychoLM reconstruction, we selected a single MSU crystal and analyzed it to verify the optic-axis orientation measurements. Figure 3a–h present representative birefringence maps of the single MSU crystal at various rotation angles. Figure 3i compares the optic-axis orientation measured with PS-PtychoLM with that obtained manually using the NIH ImageJ program. The coefficient of determination (R²) was ~ 0.986, and the phase retardation was found to be ~ 0.272 rad regardless of the rotation angle. The standard deviation of the optic-axis orientation was calculated over the pixels in the MSU crystal (104 pixels) for each angle and is indicated by the error bars in Fig. 3i. To evaluate the accuracy of PS-PtychoLM in determining phase retardation, we estimated the phase retardation of the MSU crystals using information from the literature. Using the refractive index reported by Park et al.45 and the measured phase map from our reconstruction, we first estimated the diameter of the MSU crystal to be 0.236 μm. The phase retardation was then determined to be 0.288 rad based on the birefringence of the crystals reported by Zhang et al.35. Compared with the PS-PtychoLM measurement (~ 0.272 rad), the relative error was found to be 5.66%.
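The two-step retardation estimate above can be written out with the standard thin-sample relations, treating the crystal as a slab of uniform thickness d (our simplifying assumption; the exact expressions used are not given here). In this sketch, n_c is the mean refractive index of the crystal, n_m the index of the surrounding saline, φ the measured phase, Δn the crystal birefringence, and λ the illumination wavelength:

$$
\varphi = \frac{2\pi}{\lambda}\,(n_{c} - n_{m})\,d
\quad\Longrightarrow\quad
d = \frac{\lambda\,\varphi}{2\pi\,(n_{c} - n_{m})},
\qquad
\delta = \frac{2\pi}{\lambda}\,\lvert \Delta n \rvert\, d
$$

Because the expected retardation scales linearly with d, any error in the phase-derived thickness propagates proportionally into the reference value against which the PS-PtychoLM measurement is compared.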

Figure 3.

Validation of PS-PtychoLM optic-axis orientation measurements. (a–h) Birefringence maps of a single MSU crystal at different rotation angles (15°, 33°, 61°, 93°, 108°, 128°, 145°, and 178°, respectively). (i) Optic-axis orientation of the MSU crystal measured through rotation at approximately 15° intervals.

Experimental demonstration: biological tissue samples

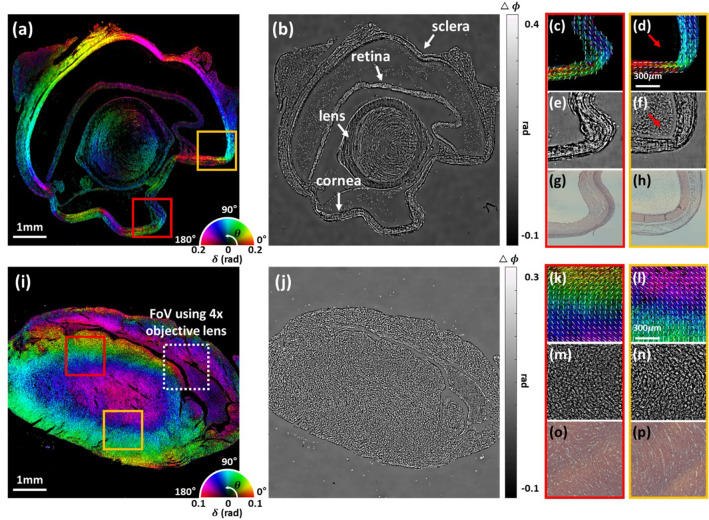

To demonstrate the large-FoV imaging capability of PS-PtychoLM for biological specimens, we further imaged mouse eye and heart tissues. Paraffin-embedded mouse eye and heart tissue sections were obtained from Asan Medical Center and Yonsei Severance Hospital, Seoul, Republic of Korea, respectively. All experimental procedures were carried out in accordance with the guidelines of the Institutional Animal Care and Use Committees, which were approved by the Asan Institute for Life Science and Yonsei University College of Medicine. We de-paraffinized the tissue sections (the eye and heart were sectioned at thicknesses of 10 μm and 5 μm, respectively) using hot xylene, placed them onto a microscope glass slide, and performed PS-PtychoLM imaging. Figure 4a,b show the large-FoV quantitative birefringence and phase images of the mouse eye section, respectively. The phase image was obtained by averaging the phases reconstructed in the four polarization channels. Some parts of the eye, including the cornea and sclera, consist of stacks of oriented collagen fibers, which produce birefringence with the optic axis oriented along the fibers46,47. Figure 4c–f show magnified birefringence and phase images of the regions marked with red and orange boxes in Fig. 4a, which correspond to the cornea and sclera, respectively. To aid visualization of the optic-axis orientation, short white lines indicating the mean optic-axis orientation evaluated over small regions (70 μm × 70 μm) are overlaid. Through ptychographic phase retrieval, PS-PtychoLM provides both complex and birefringence information of the specimen, which can be used to measure various features of the object. The phase information provides the optical path-length distribution of the sample, while the birefringence map allows phase retardation and optic-axis orientation to be interrogated. This complementarity is manifested in Fig. 4d,f: the cellular structures observed in the phase image are not visualized in the corresponding birefringence image, as marked by the red arrow. Figure 4g,h are images captured with a conventional microscope (Nikon, ECLIPSE Ti, 0.2 NA) after the samples were stained with hematoxylin and eosin (H&E). The fiber arrangement observed in Fig. 4g,h closely matches the optic-axis orientation obtained with PS-PtychoLM.
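Optic-axis orientation is an axial quantity (θ and θ + π describe the same axis), so regional means such as the 70 μm × 70 μm averages above are usually computed on doubled angles; a naive arithmetic mean of 5° and 175° gives 90°, perpendicular to both. How the regional mean was computed is not stated here, so the NumPy sketch below is one plausible approach, not the authors' code. The block size of 20 pixels assumes maps sampled at the 3.45 μm sensor pitch (70 / 3.45 ≈ 20), and the function name is hypothetical.

```python
import numpy as np


def block_mean_orientation(theta, block, weights=None):
    """Axial (mod pi) mean of an optic-axis map over non-overlapping block x block tiles.

    theta   : orientation map in radians; theta and theta + pi are the same axis.
    block   : tile size in pixels (hypothetical: 70 um / 3.45 um ~ 20).
    weights : optional per-pixel weights (e.g. a retardation map); uniform if None.
    """
    if weights is None:
        weights = np.ones_like(theta)
    h = theta.shape[0] // block * block  # crop to whole tiles
    w = theta.shape[1] // block * block
    z = weights[:h, :w] * np.exp(2j * theta[:h, :w])  # doubled-angle phasors
    tiles = z.reshape(h // block, block, w // block, block).sum(axis=(1, 3))
    return (0.5 * np.angle(tiles)) % np.pi  # halve and map back to [0, pi)


# Pixels alternating between 5 deg and 175 deg share (almost) the same axis.
theta = np.zeros((40, 40))
theta[:, ::2] = np.deg2rad(5.0)
theta[:, 1::2] = np.deg2rad(175.0)
print(np.rad2deg(block_mean_orientation(theta, block=20)))  # values at 0 or 180 deg, not 90
```

Weighting by retardation (or by sin δ) is an optional design choice that lets strongly birefringent pixels dominate regions where weakly birefringent pixels have poorly defined axes.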

Figure 4.

PS-PtychoLM imaging results of mouse eye (a–h) and heart tissue specimens (i–p). (a, i) Quantitative birefringence maps of the mouse eye and heart tissues over the entire FoV. (b, j) Quantitative phase images of the mouse eye and heart tissues over the entire FoV. (c–f) Enlarged birefringence and phase images of the parts marked with red and orange boxes in (a), respectively. (g, h) Hematoxylin and eosin (H&E) staining images of the parts (c, d) captured with an optical microscope (Nikon, ECLIPSE Ti, 0.2 NA). (k–n) Enlarged birefringence and phase images of the parts marked with red and orange boxes in (i), respectively. (o, p) H&E staining images of the parts (k, l) captured with an optical microscope (Nikon, ECLIPSE Ti, 0.2 NA). Overlaid short white lines on (c, d, k, and l) indicate the mean optic-axis orientation evaluated over a small region (70 μm × 70 μm).

Figure 4i,j present the birefringence and phase images of the mouse heart section, along with enlarged images (Fig. 4k,l for birefringence and Fig. 4m,n for phase) of the regions marked with red and orange boxes in Fig. 4i. Because fibrous tissues in the myocardium exhibit optical birefringence, the optic-axis information may be used to infer myocardium orientation48. Figure 4o,p are the corresponding H&E images obtained with the optical microscope (Nikon, ECLIPSE Ti, 0.2 NA); they correspond to the same areas shown in Fig. 4k and l, respectively. The alignment direction of the myocardium observed in the H&E images is consistent with the reconstructed optic-axis orientation in the PS-PtychoLM images. Myofiber disorganization compromises normal heart function and is associated with various cardiovascular diseases such as myocardial infarction. Note that the box outlined with a white dotted line in Fig. 4i indicates the FoV obtainable with a 4× objective lens, which provides the same spatial resolution as PS-PtychoLM. Within this limited FoV, it is difficult to obtain comprehensive information on the myocardium alignment direction49 over large tissue specimens. These results suggest that, with further improvement, PS-PtychoLM may serve as a viable PS imaging tool for large-scale biological specimens and disease diagnosis.

Conclusion

In summary, we described a computational lens-less birefringence microscope that combines the inertia-free, high-resolution, large-area imaging capability of mask-modulated ptychography with a single-input-state polarization-sensitive imaging setup. The proposed PS-PtychoLM produces complex-valued information for each polarization channel of the image sensor, which is then used to obtain a 2D birefringence map over the entire sensor surface. Our method achieved a half-pitch resolution of 2.46 μm across an FoV of 7.07 mm × 8.45 mm, which corresponds to an SBP of 9.9 megapixels.

Several features of the reported platform should be noted. We employed the mask-modulated ptychographic configuration with angle-varied LED illumination to achieve inertia-free ptychographic birefringence imaging. The programmable LED array enabled simple, cost-effective, and motion-free operation of PS-PtychoLM, but its limited spatial and temporal coherence and low light throughput compromised the spatial resolution and imaging speed. We used a band-pass filter in the illumination path to improve the temporal coherence, but the spatial coherence could not be improved, because using smaller LEDs or increasing the distance between the LEDs and the object would reduce the light throughput. The imaging throughput of our prototype is limited largely by the low light throughput of the LEDs. Instead of LEDs, coherent light sources such as lasers or laser diodes could be employed. These sources provide directional beams with higher spatial and temporal coherence, but in that case either the illumination beam or the object must be scanned or translated to obtain the measurement diversity required for ptychographic phase retrieval. Various implementations of ptychographic microscopy are summarized in Wang et al.50. In this study, we aimed to demonstrate a cost-effective and robust imaging platform and therefore employed the LED array as the illumination source instead of the aforementioned alternatives. Mask structures that divert high-spatial-frequency information scattered from the object onto the image sensor would also help improve the spatial resolution. Recent studies have reported various mask configurations for high-resolution lens-less imaging; among them, mask structures with broad spatial-frequency responses were found to produce superior image reconstructions17,51,52. Optimized mask designs for PS-PtychoLM are under investigation.
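The benefit of the band-pass filter can be estimated with the common approximation for temporal coherence length, l_c ≈ λ²/Δλ. This is an order-of-magnitude estimate (the exact prefactor depends on the spectral shape), and the sketch assumes that the 10 nm filter passband, rather than the 30 nm LED bandwidth, sets the filtered spectrum.

```python
def coherence_length_um(center_nm, fwhm_nm):
    """Order-of-magnitude temporal coherence length, l_c ~ lambda^2 / d_lambda."""
    return center_nm ** 2 / fwhm_nm / 1000.0  # nm -> um


led_only = coherence_length_um(527.0, 30.0)   # bare LED spectrum
filtered = coherence_length_um(514.5, 10.0)   # assumes the filter passband dominates
print(f"LED only: {led_only:.1f} um, filtered: {filtered:.1f} um, "
      f"gain: {filtered / led_only:.1f}x")
# LED only: 9.3 um, filtered: 26.5 um, gain: 2.9x
```

Under these assumptions the filter extends the coherence length by roughly a factor of three, at the cost of discarding most of the LED's spectral power, which is one reason light throughput becomes the limiting factor.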

The measurement uncertainty of the birefringence properties depends strongly on the precision of the intensity information reconstructed in each polarization channel. The theoretical measurement uncertainty can be quantified by evaluating the variances of the optic-axis orientation and phase retardation, obtained by propagating the fluctuations of the two auxiliary quantities defined in Methods (Eq. (7)) through Eqs. (8) and (9). The uncertainty in the intensity information is influenced by various factors, including image sensor characteristics such as dark/readout noise and angular response to incident light, spatial phase noise introduced in the pixel interpolation step, intensity fluctuations of the illumination LEDs, and misalignment of the LED illumination angles. Hence, image sensors with larger full-well capacities and lower noise would help improve the measurement precision. Moreover, image sensors with smaller pixels enable finer spatial sampling, which mitigates errors in the interpolation stage. For high-resolution image reconstruction, one should also consider the angular response of the image sensor to incident light at different angles. Recently, Jiang et al.17 developed an imaging model that accounts for the angular response of the image sensor; a similar model could be implemented in the PS-PtychoLM imager to improve imaging performance. In terms of the light source, power-stable sources are highly desirable to alleviate intensity noise from the source. Li et al.53 recently introduced a computational method that performs angle calibration and corrects for LED intensity fluctuations in lens-less ptychography. Adopting such strategies to correct for potential noise sources is likely to improve the detection performance of the PS-PtychoLM imager.
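To make the propagation step concrete, the sketch below assumes a common circular-input convention in which the auxiliary quantities are A = sin δ sin 2θ and B = sin δ cos 2θ, so that δ = arcsin√(A² + B²) and θ = ½ atan2(A, B); this form, and the assumption of independent Gaussian fluctuations of A and B, are ours. First-order propagation gives σ_θ² = (B²σ_A² + A²σ_B²) / (4(A² + B²)²) and σ_δ² = (A²σ_A² + B²σ_B²) / ((A² + B²)(1 − A² − B²)), which for σ_A = σ_B = σ reduce to σ_θ = σ / (2 sin δ) and σ_δ = σ / cos δ. A seeded Monte Carlo run checks the formulas; the function names are hypothetical.

```python
import numpy as np


def first_order_sigmas(delta, theta, sigma_a, sigma_b):
    """Propagate independent std-devs of A and B to (theta, delta), to first order."""
    a = np.sin(delta) * np.sin(2 * theta)
    b = np.sin(delta) * np.cos(2 * theta)
    r2 = a * a + b * b  # = sin(delta)^2
    var_theta = (b * b * sigma_a**2 + a * a * sigma_b**2) / (4 * r2**2)
    var_delta = (a * a * sigma_a**2 + b * b * sigma_b**2) / (r2 * (1 - r2))
    return np.sqrt(var_theta), np.sqrt(var_delta)


def monte_carlo_sigmas(delta, theta, sigma_a, sigma_b, n=200_000, seed=0):
    """Empirical check: perturb A and B with Gaussian noise and re-invert."""
    rng = np.random.default_rng(seed)
    a = np.sin(delta) * np.sin(2 * theta) + rng.normal(0.0, sigma_a, n)
    b = np.sin(delta) * np.cos(2 * theta) + rng.normal(0.0, sigma_b, n)
    theta_hat = 0.5 * np.arctan2(a, b)
    delta_hat = np.arcsin(np.hypot(a, b))
    return theta_hat.std(), delta_hat.std()


print(first_order_sigmas(np.pi / 4, 0.3, 1e-3, 1e-3))
print(monte_carlo_sigmas(np.pi / 4, 0.3, 1e-3, 1e-3))
```

Under this model the orientation uncertainty diverges as δ → 0 (the optic axis of a weakly birefringent region is poorly defined), while the retardation uncertainty grows as δ approaches π/2, where the arcsine inversion loses sensitivity.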

In our PS-PtychoLM implementation, we employed binary mask-assisted ptychographic phase retrieval to obtain complex information of the object. Instead of a binary mask, several recent studies have used other random structures, such as diffusers with micro-/nano-scale features, to achieve superior resolution8,17. However, with a random diffuser in our setup, the object information could not be accurately obtained. Our prototype employed a pixelated polarization image sensor, so the captured image must be decomposed into images in the different polarization channels and the missing pixels interpolated through demosaicing. The random diffuser significantly scrambles the wavefront of the object wave, so the information in the missing pixels could not be correctly interpolated by the demosaicing schemes. We numerically evaluated the mean squared error (MSE) between the information acquired with a full-pixel-resolution camera (i.e., without pixel demosaicing) and that obtained with the pixelated polarization camera followed by pixel demosaicing. The MSE with the random diffuser was approximately three times larger than that with the binary mask. Detailed simulation results of PS-PtychoLM imaging with the diffuser and the binary mask are provided in Supplementary Information Sect. 4. We also experimentally validated that the binary mask provided accurate estimation of the polarization information in all detection channels, resulting in accurate birefringence maps. This problem could be avoided by using a non-polarized full-pixel-resolution camera, but that would require mechanical rotation of the polarizer/analyzer to reconstruct birefringence maps.

In terms of the mask pattern, we utilized a binary amplitude mask with a 50% open area. Optimal binary mask designs for rapid and robust phase recovery remain a subject of future research, as also noted in Ref. 39. The influence of mask design parameters (e.g., feature size and distribution) on image recovery performance is being investigated, and the results are expected to provide useful guidelines for binary mask design in mask-assisted ptychographic imaging systems.
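For simulation studies of mask parameters such as open fraction and feature size, a random binary mask can be generated directly on the sensor grid. The sketch below is hypothetical: it draws i.i.d. random cells and does not reproduce the specific pattern of Refs. 38,39. With a 27.6 μm feature size and 3.45 μm pixels, each mask feature spans exactly 8 pixels.

```python
import numpy as np


def random_binary_mask(shape_px, feature_px, open_fraction=0.5, seed=0):
    """Hypothetical i.i.d. random binary amplitude mask sampled on the sensor grid.

    Each feature_px x feature_px cell is transparent (1) with probability
    open_fraction and opaque (0) otherwise.
    """
    rng = np.random.default_rng(seed)
    cells = (-(-shape_px[0] // feature_px), -(-shape_px[1] // feature_px))  # ceil division
    coarse = (rng.random(cells) < open_fraction).astype(np.uint8)
    fine = np.kron(coarse, np.ones((feature_px, feature_px), dtype=np.uint8))
    return fine[:shape_px[0], :shape_px[1]]


feature_px = round(27.6 / 3.45)  # 27.6 um features on 3.45 um pixels -> 8
mask = random_binary_mask((2048, 2448), feature_px)
print(feature_px, mask.shape, round(float(mask.mean()), 3))
```

Sweeping `feature_px` and `open_fraction` in such a generator, then rerunning a simulated reconstruction, is one straightforward way to explore the design space discussed above.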

We expect that our method will find a broad range of applications in material inspection and quantitative biological studies. For example, large-FoV, high-resolution birefringence imaging can be used to inspect internal features of liquid crystal-based devices (e.g., the molecular orientation of the liquid crystal layer between two substrates)54 and to detect defects in semiconductor wafers during manufacturing25. In addition, birefringence imaging can be used in several biomedical applications, such as malaria detection41,55, brain slice imaging56, retina imaging57,58, cancerous cell differentiation59,60, and bulk tissue characterization61. On a technical note, PS-PtychoLM can be extended to depth-multiplexed birefringence imaging by modeling wave propagation through multilayered structures. Each layer can be regarded as a thin anisotropic material, and the birefringence information of each layer can be updated iteratively via ptychographic algorithms. This platform would enable high-throughput optical anisotropy imaging of stacked specimens; a similar approach has been demonstrated for depth-multiplexed phase imaging62. Our demonstration is limited to thin, transparent samples. However, implementing the multi-slice beam propagation (MBP) model63,64 on the lens-less imaging platform, combined with illumination engineering methods (e.g., angle scanning), would enable imaging of thick, multiple-scattering samples. Recently, Song et al.65 introduced tomographic birefringence microscopy based on a vectorial MBP model and gradient descent. The relevant forward imaging model and image reconstruction algorithms may be adopted and integrated into our PS-PtychoLM platform, yielding a lens-less tomographic birefringence imager.
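The multilayer extension discussed above rests on alternating thin-layer modulation and free-space propagation. As a scalar sketch (a birefringent version would replace each phase screen with a per-pixel Jones matrix acting on a two-component field), the NumPy code below implements angular-spectrum propagation and a simple multi-slice loop. Function names, layer spacing, and sampling are illustrative choices, and evanescent components are discarded.

```python
import numpy as np


def angular_spectrum(field, dz, wavelength, dx):
    """Propagate a sampled scalar field by a distance dz (angular spectrum method)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)
    fy = np.fft.fftfreq(ny, d=dx)
    fxx, fyy = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * dz), 0.0)  # evanescent waves dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def multislice(field, phase_slices, dz, wavelength, dx):
    """Scalar multi-slice model: apply each thin phase screen, then propagate by dz."""
    for phase in phase_slices:
        field = angular_spectrum(field * np.exp(1j * phase), dz, wavelength, dx)
    return field


# Toy example: three weak random phase layers, 10 um apart, sampled at 3.45 um.
rng = np.random.default_rng(0)
u0 = np.exp(1j * rng.normal(scale=0.5, size=(128, 128)))
layers = [rng.normal(scale=0.1, size=(128, 128)) for _ in range(3)]
u_out = multislice(u0, layers, dz=10e-6, wavelength=514.5e-9, dx=3.45e-6)
```

At this sampling every spatial frequency is propagating, so each step is unitary: energy is conserved and propagating by −dz exactly undoes a step, which is what makes gradient-based inversion of such models well behaved.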

Methods

Jones-matrix analysis for PS-PtychoLM

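The Jones-matrix analysis was used to formulate the PS-PtychoLM image reconstruction process, in which the polarization state of light is represented by a Jones vector, and the optical elements, including specimens, are represented by Jones matrices66,67. The Jones matrix for a thin sample can be represented with a phase retardance magnitude (δ) and an optic-axis orientation (θ) as:

$$ J = R(-\theta)\, D\, \Delta(\delta)\, R(\theta) \tag{1} $$

where $R(\theta) = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix}$ is a rotation matrix defined by the optic-axis orientation θ, $\Delta(\delta) = \begin{pmatrix} e^{-i\delta/2} & 0 \\ 0 & e^{i\delta/2} \end{pmatrix}$ is the phase retardation matrix, and $D$ is the diattenuation matrix, which is negligible in thin specimens ($D \approx I$). As a result, the Jones matrix for thin specimens can be formulated as:

$$ J = \begin{pmatrix} \cos\frac{\delta}{2} - i\sin\frac{\delta}{2}\cos 2\theta & -i\sin\frac{\delta}{2}\sin 2\theta \\ -i\sin\frac{\delta}{2}\sin 2\theta & \cos\frac{\delta}{2} + i\sin\frac{\delta}{2}\cos 2\theta \end{pmatrix} \tag{2} $$

In addition, the Jones matrix for the linear polarizer on the detector along the ψ direction is expressed as:

$$ P_\psi = \begin{pmatrix} \cos^2\psi & \sin\psi\cos\psi \\ \sin\psi\cos\psi & \sin^2\psi \end{pmatrix} \tag{3} $$

The light field output ($E_\psi$) measured at each polarization channel through our imaging system can be represented as:

$$ E_\psi = P_\psi\, J\, E_{in} \tag{4} $$

where $E_{in}$ denotes the Jones vector for the circularly polarized incident light of intensity $I_0$, with $E_{in} = \sqrt{I_0/2}\,(1,\ i)^{T}$.

The measured intensity for each polarization channel can be written as:

$$ I_\psi = E_\psi^{\dagger} E_\psi = \lvert E_\psi \rvert^2 \tag{5} $$

Using Eqs. (1)–(5), one can obtain the expressions for the light intensity measured along the four polarization channels as follows:

$$ \begin{aligned} I_{0^\circ} &= \tfrac{I_0}{2}\left(1 + \sin\delta\sin 2\theta\right), & I_{45^\circ} &= \tfrac{I_0}{2}\left(1 - \sin\delta\cos 2\theta\right), \\ I_{90^\circ} &= \tfrac{I_0}{2}\left(1 - \sin\delta\sin 2\theta\right), & I_{135^\circ} &= \tfrac{I_0}{2}\left(1 + \sin\delta\cos 2\theta\right) \end{aligned} \tag{6} $$

Then, we combine Eq. (6) to introduce two auxiliary quantities, $A$ and $B$, defined as:

$$ A = \frac{I_{0^\circ} - I_{90^\circ}}{I_{0^\circ} + I_{90^\circ}} = \sin\delta\sin 2\theta, \qquad B = \frac{I_{135^\circ} - I_{45^\circ}}{I_{135^\circ} + I_{45^\circ}} = \sin\delta\cos 2\theta \tag{7} $$

Finally, the spatial distributions of the retardance magnitude and the optic-axis orientation of the birefringent sample can be reconstructed as:

$$ \delta = \sin^{-1}\sqrt{A^2 + B^2} \tag{8} $$

$$ \theta = \tfrac{1}{2}\operatorname{atan2}(A,\, B) \tag{9} $$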
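The forward model and its inversion can be checked numerically. The sketch below assumes an incident Jones vector proportional to (1, i)ᵀ, the retarder model J = R(−θ) diag(e^{−iδ/2}, e^{iδ/2}) R(θ) with diattenuation neglected, and analyzers at 0°, 45°, 90°, and 135°; these sign and handedness conventions are our choices, and the function names are hypothetical. It simulates the four channel intensities for a given (δ, θ) and recovers both from normalized intensity differences.

```python
import numpy as np


def rot(theta):
    """Rotation matrix R(theta) (assumed convention)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, s], [-s, c]])


def sample_jones(delta, theta):
    """Thin linear retarder: R(-theta) diag(e^{-i delta/2}, e^{i delta/2}) R(theta)."""
    retarder = np.diag([np.exp(-0.5j * delta), np.exp(0.5j * delta)])
    return rot(-theta) @ retarder @ rot(theta)


def polarizer(psi):
    """Linear analyzer along angle psi."""
    c, s = np.cos(psi), np.sin(psi)
    return np.array([[c * c, s * c], [s * c, s * s]])


def channel_intensities(delta, theta, i0=1.0):
    """Forward model: intensities behind 0/45/90/135 deg analyzers for circular input."""
    e_in = np.sqrt(i0 / 2) * np.array([1.0, 1.0j])  # assumed handedness
    j = sample_jones(delta, theta)
    out = {}
    for deg in (0, 45, 90, 135):
        e_psi = polarizer(np.deg2rad(deg)) @ j @ e_in
        out[deg] = float(np.real(np.vdot(e_psi, e_psi)))
    return out


def retrieve_birefringence(i):
    """Inverse model: recover (delta, theta) from the four channel intensities."""
    a = (i[0] - i[90]) / (i[0] + i[90])      # = sin(delta) sin(2 theta)
    b = (i[135] - i[45]) / (i[135] + i[45])  # = sin(delta) cos(2 theta)
    delta = np.arcsin(np.clip(np.hypot(a, b), 0.0, 1.0))  # valid for delta in [0, pi/2]
    theta = (0.5 * np.arctan2(a, b)) % np.pi             # axial orientation in [0, pi)
    return delta, theta


print(retrieve_birefringence(channel_intensities(np.pi / 4, np.deg2rad(30.0))))
```

`retrieve_birefringence` operates element-wise, so passing a dictionary of reconstructed intensity maps (|amplitude|² per channel) yields δ and θ maps directly. Because the intensities are normalized by channel-pair sums, the incident intensity cancels out.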

Comparison of various demosaicing methods

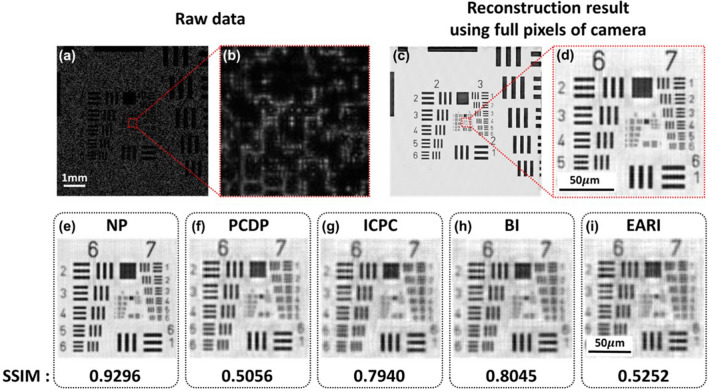

To find a suitable demosaicing method for PS-PtychoLM, we evaluated various pixel demosaicing methods, including Newton's polynomial (NP)43, polarization channel difference prior (PCDP)68, intensity correlation among polarization channels (ICPC)69, bilinear interpolation (BI), and edge-aware residual interpolation (EARI) algorithms70. We performed PS-PtychoLM imaging of a USAF resolution target and compared the reconstruction performance obtained with these demosaicing methods. A representative raw intensity image captured using PS-PtychoLM is shown in Fig. 5a, and an enlarged image of the area marked with a red box is shown in Fig. 5b. The features of the object cannot be clearly discerned, because the object information is largely obscured by the mask modulation of the object wave. After acquiring 81 images under angle-varied LED illumination, we separated each image into four polarization-channel images and estimated the missing pixel information in each channel using the interpolation methods. As a reference, we also carried out image reconstruction using the full pixel information of the camera without demosaicing. Figure 5c,d present the reconstruction results obtained using the full pixel information of the image sensor; after ptychographic retrieval, the features of the target are clearly visualized. The reconstruction results using the NP, PCDP, ICPC, BI, and EARI interpolation algorithms are presented in Fig. 5e–i, along with their structural similarity index measure (SSIM) values computed with reference to the result in Fig. 5c. The NP interpolation method provided superior image reconstruction compared with the other methods.
This superior performance may be attributed to the fact that NP operates on polynomial interpolation error estimation, which makes it effective in preserving both low- and high-spatial-frequency information43.
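For reference, the SSIM score compares the means, variances, and covariance of two images. The NumPy sketch below computes a simplified single-window (global) version with the usual constants K1 = 0.01 and K2 = 0.03; standard SSIM, as implemented for example in `skimage.metrics.structural_similarity`, averages this statistic over local windows, so its values will differ. The function name and toy images are ours.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over whole images (simplified; standard SSIM uses local windows)."""
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))


rng = np.random.default_rng(0)
reference = rng.random((64, 64))  # stand-in for the full-pixel reconstruction
degraded = reference + 0.1 * rng.normal(size=(64, 64))
print(global_ssim(reference, reference), global_ssim(reference, degraded))
```

An identical image scores exactly 1, and any loss of structure (for example, fringes blurred by interpolation) lowers the covariance term and hence the score.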

Figure 5.

Comparison of image reconstruction performance with various pixel demosaicing methods. (a) Representative raw image of a USAF resolution target captured with the LED on the optical axis. (b) Enlarged image of the area marked with a red box in (a). (c) Reconstructed image of the target using the full pixels of the camera (i.e., without pixel demosaicing). (d) Enlarged image of the area marked with a red box in (c). (e–i) Reconstruction results with various demosaicing methods. The acquired image was decomposed into images of each polarization channel, and missing pixel information was estimated through (e) Newton's polynomial (NP), (f) polarization channel difference prior (PCDP), (g) intensity correlation among polarization channels (ICPC), (h) bilinear interpolation (BI), and (i) edge-aware residual interpolation (EARI) algorithms, respectively. The structural similarity index measure (SSIM) value of each reconstruction result, evaluated against the result in (c), is presented.


Acknowledgements

This work was partially supported by the Samsung Research Funding & Incubation Center of Samsung Electronics (SRFC-IT2002-07), the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (P0019784), the Korea Medical Device Development Fund (KMDF_PR_20200901_0099, Project Number: 9991007255), the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2023R1A2C3004040), the Commercialization Promotion Agency for R&D Outcomes (COMPA) funded by the Ministry of Science and ICT (MSIT) (1711198541, Development of key optical technologies of inspection and measurement for analysis of 3D complex nano structure), and the Development of core technologies for advanced measuring instruments funded by the Korea Research Institute of Standards and Science (KRISS–2023–GP2023-0012).

Author contributions

C.J. conceived the initial concept. J.K. developed the principles and reconstruction algorithm, performed the experiments and simulations, and analyzed the data. S.S. and H.K. supported the analysis of the imaging results. B.K., M.P., S.O., and Y.H. prepared and analyzed the samples. D.K., B.C., and J.L. participated in discussions during the development of the paper. All authors contributed to writing the manuscript.

Data availability

All the data are available upon reasonable request to the corresponding author (cjoo@yonsei.ac.kr).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-46496-z.

References

- 1. Lohmann AW, Dorsch RG, Mendlovic D, Zalevsky Z, Ferreira C. Space–bandwidth product of optical signals and systems. JOSA A. 1996;13:470–473. doi: 10.1364/JOSAA.13.000470.

- 2. Gustafsson MG. Nonlinear structured-illumination microscopy: wide-field fluorescence imaging with theoretically unlimited resolution. Proc. Natl. Acad. Sci. 2005;102:13081–13086. doi: 10.1073/pnas.0406877102.

- 3. Hillman TR, Gutzler T, Alexandrov SA, Sampson DD. High-resolution, wide-field object reconstruction with synthetic aperture Fourier holographic optical microscopy. Opt. Express. 2009;17:7873–7892. doi: 10.1364/OE.17.007873.

- 4. Zheng G, Horstmeyer R, Yang C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat. Photonics. 2013;7:739–745. doi: 10.1038/nphoton.2013.187.

- 5. Park J, Brady DJ, Zheng G, Tian L, Gao L. Review of bio-optical imaging systems with a high space-bandwidth product. Adv. Photonics. 2021;3:044001. doi: 10.1117/1.AP.3.4.044001.

- 6. Greenbaum A, et al. Imaging without lenses: achievements and remaining challenges of wide-field on-chip microscopy. Nat. Methods. 2012;9:889–895. doi: 10.1038/nmeth.2114.

- 7. Mudanyali O, et al. Compact, light-weight and cost-effective microscope based on lensless incoherent holography for telemedicine applications. Lab Chip. 2010;10:1417–1428. doi: 10.1039/c000453g.

- 8. Jiang S, et al. Wide-field, high-resolution lensless on-chip microscopy via near-field blind ptychographic modulation. Lab Chip. 2020;20:1058–1065. doi: 10.1039/C9LC01027K.

- 9. Wu Y, Ozcan A. Lensless digital holographic microscopy and its applications in biomedicine and environmental monitoring. Methods. 2018;136:4–16. doi: 10.1016/j.ymeth.2017.08.013.

- 10. Greenbaum A, Sikora U, Ozcan A. Field-portable wide-field microscopy of dense samples using multi-height pixel super-resolution based lensfree imaging. Lab Chip. 2012;12:1242–1245. doi: 10.1039/c2lc21072j.

- 11. Zhang J, Sun J, Chen Q, Li J, Zuo C. Adaptive pixel-super-resolved lensfree in-line digital holography for wide-field on-chip microscopy. Sci. Rep. 2017;7:11777. doi: 10.1038/s41598-017-11715-x.

- 12. Guizar-Sicairos M, Fienup JR. Phase retrieval with transverse translation diversity: A nonlinear optimization approach. Opt. Express. 2008;16:7264–7278. doi: 10.1364/OE.16.007264.

- 13. Maiden AM, Rodenburg JM. An improved ptychographical phase retrieval algorithm for diffractive imaging. Ultramicroscopy. 2009;109:1256–1262. doi: 10.1016/j.ultramic.2009.05.012.

- 14. Sanz M, Picazo-Bueno JA, García J, Micó V. Improved quantitative phase imaging in lensless microscopy by single-shot multi-wavelength illumination using a fast convergence algorithm. Opt. Express. 2015;23:21352–21365. doi: 10.1364/OE.23.021352.

- 15. Wu X, et al. Wavelength-scanning lensfree on-chip microscopy for wide-field pixel-super-resolved quantitative phase imaging. Opt. Lett. 2021;46:2023–2026. doi: 10.1364/OL.421869.

- 16. Luo W, Greenbaum A, Zhang Y, Ozcan A. Synthetic aperture-based on-chip microscopy. Light Sci. Appl. 2015;4:e261. doi: 10.1038/lsa.2015.34.

- 17. Jiang S, et al. Resolution-enhanced parallel coded ptychography for high-throughput optical imaging. ACS Photonics. 2021;8:3261–3271. doi: 10.1021/acsphotonics.1c01085.

- 18. Coskun AF, Su T-W, Ozcan A. Wide field-of-view lens-free fluorescent imaging on a chip. Lab Chip. 2010;10:824–827. doi: 10.1039/b926561a.

- 19. Greenbaum A, et al. Wide-field computational imaging of pathology slides using lens-free on-chip microscopy. Sci. Transl. Med. 2014;6:267ra175. doi: 10.1126/scitranslmed.3009850.

- 20. Wu Y-C, et al. Air quality monitoring using mobile microscopy and machine learning. Light Sci. Appl. 2017;6:e17046. doi: 10.1038/lsa.2017.46.

- 21. Hecht E. Optics. Pearson Education India; 2012.

- 22. Saleh BE, Teich MC. Fundamentals of photonics. John Wiley & Sons; 2019.

- 23. Wolman M. Polarized light microscopy as a tool of diagnostic pathology. J. Histochem. Cytochem. 1975;23:21–50. doi: 10.1177/23.1.1090645.

- 24. Rong R, et al. The interaction of 2D materials with circularly polarized light. Adv. Sci. 2023;10:2206191. doi: 10.1002/advs.202206191.

- 25. Bajor AL. Automated polarimeter–macroscope for optical mapping of birefringence, azimuths, and transmission in large area wafers. Part I. Theory of the measurement. Rev. Sci. Instrum. 1995;66:2977–2990. doi: 10.1063/1.1145584.

- 26. Yamada M, Matsumura M, Fukuzawa M, Higuma K, Nagata H. In: Integrated Optics Devices IV. SPIE; 2000. p. 101–106.

- 27. Barnes BM, et al. In: Metrology, Inspection, and Process Control for Microlithography XXVII. SPIE; 2013. p. 134–141.

- 28. Lawrence C, Olson JA. Birefringent hemozoin identifies malaria. Am. J. Clin. Pathol. 1986;86:360–363. doi: 10.1093/ajcp/86.3.360.

- 29. Miura M, et al. Imaging polarimetry in central serous chorioretinopathy. Am. J. Ophthalmol. 2005;140:1014–1019. doi: 10.1016/j.ajo.2005.06.033.

- 30. Ku S, Mahato K, Mazumder N. Polarization-resolved Stokes-Mueller imaging: A review of technology and applications. Lasers Med. Sci. 2019;34:1283–1293. doi: 10.1007/s10103-019-02752-1.

- 31. Yang Y, Huang H-Y, Guo C-S. Polarization holographic microscope slide for birefringence imaging of anisotropic samples in microfluidics. Opt. Express. 2020;28:14762–14773. doi: 10.1364/OE.389973.

- 32. Ferrand P, Baroni A, Allain M, Chamard V. Quantitative imaging of anisotropic material properties with vectorial ptychography. Opt. Lett. 2018;43:763–766. doi: 10.1364/OL.43.000763.

- 33. Kim S, Cense B, Joo C. Single-pixel, single-input-state polarization-sensitive wavefront imaging. Opt. Lett. 2020;45:3965–3968. doi: 10.1364/OL.396442.

- 34. Hur S, Song S, Kim S, Joo C. Polarization-sensitive differential phase-contrast microscopy. Opt. Lett. 2021;46:392–395. doi: 10.1364/OL.412703.

- 35. Zhang Y, et al. Wide-field imaging of birefringent synovial fluid crystals using lens-free polarized microscopy for gout diagnosis. Sci. Rep. 2016;6:28793. doi: 10.1038/srep28793.

- 36. Zhou Y, Xiong B, Li X, Dai Q, Cao X. Lensless imaging of plant samples using the cross-polarized light. Opt. Express. 2020;28:31611–31623. doi: 10.1364/OE.402288.

- 37. Liu T, et al. Deep learning-based holographic polarization microscopy. ACS Photonics. 2020;7:3023–3034. doi: 10.1021/acsphotonics.0c01051.

- 38. Zhang Z, et al. Invited article: Mask-modulated lensless imaging with multi-angle illuminations. APL Photonics. 2018;3:060803. doi: 10.1063/1.5026226.

- 39. Lu C, et al. Mask-modulated lensless imaging via translated structured illumination. Opt. Express. 2021;29:12491–12501. doi: 10.1364/OE.421228.

- 40. Bai B, et al. Pathological crystal imaging with single-shot computational polarized light microscopy. J. Biophotonics. 2020;13:e201960036. doi: 10.1002/jbio.201960036.

- 41. Song S, Kim J, Hur S, Song J, Joo C. Large-area, high-resolution birefringence imaging with polarization-sensitive Fourier ptychographic microscopy. ACS Photonics. 2021;8:158–165. doi: 10.1021/acsphotonics.0c01695.

- 42. Dai X, et al. Quantitative Jones matrix imaging using vectorial Fourier ptychography. Biomed. Opt. Express. 2022;13:1457–1470. doi: 10.1364/BOE.448804.

- 43. Li N, Zhao Y, Pan Q, Kong SG. Demosaicking DoFP images using Newton’s polynomial interpolation and polarization difference model. Opt. Express. 2019;27:1376–1391. doi: 10.1364/OE.27.001376.

- 44. Maiden A, Johnson D, Li P. Further improvements to the ptychographical iterative engine. Optica. 2017;4:736–745. doi: 10.1364/OPTICA.4.000736.

- 45. Park S, et al. Detection of intracellular monosodium urate crystals in gout synovial fluid using optical diffraction tomography. Sci. Rep. 2021;11:10019. doi: 10.1038/s41598-021-89337-7.

- 46. Bour LJ, Cardozo NJL. On the birefringence of the living human eye. Vis. Res. 1981;21:1413–1421. doi: 10.1016/0042-6989(81)90248-0.

- 47. Yamanari M, et al. Scleral birefringence as measured by polarization-sensitive optical coherence tomography and ocular biometric parameters of human eyes in vivo. Biomed. Opt. Express. 2014;5:1391–1402. doi: 10.1364/BOE.5.001391.

- 48. Wang Y, Yao G. Optical tractography of the mouse heart using polarization-sensitive optical coherence tomography. Biomed. Opt. Express. 2013;4:2540–2545. doi: 10.1364/BOE.4.002540.

- 49. Castonguay A, et al. Serial optical coherence scanning reveals an association between cardiac function and the heart architecture in the aging rodent heart. Biomed. Opt. Express. 2017;8:5027–5038. doi: 10.1364/BOE.8.005027.

- 50. Wang T, et al. Optical ptychography for biomedical imaging: Recent progress and future directions. Biomed. Opt. Express. 2023;14:489–532. doi: 10.1364/BOE.480685.

- 51. Adams JK, et al. In vivo lensless microscopy via a phase mask generating diffraction patterns with high-contrast contours. Nat. Biomed. Eng. 2022;6:617–628. doi: 10.1038/s41551-022-00851-z.

- 52. Jiang S, et al. High-throughput digital pathology via a handheld, multiplexed, and AI-powered ptychographic whole slide scanner. Lab Chip. 2022;22:2657–2670. doi: 10.1039/D2LC00084A.

- 53. Li P, Maiden A. Lensless LED matrix ptychographic microscope: Problems and solutions. Appl. Opt. 2018;57:1800–1806. doi: 10.1364/AO.57.001800.

- 54. Benecke C, Seiberle H, Schadt M. Determination of director distributions in liquid crystal polymer-films by means of generalized anisotropic ellipsometry. Jpn. J. Appl. Phys. 2000;39:525. doi: 10.1143/JJAP.39.525.

- 55. Li C, Chen S, Klemba M, Zhu Y. Integrated quantitative phase and birefringence microscopy for imaging malaria-infected red blood cells. J. Biomed. Opt. 2016;21:090501. doi: 10.1117/1.JBO.21.9.090501.

- 56. Zilles K, et al. In: Axons and brain architecture. Elsevier; 2016. p. 369–389.

- 57. Zotter S, et al. Measuring retinal nerve fiber layer birefringence, retardation, and thickness using wide-field, high-speed polarization sensitive spectral domain OCT. Investig. Ophthalmol. Vis. Sci. 2013;54:72–84. doi: 10.1167/iovs.12-10089.

- 58. Cense B, Chen TC, Park BH, Pierce MC, De Boer JF. In vivo depth-resolved birefringence measurements of the human retinal nerve fiber layer by polarization-sensitive optical coherence tomography. Opt. Lett. 2002;27:1610–1612. doi: 10.1364/OL.27.001610.

- 59. Tuchin VV. Polarized light interaction with tissues. J. Biomed. Opt. 2016;21:071114. doi: 10.1117/1.JBO.21.7.071114.

- 60. Ramella-Roman JC, Saytashev I, Piccini M. A review of polarization-based imaging technologies for clinical and preclinical applications. J. Opt. 2020;22:123001. doi: 10.1088/2040-8986/abbf8a.

- 61. Qi J, Elson DS. A high definition Mueller polarimetric endoscope for tissue characterisation. Sci. Rep. 2016;6:25953. doi: 10.1038/srep25953.

- 62. Guo C, et al. Depth-multiplexed ptychographic microscopy for high-throughput imaging of stacked bio-specimens on a chip. Biosens. Bioelectron. 2023;224:115049. doi: 10.1016/j.bios.2022.115049.

- 63. Chowdhury S, et al. High-resolution 3D refractive index microscopy of multiple-scattering samples from intensity images. Optica. 2019;6:1211–1219. doi: 10.1364/OPTICA.6.001211.

- 64. Maiden AM, Humphry MJ, Rodenburg JM. Ptychographic transmission microscopy in three dimensions using a multi-slice approach. JOSA A. 2012;29:1606–1614. doi: 10.1364/JOSAA.29.001606.

- 65. Song S, et al. Polarization-sensitive intensity diffraction tomography. Light Sci. Appl. 2023;12:124. doi: 10.1038/s41377-023-01151-0.

- 66. Jones RC. A new calculus for the treatment of optical systems. I. Description and discussion of the calculus. JOSA. 1941;31:488–493. doi: 10.1364/JOSA.31.000488.

- 67. Goldstein DH. Polarized light. CRC Press; 2017.

- 68. Wu R, Zhao Y, Li N, Kong SG. Polarization image demosaicking using polarization channel difference prior. Opt. Express. 2021;29:22066–22079. doi: 10.1364/OE.424457.

- 69. Zhang J, Luo H, Hui B, Chang Z. Image interpolation for division of focal plane polarimeters with intensity correlation. Opt. Express. 2016;24:20799–20807. doi: 10.1364/OE.24.020799.

- 70. Morimatsu M, Monno Y, Tanaka M, Okutomi M. In: 2020 IEEE International Conference on Image Processing (ICIP). IEEE; 2020. p. 2571–2575.
