Abstract

Cognitive task analysis (CTA) methods are traditionally used to conduct small-sample, in-depth studies. In this case study, CTA methods were adapted for a large multi-site study in which 102 anesthesiologists worked through four different high-fidelity simulated high-consequence incidents. Cognitive interviews were used to elicit decision processes following each simulated incident. In this paper, we highlight three practical challenges that arose: (1) standardizing the interview techniques for use across a large, distributed team of diverse backgrounds; (2) developing effective training; and (3) developing a strategy to analyze the resulting large amount of qualitative data. We reflect on how we addressed these challenges by increasing standardization, developing focused training, overcoming social norms that hindered interview effectiveness, and conducting a staged analysis. We share findings from a preliminary analysis that provides early validation of the strategy employed. Analysis of a subset of 64 interview transcripts using a decompositional analysis approach suggests that interviewers successfully elicited descriptions of decision processes that varied due to the different challenges presented by the four simulated incidents. A holistic analysis of the same 64 transcripts revealed individual differences in how anesthesiologists interpreted and managed the same case.

Keywords: Cognitive task analysis, decision-making, high-risk events, anesthesiology, simulation

Introduction

This is a methods paper documenting reflections on adapting cognitive task analysis (CTA) methods for use in large sample studies. CTA methods are commonly used to support small-sample, in-depth, exploratory studies of skilled performance in complex settings (Hoffman and Militello, 2008). These methods produce rich data sets that are analyzed iteratively using qualitative methods. However, there has been a recent push in healthcare to use CTA methods in studies involving larger sample sizes, multiple sites, and participants with different levels of experience, both to facilitate comparison across groups (e.g., different experience levels or work settings) and to support generalization (Militello et al., 2020). The authors have collectively experienced this pressure from grant reviewers, as well as from colleagues in the healthcare community who aim to apply CTA findings more broadly.

Adapting in-depth methods for larger scale studies that survey a broad sample of clinicians raises a number of challenges. First, cognitive interviews are generally semi-structured, allowing room for discovery as unanticipated but relevant topics and concepts emerge during the discussion. This type of semi-structured interview, however, requires judgment on the part of the interviewer in determining where to probe further and when to redirect the interview. It also means that the same questions may not be asked of each interviewee, or that a topic may be discussed at length in some interviews and not in others. For a large-scale study in which comparison across groups is important, a more structured approach is required so that the data collected are comparable across interviews and sites. Second, multi-site studies generally involve multiple data collection teams. As a result, strategies for training interviewers, who often differ in background, domain knowledge and experience, and interviewing experience, become increasingly important, as do strategies for managing “drift” in interview focus and approach as the study progresses. It is common for interview teams to shift their focus to probe particular topics more deeply and to refine questions over time as they learn more about the phenomenon of study; however, this type of drift would greatly complicate comparative analyses, particularly if components of the distributed data collection team “drifted” in different directions. Third, analysis of qualitative data is notoriously costly, time-consuming, and iterative (Crandall et al., 2006). Large sample studies raise the question of how to analyze interview data within the time and resource constraints of the project.

We reflect on these issues in the context of a case study aimed at understanding decision-making of anesthesiologists during high-fidelity simulations of critical clinical events. Cognitive interviews were one component of a large, geographically distributed, multi-site mixed-methods study entitled Improving Medical Performance during Acute Crises Through Simulation (IMPACTS). Objectives for the CTA component of this study included (1) developing a descriptive model of anesthesiologist decision-making and (2) identifying differences in decision strategies between “high” and “low” clinical performances. The study included 102 anesthesiologist participants across four sites, each of whom completed the same four simulation scenarios. Each scenario was followed by an approximately 40-minute cognitive interview, for a total of 408 interviews.

Methods

In designing the methods for this study, we adapted an established cognitive interview technique called the simulation interview (Militello and Hutton, 1998). We trained cognitive interviewers at each of the four sites and developed strategies to certify interviewers and minimize drift over time. We also adapted qualitative data analysis strategies for processing large sets of data. In the following sections, we detail the strategies used to adapt the methods for this study.

Cognitive Interviews

Simulation sessions were conducted in dedicated simulation facilities at four large academic medical centers. Each anesthesiologist participated in four 15–17-minute simulated scenarios in a single day. Table 1 provides an overview of each scenario. Scenarios were designed to create challenging situations such as role conflicts with other members of the healthcare team and complex acute care crises (e.g., multiple organ systems deteriorating simultaneously and/or difficult to diagnose medical conditions). To increase realism, scenarios included the use of trained confederates playing the role of patients and members of the healthcare team. Two of the scenarios used a commercial computerized simulation manikin (Laerdal SimMan 3G) in the patient role to allow participants to perform more invasive medical interventions as the patient deteriorated during the scenario. Immediately following each simulation session, the anesthesiologist participated in a cognitive interview.

Table 1.

Summary of Simulation Scenarios.

| Scenario name | Chief complaint | Scenario summary | Key challenges |
|---|---|---|---|
| Brown | Shortness of breath | A 55-year-old man is in PACU after undergoing laparotomy for bowel perforation due to diverticulitis. In PACU he becomes progressively short of breath, hypotensive, and tachycardic. Without treatment this progresses to a respiratory rate of 30, desaturation, even with supplemental O2 of 80%, blood pressure of 88/70, and heart rate of 140 with his preexisting atrial fibrillation | Participant must prioritize and manage multiple problems; diagnostic challenges are substantial. This case requires rapid reaction as the patient’s condition quickly deteriorates. Sepsis and surgical complications are likely underlying causes; requires coordination with surgical team. Medical management is complex |
| Hines | Hypotension | The patient is a 51-year-old T6 paraplegic (after a motor vehicle accident 5 years ago) with hypertension, diabetes mellitus (type 2), rheumatoid arthritis (steroid dependent), and a long smoking history, who presented for surgery with pain due to a left kidney stone, having been off all medications for 2 days. He is now in the PACU after multiple minimally invasive urologic procedures resulting in stone extraction and stent placements. He has both a nephrostomy tube and a Foley catheter in place (hematuria evident). The patient has potential urosepsis and instability in PACU. He becomes progressively somnolent, hypotensive, and tachycardic, requiring resuscitation and intubation | Participant must evaluate the key elements of the history and surgical events. Several changes in the patient’s condition require reassessment. Left shoulder pain may be a distractor. Spinal injury invokes a broad range of abnormal physiological responses that must be evaluated. This case requires the participant to coordinate care, rapidly execute multiple interventions including ventilatory support, and aggressively use vasopressors |
| Jones^a | Altered mental status | Previously healthy adult patient who is in the PACU after an uneventful sedation and regional block for a carpal tunnel release. The patient has an unusual emergence, inappropriately progressing to incoherent verbal responses and abnormal motor movements. The anesthesiologist (confederate) who has been taking care of this patient in the PACU is fixated on the belief that the patient has post-anesthesia delirium. When the confederate anesthesiologist tries to reverse the residual anesthetics, the patient gets worse | Participant must manage a colleague (confederate) fixated on an incorrect diagnosis, creating potentially challenging interpersonal dynamics with an unknown peer. The underlying condition, serotonin syndrome, is relatively uncommon and not well known to many. Participant must change the direction of the treatment strategy despite an insistent colleague (confederate) and broaden the differential diagnosis considered by the team |
| Wilson^a | Chest pain | A 47-year-old female with diabetes mellitus (type 2), hypertension, reflux, and anxiety/depression presents for total thyroidectomy for toxic goiter (on methimazole). In pre-op holding during pre-anesthetic evaluation, she becomes progressively more anxious, tachypneic, and uncomfortable. This progresses to chest pain, electrocardiogram changes, and hemodynamic instability, with a nadir at 15 minutes, if untreated, of tachycardia (134), hypotension (78/48), desaturation (82%), and wheezing | Participant must delay or cancel surgery; requires negotiation with a surgical colleague (confederate) who is reluctant to delay or cancel. The patient arrives late, increasing time pressure. Participant must recognize an acute myocardial infarction, provide supportive therapy, and coordinate care for transfer to the cardiac catheterization lab/Cardiology. The patient’s persistent hyperthyroidism complicates interpretation of symptoms |

PACU refers to Post-Anesthesia Care Unit.

^a Patient is portrayed by an actor (i.e., a standardized patient). For Brown and Hines, the patient is portrayed by a manikin with someone else speaking through a microphone for patient interactions.

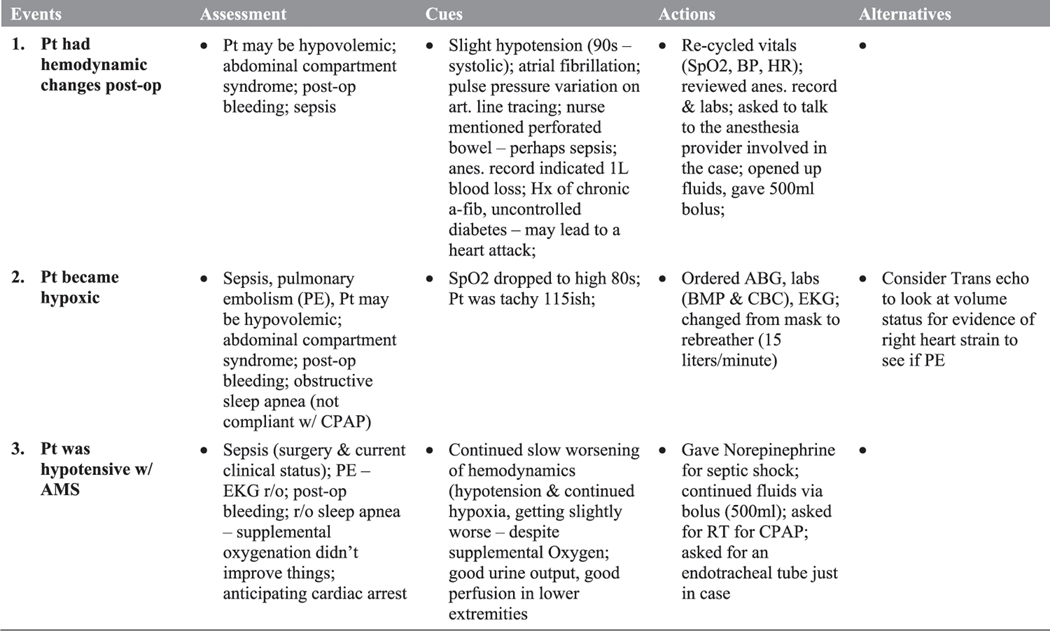

The simulation interview (Militello and Hutton, 1998) was initially described as part of the applied cognitive task analysis (ACTA) suite of methods, intended to make CTA methods more streamlined and usable by instructional designers and practitioners with limited background in cognitive psychology or in conducting cognitive interviews. This interview technique was well-suited to the IMPACTS study design. The traditional simulation interview begins with the participant experiencing a simulated scenario, after which they are asked to identify 3–5 key events that stand out for them in the scenario. Events that stand out typically include significant shifts in the interviewee’s understanding of the situation and actions taken by the interviewee or others involved in the incident. The interviewer then walks through each key event, using cognitive probes to help the interviewee unpack what they noticed, how they made sense of the situation, and what actions they took. The precise cognitive probes used are tailored to each study. For the IMPACTS project, we used the probes listed in Figure 1.

Figure 1.

Cognitive probes.

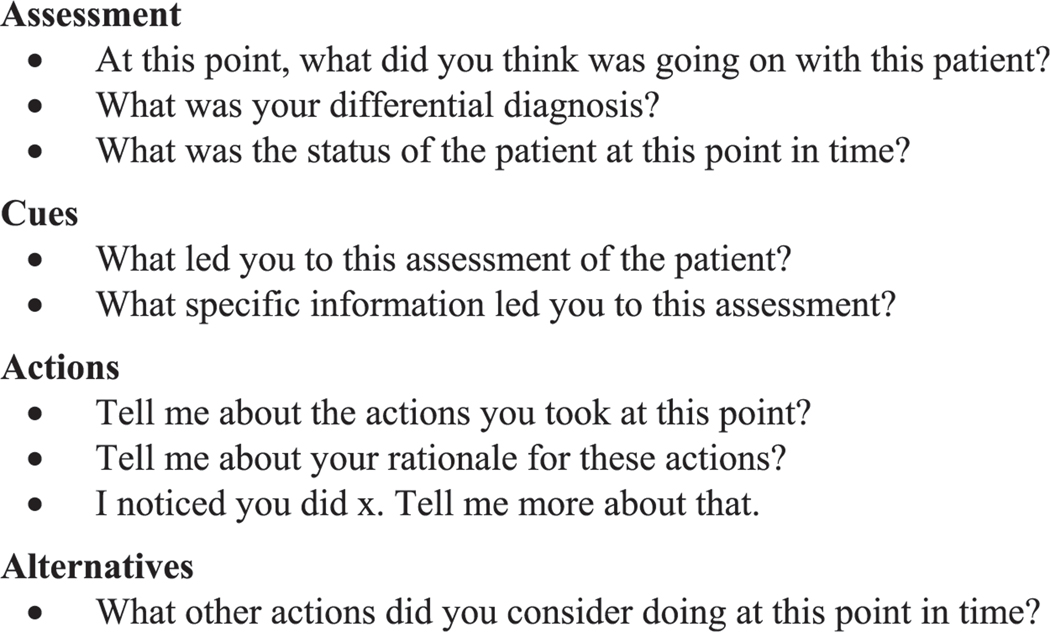

As described by Militello and Hutton (1998), interviewers use a structured note-taking form as a shared representation of the ensuing discussion so both the interviewer and the interviewee have a visible reminder of the key topics of the interview. The note-taking form is drawn as a matrix, in which the key events are listed in the left column as the interviewee articulates them at the beginning of the interview. Columns 2–5 provide space for the interviewer to record responses to cognitive probes for each event. The note-taking matrix is designed to aid interviewers in structuring the interview by first filling out the left column with the key events from the interviewee’s perspective, then by unpacking each event by filling in each row before moving on to the next event. Furthermore, the shared representation allows the interviewee to correct any misunderstandings as they are recorded throughout the interview. Figure 2 provides an excerpt from a note-taking form used in an interview about the Brown scenario.

Figure 2.

Excerpt from shared note-taking form for Brown scenario. Pt = patient, RT = respiratory therapist, r/o = rule out, SpO2 = oxygen saturation, HR = heart rate, BP = blood pressure.

Training Interviewers

Fourteen interviewers across four sites conducted interviews for this study. Five had experience with cognitive task analysis. Others came from a variety of backgrounds including nursing, mechanical engineering, counseling, library and information science, and informatics. Some had significant experience conducting other types of qualitative interviews, simulation debriefs, or counseling interviews. To train this diverse set of interviewers, we began with a two-day in-person workshop in which cognitive interviewers were provided an overview of the simulation interview technique and a demonstration. Interviewers then broke into small groups of 2–3 to practice conducting and documenting interviews. Interviewers observed a video recording of a pilot simulation and then interviewed an anesthesiologist about the scenario they had just observed. The anesthesiologists who helped with training were not part of the cognitive interviewing team. They were instructed to imagine that they were the person in the recording and to challenge the interviewer by, for example, responding to questions with vague, single-word answers; over-explaining simple concepts; or derailing the conversation with unrelated tangents. There were four practice interview sessions, one for each scenario. Each interviewer had an opportunity to practice leading 1–2 interviews and to observe 1–3 interviews.

Experienced cognitive engineers were present in each practice session to provide real-time coaching and feedback. After each practice session, the entire group met to discuss insights and challenges before going into the next practice session. After completing the four practice sessions, the cognitive interviewers met to work through a pre-mortem exercise (Klein, 2007) in which they were asked to imagine that the project had failed and to write down the reasons for failure. The group then discussed the responses, exploring strategies for overcoming potential obstacles.

Adjusting the Project After the COVID-19 Pandemic Began

Soon after this in-depth training and before data collection began, shutdowns in response to the COVID-19 pandemic delayed data collection by approximately six months. During this break in study activities, some interviewers who had attended the initial training moved on to other jobs, necessitating the recruitment of new interviewers; for the others, we anticipated that the passage of time might have led to skill decay. Because of the delay between training and data collection, the team determined that it would be important to develop a strategy for certifying interviewers to ensure consistency across interviewers and study sites. Further, best practices for conducting cognitive interviews virtually via Zoom needed to be developed and communicated to the interviewers at each site, as it remained important to limit face-to-face interactions during the COVID-19 pandemic.

Variability Across Sites.

As data collection became possible, study sites conducted additional pilot simulation sessions and recorded practice cognitive interviews. Our experienced cognitive engineers reviewed recordings of practice cognitive interviews and sent written feedback to interviewers. These reviews indicated that interviewers would benefit from refresher training. In addition, as cognitive engineers reviewed recordings from different study sites, it became clear that individual sites were using different procedures and individual interviewers were modifying their interview technique. For example, in some interviews the anesthesiologist led the discussion, simply talking through the shared note-taking form while the interviewer transcribed what was said. In these cases, no cognitive probes were used; rather, the anesthesiologist gave top-of-the-head responses based on the column labels in the note-taking matrix. In other interviews, events were not elicited; rather, the anesthesiologist simply talked for 30 minutes about the experience with limited direction from the interviewer. In some interviews, the interviewer moved through the columns of the note-taking form rather than the rows. Thus, instead of unpacking assessment, cues, and actions for a single event, the interviewer asked about assessments for each event, followed by cues for each event, and then actions, often resulting in a muddled recounting of the incident.
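The difference between the intended row-wise order and the drifted column-wise order can be sketched in a few lines of Python. This is a minimal illustration, not part of the study materials: the probe labels (assessment, cues, actions) are taken from the description above, the full probe set in Figure 1 may differ, and the event names are hypothetical.

```python
from typing import List, Tuple

# Assumed probe columns, based on the text above; Figure 1 lists the actual probes.
PROBES: List[str] = ["assessment", "cues", "actions"]


def row_wise(events: List[str], probes: List[str] = PROBES) -> List[Tuple[str, str]]:
    """Intended order: ask every probe about one event before moving to the next event."""
    return [(event, probe) for event in events for probe in probes]


def column_wise(events: List[str], probes: List[str] = PROBES) -> List[Tuple[str, str]]:
    """Drifted order: ask one probe about every event, then move to the next probe."""
    return [(event, probe) for probe in probes for event in events]


if __name__ == "__main__":
    # Hypothetical key events, for illustration only.
    events = ["Patient becomes short of breath", "Blood pressure drops"]
    print("Row-wise:", row_wise(events))
    print("Column-wise:", column_wise(events))
```

Both orders cover the same event-probe pairs; only the sequence differs. In the row-wise order each event is unpacked as a coherent episode, whereas the column-wise order makes the interviewee jump across the timeline for every probe, which is one plausible reason those interviews produced muddled accounts.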

Certifying Interviewers and Reducing Drift.

To address these issues, the cognitive engineering team further standardized the procedure to reduce variability. Key procedural issues were discussed with site principal investigators to establish common ground across sites regarding interview setup. The cognitive engineering team created an interview guide that clearly led interviewers through the procedure, beginning with a scripted introduction that established the interviewer as the person who would lead the interview session. The interview guide described the interview in terms of five clear steps: (1) Read the introduction, (2) Elicit 3–5 key events to record in the first column of the matrix, (3) Complete the entire row of the matrix for each event identified by the participant, (4) Ask standardized questions for each event as it is unpacked, and (5) Wrap up. The guide included prompts for when to share screens, when to start the timer, and how to take notes (see Online Appendix B for the complete interview guide).

The new standardized procedures were introduced in a series of virtual training sessions. Due to scheduling difficulties, we were unable to assemble all interviewers at the same time. Thus, multiple sessions were held and interviewers were encouraged to attend as many as they could. The virtual training included four components:

The perspective component reviewed study goals, models of decision-making and expertise, and general interviewing skills. In addition, we discussed the types of variability that we had observed and introduced the new interview guide.

In the critiquing component, interviewers were asked to review and critique excerpts from recorded interviews, and then met to discuss.

For the practice component, interviewers had an opportunity to interview anesthesiologists via Zoom with coaches present. Virtual breakout rooms were used so that multiple practice interviews could happen simultaneously. At the end of each practice session, interviewers met to discuss lessons learned, challenges, and points of confusion.

An interactive and continuous feedback and training component included regular monthly meetings, during which interviewers from all sites met to discuss what was going well, specific challenges, and strategies to address any challenges or questions that had arisen.

It is important to note that not every CTA interview yields rich data, regardless of the skill and experience of the interview team. There are a number of variables that influence data quality including the (often difficult-to-predict) level of rapport and trust between interviewee and interviewer, individual participant differences in ability to reflect on and describe one’s experiences, and interviewer skill. The virtual training sessions addressed these common interviewing challenges.

Qualitative Analysis

Qualitative analysis of cognitive interview data typically involves in-depth exploration of cases, using thematic analysis (Braun and Clarke, 2012), grounded theory (Strauss and Corbin, 1997), and various adaptations of these approaches (Crandall et al., 2006). Militello and Anders (2020) describe two complementary approaches to the analysis of cognitive interview data. One approach is decompositional, which focuses on identifying themes related to topics of interest. In this approach, multi-disciplinary teams review a subset of the data to identify and discuss potential themes. These potential themes are developed into a codebook to support systematic review of the data for evidence to support, refine, and refute the potential themes. Excerpts from interview transcripts are coded into categories so that all data relevant to a potential theme can be examined together to abstract insights. This process is generally exploratory and iterative with an emphasis on independent review of the data, followed by consensus meetings to explore different interpretations and reach consensus about the implications of the data for the project’s research questions.

A second approach focuses on examining intact or complete incidents related by interviewees to explore commonalities and idiosyncrasies in cues that were noticed, challenges or complexities encountered, goals formed, and strategies implemented. Rather than decomposing each incident into themes, this more holistic approach examines entire incidents to understand the decision process in context.

These two complementary approaches allow for a thorough examination of qualitative data sets. The use of multi-disciplinary teams and emphasis on consensus meetings to explore different perspectives is designed to increase the likelihood of discovering unexpected insights. However, this process is labor intensive because it requires each transcript to be reviewed by multiple people and, often, multiple sweeps through the data. Conducting this type of analysis on the 408 interview transcripts collected in the IMPACTS study was infeasible given the constraints of the research project’s timeline.

Hence, for this project, we started with a subset of the data to develop a streamlined analysis strategy. We selected two ‘high’ performances and two ‘low’ performances for each of the four scenarios from each of the four sites, for a total of 64 transcripts. Performance was rated by a team of experienced anesthesiologists who were blinded to the participants’ experience level and affiliation.
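The selection above amounts to a stratified sample: 4 scenarios × 4 sites × 2 performance levels × 2 transcripts = 64. Below is a minimal Python sketch, assuming each transcript carries a categorical high/low rating. The paper does not say how the two transcripts per cell were chosen, so the random draw within each stratum is our assumption, as are the site labels and the synthetic records.

```python
import random
from collections import defaultdict
from itertools import product
from typing import Dict, List, Tuple

SCENARIOS = ("Brown", "Hines", "Jones", "Wilson")
SITES = ("Site 1", "Site 2", "Site 3", "Site 4")  # hypothetical site labels
RATINGS = ("high", "low")

# (transcript_id, scenario, site, rating); rating stands in for the blinded expert rating.
Record = Tuple[str, str, str, str]


def stratified_sample(records: List[Record], per_cell: int = 2, seed: int = 0) -> List[str]:
    """Draw `per_cell` transcripts from every scenario x site x rating stratum."""
    rng = random.Random(seed)
    strata: Dict[Tuple[str, str, str], List[str]] = defaultdict(list)
    for tid, scenario, site, rating in records:
        strata[(scenario, site, rating)].append(tid)
    sample: List[str] = []
    for key in product(SCENARIOS, SITES, RATINGS):
        pool = strata[key]
        if len(pool) < per_cell:
            raise ValueError(f"Stratum {key} has only {len(pool)} transcripts")
        sample.extend(rng.sample(pool, per_cell))
    return sample


def count_by_stratum(sample: List[str], records: List[Record]) -> Dict[Tuple[str, str, str], int]:
    """Tally how many sampled transcripts fall into each stratum."""
    lookup = {tid: (scenario, site, rating) for tid, scenario, site, rating in records}
    counts: Dict[Tuple[str, str, str], int] = defaultdict(int)
    for tid in sample:
        counts[lookup[tid]] += 1
    return dict(counts)


def demo_records(per_cell: int = 3) -> List[Record]:
    """Synthetic placeholder records, not study data."""
    return [
        (f"{scenario}-{site}-{rating}-{i}", scenario, site, rating)
        for scenario, site, rating in product(SCENARIOS, SITES, RATINGS)
        for i in range(per_cell)
    ]


if __name__ == "__main__":
    records = demo_records()
    sample = stratified_sample(records)
    print(len(sample))  # 4 scenarios x 4 sites x 2 ratings x 2 per cell
```

Stratifying on scenario and site as well as performance level keeps any one site or scenario from dominating the subset, which matters when the goal is to compare high and low performers.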

We began by tailoring these two complementary analysis approaches to the data set and project goals. One goal of this study was to inform a descriptive model of anesthesiologist decision-making. The team had developed a hybrid working model of decision-making (Reale et al., 2021; Anders et al., 2022), integrating models from the naturalistic decision-making (Klein et al., 2010) and anesthesia communities (Gaba, 1992; Gaba et al., 2014) to describe processes such as problem detection and framing, assessing and recognizing, critiquing, acting, and correcting. For the decompositional analysis, we used the decision-making processes described in the hybrid working model to form an initial codebook. A team of behavioral researchers (SA, LGM, CR, and MES) and anesthesiologists (JR and DG) independently coded one transcript using this initial codebook, and then met to discuss. The team generated definitions for each coding category and identified examples from the data. Coding categories were added to represent aspects of decision-making described by interviewees that did not fit into existing categories. The team coded a second interview and continued refining the codebook. After four interviews, one from each scenario, the codebook was deemed to be stable; specifically, no new categories emerged and coders were able to agree on definitions for each category. See Table 2 for an excerpt of the codebook, and online Appendix A for the full codebook.

Table 2.

Excerpts From Codebook.

| Category | Code | Definition |
|---|---|---|
| Acting | Anticipating | Participant describes an action taken or considered in anticipation of patient’s future condition |
| | Execution evaluation | Participant describes checking for effective implementation of an action |
| | Information seeking | Provider takes an action with the intent of learning more about the patient’s condition |
| | Innovation | Participant reports that protocol is not sufficient; they innovate |
| | Rule in/Rule out | Participant describes strategy for ruling in/ruling out hypotheses |
| | Rule-based behavior | If this, then that; participant describes an action taken that is tied to a rule, protocol based; includes use of procedures in decision aids |
| | Serial versus concurrent implementation | Participant reports that they must prioritize, choose which thing(s) to do first; or participant describes a series of actions to achieve a certain goal |
| | Temporizing | Participant describes actions meant to forestall, buy time, watchful waiting, hedging, etc. |
| Assessing/Recognizing | Analogical reasoning | Participant indicates that they have seen something analogous before and made judgments based on the similar case |
| | Goals | Participant describes goals at a particular point in time, or participant describes global goals such as “I wanted to bring BP down.” |
| | Mechanistic thinking | Physiologic thinking, while doing routine things tagging back to the physiology |
| | Pattern matching | Participant describes a global assessment that integrates several sets of data points. For example, describes a pattern of cues and an assessment (A + B + C = D) |
| | Recognition | Participant describes recognizing the situation or condition as familiar – knew exactly what to do |
| | Refining the differential | Participant describes multiple diagnostic hypotheses and how he/she is ordering and re-ordering the hypotheses in terms of likelihood |
| | Sense-making | Participant describes specific cues and how they fit together; this could include multiple possible interpretations |

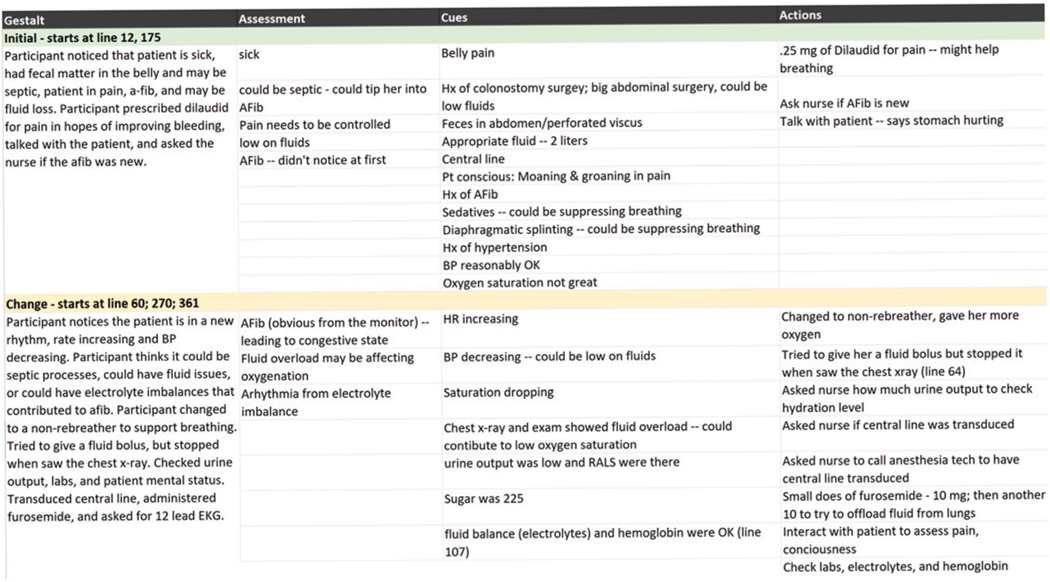

A second goal of this study was to identify differences in decision strategies between high and low performers. We used the holistic analysis approach for this. Analysts identified three assessment points in each scenario: an initial assessment, an assessment when the patient’s condition changed dramatically, and an assessment near the close of the scenario. Because these were simulated incidents, there was a designated point at which the patient’s condition escalated in each scenario. Analysts reviewed the interview transcript and the shared note-taking form (Figure 2) to extract a holistic statement summarizing the interviewee’s understanding of the situation at each of these points in time, as well as cues, assessments, action taken, and actions considered but not taken. They used the holistic coding form in Figure 3 to record their summary of the incident.
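The contents of the holistic coding form can be thought of as a small record per transcript. The Python sketch below is illustrative only: the class and field names are our own, chosen to mirror the elements listed above (a holistic statement, cues, assessments, actions taken, and actions considered but not taken at each of three assessment points), and they do not reproduce the layout of the form in Figure 3.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The three assessment points analysts located in every transcript.
ASSESSMENT_POINTS = ("initial", "escalation", "final")


@dataclass
class AssessmentSnapshot:
    """What the interviewee understood, noticed, and did at one assessment point."""
    holistic_statement: str
    cues: List[str] = field(default_factory=list)
    assessments: List[str] = field(default_factory=list)
    actions_taken: List[str] = field(default_factory=list)
    actions_considered_not_taken: List[str] = field(default_factory=list)


@dataclass
class HolisticForm:
    """One coded interview; field names are hypothetical, not the layout of Figure 3."""
    transcript_id: str
    scenario: str
    snapshots: Dict[str, AssessmentSnapshot] = field(default_factory=dict)

    def add(self, point: str, snapshot: AssessmentSnapshot) -> None:
        """Record the summary for one of the three fixed assessment points."""
        if point not in ASSESSMENT_POINTS:
            raise ValueError(f"Unknown assessment point: {point}")
        self.snapshots[point] = snapshot

    def missing_points(self) -> List[str]:
        """Assessment points the analyst has not yet summarized."""
        return [p for p in ASSESSMENT_POINTS if p not in self.snapshots]

    def timeline(self) -> List[str]:
        """Holistic statements in scenario order, for side-by-side comparison."""
        return [f"{p}: {self.snapshots[p].holistic_statement}"
                for p in ASSESSMENT_POINTS if p in self.snapshots]


if __name__ == "__main__":
    form = HolisticForm("T-001", "Brown")  # hypothetical transcript ID
    form.add("initial", AssessmentSnapshot("Placeholder summary of initial understanding"))
    print(form.missing_points())
```

Fixing the same three assessment points for every form is what makes summaries from different participants in the same scenario directly comparable.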

Figure 3.

Holistic analysis form for one interview in the Brown scenario.

To streamline the process, a team of two analysts was assigned to analyze each scenario. Our rationale was that by analyzing data from 16 transcripts based on the same simulated scenario, it would be easier for analysts without a background in anesthesiology to become familiar with a specific incident. We anticipated that each analyst team would begin to notice common cues, assessments, and actions, as well as anomalies for that particular scenario. We also anticipated that this analysis would provide insight into commonalities and differences in decision processes.

The coding process progressed from independent coding to consensus within each analysis team. Each coder in a team independently reviewed a transcript and used Dedoose™ qualitative analysis software to apply the codes as part of the decompositional analysis. After coding the transcript, they each identified where in the transcript the discussion shifted from the initial assessment to the escalation assessment to the final assessment (sometimes highlighting multiple sections if the interview moved back and forth in time) and drafted a holistic statement summarizing the participant’s understanding at each assessment point. The coding team met to discuss the transcript and reach consensus on the codes in Dedoose™ as well as agree on the points in the transcript that corresponded to the three key assessment points and the holistic statements. They independently completed the holistic coding form, and then met again to reach consensus. To reduce coder drift, when all of the coding teams had coded seven transcripts, an analyst from outside each team coded the eighth transcript and participated in the consensus meetings. All coders met at this midpoint to discuss and resolve any differences in coding practices.

Findings

We examined both the decompositional and holistic analyses to determine whether the cognitive interviews did, in fact, elicit cognitive information that would inform project objectives.

Decompositional Analysis

With regard to the decompositional analysis, we examined the decision-making processes described in the hybrid working model of decision-making (the basis of our codebook) and the number of transcripts in which each appeared, broken down by patient scenario. See Table 3 for an excerpt of this analysis; additional findings from this analysis will be reported in a forthcoming manuscript. We found that each decision-making process appeared in at least one transcript, suggesting that the cognitive interviews successfully elicited examples of these cognitive activities. Furthermore, the frequency of codes varied considerably across scenarios. Because the scenarios were designed to present different cognitive challenges requiring different approaches, this variation suggests that the scenarios worked as intended and that the cognitive interviews helped participants describe their cognitive processes in these different contexts. For example, frame shifts appeared more often in transcripts related to the Hines scenario (12 out of 16) than in those related to the Wilson scenario (5 out of 16). In this study, the term frame shift referred to instances when participants described a change in cognitive frame; in other words, their understanding of the situation changed. The Hines scenario includes an event designed to surprise: as participants are assessing and treating left shoulder pain after surgery, the patient’s blood pressure decreases rapidly, requiring participants to reassess the situation and reprioritize their actions. Thus, one might expect participants to experience a frame shift. The temporizing code provides another example of variation across scenarios. This code was applied to actions taken to “buy time,” allowing participants to work on stabilizing the patient before they had determined exactly what was occurring.
The Jones scenario appears to have evoked more temporizing (15 out of 16 transcripts) than any of the other scenarios (9 out of 16 transcripts per scenario). This aligns with the patient condition in the Jones scenario. Mrs. Jones is experiencing serotonin syndrome, a relatively rare condition that is diagnosed primarily by exclusion. Mrs. Jones’ vital signs remain stable, although it is clear that she is having a serious problem. If the participant does not recognize serotonin syndrome, a common clinical approach is to temporize by keeping the patient stable and comfortable as the healthcare team gathers more information. For the other scenarios, the patients exhibited signs of impending decompensation requiring immediate intervention; thus, less temporizing would be expected.

Table 3.

Frequency of Codes Across Scenarios.

| Codes | Brown (N = 16) | Hines (N = 16) | Wilson (N = 16) | Jones (N = 16) | Total (N = 64) |
|---|---|---|---|---|---|
| Temporizing | 9 | 9 | 9 | 15 | 42 |
| Frame shifts | 11 | 12 | 5 | 9 | 37 |
| Confirming/Disconfirming | 13 | 13 | 8 | 14 | 48 |
| Expectancies | 9 | 14 | 3 | 16 | 42 |

A third example relates to the expectancies and confirming/disconfirming codes. These codes were used when participants described how specific findings confirmed or disconfirmed their hypotheses, helping them refine the diagnosis. The Wilson transcripts contained fewer examples of confirming/disconfirming codes (8 of 16 transcripts) than transcripts from the other scenarios: these codes appeared in 13 of 16 Brown and Hines transcripts and in 14 of 16 Jones transcripts. Expectancies followed the same pattern, appearing in only 3 of 16 Wilson transcripts. In the Wilson scenario, the patient exhibits signs of pronounced anxiety, and the surgeon (a confederate) insists that it is safe to proceed with surgery because anxiety is the patient's primary issue. Despite this misdirection, the scenario designers expected skilled participants to order a 12-lead electrocardiogram (ECG), recognize the myocardial infarction, and cancel the surgery. Once a participant suspects evolving cardiac deterioration, the suspicion is relatively easy to confirm. In the other scenarios, by contrast, the underlying cause(s) of the patient's condition are more complex and not easily confirmed with a single test. The variation across scenarios provides evidence that the cognitive activities elicited were context-specific, as one would expect: the challenges of different situations evoked different cognitive responses.
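The decompositional tally behind Table 3 is simple arithmetic: for each code, count the transcripts in each scenario in which that code was applied at least once. The Python sketch below illustrates that counting rule under stated assumptions. The helper names (`tally_codes`, `summarize`) are hypothetical, and the transcript-level input format (a scenario label plus the codes applied to one transcript) is an assumption, not the study's actual data format. The only real data used are the Table 3 counts, which the sketch re-totals and converts to per-scenario shares.

```python
from collections import Counter, defaultdict

SCENARIOS = ("Brown", "Hines", "Wilson", "Jones")
N_PER_SCENARIO = 16  # transcripts analyzed per scenario (Table 3)

# Counts reported in Table 3: transcripts per scenario in which each code appeared.
TABLE_3 = {
    "Temporizing": {"Brown": 9, "Hines": 9, "Wilson": 9, "Jones": 15},
    "Frame shifts": {"Brown": 11, "Hines": 12, "Wilson": 5, "Jones": 9},
    "Confirming/Disconfirming": {"Brown": 13, "Hines": 13, "Wilson": 8, "Jones": 14},
    "Expectancies": {"Brown": 9, "Hines": 14, "Wilson": 3, "Jones": 16},
}


def tally_codes(coded_transcripts):
    """Hypothetical helper: count, per code, the transcripts in each scenario that contain it.

    coded_transcripts is an iterable of (scenario, codes) pairs, where codes lists
    every code applied to one transcript. A code applied several times in the same
    transcript is counted once, because Table 3 reports transcripts, not segments.
    """
    counts = defaultdict(Counter)
    for scenario, codes in coded_transcripts:
        for code in set(codes):
            counts[code][scenario] += 1
    return counts


def summarize(table):
    """Return {code: (total across scenarios, {scenario: share of that scenario's transcripts})}."""
    summary = {}
    for code, by_scenario in table.items():
        total = sum(by_scenario[s] for s in SCENARIOS)
        shares = {s: by_scenario[s] / N_PER_SCENARIO for s in SCENARIOS}
        summary[code] = (total, shares)
    return summary


if __name__ == "__main__":
    n_total = N_PER_SCENARIO * len(SCENARIOS)
    for code, (total, shares) in summarize(TABLE_3).items():
        text = ", ".join(f"{s} {p:.0%}" for s, p in shares.items())
        print(f"{code}: {total}/{n_total} transcripts ({text})")
```

Run as a script, the summary reproduces the Total column of Table 3 (42, 37, 48, and 42) and expresses each cell as a share of its scenario, for example Temporizing in 94% of Jones transcripts versus 56% in each of the other three scenarios. Counting presence per transcript, rather than coded segments, keeps one talkative participant from inflating a scenario's count.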

Holistic Analysis

With regard to the holistic coding, our analysis suggests that the cognitive interviews did, in fact, help participants describe their understanding of the situation as the scenario unfolded, the specific cues they attended to, their goals at different points in time, the actions they took, and their rationale for those actions. To illustrate, we detail differences between the ways Participant 1 and Participant 2 described their experiences in the Brown scenario. In this scenario, participants were presented with a patient complaining of pain following abdominal surgery. The nurse told participants that bowel contents had leaked into the abdomen during surgery, which usually prompts an experienced clinician to be concerned about postoperative abdominal infection and associated hemodynamic instability. As Participants 1 and 2 recounted their experiences, they framed the situation quite differently.

Initial Assessment.

At the start of the scenario, Participant 1 noted that the patient was complaining of abdominal pain and had peripheral IVs. Their initial assessment was that the patient probably needed blood and had not received enough medication during surgery. They recognized that the patient was sick and required immediate action. They began by reviewing the patient's history with the nurse to learn how much blood had been lost during surgery, whether the patient had received blood and fluids, and whether the patient had deteriorated noticeably since the surgery.

In contrast, at Time 1, Participant 2 noted that the patient was hypotensive and had a “dirty abdomen,” referring to the stool content that spilled into the abdomen when the bowel ruptured. Their initial assessment was that the patient would be pretty sick for at least 24 hours and was likely to develop sepsis. At the same time, Participant 2 recalled thinking of alternative explanations, such as anemia from blood loss, atrial fibrillation, and pain. Participant 2 recalled checking the dressing on the wound to make sure it was clean and dry, checking blood pressure, administering an IV bolus of fluids, ordering medication to increase blood pressure (phenylephrine), and increasing the oxygen administered through the non-rebreather face mask.

Escalation Assessment.

At Time 2, Participant 1 recalled being concerned about the patient's low oxygen status despite the use of a non-rebreather mask, and about fluid overload because crackles were detected in both lungs. Participant 1 noted that the patient had not received blood products, even though she had lost one liter of blood in the operating room (OR). The combination of low blood pressure, high heart rate, high temperature, and low urine output prompted Participant 1 to take several actions: conduct a more thorough physical exam; order labs, such as a complete blood count (CBC) panel and lactate; give a small dose of pain medication; administer medication to raise blood pressure (norepinephrine); order a fluid bolus; call to have more blood available; and order imaging of the chest and abdomen. Participant 1's differential diagnosis included fluid overload, under-resuscitation, heart failure, pulmonary edema, poor pain control, collapsed lungs, free air or bowel perforation, and a fluid-filled process in the lungs.

Participant 2, in contrast, had only two diagnoses on their differential at Time 2: they were confident that the patient had sepsis but had not yet completely ruled out bleeding. They noted that the patient appeared mildly confused. Because Participant 2 wanted to monitor and assess the patient's mental status accurately, they held off on giving pain medication. To determine whether the patient was ventilating adequately, Participant 2 ordered an arterial blood gas (ABG) analysis, focusing on the patient's acid-base status (acidosis would suggest hypovolemia and/or sepsis). Participant 2 checked the wound again to assess whether the belly was soft and told the nurse to prepare to intubate.

Final Assessment.

At the end of the scenario, Participant 1 still had a broad differential including under-resuscitation from blood loss, transfusion-related acute lung injury, transfusion-related cardiac injury, acute heart failure, postoperative pain, flash pulmonary edema, and acute kidney injury. Participant 2 had refined the differential to septic shock, had administered pain medication, and was committed to intubating the patient. Both participants highlighted the significance of the elevated lactic acid (which suggested poor perfusion to the organs), called the surgical team, and planned to move the patient to the intensive care unit (ICU). Even though their actions at the end of the 15-min simulated scenario were quite similar, their recounting of the incident highlighted differences in which cues they considered critical, how they built and refined their differential, and the rationale for their actions at different points in time. Table 4 summarizes the differences in participants' self-reports of their experiences in the same scenario. Future analyses will examine the data in this same way to identify potential patterns in decision-making style.

Table 4.

Differences in Two Participants’ Description of Their Experience in the Brown Scenario.

| | Participant 1 | Participant 2 |
|---|---|---|
| Time 1 | | |
| Cues | – Abdominal pain | – Hypotensive |
| | – Peripheral IVs | – "Dirty abdomen" |
| Assessment | – Patient is sick | – Patient will be sick for at least 24 hours |
| | – Patient requires immediate action | – Patient likely to develop sepsis |
| | | Differential includes: |
| | | – Anemia from blood loss |
| | | – Atrial fibrillation |
| | | – Poor pain control |
| Actions | – Reviewed patient history to learn about blood loss, blood/fluids administered, change since surgery | – Checked dressing on wound |
| | | – Checked blood pressure |
| | | – Ordered IV fluids |
| | | – Ordered medication to increase blood pressure |
| | | – Increased oxygen |
| Time 2 | | |
| Cues | – Low oxygen status | – Patient appears mildly confused |
| | – Crackles on lungs suggest fluid overload | |
| | – Patient had not received blood products | |
| | – Combination of low blood pressure, high heart rate, high temperature, low urine output | |
| Assessment | Differential includes: | Differential includes: |
| | – Fluid overload | – Sepsis |
| | – Under-resuscitation | – Bleeding |
| | – Heart failure | |
| | – Pulmonary edema | |
| | – Poor pain control | |
| | – Collapsed lungs | |
| | – Free air or bowel perforation | |
| | – Fluid-filled process in lungs | |
| Actions | – Conducted physical exam | – Held off ordering pain medication to monitor and assess mental status |
| | – Ordered labs (CBC panel and lactate) | – Ordered ABG to assess ventilation and lactate |
| | – Ordered pain medication | – Assessed belly again |
| | – Ordered medication to increase blood pressure | – Told nurse to prepare to intubate |
| | – Ordered IV fluids | |
| | – Called to have more blood available | |
| | – Ordered imaging of chest and abdomen | |
| Time 3 | | |
| Cues | – Elevated lactate | – Elevated lactate |
| Assessment | Differential includes: | Differential includes: |
| | – Under-resuscitation | – Septic shock |
| | – Transfusion-related lung injury | |
| | – Transfusion-related cardiac injury | |
| | – Acute heart failure | |
| | – Postoperative pain | |
| | – Flash pulmonary edema | |
| | – Acute kidney injury | |
| Actions | – Called the surgical team | – Ordered pain medication |
| | – Planned to move patient to intensive care unit | – Committed to intubating |
| | | – Called the surgical team |
| | | – Planned to move patient to intensive care unit |
| End of simulation | | |
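One way to make the holistic contrast in Table 4 comparable across many transcripts is to record each participant's differential at each time point and track how its size changes. The Python sketch below does this for the two Brown transcripts, using only the diagnoses listed in Table 4. It is a hypothetical illustration, not part of the study's analysis: the data layout, the function names, and the single-diagnosis "converged" rule are assumptions introduced here. Time 1 is omitted because Table 4 lists an explicit differential for both participants only at Times 2 and 3.

```python
# Differential diagnoses as listed in Table 4 (Brown scenario), keyed by time point.
# Only Time 2 and Time 3 are included, because Table 4 lists an explicit
# differential for both participants only at those points.
DIFFERENTIALS = {
    "Participant 1": {
        2: ["Fluid overload", "Under-resuscitation", "Heart failure",
            "Pulmonary edema", "Poor pain control", "Collapsed lungs",
            "Free air or bowel perforation", "Fluid-filled process in lungs"],
        3: ["Under-resuscitation", "Transfusion-related lung injury",
            "Transfusion-related cardiac injury", "Acute heart failure",
            "Postoperative pain", "Flash pulmonary edema", "Acute kidney injury"],
    },
    "Participant 2": {
        2: ["Sepsis", "Bleeding"],
        3: ["Septic shock"],
    },
}


def differential_sizes(differentials):
    """Number of candidate diagnoses each participant listed at each time point."""
    return {
        participant: {time: len(dx) for time, dx in sorted(by_time.items())}
        for participant, by_time in differentials.items()
    }


def converged(by_time):
    """Hypothetical heuristic: True if the latest listed differential holds a single diagnosis."""
    latest = max(by_time)
    return len(by_time[latest]) == 1


if __name__ == "__main__":
    sizes = differential_sizes(DIFFERENTIALS)
    for participant, by_time in DIFFERENTIALS.items():
        status = "converged" if converged(by_time) else "still broad"
        print(participant, sizes[participant], status)
```

For these two transcripts the sizes are 8 then 7 for Participant 1 and 2 then 1 for Participant 2, mirroring the narrative contrast between a differential that stays broad to the end and one refined to septic shock. A single-diagnosis threshold is only one possible operationalization; any such rule would need to be checked against the holistic reading of the transcripts rather than replace it.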

Discussion and Conclusion

We highlight three practical challenges in scaling CTA from small, in-depth studies to a large-sample, multi-site study. The first practical challenge involved standardizing the interview technique. With a smaller research team, interviewers can usually be more agile, adapting the technique as needed while maintaining common ground across the team; this leaves more room for interviewers to exercise their own judgment and follow their curiosity. For this larger research team, distributed across multiple sites and with varied backgrounds, pilot interviews revealed too much variability in how the interviews were conducted to support analysis across sites. Delays in data collection due to the global COVID-19 pandemic may have further increased variability between interviewers. Therefore, more standardization was required. We found that creating a streamlined interview guide and hosting many virtual training sessions helped us establish a process that elicited the data needed to address our study goals.

The second practical challenge was developing a strategy for training this diverse and distributed team of interviewers. We created virtual training sessions that addressed both the specific interview procedures and the role of the interviewer. We discovered that there were social norms to overcome when speaking with anesthesiologist interviewees about their domain of expertise. Not all interviewers understood that their role was to lead and direct the interview rather than follow along as one might in a social situation. In some cases, interviewers needed to overcome a perceived power differential between the physician interviewee and the non-physician interviewer. Many interviewers needed to learn specific strategies for politely but effectively interrupting and redirecting the participating anesthesiologists while maintaining positive rapport during the interview sessions.

The third practical challenge was developing an analysis strategy for this large data set. We began by sampling two high performers and two low performers from each of the four sites for each scenario, yielding 16 transcripts per scenario and 64 in total. We established four two-person analysis teams to conduct in-depth analysis of the 16 transcripts from each scenario. Teams analyzed each transcript for themes related to decision processes (decompositional analysis) and also examined each participant's description of the unfolding incident (holistic analysis). Beginning with a sample of the data allowed us to establish an analysis strategy, develop a codebook, and explore the data. These analysis activities lay the foundation for setting priorities and choosing approaches for analyzing additional transcripts, perhaps more streamlined and efficient (and likely narrower) ones. In summary, key lessons for adapting CTA methods for a large, distributed team include the following:

Standardization. Increased standardization of technique was needed for this large, multi-site study. This included a scripted introduction, a clear interview guide designed to be used in real time as a job aid, and note-taking forms.

Training. Multiple training sessions that included demonstrations, practice interviews, coaching, and critique of recorded interviews helped prepare interviewers. Although we began with in-person training, constraints imposed by the COVID-19 pandemic led us to create virtual training that proved effective.

Overcoming social norms. Interviewers benefitted from coaching that helped them understand and communicate their role in directing the interview, politely interrupting and redirecting, and maintaining rapport.

Staged analysis. For this large data set, starting with a subset of data allowed us to explore the data and refine an analysis strategy that sets the stage for additional analysis of the larger dataset.
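The staged analysis began with a stratified subset: two high and two low performers per site for each scenario. The Python sketch below shows how such a subset could be drawn from a pool of transcript records. It is an illustration under assumptions, not the study's procedure: the site labels, the record fields, the function name `stratified_sample`, and the seeded random draw within each stratum are all hypothetical, since the paper does not say how transcripts were chosen within a stratum or how performers were classified as high or low.

```python
import random
from collections import Counter

SITES = ("Site A", "Site B", "Site C", "Site D")  # hypothetical labels for the four sites
SCENARIOS = ("Brown", "Hines", "Wilson", "Jones")
PER_STRATUM = {"high": 2, "low": 2}  # two high and two low performers per site and scenario


def stratified_sample(pool, seed=0):
    """Draw the analysis subset from a pool of transcript records.

    Each record is a dict with "id", "site", "scenario", and "performance"
    ("high" or "low"). Within each site x scenario x performance stratum, the
    required number of transcripts is drawn at random (an assumption made for
    this sketch). Raises ValueError if any stratum is too small.
    """
    rng = random.Random(seed)
    sample = []
    for scenario in SCENARIOS:
        for site in SITES:
            for level, k in PER_STRATUM.items():
                stratum = [
                    t for t in pool
                    if t["scenario"] == scenario and t["site"] == site
                    and t["performance"] == level
                ]
                if len(stratum) < k:
                    raise ValueError(
                        f"only {len(stratum)} {level} transcript(s) for {scenario} at {site}"
                    )
                sample.extend(rng.sample(stratum, k))
    return sample


if __name__ == "__main__":
    # Synthetic pool: three placeholder transcripts in every stratum.
    pool = [
        {"id": f"{sc}-{si}-{lvl}-{i}", "site": si, "scenario": sc, "performance": lvl}
        for sc in SCENARIOS for si in SITES for lvl in PER_STRATUM for i in range(3)
    ]
    subset = stratified_sample(pool)
    print(len(subset), Counter(t["scenario"] for t in subset))
```

With four sites, the design fixes the subset at 4 × (2 + 2) = 16 transcripts per scenario and 64 overall, matching the denominators in Table 3. Raising an error for an undersized stratum, rather than silently taking fewer transcripts, keeps the per-scenario denominators equal; a stratum could come up short because of lost or unusable recordings, as described in the limitations.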

Given that this was our first experience using a large, distributed interviewing team with varied backgrounds, we were eager for evidence that the cognitive interviews worked and that they successfully elicited cognitive aspects of performance related to decision-making. Over the course of the study, 14 different people were trained and conducted cognitive interviews. Our analysis suggests that even with this large, diverse team, the cognitive interviews were successful. Participants were able to articulate what cues they noticed, how they made sense of them, their goals, and the rationale for their actions.

One benefit of the approach used in this study was substantial scheduling flexibility and improved interviewer availability on study days: any of our trained interviewers could remotely cover upcoming scheduling gaps at another site or fill in for a sick interviewer at the last minute, without delaying the study or rescheduling participants. Because these full-day simulation studies at four different sites required extensive resources, the large, remotely accessible interviewer team became an important asset, saving valuable time and money (e.g., no travel costs or missed data collection opportunities).

One limitation of this study is that we did not compare different interview techniques; other techniques may have elicited more detail or depth. However, the data collected are sufficient to meet our study goals, namely, eliciting examples of decision processes to inform the hypothesized hybrid model of decision-making for anesthesiologists and exploring differences in decision processes across participants. Another limitation stems from the complexity of such a large, distributed study design, which relies heavily on audio, video, and information technology. Specifically, some of the interview data were lost or incomplete (e.g., recordings that captured only a small portion of the interview or had poor audio quality). Some recordings were not uploaded, or their files were misnamed, making it impossible to track down the correct recordings. Some of the companion note-taking matrices were not shared with the analysis team and could not be tracked down after cognitive interviewers left the project. Many of these issues stemmed from technical challenges (e.g., large file sizes) and from each site tailoring study procedures to fit its own constraints. Despite these logistical challenges, only a small number of interviews were lost: in identifying 64 transcripts for this initial analysis, only two of those screened were deemed unusable because of technical difficulties.

Although cognitive task analysis methods are traditionally tailored to each project, in most cases a small set of interviewers conducts a relatively small number of in-depth interviews that are analyzed extensively, often iteratively. CTA is often used in front-end, exploratory projects to understand cognitive challenges and how experienced practitioners manage complexity. The case study discussed in this paper extends the use of CTA by streamlining the methods to support large-scale data collection.

These preliminary analyses set the stage for analysis of the larger data set, which will inform the proposed hybrid model of decision-making and characterize differences in decision processes between high and low performers. Insights from the preliminary analysis will inform more targeted hypotheses to be explored in the larger data set. For example, if particular patterns of cues, assessments, and actions appear to distinguish skilled from unskilled performances in these preliminary analyses, we will investigate whether the same patterns appear in the larger data set and whether mid-level performances show a distinct pattern. We expect that findings from these analyses will have implications for the design of anesthesiologist training and will point to future lines of follow-on research.


Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by grant 5R18HS026158 to M. B. Weinger and Vanderbilt University Medical Center from the Agency for Healthcare Research and Quality (AHRQ, Rockville, MD) and grants K12HS026395 and K01HS029042 to M. E. Salwei from AHRQ. The views expressed in this article are those of the authors and not those of AHRQ.

Biographies

Laura G. Militello is co-founder and CEO at Applied Decision Science, LLC, a research and development company that studies decision making in complex environments. She also co-founded Unveil, LLC, a company that leverages emerging technologies such as augmented reality to deliver training to combat medics and others. She conducts cognitive task analysis to support design of technology and training. She is an active member of the Naturalistic Decision Making community and cohosts the NDM podcast.

Megan E. Salwei, PhD, is a Research Assistant Professor in the Center for Research and Innovation in Systems Safety (CRISS) in the Departments of Anesthesiology and Biomedical Informatics at Vanderbilt University Medical Center. She received her PhD in Industrial and Systems Engineering from the University of Wisconsin-Madison. Her research applies human factors engineering methods and principles to improve the design of health information technology (IT) to support clinicians' work and improve patient safety.

Ms. Carrie Reale is a board-certified nurse informaticist and human factors researcher with the Center for Research and Innovation in Systems Safety (CRISS) at Vanderbilt University Medical Center. Her research focus is on the application of human factors methods to improve the safety and usability of healthcare technology, including electronic health record user interfaces, clinical decision support tools, and medical devices.

Christen Sushereba is a research associate at Applied Decision Science. She applies cognitive engineering and human factors methods to a variety of areas, including military, medicine, workload, and human-automation teaming. Ms. Sushereba has over 10 years of experience applying cognitive task analysis methods to address research questions about the effectiveness of training and new technology solutions. Ms. Sushereba holds an M.S. degree in Human Factors and Industrial/Organizational Psychology from Wright State University in Dayton, Ohio.

Dr. Jason M. Slagle is a Research Associate Professor in the Center for Research and Innovation in Systems Safety (CRISS) at VUMC. Dr. Slagle applies human factors principles and methodologies in a wide variety of clinical domains to study how factors such as technology, non-routine events, experience, workload, teamwork, and fatigue affect performance. He also works on the design and evaluation of health information technology (HIT) and clinical decision support tools.

David Gaba, MD, is Associate Dean for Immersive and Simulation-based Learning and Professor of Anesthesiology at Stanford School of Medicine. He is a Staff Physician and Founder of the Simulation Center at the VA Palo Alto Health Care System. For many years he has performed theoretical and empirical research on the decision making and performance of anesthesiologists. He was the founding Editor-in-Chief of Simulation in Healthcare, the primary peer-reviewed simulation journal, and served in that role for its first 12 years.

Matthew B. Weinger, MD, MS, is the Norman Ty Smith Chair in Patient Safety and Medical Simulation and a professor of anesthesiology, biomedical informatics, and medical education at Vanderbilt University. He is the director of the Center for Research and Innovation in Systems Safety (CRISS) at Vanderbilt University Medical Center. Dr. Weinger is a fellow of the American Association for the Advancement of Science (AAAS) and of the Human Factors and Ergonomics Society (HFES).

John Rask, MD, is Professor Emeritus of Anesthesiology at the University of New Mexico. Clinically active for 38 years, he was Director of the UNM BATCAVE Simulation Center. He has been a member of the ASA Editorial Boards for Simulation Education and Interactive Computer-based Education, and was an ABA Examiner. His academic interests include international simulation faculty development, decision-making in medicine, the use of simulation for assessment, and the use of XR/VR/AR in medical education.

Janelle Faiman, who trained as a sociologist, is a human factors specialist in CRISS at Vanderbilt University Medical Center. She focuses on the application of human factors methods to improve the safety and usability of healthcare technology, including EHR user interfaces, clinical decision support tools, and medical devices.

Michael Andreae, MD, PhD, is Professor of Anesthesiology at the University of Utah, Salt Lake City, UT. His research focuses on health systems and healthcare disparities, using mixed and participatory methods and grounded theory to investigate decision-making about, and facilitators of and barriers to, equitable adherence to evidence-based guidelines. Dr. Andreae is board certified in Anesthesiology; he holds an MD from Bonn and a PhD from Freiburg University, Germany.

Amanda R. Burden, MD is a Professor of Anesthesiology at Cooper Medical School of Rowan University. Dr. Burden’s research and publications address the use of simulation to explore a range of physician education and patient safety issues, particularly involving critical thinking and decision-making during crises. Dr. Burden completed her residency and fellowship training in anesthesiology and pediatric anesthesiology at the Hospital of the University of Pennsylvania and Children’s Hospital of Philadelphia.

Shilo Anders, PhD, is a Research Associate Professor in the Departments of Anesthesiology, Biomedical Informatics, and Computer Science at Vanderbilt University. She trained as a cognitive engineer, receiving her PhD from The Ohio State University. Dr. Anders' research interest is to apply human factors engineering as an approach to improve system design, individual and team performance, and patient safety and quality in healthcare, using simulation and in real-world environments.

Footnotes

Supplemental Material

Supplemental material for this article is available online.

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Contributor Information

Laura G. Militello, Applied Decision Science, Kettering, OH, USA

Megan E. Salwei, Center for Research and Innovation in Systems Safety, Department of Anesthesiology & Biomedical Informatics, Vanderbilt University Medical Center, Nashville, TN, USA

Carrie Reale, Center for Research and Innovation in Systems Safety, Department of Anesthesiology, Vanderbilt University Medical Center, Nashville, TN, USA.

Christen Sushereba, Applied Decision Science, Kettering, OH, USA.

Jason M. Slagle, Center for Immersive & Simulation-based Learning, Department of Anesthesiology, Perioperative & Pain Medicine, Stanford School of Medicine, Stanford, CA, USA

David Gaba, Patient Simulation Center, National Center for Collaborative Healthcare Innovation VA Palo Alto Health Care System.

Matthew B. Weinger, Center for Research and Innovation in Systems Safety, Department of Anesthesiology & Biomedical Informatics, Vanderbilt University Medical Center, Nashville, TN, USA

John Rask, Department of Anesthesiology, University of New Mexico, Albuquerque, NM, USA.

Janelle Faiman, Center for Research and Innovation in Systems Safety, Department of Anesthesiology, Vanderbilt University Medical Center, Nashville, TN, USA.

Michael Andreae, Department of Anesthesiology, University of Utah, Salt Lake City, UT, USA.

Amanda R. Burden, Cooper Medical School of Rowan University, Camden, NJ, USA

Shilo Anders, Center for Research and Innovation in Systems Safety, Department of Anesthesiology & Biomedical Informatics, Vanderbilt University Medical Center, Nashville, TN, USA.

References

- Anders S, Reale C, Salwei ME, Slagle J, Militello LG, Gaba D, Sushereba C, & Weinger MB (2022, March 2023). Using a hybrid decision making model to inform qualitative data coding [Paper presentation]. International Symposium on Human Factors and Ergonomics in Health Care, New Orleans, LA, United States.

- Braun V, & Clarke V (2012). Thematic analysis. In Cooper H, Camic PM, Long DL, Panter AT, Rindskopf D, & Sher KJ (Eds.), APA handbook of research methods in psychology, Vol. 2. Research designs: Quantitative, qualitative, neuropsychological, and biological (pp. 57–71). American Psychological Association.

- Crandall B, Klein GA, & Hoffman RR (2006). Working minds: A practitioner's guide to cognitive task analysis. MIT Press.

- Gaba DM (1992). Dynamic decision-making in anesthesiology: Cognitive models and training approaches. In Evans DA & Patel VL (Eds.), Advanced models of cognition for medical training and practice (pp. 123–147).

- Gaba DM, Fish KJ, Howard SK, & Burden AR (2014). Crisis management in anesthesiology (2nd ed.). Elsevier.

- Hoffman RR, & Militello LG (2008). Perspectives on cognitive task analysis: Historical origins and modern communities of practice. Taylor and Francis.

- Klein G (2007). Performing a project premortem. Harvard Business Review, 85(9), 18–19. https://hbr.org/2007/09/performing-a-project-premortem

- Klein G, Calderwood R, & Clinton-Cirocco A (2010). Rapid decision making on the fire ground: The original study plus a postscript. Journal of Cognitive Engineering and Decision Making, 4(3), 186–209. https://doi.org/10.1518/155534310X12844000801203

- Militello LG, & Anders S (2020). Incident-based methods for studying expertise. In Ward P, Schraagen JM, Gore J, & Roth EM (Eds.), The Oxford handbook of expertise. Oxford University Press.

- Militello LG, Hurley RW, Cook RL, Danielson EC, DiIulio J, Downs SM, Anders S, & Harle CA (2020). Primary care clinicians' beliefs and strategies for managing chronic pain in an era of a national opioid epidemic. Journal of General Internal Medicine, 35(12), 3542–3548. https://doi.org/10.1007/s11606-020-06178-2

- Militello LG, & Hutton RBJ (1998). Applied cognitive task analysis (ACTA): A practitioner's toolkit for understanding cognitive task demands. Ergonomics, 41(11), 1618–1641. https://doi.org/10.1080/001401398186108

- Reale C, Sushereba C, Anders S, Gaba D, Burden A, & Militello L (2021, June 21–23). What good are models? [Paper presentation]. Naturalistic Decision Making/Resilience Engineering Conference, France.

- Strauss A, & Corbin JM (Eds.). (1997). Grounded theory in practice. Sage Publications.
