Abstract

Real-world actions require one to simultaneously perceive, think, and act on the surrounding world, which demands the integration of (bottom-up) sensory information with (top-down) cognitive and motor signals. Studying these processes involves the intellectual challenge of cutting across traditional neuroscience silos, and the technical challenge of recording data in uncontrolled natural environments. However, recent advances in techniques, such as neuroimaging, virtual reality, and motion tracking, allow one to address these issues in naturalistic environments for both healthy participants and clinical populations. In this review, we survey six topics in which naturalistic approaches have advanced our understanding of both fundamental brain function and how neurologic deficits influence goal-directed, coordinated action in naturalistic environments. The first part conveys fundamental neuroscience mechanisms related to visuospatial coding for action, adaptive eye-hand coordination, and visuomotor integration for manual interception. The second part discusses applications of such knowledge to neurologic deficits, specifically, steering in the presence of cortical blindness, impact of stroke on visual-proprioceptive integration, and impact of visual search and working memory deficits. This translational approach, extending knowledge from lab to rehab, provides new insights into the complex interplay between perceptual, motor, and cognitive control in naturalistic tasks that are relevant for both basic and clinical research.

Introduction

Real-world sensorimotor behavior (from grasping a toothbrush after breakfast to washing the dishes after dinner) seems so simple that it is easily taken for granted, so long as one is healthy. But for a neuroscientist, such “eye-hand coordination” behavior requires the seamless integration of multiple brain processes. The visual aspects alone involve object recognition and localization within complex scenes, and then the integration of this information with proprioception and other senses for use in action. The action systems include coordinated control of the eyes, head, and hand in these examples. These mechanisms are guided by top-down cognitive processes, including directed attention, decision-making, and planning. Neurologic deficits can severely impair the daily goal-directed behavior that we take for granted, creating major challenges for various patient populations.

Scientists face several challenges in understanding how the brain coordinates real-world behavior, and how to understand and treat deficits in real-world sensorimotor behavior. One challenge is that the neural mechanisms for sensory systems, perception, cognition, sensorimotor transformations, and motor coordination have traditionally been studied in isolation, whereas one must understand how they interact to study real-world, coordinated action (Ballard et al., 1992; Johansson et al., 2001; Crawford et al., 2004; Land, 2006). A second challenge is that natural behavior, by its nature, is relatively uncontrolled compared with typical psychophysics tasks, hard to replicate in the laboratory, and hard to measure, especially in conjunction with brain activity. This factor has been mitigated by more sophisticated visual presentation technologies, body motion tracking, and wearable devices for behavioral and neuroimaging studies (Fig. 1A).

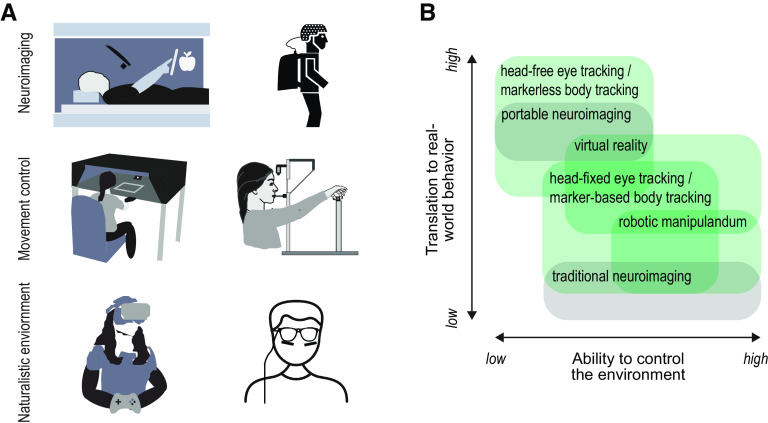

Figure 1.

Measuring coordinated action with different experimental tools. A, Top, The neural control of eye-hand and body coordination can be probed using MRI-compatible tablets and eye-trackers in the scanner or by developing portable setups (e.g., portable EEG system). Middle, Using a robotic manipulandum allows experimental control of the visual and movement space (e.g., mechanical perturbations) and a head-fixed setup enables well-calibrated high-precision eye tracking, while investigating real object manipulation. Bottom, Studies using virtual reality setups or head-mounted eye-tracking glasses allow the study of eye-hand coordination in naturalistic environments. B, Schematic represents how different experimental tools vary along two axes: the ability of the experimenter to control the visual and physical environment (x axis) and the translation of the observed behavior to the real world (y axis). Green boxes represent behavioral methods. Gray boxes represent neuroimaging techniques.

One approach to overcome the challenge of maintaining experimental control in a realistic environment is to study action tasks in virtual reality (Fig. 1B), which allows the manipulation of object appearance and physical properties within a naturalistic scene (Diaz et al., 2013; Rolin et al., 2019; Cesanek et al., 2021). Despite these advantages, the ecological validity of the virtual presentation of objects for action has been questioned (Harris et al., 2019). Another approach is to use portable eye and head tracking in combination with algorithmic methods that classify eye and/or body movements (Nath et al., 2019; Kothari et al., 2020; Matthis and Cherian, 2022). These recent technological advances in hardware and software allow us to move research on visuomotor control from the laboratory to real-world applications, with the ultimate goal of translating fundamental findings to natural behavior in healthy and brain-damaged individuals.

The current review, based on the 2023 Society for Neuroscience Mini-Symposium Perceptual–Cognitive Integration for Coordinated Action in Naturalistic Environments, illustrates such progress through six topics that investigate the mechanisms for goal-directed, coordinated action using technologies that span the naturalistic approaches shown in Figure 1. The first three topics focus on fundamental research questions (visuospatial coding of action-relevant object location, adaptive eye-hand coordination, visuomotor integration for manual interception), whereas the final three highlight applications to the understanding of neurologic deficits (steering in the presence of cortical blindness (CB), visual-proprioceptive integration in stroke survivors, impact of working memory deficits on visual and manual search). Together, these topics provide a coherent narrative that illustrates how studying naturalistic behaviors improves the potential for the translation of neuroscience to real-world clinical situations.

Goal-directed action in healthy individuals

An understanding of the brain, and its deficits, begins with the study of normal function. This first section addresses three such topics: visuospatial coding of action goals, fundamental behavioral aspects of eye-hand coordination, and specific visuomotor mechanisms for interception. In each case, we consider how laboratory experiments can be translated to real-world conditions.

Spatial coding for goal-directed action in naturalistic environments

A first step in understanding the neural mechanisms for goal-directed reach involves the mechanisms whereby the visual system encodes spatial goals. This can be accomplished using one of two main categories of reference frame. The first type (egocentric reference frames) involves determining the position of an object with respect to the self (i.e., eye position, hand position, etc.) (Blohm et al., 2009; Crawford et al., 2011). Typically, this has been associated with dorsal regions of cortex (Fig. 2A), particularly within medial posterior parietal cortex and frontal regions (Zaehle et al., 2007; Chen et al., 2014). These regions are able to use current or remembered visual information to program appropriate motor plans online (Buneo and Andersen, 2006; Piserchia et al., 2017).

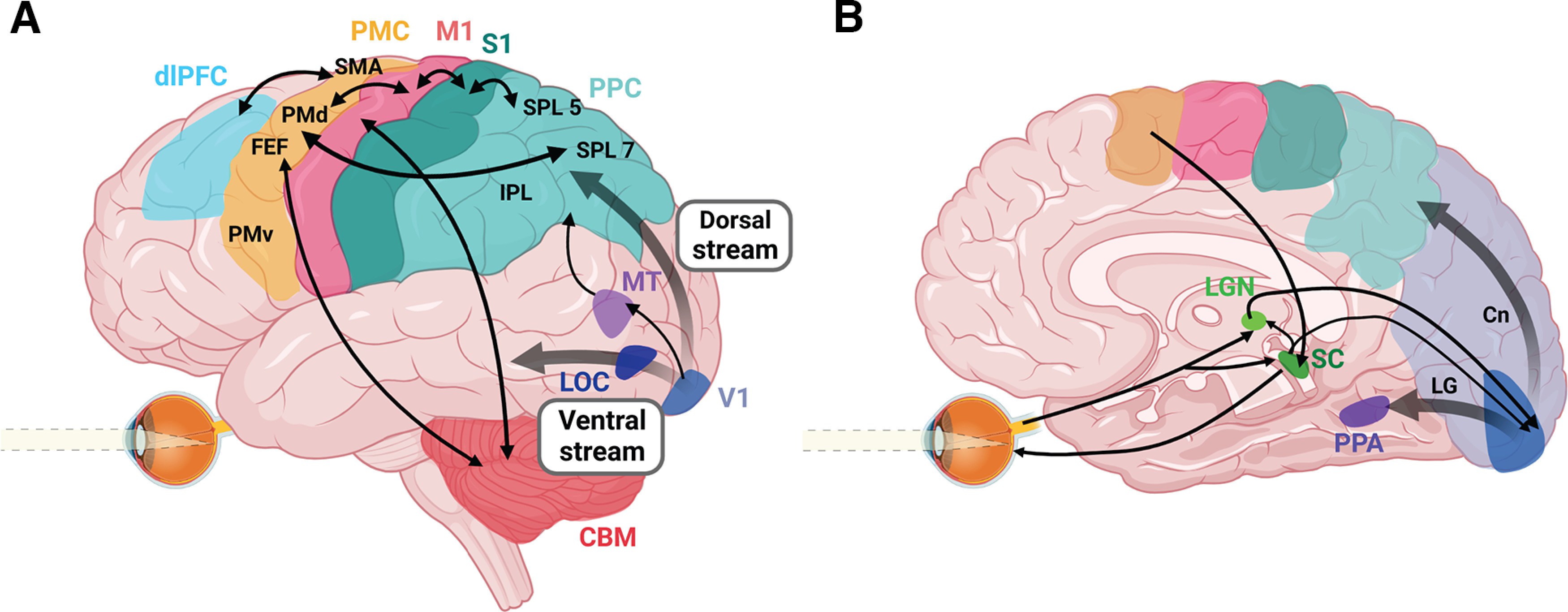

Figure 2.

Schematic overview of the major brain regions and pathways involved in goal-directed eye-hand coordination. Pathways for vision, object and motion perception, eye movements, and top-down cognitive strategies are integrated with cortical, subcortical, and cerebellar networks for sensorimotor transformations to produce coordinated action. A, Left brain lateral view. B, Right brain medial view. LOC, Lateral occipital cortex; MT, middle temporal area; PPC, posterior parietal cortex; SPL 7, superior parietal lobule area 7; SPL 5, superior parietal lobule area 5; IPL, inferior parietal lobule; S1, somatosensory cortex; M1, primary motor cortex; PMC, premotor cortex; SMA, supplementary motor area; PMd, dorsal premotor cortex; FEF, frontal eye fields; PMv, ventral premotor cortex; dlPFC, dorsolateral PFC; CBM, cerebellum; SC, superior colliculus; LGN, lateral geniculate nucleus; PPA, parahippocampal place area; LG, lingual gyrus; Cn, cuneus. Created with Biorender.com.

However, in real-world circumstances, egocentric mechanisms are often augmented or replaced by the use of allocentric reference frames (i.e., the coding of object position relative to other surrounding visual landmarks). The ventral visual stream (Fig. 2A) has been associated with the use of allocentric reference frames for object location (Adam et al., 2016). Humans can be instructed to rely on one or the other cue through “top-down” instructions (Chen et al., 2011; Chen and Crawford, 2020), but normally this weighting occurs automatically, through “bottom-up” processing of sensory inputs (Byrne and Crawford, 2010). In this case, egocentric and allocentric information is weighted differently, depending on context (Neely et al., 2008; Fiehler and Karimpur, 2023).
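One common way to formalize such context-dependent weighting is reliability-based cue combination, in which each estimate is weighted by the inverse of its variance. The sketch below illustrates that idea only; it is not the model used in the cited studies, and the function name, the one-dimensional positions, and the variance values are all hypothetical.

```python
def combine_estimates(ego_pos, ego_var, allo_pos, allo_var):
    """Inverse-variance weighted average of two location estimates.

    ego_pos / allo_pos: target location (e.g., in cm) from each reference frame.
    ego_var / allo_var: variance (unreliability) of each estimate.
    Assumes independent Gaussian noise, which is a modeling assumption.
    """
    ego_reliability = 1.0 / ego_var
    allo_reliability = 1.0 / allo_var
    w_allo = allo_reliability / (ego_reliability + allo_reliability)
    combined = (1.0 - w_allo) * ego_pos + w_allo * allo_pos
    combined_var = 1.0 / (ego_reliability + allo_reliability)
    return combined, combined_var, w_allo


# Hypothetical numbers: a noisy egocentric estimate at 10 cm (variance 4)
# and a more reliable landmark-based estimate at 14 cm (variance 1).
pos, var, w_allo = combine_estimates(10.0, 4.0, 14.0, 1.0)
```

Under these assumed values, the combined estimate is pulled toward the more reliable allocentric cue. Changing the variances mimics a change in context, such as less stable landmarks.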

To understand how those findings translate to real-world environments, recent investigations have opted for testing within more naturalistic environments, such as 2D complex scenes or 3D virtual environments (Fiehler and Karimpur, 2023). These studies have shown that cognitive factors influence the weighting of egocentric and allocentric visual information, including task relevance (Klinghammer et al., 2015) and prior knowledge (Lu et al., 2018). Another factor in the coding of object location that has garnered recent attention is object semantics. For example, if a scene contains objects from two different semantic categories (e.g., food vs utensils in a kitchen), a target object's perceived location will be influenced more by surrounding objects of the same category, compared with unrelated objects (Karimpur et al., 2019). However, many questions remain about how scene semantics affect the spatial coding of objects. This includes whether these effects reside at the level of individual object properties, interactions thereof, and/or whether these properties must be scene-specific (Võ, 2021).

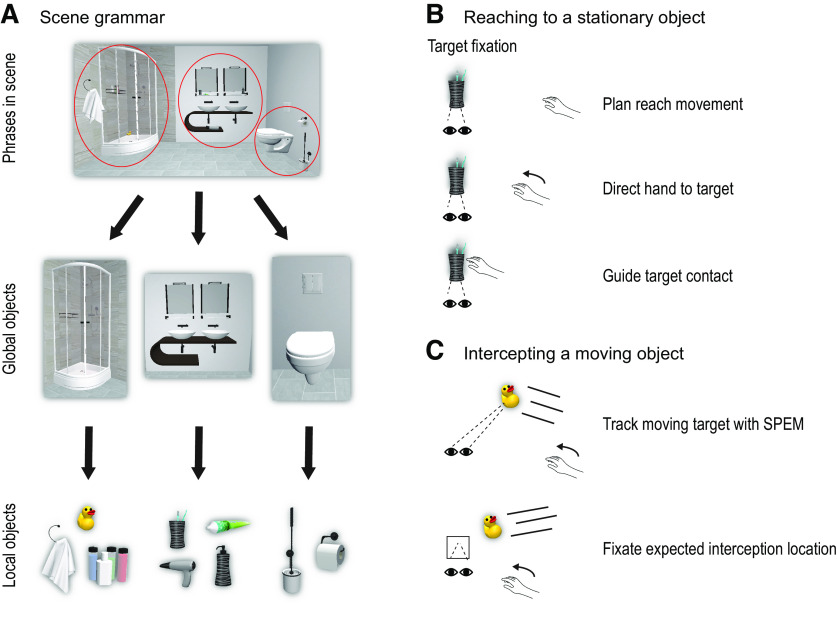

Given the richness of information present within scenes, one way to systematically understand natural behaviors has been prompted by the categorization of scenes according to a particular “grammar” defined by specific building blocks in a hierarchical structure (Fig. 3A) (Võ, 2021). In this hierarchy, the lowest level is the (local) object level (see above) (Karimpur et al., 2019), whereby scene context information can be extracted from the nature or category of objects (small, moveable objects) found therein (e.g., kitchen-related objects are cues to the likelihood that they are in a kitchen). At present, this is the only level where a causal relationship between semantic information and spatial coding of objects has been established (Karimpur et al., 2019). Intuitively, when one thinks about designing a given scene, the large global objects (i.e., large, immovable objects with strong, statistical local object associations) are the first ones whose positions are defined (Draschkow and Võ, 2017) and, only later, are the local objects placed. Accordingly, the next level of the scene grammar (semantics) hierarchy is the global object level (Võ, 2021). Given the relationship between these two levels in the scene grammar hierarchy of location perception, it remains to be determined how the global object level may also influence local object spatial coding in naturalistic environments. Current efforts are investigating these relationships, as well as how gaze can guide spatial coding within different scenes (Boettcher et al., 2018; Helbing et al., 2022). Understanding how scene semantic information and spatial coding mechanisms interact will play an important role in understanding how surrounding stimuli influence object recognition, localization, and action.

Figure 3.

Visual signals for action. A, Visual scenes consist of “grammar” that is defined by building blocks in a hierarchical structure, consisting of phrases (top), global objects (middle), and associated local objects (bottom) (Võ, 2021). B, When reaching to stationary objects, the target object is commonly fixated throughout the reach, which allows the integration of foveal vision of the object and peripheral vision of the hand. C, When intercepting moving objects, the eyes either track the moving object with SPEMs or fixate on the expected interception location to guide the hand toward the object.

Toward adaptive eye-hand coordination in real-world actions

Once objects are localized, the brain can initiate the equally important process of acting on them. Real-world actions, such as cooking, involve the coordination of eye movements and goal-directed actions (Land, 2006). Past research has shown that eye and hand movements are functionally coordinated in a broad range of manipulation and interception tasks (de Brouwer et al., 2021; Fooken et al., 2021). When reaching for and manipulating objects, eye movements support hand movements by fixating critical landmarks as the hand approaches, and then shifting to the next landmark (Ballard et al., 1992; Land et al., 1999; Johansson et al., 2001).

In controlled laboratory environments, the eyes often remain anchored to the reach goal throughout the movement (Neggers and Bekkering, 2000, 2001). This has been associated with the inhibition of neuronal firing in the parietal saccade region by neurons in the parietal reach region (Fig. 2) (Hagan and Pesaran, 2022). In this case, where gaze (foveal vision) remains fixed on the reach goal, the hand is initially viewed in peripheral vision and then transitions across the retina toward the fovea (Fig. 3B). This allows one to compare foveal vision with peripheral vision of the hand to directly compute a reach vector in visual coordinates, whereas comparison of target and hand location in somatosensory coordinates requires extraretinal signals for eye and hand position (Sober and Sabes, 2005; Buneo and Andersen, 2006; Beurze et al., 2007; Khan et al., 2007). Further, peripheral vision of the hand can be used to rapidly (∼150 ms) correct for reach errors (Paillard, 1996; Sarlegna et al., 2003; Saunders and Knill, 2003, 2004; Smeets and Brenner, 2003; Dimitriou et al., 2013; de Brouwer et al., 2018). Fixating the goal at reach completion allows the use of central (i.e., parafoveal) vision, which engages slow visual feedback loops to guide fine control of object contact (Paillard, 1996; Johansson et al., 2001). In addition, central vision is used to monitor and confirm completion of task subgoals (Safstrom et al., 2014). Conversely, deviations of gaze from the goal can degrade reach accuracy and precision, especially in memory-guided reaching (Henriques et al., 1998), and can either improve or degrade performance in patients with visual field deficits, depending on direction of the goal relative to the deficit (Khan et al., 2005a, 2007).
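The distinction between the two ways of computing a reach vector can be sketched with a deliberately simplified two-dimensional example. It assumes that positions are angles in degrees and that gaze direction simply adds to retinal position. Real eye-head-body geometry is nonlinear, and all names and values here are hypothetical.

```python
def subtract(a, b):
    """Component-wise difference a - b of two 2D positions."""
    return tuple(x - y for x, y in zip(a, b))


def retinal_to_body(position_on_retina, gaze_direction):
    """Convert a retinal position to body coordinates using an extraretinal
    gaze signal (additive approximation, an assumption of this sketch)."""
    return tuple(p + g for p, g in zip(position_on_retina, gaze_direction))


# Hypothetical situation: gaze is anchored on the target (target on the fovea),
# and the hand is seen 15 deg left of and 5 deg below the fovea.
target_retinal = (0.0, 0.0)
hand_retinal = (-15.0, -5.0)

# Route 1: visual coordinates. Only retinal positions are needed.
visual_vector = subtract(target_retinal, hand_retinal)

# Route 2: body coordinates. Requires gaze direction (extraretinal signal)
# and a proprioceptive estimate of hand position.
gaze_direction = (20.0, 0.0)        # gaze relative to the body midline
hand_proprioceptive = (5.0, -5.0)   # hand position sensed in body coordinates
target_body = retinal_to_body(target_retinal, gaze_direction)
body_vector = subtract(target_body, hand_proprioceptive)
```

With noise-free signals the two routes give the same vector. In practice they would differ whenever the gaze or proprioceptive signals are noisy or biased, which is one reason the choice of coordinate frame matters.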

In naturalistic tasks, there is often a trade-off between fixating the eyes on the immediate movement goal versus deviating gaze toward other action-relevant information. Daily activities, such as locomotion (Jovancevic-Misic and Hayhoe, 2009; Matthis et al., 2018; Domínguez-Zamora and Marigold, 2021), ball catching (Cesqui et al., 2015; López-Moliner and Brenner, 2016), or navigation (Zhu et al., 2022), require the integration of low-level perceptual information and high-level cognitive goals (Tatler et al., 2011). The ongoing decision-making about which fixation strategy to prioritize, and when and where to move the eyes, depends on the visuomotor demands of the task (Sims et al., 2011), the visual context of the action space (Delle Monache et al., 2019; Goettker et al., 2021), and the availability of multisensory signals (Wessels et al., 2022; Kreyenmeier et al., 2023). Because action demands and perceptual context constantly change in naturalistic tasks, a major challenge for future research is to quantitatively describe ongoing visuomotor control in relation to changing environments.

Although humans perceive and act on the environment in parallel, the two mechanisms have mostly been studied in isolation. Critically, the dynamic interaction between perception and action depends on when and where relevant visual information is needed or becomes available. Whereas many perceptual events, such as the color change of a traffic light, are determined externally, the timing of motor events, such as contacting a target object, can to some extent be controlled by the actor. Past research has shown that people learn to predict temporal regularities of the environment (Nobre and Van Ede, 2023), and this knowledge can be exploited to intelligently aim eye movements while monitoring competing locations of interest (Hoppe and Rothkopf, 2016). An open research question is whether and how people use their perceptual expectation of external events to continuously adapt the timing of their ongoing movements and eye-hand coordination. A promising approach to understand the dynamic interaction between perceiving, thinking, and acting is to design tasks that require multitasking (e.g., when participants perform a perceptual task in parallel with an action task).

Visuomotor integration for manual interception

A major challenge for real-world eye-hand coordination is that action goals are not always stable. A case in point is manual interception behavior. Manual interception is a goal-directed action in which the hand attempts to catch, hit, or otherwise interact with a moving object. Studying interceptive actions offers two major advantages for understanding perceptual-motor integration in naturalistic environments: First, manual interceptions are ubiquitous in everyday tasks (e.g., quickly reacting to catch a falling object; Fig. 3C) and are a hallmark of skilled motor performance (e.g., in many professional sports). Second, interception tasks allow for simple, systematic manipulation of separable parameters related to both bottom-up sensory properties (e.g., target speed, occlusion) and top-down cognitive-motor strategies (e.g., deciding on interception location, accuracy demands) (Zago et al., 2009). Interceptions rely on combining sensorimotor predictions with online sensory information about the object and the hand, enabling continuous adjustments to intercept objects under varied spatial and temporal constraints (Brenner and Smeets, 2018). Precise timing is facilitated by integrating both object motion kinematics and temporal cues (Chang and Jazayeri, 2018), allowing for enhanced temporal predictions beyond what would be expected from perceptual judgments alone (de la Malla and López-Moliner, 2015; Schroeger et al., 2022).
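The combination of prediction and online correction can be illustrated with a minimal sketch. It assumes a target moving at constant velocity and a fixed correction gain; both are simplifying assumptions rather than properties reported in the cited work, and the function names and values are hypothetical.

```python
def predict_interception_point(position, velocity, time_to_contact):
    """Extrapolate the target position, assuming constant velocity."""
    return tuple(p + v * time_to_contact for p, v in zip(position, velocity))


def update_aim(current_aim, predicted_point, gain=0.5):
    """Shift the current aim point a fraction (gain) of the way toward the
    latest prediction, mimicking an online correction step."""
    return tuple(a + gain * (p - a) for a, p in zip(current_aim, predicted_point))


# Hypothetical target at (0.0, 0.3) m moving rightward at 0.4 m/s,
# with contact planned 0.5 s from now.
predicted = predict_interception_point((0.0, 0.3), (0.4, 0.0), 0.5)

# The hand was initially aimed at (0.1, 0.3) m; one correction step follows.
aim = update_aim((0.1, 0.3), predicted)
```

Repeating the prediction and update steps as new sensory samples arrive gives a simple picture of continuous adjustment. Manipulations such as occlusion would correspond to withholding new samples and relying on the last prediction.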

Eye movements play an integral role in interception performance (for review, see Fooken et al., 2021). As is the case with stationary objects (see above), deviations of gaze from the tracked object systematically degrade performance (Dessing et al., 2011). To maintain gaze on a moving object, humans combine saccades with smooth pursuit eye movements (SPEM) to enhance spatiotemporal precision for interception (van Donkelaar and Lee, 1994; Fooken et al., 2016), but engaging the SPEM system is less useful when the object motion trajectory is predictable or greater accuracy is required (de la Malla et al., 2019). When interception depends on accurately perceiving a moving object's shape (e.g., circle or ellipse), initial saccades are faster and gaze lags farther behind the object, compared with situations where object shape is irrelevant (Barany et al., 2020a), suggesting that eye movement strategies are adapted to task demands.

Compared with studies of reaching to static targets (Battaglia-Mayer and Caminiti, 2019), relatively few have investigated the neural basis of manual interception. Neurophysiological and neuroimaging studies of target interception have revealed that dorsal visual regions (Fig. 2A), including the visual middle temporal area (Bosco et al., 2008; Dessing et al., 2013) and the superior parietal lobule area 7 in posterior parietal cortex (Merchant et al., 2004; de Azevedo Neto and Júnior, 2018; Li et al., 2022), are involved in dynamically transforming visual motion information into motor plans. This sensorimotor information is reflected in primary motor cortex outputs (Merchant et al., 2004; Marinovic et al., 2011) and conveyed to brainstem areas, such as the superior colliculus, to trigger rapid interception (Contemori et al., 2021). Connections between cortex and the cerebellum (Spampinato et al., 2020) also contribute to accurate sensory prediction for timing of interception (Fig. 2) (Diedrichsen et al., 2007; Therrien and Bastian, 2019).

Neuroimaging studies of human manual interception are lacking, but advances in neuroimaging techniques for studying visuomotor interactions with static targets (for review, see Gallivan and Culham, 2015) can guide future approaches. For example, hand movement kinematics have been recorded during fMRI data acquisition using real-time video-based motion tracking (Barany et al., 2014), MRI-compatible tablets (Karimpoor et al., 2015), and real 3D action set-ups (Marneweck et al., 2018; Knights et al., 2022; Velji-Ibrahim et al., 2022) to reveal distinct activation patterns associated with naturalistic reaching and grasping across the cortical sensorimotor network (Fig. 2). Eye movements during scanning can now be directly reconstructed from the MR signal (Frey et al., 2021; Kirchner et al., 2022), when using MRI-compatible eye-trackers is not feasible. More sensitive multivariate data analysis techniques, such as representational similarity analysis, during complex action tasks have uncovered task-dependent neural representations of low-level muscle information and high-level kinematic and task demand information in primary motor cortex (Barany et al., 2020b; Kolasinski et al., 2020). Improvements in source localization in EEG and sophisticated techniques for studying whole-brain functional “connectivity,” such as graph theory analysis, can likewise contribute to studying the temporal evolution of sensory and motor information across frontal and parietal regions in less constrained environments (Ghaderi et al., 2023). Finally, emerging mobile neuroimaging systems (Stangl et al., 2023) are a promising tool for understanding neural dynamics in naturalistic settings, such as the table tennis task illustrated in Figure 1A. This experiment showed fluctuations in parietal-occipital and superior parietal cortices related to the object kinematics and predictability of the upcoming interception (Studnicki and Ferris, 2023). A multimodal combination of these new methods may help close the gap in our understanding of how the human brain continuously plans movements when action goals are not stable.
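For readers unfamiliar with representational similarity analysis, its core step is the construction of a dissimilarity matrix between the activity patterns evoked by different conditions. The sketch below uses correlation distance (1 minus Pearson r), one common choice. The patterns are invented toy values, and the code is not the analysis pipeline of the cited studies.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length activity patterns."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (norm_a * norm_b)


def dissimilarity_matrix(patterns):
    """Correlation distance (1 - r) between every pair of condition patterns."""
    return [[1.0 - pearson(p, q) for q in patterns] for p in patterns]


# Hypothetical response patterns (e.g., four voxels) for three task conditions.
patterns = [
    [1.0, 2.0, 3.0, 4.0],  # condition A
    [2.0, 4.0, 6.0, 8.0],  # condition B: same pattern shape as A, scaled
    [4.0, 3.0, 2.0, 1.0],  # condition C: reversed pattern
]
rdm = dissimilarity_matrix(patterns)
```

In this toy case, conditions A and B are nearly indistinguishable by correlation distance, whereas A and C are maximally dissimilar. An actual analysis would then compare such a matrix with matrices predicted by candidate models (e.g., muscle-based versus kinematic).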

Deficits in goal-directed action produced by neural damage

In this second half of the review, we explore ways in which the study of naturalistic behaviors following brain damage (primarily stroke) both sheds light on brain function and suggests possible means of diagnosis and rehabilitation. These topics range from the influence of central visual processing on real-world behaviors, such as driving, to disintegration of vision with the internal sense of body position (proprioception), to the impact of visual search and memory capacity on movement control after brain damage. Each example highlights how translational neuroscience helps one to understand the real-world impact of cortical damage.

CB and the use of optic flow in steering and navigation

Each year, up to half a million individuals in the United States suffer from stroke-related damage to the primary visual cortex (V1). When this damage is unilateral, it leads to CB in a quarter to a half of the contralateral visual field (Gilhotra et al., 2002; Pollock et al., 2011). CB negatively impacts autonomy and overall quality of life in many ways, including the ability to safely or legally drive (Papageorgiou et al., 2007; Gall et al., 2010; Pollock et al., 2011). Although individuals with CB are legally prohibited from driving in at least 22 U.S. states, those CB-affected drivers who choose to exercise their legal right to remain on the road experience significantly more motor vehicle accidents than visually intact controls (McGwin et al., 2016) and demonstrate more variable steering behavior (Bowers, 2016).

One possible explanation for these behavioral deficits is that the processing of global motion patterns commonly used to guide steering (optic flow) (Gibson, 1950; Warren et al., 2001) is disrupted in the presence of CB (Fig. 4) (Issen, 2013). In healthy individuals, partially obscuring or omitting the visual field does not significantly degrade the perception of heading (Warren and Kurtz, 1992), so driving deficits with CB are unlikely to result from a simple omission of visual information. An alternative explanation is that the blind field acts as a generator of neural noise (Cavanaugh et al., 2015). It is possible that this noise is spatially integrated with unaffected regions before the downstream representation of global motion in the middle temporal area (Adelson and Movshon, 1982) (Fig. 2A), and area MSTd (Schmitt et al., 2020). MSTd receives input from the middle temporal area, but has larger receptive fields, attributed to the decoding of whole-field patterns of global motion that arise during translation (van Essen and Gallant, 1994). Emerging research on motion processing for navigation in these areas promises further insights into understanding the impact of CB. Other research has used artificial neural networks to estimate and/or control heading on the basis of simulated input (Layton, 2021; Mineault et al., 2021). Finally, the use of mobile eye tracking in freely moving humans has provided some of our first empirical measurements of the statistics of retinal optic flow that might shape selectivity (Dowiasch et al., 2020; Muller et al., 2023). Together, these approaches provide valuable guidance for physiological investigations into how the brain responds to natural motion stimulation during translation, and how this is affected by cortical damage.

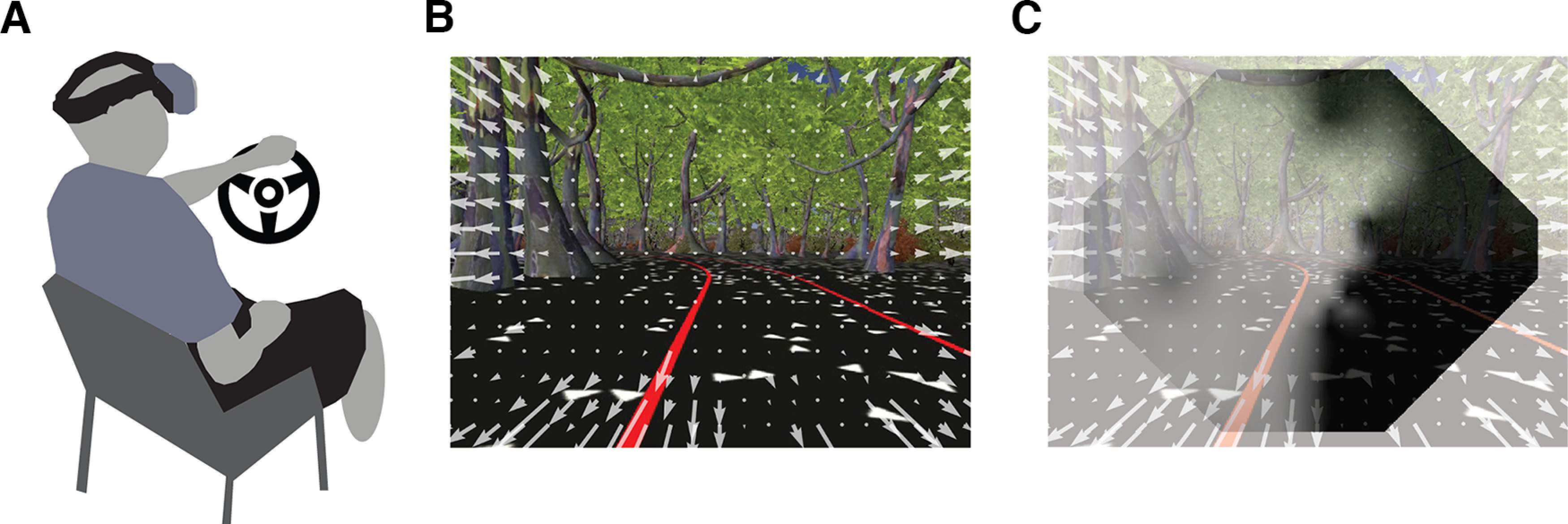

Figure 4.

The role of optic flow on visually guided steering. A, Drivers are immersed in a simulated environment seen through a head-mounted display with integrated eye tracking. B, Exemplary view inside the virtual reality as participants attempt to keep their head centered within a parameterized and procedurally generated roadway. This image is superimposed with a computational estimate of optic flow indicated here as white arrows (Matthis et al., 2018). C, To assess the effect of cortical blindness on the visual perception of heading will require the development of computational models of visually guided steering that account for the blind field. For illustrative purposes, we have superimposed the results from a Humphrey visual field test on the participant's view at a hypothetical gaze location with approximate scaling (reprinted from Cavanaugh et al., 2015 with permission).

When considering the role of optic flow experienced during navigation, it is also important to consider the role of eye movements and, in the context of CB, the compensatory gaze behaviors that appear spontaneously following the onset of CB (Elgin et al., 2010; Bowers et al., 2014; Bowers, 2016). As described above, gaze position has a strong influence on goal-directed behaviors in both healthy and brain-damaged individuals with visual field-specific deficits (Khan et al., 2005a, 2007). Likewise, altered gaze behaviors have a strong effect on steering, even in visually intact participants (Wilkie and Wann, 2003; Robertshaw and Wilkie, 2008). The effect of modified eye movements extends beyond influencing what portion of the scene is foveated, for two reasons: (1) saccade-related inputs to MST cause transient distortions in perceived heading direction (Bremmer et al., 2017); and (2) the rotational component of eye motion interacts with the optic flow produced by translation (Cutting et al., 1992). Eye movements therefore have the potential to play an active role in structuring the pattern of retinal optic flow in a way that is optimized for the visual guidance of steering (Matthis et al., 2022). Recent modeling work has demonstrated that instantaneous extraretinal information about the direction of gaze relative to heading is sufficient for the reproduction of human-like steering behaviors when navigating a slalom of waypoints (Tuhkanen et al., 2023). Others suggest that navigation may also leverage path planning and internal models (Alefantis et al., 2022; but see Zhao and Warren, 2015). In the context of CB, altered gaze behaviors may indicate a shift in reliance from noisy optic flow signals toward increased weighting of alternative forms of visual information (Warren et al., 2001), such as strong allocentric cues from the road edges (Land and Horwood, 1995).
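The interaction between eye rotation and translational optic flow can be made concrete with the standard rigid-motion flow equations for a pinhole eye with unit focal length. This is a textbook simplification offered as an illustration, not the model used in the studies cited above. The sign conventions, function name, depth, and rotation values are assumptions of this sketch.

```python
def retinal_flow(x, y, depth, translation, rotation):
    """Image velocity (u, v) at image point (x, y) for a static scene point.

    depth: distance of the scene point along the line of sight (Z > 0).
    translation: observer translation (tx, ty, tz), with z along the line of sight.
    rotation: eye rotation rates (wx, wy, wz) in rad/s about the x, y, and z axes.
    Only the translational terms depend on depth; the rotational terms do not.
    """
    tx, ty, tz = translation
    wx, wy, wz = rotation
    u = (-tx + x * tz) / depth + x * y * wx - (1 + x * x) * wy + y * wz
    v = (-ty + y * tz) / depth + (1 + y * y) * wx - x * y * wy - x * wz
    return u, v


forward = (0.0, 0.0, 1.0)  # translation straight along the line of sight

# Pure translation: the flow is zero at the heading point (0, 0).
u0, v0 = retinal_flow(0.0, 0.0, 10.0, forward, (0.0, 0.0, 0.0))

# Adding a horizontal eye rotation (about the vertical axis) introduces flow
# at the heading point, so the zero-flow location no longer marks heading.
u1, v1 = retinal_flow(0.0, 0.0, 10.0, forward, (0.0, 0.05, 0.0))
```

Because the rotational terms are independent of depth whereas the translational terms scale with inverse depth, the same eye rotation reshapes the retinal flow differently for near and far parts of the scene.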

These considerations emphasize the critical need to study the impact of CB in naturalistic contexts that facilitate compensatory gaze behaviors, and translation of fundamental neuroscience (see “Goal-directed action in healthy individuals”) into these contexts. Naturalistic studies will provide new insight into the mechanisms of visual function in the absence of the primary visual cortex and will refine current theories concerning the mechanisms underlying CB's disruptive influence on visual processing. Continued investigation of CB in naturalistic contexts also promises to provide new methods for assessment (Kartha et al., 2022) and rehabilitation (Kasten and Sabel, 1995) for applications to real-world settings beyond the laboratory environment (Fig. 4).

Impact of stroke on multisensory integration of vision and proprioception

In stroke, the integrity of neural processing is impacted by injury to the CNS. Quite often, this affects sensorimotor function of the upper limb (Lawrence et al., 2001). To date, problems with action-based behavior, including movement quality, coordination, and stability, are typically attributed to impairments in motor execution. However, the contributions of sensory information to the disordered output of movement are also critically important (Jones and Shinton, 2006; Carey et al., 2018; Rand, 2018). For example, as noted above, visual feedback can be used to calculate the direction of reach in eye coordinates, but the proprioceptive sense of eye and hand position is needed for interpreting visual input and calculating desired reach direction in body coordinates (Sober and Sabes, 2005; Buneo and Andersen, 2006; Khan et al., 2007).

In the past decade, several studies have highlighted the contributions of proprioceptive impairments of the upper limb toward functional impairments after stroke (Leibowitz et al., 2008; Dukelow et al., 2010; Semrau et al., 2013, 2015; Simo et al., 2014; Young et al., 2022). As one might expect, individuals who lack proprioception (because of degradation of sensory afferents) show substantial improvements in movement execution in the presence of visual feedback of the hand (Ghez et al., 1995; Sarlegna and Sainburg, 2009). However, some (∼20%) individuals with proprioceptive impairments have difficulty executing reaches from a proprioceptive reference, even with full vision of the limb (Fig. 5A, left, middle) (Semrau et al., 2018; Herter et al., 2019). Notably, these deficits could not be explained by visual field impairments or visual attention deficits (Patel et al., 2000; Meyer et al., 2016a). Thus, questions remain about the limitations of visual feedback for stroke rehabilitation, how brain injury impacts sensory integration, and how this impacts goal-directed motor behavior.

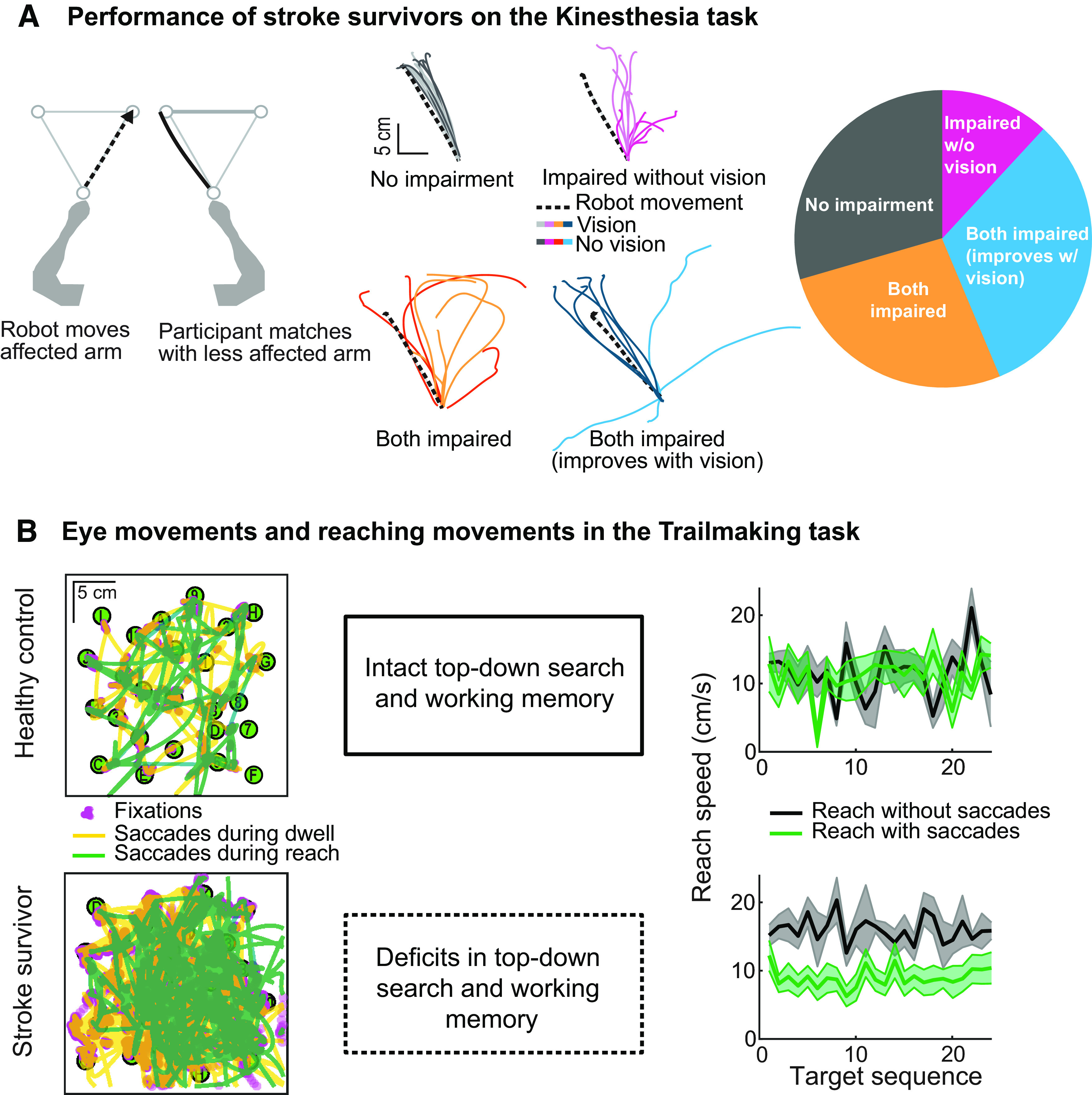

Figure 5.

Movement impairment in stroke survivors. A, Kinesthetic Matching Task where a robotic manipulandum (Kinarm Exoskeleton Lab) passively moved the more affected arm and stroke survivors mirror-matched with the less affected arm (left). Middle, Performance from a stroke survivor who performed well with vision of the limb (top, left and right) and without vision of the limb (top, left); other participants performed worse than control participants with and without the use of vision (bottom, left and right), although vision significantly improved performance for some (right, top and bottom). Distribution of participant performance demonstrates that only 12% of stroke survivors (total N = 261) used vision to effectively correct proprioceptively referenced movements, suggesting that vision often fails to effectively compensate for multisensory impairments (right) (Semrau et al., 2018). B, Left, Saccades made by a healthy control (top) and stroke survivor (bottom) during hand movement (green) and hand dwell on target (yellow) in the Trails-Making test. Excessive saccades in stroke survivors are associated with deficits in working memory and top-down visual search (middle). When stroke survivors make saccades during reaching, those movements tend to be slower than when they do not make saccades during reaching (right panels).

Previous studies have produced conflicting results concerning the degree to which patients can use visual feedback to compensate for proprioceptive impairments of the hand and limb (Darling et al., 2008; Scalha et al., 2011; Semrau et al., 2018; Herter et al., 2019). Bernard-Espina et al. (2021) have suggested that vision and proprioception are encoded not only in their native coordinate frames (e.g., retinal coordinates, joint space) but are also integrated within higher-order cortical areas that are equally susceptible to damage after stroke (Buneo and Andersen, 2006; Khan et al., 2007). This might explain why vision does not improve performance in proprioceptive-guided tasks for some stroke survivors (Fig. 5A, right) (Semrau et al., 2018; Herter et al., 2019).

Further, recent neuroimaging work has shown that damage to brain areas not normally associated with proprioception (e.g., the insula, superior temporal gyrus, and subcortical areas) can result in apparent proprioception deficits (Findlater et al., 2016; Kenzie et al., 2016; Meyer et al., 2016b; Semrau et al., 2019; Chilvers et al., 2021). This suggests that the “proprioception” network is far more expansive than previously assumed, including considerable links to visual perception (Fig. 2) (Desimone et al., 1990; Sterzer and Kleinschmidt, 2010).

In summary, impairments resulting from stroke and other neurologic injuries are typically investigated using unimodal approaches, but recent work has highlighted that the sensorimotor issues observed after stroke are not solely rooted in difficulty with motor execution. To fully understand the underlying mechanisms of complex multisensory deficits, one must consider the integration of sensory information (especially vision and proprioception) for coordination, error correction, and action monitoring. To understand how these systems contribute to movement control after neural injury, we must therefore adopt a multisensory framework.
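One common way to formalize the integration of vision and proprioception is reliability-weighted (maximum-likelihood) cue combination, in which each estimate is weighted by its inverse variance. The following Python sketch is illustrative only: it assumes that both estimates are already expressed in a common coordinate frame with independent Gaussian noise, the function name and all numerical values are hypothetical, and it is not the model used in the studies cited above.

```python
def integrate_cues(x_vis, var_vis, x_prop, var_prop):
    """Combine a visual and a proprioceptive estimate of hand position.

    Assumes (hypothetically) that both estimates are in the same coordinate
    frame and are corrupted by independent Gaussian noise with the given
    variances. Returns the combined estimate, its variance, and the weight
    given to vision.
    """
    reliability_vis = 1.0 / var_vis    # reliability = inverse variance
    reliability_prop = 1.0 / var_prop
    total = reliability_vis + reliability_prop
    w_vis = reliability_vis / total    # weight on the visual estimate
    x_hat = w_vis * x_vis + (1.0 - w_vis) * x_prop
    var_hat = 1.0 / total              # never larger than either input variance
    return x_hat, var_hat, w_vis


# Illustrative values only (arbitrary units): vision is the more reliable cue.
x_hat, var_hat, w_vis = integrate_cues(x_vis=10.0, var_vis=1.0,
                                       x_prop=14.0, var_prop=4.0)
print(round(x_hat, 3), round(var_hat, 3), round(w_vis, 3))

# A noisier proprioceptive signal shifts the weight further toward vision.
_, _, w_vis_impaired = integrate_cues(10.0, 1.0, 14.0, 100.0)
print(round(w_vis_impaired, 3))
```

In this idealized scheme, adding vision can only reduce the variance of the combined estimate. The observation that some stroke survivors do not benefit from vision is therefore consistent with the proposal that the integration stage itself, and not only the proprioceptive input, may be affected.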

Visual search and working memory deficits: influence on movement sequences

While many studies focus on single actions, real-world behavior is often composed of action sequences, of both eye movements (see “Goal-directed action in healthy individuals”) and manual actions. For example, when a driver turns a car at an intersection, they must also look for oncoming traffic and pedestrians and plan their future movements accordingly. Studies on action sequences often focus on the phenomenon of chunking, a process that causes individual elements of a movement sequence to be “fused” together to become faster, smoother, and cognitively less demanding with practice (Acuna et al., 2014; Ramkumar et al., 2016). However, the chunking literature has largely ignored the role of eye movements in action sequencing. The few studies that have considered how humans both search and reach toward a sequence of multiple visual targets have suggested that these behaviors are statistically optimized to minimize the sense of effort (Gepshtein et al., 2007; Diamond et al., 2017; Moskowitz et al., 2023a,b).

The more extensive visual search literature has provided insights into the mechanisms of how bottom-up (stimulus-driven) and top-down (goal-dependent) attention drive eye movements (Treisman, 1986; Eckstein, 2011; Wolfe et al., 2011) and action selection (Buschman and Miller, 2007; Siegel et al., 2015). While bottom-up attention is primarily guided by stimulus salience, top-down processes involve knowledge-driven predictions that enable observers to direct their gaze toward task-relevant regions of visual space (Henderson, 2017). Visual search studies have also shown that healthy humans exhibit near optimal visual search behavior (Najemnik and Geisler, 2005), even in the presence of distractors (Ma et al., 2011).

A critical component of visual search is working memory: the ability to temporarily hold and manipulate information during cognitive tasks (Baddeley, 2003; Miller et al., 2018). Visual search slows when the spatial working memory buffer is loaded (Oh and Kim, 2004; Woodman and Luck, 2004). Importantly, spatial working memory also provides efficient storage of spatial locations to allow fast and smooth execution of reaching movements. Not coincidentally, the cortical systems for saccades and working memory overlap extensively (Constantinidis and Klingberg, 2016), likely because attention often drives both and because visual memory has to be updated during eye movements (Henriques et al., 1998; Dash et al., 2015).

Right hemisphere damage often produces spatial neglect, which may contribute to visual search deficits in stroke survivors (Ten Brink et al., 2016). But visual search is also compromised after left hemisphere stroke in individuals who do not show spatial neglect (Mapstone et al., 2003; Hildebrandt et al., 2005). Further, the cortical regions associated with disorganized visual search overlap with the spatial working memory system (Ten Brink et al., 2016). This suggests that trans-saccadic spatial integration may be disrupted in stroke survivors (Khan et al., 2005a, b).

Recently, an augmented-reality version of the Trails-Making test (Reitan, 1958) was used to address how visual search and spatial working memory deficits in stroke survivors affect limb motor performance. In this task, the optimal search area is in the vicinity of the hand. Stroke was associated with impairments of visual search characterized by deficits in spatial working memory and top-down topographic planning of visual search (Singh et al., 2017). Healthy controls either restricted the search space around the hand or used working memory to search within a larger space. In contrast, stroke survivors did not use working memory and used many more saccades to search randomly within a much larger workspace, resulting in suboptimal search performance (Fig. 5B, left, middle). Stroke survivors made more saccades as the task became more challenging (Singh et al., 2023). Further, an increased number of saccades was strongly associated with slower reaching speed (Fig. 5B, right), decreased reaching smoothness, and greater difficulty performing functional tasks in stroke survivors (Singh et al., 2018).

Together, these studies suggest that healthy individuals optimally coordinate eye and limb movements to search for objects, store object locations in working memory, and successfully interact with those objects. In contrast, stroke survivors exhibit deficits in top-down spatial organization and use of spatial working memory for visual search. Deficits in these functions, which are mediated by the dorsolateral PFC (Fig. 2), likely contribute to the sluggishness and intermittency of reaching movements, which slow down even more as cognitive load increases. It has been proposed that enhanced cognitive load and neural injuries may reduce top-down inhibition of the ocular motor system, triggering saccades toward salient but irrelevant stimuli, even at the cost of task performance (Singh et al., 2023).
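Saccade counts of the kind discussed above are often obtained from gaze recordings with a velocity-threshold criterion. The following Python sketch shows one such approach under stated assumptions: gaze position is given in degrees of visual angle and sampled at a fixed rate, and the 30 deg/s threshold and the function name are illustrative choices, not the parameters or methods of the cited studies.

```python
import math


def count_saccades(gaze_x, gaze_y, sample_rate_hz, velocity_threshold=30.0):
    """Count saccades in a gaze trace using a simple velocity threshold.

    gaze_x, gaze_y: sequences of gaze position in degrees (assumed units).
    sample_rate_hz: sampling rate of the eye tracker in Hz.
    velocity_threshold: speed in deg/s above which a sample is treated as
        part of a saccade (hypothetical default).
    A saccade is counted once for each run of consecutive supra-threshold
    samples.
    """
    dt = 1.0 / sample_rate_hz
    count = 0
    in_saccade = False
    for i in range(1, len(gaze_x)):
        # Sample-to-sample gaze speed in deg/s.
        step = math.hypot(gaze_x[i] - gaze_x[i - 1], gaze_y[i] - gaze_y[i - 1])
        speed = step / dt
        if speed > velocity_threshold:
            if not in_saccade:
                count += 1  # start of a new saccade
            in_saccade = True
        else:
            in_saccade = False
    return count


# Synthetic trace (not real data): fixation, a rightward jump, fixation,
# and a return jump, sampled at 100 Hz.
trace_x = [0, 0, 0, 0, 0, 5, 10, 10, 10, 10, 10, 5, 0, 0, 0]
trace_y = [0] * len(trace_x)
print(count_saccades(trace_x, trace_y, sample_rate_hz=100))
```

Counts computed per trial in this way could then be related to reach kinematics, such as peak hand speed. Practical pipelines typically also filter the signal and handle blinks and missing samples, which this sketch omits.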

Conclusions

This review highlights six research topics that illustrate how naturalistic laboratory studies can be translated toward real-world, goal-directed action in healthy individuals (“Goal-directed action in healthy individuals”) and individuals with neurologic injuries (“Deficits in goal-directed action produced by neural damage”). In “Goal-directed action in healthy individuals,” we described how ego/allocentric spatial coding mechanisms are influenced by scene context in naturalistic environments, how adaptive eye movement strategies optimize visual information for action, and how manual interception tasks provide a useful framework to investigate eye-hand coordination in naturalistic environments. “Deficits in goal-directed action produced by neural damage” extends similar concepts to understand the behavioral consequences of CB and compensatory gaze behaviors for driving, why limb-based deficits may be the result of and/or aggravated by multisensory impairments in proprioceptive and visual systems, and how stroke symptoms are exacerbated by increased cognitive load in visuomotor tasks. Recurrent themes in these studies include the importance of considering interaction between systems (multisensory, sensorimotor, cognitive-motor, and multiple effector control) and interactions between bottom-up sensory and top-down cognitive/motor signals.

Although none of these studies occurred in completely natural environments, each study attempts to simulate naturalistic behavior through the use of new technologies (stimulus presentation, motion tracking, neuroimaging) and by combining approaches that were previously studied in isolation. This has important practical value because traditional diagnostics often rely on simplistic sensory or motor tests that may not translate well to complex real-world situations. It also highlights the need for research and training programs that translate such knowledge for long-term rehabilitation and occupational therapy.

In conclusion, there is considerable agreement that sensory, cognitive, and sensorimotor systems continuously interact to produce goal-directed movements, and that these fragile interactions are readily disrupted in neurologic disorders, such as stroke. Understanding these interactions still presents considerable methodological and conceptual challenges, but the advances described here show that laboratory neuroscience can be translated for clinical populations dealing with real-world, complex problems. Together, these findings provide a nascent and compelling computational and experimental framework for future research.

Footnotes

J.F. was supported by a Deutsche Forschungsgemeinschaft Research Fellowships Grant FO 1347/1-1. B.R.B. was supported by Deutsche Forschungsgemeinschaft Grant FI 1567/6-1 “The Active Observer” and “The Adaptive Mind,” funded by the Excellence Program of the Hessian Ministry for Higher Education, Research, Science and the Arts. D.A.B. was supported by the University of Georgia Mary Frances Early College of Education and University of Georgia Office of Research. G.D. was supported by National Institutes of Health Award R15EY031090 and Research to Prevent Blindness/Lions Clubs International Low Vision Research Award. J.A.S. was supported by National Science Foundation Award 1934650. T.S. was supported by the Penn State College of Health and Human Development. This work was supported in part by University of South Carolina ASPIRE grants. J.D.C. was supported by a York Research Chair. We thank Justin McCurdy and Helene Mehl for assistance with figure creation.

G.D. works as a consultant for Luminopia. All other authors declare no competing financial interests.

References

- Acuna DE, Wymbs NF, Reynolds CA, Picard N, Turner RS, Strick PL, Grafton ST, Kording KP (2014) Multifaceted aspects of chunking enable robust algorithms. J Neurophysiol 112:1849–1856. 10.1152/jn.00028.2014

- Adam JJ, Bovend'Eerdt TJ, Schuhmann T, Sack AT (2016) Allocentric coding in ventral and dorsal routes during real-time reaching: evidence from imaging-guided multi-site brain stimulation. Behav Brain Res 300:143–149. 10.1016/j.bbr.2015.12.018

- Adelson EH, Movshon JA (1982) Phenomenal coherence of moving visual patterns. Nature 300:523–525. 10.1038/300523a0

- Alefantis P, Lakshminarasimhan K, Avila E, Noel JP, Pitkow X, Angelaki DE (2022) Sensory evidence accumulation using optic flow in a naturalistic navigation task. J Neurosci 42:5451–5462. 10.1523/jneurosci.2203-21.2022

- Baddeley A (2003) Working memory: looking back and looking forward. Nat Rev Neurosci 4:829–839. 10.1038/nrn1201

- Ballard DH, Hayhoe MM, Li F, Whitehead SD (1992) Hand-eye coordination during sequential tasks. Philos Trans R Soc Lond B Biol Sci 337:331–339.

- Barany DA, Della-Maggiore V, Viswanathan S, Cieslak M, Grafton ST (2014) Feature interactions enable decoding of sensorimotor transformations for goal-directed movement. J Neurosci 34:6860–6873. 10.1523/JNEUROSCI.5173-13.2014

- Barany DA, Gómez-Granados A, Schrayer M, Cutts SA, Singh T (2020a) Perceptual decisions about object shape bias visuomotor coordination during rapid interception movements. J Neurophysiol 123:2235–2248. 10.1152/jn.00098.2020

- Barany DA, Revill KP, Caliban A, Vernon I, Shukla A, Sathian K, Buetefisch CM (2020b) Primary motor cortical activity during unimanual movements with increasing demand on precision. J Neurophysiol 124:728–739. 10.1152/jn.00546.2019

- Battaglia-Mayer A, Caminiti R (2019) Corticocortical systems underlying high-order motor control. J Neurosci 39:4404–4421. 10.1523/JNEUROSCI.2094-18.2019

- Bernard-Espina J, Beraneck M, Maier MA, Tagliabue M (2021) Multisensory integration in stroke patients: A theoretical approach to reinterpret upper-limb proprioceptive deficits and visual compensation. Front in Neurosci 15:646698. 10.3389/fnins.2021.646698

- Beurze SM, De Lange FP, Toni I, Medendorp WP (2007) Integration of target and effector information in the human brain during reach planning. J Neurophysiol 97:188–199. 10.1152/jn.00456.2006

- Blohm G, Keith GP, Crawford JD (2009) Decoding the cortical transformations for visually guided reaching in 3D space. Cereb Cortex 19:1372–1393. 10.1093/cercor/bhn177

- Boettcher SE, Draschkow D, Dienhart E, Võ ML (2018) Anchoring visual search in scenes: assessing the role of anchor objects on eye movements during visual search. J Vis 18:11. 10.1167/18.13.11

- Bosco G, Carrozzo M, Lacquaniti F (2008) Contributions of the human temporoparietal junction and MT/V5+ to the timing of interception revealed by transcranial magnetic stimulation. J Neurosci 28:12071–12084. 10.1523/JNEUROSCI.2869-08.2008

- Bowers AR (2016) Driving with homonymous visual field loss: a review of the literature. Clin Exp Optom 99:402–418. 10.1111/cxo.12425

- Bowers AR, Ananyev E, Mandel AJ, Goldstein RB, Peli E (2014) Driving with hemianopia: IV. Head scanning and detection at intersections in a simulator. Invest Ophthalmol Vis Sci 55:1540. 10.1167/iovs.13-12748

- Bremmer F, Churan J, Lappe M (2017) Heading representations in primates are compressed by saccades. Nat Commun 8:920. 10.1038/s41467-017-01021-5

- Brenner E, Smeets JB (2018) Continuously updating one's predictions underlies successful interception. J Neurophysiol 120:3257–3274. 10.1152/jn.00517.2018

- Buneo CA, Andersen RA (2006) The posterior parietal cortex: sensorimotor interface for the planning and online control of visually guided movements. Neuropsychologia 44:2594–2606. 10.1016/j.neuropsychologia.2005.10.011

- Buschman TJ, Miller EK (2007) Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science 315:1860–1862. 10.1126/science.1138071

- Byrne PA, Crawford JD (2010) Cue reliability and a landmark stability heuristic determine relative weighting between egocentric and allocentric visual information in memory-guided reach. J Neurophysiol 103:3054–3069. 10.1152/jn.01008.2009

- Carey LM, Matyas TA, Baum C (2018) Effects of somatosensory impairment on participation after stroke. Am J Occup Ther 72:7203205100p1. 10.5014/ajot.2018.025114

- Cavanaugh MR, Zhang R, Melnick MD, Das A, Roberts M, Tadin D, Carrasco M, Huxlin KR (2015) Visual recovery in cortical blindness is limited by high internal noise. J Vis 15:9. 10.1167/15.10.9

- Cesanek E, Zhang Z, Ingram JN, Wolpert DM, Flanagan JR (2021) Motor memories of object dynamics are categorically organized. Elife 10:e71627. 10.7554/Elife.71627

- Cesqui B, Mezzetti M, Lacquaniti F, d'Avella A (2015) Gaze behavior in one-handed catching and its relation with interceptive performance: what the eyes can't tell. PLoS One 10:e0119445. 10.1371/journal.pone.0119445

- Chang CJ, Jazayeri M (2018) Integration of speed and time for estimating time to contact. Proc Natl Acad Sci USA 115:E2879–E2887. 10.1073/pnas.1713316115

- Chen Y, Crawford JD (2020) Allocentric representations for target memory and reaching in human cortex. Ann NY Acad Sci 1464:142–155. 10.1111/nyas.14261

- Chen Y, Byrne P, Crawford JD (2011) Time course of allocentric decay, egocentric decay, and allocentric-to-egocentric conversion in memory-guided reach. Neuropsychologia 49:49–60. 10.1016/j.neuropsychologia.2010.10.031

- Chen Y, Monaco S, Byrne P, Yan X, Henriques DY, Crawford JD (2014) Allocentric versus egocentric representation of remembered reach targets in human cortex. J Neurosci 34:12515–12526. 10.1523/JNEUROSCI.1445-14.2014

- Chilvers MJ, Hawe RL, Scott SH, Dukelow SP (2021) Investigating the neuroanatomy underlying proprioception using a stroke model. J Neurol Sci 430:120029. 10.1016/j.jns.2021.120029

- Constantinidis C, Klingberg T (2016) The neuroscience of working memory capacity and training. Nat Rev Neurosci 17:438–449. 10.1038/nrn.2016.43

- Contemori S, Loeb GE, Corneil BD, Wallis G, Carroll TJ (2021) The influence of temporal predictability on express visuomotor responses. J Neurophysiol 125:731–747. 10.1152/jn.00521.2020

- Crawford JD, Henriques DY, Medendorp WP (2011) Three-dimensional transformations for goal-directed action. Annu Rev Neurosci 34:309–331. 10.1146/annurev-neuro-061010-113749

- Crawford JD, Medendorp WP, Marotta JJ (2004) Spatial transformations for eye–hand coordination. J Neurophysiol 92:10–19. 10.1152/jn.00117.2004

- Cutting JE, Springer K, Braren PA, Johnson SH (1992) Wayfinding on foot from information in retinal, not optical, flow. J Exp Psychol Gen 121:41–72. 10.1037//0096-3445.121.1.41

- Darling WG, Bartelt R, Pizzimenti MA, Rizzo M (2008) Spatial perception errors do not predict pointing errors by individuals with brain lesions. J Clin Exp Neuropsychol 30:102–119. 10.1080/13803390701249036

- Dash S, Yan X, Wang H, Crawford JD (2015) Continuous updating of visuospatial memory in superior colliculus during slow eye movements. Curr Biol 25:267–274. 10.1016/j.cub.2014.11.064

- de Azevedo Neto RM, Júnior EA (2018) Bilateral dorsal fronto-parietal areas are associated with integration of visual motion information and timed motor action. Behav Brain Res 337:91–98. 10.1016/j.bbr.2017.09.046

- de Brouwer AJ, Flanagan JR, Spering M (2021) Functional use of eye movements for an acting system. Trends Cogn Sci 25:252–263. 10.1016/j.tics.2020.12.006

- de Brouwer AJ, Gallivan JP, Flanagan JR (2018) Visuomotor feedback gains are modulated by gaze position. J Neurophysiol 120:2522–2531. 10.1152/jn.00182.2018

- de la Malla C, López-Moliner J (2015) Predictive plus online visual information optimizes temporal precision in interception. J Exp Psychol Hum Percept Perform 41:1271–1280. 10.1037/xhp0000075

- de la Malla C, Rushton SK, Clark K, Smeets JB, Brenner E (2019) The predictability of a target's motion influences gaze, head, and hand movements when trying to intercept it. J Neurophysiol 121:2416–2427. 10.1152/jn.00917.2017

- Delle Monache S, Lacquaniti F, Bosco G (2019) Ocular tracking of occluded ballistic trajectories: effects of visual context and of target law of motion. J Vis 19:13. 10.1167/19.4.13

- Desimone R, Wessinger M, Thomas L, Schneider W (1990) Attentional control of visual perception: cortical and subcortical mechanisms. Cold Spring Harb Symp Quant Biol 55:963–971. 10.1101/sqb.1990.055.01.090

- Dessing JC, Crawford JD, Medendorp WP (2011) Spatial updating across saccades during manual interception. J Vis 11:4. 10.1167/11.10.4

- Dessing JC, Vesia M, Crawford JD (2013) The role of areas MT+/V5 and SPOC in spatial and temporal control of manual interception: an rTMS study. Front Behav Neurosci 7:15. 10.3389/fnbeh.2013.00015

- Diamond JS, Wolpert DM, Flanagan JR (2017) Rapid target foraging with reach or gaze: the hand looks further ahead than the eye. PLoS Comput Biol 13:e1005504. 10.1371/journal.pcbi.1005504

- Diaz G, Cooper J, Rothkopf C, Hayhoe M (2013) Saccades to future ball location reveal memory-based prediction in a virtual-reality interception task. J Vis 13:20. 10.1167/13.1.20

- Diedrichsen J, Criscimagna-Hemminger SE, Shadmehr R (2007) Dissociating timing and coordination as functions of the cerebellum. J Neurosci 27:6291–6301. 10.1523/JNEUROSCI.0061-07.2007

- Dimitriou M, Wolpert DM, Franklin DW (2013) The temporal evolution of feedback gains rapidly update to task demands. J Neurosci 33:10898–10909. 10.1523/JNEUROSCI.5669-12.2013

- Domínguez-Zamora FJ, Marigold DS (2021) Motives driving gaze and walking decisions. Curr Biol 31:1632–1642.e4. 10.1016/j.cub.2021.01.069

- Dowiasch S, Wolf P, Bremmer F (2020) Quantitative comparison of a mobile and a stationary video-based eye-tracker. Behav Res Methods 52:667–680. 10.3758/s13428-019-01267-5

- Draschkow D, Võ ML (2017) Scene grammar shapes the way we interact with objects, strengthens memories, and speeds search. Sci Rep 7:16471. 10.1038/s41598-017-16739-x

- Dukelow SP, Herter TM, Moore KD, Demers MJ, Glasgow JI, Bagg SD, Norman KE, Scott SH (2010) Quantitative assessment of limb position sense following stroke. Neurorehabil Neural Repair 24:178–187. 10.1177/1545968309345267

- Eckstein MP (2011) Visual search: a retrospective. J Vis 11:14. 10.1167/11.5.14

- Elgin J, McGwin G, Wood JM, Vaphiades MS, Braswell RA, DeCarlo DK, Kline LB, Owsley C (2010) Evaluation of on-road driving in people with hemianopia and quadrantanopia. Am J Occup Ther 64:268–278. 10.5014/ajot.64.2.268

- Fiehler K, Karimpur H (2023) Spatial coding for action across spatial scales. Nat Rev Psychol 2:72–84. 10.1038/s44159-022-00140-1

- Findlater SE, Desai JA, Semrau JA, Kenzie JM, Rorden C, Herter TM, Scott SH, Dukelow SP (2016) Central perception of position sense involves a distributed neural network: evidence from lesion-behavior analyses. Cortex 79:42–56. 10.1016/j.cortex.2016.03.008

- Fooken J, Yeo SH, Pai DK, Spering M (2016) Eye movement accuracy determines natural interception strategies. J Vis 16:1. 10.1167/16.14.1

- Fooken J, Kreyenmeier P, Spering M (2021) The role of eye movements in manual interception: a mini-review. Vision Res 183:81–90. 10.1016/j.visres.2021.02.007

- Frey M, Nau M, Doeller CF (2021) Magnetic resonance-based eye tracking using deep neural networks. Nat Neurosci 24:1772–1779. 10.1038/s41593-021-00947-w

- Gall C, Franke GH, Sabel BA (2010) Vision-related quality of life in first stroke patients with homonymous visual field defects. Health Qual Life Outcomes 8:33. 10.1186/1477-7525-8-33

- Gallivan JP, Culham JC (2015) Neural coding within human brain areas involved in actions. Curr Opin Neurobiol 33:141–149. 10.1016/j.conb.2015.03.012

- Gepshtein S, Seydell A, Trommershäuser J (2007) Optimality of human movement under natural variations of visual–motor uncertainty. J Vis 7:13. 10.1167/7.5.13

- Ghaderi A, Niemeier M, Crawford JD (2023) Saccades and presaccadic stimulus repetition alter cortical network topology and dynamics: evidence from EEG and graph theoretical analysis. Cereb Cortex 33:2075–2100. 10.1093/cercor/bhac194

- Ghez C, Gordon J, Ghilardi MF (1995) Impairments of reaching movements in patients without proprioception: II. Effects of visual information on accuracy. J Neurophysiol 73:361–372. 10.1152/jn.1995.73.1.361

- Gibson JJ (1950) The perception of the visual world. Orlando, FL: Houghton Mifflin.

- Gilhotra JS, Mitchell P, Healey PR, Cumming RG, Currie J (2002) Homonymous visual field defects and stroke in an older population. Stroke 33:2417–2420. 10.1161/01.str.0000037647.10414.d2

- Goettker A, Pidaparthy H, Braun DI, Elder JH, Gegenfurtner KR (2021) Ice hockey spectators use contextual cues to guide predictive eye movements. Curr Biol 31:R991–R992. 10.1016/j.cub.2021.06.087

- Hagan MA, Pesaran B (2022) Modulation of inhibitory communication coordinates looking and reaching. Nature 604:708–713. 10.1038/s41586-022-04631-2

- Harris DJ, Buckingham G, Wilson MR, Vine SJ (2019) Virtually the same? How impaired sensory information in virtual reality may disrupt vision for action. Exp Brain Res 237:2761–2766. 10.1007/s00221-019-05642-8

- Helbing J, Draschkow D, Võ ML (2022) Auxiliary scene-context information provided by anchor objects guides attention and locomotion in natural search behavior. Psychol Sci 33:1463–1476. 10.1177/09567976221091838

- Henderson JM (2017) Gaze control as prediction. Trends Cogn Sci 21:15–23. 10.1016/j.tics.2016.11.003

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD (1998) Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci 18:1583–1594. 10.1523/JNEUROSCI.18-04-01583.1998

- Herter TM, Scott SH, Dukelow SP (2019) Vision does not always help stroke survivors compensate for impaired limb position sense. J Neuroeng Rehabil 16:129. 10.1186/s12984-019-0596-7

- Hildebrandt H, Schütze C, Ebke M, Brunner-Beeg F, Eling P (2005) Visual search for item- and array-centered locations in patients with left middle cerebral artery stroke. Neurocase 11:416–426. 10.1080/13554790500263511

- Hoppe D, Rothkopf CA (2016) Learning rational temporal eye movement strategies. Proc Natl Acad Sci USA 113:8332–8337. 10.1073/pnas.1601305113

- Issen L (2013) Using optic flow to determine direction of heading: patterns of spatial information use in visually intact human observers and following vision loss. PhD thesis, University of Rochester. Available at http://hdl.handle.net/1802/28003.

- Johansson RS, Westling G, Bäckström A, Flanagan JR (2001) Eye–hand coordination in object manipulation. J Neurosci 21:6917–6932. 10.1523/JNEUROSCI.21-17-06917.2001

- Jones SA, Shinton RA (2006) Improving outcome in stroke patients with visual problems. Age Ageing 35:560–565. 10.1093/ageing/afl074

- Jovancevic-Misic J, Hayhoe MM (2009) Adaptive gaze control in natural environments. J Neurosci 29:6234–6238. 10.1523/JNEUROSCI.5570-08.2009

- Karimpoor M, Tam F, Strother SC, Fischer CE, Schweizer TA, Graham SJ (2015) A computerized tablet with visual feedback of hand position for functional magnetic resonance imaging. Front Hum Neurosci 9:150. 10.3389/fnhum.2015.00150

- Karimpur H, Morgenstern Y, Fiehler K (2019) Facilitation of allocentric coding by virtue of object-semantics. Sci Rep 9:6263.

- Kartha A, Sadeghi R, Swanson T, Dagnelie G (2022) Visual wayfinding in people with ultra low vision using virtual reality. Invest Ophthalmol Vis Sci 63:4215.

- Kasten E, Sabel BA (1995) Visual field enlargement after computer training in brain-damaged patients with homonymous deficits: an open pilot trial. Restor Neurol Neurosci 8:113–127. 10.3233/RNN-1995-8302

- Kenzie JM, Semrau JA, Findlater SE, Yu AY, Desai JA, Herter TM, Hill MD, Scott SH, Dukelow SP (2016) Localization of impaired kinesthetic processing post-stroke. Front Hum Neurosci 10:505. 10.3389/fnhum.2016.00505

- Khan AZ, Pisella L, Rossetti Y, Vighetto A, Crawford JD (2005a) Impairment of gaze-centered updating of reach targets in bilateral parietal–occipital damaged patients. Cereb Cortex 15:1547–1560. 10.1093/cercor/bhi033

- Khan AZ, Pisella L, Vighetto A, Cotton F, Luauté J, Boisson D, Salemme R, Crawford JD, Rossetti Y (2005b) Optic ataxia errors depend on remapped, not viewed, target location. Nat Neurosci 8:418–420. 10.1038/nn1425

- Khan AZ, Crawford JD, Blohm G, Urquizar C, Rossetti Y, Pisella L (2007) Influence of initial hand and target position on reach errors in optic ataxic and normal subjects. J Vis 7:8. 10.1167/7.5.8

- Kirchner J, Watson T, Lappe M (2022) Real-time MRI reveals unique insight into the full kinematics of eye movements. eNeuro 9:ENEURO.0357-21.2021. 10.1523/ENEURO.0357-21.2021

- Klinghammer M, Blohm G, Fiehler K (2015) Contextual factors determine the use of allocentric information for reaching in a naturalistic scene. J Vis 15:24. 10.1167/15.13.24

- Knights E, Smith FW, Rossit S (2022) The role of the anterior temporal cortex in action: evidence from fMRI multivariate searchlight analysis during real object grasping. Sci Rep 12:9042. 10.1038/s41598-022-12174-9

- Kolasinski J, Dima DC, Mehler DM, Stephenson A, Valadan S, Kusmia S, Rossiter HE (2020) Spatially and temporally distinct encoding of muscle and kinematic information in rostral and caudal primary motor cortex. Cereb Cortex Commun 1:tgaa009. 10.1093/texcom/tgaa009

- Kothari R, Yang Z, Kanan C, Bailey R, Pelz JB, Diaz GJ (2020) Gaze-in-wild: a dataset for studying eye and head coordination in everyday activities. Sci Rep 10:2539. 10.1038/s41598-020-59251-5

- Kreyenmeier P, Schroeger A, Cañal-Bruland R, Raab M, Spering M (2023) Rapid audiovisual integration guides predictive actions. eNeuro 10:ENEURO.0134-23.2023.

- Land M (2006) Eye movements and the control of actions in everyday life. Prog Retin Eye Res 25:296–324. 10.1016/j.preteyeres.2006.01.002

- Land M, Horwood J (1995) Which parts of the road guide steering? Nature 377:339–340. 10.1038/377339a0

- Land M, Mennie N, Rusted J (1999) The roles of vision and eye movements in the control of activities of daily living. Perception 28:1311–1328. 10.1068/p2935 [DOI] [PubMed] [Google Scholar]

- Lawrence ES, Coshall C, Dundas R, Stewart J, Rudd AG, Howard R, Wolfe CD (2001) Estimates of the prevalence of acute stroke impairments and disability in a multiethnic population. Stroke 32:1279–1284. 10.1161/01.STR.32.6.1279 [DOI] [PubMed] [Google Scholar]

- Layton OW (2021) ARTFLOW: a fast, biologically inspired neural network that learns optic flow templates for self-motion estimation. Sensors (Basel) 21:8217. 10.3390/s21248217 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leibowitz N, Levy N, Weingarten S, Grinberg Y, Karniel A, Sacher Y, Serfaty C, Soroker N (2008) Automated measurement of proprioception following stroke. Disabil Rehabil 30:1829–1836. 10.1080/09638280701640145 [DOI] [PubMed] [Google Scholar]

- Li Y, Wang Y, Cui H (2022) Posterior parietal cortex predicts upcoming movement in dynamic sensorimotor control. Proc Natl Acad Sci USA 119:e2118903119. 10.1073/pnas.2118903119 [DOI] [PMC free article] [PubMed] [Google Scholar]

- López-Moliner J, Brenner E (2016) Flexible timing of eye movements when catching a ball. J Vis 16:13. 10.1167/16.5.13 [DOI] [PubMed] [Google Scholar]

- Lu Z, Klinghammer M, Fiehler K (2018) The role of gaze and prior knowledge on allocentric coding of reach targets. J Vis 18:22. 10.1167/18.4.22 [DOI] [PubMed] [Google Scholar]

- Ma WJ, Navalpakkam V, Beck JM, Berg RV, Pouget A (2011) Behavior and neural basis of near-optimal visual search. Nat Neurosci 14:783–790. 10.1038/nn.2814 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mapstone M, Weintraub S, Nowinski C, Kaptanoglu G, Gitelman DR, Mesulam MM (2003) Cerebral hemispheric specialization for spatial attention: spatial distribution of search-related eye fixations in the absence of neglect. Neuropsychologia 41:1396–1409. 10.1016/s0028-3932(03)00043-5 [DOI] [PubMed] [Google Scholar]

- Marinovic W, Reid CS, Plooy AM, Riek S, Tresilian JR (2011) Corticospinal excitability during preparation for an anticipatory action is modulated by the availability of visual information. J Neurophysiol 105:1122–1129. 10.1152/jn.00705.2010 [DOI] [PubMed] [Google Scholar]

- Marneweck M, Barany DA, Santello M, Grafton ST (2018) Neural representations of sensorimotor memory-and digit position-based load force adjustments before the onset of dexterous object manipulation. J Neurosci 38:4724–4737. 10.1523/JNEUROSCI.2588-17.2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Matthis JS, Cherian A (2022) FreeMoCap: a free, open source markerless motion capture system (0.0.54) [Computer software]. [Google Scholar]

- Matthis JS, Yates JL, Hayhoe MM (2018) Gaze and the control of foot placement when walking in natural terrain. Curr Biol 28:1224–1233.e5. 10.1016/j.cub.2018.03.008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Matthis JS, Muller KS, Bonnen KL, Hayhoe MM (2022) Retinal optic flow during natural locomotion. PLoS Comput Biol 18:e1009575. 10.1371/journal.pcbi.1009575 [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGwin G Jr, Wood J, Huisingh C, Owsley C (2016) Motor vehicle collision involvement among persons with hemianopia and quadrantanopia. Geriatrics 1:19. 10.3390/geriatrics1030019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Merchant H, Battaglia-Mayer A, Georgopoulos AP (2004) Neural responses during interception of real and apparent circularly moving stimuli in motor cortex and area 7a. Cereb Cortex 14:314–331. 10.1093/cercor/bhg130 [DOI] [PubMed] [Google Scholar]

- Meyer S, De Bruyn N, Lafosse C, Van Dijk M, Michielsen M, Thijs L, Truyens V, Oostra K, Krumlinde-Sundholm L, Peeters A, Thijs V, Feys H, Verheyden G (2016a) Somatosensory impairments in the upper limb poststroke: distribution and association with motor function and visuospatial neglect. Neurorehabil Neural Repair 30:731–742. 10.1177/1545968315624779 [DOI] [PubMed] [Google Scholar]

- Meyer S, Kessner SS, Cheng B, Bönstrup M, Schulz R, Hummel FC, De Bruyn N, Peeters A, Van Pesch V, Duprez T, Sunaert S, Schrooten M, Feys H, Gerloff C, Thomalla G, Thijs V, Verheyden G (2016b) Voxel-based lesion-symptom mapping of stroke lesions underlying somatosensory deficits. Neuroimage Clin 10:257–266. 10.1016/j.nicl.2015.12.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller EK, Lundqvist M, Bastos AM (2018) Working memory 2.0. Neuron 100:463–475. 10.1016/j.neuron.2018.09.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mineault PJ, Bakhtiari S, Richards BA, Pack CC (2021) Your head is there to move you around: goal-driven models of the primate dorsal pathway. Adv Neural Inf Process, 34:28757–28771. [Google Scholar]

- Moskowitz JB, Berger SA, Fooken J, Castelhano MS, Gallivan JP, Flanagan JR (2023a) The influence of movement-related costs when searching to act and acting to search. J Neurophysiol 129:115–130. 10.1152/jn.00305.2022 [DOI] [PubMed] [Google Scholar]

- Moskowitz JB, Fooken J, Castelhano MS, Gallivan JP, Flanagan JR (2023b) Visual search for reach targets in actionable space is influenced by movement costs imposed by obstacles. J Vis 23:4. 10.1167/jov.23.6.4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muller KS, Matthis J, Bonnen K, Cormack LK, Huk AC, Hayhoe M (2023) Retinal motion statistics during natural locomotion. Elife 12:e82410. 10.7554/Elife.82410 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Najemnik J, Geisler WS (2005) Optimal eye movement strategies in visual search. Nature 434:387–391. 10.1038/nature03390 [DOI] [PubMed] [Google Scholar]

- Nath T, Mathis A, Chen AC, Patel A, Bethge M, Mathis MW (2019) Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat Protoc 14:2152–2176. 10.1038/s41596-019-0176-0 [DOI] [PubMed] [Google Scholar]

- Neely KA, Tessmer A, Binsted G, Heath M (2008) Goal-directed reaching: movement strategies influence the weighting of allocentric and egocentric visual cues. Exp Brain Res 186:375–384. 10.1007/s00221-007-1238-z [DOI] [PubMed] [Google Scholar]

- Neggers SF, Bekkering H (2000) Ocular gaze is anchored to the target of an ongoing pointing movement. J Neurophysiol 83:639–651. 10.1152/jn.2000.83.2.639 [DOI] [PubMed] [Google Scholar]

- Neggers SF, Bekkering H (2001) Gaze anchoring to a pointing target is present during the entire pointing movement and is driven by a non-visual signal. J Neurophysiol 86:961–970. 10.1152/jn.2001.86.2.961 [DOI] [PubMed] [Google Scholar]

- Nobre AC, Van Ede F (2023) Attention in flux. Neuron 111:971–986. 10.1016/j.neuron.2023.02.032 [DOI] [PubMed] [Google Scholar]

- Oh SH, Kim MS (2004) The role of spatial working memory in visual search efficiency. Psychon Bull Rev 11:275–281. 10.3758/bf03196570 [DOI] [PubMed] [Google Scholar]

- Paillard J (1996) Fast and slow feedback loops for the visual correction of spatial errors in a pointing task: a reappraisal. Can J Physiol Pharmacol 74:401–417. [PubMed] [Google Scholar]

- Papageorgiou E, Hardiess G, Schaeffel F, Wiethoelter H, Karnath HO, Mallot H, Schoenfisch B, Schiefer U (2007) Assessment of vision-related quality of life in patients with homonymous visual field defects. Graefes Arch Clin Exp Ophthalmol 245:1749–1758. 10.1007/s00417-007-0644-z [DOI] [PubMed] [Google Scholar]

- Patel AT, Duncan PW, Lai SM, Studenski S (2000) The relation between impairments and functional outcomes poststroke. Arch Phys Med Rehabil 81:1357–1363. 10.1053/apmr.2000.9397 [DOI] [PubMed] [Google Scholar]

- Piserchia V, Breveglieri R, Hadjidimitrakis K, Bertozzi F, Galletti C, Fattori P (2017) Mixed body/hand reference frame for reaching in 3D space in macaque parietal area PEc. Cereb Cortex 27:1976–1990. 10.1093/cercor/bhw039 [DOI] [PubMed] [Google Scholar]

- Pollock A, Hazelton C, Henderson CA, Angilley J, Dhillon B, Langhorne P, Livingstone K, Munro FA, Orr H, Rowe FJ, Shahani U (2011) Interventions for visual field defects in patients with stroke. Cochrane Database Syst Rev CD008388. 10.1002/14651858.CD008388.pub2 [DOI] [PubMed] [Google Scholar]

- Ramkumar P, Acuna DE, Berniker M, Grafton ST, Turner RS, Kording KP (2016) Chunking as the result of an efficiency computation trade-off. Nat Commun 7:12176. 10.1038/ncomms12176 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rand D (2018) Proprioception deficits in chronic stroke: upper extremity function and daily living. PLoS One 13:e0195043. 10.1371/journal.pone.0195043 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Reitan RM (1958) Validity of the trail making test as an indicator of organic brain damage. Percept Mot Skills 8:271–276. 10.2466/pms.1958.8.3.271 [DOI] [Google Scholar]

- Robertshaw KD, Wilkie RM (2008) Does gaze influence steering around a bend? J Vis 8:18. 10.1167/8.4.18 [DOI] [PubMed] [Google Scholar]

- Rolin RA, Fooken J, Spering M, Pai DK (2019) Perception of looming motion in virtual reality egocentric interception tasks. IEEE Trans Vis Comput Graph 25:3042–3048. 10.1109/TVCG.2018.2859987 [DOI] [PubMed] [Google Scholar]

- Safstrom D, Johansson RS, Flanagan JR (2014) Gaze behavior when learning to link sequential action phases in a manual task. J Vis 14:3. 10.1167/14.4.3 [DOI] [PubMed] [Google Scholar]

- Sarlegna F, Sainburg RL (2009) The roles of vision and proprioception in the planning of reaching movements. In: Progress in motor control (Sternad D, ed), pp 317–335. New York: Springer. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sarlegna F, Blouin J, Bresciani JP, Bourdin C, Vercher JL, Gauthier GM (2003) Target and hand position information in the online control of goal-directed arm movements. Exp Brain Res 151:524–535. 10.1007/s00221-003-1504-7 [DOI] [PubMed] [Google Scholar]

- Saunders JA, Knill DC (2003) Humans use continuous visual feedback from the hand to control fast reaching movements. Exp Brain Res 152:341–352. 10.1007/s00221-003-1525-2 [DOI] [PubMed] [Google Scholar]

- Saunders JA, Knill DC (2004) Visual feedback control of hand movements. J Neurosci 24:3223–3234. 10.1523/JNEUROSCI.4319-03.2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scalha TB, Miyasaki E, Lima NM, Borges G (2011) Correlations between motor and sensory functions in upper limb chronic hemiparetics after stroke. Arq Neuropsiquiatr 69:624–629. 10.1590/s0004-282x2011000500010 [DOI] [PubMed] [Google Scholar]

- Schmitt C, Baltaretu BR, Crawford JD, Bremmer F (2020) A causal role of area hMST for self-motion perception in humans. Cereb Cortex Commun 1:tgaa042. 10.1093/texcom/tgaa042 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schroeger A, Grießbach E, Raab M, Cañal-Bruland R (2022) Spatial distances affect temporal prediction and interception. Sci Rep 12:15786. 10.1038/s41598-022-18789-2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Semrau JA, Herter TM, Scott SH, Dukelow SP (2013) Robotic identification of kinesthetic deficits after stroke. Stroke 44:3414–3421. 10.1161/STROKEAHA.113.002058 [DOI] [PubMed] [Google Scholar]

- Semrau JA, Herter TM, Scott SH, Dukelow SP (2015) Examining differences in patterns of sensory and motor recovery after stroke with robotics. Stroke 46:3459–3469. 10.1161/STROKEAHA.115.010750 [DOI] [PubMed] [Google Scholar]

- Semrau JA, Herter TM, Scott SH, Dukelow SP (2018) Vision of the upper limb fails to compensate for kinesthetic impairments in subacute stroke. Cortex 109:245–259. 10.1016/j.cortex.2018.09.022 [DOI] [PubMed] [Google Scholar]

- Semrau JA, Herter TM, Scott SH, Dukelow SP (2019) Differential loss of position sense and kinesthesia in sub-acute stroke. Cortex 121:414–426. 10.1016/j.cortex.2019.09.013 [DOI] [PubMed] [Google Scholar]

- Siegel M, Buschman TJ, Miller EK (2015) Cortical information flow during flexible sensorimotor decisions. Science 348:1352–1355. 10.1126/science.aab0551 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Simo L, Botzer L, Ghez C, Scheidt RA (2014) A robotic test of proprioception within the hemiparetic arm post-stroke. J Neuroeng Rehabil 11:77. 10.1186/1743-0003-11-77 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sims CR, Jacobs RA, Knill DC (2011) Adaptive allocation of vision under competing task demands. J Neurosci 31:928–943. 10.1523/JNEUROSCI.4240-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]