Abstract

Protein mutations can significantly influence protein solubility, which results in altered protein functions and leads to various diseases. Despite tremendous effort, machine learning prediction of protein solubility changes upon mutation remains a challenging task, as indicated by the low scores of the normalized Correct Prediction Ratio (CPR). Part of the challenge stems from the fact that three-dimensional (3D) structures are not available for many of the wild-type and mutant proteins. This work integrates persistent Laplacians and a pre-trained Transformer for the task. The Transformer, pre-trained on hundreds of millions of protein sequences, embeds wild-type and mutant sequences, while persistent Laplacians track the changes in topological invariants and the homotopic shape evolution induced by mutations in 3D protein structures, which are predicted by AlphaFold2. The resulting machine learning model was trained on an extensive dataset labeled with three solubility types. Our model outperforms all existing predictive methods and improves on the state of the art by up to 15%.

Keywords: Mutation, Protein solubility, Persistent Laplacian, Transformer, AlphaFold2

1. Introduction

Genetic mutations alter the genome sequence, leading to changes in the corresponding amino acid sequence of a protein. These alterations have far-reaching implications for the protein's structure, function, and stability, affecting attributes such as folding stability, binding affinity, and solubility. The consequences of protein mutations have been extensively studied in diverse fields such as evolutionary biology, cancer biology, immunology, directed evolution, and protein engineering [1]. Accurately analyzing and predicting the impact of mutations on protein solubility is therefore crucial in protein engineering, drug discovery, and biotechnology, where it facilitates the engineering of proteins with desirable functions. Protein solubility is affected by numerous intricately interconnected factors, ranging from the amino acid sequence, post-translational modifications, and protein-protein interactions to environmental conditions such as solvent type, ion type and concentration, the presence of small molecules, and temperature. Unfortunately, the existing datasets do not contain sufficient information about these factors. This complexity poses significant challenges for the accurate prediction and modeling of protein solubility, often requiring multifaceted computational approaches for reliable outcomes.

Computational predictions serve as a valuable complement to experimental mutagenesis analysis of protein stability changes upon mutation. Such computational approaches are economical and efficient, and they provide a viable alternative to labor-intensive site-directed mutagenesis experiments [2]. As a result, the development of accurate and reliable computational techniques for mutational impact prediction could substantially enhance the throughput and accessibility of research in protein engineering and drug discovery.

Over the years, a variety of computational methods have been developed to explore the effects of mutations on protein solubility, including CamSol [3], OptSolMut [4], PON-Sol [5], SODA [6], Solubis [7], and others summarized in a recent review [8]. CamSol employs an algorithm to construct a residue-specific solubility profile, although no explicit method has been made publicly available. OptSolMut is trained on 137 samples, each featuring single or multiple mutations affecting solubility or aggregation. PON-Sol uses a random forest model trained on a dataset of 406 single amino acid substitutions labeled as solubility-increasing, solubility-decreasing, or exhibiting no change in solubility. SODA, which is based on the PON-Sol data, specifically targets samples with decreasing solubility [6]. Solubis is an optimization tool for increasing protein solubility that integrates interaction analysis from FoldX [2], aggregation prediction from TANGO [9], and structural analysis from YASARA [10]. Recently, PON-Sol2 [11] extended the original PON-Sol dataset and employed a gradient boosting algorithm for sequence-based predictions. Despite intensive effort, the current prediction accuracy in terms of the normalized Correct Prediction Ratio (CPR) remains low, calling for innovative strategies.

Topological data analysis (TDA) is a relatively new approach to data science. Its main technique is persistent homology [12, 13]. The essential idea of persistent homology is to construct a multiscale analysis of data in terms of topological invariants. The changes in topological invariants across scales can be used to characterize the intricate structures of data, leading to an unusually powerful approach for describing protein structure, flexibility, and folding [14]. In 2015, persistent homology was integrated with machine learning for protein classification [15], one of the first integrations of TDA and machine learning, and it was also applied to the prediction of mutation-induced protein stability changes [16, 17] and protein-protein binding free energy changes [18, 19]. One of the major achievements of TDA is its wins in the D3R Grand Challenges, an annual worldwide competition series in computer-aided drug design [20, 21]. A nearly comprehensive summary of the early successes of TDA in biological science was given in a review [22].
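As a minimal sketch of the multiscale idea, restricted to dimension 0 (connected components) and assuming a Vietoris-Rips filtration indexed by edge length, the barcode can be computed with a union-find structure: every atom starts as its own component at scale 0, and each merge ends one bar. The function name `h0_barcode` and the toy coordinates are illustrative, not part of any cited software; loops and cavities (higher dimensions) would require a full persistent homology library.

```python
import math
from itertools import combinations


def h0_barcode(points):
    """Dimension-0 persistence barcode of a Vietoris-Rips filtration.

    Every point is born at scale 0; when an edge of length r first joins two
    components, one of them dies at r. One component survives forever."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(len(points)), 2)
    )
    bars = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:  # this edge merges two components
            parent[root_i] = root_j
            bars.append((0.0, length))
    bars.append((0.0, math.inf))  # the last component never dies
    return bars


# Two well-separated pairs of points (toy coordinates).
bars = h0_barcode([(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)])
betti0_at_2 = sum(1 for birth, death in bars if birth <= 2.0 < death)
print(bars, betti0_at_2)
```

Counting the bars alive at a given scale gives Betti-0 at that scale; for the two pairs above, two components coexist between scales 1 and 5.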

However, persistent homology tracks only changes in topological invariants and cannot capture the homotopic shape evolution of data across scales or induced by mutations. To overcome this limitation, Wei and coworkers introduced persistent combinatorial Laplacians, also called persistent spectral graphs, on point clouds [23] and the evolutionary de Rham-Hodge method on manifolds [24] in 2019. At the core of these methods are persistent topological Laplacians (PTLs), defined either on point clouds or on manifolds. PTLs not only fully capture the topological invariants in their harmonic spectra, as persistent homology does, but also capture the homotopic shape evolution of data during the multiscale analysis or a mutation process. PTLs have been applied to the prediction of protein flexibility [25], protein-ligand binding free energies [26], protein-protein interactions [27, 28], and protein engineering [1]. The most remarkable accomplishment of persistent Laplacians is the accurate forecasting of the emerging dominant SARS-CoV-2 variants BA.4 and BA.5 about two months in advance [29].
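The difference between harmonic and non-harmonic spectra can be illustrated with the simplest case: the 0th Laplacian (graph Laplacian) of a distance-threshold graph at a single scale, which is what a persistent Laplacian reduces to with no persistence window. This simplification, the helper names, and the toy coordinates are our own assumptions for illustration. The number of zero eigenvalues recovers Betti-0, while the non-zero eigenvalues differ between shapes that are topologically identical.

```python
import numpy as np


def rips_graph_laplacian(points, radius):
    """Graph Laplacian L0 = D - A of the graph joining points within `radius`."""
    pts = np.asarray(points, dtype=float)
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    adj = (dist <= radius).astype(float)
    np.fill_diagonal(adj, 0.0)  # no self-loops
    return np.diag(adj.sum(axis=1)) - adj


def spectrum_summary(points, radius, tol=1e-9):
    """Return (number of zero eigenvalues, smallest non-zero eigenvalue)."""
    eig = np.linalg.eigvalsh(rips_graph_laplacian(points, radius))
    betti0 = int(np.sum(eig < tol))  # harmonic part: connected components
    nonzero = eig[eig >= tol]        # non-harmonic part: shape information
    smallest = float(nonzero.min()) if nonzero.size else 0.0
    return betti0, smallest


line = [(0, 0), (1, 0), (2, 0), (3, 0)]    # becomes a path graph at radius 1
square = [(0, 0), (1, 0), (1, 1), (0, 1)]  # becomes a 4-cycle at radius 1
for name, pts in [("line", line), ("square", square)]:
    print(name, spectrum_summary(pts, 0.5), spectrum_summary(pts, 1.0))
```

At radius 1 both point clouds form a single component, yet the smallest non-zero eigenvalue is 2 - √2 for the line (a path graph) and 2 for the square (a 4-cycle); persistent homology alone would not separate them at that scale.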

However, TDA approaches depend on biomolecular structures, which may not be available. In fact, many proteins involved in the present study do not have experimentally determined 3D structures. In recent years, advanced natural language processing (NLP) models, including Transformers and long short-term memory (LSTM) networks, have been widely used across various domains to extract information from protein sequences. For example, Tasks Assessing Protein Embeddings (TAPE) introduced three different architectures, namely a transformer, a dilated residual network (ResNet), and an LSTM [30]. LSTM-based models such as Bepler [31] and UniRep [32] have also been developed. Moreover, large-scale protein transformer models trained on hundreds of millions of sequences have made significant advances in the field. These models, including Evolutionary Scale Modeling (ESM) [33] and ProtTrans [34, 35], have shown exceptional performance in capturing a variety of protein properties. ESM, for instance, allows fine-tuning on either downstream task data or local multiple sequence alignments [36]. In the present work, we leverage the pre-trained ESM transformer model to extract crucial information from protein sequences.

In this work, we integrate transformer-based sequence embeddings and persistent topological Laplacians to predict protein solubility changes upon mutation. While sequence-based models can be applied without 3D structural information, the PTL-based features require high-quality structures. We generate 3D structures of wild-type proteins with AlphaFold2 [37] to enable topological embedding. The two embedding approaches naturally complement each other in classifying protein solubility changes upon mutation. These embeddings are fed into an ensemble classifier, gradient boosted trees (GBT), to build a machine learning model called TopLapGBT. We validate TopLapGBT on the classification of protein solubility changes upon mutation and demonstrate that this integrated model substantially improves on existing state-of-the-art models. Residue-Similarity plots are also used to assess how well TopLapGBT classifies the three solubility labels.

2. Results

2.1. Overview of TopLapGBT

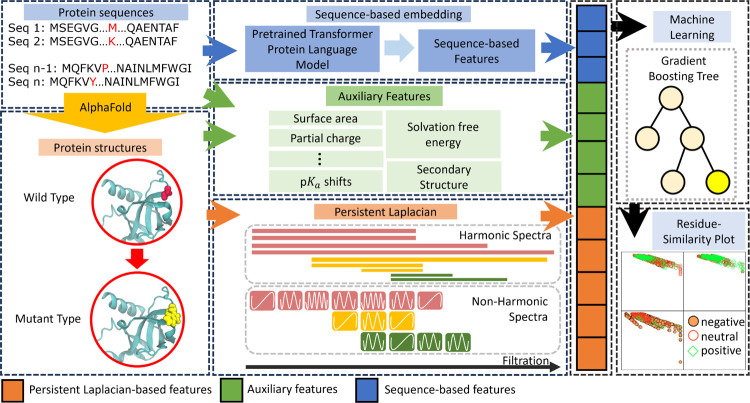

TopLapGBT integrates both structure-based and sequence-based features, derived from protein structures and sequences respectively, into a unified model. Our architecture comprises three distinct embedding modules: persistent Laplacian-based embeddings, sequence-based embeddings, and auxiliary feature embeddings, all of which feed into an ensemble classifier as depicted in Figure 1.

Figure 1:

The workflow of TopLapGBT. Protein sequences are first processed by AlphaFold 2 to generate wild-type protein structures. Mutant structures are generated with the Jackal software [38]. The structure-based features from persistent Laplacians, the auxiliary features, and the sequence-based features are then concatenated into a single long feature vector, which is input to a gradient boosting tree classifier to classify the protein solubility changes upon mutation. The predicted labels are also analyzed on Residue-Similarity (R-S) plots.

In the persistent Laplacian-based feature embedding module, we employ persistent Laplacian techniques to generate features that encapsulate the structural attributes of proteins before and after mutation. This approach is particularly effective in capturing the structural alterations induced by mutations within the local neighborhoods of the mutation sites. Mathematically, the persistent Laplacian builds a sequence of simplicial complexes through a filtration process, thereby characterizing atom-atom interactions across multiple scales (details in the Methods section). In the sequence-based feature embedding module, a pre-trained transformer model generates latent feature vectors from protein sequences. Specifically, the transformer used here is a 650M-parameter protein language model trained on a corpus of 250M protein sequences spanning multiple organisms [39]. Finally, the auxiliary feature embedding module incorporates attributes such as surface area, partial charge, shifts, solvation free energy, and secondary structure information, derived from both protein sequences and structures. These three sets of feature embeddings are concatenated into a single feature vector, which is then fed into a gradient-boosting tree classifier to classify the solubility change of each mutation sample.
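The three embedding modules end in one concatenated vector per mutation. A minimal sketch of that assembly, under stated assumptions: the mutation code follows the `I283W` notation used in Figure 2 (wild-type residue, 1-based position, mutant residue); the per-residue embeddings of the wild type and the mutant are mean-pooled and their difference is appended (our assumption, not necessarily the pooling used in TopLapGBT); and `toy_onehot_embed`, `mutation_features` and all feature values are hypothetical placeholders. In practice, `embed` would wrap the pre-trained transformer, and `pl_features` and `aux_features` would come from the structure-based modules.

```python
import re

import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
MUTATION_CODE = re.compile(r"^([A-Z])(\d+)([A-Z])$")  # e.g. I283W


def apply_mutation(sequence, code):
    """Return the mutant sequence for a point mutation code such as 'I283W'."""
    match = MUTATION_CODE.match(code)
    if match is None:
        raise ValueError(f"unrecognised mutation code: {code}")
    wt, pos, mt = match.group(1), int(match.group(2)), match.group(3)
    if not 1 <= pos <= len(sequence):
        raise ValueError(f"position {pos} is outside the sequence")
    if sequence[pos - 1] != wt:
        raise ValueError(f"position {pos} holds {sequence[pos - 1]}, not {wt}")
    return sequence[: pos - 1] + mt + sequence[pos:]


def toy_onehot_embed(sequence):
    """Hypothetical stand-in for a protein language model: (L, 20) one-hot rows."""
    return np.eye(len(AMINO_ACIDS))[[AMINO_ACIDS.index(aa) for aa in sequence]]


def mutation_features(sequence, code, embed, pl_features, aux_features):
    """Concatenate mean-pooled wild-type/mutant embeddings, their difference,
    and precomputed persistent Laplacian and auxiliary feature vectors."""
    wt_vec = np.asarray(embed(sequence)).mean(axis=0)
    mt_vec = np.asarray(embed(apply_mutation(sequence, code))).mean(axis=0)
    return np.concatenate([wt_vec, mt_vec, mt_vec - wt_vec,
                           np.ravel(pl_features), np.ravel(aux_features)])


x = mutation_features("MKIV", "I3W", toy_onehot_embed, [0.2, 0.7], [1.5])
print(x.shape)
```

Each such vector would form one row of the design matrix passed to the gradient-boosting classifier; checking the wild-type residue against the sequence guards against off-by-one position errors.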

2.2. Performance of TopLapGBT on PON-Sol2 dataset

In our study, we use the dataset employed by PON-Sol2, as detailed in [11]. The dataset comprises 6,328 mutation samples from 77 distinct proteins. These samples are categorized into three labels: decrease in solubility, increase in solubility, and no change in solubility. Specifically, the dataset contains 3,136 samples with a decrease in solubility, 1,026 samples with an increase, and 2,166 samples with no change. The dataset is therefore class-imbalanced, with a decrease : no change : increase ratio of about 1 : 0.69 : 0.33, biased towards samples with decreased solubility. To assess the performance of our model, we first carry out random 10-fold cross-validation on the dataset. Subsequently, an independent blind test is performed to further validate the model's efficacy.
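The class proportions and a random 10-fold split can be checked with a few lines of code. This is a sketch only: the seed and the helper `random_kfold` are hypothetical, and the actual fold assignment behind Table 1 is not reproduced here. The ratios are expressed relative to the decrease class and rounded to two decimals.

```python
import numpy as np

# Class counts reported for the PON-Sol2 dataset.
counts = {"decrease": 3136, "no change": 2166, "increase": 1026}
total = sum(counts.values())
ratios = {label: round(n / counts["decrease"], 2) for label, n in counts.items()}


def random_kfold(n_samples, k=10, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)


folds = random_kfold(total)
for test_idx in folds:
    train_idx = np.setdiff1d(np.arange(total), test_idx)
    # A classifier would be fit on train_idx and evaluated on test_idx here.
    assert len(train_idx) + len(test_idx) == total
print(total, ratios, [len(f) for f in folds])
```

A plain random split, as described in the text, keeps the class imbalance only approximately in each fold; a stratified split would preserve it exactly.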

In Table 1, we compare the performance of the existing PON-Sol2 classifiers [11], which build on PON-Sol [5], with that of our proposed model, TopLapGBT, under 10-fold cross-validation. Note that PON-Sol2 incorporates feature selection techniques such as recursive feature elimination (RFE). To provide a robust assessment of TopLapGBT's performance, we conduct 10 repeated runs and report the mean values to account for randomness in the model's output.

Table 1:

Comparison of performance metrics between TopLapGBT and the single-layer and two-layer classifiers of PON-Sol2 in 10-fold cross-validation.

| Metric | Class | PON-Sol2 [11], single three-class, all features | PON-Sol2 [11], single three-class, 30 features selected by RFE | PON-Sol2 [11], two-layer three-class, all features | PON-Sol2 [11], two-layer three-class, 34 features selected by RFE | TopGBT | TopLapGBT |
|---|---|---|---|---|---|---|---|
| PPV | − | 0.842/0.742 | 0.835/0.729 | 0.875/0.793 | 0.869/0.781 | 0.868/0.785 | 0.873/0.797 |
| PPV | N | 0.657/0.536 | 0.658/0.543 | 0.635/0.521 | 0.647/0.534 | 0.686/0.554 | 0.681/0.557 |
| PPV | + | 0.563/0.730 | 0.586/0.752 | 0.520/0.696 | 0.538/0.714 | 0.646/0.797 | 0.627/0.779 |
| NPV | − | 0.913/0.954 | 0.901/0.947 | 0.893/0.942 | 0.891/0.941 | 0.932/0.965 | 0.931/0.964 |
| NPV | N | 0.841/0.824 | 0.847/0.832 | 0.847/0.829 | 0.855/0.838 | 0.864/0.849 | 0.858/0.842 |
| NPV | + | 0.877/0.737 | 0.877/0.738 | 0.877/0.736 | 0.878/0.739 | 0.886/0.749 | 0.888/0.757 |
| Sensitivity | − | 0.919/0.919 | 0.906/0.906 | 0.892/0.892 | 0.891/0.891 | 0.937/0.937 | 0.934/0.934 |
| Sensitivity | N | 0.701/0.701 | 0.717/0.717 | 0.724/0.724 | 0.738/0.738 | 0.752/0.752 | 0.735/0.735 |
| Sensitivity | + | 0.329/0.329 | 0.326/0.326 | 0.336/0.336 | 0.340/0.340 | 0.359/0.359 | 0.395/0.395 |
| Specificity | − | 0.831/0.839 | 0.825/0.831 | 0.875/0.883 | 0.868/0.874 | 0.860/0.872 | 0.867/0.881 |
| Specificity | N | 0.812/0.697 | 0.807/0.697 | 0.785/0.667 | 0.792/0.678 | 0.821/0.697 | 0.823/0.707 |
| Specificity | + | 0.948/0.938 | 0.954/0.947 | 0.938/0.927 | 0.941/0.932 | 0.962/0.954 | 0.953/0.944 |
| CPR | | 0.747/0.650 | 0.746/0.650 | 0.743/0.651 | 0.747/0.656 | 0.780/0.682 | 0.792/0.688 |
| GC² | | 0.317/0.298 | 0.309/0.289 | 0.322/0.313 | 0.323/0.312 | 0.371/0.354 | 0.376/0.361 |

Samples with a negative solubility change are denoted “−”, samples with a positive solubility change “+”, and samples with no solubility change “N”. Performance metrics include the positive predictive value (PPV), negative predictive value (NPV), sensitivity, specificity, correct prediction ratio (CPR), and generalised squared correlation (GC²). For each solubility class, PPV is the proportion of samples predicted to be in that class that truly belong to it, while NPV is the proportion of samples predicted not to be in that class that truly do not belong to it. CPR is the fraction of correctly classified samples, while GC² measures the correlation between the predicted and true classifications. Both unnormalized and normalized metrics are reported: in each cell, the first value is without normalization and the second is with normalization.

The performance of TopLapGBT is evaluated using a range of metrics: Positive Predictive Value (PPV), Negative Predictive Value (NPV), Sensitivity, Specificity, Correct Prediction Ratio (CPR), and Generalized Squared Correlation (GC²). PPV and NPV quantify the proportions of correct positive and negative predictions for each solubility class, respectively. Because this is a K-class problem with K = 3 solubility classes, CPR and GC² are particularly useful for providing a holistic view of the model's performance [40]. Specifically, CPR measures the overall accuracy of the model, while GC² quantifies the correlation between the predicted and true classes and ranges from 0 to 1. Larger values of these metrics denote better performance. Because the numbers of mutation samples are imbalanced across the classes, all performance metrics are also reported in normalized form to ensure a robust and reliable evaluation of the model (further details are given in the Methods section).
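The summary metrics can be sketched directly from a confusion matrix. Two assumptions are made here: GC² is taken in its standard chi-square form (the chi-square statistic of the confusion matrix divided by N(K − 1)), and normalization is taken to rescale each true-class row to the same size. The second assumption is consistent with Table 1, where the normalized CPR of TopLapGBT, 0.688, equals the mean of its three per-class sensitivities (0.934, 0.735, 0.395). The helper names and the confusion matrix below are made up for illustration.

```python
import numpy as np


def cpr(conf):
    """Correct prediction ratio: fraction of samples on the diagonal.
    Rows are true classes, columns are predicted classes."""
    conf = np.asarray(conf, dtype=float)
    return float(np.trace(conf) / conf.sum())


def normalize_rows(conf):
    """Rescale every true-class row to the same total (N / K samples)."""
    conf = np.asarray(conf, dtype=float)
    n, k = conf.sum(), conf.shape[0]
    return conf / conf.sum(axis=1, keepdims=True) * (n / k)


def gc2(conf):
    """Generalized squared correlation: chi-square statistic / (N (K - 1))."""
    z = np.asarray(conf, dtype=float)
    n, k = z.sum(), z.shape[0]
    expected = np.outer(z.sum(axis=1), z.sum(axis=0)) / n  # counts under independence
    return float(((z - expected) ** 2 / expected).sum() / (n * (k - 1)))


# Hypothetical confusion matrix, classes ordered (-, N, +).
conf = [[8, 2, 0],
        [1, 3, 1],
        [0, 1, 4]]
print(cpr(conf), cpr(normalize_rows(conf)), gc2(conf), gc2(normalize_rows(conf)))
```

With row normalization, the normalized CPR is simply the mean per-class sensitivity. If a class is never predicted, a column of expected counts is zero and `gc2` returns NaN (with a NumPy runtime warning), which is one way NaN entries such as those for SODA in Table 2 can arise.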

The proposed model, TopLapGBT, outperforms the PON-Sol2 models [11] on most evaluation metrics, including both summary metrics, CPR and GC². Specifically, the normalized CPR and GC² scores of TopLapGBT are 0.688 and 0.361, relative improvements of 4.88% and 15.71% over the PON-Sol2 two-layer classifier with 34 RFE-selected features (0.656 and 0.312). These gains underscore the merit of incorporating both structure-based and sequence-based features into the model. To elucidate the contribution of persistent Laplacian (PL)-based features, we also compare against our TopGBT model in Table 1. TopGBT uses persistent homology-based embeddings alongside auxiliary and pre-trained transformer features. While TopGBT still outperforms all existing PON-Sol2 models, the incorporation of PL-based features in TopLapGBT yields further improvements of about 1% and 2% in normalized CPR and GC², respectively. This supports our approach of leveraging persistent Laplacians to capture both the topological and the homotopic evolution of protein structures.

2.3. Performance of TopLapGBT on independent test set

To further assess TopLapGBT, we performed an independent blind test using the same test set employed by PON-Sol2 [11]. In this validation, TopLapGBT achieves the highest CPR and GC² among all the models in Table 2. Specifically, TopLapGBT registers a normalized CPR of 0.564 and a normalized GC² of 0.185, surpassing PON-Sol2 by 3.49% and 17.83%, respectively. Relative to TopGBT, the inclusion of PL-based features in TopLapGBT yields small gains in both normalized CPR (0.562 to 0.564) and GC² (0.184 to 0.185), consistent with the utility of persistent Laplacians in capturing the homotopic shape evolution of protein structures.
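The percentages quoted for both validation settings are relative (not absolute) improvements, and they can be checked directly from the normalized table entries. In the 10-fold setting the reference is the PON-Sol2 two-layer classifier with 34 RFE-selected features (Table 1); in the blind test it is the PON-Sol2 column of Table 2. The helper `relative_gain` is illustrative.

```python
def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


# (TopLapGBT, PON-Sol2) normalized scores taken from Tables 1 and 2.
comparisons = {
    "10-fold CV, CPR": (0.688, 0.656),  # PON-Sol2 two-layer, 34 RFE features
    "10-fold CV, GC2": (0.361, 0.312),
    "blind test, CPR": (0.564, 0.545),
    "blind test, GC2": (0.185, 0.157),
}
gains = {name: round(relative_gain(*pair), 2) for name, pair in comparisons.items()}
print(gains)
```

In absolute terms, the same differences are small (for example 0.019 in blind-test normalized CPR), which is worth keeping in mind when comparing relative percentages across metrics with different scales.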

Table 2:

Performance of TopLapGBT and existing state-of-the-art models on the independent blind test set.

| Metric | Class | PON-Sol [5] | SODA | SODA (threshold 5) | SODA (threshold 10) | SODA (threshold 17) | PON-Sol2 [11] | TopGBT | TopLapGBT |
|---|---|---|---|---|---|---|---|---|---|
| PPV | − | 0.593/0.428 | 0.427/0.258 | 0.606/0.428 | 0.673/0.468 | 0.742/0.585 | 0.804/0.643 | 0.781/0.649 | 0.789/0.645 |
| PPV | N | 0.427/0.385 | NaN/NaN | 0.425/0.365 | 0.397/0.357 | 0.383/0.350 | 0.600/0.475 | 0.617/0.462 | 0.624/0.475 |
| PPV | + | 0.151/0.373 | 0.080/0.229 | 0.047/0.149 | 0.060/0.184 | 0.098/0.284 | 0.233/0.472 | 0.524/0.761 | 0.476/0.718 |
| NPV | − | 0.514/0.691 | 0.373/0.537 | 0.508/0.684 | 0.502/0.677 | 0.501/0.677 | 0.794/0.887 | 0.843/0.920 | 0.842/0.918 |
| NPV | N | 0.685/0.700 | 0.642/0.667 | 0.761/0.739 | 0.797/0.782 | 0.797/0.782 | 0.804/0.793 | 0.816/0.795 | 0.826/0.809 |
| NPV | + | 0.881/0.693 | 0.832/0.605 | 0.848/0.633 | 0.858/0.649 | 0.858/0.649 | 0.879/0.684 | 0.881/0.692 | 0.880/0.688 |
| Sensitivity | − | 0.263/0.263 | 0.488/0.488 | 0.195/0.195 | 0.098/0.098 | 0.068/0.068 | 0.802/0.802 | 0.867/0.867 | 0.864/0.864 |
| Sensitivity | N | 0.456/0.456 | 0.000/0.000 | 0.759/0.759 | 0.886/0.886 | 0.954/0.954 | 0.671/0.671 | 0.692/0.692 | 0.713/0.713 |
| Sensitivity | + | 0.448/0.448 | 0.253/0.253 | 0.069/0.069 | 0.057/0.057 | 0.046/0.046 | 0.161/0.161 | 0.126/0.126 | 0.115/0.115 |
| Specificity | − | 0.812/0.824 | 0.318/0.297 | 0.867/0.869 | 0.951/0.944 | 0.975/0.976 | 0.796/0.777 | 0.747/0.765 | 0.759/0.763 |
| Specificity | N | 0.659/0.636 | 1.000/1.000 | 0.426/0.340 | 0.249/0.204 | 0.144/0.116 | 0.751/0.630 | 0.760/0.597 | 0.760/0.606 |
| Specificity | + | 0.617/0.623 | 0.558/0.573 | 0.786/0.802 | 0.863/0.872 | 0.936/0.942 | 0.920/0.910 | 0.983/0.980 | 0.981/0.977 |
| CPR | | 0.356/0.389 | 0.282/0.247 | 0.381/0.341 | 0.375/0.347 | 0.382/0.356 | 0.671/0.545 | 0.707/0.562 | 0.711/0.564 |
| GC² | | 0.010/0.011 | NaN/NaN | 0.041/0.045 | 0.022/0.022 | 0.016/0.016 | 0.181/0.157 | 0.205/0.184 | 0.206/0.185 |

Samples with a negative solubility change are denoted “−”, samples with a positive solubility change “+”, and samples with no solubility change “N”. Performance metrics include the positive predictive value (PPV), negative predictive value (NPV), sensitivity, specificity, correct prediction ratio (CPR), and generalised squared correlation (GC²). For each solubility class, PPV is the proportion of samples predicted to be in that class that truly belong to it, while NPV is the proportion of samples predicted not to be in that class that truly do not belong to it. CPR is the fraction of correctly classified samples, while GC² measures the correlation between the predicted and true classifications. Both unnormalized and normalized metrics are reported: in each cell, the first value is without normalization and the second is with normalization.

3. Discussion

The performance of machine learning models generally depends on the nature of the input features. In our model, the PL-based features depend mainly on the quality of the protein structures from AlphaFold 2 (AF2), which is therefore crucial to the performance of TopLapGBT. AF2 structures have recently been reported to achieve performance comparable to nuclear magnetic resonance (NMR) structures, while ensemble methods can further enhance performance by combining multiple NMR structures [1]. This allows AF2 structures to serve as a practical substitute for experimental structural data. Although AF2 structures are not as reliable as X-ray structures, the fusion of sequence-based pre-trained transformer features and PL-based features helps keep the featurization robust even when the AF2 structures are of lower quality. PL elucidates the precise mutation geometry and topology, while the pre-trained transformer features capture evolutionary patterns from an extensive sequence library. This synergy is significant and can be applied to a diverse range of other challenges in biomolecular research. In the rest of this section, we analyze the model's performance by mutation region and mutation type, and we compare the different feature types using Residue-Similarity plots.

3.1. Performance analysis based on different mutation regions

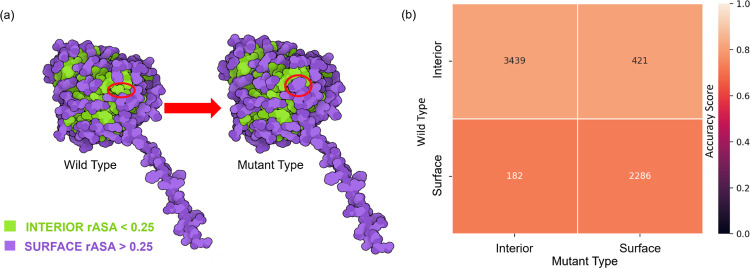

To examine the model's performance in more detail, we categorize mutation samples by the structural region of the mutation site, interior or surface, before and after the mutation, as depicted in Figure 2. Regions are defined by the relative accessible surface area (rASA) with a cutoff value: a residue at the mutation site is classified as buried (interior) if its rASA falls below the cutoff, and as surface otherwise. Although a discrete cutoff initially raised concerns, given that amino acids have a continuous exposure profile, empirical analyses of databases from organisms such as Escherichia coli, Saccharomyces cerevisiae, and Homo sapiens have shown that an optimal rASA cutoff of approximately 25% is effective for distinguishing between surface and interior residues [41]. We apply this framework to identify surface and interior residues in the solubility dataset. We observe that some mutation sites move from one structural region to the other as a result of the mutation.
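A minimal sketch of the region assignment and the per-region accuracy behind Figure 2(b), assuming the rASA of each mutation-site residue (its accessible surface area divided by a reference maximum for that amino acid) has already been computed for the wild-type and mutant structures. The 0.25 cutoff follows the approximately 25% value from [41]; the helper names, rASA values, and labels are hypothetical.

```python
from collections import defaultdict

RASA_CUTOFF = 0.25  # approximately 25%, following [41]


def region(rasa, cutoff=RASA_CUTOFF):
    """Classify a residue as interior (buried) or surface from its rASA in [0, 1]."""
    return "interior" if rasa < cutoff else "surface"


def region_pair(rasa_wt, rasa_mut, cutoff=RASA_CUTOFF):
    """Label such as 'interior-surface' (wild-type region, then mutant region)."""
    return f"{region(rasa_wt, cutoff)}-{region(rasa_mut, cutoff)}"


def accuracy_by_region_pair(rasa_pairs, y_true, y_pred):
    """Fraction of correct predictions (CPR) within each region-pair group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for (rasa_wt, rasa_mut), truth, pred in zip(rasa_pairs, y_true, y_pred):
        key = region_pair(rasa_wt, rasa_mut)
        totals[key] += 1
        hits[key] += int(truth == pred)
    return {key: hits[key] / totals[key] for key in totals}


# Hypothetical rASA values and labels, for illustration only.
pairs = [(0.10, 0.40), (0.05, 0.12), (0.60, 0.55), (0.30, 0.20), (0.08, 0.35)]
truth = ["-", "N", "+", "-", "-"]
pred = ["-", "N", "N", "-", "+"]
print(accuracy_by_region_pair(pairs, truth, pred))
```

Keeping the wild-type and mutant regions as an ordered pair is what allows transitions such as interior-to-surface (as in I283W) to be scored separately from mutations that stay in the same region.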

Figure 2:

(a) Definition of the structural regions for protein label 213133708 with mutation I283W. For both the wild type and the mutant, amino acids are classified into surface or interior regions based on their rASA. Residue 283 of protein 213133708 is mutated from isoleucine (interior region) to tryptophan (surface region). Structures are plotted with the software Illustrate [42]. (b) Comparison of TopLapGBT performance across mutation region types. One axis represents the region type of the original residue and the other the region type of the mutated residue. The number in each cell is the number of mutation samples in that region-region pair. The accuracy scores (CPR) are 0.813 for interior-interior, 0.812 for interior-surface, 0.725 for surface-interior, and 0.730 for surface-surface mutations.

To gain further insight into TopLapGBT's performance, we segment the results by the structural location of the mutation within the protein. We present these segmentations as heatmaps that delineate both mutation regions and amino acid types. By classifying residues as either interior or surface based on rASA [41], we can examine how residue exposure influences the classification of solubility changes upon mutation. Figure 2(b) displays accuracy scores for four types of mutations: interior-interior, interior-surface, surface-interior, and surface-surface. TopLapGBT attains an average accuracy score of 0.770 across these categories, with mutations at initially interior sites classified more accurately than those at initially surface sites. Extended data in Figure S1 further break down the accuracy scores for all 20 amino acids within each region pair, revealing variations in performance across residue-residue pairs.

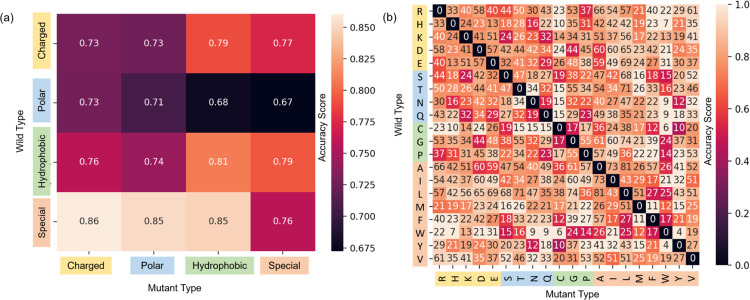

3.2. Performance analysis based on different mutation types

Turning to mutation types, we also examine the model's ability to classify solubility changes across the 20 amino acid types in the dataset. We further group the amino acids as charged, polar, hydrophobic, or special. Table S1 lists the sample counts for each mutation group pair. Figure 3(a) displays accuracy scores for each mutation group pair, while Figure 3(b) shows scores for each amino acid pair. Notably, the special-charged and special-polar groups register the highest accuracy, whereas the polar-hydrophobic and polar-special groups underperform. One plausible reason is the inherent difficulty of accurately classifying mutations with non-negative solubility changes. Note that PON-Sol2 employed a two-layer classifier to improve classification [11]; our results indicate that TopLapGBT surpasses the performance of this two-layer system.

Figure 3:

Comparison of 10-fold cross-validation accuracy scores (CPR) for (a) different mutation groups and (b) the associated amino acid types. One axis labels the residue type of the original protein and the other the residue type of the mutant. Black squares in (b) have zero mutation samples. For a reverse mutation, the solubility change label is reversed (an increase becomes a decrease and vice versa), while a no-change label is kept.

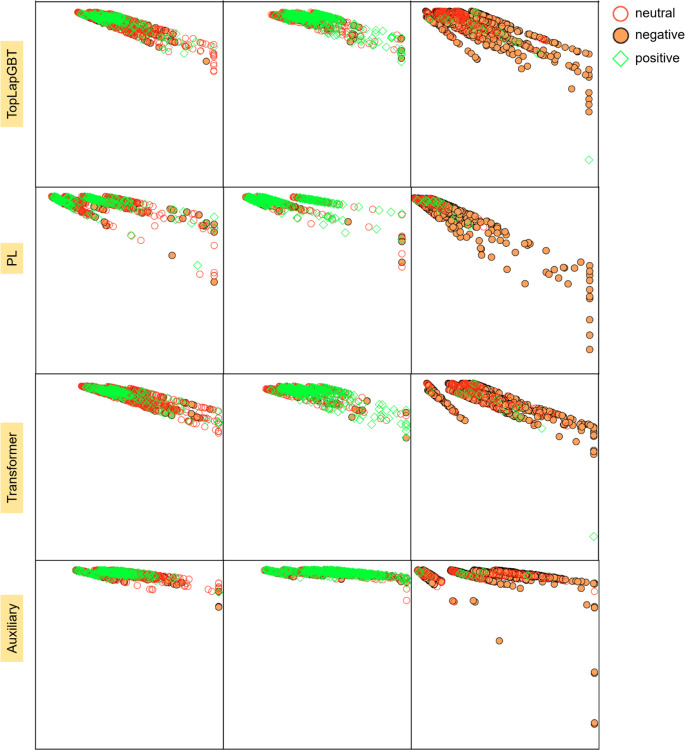

3.3. Feature analysis based on Residue-Similarity plots

The Residue Similarity Index (RSI) is a useful metric for evaluating the effectiveness of dimensionality reduction in both clustering and classification contexts [43]. In single-cell typing, RSI has produced classification accuracy scores that agree well with those of supervised methods. Applied to our solubility change dataset, Residue-Similarity (R-S) plots can be constructed to examine how well the Residue Index (RI) and Similarity Index (SI) indicate the quality of cluster separation.

Figure 4 compares the R-S plots of TopLapGBT with those of the individual feature sets used in model training. In every plot, each true label contains both correctly and incorrectly classified samples. Notably, the pre-trained transformer and persistent Laplacian-based features show better clustering than the auxiliary features. For the auxiliary features, high RI and SI scores place the data points near the upper regions of their respective sections. Despite this, the integrated use of all three feature types in TopLapGBT yields appreciable clustering performance, consistent with the CPR obtained in 10-fold cross-validation. To support the use of a supervised classifier such as TopLapGBT, we contrast the R-S plots with UMAP visualizations (Figure S2). The UMAP plots fail to form clusters as distinct as those in the R-S plots, reinforcing the need for a specialized approach to classify mutation samples effectively.

Figure 4:

Comparison of R-S plots for the different types of features used in the TopLapGBT model. The two axes represent the residue score and the similarity score. R-S scores were computed on the test set of each cross-validation fold, and all 10 folds are visualized. Each section corresponds to one of the three true solubility labels, and each sample's color and marker correspond to the label predicted by TopLapGBT.

The impetus for utilizing structure-based features stems from the multifaceted relationship that exists among protein sequence, structure, and solubility. Factors such as hydrophobicity, charge distribution, and intermolecular interactions contribute to the complexity of protein solubility. Traditional prediction methods, which often rely on empirical rules or rudimentary descriptors, fall short in capturing this intricate molecular interplay. By employing advanced mathematical techniques like persistent Laplacian (PL) coupled with machine learning algorithms, we can decipher the complex patterns and relationships embedded within protein sequences and structures. Persistent Laplacian, in particular, provides a robust mathematical representation that captures both the topological and homotopic evolution of protein structures. Furthermore, machine learning models rooted in advanced mathematics offer several advantages for classifying changes in protein solubility. These models are well-suited for handling high-dimensional and complex data sets, such as those involving protein sequences and structures. They are also capable of learning non-linear relationships and capturing nuanced dependencies that are often overlooked by traditional linear models. Importantly, these advanced models can adeptly manage class-imbalanced datasets, which are commonly encountered in protein solubility studies.

4. Conclusion

In the multifaceted quest to understand mutation-induced solubility changes, various scientific domains, including quantum mechanics, molecular mechanics, biochemistry, biophysics, and molecular biology, have made significant contributions. Despite these concerted efforts, state-of-the-art models remain limited, as evidenced by a best normalized CPR of 0.656 even with feature selection. Persistent homology (PH) has emerged as a powerful tool for capturing the complexity of biomolecular structures and has achieved noteworthy success in drug discovery applications. However, its inability to capture homotopic shape evolution, which is crucial for delineating molecular interactions in proteins, is a critical shortcoming.

Our study introduces TopLapGBT, a novel model that integrates persistent Laplacian (PL) features with pre-trained transformer features, thereby capturing both topology and homotopic shape evolution. This fusion leads to significant advances in classification performance. Specifically, TopLapGBT achieves normalized CPR and GC² scores of 0.688 and 0.361, respectively, relative improvements of 4.88% and 15.71% over the state-of-the-art PON-Sol2. These findings are further corroborated by an independent blind test, in which TopLapGBT continues to outperform existing models.

In summary, our proposed TopLapGBT model not only achieves superior performance over existing state-of-the-art methods but also introduces a more nuanced approach for the classification of protein solubility changes upon mutation. These results underscore the transformative potential of integrating geometric and topological features with machine learning in advancing the field of molecular biology.

5. Materials and Methods

In this section, we describe the mathematical and computational foundations of this study. Specifically, we introduce simplicial complexes, combinatorial Laplacians from spectral graph theory, and persistent Laplacian methods, which capture the topological and spectral properties used to characterize proteins. We then describe the persistent homology and transformer features and the metrics used to evaluate the resulting models on test data sets and in validation settings.

5.1. Persistent Laplacian characterization of proteins

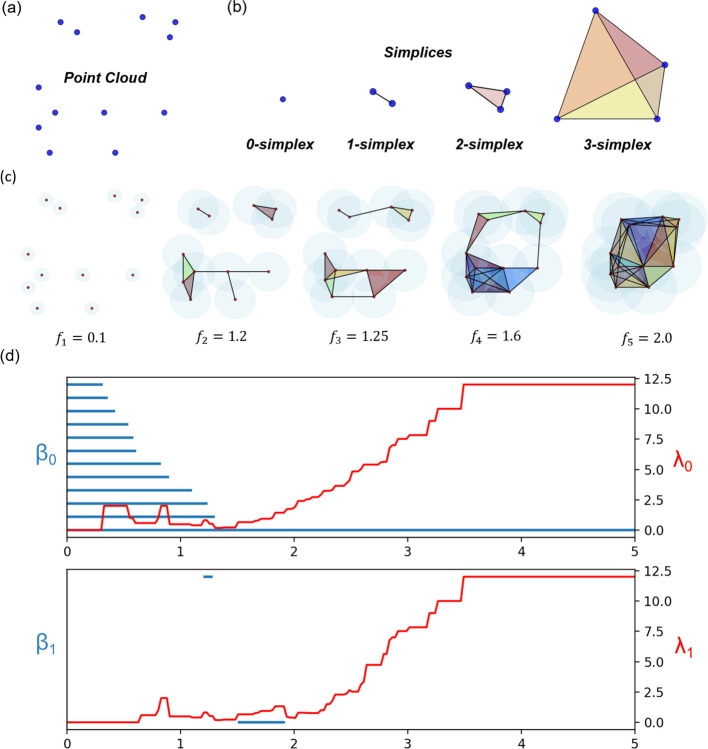

Simplicial complex

A simplicial complex is made up of a set of simplices and generalizes graphs to higher dimensions [44, 45, 46, 47]. Each simplex is a finite set of vertices, which can be interpreted as atoms in a protein structure. A simplex can be a point (0-simplex), an edge (1-simplex), a triangle (2-simplex), a tetrahedron (3-simplex), or, in higher dimensions, a $k$-simplex. Formally, a $k$-simplex $\sigma^k$ is the convex hull of $k+1$ affinely independent points $v_0, v_1, \ldots, v_k$:

$$\sigma^k = \Big\{ \lambda_0 v_0 + \lambda_1 v_1 + \cdots + \lambda_k v_k \;\Big|\; \sum_{i=0}^{k} \lambda_i = 1,\ \lambda_i \ge 0 \Big\}.$$

A geometric simplicial complex $K$ is a finite set of geometric simplices that satisfies two conditions. First, every face of a simplex in $K$ is also in $K$. Second, the intersection of any two simplices in $K$ is either empty or a common face of both. Commonly used constructions include the Čech complex, Vietoris-Rips complex, Alpha complex, clique complex, cubical complex, and Morse complex [44, 45, 46, 47].

Chain Group

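The $k$-th chain group $C_k(K)$ is the free Abelian group generated by the oriented $k$-simplices of $K$. The boundary operator $\partial_k: C_k(K) \to C_{k-1}(K)$ acts on an oriented $k$-simplex $\sigma^k = [v_0, v_1, \ldots, v_k]$ as

$$\partial_k \sigma^k = \sum_{i=0}^{k} (-1)^i \sigma^{k-1}_i,$$

where $\sigma^{k-1}_i = [v_0, \ldots, \hat{v}_i, \ldots, v_k]$ is the oriented $(k-1)$-simplex formed by all the vertices except $v_i$, i.e., obtained by removing $v_i$ from $\sigma^k$. The boundary operator satisfies $\partial_{k-1}\partial_k = 0$.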
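As a minimal sketch of the boundary operator defined above (not code from the TopLapGBT repository), the snippet below represents an oriented simplex as a tuple of vertex labels, whose order is taken as its orientation, and a chain as a dictionary from simplices to integer coefficients. Applying the operator twice to a tetrahedron illustrates the identity $\partial_{k-1}\partial_k = 0$. The helper names `boundary` and `boundary_of_chain` are illustrative.

```python
from collections import defaultdict


def boundary(simplex):
    """Boundary of an oriented simplex given as a tuple of vertex labels.

    Returns a dict mapping each face (vertex i removed) to the sign (-1)**i.
    For a 0-simplex this yields the empty face; when building Laplacians,
    the boundary of a 0-simplex is treated as the zero map instead.
    """
    faces = {}
    for i in range(len(simplex)):
        face = simplex[:i] + simplex[i + 1:]
        faces[face] = faces.get(face, 0) + (-1) ** i
    return faces


def boundary_of_chain(chain):
    """Apply the boundary operator linearly to a chain {simplex: coefficient}."""
    result = defaultdict(int)
    for simplex, coeff in chain.items():
        for face, sign in boundary(simplex).items():
            result[face] += coeff * sign
    # Drop faces whose coefficients cancelled to zero.
    return {face: c for face, c in result.items() if c != 0}


tetra = (0, 1, 2, 3)
d1 = boundary(tetra)
print(d1)                      # {(1, 2, 3): 1, (0, 2, 3): -1, (0, 1, 3): 1, (0, 1, 2): -1}
print(boundary_of_chain(d1))   # {}: the boundary of a boundary vanishes
```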

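The adjoint of $\partial_k$, namely

$$\partial_k^*: C_{k-1}(K) \to C_k(K),$$

satisfies the inner product relation $\langle \partial_k c_k, c_{k-1} \rangle = \langle c_k, \partial_k^* c_{k-1} \rangle$ for every $c_k \in C_k(K)$ and $c_{k-1} \in C_{k-1}(K)$. The adjoint is used to define the combinatorial Laplacian.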

Combinatorial Laplacian

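For the $k$-boundary operator $\partial_k: C_k(K) \to C_{k-1}(K)$, let $B_k$ be the matrix representation of $\partial_k$ with respect to the standard ordered bases $\{\sigma^{k-1}_i\}$ of $C_{k-1}(K)$ and $\{\sigma^k_j\}$ of $C_k(K)$. Similarly, the matrix representation of $\partial_k^*$ is the transpose $B_k^T$ with respect to the same ordered bases.

More specifically, let $n_k$ and $n_{k-1}$ be the numbers of $k$-simplices and $(k-1)$-simplices, respectively, in a simplicial complex $K$. The boundary matrix $B_k$ is an $n_{k-1} \times n_k$ matrix with entries

$$(B_k)_{ij} = \begin{cases} 1, & \text{if } \sigma^{k-1}_i \subset \sigma^k_j \text{ and } \sigma^{k-1}_i \sim \sigma^k_j, \\ -1, & \text{if } \sigma^{k-1}_i \subset \sigma^k_j \text{ and } \sigma^{k-1}_i \nsim \sigma^k_j, \\ 0, & \text{if } \sigma^{k-1}_i \not\subset \sigma^k_j, \end{cases}$$

where $1 \le i \le n_{k-1}$ and $1 \le j \le n_k$. Here, $\sigma^{k-1}_i \subset \sigma^k_j$ means that the $i$-th $(k-1)$-simplex is a face of the $j$-th $k$-simplex; $\sigma^{k-1}_i \sim \sigma^k_j$ indicates that the coefficient of $\sigma^{k-1}_i$ in $\partial_k \sigma^k_j$ is $1$, and $\sigma^{k-1}_i \nsim \sigma^k_j$ indicates that this coefficient is $-1$. If $\sigma^{k-1}_i$ is not a face of $\sigma^k_j$, the entry is $0$.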
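The boundary matrix $B_k$ can be assembled directly from this definition. The sketch below is an illustration under the assumption that each simplex is stored as a sorted tuple of vertex labels whose order fixes its reference orientation; it builds $B_1$ and $B_2$ for a single filled triangle and checks that $B_1 B_2$ is the zero matrix.

```python
import numpy as np


def boundary_matrix(faces, simplices):
    """Matrix B_k: rows index (k-1)-simplices, columns index k-simplices.

    Simplices are tuples of vertex labels; their order is the assumed
    reference orientation. Entry (i, j) is (-1)**r if faces[i] is obtained
    from simplices[j] by removing its r-th vertex, and 0 otherwise.
    """
    row_index = {face: i for i, face in enumerate(faces)}
    B = np.zeros((len(faces), len(simplices)), dtype=int)
    for j, s in enumerate(simplices):
        for r in range(len(s)):
            face = s[:r] + s[r + 1:]
            B[row_index[face], j] = (-1) ** r
    return B


vertices = [(0,), (1,), (2,)]
edges = [(0, 1), (0, 2), (1, 2)]
triangles = [(0, 1, 2)]

B1 = boundary_matrix(vertices, edges)
B2 = boundary_matrix(edges, triangles)
print(B1)
print(B1 @ B2)  # zero matrix, i.e. the boundary of a boundary vanishes
```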

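Then the $k$-combinatorial Laplacian, or topological Laplacian, is the linear operator $\Delta_k: C_k(K) \to C_k(K)$ given by

$$\Delta_k = \partial_{k+1}\partial_{k+1}^* + \partial_k^*\partial_k. \tag{1}$$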

The $k$-combinatorial Laplacian has the $n_k \times n_k$ matrix representation

$$L_k = B_{k+1}B_{k+1}^T + B_k^T B_k. \tag{2}$$

For $k = 0$, $L_0 = B_1 B_1^T$, since $\partial_0$ is a zero map and hence $B_0$ is a zero matrix.

The matrix $B_k$ has $n_{k-1}$ rows, one for each $(k-1)$-simplex in $K$, and $n_k$ columns, one for each $k$-simplex in $K$. Furthermore, $L_k^{\mathrm{up}} = B_{k+1}B_{k+1}^T$ is called the upper $k$-combinatorial Laplacian matrix and $L_k^{\mathrm{down}} = B_k^T B_k$ the lower $k$-combinatorial Laplacian matrix. In fact, the 0-combinatorial Laplacian matrix $L_0 = B_1 B_1^T$ is the graph Laplacian of spectral graph theory, applied to the graph formed by the vertices and edges of $K$ (for example, when $K$ is an oriented simplicial complex of dimension 1).

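These Laplacian matrices can be described explicitly in terms of simplex relations. More precisely, $L_0$ can be written as

$$(L_0)_{ij} = \begin{cases} \deg(\sigma^0_i), & \text{if } i = j, \\ -1, & \text{if } \sigma^0_i \frown \sigma^0_j, \\ 0, & \text{otherwise}, \end{cases}$$

which is the graph Laplacian. Furthermore, when $k > 0$, $L_k$ can be expressed as

$$(L_k)_{ij} = \begin{cases} \deg(\sigma^k_i) + k + 1, & \text{if } i = j, \\ 1, & \text{if } i \neq j,\ \sigma^k_i \not\frown \sigma^k_j \text{ and } \sigma^k_i \smile \sigma^k_j \text{ with similar common lower simplex}, \\ -1, & \text{if } i \neq j,\ \sigma^k_i \not\frown \sigma^k_j \text{ and } \sigma^k_i \smile \sigma^k_j \text{ with dissimilar common lower simplex}, \\ 0, & \text{if } i \neq j \text{ and either } \sigma^k_i \frown \sigma^k_j \text{ or } \sigma^k_i \not\smile \sigma^k_j. \end{cases}$$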

Here, two simplices are written $\sigma_i \sim \sigma_j$ if they have the same orientation, i.e., are similarly oriented, and $\sigma_i \nsim \sigma_j$ otherwise. Two $k$-simplices $\sigma^k_i$ and $\sigma^k_j$ are upper adjacent (resp. lower adjacent) neighbors, denoted $\sigma^k_i \frown \sigma^k_j$ (resp. $\sigma^k_i \smile \sigma^k_j$), if they are both faces of a common $(k+1)$-simplex (resp. they share a common $(k-1)$-simplex as a face). If the orientations induced on their common lower simplex agree, it is called a similar common lower simplex ($\sigma^k_i \smile \sigma^k_j$ and $\sigma^k_i \sim \sigma^k_j$); if the orientations differ, it is called a dissimilar common lower simplex ($\sigma^k_i \smile \sigma^k_j$ and $\sigma^k_i \nsim \sigma^k_j$). The (upper) degree of a $k$-simplex $\sigma^k$, denoted $\deg(\sigma^k)$, is the number of $(k+1)$-simplices of which $\sigma^k$ is a face.

The eigenvalues of combinatorial Laplacian matrices are independent of the choice of orientation [48]. Furthermore, by the combinatorial Hodge theorem [49], the multiplicity of the zero eigenvalue of $L_k$ (i.e., the number of zero eigenvalues) equals the $k$-th Betti number $\beta_k$. Betti numbers are topological invariants: $\beta_k$ counts the $k$-dimensional holes in a simplicial complex. In particular, $\beta_0$, $\beta_1$, and $\beta_2$ represent the numbers of independent components, rings, and cavities, respectively.
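To make Eq. (2) and the Hodge-theoretic statement concrete, the following sketch (a toy example, not protein data) forms $L_0$ and $L_1$ for the boundary of a triangle with and without its 2-simplex and counts the numerically zero eigenvalues. The hollow triangle should give $\beta_0 = 1$ and $\beta_1 = 1$, and filling it should give $\beta_1 = 0$. In the filled case $L_1 = 3I$: each edge has two vertices and is a face of one triangle, so the diagonal is 3, while the upper and lower off-diagonal contributions cancel. The zero-eigenvalue tolerance `tol` is an assumption.

```python
import numpy as np


def combinatorial_laplacian(B_k, B_k1):
    """L_k = B_{k+1} B_{k+1}^T + B_k^T B_k, as in Eq. (2)."""
    return B_k1 @ B_k1.T + B_k.T @ B_k


def betti(L, tol=1e-8):
    """Number of numerically zero eigenvalues of a symmetric PSD matrix."""
    eigvals = np.linalg.eigvalsh(L)
    return int(np.sum(np.abs(eigvals) < tol))


# Vertices 0, 1, 2; edges (0,1), (0,2), (1,2) oriented from low to high label.
B1 = np.array([[-1, -1, 0],
               [1, 0, -1],
               [0, 1, 1]])
B0 = np.zeros((0, 3))                    # boundary of 0-simplices: zero map
B2_filled = np.array([[1], [-1], [1]])   # boundary of the 2-simplex (0,1,2)
B2_hollow = np.zeros((3, 0))             # no 2-simplex present

for name, B2 in [("hollow", B2_hollow), ("filled", B2_filled)]:
    L0 = combinatorial_laplacian(B0, B1)
    L1 = combinatorial_laplacian(B1, B2)
    print(name, "beta0 =", betti(L0), "beta1 =", betti(L1))
# hollow beta0 = 1 beta1 = 1
# filled beta0 = 1 beta1 = 0
```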

Persistent Laplacian

The persistent Laplacian (PL) was introduced by integrating the combinatorial Laplacian with a multiscale filtration [25]. The spectra of a $k$-combinatorial Laplacian matrix reveal both topological and geometric information (e.g., the connectivity and robustness of simple graphs). However, the combinatorial Laplacian of a single complex is free of metrics and coordinates, so on its own it carries too little topological and geometric information to describe a given configuration.

PL therefore extends this construction to the sequence of simplicial complexes generated by a filtration process, an idea largely inspired by persistent homology and by earlier work on multiscale graphs. The rest of this section focuses on the construction of PL. First, a $k$-combinatorial Laplacian matrix is symmetric and positive semi-definite, so its eigenvalues are all real and non-negative. The multiplicity of the zero spectra (also called harmonic spectra) reveals the topological information, while the geometric information is preserved in the non-harmonic spectra.

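A key concept of PL is the filtration process: an increasing filtration parameter generates a series of topological spaces, represented by a nested sequence of multiscale simplicial complexes [25]. For an oriented simplicial complex $K$, its filtration is a nested sequence of simplicial complexes

$$\emptyset = K_0 \subseteq K_1 \subseteq \cdots \subseteq K_m = K.$$

This nested sequence of simplicial complexes induces a family of chain complexes

$$\cdots \underset{\partial_{k+1}^{t*}}{\overset{\partial_{k+1}^{t}}{\rightleftarrows}} C_k(K_t) \underset{\partial_{k}^{t*}}{\overset{\partial_{k}^{t}}{\rightleftarrows}} C_{k-1}(K_t) \rightleftarrows \cdots \underset{\partial_{1}^{t*}}{\overset{\partial_{1}^{t}}{\rightleftarrows}} C_0(K_t) \underset{\partial_{0}^{t*}}{\overset{\partial_{0}^{t}}{\rightleftarrows}} \emptyset, \tag{3}$$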

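where $C_k(K_t)$ is the chain group of the subcomplex $K_t$ and $\partial_k^t: C_k(K_t) \to C_{k-1}(K_t)$ is its $k$-boundary operator. For $k = 0$, $C_{-1}(K_t)$ is an empty set and $\partial_0^t$ is a zero map. For $k \geq 1$, the boundary operator is

$$\partial_k^t \sigma^k = \sum_{i=0}^{k} (-1)^i \sigma^{k-1}_i, \quad \sigma^k \in K_t, \tag{4}$$

with $\sigma^k = [v_0, \ldots, v_k]$ a $k$-simplex and $\sigma^{k-1}_i = [v_0, \ldots, \hat{v}_i, \ldots, v_k]$ the $(k-1)$-simplex obtained by removing its vertex $v_i$. Similarly, the adjoint of $\partial_k^t$ is the operator $\partial_k^{t*}: C_{k-1}(K_t) \to C_k(K_t)$. The topological and spectral characteristics can then be studied by varying the filtration parameter $t$ and diagonalizing the $k$-combinatorial Laplacian matrix of $K_t$, $L_k^t = B_{k+1}^t (B_{k+1}^t)^T + (B_k^t)^T B_k^t$. The multiplicity of the zero spectra of $L_k^t$ is the persistent Betti number $\beta_k^t$, which counts the $k$-dimensional holes in $K_t$. In other words,

$$\beta_k^t = \dim \ker \partial_k^t - \dim \operatorname{im} \partial_{k+1}^t = \dim \ker L_k^t. \tag{5}$$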

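In particular, $\beta_0^t$ is the number of connected components in $K_t$, $\beta_1^t$ counts the one-dimensional cycles in $K_t$, and $\beta_2^t$ reveals the number of two-dimensional voids in $K_t$. In addition, the spectra of $L_k^t$ can be written in ascending order as

$$\operatorname{Spectra}(L_k^t) = \{(\lambda_1)_k^t, (\lambda_2)_k^t, \ldots, (\lambda_N)_k^t\}, \quad (\lambda_1)_k^t \le (\lambda_2)_k^t \le \cdots \le (\lambda_N)_k^t, \tag{6}$$

where $L_k^t$ is an $N \times N$ matrix and $N$ is the number of $k$-simplices in $K_t$. The $p$-persistent $k$-combinatorial Laplacian, which relates $K_t$ to $K_{t+p}$, can be constructed from the boundary operators in a similar way. Further details can be found in [25].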
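The filtration can be illustrated at dimension 0, where only the vertices and edges of the Vietoris-Rips complex matter and $L_0^t$ reduces to the graph Laplacian of the graph that joins points within distance $t$. The sketch below uses NumPy and a hypothetical four-point square rather than protein atoms, and reports the persistent Betti number $\beta_0^t$ and the smallest non-zero eigenvalue for three values of $t$. Between $t = 1$ and $t = 1.5$, $\beta_0^t$ stays at 1 while the smallest non-zero eigenvalue rises from 2 to 4 as the diagonals are added, which is the kind of shape change that only the non-harmonic spectra record.

```python
import numpy as np


def rips_l0_spectrum(points, t):
    """Ascending eigenvalues of L_0^t for the Vietoris-Rips complex at scale t.

    At dimension 0 only vertices and edges matter: points i and j are joined
    when their distance is <= t, and L_0^t is the graph Laplacian D - A.
    """
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    adj = ((dist <= t) & ~np.eye(len(points), dtype=bool)).astype(float)
    laplacian = np.diag(adj.sum(axis=1)) - adj
    return np.linalg.eigvalsh(laplacian)  # eigvalsh returns ascending order


def summarize(eigvals, tol=1e-8):
    """Persistent Betti number (harmonic count) and first non-zero eigenvalue."""
    nonharmonic = eigvals[np.abs(eigvals) >= tol]
    beta0 = len(eigvals) - len(nonharmonic)
    # 0.0 is used as a placeholder when there is no non-zero eigenvalue.
    first_nonzero = nonharmonic.min() if nonharmonic.size else 0.0
    return beta0, first_nonzero


# Hypothetical point cloud: the corners of a unit square.
pts = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
for t in [0.5, 1.0, 1.5]:
    beta0, lam = summarize(rips_l0_spectrum(pts, t))
    print(f"t={t}: beta0={beta0}, first non-zero eigenvalue={lam:.3f}")
# t=0.5: beta0=4, first non-zero eigenvalue=0.000
# t=1.0: beta0=1, first non-zero eigenvalue=2.000
# t=1.5: beta0=1, first non-zero eigenvalue=4.000
```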

To illustrate the difference between PL and PH, Figure 5 shows a point cloud of 13 points, the basic simplices, a filtration process, and a comparison between persistent Laplacian spectra and persistent homology barcodes. Figure 5(c) shows different stages of a Rips filtration of the 13 points. Figure 5(d) shows the persistent homology barcodes (in blue) and the persistent non-harmonic spectra (in red). In the later part of the filtration, the non-harmonic spectra capture additional homotopic shape evolution that is missing from persistent homology.

Figure 5:

Illustration of (a) a point cloud, (b) basic simplices, (c) the filtration process, and (d) a comparison between PH barcodes [50, 12] and the non-harmonic spectra of persistent Laplacians (PLs) [25] for the thirteen points in the filtration process of (c). The x-axis represents the filtration parameter. By discretizing the filtration region into equal-sized bins and adding all the Betti bars together, the topological invariants are summarized into persistent Betti numbers that act as a topological descriptor extracted from protein structures. The first non-zero eigenvalues of the dimension-0 and dimension-1 PLs are depicted in red. The harmonic spectra of PLs return all the topological invariants of PH, whereas the non-harmonic spectra of PLs capture the additional homotopic shape evolution during the filtration that PH neglects.

5.2. Persistent Laplacian descriptors

To capture the mutation-induced solubility change, we apply the persistent Laplacian (PL) to characterize the interactions between the mutation site and the rest of the protein. To describe these interactions, we first propose an interactive PL based on the distance function $D_I(a_i, a_j)$ between two atoms $a_i$ and $a_j$, defined as

$$D_I(a_i, a_j) = \begin{cases} \infty, & \text{if } \mathrm{Loc}(a_i) = \mathrm{Loc}(a_j), \\ D_E(a_i, a_j), & \text{otherwise}, \end{cases} \tag{7}$$

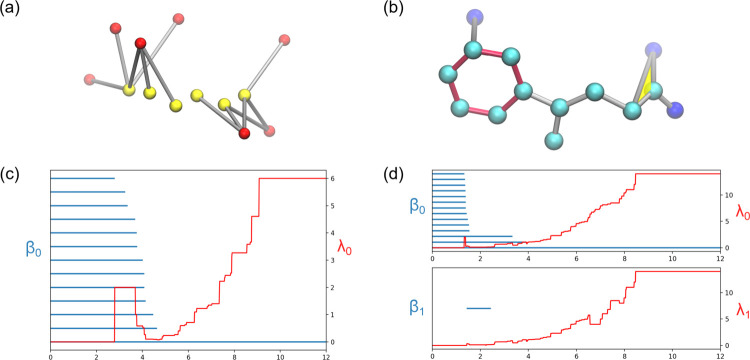

where $D_E(a_i, a_j)$ is the Euclidean distance between the two atoms and $\mathrm{Loc}(a)$ denotes the location of atom $a$, either the mutation site or the rest of the protein. We construct two types of simplicial complexes in our PL computation, namely the Vietoris-Rips complex (VC) and the Alpha complex (AC), which characterize first-order interactions and higher-order patterns, respectively. To capture different types of atom-atom interactions, we generate PLs from different atom subsets by selecting one atom type in the mutation site and one atom type in the rest of the protein. Different atom-atom pairs characterize different interactions in proteins. For example, interactions between carbon atoms are associated with hydrophobic interactions, whereas interactions between nitrogen and oxygen atoms correlate with hydrophilic interactions and/or hydrogen bonds. Both types of interactions are illustrated in Figure 6. Interactive PLs can therefore reveal additional details about bonding interactions and offer a distinct representation of molecular interactions in proteins.
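A direct transcription of Eq. (7) in NumPy is sketched below. It assumes atom coordinates in ångström and a boolean mask marking mutation-site atoms; setting the diagonal to 0 (each atom's distance to itself) is an implementation choice so that the matrix can serve as a dissimilarity matrix. The helper `atom_pair_subset`, which picks one element type in the mutation site and one in the rest of the protein, is likewise hypothetical.

```python
import numpy as np


def interactive_distance(coords, in_site):
    """Interactive distance D_I of Eq. (7).

    coords  : (n, 3) array of atom coordinates in angstrom
    in_site : (n,) boolean array, True for atoms in the mutation site
    Atoms in the same location (both in the site, or both outside it) get an
    infinite distance; atoms in different locations keep their Euclidean distance.
    """
    d_e = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    same_location = in_site[:, None] == in_site[None, :]
    d_i = np.where(same_location, np.inf, d_e)
    np.fill_diagonal(d_i, 0.0)  # implementation choice: self-distance stays 0
    return d_i


def atom_pair_subset(elements, in_site, site_element, rest_element):
    """Hypothetical helper: indices of site atoms of one element type plus
    non-site atoms of another element type (e.g. site "C" with rest "O")."""
    elements = np.asarray(elements)
    keep = (in_site & (elements == site_element)) | (~in_site & (elements == rest_element))
    return np.flatnonzero(keep)


# Toy example with hypothetical coordinates: two site atoms, two non-site atoms.
coords = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 4.0]])
in_site = np.array([True, True, False, False])
D = interactive_distance(coords, in_site)
```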

Figure 6:

An illustration of interactive PL showing hydrophilic interactions based on DI-based filtration (left) and hydrophobic interactions based on DE-based filtration (right) at a mutation site. (a) Hydrophilic interactions between the nitrogen atoms (red) and oxygen atoms (yellow). (b) Hydrophobic interactions between the carbon atoms (cyan) and oxygen atoms (dark blue). The hexagon ring is colored in red and the triangle is colored in yellow. (c) The PH barcodes and PL for dimension 0 of the hydrophilic interactions in (a). (d) The PH barcodes and PL for dimensions 0 and 1 of the hydrophobic interactions in (b). The Betti-1 bar is due to the red hexagon ring in (b).

Each persistent Laplacian computation yields two sets of persistent spectra, one from the Vietoris-Rips complex and one from the Alpha complex, each indexed by the protein (mutant or wild type), the atom type chosen in the rest of the protein, and the atom type chosen in the mutation site. The Vietoris-Rips set applies the $D_I$-based filtration to generate 0-dimensional Laplacians, and the Alpha complex set applies the Euclidean $D_E$-based filtration to generate 1- and 2-dimensional Laplacians. In total, there are 54 sets of persistent spectra. The persistent spectra from PL contain both harmonic and non-harmonic spectra, which can help reveal the molecular mechanism of protein solubility.

For dimension zero, we consider both the harmonic and non-harmonic spectra of each persistent Laplacian. The filtration uses the Vietoris-Rips complex with the $D_I$ distance, and the 0-dimensional PL features are generated from 0 Å to 6 Å with a 0.5 Å grid size. For the non-harmonic spectra, we count the number of non-harmonic eigenvalues and calculate seven statistics: the sum, minimum, maximum, mean, standard deviation, variance, and sum of squared eigenvalues. This gives eight values for each of the nine atomic pairs, i.e., 72 0-dimensional PL features for a protein at each filtration value. In total, concatenating these features over the 13 filtration values (0, 0.5, …, 6 Å) for the wild type and the mutant gives a 0-dimensional PL feature size of 1872.
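The 0-dimensional featurization can be sketched as follows, assuming a precomputed distance matrix (for example the $D_I$ matrix for one of the nine atom-type pairs) and a Vietoris-Rips filtration sampled at 0, 0.5, …, 6 Å. At each filtration value the function counts the non-harmonic eigenvalues of $L_0^t$ and appends the seven statistics listed above, giving 8 values per filtration value. Returning zeros when no non-harmonic eigenvalue exists, the tolerance `tol`, and the use of population (not sample) standard deviation and variance are assumptions of this sketch, not details confirmed by the text.

```python
import numpy as np

FILTRATION = np.arange(0.0, 6.0 + 1e-9, 0.5)  # 0, 0.5, ..., 6 angstrom (13 values)


def nonharmonic_stats(eigvals, tol=1e-8):
    """Count plus seven statistics of the non-harmonic (non-zero) eigenvalues."""
    nh = eigvals[eigvals > tol]
    if nh.size == 0:
        return np.zeros(8)  # assumption: empty non-harmonic spectrum -> zeros
    return np.array([nh.size, nh.sum(), nh.min(), nh.max(), nh.mean(),
                     nh.std(), nh.var(), np.sum(nh ** 2)])


def pl0_features(dist):
    """0-dimensional PL features for one atom-pair point cloud.

    dist: (n, n) distance matrix (e.g. D_I); returns 13 x 8 = 104 values.
    """
    off_diag = ~np.eye(len(dist), dtype=bool)
    feats = []
    for t in FILTRATION:
        adj = ((dist <= t) & off_diag).astype(float)
        lap = np.diag(adj.sum(axis=1)) - adj  # L_0^t of the Rips complex
        feats.append(nonharmonic_stats(np.linalg.eigvalsh(lap)))
    return np.concatenate(feats)
```

Stacking the outputs for the nine atom-type pairs would give 72 values per filtration value, in line with the count stated above.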

For dimensions one and two, we perform the filtration using the Alpha complex with the $D_E$ distance. The limited number of atoms in the local protein structure yields only a few high-dimensional simplices, so the shape changes little. As a result, it suffices to consider only the harmonic spectra of the persistent Laplacians, which encode the topological invariants of the high-dimensional interactions. Using GUDHI [51], the persistence of the harmonic spectra can be represented by persistent barcodes. The topological feature vectors are generated by computing statistics of the bar lengths, births, and deaths. Bars shorter than 0.1 Å are excluded because they do not exhibit any clear physical meaning. The remaining bars are used to compute (1) the sum, maximum, and mean of the bar lengths; (2) the minimum and maximum of the birth values; and (3) the minimum and maximum of the death values. Each point cloud therefore yields a seven-dimensional vector. These features are calculated on nine single atomic pairs and one heavy-atom pair, so the one- and two-dimensional PL feature vector of a protein has 7 × 10 × 2 = 140 entries. In total, concatenating the features for the wild type, the mutant, and their difference gives a higher-dimensional PL feature size of 420.
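The barcode statistics for dimensions one and two can be sketched as a small function over (birth, death) pairs. With GUDHI, such pairs could be obtained, for example, from `gudhi.AlphaComplex(points=...).create_simplex_tree()` followed by `persistence()` and `persistence_intervals_in_dimension(dim)` on the simplex tree; note that GUDHI's Alpha filtration values are squared radii, so converting them to a length scale (e.g., by taking square roots) is a choice this sketch leaves to the caller. Returning a zero vector when no bar survives the 0.1 Å cutoff, and dropping bars with infinite death, are assumptions.

```python
import math

import numpy as np


def barcode_stats(intervals, min_length=0.1):
    """Seven statistics of a persistence barcode for one homology dimension.

    intervals: iterable of (birth, death) pairs. Bars shorter than min_length
    (angstrom) and bars with infinite death are dropped.
    Returns [sum, max, mean of lengths, min, max of births, min, max of deaths].
    """
    bars = [(b, d) for b, d in intervals if math.isfinite(d) and d - b >= min_length]
    if not bars:
        return np.zeros(7)  # assumption: no surviving bars -> zero vector
    births = np.array([b for b, _ in bars])
    deaths = np.array([d for _, d in bars])
    lengths = deaths - births
    return np.array([lengths.sum(), lengths.max(), lengths.mean(),
                     births.min(), births.max(), deaths.min(), deaths.max()])
```

Applying this to the nine single atomic pairs and the heavy-atom pair, for dimensions one and two, yields the 140 values per protein described above.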

5.3. Persistent Homology

Persistent homology is captured by the harmonic spectra of PL: the homology groups in PH describe the persistence of topological invariants, which corresponds to the harmonic spectral information in PL. The site- and element-specific PH features are generated in a similar way to the PL features, again using a filtration. For dimension zero, the filtration parameter is discretized into equally spaced bins, namely [0, 0.5], (0.5, 1], ⋯, (5.5, 6] Å, and the death values of the bars are summed in each bin, giving one feature per bin.

For each bin, we also count the number of persistent bars, giving a second feature per bin. These features are computed for each of the nine single atomic pairs, so the dimension of the PH features for a protein is 216. For dimensions one and two, the same featurization from the statistics of persistent bars is used. The PH embedding combines the features at different dimensions as described above and concatenates them for the wild type, the mutant, and their difference, resulting in a 648-dimensional vector.
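For the dimension-0 PH features, the binning over [0, 0.5], (0.5, 1], …, (5.5, 6] Å can be written with `numpy.digitize` using right-closed bins. The sketch below returns the 12 per-bin sums of death values followed by the 12 per-bin bar counts for one point cloud; ignoring deaths beyond 6 Å, including the infinite bar, is an assumption rather than a detail stated in the text.

```python
import numpy as np

EDGES = np.arange(0.0, 6.0 + 1e-9, 0.5)  # bin edges 0, 0.5, ..., 6 -> 12 bins
N_BINS = len(EDGES) - 1


def ph0_bin_features(deaths):
    """Per-bin sums of death values and per-bin bar counts (dimension 0).

    Bins are [0, 0.5], (0.5, 1], ..., (5.5, 6]; deaths outside [0, 6],
    including the infinite bar, are ignored.
    """
    deaths = np.asarray(deaths, dtype=float)
    # right=True gives right-closed bins; index N_BINS means "beyond 6".
    idx = np.digitize(deaths, EDGES[1:], right=True)
    valid = (deaths >= 0) & (idx < N_BINS)
    sums = np.bincount(idx[valid], weights=deaths[valid], minlength=N_BINS)
    counts = np.bincount(idx[valid], minlength=N_BINS)
    return np.concatenate([sums, counts])
```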

5.4. Transformer Features

Recently, large-scale protein transformer models trained on hundreds of millions of sequences have substantially advanced the modeling of protein properties. These models, such as ESM [33] (evolutionary scale modeling) and ProtTrans [34, 35], have demonstrated impressive performance. Moreover, hybrid fine-tuning approaches that leverage both local and global evolutionary data can improve these models further. For instance, eUniRep is an improved version of the LSTM-based UniRep model, obtained by fine-tuning with information extracted from local multiple sequence alignments (MSAs). Similarly, the ESM model can be fine-tuned using either downstream task data or local MSAs. In this work, we employed the ESM-1b transformer, which was trained on 250 million sequences with a masked language modeling objective and has 34 layers and 650 million parameters. We used the ESM transformer to generate sequence embeddings. At each layer, the ESM model encodes a sequence of length $L$ into an $L \times 1280$ matrix, excluding the start and terminal tokens. We used the representation from the final (34th) layer and averaged it along the sequence-length axis, resulting in a 1,280-component vector.
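The pooling step can be sketched in NumPy, assuming the final-layer token representations have already been extracted (for instance with the fair-esm package) as an array whose first and last rows correspond to the start and terminal tokens. The function name `mean_pool_embedding` is illustrative; for ESM-1b the hidden size `d` is 1280.

```python
import numpy as np


def mean_pool_embedding(token_reprs, seq_len):
    """Average per-residue representations into one sequence vector.

    token_reprs: (seq_len + 2, d) array from the final layer, with the start
    token at row 0 and the terminal token at row seq_len + 1. Any padding
    rows after the terminal token are ignored as well.
    """
    residues = token_reprs[1:seq_len + 1]  # drop start and terminal tokens
    return residues.mean(axis=0)           # (d,) vector
```

Because slicing uses the true sequence length, the same function works for padded batch outputs, one sequence at a time.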

5.5. Performance Metrics

PPV and NPV assess, for each solubility class, the proportion of positive predictions that are true positives and the proportion of negative predictions that are true negatives, respectively. They are computed from TP, TN, FP, and FN, which denote the numbers of true positives, true negatives, false positives, and false negatives for each solubility class:

$$\mathrm{PPV} = \frac{TP}{TP + FP}, \tag{8}$$

$$\mathrm{NPV} = \frac{TN}{TN + FN}. \tag{9}$$

Furthermore, specificity and sensitivity are computed as

$$\mathrm{Specificity} = \frac{TN}{TN + FP}, \tag{10}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}. \tag{11}$$

The correct prediction ratio (CPR) and the generalized squared correlation (GC²) are used to evaluate the overall performance of TopLapGBT. CPR and GC² are computed as

$$\mathrm{CPR} = \frac{1}{N}\sum_{i=1}^{K} z_{ii}, \tag{12}$$

$$\mathrm{GC}^2 = \frac{\chi^2}{N(K-1)} = \frac{1}{N(K-1)}\sum_{i=1}^{K}\sum_{j=1}^{K}\frac{(z_{ij} - e_{ij})^2}{e_{ij}}, \tag{13}$$

where $K$ is the number of classes and $N$ is the number of samples. Here, $z_{ij}$ is the number of samples of class $i$ predicted as class $j$. Let $x_i = \sum_j z_{ij}$ be the number of inputs from class $i$ and $y_j = \sum_i z_{ij}$ be the number of inputs predicted as class $j$. Then the expected number of samples in the $(i, j)$-th entry of the multiclass confusion matrix is

$$e_{ij} = \frac{x_i y_j}{N}.$$

Since the mutational samples are imbalanced across the three solubility classes, we normalized the values to obtain more reliable performance metrics.
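The metrics in Eqs. (8) to (13) can all be computed from a multiclass confusion matrix. The sketch below assumes rows are true classes and columns are predicted classes, and uses a one-vs-rest reduction for the per-class quantities. It computes the unnormalized values; the class-balancing normalization mentioned above is not reproduced because its exact form is not specified in this section. Classes with an empty row or column make $e_{ij}$ zero and would need special handling.

```python
import numpy as np


def per_class_metrics(conf, c):
    """PPV, NPV, sensitivity and specificity for class c (one-vs-rest).

    conf[i, j] = number of samples of true class i predicted as class j.
    """
    tp = conf[c, c]
    fp = conf[:, c].sum() - tp
    fn = conf[c, :].sum() - tp
    tn = conf.sum() - tp - fp - fn
    return {"PPV": tp / (tp + fp), "NPV": tn / (tn + fn),
            "sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)}


def cpr(conf):
    """Correct prediction ratio, Eq. (12)."""
    return np.trace(conf) / conf.sum()


def gc2(conf):
    """Generalized squared correlation, Eq. (13): chi^2 / (N (K - 1))."""
    conf = np.asarray(conf, dtype=float)
    n, k = conf.sum(), conf.shape[0]
    expected = np.outer(conf.sum(axis=1), conf.sum(axis=0)) / n  # e_ij = x_i y_j / N
    chi2 = np.sum((conf - expected) ** 2 / expected)
    return chi2 / (n * (k - 1))
```

A perfect, balanced three-class confusion matrix gives CPR = GC² = 1, while a matrix equal to its expected counts gives GC² = 0.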

6. Software and resources

Wild type protein structures are first generated from the protein sequences with AlphaFold2; in particular, the 3D structures are predicted using ColabFold [52]. Mutant structures are generated with the Jackal software [38]. All TopLapGBT models are built using the scikit-learn machine learning library [53]. The hyperparameters for all TopLapGBT models are: n_estimators = 20000, learning_rate = 0.05, max_depth = 7, subsample = 0.4, min_samples_split = 3, and max_features = sqrt. The PQR files, which contain the partial charge information of the proteins, are generated with the PDB2PQR software [54]. The PQR files for both the wild type and mutant proteins are generated with the AMBER force field. The solvation energy and surface area information are calculated with the in-house online software packages ESES [55] and MIBPB [56]. The pKa values are computed with the PROPKA software package [57]. The position-specific scoring matrices (PSSMs) are computed with the BLAST+ software [58] using the nr database. The secondary structure and torsion angle sequence-based features are calculated with SPIDER [59]. The persistent Laplacian descriptors for both VR complexes and alpha complexes are calculated using the GUDHI software library [60]. All computational work in support of this research was performed using resources of the National Supercomputing Centre (NSCC) Singapore.
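For reference, the hyperparameters listed above map directly onto scikit-learn's `GradientBoostingClassifier`. The snippet below is a configuration sketch only: the TopLapGBT feature vectors are not reproduced, so the demo fits a copy with far fewer trees on random, hypothetical data with three class labels standing in for the solubility classes. The variable names are illustrative.

```python
import numpy as np
from sklearn.base import clone
from sklearn.ensemble import GradientBoostingClassifier

# Hyperparameters as listed in the text.
toplap_gbt = GradientBoostingClassifier(
    n_estimators=20000,
    learning_rate=0.05,
    max_depth=7,
    subsample=0.4,
    min_samples_split=3,
    max_features="sqrt",
)

# Demo on hypothetical random data; 50 trees instead of 20000 to keep it fast.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 10))
y = np.repeat([0, 1, 2], 20)  # three stand-in solubility classes
demo = clone(toplap_gbt).set_params(n_estimators=50)
demo.fit(X, y)
print(demo.predict(X[:5]))
```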

Acknowledgments

This work was supported in part by NIH grants R01GM126189, R01AI164266, and R01AI146210, NSF grants DMS-2052983, DMS-1761320, and IIS-1900473, NASA grant 80NSSC21M0023, MSU Foundation, Bristol-Myers Squibb 65109, and Pfizer. It was supported in part by Nanyang Technological University Startup Grant M4081842.110, Singapore Ministry of Education Academic Research fund Tier 1 RG109/19 and Tier 2 MOE-T2EP20120-0013, MOE-T2EP20220-0010, and MOE-T2EP20221-0003.

Footnotes

Supporting Information

Supporting Information is available for supplementary tables, figures, and methods.

Code and Data Availability

The 3D protein structures and the TopLapGBT code are available at https://github.com/ExpectozJJ/TopLapGBT. The source code for the R-S plot is available at https://github.com/hozumiyu/RSI.

References

- [1]. Qiu Y. and Wei G.-W., “Persistent spectral theory-guided protein engineering,” Nature Computational Science, vol. 3, no. 2, pp. 149–163, 2023.

- [2]. Guerois R., Nielsen J. E., and Serrano L., “Predicting changes in the stability of proteins and protein complexes: a study of more than 1000 mutations,” Journal of molecular biology, vol. 320, no. 2, pp. 369–387, 2002.

- [3]. Sormanni P., Aprile F. A., and Vendruscolo M., “The CamSol method of rational design of protein mutants with enhanced solubility,” Journal of molecular biology, vol. 427, no. 2, pp. 478–490, 2015.

- [4]. Tian Y., Deutsch C., and Krishnamoorthy B., “Scoring function to predict solubility mutagenesis,” Algorithms for Molecular Biology, vol. 5, no. 1, pp. 1–11, 2010.

- [5]. Yang Y., Niroula A., Shen B., and Vihinen M., “PON-Sol: Prediction of effects of amino acid substitutions on protein solubility,” Bioinformatics, vol. 32, no. 13, pp. 2032–2034, 2016.

- [6]. Paladin L., Piovesan D., and Tosatto S. C., “SODA: Prediction of protein solubility from disorder and aggregation propensity,” Nucleic acids research, vol. 45, no. W1, pp. W236–W240, 2017.

- [7]. Van Durme J., De Baets G., Van Der Kant R., Ramakers M., Ganesan A., Wilkinson H., Gallardo R., Rousseau F., and Schymkowitz J., “Solubis: a webserver to reduce protein aggregation through mutation,” Protein Engineering, Design and Selection, vol. 29, no. 8, pp. 285–289, 2016.

- [8]. Vihinen M., “Solubility of proteins,” ADMET and DMPK, vol. 8, no. 4, pp. 391–399, 2020.

- [9]. Fernandez-Escamilla A.-M., Rousseau F., Schymkowitz J., and Serrano L., “Prediction of sequence-dependent and mutational effects on the aggregation of peptides and proteins,” Nature Biotechnology, vol. 22, no. 10, pp. 1302–1306, 2004.

- [10]. Land H. and Humble M. S., “YASARA: A tool to obtain structural guidance in biocatalytic investigations,” Protein engineering: methods and protocols, pp. 43–67, 2018.

- [11]. Yang Y., Zeng L., and Vihinen M., “PON-Sol2: Prediction of effects of variants on protein solubility,” International Journal of Molecular Sciences, vol. 22, no. 15, p. 8027, 2021.

- [12]. Edelsbrunner H. and Harer J. L., Computational Topology: An Introduction. American Mathematical Society, 2022.

- [13]. Zomorodian A. and Carlsson G., “Computing Persistent Homology,” in Proceedings of the twentieth annual symposium on Computational geometry, pp. 347–356, 2004.

- [14]. Xia K. and Wei G.-W., “Persistent homology analysis of protein structure, flexibility, and folding,” International journal for numerical methods in biomedical engineering, vol. 30, no. 8, pp. 814–844, 2014.

- [15]. Cang Z., Mu L., Wu K., Opron K., Xia K., and Wei G.-W., “A topological approach for protein classification,” Computational and Mathematical Biophysics, vol. 3, no. 1, 2015.

- [16]. Cang Z. and Wei G.-W., “Topologynet: Topology based deep convolutional and multitask neural networks for biomolecular property predictions,” PLoS computational biology, vol. 13, no. 7, p. e1005690, 2017.

- [17]. Cang Z. X. and Wei G. W., “Integration of element specific persistent homology and machine learning for protein-ligand binding affinity prediction,” International journal for numerical methods in biomedical engineering, vol. 34, no. 2, p. e2914, 2018.

- [18]. Wang M., Cang Z., and Wei G.-W., “A topology-based network tree for the prediction of protein-protein binding affinity changes following mutation,” Nature Machine Intelligence, vol. 2, no. 2, pp. 116–123, 2020.

- [19]. Chen J., Wang R., Wang M., and Wei G.-W., “Mutations strengthened SARS-CoV-2 infectivity,” Journal of molecular biology, vol. 432, no. 19, pp. 5212–5226, 2020.

- [20]. Nguyen D. D., Cang Z., Wu K., Wang M., Cao Y., and Wei G.-W., “Mathematical deep learning for pose and binding affinity prediction and ranking in D3R grand challenges,” Journal of computer-aided molecular design, vol. 33, pp. 71–82, 2019.

- [21]. Nguyen D. D., Gao K., Wang M., and Wei G.-W., “MathDL: mathematical deep learning for d3r grand challenge 4,” Journal of computer-aided molecular design, vol. 34, pp. 131–147, 2020.

- [22]. Nguyen D. D., Cang Z., and Wei G.-W., “A review of mathematical representations of biomolecular data,” Physical Chemistry Chemical Physics, vol. 22, no. 8, pp. 4343–4367, 2020.

- [23]. Wang R., Nguyen D. D., and Wei G.-W., “Persistent spectral graph,” arXiv preprint arXiv:1912.04135, 2019.

- [24]. Chen J., Zhao R., Tong Y., and Wei G.-W., “Evolutionary de Rham-Hodge method,” arXiv preprint arXiv:1912.12388, 2019.

- [25]. Wang R., Nguyen D. D., and Wei G.-W., “Persistent spectral graph,” International Journal for Numerical Methods in Biomedical Engineering, p. e3376, 2020.

- [26]. Meng Z. and Xia K., “Persistent spectral-based machine learning (PerSpect ML) for protein-ligand binding affinity prediction,” Science Advances, vol. 7, no. 19, p. eabc5329, 2021.

- [27]. Wee J. and Xia K., “Persistent spectral based ensemble learning (PerSpect-EL) for protein-protein binding affinity prediction,” Briefings in Bioinformatics, p. bbac024, 2022.

- [28]. Bi J., Wee J., Liu X., Qu C., Wang G., and Xia K., “Multiscale Topological Indices for the Quantitative Prediction of SARS CoV-2 Binding Affinity Change upon Mutations,” Journal of Chemical Information and Modeling, vol. 63, no. 13, pp. 4216–4227, 2023.

- [29]. Chen J., Qiu Y., Wang R., and Wei G.-W., “Persistent Laplacian projected Omicron BA.4 and BA.5 to become new dominating variants,” Computers in Biology and Medicine, vol. 151, p. 106262, 2022.

- [30]. Rao R., Bhattacharya N., Thomas N., Duan Y., Chen P., Canny J., Abbeel P., and Song Y., “Evaluating protein transfer learning with TAPE,” Advances in neural information processing systems, vol. 32, 2019.

- [31]. Bepler T. and Berger B., “Learning protein sequence embeddings using information from structure,” in International Conference on Learning Representations, 2018.

- [32]. Alley E. C., Khimulya G., Biswas S., AlQuraishi M., and Church G. M., “Unified rational protein engineering with sequence-based deep representation learning,” Nature methods, vol. 16, no. 12, pp. 1315–1322, 2019.

- [33]. Rives A., Meier J., Sercu T., Goyal S., Lin Z., Liu J., Guo D., Ott M., Zitnick C. L., Ma J., et al., “Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences,” Proceedings of the National Academy of Sciences, vol. 118, no. 15, p. e2016239118, 2021.

- [34]. Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A. N., Kaiser Ł., and Polosukhin I., “Attention is all you need,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [35]. Devlin J., Chang M.-W., Lee K., and Toutanova K., “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” in North American Chapter of the Association for Computational Linguistics, 2019.

- [36]. Meier J., Rao R., Verkuil R., Liu J., Sercu T., and Rives A., “Language models enable zero-shot prediction of the effects of mutations on protein function,” in Advances in Neural Information Processing Systems (Ranzato M., Beygelzimer A., Dauphin Y., Liang P., and Vaughan J. W., eds.), vol. 34, pp. 29287–29303, Curran Associates, Inc., 2021.

- [37]. Jumper J., Evans R., Pritzel A., Green T., Figurnov M., Ronneberger O., Tunyasuvunakool K., Bates R., Žídek A., Potapenko A., et al., “Highly accurate protein structure prediction with AlphaFold,” Nature, vol. 596, no. 7873, pp. 583–589, 2021.

- [38]. Xiang J. Z. and Honig B., “Jackal: A protein structure modeling package,” Columbia University and Howard Hughes Medical Institute, New York, 2002.

- [39]. Rao R. M., Liu J., Verkuil R., Meier J., Canny J., Abbeel P., Sercu T., and Rives A., “MSA Transformer,” in International Conference on Machine Learning, pp. 8844–8856, PMLR, 2021.

- [40]. Baldi P., Brunak S., Chauvin Y., Andersen C. A., and Nielsen H., “Assessing the accuracy of prediction algorithms for classification: An Overview,” Bioinformatics, vol. 16, no. 5, pp. 412–424, 2000.

- [41]. Levy E. D., “A simple definition of structural regions in proteins and its use in analyzing interface evolution,” Journal of molecular biology, vol. 403, no. 4, pp. 660–670, 2010.

- [42]. Goodsell D. S., Autin L., and Olson A. J., “Illustrate: Software for biomolecular illustration,” Structure, vol. 27, no. 11, pp. 1716–1720, 2019.

- [43]. Hozumi Y., Tanemura K. A., and Wei G.-W., “Preprocessing of Single Cell RNA Sequencing Data Using Correlated Clustering and Projection,” Journal of Chemical Information and Modeling, 2023.

- [44]. Munkres J. R., Elements of algebraic topology. CRC Press, 2018.

- [45]. Zomorodian A. J., Topology for computing, vol. 16. Cambridge university press, 2005.

- [46]. Edelsbrunner H. and Harer J., Computational topology: an introduction. American Mathematical Soc., 2010.

- [47]. Mischaikow K. and Nanda V., “Morse theory for filtrations and efficient computation of persistent homology,” Discrete and Computational Geometry, vol. 50, no. 2, pp. 330–353, 2013.

- [48]. Horak D. and Jost J., “Spectra of combinatorial Laplace operators on simplicial complexes,” Advances in Mathematics, vol. 244, pp. 303–336, 2013.

- [49]. Eckmann B., “Harmonische funktionen und randwertaufgaben in einem komplex,” Commentarii Mathematici Helvetici, vol. 17, no. 1, pp. 240–255, 1944.

- [50]. Zomorodian A., “Topological data analysis,” Advances in applied and computational topology, vol. 70, pp. 1–39, 2012.

- [51]. Project T. G., GUDHI User and Reference Manual. GUDHI Editorial Board, 2015.

- [52]. Mirdita M., Schütze K., Moriwaki Y., Heo L., Ovchinnikov S., and Steinegger M., “ColabFold: Making protein folding accessible to all,” Nature methods, vol. 19, no. 6, pp. 679–682, 2022.

- [53]. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., Vanderplas J., Passos A., Cournapeau D., Brucher M., Perrot M., and Duchesnay E., “Scikit-learn: Machine Learning in Python,” Journal of Machine Learning Research, vol. 12, pp. 2825–2830, 2011.

- [54]. Dolinsky T. J., Nielsen J. E., McCammon J. A., and Baker N. A., “PDB2PQR: An automated pipeline for the setup of Poisson-Boltzmann electrostatics calculations,” Nucleic acids research, vol. 32, no. suppl_2, pp. W665–W667, 2004.

- [55]. Liu B., Wang B., Zhao R., Tong Y., and Wei G.-W., “ESES: Software for eulerian solvent excluded surface,” 2017.

- [56]. Chen D., Chen Z., Chen C., Geng W., and Wei G.-W., “MIBPB: A software package for electrostatic analysis,” Journal of computational chemistry, vol. 32, no. 4, pp. 756–770, 2011.

- [57]. Li H., Robertson A. D., and Jensen J. H., “Very fast empirical prediction and rationalization of protein pKa values,” Proteins: Structure, Function, and Bioinformatics, vol. 61, no. 4, pp. 704–721, 2005.

- [58]. Johnson M., Zaretskaya I., Raytselis Y., Merezhuk Y., McGinnis S., and Madden T. L., “NCBI BLAST: A better web interface,” Nucleic acids research, vol. 36, no. suppl_2, pp. W5–W9, 2008.

- [59]. Heffernan R., Paliwal K., Lyons J., Dehzangi A., Sharma A., Wang J., Sattar A., Yang Y., and Zhou Y., “Improving prediction of secondary structure, local backbone angles and solvent accessible surface area of proteins by iterative deep learning,” Scientific reports, vol. 5, no. 1, p. 11476, 2015.

- [60]. Maria C., Boissonnat J.-D., Glisse M., and Yvinec M., “The GUDHI library: Simplicial complexes and persistent homology,” in Mathematical Software-ICMS 2014: 4th International Congress, Seoul, South Korea, August 5–9, 2014. Proceedings 4, pp. 167–174, Springer, 2014.
