Abstract

Artificial intelligence (AI) has transitioned from the lab to the bedside, and it is increasingly being used in healthcare. Radiology and radiography are on the frontline of AI implementation, because of the use of big data in medical imaging and diagnosis across different patient groups. Safe and effective AI implementation requires that all key stakeholders uphold responsible and ethical practices, that different professional groups collaborate harmoniously, and that customised education is provided for all involved. This paper outlines key principles of ethical and responsible AI, highlights recent educational initiatives for clinical practitioners and discusses the synergies between all medical imaging professionals as they prepare for the digital future in Europe. Responsible and ethical AI is vital to enhance a culture of safety and trust for healthcare professionals and patients alike. Educational and training provisions on AI for medical imaging professionals are central to understanding basic AI principles and applications, and there are many offerings currently in Europe. Education can facilitate the transparency of AI tools, but more formalised, university-led training is needed to ensure academic scrutiny, appropriate pedagogy, multidisciplinarity and customisation to learners’ unique needs. As radiographers and radiologists work together, and with other professionals, to understand and harness the benefits of AI in medical imaging, it becomes clear that they face the same challenges and have the same needs. The digital future belongs to multidisciplinary teams that work seamlessly together, learn together, manage risk collectively and collaborate for the benefit of the patients they serve.

Introduction

Rationale

Artificial intelligence (AI) implementation will bring AI from the lab to the clinic, where it can start benefiting patients and easing workflows. To achieve this, different initiatives need to be put in place, such as (a) responsible and ethical AI use, (b) relevant and customised training and education, and (c) multidisciplinary collaboration within the AI ecosystem. There is increasing work on the importance of involving patients in the design and implementation of AI tools, but this is beyond the scope of this paper and we hope to develop it in a separate publication.

Background

With the increasing rate of design and implementation of AI-enabled applications in healthcare, it is vital that the new technology is used responsibly and ethically 1 to maximise benefits and mitigate risks. Radiology and radiography have been at the forefront of this technological revolution, far more than other disciplines within healthcare. 2–5 To be adopted safely and successfully, however, AI needs to be ethical and responsible and to follow robust governance frameworks, and key stakeholders need adequate education and training; each of these is discussed below.

Ethical AI

The concepts of responsible AI and AI ethics are deeply intertwined; AI cannot be considered responsible unless it conforms to certain AI ethical principles, underpinned by specific standards and regulations. Ethical concerns for AI in healthcare are complex, and often encompass the basic principles of: (i) beneficence and non-maleficence in relation to technical safety and clinical efficiency, (ii) data privacy and management, and informed consent for data use, (iii) fairness/equity in design and output, (iv) accountability in decision-making, and (v) transparency and explainability. 3,6

Responsible AI

Responsible AI encompasses ethics but goes beyond its basic principles, extending to instilling integrity and trustworthiness into the conceptualisation, design, use, evaluation and monitoring of AI tools. These principles may relate to traceability, trustworthiness, establishing a robust ground truth for an AI tool, and including a representative sample during the training and testing of algorithms. 7–9 These principles must underpin every stage of the AI lifecycle, from inception to implementation, and be followed by all key stakeholders involved. The impact of AI within the medical imaging ecosystem is part of the ‘responsibility’ of AI designers, users and consumers, as the downstream effects of AI may further influence new research or innovations in the area. Responsible and ethical AI are usually covered by the umbrella term “AI governance”.

Generic principles of responsible AI and AI ethics

AI governance models, not specific to medical imaging, have been suggested to ensure AI is both responsible and ethical; governance is not a simple procedure and requires constant monitoring. 8,10 It is essential to consider these principles at an individual level, but they must also be considered for wider populations and at a global socioeconomic level. 11 Once the potential risks of AI are established, they can be mitigated, and the undoubted benefits of AI can be maximised. 6

Unifying AI governance in medical imaging

Developing ethical and responsible AI is essential to establish and maintain clinician, patient and public trust and, therefore, to facilitate implementation in clinical practice. 5,6,12 Establishing robust governance for all stages of the AI lifecycle needs to involve all key stakeholders, from software developers and government bodies to clinical practitioners and patient interest groups, similar to what the Dutch AI coalition has achieved in the Netherlands. 8 While most governance frameworks remain fragmented and context-specific, recent work in medical imaging and radiotherapy in the UK has proposed a unified governance framework, which underlines the importance of ethics, co-production with patients, and AI training for all healthcare practitioners to facilitate implementation. 13 Similar work has been conducted elsewhere in Europe, such as the Innovation Funnel for Valuable AI in Healthcare by the Dutch Ministry of Health, Welfare and Sport, 14 a tool that identifies the legal and regulatory scope of action for each of the eight steps in the innovation funnel.

AI ethics guidelines for healthcare in Europe

In Europe, there are a variety of ethical guidelines and frameworks for the use of AI in healthcare, 15,16 including data regulations such as the General Data Protection Regulation (GDPR). 17 The European Commission has published a set of non-binding ethical guidelines for trustworthy AI that are not specific to healthcare. 18 Other institutions have also produced AI ethical guidelines, 19–24 and academics have used these to compile local frameworks. 25–27 A one-size-fits-all approach to AI ethics in healthcare is proving too challenging, so there is, inevitably, a lot of fragmentation. 28

The National Health Service (NHS) in the UK has established the ‘NHS AI Lab’, an organisation that aims to accelerate AI implementation. 29 Among other important functions, the NHS AI Lab includes an AI ethics initiative, which supports research that translates AI principles into practice and helps build the evidence base required to mitigate risks and provide ethical assurance. 30 This research could form the groundwork for robust European or global guidelines for AI ethics in healthcare. Furthermore, the British Standards Institution has drafted new AI standards specific to healthcare (“BS 30440 Validation framework for the use of AI within healthcare – Specification”), due to be published in spring 2023 and “applicable to products, models, systems or technologies whose function uses elements of AI, including machine learning (ML), and whose key function is to enable or provide treatment or diagnoses, or enable the management of health conditions, for the purposes of healthcare”. 31

AI ethics guidelines for medical imaging in Europe

Data used in medical imaging present the additional challenge of great variability, 32 and much medical imaging data, usually stored in the universally accepted DICOM format, are unstructured. 33 These additional challenges reinforce the need for more rigorous guidance in medical imaging than in other specialties. Initially there were no standardised ethical guidelines for AI in medical imaging in Europe, and individual countries, e.g. Italy and France, produced country-specific AI ethical recommendations. 34,35 This changed in 2019 with the joint multisociety statement on AI ethics in medical imaging, which included the European Society of Radiology (ESR) and the European Society of Medical Imaging Informatics (EuSoMII) as European partners. 3 This joint statement offers unified ethical guidance specific to the context of radiology. Its key points are the need for the radiology community to recognise and mitigate the risks arising from AI and the need to acquire new skills to prepare for the digital future. 3 While ethical considerations are required for patient and practitioner safety, caution is needed so that they do not become hurdles to the development, creativity and speed of implementation of AI technologies. These views are echoed in the joint statement by the European Federation of Radiographer Societies (EFRS) and the International Society of Radiographers and Radiological Technologists (ISRRT), as well as in the Society and College of Radiographers (SCoR) guidance, both of which highlight the need for radiographers to ensure that all AI applications are implemented ethically. 36,37

The AI ecosystem in medical imaging

AI has the potential to change practice in medical imaging in different ways for different professionals. Changes in appointment scheduling, patient positioning, data acquisition, post-processing and segmentation, or image analysis and interpretation may have a tremendous impact on patient experiences and outcomes. 2,37,38 AI is set to take its place within the medical imaging ecosystem, where new roles, responsibilities and professional identities will develop and existing ones will go through a transitional phase. 39 It is important for all medical imaging professionals (e.g. radiologists, radiographers, nuclear medicine practitioners, medical physicists) and other, increasingly needed scientists (e.g. data scientists/informaticians, biomedical engineers) to work together as a team. 3,36,40 This unity must be manifested both in theoretical principles and in the practice and implementation of digital transformation.

The importance of AI education

For a robust medical imaging ecosystem, both radiologists and radiographers need to be adequately educated in AI, 41 including AI ethics. For UK radiographers, AI is now included as a core competency for fitness to practise. 42 Proportionate education might also be offered to patients, to make AI accessible, better understood and trusted.

Aim and objectives

The following work aims to discuss different AI implementation priorities, 12 specifically to: a) examine the different ethical frameworks for AI relevant to radiologists and radiographers in Europe, and b) highlight the AI training provisions accessible to them. The synergies, challenges and ways forward for seamless collaboration between radiographers and radiologists will also be discussed.

Methods

A rapid review 43 was undertaken; its design followed an abbreviated, simplified version of review protocols, often purposely omitting traditional methodological steps of a scoping or systematic review, to ensure timely data gathering from relevant literature. 44 Rapid reviews can summarise extensive knowledge on fast-developing topics in a timely manner and are therefore suitable for AI-related work. 45 Papers were initially screened at title and abstract level and then included if they fulfilled the following eligibility criteria: a) related to AI (or synonyms, such as ML and deep learning) in radiology and radiography, b) pertaining to ethics, governance, and education/training, and c) limited to Europe.

The search terms were pre-determined, as per Supplementary Table 1.

Supplementary Table 1.

A search for peer-reviewed literature was conducted in six databases (PubMed, MEDLINE, EBSCOhost, CINAHL, Taylor and Francis, and ERIC). Google Scholar was also used to ensure that relevant grey literature was included. Only literature written in English and published between 2012 and January 2023 was included. Literature on AI applications, ethics or education outside Europe, and literature that did not specifically refer to radiography or radiology, was excluded.

To understand the perspectives of the key European radiology/radiography learned societies, additional searches of their websites and related conferences were conducted. The professional societies included were the EuSoMII, the ESR, the SCoR, the EFRS and the British Institute of Radiology (BIR).

After gathering all the eligible literature, we applied content analysis, a systematic, replicable, yet agile approach to understanding, analysing and interpreting visual or verbal data. 46 The condensation of text or phenomena progresses from defined generic concepts to common categories and, finally, to overarching themes, in accordance with the texts’ characteristics. 47 Content analysis is a focused process for categorising a large amount of text and ideas into a manageable number of groups. 48 The interconnections between categories can generate new concepts, 49 highlight different stakeholders’ perceptions and provide an overview suitable for a rapid review. 50

Results

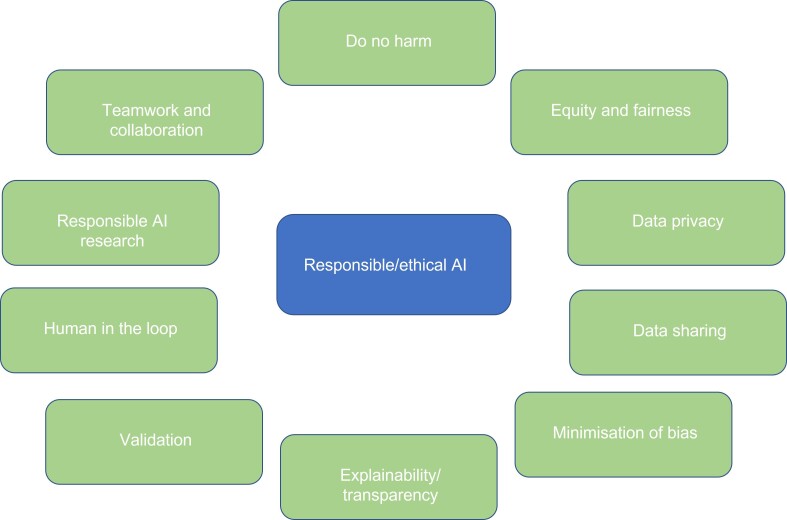

In total, 19 papers relating to AI ethics and medical imaging were identified; more details of their key concepts can be found in Supplementary Table 2. This table summarises the currently available evidence on ethical and responsible AI for radiology and radiography in Europe. The principles identified (also presented in Figure 1), which are discussed further in the Discussion, are: do no harm, equity and fairness, data privacy, data sharing, minimisation of bias, explainability, external validation, human-in-the-loop approach, responsible AI research and AI teamwork.

Figure 1.

Responsible AI principles, based on the rapid review

Supplementary Table 2.

A rapid exploration of the available literature on AI education delivered in Europe, and of the websites of European universities and related AI industry, reflects a promising educational landscape for all relevant health professionals; bespoke information for patients is also available, albeit limited.

Supplementary Table 3 presents the 24 different educational initiatives delivered in Europe for different healthcare professionals in medical imaging, summarising their delivery, cost and target audience. Many of these opportunities can also be accessed by other professionals and organisations with a vested interest in AI. Finally, Supplementary Table 4 lists the related websites for accessing these courses, for readers interested in learning more. These numbers are likely to change soon given developments in the field of AI, but can be considered current up to June 2023.

Supplementary Table 3.

Supplementary Table 4.

Discussion

The discussion below covers the latest updates on AI governance and education, the necessary synergies between radiographers and radiologists, and the challenges and ways forward for AI implementation in medical imaging.

AI governance

Our rapid review has identified that ethical and responsible AI is important for both radiologists and radiographers as clinical practitioners. Radiographer-led papers appeared somewhat later than radiologist-led ones; this might reflect the earlier implementation of AI-enabled tools for image interpretation and diagnosis, which were available to radiologists, but also the smaller critical mass of radiographer researchers. 51 It might also reflect their differing roles and liability in case of erroneous use and interpretation of AI technology. It is encouraging, though, that the same principles and concerns govern the clinical practice of both professional groups. The principles outlined below apply to the clinical implementation of AI tools used by both radiologists and radiographers.

Do no harm

Patient harm could result from the use of AI models that have not been optimally trained and validated for the clinical setting at hand, owing either to differences between the training population and the clinical population or to differences in intended use. Practitioners might be liable for implementing such models in clinical practice, in whatever context their role prescribes (whether in radiography or radiology). 3,52,53 Responsible AI will help enhance patient care and both societal and practitioner acceptance by promoting a culture of safety and trust. 5

Equity and fairness

Important differences in the use of AI have been found between high- and low-resource environments. 54 AI developers and adopters must ensure that the benefits and costs associated with the clinical use of AI are distributed equally across all populations, thus upholding the principle of fairness and justice. 55

Data privacy

Any data used for developing, training and validating AI models need to be appropriately encrypted, secured and properly de-identified. 3,5,6,52,54 Any sensitive information linked to data owners should be removed before the data are shared and used, and appropriate processes should be established for data de-identification. Because de-identified data can potentially be re-identified, organisations must take specific steps to minimise this risk. 56 The European GDPR should also be fully respected, to ensure legal and ethical use and sharing of data. 5,6 In addition, organisations might take advantage of blockchain technology to improve cybersecurity. 56,57 Differential privacy and homomorphic encryption are two further effective strategies for enhancing security while still promoting innovation. 58
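
To make the idea of differential privacy more concrete, the minimal Python sketch below shows its simplest building block: releasing a count from a hypothetical imaging data set (for example, how many studies carry a given finding) with Laplace noise calibrated to a privacy budget epsilon. The function name, record format and epsilon values are illustrative assumptions rather than part of any framework cited above, and a production system would rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random
from typing import Callable, Iterable, Optional


def dp_count(records: Iterable[dict],
             predicate: Callable[[dict], bool],
             epsilon: float,
             rng: Optional[random.Random] = None) -> float:
    """Hypothetical helper: an epsilon-differentially private count.

    A counting query has sensitivity 1 (adding or removing one patient
    changes the true count by at most 1), so adding Laplace noise with
    scale 1/epsilon satisfies epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponential draws with rate 1/scale
    # follows a Laplace(0, scale) distribution.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Illustrative, made-up records: 30 studies with a nodule, 70 without.
studies = [{"finding": "nodule"}] * 30 + [{"finding": "normal"}] * 70
print(dp_count(studies, lambda s: s["finding"] == "nodule", epsilon=0.5))
```

A smaller epsilon adds more noise and gives stronger privacy, so choosing it is as much a governance decision as a technical one.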

Data sharing

Centralising shared data on a single platform could provide a single point of control for data sharing policies and accountability. 56 Rigorous data storage and data sharing policies will also help enhance patients’ privacy. To improve data security, federated ML techniques can be used, in which AI algorithms are sent to the sites where the data are acquired and trained locally, instead of the data being sent to external companies. 58–60
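
The federated approach can be illustrated with federated averaging (FedAvg), one widely used aggregation scheme, named here as an example rather than as the method of any particular product. In the minimal Python sketch below, two hypothetical sites each fit a toy linear model on their own data and share only the fitted parameters; a coordinating server averages those parameters, weighted by each site's sample size. The model, learning rate, number of rounds and synthetic data are all assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Params = Tuple[float, float]  # (slope, intercept) of a toy linear model


def local_update(params: Params, data: Sequence[Tuple[float, float]],
                 lr: float = 0.1, epochs: int = 20) -> Params:
    """Gradient descent on one site's (x, y) pairs; raw data stay on site."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_average(updates: List[Params], sizes: List[int]) -> Params:
    """Server step: average site parameters weighted by local sample size."""
    total = sum(sizes)
    w = sum(p[0] * n for p, n in zip(updates, sizes)) / total
    b = sum(p[1] * n for p, n in zip(updates, sizes)) / total
    return w, b


def federated_training(sites: List[Sequence[Tuple[float, float]]],
                       rounds: int = 50) -> Params:
    params: Params = (0.0, 0.0)
    for _ in range(rounds):
        # Each site trains locally, starting from the current global model...
        updates = [local_update(params, data) for data in sites]
        # ...and only the parameters are returned for aggregation.
        params = federated_average(updates, [len(d) for d in sites])
    return params


# Synthetic data from y = 2x + 1, split across two hypothetical hospitals.
site_a = [(i / 10, 2 * i / 10 + 1) for i in range(0, 6)]
site_b = [(i / 10, 2 * i / 10 + 1) for i in range(5, 11)]
print(federated_training([site_a, site_b]))  # close to (2.0, 1.0)
```

Real deployments add safeguards such as secure aggregation and still need governance of the shared parameters, which can themselves leak information about the training data.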

Informed consent is also a fundamental process linked to data sharing. Data owners (i.e. patients) have the right to decide on the use of their data, and all organisations should seek informed consent from patients before using their data for AI model development, training or validation. 3,6,54,61 This will also help to preserve patients’ autonomy, a fundamental principle of ethics. 18,52,62 Finally, the same processes should be applied whenever patient data are required for AI research purposes, and this should also be stated when reporting studies. 61

Minimisation of algorithmic bias

Algorithmic biases can occur when an AI model is developed using a non-representative data set. 3,52,53 These biases may relate to societal or financial factors, gender, sexual orientation, ethnicity, environment, etc. 53 They may further result in discrimination against certain underrepresented populations and in health inequalities. 54 When developing and training AI algorithms, it is therefore essential to use large, diverse data sets, ideally representing all populations, to minimise data bias. 54,56
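
One practical first check for this kind of bias is to compare the make-up of a training set against the population the tool is intended to serve. The Python sketch below does this for a single, hypothetical demographic attribute; the group labels, reference proportions and the 0.8 flagging threshold are illustrative assumptions. Adequate representation alone does not guarantee fair performance, which also needs to be measured separately for each subgroup.

```python
from collections import Counter
from typing import Dict, Iterable


def underrepresented_groups(training_groups: Iterable[str],
                            population_share: Dict[str, float],
                            min_ratio: float = 0.8) -> Dict[str, float]:
    """Hypothetical audit: flag groups whose share of the training set is
    below min_ratio times their share of the intended population.

    Returns {group: observed_share / expected_share} for flagged groups.
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    flagged = {}
    for group, expected in population_share.items():
        if expected <= 0:
            continue  # nothing to compare against
        observed = counts.get(group, 0) / total if total else 0.0
        ratio = observed / expected
        if ratio < min_ratio:
            flagged[group] = round(ratio, 3)
    return flagged


# Made-up example: group labels of 100 training images vs. target population.
training = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
population = {"A": 0.60, "B": 0.25, "C": 0.15}
print(underrepresented_groups(training, population))
```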

External, clinical validation

Before AI models are clinically deployed, clinical validation is essential, ideally using new, unseen data, to ensure that the models’ performance is not compromised by differences between clinical environments. 5,6,37 Clinical validation can also be performed by radiographers 39 or radiologists, 56 in their respective, distinct areas of practice.
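
As a simple illustration of what such a check involves, the Python sketch below compares sensitivity and specificity on an internal test set with those obtained on unseen data from a hypothetical external site, and flags any metric that drops by more than a chosen tolerance. The 0.05 tolerance, the binary labels and the toy data are assumptions for illustration; real clinical validation would also consider confidence intervals, case mix and the intended clinical use.

```python
from typing import Dict, Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int],
                            y_pred: Sequence[int]) -> Tuple[float, float]:
    """Sensitivity and specificity for binary labels (1 = finding present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    return sens, spec


def compare_sites(internal: Tuple[Sequence[int], Sequence[int]],
                  external: Tuple[Sequence[int], Sequence[int]],
                  max_drop: float = 0.05) -> Dict[str, Tuple[float, float, bool]]:
    """Return {metric: (internal, external, within_tolerance)}.

    A NaN metric compares as False, so it is reported as not acceptable.
    """
    names = ("sensitivity", "specificity")
    internal_m = sensitivity_specificity(*internal)
    external_m = sensitivity_specificity(*external)
    return {name: (i, e, (i - e) <= max_drop)
            for name, i, e in zip(names, internal_m, external_m)}


# Toy labels: perfect on the internal test set, weaker at a new site.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
internal_pred = [1, 1, 1, 1, 0, 0, 0, 0]
external_pred = [1, 1, 0, 1, 0, 0, 0, 1]
print(compare_sites((truth, internal_pred), (truth, external_pred)))
```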

Explainability

AI algorithms should be explainable to end-users: when AI tools perform decision-making tasks, it is vital for end-users to be able to understand the rationale behind these decisions. Explainability is central to ethical and responsible AI, and it helps to build trust between practitioners, patients and AI technology. 3,6,39,52,53 Vendors also need to invest effort in developing transparent processes that support knowledge sharing with the wider scientific community. 35

Human-in-the-loop

Automation bias can occur when professionals over-rely on the predictions made by AI models. This could result in patient harm, 54 contrary to the principles of responsible AI. Automation bias can be exacerbated in low-resource environments, where there may be a lack of appropriately trained professionals to monitor the clinical effectiveness of AI models and identify any failures. A model that facilitates a “human-in-the-loop” approach is optimal to minimise automation bias. 53
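
One way a human-in-the-loop workflow might be encoded is sketched below in Python: every AI output reaches a clinician, low-confidence outputs are routed to a full independent read rather than a check of the AI suggestion, and no report can be released without a named clinician's own decision. The class names, the 0.9 threshold and the routing labels are hypothetical; model confidence scores may also be poorly calibrated, and routing logic alone does not prevent automation bias, which also depends on training and workload.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISuggestion:
    study_id: str
    label: str
    confidence: float  # model score for `label`, assumed to lie in [0, 1]


@dataclass
class SignedReport:
    study_id: str
    label: str
    signed_by: str


def review_mode(s: AISuggestion, threshold: float = 0.9) -> str:
    """Every case goes to a clinician; low-confidence cases get a full,
    independent read instead of a check of the AI suggestion."""
    return "check_suggestion" if s.confidence >= threshold else "independent_read"


def sign_off(s: AISuggestion, clinician: Optional[str],
             clinician_label: Optional[str]) -> SignedReport:
    """No report is released without a named clinician's own decision."""
    if not clinician or clinician_label is None:
        raise ValueError("a clinician must review and sign every report")
    return SignedReport(s.study_id, clinician_label, clinician)


case = AISuggestion("study-001", "no acute finding", 0.62)
print(review_mode(case))  # independent_read
```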

Responsible and ethical AI innovation and research

Beyond ethical clinical practice, AI research and its dissemination must always be conducted ethically. 63 AI researchers should ideally focus on prospective research studies. 61 Also, diverse, multidisciplinary research teams working on AI projects can harness the different strengths and perspectives of the AI ecosystem. 39 AI research studies should be transparent and allow open access to the model’s code and training/validation data sets. 61 There is also a need to report data curation and data partitioning processes and data annotation strategies, and to provide a detailed description of the model’s architecture and the hyperparameters used. 61 When reporting AI research studies, it is vital to ensure that all factors (human, algorithmic, environmental etc.) are reported clearly and transparently to minimise patient harm and research data waste. 64–66 Finally, AI studies should be robust enough to allow reproducibility and generalisability of the results across clinical settings, regardless of data sources. 61
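
One concrete aspect of reporting data partitioning is making sure that images from the same patient never appear in both the training and the test data, which would otherwise inflate reported performance. The Python sketch below assigns each patient, rather than each image, to a split using a salted hash, so that the partition is deterministic and can be reported and reproduced. The salt, split fractions and identifiers are hypothetical choices for illustration.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def assign_split(patient_id: str, salt: str = "study-2023-v1",
                 train: float = 0.70, validation: float = 0.15) -> str:
    """Deterministically map a patient to train/validation/test.

    Hashing the (hypothetical) salt plus patient ID gives a stable,
    reportable assignment; the remaining fraction goes to test.
    """
    digest = hashlib.sha256(f"{salt}:{patient_id}".encode("utf-8")).hexdigest()
    u = int(digest[:8], 16) / 0xFFFFFFFF  # pseudo-uniform value in [0, 1]
    if u < train:
        return "train"
    if u < train + validation:
        return "validation"
    return "test"


def partition(images: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Split (image_id, patient_id) pairs so no patient spans two splits."""
    splits: Dict[str, List[str]] = {"train": [], "validation": [], "test": []}
    for image_id, patient_id in images:
        splits[assign_split(patient_id)].append(image_id)
    return splits


# Made-up data: 100 patients with 3 images each.
images = [(f"img{i}", f"patient{i // 3}") for i in range(300)]
print({name: len(ids) for name, ids in partition(images).items()})
```

Reporting the salt and fractions alongside the code lets other groups regenerate exactly the same partition.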

AI teamwork and professional synergies

To ensure the ethical use of AI technologies in medical imaging, all key stakeholders need to be engaged at every stage of the AI model’s lifecycle. Patient representatives are also essential, 5 and they should be included as equal partners. 37 This will also help to enhance the quality of the interaction between practitioners and patients. 62 Involving multidisciplinary teams of key stakeholders in all AI procedures is another important step towards making AI ethical and responsible. 39,52

AI education and training

Our North American colleagues have recently summarised the available AI educational provisions, outlining challenges and opportunities for radiology. 67 In recent years, Europe has started to develop educational provisions for medical imaging professionals, to enable knowledge sharing, facilitate implementation and cultivate much-needed trust. 68 More courses are currently being developed in Europe and are due to launch in the 2023–2024 academic year, so this information is changing very fast.

Supplementary Table 3 shows that most education and training for medical imaging professionals and other healthcare scientists in Europe is continuing professional development in nature: mostly delivered outside formal academic education, sporadic, and not necessarily part of a lifelong learning journey. 69–85 AI training continues to be delivered overwhelmingly through the conference/congress programmes of learned societies 75 and by commercial sponsors; the latter can raise concerns about how impartial or industry-agnostic the information is. The overwhelming majority of AI courses are primarily aimed at radiologists, with only a few currently applicable to the radiographers’ remit. 86 However, most course curricula have thematic overlaps for both professional groups and underline the common need for multidisciplinarity in content and delivery, to reflect the true AI ecosystems in medical imaging. Poor alignment with the busy clinical schedules of radiologists and radiographers, their lack of time to engage with these courses, and the associated costs remain the major challenges in delivering AI-focussed educational provisions. 67,68,87

AI-focussed conferences 70,71,73,74 further underscore the efforts invested in enabling effective and efficient AI implementation, whilst ensuring patient safety, optimal care and improved outcomes. Qualification-linked educational provisions are often part of taught Master’s programmes 86,88,89 or integrated at undergraduate level in a staggered manner. 87 Educational opportunities offered by commercial companies are frequently linked to their own products and services, 76,90 which may leave knowledge gaps for practitioners who will work with different AI manufacturers in clinical practice.

There are also a few platforms that exist primarily to share the latest information and insights about AI with anyone interested, whether professionals, organisations, researchers or patients. 61,84,91 These arguably contribute to continuous AI literacy development among professional communities and the general population in preparation for an AI-enabled world. There are multiple free educational opportunities. 61,69,81,82,87,91 However, other courses are fee-paying, which can limit access to AI-related education and training. 70,71,73–75,77,83,85,86,88,89,92

Evidence indicates that most AI education and training is offered online, whilst some is offered in person only. The duration of current provisions ranges from 30 min to 1 year, and others are self-paced. Most of the educational initiatives reviewed for this work are aimed at multidisciplinary audiences, followed by radiology-specific audiences. There is only one radiographer-specific module offered at present (Supplementary Table 3). It can be posited that this multidisciplinary format can cultivate a stronger workforce in which different professional groups better appreciate each other’s roles; patient care and management pathways, and ultimately patient outcomes, could thereby be enhanced through cost-effective, timely and appropriate actions by the relevant practitioners.

There is a common thread regarding the content covered in the current AI education and training opportunities and programmes reviewed in this work. AI, ML and deep learning fundamentals, ethical considerations and societal impacts associated with AI, AI regulation, data sharing and preparation, explainable AI, workflow integration as well as specific clinical applications of AI relative to imaging modality or body area and structured reporting are common topics covered for both radiologists and radiographers. Radiomics, critical appraisal of AI literature, procurement, AI governance, prospective clinical trial development and implementation, scalability of AI innovations in healthcare settings and patient and practitioner acceptability are less frequently covered areas at present.

Conclusions

Implementing AI, which is vital to harness the benefits of this new technology, relies on knowledge and trust. A comprehensive AI governance framework for medical imaging proposes that this can be achieved through robust ethics, comprehensive and customised training, and collaboration amongst healthcare practitioners, among other attributes. Responsible and ethical AI is vital to enhance a culture of safety and trust for healthcare professionals and patients alike. Educational and training provisions on AI for medical imaging professionals are central to understanding basic AI principles and applications. Education can facilitate the transparency of AI tools, but more formalised, university-led training is needed to ensure academic scrutiny, appropriate pedagogy, multidisciplinarity and customisation to learners’ unique needs. As radiographers and radiologists work together, and with other professionals, to understand and harness the benefits of AI in medical imaging, it becomes clear that they face the same challenges and have the same needs. The digital future belongs to multidisciplinary teams that work seamlessly together, learn together, manage risk collectively and collaborate for the benefit of the patients they serve.

Contributor Information

Gemma Walsh, Email: gemma.walsh.3@city.ac.uk.

Nikolaos Stogiannos, Email: nstogiannos@yahoo.com.

Riaan van de Venter, Email: riaan.vandeventer@mandela.ac.za.

Clare Rainey, Email: c.rainey@ulster.ac.uk.

Winnie Tam, Email: winnie.tam@city.ac.uk.

Sonyia McFadden, Email: s.mcfadden@ulster.ac.uk.

Jonathan P McNulty, Email: jonathan.mcnulty@ucd.ie.

Nejc Mekis, Email: nejc.mekis@zf.uni-lj.si.

Sarah Lewis, Email: sarah.lewis@sydney.edu.au.

Tracy O'Regan, Email: tracyo@sor.org.

Amrita Kumar, Email: amrita.kumar@nhs.net.

Merel Huisman, Email: merel.huisman1@gmail.com.

Sotirios Bisdas, Email: s.bisdas@ucl.ac.uk.

Elmar Kotter, Email: elmar.kotter@uniklinik-freiburg.de.

Daniel Pinto dos Santos, Email: pintodossantos.daniel@gmail.com.

Cláudia Sá dos Reis, Email: Claudia.sadoreis@hesav.ch.

Peter van Ooijen, Email: p.m.a.van.ooijen@umcg.nl.

Adrian P Brady, Email: adrianbrady@me.com.

Christina Malamateniou, Email: christina.malamateniou@city.ac.uk.

REFERENCES

- 1. Google AI. Responsible AI practices. Available from: https://ai.google/responsibilities/responsible-ai-practices/

- 2. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in Radiology. Nat Rev Cancer 2018; 18: 500–510. doi: 10.1038/s41568-018-0016-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Geis JR, Brady A, Wu CC, Spencer J, Ranschaert E, Jaremko JL, et al. Ethics of artificial intelligence in Radiology: summary of the joint European and North American Multisociety statement. Insights Imaging 2019; 10(1): 101. doi: 10.1186/s13244-019-0785-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. BrainxAI . BrainX CommunityLive! March 2022: Artificial Intelligence in Healthcare: 2021 Year in Review. 2022. Available from: https://brainxai.org/connect/brainx-community-live-february-2022-artificial-intelligence-in-healthcare-2021-year-in-review/

- 5. Rockall A. From Hype to hope to hard work: developing responsible AI for Radiology. Clin Radiol 2020; 75: 1–2. doi: 10.1016/j.crad.2019.09.123 [DOI] [PubMed] [Google Scholar]

- 6. Brady AP, Neri E. Artificial intelligence in Radiology-ethical considerations. Diagnostics (Basel) 2020; 10(4): 231. doi: 10.3390/diagnostics10040231 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. McCradden MD, Joshi S, Anderson JA, Mazwi M, Goldenberg A, Zlotnik Shaul R. Patient safety and quality improvement: ethical principles for a regulatory approach to bias in Healthcare machine learning. J Am Med Inform Assoc 2020; 27: 2024–27. doi: 10.1093/jamia/ocaa085 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Reddy S, Allan S, Coghlan S, Cooper P. A Governance model for the application of AI in health care. J Am Med Inform Assoc 2020; 27: 491–97. doi: 10.1093/jamia/ocz192 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Ricci Lara MA, Echeveste R, Ferrante E. Addressing fairness in artificial intelligence for medical imaging. Nat Commun 2022; 13(1): 4581. doi: 10.1038/s41467-022-32186-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Mäntymäki M, Minkkinen M, Birkstedt T, Viljanen M. Defining organizational AI Governance. AI Ethics 2022; 2: 603–9. doi: 10.1007/s43681-022-00143-x [DOI] [Google Scholar]

- 11. Morley J, Floridi L. An ethically mindful approach to AI for health care. Lancet 2020; 395: 254–55. doi: 10.1016/S0140-6736(19)32975-7 [DOI] [PubMed] [Google Scholar]

- 12. Malamateniou C, McEntee M. Integration of AI in radiography practice: ten priorities for implementation. RAD Magazine 2022; 48: 19–20. Available from: https://www.radmagazine.com/scientific-article/integration-of-ai-in-radiography-practice-ten-priorities-for-implementation/

- 13. Stogiannos N, Malik R, Kumar A, Barnes A, Pogose M, Harvey H, et al. Black box no more: a scoping review of AI governance frameworks to guide procurement and adoption of AI in medical imaging and radiotherapy in the UK. Br J Radiol 2023.

- 14. Ministerie van Volksgezondheid, Welzijn en Sport. Innovation Funnel for Valuable AI in Healthcare. Published 5 January 2022. Available from: https://www.datavoorgezondheid.nl/documenten/publicaties/2021/07/15/innovation-funnel-for-valuable-ai-in-healthcare

- 15. Karimian G, Petelos E, Evers S. The ethical issues of the application of artificial intelligence in healthcare: a systematic scoping review. AI Ethics 2022; 2: 539–51. doi: 10.1007/s43681-021-00131-7

- 16. European Commission. Regulatory framework proposal on artificial intelligence. Available from: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai (accessed 29 Sep 2022)

- 17. European Union. What is GDPR, the EU’s new data protection law? Available from: https://gdpr.eu/what-is-gdpr/

- 18. European Commission. Ethics guidelines for trustworthy AI. Available from: https://op.europa.eu/en/publication-detail/-/publication/d3988569-0434-11ea-8c1f-01aa75ed71a1/language-en (accessed 8 Apr 2019)

- 19. Nuffield Council on Bioethics. The collection, linking and use of data in biomedical research and health care: ethical issues. 2015. Available from: https://www.nuffieldbioethics.org/wp-content/uploads/Biological_and_health_data_web.pdf

- 20. The Wellcome Trust. Ethical, Social, and Political Challenges of Artificial Intelligence in Health Care. 2018. Available from: https://wellcome.org/sites/default/files/ai-in-health-ethical-social-political-challenges.pdf

- 21. Academy of Medical Royal Colleges. Artificial Intelligence in Healthcare. Published 2019. Available from: https://www.aomrc.org.uk/reports-guidance/artificial-intelligence-in-healthcare/

- 22. Department of Health and Social Care. A guide to good practice for digital and data-driven health technologies. Updated 2021. Available from: https://www.gov.uk/government/publications/code-of-conduct-for-data-driven-health-and-care-technology/initial-code-of-conduct-for-data-driven-health-and-care-technology

- 23. National Institute for Health and Care Excellence. Evidence standards framework for digital health technologies. Available from: https://www.nice.org.uk/corporate/ecd7 (accessed 9 Aug 2022)

- 24. Leslie D. Understanding Artificial Intelligence Ethics and Safety: A Guide for the Responsible Design and Implementation of AI Systems in the Public Sector. The Alan Turing Institute.

- 25. Fiske A, Tigard D, Müller R, Haddadin S, Buyx A, McLennan S. Embedded ethics could help implement the pipeline model framework for machine learning healthcare applications. Am J Bioeth 2020; 20: 32–35. doi: 10.1080/15265161.2020.1820101

- 26. Morley J, Machado CCV, Burr C, Cowls J, Joshi I, Taddeo M, et al. The ethics of AI in health care: a mapping review. Soc Sci Med 2020; 260: 113172. doi: 10.1016/j.socscimed.2020.113172

- 27. Smallman M. Multi-scale ethics: why we need to consider the ethics of AI in healthcare at different scales. Sci Eng Ethics 2022; 28(6): 63. doi: 10.1007/s11948-022-00396-z

- 28. Goirand M, Austin E, Clay-Williams R. Implementing ethics in healthcare AI-based applications: a scoping review. Sci Eng Ethics 2021; 27(5): 61. doi: 10.1007/s11948-021-00336-3

- 29. NHS England. The NHS AI Lab. Available from: https://transform.england.nhs.uk/ai-lab/

- 30. NHS England. The AI Ethics Initiative. Available from: https://transform.england.nhs.uk/ai-lab/ai-lab-programmes/ethics/

- 31. British Standards Institution. BS 30440 Validation framework for the use of AI within healthcare – Specification. Available from: https://standardsdevelopment.bsigroup.com/projects/2021-00605#/section

- 32. Kondylakis H, Kalokyri V, Sfakianakis S, Marias K, Tsiknakis M, Jimenez-Pastor A, et al. Data infrastructures for AI in medical imaging: a report on the experiences of five EU projects. Eur Radiol Exp 2023; 7(1): 20. doi: 10.1186/s41747-023-00336-x

- 33. Panayides AS, Amini A, Filipovic ND, Sharma A, Tsaftaris SA, Young A, et al. AI in medical imaging informatics: current challenges and future directions. IEEE J Biomed Health Inform 2020; 24: 1837–57. doi: 10.1109/JBHI.2020.2991043

- 34. Neri E, Coppola F, Miele V, Bibbolino C, Grassi R. Artificial intelligence: who is responsible for the diagnosis. Radiol Med 2020; 125: 517–21. doi: 10.1007/s11547-020-01135-9

- 35. SFR-IA Group, CERF, French Radiology Community. Artificial intelligence and medical imaging 2018: French Radiology Community white paper. Diagn Interv Imaging 2018; 99: 727–42. doi: 10.1016/j.diii.2018.10.003

- 36. International Society of Radiographers and Radiological Technologists; European Federation of Radiographer Societies. Artificial intelligence and the radiographer/radiological technologist profession: a joint statement of the International Society of Radiographers and Radiological Technologists and the European Federation of Radiographer Societies. Radiography (Lond) 2020; 26: 93–95. doi: 10.1016/j.radi.2020.03.007

- 37. Malamateniou C, McFadden S, McQuinlan Y, England A, Woznitza N, Goldsworthy S, et al. Artificial intelligence: guidance for clinical imaging and therapeutic radiography professionals, a summary by the Society of Radiographers AI working group. Radiography (Lond) 2021; 27: 1192–1202. doi: 10.1016/j.radi.2021.07.028

- 38. Hardy M, Harvey H. Artificial intelligence in diagnostic imaging: impact on the radiography profession. Br J Radiol 2020; 93: 20190840. doi: 10.1259/bjr.20190840

- 39. Malamateniou C, Knapp KM, Pergola M, Woznitza N, Hardy M. Artificial intelligence in radiography: where are we now and what does the future hold? Radiography 2021; 27: S58–62. doi: 10.1016/j.radi.2021.07.015

- 40. Allen B, Dreyer K. The artificial intelligence ecosystem for the radiological sciences: ideas to clinical practice. J Am Coll Radiol 2018; 15: 1455–57. doi: 10.1016/j.jacr.2018.02.032

- 41. Huisman M, Ranschaert E, Parker W, Mastrodicasa D, Koci M, Pinto de Santos D, et al. An international survey on AI in radiology in 1,041 radiologists and radiology residents part 1: fear of replacement, knowledge, and attitude. Eur Radiol 2021; 31: 7058–66. doi: 10.1007/s00330-021-07781-5

- 42. Health & Care Professions Council. Standards of proficiency for radiographers. Available from: https://www.hcpc-uk.org/globalassets/standards/standards-of-proficiency/reviewing/radiographers---sop-changes.pdf

- 43. Speckemeier C, Niemann A, Wasem J, Buchberger B, Neusser S. Methodological guidance for rapid reviews in healthcare: a scoping review. Res Synth Methods 2022; 13: 394–404. doi: 10.1002/jrsm.1555

- 44. Tricco AC, Khalil H, Holly C, Feyissa G, Godfrey C, Evans C, et al. Rapid reviews and the methodological rigor of evidence synthesis: a JBI position statement. JBI Evid Synth 2022; 20: 944–49. doi: 10.11124/JBIES-21-00371

- 45. Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 2018; 169(7): 467–73. doi: 10.7326/M18-0850

- 46. Harwood TG, Garry T. An overview of content analysis. Mark Rev 2003; 3: 479–98. doi: 10.1362/146934703771910080

- 47. Stemler S. An overview of content analysis. PARE 2001; 7: 17. Available from: https://scholarworks.umass.edu/pare/vol7/iss1/17/

- 48. Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res 2005; 15: 1277–88. doi: 10.1177/1049732305276687

- 49. Bengtsson M. How to plan and perform a qualitative study using content analysis. NursingPlus Open 2016; 2: 8–14. doi: 10.1016/j.npls.2016.01.001

- 50. Lacy S, Watson BR, Riffe D, Lovejoy J. Issues and best practices in content analysis. Journalism & Mass Communication Quarterly 2015; 92: 791–811. doi: 10.1177/1077699015607338

- 51. The Society and College of Radiographers. The Society and College of Radiographers policy statement: Artificial Intelligence. Published 2020. Available from: https://www.sor.org/learning-advice/professional-body-guidance-and-publications/documents-and-publications/policy-guidance-document-library/the-society-and-college-of-radiographers-policy-st

- 52. Akinci D’Antonoli T. Ethical considerations for artificial intelligence: an overview of the current radiology landscape. Diagn Interv Radiol 2020; 26: 504–11. doi: 10.5152/dir.2020.19279

- 53. Goisauf M, Cano Abadía M. Ethics of AI in radiology: a review of ethical and societal implications. Front Big Data 2022; 5: 850383. doi: 10.3389/fdata.2022.850383

- 54. Recht MP, Dewey M, Dreyer K, Langlotz C, Niessen W, Prainsack B, et al. Integrating artificial intelligence into the clinical practice of radiology: challenges and recommendations. Eur Radiol 2020; 30: 3576–84. doi: 10.1007/s00330-020-06672-5

- 55. Vasey B, Clifton DA, Collins GS, Denniston AK, Faes L, Geerts BF, et al. DECIDE-AI: new reporting guidelines to bridge the development to implementation gap in clinical artificial intelligence. Nat Med 2021; 27: 186–87. doi: 10.1038/s41591-021-01229-5

- 56. Mudgal KS, Das N. The ethical adoption of artificial intelligence in radiology. BJR Open 2020; 2(1): 20190020. doi: 10.1259/bjro.20190020

- 57. European Society of Radiology (ESR). ESR white paper: blockchain and medical imaging. Insights Imaging 2021; 12(1): 82. doi: 10.1186/s13244-021-01029-y

- 58. Kaissis GA, Makowski MR, Rückert D, Braren RF. Secure, privacy-preserving and federated machine learning in medical imaging. Nat Mach Intell 2020; 2: 305–11. doi: 10.1038/s42256-020-0186-1

- 59. Darzidehkalani E, Ghasemi-Rad M, van Ooijen PMA. Federated learning in medical imaging: part I: toward multicentral health care ecosystems. J Am Coll Radiol 2022; 19: 969–74. doi: 10.1016/j.jacr.2022.03.015

- 60. Darzidehkalani E, Ghasemi-Rad M, van Ooijen PMA. Federated learning in medical imaging: part II: methods, challenges, and considerations. J Am Coll Radiol 2022; 19: 975–82. doi: 10.1016/j.jacr.2022.03.016

- 61. Cerdá-Alberich L, Solana J, Mallol P, Ribas G, García-Junco M, Alberich-Bayarri A, et al. MAIC-10 brief quality checklist for publications using artificial intelligence and medical images. Insights Imaging 2023; 14(1): 11. doi: 10.1186/s13244-022-01355-9

- 62. Sand M, Durán JM, Jongsma KR. Responsibility beyond design: physicians’ requirements for ethical medical AI. Bioethics 2022; 36: 162–69. doi: 10.1111/bioe.12887

- 63. Bockhold S, McNulty J, Abdurakman E, Bezzina P, Drey N, England A, et al. Research ethics systems, processes, and awareness across Europe: radiography research ethics standards for Europe (RRESFE). Radiography 2022; 28: 1032–41. doi: 10.1016/j.radi.2022.07.002

- 64. Lekadir K, Osuala R, Gallin C, Lazrak N, Kushibar K, Tsakou G, et al. Guiding principles and consensus recommendations for trustworthy artificial intelligence in medical imaging. arXiv, n.d.

- 65. Liu X, Cruz Rivera S, Moher D, Calvert MJ, Denniston AK, The SPIRIT-AI and CONSORT-AI Working Group, et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med 2020; 26: 1364–74. doi: 10.1038/s41591-020-1034-x

- 66. Cruz Rivera S, Liu X, Chan A-W, Denniston AK, Calvert MJ, The SPIRIT-AI and CONSORT-AI Working Group, et al. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Nat Med 2020; 26: 1351–63. doi: 10.1038/s41591-020-1037-7

- 67. Tejani AS, Elhalawani H, Moy L, Kohli M, Kahn CE. Artificial intelligence and radiology education. Radiol Artif Intell 2023; 5(1): e220084. doi: 10.1148/ryai.220084

- 68. Schuur F, Rezazade Mehrizi MH, Ranschaert E. Training opportunities of artificial intelligence (AI) in radiology: a systematic review. Eur Radiol 2021; 31: 6021–29. doi: 10.1007/s00330-020-07621-y

- 69. European Society of Medical Imaging Informatics. Webinars 2018–2023. Available from: https://www.eusomii.org/webinars/

- 70. The British Institute of Radiology. AI Congress 2023. Available from: https://www.mybir.org.uk/l/s/community-event?id=a173Y00000FkWVrQAN

- 71. The British Institute of Radiology. AI Congress 2022. Available from: https://www.mybir.org.uk/CPBase__event_detail?id=a173Y00000GALT7QAP&site=a0N2000000COvFsEAL

- 72. The British Institute of Radiology. AI in radiology: The main worries. Available from: https://birorgukportal.force.com/CPBase__event_detail?id=a170O00000Dw7leQAB

- 73. The British Institute of Radiology. AI Congress 2019. Available from: https://www.bir.org.uk/media/390257/artificial_intelligence_in_2019_programme_v2.pdf

- 74. The British Institute of Radiology. AI Congress 2020. Available from: https://www.mybir.org.uk/CPBase__event_detail?id=a173Y00000CwkXhQAJ&site=a0N2000000COvFsEAL

- 75. European Society of Radiology. European Congress of Radiology 2023. Available from: https://connect.myesr.org/ondemand/?f=eecr-2023

- 76. Kumar S, Dass R. Gain CoR endorsed super user training. Qure.ai, 2022. Available from: https://qure.ai/gain-cor-endorsed-super-user-training/

- 77. European Society of Radiology. Intelligence, innovation, imaging: the perfect vision of AI. 2019. Available from: http://www.myesr.link/Mailings/ESORAInm/?utm_source=ESOR+non+member&utm_campaign=0934231224-EMAIL_CAMPAIGN_2019_02_04_08_44_COPY_03&utm_medium=email&utm_term=0_1560fe400b-0934231224-83993449

- 78. European Society of Radiology. ESOR - Foundation Course on Artificial Intelligence in Radiology. 2020. Available from: https://healthmanagement.org/c/imaging/event/esor-foundation-course-on-artificial-intelligence-in-radiology

- 79. TMC Academy. Artificial intelligence in radiology workflow: from concept to experience. 2022. Available from: https://academy.telemedicineclinic.com/fellowships/3391/artificial-intelligence-in-radiology-workflow-from-concept-to-experience-nov-2022/

- 80. European Commission. European Statistical Training Programme (ESTP). 2022. Available from: https://ec.europa.eu/eurostat/cros/content/artificial-intelligence-data-science-2022_en

- 81. Mediaire. Mediaire contributes to European AI-course in healthcare. 2022. Available from: https://mediaire.ai/en/mediaire-contributes-to-european-ai-course-in-healthcare/

- 82. University Medical Center Groningen Research. AI+ Hospital. Available from: https://umcgresearch.org/w/dash-project-aiprohealth

- 83. EIT Health. HelloAI programme. 2023. Available from: https://www.helloaiprofessional.com/

- 84. AI-on-Demand. European AI-on-demand (AIOD) platform. 2019. Available from: https://www.ai4europe.eu/

- 85. European Society of Radiology. ESR masterclass in AI. 2023. Available from: https://masterclassai.talentlms.com/index

- 86. van de Venter R, Skelton E, Matthew J, Woznitza N, Tarroni G, Hirani SP, et al. Artificial intelligence education for radiographers, an evaluation of a UK postgraduate educational intervention using participatory action research: a pilot study. Insights Imaging 2023; 14(1): 25. doi: 10.1186/s13244-023-01372-2

- 87. Hedderich DM, Keicher M, Wiestler B, Gruber MJ, Burwinkel H, Hinterwimmer F, et al. AI for doctors: a course to educate medical professionals in artificial intelligence for medical imaging. Healthcare (Basel) 2021; 9(10): 1278. doi: 10.3390/healthcare9101278

- 88. University College London. Artificial Intelligence and Medical Imaging MSc. 2023. Available from: https://www.ucl.ac.uk/prospective-students/graduate/taught-degrees/artificial-intelligence-and-medical-imaging-msc

- 89. City, University of London. Artificial Intelligence MSc. 2023. Available from: https://www.city.ac.uk/prospective-students/courses/postgraduate/artificial-intelligence

- 90. Quantib. Learn more about Artificial Intelligence in Healthcare with our resources. 2023. Available from: https://www.quantib.com/resources

- 91. European Society of Radiology. AI blog. Available from: https://ai.myesr.org/education/

- 92. Woodruff HC, Lambin P. Artificial Intelligence 4 Imaging. 2023. Available from: https://www.ai4imaging.org/

Supplementary Materials

Supplementary Table 1.

Supplementary Table 2.

Supplementary Table 3.

Supplementary Table 4.