Summary

Latent factor models, like principal component analysis (PCA), provide a statistical framework to infer low-rank representation in various biological contexts. However, feature selection is challenging when this low-rank structure manifests from a sparse subspace. We introduce SuSiE PCA, a scalable sparse latent factor approach that evaluates uncertainty in contributing variables through posterior inclusion probabilities. We validate our model in extensive simulations and demonstrate that SuSiE PCA outperforms other approaches in signal detection and model robustness. We apply SuSiE PCA to multi-tissue expression quantitative trait loci (eQTLs) data from GTEx v8 and identify tissue-specific factors and their contributing eGenes. We further investigate its performance on the large-scale perturbation data and find that SuSiE PCA identifies modules with a higher enrichment of ribosome-related genes than sparse PCA (false discovery rate [FDR] vs. ), while being 18x faster. Overall, SuSiE PCA provides an efficient tool to identify relevant features in high-dimensional biological data.

Subject areas: Biocomputational method, Classification of bioinformatical subject, data processing in systems biology, Algorithms

Graphical abstract

Highlights

-

•

Efficient PCA feature selection via posterior inclusion probabilities

-

•

Learned model prior enhances inferential robustness

-

•

Seamless CPU/GPU/TPU implementation enables efficient inference

Biocomputational method; Classification of bioinformatical subject; Data processing in systems biology; Algorithms

Introduction

Principal component analysis (PCA) is a popular dimension reduction technique1 that has been widely applied for exploratory data analysis in many fields. One notable functionality of PCA is to synthesize crucial information across features into a small number of principal components (PCs). For example, PCA is commonly used to infer population structure from large-scale genetic data.2,3 The top PCs explain differences in genetic variation arising from different geographic origins and ancestry of individuals, due to historical migration, admixture, etc.4 Moreover, PCA provides a means to rank contributing relevant variables for each latent component, as Tipping and Bishop (1986) proposed the probabilistic reformulation of principal component analysis (PPCA).5 Specifically, each PC is independent of other PCs and has its unique weights to represent the “importance” of original features, suggesting different latent components arise from different combinations of variables, or distinct aspects of information from the data.

However, one disadvantage of conventional PCA is that PCs provide limited interpretability, as each results from a linear combination of variables in the data.6 To improve the interpretability of PCs, while providing an identifiable solution in high-dimensional data, a common approach is to impose sparsity on the PCA loadings. Broadly speaking, there are two types of approaches to achieving sparsity on the loading matrix. The first is the regularization methods such as sparse PCA,6 which rewrites the PCA as a regression-based optimization problem and then includes a penalty on the objective function to achieve sparse loadings. The second type of method is the Bayesian treatment of PPCA, which imposes sparsity-induced prior on the factor loading matrix.7,8,9,10,11,12 Despite various methods that focus on inducing sparse solutions for PCA, few provide a statistically rigorous way to select variables relevant to each factor in a post hoc manner. Although several sparse models are capable of shrinking the loadings of uninformative variables to zero, for those variables with non-zero weights, neither a reasonable threshold nor a formal statistical test is provided to inform feature prioritization for validation or follow-up.

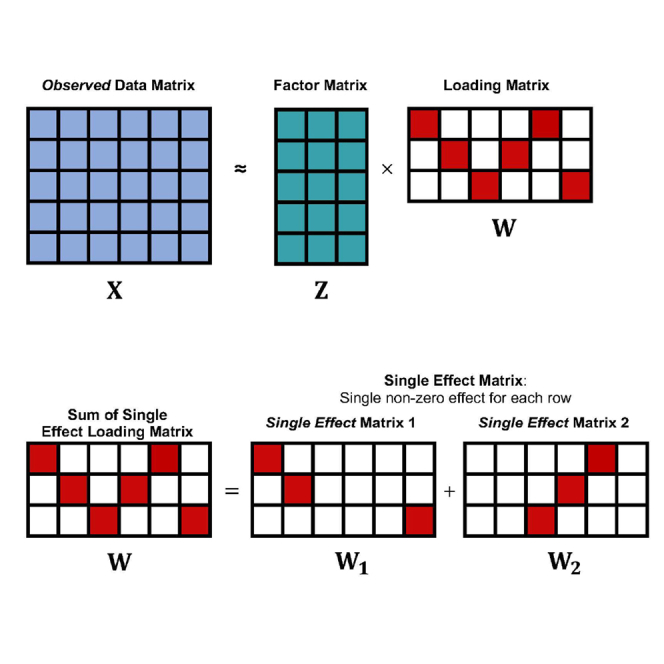

Here, we propose SuSiE PCA, a highly scalable Bayesian framework for sparse PCA, that quantifies the uncertainty of contributing features for each latent component. Specifically, SuSiE PCA leverages the recent “sum of single effects” (SuSiE) approach13 to model a loading matrix such that each latent factor contains at most L contributing features. Latent factors and sparse loading weights are learned through an efficient variational algorithm. In addition to providing a sparse loading matrix, SuSiE PCA computes posterior inclusion probabilities (PIPs) for each feature, which enables defining level credible sets for feature selection. We demonstrate through extensive simulations that SuSiE PCA outperforms sparse PCA6 and empirical Bayes matrix factorization (EBMF)12 in identifying relevant features contributing to structured data while being robust to data-generating assumptions. Next, we apply SuSiE PCA to multi-tissue expression quantitative trait loci (eQTLs) data from the the genotype-tissue expression (GTEx) v812,14 study to identify tissue-specific components of regulatory genetic features and contributing eGenes (genes that have an associated eQTL). We also apply SuSiE PCA to high-dimensional perturb-seq data (CRISPR-based screens with single-cell RNA-sequencing readouts)15 and identify gene sets more enriched in the ribosome and coronavirus disease pathways when compared with sparse PCA (false discovery rate (FDR) , 63 genes involved vs. , 35 genes involved) while requiring 17.8 times less computing time. Overall, we find that SuSiE PCA provides an efficient approach to compute interpretable latent factors from high-dimensional biological data. We provide an open-source python implementation that can run seamlessly on central processing unit (CPU), graphics processing unit (GPU), or tensor processing unit (TPU) available at http://www.github.com/mancusolab/susiepca.

Results

PIPs from SuSiE PCA outperform existing approaches for PCA feature selection

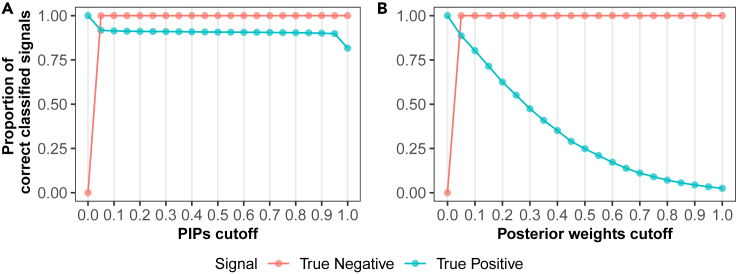

To evaluate the performance of SuSiE PCA, we performed extensive simulations (see details in STAR Methods). Briefly, we performed 100 simulations by varying model parameters one at a time and performed inference using SuSiE PCA with the true number of latent variables (K) and effects (L) known. First, we evaluated the ability of inferred PIPs to discriminate between relevant and non-relevant features for latent factors. Specifically, we compared the sensitivity and specificity of inferred PIPs to normalized posterior mean weights from SuSiE PCA (see Figure 1). When selecting variables based on , SuSiE PCA identifies 88.9% of true positive (non-zero) signals, demonstrating largely calibrated posterior inference. We observed nearly all true negative signals exhibited . As a comparison, the normalized posterior weights performed well on excluding the true negative signals but failed to capture true positive signals as rapidly as PIP thresholds. Overall, the simulation demonstrates that PIPs provide an intuitive and more efficient indicator for feature selection than normalized posterior weights in SuSiE PCA. In addition, we also examined the sensitivity and specificity using weights estimated from sparse PCA and EBMF (see Figure S1), which have similar trends to the curves in Figure 1B and can only capture a small proportion of the true positive signals as the cutoff threshold increases.

Figure 1.

PIPs exhibit a higher efficiency in selecting the true signals than the posterior weights in SuSiE PCA

The proportion of correct classified signals using PIPs as cutoff (A) or posterior weights as cutoff (B). The green dots represent sensitivity, i.e., , and the red dots represent specificity, i.e., . For consistency and to ensure comparability between PIPs and weights, the weights are normalized to be ranged from 0 to 1.

SuSiE PCA is robust to model mis-specification

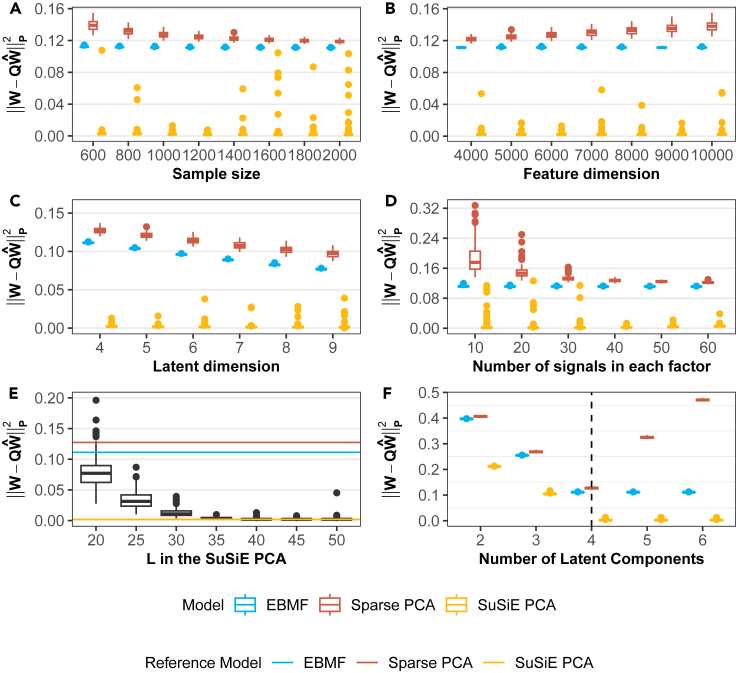

Next, we examined the estimation accuracy of the loading matrix as a function of sample size (N), feature dimension (P), latent dimension (K), and the number of single effects (or sparsity level) (L), via the Procrustes errors16 (the Frobenius norm after Procrustes transformation,17 see STAR Methods) (Figures 2A–2D). We found that SuSiE PCA has the smallest Procrustes errors across all simulation settings compared to sparse PCA and EBMF. And we noticed that the Bayesian methods including SuSiE PCA and EBMF maintain a low error even with a small sample size or high feature dimension. Moreover, we found that SuSiE PCA has the lowest relative root mean squared error (RRMSE) across all simulations compared with other methods (Figure S2); and EBMF and SuSiE PCA have a lower level of Procrustes error of factor than sparse PCA (Figure S3). In summary, SuSiE PCA exhibits the highest estimation accuracy, which is consistent with its superior performance in variable selection.

Figure 2.

SuSiE PCA outperforms sparse PCA and EBMF in estimation accuracy and model robustness

SuSiE PCA generates the smallest Procrustes error in weight matrix than sparse PCA and EBMF (A–D) and is robust to over-specified K and L (E and F). For each scenario in (A–D) we vary one of the parameters at a time to generate the simulation data while fixing the other three parameters, and then input the true parameters ( ) into models. Finally, we compute the Procrustes error and plot them as a function of . For (E and F), we use the same simulation setting in Figure 1 to generate data but vary the specified L in SuSiE PCA (E) and K in all three models (F). Reference lines refer to the error from the models with correctly specified parameters (i.e., ).

We next investigated model robustness under model mis-specification. Similar to other latent factor models, SuSiE PCA could be mis-specified as it requires manually inputting the latent dimension K and the number of single effects L. Considering the potential model mis-specification setting, the simulation datasets are generated based on and then input into SuSiE PCA, sparse PCA, and EBMF with two mis-specified situations: vary L while fixing K, or vary K while fixing L. The model estimation accuracy is then compared among three models with Procrustes error (see Figures 2E and 2F). We observed that as K and L in the model approach the true value (i.e., or ), the Procrustes error decreases rapidly to the lower level in SuSiE PCA and remains the same even when or . However, the error for sparse PCA has a V shape and reaches its minimum at the real K. The explanation is that when there are over-specified latent factors in the model, SuSiE PCA and EBMF will not extract any information from the data due to their probabilistic model structure; the sparse PCA, on the other hand, cannot handle the weights since it does not impose a probabilistic assumption on them. Instead, the value of the redundant latent factor in sparse PCA is close to 0, which ensures the latent component does not contribute.

Finally, to compare the generative capacity, we computed and compared the log likelihood of held-out data between sparse PCA and SuSiE PCA. We observed that SuSiE PCA outperforms sparse PCA and obtains higher log likelihoods for simulations (Figure S5). In addition to the overall superior model performance, SuSiE PCA remains faster on both CPU and GPU than sparse PCA and EBMF due to the efficient variational algorithm we implement (see STAR Methods) with the JAX library developed by Google.18

Dissecting cross-tissue eQTLs in GTEx

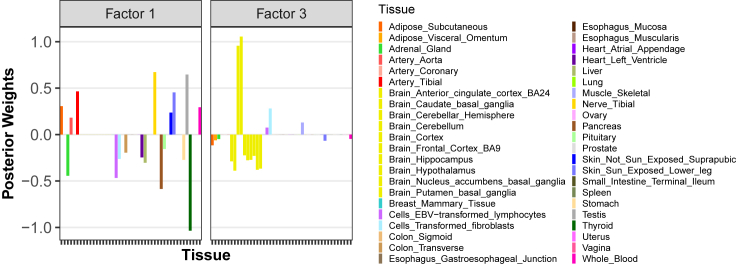

To illustrate the utility of SuSiE PCA to make inferences in biological data, we analyzed multi-tissue eQTL Z score results computed from GTEx v812,14 (see STAR Methods). Specifically, we sought to identify latent factors corresponding to tissue-specific and tissue-shared eQTLs similar to ref. 12. Overall, we found that 27 latent factors explained 53.1% of the variance in the data (see Figure S6). Although we set across all factors, we found the number of tissues with is frequently lower than 18 in different factors (see Figure S9), which is due to inferred acting to “shut off” uninformative features. Indeed, we observed 30 out of 486 with estimates greater than (see Figure S7) which effectively shrink the effect size of the corresponding single effect toward 0, driving the number of non-zero single effects in some factors smaller than specified L. We found this behavior also reflected in estimated level-0.9 credible sets, where 456 out of 486 contained a single tissue, and the remaining 30 credible sets contained at least two tissues.

To understand what each factor represents, we examined inferred PIPs (Figure S9) and posterior mean weights of each tissue across 27 factors (Figure S8). Here we present the results from factor and through the posterior weights (Figure 3; see Figure S8 for the remainder). We observed that the latent factor with the second largest percentage of variance (PVE) demonstrates high absolute weights on most tissues except for the brain tissues, while the latent factor has large weights almost exclusively on brain tissues. Moreover, we observed that brain tissue tends to appear as a group and has similar effects, implying the eQTLs in brain tissue are different from those in other tissue and those strong signals are specifically captured by the factor . For the rest of the factors, we noticed that factors with large PVE such as tended to have large weights on multiple tissues; for example, factor has large weights on esophagus and thyroid, suggesting the eQTLs signals are mostly shared across those tissues, while the factors with small PVE usually have large weights exclusively on one or a few tissues, for example, liver-specific component , lung-specific component , etc. The only exception is that the factor with the largest PVE has an exclusively large weight only on the testis, implying the captures the testis-specific eQTL signals. This is consistent with the investigation of the latent factor values of : the gene with the largest factor value in is D-dopachrome tautomerase (DDT) (Figure S10), which is shown to be associated with testis cancer.19 To make a comparison with the existing method, we expanded our investigation by applying sparse PCA to the GTEx Z score dataset and observed comparable tissue weights and factor scores across components in both SuSiE PCA and sparse PCA (Figure S11). However, a notable distinction arises where certain tissues exhibit tiny weights and can potentially be neglected in sparse PCA; in contrast, the SuSiE PCA can successfully capture the signals in those tissues through the PIP. For example, from the original analysis, both models identify adipose gland as the most relevant tissue in factor 10, while the remaining tissues have a much smaller relative weight and can effectively be ignored. Despite this, SuSiE PCA assigns a PIP of 1 to the lowly weighted tissues, suggesting that important signals would be missed if weights alone were used to provide insight. Overall, we find that SuSiE PCA is able to identify tissue-specific components from multi-tissue eQTL data in an intuitive, interpretable manner.

Figure 3.

Factor and captures different types of tissues (tissues without brain vs. brain tissues)

The posterior weights refer to the inferred matrix from the SuSiE PCA. The clustering pattern in different factors is found as there are only a limited number of tissues with non-zero weights in each factor since we set L = 18 while the feature dimension is 44.

Identifying regulatory modules from perturb-seq data

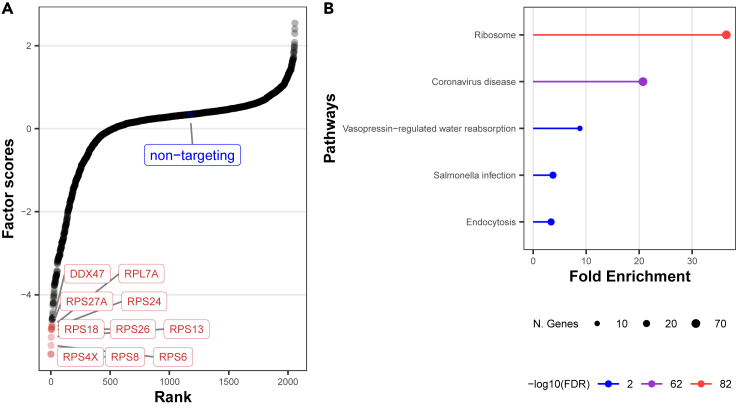

To identify gene regulatory modules from genome-wide perturbation data, we ran SuSiE PCA on perturb-seq in cell lines15 (see STAR Methods) with and . Briefly, we inputted the normalized expression data () to SuSiE PCA to identify gene regulatory modules (i.e., ) and downstream-regulated networks (i.e., ). To ensure our results were robust to K and L, we explored a grid of possible combinations and found that K = 10 and L = 300 retain the most important information while keeping the relevant gene set much smaller (see Figure S12 for a detailed explanation).

Overall, we found the total PVE was 10.71% across all components (Figure S13), with each component exhibiting 299 downstream genes with PIP on average. Focusing on the leading component, we found that perturbations with the top 10 largest absolute factor scores are primarily related to Ribosomal Protein Small (RPS) subunit genes and Ribosomal Protein Large (RPL) subunit family (Figure 4A). To provide a broader characterization of the module function, we extracted downstream genes with PIP greater than 0.9 (298 genes) as input into ShinyGO20 to perform a gene set enrichment analysis (Figure 4B). We observed the most enriched pathway was related to ribosome function (FDR = , 63 genes involved), followed by coronavirus disease (FDR = , 62 genes involved). Inspecting the loadings at these downstream genes, we found nearly all weights were positive, suggesting that the knockout of RPS and RPL genes downregulates the expression level of those downstream genes. We found multiple elongation factor genes (EEF1G, EEF1A1, EEF1B2, EIF4B, EIF3L) among the leading downstream genes, which are known to be involved in ribosome function. Additionally, recent studies have suggested that the decreased expression of elongation factor genes is associated with less severe conditions among COVID-19 patients.21,22 We repeated pathway analysis for each latent factor using corresponding loadings at genes with PIP greater than 0.9 (see Figures S14–S22).

Figure 4.

Dominant factor scores in top component link to RPL and RPS family with subsequent gene enrichment in Ribosome and Coronavirus disease

The perturbations with top factor scores in the first component mostly belong to RPL and RPS family(A), and the enrichment analysis results of downstream genes in the same component are enriched for ribosome and coronavirus disease(B). Each point in (A) represents the latent factor value of each perturbation. The top 9 points as well as the control group are labeled in the plot and colored red and blue, respectively. In gene set enrichment analysis, we input the downstream genes with and show the top enriched pathways with log(FDR) and the number of genes included in the corresponding pathways.

To compare with sparse PCA, we performed the same pathway analysis on factor loadings and assessed enrichments. From the sparse PCA with the largest PVE (alpha = 1), we observed components identified by sparse PCA to be less enriched with biological pathways when compared to SuSiE PCA (80 unique enriched pathways in sparse PCA versus 88 pathways in SuSiE PCA), and the top enriched pathways such as ribosome and coronavirus disease are less significant and contain less number of selected genes (FDR , 35 genes; FDR , 29 genes). We noticed that, when alpha equals 17, the sparse PCA achieves an approximate similar total PVE (10.91%) with that of our model (10.71%) but with lower sparsity level (Figure S23). We then extracted the top 300 genes with non-zero weights in sparse PCA with alpha = 17 and performed the gene set enrichment analysis and found that the significance level is almost similar to that in SuSiE PCA (Figure S24). However, this is a post hoc analysis that suggests SuSiE PCA is more suitable for sparse data analysis while maintaining the power to perform the feature selection in a more statistical and reasonable manner.

Overall, we find distinct biological functions identified by each component, with groupings consistent with those reported in previous works.23,24,25

Discussion

In this paper, we propose SuSiE PCA, an efficient Bayesian variable selection approach to PCA for structured biological data. The sparsity of the loading matrix is achieved by restricting the number of features associated with each factor to be at most L. Through simulations and real-data application, we find that SuSiE PCA outperforms existing approaches to sparse latent structure learning in identifying contributing features, while maintaining a more efficient run time.

There are several advantages of SuSiE PCA as compared to other sparse factor models. First, SuSiE PCA generates the PIPs for each feature that quantifies the uncertainty of the selected feature, which can not be provided by other sparse models, such as sparse PCA with regularization13 or the Bayesian treatment of PPCA. And assessing the selected variables based on the probability is more reasonable and convenient than using weights. Second, PIPs are capable of selecting more signals with high confidence. In simulations, we demonstrated that using weights for variable selection from SuSiE PCA, sparse PCA, and EBMF can deliver a high specificity (low FDR) but with low sensitivity as the cutoff value increases, while using PIPs as selection tools can maintain a high sensitivity for any positive cutoff value between 0 and 1. Third, SuSiE PCA provides a more precise estimate of the loadings and higher prediction accuracy, even in the mis-specified case, as we impose a probabilistic distribution over the loadings that enables a much more accurate inference on the posterior distribution. Finally, the inference procedure of SuSiE PCA works on the dimension of K and L, which is typically set to be much smaller than feature dimension P; therefore, it is scalable to high-dimensional data and requires less computational demands. We implement the SuSiE PCA with the JAX library developed by Google18 to enable fast convergence on CPU, GPU, or TPU. The comparison of run time among SuSiE PCA, sparse PCA, and EBMF is listed in Table 1.

Table 1.

Comparison of mean and standard deviation of running time (seconds) between models

| Modela | Simulationb | GTEx Z score | Perturb-seq |

|---|---|---|---|

| SuSiE PCA | 3.14(0.49) | 1.20 | 68.11 |

| Sparse PCA | 51.96(33.50) | 41.22 | 1213.21 |

| EBMF | 39.83(5.80) | 498.60 | 243.03 |

All run time data in the table are based on the analyses performed on the same CPU for consistency. The CPU we used is the Apple M2 chip with 16 GB memory.

In the SuSiE PCA, two parameters, the number of components K, and the number of single effects L, need to be prespecified by the user before fitting the model. The selection of K follows a similar strategy as conventional PCA, often informed by researchers’ domain expertise. The merit of SuSiE PCA is that when there are excessive latent components being specified, the variance explained for those components would be extremely minimal with a near-zero count of single effects exhibiting . This effectively allows for an initial choice of a relatively large K and subsequently inspecting the PVE and PIPs in each component to decide the most suitable K.

The choice of L determines the sparsity in the SuSiE PCA. Although SuSiE PCA only allows one common L specified across all factors, the number of non-zero effects captured across factors can be varied and learned from the data. This is because we treat the inverse of variance of the single effect in factor as a random variable. As the Algorithm 1 demonstrates, the maximum likelihood estimate (MLE) of at the step 3 is derived before inference of other parameters. When the L specified in the model, for a certain factor k, is greater than the true number of signals associated with that factor, the MLE of the will be extremely large for those excessive single effects, which then shrinks the and PIP to be 0 or close to 0, and therefore removes the redundant single effects from the model. For example, in the simulation and GTEx Z score data analysis, we have shown that when the user-specified L is larger than the data-generating L, the automatic relevance determination-like (ARD) prior over loadings will shrink effects toward 0, thus adding little additional predictive power and overall mean square error (MSE) from the true loadings matrix. Although it seems like the L parameter may be automatically set to the total number of variables (and thus “shut off” if necessary), we emphasize that this still comes with an added computational cost, albeit a low one due to the scalability of our approach. Therefore, we allow users to specify their own choice of L. From this point of view, without prior knowledge of the data, one can specify a relatively larger L during the initial model fitting and then examine the estimates of to explore how many single effects are reasonable for the dataset.

Algorithm 1. Algorithm for SuSiE PCA.

Require: Data

Require: Number of Factors K; Number of single effects in each factor L

Require: Initialize variational parameters ; hyperparameters , for

Require: update equations on different variables :

Require: function to compute

Ensure: ELBO increase

1: repeat

2: . Define as arrays by arranging

3:

4: for k in do

5: compute the first two terms in Eq

6: for l in do

7: removing the effect from

8: complete the calculation of

9:

10:

11: Update the

12: end for

13: end for

14:

15:

16:

17: until ELBO convergence criterion satisfied

Overall, SuSiE PCA provides a flexible approach to high-dimensional biological data with a low-rank structure and allows for feature selection in sparse PCA.

Limitations of the study

One limitation of SuSiE PCA is that under the mean-field approximation, all the posteriors, i.e., and , are factorized to facilitate inference. Under this factorization, estimation for mean terms (i.e., and ) is approximately unbiased.26 However, it produces overconfident covariance structures within variables (W, Z, etc) due to the assumed independence across Q functions.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Deposited data | ||

| The Genotype-Tissue Expression Z score data | Wei Wang and Matthew Stephens, Empirical Bayes Matrix Factorization, 20211 | https://github.com/ysfoo/sparsefactor |

| Genome-scale Perturb-seq experiment data | Joseph Replogle and Jonathan Weissman, Mapping information-rich genotype-phenotype landscapes with genome-scale Perturb-seq, 20222 | https://plus.figshare.com/articles/dataset/_Mapping_information-rich_genotype-phenotype_landscapes_with_genome-scale_Perturb-seq_Replogle_et_al_2022_processed_Perturb-seq_datasets/20029387 |

| Software and algorithms | ||

| Scikit-learn library: sparse principal component analysis | Python library scikit-learn | https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.SparsePCA.html; RRID:SCR_002577 |

| R Package: Factors and Loadings by Adaptive SHrinkage in R (flashr) | Wei Wang and Matthew Stephens, Empirical Bayes Matrix Factorization1 | https://stephenslab.github.io/flashr/index.html |

| Variational algorithm in SuSiE PCA | This paper | http://www.github.com/mancusolab/susiepca |

| Python 3.9 | Python Software Foundation | https://www.python.org/ |

| R 4.0.0 | R Software | https://www.r-project.org |

| ShinyGO v0.77 | Ge SX, Jung D & Yao R3 | http://bioinformatics.sdstate.edu/go/; RRID:SCR_019213 |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Dong Yuan (dongyuan@usc.edu).

Material availability

This study did not generate new unique materials or reagents.

Experimental model and subject details

This study did not include experiments with a specific model or subject.

Method details

Overview of SuSiE PCA

In this section, we will give a detailed description of SuSiE PCA. Let be the observed data matrix, be the K dimensional latent vectors, and be the loading matrix. We denote the normal distribution with mean μ and variance as , the multinomial distribution with n choices and probabilities as and the matrix normal distribution with dimension , mean , row-covariance , and column-covariance as . We denote the basis vector in which coordinate is 1 and 0 elsewhere as . The sampling distribution of under the SuSiE PCA model is given by,

| (Equation 1) |

| (Equation 2) |

| (Equation 3) |

| (Equation 4) |

| (Equation 5) |

| (Equation 6) |

| (Equation 7) |

where corresponds to the row of , and contains at most L non-zero elements determined by the sum of L single-effect vectors . These single-effect vectors are described by a single random effect and indicator vector which assigns the effect to a feature with prior probabilities .

Posterior inclusion probability

One of the distinguishing features that the SuSiE model13 provides is a posterior inclusion probability (PIP). The PIP reflects the posterior probability that a given variable has a non-zero effect given the observed data. Here we extend the PIP definition to include latent factors. Specifically, given variational parameters we can define the PIP that the variable has a non-zero effect in the latent component as,

| (Equation 8) |

Similarly, a level- credible set (CS) refers to a subset of variables that cumulatively explain at least ρ of the posterior density. Here, we define factor-specific level- CSs, which can be computed across each independently, resulting in total level-ρ credible sets. This lets us reflect on the uncertainty in identified variables to explain a single-effect for each latent factor.

Variational inference in SuSiE PCA

We seek to perform inference of model variables and conditional on observed data , however, the marginal likelihood is intractable to compute and therefore, we cannot evaluate the posterior exactly. While sampling based approaches such as Markov Chain Monte Carlo (MCMC) methods provide a numerical approximation of the exact posterior distribution,27 they often lack computational efficiency in high-dimensional settings. As an alternative, we leverage recent advancements in the variational inference that provides an analytical approximation to the posterior distribution28 and remains computationally efficient.

Briefly, To approximate the conditional distribution of latent variables given the observed samples , variational methods first impose a family of densities over the latent variables, , usually predefined as known distributions parameterized with a set of variational parameters. Then the goal is to infer those variational parameters such that the variational distribution is as similar as possible to the true posterior distribution . A quantity commonly used to measure dissimilarity between distributions is Kullback-Leibler divergence .29 However, since KL divergence contain the unknown true posterior distribution , it cannot be directly computed. Instead, we can show that the log-likelihood of data, can be decomposed as:

| (Equation 9) |

Where , which is also known as the Evidence Lower Bound (ELBO). Since the is a constant with respect to the variational parameters, minimizing KL divergence is equivalent to maximizing ELBO. As the ELBO does not contain the unknown posterior distribution and therefore is tractable to compute and maximize for variational parameters.

Mean-field approximation

Mean field approximation30 is a common solution to find the optimal solution that maximizes ELBO. The basic assumption is that we can factorize the variational distribution into independent components. Then using the calculus of variations, one can show that the distribution minimizing KL divergence for each factor can be expressed as:

| (Equation 10) |

Applying the Mean-Field approximation to SuSiE PCA the approximate posterior given by,

| (Equation 11) |

| (Equation 12) |

Equation 11 factorizes the variational densities of the latent variables and the loading matrix into independent parts. We further assume that the variational distribution of loadings from each factor across L single effects are independent as well, leading to Equation 12. For ease of notation we first define . Based on the factorization, the complete-data log-likelihood of data and parameters of SuSiE PCA is given by:

Helpful definitions

Before proceeding to the full derivation of variational distribution of parameters , we first give some helpful definitions, including the expansion of the first and second moment of and .

The second moment of is:

The first and second moments of are listed as follows:

The first and second moments of are listed as follows:

Other terms in likelihood function:

Derivation of model parameters

In this section, we present the detailed derivation of the optimal variational distributions of variables , and . First, we derived the

Second, we derive the :

Noticed that we can update for all feature at once:

Finally we derive the : Note that

In summary, the optimal variational distribution of model parameters can be summarized as:

| (Equation 13) |

| (Equation 14) |

| (Equation 15) |

The corresponding update rules for variational parameters from can be expressed as,

| (Equation 16) |

| (Equation 17) |

| (Equation 18) |

| (Equation 19) |

| (Equation 20) |

| (Equation 21) |

Derivation of evidence lower bound (ELBO)

The ELBO provides a natural criterion for evaluating model performance during model training, and also provides a means to perform hyperparameter optimization for model variance τ and (or equivalently precision) parameters. Given the above definitions for Q, we derive the ELBO for SuSiE PCA as

Based on the above derivation, ELBO can be decomposed into three parts. The first term is the expectation of the data with respect to all the parameters in the model:

The second term is the negative KL divergence of .

The last term contains joint negative KL divergence of can be further decomposed as following:

The first expectation term of the last line of equation can be expanded as following:

And the second expectation term can be decomposed as

With the explicit form of ELBO, we obtained the maximum likelihood estimates of model precision parameters by setting the derivative of ELBO with respect to each variance parameter to be 0, which results in closed-form update equations given by,

| (Equation 22) |

| (Equation 23) |

Simulations

To investigate the performance of SuSiE PCA in variable selection and model fitting, we simulated various data sets that are controlled by 4 parameters: the sample size N, number of features P, number of latent factors K, and number of single effects L in each of the factors. For simplicity, we assume L is the same across different factors. The simulated data is generated according to equation ((0.1)), where , and and , for are simulated such that each factor only contain 40 non-zero effects (0.67%) given by,

| (Equation 24) |

| (Equation 25) |

| (Equation 26) |

| (Equation 27) |

| (Equation 28) |

with the remaining effects set to zero. Considering the scale of the estimates of loadings may differ from various types of methods, we normalized the loading matrix with respect to Frobenius norm, i.e. .

To evaluate the accuracy of SuSiE PCA, we compared inferred posterior expectations with the true latent variables. However, due to the rotational invariance property in latent factor models, evaluating loading or latent factor accuracy can be challenging. To account for possible rotation, we leverage the Procrustes transformation,17 which finds an orthogonal rotation matrix to transform the estimated loading matrix to the true loading matrix space. Specifically, given an estimated loading matrix under approximate posterior distribution Q and true effect matrix , the “Procrustes Norm” can be obtained as following:

| (Equation 29) |

Here we perform the Procrustes analysis via Procrustes package,16 from which is obtained by performing a singular value decomposition on matrix (padding zeros on matrix would ensure the above operation process correctly).

In addition, we employ the relative root mean squared error (RRMSE) to evaluate the reconstructed data loss as,

| (Equation 30) |

Lastly, to assess generative modeling proficiency, we computed the log-likelihood under held-out data. Specifically, we first trained the model on simulated training data. Next, we computed latent space representations for the testing data under each of the trained models. Lastly, we computed log-likelihoods under normality assumptions given the latent representations and learned loadings and parameters.

For model comparison, we also evaluate the performance of sparse PCA6 and Empirical Bayes Matrix Factorization (EBMF) (a recently described variational approach)12 on the same simulation data sets with the same K, and compare the model performance with SuSiE PCA via criterion described above.

GTEx Z score dataset

To illustrate the application of SuSiE PCA in genetic research, we downloaded the Genotype-Tissue Expression (GTEx)14 summary statistics data, composed of z-scores computed from the testing association between genetic variants and the gene expression levels across 44 different human tissues.12 The GTEx project collected genotype data and gene expression data from 49 non-disease tissues across individuals, providing an ideal resource database to study the relationship between genetic variants and gene expression levels.14 The genetic variants that are statistically associated with gene expression levels are referred to as expression quantitative trait loci (eQTLs). To identify eQTLs, the GTEx project tested the association between each nearby genetic variant of a certain gene with its expression levels using linear regression to yield a Z score. The summary data we explored reflects the most significant eQTL (equivalently, the largest absolute Z score in each SNP and gene pair) at each of 16069 genes (row) from 44 tissues (column) curated from GTEx v8,12,14 as those 16069 genes show indication of being expressed in 44 of all 49 human tissues. To identify tissue-specific components of regulatory genetic features and contributing genes, we applied SuSiE PCA across this Z score matrix with a latent dimension of 27 and the number of single effects of 18. The prior information on the number of latent dimensions comes from Wang et al. (2021)12 who contribute to the Z score dataset and run the EBMF model with 27 factors. To determine the appropriate L that fits the data, we run the SuSiE PCA with L ranged from 10 to 25, and select the model when the increase in the total percentage of variance explained (PVE) is less than 5%. PVE is a measure of the amount of signals in the data captured by the latent component, the PVE of the factor is calculated based on the following equation:

| (Equation 31) |

where .

Purturb-seq dataset

We next investigated genome-scale Perturb-seq data15 to discover the co-regulated gene sets affected by some common type of perturbations. The Perturb-seq data originated from Perturb-seq experiments performed by Replogle et al.15 Perturb-seq is a cutting-edge technique combining CRISPR-based perturbations with single-cell RNA-sequencing readouts, enabling the investigation of co-regulated gene sets affected by various perturbations. The researchers employed three cell lines: K562 cells, hTERT-immortalized RPE1 cells, and HEK293T cells. CRISPRi technology was used to generate cell lines expressing dCas9-BFP-KRAB (KOX1-derived) for the perturbation experiments. Since we focus our analyses on the expression data from the K562 cell line, we give a brief description of the experiments performed on the K562 cell lines. Namely, the authors targeted genes expressed in K562 cells, transcription factors, Cancer Dependency Map common essential genes, and included non-targeting control sgRNAs accounting for 5% of the total library. The gene sets were defined based on a combination of bulk RNA-seq data from ENCODE and 10x Genomics 3′ single-cell RNA-seq data. Libraries were constructed with dual-sgRNA pairs targeting each gene, expressed from tandem U6 expression cassettes in a single lentiviral vector, and ranked based on empirical data and computational predictions. Subsequently, the author conducted Perturb-seq experiments on the K562 cells, with 2056 distinct knocked-out genes and one non-targeting control group over an average of 150 different single cells, and then measured the expression levels of the downstream 8563 genes from each cell.

The final dataset contains 310385 rows, each representing one perturbation in a specific cell, and the expression levels of 8563 downstream genes as the column. As an exploratory analysis, we omitted the single-cell level information and aggregated the expression levels of downstream genes with the same perturbation over all the cells, which resulted in a “psuedo-bulk” data matrix with 2057 rows and 8563 columns. We then performed the SuSiE PCA and Sparse PCA to investigate the regulatory modules from the common perturbations. To exclude the batch effects and other non-genetic covariates, we regressed out the germ-line group and the mitochondrial percent from the original expression data and then aggregated the expression level of downstream genes with the same perturbation. Finally, the aggregated data is centered and standardized before input into SuSiE PCA.

As a comparison, we also run the sparse PCA with the same K in both datasets. While choosing an appropriate sparsity parameter alpha in sparse PCA is less straightforward than tuning L in the SuSiE PCA, as we cannot directly pull all of the non-zero genes even with a fairly large alpha (higher sparsity). To make a reasonable comparison, we run sparse PCA with a set of alpha from 1 to 20 and choose two models to compare: first, choose the model giving the highest PVE, then investigate the model having a similar level of PVE with SuSiE PCA.

Acknowledgments

This work was funded by the National Institutes of Health (NIH) under awards R01HG012133, P01CA196569.

Author contributions

D.Y. and N.M. developed the method. D.Y. performed analysis. D.Y. and N.M. edited and approved the manuscript.

Declaration of interests

The authors declare no competing interests.

Published: October 13, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2023.108181.

Contributor Information

Dong Yuan, Email: dongyuan@usc.edu.

Nicholas Mancuso, Email: nmancuso@usc.edu.

Supplemental information

Data and code availability

-

•

This paper analyzes existing, publicly available data, i.e., the GTEx z-score dataset14 and the perturb-seq data.15 These accession numbers for the datasets are listed in the key resources table.

-

•

All original codes related to SuSiE PCA have been deposited and are publicly available on GitHub (https://github.com/mancusolab/susiepca).

-

•

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- 1.Hotelling H. Analysis of a complex of statistical variables into principal components. J. Educ. Psychol. 1933;24:417–441. doi: 10.1037/h0071325. [DOI] [Google Scholar]

- 2.Patterson N., Price A.L., Reich D. Population Structure and Eigenanalysis. PLoS Genet. 2006;2 doi: 10.1371/journal.pgen.0020190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Agrawal A., Chiu A.M., Le M., Halperin E., Sankararaman S. Scalable probabilistic PCA for large-scale genetic variation data. PLoS Genet. 2020;16 doi: 10.1371/journal.pgen.1008773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.McVean G. A Genealogical Interpretation of Principal Components Analysis. PLoS Genet. 2009;5 doi: 10.1371/journal.pgen.1000686. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Jolliffe I.T. Springer-Verlag; 1986. Principal Component Analysis. [Google Scholar]

- 6.Zou H., Hastie T., Tibshirani R. Sparse Principal Component Analysis. J. Comput. Graph Stat. 2006;15:265–286. doi: 10.1198/106186006X113430. [DOI] [Google Scholar]

- 7.Bishop C. Advances in Neural Information Processing Systems. MIT Press; 1998. Bayesian PCA. [Google Scholar]

- 8.Guan Y., Dy J. Proceedings of the Twelth International Conference on Artificial Intelligence and Statistics. PMLR; 2009. Sparse Probabilistic Principal Component Analysis; pp. 185–192. [Google Scholar]

- 9.Ning B. Spike and slab Bayesian sparse principal component analysis. arXiv. 2021 doi: 10.48550/arXiv.2102.00305. Preprint at. [DOI] [Google Scholar]

- 10.Armagan A., Clyde M., Dunson D. Advances in Neural Information Processing Systems. Curran Associates, Inc.; 2011. Generalized Beta Mixtures of Gaussians. [PMC free article] [PubMed] [Google Scholar]

- 11.Zhao S., Gao C., Mukherjee S., Engelhardt B.E. Bayesian group factor analysis with structured sparsity. J. Mach. Learn. Res. 2016;17:1–47. [Google Scholar]

- 12.Wang W., Ge L., Zhang L., Liu L., Zhang X., Ma X. Empirical bayes matrix factorization. Hum. Fertil. 2021;22:1–11. [Google Scholar]

- 13.Wang G., Sarkar A., Carbonetto P., Stephens M. A simple new approach to variable selection in regression, with application to genetic fine mapping. J. R. Stat. Soc. Series B Stat. Methodol. 2020;82:1273–1300. doi: 10.1111/rssb.12388. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.GTEx Consortium. Ardlie K.G., Deluca D.S., Segrè A.V., Sullivan T.J., Young T.R., Gelfand E.T., Trowbridge C.A., Maller J.B., Tukiainen T., et al. The Genotype-Tissue Expression (GTEx) pilot analysis: Multitissue gene regulation in humans. Science. 2015;348:648–660. doi: 10.1126/science.1262110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Replogle J.M., Saunders R.A., Pogson A.N., Hussmann J.A., Lenail A., Guna A., Mascibroda L., Wagner E.J., Adelman K., Lithwick-Yanai G., et al. Mapping information-rich genotype-phenotype landscapes with genome-scale Perturb-seq. Cell. 2022;185:2559–2575.e28. doi: 10.1016/j.cell.2022.05.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Meng F., Richer M., Tehrani A., La J., Kim T.D., Ayers P.W., Heidar-Zadeh F. Procrustes: A python library to find transformations that maximize the similarity between matrices. Comput. Phys. Commun. 2022;276 doi: 10.1016/j.cpc.2022.108334. [DOI] [Google Scholar]

- 17.Borg I., Groenen P. 2005. Modern Multidimensional Scaling: Theory and Applications. (Springer Series in Statistics)). [DOI] [Google Scholar]

- 18.Bradbury J., Frostig R., Hawkins P., Johnson M.J., Leary C., Maclaurin D., Necula G., Paszke A., VanderPlas J., Wanderman-Milne S., et al. 2018. JAX: Composable Transformations of Python+NumPy Programs. [Google Scholar]

- 19.Cohn B.A., Cirillo P.M., Christianson R.E. Prenatal DDT Exposure and Testicular Cancer: A Nested Case-Control Study. Arch. Environ. Occup. Health. 2010;65:127–134. doi: 10.1080/19338241003730887. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Ge S.X., Jung D., Yao R. ShinyGO: a graphical gene-set enrichment tool for animals and plants. Bioinformatics. 2020;36:2628–2629. doi: 10.1093/bioinformatics/btz931. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Amrute J.M., Perry A.M., Anand G., Cruchaga C., Hock K.G., Farnsworth C.W., Randolph G.J., Lavine K.J., Steed A.L. Cell specific peripheral immune responses predict survival in critical COVID-19 patients. Nat. Commun. 2022;13:882. doi: 10.1038/s41467-022-28505-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Garg M., Li X., Moreno P., Papatheodorou I., Shu Y., Brazma A., Miao Z. Meta-analysis of COVID-19 single-cell studies confirms eight key immune responses. Sci. Rep. 2021;11 doi: 10.1038/s41598-021-00121-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Signorile A., Sgaramella G., Bellomo F., De Rasmo D. Prohibitins: A Critical Role in Mitochondrial Functions and Implication in Diseases. Cells. 2019;8:71. doi: 10.3390/cells8010071. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Artal-Sanz M., Tsang W.Y., Willems E.M., Grivell L.A., Lemire B.D., van der Spek H., Nijtmans L.G.J. The mitochondrial prohibitin complex is essential for embryonic viability and germline function in Caenorhabditis elegans. J. Biol. Chem. 2003;278:32091–32099. doi: 10.1074/jbc.M304877200. [DOI] [PubMed] [Google Scholar]

- 25.Artal-Sanz M., Tavernarakis N. Prohibitin couples diapause signalling to mitochondrial metabolism during ageing in C. elegans. Nature. 2009;461:793–797. doi: 10.1038/nature08466. [DOI] [PubMed] [Google Scholar]

- 26.Opper M., Saad D. MIT Press; 2001. Advanced Mean Field Methods: Theory and Practice. [Google Scholar]

- 27.Andrieu C., de Freitas N., Doucet A., Jordan M.I. An Introduction to MCMC for Machine Learning. Mach. Learn. 2003;50:5–43. doi: 10.1023/A:1020281327116. [DOI] [Google Scholar]

- 28.Jordan M.I., Ghahramani Z., Jaakkola T.S., Saul L.K. An Introduction to Variational Methods for Graphical Models. Mach. Learn. 1999;37:183–233. doi: 10.1023/A:1007665907178. [DOI] [Google Scholar]

- 29.Kullback S., Leibler R.A. On Information and Sufficiency. Ann. Math. Stat. 1951;22:79–86. [Google Scholar]

- 30.Tanaka T. Advances in Neural Information Processing Systems. MIT Press; 1998. A Theory of Mean Field Approximation. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

-

•

This paper analyzes existing, publicly available data, i.e., the GTEx z-score dataset14 and the perturb-seq data.15 These accession numbers for the datasets are listed in the key resources table.

-

•

All original codes related to SuSiE PCA have been deposited and are publicly available on GitHub (https://github.com/mancusolab/susiepca).

-

•

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.