Key Points

Question

Does natural language processing accurately identify hospitalizations for heart failure based on medical record text from multiple medical centers?

Findings

This secondary analysis of a randomized clinical trial validated a natural language processing model developed within a single healthcare system to identify heart failure hospitalizations.

Meaning

The findings indicate that natural language processing may improve the efficiency of future multicenter clinical trials by accurately identifying clinical events at scale.

This secondary analysis of a randomized clinical trial validates the findings of a single-center study regarding the use of natural language processing for the adjudication of heart failure hospitalization.

Abstract

Importance

The gold standard for outcome adjudication in clinical trials is medical record review by a physician clinical events committee (CEC), which requires substantial time and expertise. Automated adjudication of medical records by natural language processing (NLP) may offer a more resource-efficient alternative but this approach has not been validated in a multicenter setting.

Objective

To externally validate the Community Care Cohort Project (C3PO) NLP model for heart failure (HF) hospitalization adjudication, which was previously developed and tested within one health care system, compared to gold-standard CEC adjudication in a multicenter clinical trial.

Design, Setting, and Participants

This was a retrospective analysis of the Influenza Vaccine to Effectively Stop Cardio Thoracic Events and Decompensated Heart Failure (INVESTED) trial, which compared 2 influenza vaccines in 5260 participants with cardiovascular disease at 157 sites in the US and Canada between September 2016 and January 2019. Analysis was performed from November 2022 to October 2023.

Exposures

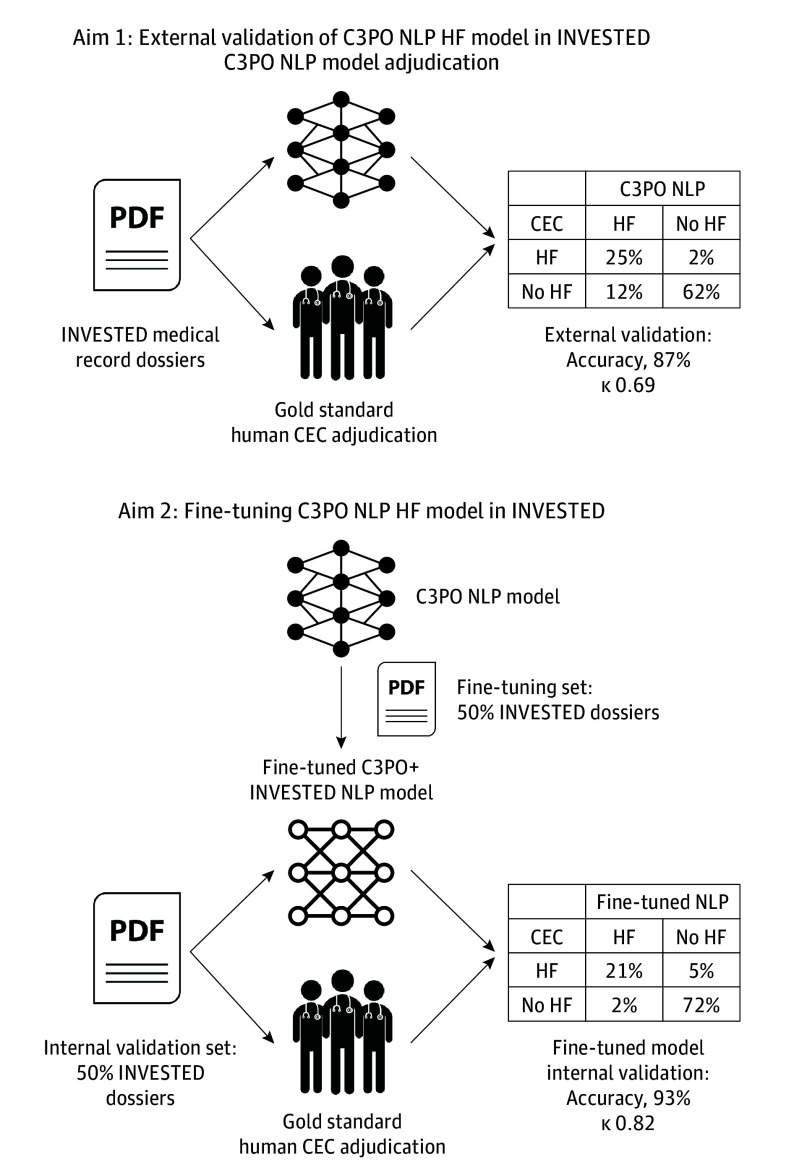

Individual sites submitted medical records for each hospitalization. The central INVESTED CEC and the C3PO NLP model independently adjudicated whether the cause of hospitalization was HF using the prepared hospitalization dossier. The C3PO NLP model was fine-tuned (C3PO + INVESTED) and a de novo NLP model was trained using half the INVESTED hospitalizations.

Main Outcomes and Measures

Concordance between the C3PO NLP model HF adjudication and the gold-standard INVESTED CEC adjudication was measured by raw agreement, κ, sensitivity, and specificity. The fine-tuned and de novo INVESTED NLP models were evaluated in an internal validation cohort not used for training.

Results

Among 4060 hospitalizations in 1973 patients (mean [SD] age, 66.4 [13.2] years; 541 [27.4%] female and 1432 [72.6%] male), 1074 hospitalizations (26%) were adjudicated as HF by the CEC. There was good agreement between the C3PO NLP and CEC HF adjudications (raw agreement, 87% [95% CI, 86-88]; κ, 0.69 [95% CI, 0.66-0.72]). C3PO NLP model sensitivity was 94% (95% CI, 92-95) and specificity was 84% (95% CI, 83-85). The fine-tuned C3PO and de novo NLP models demonstrated agreement of 93% (95% CI, 92-94) and κ of 0.82 (95% CI, 0.77-0.86) and 0.83 (95% CI, 0.79-0.87), respectively, vs the CEC. CEC reviewer interrater reproducibility was 94% (95% CI, 93-95; κ, 0.85 [95% CI, 0.80-0.89]).

Conclusions and Relevance

The C3PO NLP model developed within 1 health care system identified HF events with good agreement relative to the gold-standard CEC in an external multicenter clinical trial. Fine-tuning the model improved agreement and approximated human reproducibility. Further study is needed to determine whether NLP will improve the efficiency of future multicenter clinical trials by identifying clinical events at scale.

Introduction

Heart failure (HF) is the leading cause of hospitalization in the US.1 Identification and classification of HF events is challenging due to clinical and phenotypic heterogeneity as well as the absence of definitive and scalable diagnostic testing. Yet the validity of HF randomized clinical trials, epidemiology, and outcomes research depends on accurate identification of HF hospitalization events. The time-intensive gold-standard for defining HF outcomes in most randomized clinical trials is for local sites to identify potential events and submit medical records to a central clinical events committee (CEC), a group of expert physicians who manually review the records and determine whether the case meets established criteria.2 For larger (primarily observational) studies for which CEC adjudication is impractical, International Classification of Disease (ICD) codes are commonly used to define HF hospitalizations.3,4 But ICD codes for HF are known to be imprecise: approximately 40% of hospitalizations with primary position ICD codes for HF do not meet established criteria on medical record review.5,6,7,8 Automated adjudication of HF hospitalizations from medical records at scale could streamline or reduce the need for physician CEC review and therefore may represent 1 step toward larger and less expensive clinical trials and more precise clinical outcome data for epidemiology research.

Natural language processing (NLP) is a potential strategy for automated clinical outcome adjudication. Innovations in pretrained transformer-based NLP architectures have enabled the development of accurate models for specific document classification tasks requiring only modest numbers of expert-labeled training examples.9,10 In prior work, we developed and internally validated a transformer-based NLP model that adjudicates HF hospitalizations from discharge summary text in a single-center electronic health record (EHR) cohort, the Community Care Cohort Project (C3PO).8 Since future applications of NLP adjudication at scale would include many centers, it is essential to evaluate whether the accuracy of this model generalizes beyond a single center before considering implementation. Indeed, machine learning models can be overfit to a particular care setting and thus generalize poorly.11,12,13 In this study, we externally validated the C3PO NLP model outside the health care system in which it was developed and compared it with a gold-standard CEC in a multicenter National Institutes of Health–sponsored randomized clinical trial, the Influenza Vaccine to Effectively Stop Cardio Thoracic Events and Decompensated Heart Failure (INVESTED) study.14,15

Methods

Patients and Clinical Adjudication

The design and primary results of the INVESTED trial have been published.14,15 Briefly, INVESTED enrolled 5260 patients with recent acute myocardial infarction or HF hospitalization and at least 1 additional risk factor at 157 sites in the US and Canada from September 2016 through January 2019. Participants were randomized to high-dose trivalent influenza vaccine or standard-dose quadrivalent influenza vaccine. INVESTED was sponsored by the National Heart, Lung, and Blood Institute. Institutional review boards at each site approved the study protocol, which is presented in Supplement 1. All patients provided written informed consent. Reporting followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline. Race and ethnicity data were assessed per National Institutes of Health guidelines and were self-reported based on fixed categories.

INVESTED sites submitted medical records (admission note or discharge summary at minimum) for all deaths and hospitalizations considered by the site investigator as potentially for a cardiopulmonary reason for adjudication by the central CEC at Brigham and Women’s Hospital. The cause of each hospitalization was adjudicated according to prespecified criteria based on the 2017 Standardized Data Collection for Cardiovascular Trials Initiative Cardiovascular and Stroke Endpoint Definitions for Clinical Trials.2 Each hospitalization dossier was adjudicated independently by 2 CEC physician reviewers. J.W.C. was a reviewer for both C3PO and the INVESTED CEC (272 adjudications). When the reviewers disagreed, the case was discussed in a CEC, with the chair serving as tiebreaker.

Preparation of INVESTED Dossiers for NLP Adjudication

Adjudication dossiers were converted from Portable Document Format (PDF) to Tag Image File Format (TIFF) image files using Ghostscript Seamless16 and from TIFF to text using Tesseract optical character recognition (eMethods in Supplement 2).17 To assess NLP performance on adjudicating the hospitalization events based on the adjudication dossiers, a cover sheet describing the site investigator’s adjudication of the hospitalization’s cause and a brief summary of the event was removed. Hospitalizations in INVESTED were defined as unplanned admissions. Elective procedures, emergency department visits, and outpatient visits submitted to the CEC (n = 364) were not adjudicated and therefore excluded from this analysis. Dossiers with no available medical records beyond the cover sheet (n = 270), or with medical records in French (n = 142) were excluded. The INVESTED CEC event definitions included a nonspecific cardiopulmonary hospitalization category for hospitalizations that could be secondary to either congestive HF or pulmonary etiology but for which the primary etiology could not be determined. The 300 hospitalizations (7%) that received a final CEC adjudication of nonspecific cardiopulmonary were excluded from primary NLP validation but included in sensitivity analysis.
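The PDF-to-TIFF-to-text conversion described above can be illustrated with a minimal sketch that calls the Ghostscript and Tesseract command-line tools. The output device (`tiffgray`), the 300-dpi resolution, and the helper names `ghostscript_cmd`, `tesseract_cmd`, and `dossier_to_text` are assumptions made for illustration; the settings actually used are described in the eMethods and are not reproduced here.

```python
import subprocess
from pathlib import Path


def ghostscript_cmd(pdf: Path, tiff: Path, dpi: int = 300) -> list[str]:
    """Build a Ghostscript command that renders a PDF to a multipage TIFF."""
    return [
        "gs", "-dNOPAUSE", "-dBATCH", "-q",
        "-sDEVICE=tiffgray",        # assumed device; not stated in the source
        f"-r{dpi}",                 # assumed resolution; not stated in the source
        f"-sOutputFile={tiff}",
        str(pdf),
    ]


def tesseract_cmd(tiff: Path, out_base: Path) -> list[str]:
    """Build a Tesseract command; Tesseract writes its text to <out_base>.txt."""
    return ["tesseract", str(tiff), str(out_base)]


def dossier_to_text(pdf: Path, workdir: Path) -> str:
    """Convert one dossier PDF to plain text via an intermediate TIFF."""
    tiff = workdir / (pdf.stem + ".tiff")
    out_base = workdir / pdf.stem
    subprocess.run(ghostscript_cmd(pdf, tiff), check=True)
    subprocess.run(tesseract_cmd(tiff, out_base), check=True)
    return Path(str(out_base) + ".txt").read_text(encoding="utf-8")
```

Calling `dossier_to_text(Path("dossier.pdf"), Path("work"))` requires both tools to be installed and on the system path. Removal of the cover sheet would be a separate step that is not shown.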

External Validation of the C3PO Heart Failure NLP Model

The development and validation of the C3PO NLP model for HF hospitalization using the Clinical Longformer pretrained architecture has been previously described (eMethods in Supplement 2).8,18,19 The design of this validation study is outlined in Figure 1. The text from each INVESTED medical record dossier was submitted to the C3PO NLP model, yielding a continuous C3PO HF NLP score. For the 1657 dossiers (41%) longer than the 4096-token maximum that Clinical Longformer can accommodate, only the first 4096 tokens were submitted (eFigure 1 in Supplement 2). The threshold of NLP score to define a HF adjudication (greater than 0.958) was maintained from the previous study without recalibration.
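The truncation and thresholding rules in this paragraph can be written down in a few lines. This is a sketch only: the function names are hypothetical, tokenization and model inference are omitted, and the token IDs and scores in the usage lines are made-up values.

```python
MAX_TOKENS = 4096      # maximum input length of Clinical Longformer, per the text
HF_THRESHOLD = 0.958   # score threshold carried over from the C3PO study


def truncate_tokens(token_ids: list[int], max_tokens: int = MAX_TOKENS) -> list[int]:
    """Keep only the first max_tokens tokens of a dossier."""
    return token_ids[:max_tokens]


def adjudicate_hf(score: float, threshold: float = HF_THRESHOLD) -> bool:
    """Call a hospitalization HF when the NLP score is greater than the threshold."""
    return score > threshold


# Hypothetical dossier of 5000 tokens: only the first 4096 reach the model.
dossier = list(range(5000))
model_input = truncate_tokens(dossier)

# Hypothetical scores on either side of the threshold.
calls = [adjudicate_hf(s) for s in (0.50, 0.958, 0.99)]
```

Because the comparison is strict, a score exactly equal to 0.958 is not called HF, matching the "greater than 0.958" wording.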

Figure 1. Study Design.

CEC indicates clinical events committee; C3PO, Community Care Cohort Project; HF, heart failure; NLP, natural language processing.

Fine-Tuning the C3PO NLP Model and Training a De Novo NLP Model in INVESTED

As a second aim, we hypothesized that new NLP models created by fine-tuning the C3PO NLP model or training a new model using a subset of INVESTED records would agree with the CEC adjudication more frequently than the C3PO NLP model. We separated INVESTED hospitalizations randomly into a development set (1954 hospitalizations) and internal validation set (2106 hospitalizations), avoiding overlapping individuals between sets. The fine-tuned C3PO + INVESTED model was created by initializing Clinical Longformer with weights from the C3PO NLP model and further training it on the labeled INVESTED development set. The de novo INVESTED NLP model was created by training Clinical Longformer in the development set without C3PO model weights. Training details are provided in eMethods in Supplement 2. These models were evaluated in the held-out internal validation set against the CEC adjudication. To investigate whether a smaller development set would have been sufficient, we trained versions of these models using subsets of the development set and evaluated them on the identical validation set.
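One way to split hospitalizations at random while keeping each individual in a single set is to randomize patients rather than hospitalizations. The sketch below assumes this approach; the function name, the 50% patient fraction, and the identifiers are hypothetical, and the actual procedure is described in the eMethods.

```python
import random
from collections import defaultdict


def split_by_patient(hosp_to_patient: dict[str, str],
                     dev_fraction: float = 0.5,
                     seed: int = 0) -> tuple[list[str], list[str]]:
    """Assign whole patients to development or validation, then return their hospitalizations."""
    by_patient: dict[str, list[str]] = defaultdict(list)
    for hosp_id, patient_id in hosp_to_patient.items():
        by_patient[patient_id].append(hosp_id)

    patients = sorted(by_patient)              # fixed order so the seed is reproducible
    random.Random(seed).shuffle(patients)
    n_dev = round(len(patients) * dev_fraction)

    dev = [h for p in patients[:n_dev] for h in by_patient[p]]
    val = [h for p in patients[n_dev:] for h in by_patient[p]]
    return dev, val


# Hypothetical example: 6 hospitalizations in 4 patients.
toy = {"h1": "p1", "h2": "p1", "h3": "p2", "h4": "p3", "h5": "p3", "h6": "p4"}
dev_set, val_set = split_by_patient(toy)
```

With a patient-level split, the two sets generally do not contain the same number of hospitalizations, because patients differ in how many hospitalizations they contribute.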

Statistical Analysis

Agreement between the NLP HF adjudications and the final CEC adjudication (HF vs non-HF) was assessed by κ statistic, sensitivity, specificity, and positive and negative predictive value, using the CEC adjudication as the gold standard. 95% CIs for these statistics were reported. C3PO NLP model κ was assessed in key subgroups. Interrater reproducibility between the 2 independent human reviewers on the INVESTED CEC and agreement between site investigator adjudication and the final CEC adjudication were assessed using the same metrics. Analysis was performed from November 2022 to October 2023.

Results

Baseline Characteristics

A total of 4060 adjudicated hospitalizations with available medical records occurred in 1973 patients (mean [SD] age, 66.4 [13.2] years; 541 [27.4%] female and 1432 [72.6%] male; by self-report, 30 participants [1.5%] were Asian, 370 [18.8%] were Black, 16 [0.8%] were First Nation/American Indian, 1498 [75.9%] were White, and 59 [3%] were of another race, including Native Hawaiian, Pacific Islander, more than 1 race, participant did not want to report, participant did not know, and race not available or missing; 136 [6.9%] were Hispanic/Latino, 1822 [92.3%] were non-Hispanic/Latino, and 15 [0.8%] were of another ethnicity, including participants who did not want to report, who did not know, or for whom ethnicity was not available or missing). Baseline characteristics of patients with at least 1 hospitalization event are shown in Table 1. Enrollment occurred at US Veterans Administration (VA) sites in 542 participants (27.5%), non-VA US sites in 1091 (55.3%), and Canadian sites in 340 (17.2%). A total of 1549 (78.5%) qualified for trial entry due to HF hospitalization, and 424 (21.5%) by myocardial infarction; 963 (48.8%) had a current or prior left ventricular ejection fraction of less than 40%.

Table 1. Baseline Characteristics of Patients With At Least 1 Adjudicated Hospitalization.

| Characteristic | No. (%) (N = 1973) |
|---|---|
| Age, mean (SD), y | 66.4 (13.2) |
| Female | 541 (27.4) |
| Male | 1432 (72.6) |
| Randomization period | |
| 2016-2017 | 285 (14.4) |
| 2017-2018 | 1026 (52) |
| 2018-2019 | 662 (33.6) |
| Region and site | |
| Canada | 340 (17.2) |
| US | 1633 (82.8) |
| Veterans Administration | 542 (27.5) |
| Non–Veterans Administration | 1091 (55.3) |
| Raceᵃ | |
| Asian | 30 (1.5) |
| Black | 370 (18.8) |
| First Nation/American Indian | 16 (0.8) |
| White | 1498 (75.9) |
| Otherᵇ | 59 (3) |
| Ethnicityᵃ | |
| Hispanic/Latino | 136 (6.9) |
| Non-Hispanic/Latino | 1822 (92.3) |
| Otherᶜ | 15 (0.8) |
| Ejection fraction, mean ± SD | 40.5 ± 16.7 |
| New York Heart Association functional class | |
| I | 196 (13.2) |
| II | 698 (46.9) |
| III | 531 (35.7) |
| IV | 63 (4.2) |
| Body mass indexᵈ, mean ± SD | 31.7 ± 8.2 |
| Qualifying event | |
| Heart failure | 1549 (78.5) |
| Myocardial infarction | 424 (21.5) |
| Current or past left ventricular ejection fraction <40% | 963 (48.8) |
| Diabetes | 869 (44) |
| Prior heart failure hospitalization | 486 (24.6) |
| History of myocardial infarction | 309 (15.7) |
| History of atrial fibrillation | 846 (42.9) |
| History of chronic obstructive pulmonary disease | 468 (23.7) |

ᵃ Race and ethnicity were assessed per National Institutes of Health guidelines and were self-reported based on fixed categories.

ᵇ Other race includes Native Hawaiian, Pacific Islander, more than 1 race, participant did not want to report, participant did not know, and race not available or missing.

ᶜ Other ethnicity includes participants who did not want to report, who did not know, or for whom ethnicity was not available or missing.

ᵈ Calculated as weight in kilograms divided by height in meters squared.

External Validation of the Existing NLP Heart Failure Model

Out of 4060 total hospitalizations, the CEC adjudicated 1074 (26%) as HF and 2986 as other causes (Table 2). The C3PO NLP model adjudicated 1486 hospitalizations (37%) as HF, reflecting that the externally calibrated C3PO NLP model was more permissive in adjudicating HF than the CEC. The C3PO NLP model adjudications demonstrated substantial agreement with the final CEC adjudication (raw agreement, 87% [95% CI, 86-88]; κ, 0.69 [95% CI, 0.66-0.72]). C3PO NLP sensitivity was 94% (95% CI, 92-95) and specificity was 84% (95% CI, 83-85). Positive predictive value was 68% (95% CI, 65-70) and negative predictive value, 97% (95% CI, 97-98). Agreement between C3PO NLP and final CEC adjudication appeared to be greater in patients without history of ejection fraction less than 40% (κ, 0.75 [95% CI, 0.70-0.78] vs 0.65 [95% CI, 0.61-0.69]; P for interaction = .004) and in patients 65 years and older (κ, 0.72 [95% CI, 0.68-0.76] vs 0.66 [95% CI, 0.61-0.71]; P for interaction = .048) and was consistent across sex, country and type of site, race, and enrollment based on HF hospitalization vs myocardial infarction (eFigure 2 in Supplement 2). False positive C3PO NLP adjudication for HF was more likely when the CEC adjudicated a non-HF cardiovascular cause (297 of 1375 [22%]), particularly cardiac arrhythmia (64 of 196 [33%]), compared to pulmonary (31 of 290 [11%]) or noncardiopulmonary causes (147 of 1305 [11%]) (eTable 2 in Supplement 2). In a sensitivity analysis in which 300 hospitalizations adjudicated as nonspecific cardiopulmonary cause were included and considered HF, model performance decreased only slightly: accuracy was 85% (95% CI, 84-86) and κ 0.68 (95% CI, 0.65-0.75), compared to 87% (95% CI, 86-88) and 0.69 (95% CI, 0.66-0.72) in the original cohort, respectively.

Table 2. Heart Failure Adjudication by the Community Care Cohort Project (C3PO) Natural Language Processing (NLP) Model Compared to Clinical Events Committee (CEC).

| Human CEC | C3PO NLP model: heart failure, No. (%) | C3PO NLP model: no heart failure, No. (%) | Total, No. (%) |
|---|---|---|---|
| Heart failure | 1009 (25) | 65 (2) | 1074 (26) |
| No heart failure | 477 (12) | 2509 (62) | 2986 (74) |
| Total | 1486 (37) | 2574 (63) | 4060 (100) |
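The agreement statistics reported for the C3PO NLP model follow directly from the 4 cell counts in Table 2. The short sketch below recomputes the point estimates with the CEC as the reference standard; the function name is hypothetical, and CIs are omitted because the interval method is not specified in this section.

```python
def agreement_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Point estimates of agreement for a 2x2 table, with the CEC as reference."""
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    return {
        "raw_agreement": observed,
        "kappa": (observed - expected) / (1 - expected),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Cell counts from Table 2 (NLP call vs CEC adjudication).
m = agreement_metrics(tp=1009, fp=477, fn=65, tn=2509)
for name, value in m.items():
    print(f"{name}: {value:.2f}")
# raw_agreement: 0.87, kappa: 0.69, sensitivity: 0.94,
# specificity: 0.84, ppv: 0.68, npv: 0.97
```

These values match the reported raw agreement of 87%, κ of 0.69, sensitivity of 94%, specificity of 84%, positive predictive value of 68%, and negative predictive value of 97%.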

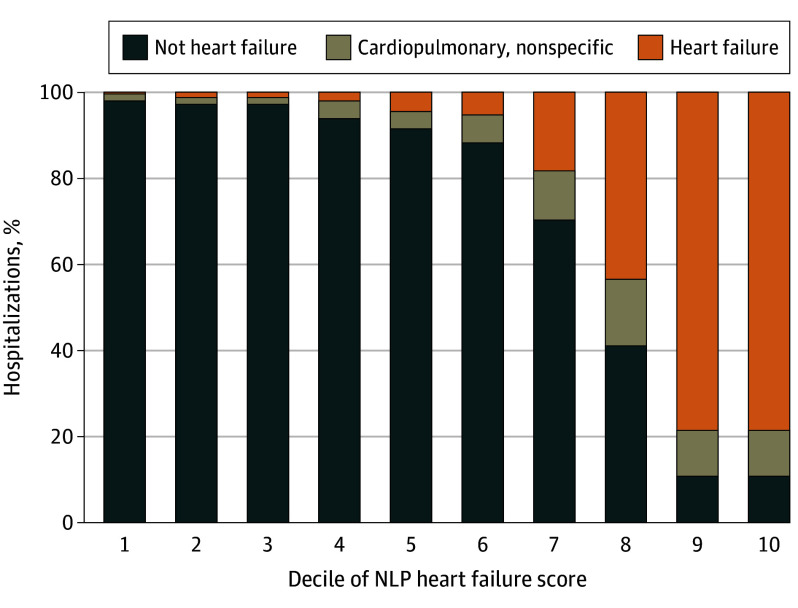

The likelihood of CEC HF adjudication increased with continuous C3PO NLP HF score (Figure 2). Just 4% of hospitalizations with NLP HF scores in deciles 1 to 5 were adjudicated as HF or nonspecific cardiopulmonary by the CEC, compared to 89% in the top 2 deciles.

Figure 2. Human Clinical Events Committee Adjudications in Each Decile of Community Care Cohort Project (C3PO) Natural Language Processing (NLP) Heart Failure Score.

A total of 4360 hospitalizations were included; 960 of these with the exact highest possible C3PO NLP heart failure score were considered deciles 9 and 10, and 439 hospitalizations with identical scores just below the maximal score were considered decile 8. Deciles 1 to 7 include 415 to 430 hospitalizations each.

We evaluated the accuracy of 2 hypothetical hybrid human + NLP adjudication strategies. First, one could manually adjudicate the 20% of hospitalizations with continuous C3PO NLP HF score rank closest to the prespecified threshold for HF (10% in each direction) and use the NLP adjudication in the remaining 80% of hospitalizations. This strategy would have yielded 94% agreement with the CEC (κ, 0.85 [95% CI, 0.82-0.88]) while reducing the number of hospitalizations requiring manual adjudication by 80%. Second, one could rule out hospitalizations in NLP score deciles 1 to 5 and manually adjudicate the rest. This strategy would reduce adjudications by half while ruling out just 16 hospitalizations the CEC considered to be HF.
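The first hybrid strategy can be sketched as follows, under the simplifying assumption that manual review reproduces the CEC adjudication. The function name and the toy scores, labels, and threshold are hypothetical and chosen only to show the mechanics.

```python
def hybrid_adjudicate(scores: list[float],
                      cec_labels: list[bool],
                      threshold: float,
                      review_fraction: float = 0.20) -> tuple[list[bool], set[int]]:
    """Manually review the cases ranked closest to the threshold; trust the NLP call elsewhere."""
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i])    # indices by ascending score
    cut = sum(1 for s in scores if s <= threshold)       # rank position of the threshold
    half = round(n * review_fraction / 2)                # half the review budget on each side
    manual = set(order[max(0, cut - half):min(n, cut + half)])
    calls = [cec_labels[i] if i in manual else scores[i] > threshold
             for i in range(n)]
    return calls, manual


# Hypothetical data: the NLP call disagrees with the CEC only near the threshold.
toy_scores = [i / 10 for i in range(10)]                 # 0.0, 0.1, ..., 0.9
toy_cec = [False] * 4 + [True, False] + [True] * 4
toy_calls, toy_manual = hybrid_adjudicate(toy_scores, toy_cec, threshold=0.45)
```

In this toy example the 2 reviewed cases are exactly the 2 on which the NLP call alone would have disagreed with the CEC, so the hybrid calls match the CEC for all 10 hospitalizations. Real data would not be this clean.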

Fine-Tuning or Retraining the NLP Model in INVESTED

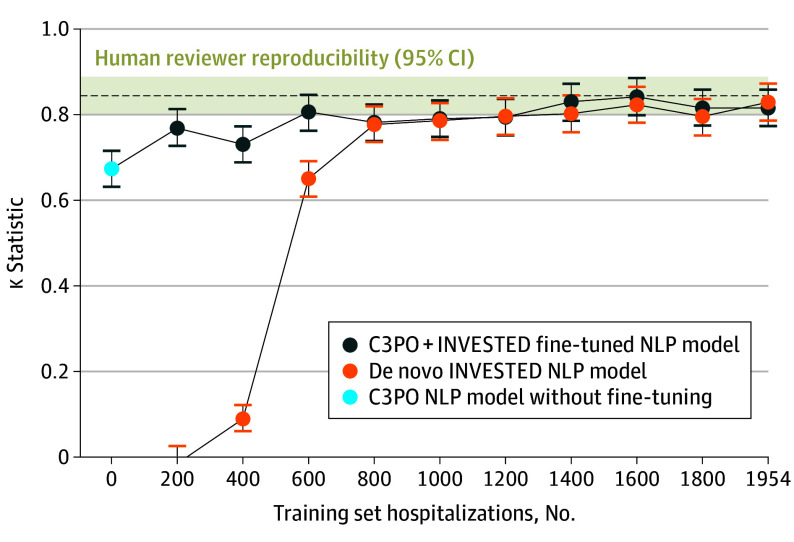

Fine-tuning the C3PO NLP model or training a de novo NLP model using half the INVESTED hospitalizations substantially improved agreement with the gold-standard CEC adjudication. On the held-out internal validation set of INVESTED hospitalizations, the C3PO + INVESTED model demonstrated κ, 0.82 (95% CI, 0.77-0.86); accuracy, 93% (95% CI, 92-94); sensitivity, 81% (95% CI, 77-84); specificity, 98% (95% CI, 97-98); positive predictive value, 93% (95% CI, 90-95); and negative predictive value, 93% (95% CI, 92-95) relative to the CEC. The de novo INVESTED NLP model performed similarly: κ, 0.83 (95% CI, 0.79-0.87); accuracy, 93% (95% CI, 92-95); sensitivity, 84% (95% CI, 81-87); specificity, 97% (95% CI, 96-98); positive predictive value, 90% (95% CI, 88-93); and negative predictive value, 95% (95% CI, 93-96). Both models were superior to the original C3PO NLP model, which demonstrated a κ of 0.67 (95% CI, 0.63-0.72) on the same internal validation set. The continuous NLP scores from these models (without thresholding) provided greater discrimination for the CEC-adjudicated outcome (area under the receiver operating characteristic curve, 0.96 [95% CI, 0.95-0.97] for C3PO + INVESTED and 0.97 [95% CI, 0.96-0.98] for de novo INVESTED) than the original C3PO model (0.94 [95% CI, 0.93-0.95]), indicating that training in INVESTED improved the models beyond mere recalibration. With smaller training sets, the fine-tuned C3PO model was superior to the de novo model at 200 to 600 examples, and the 2 models performed similarly at 800 examples or more (Figure 3).
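The inference that a higher area under the curve reflects more than recalibration rests on a general property: the area depends only on how the scores rank the cases, so any monotone rescaling of the scores leaves it unchanged. The sketch below illustrates this with a pairwise (Mann-Whitney) estimate on made-up scores; the function name and data are hypothetical.

```python
def auroc(scores: list[float], labels: list[bool]) -> float:
    """Probability that a random HF case scores higher than a random non-HF case (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores and CEC labels.
toy_scores = [0.10, 0.40, 0.35, 0.80]
toy_labels = [False, False, True, True]

# A monotone "recalibration" changes every score but not the ranking.
recalibrated = [s ** 0.5 for s in toy_scores]

auc_before = auroc(toy_scores, toy_labels)      # 0.75
auc_after = auroc(recalibrated, toy_labels)     # 0.75
```

Since recalibration alone cannot move this statistic, an increase from 0.94 to 0.96 or 0.97 implies that the retrained models rank hospitalizations differently from the original model.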

Figure 3. Fine-Tuned Community Care Cohort Project (C3PO) + Influenza Vaccine to Effectively Stop Cardio Thoracic Events and Decompensated Heart Failure (INVESTED) and De Novo INVESTED Natural Language Processing (NLP) Models at Varying Training Set Sample Size.

The figure shows the κ statistic for C3PO + INVESTED and de novo INVESTED NLP models with gold-standard clinical events committee (CEC) adjudication on a held-out internal validation set of 2106 INVESTED hospitalizations. The C3PO NLP model without fine-tuning indicates external validation accuracy. Human reviewer reproducibility indicates the agreement between 2 independent CEC reviewers on the internal validation set.

Benchmarking NLP Adjudication to Interrater Reproducibility of 2 Human Reviewers and Site Investigator Adjudication

We compared the NLP models to a benchmark of human performance, defined as the interrater reproducibility between the 2 human CEC reviewers who reviewed each hospitalization. On the internal validation set, the 2 reviewers agreed on whether the cause of hospitalization was HF with agreement of 94% (95% CI, 93-95) and κ of 0.85 (95% CI, 0.80-0.89) (Figure 3). This interrater reproducibility was similar to the agreement with the CEC of the C3PO + INVESTED model (accuracy, 93% [95% CI, 92-94]; κ, 0.82 [95% CI, 0.77-0.86]) and the de novo INVESTED NLP model (accuracy, 93% [95% CI, 92-95]; κ, 0.83 [95% CI, 0.79-0.87]), with modest differences falling within 95% CIs.

The local site investigator adjudicated HF in 557 of 2106 cases (26%) in the internal validation set. The final CEC adjudication agreed with the site investigator in 1925 cases (91%), with κ 0.78 (95% CI, 0.74-0.82). Thus, agreement between site investigator and final CEC adjudications was lower than interreviewer reproducibility or the fine-tuned or de novo retrained INVESTED NLP models but greater than the C3PO model.

Discussion

In this secondary analysis of a randomized clinical trial, we validated and generalized the findings of a single-center study of NLP for clinical outcome adjudication. Identifying clinical events like HF hospitalization in clinical trials and epidemiological cohorts is a labor-intensive process that includes recognizing potential events, collecting medical records, and review of these records by CEC physicians to determine whether they meet prespecified criteria. We previously developed an NLP model for HF hospitalization adjudication within a single-center EHR-based cohort (C3PO).8 In this external validation study, we demonstrate that the C3PO NLP model identified HF hospitalizations from medical record text with 87% agreement with the human CEC without retraining or recalibration in the INVESTED trial. Moreover, fine-tuning the C3PO NLP model or training a new NLP model in half of INVESTED hospitalizations further improved agreement with the CEC up to 93%, which approximated the interrater reproducibility between 2 human reviewers (94%). These results represent a first step toward the application of NLP to streamline the adjudication of clinical outcomes at scale in clinical trials and observational cohorts. Future implementation of NLP for this purpose will require further validation studies across a multitude of EHR systems, care settings, and countries, and integration with methods to collect medical records efficiently.

Rigorous external validation of machine learning models without retraining or recalibration is a prerequisite for meaningful implementation.11,12,13 Single-center models are prone to learning artifacts, such as the floor number of the HF ward or the name of a HF physician, rather than symptoms, signs, and treatment of the disease. Since these artifacts are no longer present in external data sets, models depending on them generalize poorly, a major issue in machine learning that has also been called conceptual reproducibility.11,12 Our results showing good agreement between the NLP and human review in a multicenter trial provide reassurance that the model identifies universal evidence of HF rather than artifacts. Our findings extend other published NLP models for HF by demonstrating external validation from a hospital-based sample in a multicenter clinical trial.20,21

The second key finding of this study is that NLP models which were fine-tuned or de novo trained on a subset of INVESTED hospitalizations attained accuracy on par with human-level adjudication accuracy on the rest of INVESTED. These internal validation results provide optimism that future NLP models trained specifically for clinical trial event adjudication may attain human-level performance on external clinical trial data sets. However, since these models could only be internally validated within INVESTED, their generalizability beyond INVESTED is unknown and will need to be assessed in future work.

Relatively inexpensive end point adjudication by NLP has the potential to improve the efficiency of clinical trials, observational research, and quality improvement. For clinical trials, reducing CEC cost could enable larger sample sizes or trials for indications that currently lack funding while maintaining cause-specific outcomes, like HF hospitalization, which may be most strongly affected by novel therapies and are standard for regulatory approval.7,22 Beyond efficacy outcomes, NLP could enable more consistent and timely reporting of adverse events in clinical trials. Further, NLP adjudication of HF outcomes is broadly applicable to genomic or clinical epidemiology studies within large biobanks and registries, which have been limited by imprecise HF definitions using ICD codes.23 We acknowledge that current NLP performance may be insufficient for some use cases such as phase 3 randomized trials, that NLP models will need to be evaluated further to ensure that results are not biased in new settings, and that NLP can only be applied in trials and cohorts for which medical record data have already been collected. Our results showing good agreement between NLP and CEC adjudication across multiple centers are a first step toward broad use of NLP models for these use cases.

In INVESTED, site investigator adjudication of HF frequently agreed with the CEC, consistent with other studies suggesting that the benefit of CECs (and NLP) may be incremental when site investigator adjudication is available.24,25 This observation highlights that the greatest impact of NLP will occur when site adjudications are not available, such as in EHR cohorts, like C3PO, or pragmatic clinical trials in which sites do not follow up with patients longitudinally. For example, the TRANSFORM trial reduced costs by eliminating postrandomization site involvement and adjudication but was limited to all-cause outcomes.22 Agreement between site investigator and CEC may have been inflated in INVESTED because the CEC but not the NLP model was exposed to the site investigator adjudication and case summary.

As researchers begin to implement NLP adjudication of clinical events in future studies, combining NLP and human adjudication may be a practical approach. One easily implemented strategy is to manually adjudicate a subset of cases (for example, 20%) with equivocal NLP scores and to trust the NLP for hospitalizations with very high or low scores. This approach yielded 94% accuracy in our study while reducing manual adjudications by 80%.

Limitations

Our conclusions should be interpreted in the context of limitations in the study design. First, while NLP has the potential to reduce or eliminate manual medical record review, this is just one of many processes required to obtain clinical outcome data in randomized clinical trials. Additional processes (identifying potential HF hospitalizations, collecting medical records, and excluding events that were not unplanned hospitalizations) will require future studies. Second, we restricted analysis to English language medical records because the C3PO NLP model was developed at Mass General Brigham, where medical records are in English. Future studies are needed to validate current models on translated documents from global trials or develop new models for documents in other languages. Third, exclusion of hospitalizations with unavailable medical records (investigator cover sheet only) may have biased the results toward greater NLP accuracy, since hospitalizations with limited documentation may be difficult to adjudicate. Reported agreement with the CEC may represent a best-case scenario. Fourth, exclusion of hospitalizations adjudicated as nonspecific cardiopulmonary cause may also have inflated our assessment of the NLP model, although in a sensitivity analysis, including these hospitalizations only modestly decreased the κ statistic of NLP. Fifth, this study included only a single trial conducted at relatively homogeneous sites in the US and Canada. The race and ethnicity of hospitalized INVESTED participants may differ from real-world settings, which may impact generalizability of the results. Additional studies evaluating NLP in patients with varying racial backgrounds, hospitals in other regions of the world, and for different disease outcomes are needed. Sixth, INVESTED sites were asked to submit only hospitalizations that were potentially for a cardiopulmonary cause; inclusion of nonsubmitted hospitalizations might have changed the results. Seventh, CEC adjudication is subject to human error and is not perfectly reproducible, as our findings demonstrate. Eighth, dossiers longer than 4096 tokens were truncated before submission to the NLP model; future models accommodating longer text might perform better.

Conclusions

NLP is a promising strategy for identifying clinical events from medical record text at scale. In this study, the single-center C3PO NLP model for adjudication of HF hospitalizations agreed with the gold-standard human CEC 87% of the time in a multicenter clinical trial. Fine-tuning the C3PO NLP model or training a new NLP model further improved agreement with the CEC to 93%, which was similar to human reviewer reproducibility. These results represent a first step toward application of NLP to streamline the identification of clinical outcomes scalably in clinical trials and observational cohorts, although further validation studies including other EHR systems, health systems, and countries and integration with methods to obtain medical records are needed.

Trial protocol

eMethods

eTable 1. Development Test Set Performance of Each Pre-Trained NLP Model During Original Development of the C3PO NLP Model

eTable 2. Rate of NLP Heart Failure by True CEC Adjudication

eFigure 1. Histogram of Token Length of Medical Record Dossiers

eFigure 2. Agreement Between NLP and Human CEC Heart Failure Adjudications in Key Subgroups of Patients

Data sharing statement

References

- 1. Ziaeian B, Fonarow GC. The prevention of hospital readmissions in heart failure. Prog Cardiovasc Dis. 2016;58(4):379-385. doi: 10.1016/j.pcad.2015.09.004

- 2. Hicks KA, Mahaffey KW, Mehran R, et al.; Standardized Data Collection for Cardiovascular Trials Initiative (SCTI). 2017 Cardiovascular and stroke endpoint definitions for clinical trials. Circulation. 2018;137(9):961-972. doi: 10.1161/CIRCULATIONAHA.117.033502

- 3. Heidenreich PA, Zhao X, Hernandez AF, et al. Impact of an expanded hospital recognition program for heart failure quality of care. J Am Heart Assoc. 2014;3(5):e000950. doi: 10.1161/JAHA.114.000950

- 4. Bielinski SJ, Pathak J, Carrell DS, et al. A robust e-epidemiology tool in phenotyping heart failure with differentiation for preserved and reduced ejection fraction: the Electronic Medical Records and Genomics (EMERGE) network. J Cardiovasc Transl Res. 2015;8(8):475-483. doi: 10.1007/s12265-015-9644-2

- 5. Strom JB, Faridi KF, Butala NM, et al. Use of administrative claims to assess outcomes and treatment effect in randomized clinical trials for transcatheter aortic valve replacement: findings from the EXTEND study. Circulation. 2020;142(3):203-213. doi: 10.1161/CIRCULATIONAHA.120.046159

- 6. Danaei G. Causal analyses of nested case-control studies for comparative effectiveness research. PCORI Public Prof Res Rep. Posted online 2021. doi: 10.25302/07.2021.ME.160936748

- 7. Cowie MR, Blomster JI, Curtis LH, et al. Electronic health records to facilitate clinical research. Clin Res Cardiol. 2017;106(1):1-9. doi: 10.1007/s00392-016-1025-6

- 8. Cunningham JW, Singh P, Reeder C, et al. Natural language processing for adjudication of heart failure in the electronic health record. JACC Heart Fail. 2023;11(7):852-854. doi: 10.1016/j.jchf.2023.02.012

- 9. Minaee S, Kalchbrenner N, Cambria E, Nikzad Khasmakhi N, Asgari-Chenaghlu M, Gao J. Deep learning based text classification: a comprehensive review. arXiv. Revised January 4, 2021. doi: 10.48550/arXiv.2004.03705

- 10. Rose T, Stevenson M, Whitehead M. The Reuters Corpus volume 1 - from yesterday’s news to tomorrow’s language resources. In: Proceedings of the Third International Conference on Language Resources and Evaluation (LREC’02). European Language Resources Association (ELRA); 2002. http://www.lrec-conf.org/proceedings/lrec2002/pdf/80.pdf

- 11. McDermott MBA, Wang S, Marinsek N, Ranganath R, Foschini L, Ghassemi M. Reproducibility in machine learning for health research: still a ways to go. Sci Transl Med. 2021;13(586):eabb1655. doi: 10.1126/scitranslmed.abb1655

- 12. Barak-Corren Y, Chaudhari P, Perniciaro J, Waltzman M, Fine AM, Reis BY. Prediction across healthcare settings: a case study in predicting emergency department disposition. NPJ Digit Med. 2021;4(1):169. doi: 10.1038/s41746-021-00537-x

- 13. Yang J, Soltan AAS, Clifton DA. Machine learning generalizability across healthcare settings: insights from multi-site COVID-19 screening. NPJ Digit Med. 2022;5(1):69. doi: 10.1038/s41746-022-00614-9

- 14. Vardeny O, Udell JA, Joseph J, et al. High-dose influenza vaccine to reduce clinical outcomes in high-risk cardiovascular patients: rationale and design of the INVESTED trial. Am Heart J. 2018;202:97-103. doi: 10.1016/j.ahj.2018.05.007

- 15. Vardeny O, Kim K, Udell JA, et al.; INVESTED Committees and Investigators. Effect of high-dose trivalent vs standard-dose quadrivalent influenza vaccine on mortality or cardiopulmonary hospitalization in patients with high-risk cardiovascular disease: a randomized clinical trial. JAMA. 2021;325(1):39-49. doi: 10.1001/jama.2020.23649

- 16. Artifex Software. Ghostscript. Accessed May 9, 2023. http://www.ghostscript.com

- 17. Kay A. Tesseract: an open-source optical character recognition engine. Linux J. 2007;(159):2. https://www.linuxjournal.com/article/9676

- 18. Li Y, Wehbe RM, Ahmad FS, Wang H, Luo Y. Clinical-Longformer and Clinical-BigBird: transformers for long clinical sequences. Published online 2022. doi: 10.48550/ARXIV.2201.11838

- 19. Khurshid S, Reeder C, Harrington LX, et al. Cohort design and natural language processing to reduce bias in electronic health records research. NPJ Digit Med. 2022;5(1):47. doi: 10.1038/s41746-022-00590-0

- 20. Goto S, Homilius M, John JE, et al. Artificial intelligence-enabled event adjudication: estimating delayed cardiovascular effects of respiratory viruses. medRxiv. Published online January 1, 2020. doi: 10.1101/2020.11.12.20230706

- 21. Ambrosy AP, Parikh RV, Sung SH, et al. A natural language processing-based approach for identifying hospitalizations for worsening heart failure within an integrated health care delivery system. JAMA Netw Open. 2021;4(11):e2135152. doi: 10.1001/jamanetworkopen.2021.35152

- 22. Mentz RJ, Anstrom KJ, Eisenstein EL, et al.; TRANSFORM-HF Investigators. Effect of torsemide vs furosemide after discharge on all-cause mortality in patients hospitalized with heart failure: the TRANSFORM-HF randomized clinical trial. JAMA. 2023;329(3):214-223. doi: 10.1001/jama.2022.23924

- 23. Aragam KG, Chaffin M, Levinson RT, et al.; GRADE Investigators; Genetic Risk Assessment of Defibrillator Events (GRADE) Investigators. Phenotypic refinement of heart failure in a national biobank facilitates genetic discovery. Circulation. 2019;139(4):489-501. doi: 10.1161/CIRCULATIONAHA.118.035774

- 24. Tyl B, Lopez Sendon J, Borer JS, et al. Comparison of outcome adjudication by investigators and by a central end point committee in heart failure trials: experience of the SHIFT heart failure study. Circ Heart Fail. 2020;13(7):e006720. doi: 10.1161/CIRCHEARTFAILURE.119.006720

- 25. Carson P, Teerlink JR, Komajda M, et al. Comparison of investigator-reported and centrally adjudicated heart failure outcomes in the EMPEROR-Reduced trial. JACC Heart Fail. 2023;11(4):407-417. doi: 10.1016/j.jchf.2022.11.017
