Abstract

Background

The implementation of simulation-based training (SBT) to teach flexible bronchoscopy (FB) skills to novice trainees has increased during the last decade. However, it is unknown whether SBT is effective to teach FB to novices and which instructional features contribute to training effectiveness.

Research Question

How effective is FB SBT and which instructional features contribute to training effectiveness?

Study Design and Methods

We searched Embase, PubMed, Scopus, and Web of Science for articles on FB SBT for novice trainees, considering all available literature until November 10, 2022. We assessed methodological quality of included studies using a modified version of the Medical Education Research Study Quality Instrument, evaluated risk of bias with relevant tools depending on study design, assessed instructional features, and intended to correlate instructional features to outcome measures.

Results

We identified 14 studies from an initial pool of 544 studies. Eleven studies reported positive effects of FB SBT on most of their outcome measures. However, risk of bias was moderate or high in eight studies, and only six studies were of high quality (modified Medical Education Research Study Quality Instrument score ≥ 12.5). Moreover, instructional features and outcome measures varied highly across studies, and only four studies evaluated intervention effects on behavioral outcome measures in the patient setting. All of the simulation training programs in studies with the highest methodological quality and most relevant outcome measures included curriculum integration and a range in task difficulty.

Interpretation

Although most studies reported positive effects of simulation training programs on their outcome measures, definitive conclusions regarding training effectiveness on actual bronchoscopy performance in patients could not be made because of heterogeneity of training features and the sparse evidence of training effectiveness on validated behavioral outcome measures in a patient setting.

Trial Registration

PROSPERO; No.: CRD42021262853; URL: https://www.crd.york.ac.uk/prospero/

Key Words: bronchoscopy, learning, simulation, training


Take-home Points.

Study Question: How effective is flexible bronchoscopy simulation-based training and which instructional features contribute to training effectiveness?

Results: This systematic review shows that flexible bronchoscopy simulation-based training is effective in improving skills when evaluated in a simulation setting. However, the effects of simulation training on skill performance of novices in a patient setting are less clear because of a lack of studies using homogeneous validated outcome measures. Integrating bronchoscopy simulation training programs in the curriculum and increasing task difficulty appear to contribute to training effectiveness.

Interpretation: To further improve our knowledge of the effectiveness of bronchoscopy simulation-based training and how to optimize these training programs, we advocate that future studies use more homogeneous validated outcome measures, preferably in a patient setting.

Use of simulation in health professions education has increased significantly over the past 2 decades.1 This shift from the traditional apprenticeship model (see one, do one, teach one) toward simulation-based training (SBT) is largely the result of concerns for patient safety.2,3 The apprenticeship method in general, and its use in flexible bronchoscopy (FB) training more specifically, is associated with a higher complication risk4,5 and increased patient discomfort.6 Hence, a shift to SBT might be desirable.

Currently, a variety of FB simulators are used for bronchoscopy training (eg, animal models,7 3-D printed airway models,8 high-fidelity virtual reality simulators9,10). To date, four reviews on bronchoscopy training programs (TPs) using simulators have been published.2,11, 12, 13 The systematic review by Kennedy et al2 concluded that SBT was effective in comparison with no training. The authors also assessed the presence of 10 key instructional features, as identified in an earlier review on features of medical simulation TPs.14 The interpretation of the Kennedy et al2 review is somewhat hampered by the inclusion of a variety of different simulation methods for different types of bronchoscopies (eg, rigid bronchoscopy, FB, endobronchial ultrasound). Furthermore, the studies’ settings were heterogeneous (eg, in an otolaryngology or anesthesiology setting). Bronchoscopy in these settings requires less detailed navigation competencies compared with FB in a pulmonology setting.9 Three additional reviews have been published since then on FB SBT,11, 12, 13 but their interpretation is also hampered by their narrative designs and lack of systematic study quality assessments. In addition, none of these three reviews looked at the effectiveness of instructional features present in the included TPs.

Based on these reviews, there is still no clear-cut answer to the basic question of whether FB SBT is effective in improving basic FB skills of novice pulmonology trainees and which instructional TP features might contribute to training effectiveness. In this review, we therefore aim (1) to summarize the current evidence of the effectiveness of SBT on improving novice bronchoscopists’ basic FB skills, taking into account quality of included studies, and (2) to give an overview of the general and instructional features of the investigated TPs. Furthermore, we describe the relation between instructional features and outcomes to identify the most effective training strategies.

Study Design and Methods

This review was written in compliance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines.15 Because only publicly available data were used and no human subjects were involved, institutional review board approval was not required.

A search was performed in PubMed, Embase, Scopus, and Web of Science, encompassing all available articles up until November 10, 2022, using the search strategies developed in collaboration with an experienced research librarian (e-Table 1). The search was composed of relevant terms related to bronchoscopy, simulation training, and competence. No language criteria were applied. The following selection criteria were used for inclusion of studies into the final analysis: (1) the study design had to be a pretest-posttest, two-group nonrandomized, or randomized design; (2) the study had to include novice trainees regarding bronchoscopy experience; and (3) the intervention had to include at least basic FB SBT, where the simulator is a tool or device with which the trainee physically interacts to simulate an FB. Studies reporting only trainee-reported outcome measures were excluded.

Two reviewers (E. C. F. G. and A. C.) independently performed all evaluations regarding screening and data extraction. Only full texts were considered. In case of discrepancy, a consensus meeting was planned. In case no consensus could be achieved, a third reviewer (F. W. J. M. S.) made the final decision.

First, the reviewers screened all titles and abstracts of studies from the search results against the inclusion criteria. After achieving consensus on which articles to include, they screened reference lists of those articles for other possible relevant articles.

Second, the following characteristics of the full texts of included papers were assessed: study design, number of participants and their level of education, simulator modality, comparator, outcome measures, and intervention’s effects on the outcome measures.

Articles that fully met all inclusion criteria were included for analysis.

The reviewers also evaluated at which Kirkpatrick level16 outcome measures were assessed. The Kirkpatrick model evaluates training impact on four levels: reaction (level 1), learning (level 2), behavior (level 3), and results (level 4).17 In a simulation training setting, level 1 refers to participants’ satisfaction with the training (not applicable in our review because studies reporting only these outcomes were excluded), level 2 refers to an improvement in skills (an improvement in outcomes in a simulation setting), level 3 refers to improved on-the-job behavior (an improvement in bronchoscopy performance in a patient setting), and level 4 refers to improvement in patient outcomes18 (eg, less discomfort, fewer complications).

To prevent bias, the name of the journal, authors, abstract, and discussion sections were removed from the articles for the three reviewers in all their further evaluations. The reviewers then assessed the methodological quality of studies using the modified Medical Education Research Study Quality Instrument (mMERSQI).19 On the original instrument, a score of 4.5 to 8.5 indicates low quality, 9.0 to 13.0 indicates moderate quality, and 13.5 to 18.0 indicates high quality.20 The tool was adapted in the validity of the evaluation instrument domain, which was considered open to interpretation in this setting and therefore not fully applicable to the current review. This domain was transformed into a single known-groups comparison parameter, for which a positive score was given if the instrument had any proven (or referenced) validity in terms of a known-groups comparison. Because the maximum score of our mMERSQI tool was 2.0 points lower than that of the original instrument, we adapted the interpretation of the scores accordingly: 4.5 to 8.0 indicating low quality, 8.5 to 12.0 indicating moderate quality, and 12.5 to 16.0 indicating high quality.
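The adapted cutoffs described above can be written as a small lookup. The following Python sketch is illustrative only: the function name `classify_mmersqi` is hypothetical, and the range check simply assumes that scores lie between 4.5 and 16.0, as the stated bands imply.

```python
def classify_mmersqi(score: float) -> str:
    """Map a modified MERSQI score to the quality band used in this review.

    Bands (taken from the text): 4.5-8.0 low, 8.5-12.0 moderate, 12.5-16.0 high.
    The range check is an assumption added for this sketch.
    """
    if not 4.5 <= score <= 16.0:
        raise ValueError(f"score {score} is outside the assumed 4.5-16.0 range")
    if score <= 8.0:
        return "low"
    if score <= 12.0:
        return "moderate"
    return "high"


if __name__ == "__main__":
    for s in (8.0, 12.0, 12.5, 14.0):
        print(s, classify_mmersqi(s))
```

Under these cutoffs, a study scoring 12.0 is classed as moderate quality, whereas one scoring 12.5 is classed as high quality.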

Risk of bias (RoB) was determined with different tools depending on study design21,22 (Table 1). For each study, the reviewers calculated how many items they could answer positively, where a positive score for an item means that the study had a low RoB for that item. Next, they divided the total number of positive items by the number of applicable items for that study and transformed all scores to a final score on the original scale of the RoB tool.
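The rescaling described above amounts to a single proportion multiplied by the maximum score of the tool. The sketch below is a minimal illustration under stated assumptions: the function name `rob_final_score` is hypothetical, and rounding to one decimal place is assumed because that is how the final scores are reported in this review.

```python
def rob_final_score(positive_items: int, applicable_items: int, tool_max: int) -> float:
    """Rescale the share of positively answered items to the tool's original scale.

    positive_items: items indicating low risk of bias
    applicable_items: items that applied to the study
    tool_max: maximum score of the tool (12, 9, or 14 in this review)
    """
    if applicable_items <= 0:
        raise ValueError("applicable_items must be positive")
    if not 0 <= positive_items <= applicable_items:
        raise ValueError("positive_items must lie between 0 and applicable_items")
    # One-decimal rounding is an assumption made to match the reported scores.
    return round(positive_items / applicable_items * tool_max, 1)


if __name__ == "__main__":
    # Example: 8 of 10 applicable items positive on the 12-point pretest-posttest tool.
    print(rob_final_score(8, 10, 12))
```

A higher final score therefore corresponds to a lower RoB, because every positive item counts as low risk for that item.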

Table 1.

Risk of Bias Tool Used for Each Study Design

| Study Design | Risk of Bias Tool | Source | Maximum Score |
|---|---|---|---|
| Pretest-posttest | Quality Assessment Tool for Before-After (Pre-Post) Studies With No Control Group | National Heart, Lung, and Blood Institute22 | 12 |
| Two-group nonrandomized | Critical Appraisal Tool for Quasi-Experimental Studies (nonrandomized experimental studies) | Tufanaru et al21 | 9 |
| Randomized controlled trial | Quality Assessment of Controlled Intervention Studies | National Heart, Lung, and Blood Institute22 | 14 |

Finally, all studies were carefully assessed for the general and instructional features listed in Table 2. Features not explicitly mentioned in a study were assumed not to be present. In case the reviewers could not extract all characteristics from the publication, they contacted the authors to request further information.

Table 2.

General and Instructional Features and Definitions

| Feature Category | Feature | Definition |
|---|---|---|
| General | Duration | Training duration in hours and days |
| | Assessment by | Assessment by simulator, observer, or both |
| | Observer instruction | Observer instruction described |
| | Validity evidence reported/referred | Use of validated assessment tool/procedure or referred to known-groups comparison for the assessment tool/procedure |
| Instructional designa | Clinical variation | Multiple different scenarios were present |
| | Curriculum integration | Training was a part of the curriculum |
| | Feedback | Feedback was provided by an instructor |
| | Group practice | Training occurred in a group |
| | Individualized learning | Training could be tailored to the trainee depending on individual performance |
| | Mastery learning | Trainee must attain a predefined level of performance |
| | Prestudy | Participants had to study or watch a video or presentation before the training |
| | Range in task difficulty | There was a variation in task difficulty |

a These features were partially based on a 2005 study by Issenberg et al.14 Although initially planned, the following three features were left out: (1) multiple learning strategies (because no clear-cut definition of a learning strategy could be found), (2) number of learning modalities (because whenever training programs included more learning modalities, these were always videos or books, which were already taken into account in prestudy), and (3) repetition (because repeating a task multiple times is almost always possible when training on a simulator).

Although a meta-analysis was planned, this proved impossible because of the high level of heterogeneity of the interventions and outcomes in the included studies.23 Therefore, the reviewers evaluated the methodological quality and characteristics of all studies and related these to their results.

Results

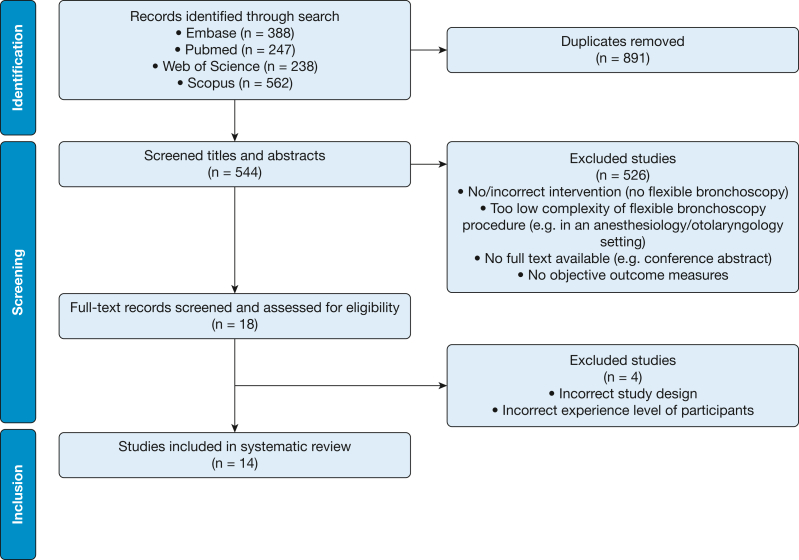

The search yielded 544 articles after removal of duplicates. Initially, 18 studies ended up meeting the inclusion criteria (Fig 1). Reference list analysis of those studies did not lead to any other relevant articles. After evaluating the full texts of these studies, the reviewers were undecided about five studies. The third reviewer excluded four of those studies because the design or the participants’ experience level did not meet the inclusion criteria.24, 25, 26, 27

Figure 1.

Flow diagram of the systematic review.

Methodological quality of studies was moderate to high, with mMERSQI scores ranging from 10 to 14 on a 16-point scale (mean ± SD, 12.2 ± 1.2) (Table 3).6,9,10,28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38 Six studies had a high mMERSQI score (≥ 12.5).28, 29, 30, 31, 32, 33 The score differences were mainly caused by differences in study design.
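As a plausibility check, the summary statistics above can be recomputed from the mMERSQI column of Table 3. The sketch below assumes a sample SD (n − 1 denominator), which the review does not specify; the dictionary name and the "ref" labels are illustrative only.

```python
from statistics import mean, stdev

# mMERSQI scores copied from Table 3 (keys are labels for this sketch only).
MMERSQI_SCORES = {
    "Colt (ref 6)": 11, "Ost (ref 28)": 13, "Blum (ref 29)": 13,
    "Wahidi (ref 33)": 14, "Colt (ref 31)": 13, "Bjerrum (ref 35)": 12,
    "Krogh (ref 32)": 13.5, "Bjerrum (ref 10)": 12, "Bjerrum (ref 36)": 12,
    "Gopal (ref 37)": 11.5, "Veaudor (ref 9)": 10, "Feng (ref 34)": 11,
    "Schertel (ref 38)": 11, "Siow (ref 30)": 14,
}

scores = list(MMERSQI_SCORES.values())
mean_score = mean(scores)
sd_score = stdev(scores)  # sample SD; an assumption, not stated in the review
high_quality = [name for name, s in MMERSQI_SCORES.items() if s >= 12.5]

if __name__ == "__main__":
    print(f"n = {len(scores)}, range = {min(scores)} to {max(scores)}")
    print(f"mean ± SD = {mean_score:.1f} ± {sd_score:.1f}")
    print(f"high-quality studies (>= 12.5): {len(high_quality)}")
```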

Table 3.

Study Characteristics of Included Studies

| Study | Design | No. of Participants (IG/CG)a | Experience Level | Simulator Modality | Comparator | Outcome Measures | mMERSQI |
|---|---|---|---|---|---|---|---|
| Colt et al6 | P-P | 5 | Novice pulmonary and critical care medicine fellows | VR | NA | Simulator (learning) | 11 |
| Ost et al28 | RCT | 6 (3/3) | Novice pulmonary fellows | VR | Conventional training | Patient (behavior) | 13 |
| Blum et al29 | RCT | 10 (5/5) | First-year surgical residents | VR | No training | Patient (behavior) | 13 |
| Wahidi et al33 | 2G-NR | 44 (22/22) | Novice pulmonary fellows | VR | Conventional training | Patient (behavior) | 14 |
| Colt et al31 | P-P | 24 | Novice pulmonary and critical care fellows | Unknown | NA | Simulator (learning) | 13 |
| Bjerrum et al35 | P-P | 47 | Medical students | VR | NA | Simulator (learning) | 12 |
| Krogh et al32 | RCT | 20 (10/10) | Medical students | VR | No training | Simulator (learning) | 13.5 |
| Bjerrum et al10 | P-P | 36 | Medical students | VR | NA | Simulator (learning) | 12 |
| Bjerrum et al36 | P-P | 20 | Physicians in training | VR | NA | Simulator (learning) | 12 |
| Gopal et al37 | P-P | 47 | Medical students | VR | NA | Simulator (learning) | 11.5 |
| Veaudor et al9 | P-P | 8 | Novice first-year pulmonology residents | VR | NA | Simulator (learning) | 10 |
| Feng et al34 | P-P | 28 | Medical students | Part-task trainer | NA | Simulator (learning) | 11 |
| Schertel et al38 | P-P | 54 | Medical students | VR | NA | Simulator (learning) | 11 |
| Siow et al30 | 2G-NR | 18 (8/10) | Pulmonary medicine residents | VR | Conventional training | Patient (behavior) | 14 |

2G-NR = two-group nonrandomized; CG = control group; IG = intervention group; mMERSQI = modified Medical Education Research Study Quality Instrument; NA = not applicable; P-P = pretest-posttest; RCT = randomized controlled trial; VR = virtual reality.

a For pretest-posttest studies, only one number is shown because those studies do not have a CG.

Table 3 shows study characteristics. Most (n = 9) used a pretest-posttest design, and the number of participants in all included studies ranged from five to 54. Twelve studies used a virtual-reality simulator,6, 9, 10, 28, 29, 30, 32, 33, 35, 36, 37, 38 one study used a part-task trainer,34 and for one study, the reviewers could not extract the used simulation equipment from the text.31 Ten studies measured outcomes in a simulation setting6,9,10,31,32,34, 35, 36, 37, 38 (eg, number of wall contacts, [modified] validated Bronchoscopy Skills and Tasks Assessment Tool [BSTAT]).39 Four studies measured Kirkpatrick (behavioral) level 3 outcomes (eg, BSTAT for a bronchoscopy performed on a patient).28, 29, 30,33

RoB scores of included studies are described in Table 4; a higher score indicates a lower RoB. RoB scores of pretest-posttest studies ranged from 4.4 (Gopal et al37 and Schertel et al38) to 9.6 (Bjerrum et al36) on a 12-point scale (mean ± SD, 6.4 ± 1.8). Only two studies10,36 had relatively high RoB scores (8.4 and 9.6) and were therefore considered to have a low RoB. The two two-group nonrandomized design studies30,33 had a low RoB (final score of 7 on a 9-point scale). The three randomized controlled trials had a moderate to low RoB, with scores ranging from 7.0 (Ost et al28) to 10.0 (Krogh et al32) on a 14-point scale.

Table 4.

Overview of Studies’ Risk of Bias Scores

| Design | Study | Positive Items | Applicable Items | Final Score |
|---|---|---|---|---|
| Pretest-posttest (maximum score 12) | Colt et al6 | 5 | 11 | 5.5 |
| | Colt et al31 | 6 | 11 | 6.5 |
| | Bjerrum et al35 | 6 | 10 | 7.2 |
| | Bjerrum et al10 | 7 | 10 | 8.4 |
| | Bjerrum et al36 | 8 | 10 | 9.6 |
| | Gopal et al37 | 4 | 11 | 4.4 |
| | Veaudor et al9 | 5 | 11 | 5.5 |
| | Feng et al34 | 6 | 11 | 6.5 |
| | Schertel et al38 | 4 | 11 | 4.4 |
| Two-group nonrandomized (maximum score 9) | Wahidi et al33 | 7 | 9 | 7.0 |
| | Siow et al30 | 7 | 9 | 7.0 |
| Randomized controlled trial (maximum score 14) | Ost et al28 | 7 | 14 | 7.0 |
| | Blum et al29 | 9 | 14 | 9.0 |
| | Krogh et al32 | 10 | 14 | 10.0 |

The final score was calculated by dividing the number of positive items by the number of applicable items, transformed to the original maximum possible score of the risk of bias tool. Pretest-posttest study scores were transformed to a final score on a 12-point scale, two-group nonrandomized study scores were transformed to a final score on a 9-point scale, and randomized controlled trial design study scores were transformed to a final score on a 14-point scale.

Table 5 shows general features of included studies. There was a large variation in the duration of TPs, ranging from 45 min in 1 day (Feng et al34) to 12 h in 12 weeks (Siow et al30). Five TPs lasted > 1 day.6,28,30,36,37 Trainees were assessed only on the simulator in four studies.10,35,36,38 Of the studies where an observer was (partially) included in the assessment methods, four described whether the observer was instructed on how to assess the trainees.9,31, 32, 33 Studies that included assessment tools used a validated version of the BSTAT,33,34 a modified version of the BSTAT,30,31,37 or another validated bronchoscopy assessment tool.32

Table 5.

Overview of General Features of Included Studies

| Study | Duration | Assessment by | Observer Instruction | Validity Evidence Reported/Referred |
|---|---|---|---|---|
| Colt et al6 | > 1 d | Both | Unknown | No |
| Ost et al28 | > 1 d | Observer | Unknown | No |
| Blum et al29 | < 1 h and > 1 h, 1 d | Observer | Unknown | No |
| Wahidi et al33 | Unknown | Observer | Yes | Yes |
| Colt et al31 | > 1 h, 1 d | Observer | Yes | No |
| Bjerrum et al35 | > 1 h, 1 d | Simulator | NA | Yes |
| Krogh et al32 | < 1 h and > 1 h, 1 d | Observer | Yes | Yes |
| Bjerrum et al10 | > 1 h, 1 d | Simulator | NA | Yes |
| Bjerrum et al36 | > 1 h, 1 d, and > 1 d | Simulator | NA | Yes |
| Gopal et al37 | > 1 d | Observer | Unknown | No |
| Veaudor et al9 | Unknown | Both | Yes | Yes |
| Feng et al34 | < 1 h, 1 d | Observer | Unknown | Yes |
| Schertel et al38 | < 1 h, 1 d | Simulator | NA | No |
| Siow et al30 | > 1 d | Observer | Unknown | No |

NA = not applicable.

Instructional features of included studies are described in Table 6. Apart from clinical variation (present in nine studies) and prestudy (present in 10 studies), there was no dominant pattern of any of the other instructional features.

Table 6.

Overview of Instructional Features of Included Studies

| Study | Clinical Variation | Curriculum Integration | Instructor Feedback | Group Practice | Individualized Learning | Mastery Learning | Prestudy | Range in Task Difficulty |
|---|---|---|---|---|---|---|---|---|
| Colt et al6 | Yes | No | No | No | No | No | Yes | No |
| Ost et al28 | Yes | No | No | No | No | No | Yes | No |
| Blum et al29 | Yes | No | No | No | No | No | No | No |
| Wahidi et al33 | No | Yes | No | No | No | No | No | Yes |
| Colt et al31 | Yes | No | Yes | Yes | No | No | Yes | Yes |
| Bjerrum et al35 | Yes | No | Yes | No | No | No | Yes | No |
| Krogh et al32 | Yes | No | No | No | No | No | Yes | Yes |
| Bjerrum et al10 | Yes | No | Yes | Yes | No | No | Yes | No |
| Bjerrum et al36 | Yes | No | No | No | No | No | Yes | No |
| Gopal et al37 | No | No | No | No | No | No | No | No |
| Veaudor et al9 | No | No | No | No | No | No | Yes | No |
| Feng et al34 | No | No | No | No | No | No | Yes | No |
| Schertel et al38 | No | No | Yes | No | No | No | Yes | Yes |
| Siow et al30 | Yes | Yes | No | No | No | No | No | Yes |
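The feature frequencies cited in the text can be tallied directly from Table 6. The sketch below is one possible way to do so; the compact Y/N encoding, the "ref" labels, and the names `TABLE_6` and `count_features` are assumptions made for illustration and are not part of the review's methods.

```python
FEATURES = [
    "clinical variation", "curriculum integration", "instructor feedback",
    "group practice", "individualized learning", "mastery learning",
    "prestudy", "range in task difficulty",
]

# One string per study, one character per feature in FEATURES order
# (Y = present, N = absent), transcribed from Table 6.
TABLE_6 = {
    "Colt (ref 6)": "YNNNNNYN",
    "Ost (ref 28)": "YNNNNNYN",
    "Blum (ref 29)": "YNNNNNNN",
    "Wahidi (ref 33)": "NYNNNNNY",
    "Colt (ref 31)": "YNYYNNYY",
    "Bjerrum (ref 35)": "YNYNNNYN",
    "Krogh (ref 32)": "YNNNNNYY",
    "Bjerrum (ref 10)": "YNYYNNYN",
    "Bjerrum (ref 36)": "YNNNNNYN",
    "Gopal (ref 37)": "NNNNNNNN",
    "Veaudor (ref 9)": "NNNNNNYN",
    "Feng (ref 34)": "NNNNNNYN",
    "Schertel (ref 38)": "NNYNNNYY",
    "Siow (ref 30)": "YYNNNNNY",
}


def count_features(table: dict[str, str]) -> dict[str, int]:
    """Count, per feature, the number of studies in which it was present."""
    counts = dict.fromkeys(FEATURES, 0)
    for study, flags in table.items():
        if len(flags) != len(FEATURES):
            raise ValueError(f"{study}: expected {len(FEATURES)} flags")
        for feature, flag in zip(FEATURES, flags):
            counts[feature] += flag == "Y"
    return counts


FEATURE_COUNTS = count_features(TABLE_6)

if __name__ == "__main__":
    for feature, n in FEATURE_COUNTS.items():
        print(f"{feature}: {n} of {len(TABLE_6)} studies")
```

Such a tally makes it easy to see which features are too rare in the included studies to support conclusions about their contribution.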

Table 7 shows outcome measures that were present in two or more studies. For clarity, we reported only these outcome measures, given the abundance of other outcome measures that were present only once in included studies (a complete overview of all outcome measures can be found in e-Table 2). Eleven studies reported significant improvements in more than one-half of their outcome measures. Outcome measures were heterogeneous, ranging from simulator metrics (eg, percentage of time in midlumen) to (validated) bronchoscopy assessment tool end scores. Two of four studies with outcomes on Kirkpatrick level 3 reported significant improvements in (modified) BSTAT outcomes.30,33 Ost et al,28 Blum et al,29 and Siow et al30 all reported procedure time outcomes in a patient setting; however, the reported effects on procedure time were conflicting.

Table 7.

Overview of Outcome Measures

| Outcome Measure | Kirkpatrick Level | Studies |
|---|---|---|
| Procedure time | 2 | Colt et al6,b; Bjerrum et al35; Krogh et al32,a; Bjerrum et al10; Bjerrum et al36; Veaudor et al9 |
| Segments entered | 2 | Bjerrum et al35; Bjerrum et al10; Bjerrum et al36; Feng et al34,a |
| Time in redout | 2 | Colt et al6; Bjerrum et al35; Bjerrum et al10; Bjerrum et al36 |
| Wall contacts | 2 | Bjerrum et al35; Bjerrum et al10; Bjerrum et al36 |
| (M)BSTAT simulator | 2 | Colt et al31,a; Gopal et al37,a; Feng et al34,a |
| % segments entered | 2 | Bjerrum et al35; Bjerrum et al10; Bjerrum et al36 |
| % segments entered/min | 2 | Bjerrum et al35; Bjerrum et al10; Bjerrum et al36 |
| Segments correctly identified | 2 | Veaudor et al9,a; Schertel et al38 |
| Segments correctly visualized and identified/procedure time | 2 | Ost et al28,a; Veaudor et al9,a |
| Segments missed | 2 | Colt et al6,b; Schertel et al38 |
| % time midlumen | 2 | Veaudor et al9; Schertel et al38 |
| % time scope-wall contacts | 2 | Veaudor et al9; Schertel et al38 |
| Procedure time | 3 | Ost et al28,a; Blum et al29,a; Siow et al30,a |
| (M)BSTAT patient | 3 | Wahidi et al33,a; Siow et al30,a |

Studies indicated in boldface font showed a significant improvement in the listed outcome measure. (M)BSTAT = Modified Bronchoscopy Skills and Tasks Assessment Tool.

a Outcome recorded via direct observation (ie, an observer instead of simulator metrics).

b Outcome recorded both via direct observation and via simulator metrics.

When evaluating the study characteristics of the studies with the highest quality (mMERSQI > 12) and positive results on the most relevant outcome measures (higher than Kirkpatrick level 2), we found that these studies30,33 shared the following characteristics: a gradual increase in task difficulty and integration of the TP in the curriculum.

Discussion

This review showed that FB SBT is an effective training method to teach basic bronchoscopy skills to novice trainees. The study quality of most studies was moderate to high. Despite these positive results, evidence for positive effects on Kirkpatrick levels 3 and 4 is still scarce. Finally, including a range in task difficulty and integrating the TP in the curriculum seem to be important to teach novices bronchoscopy skills that lead to improved bronchoscopy performance in a patient setting.

Study Design

Studying the effects of FB SBT is complex: because of the nature of the intervention and for ethical reasons, designing a blinded randomized controlled trial is difficult. Therefore, most included studies used a pretest-posttest design. This design has some drawbacks, the main being a pretest effect,40 meaning that performing a pretest might influence the scores a trainee obtains on the posttest. This testing effect might have led to an overestimation of those studies’ reported results. None of the studies in this review corrected for this possible pretest effect.

A review on postgraduate medical education simulation boot camps for clinical skills also reported that most studies used a single group pretest-posttest design, limiting the strength of the effectiveness of the reported interventions.41 This was also the case in a systematic review on technology-enhanced simulation for health professions education, where most studies used a pretest-posttest design.42 Despite its drawbacks, the pretest-posttest design may be inevitable for investigating FB SBT effectiveness, given the ethical objections associated with some trainees not practicing their skills on a simulator when one is available. However, once this design is chosen, it is important that researchers investigate the extent of a testing effect and adjust for it. In addition, to prevent bias, assessments in these studies should ideally be performed by a blinded observer.

Although long-term retention of FB skills is crucial, only one study measured participants’ skills retention after training over a period of > 6 months.33 This lack of studies measuring skill retention over a longer period of time after simulation training was also noticed in surgery and emergency care.43,44 However, in a previous review on critical care SBT, several studies were found evaluating retention outcomes using validated assessment methods after simulation training.45 Another study on SBT for internal medicine residents even reported both simulation retention outcomes and retention outcomes measured in a patient setting.46 Preferably, future studies on FB SBT should measure trainees’ skill acquisition longitudinally, where possible in a patient setting.

Outcome Measures

Ideally, SBT leads to positive outcomes on Kirkpatrick level 4 (eg, therapeutic/diagnostic completeness, complications, patient comfort); however, no studies in the current review reported outcomes at this level. It is difficult to design a study investigating the effect of SBT on patient outcomes from both an ethical and practical point of view, and potentially irregular links between simulation interventions and patient outcomes may exist.47,48

There was no consensus among investigators on outcome measures: a wide variety was used, with some simulator-generated and others observer-related. Moreover, although five studies used a (modified) BSTAT, only two studies used a validated version.33,34 In addition, all studies used a different version, leading to considerable heterogeneity, even among these studies. This problem was also identified in reviews of other areas of medical simulation training research (eg, training for surgical skills, ophthalmology, laparoscopy, endoscopy), where included studies varied highly in outcomes and assessment methods.49, 50, 51 To overcome this problem of heterogeneity and enable comparisons between studies, it is of great importance that future studies use validated homogeneous outcome measures, most preferably at a patient level (Kirkpatrick level 3 or 4). Patients having to undergo a bronchoscopy will be most interested in an adequately performed and complete bronchoscopy with the highest diagnostic and/or therapeutic yield, in preferably the shortest duration possible. Therefore, assessing trainees with a previously validated qualitative assessment (eg, validated version of the BSTAT) combined with procedure time as a secondary outcome measure will probably be very relevant to evaluate basic bronchoscopy skills. Structured progress, being the number of times an operator progressed from one segment to the correct next segment during bronchoscopy, might be added as well, because one study reported strong validity evidence of its use.52

Instructional Features

Curriculum integration and a range in task difficulty seemed to be relevant when evaluating the two studies with the highest quality.30,33 Several bronchoscopy TPs have already incorporated SBT in their curriculum,53,54 and some fellowships in interventional pulmonology require SBT.55 Unfortunately, no studies to date showed that curriculum integration had a positive effect on residents’ functioning at a behavioral level (Kirkpatrick level 3). Because, in addition, only two studies in this review integrated their TP in the curriculum, no well-founded conclusions about the importance of curriculum integration can be drawn. However, we regard not integrating simulation training in the curriculum as ethically questionable. Unlike the apprenticeship method, SBT allows trainees to climb the initial, steep part of the learning curve of improving their bronchoscopy skills outside the patient setting. This results in lower stress levels for the trainee and, more importantly, less patient discomfort and morbidity compared with the apprenticeship method,11,37,56 which makes mandatory SBT for all trainees ethically desirable.

Laparoscopic and cardiac bedside skill TPs have implemented simulations with a range in difficulty.57 The relevance of this feature is in line with an earlier review investigating the effectiveness of instructional design features in SBT, which reported a positive pooled effect of simulations with a range of difficulties on behavior and patient outcomes.58 It is also in line with previous research showing that competence cannot be indicated solely by a high number of performed procedures,59 which suggests that escalating task difficulty might be important to gaining competence. Nevertheless, only five studies in this review used a range of task difficulties in their program, making evidence of its relevance in an FB SBT setting rather sparse.

According to previous research, most bronchoscopy learners prefer to directly apply their newly acquired knowledge and skills60 in practice. Therefore, simulation TPs should preferably be integrated in an experiential learning model, with case-based learning exercises and small groups with a low trainee-to-instructor ratio enabling frequent interaction and feedback.60 However, given the sparse evidence on the actual effectiveness of these instructional features in a bronchoscopy training setting, more research into their relevance for FB SBT programs is warranted.

Strengths and Limitations

This review has several strengths. It provided a comprehensive overview of current evidence on FB SBT effectiveness in improving FB skills for novice bronchoscopists. It focused solely on FB, and in contrast with previous recent research, study quality, RoB, and present instructional features were evaluated. Articles in any language were considered, and multiple databases were used for the literature search. Reviewers were blinded when they assessed study quality, general features, instructional features, and outcomes, and all assessments were performed independently.

This review also has several limitations. First, because of heterogeneity in the simulation interventions and outcome measures, no formal meta-analysis could be performed. This made it impossible to compare study outcomes quantitatively and to calculate pooled effect sizes of instructional features. Second, the number of included studies was relatively small, which limited the ability to formulate well-founded qualitative conclusions about the relevance of instructional features. Third, studies measuring outcomes only on Kirkpatrick level 1 were excluded. Although satisfaction with the training can be important for building participants’ self-confidence, this outcome measure was considered less relevant for the purpose of this review. Furthermore, we found only one Kirkpatrick level 1 study that otherwise met the inclusion criteria.61 Fourth, the tools developed by the National Heart, Lung, and Blood Institute22 and Tufanaru et al21 that were used to assess RoB of studies have not yet been validated. Finally, we adapted the MERSQI for the purposes of this review because some parameters were found to be open to interpretation in this setting. Although this adjustment can raise questions about the validity of the MERSQI for this use, we suspect the possibility of bias to be small because the adapted items account for at most three of the 18 points that can be scored on the MERSQI.

Interpretation

SBT is effective in teaching novices basic bronchoscopy skills. When designing a TP, including a gradual increase in task difficulty and integrating the TP into the curriculum seem to be important. However, evidence for effectiveness at the behavioral level (Kirkpatrick level 3) and the patient level (Kirkpatrick level 4) is scarce. Future studies should therefore use validated, homogeneous outcome measures at these levels.

Funding/Support

Funded by the Catharina Hospital research fund and the board of directors of Maastricht University Medical Center+.

Financial/Nonfinancial Disclosures

None declared.

Acknowledgments

Author contributions: E. C. F. G. takes responsibility for the content of the manuscript, including the data and analysis. E. C. F. G., J. T. A., M. G., E. H. F. M. v. d. H., W. N. K. A. v. M., and F. W. J. M. S. developed the protocol. E. C. F. G. and A. C. performed the literature search and screened results. E. C. F. G. and A. C. read full texts of relevant papers to decide whether they had to be included or not, and F. W. J. M. S. decided when no consensus could be reached. E. C. F. G. and A. C. assessed included papers on quality and general and instructional features, and F. W. J. M. S. decided when no consensus could be reached. All authors contributed to writing the paper.

Role of sponsors: The sponsor had no role in the design of the study, the collection and analysis of the data, or the preparation of the manuscript.

Other contributions: We thank Jen Yaros, MSc, for proofreading the manuscript.

Additional information: The e-Tables are available online under “Supplementary Data.”

Supplementary Data

References

- 1.Ryall T., Judd B.K., Gordon C.J. Simulation-based assessments in health professional education: a systematic review. J Multidiscip Healthc. 2016;9:69–82. doi: 10.2147/JMDH.S92695.

- 2.Kennedy C.C., Maldonado F., Cook D.A. Simulation-based bronchoscopy training systematic review and meta-analysis. Chest. 2013;144(1):183–192. doi: 10.1378/chest.12-1786.

- 3.Vozenilek J., Huff J.S., Reznek M., Gordon J.A. See one, do one, teach one: advanced technology in medical education. Acad Emerg Med. 2004;11(11):1149–1154. doi: 10.1197/j.aem.2004.08.003.

- 4.Ouellette D.R. The safety of bronchoscopy in a pulmonary fellowship program. Chest. 2006;130(4):1185–1190. doi: 10.1378/chest.130.4.1185.

- 5.Stather D.R., MacEachern P., Chee A., Dumoulin E., Tremblay A. Trainee impact on procedural complications: an analysis of 967 consecutive flexible bronchoscopy procedures in an interventional pulmonology practice. Respiration. 2013;85(5):422–428. doi: 10.1159/000346650.

- 6.Colt H.G., Crawford S.W., Galbraith O. Virtual reality bronchoscopy simulation: a revolution in procedural training. Chest. 2001;120(4):1333–1339. doi: 10.1378/chest.120.4.1333.

- 7.Garner J.L., Garner S.D., Hardie R.J., et al. Evaluation of a re-useable bronchoscopy biosimulator with ventilated lungs. ERJ Open Res. 2019;5(2):00035–2019. doi: 10.1183/23120541.00035-2019.

- 8.Byrne T., Yong S.A., Steinfort D.P. Development and assessment of a low-cost 3D-printed airway model for bronchoscopy simulation training. J Bronchology Interv Pulmonol. 2016;23(3):251–254. doi: 10.1097/LBR.0000000000000257.

- 9.Veaudor M., Gérinière L., Souquet P.J., et al. High-fidelity simulation self-training enables novice bronchoscopists to acquire basic bronchoscopy skills comparable to their moderately and highly experienced counterparts. BMC Med Educ. 2018;18(1):1–8. doi: 10.1186/s12909-018-1304-1.

- 10.Bjerrum A.S., Eika B., Charles P., Hilberg O. Dyad practice is efficient practice: a randomised bronchoscopy simulation study. Med Educ. 2014;48(7):705–712. doi: 10.1111/medu.12398.

- 11.Naur T.M.H., Nilsson P.M., Pietersen P.I., Clementsen P.F., Konge L. Simulation-based training in flexible bronchoscopy and endobronchial ultrasound-guided transbronchial needle aspiration (EBUS-TBNA): a systematic review. Respiration. 2017;93(5):355–362. doi: 10.1159/000464331.

- 12.Nilsson P.M., Naur T.M.H., Clementsen P.F., Konge L. Simulation in bronchoscopy: current and future perspectives. Adv Med Educ Pract. 2017;8:755–760. doi: 10.2147/AMEP.S139929.

- 13.Vieira L.M.N., Camargos P.A.M., Ibiapina C.D.C. Bronchoscopy simulation training in the post-pandemic world. J Bras Pneumol. 2022;48(3) doi: 10.36416/1806-3756/e20210361.

- 14.Issenberg S.B., McGaghie W.C., Petrusa E.R., Gordon D.L., Scalese R.J. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28. doi: 10.1080/01421590500046924.

- 15.Page M.J., McKenzie J.E., Bossuyt P.M., et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71.

- 16.Kirkpatrick D.L. In: Training and Development Handbook. Craig R., Bittel I., editors. McGraw Hill; 1976. Evaluation of training; pp. 87–112.

- 17.Smidt A., Balandin S., Sigafoos J., Reed V.A. The Kirkpatrick model: a useful tool for evaluating training outcomes. J Intellect Dev Disabil. 2009;34(3):266–274. doi: 10.1080/13668250903093125.

- 18.Johnston S., Coyer F.M., Nash R. Kirkpatrick’s evaluation of simulation and debriefing in health care education: a systematic review. J Nurs Educ. 2018;57(7):393–398. doi: 10.3928/01484834-20180618-03.

- 19.Reed D.A., Cook D.A., Beckman T.J., Levine R.B., Kern D.E., Wright S.M. Association between funding and quality of published medical education research. J Am Med Assoc. 2007;298(9):1002–1009. doi: 10.1001/jama.298.9.1002.

- 20.Bogetz J.F., Rassbach C.E., Bereknyei S., Mendoza F.S., Sanders L.M., Braddock C.H. Training health care professionals for 21st-century practice: a systematic review of educational interventions on chronic care. Acad Med. 2015;90(11):1561–1572. doi: 10.1097/ACM.0000000000000773.

- 21.Tufanaru C., Munn Z., Aromataris E., Campbell J., Hopp L. Chapter 3: systematic reviews of effectiveness. In: JBI Manual for Evidence Synthesis. JBI; 2020.

- 22.Study quality assessment tools. National Heart, Lung, and Blood Institute website. Accessed September 2, 2022. https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools

- 23.Deeks JJ, Higgins JPT, Altman DG, eds. Chapter 10: Analysing data and undertaking meta-analyses. In: Higgins JPT, Thomas J, Chandler J, et al, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane; 2022. Accessed August 25, 2022. www.training.cochrane.org/handbook

- 24.Chen J.S., Hsu H.H., Lai I.R., et al. Validation of a computer-based bronchoscopy simulator developed in Taiwan. J Formos Med Assoc. 2006;105(7):569–576. doi: 10.1016/S0929-6646(09)60152-2.

- 25.Moorthy K., Smith S., Brown T., Bann S., Darzi A. Evaluation of virtual reality bronchoscopy as a learning and assessment tool. Respiration. 2003;70(2):195–199. doi: 10.1159/000070067.

- 26.Siddharthan T., Jackson P., Argento A.C., et al. A pilot program assessing bronchoscopy training and program initiation in a low-income country. J Bronchology Interv Pulmonol. 2021;28(2):138–142. doi: 10.1097/LBR.0000000000000721.

- 27.Mallow C., Shafiq M., Thiboutot J., et al. Impact of video game cross-training on learning bronchoscopy. A pilot randomized controlled trial. ATS Sch. 2020;1(2):134–144. doi: 10.34197/ats-scholar.2019-0015OC.

- 28.Ost D., DeRosiers A., Britt E.J., et al. Assessment of a bronchoscopy simulator. Am J Respir Crit Care Med. 2001;164(12):2248–2255. doi: 10.1164/ajrccm.164.12.2102087.

- 29.Blum M., Powers T.W., Sundaresan S. Bronchoscopy simulator effectively prepares junior residents to competently perform basic clinical bronchoscopy. Ann Thorac Surg. 2004;78(1):287–291. doi: 10.1016/j.athoracsur.2003.11.058.

- 30.Siow W.T., Tan G.L., Loo C.M., et al. Impact of structured curriculum with simulation on bronchoscopy. Respirology. 2021;26(6):597–603. doi: 10.1111/resp.14054.

- 31.Colt H.G., Davoudi M., Murgu S., Zamanian Rohani N. Measuring learning gain during a one-day introductory bronchoscopy course. Surg Endosc. 2011;25(1):207–216. doi: 10.1007/s00464-010-1161-4.

- 32.Krogh C.L., Konge L., Bjurström J., et al. Training on a new, portable, simple simulator transfers to performance of complex bronchoscopy procedures. Clin Respir J. 2013;7(3):237–244. doi: 10.1111/j.1752-699X.2012.00311.x.

- 33.Wahidi M.M., Silvestri G.A., Coakley R.D., et al. A prospective multicenter study of competency metrics and educational interventions in the learning of bronchoscopy among new pulmonary fellows. Chest. 2010;137(5):1040–1049. doi: 10.1378/chest.09-1234.

- 34.Feng D.B., Yong Y.H., Byrnes T., et al. Learning gain and skill retention following unstructured bronchoscopy simulation in a low-fidelity airway model. J Bronchology Interv Pulmonol. 2020;27(4):280–285. doi: 10.1097/LBR.0000000000000664.

- 35.Bjerrum A.S., Hilberg O., van Gog T., Charles P., Eika B. Effects of modelling examples in complex procedural skills training: a randomised study. Med Educ. 2013;47(9):888–898. doi: 10.1111/medu.12199.

- 36.Bjerrum A.S., Eika B., Charles P., Hilberg O. Distributed practice. The more the merrier? A randomised bronchoscopy simulation study. Med Educ Online. 2016;21 doi: 10.3402/meo.v21.30517.

- 37.Gopal M., Skobodzinski A.A., Sterbling H.M., et al. Bronchoscopy simulation training as a tool in medical school education. Ann Thorac Surg. 2018;106(1):280–286. doi: 10.1016/j.athoracsur.2018.02.011.

- 38.Schertel A., Geiser T., Hautz W.E. Man or machine? Impact of tutor-guided versus simulator-guided short-time bronchoscopy training on students learning outcomes. BMC Med Educ. 2021;21(1):123. doi: 10.1186/s12909-021-02526-w.

- 39.Bronchoscopy skills and tasks 10 point assessment tool. Bronchoscopy International website. Accessed September 7, 2020. https://bronchoscopy.org/downloads/tools/SkillsAndTasksAssessmentTool.pdf

- 40.Kim E.S., Willson V.L. Evaluating pretest effects in pre–post studies. Educ Psychol Meas. 2010;70(5):744–759.

- 41.Blackmore C., Austin J., Lopushinsky S.R., Donnon T. Effects of postgraduate medical education “boot camps” on clinical skills, knowledge, and confidence: a meta-analysis. J Grad Med Educ. 2014;6(4):643–652. doi: 10.4300/JGME-D-13-00373.1.

- 42.Cook D.A., Hatala R., Brydges R., et al. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA. 2011;306(9):978–988. doi: 10.1001/jama.2011.1234.

- 43.Stefanidis D., Sevdalis N., Paige J., et al. Simulation in surgery: what’s needed next? Ann Surg. 2015;261(5):846–853. doi: 10.1097/SLA.0000000000000826.

- 44.Yang C.W., Yen Z.S., McGowan J.E., et al. A systematic review of retention of adult advanced life support knowledge and skills in healthcare providers. Resuscitation. 2012;83(9):1055–1060. doi: 10.1016/j.resuscitation.2012.02.027.

- 45.Legoux C., Gerein R., Boutis K., Barrowman N., Plint A. Retention of critical procedural skills after simulation training: a systematic review. AEM Educ Train. 2021;5(3) doi: 10.1002/aet2.10536.

- 46.Smith C.C., Huang G.C., Newman L.R., et al. Simulation training and its effect on long-term resident performance in central venous catheterization. Simul Healthc. 2010;5(3):146–151. doi: 10.1097/SIH.0b013e3181dd9672.

- 47.Brydges R., Hatala R., Zendejas B., Erwin P.J., Cook D.A. Linking simulation-based educational assessments and patient-related outcomes: a systematic review and meta-analysis. Acad Med. 2015;90(2):246–256. doi: 10.1097/ACM.0000000000000549.

- 48.Pietersen P.I., Laursen C.B., Petersen R.H., Konge L. Structured and evidence-based training of technical skills in respiratory medicine and thoracic surgery. J Thorac Dis. 2021;13(3):2058. doi: 10.21037/jtd.2019.02.39.

- 49.Dawe S.R., Windsor J.A., Broeders J.A.J.L., Cregan P.C., Hewett P.J., Maddern G.J. A systematic review of surgical skills transfer after simulation-based training: laparoscopic cholecystectomy and endoscopy. Ann Surg. 2014;259(2):236–248. doi: 10.1097/SLA.0000000000000245.

- 50.Lee R., Raison N., Lau W.Y., et al. A systematic review of simulation-based training tools for technical and non-technical skills in ophthalmology. Eye. 2020;34(10):1737–1759. doi: 10.1038/s41433-020-0832-1.

- 51.Groenier M., Brummer L., Bunting B.P., Gallagher A.G. Reliability of observational assessment methods for outcome-based assessment of surgical skill: systematic review and meta-analyses. J Surg Educ. 2020;77(1):189–201. doi: 10.1016/j.jsurg.2019.07.007.

- 52.Cold K.M., Svendsen M.B.S., Bodtger U., Nayahangan L.J., Clementsen P.F., Konge L. Using structured progress to measure competence in flexible bronchoscopy. J Thorac Dis. 2020;12(11):6797. doi: 10.21037/jtd-20-2181.

- 53.EBUS masterclass: tools and techniques for optimizing outcomes - June 2023. American College of Chest Physicians website. Accessed March 14, 2023. https://www.chestnet.org/Store/Products/Events/2023/EBUS-Masterclass-Tools-and-Techniques-for-Optimizing-Outcomes-june-2023

- 54.Advanced diagnostic bronchoscopy for peripheral nodules - August 2023. American College of Chest Physicians website. Accessed June 8, 2023. https://www.chestnet.org/Store/Products/Events/2023/Advanced-Diagnostic-Bronchoscopy-for-Peripheral-Nodules-August-2023

- 55.Mullon J.J., Burkart K.M., Silvestri G., et al. Interventional pulmonology fellowship accreditation standards: executive summary of the Multisociety Interventional Pulmonology Fellowship Accreditation Committee. Chest. 2017;151(5):1114–1121. doi: 10.1016/j.chest.2017.01.024.

- 56.Henriksen M.J.V., Wienecke T., Kristiansen J., Park Y.S., Ringsted C., Konge L. Opinion and special articles: stress when performing the first lumbar puncture may compromise patient safety. Neurology. 2018;90(21):981–987. doi: 10.1212/WNL.0000000000005556.

- 57.Motola I., Devine L.A., Chung H.S., Sullivan J.E., Issenberg S.B. Simulation in healthcare education: a best evidence practical guide. AMEE Guide No. 82. Med Teach. 2013;35(10):e1511–e1530. doi: 10.3109/0142159X.2013.818632.

- 58.Cook D.A., Hamstra S.J., Brydges R., et al. Comparative effectiveness of instructional design features in simulation-based education: systematic review and meta-analysis. Med Teach. 2013;35(1):e867–e898.

- 59.Dawson L.J., Fox K., Jellicoe M., Adderton E., Bissell V., Youngson C.C. Is the number of procedures completed a valid indicator of final year student competency in operative dentistry? Br Dent J. 2021;230(10):663–670. doi: 10.1038/s41415-021-2967-2.

- 60.Murgu S.D., Kurman J.S., Hasan O. Bronchoscopy education: an experiential learning theory perspective. Clin Chest Med. 2018;39(1):99–110. doi: 10.1016/j.ccm.2017.11.002.

- 61.Chen J.S., Hsu H.H., Lai I.R., et al. Validation of a computer-based bronchoscopy simulator developed in Taiwan. J Formos Med Assoc. 2006;105(7):569–576. doi: 10.1016/S0929-6646(09)60152-2.
