Abstract

Purpose/Objectives.

Multimedia presentations and online platforms are used in dental education. Though studies indicate benefits of video-based lectures (VBLs), data regarding user reception and optimal video features in dental education are limited, particularly on Web 2.0 platforms like YouTube. Given increasing technology integration and remote learning, dental educators need evidence to guide implementation of YouTube videos as a freely available resource. The purpose of this study is to determine video metrics, viewership, and format efficacy for dental education videos.

Methods.

First, a cross-sectional survey was conducted among viewers (N=683) of the Mental Dental educational videos on YouTube, and YouTube analytics for 677,200 viewers were evaluated to assess audience demographics, retention, and optimal video length. Second, a randomized crossover study was conducted with dental students (N=101), who watched VBLs in slideshow and pencast formats (one format per topic) and were tested on content learning to compare format efficacy.

Results.

Most viewers of Mental Dental videos were dental students (44.2%) and professionals (37.8%) who would likely recommend the platform to a friend or colleague (Net Promoter Score=82.1). Audience retention declined steadily at 1.34% per minute, independent of video length. Quiz performance did not differ between slideshow and pencast videos, with students having a slight preference for slideshows (p=0.049).

Conclusions.

Dental students and professionals use VBLs and are likely to recommend them to friends and colleagues. There is no optimal video length to maximize audience retention, and lecture format (slideshow vs. pencast) does not significantly impact content learning. Results can guide implementation of VBLs in dental curricula.

Keywords: Dental education, video-based lecture, predoctoral education, education research, YouTube

INTRODUCTION

The delivery of educational content in dental schools continues to evolve, with a trajectory toward more sophisticated, graphical and interactive platforms.1,2 The use of multimedia and web-based applications accelerated because of the COVID-19 pandemic, with an obligatory shift to more digital and video-based lectures (VBLs) in virtual/remote settings.3–6 However, the shift to VBLs in dental school curricula is not universal.4 Among the forty top-searched dental videos on YouTube, dental schools provided only 5% of this content.7

Reports on the effectiveness and perception of video lectures as a learning tool in healthcare education are encouraging. Studies show that medical students' test scores were similar after live lectures and VBLs, with American students preferring live lectures and German students preferring the ability to self-pace and repeat VBL content.8,9 When VBLs were compared with textbooks for content learning by dental students, test results were significantly higher for videos than for textbooks.10 A survey of 479 dental students who consulted YouTube as a learning tool for clinical procedures revealed that 95% found the videos helpful and 89% would like their dental schools to post similar tutorials on YouTube and social media.11 However, 36% were uncertain about the videos’ evidence base.11 Reported benefits of online VBLs used by medical and dental students include ease of access, the ability to view content at their own pace, flexibility to download material at any time, and the ability to revisit prior material.8,12,13 These studies suggest that VBLs can be as effective as live lectures, and possibly more effective than textbooks, with advantages for users. Nonetheless, only a few studies have examined the adoption and reception of VBLs by dental students on Web 2.0 platforms (e.g. YouTube, Vimeo, VoiceThread), despite the rapid growth of these platforms as educational resources over the past 20 years.14,15 Viewership of educational videos on YouTube rose from 22% of adult users in 2007 to 38% in 2009, and in 2017, 86% of US viewers reported that they often use YouTube to learn new things.14,15

The use of VBLs as educational tools by institutions could be valuable, especially for those experiencing faculty shortages, financial shortfalls, and a shift to remote learning. However, more data on students’ engagement with VBLs and the effectiveness of VBL formats are needed to guide the development and adoption of VBLs in dental curricula. In this study, we focused specifically on a public library of dental education VBLs (created by author RTG) on the Mental Dental (MD) YouTube channel.16

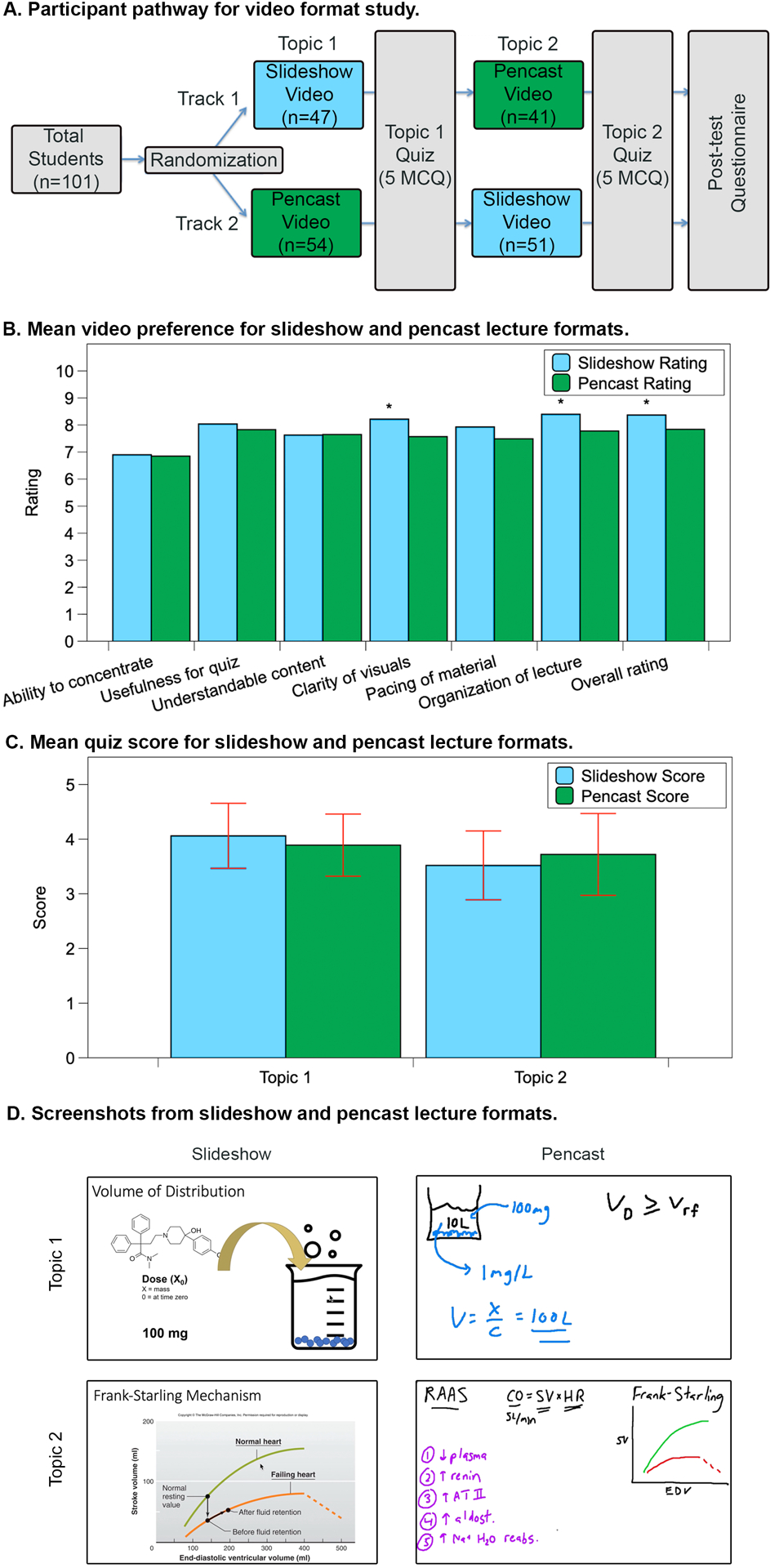

The two main goals of this study are (1) to analyze user reception and video metrics of VBLs on the MD YouTube channel and (2) to determine the optimal lecture format (narrated slideshows or pencasts) for those VBLs. For our first goal, we hypothesize that more than half of MD viewers are dental students and professionals, that a majority would recommend the VBLs as an educational tool, and that the optimal video length is under ten to fifteen minutes, based on studies of medical students.17 For our second goal, we hypothesize that students will achieve similar mean quiz scores (+/−10%, no significant difference) after watching narrated slideshow and pencast VBLs (Figure 1). These data provide an opportunity to evaluate the efficacy of specific VBL formats and to guide implementation in dental curricula.

Figure 1. Randomized crossover study on video format.

A. Participant pathways for video format study. Subjects were randomly assigned to Track 1 or Track 2. Students in Track 1 watched a video on Volume of Distribution in a slideshow format, while students in Track 2 watched a video on the same material delivered in a pencast format. Students then answered 5 multiple choice quiz questions to test their knowledge of the topic. Next, students in Track 1 watched a video on the Renin-Angiotensin-Aldosterone System in a pencast format, while students in Track 2 watched a video on the same material delivered in a slideshow format. Students then answered 5 quiz questions. Finally, a post-test questionnaire was administered to assess viewers’ perceptions of both video formats on a 0–10 rating scale. B. Mean video preference ratings (0–10) for slideshow versus pencast lecture format. C. Mean quiz scores (0–5) for slideshow versus pencast lecture format. Slideshow score (blue) and pencast score (green). 95% confidence interval shown in red. D. Examples of slideshow and pencast lecture formats. Topic 1 is Volume of Distribution. Topic 2 is the Frank-Starling Mechanism. Screenshots are taken from the lecture videos.

MATERIALS AND METHODS

This research was reviewed and deemed exempt by the UNC Institutional Review Board (IRB #18–1229).

Part 1: User Perception and Metrics of VBLs

To address the first research hypothesis, we applied a mixed-methods approach to VBLs hosted on the MD YouTube channel (YouTube Inc., San Bruno, CA) to determine their audience, quantify optimal video length, and investigate whether viewers recommend the content. The number of views, user demographics, audience retention rates, perception, and Net Promoter Score (NPS) were assessed through YouTube analytics and a Qualtrics viewer survey (N=683, Appendix: Viewer Survey). NPS is an evidence-based market research metric used to evaluate customers’ experience and perception of a particular product.18,19 Audience retention (AR) has been used as an imperfect proxy for learning: watching a video is a prerequisite for learning its content, but viewing a video does not indicate information retention.20–22 Conversely, if a video is not watched, its information is not delivered. In this study, AR was used to evaluate the video length that best retains viewers of VBLs, not information retention.
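The NPS calculation itself is simple arithmetic on 0–10 ratings: promoters score 9–10, passives 7–8, and detractors 0–6, and NPS is the percentage of promoters minus the percentage of detractors. The sketch below is a minimal illustration of that standard definition, not the Qualtrics implementation; the function name and example ratings are hypothetical. With the rounded shares reported in Figure 2C (85% promoters, 2% detractors) it returns +83, so the reported +82.1 presumably reflects unrounded proportions.

```python
from typing import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return NPS = % promoters (9-10) minus % detractors (0-6)."""
    scores = list(ratings)
    if not scores:
        raise ValueError("at least one rating is required")
    for s in scores:
        if not 0 <= s <= 10:
            raise ValueError(f"rating out of range 0-10: {s}")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count in the denominator but not in the numerator.
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical ratings: 85 promoters, 13 passives, 2 detractors out of 100.
example = [10] * 85 + [8] * 13 + [3] * 2
print(net_promoter_score(example))  # 83.0
```

Because passives dilute the score without subtracting from it, NPS can range from −100 (all detractors) to +100 (all promoters).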

MD VBLs present dental school course content and were developed and produced by author RTG, a licensed dentist (Mental Dental Education LLC, Carrboro, NC). The 121 videos featured in this study ranged in length from three to forty-four minutes and were developed using Microsoft PowerPoint (Microsoft Corp., Redmond, WA), QuickTime Player (Apple Inc., Cupertino, CA), and Shotcut (Dan Dennedy LLC) before being uploaded to YouTube. Videos spanned eleven topics focused on exam preparation for dental students, based on textbooks, board-review books, peer-reviewed publications, and lectures taught at the UNC Adams School of Dentistry (ASOD) via the Advocate-Clinician-Thinker (ACT) curriculum (Table S1).23

Viewer data from the YouTube analytics tool, user comments, and surveys (Qualtrics, Seattle, WA), including demographics (e.g. age, gender), traffic source (e.g. browse features, YouTube search), and access device (e.g. mobile phone, computer), were collected on 11/01/2021 for the prior ninety-day period (Table 1, Figure 2). Reported viewer data include video length, upload date, number of views, number of comments, like/dislike ratio (LDR), average view duration (AVD), mean percentage viewed (MPV), and audience retention (AR) (Table S2). MPV is the average percentage of a video watched before viewers stop watching, whereas AR is the percentage of the initial audience still watching at a given time point. AR data from the viewership patterns of 677,200 viewers from 08/01/2021–11/01/2021 were reported as a curve for each video, with a value at each time point (Figure 3). At the conclusion of videos, YouTube’s algorithm suggested related videos based on viewers’ watch history and preferences, potentially increasing views of MD videos.
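To make the difference between MPV and AR concrete, the sketch below computes both from hypothetical per-viewer watch durations. It assumes, as a simplification, that every viewer starts at 0:00 and watches continuously until leaving; YouTube’s own metrics also account for skipping and rewatching (the bumps and dips in Figure 3), so this is an illustration of the definitions, not a reproduction of YouTube analytics. Function names and data are hypothetical.

```python
from typing import List


def audience_retention(watch_minutes: List[float], video_minutes: float,
                       step: float = 1.0) -> List[float]:
    """Percent of the starting audience still watching at each time point.

    Hypothetical simplification: every viewer starts at 0:00 and watches
    continuously until leaving (no skips or rewinds).
    """
    if not watch_minutes:
        raise ValueError("need at least one viewer")
    n = len(watch_minutes)
    points = int(video_minutes // step) + 1
    curve = []
    for i in range(points):
        t = i * step
        still_watching = sum(1 for w in watch_minutes if w >= t)
        curve.append(100.0 * still_watching / n)
    return curve


def mean_percentage_viewed(watch_minutes: List[float], video_minutes: float) -> float:
    """Average share of the video watched before each viewer stopped."""
    if not watch_minutes:
        raise ValueError("need at least one viewer")
    watched = sum(min(w, video_minutes) for w in watch_minutes)
    return 100.0 * watched / (len(watch_minutes) * video_minutes)


# Four hypothetical viewers of a 4-minute video.
sessions = [1.0, 2.0, 4.0, 4.0]
print(audience_retention(sessions, 4.0))      # [100.0, 100.0, 75.0, 50.0, 50.0]
print(mean_percentage_viewed(sessions, 4.0))  # 68.75
```

AR is a curve over time, while MPV collapses the same sessions into a single number per video.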

Table 1.

Sample demographics.

| Characteristic | YouTube Viewers (N=677,200) | YouTube User Survey Respondents (N=683) | VBL Format Quiz Respondents (N=101) |
|---|---|---|---|
| Age in years (mean +/− SD) | Not available | 24.54 +/− 3.22 | 25.23 +/− 4.24 |
| Age in years (range) | 46.8% 25–34; 29.7% 18–24; 14.4% 35–44; 5.2% 45–54; 1.9% 55–64; 1.1% 13–17; 0.9% 65+ | 20–35 | 21–42 |
| Gender | 53.7% female; 46.3% male | 42.02% female; 33.09% male; 24.89% not specified | 53.47% female; 26.73% male; 19.8% not specified |
| Geographic location | 34.2% United States; 17.6% India; 4.9% Canada; 3.0% Saudi Arabia; 3.0% Pakistan; 2.9% Philippines; 2.7% Egypt | 25.3% United States; 6.4% India; 4.0% Pakistan; 3.7% Egypt; 3.3% Philippines; 2.6% Canada; 2.6% Australia; 2.6% Saudi Arabia; 1.8% Iraq; 1.8% Jordan | 46.5% United States; 10.9% India; 4.0% Iraq; 2.0% Canada; 2.0% Pakistan; 2.0% Ukraine; 1.0% Philippines; 1.0% Mexico |
| Device type | 52.6% mobile phone; 37.0% computer; 7.1% tablet; 3.1% television; 0.1% game console | Not collected (mobile phone, computer, or tablet) | Not collected (mobile phone, computer, or tablet) |
| Traffic source | 23.0% browse features; 21.9% YouTube search; 20.5% playlists; 12.8% suggested videos; 12.1% channel pages | Not available | Not available |

Note: Data derived from YouTube analytics for viewers does not provide racial or ethnicity data.

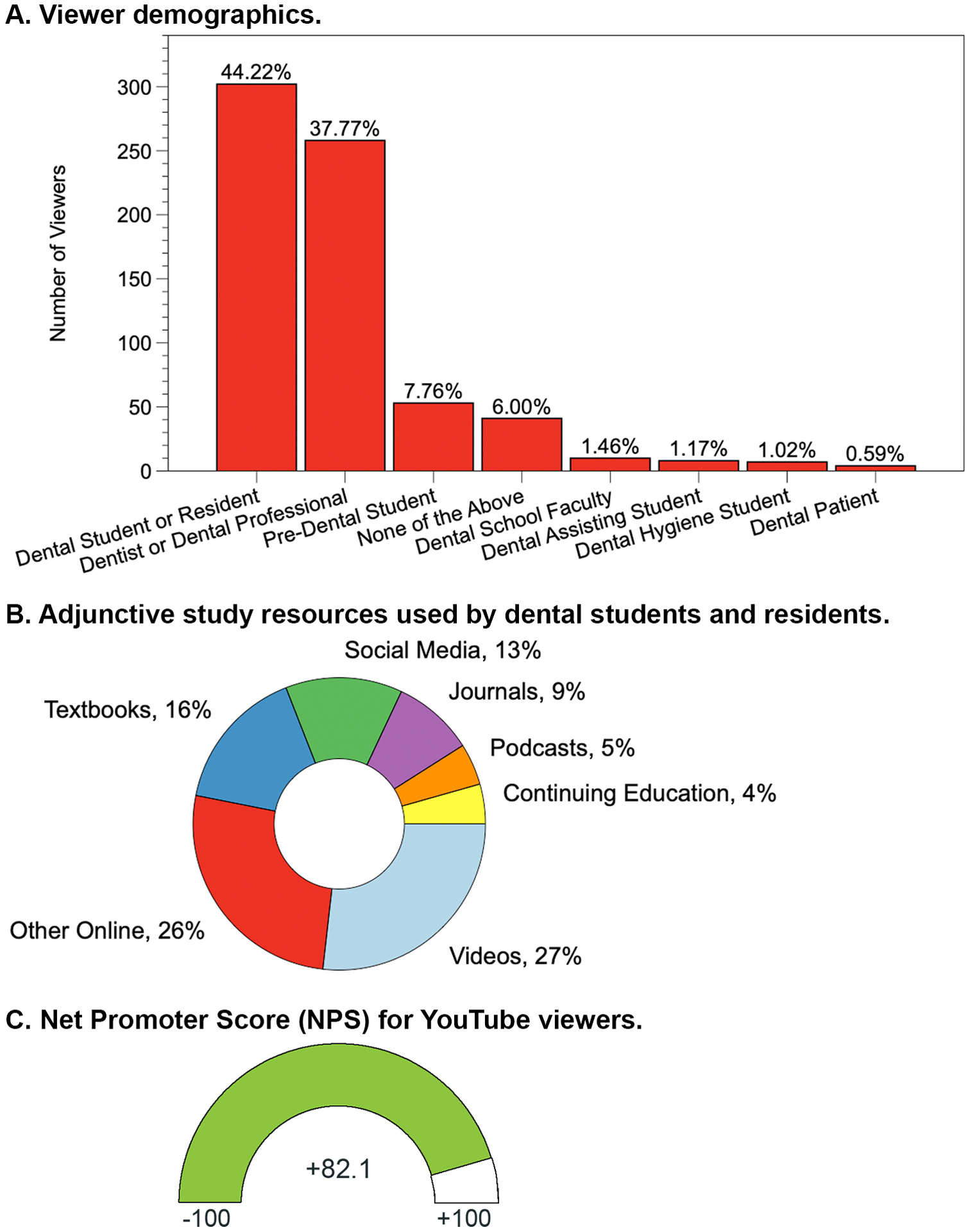

Figure 2. Viewer metrics of Mental Dental VBLs on YouTube.

A. Viewer demographics (N=683). B. Adjunctive study resources used by dental students and residents (n=302). Dental students were asked, “In addition to resources provided by dental school faculty, what other educational resources do you use to study for dental school exams and quizzes and/or learn more about dentistry?” C. Net Promoter Score (NPS) for YouTube viewers (N=683). NPS is a validated customer satisfaction tool that measures willingness of users to recommend products or services to others. It is based on a numerical response ranging from 0 to 10 to the question, “How likely is it that you would recommend this business to a friend or colleague?” A customer is classified as a “promoter” with a score of 9 or 10, “passive” with a score of 7 or 8, and “detractor” with a score of 6 and below.29 The NPS for the Mental Dental channel is measured at +82.1 with 85% promoter, 13% passive, and 2% detractor.

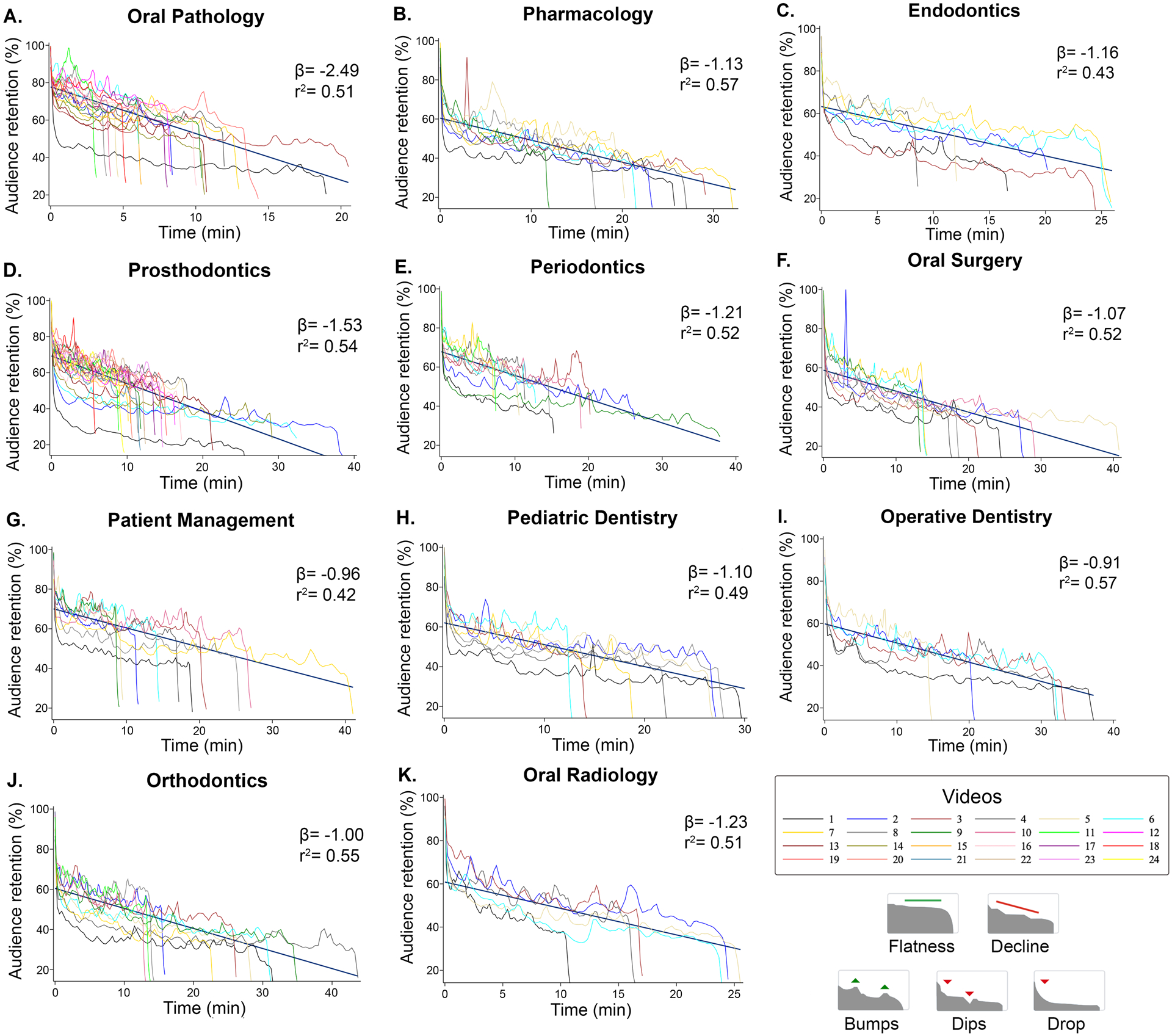

Figure 3. Audience retention for VBLs, with a linear regression averaged across videos.

Charts depict audience retention for all videos within a topic series (e.g. Panel A shows Oral Pathology videos). Each line starts at 100% of viewers and decays to 0% by the end of the video. Flatness indicates viewers are watching the video without leaving. A gradual decline means viewers are losing attention and stopping over time. Bumps mean viewers are rewatching or sharing those parts of the video, while dips indicate viewers are skipping over certain parts. Lastly, a sudden drop means viewers are leaving at that specific point. All videos show a sharp decline at the beginning, when viewers recognize that the content is irrelevant or not what they had hoped, and a sharp decline at the end, while departing pleasantries are shared. The linear regression provides a line of best fit whose slope describes overall audience retention (β = −1.34), with a coefficient of determination of r² = 0.52.

The viewer survey was designed and pre-tested in collaboration with the UNC Odum Institute to collect viewer feedback on the educational videos of the MD YouTube channel. Questions 8–14 were adapted from Brockfeld et al.8 Twenty-four dental students and two dental faculty members pre-tested the survey, with iterative revisions for clarity.10 A link to the survey was included in the video description and in a pinned comment on each MD YouTube video, and a recruitment announcement in the videos asked viewers to participate (08/01/2021–11/01/2021, N=683). The survey was hosted on a secure Qualtrics site through a university account. Viewers consented to participate and then answered questions regarding demographics and viewing experience (Appendix: Viewer Survey). Display logic directed dental students and residents to answer questions on study habits and video perception. Duplicate survey responses were eliminated by confirming that each response came from a unique IP address.

Part 2: Randomized Crossover Evaluation of VBL Format

The second portion of the investigation was a randomized crossover study (created in Qualtrics) conducted with dental students (N=101) who watched online videos in both slideshow and pencast formats before taking quizzes, to compare content learning (Figure 1). Four new VBLs, six to eight minutes in length, were scripted, revised (by AMP and RTG), and presented (by RTG), covering two pharmacologic topics taught in dental school curricula.24 The videos presented information on Volume of Distribution (Topic 1) and the Renin-Angiotensin-Aldosterone System (Topic 2). Each topic was recorded twice with the same script and presenter, once as a narrated slideshow and once as a pencast, so the two versions differed in lecture style and visual aids. All four VBLs were uploaded privately to YouTube for exclusive study use. For the quizzes, a pharmacology professor (AMP) developed ten multiple choice questions (MCQs), five per topic. Dental students were recruited to participate in this portion of the study via two recruitment videos uploaded to the MD YouTube channel (on 05/19/2021 and 07/21/2021) asking prospective viewers to participate. A recruitment email was also sent to dental students at UNC ASOD. Participants answered screening questions for inclusion/exclusion criteria before consenting (Table S3). Students were assigned to Track 1 or 2 using a random number generator within Qualtrics (Figure 1A–C). After each video, students took a quiz of five multiple choice questions.
Finally, a post-test survey was administered to assess video formats on an 11-point bipolar rating scale (0= very poor/negative, 5= neutral, 10= very good/positive) regarding students’ ability to concentrate, usefulness for the quiz, content intelligibility, clarity, tempo, structure, discernibility, and overall lecture quality (Appendix: Post-test Survey).8 Duplicate responses were eliminated by confirming that each response came from a unique IP address.
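The crossover design can be summarized as two fixed viewing sequences plus a random allocation step. The sketch below is a minimal illustration under stated assumptions: it uses Python’s seeded `random.Random` with simple (unblocked) 50/50 allocation as a stand-in for the Qualtrics randomizer, whose internals are not described here. `TRACKS`, `assign_tracks`, `schedule`, and the participant IDs are hypothetical names.

```python
import random
from typing import Dict, List, Tuple

# Viewing sequence for each track, as described for Figure 1A.
TRACKS: Dict[int, List[Tuple[str, str]]] = {
    1: [("Volume of Distribution", "slideshow"),
        ("Renin-Angiotensin-Aldosterone System", "pencast")],
    2: [("Volume of Distribution", "pencast"),
        ("Renin-Angiotensin-Aldosterone System", "slideshow")],
}


def assign_tracks(participant_ids: List[str], seed: int) -> Dict[str, int]:
    """Assign each participant to Track 1 or 2 independently, p = 0.5 each."""
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    return {pid: rng.choice((1, 2)) for pid in participant_ids}


def schedule(track: int) -> List[Tuple[str, str]]:
    """Ordered (topic, format) pairs; each is followed by a 5-question quiz."""
    return TRACKS[track]


# Every track shows each format exactly once (the crossover property).
for track, seq in TRACKS.items():
    assert sorted(fmt for _, fmt in seq) == ["pencast", "slideshow"]

allocation = assign_tracks(["P001", "P002", "P003", "P004"], seed=2021)
for pid, track in allocation.items():
    print(pid, track, schedule(track))
```

Simple randomization like this can produce unequal track sizes in small samples; block randomization would enforce balance, at the cost of a slightly more complex allocation step.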

Qualitative Analysis

A descriptive qualitative analysis of open-ended feedback was conducted on 451 comments from the viewer survey (Part 1 of study) and fifty-eight comments from the post-test survey (Part 2 of study) under the guidance of a qualitative research expert (PM). De-identified comments were analyzed with MAXQDA2022 software (Verbi Software GmbH, Berlin, Germany). Thematic patterns were established through iterative analysis, and researcher triangulation was used to establish validity. Representative quotes were identified and reviewed by all authors for inclusion (Table 2).25,26

Table 2.

Video format themes and representative quotes.

| Theme | Slideshow Format | Pencast Format |
|---|---|---|
| Pace | More appropriate pace for information presented. “It seemed like the slideshow presented more information in less time than the pencast.” | Slows down pace of the video with empty handwriting time. “There were little moments of impatience while the text was being written.” |
| Focus | More boring and monotonous; easier to lose focus; moving cursor can be distracting. “While watching the slideshow I found my thoughts wandering often.” “I thought the cursor was a bit distracting and didn’t add much in the slideshow.” | More interactive and engaging; easier to follow along as things are drawn on screen; more personalized. “When you’re writing it is easier to follow versus just glancing over the text that was already there.” “The pencast feels more personalized and allows for more emphasis on specific points.” |
| Organization | More organized. “The slideshow video presented the information a lot more clearly.” | Less organized. “I felt like it was missing context and got a little messy by the end.” |
| Comprehension | Best for explaining big-picture concepts; more detailed and readable diagrams and labels; harder to interpret diagrams along with vocal part of lecture at same time. “I think it was harder to focus on the diagrams in the slideshow because they were overwhelming.” “The slideshow is good for getting big points out.” | Clearer at explaining specific points; hand-drawn visuals are not as detailed; messier and missing context with a screen covered in writing; more difficult to concentrate on both writing and explanation simultaneously. “I felt like the pencast was missing context and got a little messy by the end.” “The pencast allows for more emphasis on specific points.” |
| Recommendations | “Both styles would work better synergistically to pace the learning and be more interactive. E.g. Annotating a slide/diagram during its explanation.” “Watching the handwriting appear sort of felt like it slowed the video down, but was useful for diagrams. I think a combination would be ideal - some content already on the screen and then using the pen to add/teach along the way.” | “In my opinion the slideshow should be combined with the pencast. It’s not about which is better. They both should be used simultaneously in the video.” “I like both styles of presentation, but I believe a combination of the two can be more effective. The slideshow is good for getting big points out, but the pencast feels more personalized and allows for more emphasis on specific points.” “I feel that for myself a combination of the two is better.” |

Note: Representative quotes are taken from typed comments entered by study participants.

Statistics: Statistical analyses were conducted using SAS 9.4 Software (SAS Institute, Cary, NC, USA). Graphs were made using DataGraph (Visual Data Tools, Chapel Hill, NC, USA). For the first part of the study, linear regression analysis was used to characterize the decline in audience retention. A line of best fit for each topic yielded an r² and a β value, and plots were constructed to visualize the relationship between audience retention and video length.27 For the second part of the study, a Wilcoxon signed-rank test was used to test for preference of VBL format, and a Chi-square test was used to compare quiz scores between the slideshow and pencast groups; p<0.05 was considered significant.8,28
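Because each student rated both formats, the format-preference comparison is paired, which is why a signed-rank test applies. The sketch below is a minimal pure-Python illustration of the Wilcoxon signed-rank statistic on hypothetical paired ratings: zero differences are dropped, tied absolute differences receive their average rank, and the two-sided p-value uses a normal approximation without tie or continuity correction. The authors used SAS 9.4, which may apply exact or differently corrected p-values, so this sketch is not expected to reproduce the reported numbers. The function name and data are hypothetical.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (T_plus, T_minus, two-sided p) for paired samples x, y.

    Zero differences are dropped; tied |differences| get their average rank.
    The p-value is a normal approximation without tie or continuity
    correction, so it is only a rough guide for small samples.
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    t_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    t_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return t_plus, t_minus, p


# Hypothetical 0-10 overall ratings from five students (slideshow, pencast).
slideshow = [8, 7, 9, 6, 8]
pencast = [7, 7, 8, 7, 6]
print(wilcoxon_signed_rank(slideshow, pencast))
```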

RESULTS

Part 1: Viewer Demographics and Video-Based Lecture Feedback

Viewers (N=677,200) were more commonly female (53.7%), and most watched on a mobile phone (52.6%) or computer (37.0%). Key traffic sources included browse features (23.0%), YouTube search (21.9%), and playlists (20.5%). Viewers were located worldwide, with the largest cohorts in the United States (34.2%), India (17.6%), and Canada (4.9%). The largest age groups were 25–34-year-olds (46.8%) and 18–24-year-olds (29.7%), consistent with many viewers being students (Table 1). Viewer survey participants (N=683) included a plurality of dental students or residents (n=302, 44.2%), followed by dental professionals (n=258, 37.8%), pre-dental students (n=53, 7.76%), dental school faculty (n=10, 1.46%), and dental assisting and hygiene students (n=15, 2.19%) (Figure 2A). Together, these groups account for about 93% of survey respondents, suggesting that most MD viewers are dental trainees or professionals. Additionally, most “none of the above” respondents (n=41, 6.00%) indicated they are international or foreign dentists.

In the viewer survey, dental students and residents (n=302) were asked what adjunctive resources they use outside of faculty-provided resources; the most common resource was videos (26.8%), surpassing other online resources like Quizlet and Reddit (26.3%), textbooks (15.9%), and journals (9.01%) (Figure 2B). Some comments indicated that MD VBLs are “more captivating” than traditional lectures and “more entertaining… than reading boring books.” Students reported choosing an adjunctive resource because of the quality of content (45.0%), ease of access (44.0%), exposure to a different point of view (21.5%), and insufficient faculty-provided resources (18.9%). The most common reasons for using videos as a learning resource were to study for an exam or quiz (35.1%), to understand a topic better (33.1%), and to prepare for a standardized exam like the National Board Dental Exam (NBDE) (32.5%). Less common reasons were to help with a simulation lab technique (24.8%) or to prepare for the clinic (21.5%) (Table S4). Users commented that MD VBLs were “helpful to pass exams” and offered organized, concise presentations on key topics. Qualitative comments indicated that VBLs were most helpful for audio and visual learners, and that YouTube was a useful platform for educational videos because it is free, convenient, and easy to use (Table 2). Additionally, viewers indicated that they were more likely to watch videos recommended by faculty, who can lend their educational expertise in support of a VBL resource. The NPS for the MD YouTube videos was +82.1 (85% promoters, 13% passives, and 2% detractors), indicating broad positive reception (Figure 2C).

Of the 121 VBLs included in the study, mean AVD was 41.31% and the mean like/dislike ratio was 99.08 (range, 98.1 to 100) (Table S5). Audience retention curves and linear regressions were calculated, with each curve starting at 100% of viewers and decaying to 0% by the end of the video (Figure 3). The line of best fit had a slope of β = −1.34 and a coefficient of determination (r²) of 0.52, meaning that audience retention declined by an average of 1.34 percentage points for every minute of video. This decay was unaffected by video length or order in the series.
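The slope and r² above come from an ordinary least-squares fit of AR (percent of initial viewers) against time (minutes), so the slope is in percentage points per minute. The sketch below shows that computation in plain Python on hypothetical points; it is an illustration of the technique, not the authors’ SAS code, and it pools whatever points it is given rather than reproducing the per-topic fits. Note that the sharp drops at the start and end of each video (Figure 3) depart from a straight line, which is one reason the reported r² is 0.52 rather than close to 1.

```python
from typing import Sequence, Tuple


def fit_line(t: Sequence[float], ar: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares fit ar = intercept + slope * t.

    Returns (slope, intercept, r_squared).
    """
    n = len(t)
    if n != len(ar) or n < 2:
        raise ValueError("need at least two paired points")
    mean_t = sum(t) / n
    mean_ar = sum(ar) / n
    s_tt = sum((ti - mean_t) ** 2 for ti in t)
    s_ta = sum((ti - mean_t) * (ai - mean_ar) for ti, ai in zip(t, ar))
    slope = s_ta / s_tt
    intercept = mean_ar - slope * mean_t
    ss_res = sum((ai - (intercept + slope * ti)) ** 2 for ti, ai in zip(t, ar))
    ss_tot = sum((ai - mean_ar) ** 2 for ai in ar)
    r_squared = 1 - ss_res / ss_tot
    return slope, intercept, r_squared


# Hypothetical retention curve sampled once per minute.
minutes = [0, 1, 2, 3, 4]
retention = [100.0, 98.66, 97.32, 95.98, 94.64]
slope, intercept, r2 = fit_line(minutes, retention)
print(round(slope, 2), round(intercept, 2), round(r2, 3))  # -1.34 100.0 1.0
```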

Part 2: VBL Format Efficacy

For the second portion of the study, post-test survey responses showed significant differences in perceived clarity of visuals (p=0.045), organization of lecture (p=0.041), and overall rating (p=0.049) between the slideshow and pencast lecture styles, all slightly favoring slideshows (Table S6). There was no difference in ability to concentrate, usefulness for the quiz, understandability, or pacing of material (Figure 1B). Additionally, there was no difference between quiz scores following the two video formats (Chi-squared value: 0.00; p=0.954) (Table S7). However, quiz scores differed between the two tracks (Chi-squared value: 117.3; p<0.01). Specifically, Track 1 (Topic 1 slideshow, Topic 2 pencast) outperformed Track 2 (Topic 1 pencast, Topic 2 slideshow) (Figure 1C). Examples of slideshow and pencast formats are shown in Figure 1D.

Although average quiz scores did not differ between lecture formats, the formats differed markedly in preparation time. Slideshow videos required a mean of 273 minutes (+/− 24 SD) to prepare, whereas pencast videos required a mean of 324 minutes (+/− 18 SD) (p=0.037) (Table 3).

Table 3.

Video preparation time (in minutes).

| Stage | Slideshow 1 | Slideshow 2 | Pencast 1 | Pencast 2 | p-value |
|---|---|---|---|---|---|
| Data gathering | 53 | 42 | 53 | 42 | 1.00 |
| Scripting | 97 | 88 | 97 | 88 | 1.00 |
| Creating visuals | 58 | 42 | 38 | 34 | 0.232 |
| Recording | 14 | 10 | 72 | 64 | 0.006* |
| Editing | 55 | 50 | 61 | 58 | 0.138 |
| Exporting | 15 | 13 | 16 | 15 | 0.312 |
| Uploading | 5 | 4 | 5 | 5 | 0.423 |
| Total | 297 | 249 | 342 | 306 | 0.037* |

*p<0.05 (statistically significant).
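The summary statistics reported for preparation time can be recomputed directly from Table 3. The sketch below sums the stage times per video, checks them against the Total row, and computes the mean and SD per format. With only two videos per format, the reported SDs (24 and 18 minutes) match the population SD (dividing by n); the sample SD (dividing by n − 1) would be larger. This is an arithmetic check on the published table, not the authors’ analysis code, and it does not reproduce the p-values.

```python
from statistics import mean, pstdev, stdev

# Minutes per production stage (data gathering, scripting, creating visuals,
# recording, editing, exporting, uploading), copied from Table 3.
stages = {
    "Slideshow 1": [53, 97, 58, 14, 55, 15, 5],
    "Slideshow 2": [42, 88, 42, 10, 50, 13, 4],
    "Pencast 1": [53, 97, 38, 72, 61, 16, 5],
    "Pencast 2": [42, 88, 34, 64, 58, 15, 5],
}

# Total preparation time per video; should equal the table's "Total" row.
totals = {video: sum(minutes) for video, minutes in stages.items()}

by_format = {
    "slideshow": [totals["Slideshow 1"], totals["Slideshow 2"]],
    "pencast": [totals["Pencast 1"], totals["Pencast 2"]],
}

for fmt, minutes in by_format.items():
    # pstdev divides by n (population SD); stdev divides by n - 1 (sample SD).
    print(f"{fmt}: mean {mean(minutes):.0f} min, "
          f"population SD {pstdev(minutes):.1f}, sample SD {stdev(minutes):.1f}")
# slideshow: mean 273 min, population SD 24.0, sample SD 33.9
# pencast: mean 324 min, population SD 18.0, sample SD 25.5
```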

Qualitative analysis of fifty-eight comments regarding VBL format from the post-test survey revealed recurring themes of pace, ease of focus, and comprehension, with benefits and drawbacks to each format (Table 2, Appendix). The pencast format was perceived as too slow, with excess “drawing time,” yet more interactive and engaging. Viewers preferred the faster pace of the slideshow but found this format less interactive and engaging, making it easier to lose focus and harder to interpret the diagrams alongside the simultaneous narration. Some viewers also found the cursor distracting at times. The slideshow offered clearer explanations of “big picture” concepts, while the pencast better presented specific points. The pencast drawings were also seen as messy, less detailed, lacking context, and harder to interpret than slideshow diagrams.

Several viewers recommended a narrated slideshow with written annotations as the preferred hybrid lecture style, suggesting that colored pens be used to annotate existing diagrams with further explanation. These recommendations are consistent with comments on the strengths and weaknesses of the two formats (Table 2).

DISCUSSION

The vast majority of MD viewers are dental students and professionals, suggesting that VBLs on YouTube have become an accepted learning tool in dental education. The mean age of survey respondents was 24.5 years, indicating that current dental students have gravitated to YouTube as an educational platform. This may reflect a generational divide in technology use in education, consistent with literature reporting that faculty doubt the legitimacy of online education, whereas healthcare students feel more comfortable and less anxious than faculty about technology integration in online education.29,30 Generation Z students also prefer YouTube and other videos over textbooks significantly more than older Millennials do.31 With the constant evolution of new platforms (e.g. TikTok, Instagram Reels), the generational gap may widen, creating tension as more students prefer YouTube and other Web 2.0 platforms, particularly as Generation Z students age into dental school.

VBLs offer several benefits over live lectures, including (1) flexibility to repeat videos at any time, (2) a learning atmosphere with fewer distractions, (3) the ability to control volume or use closed captioning, (4) the possibility of distance learning, (5) the convenience of watching videos on smartphones, and (6) self-pacing with playback speed and desired breaks, as corroborated by our qualitative analysis.8,13 However, videos have limitations, including (1) no potential for kinesthetic learning or discussion-based topics, (2) practical skills being harder to convey, and (3) a greater reliance on student self-motivation and focus.32 Videos are also more expensive and time-consuming to produce initially, yet they can be reused year after year and ultimately result in cost savings.8 Altogether, videos should complement, rather than compete with, live lectures, particularly as the need for remote education grows, spurred by the COVID-19 pandemic.3–6

Viewer survey findings indicated that students are more likely to use online videos as adjunctive study materials than textbooks, social media, and journals (Figure 2B), consistent with other studies in which dental students achieved higher test scores after watching videos than after reading textbooks.10,33 MD viewers have grown from eighteen to more than 200,000 over the past six years, suggesting demand for accessible online resources. Mukhopadhyay et al. observed a similar phenomenon when they uploaded forty videos on dental anatomy and local anesthesia to YouTube, which received 71,000 views in eighteen months.12 The NPS of +82.1 for the MD YouTube channel is high compared with US organizations (mean +32, median +44) and higher education organizations (mean NPS, +32).34 For comparison, Kaplan’s (Kaplan Inc, Fort Lauderdale, FL) estimated NPS is +23.28 This high NPS suggests user satisfaction and likelihood of recommendation for dental VBLs, consistent with our hypothesis.35

Respondents indicated they were more likely to watch videos recommended by faculty and incorporated into courses, consistent with findings by Burns et al.;11 in contrast, universities provide only 5% to 37% of educational dental content on YouTube.7,36 To ensure evidence-based resources, dental schools may want to develop or screen video resources for curricula, as VBLs do not go through a traditional peer-review and editorial process. Some universities may also limit sharing of their VBLs on Web 2.0 platforms, depending on their resource-sharing policies; schools with more restrictive policies may be unable to contribute to freely accessible platforms like YouTube, but could reference VBLs produced elsewhere and develop internal VBLs.

Using Lau’s model for evaluating VBLs with learning analytics, we found a uniform linear AR decline of 1.34% per minute regardless of video length (Figure 3).27 Our results contradict published findings on the ten-to-fifteen-minute attention span of students attending lecture17 and the recommended six-to-twenty-minute video lecture length.37,38 Contrary to our hypothesis, our data suggest that the optimal video length depends on the desired final AR, given the linear decline of viewers. For example, to keep at least 80% of viewers through the end of a video at an average AR decline of 1.34% per minute, the video should be no longer than about 15 minutes (20 percentage points ÷ 1.34 per minute ≈ 14.9 minutes). Note that the AR decline rate may also vary between video producers, topic areas, and presentation styles, as Lau et al.’s AR decline rate was 2.80% per minute.27 AR decline may negatively impact learning; however, the relationship between AR decline and information retention was not investigated in this study.20–22
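Under the linear model, the longest video that still ends with a target retention follows from $L_{\max} = (\mathrm{AR}_{\text{start}} - \mathrm{AR}_{\text{target}}) / r$, where $r$ is the decline rate in percentage points per minute. The sketch below applies that formula to this study’s rate and to Lau et al.’s rate. The function name is hypothetical, and the `start_pct` parameter is an added assumption: it defaults to 100%, but the sharp drop in the first seconds of every video (Figure 3) means a lower effective starting point may be more realistic, which would shorten the recommended length.

```python
def max_video_length(target_retention_pct: float, decline_pct_per_min: float,
                     start_pct: float = 100.0) -> float:
    """Longest video (minutes) whose end-of-video retention stays >= target,
    assuming a straight-line decline from start_pct."""
    if decline_pct_per_min <= 0:
        raise ValueError("decline rate must be positive")
    if not 0 <= target_retention_pct <= start_pct:
        raise ValueError("target must lie between 0 and start_pct")
    return (start_pct - target_retention_pct) / decline_pct_per_min


print(round(max_video_length(80, 1.34), 1))  # 14.9 (this study's rate)
print(round(max_video_length(80, 2.80), 1))  # 7.1 (Lau et al.'s rate)
```

The same target (80% retention) therefore implies a video roughly half as long at Lau et al.’s decline rate, illustrating why a single optimal length may not transfer across producers or topics.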

Regarding VBL formats, Guo et al. found that students engaged one and a half to two times as long with pencast videos as with slideshow videos.38 However, to our knowledge, no data compare the efficacy of content learning between pencast and slideshow VBLs. We found a slight student preference for slideshows, with no significant difference in subsequent test scores, consistent with our hypothesis. Despite randomized allocation, students on Track 1 performed better on both quizzes than students on Track 2. This may be because participants in Track 1 were inadvertently more proficient than those in Track 2, or because certain topics are better suited to a pencast or slideshow format (Figure 1). Qualitative analysis of post-test survey results revealed themes to guide video development, including recommendations for a hybrid format that combines a slideshow with live written annotation, which could leverage the strengths of both formats. Consistent with our results, Cross et al. found that both lecture styles had complementary strengths and weaknesses, with respondents commenting that handwriting is more personal, natural, and engaging, while typeface is clearer, neater, and easier to read.39 For our team, slideshow VBLs took less time to prepare than pencasts; because subsequent test scores were equivalent, slideshows may be preferred by busy educators and students.

Limitations: Survey data were collected from viewers of the MD YouTube channel, which introduces selection bias toward participants who use VBLs and who volunteer for surveys. Roughly 46% of survey respondents were channel subscribers. At the end of videos, YouTube’s algorithm suggested related videos based on viewers’ watch history and preferences, potentially increasing views of MD videos; this was not factored into the data analyses. Because all VBLs were produced by the MD team, responses regarding user experience may not apply to VBLs produced by other groups. Response data were geographically biased, with a majority of respondents from the US and a disproportionate number from North Carolina, raising questions about generalizability to other regions. Viewer demographic data were limited to those available from YouTube analytics, which do not include race or ethnicity. No power calculation guided the sample size for survey enrollment, although the YouTube survey sample (n=683) exceeded the range judged “acceptable” (300–450) and fell within the range judged “very good” (>500) for surveys.40,41 However, because the second portion of the study had only 101 respondents, we were not able to reach full saturation for every topic; a larger sample might have yielded more nuanced sub-topics. Quizzes were administered remotely due to pandemic-related restrictions, and although participants attested to not using notes during the quizzes, we cannot exclude this possibility.

A global survey of dental faculty and students to evaluate VBL utilization and efficacy in curricula would be a valuable next step. Other future directions include extending this study to VBLs produced by other groups and hosted on other Web 2.0 platforms, and comparing the effectiveness of VBLs with that of live, in-person lectures. Such data can guide dental programs in developing and implementing VBLs as remote education continues to expand.

CONCLUSIONS

Dental students and professionals use VBLs on YouTube for educational purposes and are likely to recommend MD VBLs to friends and colleagues (NPS = 82.1). Surveyed students are more likely to use videos as adjunctive study materials than to use textbooks, journals, or social media.
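For readers unfamiliar with the metric, the sketch below computes an NPS using the standard definition (ratings of 0 to 10 on likelihood to recommend; promoters rate 9 or 10, detractors rate 0 to 6; NPS is the percentage of promoters minus the percentage of detractors, ranging from −100 to +100). It is an illustration under that assumption, not the scoring script used in this study, and the ratings in the example are hypothetical rather than the study’s data. The function name `net_promoter_score` is also hypothetical.

```python
from typing import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Standard NPS from 0-10 'how likely are you to recommend' ratings."""
    scores = list(ratings)
    if not scores:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in scores):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in scores if r >= 9)   # ratings 9-10
    detractors = sum(1 for r in scores if r <= 6)  # ratings 0-6
    # Passives (7-8) count only in the denominator.
    return 100.0 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    # Hypothetical responses: 85 promoters, 12 passives, 3 detractors.
    example = [10] * 85 + [8] * 12 + [5] * 3
    print(net_promoter_score(example))  # 82.0
```

Because passives dilute the score without subtracting from it, a high NPS requires both many promoters and few detractors.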

There is no optimal video length that maximizes audience retention. A shorter video will have more complete views because it ends sooner, not because the rate of decline in audience retention changes.

The format of video lectures (slideshow or pencast) does not significantly affect quiz scores, and students slightly prefer slideshows. We recommend slideshow videos with live annotation (a hybrid model), which can offer the benefits of both formats while reducing VBL preparation time.

Supplementary Material

ACKNOWLEDGEMENTS

We would like to thank Michael D. Wolcott for his insight and advice while on our thesis committee. We thank and acknowledge all our participants for their time and engagement with this study.

FUNDING

This research was supported by the University of North Carolina (UNC) Doctor of Dental Surgery (DDS) Short-Term Research Fellowship sponsored by the School of Dentistry, Dental Alumni, and the Dental Foundation of North Carolina, as well as by the Southern Association of Orthodontists (to RTG). This study was also supported by the American Association of Orthodontists Foundation (AAOF) Martin ‘Bud’ Schulman Postdoctoral/Junior Faculty Fellowship (to LJ) and the National Institutes of Health (NIH), National Institute of Dental and Craniofacial Research (NIDCR) K08 grant #K08DE030235 (to LJ).

Footnotes

DISCLOSURE

RTG is the owner of the Mental Dental YouTube channel. He earns advertisement revenue from the videos, though they are freely available for public consumption. This research was conducted using some of the content he curated. However, the research questions were not geared toward promoting this educational platform but rather toward investigating its effectiveness objectively. All other authors have no conflicts of interest to declare.

Data Availability Statement

The data that support the findings of this study are available in the supplementary material of this article.

REFERENCES

1. Jackson TH, Zhong J, Phillips C, Koroluk LD. Self-Directed Digital Learning: When Do Dental Students Study? J Dent Educ. 2018;82(4):373–378.

2. Jackson TH, Hannum WH, Koroluk L, Proffit WR. Effectiveness of web-based teaching modules: test-enhanced learning in dental education. J Dent Educ. 2011;75(6):775–781.

3. Farrokhi F, Mohebbi SZ, Farrokhi F, Khami MR. Impact of COVID-19 on dental education- a scoping review. BMC Med Educ. 2021;21(1):587.

4. Bartok-Nicolae C, Raftu G, Briceag R, et al. Virtual and Traditional Lecturing Technique Impact on Dental Education. Applied Sciences. 2022;12(3):1678.

5. Bennardo F, Buffone C, Fortunato L, Giudice A. COVID-19 is a challenge for dental education-A commentary. Eur J Dent Educ. 2020;24(4):822–824.

6. Generali L, Iani C, Macaluso GM, Montebugnoli L, Siciliani G, Consolo U. The perceived impact of the COVID-19 pandemic on dental undergraduate students in the Italian region of Emilia-Romagna. Eur J Dent Educ. 2021;25(3):621–633.

7. Dias da Silva MA, Pereira AC, Walmsley AD. Who is providing dental education content via YouTube? Br Dent J. 2019;226(6):437–440.

8. Brockfeld T, Müller B, de Laffolie J. Video versus live lecture courses: a comparative evaluation of lecture types and results. Med Educ Online. 2018;23(1):1555434.

9. Paegle RD, Wilkinson EJ, Donnelly MB. Videotaped vs traditional lectures for medical students. Med Educ. 1980;14(6):387–393.

10. Kalludi S, Punja D, Rao R, Dhar M. Is Video Podcast Supplementation as a Learning Aid Beneficial to Dental Students? J Clin Diagn Res. 2015;9(12):CC04–7.

11. Burns LE, Abbassi E, Qian X, Mecham A, Simeteys P, Mays KA. YouTube use among dental students for learning clinical procedures: A multi-institutional study. J Dent Educ. 2020;84(10):1151–1158.

12. Mukhopadhyay S, Kruger E, Tennant M. YouTube: a new way of supplementing traditional methods in dental education. J Dent Educ. 2014;78(11):1568–1571.

13. Seo CW, Cho AR, Park JC, Cho HY, Kim S. Dental students’ learning attitudes and perceptions of YouTube as a lecture video hosting platform in a flipped classroom in Korea. J Educ Eval Health Prof. 2018;15:24.

14. Duncan I, Yarwood-Ross L, Haigh C. YouTube as a source of clinical skills education. Nurse Educ Today. 2013;33(12):1576–1580.

15. Think with Google. Consumer Insights. Accessed March 14, 2023. https://www.thinkwithgoogle.com/consumer-insights/consumer-trends/self-directed-learning-youtube-work-study-hobbies/

16. Mental Dental® YouTube Channel. Accessed March 14, 2023. https://www.youtube.com/c/MentalDental/about

17. Bradbury NA. Attention span during lectures: 8 seconds, 10 minutes, or more? Adv Physiol Educ. 2016;40(4):509–513.

18. Koladycz R, Fernandez G, Gray K, Marriott H. The Net Promoter Score (NPS) for Insight Into Client Experiences in Sexual and Reproductive Health Clinics. Glob Health Sci Pract. 2018;6(3):413–424.

19. NICE Satmetrix. What Is Net Promoter? Accessed March 14, 2023. https://www.netpromoter.com/know/

20. Brame CJ. Effective educational videos: Principles and guidelines for maximizing student learning from video content. CBE Life Sci Educ. 2016;15(4).

21. Guo PJ, Kim J, Rubin R. How video production affects student engagement. In: Proceedings of the First ACM Conference on Learning @ Scale Conference. ACM; 2014:41–50.

22. Yee A, Padovano WM, Fox IK, et al. Video-based Learning in Surgery. Ann Surg. 2020;272(6):1012–1019.

23. Adams School of Dentistry. Doctor of Dental Surgery (DDS) Program. Accessed March 14, 2023. https://dentistry.unc.edu/education/dds/

24. The University of North Carolina at Chapel Hill. Doctor of Dental Surgery, D.D.S. Updated June 28, 2022. Accessed March 14, 2023. https://catalog.unc.edu/undergraduate/programs-study/doctor-of-dental-surgery-dds/#requirementstext

25. Miles MB, Huberman AM, Saldaña J. Qualitative Data Analysis: A Methods Sourcebook. 4th ed. SAGE; 2020.

26. Saldaña J. The Coding Manual for Qualitative Researchers. SAGE Publications; 2009.

27. Lau KHV, Farooque P, Leydon G, Schwartz ML, Sadler RM, Moeller JJ. Using learning analytics to evaluate a video-based lecture series. Med Teach. 2018;40(1):91–98.

28. Leinonen J, Laitala ML, Pirttilahti J, Niskanen L, Pesonen P, Anttonen V. Live lectures and videos do not differ in relation to learning outcomes of dental ergonomics. Clin Exp Dent Res. 2020;6(5):489–494.

29. Makarem SC. Using Online Video Lectures to Enrich Traditional Face-to-Face Courses. International Journal of Instruction. 2015;8(2):155–164.

30. Culp-Roche A, Hampton D, Hensley A, et al. Generational Differences in Faculty and Student Comfort With Technology Use. SAGE Open Nurs. 2020;6:2377960820941394.

31. Pearson. New Research Finds YouTube, Video Drives Generation Z Learning Preference. August 8, 2018. Accessed March 14, 2023. https://plc.pearson.com/en-US/news/new-research-finds-youtube-video-drives-generation-z-learning-preference

32. Ranasinghe L, Wright L. Video lectures versus live lectures: competing or complementary? Med Educ Online. 2019;24(1):1574522.

33. Jain A, Bansal R, Singh K, Kumar A. Attitude of medical and dental first year students towards teaching methods in a medical college of northern India. J Clin Diagn Res. 2014;8(12):XC05–XC08.

34. Ghosh A. NPS: The Most Important Metric Your Higher Education Competitors Aren’t Using. Hanover Research. March 10, 2022. Accessed March 14, 2023. https://www.hanoverresearch.com/insights-blog/metric-your-higher-education-competitors-arent-using/?org=higher-education

35. Comparably. Kaplan Test Prep NPS & Customer Reviews. Accessed March 14, 2023. https://www.comparably.com/brands/kaplan-test-prep

36. Knösel M, Jung K, Bleckmann A. YouTube, dentistry, and dental education. J Dent Educ. 2011;75(12):1558–1568.

37. Nematollahi S, St John PA, Adamas-Rappaport WJ. Lessons learned with a flipped classroom. Med Educ. 2015;49(11):1143.

38. Guo PJ, Kim J, Rubin R. How video production affects student engagement. In: Proceedings of the First ACM Conference on Learning @ Scale Conference. ACM; 2014:41–50.

39. Cross A, Bayyapunedi M, Cutrell E, Agarwal A, Thies W. TypeRighting. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM; 2013:793–796.

40. Comrey AL, Lee HB. A First Course in Factor Analysis. Vol XII. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum; 1992.

41. Guadagnoli E, Velicer WF. Relation of sample size to the stability of component patterns. Psychol Bull. 1988;103(2):265–275.
