Abstract

Technological advancements in computer science have started to bring artificial intelligence (AI) from the bench closer to the bedside. While much remains to be done and improved, AI models in medical imaging and radiotherapy are rapidly being developed and increasingly deployed in clinical practice. At the same time, AI governance frameworks are still under development. Clinical practitioners involved with procuring, deploying, and adopting AI tools in the UK should be well-informed about these AI governance frameworks. This scoping review aimed to map out available literature on AI governance in the UK, focusing on medical imaging and radiotherapy. Searches were performed on Google Scholar, PubMed, and the Cochrane Library, between June and July 2022. Of 4225 initially identified sources, 35 were finally included in this review. A comprehensive conceptual AI governance framework was proposed, guided by the need for rigorous AI validation and evaluation procedures, the accreditation rules and standards, and the fundamental ethical principles of AI. Fairness, transparency, trustworthiness, and explainability should be drivers of all AI models deployed in clinical practice. Appropriate staff education is also mandatory to ensure AI’s safe and responsible use. Multidisciplinary teams under robust leadership will facilitate AI adoption, and it is crucial to involve patients, the public, and practitioners in decision-making. Collaborative research should be encouraged to enhance and promote innovation, while attention should be paid to the ongoing auditing of AI tools to ensure safety and clinical effectiveness.

Introduction

Artificial intelligence (AI) is increasingly employed in healthcare. 1 Recent technology and neuroscience breakthroughs have brought AI from the lab to the clinic. 2 Medical imaging was one of the first disciplines to adopt AI technologies, 3 with AI-enabled applications being used for pathology detection and staging, image reconstruction, segmentation, image optimisation, automation and optimisation of workflows, automation of imaging protocols, and feature extraction, to name just a few. 4–8

While AI-driven applications for use in clinical practice are increasing, there is, in parallel, rapidly growing interest in, and discussion of, the need for rigorous AI governance frameworks. 9 AI governance may entail processes related to the ethical use and deployment of AI tools, regulation and accreditation of AI models, liability, accountability, data protection processes, and education, among others.

In medical imaging, the implementation of robust AI governance frameworks is required for the safe adoption of AI in clinical practice.

Although AI is a ubiquitous term, AI governance remains loosely defined and largely underdeveloped, with no consensus on what AI governance might entail. 10 Recent research has shown important variation among organisations and countries regarding governance. A lack of standardisation could impede AI adoption, create market disparities, and compromise safety. 11 Processes like procurement, validation and evaluation, monitoring and decommissioning, as part of the AI product lifecycle, are all impacted by the lack of standardised AI governance frameworks. Hence, there is an urgent need to propose a robust, unified governance framework to enhance the trustworthiness and transparency of AI systems and mitigate any potential risks associated with the implementation of AI-enabled solutions. 12,13

In medical imaging departments, healthcare professionals, including radiographers, radiologists, and medical physicists, are often responsible for procuring medical imaging equipment. Multidisciplinary teams also ensure the safe running of the equipment by implementing robust quality assurance programmes and escalating concerns, as required. Given the ongoing increase of AI tools in clinical imaging, radiographers, radiologists, medical physicists and other relevant professionals are expected to acquire substantial knowledge related to AI in medical imaging and radiotherapy, to be in a position to facilitate clinical adoption. 14–17 Different regulatory and professional bodies in healthcare and medical imaging are pushing for AI competencies to become central to healthcare practitioners’ training. 18–22

Scoping reviews are ideal for exploring emerging literature on fast-developing topics and identifying knowledge gaps. 23 This scoping review, part of a more comprehensive research project exploring the notion of AI governance in medical imaging and radiotherapy, aims to map out all currently available literature (peer-reviewed and grey literature) in these fields and propose a comprehensive AI governance framework.

The research question that guides this scoping review is “What might be relevant to an AI governance framework in medical imaging and radiotherapy in the UK?”. This question was generated in line with the Population, Concept, Context framework for scoping reviews. 24

Methods

Review protocol

This article is structured in line with the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) checklist. 25

An explicit review protocol was followed for inclusion and exclusion criteria, data extraction methods and objectives. 24 Ethical approval was obtained from City, University of London School of Health and Psychological Sciences Research Ethics Committee (ref: ETH2122-1015).

Eligibility criteria

Table 1 below demonstrates the eligibility criteria applied to this scoping review.

Table 1.

Eligibility criteria

| Criterion |
|---|
| Studies published within the last 5 years (2017–2022). |
| Studies published in the English language only. |
| Full-text articles only. |
| Both peer-reviewed studies and grey literature (white papers, guidelines, guidance, standards, and regulations) were included. |
| All study designs were eligible for this review. |

Information sources

The following databases were searched: a) Google Scholar, b) PubMed, and c) The Cochrane Library. Searches were initiated on 30 May 2022, and the last search was performed on 17 June 2022. A further search was performed on 15 October 2022 to capture any more recent publications.

Search

A consistent methodology was applied for all database searches to enhance the reproducibility of this scoping review. 26 All searches were performed using explicit, pre-defined keywords related to the topic under exploration. Appropriate search strings were developed using the Boolean operators “AND” and “OR” to narrow down the results. A fully worked example of the search strategy for one database is provided as Supplementary Material. The pearl growing search technique was also applied to identify relevant sources of evidence in the reference lists of already obtained studies.
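As a hedged illustration of how a Boolean search string of this kind can be assembled, the sketch below joins synonyms with OR inside each concept and then joins the concepts with AND. The function name `build_search_string` and the keywords are hypothetical placeholders chosen for illustration; the search terms actually used in this review are those given in the Supplementary Material.

```python
def build_search_string(concept_groups):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    clauses = []
    for synonyms in concept_groups:
        # Quote multi-word terms so they are searched as exact phrases.
        quoted = [f'"{term}"' if " " in term else term for term in synonyms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical keywords, for illustration only (not the review's actual terms).
concept_groups = [
    ["artificial intelligence", "machine learning"],
    ["governance", "regulation"],
    ["medical imaging", "radiotherapy"],
]

query = build_search_string(concept_groups)
print(query)
```

In this arrangement, OR widens each concept to its synonyms, while AND restricts the results to sources that touch on every concept.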

Selection of sources of evidence

A researcher (NS) initially screened the articles at the level of titles and abstracts to identify relevant/non-relevant results. Non-relevant articles were excluded at this stage, based on the content provided in titles/abstracts, and only relevant articles were assessed for eligibility based on inclusion and exclusion criteria (full-text evaluation). All results were saved on the Zotero reference manager, v. 6.0.13 (Corporation for Digital Scholarship, Virginia), for further evaluation, and duplicates were automatically removed. All relevant studies were read thoroughly and evaluated against the eligibility criteria. A senior researcher (CM) then advised on the final study selection, and that list was reviewed by the research team and finalised based on consensus reading amongst the researchers.

Data charting process

To extract all meaningful data from the eligible studies, a data-charting form was used to allow a visual map of the included studies and enable correlations and convergence of ideas and topics. 27 This charting table (Table 2) allowed for a standardised method of data charting, and any disagreements were resolved by consensus.

Table 2.

Data extraction form

| Title | Author(s) | Year | Country | Aim/purpose | Population/sample | Methods | Intervention type | Duration of intervention | Key findings related to the scoping review question |
|---|---|---|---|---|---|---|---|---|---|
| A governance model for the application of AI in health Care. | Reddy et al. | 2020 | Australia | To propose an AI governance model. | n/a | Narrative review | n/a | n/a | |
| A guide to good practice for digital and data-driven health technologies. | Department of Health & Social Care | 2021 | UK | To support innovators in understanding what the NHS is looking for when it buys digital and data-driven technology for use in health and care. | n/a | Grey literature | n/a | n/a | |
| Evidence standards framework (ESF) for digital health technologies. | National Institute for Health and Care Excellence. | 2022 | UK | For companies that develop or distribute, and for evaluators and decision makers in the health and care system. | n/a | Grey literature | n/a | n/a | |
| Ethics Guidelines for trustworthy AI. | European Commission | 2019 | Belgium | To promote trustworthy AI and set out a framework to achieve this. | n/a | Grey literature | n/a | n/a | |
| Artificial intelligence in hospitals: providing a status quo of ethical considerations in academia to guide future research. | Mirbadaie et al. | 2021 | Germany | To identify the status quo of interdisciplinary research in academia on ethical considerations and dimensions of AI in hospitals. | 15 articles published in medical journals. | Systematic discourse | n/a | n/a | |
| Artificial Intelligence and Healthcare Regulatory and Legal Concerns. | Ganapathy K. | 2021 | India | To discuss liability issues when AI is deployed in healthcare. | n/a | Narrative Review | n/a | n/a | |
| Artificial Intelligence and Liability in Medicine: Balancing Safety and Innovation. | Maliha et al. | 2021 | USA | To discuss liability issues. | n/a | Narrative Review | n/a | n/a | |
| Artificial intelligence and medical imaging 2018: French Radiology Community white Paper. | SFR-IA Group, CERF | 2018 | France | To issue a position paper on AI. | n/a | Narrative Review | n/a | n/a | |
| Artificial intelligence as a medical device in radiology: ethical and regulatory issues in Europe and the United States. | Pesapane et al. | 2018 | Binational | To analyse the legal framework regulating medical devices and data protection in Europe and in the United States. | n/a | Narrative Review | n/a | n/a | |
| Artificial Intelligence in Cardiovascular Imaging: “Unexplainable” Legal and Ethical Challenges? | Lang et al. | 2022 | Canada | To discuss legal and ethical issues arising from unexplainable AI models. | n/a | Narrative Review | n/a | n/a | |
| Artificial intelligence in healthcare: a critical analysis of the legal and ethical implications. | Schonberger D. | 2019 | UK | To discuss ethical and legal challenges of AI in healthcare. | n/a | Narrative review | n/a | n/a | |
| Artificial Intelligence in Radiology—Ethical Considerations. | Brady & Neri | 2020 | Multinational | To explain some of the ethical challenges, and some of the measures we may take to protect against misuse of AI. | n/a | Narrative review | n/a | n/a | |
| Canadian Association of Radiologists White Paper on Artificial Intelligence in Radiology. | Tang et al. | 2018 | Binational | To inform CAR members and policymakers on key terminology, educational needs of members, research and development, partnerships, potential clinical applications, implementation, structure and governance, role of radiologists, and potential impact of AI on radiology in Canada. | n/a | Narrative Review | n/a | n/a | |
| Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. | Mongan et al. | 2020 | USA | To propose CLAIM, the Checklist for AI in Medical Imaging. | n/a | Narrative Review | n/a | n/a | -Guidelines for reporting AI studies. |
| DECIDE-AI: new reporting guidelines to bridge the development to implementation gap in clinical artificial intelligence. | The DECIDE-AI Steering Group. | 2021 | Multinational | To propose guidelines to report key information items between the in silico algorithm development/validation and large-scale clinical trials evaluating AI interventions. | n/a | Narrative review | n/a | n/a | -Reporting guidelines. |
| Do no harm: a roadmap for responsible machine learning for health care. | Wiens et al. | 2019 | Binational | To provide a comprehensive overview of the barriers to deployment and translational impact. | n/a | Narrative review | n/a | n/a | |
| Emerging Consensus on ‘Ethical AI’: Human Rights Critique of Stakeholder Guidelines. | Fukuda-Parr & Gibbons | 2021 | USA | To review 15 guidelines preselected to be strongest on human rights, and on global health. | 15 guidelines | Narrative review | n/a | n/a | |
| Ethical and legal challenges of informed consent applying artificial intelligence in medical diagnostic consultations. | Astromske et al. | 2021 | Lithuania | To discuss the process of informed consent when using AI. | n/a | Narrative review | n/a | n/a | |
| Ethical considerations for artificial intelligence: an overview of the current radiology landscape. | D'Antonoli TA | 2020 | Switzerland | To discuss ethical issues around AI. | n/a | Narrative review | n/a | n/a | |
| Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. | Geis et al. | 2019 | Multinational | Summary of a joint statement on AI Ethics. | n/a | Narrative review | n/a | n/a | |
| Ethics of Using and Sharing Clinical Imaging Data for Artificial Intelligence: A Proposed Framework. | Larson et al. | 2020 | USA | To propose an ethical framework for using and sharing clinical data for the development of AI applications. | n/a | Narrative review | n/a | n/a | |
| Evaluation and Real-World Performance Monitoring of Artificial Intelligence Models in Clinical Practice: Try It, Buy It, Check It. | Allen et al. | 2021 | USA | To discuss why regulatory clearance alone may not be enough to ensure AI will be safe and effective in all radiological practices. | n/a | Narrative review | n/a | n/a | |
| FUTURE-AI: Guiding Principles and Consensus Recommendations for Trustworthy Artificial Intelligence in Medical Imaging. | Lekadir et al. | 2021 | Multinational | To introduce a careful selection of guiding principles drawn from the accumulated experiences, consensus, and best practices from five large European projects on AI in Health Imaging. | n/a | Narrative review | n/a | n/a | |
| Identifying Ethical Considerations for Machine Learning Healthcare Applications. | Char et al. | 2020 | USA | To identify ethical concerns of ML healthcare applications. | n/a | Narrative review | n/a | n/a | |
| A Buyer’s Guide to AI in Health and Care. | NHSx | 2020 | UK | To offer practical guidance on the questions to be asking before and during any AI procurement exercise in health and care. | n/a | Grey literature | n/a | n/a | |
| Privacy in the age of medical big data. | Price & Cohen | 2019 | Binational | To discuss patient privacy, consent, and data collection. | n/a | Narrative review | n/a | n/a | |
| Regulatory Frameworks for Development and Evaluation of Artificial Intelligence–Based Diagnostic Imaging Algorithms: Summary and Recommendations. | Larson et al. | 2021 | Binational | To review the major regulatory frameworks for software as a medical device applications, identify major gaps, and propose additional strategies to improve the development and evaluation of diagnostic AI algorithms. | n/a | Narrative review | n/a | n/a | |
| Reporting guidelines for clinical trials of artificial intelligence interventions: the SPIRIT-AI and CONSORT-AI guidelines. | Ibrahim et al. | 2021 | Binational | To build new guidelines to report clinical trials of AI interventions. | n/a | Narrative review | n/a | n/a | -Reporting guidelines |
| The European artificial intelligence strategy: implications and challenges for digital health. | Cohen et al. | 2020 | Multinational | To present the challenges associated with the European Commission white paper on AI. | n/a | Narrative review | n/a | n/a | |
| The proof of the pudding: in praise of a culture of real-world validation for medical artificial intelligence. | Cabitza & Zeitoun | 2019 | Binational | To propose four types of validity corresponding to different perspectives to evaluate true clinical validity. | n/a | Narrative review | n/a | n/a | |
| The roadmap to an effective AI assurance ecosystem. | Centre for Data Ethics and Innovation | 2021 | UK | To set out the steps needed to grow a mature AI assurance industry. | n/a | Grey literature | n/a | n/a | |
| To buy or not to buy—evaluating commercial AI solutions in radiology (the ECLAIR guidelines). | Omoumi et al. | 2021 | Multinational | To propose a practical framework that will help stakeholders evaluate commercial AI solutions in radiology and reach an informed decision. | n/a | Narrative review | n/a | n/a | |
| Towards a framework for evaluating the safety, acceptability and efficacy of AI systems for health: an initial synthesis. | Morley et al. | 2021 | UK | To set out a minimally viable framework for evaluating the safety, acceptability and efficacy of AI systems for healthcare. | n/a | Narrative review | n/a | n/a | |
| Understanding healthcare workers’ confidence in AI. | NHS AI Lab & Health Education England | 2022 | UK | To explore the factors influencing healthcare workers’ confidence in AI technologies and how these can inform the development of related education and training. | n/a | Grey literature | n/a | n/a | |
| Digital Technology Assessment Criteria (DTAC) | NHS England | 2021 | UK | To give patients and staff the confidence that the digital tools they use meet NHS’ clinical safety, data protection, technical security, interoperability and usability and accessibility standards. | n/a | Grey literature | n/a | n/a | |

Data items

The team extracted essential study characteristics related to author(s), year of publication, country of origin, study population, and sample size. At the same time, any critical data relevant to the scoping review question was also extracted.

Data analysis and synthesis of results

All included studies were coded and then grouped according to their content (e.g. ethics, regulation, validation etc.). Content analysis was performed to identify concepts, categories, and themes 28 of AI governance included in the eligible papers. These themes were then compiled to inform a conceptual framework loosely based on previous clinical governance frameworks in the UK. 29–31

Results

Selection of sources of evidence

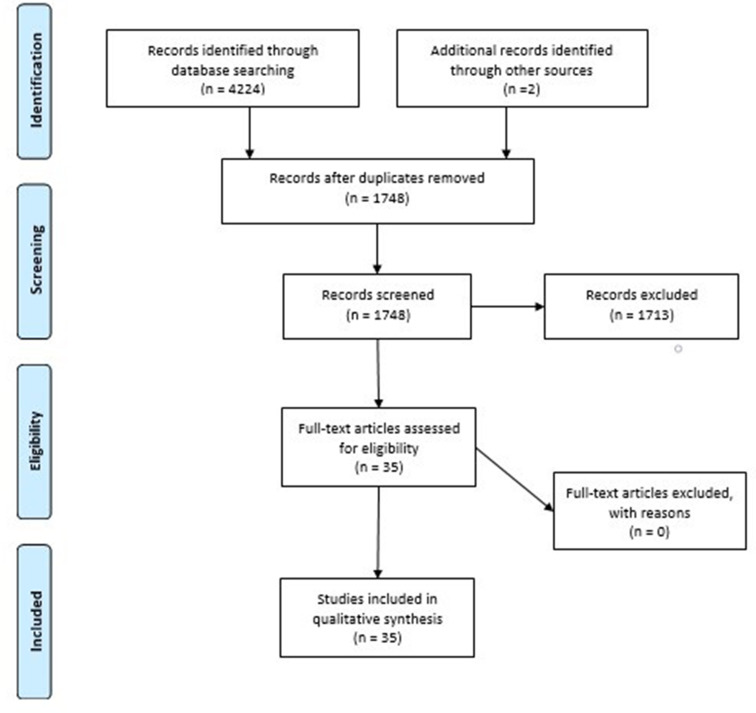

In total, 35 articles were included in this scoping review. The following diagram (Figure 1) demonstrates the details related to the search process, screening of articles, and final selection.

Figure 1.

PRISMA flow diagram. PRISMA, Preferred Reporting Items for Systematic reviews and Meta-Analyses.

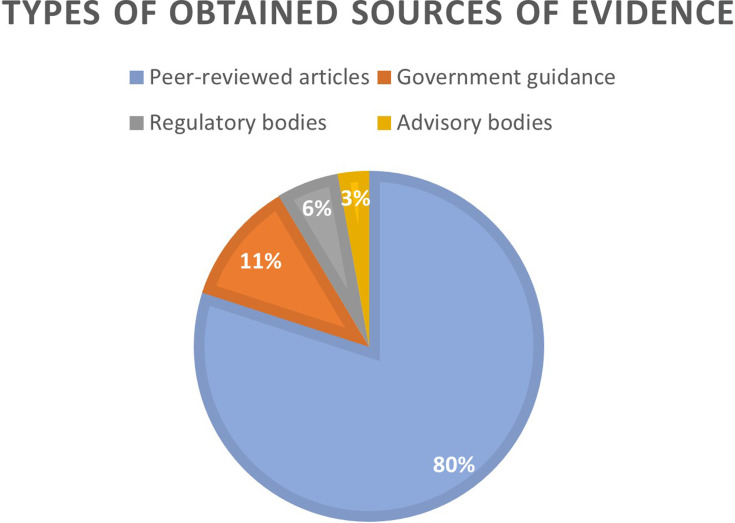

Out of 35 sources of evidence included in this scoping review, 28 articles were identified as reviews, while 7 related to ‘grey literature’ (government guidance and guidelines issued by regulatory, advisory, or professional bodies). In terms of geographical distribution, the obtained studies were from the UK (n = 8), USA (n = 6), EU (n = 5), Canada (n = 1), Australia (n = 1), India (n = 1), while a further 13 of them were identified as binational/multinational. With regard to year of publication, the largest number were published in 2021 (n = 15), followed by 2020 (n = 8), 2019 (n = 6), 2018 (n = 3) and 2022 (n = 3). Figure 2 provides information on the types of the obtained sources of evidence.

Figure 2.

Types of the obtained sources of evidence.

The main outcome of this study was an AI governance framework constructed from published evidence.

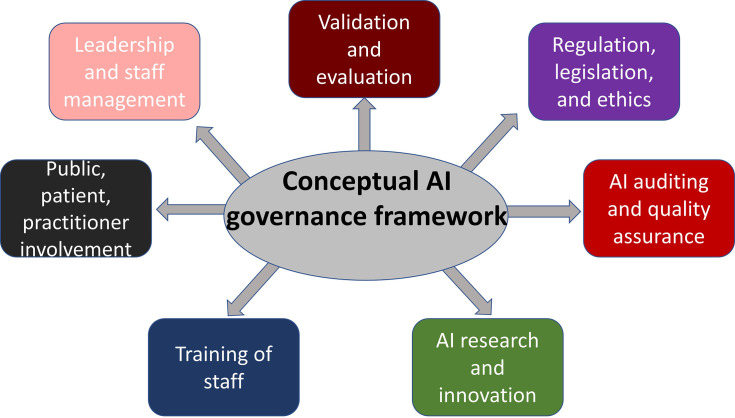

Suggested AI governance framework

The main findings were classified into concepts, then grouped into categories and further synthesised into themes in line with a content analysis approach. 32 The results of this scoping review enabled the researchers to propose a conceptual AI governance framework based on the most widely discussed topics and principles of AI governance. The following figure (Figure 3) demonstrates the seven pillars of AI governance, as identified in the literature and synthesised here into themes.

Figure 3.

Suggested AI governance framework. AI, artificial intelligence.

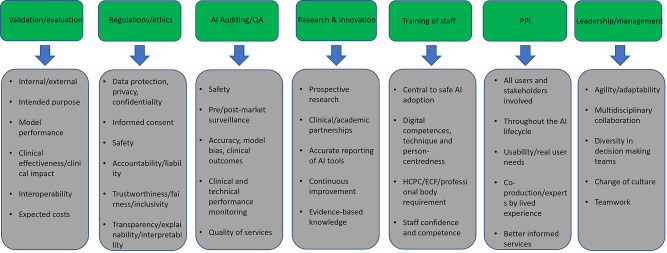

The above AI governance framework includes some fundamental principles of AI governance, such as validation, evaluation, and auditing of AI systems, while also highlighting the importance of research and innovation, appropriate staff education, and effective leadership to ensure the safe and successful deployment of AI in clinical practice. Some of the most important categories, allowing finer detail of the suggested AI governance framework, as identified by this scoping review, are summarised in the following figure (Figure 4) and in the discussion.

Figure 4.

Important concepts under each pillar of the suggested AI governance framework.

Discussion

Validation and evaluation

Validation and evaluation were highlighted in the literature as vital to assess an AI model’s technical performance and clinical effectiveness before clinical deployment, 33,34 ideally using multiple metrics and appropriate statistical methods 35 to ensure that the model is aligned with its intended purpose. 36–38 Evidence suggests the need for internal and external validation using unseen data. Validation has also been used in other contexts, e.g. to assess the generalisability/interoperability of the model. 39,40

Standardised, rigorous validation protocols and curated databases have been developed to facilitate this process. 41 Assessment of the clinical effectiveness of an AI model examines both its ability to generate the intended output effectively and to have a meaningful clinical impact. 34,42 Hence, a 3-phase validation process has been proposed, consisting of validation against the test set of the data set (internal validation), validation against unseen data (external validation), and validation against diverse datasets from multiple centres. 35
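As an illustrative sketch only, the three phases can be pictured as scoring one fixed model on progressively less familiar data and comparing the results. The helper names (`evaluate`, `three_phase_validation`), the choice of sensitivity and specificity as metrics, and the toy labels are assumptions made for this example; they are not taken from the cited literature.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    sensitivity: float
    specificity: float


def evaluate(y_true, y_pred):
    """Sensitivity and specificity for binary labels (1 = finding present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return Metrics(sensitivity, specificity)


def three_phase_validation(phases):
    """phases maps a phase name to (y_true, y_pred) for that data set."""
    return {name: evaluate(y_true, y_pred) for name, (y_true, y_pred) in phases.items()}


# Toy, made-up labels and predictions for each phase.
phases = {
    "internal": ([1, 1, 0, 0], [1, 1, 0, 0]),      # held-out test split
    "external": ([1, 1, 0, 0], [1, 0, 0, 0]),      # unseen data
    "multi-centre": ([1, 1, 0, 0], [1, 0, 0, 1]),  # diverse data from several sites
}

report = three_phase_validation(phases)
for name, m in report.items():
    print(f"{name}: sensitivity={m.sensitivity:.2f}, specificity={m.specificity:.2f}")
```

A drop in either metric from the internal to the external or multi-centre phase, as in the toy data, would suggest limited generalisability.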

While this might sound optimal for diagnostic accuracy and clinical efficiency, in reality, data privacy and security requirements around training and testing AI tools often make large, diverse, multisite data sets inaccessible to developers. 43

AI model interoperability enables seamless data flow across different imaging centres, systems, or geographical areas. 42,44 Interoperability in hardware, software, and data use can be achieved if large, representative training data sets are employed. 45 A common interoperability software framework has been suggested in clinical imaging. 41

Estimation of projected additional costs compared to standard practice 46 and their impact on the use of available resources 39 are also central to a comprehensive evaluation process.

Regulations, legislation, and ethics

The included papers reinforce the need for rigorous regulatory frameworks to ensure the safe use of AI-enabled medical devices; however, stringent standards may come at the cost of limiting innovation. 44 Regulations must be applied to data protection, safety, and ethical use of AI models. 47 However, regulatory frameworks have not yet been standardised in many countries 48 due to the pace of technological advancement. 49

In the EU, all medical devices must be classified according to their risk and conform to the required CE regulations. 39 In the UK, all medical devices should be registered with the MHRA and undergo a UK Conformity Assessment (UKCA) from July 2024 due to the UK’s exit from the EU. 50 CE-marked devices will be accepted in the UK until that date. Devices CE-marked under the Medical Devices Directive (before 2021) will have a further 3 years to gain a UKCA certification, and devices CE-marked under the Medical Devices Regulation will have a further 5 years. In addition, all medical devices should also comply with the General Data Protection Regulation and, in the UK, the NHS Digital Technologies Assessment Criteria framework. 20,42 Moreover, all AI models should be classified according to their intended purpose, and this classification should be based on their potential risk to the system and service users. 46

Data protection is paramount when using AI technologies; all organisations must safely use, store, and dispose of data while upholding privacy and confidentiality. 42,51–55

Informed consent is another vital element of AI governance relating to the right of every patient/data owner to be informed about when their data will be used and for what purpose. 56 Consent reinforces the right to human autonomy. 53,57 Also, informed consent from data owners must be a dynamic process, and data owners should have the right to access their data and decide how it can be accessed by third parties. 51

In addition, all organisations must take appropriate steps to ensure data safety against potential adversarial attacks, since data collection and usage raise concerns regarding cybersecurity. 51 Safety might also be compromised by the ‘algorithm update problem’ or ‘concept drift’, which can impact the algorithm’s performance over time as new data comes in. 58 Algorithms could be locked to remain static, but this limits usability; a solution to this challenge could be to assess the algorithms periodically for any changes. 44,58 Maintaining data safety on an ongoing basis can be challenging and costly, but it is necessary.

Serious concerns have been raised regarding potential patient harm from poor use or insufficient validation of AI models. 59 Liability of health practitioners is associated mainly with medical malpractice and negligence, while developers’ liability would most likely fall under product design liability. 59 Clinical practitioners may be liable for implementing inappropriate or non-validated AI tools in clinical practice or for failing to substantiate their recommendations.

All AI models must address the principle of fairness 36,39,51 and avoidance of harm by minimising bias. 45 For AI models to be fair, it is essential to ensure equity of benefits and costs and eliminate discrimination, stigmatisation, and unfair bias. 36 Algorithmic biases are often due to non-diverse training data sets or testing only on specific population groups. 44,60 AI models are prone to discrimination biases, which have been confirmed to be an important ethical issue. 53
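One simple way to probe for this kind of algorithmic bias is to compare a performance metric across population subgroups. The sketch below is a minimal illustration under stated assumptions: the subgroup names, the use of sensitivity as the metric, the 0.1 gap threshold and the records are all hypothetical and are not drawn from the reviewed sources.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary labels."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Only groups with at least one positive case appear in the result.
    return {g: true_positives[g] / positives[g] for g in positives}


def max_gap(per_group):
    """Largest difference in the metric between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Made-up records: (subgroup, ground truth, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 0),
]

per_group = sensitivity_by_group(records)
gap = max_gap(per_group)
GAP_THRESHOLD = 0.1  # illustrative tolerance, not a recommended value
flagged = gap > GAP_THRESHOLD
print(per_group, gap, flagged)
```

A flagged gap does not by itself establish unfairness, but it indicates where the training and testing data may need closer scrutiny.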

In addition, AI models should be transparent to be fair, inclusive, and easy to evaluate. Transparency requires AI models to be available for interrogation at all times. 47,61 Also, transparent AI solutions will help build trust between patients and healthcare providers and between developers and clinical practitioners as end users. 52 Another way to increase the transparency of AI models is to establish good traceability. This principle refers to the standardised documentation of all development processes, including data collection, labelling, devices used, and annotation tools. 44

Furthermore, AI models must be explainable so that the patients and the trained staff can understand the reasoning behind their decision-making process. 39 This could enhance trust between end users and AI technologies. Explainability is closely associated with transparency. 38,58

In contrast, interpretability, often confused with explainability, refers to the ability of a model to make correct associations between cause and effect. Interpretability increases when AI models are explainable, although these models may exhibit reduced performance due to becoming more prone to external manipulation. 48

AI auditing and quality assurance

All organisations must develop ongoing procedures to test the AI model’s performance throughout its life cycle. 20 Real-world performance monitoring, in the form of pre- and post-market surveillance, has been suggested to assess any deviation in the model’s performance over time. 40 These procedures should focus on load tests, safety, bias testing 20 and clinical and technical performance over time. Regular audits have been recommended to test the clinical safety of these models, particularly after model updates. Reporting of these audits should include accuracy, model biases, and clinical outcomes. 48 All vendors should outline appropriate plans to assess their model’s performance drifts, automatically install any necessary updates and mitigate any risks from these updates. 39
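A minimal sketch of such real-world performance monitoring is given below: accuracy is recomputed for each audit period and compared with the accuracy recorded at validation. The function name `audit_performance`, the baseline value, the tolerance and the per-period counts are hypothetical, chosen only to illustrate the idea.

```python
def audit_performance(baseline_accuracy, periods, tolerance=0.05):
    """Return audit periods whose accuracy fell more than `tolerance` below baseline.

    periods: list of (label, n_correct, n_total) tuples, one per audit period.
    """
    flagged = []
    for label, n_correct, n_total in periods:
        if n_total == 0:
            continue  # no cases to audit in this period
        accuracy = n_correct / n_total
        if baseline_accuracy - accuracy > tolerance:
            flagged.append((label, round(accuracy, 3)))
    return flagged


# Made-up audit data: (period, correct outputs, total cases reviewed).
periods = [
    ("2022-Q1", 184, 200),
    ("2022-Q2", 181, 200),
    ("2022-Q3", 166, 200),
]

flagged = audit_performance(baseline_accuracy=0.92, periods=periods)
print(flagged)
```

In practice, the same comparison could be repeated for other reported quantities, such as subgroup performance or clinical outcomes, particularly after a model update.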

AI research and innovation

Research is fundamental for improving clinical practice, patient outcomes, staff well-being and optimising workflows. 56 However, there is still a lot to be done to ensure prospective studies are prioritised and that links between industry and academia are strengthened. 62 With more academic–industry partnerships, it is vital to ensure the impartiality of researchers; researcher and clinician internships in AI startups will be central to supporting ethical, person-centred research. 62

Prospective research studies are essential to assess and document the real added value of AI in healthcare. 46 There is a need to evaluate AI tools after implementation, and there are already some checklists to assess research quality and risk of bias. 46 The Standard Protocol Items: Recommendations for Interventional Trials-AI and Consolidated Standards of Reporting Trials-AI guidelines have been developed to increase the quality of conduct and reporting of AI-related clinical trials. 63 In addition, the Checklist for AI in Medical Imaging guidelines facilitate the reporting of AI research in medical imaging, 64 while a quality score has been developed for radiomic studies. Finally, new reporting guidelines have been recommended to evaluate AI interventions moving from the algorithm development stage to support large clinical trials. 65

Training of staff

Training healthcare staff on AI principles has been hailed as central to AI adoption. The HCPC has recently advised that AI digital competencies are paramount for training radiographers to practise safely and care for patients. AI as a core competency is also embedded in the latest education and career framework and the recent AI guidance by the Society and College of Radiographers and other professional and regulatory bodies. This training should include knowledge about AI basic principles, validation and evaluation, clinical applications, governance and ethics, regulation and technology implementation, and the model’s limitations. The training should include principles of person-centred care and precision medicine. 48 Appropriate staff training on AI technologies enables them to build confidence in effectively and safely using these AI tools 20 for improved workflows, better patient outcomes and higher job satisfaction. In addition, it was found that appropriate training/education provided to healthcare professionals will also increase trustworthiness. 45

Public, patient, and practitioner involvement (PPI)

Unfortunately, many AI developers seek user feedback only retrospectively. However, the cost of treating user involvement as an afterthought can be huge, both for the service user and for the organisation, and could render AI adoption impractical. Prospective user, patient and public involvement should be included at all stages, from design to product roll-out and throughout its life cycle. 50 Clinical usability is vital when deploying AI tools, since user-friendly interfaces and accessible, inclusive applications are central to effective AI adoption. 42,66

Key stakeholders of an AI solution must be actively involved at all stages of the AI integration process. 39 Staff involvement will ensure that the AI tool will meet their ergonomic, workflow and performance needs, as well as the needs of their patients. Also, participation in decisions affecting people’s lives is a core human right, requiring access to information and freedom of expression. 55 Moreover, patients and the community must also be involved in AI product design and the delivery of solutions to clinical problems. They are experts through lived experience and have unique insights into the challenges of workflows and usability of clinical tools. 39 Public and patient representatives will be more effective if they are invited to act as research co-producers 48 rather than as reviewers of research outcomes at later stages. Key stakeholders may include clinicians, patients, operational and administrative leaders, hospital administrators, and regulatory agencies. 33 AI adoption relies on a diverse, highly engaged, well-trained AI ecosystem.

Leadership and staff management

Effective leadership is vital for supporting any new venture in healthcare and beyond, and AI adoption initiatives are no exception. A well-informed, agile senior leadership should identify and support AI champions to drive culture change and knowledge transfer in key practice areas. 20 Furthermore, it is essential to enable diverse, multidisciplinary teams to carry the work forward rather than relying on only a few professional groups. 41

Importance of AI governance frameworks

This review underlines that AI governance is multiparametric, and all elements must be finely tuned to deploy AI models safely and effectively in clinical practice. The need for rigorous governance frameworks has been suggested in healthcare and other contexts, such as public administration, 67 finance, 68 and academia. 69 AI governance frameworks will also play a fundamental role in mitigating the risks associated with using AI models while maximising their benefits, 70 enabling safe implementation, 71 and building trust between humans and AI. 72 For the seamless adoption of AI, training is essential. 16 Whilst radiologists have been leading on designing and delivering AI-related educational initiatives, 73,74 radiographers have only recently started investing in AI education initiatives in medical imaging, 20,21,75 which are central to adoption. 14,15,17

Pre- and post-market considerations

The main challenges associated with adopting AI solutions can be classified into pre- and post-market considerations. Before procuring an AI model, it is crucial to know its purpose and to define the clinical problem clearly. 76 This will ensure that the model fits the needs of the organisation and the end-users. Another crucial pre-market consideration is ensuring that the AI model has been thoroughly validated and that appropriate checks have been performed onsite to assess the model’s suitability. 77 Furthermore, end-users should ensure that the AI solution does not discriminate against vulnerable groups and that appropriate measures have been taken to mitigate algorithmic bias. 78 Finally, all AI models should be assessed against regulatory standards, and attention should be paid to ensuring that the required regulatory approvals (e.g. CE marks) are in place. 71
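As an illustration only, the onsite check for algorithmic bias described above could begin with a comparison of model sensitivity across patient subgroups in a local validation sample. The sketch below is a minimal example under stated assumptions: the function names, the record layout of (group, reference label, model prediction) and the 0.10 gap threshold are hypothetical and are not drawn from any of the reviewed sources or regulatory standards.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the sensitivity (true-positive rate) for each subgroup.

    records: iterable of (group, y_true, y_pred) tuples with binary labels,
    where y_true is the clinical reference standard (hypothetical layout).
    Subgroups with no positive reference cases are omitted.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_sensitivity_gap(records, max_gap=0.10):
    """Flag the model when subgroup sensitivities differ by more than max_gap.

    max_gap is an illustrative threshold, not a regulatory value.
    """
    sens = sensitivity_by_group(records)
    if not sens:
        return sens, 0.0, False
    gap = max(sens.values()) - min(sens.values())
    return sens, gap, gap > max_gap
```

A flagged gap would not by itself demonstrate discrimination; it would only indicate that the model's performance in the affected subgroup should be reviewed before procurement.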

Post-market assessment of the model’s clinical safety and effectiveness is paramount to ensure that the model continues to perform as designed and that end-users come to no harm. Ongoing monitoring of AI tools is essential because of the dynamic nature of clinical environments; hence, these systems should be thoroughly evaluated throughout their life cycle. 79 This monitoring should be standardised. 80 An important post-market consideration is the potential risk of algorithmic biases emerging over time. Third parties should be assigned to conduct clinical audits, as they have proved valuable in detecting the weaknesses of AI models. 81 All healthcare professionals who use AI models should be able to recognise and escalate, in a timely manner, performance failures resulting from data shifts. 82 AI governance principles should therefore be applied to AI models throughout their life cycle, from model development to decommissioning. 83
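One simple way to picture the ongoing monitoring described above is a periodic audit that compares the model's observed accuracy in each audit period with the accuracy measured at validation, and escalates periods that fall short. The following sketch is illustrative only: the function name, the input layout and the tolerance and minimum case count are assumptions chosen for the example, not a standardised monitoring protocol.

```python
def flag_performance_drops(baseline_accuracy, periods, tolerance=0.05, min_cases=30):
    """Return audit periods whose accuracy fell below baseline by more than tolerance.

    baseline_accuracy: accuracy measured during local validation (0 to 1).
    periods: list of (label, n_correct, n_total) tuples, one per audit period,
             where n_correct counts model outputs agreeing with the reference
             standard (hypothetical layout).
    tolerance, min_cases: illustrative values; a real audit would set these
             locally and justify them.
    """
    flagged = []
    for label, n_correct, n_total in periods:
        if n_total < min_cases:
            # Too few audited cases to judge performance in this period.
            continue
        accuracy = n_correct / n_total
        if baseline_accuracy - accuracy > tolerance:
            flagged.append((label, accuracy))
    return flagged
```

For example, with a baseline accuracy of 0.90, a period with 80 correct outputs out of 100 audited cases would be flagged for escalation, whereas a period with only 10 audited cases would be skipped as uninformative.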

Financial considerations

Another essential aspect of AI solutions is the financial implication of deploying these models clinically. Healthcare systems are struggling after COVID-19, weakened by poor staffing and limited access to material resources. 84 Before procuring AI models, a detailed cost/benefit analysis 85 should be conducted, aiming to reduce costs and improve services to benefit patients and healthcare staff. 86 Because different financial models in healthcare delivery entail different reimbursement models, financial considerations will vary between countries.

Limitations

By their nature, scoping reviews address general research questions, so the findings may be broad. 87 The framework is intentionally generic so that it can be adjusted and contextualised to individual and local policies and circumstances. However, as evidence is still being developed around AI governance, this was the most appropriate methodology to gather the necessary evidence.

While Health Economics and Outcomes Research is central to AI adoption, 88 it was not included in this review. This was partly because of the complexity of addressing this topic for different organisations. The limited evidence that is starting to emerge, mainly from North America, shows that reimbursement analysis will be central to AI adoption. 89,90 However, it remains to be seen how relevant this would be in the UK context.

Finally, since AI is a rapidly changing field, and the number of AI-related publications is growing exponentially, this review will inevitably miss some relevant published work nearer the time of acceptance and publication.

Given the lack of a unified AI governance framework in medical imaging and radiotherapy in the UK, our results could be used as groundwork for developing comprehensive local AI governance schemes. It is also timely that a new British Standards Institution specification, currently in public consultation, will be released in the UK in the spring of 2023 to help clarify the finer details of AI adoption. 91

Conclusion

This scoping review identified the key elements of AI governance in the UK, focusing on medical imaging and radiotherapy. The proposed conceptual governance framework encompasses rigorous validation and evaluation procedures of AI tools, ongoing monitoring of these models' safety and clinical effectiveness, and compliance with the appropriate accreditation bodies and regulatory standards. The fundamental ethical principles associated with the safe use of AI tools should also be followed, and all AI models should be fair, transparent, trustworthy, and explainable. Staff should be confident about using AI tools in clinical practice. Appropriate staff training is necessary to build trust between AI and humans and ensure the acceptability of new technology. Effective leadership and staff management will further enhance the safe adoption of AI, while research also plays a fundamental role in driving sustainable innovation and growth.

Contributor Information

Nikolaos Stogiannos, Email: nstogiannos@yahoo.com.

Rizwan Malik, Email: rizwan.malik@boltonftnhs.uk.

Amrita Kumar, Email: amrita.kumar@nhs.net.

Anna Barnes, Email: anna.barnes@kcl.ac.uk.

Michael Pogose, Email: mike@hardianhealth.com.

Hugh Harvey, Email: hugh@hardianhealth.com.

Mark F McEntee, Email: mark.mcentee@ucc.ie.

Christina Malamateniou, Email: christina.malamateniou@city.ac.uk.

REFERENCES

- 1. Briganti G, Le Moine O. Artificial intelligence in medicine: today and tomorrow. Front Med 2020; 7: 27. doi: 10.3389/fmed.2020.00027

- 2. Bohr A, Memarzadeh K. The rise of artificial intelligence in Healthcare applications. Artificial Intelligence in Healthcare; 2020: 25–60. Available from: https://doi.org/10.1016/B978-0-12-818438-7.00002-2

- 3. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in Radiology. Nat Rev Cancer 2018; 18: 500–510. doi: 10.1038/s41568-018-0016-5

- 4. Hardy M, Harvey H. Artificial intelligence in diagnostic imaging: impact on the radiography profession. Br J Radiol 2020; 93: 20190840. doi: 10.1259/bjr.20190840

- 5. Lewis SJ, Gandomkar Z, Brennan PC. Artificial intelligence in medical imaging practice: looking to the future. J Med Radiat Sci 2019; 66: 292–95. doi: 10.1002/jmrs.369

- 6. Malamateniou C, Knapp KM, Pergola M, Woznitza N, Hardy M. Artificial intelligence in radiography: where are we now and what does the future hold? Radiography (Lond) 2021; 27 Suppl 1: S58–62. doi: 10.1016/j.radi.2021.07.015

- 7. Avanzo M, Porzio M, Lorenzon L, Milan L, Sghedoni R, Russo G, et al. Artificial intelligence applications in medical imaging: A review of the medical physics research in Italy. Physica Medica 2021; 83: 221–41. doi: 10.1016/j.ejmp.2021.04.010

- 8. Sharma P, Suehling M, Flohr T, Comaniciu D. Artificial intelligence in diagnostic imaging status quo, challenges, and future opportunities. J Thorac Imaging 2020; 35 Suppl 1: S11–16. doi: 10.1097/RTI.0000000000000499

- 9. Baig MA, Almuhaizea MA, Alshehri J, Bazarbashi MS, Al-Shagathrh F. Urgent need for developing a framework for the governance of AI in Healthcare. Stud Health Technol Inform 2020; 272: 253–56. doi: 10.3233/SHTI200542

- 10. Aung YYM, Wong DCS, Ting DSW. The promise of artificial intelligence: a review of the opportunities and challenges of artificial intelligence in Healthcare. Br Med Bull 2021; 139: 4–15. doi: 10.1093/bmb/ldab016

- 11. Morley J, Murphy L, Mishra A, Joshi I, Karpathakis K. Governing data and artificial intelligence for health care: developing an international understanding. JMIR Form Res 2022; 6: e31623. doi: 10.2196/31623

- 12. Guan J. Artificial intelligence in Healthcare and medicine: promises, ethical challenges and governance. Chin Med Sci J 2019; 34: 76–83. doi: 10.24920/003611

- 13. Dixit A, Quaglietta J, Gaulton C. Preparing for the future: how organisations can prepare boards, leaders, and risk managers for artificial intelligence. Healthc Manage Forum 2021; 34: 346–52. doi: 10.1177/08404704211037995

- 14. Malamateniou C, McFadden S, McQuinlan Y, England A, Woznitza N, Goldsworthy S, et al. Artificial intelligence: guidance for clinical imaging and therapeutic radiography professionals, a summary by the society of Radiographers AI working group. Radiography (Lond) 2021; 27: 1192–1202. doi: 10.1016/j.radi.2021.07.028

- 15. Rainey C, O’Regan T, Matthew J, Skelton E, Woznitza N, Chu K-Y, et al. Beauty is in the AI of the beholder: are we ready for the clinical integration of artificial intelligence in radiography? an exploratory analysis of perceived AI knowledge, skills, confidence, and education perspectives of UK Radiographers. Front Digit Health 2021; 3: 739327. doi: 10.3389/fdgth.2021.739327

- 16. Health Education England. The Topol Review. Preparing the healthcare workforce to deliver the digital future. Available from: https://topol.hee.nhs.uk/the-topol-review/ (accessed

- 17. Malamateniou C, McEntee M. Integration of AI in radiography practice: ten priorities for implementation. RAD magazine 2022. Available from: https://www.radmagazine.com/scientific-article/integration-of-ai-in-radiography-practice-ten-priorities-for-implementation/

- 18. Health & Care Professions Council. Standards of Proficiency. Radiographers. Available from: https://www.hcpc-uk.org/globalassets/standards/standards-of-proficiency/reviewing/radiographers---new-standards.pdf

- 19. Abdulhussein H, Turnbull R, Dodkin L, Mitchell P. Towards a national capability framework for artificial intelligence and Digital medicine tools – A learning needs approach. Intelligence-Based Medicine 2021; 5: 100047. doi: 10.1016/j.ibmed.2021.100047

- 20. NHS AI Lab, Health Education England. Understanding healthcare workers’ confidence in AI. 2022. Available from: https://digital-transformation.hee.nhs.uk/binaries/content/assets/digital-transformation/dart-ed/understandingconfidenceinai-may22.pdf

- 21. NHS AI Lab, Health Education England. Developing healthcare workers’ confidence in AI. 2022. Available from: https://digital-transformation.hee.nhs.uk/binaries/content/assets/digital-transformation/dart-ed/developingconfidenceinai-oct2022.pdf

- 22. The College of Radiographers. Education and Career Framework for the Radiography Workforce. 4th edition. 2022. Available from: https://www.sor.org/getmedia/11b7a986-3d0b-4b9d-8131-ae5b9b71a6e3/CoR-ECF-Interactive-2022-Final

- 23. Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or Scoping review? guidance for authors when choosing between a systematic or Scoping review approach. BMC Med Res Methodol 2018; 18: 143. doi: 10.1186/s12874-018-0611-x

- 24. Peters MDJ, Marnie C, Tricco AC, Pollock D, Munn Z, Alexander L, et al. Updated methodological guidance for the conduct of Scoping reviews. JBI Evid Synth 2020; 18: 2119–26. doi: 10.11124/JBIES-20-00167

- 25. Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for Scoping reviews (PRISMA-SCR): checklist and explanation. Ann Intern Med 2018; 169: 467–73. doi: 10.7326/M18-0850

- 26. Peters MDJ, Marnie C, Colquhoun H, Garritty CM, Hempel S, Horsley T, et al. Scoping reviews: reinforcing and advancing the methodology and application. Syst Rev 2021; 10: 263. doi: 10.1186/s13643-021-01821-3

- 27. Levac D, Colquhoun H, O’Brien KK. Scoping studies: advancing the methodology. Implement Sci 2010; 5: 69. doi: 10.1186/1748-5908-5-69

- 28. Kleinheksel AJ, Rockich-Winston N, Tawfik H, Wyatt TR. Demystifying content analysis. Am J Pharm Educ 2020; 84: 7113. doi: 10.5688/ajpe7113

- 29. Scally G, Donaldson LJ. The NHS’s 50 anniversary. Clinical governance and the drive for quality improvement in the new NHS in England. BMJ 1998; 317: 61–65. doi: 10.1136/bmj.317.7150.61

- 30. Gray C. What is clinical governance? BMJ 2005; 330: s254. doi: 10.1136/bmj.330.7506.s254-b

- 31. Radar Healthcare. What is clinical governance and what are the 7 pillars. Available from: https://radarhealthcare.com/news-blogs/what-is-clinical-governance-and-what-are-the-7-pillars/ (accessed 1 Feb 2021)

- 32. Erlingsson C, Brysiewicz P. A hands-on guide to doing content analysis. Afr J Emerg Med 2017; 7: 93–99. doi: 10.1016/j.afjem.2017.08.001

- 33. Wiens J, Saria S, Sendak M, Ghassemi M, Liu VX, Doshi-Velez F, et al. Do no harm: a roadmap for responsible machine learning for health care. Nat Med 2019; 25: 1337–40: 1627. doi: 10.1038/s41591-019-0609-x

- 34. Larson DB, Harvey H, Rubin DL, Irani N, Tse JR, Langlotz CP. Regulatory frameworks for development and evaluation of artificial intelligence–based diagnostic imaging Algorithms: summary and recommendations. J Am Coll Radiol 2021; 18: 413–24. doi: 10.1016/j.jacr.2020.09.060

- 35. Morley J, Morton C, Karpathakis K, Taddeo M, Floridi L. Towards a framework for evaluating the safety, acceptability and efficacy of AI systems for health: an initial synthesis. arXiv preprint; 2021.

- 36. European Commission. Ethics Guidelines for Trustworthy AI. Available from: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed 8 Apr 2019)

- 37. Char DS, Abramoff MD, Feudtner C. Identifying Ethical Considerations for Machine Learning Healthcare Applications. Am J Bioeth 2020; 20: 7–17.

- 38. Cabitza F, Zeitoun JD. The proof of the pudding: in praise of a culture of real-world validation for medical artificial intelligence. Ann Transl Med 2019; 7: 161. doi: 10.21037/atm.2019.04.07

- 39. NHSX. A Buyer’s Guide to AI in Health and Care. Available from: https://www.nhsx.nhs.uk/ai-lab/explore-all-resources/adopt-ai/a-buyers-guide-to-ai-in-health-and-care/a-buyers-guide-to-ai-in-health-and-care/ (accessed 8 Sep 2020)

- 40. Allen B, Dreyer K, Stibolt R, Agarwal S, Coombs L, Treml C, Elkholy M, Brink L, Wald C. Evaluation and Real-World Performance Monitoring of Artificial Intelligence Models in Clinical Practice: Try It, Buy It, Check It. J Am Coll Radiol 2021; 18: 1489–96.

- 41. Tang A, Tam R, Cadrin-Chênevert A, Guest W, Chong J, Barfett J, et al. Canadian Association of Radiologists white paper on artificial intelligence in Radiology. Can Assoc Radiol J 2018; 69: 120–35: S0846-5371(18)30030-5. doi: 10.1016/j.carj.2018.02.002

- 42. NHS England. Digital technology assessment criteria (DTAC). Updated April 2021; 16. Available from: https://transform.england.nhs.uk/key-tools-and-info/digital-technology-assessment-criteria-dtac/

- 43. Brown R. Challenges to Successful AI Implementation in Healthcare. Published October 30, 2022. Available from: https://www.datasciencecentral.com/challenges-to-successful-ai-implementation-in-healthcare/

- 44. Lekadir K, Osuala R, Gallin C, Lazrak N, Kushibar K, Tsakou G, et al. Guiding Principles and Consensus Recommendations for Trustworthy Artificial Intelligence in Medical Imaging. arXiv; n.d.

- 45. Mirbabaie M, Hofeditz L, Frick NRJ, Stieglitz S. Artificial intelligence in hospitals: providing a status quo of ethical considerations in academia to guide future research. AI Soc 2022; 37: 1361–82. doi: 10.1007/s00146-021-01239-4

- 46. National Institute for Health and Care Excellence. Evidence standards framework for digital health technologies. Available from: https://www.nice.org.uk/about/what-we-do/our-programmes/evidence-standards-framework-for-digital-health-technologies (accessed 9 Aug 2022)

- 47. Pesapane F, Volonté C, Codari M, Sardanelli F. Artificial intelligence as a medical device in Radiology: ethical and regulatory issues in Europe and the United States. Insights Imaging 2018; 9: 745–53. doi: 10.1007/s13244-018-0645-y

- 48. Reddy S, Allan S, Coghlan S, Cooper P. A governance model for the application of AI in health care. J Am Med Inform Assoc 2020; 27: 491–97. doi: 10.1093/jamia/ocz192

- 49. Ganapathy K. Artificial intelligence and Healthcare regulatory and legal concerns. TMT 2021; 6: 252. doi: 10.30953/tmt.v6.252

- 50. Department of Health and Social Care. A guide to good practice for digital and data-driven health technologies. Updated 2021. Available from: https://www.gov.uk/government/publications/code-of-conduct-for-data-driven-health-and-care-technology/initial-code-of-conduct-for-data-driven-health-and-care-technology

- 51. Akinci D’Antonoli T. Ethical considerations for artificial intelligence: an overview of the current Radiology landscape. Diagn Interv Radiol 2020; 26: 504–11. doi: 10.5152/dir.2020.19279

- 52. Geis JR, Brady AP, Wu CC, Spencer J, Ranschaert E, Jaremko JL, et al. Ethics of artificial intelligence in Radiology: summary of the joint European and North American Multisociety statement. Radiology 2019; 293: 436–40. doi: 10.1148/radiol.2019191586

- 53. Schonberger D. Artificial intelligence in healthcare: a critical analysis of the legal and ethical implications. Int J Law Inf Technol 2019; 27: 171–203.

- 54. Price WN, Cohen IG. Privacy in the age of medical big data. Nat Med 2019; 25: 37–43. doi: 10.1038/s41591-018-0272-7

- 55. Fukuda‐Parr S, Gibbons E. Emerging consensus on ‘ethical AI’: human rights critique of Stakeholder guidelines. Glob Policy 2021; 12: 32–44. doi: 10.1111/1758-5899.12965

- 56. Artificial intelligence and medical imaging 2018: French Radiology community white paper. Diagnostic and Interventional Imaging 2018; 99: 727–42. doi: 10.1016/j.diii.2018.10.003

- 57. Astromskė K, Peičius E, Astromskis P. Ethical and legal challenges of informed consent applying artificial intelligence in medical diagnostic consultations. AI & Soc 2021; 36: 509–20. doi: 10.1007/s00146-020-01008-9

- 58. Cohen IG, Evgeniou T, Gerke S, Minssen T. The European artificial intelligence strategy: implications and challenges for Digital health. The Lancet Digital Health 2020; 2: e376–79. doi: 10.1016/S2589-7500(20)30112-6

- 59. Maliha G, Gerke S, Cohen IG, Parikh RB. Artificial intelligence and liability in medicine: balancing safety and innovation. Milbank Q 2021; 99: 629–47. doi: 10.1111/1468-0009.12504

- 60. Lang M, Bernier A, Knoppers BM. Artificial intelligence in cardiovascular imaging: “unexplainable Canadian Journal of Cardiology 2022; 38: 225–33. doi: 10.1016/j.cjca.2021.10.009

- 61. Brady AP, Neri E. Artificial intelligence in Radiology—ethical considerations. Diagnostics (Basel) 2020; 10: 231. doi: 10.3390/diagnostics10040231

- 62. Centre for Data Ethics and Innovation. The roadmap to an effective AI assurance ecosystem. 2021. Available from: https://www.gov.uk/government/publications/the-roadmap-to-an-effective-ai-assurance-ecosystem/the-roadmap-to-an-effective-ai-assurance-ecosystem

- 63. Ibrahim H, Liu X, Rivera SC, Moher D, Chan A-W, Sydes MR, et al. Reporting guidelines for clinical trials of artificial intelligence interventions: the SPIRIT-AI and CONSORT-AI guidelines. Trials 2021; 22: 11. doi: 10.1186/s13063-020-04951-6

- 64. Mongan J, Moy L, Kahn CE. Checklist for artificial intelligence in medical imaging (CLAIM): A guide for authors and reviewers. Radiol Artif Intell 2020; 2: e200029. doi: 10.1148/ryai.2020200029

- 65. Vasey B, Clifton DA, Collins GS, Denniston AK, Faes L, Geerts BF, et al. DECIDE-AI: new reporting guidelines to bridge the development to implementation gap in clinical artificial intelligence. Nat Med 2021; 27: 186–87. doi: 10.1038/s41591-021-01229-5

- 66. Omoumi P, Ducarouge A, Tournier A, Harvey H, Kahn CE Jr, Louvet-de Verchère F, et al. To buy or not to buy—evaluating commercial AI solutions in Radiology (the ECLAIR guidelines). Eur Radiol 2021; 31: 3786–96. doi: 10.1007/s00330-020-07684-x

- 67. Wirtz BW, Weyerer JC, Sturm BJ. The dark sides of artificial intelligence: an integrated AI governance framework for public administration. International Journal of Public Administration 2020; 43: 818–29. doi: 10.1080/01900692.2020.1749851

- 68. Schneider J, Abraham R, Meske C, Vom Brocke J. Artificial intelligence governance for businesses. Information Systems Management 2023; 40: 229–49. doi: 10.1080/10580530.2022.2085825

- 69. McKay F, Williams BJ, Prestwich G, Treanor D, Hallowell N. Public governance of medical artificial intelligence research in the UK: an integrated multi-scale model. Res Involv Engagem 2022; 8: 21. doi: 10.1186/s40900-022-00357-7

- 70. Butcher J, Beridze I. What is the state of artificial intelligence governance globally? The RUSI Journal 2019; 164: 88–96. doi: 10.1080/03071847.2019.1694260

- 71. van de Sande D, Van Genderen ME, Smit JM, Huiskens J, Visser JJ, Veen RER, et al. Developing, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform 2022; 29: e100495. doi: 10.1136/bmjhci-2021-100495

- 72. Kuziemski M, Misuraca G. AI governance in the public sector: three tales from the frontiers of automated decision-making in democratic settings. Telecommunications Policy 2020; 44: 101976. doi: 10.1016/j.telpol.2020.101976

- 73. Wiggins WF, Caton MT Jr, Magudia K, Rosenthal MH, Andriole KP. Hands-on introduction to deep learning for Radiology Trainees. J Digit Imaging 2021; 34: 1026–33. doi: 10.1007/s10278-021-00492-9

- 74. Wiggins WF, Caton MT, Magudia K, Glomski S-HA, George E, Rosenthal MH, et al. Preparing Radiologists to lead in the era of artificial intelligence: designing and implementing a focused data science pathway for senior Radiology residents. Radiol Artif Intell 2020; 2: e200057. doi: 10.1148/ryai.2020200057

- 75. City University of London. Introduction to Artificial Intelligence for Radiographers. Professional development course. Available from: https://www.city.ac.uk/prospective-students/courses/professional-development/introduction-to-artificial-intelligence-for-radiographers

- 76. Medicines & Healthcare products Regulatory Agency. Guidance: Medical device stand-alone software including apps (including IVDMDs). 2021. Available from: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/999908/Software_flow_chart_Ed_1-08b-IVD.pdf

- 77. Scott I, Carter S, Coiera E. Clinician checklist for assessing suitability of machine learning applications in Healthcare. BMJ Health Care Inform 2021; 28: e100251. doi: 10.1136/bmjhci-2020-100251

- 78. Norori N, Hu Q, Aellen FM, Faraci FD, Tzovara A. Addressing bias in big data and AI for health care: A call for open science. Patterns (N Y) 2021; 2: 100347. doi: 10.1016/j.patter.2021.100347

- 79. Vyhmeister E, Castane G, Östberg PO, Thevenin S. A responsible AI framework: pipeline Contextualisation. AI Ethics 2023; 3: 175–97. doi: 10.1007/s43681-022-00154-8

- 80. Laato S, Birkstedt T, Mäntymäki M, Minkkinen M, Mikkonen T. AI Governance in the System Development Life Cycle: Insights on Responsible Machine Learning Engineering. In: 1st Conference on AI Engineering-Software Engineering for AI (CAIN’22), 2022 May 16–24 Pittsburgh, PA, USA. doi: 10.1145/3522664.3528598

- 81. Raji ID, Xu P, Honigsberg C, Ho D. Outsider Oversight: Designing a Third Party Audit Ecosystem for AI Governance. AIES ’22; Oxford United Kingdom. New York, NY, USA; 26 July 2022. doi: 10.1145/3514094.3534181

- 82. Finlayson SG, Subbaswamy A, Singh K, Bowers J, Kupke A, Zittrain J, et al. The clinician and Dataset shift in artificial intelligence. N Engl J Med 2021; 385: 283–86. doi: 10.1056/NEJMc2104626

- 83. Mäntymäki M, Minkkinen M, Birkstedt T, Viljanen M. Defining Organisational AI governance. AI Ethics 2022; 2: 603–9. doi: 10.1007/s43681-022-00143-x

- 84. Poon YSR, Lin YP, Griffiths P, Yong KK, Seah B, Liaw SY. A global overview of Healthcare workers’ turnover intention amid COVID-19 pandemic: a systematic review with future directions. Hum Resour Health 2022; 20: 70. doi: 10.1186/s12960-022-00764-7

- 85. Bosmans H, Zanca F, Gelaude F. Procurement, commissioning and QA of AI based solutions: an MPE's perspective on introducing AI in clinical practice. Phys Med 2021; 83: 257–63. doi: 10.1016/j.ejmp.2021.04.006

- 86. Chen L, Jiang M, Jia F, Liu G. Artificial intelligence adoption in business-to-business marketing: toward a conceptual framework. JBIM 2022; 37: 1025–44. doi: 10.1108/JBIM-09-2020-0448

- 87. Hanneke R, Asada Y, Lieberman L, Neubauer LC, Fagen M. The Scoping Review Method: Mapping the Literature in “Structural Change” Public Health Interventions. London, United Kingdom: SAGE Publications; n.d. doi: 10.4135/9781473999008

- 88. Leavitt N. AI and HEOR: A Joining of Forces. August 18, 2021. Available from: https://www.iqvia.com/locations/united-states/library/articles/ai-and-heor-a-joining-of-forces

- 89. Abràmoff MD, Roehrenbeck C, Trujillo S, Goldstein J, Graves AS, Repka MX, et al. A reimbursement framework for artificial intelligence in Healthcare. NPJ Digit Med 2022; 5: 72. doi: 10.1038/s41746-022-00621-w

- 90. Venkatesh KP, Raza MM, Diao JA, Kvedar JC. Leveraging reimbursement strategies to guide value-based adoption and utilization of medical AI. NPJ Digit Med 2022; 5. doi: 10.1038/s41746-022-00662-1

- 91. The British Standards Institution. BS 30440 Validation framework for the use of AI within healthcare – Specification. Available from: https://standardsdevelopment.bsigroup.com/projects/2021-00605?fbclid=IwAR1MF6daoP5zKnV991I4d0zwZ6K4FR9Ggs8QoXmfuhPGw6da7T07-UKaPkk#/section
