Abstract

Optimal transport (OT) plays an essential role in various areas such as machine learning and deep learning. However, computing discrete OT for large-scale problems with adequate accuracy and efficiency is highly challenging. Recently, methods based on the Sinkhorn algorithm add an entropy regularizer to the primal problem and obtain a trade-off between efficiency and accuracy. In this paper, we propose a novel algorithm based on Nesterov's smoothing technique to further improve the efficiency and accuracy of computing OT. Specifically, the non-smooth c-transform of the Kantorovich potential is approximated by the smooth Log-Sum-Exp function, which smooths the original non-smooth Kantorovich dual functional. The smooth Kantorovich functional can be efficiently optimized by a fast proximal gradient method, the fast iterative shrinkage-thresholding algorithm (FISTA). Theoretically, the computational complexity of the proposed method is $O(n^{5/2}\sqrt{\log n}/\epsilon)$, which is lower than the current estimate for the Sinkhorn algorithm. Experimentally, compared with the Sinkhorn algorithm, our results demonstrate that the proposed method achieves faster convergence and better accuracy with the same parameter.

Introduction

Optimal transport (OT) is a powerful tool to compute the Wasserstein distance between probability measures and is widely used to model various natural and social phenomena, including economics (Galichon 2016), optics (Glimm and Oliker 2003), biology (Schiebinger et al. 2019), physics (Jordan, Kinderlehrer, and Otto 1998) and other scientific fields. Recently, OT has been successfully applied in machine learning and statistics, for example in parameter estimation in Bayesian non-parametric models (Nguyen 2013), computer vision (Arjovsky, Chintala, and Bottou 2017; Courty et al. 2017; Tolstikhin et al. 2018; An et al. 2020; Lei et al. 2020), and natural language processing (Kusner et al. 2015; Yurochkin et al. 2019). In these areas, complex probability measures are approximated by sums of Dirac measures supported on the samples. To obtain the Wasserstein distance between the empirical distributions, we then solve discrete OT problems.

Discrete Optimal Transport

In the discrete OT problem, where both the source and target measures are discrete, the Kantorovich functional becomes a convex function defined on a convex domain. Due to its lack of smoothness, the conventional gradient descent method cannot be applied directly. Instead, it can be optimized with the subgradient method (Nesterov 2005), in which the gradient is replaced by a subgradient. To achieve an approximation error less than $\epsilon$, the subgradient method requires $O(1/\epsilon^2)$ iterations. Recently, several approximation methods have been proposed to improve the computational efficiency. In these methods (Cuturi 2013; Benamou et al. 2015; Altschuler, Niles-Weed, and Rigollet 2017), a strongly convex entropy function is added to the primal Kantorovich problem, so that the regularized problem can be efficiently solved by the Sinkhorn algorithm. A more detailed analysis shows that the computational complexity of the Sinkhorn algorithm is $\tilde O(n^2/\epsilon^2)$ (Dvurechensky, Gasnikov, and Kroshnin 2018) when the regularization parameter is set to $\gamma = \epsilon/(4\log n)$. In addition, a series of primal-dual algorithms have been proposed, including the APDAGD (adaptive primal-dual accelerated gradient descent) algorithm (Dvurechensky, Gasnikov, and Kroshnin 2018) with computational complexity $\tilde O(\min\{n^{9/4}/\epsilon, n^2/\epsilon^2\})$, the APDAMD (adaptive primal-dual accelerated mirror descent) algorithm (Lin, Ho, and Jordan 2019) with $\tilde O(n^2\sqrt{\delta}/\epsilon)$, where $\delta$ is a complex constant of the Bregman divergence, and the APDRCD (accelerated primal-dual randomized coordinate descent) algorithm (Guo, Ho, and Jordan 2020) with $\tilde O(n^{5/2}/\epsilon)$. However, all three methods need to build a matrix with space complexity $O(n^3)$, which makes them difficult to apply when $n$ is large.

Our Method

In this work, instead of starting from the primal Kantorovich problem like the Sinkhorn-based methods, we directly deal with the dual Kantorovich problem. The key idea is to approximate the original non-smooth c-transform of the Kantorovich potential by Nesterov's smoothing technique. Specifically, we approximate the max function by the Log-Sum-Exp function, which has also been used in (Schmitzer 2019; Peyré and Cuturi 2018), so that the original non-smooth Kantorovich functional is converted to an unconstrained $(n-1)$-dimensional smooth convex energy. Using the fast proximal gradient method FISTA (Beck and Teboulle 2009), we can quickly optimize the smoothed energy to obtain a precise estimate of the OT cost. In theory, the method achieves approximation error $\epsilon$ with space complexity $O(n^2)$ and computational complexity $O(n^{5/2}\sqrt{\log n}/\epsilon)$. Additionally, we show that the approximate OT plan induced by our algorithm is equivalent to that of the Sinkhorn algorithm. The contributions of our work are as follows.

We convert the dual Kantorovich problem to an unconstrained smooth convex optimization problem by approximating the non-smooth c-transform of the Kantorovich potential with Nesterov’s smoothing idea.

The smoothed Kantorovich functional can be efficiently solved by the FISTA algorithm with computational complexity $O(n^{5/2}/\sqrt{\lambda\epsilon})$. At the same time, the computational complexity of approximating the Kantorovich functional itself is $O(n^{5/2}\sqrt{\log n}/\epsilon)$.

The experiments demonstrate that, compared with the Sinkhorn algorithm, the proposed method achieves faster convergence and better accuracy with the same parameter $\lambda$.

Notation

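In this work, $\mathbb{R}_+$ represents the non-negative real numbers, and $\mathbf{0}$ and $\mathbb{1}$ represent the all-zeros and all-ones vectors of appropriate dimension. The set of integers $\{1, 2, \ldots, n\}$ is denoted as $[n]$. $\|\cdot\|_1$ and $\|\cdot\|_2$ are the $\ell_1$ and $\ell_2$ norms, $\|x\|_1 = \sum_i |x_i|$ and $\|x\|_2 = (\sum_i x_i^2)^{1/2}$, respectively. $R_C$ is the range of the cost matrix $C$, namely $R_C = c_{\max} - c_{\min}$, where $c_{\max}$ and $c_{\min}$ represent the maximum and minimum of the elements $c_{ij} = c(x_i, y_j)$ of $C$ with $i, j \in [n]$. We use to denote the minimal element of and $\oslash$ to denote element-wise division.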

Related Work

Optimal transport plays an important role in various fields, and there is a huge literature in this area. Here we mainly focus on the most related works. For a detailed overview, we refer readers to (Peyré and Cuturi 2018).

When both the source and target measures are discrete, the OT problem can be treated as a standard linear programming (LP) task and solved by the interior-point method with computational complexity $\tilde O(n^{5/2})$ (Lee and Sidford 2014). However, this method requires a practical solver for the Laplacian linear system, which is not currently available for large datasets. Another interior-point based method for the OT problem was proposed by Pele and Werman (Pele and Werman 2009) with complexity $O(n^3\log n)$. Generally speaking, it is unrealistic to solve large-scale OT problems with traditional LP solvers.

The prevalent way to compute the OT cost between two discrete measures involves adding a strongly convex entropy function to the primal Kantorovich problem (Cuturi 2013; Benamou et al. 2015). Most current solutions for the discrete OT problem follow this strategy. Genevay et al. (Genevay et al. 2016) extend the algorithm to its dual form and solve it by the stochastic average gradient method. The Greenkhorn algorithm (Altschuler, Niles-Weed, and Rigollet 2017; Abid and Gower 2018; Chakrabarty and Khanna 2021) is a greedy version of the Sinkhorn algorithm. Specifically, Altschuler et al. (Altschuler, Niles-Weed, and Rigollet 2017) show that the complexity of their algorithm is $\tilde O(n^2/\epsilon^3)$. Later, Dvurechensky et al. (Dvurechensky, Gasnikov, and Kroshnin 2018) improve the complexity bound of the Sinkhorn algorithm to $\tilde O(n^2/\epsilon^2)$, and propose the APDAGD method with complexity $\tilde O(\min\{n^{9/4}/\epsilon, n^2/\epsilon^2\})$. Jambulapati et al. (Jambulapati, Sidford, and Tian 2019) introduce a parallelizable algorithm for the OT problem with complexity $\tilde O(n^2/\epsilon)$. By screening out the negligible components and directly fixing them at their screened values before entering the Sinkhorn problem, the Screenkhorn method (Alaya et al. 2019) solves a smaller Sinkhorn problem and improves the computational efficiency. Based on a primal-dual formulation and a tight upper bound for the dual solution, Lin et al. (Lin, Ho, and Jordan 2019) improve the complexity bound of the Greenkhorn algorithm to $\tilde O(n^2/\epsilon^2)$, and propose the APDAMD algorithm, whose complexity bound is proven to be $\tilde O(n^2\sqrt{\delta}/\epsilon)$, where $\delta$ refers to some constants in the Bregman divergence. Recently, a practically more efficient method called APDRCD (Guo, Ho, and Jordan 2020) was proposed with complexity $\tilde O(n^{5/2}/\epsilon)$. However, all three primal-dual methods need to build a matrix with space complexity $O(n^3)$, which makes them impractical when $n$ is large. By utilizing Newton-type information, Blanchet et al. (Blanchet et al. 2018) and Quanrud (Quanrud 2018) propose algorithms with complexity $\tilde O(n^2/\epsilon)$. However, the Newton-based methods only give theoretical upper bounds and provide no practical algorithms.

Besides the entropy-regularized methods, Blondel et al. (Blondel, Seguy, and Rolet 2018) use the squared 2-norm and group LASSO (least absolute shrinkage and selection operator) to regularize the primal Kantorovich problem and then use the quasi-Newton method to accelerate the algorithm. Xie et al. (Xie et al. 2019b) develop an inexact proximal point method for exact optimal transport. By utilizing the structure of the cost function, Gerber and Maggioni (Gerber and Maggioni 2017) optimize the transport plan from coarse to fine. Meng et al. (Meng et al. 2019) propose the projection pursuit Monge map, which accelerates the computation of the original sliced OT problem. Xie et al. (Xie et al. 2019a) also use a generative learning based method to model the optimal transport. However, the theoretical analysis of these algorithms is still nascent.

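In this work, we introduce a method based on Nesterov's smoothing technique, which is applied to the dual Kantorovich problem with computational complexity $O(n^{5/2}\sqrt{\log n}/\epsilon)$ (or equivalently $O(n^{5/2}/\sqrt{\lambda\epsilon})$ with $\lambda = \epsilon/(2\log n)$) and approximation error bound $\lambda\log n$.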

Optimal Transport Theory

In this section, we introduce some basic concepts and theorems in the classical optimal transport theory, focusing on Kantorovich’s approach and its generalization to the discrete settings via c-transform. The details can be found in Villani’s book (Villani 2008).

Optimal Transport Problem

Suppose $X, Y \subset \mathbb{R}^d$ are two subsets of the Euclidean space $\mathbb{R}^d$, and $\mu$, $\nu$ are two probability measures defined on $X$ and $Y$ with equal total measure, $\mu(X) = \nu(Y)$.

Kantorovich’s Approach

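Depending on the cost function and the measures, an OT map between $(X, \mu)$ and $(Y, \nu)$ may not exist. Kantorovich therefore relaxed transport maps to transport plans, defined as joint probability measures $\pi$ on $X \times Y$ whose marginals equal $\mu$ and $\nu$, respectively. Formally, let the projection maps be $\rho_x(x, y) = x$ and $\rho_y(x, y) = y$; then we define

$$
\Pi(\mu, \nu) = \left\{ \pi : X \times Y \to \mathbb{R}_{\ge 0} \;\middle|\; (\rho_x)_\# \pi = \mu,\ (\rho_y)_\# \pi = \nu \right\}.
\tag{1}
$$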

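Problem 1 (Kantorovich Problem). Given the transport cost function $c: X \times Y \to \mathbb{R}_{\ge 0}$, find the joint probability measure $\pi \in \Pi(\mu, \nu)$ that minimizes the total transport cost

$$
\mathcal{M}_c(\mu, \nu) = \min_{\pi \in \Pi(\mu, \nu)} \int_{X \times Y} c(x, y)\, d\pi(x, y).
\tag{2}
$$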

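Problem 2 (Dual Kantorovich Problem). Given two probability measures $\mu$ and $\nu$ supported on $X$ and $Y$, respectively, and the transport cost function $c$, the Kantorovich problem is equivalent to maximizing the following Kantorovich functional:

$$
I(\varphi, \psi) = \int_X \varphi(x)\, d\mu(x) + \int_Y \psi(y)\, d\nu(y),
\tag{3}
$$

where $\varphi \in L^1(X, \mu)$ and $\psi \in L^1(Y, \nu)$ are called Kantorovich potentials and $\varphi(x) + \psi(y) \le c(x, y)$. The above problem can be reformulated as the following minimization problem with the same constraints:

$$
\min_{\varphi, \psi} J(\varphi, \psi) = -\int_X \varphi(x)\, d\mu(x) - \int_Y \psi(y)\, d\nu(y).
\tag{4}
$$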

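Definition 3 (c-transform). Let $\varphi \in L^1(X, \mu)$ and $\psi \in L^1(Y, \nu)$. We define the c-transform of $\psi$ as

$$
\psi^c(x) = \inf_{y \in Y} \big( c(x, y) - \psi(y) \big).
$$

With the c-transform, Eqn. (4) is equivalent to solving the following optimization problem:

$$
\min_{\psi} J(\psi) = -\int_X \psi^c(x)\, d\mu(x) - \int_Y \psi(y)\, d\nu(y),
\tag{5}
$$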

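where $\varphi = \psi^c$. When $\mu = \sum_{i=1}^n \mu_i \delta(x - x_i)$ and $\nu = \sum_{j=1}^n \nu_j \delta(y - y_j)$, with $\sum_i \mu_i = \sum_j \nu_j = 1$, Eqn. (5) gives the unconstrained convex optimization problem:

$$
\min_{\psi} E(\psi) = -\sum_{i=1}^n \mu_i \psi^c(x_i) - \sum_{j=1}^n \nu_j \psi_j,
\tag{6}
$$

where the c-transform of $\psi$ is given by

$$
\psi^c(x_i) = \min_{1 \le j \le n} \big( c(x_i, y_j) - \psi_j \big),
\tag{7}
$$

where $\psi = (\psi_1, \ldots, \psi_n)^T$. Suppose $\psi^*$ is the solution to Eqn. (6); then it has the following properties: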

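1. If the cost function is $c(x, y) - k$, where $k$ is a constant, and the corresponding optimal solution is $\hat\psi^*$, then the minimizers are unchanged, i.e. $\hat\psi^* = \psi^*$. At the same time, the optimal energy is shifted by the constant: $\hat E(\hat\psi^*) = E(\psi^*) + k$.

2. $\psi^* + k\mathbb{1}$, for any $k \in \mathbb{R}$, is also an optimal solution for Eqn. (6).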
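To make Eqns. (6) and (7) concrete, the following minimal Python sketch (our own illustration, not the authors' MATLAB implementation; the function names are hypothetical) evaluates the discrete c-transform and the dual functional $E(\psi)$, and numerically checks the two properties above on a small example with normalized weights.

```python
def c_transform(psi, C):
    """Discrete c-transform, Eqn. (7): psi^c(x_i) = min_j (c_ij - psi_j)."""
    return [min(c_ij - p_j for c_ij, p_j in zip(row, psi)) for row in C]


def dual_energy(psi, C, mu, nu):
    """Kantorovich functional E(psi) of Eqn. (6), written as a minimization."""
    phi = c_transform(psi, C)
    return (-sum(m * f for m, f in zip(mu, phi))
            - sum(v * p for v, p in zip(nu, psi)))


if __name__ == "__main__":
    C = [[0.0, 2.0, 3.0], [1.0, 0.5, 2.5], [2.0, 1.0, 0.2]]
    mu = [0.2, 0.5, 0.3]
    nu = [0.3, 0.3, 0.4]
    psi = [0.4, -0.1, -0.3]
    E = dual_energy(psi, C, mu, nu)
    k = 0.7
    # Property 1: subtracting k from every cost adds k to the energy.
    C_shift = [[c - k for c in row] for row in C]
    print(abs(dual_energy(psi, C_shift, mu, nu) - (E + k)) < 1e-12)
    # Property 2: adding k to every potential leaves the energy unchanged.
    print(abs(dual_energy([p + k for p in psi], C, mu, nu) - E) < 1e-12)
```

Both properties rely on $\sum_i \mu_i = \sum_j \nu_j = 1$; with unnormalized weights the shifts would be scaled by the total masses.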

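In order to make the solution unique, we add a constraint using the indicator function $\iota_H(\psi)$, which equals $0$ if $\psi \in H$ and $+\infty$ otherwise, where $H = \{\psi \in \mathbb{R}^n \mid \sum_{j=1}^n \psi_j = 0\}$, and modify the Kantorovich functional in Eqn. (6) as:

$$
\tilde E(\psi) = E(\psi) + \iota_H(\psi).
\tag{8}
$$

Then solving Eqn. (6) is equivalent to finding the solution to:

$$
\min_{\psi \in \mathbb{R}^n} \tilde E(\psi),
\tag{9}
$$

which is essentially an $(n-1)$-dimensional unconstrained convex problem. According to the definition of the c-transform in Eqn. (7), $\tilde E(\psi)$ is non-smooth with respect to $\psi$.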

Nesterov’s Smoothing of Kantorovich functional

Following Nesterov's original strategy (Nesterov 2005), which has also been applied in the OT field (Peyré and Cuturi 2018; Schmitzer 2019), we smooth the non-smooth discrete Kantorovich functional $E(\psi)$. We approximate $\psi^c(x_i)$ with the Log-Sum-Exp function to obtain the smooth Kantorovich functional $E_\lambda(\psi)$. Then, using the FISTA algorithm (Beck and Teboulle 2009), we can show that the computational complexity of our algorithm is $O(n^{5/2}\sqrt{\log n}/\epsilon)$ with $\lambda = \epsilon/(2\log n)$. By abuse of notation, in the following we call both $E$ and $\tilde E$ the Kantorovich functional, and both $E_\lambda$ and $\tilde E_\lambda$ the smooth Kantorovich functional.

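Definition 4 ($(\alpha, \beta)$-smoothable). A convex function $f: \mathbb{R}^n \to \mathbb{R}$ is called $(\alpha, \beta)$-smoothable if, for any $\lambda > 0$, there exists a convex function $f_\lambda$ such that

$$
f_\lambda(x) \le f(x) \le f_\lambda(x) + \beta\lambda \quad \text{for all } x \in \mathbb{R}^n,
$$

and $f_\lambda$ is $\frac{\alpha}{\lambda}$-smooth, i.e. $\nabla f_\lambda$ is Lipschitz continuous with constant $\alpha/\lambda$. Here $f_\lambda$ is called a $\frac{1}{\lambda}$-smooth approximation of $f$ with parameters $(\alpha, \beta)$.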

In the above definition, the parameter $\lambda$ defines a trade-off between the approximation accuracy and the smoothness: the smaller $\lambda$, the better the approximation and the less smooth $f_\lambda$ becomes.

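Lemma 5 (Nesterov's Smoothing). Given $f(x) = \max\{x_1, \ldots, x_n\}$, $x \in \mathbb{R}^n$, for any $\lambda > 0$, we have its $\frac{1}{\lambda}$-smooth approximation with parameters $(1, \log n)$:

$$
f_\lambda(x) = \lambda \log\Big( \sum_{j=1}^n e^{x_j/\lambda} \Big) - \lambda \log n.
\tag{10}
$$

Proof. For all $x \in \mathbb{R}^n$,

$$
\max_j x_j = \lambda \log e^{\max_j x_j/\lambda} \le \lambda \log \sum_{j=1}^n e^{x_j/\lambda} \le \lambda \log\big( n\, e^{\max_j x_j/\lambda} \big) = \max_j x_j + \lambda \log n,
$$

so that $f_\lambda(x) \le f(x) \le f_\lambda(x) + \lambda \log n$. Furthermore, $f_\lambda$ is $\frac{1}{\lambda}$-smooth, since its Hessian $\frac{1}{\lambda}(\mathrm{diag}(p) - pp^T)$, with $p_j = e^{x_j/\lambda}/\sum_k e^{x_k/\lambda}$, has eigenvalues in $[0, 1/\lambda]$. Therefore, $f_\lambda$ is a $\frac{1}{\lambda}$-smooth approximation of $f$ with parameters $(1, \log n)$.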
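The bounds in Lemma 5 are easy to check numerically. The sketch below (our own illustration; `smooth_max` is a hypothetical helper name) evaluates Eqn. (10) with the usual max-shift trick, which leaves the value unchanged but prevents overflow in the exponentials.

```python
import math


def smooth_max(x, lam):
    """f_lambda(x) = lam * log(sum_j exp(x_j / lam)) - lam * log(n), Eqn. (10).

    Subtracting m = max(x) inside the exponentials leaves the value unchanged
    but keeps every exponent <= 0, so math.exp never overflows.
    """
    m = max(x)
    s = sum(math.exp((xj - m) / lam) for xj in x)
    return m + lam * math.log(s) - lam * math.log(len(x))


if __name__ == "__main__":
    x = [1.0, 3.0, 2.5, -4.0]
    for lam in (2.0, 0.5, 0.01):
        f = smooth_max(x, lam)
        # Lemma 5: f_lambda(x) <= max(x) <= f_lambda(x) + lam * log(n), up to rounding
        print(lam, f <= max(x) <= f + lam * math.log(len(x)) + 1e-12)
```

As $\lambda$ shrinks, the gap $\max_j x_j - f_\lambda(x)$ is squeezed into $[0, \lambda\log n]$, which is exactly the accuracy/smoothness trade-off described after Definition 4.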

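Recalling the definition of the c-transform of the Kantorovich potential in Eqn. (7), $\psi^c(x_i) = -\max_j(\psi_j - c_{ij})$ with $c_{ij} = c(x_i, y_j)$, we obtain the Nesterov smoothing of $\psi^c$ by applying Eqn. (10):

$$
\psi^c_\lambda(x_i) = -\lambda \log\Big( \sum_{j=1}^n e^{(\psi_j - c_{ij})/\lambda} \Big) + \lambda \log n.
\tag{11}
$$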

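We replace $\psi^c$ by $\psi^c_\lambda$ in Eqn. (9) to approximate the Kantorovich functional. The Nesterov smoothing of the Kantorovich functional then becomes

$$
E_\lambda(\psi) = \lambda \sum_{i=1}^n \mu_i \log\Big( \sum_{j=1}^n e^{(\psi_j - c_{ij})/\lambda} \Big) - \sum_{j=1}^n \nu_j \psi_j - \lambda \log n,
\tag{12}
$$

and its gradient is given by

$$
\frac{\partial E_\lambda(\psi)}{\partial \psi_j} = \sum_{i=1}^n \mu_i \frac{e^{(\psi_j - c_{ij})/\lambda}}{\sum_{k=1}^n e^{(\psi_k - c_{ik})/\lambda}} - \nu_j, \quad j \in [n].
\tag{13}
$$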
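As a sanity check of Eqns. (12) and (13), the sketch below (our own Python illustration with hypothetical function names) evaluates $E_\lambda$ and its gradient with a numerically stable log-sum-exp and compares the gradient against central finite differences. Note that the gradient entries sum to $\sum_i \mu_i - \sum_j \nu_j = 0$, so the gradient already lies in $H$.

```python
import math


def _softmax_and_lse(z):
    """Return (softmax(z), log(sum(exp(z)))) computed stably."""
    m = max(z)
    e = [math.exp(t - m) for t in z]
    s = sum(e)
    return [t / s for t in e], m + math.log(s)


def smooth_energy(psi, C, mu, nu, lam):
    """Smooth Kantorovich functional E_lambda(psi), Eqn. (12)."""
    val = 0.0
    for mi, row in zip(mu, C):
        _, lse = _softmax_and_lse([(p - c) / lam for p, c in zip(psi, row)])
        val += mi * lam * lse
    return val - sum(v * p for v, p in zip(nu, psi)) - lam * math.log(len(psi))


def smooth_grad(psi, C, mu, nu, lam):
    """Gradient of E_lambda, Eqn. (13): sum_i mu_i * w_ij - nu_j."""
    g = [-v for v in nu]
    for mi, row in zip(mu, C):
        w, _ = _softmax_and_lse([(p - c) / lam for p, c in zip(psi, row)])
        for j, wj in enumerate(w):
            g[j] += mi * wj
    return g


if __name__ == "__main__":
    C = [[0.0, 1.0, 2.0], [1.5, 0.2, 0.7], [0.3, 0.9, 0.1]]
    mu, nu = [0.2, 0.3, 0.5], [0.4, 0.4, 0.2]
    psi, lam, h = [0.1, -0.3, 0.2], 0.5, 1e-6
    g = smooth_grad(psi, C, mu, nu, lam)
    for j in range(len(psi)):
        step = [h if k == j else 0.0 for k in range(len(psi))]
        fp = smooth_energy([p + d for p, d in zip(psi, step)], C, mu, nu, lam)
        fm = smooth_energy([p - d for p, d in zip(psi, step)], C, mu, nu, lam)
        print(j, g[j], (fp - fm) / (2 * h))  # analytic vs. central difference
```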

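Furthermore, we can directly compute the Hessian matrix of $E_\lambda$. Let $w_{ij} = e^{(\psi_j - c_{ij})/\lambda} / \sum_{k=1}^n e^{(\psi_k - c_{ik})/\lambda}$ and $W = (w_{ij}) \in \mathbb{R}^{n \times n}$, and set $\mu = (\mu_1, \ldots, \mu_n)^T$, $\nu = (\nu_1, \ldots, \nu_n)^T$. Direct computation gives the following gradient and Hessian matrix:

$$
\nabla E_\lambda(\psi) = W^T \mu - \nu, \qquad \nabla^2 E_\lambda(\psi) = \frac{1}{\lambda}\Big( \mathrm{diag}(W^T \mu) - W^T \mathrm{diag}(\mu)\, W \Big),
\tag{14}
$$

where $\mathrm{diag}(v)$ denotes the diagonal matrix with diagonal $v$, and $W\mathbb{1} = \mathbb{1}$ since each row of $W$ sums to one. From the Hessian matrix, we can show that $E_\lambda$ is a smooth approximation of $E$.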

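Lemma 6. $\tilde E_\lambda$ is a $\frac{1}{\lambda}$-smooth approximation of $\tilde E$ with parameters $(1, \log n)$.

Proof. From Eqn. (14), $\nabla^2 E_\lambda(\psi)\mathbb{1} = \frac{1}{\lambda}\big(W^T\mu - W^T\mathrm{diag}(\mu)\mathbb{1}\big) = 0$, so $\nabla^2 E_\lambda$ has $\mathbb{1}$ in its null space. In the orthogonal complement of $\mathbb{1}$, $\nabla^2 E_\lambda$ is diagonally dominant, and therefore strictly positive definite.

Weyl's inequality (Horn and Johnson 1991) states that the eigenvalues of $A = B - \Delta$ are no greater than the maximal eigenvalue of $B$ minus the minimal eigenvalue of $\Delta$, where $B$ is an exact (symmetric) matrix and $\Delta$ is a (symmetric) perturbation matrix. Taking $B = \frac{1}{\lambda}\mathrm{diag}(W^T\mu)$ and $\Delta = \frac{1}{\lambda} W^T \mathrm{diag}(\mu) W$, which is positive semidefinite, the maximal eigenvalue of $\nabla^2 E_\lambda$, denoted as $\Lambda_{\max}$, has the upper bound

$$
\Lambda_{\max} \le \lambda_{\max}(B) - \lambda_{\min}(\Delta) \le \frac{1}{\lambda} \max_j \,(W^T \mu)_j.
$$

Thus the maximal eigenvalue of $\nabla^2 E_\lambda$ is no greater than $\frac{1}{\lambda}\max_j (W^T\mu)_j$. It is easy to see that $(W^T\mu)_j = \sum_i \mu_i w_{ij} \le \sum_i \mu_i = 1$, so $\Lambda_{\max} \le 1/\lambda$. Combined with $E_\lambda \le E \le E_\lambda + \lambda\log n$, which follows from Lemma 5, $\tilde E_\lambda$ is a $\frac{1}{\lambda}$-smooth approximation of $\tilde E$ with parameters $(1, \log n)$.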
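The two facts used in the proof of Lemma 6, $\nabla^2 E_\lambda\mathbb{1} = 0$ and $0 \le x^T \nabla^2 E_\lambda\, x \le \|x\|_2^2/\lambda$, can be checked numerically with the following sketch of Eqn. (14) (our own Python illustration; helper names are hypothetical and the dense $O(n^3)$ loop is only meant for tiny examples).

```python
import math


def softmax_weights(psi, C, lam):
    """W with w_ij = exp((psi_j - c_ij)/lam) / sum_k exp((psi_k - c_ik)/lam)."""
    W = []
    for row in C:
        z = [(p - c) / lam for p, c in zip(psi, row)]
        m = max(z)
        e = [math.exp(t - m) for t in z]
        s = sum(e)
        W.append([t / s for t in e])
    return W


def smooth_hessian(psi, C, mu, lam):
    """Hessian of E_lambda, Eqn. (14): (diag(W^T mu) - W^T diag(mu) W) / lam."""
    n = len(psi)
    H = [[0.0] * n for _ in range(n)]
    for mi, w in zip(mu, softmax_weights(psi, C, lam)):
        for j in range(n):
            H[j][j] += mi * w[j] / lam
            for k in range(n):
                H[j][k] -= mi * w[j] * w[k] / lam
    return H


if __name__ == "__main__":
    C = [[0.0, 1.0, 2.0], [1.5, 0.2, 0.7], [0.3, 0.9, 0.1]]
    mu, psi, lam = [0.2, 0.3, 0.5], [0.1, -0.3, 0.2], 0.5
    H = smooth_hessian(psi, C, mu, lam)
    print([sum(row) for row in H])  # H 1 = 0 up to rounding
    x = [0.3, 0.5, -0.8]
    q = sum(x[j] * H[j][k] * x[k] for j in range(3) for k in range(3))
    print(0.0 <= q <= sum(t * t for t in x) / lam)  # Rayleigh quotient in [0, 1/lam]
```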

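Lemma 7. Suppose $E_\lambda$ is the $\frac{1}{\lambda}$-smooth approximation of $E$ with parameters $(1, \log n)$ and $\psi^*_\lambda$ is the optimizer of $E_\lambda$. Then the approximate OT plan is unique and given by

$$
P_{ij} = \mu_i \frac{e^{([\psi^*_\lambda]_j - c_{ij})/\lambda}}{\sum_{k=1}^n e^{([\psi^*_\lambda]_k - c_{ik})/\lambda}},
\tag{15}
$$

where $P_{ij}$ is the $(i, j)$-th entry of the plan $P \in \mathbb{R}^{n \times n}$ and $[\psi^*_\lambda]_j$ is the $j$-th entry of $\psi^*_\lambda$.

Proof. By the gradient formula Eqn. (13) and the optimality of $\psi^*_\lambda$, i.e. $\nabla E_\lambda(\psi^*_\lambda) = 0$, we have

$$
\sum_{i=1}^n P_{ij} = \nu_j, \quad j \in [n].
$$

On the other hand, by the definition of $P$, we have

$$
\sum_{j=1}^n P_{ij} = \mu_i, \quad i \in [n].
$$

Combining the above two equations, we obtain that $P \in \Pi(\mu, \nu)$, and it is the approximate OT plan. Since $P$ is invariant under $\psi^*_\lambda \mapsto \psi^*_\lambda + k\mathbb{1}$, it does not depend on which optimizer is chosen.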
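The plan of Eqn. (15) is a row-wise softmax scaled by $\mu_i$. In the sketch below (our own illustration; `transport_plan` is a hypothetical helper name), the row marginals equal $\mu$ for any $\psi$, whereas the column marginals equal $\nu$ only at the optimizer, which is exactly the content of the proof above. The plan is also unchanged when a constant is added to $\psi$.

```python
import math


def transport_plan(psi, C, mu, lam):
    """Approximate OT plan of Eqn. (15): P_ij = mu_i * softmax_j((psi_j - c_ij) / lam)."""
    P = []
    for mi, row in zip(mu, C):
        z = [(p - c) / lam for p, c in zip(psi, row)]
        m = max(z)                      # shift for numerical stability
        e = [math.exp(t - m) for t in z]
        s = sum(e)
        P.append([mi * t / s for t in e])
    return P


if __name__ == "__main__":
    C = [[0.0, 1.0], [1.0, 0.0]]
    mu, lam = [0.3, 0.7], 0.1
    P = transport_plan([0.2, -0.2], C, mu, lam)
    print([sum(r) for r in P])                   # row sums reproduce mu
    Q = transport_plan([3.2, 2.8], C, mu, lam)   # same psi shifted by 3
    print(max(abs(a - b) for ra, rb in zip(P, Q) for a, b in zip(ra, rb)))
```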

Similar to the discrete Kantorovich functional in Eqn. (6), the optimizer of the smooth Kantorovich functional in Eqn. (12) is not unique: given an optimizer $\psi^*_\lambda$, $\psi^*_\lambda + k\mathbb{1}$, $\forall k \in \mathbb{R}$, is also an optimizer. We can eliminate the ambiguity by adding the indicator function as in Eqn. (8):

$$
\tilde E_\lambda(\psi) = E_\lambda(\psi) + \iota_H(\psi).
\tag{16}
$$

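This energy can be optimized efficiently through the following FISTA iterations (Beck and Teboulle 2009):

$$
\begin{aligned}
\psi^{(k)} &= \mathrm{Proj}_H\big( \xi^{(k)} - \eta \nabla E_\lambda(\xi^{(k)}) \big), \\
t_{k+1} &= \frac{1 + \sqrt{1 + 4t_k^2}}{2}, \\
\xi^{(k+1)} &= \psi^{(k)} + \frac{t_k - 1}{t_{k+1}} \big( \psi^{(k)} - \psi^{(k-1)} \big),
\end{aligned}
\tag{17}
$$

with initial conditions $\psi^{(0)} = 0$, $\xi^{(1)} = \psi^{(0)}$, and $t_1 = 1$. Here $\mathrm{Proj}_H(\psi) = \psi - \frac{1}{n}(\mathbb{1}^T\psi)\mathbb{1}$ is the projection of $\psi$ onto $H$ (the proximal function of $\iota_H$; Parikh and Boyd 2014), and $\eta$ is the step size. Similar to the Sinkhorn algorithm, this algorithm can be parallelized, since all the operations are row-based.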
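The iterations in Eqn. (17) translate almost line by line into code. The following Python sketch is our own illustration, not the authors' GPU/MATLAB implementation, and the names `fista_ot`, `grad` and `project_H` are hypothetical. It uses a step size $\eta = \beta\lambda$ with a user-chosen constant $\beta$; $\beta = 1$ corresponds to $\eta = 1/L$ for the bound $L \le 1/\lambda$ of Lemma 6. Each iteration costs $O(n^2)$ operations, and the softmax of every row can be computed independently.

```python
import math


def grad(psi, C, mu, nu, lam):
    """Gradient of E_lambda, Eqn. (13): (W^T mu)_j - nu_j."""
    g = [-v for v in nu]
    for mi, row in zip(mu, C):
        z = [(p - c) / lam for p, c in zip(psi, row)]
        m = max(z)
        e = [math.exp(t - m) for t in z]
        s = sum(e)
        for j, t in enumerate(e):
            g[j] += mi * t / s
    return g


def project_H(psi):
    """Projection onto H = {psi : sum_j psi_j = 0}, i.e. the prox of iota_H."""
    mean = sum(psi) / len(psi)
    return [p - mean for p in psi]


def fista_ot(C, mu, nu, lam, beta=1.0, iters=500):
    """FISTA iterations of Eqn. (17) on E_lambda + iota_H with step eta = beta * lam."""
    n = len(nu)
    eta = beta * lam
    psi_prev = [0.0] * n   # psi^(0) = 0
    xi = psi_prev[:]       # xi^(1) = psi^(0)
    t = 1.0                # t_1 = 1
    for _ in range(iters):
        g = grad(xi, C, mu, nu, lam)
        psi = project_H([x - eta * gj for x, gj in zip(xi, g)])
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        xi = [p + (t - 1.0) / t_next * (p - q) for p, q in zip(psi, psi_prev)]
        psi_prev, t = psi, t_next
    return psi_prev


if __name__ == "__main__":
    C = [[0.0, 0.5, 1.0], [0.5, 0.0, 0.5], [1.0, 0.5, 0.0]]
    mu, nu, lam = [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], 0.5
    psi = fista_ot(C, mu, nu, lam, iters=3000)
    # At the optimum the column marginals of plan (15) match nu, i.e. the gradient vanishes.
    print(max(abs(g) for g in grad(psi, C, mu, nu, lam)))
```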

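Theorem 8. Given the cost matrix $C$, the source measure $\mu$ and target measure $\nu$ with $\sum_i \mu_i = \sum_j \nu_j = 1$, let $\psi^*$ be the optimizer of the discrete dual Kantorovich functional $\tilde E$, and $\psi^*_\lambda$ the optimizer of the smooth Kantorovich functional $\tilde E_\lambda$. Then the approximation error is

$$
|E_\lambda(\psi^*_\lambda) - E(\psi^*)| \le \lambda \log n.
$$

Proof. Assume $\psi^*$ and $\psi^*_\lambda$ are the minimizers of $\tilde E$ and $\tilde E_\lambda$, respectively. Then, by the inequality in Eqn. (10),

$$
\tilde E_\lambda(\psi^*_\lambda) \le \tilde E_\lambda(\psi^*) \le \tilde E(\psi^*) \le \tilde E(\psi^*_\lambda) \le \tilde E_\lambda(\psi^*_\lambda) + \lambda \log n.
$$

This shows $0 \le \tilde E(\psi^*) - \tilde E_\lambda(\psi^*_\lambda) \le \lambda \log n$. Removing the indicator functions (both minimizers lie in $H$), we get

$$
0 \le E(\psi^*) - E_\lambda(\psi^*_\lambda) \le \lambda \log n.
$$

This also shows that $E_\lambda(\psi^*_\lambda)$ converges quickly to $E(\psi^*)$ as $\lambda$ decreases. The convergence analysis of FISTA is given as follows: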

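Theorem 9 (Thm. 4.4 of (Beck and Teboulle 2009)). Assume $F = f + g$, where $f$ is convex and differentiable with $\mathrm{dom}(f) = \mathbb{R}^n$, $\nabla f$ is Lipschitz continuous with Lipschitz constant $L$, and $g$ is convex and its proximal function can be evaluated. Then, for the minimization of $F$ by FISTA with fixed step size $\eta = 1/L$, we have

$$
F(x^{(k)}) - F(x^*) \le \frac{2L \|x^{(0)} - x^*\|_2^2}{(k+1)^2},
\tag{18}
$$

where $x^*$ is a minimizer of $F$.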

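Corollary 10. Suppose $\lambda$ is fixed and $\eta = \lambda$. Then for any $k \ge 1$, we have

$$
\tilde E_\lambda(\psi^{(k)}) - \tilde E_\lambda(\psi^*_\lambda) \le \frac{2 \|\psi^{(0)} - \psi^*_\lambda\|_2^2}{\lambda (k+1)^2},
\tag{19}
$$

where $\psi^*_\lambda$ is the optimizer of $\tilde E_\lambda$.

Proof. Under the settings of the smoothed Kantorovich problem Eqn. (12), $E_\lambda$ is convex and differentiable with $\mathrm{dom}(E_\lambda) = \mathbb{R}^n$, and by Lemma 6, $\nabla E_\lambda$ is Lipschitz continuous with constant $1/\lambda$; $\iota_H$ is convex and its proximal function is given by $\mathrm{Proj}_H$. Thus, directly applying Thm. 9 with $L = 1/\lambda$, we get Eqn. (19). Setting $\psi^{(0)} = 0$ and requiring the right-hand side of Eqn. (19) to be at most $\epsilon$, we get that when $k \ge \sqrt{2/(\lambda\epsilon)}\,\|\psi^*_\lambda\|_2 - 1$, we have $\tilde E_\lambda(\psi^{(k)}) - \tilde E_\lambda(\psi^*_\lambda) \le \epsilon$.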
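Theorem 8 and Corollary 10 can be illustrated on a tiny problem whose exact OT cost is computable by enumeration. The sketch below is our own illustration under stated assumptions: it uses uniform weights $\mu_i = \nu_j = 1/n$, for which an optimal plan is a permutation matrix (Birkhoff's theorem), so the exact cost is a minimum over permutations; all function names are hypothetical. Since the minimum of $E$ equals minus the exact OT cost, Theorem 8 predicts that $-E_\lambda(\psi^*_\lambda)$ lies between the exact cost and the exact cost plus $\lambda\log n$, and FISTA should approach the lower end of that window from below only by its optimization error.

```python
import itertools
import math


def _lse(z):
    m = max(z)
    return m + math.log(sum(math.exp(t - m) for t in z))


def smooth_energy(psi, C, mu, nu, lam):
    """E_lambda(psi), Eqn. (12)."""
    val = sum(mi * lam * _lse([(p - c) / lam for p, c in zip(psi, row)])
              for mi, row in zip(mu, C))
    return val - sum(v * p for v, p in zip(nu, psi)) - lam * math.log(len(psi))


def smooth_grad(psi, C, mu, nu, lam):
    """Gradient of E_lambda, Eqn. (13)."""
    g = [-v for v in nu]
    for mi, row in zip(mu, C):
        z = [(p - c) / lam for p, c in zip(psi, row)]
        lse = _lse(z)
        for j, t in enumerate(z):
            g[j] += mi * math.exp(t - lse)
    return g


def fista(C, mu, nu, lam, iters=3000):
    """FISTA of Eqn. (17) with eta = lam and psi^(0) = 0."""
    n = len(nu)
    prev, t = [0.0] * n, 1.0
    xi = prev[:]
    for _ in range(iters):
        y = [x - lam * g for x, g in zip(xi, smooth_grad(xi, C, mu, nu, lam))]
        mean = sum(y) / n
        cur = [v - mean for v in y]                      # projection onto H
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        xi = [c + (t - 1.0) / t_next * (c - p) for c, p in zip(cur, prev)]
        prev, t = cur, t_next
    return prev


def exact_ot_uniform(C):
    """Exact OT cost for uniform weights 1/n (an optimal plan is a permutation)."""
    n = len(C)
    return min(sum(C[i][s[i]] for i in range(n))
               for s in itertools.permutations(range(n))) / n


if __name__ == "__main__":
    C = [[0.1, 0.8, 0.5, 0.9], [0.7, 0.2, 0.6, 0.4],
         [0.3, 0.9, 0.1, 0.8], [0.6, 0.3, 0.7, 0.2]]
    n = len(C)
    mu = nu = [1.0 / n] * n
    for lam in (0.2, 0.05, 0.01):
        approx = -smooth_energy(fista(C, mu, nu, lam), C, mu, nu, lam)
        print(lam, exact_ot_uniform(C), approx, lam * math.log(n))
```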

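With the above convergence analysis of the smooth Kantorovich functional $\tilde E_\lambda$, we now give the convergence analysis of the original Kantorovich functional $\tilde E$ in Eqn. (9), where $\psi^{(k)}$ is obtained by FISTA.

Theorem 11. For any $\epsilon > 0$, if $\lambda = \frac{\epsilon}{2\log n}$ and $k + 1 \ge 2\sqrt{2\log n}\,\|\psi^*_\lambda\|_2/\epsilon$, then we have

$$
\tilde E(\psi^{(k)}) - \tilde E(\psi^*) \le \epsilon,
\tag{20}
$$

where $\psi^{(k)}$ is the solution of $\tilde E_\lambda$ after $k$ steps of the iterations in Alg. 1, and $\psi^*$ is the optimizer of $\tilde E$. The total computational complexity is then $O(n^{5/2}\sqrt{\log n}/\epsilon)$.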

Algorithm 1: Accelerated gradient descent for OT

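Proof. We set the initial condition $\psi^{(0)} = 0$. For any given $\epsilon > 0$, we choose $\lambda$ and the iteration step $k$ such that $\lambda\log n = \epsilon/2$ and $\frac{2\|\psi^*_\lambda\|_2^2}{\lambda(k+1)^2} \le \frac{\epsilon}{2}$, where $\psi^*_\lambda$ is the optimizer of $\tilde E_\lambda$. By Theorem 9, we have

$$
\tilde E_\lambda(\psi^{(k)}) - \tilde E_\lambda(\psi^*_\lambda) \le \frac{2\|\psi^*_\lambda\|_2^2}{\lambda(k+1)^2} \le \frac{\epsilon}{2}.
$$

By Eqn. (16) and the smoothing inequality of Lemma 5, we have

$$
\tilde E(\psi^{(k)}) - \tilde E(\psi^*) \le \tilde E_\lambda(\psi^{(k)}) + \lambda\log n - \tilde E_\lambda(\psi^*_\lambda) \le \frac{\epsilon}{2} + \frac{\epsilon}{2} = \epsilon.
$$

Next we show that $\|\psi^*_\lambda\|_2 = O(\sqrt{n})$ by bounding the entries of $\psi^*_\lambda$. According to Eq. (15),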

| (21) |

Assume is the maximal element of , we have , where is the maximal element of the matrix . Thus,

Then, and

| (22) |

According to the inequality of arithmetic and geometric means, we have . Thus, .

| (23) |

Combining Eqns. (22) and (23), we have . Hence, we obtain that when .

For each iteration in Eqn. (17), we need $O(n^2)$ operations; thus the total computational complexity of the proposed method is $O(n^{5/2}\sqrt{\log n}/\epsilon)$.
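The parameter choice in the proof of Theorem 11 is simple arithmetic. The sketch below is our own illustration: `psi_norm` stands for an assumed upper bound on $\|\psi^*_\lambda\|_2$ that the caller must supply, and all names are hypothetical. It returns $\lambda = \epsilon/(2\log n)$, the smallest $k$ with $2\|\psi^*_\lambda\|_2^2/(\lambda(k+1)^2) \le \epsilon/2$, and the resulting $O(kn^2)$ operation count.

```python
import math


def theorem11_parameters(eps, n, psi_norm):
    """Parameter choice from the proof of Theorem 11 (illustrative sketch).

    lam makes the smoothing error lam * log(n) equal to eps / 2; k is the smallest
    iteration count with 2 * psi_norm**2 / (lam * (k + 1)**2) <= eps / 2, where
    psi_norm is an assumed upper bound on ||psi*_lambda||_2.
    """
    lam = eps / (2.0 * math.log(n))
    k = max(0, math.ceil(2.0 * psi_norm / math.sqrt(lam * eps)) - 1)
    ops = k * n * n  # O(n^2) work per FISTA iteration
    return lam, k, ops


if __name__ == "__main__":
    eps = 1e-2
    for n in (1000, 4000):
        # assume ||psi*||_2 grows like sqrt(n), as in the proof of Theorem 11
        lam, k, ops = theorem11_parameters(eps, n, psi_norm=math.sqrt(n))
        print(n, lam, k, ops)
```

With `psi_norm` proportional to $\sqrt{n}$, $k$ grows like $\sqrt{n\log n}/\epsilon$, which multiplied by the $O(n^2)$ cost per iteration gives the stated $O(n^{5/2}\sqrt{\log n}/\epsilon)$.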

Relationship with Softmax

If there exists an OT map $T$ from $\mu$ to $\nu$, then each sample $x_i$ of the source distribution is classified into the corresponding $y_j = T(x_i)$. If there does not exist an OT map, we can only get the OT plan, which can be treated as a soft classification problem: each sample $x_i$ with weight $\mu_i$ will be sent to the corresponding $y_j$ with weight $P_{ij}$, where $\sum_j P_{ij} = \mu_i$. Here $P$ gives the OT plan from the source to the target distribution. The smoothed OT plan given by minimizing the smooth Kantorovich functional can be further treated as a relaxed OT plan. Instead of sending the weight of a specific sample to a few target samples, the smooth solver tends to send each source sample to all of the target samples, weighted by the softmax of $(\psi^*_\lambda - C_i)/\lambda$, where $C_i$ is the $i$-th row of $C$. Sample $x_i$ weighted by $\mu_i$ will be sent to $y_j$ with weight $P_{ij}$ given in Eqn. (15).
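The soft-classification view can be illustrated directly: each row of Eqn. (15) distributes the mass $\mu_i$ of $x_i$ over all targets through a softmax, which concentrates on $\arg\min_j (c_{ij} - \psi_j)$, i.e. the hard assignment given by the c-transform, as $\lambda \to 0$. A small Python sketch (our own illustration; `soft_assignment` is a hypothetical helper name):

```python
import math


def soft_assignment(mu_i, costs_i, psi, lam):
    """Weights P_i1..P_in with which x_i (mass mu_i) is sent to each y_j, cf. Eqn. (15)."""
    z = [(p - c) / lam for p, c in zip(psi, costs_i)]
    m = max(z)
    e = [math.exp(t - m) for t in z]
    s = sum(e)
    return [mu_i * t / s for t in e]


if __name__ == "__main__":
    costs, psi = [0.9, 0.2, 0.5], [0.0, 0.0, 0.0]
    for lam in (10.0, 0.1, 0.001):
        # large lam spreads the mass almost evenly; small lam puts it on argmin_j (c_ij - psi_j)
        print(lam, [round(w, 4) for w in soft_assignment(0.4, costs, psi, lam)])
```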

Relationship with entropy regularized OT problem

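The Sinkhorn algorithm is derived from minimizing the entropy regularized OT problem (Cuturi 2013): $\min_{P \in \Pi(\mu,\nu)} \langle C, P \rangle - \gamma H(P)$ with $H(P) = -\sum_{i,j} P_{ij}(\log P_{ij} - 1)$. Its dual is given by (Genevay et al. 2016):

$$
\min_{\varphi, \psi} -\sum_{i=1}^n \mu_i \varphi_i - \sum_{j=1}^n \nu_j \psi_j + \gamma \sum_{i,j=1}^n e^{(\varphi_i + \psi_j - c_{ij})/\gamma},
\tag{24}
$$

with partial derivatives $\sum_j e^{(\varphi_i + \psi_j - c_{ij})/\gamma} - \mu_i$ with respect to $\varphi_i$ and $\sum_i e^{(\varphi_i + \psi_j - c_{ij})/\gamma} - \nu_j$ with respect to $\psi_j$. With the optimal solution $(\varphi^*, \psi^*)$, the approximate OT plan is given by $P_{ij} = e^{(\varphi^*_i + \psi^*_j - c_{ij})/\gamma}$. Comparing these with our gradient Eqn. (13) and approximate OT plan Eqn. (15) reveals the subtle differences. In fact, by setting $\gamma = \lambda$ and eliminating $\varphi$ through its closed-form minimizer $\varphi_i = \lambda\log\mu_i - \lambda\log\sum_j e^{(\psi_j - c_{ij})/\lambda}$, the minimization problem in Eqn. (24) is equivalent to our smoothed semi-dual problem of Eqn. (16) with a different constant term.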
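The equivalence claimed above can be verified numerically. The sketch below (our own illustration; function names are hypothetical) minimizes Eqn. (24) over $\varphi$ in closed form and checks that the result differs from $E_\lambda(\psi)$ in Eqn. (12) by the constant $\lambda + \lambda\log n - \lambda\sum_i \mu_i\log\mu_i$ for several $\psi$, assuming $\sum_i\mu_i = 1$.

```python
import math


def _lse(z):
    """Stable log(sum(exp(z)))."""
    m = max(z)
    return m + math.log(sum(math.exp(t - m) for t in z))


def entropic_dual(phi, psi, C, mu, nu, gamma):
    """Objective of Eqn. (24) (entropic dual in minimization form)."""
    val = -sum(m * f for m, f in zip(mu, phi)) - sum(v * p for v, p in zip(nu, psi))
    val += gamma * sum(math.exp((f + p - c) / gamma)
                       for f, row in zip(phi, C) for p, c in zip(psi, row))
    return val


def best_phi(psi, C, mu, gamma):
    """Closed-form minimizer of Eqn. (24) over phi for fixed psi."""
    return [gamma * math.log(m) - gamma * _lse([(p - c) / gamma for p, c in zip(psi, row)])
            for m, row in zip(mu, C)]


def smooth_energy(psi, C, mu, nu, lam):
    """E_lambda(psi), Eqn. (12)."""
    val = sum(m * lam * _lse([(p - c) / lam for p, c in zip(psi, row)])
              for m, row in zip(mu, C))
    return val - sum(v * p for v, p in zip(nu, psi)) - lam * math.log(len(psi))


if __name__ == "__main__":
    C = [[0.0, 1.0, 2.0], [1.5, 0.2, 0.7], [0.3, 0.9, 0.1]]
    mu, nu, lam = [0.2, 0.3, 0.5], [0.4, 0.4, 0.2], 0.3
    const = lam + lam * math.log(len(mu)) - lam * sum(m * math.log(m) for m in mu)
    for psi in ([0.0, 0.0, 0.0], [0.5, -0.2, 0.1], [-1.0, 2.0, 0.3]):
        reduced = entropic_dual(best_phi(psi, C, mu, lam), psi, C, mu, nu, lam)
        print(reduced - smooth_energy(psi, C, mu, nu, lam) - const)  # close to zero
```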

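Furthermore, if we set $u_i = \mu_i / \sum_k e^{(\psi_k - c_{ik})/\lambda}$, $v_j = e^{\psi_j/\lambda}$ and $K_{ij} = e^{-c_{ij}/\lambda}$ with $\psi = \psi^*_\lambda$ in Eqn. (15), the computed approximate OT plan can be rewritten as $P = \mathrm{diag}(u)\, K\, \mathrm{diag}(v)$, which has the same form as the Sinkhorn solution (Cuturi 2013). Since the solution of the Sinkhorn algorithm is unique, we conclude that the approximate optimal transport plan induced by our algorithm in Eqn. (15) is equivalent to that of the Sinkhorn algorithm.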
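As an empirical illustration of this equivalence, the sketch below (our own Python code, not the authors' implementation; names are hypothetical) runs the classical Sinkhorn scaling with $\gamma = \lambda$ and the FISTA iterations of Eqn. (17), then compares the two plans. The plan is formed directly in the scaling form $\mathrm{diag}(u)K\mathrm{diag}(v)$ without log-domain stabilization, which is only safe for moderate $c_{ij}/\lambda$ as in this toy example.

```python
import math


def plan_from_psi(psi, C, mu, lam):
    """Eqn. (15) written as diag(u) K diag(v), K_ij = exp(-c_ij/lam), v_j = exp(psi_j/lam)."""
    K = [[math.exp(-c / lam) for c in row] for row in C]
    v = [math.exp(p / lam) for p in psi]
    u = [m / sum(k * vj for k, vj in zip(row, v)) for m, row in zip(mu, K)]
    return [[ui * k * vj for k, vj in zip(row, v)] for ui, row in zip(u, K)]


def fista_psi(C, mu, nu, lam, iters=5000):
    """FISTA of Eqn. (17) with eta = lam and psi^(0) = 0."""
    n = len(nu)
    prev, t = [0.0] * n, 1.0
    xi = prev[:]
    for _ in range(iters):
        P = plan_from_psi(xi, C, mu, lam)
        g = [sum(row[j] for row in P) - nu[j] for j in range(n)]   # Eqn. (13)
        y = [x - lam * gj for x, gj in zip(xi, g)]
        mean = sum(y) / n
        cur = [val - mean for val in y]                             # Proj_H
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        xi = [c + (t - 1.0) / t_next * (c - p) for c, p in zip(cur, prev)]
        prev, t = cur, t_next
    return prev


def sinkhorn(C, mu, nu, gamma, iters=500):
    """Classical Sinkhorn scaling: u = mu / (K v), v = nu / (K^T u)."""
    n, m = len(mu), len(nu)
    K = [[math.exp(-c / gamma) for c in row] for row in C]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(iters):
        u = [mu[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [nu[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


if __name__ == "__main__":
    C = [[0.0, 0.5, 1.0], [0.5, 0.0, 0.5], [1.0, 0.5, 0.0]]
    mu, nu, lam = [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], 0.5
    P_ours = plan_from_psi(fista_psi(C, mu, nu, lam), C, mu, lam)
    P_sink = sinkhorn(C, mu, nu, gamma=lam)
    print(max(abs(a - b) for ra, rb in zip(P_ours, P_sink) for a, b in zip(ra, rb)))
```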

Experiments

In this section, we investigate the performance of the proposed algorithm under different parameters, and then compare it with the Sinkhorn algorithm (Cuturi 2013). In the following, we first introduce the experimental settings, including the parameters, the cost matrices and the evaluation metrics. Then we show the experimental results. All of the code, including the proposed method and the Sinkhorn algorithm (Cuturi 2013), was written in MATLAB with GPU acceleration. The experiments were conducted on a Windows laptop with an Intel Core i7-7700HQ CPU, 16 GB memory and an NVIDIA GTX 1060Ti GPU.

Parameters

There are two parameters involved in the proposed algorithm, $\lambda$ and $\eta$. The former controls the approximation accuracy between the Log-Sum-Exp function and the c-transform of the Kantorovich potential in Eqn. (11), and the latter controls the step size of the FISTA algorithm in Eqn. (17). Basically, a smaller $\lambda$ gives a better approximation.

In our experiments, to make $\lambda$ as small as possible, we use Property 1 of Eqn. (6) and shift the cost matrix so that its median is zero; in this way the full range of the exponential of floating-point numbers can be used, instead of only its negative part.¹ That is, we set $C \leftarrow C - \mathrm{median}(C)$, which we call the translation trick. If the range of $C$ is denoted as $R_C$, then the accuracy parameter $\lambda$ is set from $R_C$ and a positive constant $\alpha$, where a larger $\alpha$ gives a smaller $\lambda$. For the FISTA algorithm, the ideal step size is $1/\Lambda_{\max}$, where $\Lambda_{\max}$ is the maximal eigenvalue of the Hessian matrix in Eqn. (14). By Nesterov smoothing, we know $\Lambda_{\max} \le 1/\lambda$, so we set the step length $\eta = \beta\lambda$, where $\beta$ is a constant.² In practice we use $(\alpha, \beta)$ as control parameters instead of $(\lambda, \eta)$.
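The translation trick can be illustrated in a few lines. In the sketch below (our own illustration; helper names are hypothetical), costs around 1000 with $\lambda = 1$ make every kernel entry $e^{-c_{ij}/\lambda}$ underflow to zero in double precision, while after subtracting the median the entries are representable; by Property 1 the shift only changes the energy by a constant. The step length follows $\eta = \beta\lambda$.

```python
import math
import statistics


def translate_cost(C):
    """Translation trick: subtract the median entry so that median(C) = 0.

    Returns the shifted matrix and the shift k; by Property 1 the optimal
    potential is unchanged and the dual energy moves by the constant k.
    """
    k = statistics.median([c for row in C for c in row])
    return [[c - k for c in row] for row in C], k


def step_size(lam, beta):
    """Step length eta = beta * lam (beta = 1 gives 1 / L for the bound L <= 1 / lam)."""
    return beta * lam


if __name__ == "__main__":
    C = [[1000.0 + i + j for j in range(4)] for i in range(4)]
    lam = 1.0
    print(all(math.exp(-c / lam) == 0.0 for row in C for c in row))   # every entry underflows
    C_t, k = translate_cost(C)
    print(k, all(math.exp(-c / lam) > 0.0 for row in C_t for c in row))
    print(step_size(lam, beta=0.5))
```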

Cost Matrix

In the following experiments, we test the performance of the algorithm with different parameters under different cost functions. Specifically, we set , . Note that after $\mu$ and $\nu$ are generated, they are normalized by $\mu \leftarrow \mu/\sum_i \mu_i$ and $\nu \leftarrow \nu/\sum_j \nu_j$. To build the cost matrix, we use the Euclidean distance, the squared Euclidean distance, the spherical distance, and a random cost matrix.

For the Euclidean distance (ED) and squared Euclidean distance (SED) experiments, in experiment 1, $\{x_i\}$ are randomly generated from a Gaussian distribution and $\{y_j\}$ are randomly sampled from a uniform distribution. Both $\mu$ and $\nu$ are randomly generated from a uniform distribution. Experiment 3 uses a similar sampling strategy to build the discrete source and target measures. In experiment 2, following (Altschuler, Niles-Weed, and Rigollet 2017), we randomly choose one pair of images from the MNIST dataset (LeCun and Cortes 2010), and then add negligible noise 0.01 to each background pixel with intensity 0. $\mu_i$ and $x_i$ ($\nu_j$ and $y_j$) are set to be the value and the coordinate of each pixel in the source (target) image. Then the Euclidean distance and squared Euclidean distance between $x_i$ and $y_j$ are given by $c_{ij} = \|x_i - y_j\|_2$ and $c_{ij} = \|x_i - y_j\|_2^2$, respectively.

For the spherical distance (SD) experiment, both $\mu$ and $\nu$ are randomly generated from a uniform distribution. $\{x_i\}$ are randomly generated from a Gaussian distribution and $\{y_j\}$ from a uniform distribution. Then we normalize $x_i$ and $y_j$ by $x_i \leftarrow x_i/\|x_i\|_2$ and $y_j \leftarrow y_j/\|y_j\|_2$. As a result, both $x_i$ and $y_j$ lie on the unit sphere. The spherical distance is given by $c_{ij} = \arccos\langle x_i, y_j\rangle$.

For the random distance (RD) matrix experiment, both $\mu$ and $\nu$ are randomly generated from a uniform distribution. To build $C$, we randomly sample a matrix $\tilde C$ from a Gaussian distribution, from which $C$ is then defined.
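For reference, the ED, SED and SD cost matrices described above can be built as follows. This is a minimal Python sketch with hypothetical function names; the point sets are passed in by the caller rather than sampled, and the random cost matrix is omitted. The clamp inside `math.acos` guards against inner products whose magnitude slightly exceeds 1 because of rounding.

```python
import math


def euclidean_costs(X, Y, squared=False):
    """ED cost c_ij = ||x_i - y_j||_2, or SED cost ||x_i - y_j||_2^2 if squared=True."""
    C = []
    for x in X:
        row = []
        for y in Y:
            d2 = sum((a - b) ** 2 for a, b in zip(x, y))
            row.append(d2 if squared else math.sqrt(d2))
        C.append(row)
    return C


def _normalize(p):
    r = math.sqrt(sum(a * a for a in p))
    return [a / r for a in p]


def spherical_costs(X, Y):
    """SD cost: geodesic distance arccos(<x_i, y_j>) after projecting points onto the unit sphere."""
    Xn = [_normalize(x) for x in X]
    Yn = [_normalize(y) for y in Y]
    return [[math.acos(max(-1.0, min(1.0, sum(a * b for a, b in zip(x, y)))))
             for y in Yn] for x in Xn]


if __name__ == "__main__":
    X, Y = [[0.0, 0.0], [3.0, 4.0]], [[0.0, 0.0], [3.0, 0.0]]
    print(euclidean_costs(X, Y), euclidean_costs(X, Y, squared=True))
    print(spherical_costs([[1.0, 0.0, 0.0]], [[5.0, 0.0, 0.0], [0.0, 0.0, 3.0]]))
```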

Evaluation Metrics

We use two metrics to evaluate the proposed method: the first one is the transport cost, which is defined by Eqn. (6) and is given by ; and the second is the distance from the computed transport plan to the admissible distribution space defined in Eqn. (1), and the distance is defined as .
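A sketch of the two evaluation quantities, in Python with hypothetical names. The transport cost is taken here as $\langle C, P\rangle = \sum_{ij} c_{ij}P_{ij}$ for a computed plan $P$. The marginal-violation distance is an assumption on our part: we use the common choice $\|P\mathbb{1} - \mu\|_1 + \|P^T\mathbb{1} - \nu\|_1$, which may differ from the exact definition used in the experiments.

```python
def transport_cost(C, P):
    """<C, P> = sum_ij c_ij * P_ij."""
    return sum(c * p for crow, prow in zip(C, P) for c, p in zip(crow, prow))


def marginal_violation(P, mu, nu):
    """Assumed distance to Pi(mu, nu): ||P 1 - mu||_1 + ||P^T 1 - nu||_1."""
    rows = [sum(r) for r in P]
    cols = [sum(P[i][j] for i in range(len(P))) for j in range(len(nu))]
    return (sum(abs(a - b) for a, b in zip(rows, mu))
            + sum(abs(a - b) for a, b in zip(cols, nu)))


if __name__ == "__main__":
    C = [[0.0, 1.0], [1.0, 0.0]]
    P = [[0.25, 0.25], [0.0, 0.5]]
    print(transport_cost(C, P), marginal_violation(P, [0.5, 0.5], [0.25, 0.75]))
```

For the plan in Eqn. (15) the row term vanishes by construction, so only the column term measures how far the iterate is from the optimum.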

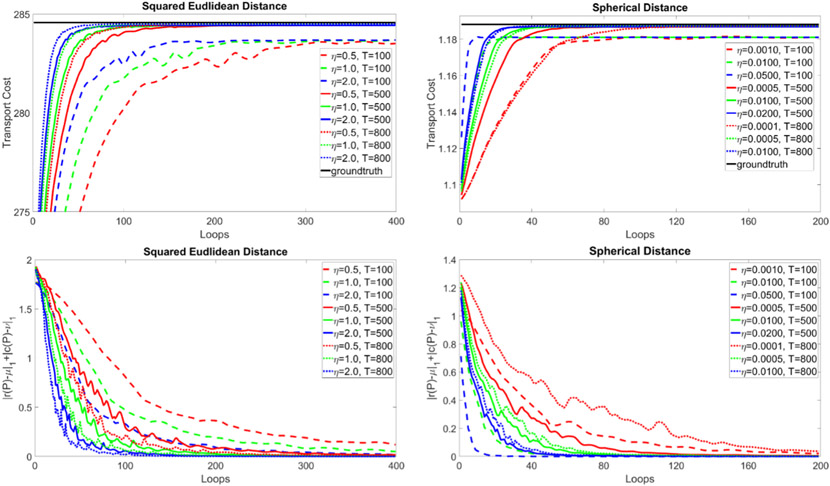

Experiment 1: The influence of different parameters

We test the performance of the proposed algorithm with different parameters under SED and SD with and , as shown in Fig. 1. The left column shows the results for SED and the right column those for SD. The top row illustrates the transport costs over iterations, and the bottom row shows the distance from the computed plan to the admissible set $\Pi(\mu, \nu)$.

Figure 1: The performance of the proposed algorithm with different parameters.

In the top row of Fig. 1, the black lines give the ground-truth transport costs, computed by linear programming. For the same $\beta$, increasing $\alpha$ (i.e. decreasing $\lambda$; compare lines of different types with the same color) improves the approximation accuracy and increases the convergence rate; if $\alpha$ (equivalently $\lambda$) is fixed, increasing $\beta$ (compare lines of different colors with the same type) increases the convergence speed.

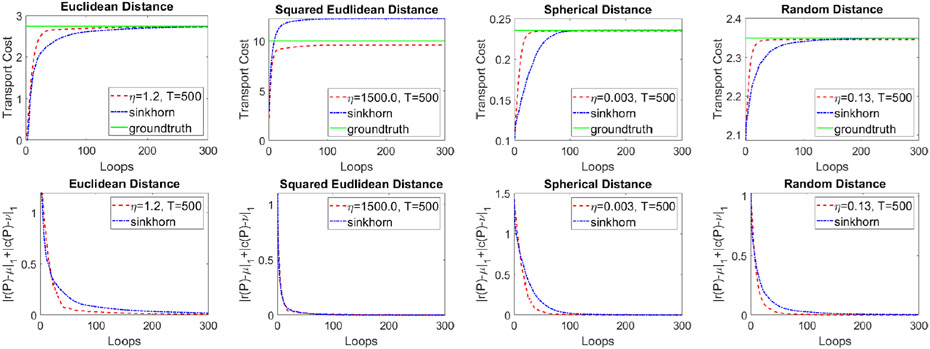

Experiment 2: Faster Convergence

For the experiments with ED and SED, the distributions come from the MNIST dataset (LeCun and Cortes 2010), as described in the Cost Matrix part. For the experiments with SD and RD, we set and . We then compare with the Sinkhorn algorithm (Cuturi 2013) with respect to both the convergence rate and the approximation accuracy. We manually set $\alpha$ to get a good estimate of the OT cost, and then adjust $\beta$ to get the best convergence speed of the proposed algorithm. For a fair comparison, we use the same cost matrix with the same translation trick and the same $\lambda$ for the Sinkhorn algorithm, where we treat each update of both scaling vectors as one step. We summarize the results in Fig. 2, where the green curves represent the ground truth computed by linear programming, the blue curves are for the Sinkhorn algorithm, and the red curves give the results of our method. In all four experiments, our method converges faster than the Sinkhorn algorithm. Note that the approximate transport plan computed by the Sinkhorn algorithm is intrinsically equivalent to our induced transport plan in Eqn. (15).

Figure 2: Comparison with the Sinkhorn algorithm (Cuturi 2013) under different cost matrices.

In Tab. 1, we report the running time of our method, Sinkhorn (Cuturi 2013), its variants Greenkhorn (Altschuler, Niles-Weed, and Rigollet 2017) and Screenkhorn (Alaya et al. 2019), and APDAMD (Lin, Ho, and Jordan 2019) for the four experiments shown in Fig. 2 with . The stopping condition is set to be . In all of the experiments, our proposed method is the fastest.

Table 1: Running time (s) of our method, Sinkhorn (Sink) (Cuturi 2013), Greenkhorn (Green) (Altschuler, Niles-Weed, and Rigollet 2017), Screenkhorn (Screen) (Alaya et al. 2019) and APDAMD (Lin, Ho, and Jordan 2019).

| Cost | Sink | Green | Screen | APDAMD | Ours |
|---|---|---|---|---|---|
| ED | 0.0596 | 0.0923 | 0.0541 | 3.76 | 0.0404 |
| SED | 0.0431 | 0.0870 | 0.0328 | 3.21 | 0.0197 |
| SD | 0.0564 | 0.0862 | 0.0400 | 2.29 | 0.0142 |
| RD | 0.0374 | 0.0726 | 0.0313 | 2.88 | 0.0227 |

Experiment 3: Better Accuracy

From Fig. 2, we observe that our method gives a comparable or better approximation of the OT cost than the Sinkhorn algorithm with the same small $\lambda$, especially for costs $c(x, y) = \|x - y\|_2^p$ with $p > 1$; see the second column of Fig. 2 for an example with $p = 2$. To achieve $\epsilon$ precision, the entropy regularized problem (equivalent to the Sinkhorn result) needs $\gamma = \epsilon/(4\log n)$ (Dvurechensky, Gasnikov, and Kroshnin 2018), which is smaller than our requirement of $\lambda = \epsilon/(2\log n)$ according to Thm. 11. Thus, with the same $\lambda$, the results of our algorithm should be more accurate than the Sinkhorn solutions. To verify this point, we give more examples in Tab. 2 with $p = 1.5$, 2, 3 and 4. Here we use discrete measures similar to those of the squared Euclidean distance experiment described in the Cost Matrix part, and set , . The table shows that our method obtains more accurate results than Sinkhorn.

Table 2: Comparison among the OT cost computed by linear programming (GT), the Sinkhorn results (Cuturi 2013), denoted as 'Sink', and the results of the proposed method, denoted as 'Ours', with the same $\lambda$ and different $p$.

| p | GT | Sink | Ours | \|Sink − GT\| | \|Ours − GT\| |
|---|---|---|---|---|---|
| 1.5 | 103.33 | 103.51 | 103.27 | 0.18 | 0.06 |
| 2 | 281.7 | 282.5 | 281.6 | 0.8 | 0.1 |
| 3 | 2189.8 | 2197.1 | 2187.5 | 7.3 | 2.3 |
| 4 | 16951.4 | 17038.5 | 16932.0 | 87.1 | 19.4 |

Conclusion

In this paper, we propose a novel algorithm based on Nesterov's smoothing technique to improve the accuracy of solving the discrete OT problem. The c-transform of the Kantorovich potential is approximated by the smooth Log-Sum-Exp function, and the smoothed Kantorovich functional can be solved efficiently by FISTA. Theoretically, the computational complexity of the proposed method is $O(n^{5/2}\sqrt{\log n}/\epsilon)$, which is lower than the current estimate for the Sinkhorn method. Experimentally, our results demonstrate that the proposed method achieves faster convergence and better accuracy than the Sinkhorn algorithm.

Acknowledgement

An and Gu have been supported by NSF CMMI-1762287, NSF DMS-1737812, and NSF FAIN-2115095; Xu by NIH R21EB029733 and NIH R01LM012434; and Lei by NSFC 61936002, NSFC 61772105 and NSFC 61720106005.

Footnotes

1. For example, if the double-precision floating-point format is used on 64-bit processors, the range of representable positive numbers is about $10^{-308}$ to $10^{308}$ when using MATLAB.

2. On the one hand, if $\alpha$ is relatively large, the algorithm may exceed the precision range of the processor and produce 'Inf' or 'NaN' unless the step size is small; thus $\beta$ may be far less than 1. On the other hand, since $\Lambda_{\max} \le \max_j (W^T\mu)_j/\lambda$, we may also choose $\beta > 1$ when $\max_j (W^T\mu)_j$ itself is small.

References

- Abid BK; and Gower RM 2018. Greedy stochastic algorithms for entropy-regularized optimal transport problems. In AISTATS.

- Alaya MZ; Berar M; Gasso G; and Rakotomamonjy A 2019. Screening Sinkhorn Algorithm for Regularized Optimal Transport. In Advances in Neural Information Processing Systems 32.

- Altschuler J; Niles-Weed J; and Rigollet P 2017. Near-linear time approximation algorithms for optimal transport via Sinkhorn iteration. In Advances in Neural Information Processing Systems 30.

- An D; Guo Y; Lei N; Luo Z; Yau S-T; and Gu X 2020. AE-OT: A new Generative Model based on extended semi-discrete optimal transport. In International Conference on Learning Representations.

- Arjovsky M; Chintala S; and Bottou L 2017. Wasserstein generative adversarial networks. In ICML, 214–223.

- Beck A; and Teboulle M 2009. A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM Journal on Imaging Sciences, 2(1): 183–202.

- Benamou J-D; Carlier G; Cuturi M; Nenna L; and Peyré G 2015. Iterative Bregman projections for regularized transportation problems. SIAM Journal on Scientific Computing, 37(2): A1111–A1138.

- Blanchet J; Jambulapati A; Kent C; and Sidford A 2018. Towards Optimal Running Times for Optimal Transport. In arxiv:1810.07717.

- Blondel M; Seguy V; and Rolet A 2018. Smooth and Sparse Optimal Transport. In Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, 880–889.

- Chakrabarty D; and Khanna S 2021. Better and Simpler Error Analysis of the Sinkhorn-Knopp Algorithm for Matrix Scaling. Mathematical Programming, 188(1): 395–407.

- Courty N; Flamary R; Tuia D; and Rakotomamonjy A 2017. Optimal Transport for Domain Adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(9): 1853–1865.

- Cuturi M 2013. Sinkhorn Distances: Lightspeed Computation of Optimal Transportation Distances. In International Conference on Neural Information Processing Systems, volume 26, 2292–2300.

- Dvurechensky P; Gasnikov A; and Kroshnin A 2018. Computational optimal transport: Complexity by accelerated gradient descent is better than by Sinkhorn's algorithm. In International Conference on Machine Learning, 1367–1376.

- Galichon A. 2016. Optimal Transport Methods in Economics. Princeton University Press.

- Genevay A; Cuturi M; Peyré G; and Bach F 2016. Stochastic optimization for large-scale optimal transport. In Advances in Neural Information Processing Systems, 3440–3448.

- Gerber S; and Maggioni M 2017. Multiscale Strategies for Computing Optimal Transport. Journal of Machine Learning Research.

- Glimm T; and Oliker V 2003. Optical design of single reflector systems and the Monge–Kantorovich mass transfer problem. Journal of Mathematical Sciences, 117(3): 4096–4108.

- Guo W; Ho N; and Jordan M 2020. Fast Algorithms for Computational Optimal Transport and Wasserstein Barycenter. In International Conference on Artificial Intelligence and Statistics (AISTATS), 2088–2097.

- Horn RA; and Johnson CR 1991. Topics in Matrix Analysis. Cambridge University Press.

- Jambulapati A; Sidford A; and Tian K 2019. A Direct Iteration Parallel Algorithm for Optimal Transport. In International Conference on Neural Information Processing Systems.

- Jordan R; Kinderlehrer D; and Otto F 1998. The variational formulation of the Fokker–Planck equation. SIAM Journal on Mathematical Analysis, 29(1): 1–17.

- Kusner M; Sun Y; Kolkin N; and Weinberger K 2015. From word embeddings to document distances. In Proceedings of the 32nd International Conference on Machine Learning, 957–966.

- LeCun Y; and Cortes C 2010. MNIST handwritten digit database.

- Lee YT; and Sidford A 2014. Path finding methods for linear programming: Solving linear programs in Õ(√rank) iterations and faster algorithms for maximum flow. In 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, 424–433.

- Lei N; An D; Guo Y; Su K; Liu S; Luo Z; Yau S-T; and Gu X 2020. A Geometric Understanding of Deep Learning. Engineering, 6(3): 361–374.

- Lin T; Ho N; and Jordan M 2019. On efficient optimal transport: An analysis of greedy and accelerated mirror descent algorithms. In International Conference on Machine Learning, 3982–3991.

- Meng C; Ke Y; Zhang J; Zhang M; Zhong W; and Ma P 2019. Large-scale optimal transport map estimation using projection pursuit. In Advances in Neural Information Processing Systems 32.

- Nesterov Y 2005. Smooth minimization of non-smooth functions. Mathematical Programming, 103(1): 127–152.

- Nguyen X 2013. Convergence of latent mixing measures in finite and infinite mixture models. Ann. Statist, 41: 370–400.

- Parikh N; and Boyd S 2014. Proximal Algorithms. Foundations and Trends in Optimization.

- Pele O; and Werman M 2009. Fast and robust earth mover's distances. In 2009 IEEE 12th International Conference on Computer Vision (ICCV), 460–467. IEEE.

- Peyré G; and Cuturi M 2018. Computational Optimal Transport. https://arxiv.org/abs/1803.00567.

- Quanrud K. 2018. Approximating optimal transport with linear programs. In arXiv:1810.05957.

- Schiebinger G; Shu J; Tabaka M; Cleary B; Subramanian V; Solomon A; Gould J; Liu S; Lin S; Berube P; Lee L; Chen J; Brumbaugh J; Rigollet P; Hochedlinger K; Jaenisch R; Regev A; and Lander E 2019. Optimal-Transport Analysis of Single-Cell Gene Expression Identifies Developmental Trajectories in Reprogramming. Cell, 176(4): 928–943.

- Schmitzer B 2019. Stabilized Sparse Scaling Algorithms for Entropy Regularized Transport Problems. SIAM Journal on Scientific Computing, 41(3): A1443–A1481.

- Tolstikhin I; Bousquet O; Gelly S; and Schoelkopf B 2018. Wasserstein Auto-Encoders. In ICLR.

- Villani C 2008. Optimal transport: old and new, volume 338. Springer Science & Business Media.

- Xie Y; Chen M; Jiang H; Zhao T; and Zha H 2019a. On Scalable and Efficient Computation of Large Scale Optimal Transport. In Proceedings of the 36th International Conference on Machine Learning, 6882–6892.

- Xie Y; Wang X; Wang R; and Zha H 2019b. A Fast Proximal Point Method for Computing Wasserstein Distance. In Conference on Uncertainty in Artificial Intelligence, 433–453.

- Yurochkin M; Claici S; Chien E; Mirzazadeh F; and Solomon JM 2019. Hierarchical Optimal Transport for Document Representation. In Advances in Neural Information Processing Systems 32.