Abstract

Anti-vaccine content is rapidly propagated via social media, fostering vaccine hesitancy, while pro-vaccine content has not matched this success. Despite this disparity in the dissemination of anti- and pro-vaccine posts, the linguistic features that facilitate or inhibit the propagation of vaccine-related content remain poorly understood. Moreover, most prior machine-learning algorithms classified social-media posts into binary categories (e.g., misinformation or not) and have rarely tackled a higher-order classification task based on divergent perspectives about vaccines (e.g., anti-vaccine, pro-vaccine, and neutral). Our objectives are (1) to identify sets of linguistic features that facilitate and inhibit the propagation of vaccine-related content and (2) to compare whether anti-vaccine, pro-vaccine, and neutral tweets contain either set more frequently than the others. To achieve these goals, we collected a large set of social media posts (over 120 million tweets) between Nov. 15 and Dec. 15, 2021, coinciding with the Omicron variant surge. A two-stage framework was developed using a fine-tuned BERT classifier, achieving over 99% and 80% accuracy for binary and ternary classification, respectively. Finally, the Linguistic Inquiry and Word Count text analysis tool was used to count linguistic features in each classified tweet. Our regression results show that anti-vaccine tweets are propagated (i.e., retweeted), while pro-vaccine tweets garner passive endorsements (i.e., favorited). Our results also yielded two sets of linguistic features that act as facilitators and inhibitors of the propagation of vaccine-related tweets. Finally, our regression results show that anti-vaccine tweets tend to use the facilitators, while their pro-vaccine counterparts employ the inhibitors. The findings and algorithms from this study will aid public health officials’ efforts to counteract vaccine misinformation, thereby facilitating the delivery of preventive measures during pandemics and epidemics.

Keywords: vaccine misinformation, health informatics, deep-learning, regression analyses, social media, diffusion of information

I. Introduction

Anti-vaccine content disseminated via social media fosters vaccine hesitancy, interfering with efforts to slow down the pandemic and exacerbating health disparities. Even before the pandemic, anti-vaccine content spiked on social media in 2014 when a public-school mandate of Gardasil 9 (Human Papillomavirus [HPV] vaccine) ignited conservatives’ protests [1]. More recently, anti-vaccine rhetoric increased exponentially around COVID-19 immunization. During the pandemic, tweets referring to the inaccurate origin of the virus and home remedies increased more than fivefold from January to March 2020 [2]. The top website domains to which the tweets referred were unverified sources (e.g., YouTube, Instagram) [2]. An empirical association between engagement in anti-vaccine content and decreases in uptake rates has been established [3]. As of March 2022, only 65% of Americans were fully vaccinated [4].

In response to this rapidly growing anti-vaccine content on social media, pro-vaccine content has increased to defend the vaccine systems. Nonetheless, anti-vaccine communities on social media reach the undecided more effectively than pro-vaccine groups [5], resulting in the propagation of vaccine hesitancy. What topics anti-vaccine communities discuss on social media, and how those topics help their messages diffuse through the network better than those of pro-vaccine communities, remain unknown. In a similar vein, few studies have shown how pro-vaccine communities’ choice of topics impedes the propagation of their messages.

Our primary objective, therefore, is to identify linguistic features that facilitate or inhibit the propagation of vaccine-related posts via social networks. In our investigation of “propagation,” we focus on Twitter, which is used to broadcast messages to large crowds, as opposed to fostering existing affinity as on Facebook [6]. On Twitter, we examine how original posts are shared (i.e., retweets) and how those retweeted posts are endorsed (i.e., favorites). Our secondary objective is to compare anti-, pro-, and neutral vaccine communities’ choices of linguistic features. Specifically, we investigate whether anti- and pro-vaxxers use certain features more often than the other(s), facilitating or inhibiting their propagation. This comparison across three groups, therefore, will help us understand why anti-vaxxers’ messages diffuse to the undecided better than pro-vaxxers’ [5].

To achieve these goals, we developed an automatic text classifier using machine learning (ML) algorithms. Specifically, we use a two-stage ternary classification framework that (1) selects only vaccine-related tweets and then (2) classifies vaccine-related tweets into anti-, pro-, and neutral perspectives about vaccines. We differentiate our approach from prior ML studies in two aspects. First, prior approaches often assumed that all the social media posts downloaded using vaccine-relevant keywords (e.g., Moderna, Pfizer) were related to vaccines. However, many social media posters use popular keywords (such as Pfizer) to garner more attention without discussing the topic (as in clickbait). Moreover, our goal was to download nearly full coverage of vaccine discussions on Twitter; thus, we used a comprehensive set of keywords, some of which are only tangentially relevant to vaccines (e.g., “Trump”). Filtering out vaccine-unrelated posts was therefore necessary.

Second, our approach involved ternary classification: (1) anti-vaccine (expressing negative views about vaccines and encouraging people to delay/refuse inoculation), (2) pro-vaccine (expressing positive views about vaccines and encouraging acceptance), and (3) neutral (delivering non-opinionated facts about vaccines). Most prior studies classified posts into binary classes (e.g., credible vs. fake vaccine information; see Section II-C). However, the third class (i.e., neutral) grew during the pandemic as news about vaccines, Omicron variants, and booster shots increased substantially. For instance, someone tweeted a newspaper article, “FDA approved a second booster shot for adults over 50+,” which is neither pro- nor anti-vaccine but simply states a fact.

We embarked upon a large data collection from November 15 to December 15 of 2021, which coincides with the surge of Omicron variant and the approvals of booster shots. As mentioned earlier, our goal was to reach a nearly full coverage of all vaccine-related posts on Twitter. Thus, we used 348 keywords and collected 120,115,096 tweets, which were then classified. The classified results were fed into our regression models to identify and compare linguistic features that the three vaccine perspectives use to garner favorites and retweets.

The outcomes from this study make three contributions. First, they close a gap in the vaccine-hesitancy literature by identifying why and how anti-vaxxers thrive on social media in spite of pro-vaxxers’ efforts. Specifically, we examine linguistic features that help diffuse vaccine-related content and compare these features across three vaccine classes. Understanding anti-vaccine linguistic features will aid in the development of interventions to counteract misinformation. Second, we contribute to the advancement of social media analytics for planning and managing care. In particular, increases in specific linguistic features linked to rapid propagation will inform public health officials of the need for interventions. This targeted approach will help reduce the costs of managing public health. Third, our comprehensive and systematic approach for collecting a large number of tweets establishes a benchmark for data management during a public health crisis. Towards that end, we created a training set of ∼10,000 human-annotated vaccine-related tweets (to be shared upon the acceptance of this manuscript), which will accelerate future developments of ML algorithms. Our fine-tuned classifiers, designed to accurately categorize vaccine-related tweets and their three perspectives, are made publicly available via our anonymized GitHub repository located at https://anonymous.4open.science/r/Vaccine-Classification-C087/.

II. Related Research

A. Propagation of Anti- and Pro-Vaccine Messages and Their Impact on Immunization

The danger of rapidly propagating anti-vaccine posts and their potential correlations with outbreaks is well-documented [5]. Johnson et al. [5] applied social network analyses to more than one million Facebook users and showed rapid growth of anti-vaccinist activities during the measles outbreaks of 2019, while pro-vaccinist activities were unchanged. Although anti-vaccine clusters on social media are smaller than their pro-vaccine counterparts, anti-vaxxers discuss more diverse topics and have more frequent contact with the undecided. As a result, Johnson et al. predicted that anti-vaccine sentiment would become a dominant viewpoint within the next decade.

Moreover, Argyris et al. [3] have shown that this propagation of anti-vaccine rhetoric on social media is associated with decreases in vaccination rates. In contrast, pro-vaccine content on social media does not increase the uptake rates as much as the anti-vaccine counterpart decreases the rates.

B. Linguistic Features and Discussion Topics

Given the clear harms of anti-vaccine propagation to public health, a large number of studies have attempted to understand why and how anti-vaccine rhetoric is propagated better than its pro-vaccine counterpart. In so doing, many of them analyzed linguistic features (such as word use) and topics of discussion. Furini and Menegoni [7] categorized the content of vaccine-related tweets and found that anti-vaxxers are angrier and discuss diverse and general topics, while pro-vaxxers discuss narrow topics of specific diseases and vaccines. Memon et al. [8] have shown that anti-vaccine Twitter users employ more linguistic intensifiers, speak more in the third person, and use more gendered, subject, and object pronouns. Okuhara et al. [9] have argued that anti-vaccine sites focus on vaccine side effects and pro-vaccine sites on vaccine efficacy.

Huangfu et al. [10] have specifically focused on tweets about COVID-19 vaccines. The main topics of positive tweets were vaccine information and knowledge, while the main concerns in negative tweets were vaccine hesitancy and side effects. Using unsupervised topic modeling, Swapna and Gokhale [11] have investigated public perceptions of the COVID-19 vaccines. Four themes emerge from anti-vaccine tweets: (1) a lack of safety as a result of hasty development, (2) conspiracy and conflicts of interest, (3) ideology, globalism, and the new world order, and (4) a loss of personal choice and independence. Pro-vaxxers attempt to persuade skeptics by citing previous vaccination successes and sending warnings about the anti-vaccine movement’s expansion on social media. Pro-vaxxers accused anti-vaxxers of disseminating lies and disinformation, mocking, ridiculing, and insulting anti-vaxxers. Thelwall et al. [12] have used a content analysis to examine 446 COVID-19 vaccine-skeptical tweets. Conspiracies, the speed of vaccine development, and safety concerns were among the key topics of discussion.

C. Prior Studies on Machine-Learning-Based Classifiers

Several recent studies have focused on detecting COVID-19 vaccine misinformation in social media using ML techniques. For example, Cui and Lee [13] employed a variety of techniques such as logistic regression, support vector machine, random forest, and Hierarchical Attention Networks (HAN) to detect misinformation on Twitter. Abdelminaam et al. [14] applied conventional Recurrent Neural Network (RNN) techniques such as Gated Recurrent Unit (GRU) and Long Short-Term Memory (LSTM) to detect COVID-19 misinformation posts and obtained an accuracy of around 98%. Similarly, Hayawi et al. [15] employed the eXtreme Gradient Boosting (XGBoost) algorithm along with deep learning methods such as LSTM and Bidirectional Encoder Representations from Transformers (BERT) [16] to detect vaccine misinformation. In that study, the authors collected Twitter data using keywords such as vaccine, Pfizer, Moderna, and Sputnik, and showed that BERT outperformed LSTM and XGBoost with an accuracy of 98.4% when applied to their dataset.

III. Limitations in Prior Studies and Our Research Objectives

Prior researchers have shown that anti-vaccine messages are propagated on social media better than pro-vaccine content [5]. To find reasons for the propagation of anti-vaccine content, many researchers have identified what is discussed in anti-vaccine posts and compared them against pro-vaccine counterparts. These prior studies have shown that anti-vaccine posts are conspiratorial, linking vaccines to a corrupted, profit-driven pharmaceutical industry, while pro-vaccine counterparts focus on communal values (e.g., family, society, herd immunity) and condemn anti-vaxxers disparagingly. Nonetheless, what is missing from these studies is the link between topics discussed and the extent of propagation. In other words, very few studies have provided a comprehensive and systematic list of words used by anti- and pro-vaxxers that either facilitate or inhibit the propagation.

Likewise, as mentioned above, several prior researchers have developed ML algorithms and demonstrated reliable performance. A limitation of these prior studies is that, despite the high performance, their classification tasks focused on detecting only two classes: whether or not a tweet contains COVID-19-related misinformation. None of these studies has performed ternary classification into anti, pro, and neutral vaccine perspectives. Also, none of the prior studies has employed a preliminary step that filters out vaccine-unrelated text from the dataset, even though such filtering enables a more targeted analysis of genuinely vaccine-related posts. This is an important step, as many posts that employ vaccine-related hashtags and keywords use them for clickbait or for other purposes, such as selling alternative care (e.g., essential oil to boost immunity) [17].

Accordingly, our goal is to close these gaps in the prior studies by:

(1) examining how linguistic features facilitate or inhibit the propagation of vaccine-related messages; and

(2) comparing whether the three groups of differing vaccine perspectives (anti, pro, and neutral groups) use those facilitating or inhibiting features more frequently than other groups.

IV. Methodology

A. Data Collection

Twitter is one of the most popular social media platforms, with 353 million active users and more than 500 million tweets posted every day [15]. The Twitter Streaming API enables the collection of public tweets, including their textual content, user information, number of retweets, and mentions, in JavaScript Object Notation (JSON) format. We used the Tweepy Python library [18] to access the Twitter API. By reviewing previous academic and popular literature, we identified and verified 348 keywords for querying the Twitter API in order to acquire a large coverage of pro- and anti-vaccine tweets. The selected keywords consist of terms related to vaccines (e.g., vaccine, vaccinated, unvaccinated, vaxxed, bigpharma, pharma, and mandate), the pandemic (e.g., Pfizer, Moderna, virus, Omicron, and booster), and other political words (e.g., Trump).

Our data collection spans the period between November 15, 2021 and December 15, 2021, which coincides with the rise of anti-vaccine sentiment observed in prior years (due to the upcoming flu season [19]), the surge of Omicron, and the approval of booster shots. During this period, we collected 120,115,096 tweets, averaging 1.45 million tweets per day. More than 36.5% (44,046,115) of the collected tweets are original posts, while the remainder are retweets.

B. Construction of Training Set

Our effort to build a training set that represents diverse topics of vaccine-related tweets began even before our main data collection period (the dataset can be downloaded from https://doi.org/10.5061/dryad.d51c5b05j). Our initial annotation involved two independent coders who were not aware of the research objectives. Their goal was to label tweets into three categories (anti, pro, and neutral) in an unbiased, reliable, and transparent manner, following Rietz and Maedche’s four steps [20]. First, the two coders individually hand-coded the tweets to create a coding book. Second, they met to compare their coding books and highlight (mis)matches in order to identify coding errors. Third, the two coders involved a third-party mediator (i.e., a third independent coder who did not know the research objectives) when explaining their code recommendations and rules for transparency. The two coders repeated this comparison twice, raising the agreement rate from 89.9% (the first analysis) to 97.31% (the second analysis) and finally to 100%. Fourth, during these iterative processes, the coders, along with the mediator, continuously revised their coding rules and definitions and identified textual features to anchor or change each one’s recommended code for a tweet. Our final annotated data set contained 10,836 labeled tweets, consisting of the following classes: anti-vaccine (n=1,402), pro-vaccine (n=1,875), and neutral (n=1,565), with the remaining tweets unrelated to vaccines.
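The agreement check between the two coders can be illustrated with a small sketch. It assumes that the reported agreement rate is a simple percent agreement (the share of tweets given the same label by both coders); the text does not name the statistic, and the function names and toy labels below are hypothetical.

```python
def percent_agreement(labels_a, labels_b):
    """Percentage of items on which two coders assigned the same label."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both coders must label the same items")
    if not labels_a:
        raise ValueError("no items to compare")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return 100.0 * matches / len(labels_a)


def disagreements(labels_a, labels_b):
    """Indices of items the coders would need to discuss with the mediator."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]


# Toy labels for five tweets (illustrative only, not the study's data).
coder_1 = ["anti", "pro", "neutral", "unrelated", "pro"]
coder_2 = ["anti", "pro", "anti", "unrelated", "pro"]

rate = percent_agreement(coder_1, coder_2)
to_review = disagreements(coder_1, coder_2)
```

Each round of discussion would shrink the list of disagreements until the two label sequences match.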

C. Tweet Classification

Our first step in the classification task was to distinguish vaccine-related tweets from unrelated ones. A binary classifier such as regularized logistic regression [21] can be fit to the training data using a set of term frequency (TF) vectors extracted from the textual features of the tweets. Since the proportion of vaccine-related to vaccine-unrelated tweets in the labeled data is moderately skewed (45%:55%), oversampling was performed to create a balanced class distribution in the training data before fitting the logistic regression classifier. While it is possible to use a richer feature representation of the tweets, as will be shown in Section V, the TF approach with a logistic regression classifier was sufficient to generate a robust model that classifies the tweets with more than 97% test accuracy. More advanced classifiers with feature representation learning using deep neural networks may increase the test accuracy slightly (by 0.1% to 1.9%), but are more expensive to train.
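The balancing step can be sketched as follows. This is a minimal illustration that assumes random oversampling with replacement, where minority-class tweets are duplicated until every class matches the largest one; the text does not specify which oversampling variant was used, and `oversample` is a hypothetical helper.

```python
import random
from collections import Counter


def oversample(texts, labels, seed=0):
    """Duplicate randomly chosen minority-class items until classes are balanced."""
    rng = random.Random(seed)
    by_class = {}
    for text, label in zip(texts, labels):
        by_class.setdefault(label, []).append(text)
    target = max(len(items) for items in by_class.values())
    out_texts, out_labels = [], []
    for label, items in by_class.items():
        # Draw the missing number of examples with replacement.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels


# Toy training set with the 45%:55% skew mentioned above.
texts = ["tweet %d" % i for i in range(20)]
labels = ["related"] * 9 + ["unrelated"] * 11

balanced_texts, balanced_labels = oversample(texts, labels)
counts = Counter(balanced_labels)
```

In a cross-validation setting, oversampling of this kind is normally applied to the training folds only, so that duplicated tweets do not leak into the test fold.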

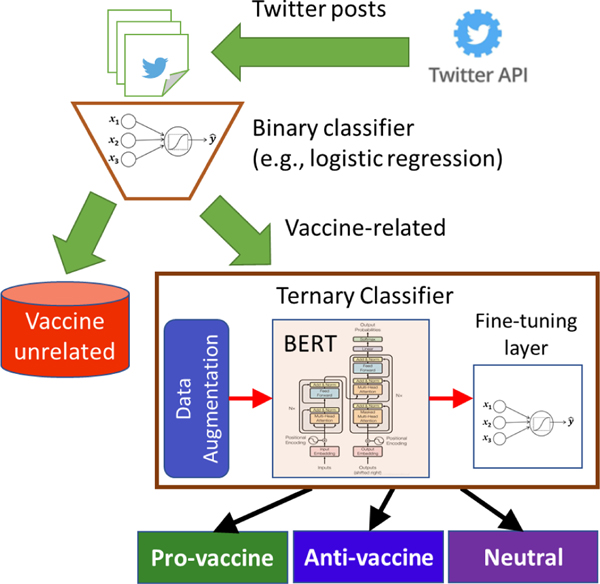

The second step of the classification task was to further categorize the vaccine-related tweets into anti, pro, or neutral groups in terms of their perspectives about vaccines. While it is possible to classify all the tweets simultaneously into one of four classes (including vaccine-unrelated), the accuracy is likely to be poor, as the classifier will be biased towards classifying the larger, vaccine-unrelated class more accurately. Our two-stage classification framework, shown in Figure 1, helps to alleviate this problem by first distinguishing vaccine-related posts from unrelated ones before identifying their perspectives about vaccines.

Fig. 1. Proposed two-stage architecture for tweet classification.
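The control flow of the two-stage framework can be sketched as below. The two stages are passed in as plain functions; the keyword rules used here are toy stand-ins invented for illustration and are not the trained models described in this section.

```python
def classify_tweet(text, is_vaccine_related, predict_stance):
    """Stage 1 filters out unrelated tweets; stage 2 runs only on the rest."""
    if not is_vaccine_related(text):
        return "unrelated"
    return predict_stance(text)


# Toy stand-ins for the two stages (hypothetical keyword rules).
def toy_filter(text):
    lowered = text.lower()
    return "vaccine" in lowered or "booster" in lowered


def toy_stance(text):
    lowered = text.lower()
    if "refuse" in lowered:
        return "anti"
    if "get your" in lowered:
        return "pro"
    return "neutral"


examples = [
    "Trump rally tonight",
    "FDA approved a second booster shot",
    "Get your vaccine today",
    "I refuse the vaccine",
]
predictions = [classify_tweet(t, toy_filter, toy_stance) for t in examples]
```

Because the stance model never sees tweets rejected by the filter, it can be trained on the three stance classes alone.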

In principle, a multinomial logistic regression is applicable to classifying the vaccine-related tweets [6]. However, its performance is highly dependent on the quality of the input features. While the TF features may be able to distinguish the vaccine-related posts from unrelated ones, they do not sufficiently capture the semantic and contextual meaning of the words needed to identify a tweet’s vaccination stance. Thus, more sophisticated feature learning methods are needed to improve the ternary classification task. In this work, a pre-trained deep learning model called BERT (Bidirectional Encoder Representations from Transformers) [16] was employed to derive the feature embedding of each tweet. BERT is a language model that provides a rich contextual word representation of a text corpus by learning the long-range dependencies between words using self-attention with bidirectional training. The pre-trained BERT-base model [22] consists of 12 encoder layers, each with 12 bidirectional self-attention heads, and was fine-tuned to fit our training data. The model was pre-trained on a vast text corpus with the unsupervised objectives of masked language modeling and next-sentence prediction. It uses an auto-encoding approach, masking out a portion of the input tokens and predicting those tokens based on all other non-masked tokens, while learning a concise vector representation of the tokens in the process [23]. The pre-trained network was used as a feature extraction step, which was then combined with a linear layer with a sigmoid activation function to predict the ternary class of each tweet.

Furthermore, as the anti-vaccine posts were less frequent than the other two classes (pro-vaccine and neutral) in our labeled dataset, data augmentation [24] was performed during training to increase the number of training examples from the less frequent classes. Specifically, we augmented the data by simply concatenating two original tweets in the training set from the same class c to create a “new” labeled tweet, which was assigned to the same class c. The data augmentation step helps to increase the size and diversity of training examples without explicitly collecting and annotating new tweets or resampling the original tweets using traditional stratification-based methods.
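The concatenation-based augmentation can be sketched in a few lines. The pairing strategy (two distinct tweets drawn at random), the single-space separator, and the number of new examples are assumptions made for illustration; the text only states that two tweets of the same class are concatenated and keep that class label.

```python
import random


def augment_by_concatenation(texts, labels, target_class, n_new, seed=0):
    """Create n_new tweets by joining two distinct tweets of target_class."""
    rng = random.Random(seed)
    pool = [t for t, y in zip(texts, labels) if y == target_class]
    if len(pool) < 2:
        raise ValueError("need at least two tweets of the target class")
    new_texts = []
    for _ in range(n_new):
        first, second = rng.sample(pool, 2)  # two different positions in the pool
        new_texts.append(first + " " + second)
    # The new tweets inherit the class of the tweets they were built from.
    return texts + new_texts, labels + [target_class] * n_new


# Toy training set in which "anti" is the less frequent class.
texts = ["a1", "a2", "a3", "p1", "p2", "p3", "p4"]
labels = ["anti"] * 3 + ["pro"] * 4

aug_texts, aug_labels = augment_by_concatenation(texts, labels, "anti", n_new=1)
```

As with oversampling, augmentation of this kind would be applied to the training portion only, so that test tweets are never concatenated into training examples.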

V. Results

A. Results of Tweet Classification

We applied a 10-fold cross-validation approach to evaluate the performance of the different tweet classification methods. Specifically, the labeled tweets were initially partitioned into 10 disjoint partitions (called folds). We repeated our experiment 10 times, each time using one of the folds as the test set and the remaining 9 folds as training set. The prediction results on the test sets of all 10 folds were then merged before computing the accuracy and F1-score of the merged test results. These metrics were computed as follows [25]:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \text{F1-score} = \frac{2\,r\,p}{r + p},$$

where $TP$ ($TN$) is the number of true positives (negatives), $FP$ ($FN$) is the number of false positives (negatives), $r = TP/(TP + FN)$ is the model recall, while $p = TP/(TP + FP)$ is known as the model precision. Unlike accuracy, which summarizes the number of correct predictions from all classes into a single number, the F1-score allows us to evaluate how accurately the model predicts each class separately.
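The evaluation protocol described above (train on nine folds, predict the held-out fold, merge all predictions, then compute accuracy and per-class F1) can be sketched as follows. The round-robin fold assignment and the majority-class baseline are assumptions made to keep the example self-contained; the text does not state how the folds were drawn.

```python
from collections import Counter


def k_fold_indices(n_items, k=10):
    """Split range(n_items) into k disjoint folds (round-robin assignment)."""
    return [list(range(fold, n_items, k)) for fold in range(k)]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_for_class(y_true, y_pred, cls):
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cross_validate(items, labels, train_fn, k=10):
    """Return the merged true labels and predictions over all k test folds."""
    merged_true, merged_pred = [], []
    for test_idx in k_fold_indices(len(items), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(items)) if i not in held_out]
        model = train_fn([items[i] for i in train_idx],
                         [labels[i] for i in train_idx])
        merged_true += [labels[i] for i in test_idx]
        merged_pred += [model(items[i]) for i in test_idx]
    return merged_true, merged_pred


def majority_baseline(train_items, train_labels):
    """Toy 'classifier' that always predicts the most frequent training label."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return lambda item: most_common


items = list(range(30))
labels = ["pro"] * 18 + ["anti"] * 12
y_true, y_pred = cross_validate(items, labels, majority_baseline, k=10)
overall_accuracy = accuracy(y_true, y_pred)
f1_pro = f1_for_class(y_true, y_pred, "pro")
f1_anti = f1_for_class(y_true, y_pred, "anti")
```

The baseline illustrates why per-class F1 is reported alongside accuracy: a model that always predicts the majority class still reaches a non-trivial accuracy while its F1-score for the minority class is zero.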

We evaluated the performance of four classifiers (logistic regression, gradient boosting trees, long short-term memory [LSTM], and a fine-tuned BERT classifier) in terms of their ability to classify the tweets as vaccine-related or unrelated, as well as into three perspectives about vaccines (pro-vaccine, anti-vaccine, or neutral). For both logistic regression and gradient boosting trees, the classifier was trained on the term-frequency (TF) vectors extracted from the tweets. The length of the TF vectors was 7044, after removing stopwords and terms that appeared in fewer than 3 tweets. For BERT, the initial pre-trained BERT-base model [16] created a 768-dimensional feature vector for each tweet. The feature vector was subsequently provided as input to a single-layer feed-forward neural network for fine-tuning and to generate the (uncalibrated) probability estimate for each ternary class. For LSTM, an embedding vector of length 500 was initially created using the built-in Keras embedding layer. The embedding vectors were then passed to an LSTM layer to create a 768-dimensional hidden representation, matching the number of hidden dimensions of BERT for a fair comparison. Finally, the feature vectors were fed to a single-layer feed-forward neural network to generate the predicted class.
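The construction of the TF vectors can be illustrated with a minimal sketch. It assumes whitespace tokenization, lowercasing, and a tiny illustrative stopword list; none of these details are given in the text, and only the rule of dropping terms that appear in fewer than 3 tweets is taken from it.

```python
from collections import Counter

# Illustrative stopword list (an assumption, not the list used in the study).
STOPWORDS = {"the", "a", "is", "to", "and", "of"}


def tokenize(text):
    return [tok for tok in text.lower().split() if tok not in STOPWORDS]


def build_vocabulary(tweets, min_tweets=3):
    """Keep terms that occur in at least min_tweets different tweets."""
    doc_freq = Counter()
    for tweet in tweets:
        doc_freq.update(set(tokenize(tweet)))  # count each term once per tweet
    kept = sorted(term for term, df in doc_freq.items() if df >= min_tweets)
    return {term: idx for idx, term in enumerate(kept)}


def tf_vector(tweet, vocabulary):
    """Raw term counts of one tweet over the retained vocabulary."""
    vec = [0] * len(vocabulary)
    for tok in tokenize(tweet):
        if tok in vocabulary:
            vec[vocabulary[tok]] += 1
    return vec


tweets = [
    "the vaccine is safe",
    "vaccine mandate and booster",
    "booster booster vaccine",
    "mandate is coming",
    "booster to the rescue",
]
vocabulary = build_vocabulary(tweets, min_tweets=3)
vector = tf_vector("booster booster vaccine", vocabulary)
```

In this toy corpus only "booster" and "vaccine" survive the frequency filter, so each tweet is represented by a two-dimensional count vector; on the labeled tweets the same procedure is what yields a fixed vocabulary length.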

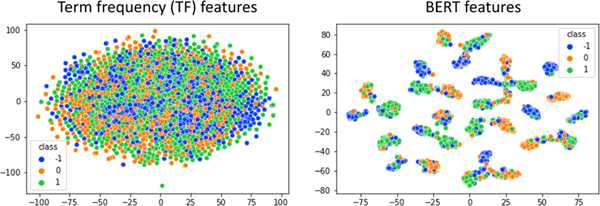

The classification results in Table I suggest that the fine-tuned BERT classifier consistently achieves higher accuracy than the competing methods, including logistic regression, gradient boosting trees, and LSTM. For classifying tweets as vaccine-related or unrelated, the accuracies of all 4 classifiers exceed 97%, with BERT slightly outperforming all other methods, achieving more than 99% accuracy. For the classification of anti-vaccine, pro-vaccine, and neutral tweets, logistic regression has the lowest accuracy as well as the lowest F1-scores for all the classes, followed by gradient boosting trees, LSTM, and BERT, which has the highest accuracy, exceeding 82%. These results suggest the advantage of using a transformer-based deep learning approach to extract the feature embedding for the classification task compared to the TF approach. To further validate the superiority of BERT in terms of its feature representation, Figure 2 shows scatter plots of the 2-dimensional representations of the TF and BERT features obtained using t-Distributed Stochastic Neighbor Embedding (t-SNE) [26], a popular dimensionality-reduction approach for visualizing high-dimensional data. Observe that the fine-tuned BERT features are better at separating the different classes than the original textual features, thus allowing the classifier to discriminate the classes more easily.

TABLE I. Performance comparison of tweet classification methods in terms of their overall test accuracy and per-class F1-score.

(a) Comparison of classification accuracy (%)

| Method | Vax-related vs. unrelated | Pro vs. Anti vs. Neutral |
|---|---|---|
| Logistic regression | 97.13 | 70.03 |
| Gradient Boosting | 98.26 | 78.52 |
| LSTM | 97.24 | 78.7 |
| Fine-tuned BERT | 99.06 | 82.63 |

(b) Comparison of F1-score (%)

| Method | Pro-vaccine | Anti-vaccine | Neutral |
|---|---|---|---|
| Logistic regression | 72.19 | 67.14 | 70.07 |
| Gradient Boosting | 79.65 | 73.64 | 81.21 |
| LSTM | 81.98 | 74.72 | 78.91 |
| Fine-tuned BERT | 83.69 | 78.49 | 84.84 |

Fig. 2. Comparison of the TF features against the fine-tuned BERT features using t-SNE. Observe that the BERT features appear to better discriminate the different classes (0: neutral, 1: pro, −1: anti) compared to the raw TF features.

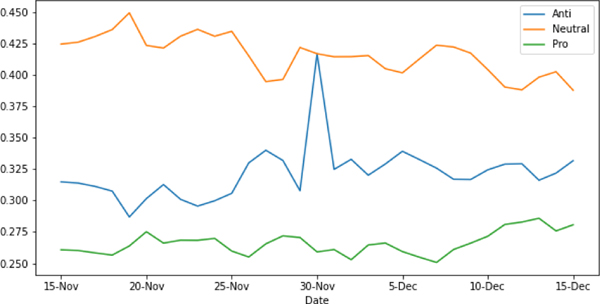

Figure 3 shows the daily distribution of vaccine-related tweets for each class. The plot shows a peak in the proportion of anti-vaccine tweets on November 30, 2021, which coincides with the date when the United States announced Omicron as a variant of concern. The anti-vaccine tweets also show an increasing trend over the time period, in contrast to the neutral tweets, which show a gradually decreasing trend.

Fig. 3. Proportion of daily vaccine-related tweets classified as pro-, neutral, and anti-vaccine from Nov 15 to Dec 15, 2021.

B. Sample

Using the aforementioned classifier, we identified 4,668,980 tweets as vaccine-related. Of these, 1,488,114 were anti-vaccine, 1,243,637 were pro-vaccine, and 1,937,239 were neutral. We then randomly selected 1.1% (51,360) of these 4,668,980 tweets. Of the 51,360 sampled tweets, 21,247 were neutral, 16,469 were anti-vaccine, and 13,644 were pro-vaccine.

Of the 51,360 tweets in the random sample, only 5,710 had been retweeted at least once between our data collection period (Nov 15-Dec 15) and mid-March 2022, by which time at least three months had elapsed since their initial posting. Of these 5,710 tweets, 1,663 were replies to other tweets, leaving 4,047 original tweets that had been retweeted. As our research objective is to track the tweets that are propagated through a social network, we focused only on the 4,047 original tweets.

C. Operationalization of Variables

The number of retweets that each original tweet accrued is used as our dependent variable to measure the extent of propagation because the more a post is retweeted, the more likely it is to reach a broader audience. We also collected the number of favorites that each retweeted post garnered as another measure of propagation because the number of favorites suggests whether a retweeted post is actually reviewed.

For our predictor variables, we used the probabilities of a tweet belonging to the anti-vaccine, pro-vaccine, or neutral class, which were generated by our classifier described above. In addition, to identify the linguistic features used in each tweet, we applied the Linguistic Inquiry and Word Count (LIWC) text analysis tool to each tweet. LIWC counts words in psychologically significant categories. LIWC’s capacity to detect meanings in a wide range of scenarios, including showing attention, emotions, social interactions, cognitive styles, and individual differences, has been demonstrated empirically [27]. LIWC has been utilized by researchers for a variety of vaccination-related topics, such as comparing language use in pro- and anti-vaccination content [28], understanding anti-vaccination attitudes [29], understanding the psycho-linguistic differences among competing vaccination communities [30], [31], and characterizing discourse about COVID-19 vaccines [32]. We used the LIWC2015 dictionary and generated an output value for each of the 92 psycho-linguistic categories.

Moreover, we collected other information, such as the date and time of posting and the numbers of friends and followers, all of which can potentially influence the propagation of a post. The more followers and friends one has, the more likely one’s posts are to be retweeted. Likewise, the particular week in which a post is created potentially matters, since our data collection period (Nov 15 to Dec 15, 2021) was filled with new developments about the Omicron variant and the approval of booster shots. Moreover, generally speaking, the longer a post has been available on Twitter, the higher the chance that it is retweeted. Thus, we created a new variable, the week, from the date and time when each post was created. The week ranges from 1 to 5, representing the five weeks between Nov 15 and Dec 15, 2021.
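One way to derive the week variable from a posting timestamp is sketched below. It assumes consecutive seven-day bins counted from November 15, 2021, so that week 5 covers only December 13 to 15; the exact week boundaries are not stated in the text, and `week_of_collection` is a hypothetical helper.

```python
from datetime import datetime

# First day of the data collection period (taken from the text).
COLLECTION_START = datetime(2021, 11, 15)


def week_of_collection(created_at):
    """Map a posting timestamp to a week index from 1 to 5."""
    days = (created_at - COLLECTION_START).days
    if not 0 <= days <= 30:
        raise ValueError("timestamp outside the Nov 15 - Dec 15, 2021 window")
    return days // 7 + 1  # seven-day bins: days 0-6 -> week 1, 7-13 -> week 2, ...


first_day = week_of_collection(datetime(2021, 11, 15))
last_day = week_of_collection(datetime(2021, 12, 15, 12, 0))
```

Under this binning the 31-day window yields four full weeks plus a short fifth week, which matches the stated range of 1 to 5.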

D. Descriptive Statistics and Preliminary Test Results

Table II presents the descriptive statistics of our data. As the number of followers, favorites, friends, and retweets vary substantially, we log-transformed these values. When log-transforming these values, we added a constant value of 1 to each value of the variables to deal with zero values.
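The transformation can be written as a one-line helper. The natural logarithm is an assumption here, since the base of the logarithm is not stated; `log_transform` is a hypothetical name.

```python
import math


def log_transform(count):
    """Return log(count + 1) so that a count of zero maps to zero."""
    if count < 0:
        raise ValueError("counts must be non-negative")
    return math.log1p(count)  # log1p(x) computes log(1 + x)


# Example: a tweet with no favorites and the most retweeted tweet in Table II.
zero_favorites = log_transform(0)
max_retweets = log_transform(24294)
```

Adding 1 before taking the logarithm keeps tweets with zero favorites, followers, or friends in the analysis while compressing the long right tail visible in Table II.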

TABLE II. Descriptive statistics

| Variable | Class | Mean | Median | Std. Deviation | 95% CI for Mean: Lower Bound | 95% CI for Mean: Upper Bound | Minimum | Maximum |
|---|---|---|---|---|---|---|---|---|
| Week | Anti | 3.14 | 3.00 | 1.31 | 3.06 | 3.23 | 1.00 | 5.00 |
| | Neutral | 3.13 | 3.00 | 1.30 | 3.07 | 3.19 | 1.00 | 5.00 |
| | Pro | 3.26 | 3.00 | 1.31 | 3.18 | 3.33 | 1.00 | 5.00 |
| | Total | 3.17 | 3.00 | 1.31 | 3.13 | 3.21 | 1.00 | 5.00 |
| # of retweets | Anti | 39.13 | 2.00 | 363.96 | 15.80 | 62.47 | 1.00 | 8,253.00 |
| | Neutral | 18.16 | 2.00 | 107.22 | 13.46 | 22.85 | 1.00 | 2,562.00 |
| | Pro | 35.62 | 2.00 | 733.98 | -7.72 | 78.96 | 1.00 | 24,294.00 |
| | Total | 27.78 | 2.00 | 428.14 | 14.58 | 40.97 | 1.00 | 24,294.00 |
| # of favorites | Anti | 142.94 | 5.00 | 1,267.02 | 61.71 | 224.17 | 0.00 | 31,004.00 |
| | Neutral | 68.44 | 5.00 | 370.76 | 52.20 | 84.67 | 0.00 | 6,784.00 |
| | Pro | 99.39 | 8.00 | 936.89 | 44.06 | 154.72 | 0.00 | 28,984.00 |
| | Total | 94.13 | 6.00 | 824.43 | 68.72 | 119.54 | 0.00 | 31,004.00 |
| # of followers | Anti | 85,867.59 | 2,224.00 | 518,605.59 | 52,618.67 | 119,116.50 | 0.00 | 7,700,000.00 |
| | Neutral | 353,193.68 | 3,900.00 | 2,661,590.11 | 236,650.72 | 469,736.64 | 0.00 | 55,000,000.00 |
| | Pro | 183,314.10 | 3,035.50 | 1,984,134.90 | 66,145.41 | 300,482.79 | 0.00 | 48,000,000.00 |
| | Total | 244,957.55 | 3,231.00 | 2,158,394.99 | 178,439.07 | 311,476.04 | 0.00 | 55,000,000.00 |
| # of friends | Anti | 3,835.42 | 1,276.00 | 11,857.57 | 3,075.21 | 4,595.64 | 0.00 | 230,047.00 |
| | Neutral | 3,538.33 | 935.50 | 16,163.94 | 2,830.56 | 4,246.10 | 0.00 | 576,594.00 |
| | Pro | 3,076.27 | 1,001.00 | 8,683.69 | 2,563.48 | 3,589.07 | 0.00 | 133,915.00 |
| | Total | 3,481.07 | 1,011.00 | 13,514.18 | 3,064.58 | 3,897.56 | 0.00 | 576,594.00 |

Next, we conducted one-way ANOVAs to test whether the three vaccine classes differ in terms of these variables. The results are presented in Table III below. Across the predicted classes, significant differences were found in the number of favorites (F(2,4046) = 13.127, p < .001), followers (F(2,4046) = 24.625, p < .001), and friends (F(2,4046) = 7.878, p < .001), but not in the number of retweets (F(2,4046) = 0.47, p = .625). Lastly, the number of tweets over the five-week span differed significantly among the three vaccine classes (F(2,4046) = 3.492, p = .031).
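For readers who want to see how an F value of this kind is formed, the following is a minimal sketch of a one-way ANOVA in plain Python. It is not the authors' procedure (the analyses in this paper were run in SPSS), and the three small groups are toy values chosen only to make the arithmetic easy to follow.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total

    ss_between = 0.0  # variation of group means around the grand mean
    ss_within = 0.0   # variation of observations around their group mean
    for g in groups:
        mean_g = sum(g) / len(g)
        ss_between += len(g) * (mean_g - grand_mean) ** 2
        ss_within += sum((x - mean_g) ** 2 for x in g)

    df_between = k - 1
    df_within = n_total - k
    # F is the ratio of the between-group to the within-group mean square.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Toy log-transformed values for three hypothetical classes.
anti = [1.0, 2.0, 3.0]
neutral = [2.0, 3.0, 4.0]
pro = [5.0, 6.0, 7.0]
f_stat, df_b, df_w = one_way_anova_f([anti, neutral, pro])
```

The "Mean Square" column of an ANOVA table corresponds to `ss_between / df_between` in this sketch, and the F column is that value divided by the within-group mean square.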

TABLE III.

ANOVA results

| Variables | Sum of Squares | Mean Square | F | Sig. |
|---|---|---|---|---|
| Week | 11.882 | 5.941 | 3.492 | 0.031* |
| Retweets (log-transformed) | 1.248 | 0.624 | 0.47 | 0.625 |
| Favorites (log-transformed) | 70.444 | 35.222 | 13.127 | < 0.001* |
| Followers (log-transformed) | 332.01 | 166.005 | 24.625 | < 0.001* |
| Friends (log-transformed) | 45.744 | 22.872 | 7.878 | < 0.001* |

\* Significant at α = 0.05.

Because the ANOVA results were significant, we conducted post-hoc multiple comparisons to examine which specific vaccine classes differed from the others on each dependent variable. We applied the Bonferroni adjustment to the level of significance and set the threshold at 0.05/3, or 0.017, to control for an inflated Type I error due to multiple testing. The predicted class did not differ across weeks, which means that, although tweets were retweeted more in earlier weeks than in later weeks, the pattern of retweets did not differ significantly between the classes. Pro-vaccine tweets garnered significantly more favorites than both anti-vaccine (Mean Difference [MD] = .245, p = .002) and neutral tweets (MD = .311, p < .001). Authors of neutral tweets had significantly more followers than authors of both pro-vaccine (MD = .692, p < .001) and anti-vaccine tweets (MD = .400, p < .001). Authors of anti-vaccine tweets had significantly more friends than those of neutral tweets (MD = .267, p < .001), but there was no difference between any other pair.
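The Bonferroni threshold used above follows directly from the number of pairwise comparisons among three classes. A minimal sketch, with made-up p-values used purely for illustration (they are not results from this study):

```python
from itertools import combinations

def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance level under the Bonferroni adjustment."""
    return alpha / n_comparisons

classes = ["anti", "neutral", "pro"]
pairs = list(combinations(classes, 2))            # three pairwise comparisons
adjusted_alpha = bonferroni_alpha(0.05, len(pairs))

# Hypothetical p-values, one per pair, for illustration only.
p_values = {
    ("anti", "neutral"): 0.30,
    ("anti", "pro"): 0.002,
    ("neutral", "pro"): 0.020,
}
significant = {pair: p < adjusted_alpha for pair, p in p_values.items()}
```

Note that a p-value of 0.020 would pass an unadjusted 0.05 threshold but not the adjusted one, which is the intended effect of the correction.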

E. Regression Analyses

Given the significant multi-comparison results, we proceeded to regression analyses to examine the impact of LIWC features alongside the previously mentioned variables. We constructed ordinary least squares regression models using IBM SPSS (version 27). Because our predictor variables (i.e., the probabilities of a tweet being anti-vaccine, pro-vaccine, and neutral) are highly correlated with each other, we constructed a separate model for each of the three probabilities to prevent multicollinearity. Specifically, a tweet that our classifier predicts as anti-vaccine is unlikely to be predicted as pro-vaccine (Pearson correlation coefficient [PCC] = −.330, p < .001) or neutral (PCC = −.605, p < .001). As shown in Table IV, we regressed the log-transformed number of retweets on the week, all 92 LIWC features, the log-transformed numbers of followers and friends, and the probability of the anti-vaccine (M1), pro-vaccine (M2), or neutral class (M3). Given space constraints, we report only those LIWC features that significantly influenced the number of retweets in any of the models. M1 in Table IV shows that the coefficient for the anti-vaccine class was positive and significant (β = .106, p = .03), whereas the coefficients for the pro-vaccine (β = −.02, p = .68) and neutral classes (β = −.08, p = .07) were not. Week was not a significant predictor of retweets, but friends and followers were, as expected. However, friends had negative and significant coefficients in all three models, which contradicts a common belief. Out of 92 LIWC features, only 14 significantly affected retweets. Notably, all of these features remained significant regardless of which class probability (anti-vaccine, pro-vaccine, or neutral) was included in the model. In addition, every pronoun subcategory (first, second, and third person singular and plural pronouns, as well as impersonal pronouns) negatively affected retweets, even though the coefficient for total pronouns was positive.
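The one-model-per-probability design can be sketched as below. This is an illustrative reconstruction under stated assumptions, not the SPSS procedure used in the study: `ols_fit` is a hypothetical helper that solves the normal equations in plain Python, only one control variable is included, and all data values are synthetic. Because the three class probabilities of a tweet sum to 1, entering all of them together with an intercept would make the predictors perfectly collinear, which is why each probability gets its own model here.

```python
def ols_fit(X, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via normal equations."""
    rows = [[1.0] + list(r) for r in X]  # prepend the intercept column
    p = len(rows[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        pv = A[col][col]
        A[col] = [v / pv for v in A[col]]
        for k in range(p):
            if k != col:
                factor = A[k][col]
                A[k] = [a - factor * b for a, b in zip(A[k], A[col])]
    return [A[i][p] for i in range(p)]

# Synthetic data: one control variable and three class probabilities per tweet.
log_followers = [1.0, 2.0, 3.0, 4.0, 5.0]
probs = {
    "anti":    [0.10, 0.90, 0.40, 0.70, 0.20],
    "pro":     [0.60, 0.05, 0.30, 0.10, 0.50],
    "neutral": [0.30, 0.05, 0.30, 0.20, 0.30],  # each row sums to 1 across classes
}
# Synthetic outcome generated from the anti-vaccine probability.
log_retweets = [0.5 + 0.2 * f + 0.1 * a
                for f, a in zip(log_followers, probs["anti"])]

# One model per class probability, mirroring the M1/M2/M3 layout.
models = {name: ols_fit(list(zip(log_followers, column)), log_retweets)
          for name, column in probs.items()}
```

In this toy setup, `models["anti"]` recovers the generating coefficients (0.5, 0.2, 0.1) because the outcome was built from that probability; the other two models fit the same outcome with a different class probability as the predictor of interest.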

TABLE IV.

Results of Regression on Retweets

| | M1. Anti-Vax | | | | M2. Pro-Vax | | | | M3. Neutral | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | B | Std. Error | t | sig | B | Std. Error | t | sig | B | Std. Error | t | sig |
| (Constant) | −0.06 | 0.25 | −0.23 | 0.82 | −0.04 | 0.25 | −0.17 | 0.86 | 0.03 | 0.26 | 0.10 | 0.92 |
| Week | −0.01 | 0.01 | −1.05 | 0.30 | −0.01 | 0.01 | −1.06 | 0.29 | −0.01 | 0.01 | −1.09 | 0.28 |
| WC | 0.02 | 0.00 | 5.47 | 0.00*** | 0.02 | 0.00 | 5.42 | 0.00*** | 0.02 | 0.00 | 5.45 | 0.00*** |
| Authentic | 0.00 | 0.00 | 2.16 | 0.03* | 0.00 | 0.00 | 2.18 | 0.03* | 0.00 | 0.00 | 2.11 | 0.04* |
| pronoun | 0.69 | 0.20 | 3.37 | 0.00*** | 0.70 | 0.20 | 3.45 | 0.00*** | 0.69 | 0.20 | 3.41 | 0.00*** |
| i | −0.70 | 0.20 | −3.43 | 0.00*** | −0.72 | 0.20 | −3.51 | 0.00*** | −0.71 | 0.20 | −3.47 | 0.00*** |
| we | −0.73 | 0.20 | −3.56 | 0.00*** | −0.74 | 0.20 | −3.64 | 0.00*** | −0.73 | 0.20 | −3.60 | 0.00*** |
| you | −0.69 | 0.20 | −3.39 | 0.00*** | −0.71 | 0.20 | −3.47 | 0.00*** | −0.70 | 0.20 | −3.43 | 0.00*** |
| shehe | −0.72 | 0.20 | −3.54 | 0.00*** | −0.74 | 0.20 | −3.63 | 0.00*** | −0.73 | 0.20 | −3.58 | 0.00*** |
| they | −0.72 | 0.20 | −3.51 | 0.00*** | −0.73 | 0.20 | −3.58 | 0.00*** | −0.72 | 0.20 | −3.54 | 0.00*** |
| ipron | −0.69 | 0.20 | −3.40 | 0.00*** | −0.71 | 0.20 | −3.48 | 0.00*** | −0.70 | 0.20 | −3.44 | 0.00*** |
| percept | 0.07 | 0.03 | 2.07 | 0.04* | 0.07 | 0.03 | 2.08 | 0.04* | 0.07 | 0.03 | 2.07 | 0.04* |
| hear | −0.10 | 0.04 | −2.74 | 0.01* | −0.10 | 0.04 | −2.78 | 0.01* | −0.10 | 0.04 | −2.73 | 0.01* |
| affiliation | 0.03 | 0.01 | 2.28 | 0.02* | 0.03 | 0.01 | 2.23 | 0.03* | 0.03 | 0.01 | 2.23 | 0.03* |
| Quote | 0.02 | 0.01 | 3.01 | 0.00*** | 0.02 | 0.01 | 3.01 | 0.00*** | 0.02 | 0.01 | 3.07 | 0.00*** |
| OtherP | −0.01 | 0.00 | −2.93 | 0.00*** | −0.01 | 0.00 | −2.90 | 0.00*** | −0.01 | 0.00 | −3.03 | 0.00*** |
| Followers | 0.18 | 0.01 | 25.10 | 0.00*** | 0.18 | 0.01 | 24.98 | 0.00*** | 0.18 | 0.01 | 25.06 | 0.00*** |
| Friends | −0.04 | 0.01 | −3.71 | 0.00*** | −0.04 | 0.01 | −3.60 | 0.00*** | −0.04 | 0.01 | −3.64 | 0.00*** |
| Prob_anti | 0.11 | 0.05 | 2.25 | 0.03* | - | - | - | - | - | - | - | - |
| Prob_pro | - | - | - | - | −0.02 | 0.05 | −0.41 | 0.68 | - | - | - | - |
| Prob_neutral | - | - | - | - | - | - | - | - | −0.08 | 0.04 | −1.84 | 0.07 |
| R-squared | 0.18 | | | | 0.18 | | | | 0.18 | | | |
| RMSE | 1.05 | | | | 1.05 | | | | 1.05 | | | |

Next, we regressed the log-transformed number of favorites on the same set of predictor variables (Table V). M4 and M5 in Table V show that, while the coefficient for anti-vaccine tweets was not significant, the coefficient for pro-vaccine tweets was positive and significant (β = 0.17, p = 0.01). The coefficient for the neutral class was negative and significant (β = −0.17, p < 0.01). These results suggest that pro-vaccine tweets garner favorites, anti-vaccine tweets do not, and neutral tweets even reduce the chance of garnering favorites. Next, as we expected, friends (again, in a negative direction) and followers had significant coefficients, whereas week did not. Finally, compared to the 14 LIWC features that significantly affected retweets, 22 significantly affected favorites. As in the results for retweets, 1st, 2nd, and 3rd person pronouns, such as “we,” “you,” “she/he,” “they,” and impersonal pronouns (e.g., “it,” “it’s,” “those”), had negative effects on favorites. These significant predictors remained largely consistent regardless of which vaccine class was accounted for in the model.
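Because both the outcome and the follower count enter these models in log-transformed form, the follower coefficients can be read as approximate elasticities. The sketch below is an interpretive aid rather than part of the original analysis; it assumes the reported B values are unstandardized coefficients and that the same logarithm base was used on both sides, in which case the base cancels out.

```python
def multiplicative_effect(beta, ratio):
    """Expected factor change in (1 + outcome) when (1 + predictor) is
    multiplied by `ratio`, holding other predictors fixed, in a log-log model."""
    return ratio ** beta

# Follower coefficients as reported in Tables IV and V.
effect_on_retweets = multiplicative_effect(0.18, 2.0)
effect_on_favorites = multiplicative_effect(0.29, 2.0)
```

Under these assumptions, doubling (1 + followers) corresponds to multiplying (1 + favorites) by about 1.22 and (1 + retweets) by about 1.13, which illustrates why follower count is the dominant predictor in both tables.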

TABLE V.

Results of Regression on Favorites

| | M4. Anti-Vax | | | | M5. Pro-Vax | | | | M6. Neutral | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | B | Std. Error | t | sig | B | Std. Error | t | sig | B | Std. Error | t | sig |
| (Constant) | −0.25 | 0.35 | −0.73 | 0.47 | −0.25 | 0.35 | −0.73 | 0.47 | −0.10 | 0.35 | −0.28 | 0.78 |
| Week | −0.02 | 0.02 | −1.37 | 0.17 | −0.03 | 0.02 | −1.44 | 0.15 | −0.03 | 0.02 | −1.40 | 0.16 |
| WC | 0.03 | 0.01 | 5.52 | 0.00*** | 0.03 | 0.01 | 5.53 | 0.00*** | 0.03 | 0.01 | 5.59 | 0.00*** |
| Authentic | 0.01 | 0.00 | 3.25 | 0.00*** | 0.01 | 0.00 | 3.19 | 0.00*** | 0.01 | 0.00 | 3.16 | 0.00*** |
| WPS | −0.01 | 0.00 | −2.86 | 0.00*** | −0.01 | 0.00 | −2.77 | 0.01* | −0.01 | 0.00 | −2.81 | 0.01* |
| Dic | −0.01 | 0.01 | −1.91 | 0.06 | −0.01 | 0.01 | −2.08 | 0.04* | −0.01 | 0.01 | −2.11 | 0.04* |
| function | 0.02 | 0.01 | 2.11 | 0.04* | 0.02 | 0.01 | 2.09 | 0.04* | 0.02 | 0.01 | 2.03 | 0.04* |
| pronoun | 0.91 | 0.28 | 3.26 | 0.00*** | 0.91 | 0.28 | 3.29 | 0.00*** | 0.89 | 0.28 | 3.20 | 0.00*** |
| Differen | −0.88 | 0.28 | −3.18 | 0.00*** | −0.89 | 0.28 | −3.22 | 0.00*** | −0.87 | 0.28 | −3.12 | 0.00*** |
| we | −0.91 | 0.28 | −3.28 | 0.00*** | −0.92 | 0.28 | −3.32 | 0.00*** | −0.89 | 0.28 | −3.21 | 0.00*** |
| you | −0.91 | 0.28 | −3.26 | 0.00*** | −0.91 | 0.28 | −3.30 | 0.00*** | −0.89 | 0.28 | −3.19 | 0.00*** |
| shehe | −0.92 | 0.28 | −3.31 | 0.00*** | −0.93 | 0.28 | −3.34 | 0.00*** | −0.90 | 0.28 | −3.24 | 0.00*** |
| they | −0.93 | 0.28 | −3.33 | 0.00*** | −0.93 | 0.28 | −3.36 | 0.00*** | −0.91 | 0.28 | −3.27 | 0.00*** |
| ipron | −0.91 | 0.28 | −3.29 | 0.00*** | −0.92 | 0.28 | −3.32 | 0.00*** | −0.89 | 0.28 | −3.22 | 0.00*** |
| anger | 0.05 | 0.02 | 2.48 | 0.01* | 0.05 | 0.02 | 2.44 | 0.02* | 0.05 | 0.02 | 2.41 | 0.02* |
| discrep | 0.03 | 0.01 | 2.07 | 0.04* | 0.03 | 0.01 | 2.03 | 0.04* | 0.03 | 0.01 | 2.04 | 0.04* |
| hear | −0.12 | 0.05 | −2.50 | 0.01* | −0.12 | 0.05 | −2.46 | 0.01* | −0.12 | 0.05 | −2.43 | 0.02* |
| focuspast | 0.02 | 0.01 | 2.32 | 0.02* | 0.02 | 0.01 | 2.39 | 0.02* | 0.02 | 0.01 | 2.42 | 0.02* |
| filler | −0.28 | 0.12 | −2.30 | 0.02* | −0.28 | 0.12 | −2.26 | 0.02* | −0.28 | 0.12 | −2.24 | 0.03* |
| Period | 0.01 | 0.00 | 2.07 | 0.04* | 0.01 | 0.00 | 2.08 | 0.04* | 0.01 | 0.00 | 1.90 | 0.06 |
| Exclam | 0.02 | 0.01 | 2.61 | 0.01* | 0.02 | 0.01 | 2.51 | 0.01* | 0.02 | 0.01 | 2.45 | 0.01* |
| Dash | −0.02 | 0.01 | −2.04 | 0.04* | −0.02 | 0.01 | −2.09 | 0.04* | −0.02 | 0.01 | −1.98 | 0.05 |
| Quote | 0.02 | 0.01 | 2.61 | 0.01* | 0.02 | 0.01 | 2.72 | 0.01* | 0.02 | 0.01 | 2.68 | 0.01* |
| OtherP | −0.01 | 0.00 | −2.56 | 0.01* | −0.01 | 0.00 | −2.74 | 0.01* | −0.01 | 0.00 | −2.74 | 0.01* |
| Followers | 0.29 | 0.01 | 30.12 | 0.00*** | 0.29 | 0.01 | 30.22 | 0.00*** | 0.29 | 0.01 | 30.38 | 0.00*** |
| Friends | −0.04 | 0.01 | −2.52 | 0.01* | −0.04 | 0.01 | −2.44 | 0.02* | −0.04 | 0.01 | −2.61 | 0.01* |
| Prob-Anti | 0.01 | 0.07 | 0.07 | 0.94 | - | - | - | - | - | - | - | - |
| Prob-Pro | - | - | - | - | 0.17 | 0.06 | 2.79 | 0.01* | - | - | - | - |
| Prob-neutral | - | - | - | - | - | - | - | - | −0.17 | 0.06 | −2.98 | 0.00*** |
| R-squared | 0.249 | | | | 0.25 | | | | 0.25 | | | |
| RMSE | 1.441 | | | | 1.439 | | | | 1.439 | | | |

F. Comparisons of LIWC Feature Uses across Vaccine Classes

Given the significant effects of 14 LIWC features on retweets and 22 on favorites, we conducted three-group comparisons to examine whether any group used each of these features significantly more than the others (Table VI). As in our previous multi-group comparisons, we applied the Bonferroni correction to control the inflated Type I error by dividing the .05 significance level by the number of group comparisons (3), resulting in an adjusted threshold of 0.017.

TABLE VI.

Multiple Comparisons of LIWC Feature Use

| Category | Anti | Neutral | Pro | Group Difference |
|---|---|---|---|---|
| Word count | 20.97 | 20.99 | 21.03 | No significant differences |
| Summary Language Variables | | | | |
| Authentic | 21.05 | 17.54 | 25.63 | Pro vs. Anti: MD = 4.58, p < .001 |
| | | | | Neu. vs. Pro: MD = −8.08, p < .001 |
| | | | | Neu. vs. Anti: MD = −3.50, p = .01 |
| Words per sentence | 15.16 | 16.26 | 14.57 | Neu. vs. Pro: MD = 1.70, p < .001 |
| | | | | Neu. vs. Anti: MD = 1.10, p < .001 |
| Dictionary words | 62.09 | 48.45 | 62.84 | Neu. vs. Pro: MD = −14.39, p < .001 |
| | | | | Neu. vs. Anti: MD = −13.64, p < .001 |
| Linguistic Dimensions | | | | |
| Tot. function words | 34.42 | 24.23 | 34.38 | Neu. vs. Pro: MD = −10.15, p < .001 |
| | | | | Neu. vs. Anti: MD = −10.19, p < .001 |
| Total pronouns | 7.58 | 4.07 | 8.60 | Pro vs. Anti: MD = 1.02, p < .001 |
| | | | | Neu. vs. Pro: MD = −4.53, p < .001 |
| | | | | Neu. vs. Anti: MD = −3.51, p < .001 |
| 1st person singular | 1.25 | 0.64 | 2.15 | Pro vs. Anti: MD = .91, p < .001 |
| | | | | Neu. vs. Pro: MD = −1.51, p < .001 |
| | | | | Neu. vs. Anti: MD = −.60, p < .001 |
| 1st person plural | 0.47 | 0.47 | 0.98 | Pro vs. Anti: MD = .52, p < .001 |
| | | | | Neu. vs. Pro: MD = −.52, p < .001 |
| 2nd person | 1.13 | 0.53 | 1.65 | Pro vs. Anti: MD = .52, p < .001 |
| | | | | Neu. vs. Pro: MD = −1.13, p < .001 |
| | | | | Neu. vs. Anti: MD = −.61, p < .001 |
| 3rd person singular | 0.30 | 0.20 | 0.37 | No significant differences |
| 3rd person plural | 0.91 | 0.31 | 0.56 | Pro vs. Anti: MD = −.35, p < .001 |
| | | | | Neu. vs. Pro: MD = −.24, p < .001 |
| | | | | Neu. vs. Anti: MD = −.60, p < .001 |
| Impersonal pronouns | 3.51 | 1.92 | 2.89 | Pro vs. Anti: MD = −.62, p = .01 |
| | | | | Neu. vs. Pro: MD = −.97, p < .001 |
| | | | | Neu. vs. Anti: MD = −1.59, p < .001 |
| Anger | 0.91 | 0.25 | 0.64 | Neu. vs. Pro: MD = −.40, p < .001 |
| | | | | Neu. vs. Anti: MD = −.67, p < .001 |
| Discrepancy | 1.25 | 0.67 | 1.17 | Neu. vs. Pro: MD = −.50, p < .001 |
| | | | | Neu. vs. Anti: MD = −.58, p < .001 |
| Perceptual processes | 1.12 | 1.03 | 1.02 | No significant differences |
| Hear | 0.44 | 0.46 | 0.37 | No significant differences |
| Affiliation | 1.05 | 1.05 | 1.99 | Pro vs. Anti: MD = .94, p < .001 |
| | | | | Neu. vs. Pro: MD = −.94, p < .001 |
| Past focus | 1.93 | 1.34 | 2.11 | Neu. vs. Pro: MD = −.77, p < .001 |
| | | | | Neu. vs. Anti: MD = −.58, p < .001 |
| Fillers | 0.00 | 0.01 | 0.00 | No significant differences |
| Punctuations | | | | |
| Period | 10.76 | 9.64 | 10.02 | Neu. vs. Anti: MD = −1.12, p = .002 |
| Exclamation | 0.86 | 0.52 | 1.10 | Neu. vs. Pro: MD = −.59, p < .001 |
| Dash | 0.96 | 1.79 | 1.15 | Neu. vs. Pro: MD = .65, p < .001 |
| | | | | Neu. vs. Anti: MD = .83, p < .001 |
| Quote | 1.09 | 1.00 | 0.57 | Pro vs. Anti: MD = −.52, p < .001 |
| | | | | Neu. vs. Pro: MD = .42, p < .001 |
| Other Punctuation | 9.12 | 12.52 | 9.95 | Neu. vs. Pro: MD = 2.58, p < .001 |
| | | | | Neu. vs. Anti: MD = 3.40, p < .001 |

Our comparisons between anti- and pro-vaccine tweets show that pro-vaccine tweets contained significantly more 1st person (singular and plural) and 2nd person pronouns than anti-vaccine tweets, whereas anti-vaccine tweets contained more 3rd person plural and impersonal pronouns (Table VI). Pro-vaccine tweets also included more exclamation marks than neutral tweets. These results are aligned with the prior finding that pro-vaxxers accuse, mock, and condemn anti-vaxxers derogatorily for being unintelligent, believing in pseudo-science, and spreading mis/disinformation [11]. An example in our data set is “You ignorant antivaxxers!!!”. However, as our regression results show, these pronouns had a negative impact on garnering retweets and favorites. Moreover, pro-vaccine tweets used affiliation words (e.g., ally, friend, society) more than anti-vaccine tweets, which is again aligned with the prior finding that pro-vaxxers’ primary argument is the preventive benefit of vaccines in creating herd immunity for the larger society [9]. In addition, pro-vaccine tweets contained more authentic features than anti-vaccine tweets. Authenticity in LIWC refers to writing that is honest and personal in nature [29], which may explain why pro-vaccine tweets garnered more favorites than anti-vaccine tweets.

In contrast, anti-vaccine tweets had more quotes than pro-vaccine counterparts, and quotes had a significant and positive impact on garnering retweets and favorites. Contrary to the prior finding that anti-vaxxers make emotional appeals [7], there was no significant difference in expressed anger between pro- and anti-vaccine tweets. We believe that anti-vaccine groups use quotes more, perhaps in an attempt to make their claims appear more legitimate and credible and thus get retweeted.

VI. Discussion

A. Summary of the Findings

General findings:

Out of 120,115,096 tweets collected between November 15 and December 15, 2021, we identified 4,668,990 as vaccine-related. Of these vaccine-related tweets, we randomly selected 1.1% (51,360). Of these 51,360 tweets, only 4,047 original tweets had been retweeted at least once by late March 2022.

Out of these 4,047 tweets, nearly half (2,006) have neutral perspectives about vaccines, while the remaining half contains more pro-vaccine tweets (1,104) than anti-vaccine counterparts (937).

A two-stage framework using a fine-tuned BERT classifier outperforms conventional ML methods such as logistic regression, gradient boosting trees, and LSTM in terms of its accuracy in classifying vaccine-relevant tweets and their three perspectives.

In the regression models, the probability of a tweet being anti-vaccine was a significant positive predictor of retweets, whereas the pro-vaccine and neutral probabilities were not. Pro-vaccine tweets, in turn, garnered significantly more favorites than anti-vaccine counterparts.

The number of friends had significant and negative effects on both retweets and favorites. These results suggest that the number of followers is a better indicator of propagation for Twitter than that of friends, which may be more appropriate for other networks, such as Facebook.

Findings for Addressing Research Objective 1:

Out of 92 LIWC features, only 14 and 22 had significant effects on garnering retweets and favorites, respectively.

About half of these features had positive effects on retweets and favorites (i.e., facilitators of propagation), but the remaining half had negative effects (i.e., inhibitors of propagation).

Different sets of LIWC features garnered retweets and favorites, although the two sets overlap. Features that facilitated favorites include being “authentic,” expressing “anger” (e.g., hate, kill, and annoyed), highlighting “discrepancy” (e.g., should and would), focusing on the “past” (e.g., ago, did, and talked), and quoting others. The features that facilitated only retweets, not favorites, are “affiliation” (e.g., ally, friend, and social) and “perception” (e.g., look, heard, and feeling).

The same set of LIWC features had significantly negative effects on garnering both retweets and favorites. These features center on pronouns, such as 1st, 2nd, and 3rd person pronouns.

In sum, we have identified two idiosyncratic sets of features that facilitate or inhibit retweets and favorites.

Findings for Addressing Research Objective 2:

Compared to anti-vaccine tweets, pro-vaccine tweets contained more 1st person (singular and plural) and 2nd person pronouns, which negatively affected both retweets and favorites. These results are aligned with the prior finding that many pro-vaccine messages mock and attack anti-vaxxers [11]. However, our results suggest that such attacks do not help pro-vaxxers disseminate their messages to broader audiences.

Pro-vaccine tweets use authentic linguistic features that may explain why they garner more favorites than anti-vaccine tweets.

Anti-vaccine tweets use quotes more than pro-vaccine tweets, and quotes have a significant and positive impact on both retweets and favorites. Anti-vaxxers presumably quote other sources in an attempt to make their content appear credible and objective.

In sum, these findings enhance our understanding of why anti-vaccine rhetoric is propagated rapidly via social media compared to pro-vaccine narratives.

B. Limitations and Suggestions for Future Research

Like any other research, this study has limitations. Although our binary classification accuracy is over 97%, which is among the highest in the literature, our ternary classification has room for improvement. Nevertheless, we attempted a more complicated, higher-order classification task that categorizes tweets into three perspectives about vaccines and achieved an accuracy rate above 80%. In addition, our training set is still on the smaller side (>10,000) and needs to be updated and expanded on a regular basis. Lastly, we used only the LIWC text analysis tool; future researchers may consider qualitative content analyses for a deeper understanding of vaccine discussion on social media.

Acknowledgment

Research reported in this publication was supported by the National Library Of Medicine of the National Institutes of Health under Award Number R21LM013638. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Contributor Information

Young Anna Argyris, Dept of Media and Information, Michigan State University, East Lansing, MI.

Nan Zhang, Dept of Advertising and Public Relations, Michigan State University, East Lansing, MI.

Bidhan Bashyal, Dept of Computer Science and Engineering, Michigan State University, East Lansing, MI.

Pang-Ning Tan, Dept of Computer Science and Engineering, Michigan State University, East Lansing, MI.

References

- [1] Ortiz RR, Smith A, and Coyne-Beasley T, “A systematic literature review to examine the potential for social media to impact HPV vaccine uptake and awareness, knowledge, and attitudes about HPV and HPV vaccination,” vol. 15, no. 7, pp. 1465–1475, 2019. [Online]. Available: https://www.tandfonline.com/doi/abs/10.1080/21645515.2019.1581543?journalCode=khvi20

- [2] Singh L, Bansal S, Bode L, Budak C, Chi G, Kawintiranon K, Padden C, Vanarsdall R, Vraga E, and Wang Y, “A first look at COVID-19 information and misinformation sharing on twitter,” 2020. [Online]. Available: https://arxiv.org/abs/2003.13907v1

- [3] Argyris YA, Kim Y, Roscizewski A, and Song W, “The mediating role of vaccine hesitancy between maternal engagement with anti- and pro-vaccine social media posts and adolescent HPV-vaccine uptake rates in the US: The perspective of loss aversion in emotion-laden decision circumstances,” vol. 282, p. 114043, 2021. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S0277953621003750

- [4] “See how vaccinations are going in your county and state,” 2020. [Online]. Available: https://www.nytimes.com/interactive/2020/us/covid-19-vaccine-doses.html

- [5] Johnson NF, Velasquez N, Restrepo NJ, Leahy R, Gabriel N, El Oud S, Zheng M, Manrique P, Wuchty S, and Lupu Y, “The online competition between pro- and anti-vaccination views,” vol. 582, pp. 230–233, 2020. [Online]. Available: http://www.nature.com/articles/s41586-020-2281-1

- [6] Argyris YA, Monu K, Tan P-N, Aarts C, Jiang F, and Wiseley KA, “Using machine learning to compare provaccine and antivaccine discourse among the public on social media: Algorithm development study,” JMIR Public Health Surveill, vol. 7, no. 6, p. e23105, Jun 2021.

- [7] Furini M and Menegoni G, “Public health and social media: Language analysis of vaccine conversations,” in 2018 international workshop on social sensing (SocialSens), 2018, pp. 50–55.

- [8] Memon SA, Tyagi A, Mortensen DR, and Carley KM, “Characterizing sociolinguistic variation in the competing vaccination communities,” 2020.

- [9] “Contents of japanese pro- and anti-HPV vaccination websites: A text mining analysis,” vol. 101, 2018.

- [10] Huangfu L, Mo Y, Zhang P, Zeng DD, and He S, “Covid-19 vaccine tweets after vaccine rollout: Sentiment–based topic modeling,” Journal of medical Internet research, vol. 24, no. 2, p. e31726, 2022.

- [11] Yousefinaghani S, Dara R, Mubareka S, Papadopoulos A, and Sharif S, “An analysis of covid-19 vaccine sentiments and opinions on twitter,” International Journal of Infectious Diseases, vol. 108, pp. 256–262, 2021.

- [12] Thelwall M, Kousha K, and Thelwall S, “Covid-19 vaccine hesitancy on english-language twitter,” Profesional de la información (EPI), vol. 30, no. 2, 2021.

- [13] Cui L and Lee D, “Coaid: Covid-19 healthcare misinformation dataset,” 2020. [Online]. Available: https://arxiv.org/abs/2006.00885

- [14] Abdelminaam DS, Ismail FH, Taha M, Taha A, Houssein EH, and Nabil AM, “Coaid-deep: An optimized intelligent framework for automated detecting covid-19 misleading information on twitter,” IEEE Access, vol. 9, pp. 27840–27867, 2021.

- [15] Hayawi K, Shahriar S, Serhani M, Taleb I, and Mathew S, “Anti-vax: a novel twitter dataset for covid-19 vaccine misinformation detection,” Public Health, vol. 203, 12 2021.

- [16] Devlin J, Chang M-W, Lee K, and Toutanova K, “Bert: Pre-training of deep bidirectional transformers for language understanding,” arXiv preprint arXiv:1810.04805, 2018.

- [17] Mønsted B and Lehmann S, “Algorithmic detection and analysis of vaccine-denialist sentiment clusters in social networks,” 2019. [Online]. Available: http://arxiv.org/abs/1905.12908

- [18] Roesslein J, “Tweepy: Twitter for python!” URL: https://github.com/tweepy/tweepy, 2020.

- [19] Tomeny TS, Vargo CJ, and El-Toukhy S, “Geographic and demographic correlates of autism-related anti-vaccine beliefs on twitter, 2009–15,” vol. 191, pp. 168–175, 2017. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0277953617305221

- [20] Rietz T and Maedche A, “Towards the design of an interactive machine learning system for qualitative coding,” 2020, medium: PDF Publisher: AIS eLibrary (AISeL). [Online]. Available: https://publikationen.bibliothek.kit.edu/1000124563

- [21] Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, and Duchesnay E, “Scikit-learn: Machine learning in Python,” Journal of Machine Learning Research, vol. 12, pp. 2825–2830, 2011.

- [22] Wolf T, Debut L, Sanh V, Chaumond J, Delangue C, Moi A, Cistac P, Rault T, Louf R, Funtowicz M, Davison J, Shleifer S, von Platen P, Ma C, Jernite Y, Plu J, Xu C, Scao TL, Gugger S, Drame M, Lhoest Q, and Rush AM, “Transformers: State-of-the-art natural language processing,” in Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. Online: Association for Computational Linguistics, Oct. 2020, pp. 38–45.

- [23] Rahman W, Hasan MK, Lee S, Zadeh A, Mao C, Morency L-P, and Hoque E, “Integrating multimodal information in large pretrained transformers,” in Proceedings of the conference. Association for Computational Linguistics. Meeting, vol. 2020, 2020, p. 2359.

- [24] Feng SY, Gangal V, Wei J, Chandar S, Vosoughi S, Mitamura T, and Hovy E, “A survey of data augmentation approaches for nlp,” arXiv preprint arXiv:2105.03075, 2021.

- [25] Tan P-N, Steinbach M, Karpatne A, and Kumar V, Introduction to data mining, 2nd ed. Pearson Education, 2016.

- [26] Hinton GE and Roweis S, “Stochastic neighbor embedding,” Advances in neural information processing systems, vol. 15, 2002.

- [27] Tausczik YR and Pennebaker JW, “The psychological meaning of words: Liwc and computerized text analysis methods,” Journal of language and social psychology, vol. 29, no. 1, pp. 24–54, 2010.

- [28] Faasse K, Chatman CJ, and Martin LR, “A comparison of language use in pro-and anti-vaccination comments in response to a high profile facebook post,” Vaccine, vol. 34, no. 47, pp. 5808–5814, 2016.

- [29] Mitra T, Counts S, and Pennebaker JW, “Understanding anti-vaccination attitudes in social media,” in Tenth International AAAI Conference on Web and Social Media, 2021, Conference Proceedings.

- [30] Shi J, Ghasiya P, and Sasahara K, “Psycho-linguistic differences among competing vaccination communities on social media,” arXiv preprint arXiv:2111.05237, 2021.

- [31] Stachowicz RA, “Linguistic fingerprints of pro-vaccination and anti-vaccination writings,” in Annual International Symposium on Information Management and Big Data. Springer, 2021, Conference Proceedings, pp. 314–324.

- [32] Wu W, Lyu H, and Luo J, “Characterizing discourse about covid-19 vaccines: A reddit version of the pandemic story,” Health Data Science, vol. 2021, 2021.