Structured Abstract

Purpose of review:

Artificial intelligence (AI) and deep learning have become important tools for extracting data from ophthalmic surgery to evaluate, teach, and aid the surgeon in all phases of surgical management. The purpose of this review is to highlight the growing intersection of computer vision, machine learning, and ophthalmic microsurgery.

Recent Findings:

Deep learning algorithms are being applied to help evaluate and teach surgical trainees. AI tools are improving real-time surgical instrument tracking and phase segmentation, and they are enhancing the safety of robotic-assisted vitreoretinal surgery.

Summary:

Similar to the strides made in the management of ophthalmic medical disease, AI will likely become an increasingly important part of the surgical management of ocular conditions. Machine learning applications will help push the boundaries of what surgeons can accomplish to improve patient outcomes.

Keywords: Artificial Intelligence, tool identification, robotic surgery, virtual reality, surgical education

Introduction

Artificial Intelligence (AI) has the potential to revolutionize the practice of modern medicine, and its capabilities are continually being realized. AI, and more specifically machine learning (ML), has sparked global interest in a new era of clinical diagnostics and management that can be applied across all fields of medicine. Important applications of machine learning in medicine include early detection of tuberculosis on chest radiographs,1 identification of cancerous skin lesions as accurately as dermatology experts,2 and prediction of the onset of sepsis in intensive care unit patients before clinical recognition.3

Ophthalmology is no stranger to ML, and the field lends itself well to ML algorithms because of the wide array of imaging modalities used in ophthalmic practice. Applications of AI in teleophthalmology may assist in the detection of retinopathy of prematurity, diabetic retinopathy, age-related macular degeneration (AMD), and glaucoma in remote and underserved populations.4 Several groups have demonstrated high accuracy for disease detection using deep learning programs in diabetic retinopathy5–9 and AMD,10–12 and there is a growing literature on ML capabilities in retinopathy of prematurity,13,14 glaucoma,15,16 and ocular oncology.17,18

A perhaps less discussed but equally exciting application of AI in medicine is its role in surgical care. Although surgery is generally considered highly effective in treating disease, there is significant room to enhance patient outcomes with AI-based preoperative, intraoperative, and postoperative tools and decision-making. AI-driven tools may become important components of preoperative planning by analyzing clinical imaging, patient characteristics, and timing of surgery to guide the surgical approach. They may provide the surgeon with personalized data on the potential risks and benefits for each particular eye and predict postoperative outcomes.

Intraoperative AI applications range from partial assistance (e.g., intraoperative image guidance) to full automation (e.g., autonomous robotic surgery), and major strides have been made at various points along this spectrum.19 Shademan et al. demonstrated in vivo autonomous robotic anastomosis of porcine intestine using the Smart Tissue Autonomous Robot (STAR), an AI-driven surgical robot that outperformed expert surgeons in various surgical tasks.20 Other robotic systems with variable levels of autonomy include the DaVinci robot (Intuitive Surgical, Sunnyvale, CA), which relies completely on human control; TSolution-One (THINK Surgical, Fremont, CA) for orthopedic knee replacements, which uses an automated bone cutter; and Mazor X (Mazor Robotics, Caesarea, Israel) for robotic-assisted spinal surgery.19

Ophthalmic microsurgery is technically complex and high-stakes, requiring highly precise movements in awake, non-paralyzed patients. Although it is generally successful in treating ocular disease, it has a steep learning curve because numerous physical, mental, and technical variables affect surgical consistency. Because intraocular surgery is performed almost exclusively under an operating microscope with a near-fixed surgical field, it generates large volumes of surgical video that can be used to train ML algorithms for intraoperative guidance and, eventually, robotic eye surgery.

This review highlights the major advancements in AI and ophthalmic surgery, ranging from surgical education and preoperative planning to instrument tracking and robotic-assisted microsurgery.

Ophthalmic Surgery Education

Given the steep learning curve of ophthalmic surgery and the continuing room for improvement in how novice surgeons acquire technical skill, novel AI-based and virtual reality (VR) technologies have the potential to disrupt ophthalmic surgical education. There is significant value in strengthening surgical training before trainees operate on live patients, as well as in enhancing feedback during and after surgery.21

Virtual Reality-based Simulators

The Coronavirus Disease 2019 (COVID-19) pandemic highlighted the importance of finding ways to acquire surgical skill outside the operating room, owing to a precipitous drop in elective case volume.22 One such avenue is the virtual reality-based simulator, the best known of which is the Eyesi Surgical Platform (Haag-Streit, Bern, Switzerland). These VR platforms have become a mainstay of ophthalmic surgical education, offering a controlled environment in which improvement on the simulator may translate to improvement in the operating room. One teaching institution found a significant reduction in cataract surgery complication rates after introducing an Eyesi simulator into the resident surgical curriculum,23 and another study demonstrated that novice surgeons who trained on the Eyesi simulator achieved improved scores in live surgery on a previously validated, task-specific cataract surgery assessment rubric (Objective Structured Assessment of Cataract Surgical Skill).24 VR-based surgical training may also have utility beyond residency education: Genentech incorporated VR headsets to teach surgeons how to place and refill the port delivery system, an implant currently being studied for continuous delivery of anti-vascular endothelial growth factor (anti-VEGF) therapy for retinal disease.25 AI models can also learn surgeon decision-making in VR environments, and researchers can apply these lessons to real-life scenarios. For example, if a certain maneuver performed by novice surgeons consistently leads to a complication during simulation, it could serve as a predictive marker, and the system could warn the learner in future sessions.
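The simulation-based warning idea can be sketched minimally, assuming the simulator can export each attempted maneuver together with a flag for whether it led to a complication. The log format, the maneuver IDs, and the `min_attempts` and `risk_threshold` parameters are all hypothetical. The sketch only counts observed complication rates. It is not a published model.

```python
from collections import defaultdict

def maneuver_warnings(attempts, min_attempts=20, risk_threshold=0.25):
    """Flag maneuvers whose observed complication rate in simulation is high.

    attempts: iterable of (maneuver_id, led_to_complication) pairs, e.g. parsed
    from hypothetical simulator logs. Maneuvers seen fewer than min_attempts
    times are skipped because their rate estimate is too noisy to act on.
    Returns {maneuver_id: complication_rate} for flagged maneuvers.
    """
    tallies = defaultdict(lambda: [0, 0])  # maneuver_id -> [complications, attempts]
    for maneuver_id, complicated in attempts:
        tallies[maneuver_id][1] += 1
        if complicated:
            tallies[maneuver_id][0] += 1

    flagged = {}
    for maneuver_id, (n_complications, n_attempts) in tallies.items():
        if n_attempts < min_attempts:
            continue
        rate = n_complications / n_attempts
        if rate >= risk_threshold:
            flagged[maneuver_id] = rate
    return flagged


# Toy log with made-up maneuver IDs: maneuver_A fails 8 of 20 times, maneuver_B 1 of 30.
log = ([("maneuver_A", True)] * 8 + [("maneuver_A", False)] * 12
       + [("maneuver_B", True)] + [("maneuver_B", False)] * 29)
print(maneuver_warnings(log))  # {'maneuver_A': 0.4}
```

A learning system would replace these raw counts with a model that conditions on the trainee's context. The minimum-attempts guard illustrates why such warnings need enough simulated cases before they are trusted.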

Intraoperative Guidance

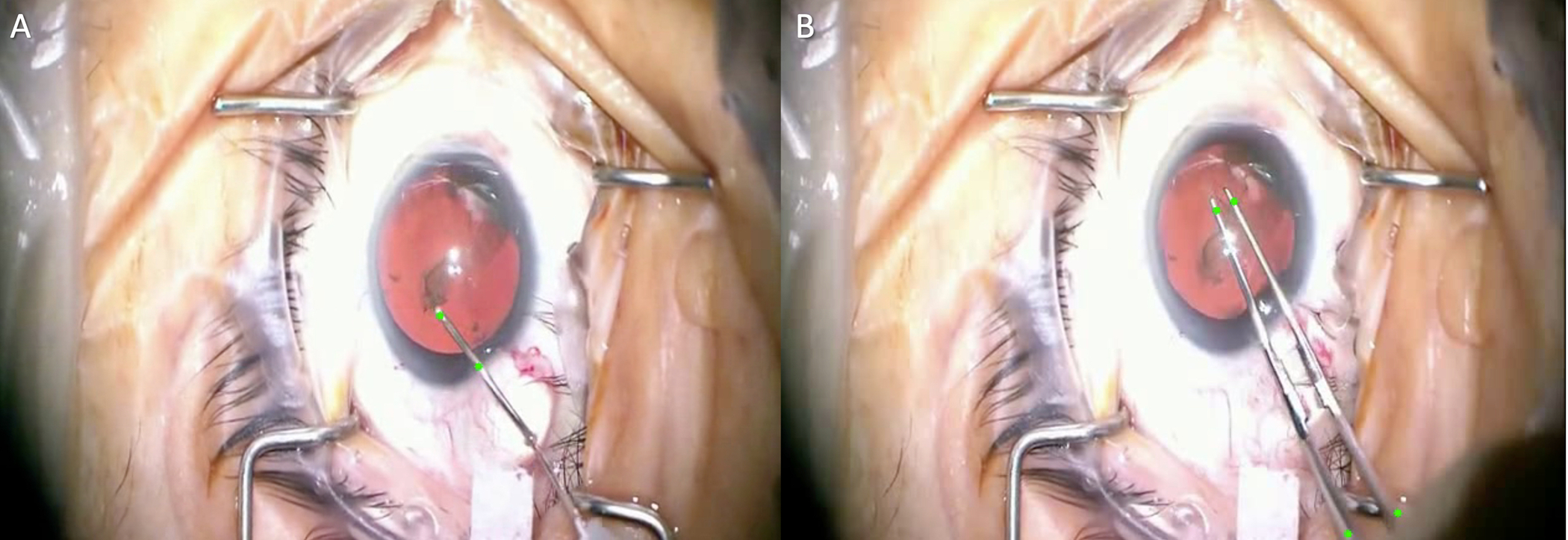

One exciting avenue for AI support tools is real-time surgical guidance for novice surgeons, in which a “smart” operating microscope incorporates augmented reality to provide step-by-step direction.20 Such a system would require rapid, low-latency identification of the current surgical step and its associated instrumentation. Morita et al. used a convolutional neural network (CNN), the Inception V3 model, to recognize the phase of cataract surgery in surgical video in real time with an average accuracy of 96.5%.26 One useful step-specific application for the novice cataract surgeon might be overlaying a pre-specified capsulorhexis size indicator on the anterior capsule through the microscope. Instrument tracking (Figure 1), coupled with intraoperative imaging such as microscope-integrated optical coherence tomography (OCT), could help the novice surgeon judge depth within the cataract nucleus during phacoemulsification or ascertain the distance between the forceps and the retina during a macular membrane peel. OCT integrated into the instrument tip can also be used for retinal surface detection and even tremor compensation.27 Real-time guidance could generate warnings to avoid complications or offer recommendations when the novice surgeon appears to be struggling. Further work on instrument tracking is described below.

Figure 1:

Instrument Tracking in Cataract Surgery. Tool trajectories are mapped using multiple green points on the instruments during capsulorhexis for both the cystotome needle (A) and Utrata forceps (B). Images courtesy of Shameema Sikder, M.D.
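Real-time phase recognition of the kind described by Morita et al. classifies individual video frames. Per-frame outputs can flicker between phases, which would make a guidance overlay unstable. The following is a generic post-processing sketch, assuming some per-frame classifier already produces phase labels. The phase names are hypothetical, and there is no claim that Morita et al. used this step. It applies a causal majority vote over a short window of recent frames.

```python
from collections import Counter, deque

def smooth_phases(frame_labels, window=3):
    """Causal majority-vote smoothing of per-frame surgical phase labels.

    Each output is the most common label among the current frame and the
    previous window - 1 frames, so it only uses past frames and can run live.
    Ties resolve to the label that appears earliest in the window.
    """
    recent = deque(maxlen=window)
    smoothed = []
    for label in frame_labels:
        recent.append(label)
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed

def count_transitions(labels):
    """Number of times the displayed phase changes between consecutive frames."""
    return sum(a != b for a, b in zip(labels, labels[1:]))


# Hypothetical per-frame classifier output with one spurious flicker at frame 4.
raw = ["rhexis"] * 4 + ["phaco"] + ["rhexis"] * 3 + ["phaco"] * 6
smoothed = smooth_phases(raw)
print(count_transitions(raw), count_transitions(smoothed))  # 3 1
```

The cost of smoothing is latency. In this toy sequence, the real phase change at frame 8 is reported one frame late, so the window size trades stability against the low-latency requirement noted above.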

Surgical Evaluation

Surgical educators recognize that novice surgeons need timely and objective feedback on their surgical performance, which has prompted the development of several surgical grading rubrics for cataract surgery and multiple subspecialty surgeries. These rubrics were intended to standardize the assessment of surgical skill acquisition; however, they can be time-intensive for the educator and are likely not completely objective when completed in real time in front of the learner. Automating these structured feedback tools may track a learning surgeon’s skill level more consistently. Kim et al. used deep neural networks to accurately predict whether the capsulorhexis in a surgical video was performed by an expert or a novice (as determined by a masked grader using an assessment rubric).28 The authors crowdsourced annotation of the intraoperative tool tip to map tip velocities as input to their deep neural network. Their work demonstrated a step-specific evaluation, but future research may extend this approach to all steps of cataract surgery. With grading rubrics now being developed for subspecialty surgeries, future work will likely apply phase segmentation to these procedures as well. Although a vitrectomy may involve more variables than an uncomplicated cataract surgery, researchers may focus on its salient portions, such as the internal limiting membrane peel during macular hole surgery. Such objective, video-based feedback may help establish competency-based training milestones rather than relying simply on a minimum number of surgical cases to meet graduation requirements.
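As an illustration of the velocity-mapping step, the sketch below turns annotated tool-tip positions into tip speeds. It assumes the annotations are per-frame pixel coordinates and that the frame rate is known. The summary features (path length, mean and maximum speed, duration) are illustrative assumptions. They are not the input representation or the network used by Kim et al.

```python
import numpy as np

def tip_kinematics(tip_xy, fps):
    """Per-interval tool-tip speed and simple summary features.

    tip_xy: (n_frames, 2) array of annotated tip positions in pixels (n_frames >= 2).
    fps: video frame rate in frames per second.
    """
    tip_xy = np.asarray(tip_xy, dtype=float)
    steps = np.diff(tip_xy, axis=0)           # displacement between consecutive frames
    step_len = np.linalg.norm(steps, axis=1)  # pixels moved per frame interval
    speed = step_len * fps                    # pixels per second
    features = {
        "path_length_px": float(step_len.sum()),
        "mean_speed_px_s": float(speed.mean()),
        "max_speed_px_s": float(speed.max()),
        "duration_s": (len(tip_xy) - 1) / fps,
    }
    return speed, features


speed, features = tip_kinematics([(0, 0), (3, 4), (6, 8), (6, 8)], fps=30)
print(features["path_length_px"], features["mean_speed_px_s"])  # 10.0 100.0
```

In a learned skill-assessment pipeline, the per-interval speed sequence itself (rather than these summary numbers) would more likely be fed to the network. The summaries are shown only because they are easy to check by hand.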

Cataract Surgery

Cataract surgery is the most commonly performed intraocular surgery, and case volumes are expected to increase as the population ages. AI technology has been studied for all phases of cataract care, including diagnosis and grading, preoperative planning, and surgical management.29

Preoperative Planning

Choosing the correct intraocular lens (IOL) power is one of the main components of preoperative planning for cataract surgery. Several IOL formulas exist to help the surgeon select an IOL for the best refractive outcome; however, a postoperative refraction within 0.50 D of emmetropia or slight myopia is achieved only approximately 70–80% of the time.30 Ladas et al. introduced an IOL “super formula” that combines the best-performing portions of existing formulas to help the surgeon select an appropriate IOL across both typical and atypical values of axial length, corneal power, and anterior chamber depth.31,32 The authors report that the latest version of the formula uses an AI-hybrid approach that incorporates postoperative outcomes to continually improve its predictions.32 Interest in new AI-driven IOL formulas has surged in the last few years, and existing IOL formulas have also been improved using artificial neural networks.33 Although not widely explored, ML may also prove useful in planning other surgeries. Feeding preoperative OCT and visual acuity data into an ML algorithm may help the clinician predict the rate of progression of an epiretinal membrane and decide on the optimal timing for surgery. Optimal forceps grasp points identified on preoperative OCT, such as rips in the internal limiting membrane, could be overlaid on the retinal surface during surgery to guide the novice surgeon.
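To make the "within 0.50 D" benchmark and the idea of learning from postoperative outcomes concrete, the following sketch computes prediction error as actual minus predicted spherical equivalent (SE). It then applies the simplest possible outcome-based refinement: shifting future predictions by the mean past error, which is similar in spirit to personalizing a lens constant. This is an assumption chosen for clarity and is explicitly not the Ladas AI-hybrid method. The numbers are illustrative, not patient data.

```python
import numpy as np

def fraction_within(predicted_se, actual_se, tolerance_d=0.50):
    """Share of eyes whose postoperative spherical equivalent (SE) lands within
    +/- tolerance_d diopters of the refraction the formula predicted."""
    error = np.asarray(actual_se, float) - np.asarray(predicted_se, float)
    return float(np.mean(np.abs(error) <= tolerance_d))

def fit_offset(past_predicted_se, past_actual_se):
    """Mean prediction error (actual - predicted) over previously operated eyes.
    Adding it to future predictions is a simple bias correction; it is not the
    Ladas AI-hybrid method."""
    return float(np.mean(np.asarray(past_actual_se, float)
                         - np.asarray(past_predicted_se, float)))


# Illustrative numbers only (not patient data).
past_pred = [-0.25, -0.50, 0.00, -0.25]
past_actual = [0.25, 0.00, 0.40, 0.35]
offset = fit_offset(past_pred, past_actual)   # about +0.50 D
corrected = np.asarray(past_pred) + offset
print(fraction_within(past_pred, past_actual))  # 0.75
print(fraction_within(corrected, past_actual))  # 1.0
```

The improvement here is measured on the same eyes used to fit the offset. A fair evaluation would fit on earlier cases and score on later ones, which is the setting an outcome-driven formula actually faces.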

Tool and Phase Identification

Video recordings of cataract surgery have become an ideal source of surgical data for step identification and instrument tracking. Initially, surgical tool analysis depended on external markers attached to the instrument; in laparoscopic surgery, for example, these markers were color-, optical-, or acoustic-based.34 In ophthalmic surgery, sensor-based motion analysis has been limited to corneal suturing, oculoplastics, or simulated environments.35

Current efforts in instrument tracking use computer vision-based methods with video recordings as the data source. Early algorithms applied motion features or spatiotemporal polynomials, whereas later methods used conditional random fields or hidden Markov models.36 Current methods apply deep learning with CNNs to recognize surgical instruments. Al Hajj et al. processed sequences of consecutive images with CNNs for instrument classification and reported an area under the receiver operating characteristic curve (AUROC) of 0.953.37 In 2017, the CATARACTS challenge was organized to evaluate tool annotation algorithms for cataract surgery.34 The dataset consists of 50 cataract surgery videos in which 21 surgical tools were manually annotated by two experts. Fourteen participating teams submitted solutions based on deep learning algorithms, and the winning solution achieved an AUROC of 0.987.34 Although the winning solution did not outperform the manual human grader, it came strikingly close, and the authors concluded that an automated solution would be feasible. When CNN outputs were fed to recurrent neural networks (RNNs) to take temporal relationships into account, researchers reported an AUROC of 0.996.38 Adding contextual information, such as a video stream of the surgical tray, could also improve tool identification.34,39
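The AUROC values quoted above summarize how well a detector ranks frames containing a tool above frames without it. A small, self-contained sketch of that computation follows, using the rank (Mann-Whitney) interpretation for a single tool with hypothetical scores. In a multi-tool setting it would be computed separately for each tool. How the cited studies aggregated per-tool scores is not reproduced here.

```python
def auroc(scores, labels):
    """Area under the ROC curve via its rank interpretation: the probability
    that a randomly chosen positive frame (tool present, label 1) receives a
    higher score than a randomly chosen negative frame (label 0). Ties count
    as half. Quadratic in the number of frames, which is fine for a sketch."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative frame")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical detector scores for one tool across five frames.
scores = [0.90, 0.80, 0.40, 0.35, 0.10]
labels = [1, 1, 0, 1, 0]
print(round(auroc(scores, labels), 3))  # 0.833
```

Five of the six positive/negative pairs are ranked correctly, giving 5/6. Because AUROC depends only on ranking, it does not reveal which decision threshold an intraoperative system should use.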

Vitreoretinal Surgery and Robotic-Assisted Surgery

Vitreoretinal surgery is a complex and technically challenging field of ophthalmic microsurgery that requires precise movements to manipulate extremely delicate tissue. Physiologic hand tremor has been shown to have a root mean square (RMS) amplitude of 50–200 microns.40 Seventy-five percent of the forces generated by maneuvers during retinal microsurgery measured less than 7.5 mN, and only about 20% of events at this level could be felt by the surgeon.41 Notably, this is close to the amount of force required to create a retinal tear during vitrectomy in live rabbits.42 Although surgeons perform current maneuvers successfully, robotic-assisted surgery may help reduce intraoperative adverse events such as iatrogenic retinal breaks and retinal detachments following macular surgery. Robotic surgery may also push the boundaries of what retinal surgeons can do, enabling procedures such as retinal vein cannulation for occlusive disease or delivery of chemotherapy into the feeder vessels of intraocular tumors.43
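For readers less familiar with the RMS figure, the sketch below computes the RMS amplitude of a one-dimensional tip displacement trace about its mean. It uses a synthetic 10 Hz sinusoid, which is an illustrative choice rather than measured tremor. For a pure sinusoid, RMS equals peak amplitude divided by √2, so a peak of about 141 µm corresponds to an RMS of about 100 µm. Real tremor analyses typically band-pass filter the signal first. This sketch only removes the mean and does not reproduce the method of Wells et al.

```python
import numpy as np

def rms_amplitude(displacement):
    """Root-mean-square amplitude of a 1-D tip displacement trace, taken about
    the trace mean so a steady offset does not count as tremor. Units follow
    the input (microns here)."""
    x = np.asarray(displacement, dtype=float)
    return float(np.sqrt(np.mean((x - x.mean()) ** 2)))


# Synthetic 10 Hz sinusoid with a 141.4 um peak, sampled at 1 kHz for 1 s.
t = np.arange(1000) / 1000.0
trace_um = 141.4 * np.sin(2 * np.pi * 10 * t)
print(round(rms_amplitude(trace_um)))  # 100, i.e. peak / sqrt(2)
```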

Visual Instrument Tracking

Instrument tracking in retinal microsurgery is difficult because instrument appearance varies widely with changes in illumination, motion blur, shadows, and the surgical field of view. Several groups have described computational techniques to overcome these challenges. Sznitman et al. placed instrument detection and tracking within a single framework to account for the instrument regularly entering and leaving the field of view.44 Alsheakhali et al. modeled the surgical instrument as a conditional random field in which each part of the instrument is detected separately.45 Roodaki et al. estimated the distance from the instrument tip to the retina using the instrument’s shadow in intraoperative OCT images.46 More recently, researchers have applied deep learning to instrument tracking in retinal surgery; Zhao et al. used a CNN with a spatial transformer network to track retinal surgical tools in real time without labels.47
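A minimal sketch of a classic constant-velocity alpha-beta tracker for a 2-D tool-tip position makes the detection-plus-tracking problem concrete. It is not the unified framework of Sznitman et al. It assumes an external, hypothetical per-frame detector that returns a tip position, or `None` when the tool is not found. The tracker coasts on its velocity estimate through short detection gaps and drops the track once the tool appears to have left the field of view. The gains and the `max_misses` value are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]

@dataclass
class AlphaBetaTracker:
    """Constant-velocity alpha-beta tracker for a 2-D tool-tip position.

    Coasts through up to max_misses consecutive frames without a detection,
    then drops the track and waits for a new detection to re-initialize.
    """
    alpha: float = 0.5   # position correction gain
    beta: float = 0.1    # velocity correction gain
    max_misses: int = 5
    pos: Optional[Point] = None
    vel: Point = (0.0, 0.0)
    misses: int = 0

    def update(self, detection: Optional[Point], dt: float = 1.0) -> Optional[Point]:
        if self.pos is None:
            # No active track: (re)initialize from the next available detection.
            if detection is not None:
                self.pos, self.vel, self.misses = detection, (0.0, 0.0), 0
            return self.pos
        # Predict the next position with a constant-velocity model.
        pred = (self.pos[0] + self.vel[0] * dt, self.pos[1] + self.vel[1] * dt)
        if detection is None:
            self.misses += 1
            if self.misses > self.max_misses:
                # Tool assumed to have left the field of view.
                self.pos, self.vel = None, (0.0, 0.0)
                return None
            self.pos = pred
            return pred
        self.misses = 0
        rx, ry = detection[0] - pred[0], detection[1] - pred[1]  # innovation
        self.pos = (pred[0] + self.alpha * rx, pred[1] + self.alpha * ry)
        self.vel = (self.vel[0] + self.beta / dt * rx, self.vel[1] + self.beta / dt * ry)
        return self.pos


tracker = AlphaBetaTracker(alpha=1.0, beta=1.0, max_misses=1)
detections = [(0, 0), (1, 0), (2, 0), None, None, (10, 5)]
path = [tracker.update(d) for d in detections]
# [(0, 0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), None, (10, 5)]
```

The fixed gains are a deliberate simplification. Appearance changes from illumination, blur, and shadows would show up as missed or noisy detections, which is why learned detectors and richer motion models are used in practice.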

Deep Learning for Robotic-Assisted Surgery

The DaVinci robot, commonly used in other surgical subspecialties, has been adapted for corneal suturing and pterygium surgery but is kinematically inadequate for intraocular surgery.48 Although robotic-assisted surgery has not been widely adopted in ophthalmology, several ophthalmology-specific prototypes are promising. The Intraocular Robotic Interventional Surgical System (IRISS) has a master controller and a manipulator with two independent arms that hold surgical instruments.43 The Johns Hopkins Steady-Hand Eye Robot is a cooperatively controlled robot in which the surgeon holds instruments that are attached to robotic arms. The robot aims to smooth the surgeon’s movements and also has force-sensing capabilities. The Preceyes Surgical System is also a robotic assistant in which the robotic manipulator holding the instrument is separate from the master controller, while the surgeon can still use an instrument such as a light pipe in the other hand, along with the standard operating microscope. This system was used in the first intraocular robotic surgery in a live human.48

The convergence of deep learning and ophthalmic robotic microsurgery is relatively recent, and AI will likely play an increasing role in enhancing the safety, efficacy, and reliability of robotic platforms. One important early application of AI is predicting surgeon movements. He et al. developed an active interventional control framework (AICF) that increases operative safety by actively intervening in the operation to avoid exerting excessive force on the sclera.49 An RNN was used to model scleral force, and the authors report that AICF may prevent undesired events by predicting the surgeon’s manipulations in advance. Further work is ongoing on AI-enhanced robots capable of adapting in real time, and early iterations will likely remain heavily dependent on human supervision.50
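The "predict, then intervene" logic behind such a framework can be sketched in a few lines. He et al. used an RNN to model scleral force. This sketch deliberately swaps in a much simpler predictor, a least-squares line through the most recent force samples extrapolated a few steps ahead. The `limit_mN` safety threshold, the horizon, and the fitting window are hypothetical placeholders, and the function returns only a yes/no decision rather than a control action.

```python
import numpy as np

def predict_force_mN(history_mN, horizon_steps=5, fit_window=10):
    """Linearly extrapolate recent scleral-force samples horizon_steps ahead.
    A simple stand-in for the RNN predictor described by He et al."""
    recent = np.asarray(history_mN[-fit_window:], dtype=float)
    if len(recent) < 2:
        return float(recent[-1]) if len(recent) else 0.0
    steps = np.arange(len(recent))
    slope, intercept = np.polyfit(steps, recent, 1)  # degree-1 fit: [slope, intercept]
    return float(intercept + slope * (len(recent) - 1 + horizon_steps))

def should_intervene(history_mN, limit_mN, horizon_steps=5):
    """True if the predicted force would exceed the (hypothetical) safety limit."""
    return predict_force_mN(history_mN, horizon_steps) > limit_mN


rising = [50, 55, 60, 65, 70, 75, 80, 85, 90, 95]   # illustrative samples, mN
print(round(predict_force_mN(rising)))               # 120
print(should_intervene(rising, limit_mN=100.0))      # True
print(should_intervene([60.0] * 10, limit_mN=100.0)) # False
```

The point of prediction is that the rising trace triggers an intervention while the measured force (95 mN) is still below the limit. A learned sequence model would aim to anticipate the surgeon's manipulations more accurately than a straight line.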

Future Implementations of AI in Retina Surgery

AI has a clear role in enhancing the safety and efficiency of retinal surgery to improve patient outcomes. Deep learning algorithms can provide intraoperative guidance as well as predict and act upon possible adverse events. For instance, a smart microscope could automatically detect intraoperative hemorrhage during repair of a diabetic tractional retinal detachment, raise the infusion pressure to tamponade the bleeding, and then slowly lower the pressure once the hemorrhage has ceased. Other possibilities include using intraoperative OCT to detect residual subretinal fluid during an air-fluid exchange in retinal detachment repair, or to detect and track residual perfluorocarbon bubbles on the retinal surface. With regard to vitreous cutter dynamics, an AI-driven system could automatically modulate the duty cycle of the vitreous cutter port depending on the mobility of the nearby retina. The video display of a heads-up surgery system could automatically adjust white balance and color filters to enhance membrane staining during macular surgery. The possibilities for “smart” instruments and intraoperative guidance to augment the surgeon’s capabilities are numerous.
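The hemorrhage scenario amounts to a simple feedback rule: raise pressure on detection, then ramp down slowly. The sketch below is a hypothetical rule-based controller. It assumes a separate video classifier supplies a per-step `hemorrhage_detected` flag. The pressure values (in mmHg), the ramp rate, and the class itself are illustrative placeholders, not clinical settings or any device's interface.

```python
from dataclasses import dataclass, field

@dataclass
class InfusionController:
    """Hypothetical rule-based infusion controller for the hemorrhage scenario.

    All pressures are illustrative placeholders in mmHg, not clinical settings.
    """
    baseline_mmHg: float = 25.0
    tamponade_mmHg: float = 45.0
    ramp_down_mmHg: float = 2.0   # maximum decrease per control step
    pressure_mmHg: float = field(init=False, default=0.0)

    def __post_init__(self):
        self.pressure_mmHg = self.baseline_mmHg

    def step(self, hemorrhage_detected: bool) -> float:
        if hemorrhage_detected:
            # Raise pressure immediately to tamponade the bleeding.
            self.pressure_mmHg = self.tamponade_mmHg
        else:
            # Once bleeding stops, lower pressure gradually toward baseline.
            self.pressure_mmHg = max(self.baseline_mmHg,
                                     self.pressure_mmHg - self.ramp_down_mmHg)
        return self.pressure_mmHg


ctrl = InfusionController()
readings = [False, True, True, False, False, False]
print([ctrl.step(r) for r in readings])  # [25.0, 45.0, 45.0, 43.0, 41.0, 39.0]
```

The asymmetry (an immediate rise, a gradual fall) mirrors the sequence described in the text. Any real system would also need surgeon override and hard safety limits, which this sketch omits.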

Conclusion

Analogous to the remarkable achievements of AI in ophthalmic medical disease, AI-driven tools in surgery are advancing rapidly. Novel applications of machine learning will likely continue to emerge across all components of the surgical management of ocular disease to aid the clinician. Using deep learning in robotic surgery will help augment the surgeon’s capabilities to continue providing advanced surgical care for our patients.

Key Points:

Deep learning algorithms are increasingly utilized for capturing data from ophthalmic surgical videos.

Automated identification of surgical instruments and phase segmentation will aid in surgical education, intraoperative guidance, and robot-assisted surgery.

Machine learning has expanded the possibilities for how surgical instruments and intraoperative tools can improve surgical outcomes.

Acknowledgements:

We would like to acknowledge Tae Soo Kim, Dr. S. Swaroop Vedula, and Dr. Shameema Sikder for contributing to this paper and for their brilliant work in the field.

Financial Support and Sponsorship: This work was partially funded by Research to Prevent Blindness and NIH grant P30-EY026877

Footnotes

Conflict of Interest Disclosures: None

References:

- 1. Lakhani P, Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology. 2017;284(2):574–582.

- 2. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–118.

- 3. Nemati S, Holder A, Razmi F, Stanley MD, Clifford GD, Buchman TG. An interpretable machine learning model for accurate prediction of sepsis in the ICU. Crit Care Med. 2018;46(4):547–553.

- 4. Rathi S, Tsui E, Mehta N, Zahid S, Schuman JS. The current state of teleophthalmology in the United States. Ophthalmology. 2017;124(12):1729–1734.

- 5. Abràmoff MD, Lou Y, Erginay A, et al. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Invest Ophthalmol Vis Sci. 2016;57(13):5200–5206.

- 6. Gargeya R, Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124(7):962–969.

- 7. Takahashi H, Tampo H, Arai Y, Inoue Y, Kawashima H. Applying artificial intelligence to disease staging: Deep learning for improved staging of diabetic retinopathy. PLoS One. 2017;12(6):e0179790.

- 8. Ting DSW, Cheung CY-L, Lim G, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318(22):2211.

- 9. Ludwig CA, Perera C, Myung D, et al. Automatic identification of referral-warranted diabetic retinopathy using deep learning on mobile phone images. Transl Vis Sci Technol. 2020;9(2):60.

- 10. Venhuizen FG, van Ginneken B, van Asten F, et al. Automated staging of age-related macular degeneration using optical coherence tomography. Invest Ophthalmol Vis Sci. 2017;58(4):2318–2328.

- 11. Treder M, Lauermann JL, Eter N. Automated detection of exudative age-related macular degeneration in spectral domain optical coherence tomography using deep learning. Graefes Arch Clin Exp Ophthalmol. 2018;256(2):259–265.

- 12. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 2017;135(11):1170–1176.

- 13. Ataer-Cansizoglu E, Bolon-Canedo V, Campbell JP, et al. Computer-based image analysis for plus disease diagnosis in retinopathy of prematurity: performance of the “i-ROP” system and image features associated with expert diagnosis. Transl Vis Sci Technol. 2015;4(6):5.

- 14. Bolón-Canedo V, Ataer-Cansizoglu E, Erdogmus D, et al. Dealing with inter-expert variability in retinopathy of prematurity: A machine learning approach. Comput Methods Programs Biomed. 2015;122(1):1–15.

- 15. Li Z, He Y, Keel S, Meng W, Chang RT, He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125(8):1199–1206.

- 16. Kim SJ, Cho KJ, Oh S. Development of machine learning models for diagnosis of glaucoma. PLoS One. 2017;12(5):e0177726.

- 17. Kaiserman I, Rosner M, Pe’er J. Forecasting the prognosis of choroidal melanoma with an artificial neural network. Ophthalmology. 2005;112(9):1608.

- 18. Damato B, Eleuteri A, Fisher AC, Coupland SE, Taktak AFG. Artificial neural networks estimating survival probability after treatment of choroidal melanoma. Ophthalmology. 2008;115(9):1598–1607.

- 19. Panesar S, Cagle Y, Chander D, Morey J, Fernandez-Miranda J, Kliot M. Artificial intelligence and the future of surgical robotics. Annals of Surgery. 2019;270(2):223–226.

- 20. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PCW. Supervised autonomous robotic soft tissue surgery. Sci Transl Med. 2016;8(337):337ra64.

- 21. Bakshi SK, Lin SR, Ting DSW, Chiang MF, Chodosh J. The era of artificial intelligence and virtual reality: transforming surgical education in ophthalmology. Br J Ophthalmol. Published online August 14, 2020. * An excellent review of how artificial intelligence is aiding surgical evaluation and education tools in ophthalmology.

- 22. Mishra K, Boland MV, Woreta FA. Incorporating a virtual curriculum into ophthalmology education in the coronavirus disease-2019 era. Curr Opin Ophthalmol. 2020;31(5):380–385.

- 23. Staropoli PC, Gregori NZ, Junk AK, et al. Surgical simulation training reduces intraoperative cataract surgery complications among residents. Simul Healthc. 2018;13(1):11–15.

- 24. Thomsen ASS, Bach-Holm D, Kjærbo H, et al. Operating room performance improves after proficiency-based virtual reality cataract surgery training. Ophthalmology. 2017;124(4):524–531.

- 25. Castellanos S. Genentech uses virtual reality to train eye surgeons. The Wall Street Journal. 6 Feb 2019. Available at: https://www.wsj.com/articles/genentech-uses-virtualreality-to-train-eye-surgeons-11549495028

- 26. Morita S, Tabuchi H, Masumoto H, Yamauchi T, Kamiura N. Real-time extraction of important surgical phases in cataract surgery videos. Sci Rep. 2019;9(1):16590.

- 27. Cheon GW, Huang Y, Cha J, Gehlbach PL, Kang JU. Accurate real-time depth control for CP-SSOCT distal sensor based handheld microsurgery tools. Biomed Opt Express. 2015;6(5):1942–1953.

- 28. Kim TS, O’Brien M, Zafar S, Hager GD, Sikder S, Vedula SS. Objective assessment of intraoperative technical skill in capsulorhexis using videos of cataract surgery. Int J Comput Assist Radiol Surg. 2019;14(6):1097–1105. ** An important article demonstrating objective evaluation of cataract surgery.

- 29. Ting DSJ, Foo VH, Yang LWY, et al. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br J Ophthalmol. 2021;105(2):158–168. * An important review highlighting artificial intelligence in anterior segment diseases, from cataract diagnosis to intraocular lens selection.

- 30. Aristodemou P, Knox Cartwright NE, Sparrow JM, Johnston RL. Formula choice: Hoffer Q, Holladay 1, or SRK/T and refractive outcomes in 8108 eyes after cataract surgery with biometry by partial coherence interferometry. J Cataract Refract Surg. 2011;37(1):63–71.

- 31. Ladas JG, Siddiqui AA, Devgan U, Jun AS. A 3-D “super surface” combining modern intraocular lens formulas to generate a “super formula” and maximize accuracy. JAMA Ophthalmol. 2015;133(12):1431.

- 32. Siddiqui AA, Ladas JG, Lee JK. Artificial intelligence in cornea, refractive, and cataract surgery. Current Opinion in Ophthalmology. 2020;31(4):253–260.

- 33. Ladas J, Ladas D, Lin SR, Devgan U, Siddiqui AA, Jun AS. Improvement of multiple generations of intraocular lens calculation formulae with a novel approach using artificial intelligence. Transl Vis Sci Technol. 2021;10(3):7.

- 34. Al Hajj H, Lamard M, Conze P-H, et al. CATARACTS: Challenge on automatic tool annotation for cataRACT surgery. Medical Image Analysis. 2019;52:24–41.

- 35. Alnafisee N, Zafar S, Vedula SS, Sikder S. Current methods for assessing technical skill in cataract surgery. J Cataract Refract Surg. 2021;47(2):256–264.

- 36. Sokolova N, Schoeffmann K, Taschwer M, Putzgruber-Adamitsch D, El-Shabrawi Y. Evaluating the generalization performance of instrument classification in cataract surgery videos. In: Ro Y, et al., eds. MultiMedia Modeling. MMM 2020. Lecture Notes in Computer Science, vol 11962. Springer, Cham; 2020. 10.1007/978-3-030-37734-2_51

- 37. Al Hajj H, Lamard M, Charriere K, Cochener B, Quellec G. Surgical tool detection in cataract surgery videos through multi-image fusion inside a convolutional neural network. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; 2017:2002–2005.

- 38. Al Hajj H, Lamard M, Conze P-H, Cochener B, Quellec G. Monitoring tool usage in surgery videos using boosted convolutional and recurrent neural networks. Medical Image Analysis. 2018;47:203–218.

- 39. Al Hajj H, Lamard M, Cochener B, Quellec G. Smart data augmentation for surgical tool detection on the surgical tray. Annu Int Conf IEEE Eng Med Biol Soc. 2017;2017:4407–4410.

- 40. Wells TS, Yang S, Maclachlan RA, Handa JT, Gehlbach P, Riviere C. Comparison of baseline tremor under various microsurgical conditions. Conf Proc IEEE Int Conf Syst Man Cybern. Published online 2013:1482–1487.

- 41. Gupta PK, Jensen PS, de Juan E. Surgical forces and tactile perception during retinal microsurgery. In: Taylor C, Colchester A, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI’99. Vol 1679. Springer Berlin Heidelberg; 1999:1218–1225.

- 42. Sunshine S, Balicki M, He X, et al. A force-sensing microsurgical instrument that detects forces below human tactile sensation. Retina. 2013;33(1):200–206.

- 43. Channa R, Iordachita I, Handa JT. Robotic vitreoretinal surgery. Retina. 2017;37(7):1220–1228.

- 44. Sznitman R, Richa R, Taylor RH, Jedynak B, Hager GD. Unified detection and tracking of instruments during retinal microsurgery. IEEE Trans Pattern Anal Mach Intell. 2013;35(5):1263–1273.

- 45. Alsheakhali M, Eslami A, Roodaki H, Navab N. CRF-based model for instrument detection and pose estimation in retinal microsurgery. Computational and Mathematical Methods in Medicine. 2016;2016:1–10.

- 46. Roodaki H, Filippatos K, Eslami A, Navab N. Introducing augmented reality to optical coherence tomography in ophthalmic microsurgery. In: 2015 IEEE International Symposium on Mixed and Augmented Reality. IEEE; 2015:1–6.

- 47. Zhao Z, Chen Z, Voros S, Cheng X. Real-time tracking of surgical instruments based on spatio-temporal context and deep learning. Computer Assisted Surgery. 2019;24(sup1):20–29.

- 48. de Smet MD, Naus GJL, Faridpooya K, Mura M. Robotic-assisted surgery in ophthalmology. Curr Opin Ophthalmol. 2018;29(3):248–253.

- 49. He C, Patel N, Shahbazi M, et al. Toward safe retinal microsurgery: development and evaluation of an RNN-based active interventional control framework. IEEE Trans Biomed Eng. 2020;67(4):966–977.

- 50. Urias MG, Patel N, He C, et al. Artificial intelligence, robotics and eye surgery: are we overfitted? Int J Retin Vitr. 2019;5(1):52.