Abstract

The amount of information humans are exposed to has grown exponentially. This has placed increased demands on our information selection strategies, reducing the time available for fact-checking and critical thinking. Prior research shows that problem solving (traditionally measured using the Cognitive Reflection Test, CRT) correlates negatively with belief in false information. We argue that this result is specifically related to insight problem solving. Solutions via insight are the result of parallel processing characterized by the filtering of external noise; unlike cognitively controlled thinking, insight does not suffer from the cognitive overload associated with processing multiple sources of information. We administered the Compound Remote Associates (CRA) test (problems used to investigate insight problem solving), the CRT, 20 fake and real news headlines, and the bullshit receptivity and overclaiming scales to a sample of 61 participants. Results show that insight problem solving predicts better identification of fake news and bullshit (over and above traditional measures, i.e., the CRT) and is associated with reduced overclaiming. These results have implications for understanding individual differences in susceptibility to believing false information.

Keywords: Fake news, Problem solving, Insight, Aha! moment, Bullshit receptivity, Overclaiming

1. Introduction

False information takes many shapes. While misinformation has long been a feature of conveying the human experience to others, the rise of the internet and social media has created conditions in which individuals or groups can rapidly fabricate content capable of reaching millions of people over a short time. Considering the negative consequences of the widespread dissemination of false information during the COVID-19 pandemic, there has been renewed urgency for scientists to identify the social and cognitive mechanisms associated with believing in misinformation (Pennycook et al., 2021; Salvi et al., 2021).

The proliferation of true and false facts increases competition for attention, which, by burdening cognitive selection, reduces evaluation and critical-thinking time (Hills, 2018). Difficulty in filtering out extra content leads to believing and sharing fake news. For example, people typically share misinformation on social media when their attention is focused on factors other than accuracy (Pennycook et al., 2021). Fake news has an advantage in competitive environments because it is free from the constraint of being truthful and can therefore easily adapt to cognitive biases toward distinctive and emotionally attractive information (Hamann, 2001; Schomaker & Meeter, 2015). These factors are consistent with the finding that false news spreads faster than the truth (Vosoughi et al., 2018).

Cognitive psychologists have begun investigating why false news is believed and shared on the internet, particularly on social media, and have found that a single prior exposure to a news item is enough to increase belief in it. This effect persists even when headlines are disputed by fact-checkers or conflict with the reader’s political beliefs (Pennycook, Cannon, & Rand, 2018). Social media exposure has been shown to reduce consideration of alternative views, boost attitude polarization, increase the probability of embracing ideologically similar news, and facilitate the creation of information bubbles (Lazer et al., 2018). Especially in environments like social media, where people have the impression of controlling their information exposure, they are more inclined to believe news that pleases them and is consistent with their preexisting beliefs. Established prejudices and ideological beliefs tend to prevent people from fact-checking a given fake news story (Lazer et al., 2018). As a result, people accept information uncritically when it aligns with their beliefs or with those of their community. Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytical ability (Bronstein, Pennycook, Bear, Rand, & Cannon, 2019). Specifically, dogmatic individuals and religious fundamentalists may be more likely to believe fake news partly because of reduced critical thinking (Bronstein, Pennycook, Bear, Rand, & Cannon, 2019; Pennycook, Cheyne, Barr, Koehler, & Fugelsang, 2014). These results suggest that associating with like-minded individuals (a scenario amplified by social media interaction) leads groups to insulate themselves defensively, thereby reducing the exploration of alternative perspectives. They also raise the possibility that people who tend to ‘think outside the box’ might be better at assessing misinformation because they consider alternative information when reasoning.
A recent study investigating belief in COVID-19-related fake news revealed that better problem solving ability predicted correctly rating news headlines as real or fake, as well as lower levels of other forms of gullibility, such as bullshit receptivity and overclaiming (Salvi et al., 2021).

Most past research investigating belief in misinformation has used the Cognitive Reflection Test (CRT; Frederick, 2005) as a measure of engagement in analytical reasoning (e.g., Bronstein, Pennycook, Bear, Rand, & Cannon, 2019; Pennycook et al., 2021; Pennycook & Rand, 2017, 2020). For example, in two studies, Pennycook and Rand (2019b, 2019c) showed that solving CRT problems correlates negatively with the perceived accuracy of fake news and positively with the ability to discern fake news from real news, even when the headlines presented to participants aligned with their political ideology. Further, they found that solving CRT problems mediated the correlation between belief in pseudo-profound bullshit and the perceived accuracy of fake news.

There appear to be two distinct theoretical accounts of why people vary in their susceptibility to misinformation: the motivated cognition account and the classical reasoning account (see Martel, Pennycook, & Rand, 2020). Interestingly, when accounting for susceptibility to fake news, both frameworks center on explicit analytic thinking and logical problem solving ability. In the motivated cognition account, analytic thinking can increase susceptibility to false information; in the classical reasoning account, analytic thinking can guard against it (Lin, Pennycook, & Rand, 2022). Much less consideration has been given to cognitive abilities and profiles centered on other kinds of problem solving and to their association with different ways of processing information.

The CRT is a widely used instrument for assessing reasoning in fake news research. However, CRT problems have limitations. As several studies have pointed out, CRT problems are often tricky and require high-level pragmatic competence (rather than logical analytic thinking) to be solved (e.g., Macchi & Bagassi, 2012). Moreover, these problems are very popular, and many participants are already familiar with them (Baron et al., 2015; Chandler, Mueller, & Paolacci, 2014; Toplak et al., 2014). Frederick’s paper on the CRT has over 4700 citations on Google Scholar, and the ‘bat and ball’ problem from the CRT became very famous after being mentioned in popular books such as Kahneman’s Thinking, Fast and Slow (Kahneman, 2011) and in media outlets such as The New York Times (Postrel, 2006) and Business Insider (Lubin, 2012). The CRT is frequently demonstrated in introductory psychology courses, whose students are often part of the subject pools used for research (Thomson & Oppenheimer, 2016). By contrast, an increasing number of studies are using the Compound Remote Associates (CRA) task to investigate aspects of cognitive flexibility and socio-cognitive polarization, such as political partisanship, dogmatism, and xenophobia (e.g., Salvi, Cristofori, Grafman, & Beeman, 2016; Salvi et al., 2021; Zmigrod, Rentfrow, & Robbins, 2019; Zmigrod, 2020). Studies using the CRA task demonstrate that problem solving is associated with social flexibility when expressing political and religious ideologies, together with an overall tendency to question the status quo and consider alternative information when reasoning (Salvi, Cristofori, Grafman, & Beeman, 2016a; Salvi et al., 2021; Zmigrod et al., 2019; Zmigrod, 2020). For example, we found that political conservatism is negatively associated with participants’ ability to solve CRA problems via insight (Salvi, Cristofori, Grafman, & Beeman, 2016).
Similarly, Zmigrod and colleagues (2019) found a U-shaped relation between political partisanship and problem solving (measured using the Wisconsin Card Sorting Test, CRAs, and the Alternative Uses Task), in which individuals with politically polarized and rigid perspectives (on both the right and the left) performed worse on problem solving than moderates. Cognitive flexibility also correlates negatively with rigid ideologies, such as nationalism (e.g., pro-Brexit attitudes) and religious beliefs, and positively with evidence receptivity (Van Hiel et al., 2016; Zmigrod, Rentfrow, & Robbins, 2019; Zmigrod, 2020). The consistency of these studies across different measures suggests a meaningful relationship between problem solving and social rigidity (e.g., political extremism, conservatism, xenophobia) that concerns questioning the status quo and exploring alternative views, and that might therefore extend to belief in misinformation.

Scientists have identified two main ways in which people solve problems: via sudden insight or via step-by-step analysis (Jung-Beeman et al., 2004; Kounios & Beeman, 2014). While step-by-step solutions are under cognitive control, in the case of insight the novel idea emerges into awareness suddenly, in a discontinuous manner that interrupts one’s train of thought, together with a feeling of pleasure and reward (Danek & Wiley, 2017; Oh, Chesebrough, Erickson, Zhang, & Kounios, 2020; Salvi, Bricolo, Franconeri, Kounios, & Beeman, 2015; Salvi, Simoncini, Grafman, & Beeman, 2020; Shen et al., 2018; Shen, Yuan, Liu, & Luo, 2016; Smith & Kounios, 1996; Tik et al., 2018). The emergence of this idea is preceded by an internal focus of attention and disengagement from external stimuli (Danek & Salvi, 2018; Laukkonen, Webb, Salvi, Schooler, & Tangen, 2020; Laukkonen, Ingledew, Schooler, & Tangen, 2018; Salvi et al., 2015; Salvi & Bowden, 2016).

Here, we hypothesized that not just problem solving, but specifically insightfulness, is associated with other information processing skills that might be advantageous when people have to assess the veracity of information. We predict this association for two main reasons. First, insight-based solutions have a very high probability of being correct (Salvi et al., 2016). This is interpreted as an index of idea selection and thus of reasoning quality (Danek, Fraps, von Müller, Grothe, & Öllinger, 2014; Danek & Salvi, 2018; Danek & Wiley, 2017; Laukkonen et al., 2018; Salvi, Bricolo, Kounios, Bowden, & Beeman, 2016; Webb, Little, & Cropper, 2017). Solutions via insight are qualitatively different from those via step-by-step analysis because they require overcoming fixation and lead to alternative interpretations of concepts that at first seem unrelated but suddenly fit together as a good ‘Gestalt’ (Dominowski & Dallob, 1995b; Ohlsson, 1992; Shen, Yuan, et al., 2018; Smith, 1996; Smith & Blankenship, 1989, 1991; Storm & Angello, 2010). Thus, more insightful people may invest time and effort in going beyond the default information, overcoming the initial fixation through restructuring. Such a mental exercise might translate into a greater tendency to question the information in the news by investigating its accuracy further or by considering alternative and non-obvious explanations. In several articles, Macchi and Bagassi (Bagassi & Macchi, 2016; Macchi & Bagassi, 2012, 2014; Macchi, Cucchiarini, Caranova, & Bagassi, 2019) showed that the restructuring that precedes an insight implies high-level implicit thinking. They describe it as a sort of ‘unconscious analytic thought’ informed by information relevance, understood ‘as the act of grasping the crucial characteristics of its structure’ (Bagassi & Macchi, 2016, p. 57).

Second, having an insight is the result of parallel processing, characterized by an internal allocation of attention facilitated by the filtering of interfering external information (Novick, Sherman, & Sherman, 2016; Salvi et al., 2015, 2020; Salvi & Bowden, 2016). Unlike conscious, above-awareness reasoning, insight-based problem solving does not suffer from the cognitive overload that exposure to false information brings to reasoning (Ball, Marsh, Litchfield, Cook, & Booth, 2015; Metcalfe, 1986; Novick et al., 2016). Insight processing, and creativity more generally, requires a decoupling of attention from perception to isolate competing streams of internal and external information (e.g., Ball et al., 2015; Benedek et al., 2017; Benedek & Jauk, 2018; Konishi, Brown, Battaglini, & Smallwood, 2017; Schooler et al., 2011). This characteristic is likely relevant when processing information in a crowded environment such as the internet.

Another factor that is likely relevant to falling prey to false information is known as ‘bullshit receptivity.’ Following Frankfurt’s (2005) definition of bullshit as reflecting a lack of concern for the truth, Pennycook, Cheyne, Barr, Koehler, and Fugelsang (2015) created a scale to measure individual differences in bullshit receptivity. The measure consists of extremely vague or meaningless statements that sound profound, and participants are asked to rate their profoundness. Several psychological features are associated with bullshit receptivity, including non-analytic thinking styles (measured using CRT problems), faith in intuition, low need for cognition, low cognitive ability, and political ideology (Pennycook et al., 2015; Sterling, Jost, & Pennycook, 2016). Overclaiming refers to the tendency of people to ‘self-enhance’ when asked about their familiarity with general knowledge questions (Paulhus et al., 2003). People who score higher on the bullshit index also have high confidence in their mathematics self-efficacy and problem solving skills, as well as a tendency to overclaim (Philips & Clancy, 1972).

In this study, we aim to replicate the relationship between belief in misinformation and problem solving using a set of problems established for studying insight problem solving as well as cognitive flexibility. In the last 20 years, CRA problems have become an established tool for studying insight problem solving because they exhibit the key features of classic insight tasks without being as complex, and problem solvers’ success on CRAs correlates with their success on classic insight problems (Ball & Stevens, 2009; Bowden et al., 2005; Dominowski & Dallob, 1995; Schooler & Melcher, 1995). Furthermore, CRA problems are compact and can each be solved within 15 seconds, which allows more trials to be presented in the same session and increases statistical power. We therefore chose them over classic insight problems (Bowden & Jung-Beeman, 2003; Salvi, Costantini, Bricolo, Perugini, & Beeman, 2015; Salvi, Costantini, Pace, & Palmiero, 2018).

We hypothesized that people who demonstrate more insight-based problem solving on the CRA task would be more successful at discerning fake news and identifying bullshit, and less prone to overclaiming.

2. Methods

2.1. Subjects

Sixty-one right-handed native speakers of American English were recruited for the study (39 women; mean age = 25.5 years, SD = 8.1). The sample included 63.9% White/Caucasian, 18.0% Asian American, 8.1% African American, 6.6% mixed-ethnicity, and 1.6% Pacific Islander participants. Participants were eligible for the study if they met the following criteria: (1) no history of neurological or psychiatric disorder; (2) no use of central nervous system or mood- and attention-affecting drugs (such as antidepressants, amphetamines, or anxiety medications); and (3) no history of traumatic brain injury or intracranial metal implantation. Participants had an average of 16 years of education (SD = 3.4). Participants were paid for completing the study. Each experimental session lasted approximately 1 hour. The study was approved by the Northwestern University Institutional Review Board, and all participants gave written informed consent.

2.2. Material

Problem solving

We administered 5 Cognitive Reflection Test problems (CRT; Frederick, 2005; Thomson & Oppenheimer, 2016) and 5 control arithmetic problems to participants. CRT problems are designed to elicit an immediate, yet incorrect, response; only after further consideration does the correct solution become apparent.
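To illustrate how a CRT item pits an intuitive answer against a correct one, consider the well-known ‘bat and ball’ problem mentioned in the Introduction (used here only as an example; we do not assume it was among the five items administered): a bat and a ball cost $1.10 in total, and the bat costs $1.00 more than the ball. The intuitive response is that the ball costs 10 cents. Letting b denote the price of the ball in dollars:

```latex
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
```

so the ball costs 5 cents. With a 10-cent ball, the bat would cost $1.10 and the total would be $1.20, which contradicts the problem statement.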

Participants were also administered 60 Compound Remote Associates (CRA) problems, randomly selected from Bowden and Jung-Beeman (2003) and balanced for difficulty. In each trial, participants saw three stimulus words (e.g., crab, pine, and sauce) and had to find a fourth word that would form a common compound word or two-word phrase with each of them. For example, the solution to the triad crab, pine, sauce is apple (crab apple, pineapple, applesauce). Participants can solve these problems through insight or step-by-step thinking, and they self-report which method they used. Self-reports have been shown to be accurate and reliable in multiple behavioral and neuroimaging studies (e.g., Bowden & Jung-Beeman, 2007; Jung-Beeman et al., 2004; Salvi et al., 2015; Salvi, Beeman, Bikson, McKinley, & Grafman, 2020). Moreover, problem solvers’ success on these problems reliably correlates with their success on classic insight problems (Dallob & Dominowski, 1993; Schooler & Melcher, 1995).
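The constraint that defines a CRA item (the solution must combine with all three cues) can be sketched in a few lines of Python. This is a minimal illustration assuming a small, hypothetical lexicon (`COMPOUNDS`); the actual items come from Bowden and Jung-Beeman’s (2003) normed set rather than being generated this way, and the function names are our own.

```python
from __future__ import annotations

# Hypothetical mini-lexicon of compound words and two-word phrases (illustrative only).
COMPOUNDS = {"crab apple", "pineapple", "applesauce", "pine cone", "soy sauce"}


def forms_compound(cue: str, candidate: str) -> bool:
    """True if cue and candidate combine, in either order, as a closed
    compound (e.g. 'pineapple') or an open two-word phrase (e.g. 'crab apple')."""
    variants = {
        cue + candidate,
        candidate + cue,
        f"{cue} {candidate}",
        f"{candidate} {cue}",
    }
    return not COMPOUNDS.isdisjoint(variants)


def is_cra_solution(cues: tuple[str, str, str], candidate: str) -> bool:
    """A candidate solves the triad only if it combines with every cue."""
    return all(forms_compound(cue, candidate) for cue in cues)


triad = ("crab", "pine", "sauce")
print(is_cra_solution(triad, "apple"))  # True
print(is_cra_solution(triad, "cone"))   # False: 'pine cone' works, the other cues do not
```

The all-three requirement is what makes a partial association (such as pine cone) a tempting but wrong path, the kind of fixation that insight solutions are thought to overcome.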

Fake News and Bullshit

The fake news questionnaire consisted of twenty headlines taken from Pennycook and Rand (2019). Each article was presented with a title, a thumbnail image, and preview text; the sources of the articles were not provided. Half of the articles were fake, and half had political (vs. neutral) content. For each article, participants were asked whether they were familiar with it, how accurate they believed it to be, and whether they would share it on social media. Accuracy was rated on a 5-point scale ranging from ‘Not at all accurate’ to ‘Very accurate’. Each participant was then assigned a social media score reflecting their propensity to share the articles.
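The text does not specify exactly how the headline ratings were aggregated per participant. The sketch below assumes that belief is the mean 1–5 accuracy rating for a given headline type, that discernment is the difference between mean ratings of real and fake headlines (a common convention in this literature, not necessarily the one used here), and that sharing was a yes/no response. The `HeadlineResponse` structure and all function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class HeadlineResponse:
    is_fake: bool        # veracity of the headline
    is_political: bool   # political vs. neutral content
    accuracy: int        # 1 = 'Not at all accurate' ... 5 = 'Very accurate'
    would_share: bool    # hypothetical yes/no sharing response


def belief(responses: list[HeadlineResponse], fake: bool,
           political: bool | None = None) -> float:
    """Mean perceived accuracy of fake (or real) headlines, optionally
    restricted to political (True) or non-political (False) content."""
    ratings = [r.accuracy for r in responses
               if r.is_fake == fake
               and (political is None or r.is_political == political)]
    return mean(ratings)


def discernment(responses: list[HeadlineResponse]) -> float:
    """Real-minus-fake belief; higher values indicate better discrimination."""
    return belief(responses, fake=False) - belief(responses, fake=True)


def share_rate(responses: list[HeadlineResponse], fake: bool) -> float:
    """Proportion of fake (or real) headlines the participant would share."""
    flags = [r.would_share for r in responses if r.is_fake == fake]
    return sum(flags) / len(flags)


demo = [
    HeadlineResponse(is_fake=True, is_political=True, accuracy=2, would_share=False),
    HeadlineResponse(is_fake=True, is_political=False, accuracy=1, would_share=False),
    HeadlineResponse(is_fake=False, is_political=True, accuracy=4, would_share=True),
    HeadlineResponse(is_fake=False, is_political=False, accuracy=5, would_share=False),
]
print(belief(demo, fake=True), discernment(demo), share_rate(demo, fake=False))  # 1.5 3.0 0.5
```

Under this convention, the negative correlations reported in the Results correspond to lower `belief(..., fake=True)` scores among better problem solvers.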

Along with the fake news questionnaire, and consistent with Pennycook et al. (2015), participants were given i) profound statements (i.e., real quotations), ii) pseudo-profound statements (i.e., randomly generated statements disguised as profound through the use of complex words that are meaningless in context yet sound profound, e.g., ‘Infinity is a reflection of reality’), and iii) mundane statements (i.e., simple facts, e.g., ‘Some things have distinct smells’). Participants rated the profundity of each statement from 1 (‘Not at all profound’) to 5 (‘Very profound’). From these responses, we computed a Bullshit Receptivity Score (BRS) for each participant, reflecting their propensity to believe pseudo-profound bullshit; the scale was borrowed from Pennycook, Cheyne, et al. (2015). Participants’ average scores for profound and non-profound statements were also analyzed.
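A minimal sketch of the per-participant scoring, assuming (as in Pennycook et al., 2015) that the BRS is the mean profundity rating given to the pseudo-profound items, and that the profound and mundane averages are computed the same way. The statement-type labels and function names are hypothetical.

```python
from statistics import mean

VALID_TYPES = {"profound", "pseudo", "mundane"}  # hypothetical labels


def profundity_means(ratings):
    """Mean 1-5 profundity rating for each statement type.

    `ratings` is an iterable of (statement_type, rating) pairs."""
    by_type = {}
    for kind, value in ratings:
        if kind not in VALID_TYPES:
            raise ValueError(f"unknown statement type: {kind!r}")
        if not 1 <= value <= 5:
            raise ValueError(f"rating out of range: {value}")
        by_type.setdefault(kind, []).append(value)
    return {kind: mean(values) for kind, values in by_type.items()}


def bullshit_receptivity(ratings) -> float:
    """BRS: mean profundity rating of the pseudo-profound items only."""
    return profundity_means(ratings)["pseudo"]


demo = [("pseudo", 4), ("pseudo", 2), ("profound", 5), ("mundane", 1)]
print(bullshit_receptivity(demo))
print(profundity_means(demo))
```

Keeping the three averages separate allows the between-group comparisons reported in the Results for the BRS and for profound and non-profound statements.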

Overclaiming is defined as the tendency of some individuals to ‘self-enhance’ when asked about their familiarity with general knowledge questions (Pennycook & Rand, 2019). The overclaiming scale consists of a list of thirty people, events, and topics, divided into two sections of fifteen items each: one section concerns historical events or figures, and the other consists of topics from the physical sciences (Paulhus et al., 2003). Participants rated their familiarity with each item on a six-point scale ranging from ‘Never heard of it’ to ‘Very familiar’. There were a total of 6 foils (3 per section) designed to detect whether participants would lie about their knowledge, or overclaim.1 The questionnaire was scored by counting the number of false alarms (claimed familiarity with foils, i.e., overclaims) and the number of hits (correct indications of familiarity with real items). These counts were then combined into an overclaiming accuracy score by subtracting the false alarms from the hits, so that a higher score indicates a lower tendency to overclaim.2
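The hits-minus-false-alarms scoring can be sketched as follows. The text does not say which familiarity rating counts as claiming to know an item, so the cut-off below (any rating above ‘Never heard of it’) is a hypothetical assumption, as are the item labels and function names.

```python
from dataclasses import dataclass


@dataclass
class ClaimItem:
    label: str
    is_foil: bool      # True for the nonexistent items (6 in the scale)
    familiarity: int   # 1 = 'Never heard of it' ... 6 = 'Very familiar'


# Hypothetical cut-off: any rating above 'Never heard of it' counts as a claim.
CLAIM_THRESHOLD = 2


def overclaiming_accuracy(items, threshold=CLAIM_THRESHOLD):
    """Hits (claimed real items) minus false alarms (claimed foils)."""
    claimed = [item for item in items if item.familiarity >= threshold]
    hits = sum(1 for item in claimed if not item.is_foil)
    false_alarms = sum(1 for item in claimed if item.is_foil)
    return hits - false_alarms


demo = [
    ClaimItem("real item A", is_foil=False, familiarity=4),
    ClaimItem("real item B", is_foil=False, familiarity=2),
    ClaimItem("real item C", is_foil=False, familiarity=6),
    ClaimItem("real item D", is_foil=False, familiarity=1),
    ClaimItem("foil X", is_foil=True, familiarity=3),
    ClaimItem("foil Y", is_foil=True, familiarity=1),
]
print(overclaiming_accuracy(demo))  # 3 hits - 1 false alarm = 2
```

Raising `threshold` makes the score more conservative; the choice of cut-off affects both hits and false alarms.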

Political ideology was measured with two Likert scales (Robinson, Shaver, & Wrightsman, 1999; Salvi, Cristofori, Grafman, & Beeman, 2016b), which asked participants whether they ‘endorse many aspects of conservative political ideology’ and whether they ‘endorse many aspects of liberal political ideology’. Responses were recorded on two 7-point scales ranging from 1 (least endorsement) to 7 (strongest endorsement) of the respective ideology. Each participant was then given a liberalism score, computed by making the conservative rating negative and summing it with the liberal rating (i.e., the liberal rating minus the conservative rating).
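The liberalism score reduces to a single subtraction. A minimal sketch, assuming both ratings are integers on the 1–7 scales described above (the function name is ours):

```python
def liberalism_score(liberal: int, conservative: int) -> int:
    """Liberalism score: the conservative rating is negated and added to the
    liberal rating. With both ratings on 1-7 scales, the result lies in [-6, 6]."""
    for value in (liberal, conservative):
        if not 1 <= value <= 7:
            raise ValueError(f"rating must be between 1 and 7, got {value}")
    return liberal + (-conservative)


print(liberalism_score(7, 1), liberalism_score(4, 4), liberalism_score(2, 6))  # 6 0 -4
```

Positive scores indicate stronger endorsement of liberal than conservative ideology; zero indicates equal endorsement of both.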

2.3. Procedure

A set of 60 CRA problems was first administered to participants. The problem words were displayed in black 28-point Times New Roman on a white background, centered horizontally. Trial order was randomized within the block. During the instruction phase, participants were trained to identify whether they had solved a problem via insight or via step-by-step analysis.3 Self-reports differentiating between insight and step-by-step problem solving have been found to be associated with several behavioral and neuroimaging markers (Becker, Wiedemann, & Kühn, 2018; Bowden & Jung-Beeman, 2003; Jung-Beeman et al., 2004; Salvi, Bricolo, Franconeri, Kounios, & Beeman, 2015b; Santarnecchi et al., 2020; Sprugnoli et al., 2021; Subramaniam, Kounios, Parrish, & Jung-Beeman, 2009). E-Prime 2.10 software was used to present the experiment on a 24-inch Dell screen at a viewing distance of about 60 cm.

The fake news, bullshit, overclaiming, and political ideology questionnaires were administered after the CRAs, using the Qualtrics online survey platform hosted on the Northwestern University server and presented on a 13-inch MacBook Pro laptop.

4. Results

Behavioral data were analyzed using JASP, with the significance level set at p < .05. Data were tested for normality (Kolmogorov–Smirnov test) and homogeneity of variance (Levene’s test). Data were normally distributed, and the assumptions for analysis of variance were not violated.

4.1. Problem solving

CRA.

Out of 60 problems, participants correctly solved an average of 22.02 problems (36.7%, SD = 12%). On average, 11.64 problems per person (19.4%, SD = 10.2%) were solved correctly via insight and 10.5 (17.5%, SD = 9.3%) were solved correctly via step-by-step analysis. Participants solved an average of 5.88 problems incorrectly (9.8%, SD = 10.8%): 2.34 via insight (3.9%, SD = 6%) and 3.54 via step-by-step analysis (5.9%, SD = 7.1%). The remaining problems were unsolved.4
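The percentages above are per-participant proportions of the 60 trials. A minimal sketch of how they can be derived from trial-level data, assuming each trial records the self-reported method (hypothetical labels `"insight"` and `"step"`, or `None` when unsolved) and whether the response was correct; the data structure is illustrative, not the format used in the study.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

CATEGORIES = ("correct", "correct_insight", "correct_step",
              "incorrect", "incorrect_insight", "incorrect_step", "unsolved")


@dataclass
class CRATrial:
    method: str | None      # self-report: "insight" or "step"; None = unsolved
    correct: bool = False   # whether the response matched the solution


def cra_percentages(trials: list[CRATrial]) -> dict[str, float]:
    """Percentage of all trials falling in each outcome category."""
    if not trials:
        raise ValueError("no trials")
    counts = Counter()
    for trial in trials:
        if trial.method is None:
            counts["unsolved"] += 1
            continue
        if trial.method not in ("insight", "step"):
            raise ValueError(f"unknown method: {trial.method!r}")
        outcome = "correct" if trial.correct else "incorrect"
        counts[outcome] += 1
        counts[f"{outcome}_{trial.method}"] += 1
    return {key: 100 * counts[key] / len(trials) for key in CATEGORIES}


# Hypothetical participant with 60 trials.
demo = ([CRATrial("insight", True)] * 12 + [CRATrial("step", True)] * 10
        + [CRATrial("insight", False)] * 2 + [CRATrial("step", False)] * 4
        + [CRATrial(None)] * 32)
scores = cra_percentages(demo)
print(round(scores["correct"], 1), scores["correct_insight"], round(scores["unsolved"], 1))
```

Because percentages are taken over all 60 trials rather than over solved trials, the correct, incorrect, and unsolved categories sum to 100%.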

CRT.

Out of the 5 CRT problems administered, participants solved an average of 3.11 (62.3%, SD = 27.6%) correctly, as well as an average of 4.67 (93.4%, SD = 12%) of the 5 control arithmetic problems.5

Linear regression analysis shows that the percentage of CRA problems solved predicts solving CRT problems (F(1, 59) = 8.1; β = .34; p = .006; 95% CI .073 to .411). This prediction is driven specifically by problems solved via insight (F(1, 59) = 8.4; β = .35; p = .005; 95% CI .065 to .353), not by those solved via step-by-step analysis (p = .619); that is, solutions via insight on the CRA predict solving CRT problems. The same regression analysis was not significant for the arithmetic problems.
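With a single predictor, the standardized β reported for these regressions equals Pearson’s r, and F(1, n − 2) = r²(n − 2)/(1 − r²). A minimal sketch (function names hypothetical) that computes both from raw scores, plus a helper that recovers F from a reported r. For example, r = −.503 (insight vs. belief in fake news, Table 1) with n = 61 gives F ≈ 20.0, in line with the F(1, 59) = 20 reported for that regression in Section 4.2.

```python
import math


def simple_regression(x, y):
    """Return (beta, F) for y regressed on a single predictor x.

    beta is the standardized slope, which equals Pearson's r with one
    predictor; F has (1, n - 2) degrees of freedom."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with n >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    return r, f_from_r(r, n)


def f_from_r(r, n):
    """F(1, n - 2) for a one-predictor regression with correlation r."""
    return r * r * (n - 2) / (1 - r * r)


print(round(f_from_r(-0.503, 61), 1))  # 20.0
```

This identity is a quick consistency check for the regression and correlation tables: F depends only on r and n, so the sign of β must match the sign of the corresponding r.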

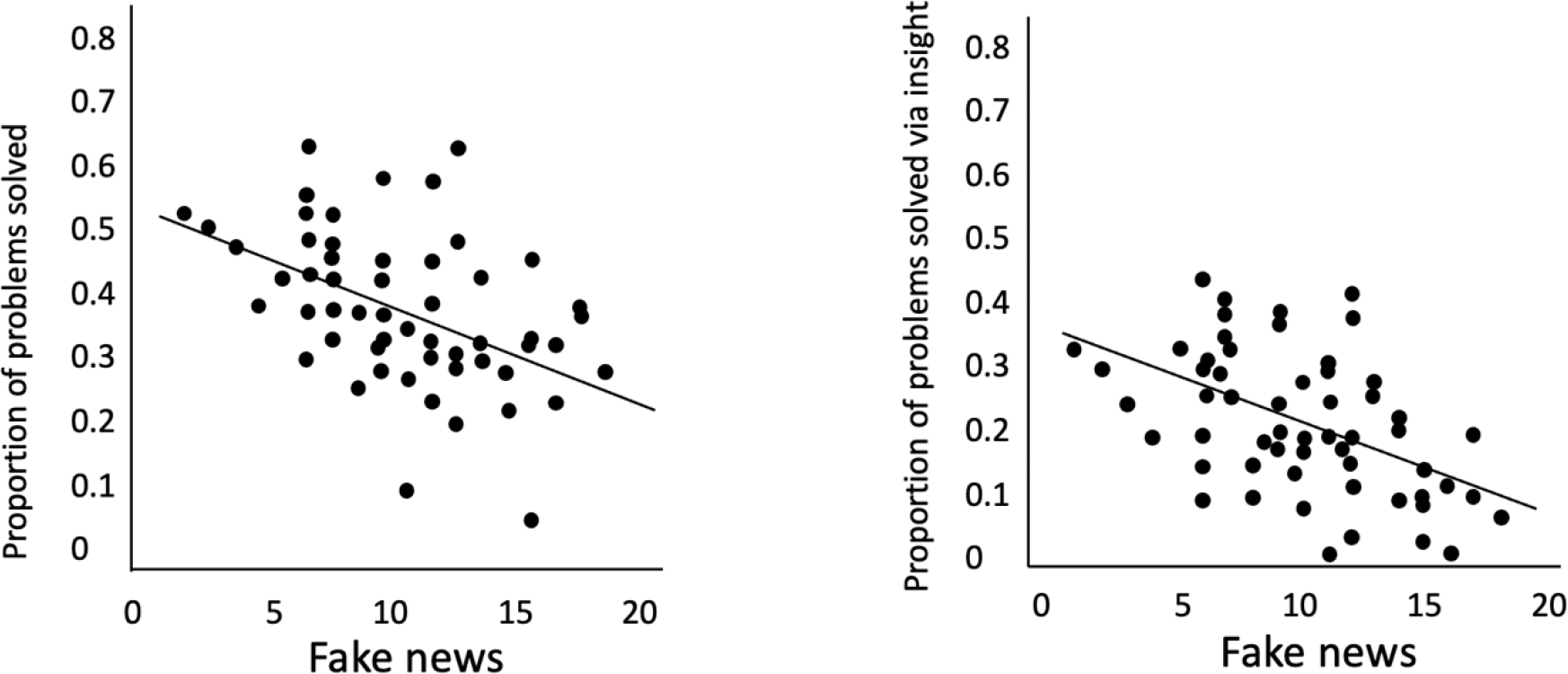

4.2. Problem solving and Fake news

A negative correlation was found between belief in fake news and solving both CRA and CRT problems (see Table 1). Crucially, there was a significant negative correlation between solving problems via insight (but not via step-by-step analysis) and belief in fake news. The correlations were significant for both political and non-political content. As Table 1 shows, no significant correlation was found for real news. The data also show a negative correlation between problem solving via insight and the tendency to share both real and fake news on social media (see supplementary material for further analysis of sharing).

Table 1.

Correlations between the percentage of CRA problems solved (overall, via insight, and via step-by-step analysis), the percentage of CRT problems solved, belief in fake news (overall and split into political and non-political content), belief in real news, and the probability of sharing fake and real news on social media.

| Measure | Statistic | Percent of CRAs solved | CRAs solved via insight | CRAs solved via step-by-step | Percent of CRT solved |
|---|---|---|---|---|---|
| Fake news overall | Pearson’s r | −0.491 *** | −0.503 *** | −0.087 | −0.400 ** |
| | p-value | < .001 | < .001 | 0.506 | 0.001 |
| | Upper 95% CI | −0.273 | −0.288 | 0.169 | −0.165 |
| | Lower 95% CI | −0.661 | −0.670 | −0.331 | −0.592 |
| Political fake news | Pearson’s r | −0.364 ** | −0.416 *** | −0.028 | −0.346 ** |
| | p-value | 0.004 | < .001 | 0.829 | 0.006 |
| | Upper 95% CI | −0.123 | −0.184 | 0.225 | −0.104 |
| | Lower 95% CI | −0.564 | −0.605 | −0.278 | −0.550 |
| Non-political fake news | Pearson’s r | −0.469 *** | −0.432 *** | −0.124 | −0.325 * |
| | p-value | < .001 | < .001 | 0.339 | 0.010 |
| | Upper 95% CI | −0.247 | −0.203 | 0.132 | −0.080 |
| | Lower 95% CI | −0.645 | −0.617 | −0.365 | −0.534 |
| Real news overall | Pearson’s r | −0.219 | −0.119 | −0.165 | −0.113 |
| | p-value | 0.090 | 0.361 | 0.205 | 0.388 |
| | Upper 95% CI | 0.034 | 0.137 | 0.091 | 0.143 |
| | Lower 95% CI | −0.446 | −0.360 | −0.400 | −0.354 |
| Fake news sharing | Pearson’s r | −0.242 | −0.274 * | −0.014 | −0.242 |
| | p-value | 0.060 | 0.032 | 0.917 | 0.061 |
| | Upper 95% CI | 0.010 | −0.024 | 0.239 | 0.011 |
| | Lower 95% CI | −0.466 | −0.492 | −0.265 | −0.465 |
| Real news sharing | Pearson’s r | −0.200 | −0.286 * | 0.053 | −0.147 |
| | p-value | 0.121 | 0.025 | 0.683 | 0.259 |
| | Upper 95% CI | 0.054 | −0.037 | 0.301 | 0.109 |
| | Lower 95% CI | −0.431 | −0.502 | −0.201 | −0.384 |

Asterisks mark significant results: *p < .05, **p < .01, ***p < .001.
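The 95% confidence intervals in Table 1 appear consistent with the standard Fisher z-transformation of r. A minimal sketch (our own helper, not JASP’s code) that reproduces, for example, the interval for the overall CRA–fake news correlation (r = −0.491, n = 61):

```python
from __future__ import annotations

import math
from statistics import NormalDist


def pearson_ci(r: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Confidence interval for Pearson's r via the Fisher z-transformation."""
    if not -1 < r < 1 or n <= 3:
        raise ValueError("need |r| < 1 and n > 3")
    z = math.atanh(r)                              # Fisher z
    se = 1 / math.sqrt(n - 3)                      # standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95%
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


lower, upper = pearson_ci(-0.491, 61)
print(f"{lower:.3f} to {upper:.3f}")  # -0.661 to -0.273
```

The interval is symmetric on the z scale but not on the r scale, which is why the table’s bounds are not equidistant from r.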

Regression analysis shows that the percentage of CRA problems solved negatively predicted belief in fake news overall (F(1, 59) = 18.7; β = −.49; p < .001; 95% CI −2.35 to −.86), for both political (F(1, 59) = 9; β = −.36; p = .004; 95% CI −12.5 to −2.5) and non-political (F(1, 59) = 16.6; β = −.46; p < .001; 95% CI −12.7 to −4.3) content. This result was again driven by solutions via insight (F(1, 59) = 20; β = −.50; p < .001; 95% CI −28 to −10.7), for both political (F(1, 59) = 12.36; β = −.41; p < .001; 95% CI −15.9 to −4.3) and non-political (F(1, 59) = 13.5; β = −.43; p < .001; 95% CI −14.2 to −4.2) content.

We found no relation between fake news discernment and the number of problems solved incorrectly, whether overall, via insight, or via step-by-step analysis.

To investigate how quickly news was accepted, we analyzed response times (RTs) for assessing fake and real news and related them to problem solving ability. Solving problems via insight was negatively related to RTs for assessing fake and real news overall (F(1, 59) = 4.4; β = −.26; p = .040; 95% CI −34.15 to −.85). We found no relation between these RTs and the number of problems solved incorrectly, whether overall, via insight, or via step-by-step analysis.

Individual differences

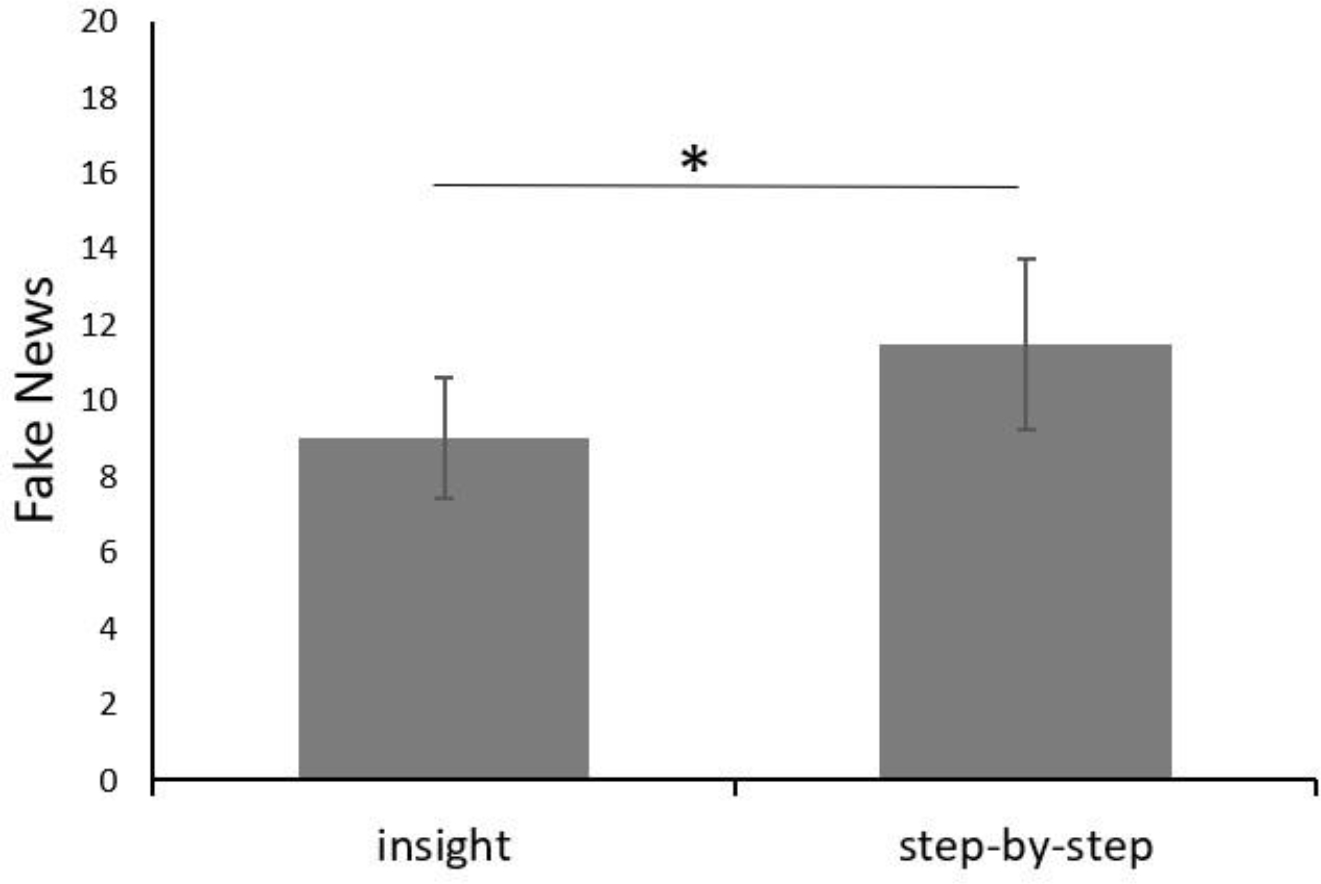

We next divided our sample into two groups according to whether participants solved more problems via insight or via step-by-step analysis.6 This yielded an insight group of 33 participants and a step-by-step group of 26 participants. The groups were balanced for age (insight M = 25.84, SD = 8.6; step-by-step M = 25.30, SD = 7.9) and years of education (insight M = 16.21, SD = 3.1; step-by-step M = 15.84, SD = 3.9). We computed a one-way ANOVA comparing the two groups. People in the insight group were less likely to be misled by fake news (F(1, 57) = 6.15, p < .05, η² = .097; Figure 2), especially when it contained political content (F(1, 57) = 6.39, p < .05, η² = .101).
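The group split and the effect sizes above can be sketched as follows. Participants are assigned to the group whose solution method they used more often; treating ties as unassigned is our assumption. For a one-way ANOVA, η² = SS_between / SS_total, which equals F·df_between / (F·df_between + df_within); for instance, F(1, 57) = 6.15 gives η² ≈ .097, matching the value reported above. Function names are hypothetical.

```python
from statistics import mean


def assign_group(n_insight: int, n_step: int):
    """Group by the more frequent self-reported method; ties are left
    unassigned (our assumption)."""
    if n_insight > n_step:
        return "insight"
    if n_step > n_insight:
        return "step-by-step"
    return None


def one_way_anova(*groups):
    """Return (F, df_between, df_within, eta_squared) for independent groups."""
    values = [v for g in groups for v in g]
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within, ss_between / (ss_between + ss_within)


def eta_squared_from_f(f: float, df_between: int, df_within: int) -> float:
    """Eta squared recovered from a reported one-way ANOVA F statistic."""
    return f * df_between / (f * df_between + df_within)


print(round(eta_squared_from_f(6.15, 1, 57), 3))  # 0.097
```

With two groups of 33 and 26 participants, df_within = 59 − 2 = 57, which matches the F(1, 57) tests reported in this section.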

Figure 2.

Graph indicating the significant difference in the number of fake news stories participants in the insight and step-by-step analysis groups believed in. Error bars represent standard deviation.

4.3. Problem solving, bullshit, and overclaiming

Results show a significant negative correlation between the percentage of CRA problems solved via insight and the BRS (Table 2), whereas the percentage solved via step-by-step analysis correlates positively with the BRS and the percentage of CRT problems solved correlates negatively with it. The percentages of CRA problems solved via insight and of CRT problems solved correctly correlate positively with overclaiming accuracy, which itself correlates negatively with the BRS.

Table 2.

Correlations between the percent of CRA problems solved via insight and step-by-step analysis, percent of CRT problems, and Bullshit Receptivity Score and Overclaiming Accuracy.

| Measure | Statistic | Percent of CRAs solved | CRAs solved via insight | CRAs solved step-by-step | CRT % correct | Bullshit Receptivity Score |
|---|---|---|---|---|---|---|
| Bullshit Receptivity Score | Pearson’s r | −0.085 | −0.346 ** | 0.273 * | −0.263 * | — |
| | p-value | 0.515 | 0.006 | 0.033 | 0.041 | — |
| | Upper 95% CI | 0.170 | −0.103 | 0.491 | −0.012 | — |
| | Lower 95% CI | −0.330 | −0.550 | 0.023 | −0.483 | — |
| Overclaiming Accuracy | Pearson’s r | 0.476 *** | 0.351 ** | 0.229 | 0.305 * | −0.334 ** |
| | p-value | < .001 | 0.006 | 0.076 | 0.017 | 0.008 |
| | Upper 95% CI | 0.650 | 0.554 | 0.455 | 0.517 | −0.090 |
| | Lower 95% CI | 0.255 | 0.109 | −0.024 | 0.058 | −0.541 |

Asterisks mark significant results: *p < .05, **p < .01, ***p < .001.

The linear regression model revealed that CRA-defined problem solving ability is a significant predictor of the BRS (F(1, 59) = 8; β = −.34; p = .006; 95% CI −495 to −.84) and of overclaiming accuracy (F(1, 59) = 8.2; β = −.35; p = .006; 95% CI −3.41 to −19) only when solutions are reached via insight.

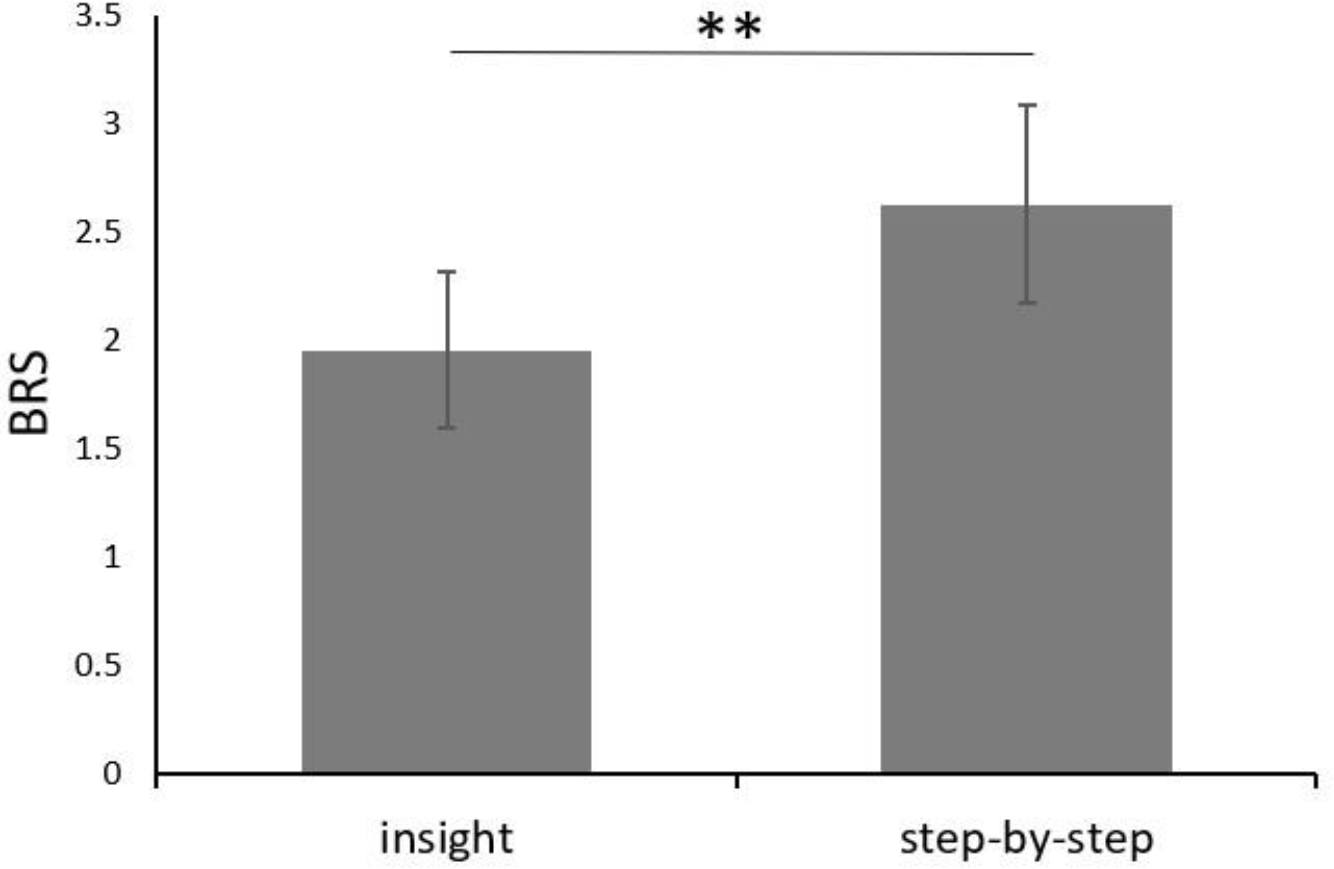

Individual differences

A between-group comparison (insight solvers vs. step-by-step solvers) shows a significant difference between the two groups in the BRS (F(1, 57) = 9.96, p < .005, η² = .149; Figure 3), as well as in their ratings of both profound (F(1, 57) = 7.03, p < .05, η² = .11) and non-profound statements (F(1, 57) = 4.6, p < .05, η² = .07).

Figure 3.

Graph indicating the significant difference in the Bullshit Receptivity Score (BRS; participants’ propensity to fall for pseudo-profound bullshit) between the insight and step-by-step analysis groups. Error bars represent standard deviation.

No significant difference was found between the two groups for the overclaiming questionnaire.

4.5. Political orientation

Among our participants, 68.8% declared themselves liberal, 18% independent, 8.2% reported voting for other parties, and 4.9% declared themselves conservative. As described in the Methods, we calculated a liberalism score by subtracting the score (1–7) for endorsing conservative ideology from the score (1–7) for endorsing liberal ideology. The resulting liberalism scores had a mean of 3.5 (SD = 2.8), with a minimum of −5 and a maximum of 7. The data show a negative correlation between liberalism and response times for assessing fake news. The linear regression model shows that liberalism is a significant negative predictor of response times when assessing fake (F(1, 59) = 6.6; β = −.31; p = .012; 95% CI −1.36 to −.17) and real news (F(1, 59) = 4.2; β = −.25; p = .045; 95% CI −1.35 to −.01).

Individual differences

A between-group comparison indicated a significant difference in political orientation between the two groups: people in the insight group were more liberal (F(1,57) = 51.83, p < .001, η² = .476). These data replicate our previous results (Salvi et al., 2016). However, we acknowledge that, because political orientation was not the main goal of our study, our sample was not balanced.

4.6. Education

We found a negative correlation between years of education (but not age) and sharing both real (r = −.329, p < .01, 95% CI −0.53 to −0.08) and fake (r = −.322, p < .01, 95% CI −0.53 to −0.07) news on the internet. Multiple regression analyses including both years of education and age were significant for sharing fake news [F(2, 58) = 7.79, p < .001; Education β = −.49, 95% CI −3.75 to −.001; Age β = .37, 95% CI .061 to 2.82] and real news [F(2, 58) = 4.5, p = .015; Education β = −.41, 95% CI −3 to .004; Age β = .18, 95% CI .19 to 1.32].

Years of education significantly predicted liberalism [F(1,59) = 4.04; β = −.25; p = .049, 95% CI −.41 to −9.36].

5. Discussion

The information humans are exposed to is growing exponentially, fueled by the massive use of social media. This proliferation has increased competition for attention and reduced the time available for evaluating information and thinking critically, promoting the use of heuristics and social cues to determine the veracity of information. Because social media makes it easy to connect with like-minded individuals, it facilitates group isolation and discourages exposure to alternative explanations. As a result, people explore fewer perspectives, which reinforces prejudices, stereotypes, and belief in false information.

Belief in fake news became a serious threat to human health during the COVID-19 pandemic, facilitating the spread of conspiracy theories and leading people to adopt unsafe behaviors. Previous studies showed that problem solving is associated with cognitive flexibility, as well as with an overall tendency to question the status quo and consider alternative information when reasoning (Salvi et al., 2016, 2021; Zmigrod et al., 2019; Zmigrod, 2020). In a recent study of the critical components of belief in COVID-19-related fake news, we found, among other factors, that problem solving was strongly and positively related to accurately assessing news veracity (Salvi et al., 2021). We therefore decided to investigate this relation further, and we hypothesized that insightfulness might play a critical role in it.

Our results showed a significant positive relation between insightfulness and fake news discernment. Consistent with this, an emerging line of research shows that insightfulness, whether as a trait or as the way a single problem is solved, is associated with reasoning quality and accurate idea selection (Danek, Fraps, von Müller, Grothe, & Öllinger, 2014; Danek & Salvi, 2018; Danek & Wiley, 2017; Laukkonen et al., 2018; Salvi, Bricolo, Kounios, Bowden, & Beeman, 2016; Webb, Little, & Cropper, 2017). Solving a problem via insight entails generating novel and original ideas by exploring unusual reasoning paths. This skill is associated with the ability to filter out irrelevant distractions, which may be advantageous when reasoning about information from an overcrowded environment such as the internet. Research on biological markers of insight has shown that insight processing requires a decoupling of attention from perception to isolate competing streams of internal and external information. This decoupling is achieved by blinking for longer or by looking away from sources of visual distraction, the so-called 'looking at nothing' behavior (Salvi & Bowden, 2016; Salvi et al., 2015, 2020; Salvi, 2021). Because of this decoupling, insightfulness, and creativity in general, do not suffer from the cognitive overload that exposure to information brings (Ball, Marsh, Litchfield, Cook, & Booth, 2015; Benedek et al., 2017; Konishi, Brown, Battaglini, & Smallwood, 2017; Metcalfe, 1986; Novick et al., 2016; Salvi, Bricolo, Franconeri, Kounios, & Beeman, 2015; Salvi et al., 2020; Schooler et al., 2011).

Further, insight is based on restructuring the initial problem representation, leading to alternative interpretations of concepts that at first seem unrelated (Dominowski & Dallob, 1995; Ohlsson, 1992; Shen, Yuan, et al., 2018; Smith, 1996; Smith & Blankenship, 1989, 1990; Storm & Angello, 2010). We believe that, among other advantages, this skill translates into a greater tendency to question the information in the news, either by investigating its accuracy further or by considering alternative and non-obvious explanations. We recognize that this tendency may extend beyond news assessment, and that we may have tapped into one specific outcome of an effect that is broader than what we measured in this study. While more evidence is needed to shed light on this effect, our result first replicates our previous study on problem solving and COVID-19 fake news (Salvi et al., 2021) and further explores the specific role of insightfulness in reasoning when assessing news veracity. Whereas the previous study used Rebus Puzzles (another task used to study insight problem solving), here we replicated the same effect with the CRA. The CRA has become an established measure of insight problem solving because it presents key features of classic insight tasks; specifically, success on CRA problems correlates with success on classic insight problems (Dallob & Dominowski, 1993; Schooler & Melcher, 1995).

Second, our result is consistent with existing research on fake news and offers an alternative way of understanding it. Pennycook and Rand (2018) found that problem solving performance, measured using the CRT, is positively related to the ability to discern fake news from real news, regardless of how closely the headline aligned with people's political ideology. The authors defined solving CRT problems as 'analytic thinking' and suggested that this is what people use to assess the plausibility of headlines, regardless of whether the stories are consistent or inconsistent with their political ideology. However, CRT problems have several limitations: they are tricky, they require high-level pragmatic competence to be solved, and typical participant samples are frequently exposed to them in classes and in the popular media (Macchi & Bagassi, 2016; Thomson & Oppenheimer, 2016). Thus, in this study, we adopted a different set of problems with higher statistical power, which is often used to study insight problem solving as well as political ideology (Salvi et al., 2015). Our results demonstrated a relationship between insight problem solving and fake news discernment independently of news content (political or not). These results therefore confirm and extend the previous findings by Pennycook and colleagues (2017, 2019) of a relation between solving CRT problems and fake news discernment, and replicate our research using COVID-19-related fake news (Salvi et al., 2021). We speculate that solutions to CRT problems might partly depend on insight, since the percentage of CRA problems solved predicts CRT performance. In line with this result, a recent study found that good CRT solvers have more accurate intuitions that do not require effortful thinking, rather than being better at deliberately correcting erroneous intuitions (Raoelison, Thompson, & De Neys, 2020).

Further, our results have implications for understanding individual differences in susceptibility to believing false information. More generally, they suggest that insight problem solving is associated with a willingness to invest time and effort in going beyond the information received, an important clue to understanding why people fall prey to fake news.

In a second analysis, we demonstrated that insight problem solving is negatively related to different measures of receptivity to misinformation. The percentage of CRA problems solved (specifically via insight) and the percentage of CRT problems solved correctly both correlate positively with overclaiming accuracy. Overclaiming accuracy in turn correlates negatively with bullshit receptivity, which indicates the robustness of our construct. We also found that solving problems via insight is negatively related to response times (RT) when assessing both fake and real news. This finding suggests that inhibition plays an important role in recognizing fake news. For example, insightfulness is negatively related to sharing news content online, which supports the idea that susceptibility to fake news is driven more by lazy thinking than by bias per se (Pennycook & Rand, 2019b). Consistent with this, we found that insightfulness predicts more cautious behavior in assessing (RT) and sharing fake news. However, while this last result can be interpreted in terms of inhibition, we are aware that it might be confounded by fake news discernment (see Supplementary Material).

Another finding from our data concerns political beliefs. Established prejudices and ideological beliefs prevent fact-checking of a given fake news story (Lazer et al., 2018). As a result, people tend to passively believe the information they read online if it is consistent with their preconceptions. In other words, they accept information uncritically when it aligns with their beliefs or with those of their community, becoming unable to think outside the box. We calculated a liberalism scale by subtracting how conservative people claimed to be (from 1 to 7) from how liberal they claimed to be. Our data indicate that extreme liberalism is negatively related to response times when assessing fake news: subjects who were more politically polarized spent less time assessing whether the news was real or fake. Indeed, people show stronger emotional and reward responses (i.e., amygdala and ventral striatum activation) when reading political opinions that accord with their political views, and this effect increases when people adopt extreme political perspectives (Gozzi et al., 2010). Compared with moderates, politically polarized individuals are known to experience more negative emotions about politics and to be more dismissive of outgroups (van Prooijen, Krouwel, Boiten, & Eendebak, 2015). They also conceive of politics in more simplistic terms (Lammers, Koch, Conway, & Brandt, 2017), and tend to reject external information and exhibit greater belief superiority (Brandt, Evans, & Crawford, 2015). The negative correlation between liberalism and RT when assessing real and fake news can be interpreted in the context of these studies on political extremism. We acknowledge that, because this finding was not hypothesized at the outset, we did not recruit a balanced sample of liberals and conservatives. We therefore treated liberalism as a continuous variable and interpret it only in terms of being more or less politically polarized, not in terms of liberal vs. conservative political ideology, an aspect we investigated in our 2016 study (Salvi et al., 2016).

The association of education with sharing news deserves attention. In previous studies, higher education was associated with greater sharing and liking of fake news about climate change (Lutzke, Drummond, Slovic, & Árvai, 2019) and with better discernment between true and false political headlines (Allcott & Gentzkow, 2017; Pennycook & Rand, 2019). However, it was not significantly associated with greater belief in COVID-19 false information (Gerosa, Gui, Hargittai, & Nguyen, 2021; Salvi et al., 2021). In our study, we found a negative correlation between years of education and sharing news; however, this relation was predictive only when age was included in the model. Given the literature, we speculate that the type of news, as well as the association of education with other covariates, may better explain this relation case by case. With respect to reasoning, education should improve people's ability to discern fact from fiction and provide them with more information with which to counterargue incongruent information (Allcott & Gentzkow, 2017).

6. Limitations and future directions

Our study is consistent with the current literature showing an association between forms of social reasoning and cognitive flexibility (see Zmigrod, 2020). However, more work is needed to understand the nuances of this relation. For example, while we replicated our results using CRAs and Rebus Puzzles (Salvi et al., 2021), testing the relation with classic insight problems would strengthen our conclusions. In addition, it would be important to examine the relation between fake news discernment and key components of insight, such as affect, feelings of confidence, and the state of impasse. Further, our experiment used a dichotomous choice to report insight or step-by-step solving; it would be beneficial to explore the same effect with a more continuous measure of insight. A recent study by Laukkonen, Ingledew, Grimmer, Schooler, and Tangen (2021) captured individual differences in insight on a continuum and in real time by using a dynamometer. The study showed that the impulsive feeling of Aha can carry information about the veracity of an idea, since the intensity of an insight predicted the accuracy of solutions. Would the intensity of Aha experiences also predict fake news discernment?

Researching how to reduce belief in, and the spread of, misinformation is an important emerging subfield in psychology. Overall, our results have implications for understanding individual differences in susceptibility to believing false information. They also indicate the importance of inhibition and of reflecting on available sources of information (i.e., exploring different perspectives) to discern fact from fiction. In practice, this means investing more time and effort in going beyond the information received, and inhibiting the urge to share content on social media before assessing it accurately. Our study also highlights how filtering out irrelevant distractions brings advantages when reasoning about information from an overcrowded environment like the internet. We believe this finding offers an important suggestion for improving the usability of web pages and facilitating critical reasoning.

Supplementary Material

Figure 1.

Scatterplot indicating the relation between the proportion of problems solved with insight and the number of fake news stories believed.

Acknowledgments

This research was supported by a grant from the Smart Family Foundation of New York to JG. CS was supported in part by NIH training grant T32 NS047987. We thank Mike Thayer for helping with the data analysis of the study. JD was supported by the NSF CAREER Award # 1844792 (PI J.E.D.).

Footnotes

The exact wording was:

e.g., 6 Bill Clinton

0 Fred Gruneberg

In other words, the difficulty of the items ranges from easy to impossible.

One of these foils, along with one of the actual terms, had to be removed. El Puente was designated as a foil but is an actual Mayan dig site, and the real term hydroponics was misspelled as ‘hydoponics (sic)’, so both were removed from the analysis.

Specifically, the following instructions were given to participants to explain how to distinguish a solution via insight from one via analysis: “You will decide whether the solution was reached with insight or with analysis. With INSIGHT means you experienced a so-called A-ha! moment and the solution came to mind as a sudden surprise. It won’t be a huge Eureka, just a small surprise and it may be difficult to articulate how you reached the solution. STEP-BY-STEP it means that you reached the solution gradually, part by part. You might have used a deliberate strategy or just trial-and-error and you can report steps. We know it is not always obvious whether you used insight or step-by-step, and you may feel as though you used a mixture of both. But we need you to choose one the best you can, so please choose whichever method your solving process most closely resembles. No solution type is better or worse than the other; there are no right or wrong answers in reporting insight or analysis.” These instructions were similar to those used by Bowden and Jung-Beeman (2003).

Percentage scores were calculated by dividing the number of problems solved correctly, correctly via insight, correctly via step-by-step, incorrectly, incorrectly via insight, and incorrectly via step-by-step by the total number of CRA problems given (60). The averages of these per-person percentages are reported in the text.

Percentages were calculated by dividing the number of CRT problems solved correctly by the total number of CRT problems given (5). The averages of these per-person percentages are reported in the text.

Two participants were excluded since they solved the same number of problems via insight and step-by-step.
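The scoring described in the footnotes above can be sketched in Python as follows. Each percentage is a count divided by the number of problems administered (60 CRA, 5 CRT), and the per-person percentages are then averaged across participants. The grouping rule used here is our reading of the exclusion footnote and is not stated explicitly in the text: each participant is assigned to whichever solution type produced more correct CRA solutions, and ties are excluded. The counts are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

CRA_TOTAL = 60  # CRA problems administered
CRT_TOTAL = 5   # CRT problems administered


@dataclass
class Participant:
    """Hypothetical per-participant counts (illustration only)."""
    cra_insight_correct: int  # CRA problems solved correctly, reported as insight
    cra_step_correct: int     # CRA problems solved correctly, reported as step-by-step
    crt_correct: int          # CRT problems answered correctly


def percent(count: int, total: int) -> float:
    """Share of the administered items as a percentage: count / total * 100."""
    return 100.0 * count / total


def solver_group(p: Participant) -> Optional[str]:
    """Assumed rule: group by the solution type with more correct solutions.

    Returns None for ties, since such participants were excluded.
    """
    if p.cra_insight_correct > p.cra_step_correct:
        return "insight"
    if p.cra_step_correct > p.cra_insight_correct:
        return "step-by-step"
    return None


participants = [
    Participant(cra_insight_correct=30, cra_step_correct=12, crt_correct=4),
    Participant(cra_insight_correct=10, cra_step_correct=20, crt_correct=2),
    Participant(cra_insight_correct=15, cra_step_correct=15, crt_correct=3),
]

# Every correct solution is labeled either insight or step-by-step.
cra_correct_pct = [percent(p.cra_insight_correct + p.cra_step_correct, CRA_TOTAL)
                   for p in participants]
cra_insight_pct = [percent(p.cra_insight_correct, CRA_TOTAL) for p in participants]
crt_correct_pct = [percent(p.crt_correct, CRT_TOTAL) for p in participants]
groups = [solver_group(p) for p in participants]

print(f"mean % CRA correct: {mean(cra_correct_pct):.1f}")
print(f"mean % CRA correct via insight: {mean(cra_insight_pct):.1f}")
print(f"mean % CRT correct: {mean(crt_correct_pct):.1f}")
print("groups:", groups)
```

Averaging per-person percentages weights each participant equally, which matches the footnotes' description of reporting "the averages of these per-person percentages".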

Credit author statement

Carola Salvi: Conceptualization, Methodology, Data curation, Writing-Original draft preparation. Nathaniel Barr: Data interpretation, Writing. Joseph Dunsmoor and Jordan Grafman: Conceptualization, Supervision, Writing, Reviewing, and Editing.

References

- Allcott H, & Gentzkow M (2017). Social Media and Fake News in the 2016 Election. Journal of Economic Perspectives, 31(2), 211–236.

- Bagassi M, & Macchi L (2016). The interpretative function and the emergence of unconscious analytic thought. In Macchi L, Bagassi M, & Viale R (Eds.), Cognitive unconscious and human rationality (pp. 43–76). Cambridge, MA: MIT Press.

- Bagassi M (2019). Insight Problem Solving and Unconscious Analytic Thought. New Lines of Research. Model-Based Reasoning in Science and Technology: Inferential Models for Logic, Language, Cognition, and Computation, 49, 120.

- Baron J, Scott S, Fincher KS, & Metz SE (2015). Why does the Cognitive Reflection Test (sometimes) predict utilitarian moral judgment (and other things)? Journal of Applied Research in Memory and Cognition, 4(3), 265–284.

- Ball LJ, Marsh JE, Litchfield D, Cook RL, & Booth N (2015). When distraction helps: Evidence that concurrent articulation and irrelevant speech can facilitate insight problem solving. Thinking and Reasoning, 21(1), 76–96. 10.1080/13546783.2014.934399

- Ball LJ, & Stevens A (2009). Evidence for a verbally-based analytic component to insight problem solving. Proceedings of the 31st Annual Conference of the Cognitive Science Society, 1060–1065.

- Becker M, Wiedemann G, & Kühn S (2018). Quantifying insightful problem solving: a modified compound remote associates paradigm using lexical priming to parametrically modulate different sources of task difficulty. Psychological Research, 0(0), 1–18. 10.1007/s00426-018-1042-3

- Benedek M, Stoiser R, Walcher S, & Körner C (2017). Eye Behavior Associated with Internally versus Externally Directed Cognition. Frontiers in Psychology, 8, 1092. 10.3389/fpsyg.2017.01092

- Benedek M, & Jauk E (2018). Spontaneous and controlled processes in creative cognition. In The Oxford handbook of spontaneous thought: Mind-wandering, creativity, and dreaming (p. 285).

- Bowden EM, & Jung-Beeman M (2003). Normative data for 144 compound remote associate problems. Behavior Research Methods, Instruments, & Computers, 35(4), 634–639.

- Bowden EM, & Jung-Beeman M (2007). Methods for investigating the neural components of insight. Methods, 42(1), 87–99.

- Bowden EM, Jung-Beeman M, Fleck JI, & Kounios J (2005). New approaches to demystifying insight. Trends in Cognitive Sciences, 9(7), 322–328. 10.1016/j.tics.2005.05.012

- Brandt MJ, Evans AM, & Crawford JT (2015). The unthinking or confident extremist? Political extremists are more likely than moderates to reject experimenter-generated anchors. Psychological Science, 26, 189–202.

- Bronstein MV, Pennycook G, Bear A, Rand DG, & Cannon TD (2019). Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. Journal of Applied Research in Memory and Cognition, 8(1), 108–117.

- Chandler J, Mueller P, & Paolacci G (2014). Non-naivete among Amazon Mechanical Turk workers: Consequences and solutions for behavioral researchers. Behavior Research Methods, 46(1), 112–130.

- Cohen-Zimerman S, Salvi C, & Grafman J (2020). Patients-based approaches to understanding intelligence and problem-solving. The Cambridge Handbook of Intelligence and Cognitive Neuroscience.

- Cristofori I, Salvi C, Beeman M, & Grafman J (2018). The effects of expected reward on creative problem solving. Cognitive, Affective, & Behavioral Neuroscience, 18(5), 925–931. 10.3758/s13415-018-0613-5

- Danek AH, Fraps T, von Müller A, Grothe B, & Öllinger M (2014). Working wonders? Investigating insight with magic tricks. Cognition, 130(2), 174–185. 10.1016/j.cognition.2013.11.003

- Danek AH, & Salvi C (2018). Moment of Truth: Why Aha! Experiences are Correct. Journal of Creative Behavior, 54(2), 484–486. 10.1002/jocb.380

- Danek AH, & Wiley J (2017). What about False Insights? Deconstructing the Aha! Experience along Its Multiple Dimensions for Correct and Incorrect Solutions Separately. Frontiers in Psychology, 7, 1–14. 10.3389/fpsyg.2016.02077

- Dominowski RL, & Dallob P (1995). Insight and Problem Solving. In Sternberg RJ & Davidson JE (Eds.), The Nature of Insight (pp. 273–278). MIT Press.

- Frederick S (2005). Cognitive Reflection and Decision Making. Journal of Economic Perspectives, 19(4), 25–42. 10.1257/089533005775196732

- Gerosa T, Gui M, Hargittai E, & Nguyen M (2021). (Mis)informed During COVID-19: How Education Level and Information Sources Contribute to Knowledge Gaps. International Journal of Communication, 15, 22.

- Gozzi M, Zamboni G, Krueger F, & Grafman J (2010). Interest in politics modulates neural activity in the amygdala and ventral striatum. Human Brain Mapping, 31(11), 1763–1771. 10.1002/hbm.20976

- Hamann S (2001). Cognitive and neural mechanisms of emotional memory. Trends in Cognitive Sciences. 10.1016/S1364-6613(00)01707-1

- Hills TT (2018). The Dark Side of Information Proliferation. Perspectives on Psychological Science. 10.1177/1745691618803647

- Jung-Beeman M, Bowden EM, Haberman J, Frymiare JL, Arambel-Liu S, Greenblatt R, Reber PJ, & Kounios J (2004a). Neural activity when people solve verbal problems with insight. PLoS Biology, 2(4), 500–510. 10.1371/journal.pbio.0020097

- Kahneman D (2011). Thinking, Fast and Slow. New York: Farrar, Straus and Giroux.

- Konishi M, Brown K, Battaglini L, & Smallwood J (2017). When attention wanders: Pupillometric signatures of fluctuations in external attention. Cognition, 168, 16–26. 10.1016/j.cognition.2017.06.006

- Kounios J, & Beeman M (2014). The Cognitive Neuroscience of Insight. Annual Review of Psychology. 10.1146/annurev-psych-010213-115154

- Lammers J, Koch A, Conway P, & Brandt MJ (2017). The Political Domain Appears Simpler to the Politically Extreme Than to Political Moderates. Social Psychological and Personality Science. 10.1177/1948550616678456

- Laukkonen RE, Webb ME, Salvi C, Schooler JW, & Tangen JM (2020). The Eureka heuristic: Relying on insight to appraise the quality of ideas. Preprint, February, 1–44. 10.17605/OSF.IO/EZ3TN

- Laukkonen R, Ingledew D, Schooler J, & Tangen J (2018). The Phenomenology of Truth: The insight experience as a heuristic in contexts of uncertainty. August, 1–22. 10.17605/OSF.IO/9W56M

- Laukkonen RE, Ingledew DJ, Grimmer HJ, Schooler JW, & Tangen JM (2021). Getting a grip on insight: real-time and embodied Aha experiences predict correct solutions. Cognition and Emotion, 35(5), 918–935.

- Lazer DMJ, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, Schudson M, Sloman SA, Sunstein CR, Thorson EA, Watts DJ, & Zittrain JL (2018). The science of fake news: Addressing fake news requires a multidisciplinary effort. Science, 359(6380), 1094–1096. 10.1126/science.aao2998

- Lin H, Pennycook G, & Rand DG (2022, February 3). Thinking more or thinking differently? Using drift-diffusion modeling to illuminate why accuracy prompts decrease misinformation sharing. Retrieved from psyarxiv.com/kf8m

- Lubin G (2012). A simple logic question that most Harvard students get wrong. Business Insider. Retrieved from: http://www.businessinsider.com/question-that-harvard-students-get-wrong-2012-12.

- Lutzke L, Drummond C, Slovic P, & Árvai J (2019). Priming critical thinking: Simple interventions limit the influence of fake news about climate change on Facebook. Global Environmental Change, 58, 101964.

- Macchi L, & Bagassi M (2012). Intuitive and analytical processes in insight problem solving: a psycho-rhetorical approach to the study of reasoning. Mind & Society, 11(1), 53–67. 10.1007/s11299-012-0103-3

- Macchi L, & Bagassi M (2014). The interpretative heuristic in insight problem solving. Mind & Society, 13(1), 97–108.

- Macchi L, Cucchiarini V, Caravona L, & Bagassi M (2019). Insight Problem Solving and Unconscious Analytic Thought. New Lines of Research. In Nepomuceno-Fernández Á, Magnani L, Salguero-Lamillar F, Barés-Gómez C, & Fontaine M (Eds.), Model-Based Reasoning in Science and Technology. MBR 2018. Studies in Applied Philosophy, Epistemology and Rational Ethics, vol 49. Springer, Cham. 10.1007/978-3-030-32722-4_8

- Martel C, Pennycook G, & Rand DG (2020). Reliance on emotion promotes belief in fake news. Cognitive Research: Principles and Implications, 5(1), 1–20.

- Metcalfe J (1986). Feeling of knowing in memory and problem solving. Journal of Experimental Psychology: Learning, Memory, and Cognition, 12(2), 288–294. 10.1037/0278-7393.12.2.288

- Novick LR, & Sherman SJ (2003). On the nature of insight solutions: Evidence from skill differences in anagram solution. The Quarterly Journal of Experimental Psychology Section A, 56(2), 351–382.

- Oh Y, Chesebrough C, Erickson B, Zhang F, & Kounios J (2020). An insight-related neural reward signal. NeuroImage, 116757. 10.1016/j.neuroimage.2020.116757

- Ohlsson S (1992). Information-processing explanations of insight and related phenomena. Advances in the Psychology of Thinking, 1, 1–44.

- Paulhus DL, Harms PD, Bruce MN, & Lysy DC (2003). The Over-Claiming Technique: Measuring Self-Enhancement Independent of Ability. Journal of Personality and Social Psychology, 84(4), 890–904. 10.1037/0022-3514.84.4.890

- Pennycook G, Cannon TD, & Rand DG (2018). Prior exposure increases perceived accuracy of fake news. Journal of Experimental Psychology: General. 10.1037/xge0000465

- Pennycook G, Epstein Z, Mosleh M, Arechar AA, Eckles D, & Rand DG (2021). Shifting attention to accuracy can reduce misinformation online. Nature. 10.1038/s41586-021-03344-2

- Pennycook G, & Rand DG (2019a). Cognitive reflection and the 2016 US Presidential election. Personality and Social Psychology Bulletin.

- Pennycook G, & Rand DG (2019b). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. 10.1016/j.cognition.2018.06.011

- Pennycook G, & Rand DG (2019c). Who Falls for Fake News? The Roles of Analytic Thinking, Motivated Reasoning, Political Ideology, and Bullshit Receptivity. SSRN Electronic Journal. 10.2139/ssrn.3023545

- Phillips DL, & Clancy KJ (1972). Some effects of “social desirability” in survey studies. American journal of sociology, 77(5), 921–940. [Google Scholar]

- Postrel A (2006). Would you take a bird in the hand, or a 75% chance of two in the bush? The New York Times. Retrieved from: http://www.nytimes.com/2006/01/26/business/26scene.html?pagewanted=print&_r=0. [Google Scholar]

- Robinson JP, Shaver PR, & Wrightsman LS (1999). Measures of politicalattitudes (Vol.2). AcademicPress. [Google Scholar]

- Raoelison M, Thompson VA, De Neys W. The smart intuitor: Cognitive capacity predicts intuitive rather than deliberate thinking. Cognition. 2020. Nov; 204:104381. doi: 10.1016/j.cognition.2020.104381. Epub 2020 Jul 1. [DOI] [PubMed] [Google Scholar]

- Salvi C, Markers of insight. (In press). Routledge International Handbook of Creative Cognition. 10.31234/osf.io/73y5khttps://psyarxiv.com/73y5k [DOI] [Google Scholar]

- Salvi C, Beeman M, Bikson M, McKinley R, & Grafman J (2020). TDCS to the right anterior temporal lobe facilitates insight problem-solving. Scientific Reports, 10(1), 946. 10.1038/s41598-020-57724-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, & Bowden E (2020). The relation between state and trait risk taking and problem-solving. Psychological Research, 0(0), 0. 10.1007/s00426-019-01152-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, & Bowden EM (2016). Looking for Creativity: Where Do We Look When We Look for New Ideas? Frontiers in Psychology. 10.3389/fpsyg.2016.00161 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, Bricolo E, Franconeri S, Kounios J, & Beeman M (2015a). Sudden Insight Is Associated with Shutting Out Visual Inputs. Psychonomic Bulletin & Review. 10.3758/s13423-015-0845-0 [DOI] [PubMed] [Google Scholar]

- Salvi C, Bricolo E, Kounios J, Bowden E, & Beeman M (2016). Insight solutions are correct more often than analytic solutions. Thinking & Reasoning, 22(4), 443–460. 10.1080/13546783.2016.1141798 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, Costantini G, Bricolo E, Perugini M, & Beeman M (2015). Validation of Italian rebus puzzles and compound remote associate problems. Behavior Research Methods. 10.3758/s13428-015-0597-9 [DOI] [PubMed] [Google Scholar]

- Salvi C, Costantini G, Pace A, & Palmiero M (2018). Validation of the Italian Remote Associate Test. The Journal of Creative Behavior, 0, 1–13. 10.1002/jocb.345 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, Cristofori I, Grafman J, & Beeman M (2016a). The politics of insight. Quarterly Journal of Experimental Psychology, 69(6), 1064–1072. 10.1080/17470218.2015.1136338 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salvi C, Iannello P, Cancer A, MacClay M, Rago S, Dunsmoor JE, & Antonietti A (2021). Going viral: how fear, socio-cognitive polarization and problem-solving influence fake news detection and proliferation during COVID-19 pandemic. Frontiers in Communication, 5(January), 1–16. 10.3389/fcomm.2020.562588 [DOI] [Google Scholar]

- Salvi C, Simoncini C, Grafman J, & Beeman M (2020). Oculometric signature of switch into awareness? Pupil size predicts sudden insight whereas microsaccades predict problem-solving via analysis. NeuroImage, 116933. 10.1016/j.neuroimage.2020.116933

- Santarnecchi E, Sprugnoli G, Bricolo E, Costantini G, Liew SL, Musaeus CS, Salvi C, Pascual-Leone A, Rossi A, & Rossi S (n.d.). Gamma tACS over the temporal lobe increases the occurrence of Eureka! moments. Scientific Reports. 10.1038/s41598-019-42192-z

- Schomaker J, & Meeter M (2015). Short- and long-lasting consequences of novelty, deviance and surprise on brain and cognition. Neuroscience and Biobehavioral Reviews. 10.1016/j.neubiorev.2015.05.002

- Schooler JW, & Melcher J (1995). The ineffability of insight. In The Creative Cognition Approach (pp. 97–133).

- Schooler JW, Smallwood J, Christoff K, Handy TC, Reichle ED, & Sayette MA (2011). Meta-awareness, perceptual decoupling and the wandering mind. Trends in Cognitive Sciences, 15(7), 319–326. 10.1016/j.tics.2011.05.006

- Shen W, Tong Y, Yuan Y, Zhan H, Liu C, & Luo J (2018). Feeling the Insight: Uncovering Somatic Markers of the “aha” Experience. Applied Psychophysiology and Biofeedback, 43(1), 13–21. 10.1007/s10484-017-9381-1

- Shen W, Yuan Y, Liu C, & Luo J (2016). In search of the ‘Aha!’ experience: Elucidating the emotionality of insight problem-solving. British Journal of Psychology, 107, 281–298. 10.1111/bjop.12142

- Shen W, Yuan Y, Zao Y, Xiaojiang Z, Chang L, Luo J, Jinxiu L, & Lili F (2018). Defining Insight: A Study Examining Implicit Theories of Insight Experience. Psychology of Aesthetics, Creativity, and the Arts, 12(3), 317–327. 10.1037/aca0000138

- Smith RW, & Kounios J (1996). Sudden insight: All-or-none processing revealed by speed-accuracy decomposition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22(6), 1443–1462.

- Smith SM (1996). Getting Into and Out of Mental Ruts: A Theory of Fixation, Incubation, and Insight. In Sternberg RJ & Davidson JE (Eds.), The Nature of Insight (pp. 229–251). The MIT Press.

- Smith SM, & Blankenship SE (1989). Incubation effects. Bulletin of the Psychonomic Society. 10.3758/BF03334612

- Smith SM, & Blankenship SE (1991). Incubation and the persistence of fixation in problem solving. American Journal of Psychology, 104(1), 61–87.

- Sprugnoli G, Rossi S, Liew SL, Bricolo E, Costantini G, Salvi C, … & Santarnecchi E (2021). Enhancement of semantic integration reasoning by tRNS. Cognitive, Affective, & Behavioral Neuroscience, 1–11.

- Sterling J, Jost JT, & Pennycook G (2016). Are neoliberals more susceptible to bullshit? Judgment and Decision Making, 11(4), 352–360.

- Storm BC, & Angello G (2010). Overcoming Fixation. Psychological Science, 21(9), 1263–1265. 10.1177/0956797610379864

- Subramaniam K, Kounios J, Parrish TB, & Jung-Beeman M (2009). A brain mechanism for facilitation of insight by positive affect. Journal of Cognitive Neuroscience, 21(3), 415–432. 10.1162/jocn.2009.21057

- Thomson KS, & Oppenheimer DM (2016). Investigating an alternate form of the cognitive reflection test. Judgment and Decision Making, 11(1), 99–113.

- Tik M, Sladky S, Luft C, Willinger D, Hoffmann A, Banissy M, Bhattacharya J, & Windischberger C (2018). Ultra-high-field fMRI insights on insight: Neural correlates of the Aha!-moment. Human Brain Mapping, 1–12.

- Toplak ME, West RF, & Stanovich KE (2014). Assessing miserly information processing: An expansion of the Cognitive Reflection Test. Thinking & Reasoning, 20(2), 147–168.

- van Prooijen JW, Krouwel APM, Boiten M, & Eendebak L (2015). Fear Among the Extremes: How Political Ideology Predicts Negative Emotions and Outgroup Derogation. Personality and Social Psychology Bulletin, 41(4), 485–497. 10.1177/0146167215569706

- Vosoughi S, Roy D, & Aral S (2018). The spread of true and false news online. Science. 10.1126/science.aap9559

- Webb ME, Little DR, & Cropper SJ (2017). Once more with feeling: Normative data for the aha experience in insight and noninsight problems. Behavior Research Methods, 1–22. 10.3758/s13428-017-0972-9

- Zmigrod L (2020). The role of cognitive rigidity in political ideologies: theory, evidence, and future directions. Current Opinion in Behavioral Sciences, 34, 34–39. 10.1016/j.cobeha.2019.10.016

- Zmigrod L, Rentfrow PJ, & Robbins TW (2019). The Partisan Mind: Is Extreme Political Partisanship Related to Cognitive Inflexibility? Journal of Experimental Psychology: General.
