Abstract

We propose a prenet (product-based elastic net), a novel penalization method for factor analysis models. The penalty is based on the product of a pair of elements in each row of the loading matrix. The prenet not only shrinks some of the factor loadings toward exactly zero but also enhances the simplicity of the loading matrix, which plays an important role in the interpretation of the common factors. In particular, with a large amount of prenet penalization, the estimated loading matrix possesses a perfect simple structure, which is known as a desirable structure in terms of the simplicity of the loading matrix. Furthermore, the perfect simple structure estimation via the proposed penalization turns out to be a generalization of the k-means clustering of variables. On the other hand, a mild amount of the penalization approximates a loading matrix estimated by the quartimin rotation, one of the most commonly used oblique rotation techniques. Simulation studies compare the performance of our proposed penalization with that of existing methods under a variety of settings. The usefulness of the perfect simple structure estimation via our proposed procedure is presented through various real data applications.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11336-022-09868-4.

Keywords: multivariate analysis, quartimin rotation, penalized maximum likelihood estimation, perfect simple structure, sparse estimation

Factor analysis investigates the correlation structure of high-dimensional observed variables by the construction of a small number of latent variables called common factors. Factor analysis can be considered as a soft clustering of variables, in which each factor corresponds to a cluster and observed variables are categorized into overlapping clusters. For interpretation purposes, it is desirable for the observed variables to be well-clustered (Yamamoto and Jennrich, 2013) or the loading matrix to be simple (Thurstone, 1947). In particular, the perfect simple structure (e.g., Bernaards and Jennrich, 2003; Jennrich, 2004), wherein each row of the loading matrix has at most one nonzero element, provides a non-overlapping clustering of variables in the sense that variables that correspond to nonzero elements of the jth column of the loading matrix belong to the jth cluster.

Conventionally, the well-clustered or simple structure of the loading matrix is found by rotation techniques. A number of rotation techniques have been proposed in the literature; for example, quartimin rotation (Carroll, 1953), varimax rotation (Kaiser, 1958), promax rotation (Hendrickson and White, 1964), simplimax rotation (Kiers, 1994), geomin rotation (Yates, 1987), and component loss criterion (Jennrich, 2004, 2006). The literature review of the rotation techniques is described in Browne 2001. The main purpose of the factor rotation is to get a good solution that is as simple as possible. See, e.g., Thurstone (1935), Carroll (1953), Neuhaus and Wrigley (1954), Kaiser (1974), Bernaards and Jennrich (2003).

The problem with the rotation technique is that it cannot produce a sufficiently sparse solution in some cases (Hirose and Yamamoto, 2015), because the loading matrix must be found among a set of unpenalized maximum likelihood estimates. To obtain sparser solutions than the factor rotation, we employ a penalization method. It is shown that the penalization is a generalization of the rotation techniques and can produce sparser solutions than the rotation methods (Hirose and Yamamoto, 2015). Typically, many researchers use the -type penalization, such as the lasso (Tibshirani, 1996), the adaptive lasso (Zou, 2006), and the minimax concave penalty (MCP) (Zhang, 2010); for example, Choi et al. (2011), Ning and Georgiou (2011), Srivastava et al. (2017), Hirose and Yamamoto (2015), Trendafilov et al. (2017), Hui et al. (2018). The penalization shrinks some of the parameters toward exactly zero; in other words, parameters that need not to be modeled are automatically disregarded. Furthermore, the degrees of sparsity are freely adjusted by changing the value of a regularization parameter. The penalization provides a good estimation accuracy, such as consistency and model selection consistency in high dimension (e.g., Zhao and Yu 2007; Wainwright, 2009; Bhlmann and van de Geer, 2011).

As described above, it is important to obtain a “good” loading matrix in the sense of simplicity and thus interpretability in the exploratory factor analysis. Although the penalization achieves the sparse estimation, the estimated loading matrix is not guaranteed to possess an interpretable structure. For example, a great amount of penalization leads to a zero matrix, which does not make sense from an interpretation viewpoint. Thus, the penalization cannot produce an interpretable loading matrix with a sufficient large regularization parameter. Even if a small value of regularization parameter is selected, the penalization cannot often approximate a true loading matrix when it is not sufficiently sparse; with the lasso, some of the factor loadings whose true values are close —but not very close—to zero are estimated as zero values, and this misspecification can often cause a significant negative effect on the estimation of other factor loadings (Hirose and Yamamoto, 2014). Therefore, it is important to estimate a loading matrix that is not only sparse but also interpretable. To achieve this, we need a different type of penalty.

In this study, we propose a prenet (product-based elastic net) penalty, which is based on the product of a pair of parameters in each row of the loading matrix. A remarkable feature of the prenet is that a large amount of penalization leads to the perfect simple structure. The existing -type penalization methods do not have that significant property. Furthermore, the perfect simple structure estimation via the prenet penalty is shown to be a generalization of the k-means clustering of variables. On the other hand, with a mild amount of prenet penalization, the estimated loading matrix is approximated by that obtained using the quartimin rotation, a widely used oblique rotation method. The quartimin criterion can often estimate a non-sparse loading matrix appropriately, so that the problem of the lasso-type penalization mentioned above is addressed. We employ the generalized expectation and maximization (EM) algorithm and the coordinate descent algorithm (e.g., Friedman et al., 2010) to obtain the estimator. The proposed algorithm monotonically decreases the objective function at each iteration.

In our proposed procedure, the regularization parameter controls the degrees of simplicity; the larger the regularization parameter is, the simpler the loading matrix is. The advantage of our proposed procedure is that we can change the degrees of simplicity according to the purpose of the analysis. This study focus on two different purposes of the analysis: (i) to find a loading matrix that fits the data and also is simple as much as possible and (ii) to conduct cluster analysis by estimating a perfect simple structure. The regularization parameter selection procedure differs depending on these two purposes. To achieve the purpose (i), we select the regularization parameter by the Akaike information criterion (AIC; Akaike, 1973; Zou et al., 2007) or the Bayesian information criterion (BIC; Schwarz, 1978; Zou et al., 2007). The purpose (ii) is attained by setting the regularization parameter to be infinity.

We conduct the Monte Carlo simulations to compare the performance of our proposed method with that of -type penalization and conventional rotation techniques. The Monte Carlo simulations investigate the performance in terms of both (i) and (ii); investigations of (i) and (ii) are detailed in Sects. 5.2 and 5.3, respectively. Our proposed method is applied to data from big five personality traits to study the performance for various sample sizes and impact of the regularization parameter on the accuracy. The analysis of big five personality traits aims at purpose (i). We also present the analyses of fMRI and electricity demand data, intended to purpose both (i) and (ii), in Section S2 of the supplemental material.

The rest of this article is organized as follows. Section 1 describes the estimation of the factor analysis model via penalization. In Sect. 2, we introduce the prenet penalty. Section 3 describes several properties of the prenet penalty, including its relationship with the quartimin criterion. Section 4 presents an estimation algorithm to obtain the prenet solutions. In Sect. 5, we conduct a Monte Carlo simulation to investigate the performance of the prenet penalization. Section 6 illustrates the usefulness of our proposed procedure through real data analysis. Extension and future works are discussed in Sect. 7. Some technical proofs and detail of our algorithm are shown in “Appendix.” Supplemental materials include further numerical and theoretical investigation, including numerical analyses of resting-state fMRI and electricity demand data.

Estimation of Factor Analysis Model via Penalization

Let be a p-dimensional observed random vector with mean vector and variance–covariance matrix . The factor analysis model is

| 1 |

where is a loading matrix, is a random vector of common factors, and is a random vector of unique factors. It is assumed that , , , , and , where is an factor correlation matrix, and is a diagonal matrix (i.e., strict factor model). The diagonal elements of are referred to as unique variances. Under these assumptions, the variance–covariance matrix of observed random vector is .

In many cases, the orthogonal factor model (i.e., ) is used but is often oversimplified. Here, is an identity matrix of order m. This paper covers the oblique factor model, which allows a more realistic estimation of latent factors than the orthogonal factor model in many cases (e.g., Fabrigar et al., 1999; Sass and Schmitt, 2010; Schmitt and Sass, 2011).

Let be n observations and be the corresponding sample covariance matrix. Let be a parameter vector, where vech is a vector that consists of a lower triangular matrix without diagonal elements. We estimate the model parameter by minimizing the penalized loss function expressed as

| 2 |

where is a loss function, is a penalty function, and is a regularization parameter. As a loss function, we adopt the maximum likelihood discrepancy function

| 3 |

where DF is an abbreviation for discrepancy function. Assume that the observations are drawn from the p-dimensional normal population with . The minimizer of is the maximum likelihood estimate. It is shown that for any , and if and only if when and are positive definite matrices. It is worth noting that our proposed penalty, described in Sect. 2, can be directly applied to many other loss functions, including a quadratic loss used for generalized least squares (Jöreskog and Goldberger, 1971).

When , the model has a rotational indeterminacy; both and generate the same covariance matrix , where is an arbitrary orthogonal matrix. Thus, when , the solution that minimizes (2) is not uniquely determined. However, when , the solution may be uniquely determined except for the sign and permutation of columns of the loading matrix when an appropriate penalty is chosen.

Prenet Penalty

Definition

We propose a prenet penalty

| 4 |

where is a tuning parameter. The most significant feature of the prenet penalty is that it is based on the product of a pair of parameters.

When , the prenet penalty is equivalent to the quartimin criterion (Carroll, 1953), a widely used oblique rotation criterion in factor rotation. As is the case with the quartimin rotation, the prenet penalty in (4) eliminates the rotational indeterminacy except for the sign and permutation of columns of the loading matrix and contributes significantly to the estimation of the simplicity of the loading matrix. The prenet penalty includes products of absolute values of factor loadings, producing factor loadings that are exactly zero.

Comparison with the Elastic Net Penalty

The prenet penalty is similar to the elastic net penalty (Zou and Hastie, 2005)

| 5 |

which is a hybrid of the lasso and the ridge penalties. Although the elastic net penalty is similar to the prenet penalty, there is a fundamental difference between these two penalties; the elastic net is constructed by the sum of the elements of parameter vector, whereas the prenet is based on the product of a pair of parameters.

Figure 1 shows the penalty functions of the prenet () and the elastic net () when , 0.5, 0.9. Clearly, the prenet penalty is a nonconvex function. A significant difference between the prenet and the elastic net is that although the prenet penalty becomes zero when either x or y attains zero, the elastic net penalty becomes zero only when both and . Therefore, for a two-factor model, the estimate of either or can be zero with the prenet penalization, leading to a perfect simple structure. On the other hand, the elastic net tends to produce estimates in which both and are small.

Fig. 1.

Penalty functions of the prenet and the elastic net with various .

The penalty functions in Fig. 1 also show that the prenet penalty becomes smooth as decreases. Thus, the value of controls the degrees of sparsity; the larger the value of , the sparser the estimate of the loading matrix. With an appropriate value of , the prenet penalty enhances both simplicity and sparsity of the loading matrix. Further investigation into the estimator against the value of is presented in Sect. 5.

Properties of the Prenet Penalty

Perfect Simple Structure

The model (1) does not impose an orthogonal constraint on the loading matrix . For unconstrained , most existing penalties, such as the lasso, shrink all coefficients toward zero when the tuning parameter is sufficiently large; we usually obtain when . However, the following proposition shows that the prenet penalty does not necessarily shrink all of the elements toward zero even when is sufficiently large.

Proposition 1

Assume that we use the prenet penalty with . As , the estimated loading matrix possesses the perfect simple structure, that is, each row has at most one nonzero element.

Proof

As , must satisfy . Otherwise, the second term of (2) diverges. When , for any . Therefore, the ith row of has at most one nonzero element.

The perfect simple structure is known as a desirable property in the literature in factor analysis because it is easy to interpret the estimated loading matrix (e.g., Bernaards and Jennrich, 2003). When is small, the estimated loading matrix can be away from the perfect simple structure but the goodness of fit to the model is improved.

Remark 1

For , the consistency of the loading matrix is shown when the true loading matrix possesses the perfect simple structure. For simplicity, we consider the orthogonal case. Assume that , where the true possesses the perfect simple structure. As , the sample covariance matrix converges to the true covariance matrix almost surely; thus, the loss function (3) is minimized when . When , must be the perfect simple structure. Therefore, we should consider the following problem:

The solution to the above problem is except for the sign and permutation of columns of the loading matrix if an identifiability condition for an orthogonal model (e.g., Theorem 5.1 in Anderson and Rubin, 1956) is satisfied.

Relationship with k-Means Clustering of Variables

The perfect simple structure corresponds to variables clustering, that is, variables that correspond to nonzero elements of the jth column of the loading matrix belong to the jth cluster. In this subsection, we investigate the relationship between the prenet with and the k-means clustering of variables, one of the most popular cluster analyses.

Let be an data matrix. can be expressed as , where is the ith column vector of . We consider the problem of the variables clustering of by the k-means. Let be a subset of indices of variables that belong to the jth cluster. The objective function of the k-means is

| 6 |

where , , and recall that . Let be a indicator variables matrix

| 7 |

Using the fact that , the k-means clustering of variables using (6) is equivalent to (Ding et al., 2005)

| 8 |

where denotes the Frobenius norm. We consider slightly modifying the condition on in (7) to

| 9 |

The modified k-means problem is then given as

| 10 |

The condition (9) is milder than (7); if satisfies (7), we obtain (9). The reverse does not always hold; with (9), the nonzero elements for each column do not have to be equal. Therefore, the modified k-means in (10) may capture a more complex structure than the original k-means.

Proposition 2

Assume that , , and is given. Suppose that satisfies . The prenet solution with is then obtained by (10).

Proof

The proof appears in Appendix A.1.

The proposition 2 shows that the prenet solution with is a generalization of the problem (10). As mentioned above, the problem (10) is a generalization of the k-means problem in (8). Therefore, the perfect simple structure estimation via the prenet is a generalization of the k-means clustering of variables. We remark that the condition in Proposition 2 implies the probabilistic principal component analysis (probabilistic PCA; Tipping and Bishop, 1999); the penalized probabilistic PCA via the prenet is also a generalization of the k-means clustering of variables.

Relationship with Quartimin Rotation

As described in Sect. 2, the prenet penalty is a generalization of the quartimin criterion (Carroll, 1953); setting to the prenet penalty in (4) leads to the quartimin criterion

The quartimin criterion is typically used in the factor rotation. The solution of quartimin rotation method, say , is obtained by two-step procedure. First, we calculate an unpenalized estimate, denoted by . The estimate , that satisfies , is not unique due to the rotational indeterminacy. The second step is the minimization of the quartimin criterion with a restricted parameter space . Hirose and Yamamoto (2015) showed that the solution of the quartimin rotation, , can be obtained by

| 11 |

under the condition that the unpenalized estimate of loading matrix is unique if the indeterminacy of the rotation in is excluded. It is not easy to check this condition, but several necessary conditions of the identifiability for the orthogonal model are provided (e.g., Theorem 5.1 in Anderson and Rubin, 1956.)

Now, we show a basic asymptotic result of the prenet solution, from which we can see that the prenet solution is a generalization of the quartimin rotation. Let be a compact parameter space with distance d and be a probability space. Suppose that for any and any , we have , where is a set of orthonormal matrices. Let denote independent -valued random variables with the common population distribution . Now, it is required that we can rewrite the empirical loss function and the true loss function as and , respectively. Let denote an arbitrary measurable prenet estimator which satisfies .

Condition 3.1

fulfills the following conditions:

For each , function on is continuous.

There exists a -integrable function such that for all and for all .

Since is the discrepancy function in Eq. (3), becomes a logarithm of density function of normal distribution; in this case, Condition 3.1 is satisfied. The following proposition shows that the prenet estimator converges almost surely to a true parameter which minimizes the quartimin criterion when as .

Proposition 3

Assume that Condition 3.1 is satisfied. We denote by a set of true solutions of the following quartimin problem.

Let () be a sequence that satisfies and . Let the prenet solution with and be . Then we obtain

where .

Proof

The proof is given in Appendix A.2.

Remark 3.1

Proposition 3 uses a set of true solutions instead of one true solution . This is because even if the quartimin solution does not have a rotational indeterminacy, it still has an indeterminacy with respect to sign and permutation of columns of the loading matrix.

Remark 3.2

The Geomin criterion (Yates, 1987) often produces a loading matrix similar to that obtained by the Quartimin criterion (Asparouhov and Muthén 2009). For the Geomin criterion, we add a small number to the loadings to address the identifiability problem (Hattori et al., 2017). Meanwhile, the prenet does not suffer from such a problem. The detailed discussion is described in Section S3 in the supplemental material.

Algorithm

It is well known that the solutions estimated by the lasso-type penalization methods are not usually expressed in a closed form because the penalty term includes an indifferentiable function. As the objective function of the prenet is nonconvex, it is not easy to construct an efficient algorithm to obtain a global minimum. Here, we use the generalized EM algorithm, in which the latent factors are considered to be missing values. The complete-data log-likelihood function is increased with the use of the coordinate descent algorithm (Friedman et al., 2010), which is commonly used in the lasso-type penalization. Although our proposed algorithm is not guaranteed to attain the global minimum, our algorithm decreases the objective function at each step. The update equation of the algorithm and its complexity are presented in Appendix B.

Efficient Algorithm for Sufficiently Large

The prenet tends to be multimodal for large as is the case with the k-means algorithm. Therefore, we prepare many initial values, estimate the solutions for each initial value, and select a solution that minimizes the penalized loss function. In this case, it seems that we require heavy computational loads. However, we can construct an efficient algorithm for a sufficiently large .

For sufficiently large , the ith column of loading matrix has at most one nonzero element, denoted by . With the EM algorithm, we can easily find the location of the nonzero parameter when the current value of the parameter is given. Assume that the (i, j)th element of the loading matrix is nonzero and the (i, k)th elements () are zero. Because the penalty function attains zero for sufficiently large , it is sufficient to minimize the following function.

| 12 |

The minimizer is easily obtained by

| 13 |

Substituting (13) into (12) gives us . Therefore, the index j that minimizes the function is

and is updated as and for any k j.

Selection of the Maximum Value of

The value of , which is the minimum value of that produces the perfect simple structure, is easily obtained using given by (13). Assume that and (). Using the update equation of in (A.10) and the soft thresholding function in (A.12) of Appendix A, we show that the regularization parameter must satisfy the following inequality to ensure that is estimated to be zero.

Thus, the value of is

where .

Estimation of the Entire Path of Solutions

The entire path of solutions can be produced with the grid of increasing values . Here, is (5.2), and , where is a small value such as 0.001. The term allows us to estimate a variety of models even if is small.

The entire solution path can be made using a decreasing sequence , starting with . The proposed algorithm at does not always converge to the global minimum, so that we prepare many initial values, estimate solutions for each initial value with the use of the efficient algorithm described in Sect. 4.1, and select a solution that minimizes the penalized log-likelihood function. We can use the warm start defined as follows: the solution at is computed using the solution at . The warm start leads to improved and smoother objective value surfaces (Mazumder et al., 2011).

One may use the warm start with increasing ; that is, the solution with is obtained by the rotation technique with MLE, and then we gradually increase , using the solution from the previous step. However, the decreasing sequence of has a significant advantage over the increasing sequence; the decreasing sequence allows the application to the case. With an increasing order, the solution with (MLE) is not available, and then the entire solution cannot be produced. Therefore, we adopt the warm start with decreasing sequence of instead of an increasing sequence.

Another method to estimate the entire solution is to use the cold start with multiple random starts. Although the cold start does not always produce a smooth estimate as a function of , it can sometimes find a better solution than the warm start when the loss function has multiple local minima. However, the cold start often requires heavier computational loads than the warm start.

When is extremely small, the loss function becomes nearly flat due to rotational indeterminacy. However, in our experience, our proposed algorithm generally produces a smooth and stable estimate when the warm start is adopted. Even when the cold start is used, the estimate can often be stable for large sample sizes when is not extremely but sufficiently small, such as . However, when , the maximum likelihood estimate cannot be obtained; therefore, the cold start often produces an unstable estimate with small .

Selection of the Regularization Parameter

The estimate of the loading matrix depends on the regularization parameter . As described in the Introduction, this study focus on two different purposes of the analysis: (i) exploratory factor analysis and (ii) clustering of variables. When the purpose of the analysis is (ii), we simply set to achieve the perfect simple structure estimation. When the purpose of the analysis is (i), is selected by the AIC or the BIC (Zou et al., 2007);

where is the number of nonzero parameters.

Our algorithm sometimes produces a loading matrix some of whose columns are zero vectors. In this case, the number of factors may be smaller than expected. The selection of the number of factors via the regularization is achieved by taking advantage of the zero column vectors estimation (Caner and Han, 2014; Hirose and Yamamoto, 2015).

Monte Carlo Simulations

The performance of our proposed method is investigated through Monte Carlo simulations. The prenet penalization has two different purposes of analysis: clustering of variables and exploratory factor analysis. In this section, we investigate the performance in terms of both purposes. The comparison of various exploratory factor analysis methods is described in Sect. 5.2, and the investigation of clustering of variables is presented in Sect. 5.3.

Simulation Models

In this simulation study, we use three simulation models as below.

Model (A):

where is a 25-dimensional vector with each element being 1.

Model (B):

The size of the loading matrix of Model (B) is the same as that of Model (A), and the nonzero factor loadings share the same values. However, all zero elements in Model (A) are replaced by small random numbers from .

Model (C):

The simulation is conducted for both orthogonal and oblique models on (A) and an orthogonal model on (B) – (C). For Model (A), we write the orthogonal and oblique models as “Model (A-ORT)” and “Model (A-OBL),” respectively. Here, “ORT” and “OBL” are abbreviations for “orthogonal” and “oblique,” respectively. The factor correlations for the oblique model are set to be 0.4. The unique variances are calculated by .

In Model (A), the loading matrix possesses the perfect simple structure. In such cases, the prenet is expected to perform well because it is able to estimate the perfect simple structure for large (Proposition 1). Note that on Model (A); therefore, maximum likelihood estimate cannot be available when .

The loading matrix of Model (B) is close to but not exactly a perfect simple structure. In this case, the prenet is expected to perform well when both and are close to zero, thanks to Proposition 3. Meanwhile, the lasso would not perform well; a small illustrative example with intuitive description is presented in Section S1 in supplemental material.

In Model (C), the loading matrix is sparse but more complex than the perfect simple structure. The loading matrix is a rotated centroid solution of the Thurstone’s box problem, reported in Thurstone (1947). We use data(ThurstoneBox26) in the fungible package in R to obtain the loading matrix. With the original loading matrix, some of the unique variances can be larger than 1 with ; therefore, the elements of the original loading matrix are multiplied by 0.83. Furthermore, to enhance the sparsity, factor loadings whose absolute values are less than 0.1 are replaced by 0.

Accuracy Investigation

The model parameter is estimated by the prenet penalty with , 0.1, 0.01, the lasso, the MCP (Zhang, 2010)

with , and the elastic net with . The regularization parameter is selected by the AIC and the BIC. We also compute a limit, , where is the estimate of the loading matrix obtained with a regularization parameter . We note that corresponds to the factor rotation with MLE (Hirose and Yamamoto, 2015). In particular, the estimate with and is equivalent to that obtained by the quartimin rotation with MLE, thanks to Proposition 3. We also conduct rotation techniques with MLE: varimax rotation (Kaiser, 1958) for the orthogonal model and promax rotation (Hendrickson and White, 1964) for the oblique model. When MLE cannot be found due to , we conduct the lasso and obtain the approximation of the MLE with .

The warm start is used for Models (A) and (B). The dimension of these models is , and then the warm start is stabler than the cold start for small in our experience. Meanwhile, we adopt the cold start on Model (C) because Thurstone’s box problem tends to possess multiple local minima.

In our implementation, the estimate of the elastic net sometimes diverges for the oblique model. Scharf and Nestler (2019a) reported the same phenomenon. To address this issue, we add a penalty to Eq. (2) with . This penalty is based on Lee (1981), a conjugate prior for Wishart distribution from a Bayesian viewpoint. We remark that the prenet does not tend to suffer from this divergence issue even if in our experience. This is probably because the prenet does not shrink all loadings to zero, thanks to Proposition 1. For example, assume that (). When the elastic net penalization is adopted, both and are close to zero with a large . When is large, must be large to get . Accordingly, the value of can be significantly large; it can be greater than 1. Meanwhile, the prenet may not suffer from this problem because either and can become large.

For each model, data sets are generated with . The number of observations is , and 500. To investigate the performance of various penalization procedures, we compare the root mean squared error (RMSE) over simulations, which is defined by

where is the estimate of the loading matrix using the sth dataset. We also compare the rate of nonzero factor loadings for Models (A) and (B). Because the loading matrix is not identifiable due to permutation and sign of columns, we change the permutation and sign such that RMSE is minimized. It is not fair to compare the rate of nonzero loadings for , because the estimated loading matrix cannot become sparse. Thus, we apply the hard-thresholding with a cutoff value being 0.1, the default of the loadings class in R.

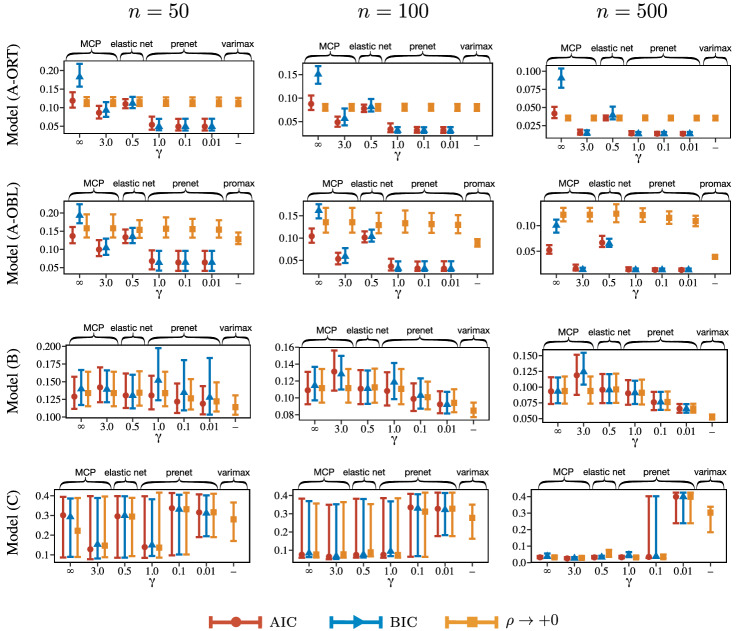

The results for RMSE and the rate of nonzero factor loadings are depicted in Figs. 2 and 3, respectively. For reference, true positive rate (TPR) and false positive rate (FPR) of the loading matrix are depicted in Figures S1.4 and S1.5 in the supplemental material. The range of the error bar indicates 90% confidence interval; we calculate 5% and 95% quantiles over 1000 simulation results and use them as the boundaries of the error bar. We obtain the following empirical observations from these figures.

- Model (A-ORT):

The prenet penalization outperforms the existing methods in terms of RMSE when the regularization parameter is selected by the model selection criteria. It is also seen that the performance of the prenet is almost independent of . When , all of the estimation procedures yield similar performances. When the is selected by the model selection criteria, the rate of nonzero loadings of the prenet is almost 0.25, that is the true rate. Considering the TPR result in Figure S1.4 in the supplemental material, the prenet with AIC or BIC correctly estimates the true zero/nonzero pattern. Meanwhile, the MCP, elastic net, and lasso tend to select a denser model than the true one.

- Model (A-OBL):

The result for the oblique model is similar to that for the orthogonal model, but the oblique model tends to produce larger RMSEs than the orthogonal model for most cases. The produces larger RMSE than that with the regularization parameter selected by model selection criteria. This is probably because the loss function becomes flat as . Therefore, the regularization may help improve the accuracy. We note that the promax rotation, which corresponds to , turns out to be stable.

- Model (B):

When n is large, the prenet with small and varimax rotation produces small RMSEs. Because the true values of cross-loadings (small loadings) are close to but not exactly zero, the type regularization that induces a sparse loading matrix does not work well. The prenet with achieves the sparse estimation but produces a loading matrix that is similar to the quartimin rotation, resulting in a nonsparse loading matrix. We also observe that the prenet with BIC sometimes results in too sparse loading matrix when .

- Model (C):

For small n, all methods result in large RMSE. For large n, the regularization methods, including the lasso, MCP, elastic net, and prenet with large yield small RMSE. However, the prenet with small and varimax rotation, which tend to estimate non-sparse loading matrix, produce large RMSE. Indeed, the average value of loading matrix in the supplemental material shows that the prenet with small is biased. Furthermore, the varimax rotation with true loading matrix does not approximate the true one. Therefore, when the loading matrix is sparse but does not have the perfect simple structure, the lasso-type penalization or prenet with would perform well.

Fig. 2.

RMSE of factor loadings. The upper and lower bars represent 95th and 5th percentiles, respectively. Here, “” denotes a limit of the estimate of the factor loadings, , which corresponds to the factor rotation.

Fig. 3.

Rate of nonzero factor loadings. The upper and lower bars represent 95th and 5th percentiles, respectively. Here, “” denotes a limit of the estimate of the factor loadings, , which corresponds to the factor rotation.

Investigation of Clustering via Perfect Simple Structure Estimation

As shown in Proposition 1, our proposed method allows the clustering of variables via the perfect simple structure estimation. We investigate the clustering accuracy on Model (A); the true loading matrix has the perfect simple structure, and then we know the true clusters. Figure 4 shows the Adjusted Rand Index (ARI) between true clusters and those obtained by prenet, lasso, and varimax. The range of the error bar indicates 90% confidence interval; we calculate 5% and 95% quantiles over 1000 simulation results and use them as the boundaries of the error bar.

Fig. 4.

Adjusted Rand Index (ARI) of the clustering results.

The clustering via prenet is achieved by perfect simple structure estimation. The lasso and varimax cannot always estimate the perfect simple structure. Therefore, we estimate the clusters as follows: for ith row vector of , say , the ith variable belongs to jth cluster, where . The regularization parameter for the lasso is , which corresponds to a special case of the component loss criterion (Jennrich, 2004, 2006) with MLE.

The result of Fig. 4 shows that the prenet and varimax result in almost identical ARIs and are slightly better than the lasso when on Model (A-ORT). All methods correctly detect the true clusters when and . For Model (A-OBL), the prenet performs slightly better than the varimax when . As with the orthogonal model, the prenet and varimax correctly detect the true clusters when and . The lasso performs worse than the other two methods for small sample sizes, suggesting that the prenet or varimax would be better if the clustering of variables is the purpose of the analysis.

Analysis of Big Five Personality Traits

We apply the prenet penalization to the survey data regarding the big five personality traits collected from Open Source Psychometrics Project (https://openpsychometrics.org/). Other real data applications (electricity demand and fMRI data) are described in Section S2 of the supplemental material. responders in the US region are asked to assess their own personality based on 50 questions developed by Goldberg (1992). Each question asks how well it describes the statement of the responders on a scale of 1–5. It is well known that the personality is characterized by five common factors; therefore, we choose . Several earlier researchers showed that the loading matrix may not possess the perfect simple structure due to the small cross-loadings (Marsh et al., 2010, 2013; Booth and Hughes, 2014); therefore, we do not aim at estimating the perfect simple structure with in this analysis. We first interpret the estimated model and then investigate the performance of the prenet penalization with selected by model selection criteria for various sample sizes. The impact of the regularization parameter on the accuracy is also studied.

Interpretation of Latent Factors

We first apply the prenet penalization and the varimax rotation with maximum likelihood estimate and compare the loading matrices estimated by these two methods. With the prenet penalization, we choose a regularization parameter using AIC, BIC, and tenfold cross-validation (CV) with . The regularization parameter selected by the AIC and CV is , and that selected by the BIC is . The heatmaps of the loading matrices are shown in Fig. 5. The result of Fig. 5 shows that these heatmaps are almost identical; all methods are able to detect the five personality traits appropriately. We also observe that the result is almost independent of . These similar results may be due to the large sample sizes.

Fig. 5.

Heatmaps of the loading matrices on big five personality traits data. Each cell corresponds to the factor loading, and the depth of color indicates the magnitude of the value of the factor loading.

We explore the estimates of cross-loadings whose absolute values are larger than 0.3; it would be reasonable to regard that these cross-loadings affect the items. There exists four items that include such large absolute values of cross-loadings, and factor loadings related to these four items are shown in Table 1.

Table 1.

Factor loadings of four items estimated by the prenet penalization with . The regularization parameter, , is selected by the BIC. The cross-loadings whose absolute values are larger than 0.3 are written in bold.

| Item | F1 | F2 | F3 | F4 | F5 |

|---|---|---|---|---|---|

| A2: Am not interested in other people’s problems. | 0.341 | 0.062 | 0.525 | 0.020 | 0.070 |

| A7: Have a soft heart. | -0.317 | 0.089 | 0.615 | 0.008 | 0.010 |

| A10: Do not have a good imagination. | 0.347 | 0.164 | 0.375 | 0.116 | 0.082 |

| C4: Change my mood a lot. | 0.083 | 0.365 | 0.033 | 0.548 | 0.022 |

The loading matrix is estimated by the prenet penalization with . The regularization parameter is selected by the BIC. The five factors represent “F1: extraversion,” “F2: neuroticism,” “F3: agreeableness,” “F4: conscientiousness,” and “F5: openness to experience.” For reference, the complete loading matrix is shown in Tables S2.5 and S2.6 of the supplemental material.

The three items, A2, A7, and A10, are affected by “F1: extraversion” and “F3: agreeableness.” The main and cross-loadings on the same item have opposite signs. We may make a proper interpretation of the factor loadings. For example, as for the question “A7: Have a soft heart,” it is easy to imagine some people who have an extraversion cannot be kind. They are interested in a profit from a person rather than the situation that the person is in now; thus, they can become selfish to get the profit even if the person’s feelings are hurt. Booth and Hughes (2014) also reported similar results of such cross-loadings. They mentioned that these cross-loadings were due to the overlap in content between extraversion and agreeableness.

Furthermore, we perform subsampling simulation with to investigate whether these cross-loadings can be found with small sample sizes. We compare the performance of four estimation methods: the prenet, lasso, MCP, and varimax rotation. For regularization methods, is selected by the BIC. We set and for the prenet and for MCP.

Table 2 shows the number of times that the absolute values of these four cross-loadings exceed 0.3. The results show that the prenet with most frequently identifies these four cross-loadings among simulations.

Table 2.

The number of times that the absolute values of four cross-loadings exceed 0.3. For regularization methods, is selected by the BIC.

| Prenet () | Prenet () | Lasso | MCP | Varimax | |

|---|---|---|---|---|---|

| A2 | 25 | 85 | 35 | 69 | 81 |

| A7 | 10 | 72 | 18 | 45 | 61 |

| A10 | 29 | 90 | 40 | 68 | 80 |

| C4 | 20 | 94 | 49 | 78 | 94 |

RMSE Comparison

We investigate the performance of the prenet in terms of estimation accuracy of the loading matrix through subsampling simulation. First, the dataset is randomly split into two datasets, and , without replacement. The sample sizes of and are . The is used for estimating a loading matrix with large sample sizes; we perform the varimax rotation with MLE and regard the estimated loading matrix as a true loading matrix, say . The true loading matrix is almost identical to the loading matrix obtained by the varimax with the entire dataset. We remark that the true loading matrix is also similar to the Model (B) of the Monte Carlo simulation described in Sect. 5.1.

The performance is investigated by subsampling the observations from with and . Figure 6 depicts RMSE and rate of nonzero loadings for n random subsampled data over 100 simulations. The RMSE is defined as

where is the estimate of the loading matrix using the sth subsampled data. We apply the lasso, MCP with , prenet with , 0.1, 0.01, and the varimax rotation with MLE. The regularization parameter is selected by the AIC, BIC, and tenfold CV. We also compute the loading matrix when , which results in the solution of the factor rotation with MLE. The nonzero pattern of the loading matrix for is estimated by a hard-thresholdings with a cutoff value being 0.1. The range of the error bar indicates 90% confidence interval over 100 simulations.

Fig. 6.

RMSE and rate of nonzero loadings when and 500. Here, “” denotes a limit of the estimate of the factor loadings, , which corresponds to the factor rotation.

We have the following empirical observations from Fig. 6:

The smaller the number of observations is, the sparser the solution is. An earlier study has shown that the model selection criterion can select a parsimonious model with small sample sizes in general frameworks (Cudeck and Henly, 1991).

The BIC results in larger RMSE and lower rate of nonzero loadings than other criteria, especially for small sample sizes. Therefore, the BIC tends to select sparse solutions, and some of the small nonzero factor loadings are estimated to be zero.

When the lasso or MCP is applied, the CV results in poor RMSE when . This is because the estimated loading matrix is too sparse; it becomes (almost) zero matrix. When the prenet is applied, such a loading matrix cannot be obtained thanks to Proposition 1.

With the prenet, small tends to estimate a dense loading matrix and produce good RMSE. A similar tendency is found in Model (B) of the Monte Carlo simulation, described in Sect. 5.2.

Impact of Tuning Parameters

We investigate the impact of the tuning parameters on the estimation of the loading matrix. Figure 7 depicts the heatmaps of the loading matrices for various values of tuning parameters on the MCP and the prenet penalization. We find the tuning parameters so that the degrees of sparseness (proportion of nonzero values) of the loading matrix are approximately 20%, 25%, 40%, and 50%. For the MCP, we set (i.e., the lasso), 5.0, 2.0, and 1.01. For prenet penalty, the values of gamma are 0.5, and 0.01. Each cell describes the elements of the factor loadings as with Fig. 5.

Fig. 7.

Heatmaps of the loading matrices on big five personality traits data for various values of tuning parameters on the MCP and the prenet penalization.

From Fig. 7, we obtain the empirical observations as follows.

With the prenet penalization, the characteristic of five personality traits are appropriately extracted for any values of tuning parameters, which suggests that the prenet penalization is relatively robust against the tuning parameters.

The prenet penalization is able to estimate the perfect simple structure when the degree of sparseness is 20%. On the other hand, with the MCP, we are not able to estimate the perfect simple structure even when is sufficiently small.

With the lasso, the number of factors becomes less than five when the degrees of sparsity are 20% and 25%; the five personality traits are not able to be found. When the value of is not sufficiently large, the MCP produces five factor model.

Discussion

We proposed a prenet penalty, which is based on the product of a pair of parameters in each row of the loading matrix. The prenet aims at the estimation of not only sparsity but also the simplicity. Indeed, the prenet is a generalization of the quartimin criterion, one of the most popular oblique techniques for simple structure estimation. Furthermore, the prenet is able to estimate the perfect simple structure, which gave us a new variables clustering method using factor models. The clustering of variables opens the door to the application of the factor analysis to a wide variety of sciences, such as image analysis, neuroscience, marketing, and biosciences.

The prenet penalization has two different purposes of analysis: clustering of variables and exploratory factor analysis. The way of using the prenet penalization depends on the purpose of the analysis. When the purpose of the analysis is the clustering of variables, the regularization parameter is set to be to achieve the perfect simple structure estimation. It is shown that the prenet performs better than the lasso and varimax in terms of clustering accuracy, as described in Sect. 5.3. Furthermore, the real data analyses in Section S2 in the supplemental material show the superiority of the prenet over the conventional clustering methods, such as the k-means clustering. When the purpose of the analysis is exploratory factor analysis, the perfect simple structure estimation is not necessarily needed. In this case, the regularization parameter is selected by the model selection criteria. The numerical results show that the prenet penalization performs well when an appropriate value of is selected.

Over a span of several decades, a number of researchers have developed methods for finding a simple loading matrix (e.g., Kaiser, 1958; Hendrickson and White, 1964) in the Thurstonian sense (Thurstone, 1947). As the simple structure is a special case of sparsity, it seems the lasso-type sparse estimation is more flexible than the prenet. Indeed, the recent trend in the exploratory factor analysis literature is to find a loading matrix that possesses the sparsity rather than simplicity (Jennrich, 2004, 2006; Trendafilov, 2013; Scharf and Nestler, 2019b, a).

Nevertheless, the lasso-type sparse estimation is not as flexible as expected. As mentioned in Model (B) in Monte Carlo simulation and Section S1 in the supplemental material, the lasso cannot often approximate the true loading matrix when the cross-loadings are not exactly but close to zero. Because some factor loadings are estimated to be exactly zero with the lasso, some other factor loadings turn out to be excessively large, which causes the difficulty in interpretation.

For this reason, we believe both sparsity and simplicity play important roles in the interpretation. The sparse estimation automatically produces the nonzero pattern of the loading matrix, which allows us to interpret the latent factors easily. In addition, simplicity is also helpful for the interpretation, as shown in Thurstone (1947). The prenet penalization is able to achieve simplicity and sparsity simultaneously. Indeed, a sparse loading matrix is estimated thanks to the penalty based on the absolute term in . In addition, simplicity is also achieved because it generalizes the quartimin criterion that often produces a simple structure (Jennrich and Sampson, 1966). Furthermore, with a large value of the regularization parameter, the loading matrix enjoys the perfect simple structure. Meanwhile, the existing methods cannot always produce a loading matrix that is both sparse and simple. For example, the lasso produces a loading matrix that is sparse but not always simple.

The structural equation modeling (SEM) has been widely used in the social and behavioral sciences. The SEM covers a wide variety of statistical models, including the factor analysis model and the regression model. An analyst develops an assumption of causal relationship and determines whether the assumption is correct or not by testing the hypothesis or evaluating the goodness of fit indices. Recently, several researchers have proposed regularized structural equation models (Jacobucci et al., 2016; Huang et al., 2017; Huang, 2018). The analyst set lasso-type penalties to specific model parameters to conduct an automatic selection of the causal relationship, enhancing the flexibility in model specification. The application of the prenet to the SEM would be an interesting future research topic.

The lasso-type regularization extracts only the nonzero pattern of parameters. In some cases, the analyst needs to detect not only the nonzero pattern of parameters but also a more complex parameter structure. The penalty must be determined depending on the structure of the parameter. For example, when the analyst needs to estimate either or to be zero, the prenet penalty would be more useful than the lasso. More generally, when one of is exactly or close to zero, we may use the Geomin-type penalty, . An application of a penalty that leads to structured sparsity would further enhance the flexibility of the analysis but beyond the scope of this research. We would like to take this as a future research topic.

Another interesting extension to the prenet penalization is the clustering of not only variables but also observations. This method is referred to as biclustering (e.g., Tan and Witten, 2014; Flynn and Perry, 2020). To achieve this, we may need an entirely new formulation along with an algorithm to compute the optimal solution. This extension should also be a future research topic.

Supplemental Materials

Further numerical and theoretical investigations Analyses of resting-state fMRI data, electricity demand data, a loading matrix of big five data, and comparison with Geomin criterion.

R-package fanc R-package fanc containing code that performs our proposed algorithm.

Loadings Average of the estimated loading matrices for Monte Carlo simulations in Sect. 5 with excel files.

Supplementary Information

Below is the link to the electronic supplementary material.

Acknowledgements

The authors would like to thank an associate editor and three reviewers for valuable comments and suggestions that improve the quality of the paper considerably. This research was supported in part by JSPS KAKENHI Grant (JP19K11862 and JP22H01139 to KH; JP20K19756, JP20H00601 to YT) and MEXT Project for Seismology toward Research Innovation with Data of Earthquake (STAR-E) Grant Number JPJ010217.

Proofs

Proof of Proposition 2

Because of Proposition 1, with the prenet, as . Thus, the prenet solution satisfies (9) as . We only need to show that the minimization problem of loss function is equivalent to that of . The inverse covariance matrix of the observed variables is expressed as

Because , we obtain

The determinant of can be calculated as

Then, the discrepancy function in (3) is expressed as

Because is given and with constant value C, we can derive (10).

Proof of Proposition 3

Recall that is an unpenalized estimator that satisfies and is a quartimin solution obtained by the following problem.

First, we show that

| A.1 |

From the assumptions, as the same manner of Chapter 6 in Pfanzagl (1994), we can obtain the following strong consistency.

| A.2 |

where . When , for all , by taking n large enough, we have

for some . From the uniform continuity of on and the fact that for any , we have

| A.3 |

Write and . We have

From this, it follows that

Thus, using (A.3), we obtain (A.1).

Next, as the similar manner of Proposition 15.1 in Foucart and Rauhut (2013), we prove , a.s. By the definition of , for any we have

| A.4 |

and

| A.5 |

Combining (A.1), (A.4), (A.5), we obtain

for some . Therefore, we have

As shown in (A.2), , and is a minimizer of over , so that the proof is complete.

Detail of the Algorithm

Update Equation of EM Algorithm for Fixed Tuning Parameters

We provide update equations of factor loadings and unique variances when and are fixed. Suppose that , , and are the current values of parameters. The parameter can be updated by minimizing the negative expectation of the complete-data penalized log-likelihood function with respect to , , and (e.g., Hirose and Yamamoto, 2014):

| A.6 |

where C is a constant and

| A.7 |

| A.8 |

| A.9 |

Here, is the ith column vector of .

In practice, minimization of (A.6) is difficult, because the prenet penalty consists of nonconvex functions. Therefore, we use a coordinate descent algorithm to obtain updated loading matrix . Let be a ()-dimensional vector . The parameter can be updated by maximizing (A.6) with the other parameters and with being fixed, that is, we solve the following problem.

| A.10 |

where

This is equivalent to minimizing the following penalized squared error loss function.

| A.11 |

The solution can be expressed in a closed form using the soft thresholding function:

| A.12 |

where .

For given , the parameters of unique variances and factor correlations, say and , are expressed as

where is the ith diagonal element of , and is the ith row of . The new value may not be expressed in an explicit form, because all of the diagonal elements of are fixed by 1. Thus, is obtained by the Broyden–Fletcher–Goldfarb–Shanno optimization procedure.

Algorithm Complexity

The complexity for our proposed algorithm for the orthogonal case is considered. To update , we need a matrix in (A.7). The matrix computation of requires operation (Zhao et al., 2007). In the coordinate descent algorithm, we need to compute in (A.11) for each step, in which O(m) operation is required. For simplicity, we consider the case where the number of cycles of the coordinate descent algorithm is one. We remark that our algorithm converges to the (local) optima for this case because both EM and coordinate descent algorithms monotonically decrease the objective function at each iteration. In this case, we need O(m) to update (; ), and therefore, operation is required to update in the coordinate descent algorithm. As a result, the algorithm complexity to update is .

For the update of , we need operation because the computation of in (A.13) requires operation for . Note that when the unpenalized maximum likelihood estimation is conducted, the order O(pm) is achieved (Zhao et al., 2007); however, this order may not be achieved for the prenet penalization.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Akaike, H. (1973). Information theory and an extension of the maximum likelihood principle. In B. N. Petrov & F. Csaki (Eds.), 2nd International symposium on information theory (pp. 267–81). Budapest: Akademiai Kiado.

- Anderson, T. W., & Rubin, H. (1956). Statistical inference in factor analysis. In Proceedings of the third Berkeley symposium on mathematical statistics and probability (Vol. 5).

- Asparouhov T, Muthén B. Exploratory structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal. 2009;16(3):397–438. [Google Scholar]

- Bernaards CA, Jennrich RI. Orthomax rotation and perfect simple structure. Psychometrika. 2003;68(4):585–588. [Google Scholar]

- Bhlmann P, van de Geer S. Statistics for high-dimensional data: Methods, theory and applications. 1. Berlin: Springer; 2011. [Google Scholar]

- Booth T, Hughes DJ. Exploratory structural equation modeling of personality data. Assessment. 2014;21(3):260–271. doi: 10.1177/1073191114528029. [DOI] [PubMed] [Google Scholar]

- Browne MW. An overview of analytic rotation in exploratory factor analysis. Multivariate Behavioral Research. 2001;36(1):111–150. [Google Scholar]

- Caner M, Han X. Selecting the correct number of factors in approximate factor models: The large panel case with group bridge estimators. Journal of Business & Economic Statistics. 2014;32(3):359–374. [Google Scholar]

- Carroll JB. An analytical solution for approximating simple structure in factor analysis. Psychometrika. 1953;18(1):23–38. [Google Scholar]

- Choi J, Zou H, Oehlert G. A penalized maximum likelihood approach to sparse factor analysis. Statistics and Its Interface. 2011;3(4):429–436. [Google Scholar]

- Cudeck R, Henly SJ. Model selection in covariance structures analysis and the" problem" of sample size: A clarification. Psychological Bulletin. 1991;109(3):512. doi: 10.1037/0033-2909.109.3.512. [DOI] [PubMed] [Google Scholar]

- Ding, C. H. Q., He, X., & Simon, H. D. (2005). On the equivalence of nonnegative matrix factorization and spectral clustering. In SDM (Vol. 5, pp. 606–610). SIAM.

- Fabrigar LR, Wegener DT, MacCallum RC, Strahan EJ. Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods. 1999;4(3):272–299. [Google Scholar]

- Flynn C, Perry P. Profile likelihood biclustering. Electronic Journal of Statistics. 2020;14(1):731–768. [Google Scholar]

- Foucart S, Rauhut H. A mathematical introduction to compressive sensing. Berlin: Springer; 2013. [Google Scholar]

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software. 2010;33:1–22. [PMC free article] [PubMed] [Google Scholar]

- Goldberg LR. The development of markers for the big-five factor structure. Psychological assessment. 1992;4(1):26. [Google Scholar]

- Hattori M, Zhang G, Preacher KJ. Multiple local solutions and geomin rotation. Multivariate Behavioral Research. 2017;52(6):1–12. doi: 10.1080/00273171.2017.1361312. [DOI] [PubMed] [Google Scholar]

- Hendrickson AE, White PO. Promax: A quick method for rotation to oblique simple structure. British Journal of Statistical Psychology. 1964;17(1):65–70. doi: 10.1111/j.2044-8317.1966.tb00358.x. [DOI] [PubMed] [Google Scholar]

- Hirose K, Yamamoto M. Estimation of an oblique structure via penalized likelihood factor analysis. Computational Statistics & Data Analysis. 2014;79:120–132. [Google Scholar]

- Hirose K, Yamamoto M. Sparse estimation via nonconcave penalized likelihood in factor analysis model. Statistics and Computing. 2015;25(5):863–875. [Google Scholar]

- Huang P-H. A penalized likelihood method for multi-group structural equation modelling. British Journal of Mathematical and Statistical Psychology. 2018;71(3):499–522. doi: 10.1111/bmsp.12130. [DOI] [PubMed] [Google Scholar]

- Huang P-H, Chen H, Weng L-J. A penalized likelihood method for structural equation modeling. Psychometrika. 2017;82(2):329–354. doi: 10.1007/s11336-017-9566-9. [DOI] [PubMed] [Google Scholar]

- Hui FKC, Tanaka E, Warton DI. Order selection and sparsity in latent variable models via the ordered factor lasso. Biometrics. 2018;74(4):1311–1319. doi: 10.1111/biom.12888. [DOI] [PubMed] [Google Scholar]

- Jacobucci R, Grimm KJ, McArdle JJ. Regularized structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal. 2016;23(4):555–566. doi: 10.1080/10705511.2016.1154793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jennrich RI. Rotation to simple loadings using component loss functions: The orthogonal case. Psychometrika. 2004;69(2):257–273. [Google Scholar]

- Jennrich RI. Rotation to simple loadings using component loss functions: The oblique case. Psychometrika. 2006;71(1):173–191. [Google Scholar]

- Jennrich RI, Sampson PF. Rotation for simple loadings. Psychometrika. 1966;31(3):313–313. doi: 10.1007/BF02289465. [DOI] [PubMed] [Google Scholar]

- Jöreskog KG, Goldberger AS. Factor analysis by generalized least squaresd. ETS Research Bulletin Series. 1971;1971:i–32. [Google Scholar]

- Kaiser HF. The varimax criterion for analytic rotation in factor analysis. Psychometrika. 1958;23(3):187–200. [Google Scholar]

- Kaiser HF. An index of factorial simplicity. Psychometrika. 1974;39(1):31–36. [Google Scholar]

- Kiers HAL. Simplimax: Oblique rotation to an optimal target with simple structure. Psychometrika. 1994;59(4):567–579. [Google Scholar]

- Lee S-Y. A Bayesian approach to confirmatory factor analysis. Psychometrika. 1981;46(2):153–160. [Google Scholar]

- Marsh HW, Lüdtke O, Muthén B, Asparouhov T, Morin AJS, Trautwein U, Nagengast B. A new look at the big five factor structure through exploratory structural equation modeling. Psychological Assessment. 2010;22(3):471–491. doi: 10.1037/a0019227. [DOI] [PubMed] [Google Scholar]

- Marsh HW, Nagengast B, Morin AJS. Measurement invariance of big-five factors over the life span: ESEM tests of gender, age, plasticity, maturity, and la dolce vita effects. Developmental Psychology. 2013;49(6):1194–1218. doi: 10.1037/a0026913. [DOI] [PubMed] [Google Scholar]

- Mazumder R, Friedman J, Hastie T. Sparsenet: Coordinate descent with nonconvex penalties. Journal of the American Statistical Association. 2011;106:1125–1138. doi: 10.1198/jasa.2011.tm09738. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neuhaus JO, Wrigley C. The quartimax method: An analytical approach to orthogonal simple structure. British Journal of Statistical Psychology. 1954;7(2):81–91. [Google Scholar]

- Ning, L., & Georgiou, T. T. (2011). Sparse factor analysis via likelihood and regularization. In 50th IEEE conference on decision and control and European control conference (pp. 5188–5192).

- Pfanzagl J. Parametric statistical theory. Berlin, Boston: De Gruyter; 1994. [Google Scholar]

- Sass DA, Schmitt TA. A comparative investigation of rotation criteria within exploratory factor analysis. Multivariate Behavioral Research. 2010;45(1):73–103. doi: 10.1080/00273170903504810. [DOI] [PubMed] [Google Scholar]

- Scharf F, Nestler S. Should regularization replace simple structure rotation in exploratory factor analysis? Structural Equation Modeling. 2019;26(4):576–590. [Google Scholar]

- Scharf F, Nestler S. A comparison of simple structure rotation criteria in temporal exploratory factor analysis for event-related potential data. Methodology. 2019;15:43–60. [Google Scholar]

- Schmitt TA, Sass DA. Rotation criteria and hypothesis testing for exploratory factor analysis: Implications for factor pattern loadings and interfactor correlations. Educational and Psychological Measurement. 2011;71(1):95–113. [Google Scholar]

- Schwarz G. Estimation of the mean of a multivariate normal distribution. The Annals of Statistics. 1978;9:1135–1151. [Google Scholar]

- Srivastava S, Engelhardt BE, Dunson DB. Expandable factor analysis. Biometrika. 2017;104(3):649–663. doi: 10.1093/biomet/asx030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tan KM, Witten DM. Sparse biclustering of transposable data. Journal of Computational and Graphical Statistics. 2014;23(4):985–1008. doi: 10.1080/10618600.2013.852554. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thurstone LL. The vectors of mind. The vectors of mind. Chicago: University of Chicago Press; 1935. [Google Scholar]

- Thurstone LL. Multiple factor analysis. Chicago: University of Chicago Press; 1947. [Google Scholar]

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288. [Google Scholar]

- Tipping ME, Bishop CM. Probabilistic principal component analysis. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 1999;61(3):611–622. [Google Scholar]

- Trendafilov NT. From simple structure to sparse components: A review. Computational Statistics. 2013;29(3–4):431–454. [Google Scholar]

- Trendafilov NT, Fontanella S, Adachi K. Sparse exploratory factor analysis. Psychometrika. 2017;82(3):778–794. doi: 10.1007/s11336-017-9575-8. [DOI] [PubMed] [Google Scholar]

- Wainwright MJ. Sharp thresholds for high-dimensional and noisy sparsity recovery using-constrained quadratic programming (lasso) IEEE Transactions on Information Theory. 2009;55(5):2183–2202. [Google Scholar]

- Yamamoto M, Jennrich RI. A cluster-based factor rotation. British Journal of Mathematical and Statistical Psychology. 2013;66(3):488–502. doi: 10.1111/bmsp.12007. [DOI] [PubMed] [Google Scholar]

- Yates A. Multivariate exploratory data analysis: A perspective on exploratory factor analysis. New York: State University of New York Press; 1987. [Google Scholar]

- Zhang C. Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics. 2010;38:894–942. [Google Scholar]

- Zhao P, Yu B. On model selection consistency of lasso. Journal of Machine Learning Research. 2007;7(2):2541. [Google Scholar]

- Zhao JH, Yu PLH, Jiang Q. ML estimation for factor analysis: EM or non-EM? Statistics and Computing. 2007;18(2):109–123. [Google Scholar]

- Zou H. The adaptive lasso and its oracle properties. Journal of the American statistical association. 2006;101(476):1418–1429. [Google Scholar]

- Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B. 2005;67:301–320. [Google Scholar]

- Zou H, Hastie T, Tibshirani R. On the degrees of freedom of the lasso. The Annals of Statistics. 2007;35:2173–2192. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.