Abstract

BACKGROUND:

We aimed to assess, in a prospective multicenter study, the quality of echocardiographic exams performed by inexperienced users guided by new artificial intelligence software and to evaluate their suitability for diagnostic interpretation of basic cardiac pathology and for quantitative analysis of cardiac chamber size and function.

METHODS:

The software (UltraSight, Ltd) was embedded into a handheld imaging device (Lumify; Philips). Six nurses and 3 medical residents, who underwent minimal training, scanned 240 patients (61±16 years; 63% with cardiac pathology) in 10 standard views. All patients were also scanned by expert sonographers using the same device without artificial intelligence guidance. Studies were reviewed by 5 certified echocardiographers blinded to the imager’s identity, who evaluated the ability to assess left and right ventricular size and function, pericardial effusion, valve morphology, and left atrial and inferior vena cava sizes. Finally, apical 4-chamber images of adequate quality, acquired by novices and sonographers in 100 patients, were analyzed to measure left ventricular volumes, ejection fraction, and global longitudinal strain by an expert reader using conventional methodology. Measurements were compared between novices’ and experts’ images.

RESULTS:

Of the 240 studies acquired by novices, 99.2%, 99.6%, 92.9%, and 100% had sufficient quality to assess left ventricular size, left ventricular function, right ventricular size, and pericardial effusion, respectively. Valve morphology, right ventricular function, and left atrial and inferior vena cava size were visualized in 67% to 98% of exams. Images obtained by novices and sonographers yielded concordant diagnostic interpretation in 83% to 96% of studies. Quantitative analysis was feasible in 83% of images acquired by novices and resulted in high correlations (r≥0.74) and small biases compared with measurements from images obtained by sonographers.

CONCLUSIONS:

After minimal training with the real-time guidance software, novice users can acquire images of diagnostic quality approaching that of expert sonographers in most patients. This technology may increase adoption and improve accuracy of point-of-care cardiac ultrasound.

Keywords: imaging, machine learning, prospective studies, ultrasound

CLINICAL PERSPECTIVE

We found that, after minimal training with the novel artificial intelligence guidance software, novice users can acquire images of diagnostic quality approaching that of expert sonographers, allowing both qualitative diagnostic interpretation and quantitative analysis. We believe that this technology may increase the adoption and improve the accuracy of point-of-care cardiac ultrasound.

See Editorial by Meucci and Delgado

Unlike other cardiac imaging modalities, ultrasound imaging allows real-time visualization of the heart, lending itself to rapid assessment of cardiac size, structure, function, and hemodynamics. As a result, echocardiographic images are widely used for diagnostic interpretation, with a well-documented prognostic utility. However, one of the main challenges of echocardiographic imaging is that extensive training is required to obtain high-quality diagnostic images, and as a consequence, ultrasound examinations are predominantly performed in dedicated laboratories by highly specialized personnel. Furthermore, until recently, echocardiographic equipment has been limited in terms of portability.

With technological developments, imaging equipment has become smaller and, therefore, more portable, and the use of cardiac ultrasound has become ubiquitous, expanding in many hospitals to the bedside. Importantly, this includes critically ill patients and patients in the emergency department, where imaging is increasingly performed by physicians with different backgrounds and levels of training in ultrasound imaging.1,2 These miniaturized ultrasound devices have fewer features and capabilities, making them easier to operate. Not surprisingly, when combined with their low cost, these developments have made cardiac ultrasound attractive among a wide variety of nontraditional users.3,4 Nevertheless, to date, echocardiographic image acquisition and interpretation remain highly dependent on the operator’s experience, thereby creating opportunities for automation and standardization using artificial intelligence (AI).5–12 Thus, the growing need for training medical personnel has brought to the forefront the idea of using AI as an aid in cardiac ultrasound imaging.

Accordingly, a machine learning–based software application for real-time guidance of echocardiographic imaging was recently developed. Our novel software uses intuitive visual cues to guide image acquisition: its display, similar to a computer game interface that tracks the player's hand movements, shows the user how to manipulate the transducer to obtain the desired echocardiographic view.

This multicenter study was designed to evaluate the ability of medical professionals without prior imaging experience, after a short course on basic cardiac ultrasound and the use of this software, to perform limited transthoracic echocardiography examination at a level sufficient for diagnostic evaluation in common clinical scenarios that involve handheld cardiac ultrasound. Our specific aim was to compare the quality of images acquired by novices using the real-time AI guidance against those obtained by expert sonographers using the same imaging equipment without AI guidance, in terms of their ability to allow qualitative evaluation of basic cardiac pathology, as well as quantitative measurement of cardiac chamber size and function.

METHODS

The data used in this study will not be publicly available.

Population

We prospectively enrolled 240 adult patients over a period of 12 weeks at echocardiography laboratories of 3 medical centers (75 at the University of Chicago, Chicago, IL; 81 at Aurora Milwaukee, Milwaukee, WI; 84 at Sheba Medical Center, Tel Aviv, Israel), including 150 patients (63%) with a wide range of cardiac pathology (age, 61±16 years; 144 males; height, 171±10 cm; weight, 80±17 kg; body mass index [BMI], 26.5±4.5 kg/m2). The study group represented a typical population of patients undergoing echocardiographic examination in a large tertiary care setting, including patients with varying degrees of left ventricular (LV) and right ventricular dysfunction and valvular heart disease. The study was approved by the institutional review boards of the participating institutions, and written informed consent was obtained from each patient who agreed to participate after their clinically indicated examination was completed. Exclusion criteria were any cardiac conditions that would render the patients unstable (such as acute myocardial infarction, decompensated heart failure, cardiac tamponade, and acute pulmonary edema), congenital heart abnormalities, BMI >40 kg/m2, pregnancy, severe chest wall deformities, and prior pneumonectomy. Of the 245 patients initially screened for inclusion, 5 were excluded because of poor acoustic windows noted in prior echocardiographic examinations. The majority of study subjects were White (76.5%), about a fifth were Black (22.0%), and the rest were Asian (1.5%). Approximately two-thirds of the subjects reported that they had never consumed alcohol or smoked (65.5% and 67.4%, respectively).

Study Design

The patients underwent echocardiographic examinations by 6 nurses (Advocate Aurora Research, Milwaukee, WI, and Sheba Medical Center, Israel) and 3 medical residents (University of Chicago Medical Center, Chicago, IL) with no prior imaging experience. Potential novices were invited to participate, and the first to express interest were recruited. The nurses were recruited in cardiology clinics, and the medical residents were approached on cardiology inpatient services. These novice imagers underwent a brief training course that included 8 hours of lectures on cardiac anatomy, basic principles of ultrasound imaging of the heart, a demonstration of the novel AI-based guidance software (version 1.1.0, 2022; UltraSight, Ltd, Rehovot, Israel), which was integrated into a commercial handheld ultrasound imaging system, and a tutorial on its use with a supervised hands-on training session. The training was standardized and was delivered simultaneously to all participants at each institution. This was followed by 8 practice scans by each participant using the real-time guidance software. After this training, each novice imaged between 23 and 30 patients (240 in total) with AI guidance, acquiring 10 standard echocardiographic views. Imaging was performed completely independently, with no one other than the novice and the patient present in the imaging room. In the same setting, each patient was also imaged by an expert cardiac sonographer, who acquired the same 10 standard views via 3 acoustic windows (parasternal, apical, and subcostal) using the same imaging equipment but without AI guidance. Images acquired by both novices and expert sonographers were reviewed by 5 expert readers to compare their quality and their suitability for qualitative diagnostic interpretation and detection of basic pathology.
Finally, the images were subjected to quantitative analysis of LV size and function, and the results were compared between the 2 groups of images.

Device Description

The novel machine learning–based guidance model is a software application interfaced with a commercial handheld ultrasound imaging system (Lumify; Philips Healthcare, Cambridge, MA). It receives as input a live stream of 2-dimensional ultrasound images and performs real-time adaptive guidance by comparing the current transducer position and orientation at any given moment with the optimal transducer position and orientation needed to obtain a preset target tomographic view of the heart. In addition, it displays image quality indicators designed to give the user feedback on the similarity between the current image and the desired target view. The system is based on a convolutional neural network trained to guide acquisition of 10 standard views via 3 acoustic windows: parasternal long axis, parasternal short axis at the aortic valve level, parasternal short axis at the mitral valve level, parasternal short axis at the papillary muscle level, apical 4 chamber, apical 2 chamber, apical 3 chamber, apical 5 chamber, subcostal 4 chamber, and subcostal inferior vena cava. To this end, it continuously displays the current and desired transducer position and orientation on a secondary display as visual cues indicating which transducer maneuvers (sliding, rotation, rocking, or tilting) are needed to obtain the optimal view (Figure 1). This secondary display also includes the image quality indicator, positioned so that it does not interfere with the ultrasound image and other information shown on the main screen, and thus provides real-time feedback on the image quality at the current transducer position compared with the ideal position for a specific view.

Figure 1.

Artificial intelligence–based echocardiographic image acquisition guidance system setup. An example of a system screen (right) showing the actual real-time view (middle) and visually intuitive instructions guiding the novice user on how to manipulate transducer position and orientation toward the target view (top, right), along with the ideal target view shown as a reference (top, left; see text for details). The quality bar is located in the top right corner of the screen. PLAX indicates parasternal long axis.

Software Architecture and Development

The deep learning–based core is a stand-alone software application installed on a tablet device; it uses only the tablet's processing power and does not require internet connectivity. The core component of the algorithm is a neural network consisting of convolutional transformer and multilayer perceptron layers. The model was implemented and trained using the open-source PyTorch Lightning interface (version 1.5.9). Development of the neural network was divided into an algorithm training and refinement stage and a validation stage performed after the algorithm was locked. The first step in algorithm development was the creation of the scan data sets used for algorithm training and testing. These scans were collected in a multisite clinical study designed to record ultrasound transducer position and orientation. Expert sonographers acquired images of subjects with a wide range of ages, BMIs, and pathologies. This training data set contained >5 million individual data points on transducer location acquired with the Philips Lumify ultrasound imaging device. The data set included labels from expert sonographers and cardiologists annotating the diagnostic quality of the scans and was used to train the algorithm on the distances relative to each of the 10 views, in order to derive guidance actions and diagnostic quality thresholds for the 10 views. Before its validation in this pivotal study, the resulting software was evaluated for its guidance cues, the precision of its estimates versus sonographer judgments, and its estimation of diagnostic quality, both by predefined tests and by pilot testing of novice user performance.

Analysis of Image Quality and Diagnostic Capability

Images acquired by both the novices and the expert sonographers were reviewed in random order by 5 independent, experienced echocardiographers from outside the participating institutions, who were blinded to the identity of the operator. Each of the 5 readers graded image quality for each of the 10 views of each of the 480 exams (240 performed by novices and 240 by sonographers) using the American College of Emergency Physicians quality assurance grading scale, from 1 (poor, with no recognizable structures) to 5 (excellent visualization, completely supportive of the diagnosis); a score of ≥3 was considered sufficient quality.
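The text does not spell out how the 5 readers' grades were combined per view; the Results report scores ≥3 "by the majority of the readers," so the sketch below assumes that a view counts as adequate when at least 3 of the 5 readers grade it ≥3. Function names and the example grades are hypothetical.

```python
from __future__ import annotations

ADEQUATE_SCORE = 3  # ACEP grade at or above which quality is "sufficient"
READERS = 5
MAJORITY = 3        # assumed: at least 3 of the 5 readers


def view_is_adequate(reader_scores: list[int]) -> bool:
    """True when a majority of readers graded this view >= 3."""
    if len(reader_scores) != READERS:
        raise ValueError(f"expected {READERS} reader scores")
    if any(not 1 <= s <= 5 for s in reader_scores):
        raise ValueError("ACEP grades range from 1 to 5")
    return sum(s >= ADEQUATE_SCORE for s in reader_scores) >= MAJORITY


def percent_adequate(scores_per_exam: list[list[int]]) -> float:
    """Percentage of exams in which one given view was adequate."""
    if not scores_per_exam:
        raise ValueError("no exams")
    adequate = sum(view_is_adequate(s) for s in scores_per_exam)
    return 100.0 * adequate / len(scores_per_exam)


# Hypothetical grades for one view in 4 exams (5 readers each)
example = [[4, 4, 4, 4, 4], [1, 1, 1, 1, 1], [3, 3, 3, 2, 2], [2, 2, 2, 3, 3]]
print(percent_adequate(example))
```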

In addition, the 5 readers evaluated whether each exam allowed diagnostic assessment of LV and right ventricular size and function, pericardial effusion, morphology of the aortic, mitral, and tricuspid valves, and left atrial and inferior vena cava (IVC) size. This ability was graded in a binary manner (yes or no). Finally, each reader classified each of the above features as normal, abnormal, or inconclusive. The adjudicated decision for each end point was based on majority rule, namely agreement of at least 3 of the 5 readers.
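The majority rule above can be sketched as follows. With 5 readers and a quorum of 3, at most one label can reach the quorum, so taking the most frequent label is sufficient. How the study handled features for which no label reached 3 votes is not stated; returning `None` in that case is an assumption of this sketch, and the function name is hypothetical.

```python
from __future__ import annotations

from collections import Counter


def adjudicate(reader_labels: list[str], quorum: int = 3) -> str | None:
    """Majority-rule decision: the label chosen by at least `quorum` readers,
    or None when no label reaches the quorum (assumed handling)."""
    if not reader_labels:
        raise ValueError("no reader labels")
    label, count = Counter(reader_labels).most_common(1)[0]
    return label if count >= quorum else None


# Works for the 3-level classification and for the binary yes/no question
print(adjudicate(["normal", "normal", "normal", "abnormal", "inconclusive"]))
print(adjudicate(["yes", "no", "yes", "no", "yes"]))
```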

Quality grades, diagnostic capability, and abnormality status were compared between images acquired by novices and sonographers. To determine factors that may affect the image quality and diagnostic capacity, these comparisons were repeated for subgroups of subjects defined by BMI of 0 to 25 kg/m2 (33.7% of the patients), 26 to 30 kg/m2 (39.4%), and >30 kg/m2 (26.9%); sex (1.5 male-to-female ratio); age ≤60 years (n=107), >60 to ≤70 years (n=55), and >70 years (n=78); and the presence of cardiac pathology (150 positive and 90 negative). In addition, these comparisons were repeated between exams performed by nurses versus those performed by medical residents.

Sample Size Calculation

Based on the results of the pilot study, and assuming that the true proportion for each of the primary end points is 0.9, 240 subjects examined by novice users were required to achieve an overall power of 80%. This sample size was calculated for testing 4 null hypotheses that the proportion of subjects with sufficient quality for each end point is 0.8, versus the alternative that the proportion differs from 0.8. Calculations assumed a 2-sided type I error rate of 5% and accounted for between-novice variability.
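One conventional way to size such a study is the normal-approximation formula for a one-sample test of a proportion, n = [z(1−α/2)·√(p0(1−p0)) + z(1−β)·√(p1(1−p1))]² / (p1 − p0)², here with p0 = 0.8, p1 = 0.9, and 2-sided α = 0.05. Two choices in this sketch are assumptions, not the authors' stated method: the formula itself, and treating the 4 end points as independent, so that 80% overall power requires about 0.8^(1/4) ≈ 0.946 power per end point. The sketch does not model between-novice variability, so it is not expected to reproduce the reported 240.

```python
import math
from statistics import NormalDist


def one_sample_proportion_n(p0: float, p1: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Subjects needed for a 2-sided one-sample test of H0: p = p0 when the
    true proportion is p1 (normal approximation, no clustering)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(p0 * (1 - p0))
                 + z_beta * math.sqrt(p1 * (1 - p1)))
    return math.ceil((numerator / (p1 - p0)) ** 2)


single_endpoint_n = one_sample_proportion_n(0.8, 0.9)
# Assumption: 4 independent end points must all succeed for 80% overall power
per_endpoint_power = 0.80 ** (1 / 4)
four_endpoint_n = one_sample_proportion_n(0.8, 0.9, power=per_endpoint_power)
print(single_endpoint_n, four_endpoint_n)
```

Any inflation for clustering of patients within novices (a design effect) would be multiplied on top of these figures.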

Suitability for and Accuracy of Quantitative Analysis

Images from a subset of 100 randomly selected study subjects whose images received a quality score of ≥3 were analyzed using conventional methodology (Image Arena v. 2.30.00; TomTec, Unterschleissheim, Germany) to measure LV volumes, ejection fraction, and global longitudinal strain in the apical 4-chamber view. Measurements obtained from the images acquired by sonographers served as the reference standard to determine whether quantitative analysis of sufficient-quality images generated by novices would yield results similar to those obtained from images acquired by experts.
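For reference, the two functional indices have standard definitions, stated below in conventional notation (EDV and ESV are the LV end-diastolic and end-systolic volumes; L_ED and L_ES are the end-diastolic and end-systolic lengths of the traced LV myocardial contour). How the analysis software tracks the contour is not described in the text.

```latex
\mathrm{EF} = \frac{\mathrm{EDV} - \mathrm{ESV}}{\mathrm{EDV}} \times 100\%,
\qquad
\mathrm{GLS} = \frac{L_{\mathrm{ES}} - L_{\mathrm{ED}}}{L_{\mathrm{ED}}} \times 100\%
```

For example, EDV = 120 mL and ESV = 50 mL give EF = 70/120 × 100% ≈ 58%. Because the myocardium shortens in systole, GLS is negative in normally contracting ventricles.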

Statistical Analysis

The proportion of subjects for whom the clinical interpretations of the novices' and the sonographers' images agreed was calculated, together with a 95% Wilson CI. The significance of differences between these proportions was tested using χ2 statistics. Quantitative measurements of LV volumes and ejection fraction were expressed as median values with the corresponding interquartile ranges. Comparisons between measurements obtained from the novices' and sonographers' images included linear regression, Pearson correlation coefficients, and Bland-Altman analyses of biases and limits of agreement. Significance of biases was assessed using 2-tailed paired Student t tests. All analyses were performed using R, version 4.1.2 (The R Foundation for Statistical Computing).
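The Wilson score interval mentioned above behaves better than the simple normal (Wald) interval for proportions close to 100%, which is the range of most results in this study. A minimal stdlib sketch follows; the analyses themselves were run in R, and the count in the example (223 of 240, about 92.9%) is hypothetical.

```python
from __future__ import annotations

import math
from statistics import NormalDist


def wilson_ci(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for the binomial proportion successes/n."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("require n > 0 and 0 <= successes <= n")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical example: 223 of 240 novice exams judged adequate
low, high = wilson_ci(223, 240)
print(f"{223 / 240:.3f} (95% CI {low:.3f} to {high:.3f})")
```

Unlike the Wald interval, the Wilson interval never extends beyond 0 or 1 and does not collapse to zero width when every exam succeeds.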

RESULTS

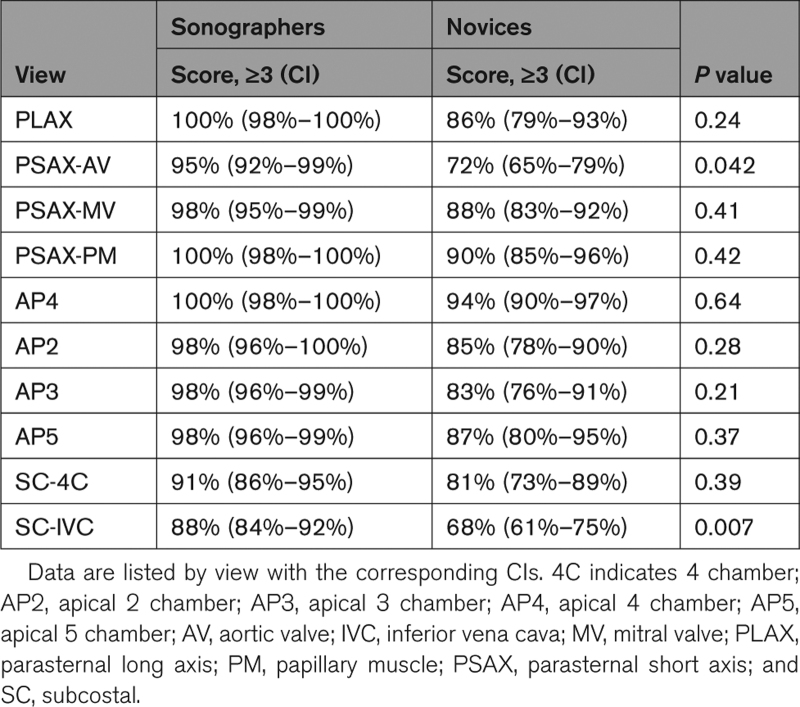

There were no adverse events or complications during the study that could be attributed to the use of the AI guidance software. Most images acquired by both the sonographers and the novice users received a quality score ≥3 from the majority of the readers and were therefore deemed suitable for diagnostic evaluation, with the percentages only slightly higher for sonographers (88%–100%, depending on the specific view) than for novices (81%–94%). Table 1 shows these data on a view-by-view basis, depicting considerable variability between views. Notably, the percentage of scores ≥3 for images acquired by the novices was lowest in the subcostal IVC view, followed by the parasternal short-axis at the aortic valve level, subcostal 4-chamber, apical 3-chamber, and apical 2-chamber views, with the differences from sonographers being statistically significant only in the first 2 views (namely, subcostal IVC and parasternal short-axis at the aortic valve level).
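The view-by-view comparison between novices and sonographers rests on χ2 tests of two proportions. The sketch below implements the uncorrected Pearson χ2 test on a 2×2 table using only the standard library; whether the authors applied a continuity correction is not stated, and the counts in the example are hypothetical. For 1 degree of freedom, the upper-tail probability of χ2 equals erfc(√(χ2/2)), so no statistics package is needed.

```python
from __future__ import annotations

import math


def chi2_two_proportions(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) comparing x1/n1 with
    x2/n2. Returns (statistic, two-sided p-value) for 1 degree of freedom."""
    observed = [[x1, n1 - x1], [x2, n2 - x2]]
    total = n1 + n2
    row_totals = [n1, n2]
    col_totals = [x1 + x2, total - x1 - x2]
    if 0 in row_totals or 0 in col_totals:
        return 0.0, 1.0  # degenerate table: treated here as no difference
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(chi2 / 2))  # survival function, chi2 with 1 df
    return chi2, p_value


# Hypothetical counts for one view: novices 200/240 vs sonographers 225/240 adequate
stat, p = chi2_two_proportions(200, 240, 225, 240)
print(f"chi2 = {stat:.2f}, P = {p:.4f}")
```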

Table 1.

Percentages of the Adequate Quality Images (American College of Emergency Physicians Score, ≥3) Acquired by Sonographers and Novices in the 240 Study Patients

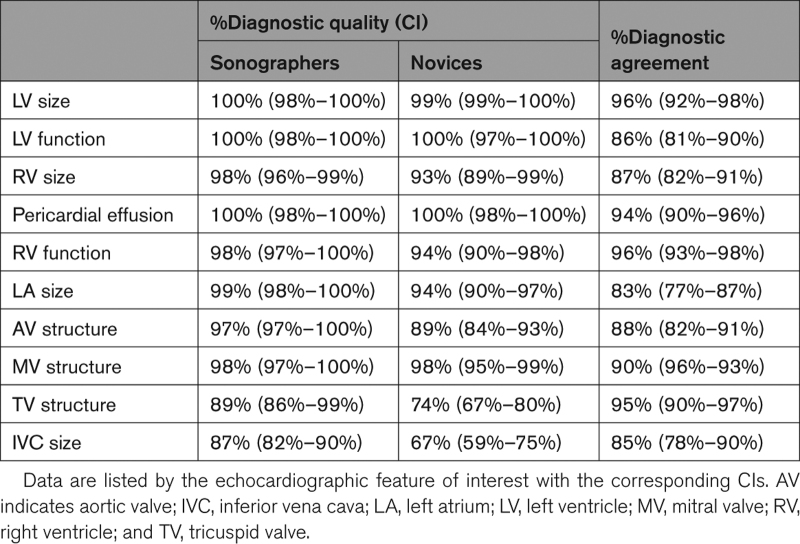

The ability of the readers to perform diagnostic evaluation from the 240 exams performed by the sonographers and the 240 exams performed by the novices is summarized feature by feature in Table 2 (center columns). Importantly, for images acquired by novices, the lower limit of the 95% CI was always >80% for the 4 primary end points, which are also the most clinically important features: LV size, LV function, right ventricular size, and pericardial effusion. Of all 10 echocardiographic features studied, tricuspid valve structure and IVC size were the most difficult to image for both novices and sonographers, as reflected by the lowest percentages of diagnostic-quality images.

Table 2.

Percentages of Images With Sufficient Quality to Allow Diagnostic Interpretation (Center) and Percentages of Images Resulting in Concordant Diagnostic Interpretation From Images Acquired by Sonographers and by Novices (Right) in the 240 Study Patients

Subgroup analyses showed that patients' BMI, age, sex, race, and cardiac pathology status had no significant effect on the percentages of images acquired by novices that achieved quality scores ≥3. These percentages also did not differ between images acquired by nurses and those acquired by medical residents. Similarly, none of these factors had a significant effect on whether image quality was adequate for the readers to detect abnormalities.

Table 2 also lists the levels of agreement in diagnostic interpretation (normal, abnormal, or inconclusive) for each feature of interest (right column). Overall agreement between interpretations of images acquired by novices and those acquired by expert sonographers ranged from 83% to 96%, depending on the specific feature. Importantly, for the 4 most clinically important features (LV size, LV function, right ventricular size, and pericardial effusion), agreement in diagnostic interpretation was ≥86%.
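The concordance figures above are simple percent agreement between the adjudicated labels assigned to the 2 image sets of the same patients. A minimal sketch, assuming each list already holds one adjudicated label per patient (normal, abnormal, or inconclusive) and that identical labels, including 2 "inconclusive" labels, count as agreement; the function name and example labels are hypothetical.

```python
from __future__ import annotations


def percent_concordance(novice_labels: list[str], sonographer_labels: list[str]) -> float:
    """Percentage of patients whose adjudicated interpretation is identical
    for the novice-acquired and sonographer-acquired images."""
    if len(novice_labels) != len(sonographer_labels) or not novice_labels:
        raise ValueError("need two equal-length, non-empty label lists")
    matches = sum(a == b for a, b in zip(novice_labels, sonographer_labels))
    return 100.0 * matches / len(novice_labels)


novice = ["normal", "abnormal", "inconclusive", "normal"]
sonographer = ["normal", "abnormal", "normal", "normal"]
print(percent_concordance(novice, sonographer))
```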

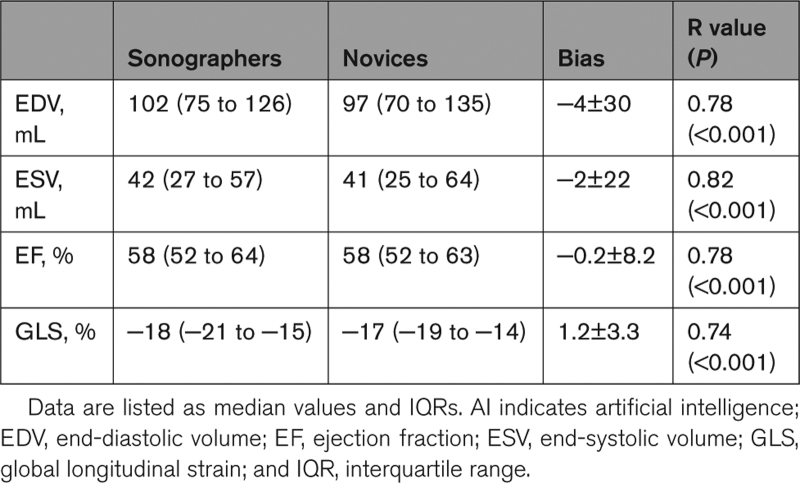

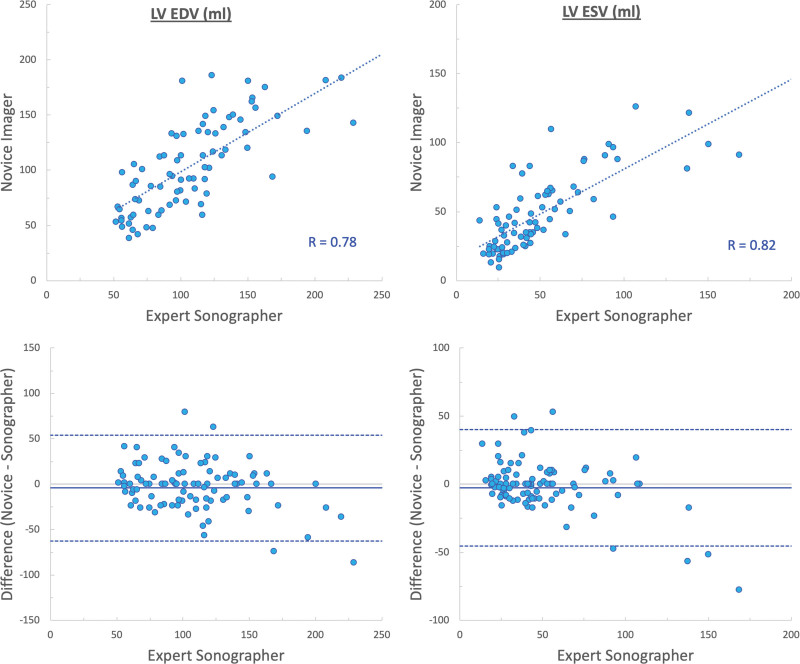

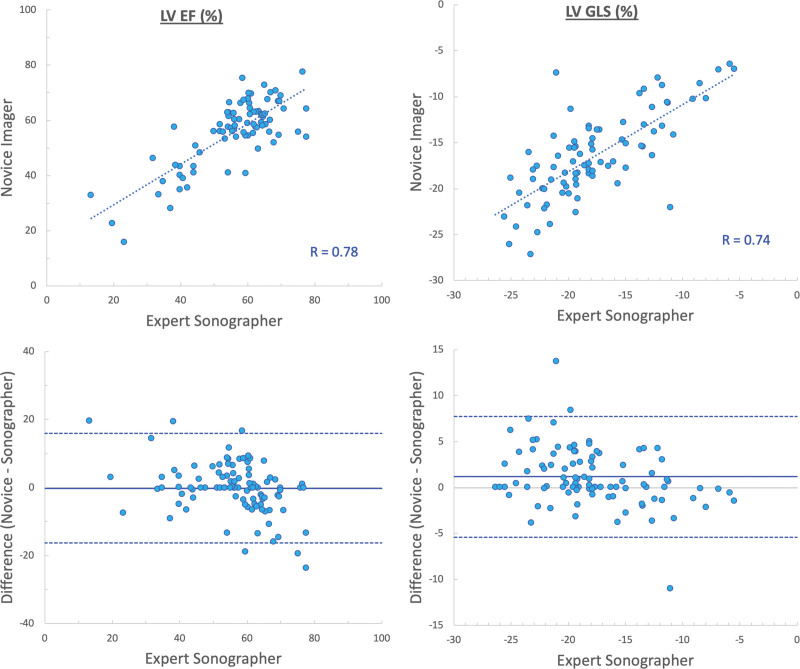

Quantitative analysis of LV size and function was feasible in 83% of the images acquired by novices. We found good agreement between parameters obtained from images acquired by novices and sonographers (Table 3), as reflected by high correlations (r values between 0.74 and 0.82) and small biases in LV end-diastolic and end-systolic volumes (−4±30 and −2±22 mL, respectively; Figure 2), ejection fraction (−0.2±8.2%), and global longitudinal strain (1.2±3.3%; Figure 3).
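The agreement statistics reported above (Pearson r, Bland-Altman bias with 95% limits of agreement, and a paired t statistic for the bias) can be sketched with the standard library. The sign convention (novice minus sonographer) and the example values are assumptions; the study's analyses were run in R. The P value for the paired t statistic requires the t distribution with n−1 degrees of freedom, which Python's standard library lacks (SciPy's `scipy.stats.ttest_rel` provides it).

```python
from __future__ import annotations

import math
from statistics import mean, stdev


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two paired series."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bland_altman(novice: list[float], sonographer: list[float]) -> dict[str, float]:
    """Bias, 95% limits of agreement, Pearson r, and paired t statistic."""
    if len(novice) != len(sonographer) or len(novice) < 3:
        raise ValueError("need two paired series of equal length (n >= 3)")
    diffs = [a - b for a, b in zip(novice, sonographer)]  # assumed sign: novice - sonographer
    bias = mean(diffs)
    sd = stdev(diffs)  # sample SD of the differences
    return {
        "bias": bias,
        "sd": sd,
        "loa_low": bias - 1.96 * sd,
        "loa_high": bias + 1.96 * sd,
        "r": pearson_r(novice, sonographer),
        # Paired t statistic (df = n - 1); undefined if all differences are equal
        "t": bias / (sd / math.sqrt(len(diffs))) if sd > 0 else math.nan,
    }


# Hypothetical end-diastolic volumes (mL) from the same 4 patients
res = bland_altman([100, 120, 140, 160], [98, 121, 137, 161])
print({k: round(v, 2) for k, v in res.items()})
```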

Table 3.

Left Ventricular Size and Function Measurements Obtained by Conventional Analysis of the Images Acquired by Novices With AI Guidance and by Sonographers Using the Same Imaging Equipment Without AI Guidance (See Text for Details)

Figure 2.

Agreement between measurements of left ventricular (LV) volumes (end-systolic volume [ESV] and end-diastolic volume [EDV]) obtained from images acquired by novices and sonographers. Linear regressions (top) and Bland-Altman plots (bottom).

Figure 3.

Agreement between measurements of left ventricular (LV) ejection fraction (EF) and global longitudinal strain (GLS) obtained from images acquired by novices and sonographers. Linear regressions (top) and Bland-Altman plots (bottom).

DISCUSSION

Over the last decade, continuous miniaturization of ultrasound imaging equipment has brought to the forefront what only recently seemed a futuristic idea: a miniature echocardiographic system replacing the traditional stethoscope. These technological developments have widened the spectrum of users to include a broad range of physicians and allied health workers with limited or no cardiac imaging experience, driven by the advantages of quick bedside cardiac assessment at the point of care.1–4 Because acquiring the optimal echocardiographic views needed for diagnostic interpretation is not trivial and requires extensive training (2 years, minimum of 10 hours per week), the need for new ways to assist nontraditional users with limited skills in cardiac ultrasound imaging has become clear and urgent.

The simultaneously increasing role of AI in medicine, combined with the exponential increase in computing power, has led to the development of novel machine learning techniques for real-time prescriptive guidance of echocardiographic image acquisition.5,6,12 Although the technicalities of machine learning algorithms are difficult to understand for most nonspecialists, it is well known that for these techniques to succeed, training requires large amounts of data encompassing as many anatomic and functional variations as possible. Fortunately, echocardiographic images of hundreds of thousands of patients, with millions of images traced, measured, and characterized by human experts over the years, are available to developers in this field, resulting in relatively fast development and a growing number of validation studies.

Our study was designed to test the ability of the novel software to guide the acquisition of 10 standard echocardiographic views of diagnostic quality by nurses and medical residents with no prior ultrasound imaging experience who had undergone only minimal training. We found that guided image acquisition by these users resulted in image quality approaching that of images obtained by experienced cardiac sonographers, as reflected by similar rates of examinations that received American College of Emergency Physicians quality scores ≥3 from the majority of readers. Not surprisingly, the parasternal short-axis at the aortic valve level, subcostal 4-chamber, and subcostal IVC views were the most difficult for the novices to acquire with diagnostic quality, as these 3 views were also the most challenging for the expert sonographers. Importantly, however, images acquired by novices achieved a score ≥3 in >80% of cases in 8 of the 10 standard views.

Further examination of the readers' ability to detect abnormalities from these images showed that tricuspid valve structure and IVC size were the most difficult features to assess from the novices' images. However, these were also the 2 features most difficult to evaluate from the images acquired by the sonographers. Importantly, images acquired by the novices allowed diagnostic assessment in over 89% of cases for 8 of the 10 examined features. All these findings held irrespective of factors known to affect the technical difficulty of echocardiographic imaging, including BMI, age, sex, and the presence of cardiac pathology. Finally, and most importantly, diagnostic assessments made from the novices' images and from the expert sonographers' images were concordant in at least 85% of cases for all examined features.

Moreover, quantitative measurements performed on the images acquired by the novices resulted in values closely related to those obtained from the images acquired by expert sonographers, indicating that when aided by the real-time guidance software, the novices can generate echocardiographic views of sufficient quality that lend themselves to accurate quantification of LV volumes and function, including both ejection fraction and global longitudinal strain.

The implications of all these findings for clinical practice remain to be determined in future studies. Most importantly, however, this novel AI guidance tool is capable of aiding novice users in the acquisition of images of sufficient quality to allow not only qualitative but also quantitative diagnostic interpretation. That said, the AI guidance tool is by no means intended to replace a comprehensive echocardiographic examination by a professional sonographer, but rather to allow novices to obtain, with guidance, a limited set of images that may aid in a cursory clinical evaluation of a patient in settings where specialized, trained personnel are not available. Future studies will determine whether, after a period of training with the AI-assisted software, learners can obtain images of similar quality without AI guidance.

Limitations

In this era of big data, the relatively small size of our test group (240 patients) might be seen as a limitation. Indeed, 240 is a disproportionately small number compared with the considerably larger number of studies used to train the deep learning software. However, this was the first study to test the feasibility of this novel real-time guidance software and to evaluate whether the suitability of its images for diagnostic interpretation could compete with that of conventional images obtained by expert sonographers.

Also, patients with poor acoustic windows noted in prior echocardiographic examinations were excluded from this feasibility study. We reasoned that if sufficient image quality cannot be achieved in such patients even by expert imagers, these patients are not suitable for testing this new software.

Another limitation of our study is that the accuracy of quantitative analysis was only tested for the LV size and function. Whether the quality of visualization of the other chambers and cardiac structures, especially the valves, is suitable for quantitative analysis remains to be determined in future studies. Also, this analysis included only images that were judged to be of sufficient quality. However, it would not make sense to try to obtain accurate quantitative information from images that were deemed suboptimal.

Moreover, in this study, the guidance software was interfaced with a specific commercial handheld ultrasound imaging system. Theoretically, this software could be interfaced with any imaging system that provides a digital output of a real-time stream of the images displayed on the system screen.

Finally, one might question whether patient care should be guided by information obtained by nonexperts, and under what circumstances this would be appropriate and ethical. It is important to remember that the software tested in this study was designed to broaden the pool of personnel capable of obtaining echocardiographic images that would be suitable for a cursory clinical interpretation by an expert reader.

Conclusions

This study demonstrated that the new deep learning algorithm for real-time guidance of cardiac ultrasound imaging is feasible and that, in the hands of nurses and medical residents with minimal training, it can yield images of diagnostic quality similar to that of images obtained by expert sonographers in the majority of patients. These images allow not only qualitative evaluation of basic cardiac pathology but also quantitative measurement of LV size and function. This approach may have important implications in multiple clinical scenarios where immediate cardiac assessment is needed, such as remote sites where the services of a specialized echocardiography laboratory are not readily available.

ARTICLE INFORMATION

Sources of Funding

This study was supported by UltraSight, Ltd, Rehovot, Israel.

Disclosures

In addition to employment relationships between several authors (Dr Spiegelstein, Dr Avisar, Dr Ludomirsky, and I. Kezurer) and UltraSight, Ltd, Drs Lang, Khandheria, and Klempfner received research grants and Drs Mor-Avi, Mazursky, Handel, Peleg, and Avraham served as consultants for work related to this study. The other authors report no conflicts.

Nonstandard Abbreviations and Acronyms

- AI: artificial intelligence

- BMI: body mass index

- IVC: inferior vena cava

- LV: left ventricular


Contributor Information

Victor Mor-Avi, Email: vmoravi@bsd.uchicago.edu.

Bijoy Khandheria, Email: wi.publishing22@aah.org.

Robert Klempfner, Email: klempfner@gmail.com.

Juan I. Cotella, Email: jcotella@bsd.uchicago.edu.

Merav Moreno, Email: merav.moreno@sheba.health.gov.il.

Denise Ignatowski, Email: Denise.Ignatowski@aah.org.

Brittney Guile, Email: Brittney.Guile@uchicagomedicine.org.

Hailee J. Hayes, Email: hailweb357@gmail.com.

Kyle Hipke, Email: hipke95@hotmail.com.

Abigail Kaminski, Email: wi.publishing687@aah.org.

Dan Spiegelstein, Email: danny@ultrasight.com.

Noa Avisar, Email: noa@ultrasight.com.

Itay Kezurer, Email: itay@ultrasight.com.

Asaf Mazursky, Email: asaf@ultrasight.com.

Ran Handel, Email: ran@ultrasight.com.

Yotam Peleg, Email: yotampelleg@gmail.com.

Shir Avraham, Email: Shir@ultrasight.com.

Achiau Ludomirsky, Email: achi.ludomirsky@nyumc.org.

REFERENCES

- 1. Liebo MJ, Israel RL, Lillie EO, Smith MR, Rubenson DS, Topol EJ. Is pocket mobile echocardiography the next-generation stethoscope? A cross-sectional comparison of rapidly acquired images with standard transthoracic echocardiography. Ann Intern Med. 2011;155:33–38. doi: 10.7326/0003-4819-155-1-201107050-00005

- 2. Chamsi-Pasha MA, Sengupta PP, Zoghbi WA. Handheld echocardiography: current state and future perspectives. Circulation. 2017;136:2178–2188. doi: 10.1161/circulationaha.117.026622

- 3. Kovell LC, Ali MT, Hays AG, Metkus TS, Madrazo JA, Corretti MC, Mayer SA, Abraham TP, Shapiro EP, Mukherjee M. Defining the role of point-of-care ultrasound in cardiovascular disease. Am J Cardiol. 2018;122:1443–1450. doi: 10.1016/j.amjcard.2018.06.054

- 4. Cardim N, Dalen H, Voigt JU, Ionescu A, Price S, Neskovic AN, Edvardsen T, Galderisi M, Sicari R, Donal E, et al. The use of handheld ultrasound devices: a position statement of the European Association of Cardiovascular Imaging (2018 update). Eur Heart J Cardiovasc Imaging. 2019;20:245–252. doi: 10.1093/ehjci/jey145

- 5. Johnson KW, Torres Soto J, Glicksberg BS, Shameer K, Miotto R, Ali M, Ashley E, Dudley JT. Artificial intelligence in cardiology. J Am Coll Cardiol. 2018;71:2668–2679. doi: 10.1016/j.jacc.2018.03.521

- 6. Zhang J, Gajjala S, Agrawal P, Tison GH, Hallock LA, Beussink-Nelson L, Lassen MH, Fan E, Aras MA, Jordan C, et al. Fully automated echocardiogram interpretation in clinical practice. Circulation. 2018;138:1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338

- 7. Narula S, Shameer K, Salem Omar AM, Dudley JT, Sengupta PP. Machine-learning algorithms to automate morphological and functional assessments in 2D echocardiography. J Am Coll Cardiol. 2016;68:2287–2295. doi: 10.1016/j.jacc.2016.08.062

- 8. Asch FM, Poilvert N, Abraham T, Jankowski M, Cleve J, Adams M, Romano N, Hong H, Mor-Avi V, Martin RP, et al. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging. 2019;12:e009303. doi: 10.1161/CIRCIMAGING.119.009303

- 9. Davis A, Billick K, Horton K, Jankowski M, Knoll P, Marshall JE, Paloma A, Palma R, Adams DB. Artificial intelligence and echocardiography: a primer for cardiac sonographers. J Am Soc Echocardiogr. 2020;33:1061–1066. doi: 10.1016/j.echo.2020.04.025

- 10. Lang RM, Addetia K, Miyoshi T, Kebed K, Blitz A, Schreckenberg M, Hitschrich N, Mor-Avi V, Asch FM. Use of machine learning to improve echocardiographic image interpretation workflow: a disruptive paradigm change? J Am Soc Echocardiogr. 2021;34:443–445. doi: 10.1016/j.echo.2020.11.017

- 11. Asch FM, Mor-Avi V, Rubenson D, Goldstein S, Saric M, Mikati I, Surette S, Chaudhry A, Poilvert N, Hong H, et al. Deep learning-based automated echocardiographic quantification of left ventricular ejection fraction: a point-of-care solution. Circ Cardiovasc Imaging. 2021;14:e012293. doi: 10.1161/CIRCIMAGING.120.012293

- 12. Narang A, Bae R, Hong H, Thomas Y, Surette S, Cadieu C, Chaudhry A, Martin RP, McCarthy PM, Rubenson DS, et al. Utility of a deep-learning algorithm to guide novices to acquire echocardiograms for limited diagnostic use. JAMA Cardiol. 2021;6:624–632. doi: 10.1001/jamacardio.2021.0185