Abstract

Background

This Obesity Medicine Association (OMA) Clinical Practice Statement (CPS) provides clinicians an overview of Artificial Intelligence, focused on the management of patients with obesity.

Methods

The perspectives of the authors were augmented by scientific support from published citations and integrated with information derived from search engines (e.g., Google, accessed via the Chrome browser) and chatbots (e.g., Chat Generative Pretrained Transformer, or ChatGPT).

Results

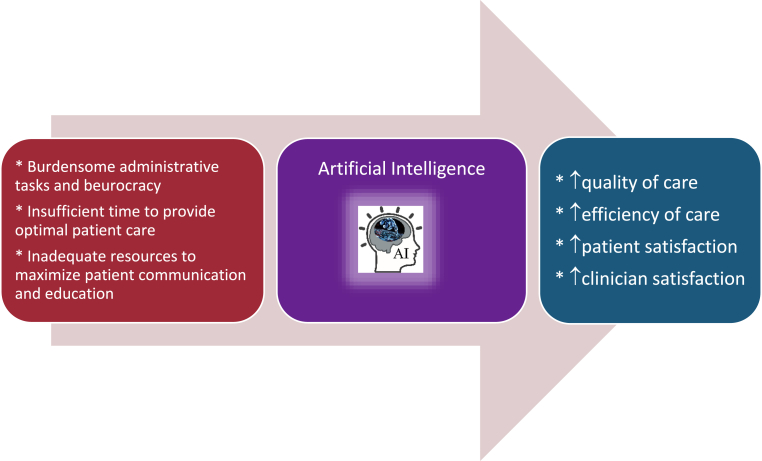

Artificial Intelligence (AI) is the technologic acquisition of knowledge and skill by a nonhuman device that, after being initially programmed, has varying degrees of operations autonomous from direct human control and performs adaptive output tasks based upon data input learnings. AI has applications in medical research, in medical practice, and in the management of patients with obesity. Chatbots may be useful to obesity medicine clinicians as a source of clinical/scientific information, as an aid in writing and publications, and in drafting office or institutional Policies and Procedures and Standard Operating Procedures. AI may facilitate interactive programming related to analyses of body composition imaging, behavior coaching, personal nutritional intervention & physical activity recommendations, and predictive modeling to identify patients at risk for obesity-related complications, and may aid clinicians in precision medicine. AI can enhance educational programming, such as personalized learning, virtual reality, and intelligent tutoring systems. AI may help augment in-person office operations and telemedicine (e.g., scheduling and remote monitoring of patients). Finally, AI may help identify patterns in datasets related to a medical practice or institution that may be used to assess population health and value-based care delivery (e.g., analytics related to electronic health records).

Conclusions

AI is contributing to both an evolution and revolution in medical care, including the management of patients with obesity. Challenges of Artificial Intelligence include ethical and legal concerns (e.g., privacy and security), accuracy and reliability, and the potential perpetuation of pervasive systemic biases.

Keywords: Adiposopathy, Artificial intelligence, Education, Obesity

1. What is artificial intelligence?

1.1. Definition

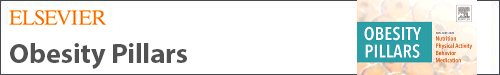

Artificial Intelligence (AI) is the technologic acquisition of knowledge and skill by a nonhuman device that, after being initially programmed, has varying degrees of operations autonomous from direct human control and performs adaptive output tasks based upon data input learnings. An essential component of AI is machine (computer) “learning,” which improves the performance of a machine via cumulative exposure to data input (see Fig. 1).

Fig. 1.

Artificial Intelligence (AI) mechanistic learning with adaptive task responses. Technological devices that incorporate AI begin with baseline algorithmic programming, augmented by the subsequent ability to sense, acquire, and process data input, for the purpose of facilitating adaptive task response output.

1.2. Natural language

Natural language processing is the ability of machines (through “learning”) to understand, interpret, and respond to human language (e.g., chatbots, virtual assistants, and language translation applications). Machine learning is achieved via baseline algorithmic programming that subsequently, based upon acquisition of new data input, allows a device to adapt its task output. AI has general applications relevant to medical research, electronic health records analyses, predictive analytics, medical imaging diagnostics, precision/personalized medicine, remote monitoring, and surgical assistance, as well as specific applications in medical practice and in the management of patients with obesity.
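The cycle in Fig. 1 (baseline programming, data input, adapted output) can be illustrated with a minimal Python sketch. It assumes a hypothetical one-input linear rule trained on synthetic, non-clinical data; the names `OnlineLinearModel` and `learn` are illustrative, and stochastic gradient descent is used here as one common learning technique, not as the method of any particular AI product.

```python
from dataclasses import dataclass


@dataclass
class OnlineLinearModel:
    """Baseline 'programming': the rule y = w * x + b with fixed starting values."""
    w: float = 0.0
    b: float = 0.0
    lr: float = 0.01  # learning rate (hypothetical choice)

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def learn(self, x: float, y: float) -> None:
        # One stochastic-gradient step on squared error: new data input adapts the rule.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error


def mean_abs_error(model: OnlineLinearModel, data: list[tuple[float, float]]) -> float:
    return sum(abs(model.predict(x) - y) for x, y in data) / len(data)


# Hypothetical synthetic data stream following y = 2x + 1 (not clinical data).
stream = [(i / 10, 2 * (i / 10) + 1) for i in range(-20, 21)]

model = OnlineLinearModel()
before = mean_abs_error(model, stream)
for _ in range(200):  # cumulative exposure: the stream is presented repeatedly
    for x, y in stream:
        model.learn(x, y)
after = mean_abs_error(model, stream)

print(f"mean error before learning: {before:.3f}; after learning: {after:.3f}")
print(f"learned rule: y = {model.w:.2f}x + {model.b:.2f}")
```

The error falls only because the same predict-and-adjust loop sees more data, which is the sense of “learning” used in this statement.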

1.3. Common artificial intelligence applications (chatbots)

At the time of this writing, thousands of AI tools are available for widespread use. Among the more publicized examples of an AI application was the November 30th, 2022, launch of Chat Generative Pretrained Transformer (ChatGPT) [1]. According to its developer, OpenAI:

“We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.” (https://openai.com/blog/chatgpt).

The following was the response from ChatGPT to the text-typed prompt: “What is Artificial Intelligence in less than 40 words?” (ChatGPT, personal communication, March 4, 2023).

“Artificial Intelligence (AI) refers to the development of computer systems that can perform tasks that would typically require human intelligence, such as learning, reasoning, decision-making, and natural language processing.”

In addition to ChatGPT-4 (the latest GPT version by OpenAI, which is integrated with Microsoft applications), another notable chatbot is Google's Language Model for Dialogue Applications (LaMDA) [2]. While not specifically trained for medical purposes, these chatbots are used for medical purposes, drawing upon data input from openly available medical texts, research papers, health system websites, health information podcasts, and videos [1]. Table 1 outlines 10 things to know about Artificial Intelligence in the management of patients with obesity.

Table 1.

Ten things to know about Artificial Intelligence in the management of patients with obesity.

| No. | Key point |
|---|---|
| 1. | Artificial Intelligence (AI) is the technologic acquisition of knowledge and skill by a nonhuman device that, after being initially programmed, has varying degrees of operations autonomous from direct human control and performs adaptive output tasks based upon data input learnings. |
| 2. | The use of AI in medical writing is undergoing exponential growth, including publications regarding obesity medicine. |
| 3. | Beyond general applications in everyday uses, AI has specific applications in medical research, medical practice, and the management of patients with obesity. |
| 4. | AI applications such as chatbots may assist clinicians who specialize in obesity medicine by answering queries and by performing tasks such as writing and publications, as well as drafting office or institutional Policies and Procedures and Standard Operating Procedures. |
| 5. | AI may help clinicians who specialize in obesity medicine by offering interactive programming related to analyses of body composition imaging for more accurate assessment of anthropometrics, behavior coaching, personal nutritional intervention & physical activity recommendations, predictive modeling for identifying patients at risk for obesity-related complications, and implementation of precision/personalized medicine, where treatment is optimized based upon a patient's clinical presentation and genetic makeup. |
| 6. | AI has multiple applications to medical education, such as personalized learning, virtual reality, and intelligent tutoring systems. |
| 7. | AI may augment telemedicine, with tasks ranging from scheduling of appointments to integrating remote monitoring of patients (e.g., wearable technologies and mobile applications). |
| 8. | AI may help identify patterns in datasets related to a medical practice or institution (e.g., analytics related to electronic health records), such as revealing areas where potential improvements in health outcomes might be achieved with changes in clinician approaches. |
| 9. | Clinicians should be aware of general limitations in the use of AI for the management of patients with obesity. |
| 10. | Clinicians should be aware of potential biases in the application of AI to the care of patients with obesity. |

2. Illustrative timelines applicable to artificial intelligence and obesity medicine

2.1. History of artificial intelligence (AI)

The following was the response from ChatGPT to the question: “When was the first recorded practical use of ‘Artificial Intelligence’?” (ChatGPT, personal communication, March 25, 2023).

“The first recorded practical use of the term "Artificial Intelligence" was in 1956 at the Dartmouth Conference, a workshop organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The term was used to describe the field of study that aimed to create machines that could perform tasks that required human-like intelligence, such as natural language processing, problem-solving, and decision-making. Before the term "Artificial Intelligence" was coined, researchers in the field used terms like "cybernetics," "machine learning," and "automata theory" to describe their work. However, the Dartmouth Conference is widely regarded as the birthplace of AI as a distinct field of study and marked the beginning of a new era of research in machine intelligence.”

2.2. Medical publishing of artificial intelligence

From a medical publishing standpoint, PubMed is “a free resource supporting the search and retrieval of biomedical and life sciences literature with the aim of improving health–both globally and personally. The PubMed database contains more than 35 million citations and abstracts of biomedical literature. It does not include full text journal articles; however, links to the full text are often present when available from other sources, such as the publisher's website or PubMed Central (PMC). Available to the public online since 1996, PubMed was developed and is maintained by the National Center for Biotechnology Information (NCBI), at the U.S. National Library of Medicine (NLM), located at the National Institutes of Health (NIH).” (https://pubmed.ncbi.nlm.nih.gov/about/).

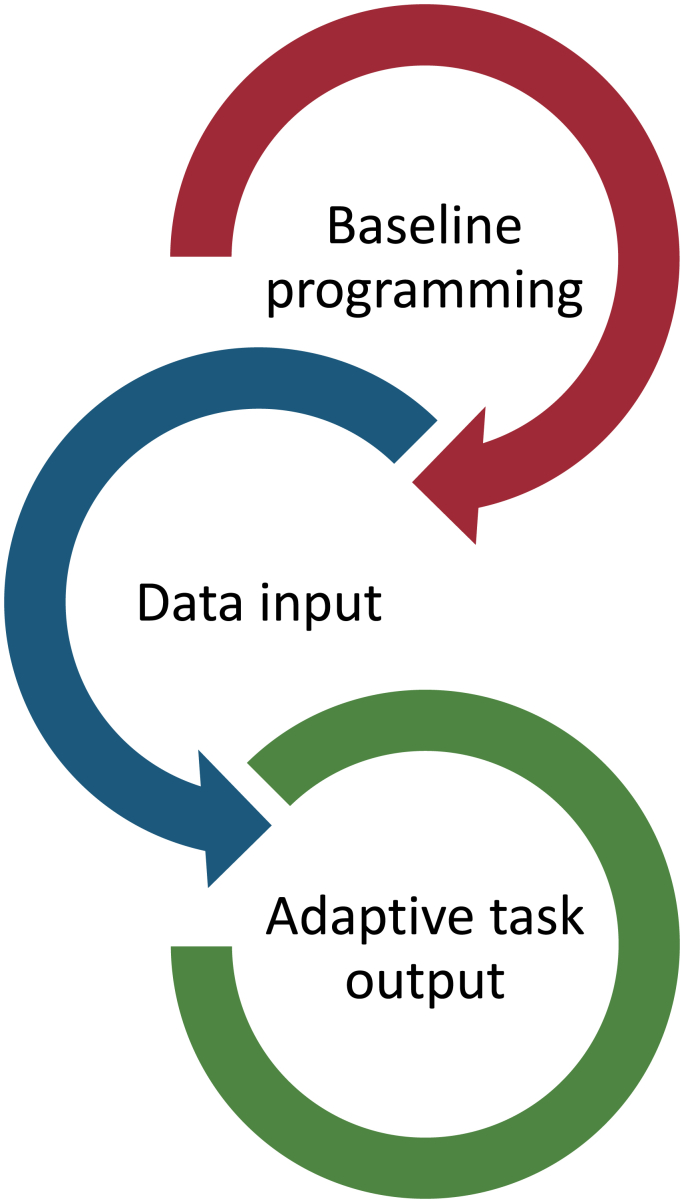

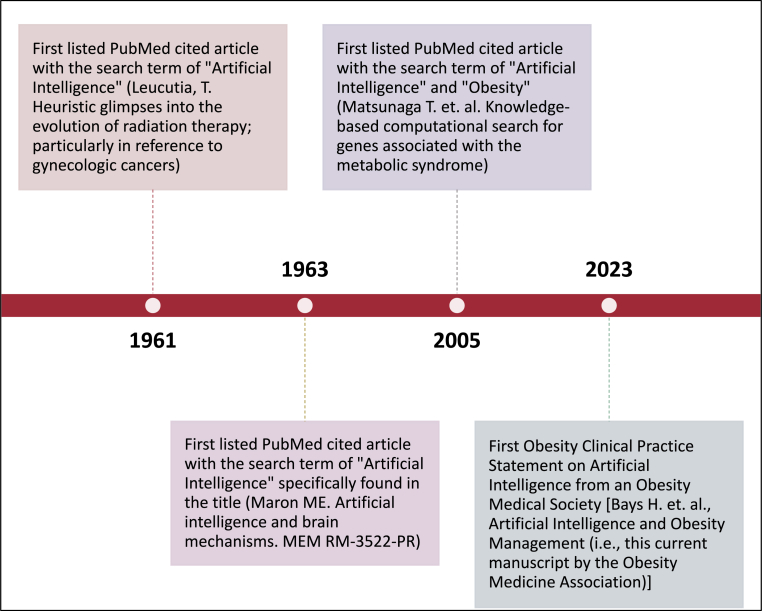

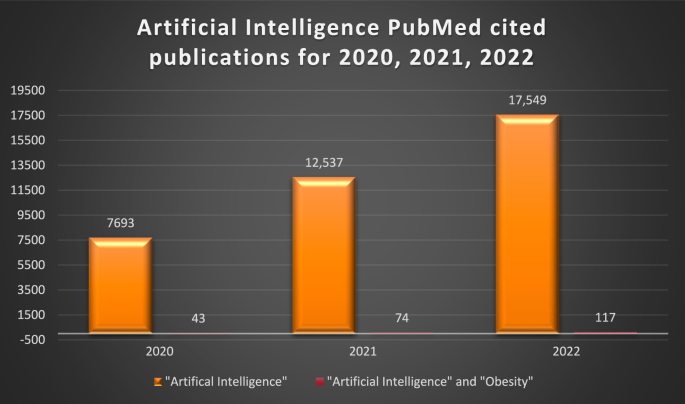

PMC currently includes journal articles dating from before the year 1800. Fig. 2 describes some sentinel timeline events in PubMed Central medical publications regarding AI and obesity medicine. Fig. 3 describes the number of publications in 20-year increments for the search term “Artificial Intelligence,” as well as for “Artificial Intelligence” and “Obesity,” via a PubMed search. Fig. 4 describes the yearly PubMed searchable publications for years 2020, 2021, and 2022 for the search term “Artificial Intelligence,” as well as for “Artificial Intelligence” and “Obesity.” Comparison of Figs. 3 and 4 reveals that for the 80 years spanning 1939 to 2019, the total number of PubMed searchable publications for “Artificial Intelligence” was 30,390 and the total for “Artificial Intelligence” and “Obesity” was 71, with all of the latter occurring within 1999–2019. The total number of PubMed searchable publications for “Artificial Intelligence” in the three years of 2020, 2021, and 2022 was 37,779 medical articles, with 234 articles returned for the search terms “Artificial Intelligence” and “Obesity.” Thus, more PubMed searchable articles were published on “Artificial Intelligence” in the past 3 years than in the prior 80 years (i.e., years 1939–2019). Similarly, a PubMed search revealed over 3 times as many articles published in 2020, 2021, and 2022 on “Artificial Intelligence” and “Obesity” as were published in all prior decades combined.
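The comparison above is simple arithmetic on the reported counts. The short Python sketch below reproduces it from the numbers stated in this section (PubMed search dated March 25, 2023); the variable names are illustrative only.

```python
# Counts as reported in this section (PubMed search dated March 25, 2023).
ai_1939_2019 = 30_390
ai_obesity_1939_2019 = 71
ai_2020_2022 = 37_779
ai_obesity_2020_2022 = 234

# Ratio of the 3-year totals to the preceding 80-year totals.
ai_ratio = ai_2020_2022 / ai_1939_2019
ai_obesity_ratio = ai_obesity_2020_2022 / ai_obesity_1939_2019

# Average publications per year in each period.
ai_per_year_before = ai_1939_2019 / 80
ai_per_year_recent = ai_2020_2022 / 3

print(f"AI: {ai_ratio:.2f}x the prior 80-year total")                # 1.24x
print(f"AI + Obesity: {ai_obesity_ratio:.2f}x the prior total")      # 3.30x
print(f"AI per year: {ai_per_year_before:.0f} (1939-2019) "
      f"vs {ai_per_year_recent:.0f} (2020-2022)")
```

The per-year averages make the acceleration more explicit than the period totals alone.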

Fig. 2.

Illustrative sentinel events in medical publications regarding Artificial Intelligence and obesity medicine via PubMed Search dated March 25, 2023 [[3], [4], [5]].

Fig. 3.

Number of 20-year increment publications from years 1939–2019 for the search term “Artificial Intelligence,” as well as for “Artificial Intelligence” and “Obesity” via PubMed search dated March 25, 2023.

Fig. 4.

Number of yearly publications for years 2020, 2021, and 2022 for the search term “Artificial Intelligence”, as well as for “Artificial Intelligence” and “Obesity” via PubMed search on March 25, 2023.

In short, a PubMed search revealed no reference citation returns for “Artificial Intelligence” and “Obesity” for years 1939–1999. However, within the past several years, the number of publications referencing Artificial Intelligence has dramatically increased. It may reasonably be anticipated that the November 30th, 2022, launch of ChatGPT and other similar chatbots will facilitate an even greater increase in PubMed searchable publications regarding “Artificial Intelligence” in the forthcoming years.

2.3. Current medical publishing of artificial intelligence chatbots

Currently, the published evidence regarding the use of existing chatbots to aid patients with overweight or obesity suggests that most chatbots provide personalized recommendations and motivational messages [6]. Less common, but present, are AI-mediated gamification and emotional support. Factors that may enhance the success of chatbots in improving patient health include accessibility (i.e., readily available 24 hours a day, 7 days per week), interactivity, empathy, emotional support, motivation (i.e., goal-setting, self-monitoring, and feedback), personalization (i.e., a patient-centered approach to nutrition and physical activity based upon integration with electronic health records, patient record-keeping, and monitoring devices that include wearable technologies), and increased engagement options through availability of multiple platforms and devices (e.g., natural language text, as well as hands-free speech and videos) [6].

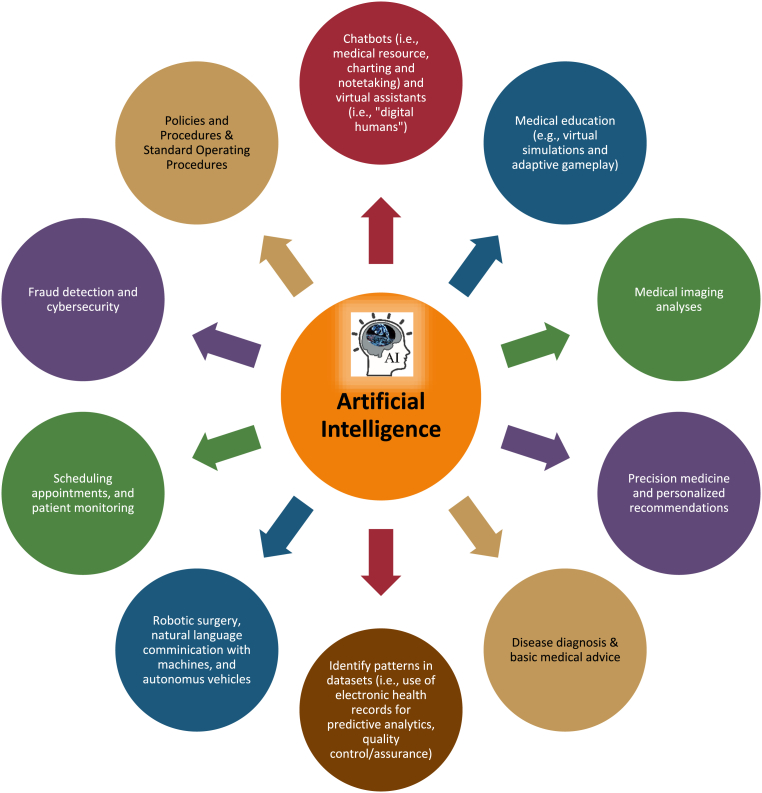

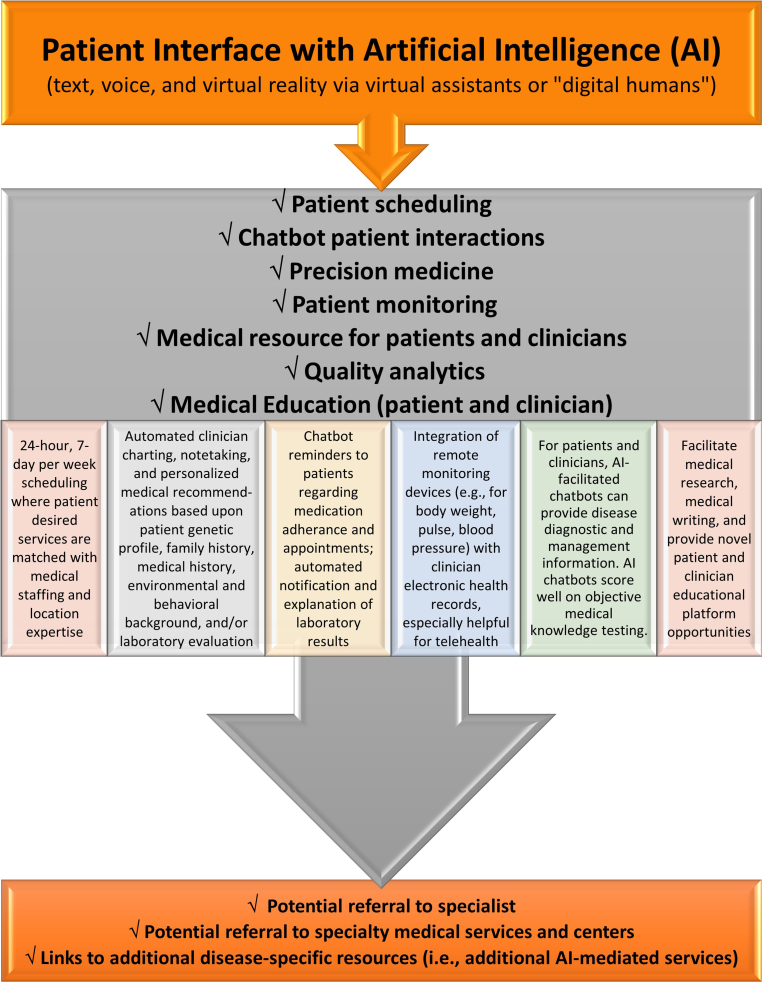

3. What are illustrative uses of artificial intelligence in medical practice? (See Fig. 5)

Fig. 5.

Illustrative uses of artificial intelligence in medical practice.

3.1. Chatbots and virtual assistants

Chatbots are applications derived from computer programs designed to interact with humans in a conversational manner [1]. Chatbots have two main components: the AI system component (i.e., device programming that simulates intelligent behavior, such as learning, problem-solving, decision-making, and perception) and the interface component (i.e., the point where people, devices, and/or systems interact) [1]. Natural language chatbots utilize programmed rules and keyword recognition to simulate conversation with human users.

Utilization of a chatbot begins with a “session,” where the user enters a query (i.e., “prompt”) via spoken and/or written human communication that is familiar to the user (i.e., “natural language”) [1]. A query may be a question (e.g., “Why is obesity a disease?”), or it may be a request to perform a task (e.g., “Draft 3 brief paragraphs about the relationship of adiposopathy to the disease of obesity”). As per Fig. 1, AI systems involve baseline algorithmic programming and the subsequent ability to sense, acquire, and process data input to facilitate adaptive task output responses, such as communicating answers to user queries and performing tasks through natural language human interactions.
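A rule-and-keyword chatbot of the kind described above can be sketched in a few lines of Python. The rules and replies below are hypothetical placeholders (not clinical advice), and the sketch covers only keyword matching; generative chatbots such as ChatGPT instead produce replies from a trained language model.

```python
import re

# Hypothetical keyword rules: (trigger keywords, scripted reply). Not clinical advice.
RULES = [
    ({"appointment", "schedule", "reschedule"},
     "I can help you book or change an appointment."),
    ({"refill", "prescription"},
     "I can send a refill request to your care team."),
    ({"hours", "open"},
     "Our office hours are listed on the practice website."),
]
FALLBACK = "I am not sure; I will forward your question to the care team."


def respond(prompt: str) -> str:
    """Return the reply of the first rule whose keywords appear in the prompt."""
    words = set(re.findall(r"[a-z']+", prompt.lower()))
    for keywords, reply in RULES:
        if words & keywords:  # keyword recognition: any overlap triggers the rule
            return reply
    return FALLBACK


# Example session: each prompt is matched against the rules in order.
for prompt in ["Can I reschedule my appointment?", "I need a refill",
               "Why is obesity a disease?"]:
    print(f"> {prompt}\n{respond(prompt)}")
```

Because the last prompt matches no rule, it falls through to the scripted fallback, which illustrates why purely rule-based chatbots handle open-ended medical questions poorly.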

3.1.1. Note-taking and scheduling

- Chatbots can assist with medical charting and note-taking (e.g., “AI-driven applications are available to capture the dictation of medical notes; many such applications are attempting to synthesize patient interviews and laboratory test results to write notes directly, without clinician intervention”) [7]. Specifically, while the clinician would still review and electronically sign their note, AI-automated notes (e.g., SOAP [subjective, objective, assessment, and plan] note-taking format, application of billing codes, as well as laboratory and prescription ordering) may be generated from a voice recording (e.g., on a clinician mobile device) of patient visits, or from a recording of the patient-physician encounter [1], allowing more time for patient interaction and less time dedicated to charting (https://www.medpagetoday.com/special-reports/exclusives/103713?xid=nl_mpt_investigative2023-03-29&eun=g1174638d0r&utm_source=Sailthru&utm_medium=email&utm_campaign=InvestigativeMD_032923&utm_term=NL_Gen_Int_InvestigateMD_Active).
- Among the automated charting processes that help reduce clinician (and patient) time investment is autofill of data fields with known demographics and other electronically recorded information, avoiding the inefficient performance of redundant tasks.
- Once medical charting and notes are reviewed, edited, and signed, AI-generated patient visit summaries, disease information, education applicable to the visit, and medication/treatment overviews may be automatically (and electronically) sent to the patient.
- Allows patients 24-hour, 7-day-a-week access to scheduling and rescheduling, beyond regular medical practice office hours and without being placed on telephone hold.
- Allows patients 24-hour, 7-day-a-week answers to frequently asked questions regarding a specific disease state or medical practice.
- Screens the patient's condition, allergies, and insurance information and prioritizes the timing and location of appointments.
- Matches the patient's preferred medical service to the optimal location (e.g., specialized health facility or laboratory test center).
- Matches the patient's desired medical care with individual clinician or staff expertise.
- Incorporates consideration of staff-preferred working days and work hours (i.e., start of day, end of day, holidays, personal time off), as well as potential overtime hours.
- Analyzes scheduling data that may suggest areas for scheduling improvement and labor forecasting.
- Interactive, natural language communication with patients through chatbots used for sales, marketing, and lead generation may help inform patients of medical practice capabilities and medical staff expertise, thus helping promote scheduling of appointments for other medical services.
- Based on lead generation initiatives, AI can stratify (i.e., “score”) leads based on urgency and need for services. This can then help clinicians and organizations prioritize next steps in patient encounters and allocation of medical resources.
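The appointment-matching criteria listed above (requested service, clinician expertise, staff working days, remaining capacity) can be expressed as a simple filter. The sketch below is hypothetical: the `Clinician` fields, the roster, and the day labels are illustrative assumptions, and it shows only rule-based matching, not any learned component of a commercial AI scheduling product.

```python
from dataclasses import dataclass


@dataclass
class Clinician:
    name: str
    expertise: set[str]      # services this clinician provides
    working_days: set[str]   # staff-preferred working days
    booked_days: set[str]    # days with no remaining appointment slots


def match_clinicians(service: str, preferred_days: list[str],
                     staff: list[Clinician]) -> list[tuple[str, str]]:
    """Return (clinician, day) options, ordered by the patient's day preference,
    where the clinician offers the service, works that day, and has capacity."""
    options = []
    for day in preferred_days:
        for clinician in staff:
            if (service in clinician.expertise
                    and day in clinician.working_days
                    and day not in clinician.booked_days):
                options.append((clinician.name, day))
    return options


# Hypothetical staff roster for illustration only.
staff = [
    Clinician("Clinician A", {"nutrition", "obesity medicine"}, {"Mon", "Wed"}, {"Mon"}),
    Clinician("Clinician B", {"obesity medicine"}, {"Mon", "Tue"}, set()),
]

print(match_clinicians("obesity medicine", ["Mon", "Wed"], staff))
# [('Clinician B', 'Mon'), ('Clinician A', 'Wed')]
```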

3.1.2. Healthcare chatbots (i.e., medical resources, virtual assistants, “digital humans”)

- Chatbots are programmed to provide medical information. For example: “Recently, our next iteration, Med-PaLM 2 (Pathways Language Model), consistently performed at an “expert” doctor level on medical (licensing) exam questions, scoring 85%. This is an 18% improvement from Med-PaLM's previous performance and far surpasses similar AI models. While this is exciting progress, there's still a lot of work to be done to make sure this technology can work in real-world settings. Our models were tested against 14 criteria — including scientific factuality, precision, medical consensus, reasoning, bias and harm — and evaluated by clinicians and non-clinicians from a range of backgrounds and countries. Through this evaluation, we found significant gaps when it comes to answering medical questions and meeting our product excellence standards. We look forward to working with researchers and the global medical community to close these gaps and understand how this technology can help improve health delivery.” (https://blog.google/technology/health/ai-llm-medpalm-research-thecheckup/; https://arxiv.org/abs/2212.13138)
- ChatGPT (GPT-4) (https://hothardware.com/news/openai-gpt-4-model) reportedly exhibits human-level performance on a variety of professional and academic benchmarks. Beyond specific AI testing, GPT-4 has scored 1410 out of 1600 on the Scholastic Aptitude Test (SAT), which corresponds to the 90th percentile of human students, and scored well on the Quantitative and Verbal portions of the Graduate Record Examination (but less well on the Writing portion). GPT-4 has scored 298 out of 400 on the Uniform Bar Exam (sufficient for lawyer licensing) and 75% on the Medical Knowledge Self-Assessment Program (MKSAP-19).
- Chatbots provide patients natural language information about their health condition, treatment, and preventive measures. For example, in response to 164 questions regarding knowledge, management, and emotional support for cirrhosis and hepatocellular carcinoma (HCC), with responses graded by two transplant hepatologists and disagreements resolved by a third reviewer, the generative AI chatbot ChatGPT achieved 79.1% correct responses for cirrhosis and 74% for HCC. However, only small proportions of responses (47.3% for cirrhosis, 41.1% for HCC) were labeled as comprehensive. Performance was better in the domains of basic knowledge, lifestyle, and treatment than in the domains of diagnosis and preventive medicine. The authors concluded: “ChatGPT may have a role as an adjunct informational tool for patients and physicians to improve outcomes.” [8].
- In an evaluation of 25 questions addressing fundamental cardiovascular disease (CVD) preventive concepts, ChatGPT provided largely appropriate responses. This suggested “the potential of interactive AI to assist clinical workflows by augmenting patient education and patient-clinician communication around common CVD prevention queries” [9]. Among the limitations was that ChatGPT did not include cited references to support its responses.
- For straightforward, low-complexity medical questions, ChatGPT currently performs reasonably well as an AI-assisted decision support tool in cardiovascular medicine. For more complex patient presentations, ChatGPT may (currently) be accurate in only 50% of cases, often providing incomplete or inappropriate recommendations when compared with expert consultation [10].
- In general, chatbots answer patient medical questions with basic self-care recommendations for simple medical conditions, or with a recommendation for referral for more severe or complicated medical conditions.
- Additionally, chatbots may:
  - Remind patients to take or refill medications, as well as provide information regarding doses and side effects.
  - Contact the patient after a medical office visit, as virtual assistants or “digital humans” via computer or smartphone, using a natural language platform that assists the patient in collecting requested data (e.g., body weight, pulse, blood pressure). Remote patient monitoring may include integration with home health monitoring devices and wearable technologies that may integrate with clinician electronic health records, which may be especially valuable with telehealth [11].
  - Provide patient feedback and motivation regarding achievement of health goals.
  - Provide coping strategies and emotional support for patients with depression and anxiety, with algorithmic determination of the need for referral to a mental health professional. AI-enhanced psychotherapy (i.e., via AI chatbot agents) may result in high patient satisfaction, engagement, and retention rates [12].
  - Streamline the prior authorization process for insurance payor drug approval by use of a universal preauthorization template [13]. AI-facilitated direct communication with insurance payors, using information derived from the electronic health record, may reduce the number of health care personnel (e.g., medical office staff, pharmacists) required for prior authorization approval (and rebuttals to other health insurance coverage denials), thus allowing more optimal and more timely treatment of patients (https://www.medpagetoday.com/special-reports/exclusives/102312).
  - Help patients clarify which services are available and covered by insurance payors (e.g., dietitian and nutrition services) to reduce “surprise billing.”
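The remote-monitoring step described above (collecting home readings and surfacing those that need clinician attention) can be sketched as follows. The review ranges, field names, and readings are hypothetical placeholders chosen for illustration, not clinical thresholds, and the logic is a simple rule-based filter rather than a learned model.

```python
from statistics import mean

# Hypothetical clinician-configured review ranges (placeholders, not clinical guidance).
REVIEW_RANGES = {
    "pulse_bpm": (50, 110),
    "systolic_mmHg": (90, 160),
}


def flag_readings(readings: list[dict]) -> list[str]:
    """List readings that fall outside the configured review ranges."""
    flags = []
    for reading in readings:
        for key, (low, high) in REVIEW_RANGES.items():
            value = reading.get(key)
            if value is not None and not (low <= value <= high):
                flags.append(f"{reading['date']}: {key}={value} outside {low}-{high}")
    return flags


def weight_change_kg(readings: list[dict], window: int = 3) -> float:
    """Mean of the last `window` body weights minus mean of the first `window`."""
    weights = [r["weight_kg"] for r in readings if "weight_kg" in r]
    return mean(weights[-window:]) - mean(weights[:window])


# Hypothetical home readings uploaded from a connected scale and blood pressure cuff.
readings = [
    {"date": "day 1", "weight_kg": 110.0, "pulse_bpm": 72, "systolic_mmHg": 128},
    {"date": "day 2", "weight_kg": 109.6, "pulse_bpm": 75, "systolic_mmHg": 131},
    {"date": "day 3", "weight_kg": 109.8, "pulse_bpm": 118, "systolic_mmHg": 126},
    {"date": "day 4", "weight_kg": 109.1, "pulse_bpm": 70, "systolic_mmHg": 124},
    {"date": "day 5", "weight_kg": 108.9, "pulse_bpm": 71, "systolic_mmHg": 122},
    {"date": "day 6", "weight_kg": 108.6, "pulse_bpm": 69, "systolic_mmHg": 121},
]

print(flag_readings(readings))  # ['day 3: pulse_bpm=118 outside 50-110']
print(round(weight_change_kg(readings), 2))  # -0.93
```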

3.1.3. E-commerce

- Chatbots can answer patient questions regarding the medical practice 24 hours a day, 7 days a week.
- Chatbots can answer patient questions regarding billing and insurance 24 hours a day, 7 days a week.
- AI analyses of patient data and medical service purchase history may help identify and potentially recommend additional personalized medical services.
- AI-mediated website creators are available where, based upon entry of a simple business idea, the AI application will generate a landing page, collect site images, integrate email functions, provide prescriber management, develop a brand name, create a logo, include testimonials, engage search engine optimization (SEO), and generate ads (long- and short-form writing).
- Chatbots may assist in conducting surveys, with feedback to the medical practice regarding patient perception of quality and desired additional services.
- From an overall organizational standpoint, online reviews can be analyzed and categorized by sentiment, allowing organizations to drive process improvement efforts from a data-driven perspective.
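Categorizing online reviews by sentiment can be illustrated with a minimal lexicon-based sketch. The word lists are hypothetical and deliberately tiny; commercial tools typically rely on much larger lexicons or trained language models, so this shows only the idea of scoring and tallying reviews.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny sentiment lexicons (illustration only).
POSITIVE = {"helpful", "friendly", "caring", "great", "easy"}
NEGATIVE = {"rude", "waited", "confusing", "late", "expensive"}


def sentiment(review: str) -> str:
    """Label a review by counting positive minus negative lexicon words."""
    words = re.findall(r"[a-z]+", review.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def summarize(reviews: list[str]) -> Counter:
    """Tally reviews per sentiment category."""
    return Counter(sentiment(r) for r in reviews)


reviews = [
    "The dietitian was friendly and helpful.",
    "We waited an hour and the billing was confusing.",
    "Parking is behind the building.",
]
print(summarize(reviews))
```

Tallies like these could then be tracked over time to see whether a process change (for example, to billing communication) shifts the balance of reviews.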

3.1.4. Medical education and training

Types of AI-mediated medical training include:

- Personalized learning, with teaching approaches tailored to the individual's background knowledge, strengths, needs, skills, interests, and best method of learning
- Virtual simulations through use of virtual reality involving visual and other sensory interfaces that serve as a likeness of patient interaction
- Clinical decision support through ready access to evidence-based recommendations and guidelines
- Natural language communication about medical topics (e.g., writings, publications, presentations)

3.1.5. Examples of current “virtual assistant” medical chatbots

While general chatbots can provide general medical science and general clinical advice, more specialized chatbots may help assist with:

- Medical illness symptom assessment, followed by potential diagnostic possibilities and applicable pharmacies, test centers, and clinician referrals
- Medication adherence
- Cancer management
- Mood, emotional health, and cognitive behavioral therapy
- Patient motivation for disease and preventive care management, enhanced via AI-mediated gaming and competitive interfaces

3.1.6. Chatbot strengths and weaknesses can be differentiated based upon

- User-friendliness (e.g., technical interface using smartphone, computer, or both)
- Popularity
- Content focus (i.e., applicability for a specific field of academia, type of business, or field of interest)
- Availability (i.e., free versus paid, marketed versus “in development”)
- Interactive platforms (i.e., visual/video and/or audio, via smartphone/computer)
- Ability for customization
- Input/output capabilities (e.g., text-to-text, text-to-image, text-to-video, voice-to-text, voice-to-image, voice-to-video, image-to-image)
- Date of initial knowledge base (i.e., may have limited ability to provide updated answers after the date of the initial knowledge programming)
- Ability to access information from the Internet in real time
- Permissiveness of output (e.g., some chatbots restrict or censor “sensitive” topics)
- Accuracy of output
- Completeness of output
- Reference database and citation of original sources
- Integration with Internet tools (e.g., Edge web browser/Bing search engine with AI ChatGPT, or Chrome web browser/Google search engine with AI Bard)
- Storage of personal data (i.e., chatbots may store conversations to “improve performance”)
- Scalability, future expandability, and survivability
- Personal preference

3.1.7. Future of specific chatbots

Due to the number of available chatbot options and the variance in their capabilities and developers, it is challenging to list all applicable chatbots. It is even more challenging to predict which chatbots and other AI-integrated devices and services will be most popular in the future, because AI and chatbots are in their infancy. An analogy would be the popularity of search engines. In the 1990s, among the more popular search engines and web services were Yahoo, Netscape Navigator, WebCrawler, Lycos, AltaVista, Ask Jeeves, and America Online. Some of these previously popular services are rarely used or no longer exist. Currently, Google is the most popular Internet search engine, followed by Microsoft Bing (https://blog.hubspot.com/marketing/top-search-engines). The integration of ChatGPT-4 with Bing has substantially increased Bing's usage (https://www.theverge.com/2023/3/9/23631912/microsoft-bing-100-million-daily-active-users-milestone).

3.2. Medical education and gameplay

As noted in section 3.1 regarding chatbots and virtual assistants, AI can enhance medical education via personalized learning, virtual simulations, clinical decision support, and natural language communication about medical topics (e.g., writings, publications, presentations). In addition, AI-facilitated gaming and adaptive gameplay may have application to medical education through interactive, “game scoring” assessed medical simulations that allow medical students to diagnose and treat medical conditions and practice medical procedures (e.g., virtual surgeries without the risk of harm to patients). AI gaming can also be used to help patients recover from injuries or illnesses, or to help patients with immobility or other complications due to obesity, via facilitation of interactive and personalized engagement.

3.3. Medical imaging analyses and image recognition

Most clinicians are familiar with AI-facilitated facial recognition and fingerprint-mediated access to computers and smart technologies (e.g., smartphones). As noted in Fig. 1, the AI process begins with baseline algorithmic programming, followed by a computerized “learning” process through which the applicable device is then able to “recognize” a face or fingerprint. Image recognition technology can also be used to create 360-degree navigable, 3-dimensional models of a patient's anatomy, which may help clinicians more accurately analyze and interpret (i.e., diagnose) body imaging anomalies, more safely plan surgeries and other medical procedures, or more precisely monitor the stability, growth, or regression of an anatomic lesion.

3.4. Precision medicine and personalized recommendations

From a general, non-medical marketing perspective, AI-mediated analysis of data regarding an individual's demographics, interests, and online behavior facilitates advertisement and recommendation of products and services tailored to that user. Use of AI for personalized advertisement and shopping recommendations is commonplace for search engines and online e-commerce stores. For example, if a patient has an Internet search or online purchase history for nutritional, physical exercise, and/or weight reduction services or products, then a search engine and/or online e-commerce store will often prioritize online advertisements to that patient based upon data related to previous online searches and/or purchases. Similarly, through analysis of data regarding customer demographics, interests, and online behavior, AI facilitates recommendations of information and entertainment specific to the user. For example, if a patient has a history of viewing online streaming content related to nutrition, physical activity, or weight reduction, then a streaming service will often prioritize and advertise additional recommended content (and entertainment) related to nutrition (e.g., cooking shows), physical activity, or weight reduction.

A similar concept applies to precision medicine, which is a diagnostic and therapeutic approach less focused on “one size fits all,” and a strategy more focused on individualized care based upon variability in genes, environment, and behavior. Via predictive modeling based upon analysis and integration of patient data (e.g., genetic profile, family history, medical history, environmental and behavioral background, and laboratory evaluation), AI may help predict disease and treatment risks and benefits respective to the individual. AI may also help predict an individual's potential for drug-drug interactions [14].
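Predictive modeling of individual risk is often done with techniques such as logistic regression. The minimal Python sketch below fits one by gradient descent; the two standardized features, the toy training data, and the learning settings are all hypothetical placeholders, so the output illustrates the mechanics only and is not a clinical risk model.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function mapping a score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_risk(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 0.1, epochs: int = 2000) -> tuple[list[float], float]:
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n = len(X)
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = predict_risk(w, b, xi) - yi  # gradient of log-loss w.r.t. the score
            for j, xij in enumerate(xi):
                grad_w[j] += err * xij
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: two standardized features per patient; 1 = outcome occurred.
# The last two cases overlap the other class, so the fit converges to finite weights.
X = [[-1.0, -0.5], [-0.5, -1.0], [0.0, -0.2], [-0.8, 0.3],
     [0.2, 0.1], [0.8, 0.5], [1.0, 1.2], [0.6, -0.1],
     [0.3, 0.2], [-0.2, 0.1]]
y = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

w, b = train_logistic(X, y)
print(f"predicted risk, higher feature values: {predict_risk(w, b, [1.0, 1.0]):.2f}")
print(f"predicted risk, lower feature values:  {predict_risk(w, b, [-1.0, -1.0]):.2f}")
```

In practice, such a model would be trained and validated on real, representative patient data; the learned weights here carry no clinical meaning.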

3.5. Disease diagnosis and basic medical advice

For more basic medical conditions, chatbots and virtual assistants can interact with patients using natural language processing and suggest the most common diagnosis based upon the patient's signs and symptoms, as well as basic initial treatments to consider. For more complex or serious medical conditions, chatbots and virtual assistants can provide the patient guidance regarding the need for a more complete evaluation, the timing of such an evaluation, and potentially scheduling an appointment to conduct it. AI can also assist with diagnosis through algorithmic analysis of medical images to better detect abnormalities and track changes over time.

3.6. Identify patterns in datasets for predictive analytics

AI analyses of medical data (e.g., electronic health records) can be used to predict the likelihood of future health outcomes for individual patients or groups of patients. AI analyses of data from medical practices and institutions allow patient data to be used to identify and predict metric trends that may benefit from adapted quality control and quality assurance policies, and to proactively prevent potentially avoidable adverse patient outcomes.
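As a baseline for the trend detection described above, the sketch below fits an ordinary least-squares trend line to a quality metric and flags it for review when the trend rises. This is classical statistics rather than AI; AI systems would typically use richer models with many predictors. The metric values, the function names, and the zero-slope review threshold are all hypothetical.

```python
def ols_slope(values: list[float]) -> float:
    """Ordinary least-squares slope of values regressed on time index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_t = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(values))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


def needs_review(values: list[float], max_slope: float = 0.0) -> bool:
    """Flag a quality metric whose fitted trend rises faster than max_slope per period."""
    return ols_slope(values) > max_slope


# Hypothetical monthly rates (%) of an adverse outcome at one clinic.
monthly_rate = [4.0, 4.2, 4.5, 4.9]
print(round(ols_slope(monthly_rate), 2), needs_review(monthly_rate))  # prints 0.3 True
```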

3.7. Robotics, natural language communication with machines, and autonomous vehicles

Robotics illustrates the difference between a simple machine and an AI-empowered machine. Vacuum cleaners without AI vacuum only the areas to which they are manually moved by the human user. Conversely, vacuum cleaners integrated with AI can be self-programmed to map out the desired area, start vacuuming at programmed times and dates, vacuum the mapped spaces, and then return to a base when vacuuming is completed (See Fig. 1). Medical robotics are used for minimally invasive surgeries with greater precision and control. Regarding bariatric surgery, AI-directed robotics has the potential to augment surgical judgment towards improved patient outcomes [15].

Regarding natural language communication with machines, the term “Internet of things” refers to physical objects, such as machines, that are embedded with sensors and software that connect and exchange data with other devices and systems via the Internet. Examples include voice AI devices that can answer questions, control smart home appliances (e.g., lights, thermostats, locks, and security cameras), play music, set reminders and alarms, and make phone calls. Natural language communication with a computer can be used to extract information from clinical notes and other medical documents, identify patients meeting criteria for clinical trials, and enhance medical education by allowing students to interact with virtual patients and practice clinical decision-making in a safe and controlled environment.

Many are familiar with how smartphones can connect remotely to a car to monitor basic functions, start the car, and lock its doors. Many cars also have voice recognition capabilities adaptable to an individual's voice patterns. As a result of this “learning,” cars can respond to voice commands to make phone calls, map destinations, recommend route options, adjust cabin temperature, and control audio functions (e.g., selecting radio versus other audio sources, and volume). On a more advanced level, AI technologies for self-driving cars employ computer vision, machine learning, and deep learning, which allow for perception of surroundings (e.g., roads, traffic), decision-making, and safe navigation. Potential future uses of autonomous vehicles for medical purposes include transporting medical supplies and equipment to patients in remote areas where access to medical supplies may otherwise be challenging, as well as transporting patients with mobility challenges to and from appointments.

3.8. Scheduling appointments and patient monitoring

As noted in Section 3.1, chatbots can assist with 24-hour, 7-day-a-week patient and staff appointment scheduling, which can be programmed to be flexible and adaptable based upon the patient's condition and insurance information, the location of desired appointments, matching the patient's preferred medical service to the optimal location (e.g., specialized health facility or lab test center), and matching the patient's desired medical care with the most appropriate individual clinician or staff expertise. In addition, AI can employ chatbots, virtual assistants, or other interfaces to remind patients to take medications and to collect post-evaluation data for remote monitoring (e.g., body weight, pulse, blood pressure), potentially integrating patient home health monitoring devices and wearable technologies with clinician electronic health records, which may be especially valuable with telehealth [11].
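One simple way remote-monitoring data might be surfaced to clinicians is a rule-based alert on uploaded readings. The sketch below is a fixed-rule illustration, not a learned AI model; the `Reading` schema, the function name, and every threshold (180/120 mm Hg, 5% weight change) are hypothetical placeholders that a clinician would need to set.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One home-device upload (hypothetical schema)."""
    patient_id: str
    systolic: int
    diastolic: int
    weight_kg: float


def flag_readings(readings: list[Reading], baseline_kg: dict[str, float],
                  sys_max: int = 180, dia_max: int = 120,
                  weight_change_pct: float = 5.0) -> list[str]:
    """Return alert messages for clinician review; thresholds are placeholders."""
    alerts = []
    for r in readings:
        if r.systolic > sys_max or r.diastolic > dia_max:
            alerts.append(f"{r.patient_id}: blood pressure {r.systolic}/{r.diastolic}")
        base = baseline_kg.get(r.patient_id)
        if base:  # skip patients without a recorded baseline weight
            change = 100 * (r.weight_kg - base) / base
            if abs(change) >= weight_change_pct:
                alerts.append(f"{r.patient_id}: weight change {change:+.1f}%")
    return alerts


readings = [Reading("A", 185, 100, 100.0), Reading("B", 130, 80, 94.0)]
print(flag_readings(readings, {"A": 100.0, "B": 100.0}))
# prints ['A: blood pressure 185/100', 'B: weight change -6.0%']
```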

3.9. Fraud detection and cybersecurity

Some areas of medicine (e.g., the disease of obesity and its treatment) are especially susceptible to scams and fraud (https://consumer.ftc.gov/articles/truth-behind-weight-loss-ads). AI algorithms can: (1) analyze patient data, medical billing records, and insurance claims to identify patterns and anomalies that may suggest fraudulent activity; (2) identify potential security threats to medical data systems by detecting unusual activity on networks or computer servers (e.g., unauthorized access or data breaches); and (3) analyze email communications to identify suspicious links or email attachments.
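Item (1) above, flagging anomalies in billing data, can be illustrated with a simple robust outlier rule. The sketch below uses the modified z-score based on the median absolute deviation, with the commonly cited 3.5 cut-off; this is a statistical technique, whereas production fraud-detection systems typically use learned models over many features. The claim amounts and the function name are invented, and a flagged claim is a prompt for human review, not evidence of fraud.

```python
from statistics import median


def flag_outlier_claims(amounts: dict[str, float], cutoff: float = 3.5) -> list[str]:
    """Flag claims whose modified z-score (median/MAD based) exceeds the cutoff."""
    values = list(amounts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread, so the modified z-score is undefined
    return [claim_id for claim_id, v in amounts.items()
            if abs(0.6745 * (v - med) / mad) > cutoff]


claims = {"c1": 120.0, "c2": 130.0, "c3": 125.0, "c4": 118.0, "c5": 122.0, "c6": 950.0}
print(flag_outlier_claims(claims))  # prints ['c6']
```

The median and MAD are used instead of the mean and standard deviation because a single extreme claim would otherwise inflate the spread and partly mask itself.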

3.10. Policies and procedures & standard operating procedures

In 2022, the Obesity Medicine Association published a Clinical Practice Statement providing a listing of sentinel documents related to establishment of an (obesity) medical practice [11], with illustrative major headings that included:

- Medical Practice Summary Essentials
- Business Startup
- Equipment
- Services
- Medical Practice Priorities and Philosophy
- Office Policies and Procedures
- Standard Operating Procedures
- Patient Materials
- Certifications and Ongoing Training

Under each of the major headings above were extensive item listings. However, the authors of this OMA Clinical Practice Statement did not provide full document examples of each item listed under each heading, because such documents are best crafted based upon individual needs, preferences, and local standards. Chatbots may be able to provide information and/or a draft of almost all of the suggested items listed in these tables. Alternatively, or in addition, various websites provide adaptable and free documents, including legal documents.

As an illustrative example, in the OMA CPS table entitled “Office Policies and Procedures,” one of the item listings was “Smoking Policies” [11]. Table 2 presents the response from ChatGPT to the text-typed question: “Create a final draft of smoking policies and procedures for a medical practice in Kentucky” (ChatGPT, personal communication, April 3, 2023). If this draft were to be used in a medical practice, the wording would best be edited to reflect the individual needs, philosophy, and priorities of the medical practice, perhaps with a legal review.

Table 2.

Response from ChatGPT to the text-typed question: “Create a final draft of smoking policies and procedures for a medical practice in Kentucky” (ChatGPT, personal communication, April 3, 2023). This is illustrative of a policy and procedure, or standard operating procedure that if used in “real life,” would need to be edited to better fit the individual needs of the medical practice and may potentially benefit from a legal review.

| Smoking Policies and Procedures for a Medical Practice in Kentucky |
|---|
| Introduction: The medical practice in Kentucky is committed to providing a healthy and safe environment for all employees, patients, and visitors. Smoking is a significant health hazard that can cause serious illnesses such as heart disease, lung cancer, and stroke. In line with this, the medical practice has implemented the following policies and procedures on smoking: |
| Policy Statement: Smoking is prohibited in all indoor and outdoor areas of the medical practice facility, including parking lots, sidewalks, and vehicles on the premises. This policy applies to all employees, patients, visitors, and contractors. |
| Procedure: |
| Signs: The medical practice will post “No Smoking” signs at the entrances, exits, and prominent locations throughout the facility to remind everyone of the smoking policy. |
| Designated Smoking Area: The medical practice will not provide a designated smoking area. However, patients who require medical assistance and cannot go off the premises for a smoke can inform their healthcare provider. The healthcare provider can make an exception in some cases, but only in areas that are not common or shared spaces, and only with prior approval from a supervisor. |
| Education and Awareness: The medical practice will provide educational materials on the dangers of smoking, the benefits of quitting smoking, and resources for quitting smoking. The practice will also include information on smoking in its orientation program for new employees. |
| Enforcement: The medical practice expects all employees, patients, visitors, and contractors to comply with the smoking policy. The medical practice will take the following measures to ensure compliance: |
| It is the responsibility of all employees, patients, visitors, and contractors to comply with the smoking policy. |
| Review: The smoking policy will be reviewed periodically to ensure its effectiveness and relevance. |
| Conclusion: The medical practice in Kentucky is committed to providing a healthy and safe environment for everyone. The smoking policies and procedures will ensure that everyone is aware of the dangers of smoking and that the medical practice is doing everything possible to protect everyone's health. We appreciate your cooperation in implementing these policies and procedures. |

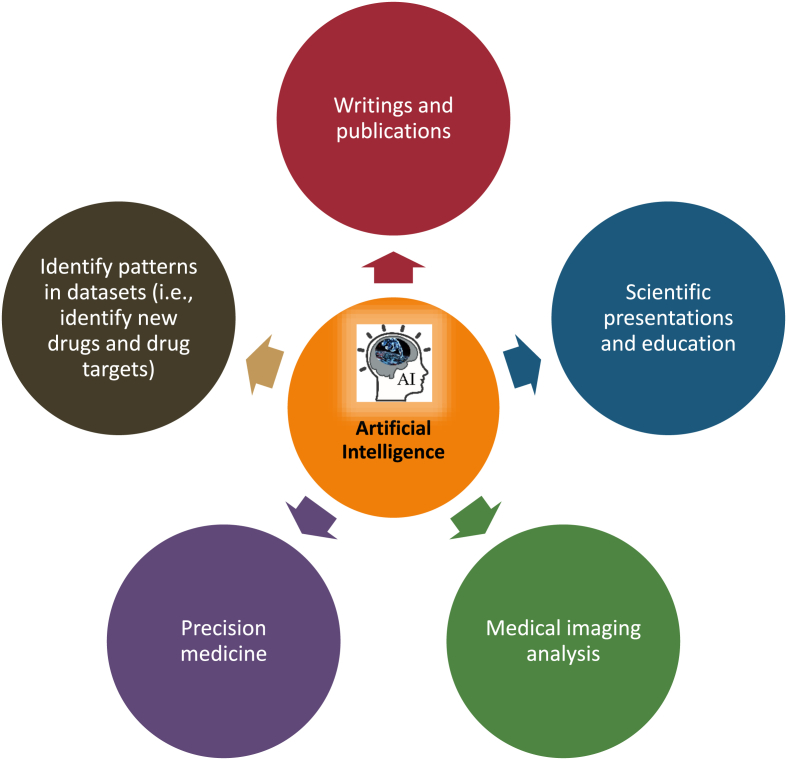

4. What are illustrative uses of artificial intelligence in medical research? (See Fig. 6)

Fig. 6.

Illustrative uses of artificial intelligence in medical research.

Use of AI for medical research includes:

4.1. Writings and publications

4.1.1. Medical writing and chatbots

Among the more common applications of AI in medical research is the use of chatbots in medical publications. As noted in Section 3.1, different chatbots have different capabilities. Chatbots differ in their applicability to a specific field of academia, availability (e.g., free versus paid), ability for customization, output capabilities (e.g., text-to-text output, text-to-image output), date of initial knowledge base, ability to access information from the Internet in real time, permissiveness of output (e.g., some chatbots restrict “sensitive” topics), accuracy of output, and completeness of output. With specific regard to medical uses, not all chatbots interact with PubMed and not all chatbots provide real-time information. That said, AI in general and chatbots specifically are at the earliest of stages, with major advances anticipated soon, which presumably would ultimately include full integration with PubMed Central.

4.1.2. Journal policies and chatbots

Many journals have established criteria for use of chatbots in article submissions, with the main emphasis being the need for disclosure and assurance that the human authors take responsibility for the integrity of the content generated by AI tools. Authors should report AI assistance in the Acknowledgment section, the Methods section, or a dedicated manuscript section [16]. Table 3 details the Artificial Intelligence policies for Obesity Pillars. Included is the instruction that AI and AI-assisted technologies should not be listed as an author or co-author, nor cited as an author. These policies also require an additional disclosure, reflected by the declaration at the end of this manuscript that states:

“During the preparation of this work the author(s) used Chat GPT to help list and categorize content. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.”

Table 3.

Artificial Intelligence policies for Obesity Pillars (i.e., journal of the Obesity Medicine Association).

| The use and declaration of AI and AI-assisted technologies in scientific writing |
|---|
| Where authors use artificial intelligence (AI) and AI-assisted technologies in the writing process, authors should: |
| Authors must disclose the use of AI and AI-assisted technologies in the writing process by adding a statement at the end of their manuscript in a new section entitled ‘Declaration of AI and AI-assisted technologies in the writing process’. Statement: During the preparation of this work the author(s) used [NAME TOOL/SERVICE] in order to [REASON]. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication. This declaration does not apply to the use of basic tools for checking grammar, spelling, references etc. If there is nothing to disclose, there is no need to add a statement. |

4.1.3. Copyright and chatbots

It is the human review and editing function that may best help avoid potential copyright infringements. Chatbots derive their information from pre-existing data and text; thus, chatbot-generated text has the potential to reflect the wording of its sources. Because chatbots are not human authors, the human author is responsible for avoiding copyright infringement in chatbot-generated text. As per Table 3, AI and AI-assisted technologies should not be listed as an author or co-author, nor cited as an author. “Authorship implies responsibilities and tasks that can only be attributed to and performed by humans.”

On March 16, 2023, the U.S. Copyright Office issued “Copyright Registration Guidance: Works Containing Material Generated by Artificial Intelligence” (https://www.federalregister.gov/documents/2023/03/16/2023-05321/copyright-registration-guidance-works-containing-material-generated-by-artificial-intelligence#:%7E:text=When%20an%20AI%20technology%20determines). A sentinel aspect of copyright regarding AI is the “Human Authorship Requirement,” which states:

“In the Office’s view, it is well-established that copyright can protect only material that is the product of human creativity. Most fundamentally, the term “author,” which is used in both the Constitution and the Copyright Act, excludes non-humans. The Office’s registration policies and regulations reflect statutory and judicial guidance on this issue … When an AI technology determines the expressive elements of its output, the generated material is not the product of human authorship. As a result, that material is not protected by copyright and must be disclaimed in a registration application.

In other cases, however, a work containing AI-generated material will also contain sufficient human authorship to support a copyright claim. For example, a human may select or arrange AI-generated material in a sufficiently creative way that ‘the resulting work as a whole constitutes an original work of authorship’ or an artist may modify material originally generated by AI technology to such a degree that the modifications meet the standard for copyright protection. In these cases, copyright will only protect the human-authored aspects of the work, which are “independent of” and do “not affect” the copyright status of the AI-generated material itself.

This policy does not mean that technological tools cannot be part of the creative process. Authors have long used such tools to create their works or to recast, transform, or adapt their expressive authorship. For example, a visual artist who uses Adobe Photoshop to edit an image remains the author of the modified image and a musical artist may use effects such as guitar pedals when creating a sound recording. In each case, what matters is the extent to which the human had creative control over the work's expression and ‘actually formed’ the traditional elements of authorship.”

4.1.4. Medical writing ethics and chatbots

Potential ethical concerns arise if authors rely too heavily on chatbots to write and publish manuscripts. However, the importance of ethics in medical writing did not start with the widespread application of AI. Long before chatbots, challenges with medical publishing ethics included the level of author contribution, transparency of how the manuscript was crafted (e.g., use of a medical writer, funding), proper disclosures (e.g., potential “conflicts of interest”), plagiarism, data fabrication, lack of proper informed consent (e.g., protection of patient privacy), and publication bias. As with other aspects of academia, the ethical use of AI in medical writing largely depends on the commitment of authors to use chatbots in a responsible manner.

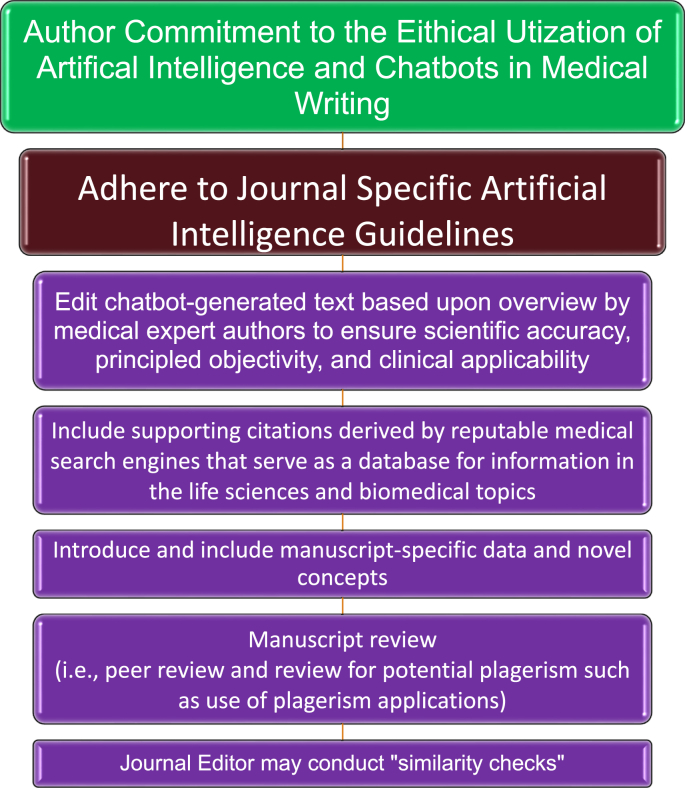

Fig. 7 describes illustrative author commitments to the ethical utilization of Artificial Intelligence and chatbots in medical writing. It is possible, perhaps probable, that future human verification of the accuracy of manuscript text (i.e., peer review) will ultimately be augmented by medical specialty computer systems trained on routine scientific updates applicable to the medical sciences. For now, computer applications already exist that can identify existing text and reword it to avoid plagiarism. Additionally, many journals routinely perform “similarity checks” or generate originality reports on submissions. The criteria and accepted cut-off points for similarity scores vary, and similarity checks or originality reports do not necessarily rule plagiarism in or out. For example, while a text similarity of <25% may suggest acceptable text matching, plagiarism may still exist. A text similarity of >25% may suggest some word duplication (“copy and paste”), and a score over 50%, and especially over 75%, may suggest extensive copying and pasting from other sources. The challenge with higher similarity scores is that text similarity increases with the inclusion of explicitly cited quotations, as well as with standardized wording of societal guidelines, recommendations, and/or clinical practice statements. For example, while the body text of each Obesity Medicine Association (OMA) Clinical Practice Statement (CPS) is original, each OMA CPS has standardized wording for sections that address (a) transparency, (b) group composition, (c) managing disclosures and dualities of interest, (d) evidence, (e) ethics review, (f) conclusions and recommendations, (g) updating, (h) disclaimer and limitations, and (i) acknowledgements and funding. The shared wording of these mandatory sections may increase the similarity score of each Clinical Practice Statement.
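Commercial similarity checkers use proprietary methods, so the following is only a rough sketch of the idea, assuming a simple containment score: the percentage of a submission's word trigrams that also appear in a source text. The function names are hypothetical, and the band labels paraphrase the cut-offs discussed above; as noted there, a score is not a verdict on plagiarism.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams ("shingles") of a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_pct(submission: str, source: str, n: int = 3) -> float:
    """Percent of the submission's n-grams that also occur in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return 100 * len(sub & word_ngrams(source, n)) / len(sub)


def interpret(pct: float) -> str:
    """Rough reading of a score, paraphrasing the bands in the text."""
    if pct > 50:
        return "may suggest extensive copying"
    if pct > 25:
        return "may suggest some duplicated wording"
    return "may suggest acceptable matching (plagiarism not excluded)"


score = similarity_pct("the cat sat on the mat", "the cat sat on a rug")
print(score, interpret(score))  # prints 50.0 may suggest some duplicated wording
```

Removing cited quotations or standardized CPS sections from the submission before scoring would lower the score, which is the effect described above.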

Fig. 7.

Proposed author commitments in the use of Artificial Intelligence in the ethical construct of medical publications.

4.1.5. Future medical writing and journal logistics and chatbots

Overall, AI advancements will likely change the roles of writers, editors, and reviewers of medical journals. From an author standpoint, AI may help with literature search, data analysis, translation into different languages, and formatting the manuscript for the target journal (including the abstract, body text, and citation style in the reference section). AI writing assistance can help improve the quality of manuscripts by improving sentence structure and grammar, which may be especially helpful for novice authors or authors with limited skill in the language of the journal. Finally, AI will likely soon be able to check facts and references in the draft before the authors submit to the journal.

From a journal Editor standpoint, manuscript submissions will soon be able to undergo an AI fact check, reference check, and more refined plagiarism check before inviting journal reviewers for peer review. After both author and Editor filter for accuracy, then reviewers (most often volunteers) can better focus their time and effort in providing their clinical or scientific perspectives, rather than seeking and correcting factual errors. Collectively, AI has the potential to enhance journal article accuracy, improve clinical applicability, and streamline the submission, peer review, and publishing process.

4.2. Identify patterns in datasets (i.e., identify new drugs and drug targets)

AI algorithms applied to large genomic and proteomic datasets can assess biological activity of drug targets and potential pharmacotherapeutics directed towards those drug targets. Predictive AI modeling can help researchers prioritize which potential therapeutic interventions are most likely to succeed (i.e., based upon safety and effectiveness) and thus streamline the drug discovery process. Evaluation of drug candidates may be further enhanced by AI facilitated imaging of cells, tissues, and organs associated with disease. Finally, AI analysis of large datasets may help identify potential new uses for currently approved drugs.

4.3. Other medical research AI applications include

- Assistance with scientific presentations and medical education
- Medical imaging analyses
- Facilitation and application of precision medicine

5. What are illustrative uses of artificial intelligence specifically for the management of patients with obesity? (See Fig. 8)

Fig. 8.

Illustrative uses of artificial intelligence specifically for the management of patients with obesity.

Many of the same principles that apply to use of AI for medical practice (Section 3.0) and medical research (Section 4.0) also apply to use of AI for obesity management.

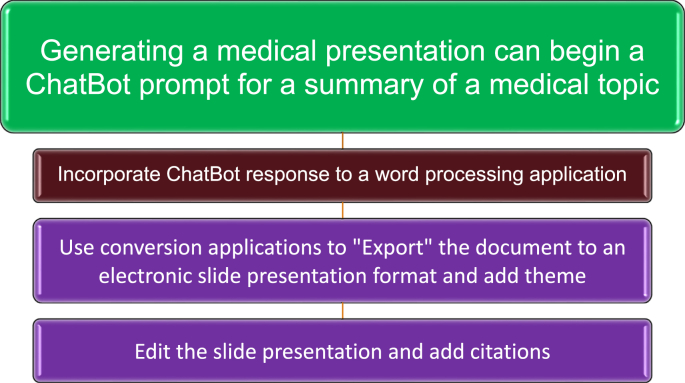

5.1. Writings, publications, and medical education

One of the key methods of medical education is through presentations by experts, educators, and/or researchers. A challenge for presenters and medical education staff is meeting time deadlines for quality presentations, and creating presentation slides can be tedious and time-consuming. Fig. 9 describes how an artificial intelligence algorithmic process can streamline the creation of medical presentation slides. Fig. 10 provides a practical example of how, using AI, a simple presentation on an obesity-related topic was crafted within minutes. From the presenter standpoint, spending less time on slide creation and more time on fact checking and referencing may improve the quality of the presentation. From the perspective of the medical education staff responsible for the multifaceted aspects of medical education meeting programming (selection of speakers, selection of location, management of travel and hotel, creation of workshops, poster presentations, networking opportunities, societal meeting scheduling, exhibits, and continuing education), the less time spent securing speaker slides for internal and/or continuing education review, the more time can be spent improving the quality of other aspects of the medical education experience.

Fig. 9.

Illustrative Artificial Intelligence algorithmic process utilized to streamline medical slide presentations.

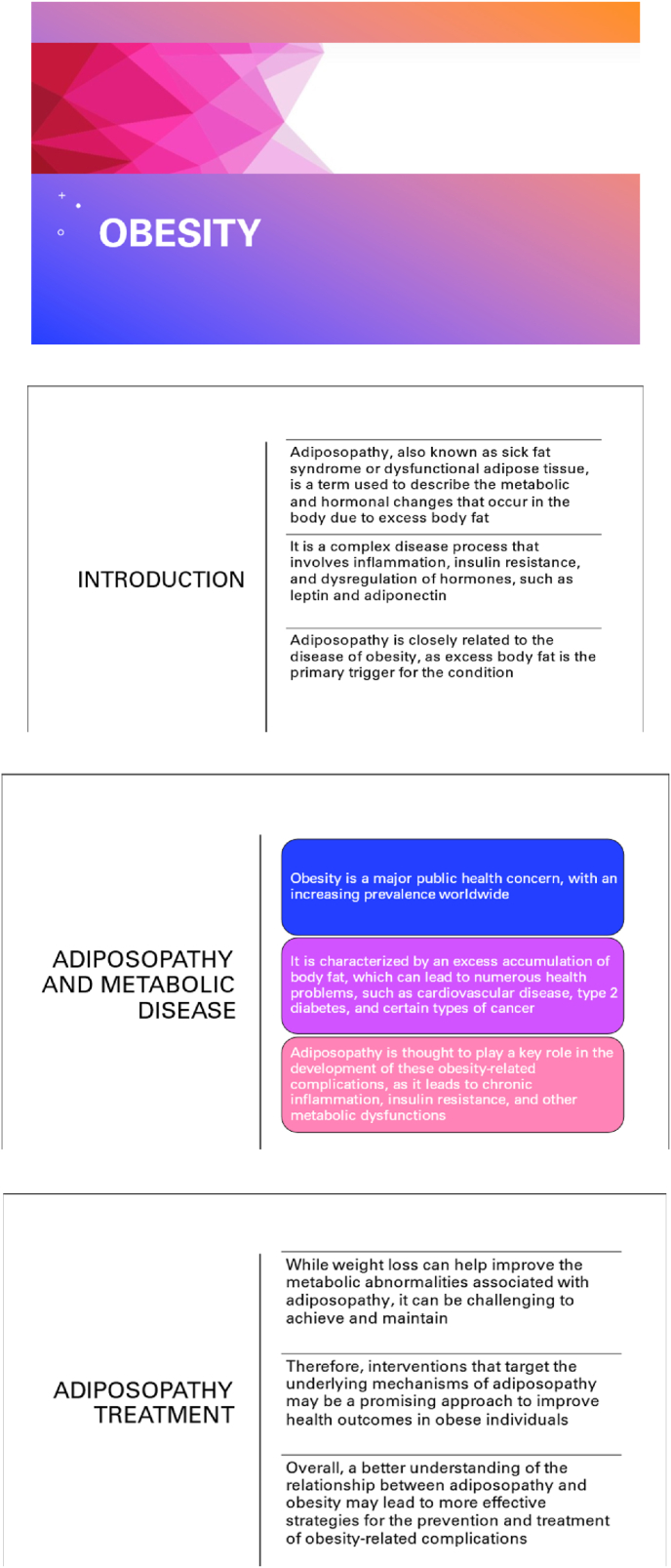

Fig. 10.

Unedited and unreferenced Artificial Intelligence-generated four-slide presentation on “adiposopathy.” Content and text were generated solely by Chat GPT (prompt: “Draft 3 brief paragraphs about the relationship of adiposopathy to the disease of obesity”). Headings were manually applied to the Chat GPT response text in online Microsoft Word to help the Microsoft AI with proper formatting. The document was saved with the file name “Obesity” (to help the Microsoft AI select subject-relevant icons, if applicable and available). The online Word document was then converted to a presentation using the “Export” to PowerPoint function (online Microsoft Office 365). The theme was selected from several options, and the PowerPoint slide presentation was saved to a computer. The result was copied and pasted above. If this were an actual presentation, the text content would need to be edited by the author (e.g., changing the text to “people-first language”), and reference citations would need to be added to the slides. The entire process took minutes.

Another way that AI may assist in obesity medical education is the generation of educational videos with avatar instructors that interact with the learner automatically via text-to-video or voice-to-video instruction. The avatar (i.e., a figure representing a person) instructors may have customizable images and voices based upon the learner's choice of age, gender, race, ethnicity, and even language. Alternatively, by providing electronic images and sample voice recordings, avatar instructors may be assigned an image likeness and voice clone of the clinician and patient, allowing an “on demand” reenactment virtual video encounter (VVE) recap of the medical office encounter (showing clinician and patient). This could be followed by an enactment VVE of a scripted, fictional medical video encounter between the patient and clinician regarding specific obesity-related topics (e.g., nutrition, physical activity, behavior modification, anti-obesity medications, and bariatric surgery).

From a medical society standpoint, learning management systems (LMS) are software platforms that help medical society staff manage and deliver educational content to society members. Components of an LMS include features for creating, organizing, and delivering educational courses, tracking learner progress, and managing course materials. An LMS can be used to provide continuing medical education (CME) and other forms of professional development to members via a range of educational resources, such as videos, interactive modules, case studies, and webinars. Some LMS platforms may also include features for managing certification and accreditation, as well as tools for tracking compliance with regulatory requirements. Depending on its capabilities, a medical society LMS can integrate with medical society AI via:

- Personalized learning: An AI-integrated LMS can analyze a learner's performance and behavior, provide personalized learning experiences, and help learners (i.e., medical society members) identify their strengths and weaknesses, allowing them to focus on areas where they need more help through customized learning pathways and course recommendations.
- Intelligent content curation: AI algorithms can be developed to analyze large amounts of material (e.g., medical literature) and provide learners a selection of relevant medical information. This more targeted approach, such as providing selected educational offerings regarding obesity, makes it more manageable to access the most relevant and latest medical knowledge. AI can also generate summary flash cards for interactive question-and-answer-based learning, as opposed to passive reading.
- Adaptive learning: An AI-integrated LMS can adjust the difficulty level of educational content based upon the learner's educational background, interests, and desired outcome. Stratifying difficulty may help learners remain engaged and motivated by balancing what is challenging against what is reasonably achievable, based upon the anticipated skillset and needs of the individual learner.
- Assessment and feedback: An AI-integrated LMS can help manage educational platforms and assessments, which may include automatically grading educational assignments. This provides instant feedback to learners, allows learners to track their progress, and helps identify areas for improvement.
- Predictive analytics: AI algorithms can analyze learner data from educational programs to predict which learners, or segments of learners, are most likely to drop out or require extra support. An AI-integrated LMS can thus provide administrators with data that may suggest more targeted interventions and support, directed towards individual learners or segments of learners.
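The adaptive-learning item above can be sketched with a simple rule-based staircase, assuming hypothetical difficulty levels 1 to 5: the level rises after two consecutive correct answers and falls after any incorrect answer. This is a fixed heuristic rather than a learned model, and the function name is hypothetical; an AI-integrated LMS would presumably estimate learner ability from much richer data.

```python
def difficulty_path(answers: list[bool], start: int = 1,
                    lowest: int = 1, highest: int = 5) -> list[int]:
    """Return the difficulty level at which each question was presented."""
    level, streak, levels = start, 0, []
    for correct in answers:
        levels.append(level)
        if correct:
            streak += 1
            if streak == 2:  # two in a row: step up, capped at the top level
                level = min(level + 1, highest)
                streak = 0
        else:  # any miss: step down, floored at the bottom level
            level = max(level - 1, lowest)
            streak = 0
    return levels


print(difficulty_path([True, True, True, True, False, True]))  # prints [1, 1, 2, 2, 3, 2]
```

Requiring two correct answers to advance but only one miss to step back keeps the learner near the edge of what is achievable, which is the balance the adaptive-learning item describes.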

Overall, AI can help medical organizations better assess and adapt their educational programming and marketing communication practices to the needs of members, clinicians, patients, customers, or consumers. A practical example is an analysis from an email marketing company suggesting that only 1.37% of industry emails have links that are “clicked” (https://knowledgebase.constantcontact.com/articles/KnowledgeBase/5409-average-industry-rates?lang=en_US). By leveraging artificial intelligence, healthcare and other marketing and communication professionals may be better able to tailor experiences to individuals, providing the right information at the right time to the right person, and thus better realize the educational benefits offered by the medical organization.

5.2. Medical imaging analysis (i.e., body composition)

Measures of body composition (i.e., muscle and fat mass) are often more diagnostic and prognostic than body mass index alone [17]. Clinical methods of estimating body composition (e.g., dual-energy X-ray absorptiometry, bioelectrical impedance) are reasonably straightforward [18]. However, from an obesity research perspective, manual assessment of cross-sectional body imaging from computed tomography and magnetic resonance imaging studies is labor intensive and time consuming. Automation of body composition measurement may include the application of artificial intelligence and deep learning to tissue segmentation (e.g., of visceral and subcutaneous adipose tissue) [19,20]. AI may enhance the imaging assessment of epicardial and pericardial adipose tissue, providing additional information regarding the adverse effects of obesity and adiposopathy on the pathogenesis and prognosis of the cardiovascular complications of obesity [21,22]. AI may help define anthropometric parameters and adipose tissue values based on magnetic resonance imaging towards predicting obstructive sleep apnea [23,24]. AI also may improve the diagnosis, prognosis, and response prediction of liver diseases (e.g., fatty liver disease) [25,26]. From an intervention standpoint, AI-mediated imaging may improve recognition of anatomical structures in patients with obesity and improve procedural outcomes (e.g., increased first-attempt success rate and reduced needle placement time for spinal anesthesia among patients with obesity) [27].
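To make the segmentation task concrete, the sketch below shows the classical threshold approach that AI methods aim to automate and improve: pixels in an axial CT slice are classified by attenuation using windows commonly reported in the body composition literature (about -190 to -30 HU for adipose tissue and -29 to 150 HU for skeletal muscle). Thresholding alone cannot tell visceral from subcutaneous fat, and it also counts non-muscle soft tissue (e.g., organs) within the muscle window, which is where deep-learning segmentation adds value. The toy slice, pixel size, and function name are invented.

```python
# Attenuation windows (Hounsfield units) commonly used in CT body composition studies.
ADIPOSE_HU = (-190, -30)
MUSCLE_HU = (-29, 150)


def tissue_areas_cm2(slice_hu: list[list[int]], pixel_area_cm2: float) -> dict[str, float]:
    """Threshold-based tissue areas for one axial CT slice (no anatomical labeling)."""
    counts = {"adipose": 0, "muscle": 0}
    for row in slice_hu:
        for hu in row:
            if ADIPOSE_HU[0] <= hu <= ADIPOSE_HU[1]:
                counts["adipose"] += 1
            elif MUSCLE_HU[0] <= hu <= MUSCLE_HU[1]:
                counts["muscle"] += 1
            # values outside both windows (e.g., air, dense bone) are ignored
    return {tissue: n * pixel_area_cm2 for tissue, n in counts.items()}


toy_slice = [[-100, 40, 500], [-50, -1000, 10]]
print(tissue_areas_cm2(toy_slice, pixel_area_cm2=0.5))  # prints {'adipose': 1.0, 'muscle': 1.0}
```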

5.3. Scheduling appointments

AI may help patients schedule appointments without medical staff involvement. AI may also help identify patients who may benefit from a referral for more intensive obesity care (e.g., obesity specialist, dietitian, behavior counseling, psychologist, exercise physiologist), and then initiate, automate, and complete the referral process directly from the primary care office to the obesity medicine specialist, reducing staff time and the administrative burden associated with referrals (e.g., faxing referral documents).

5.4. Telemedicine and remote monitoring of patients via wearable technologies and mobile apps

As noted in Section 3.8, AI can assist with integrating remote measurement devices with electronic health records, which may especially enhance telemedicine visits.

5.5. Identify patterns in data sets

AI analysis of data sets derived from electronic health records may allow assessment of the efficacy of obesity management approaches. AI patient data analytics may help support value-based payment models, which may be especially relevant to obesity medicine. Demonstrating value-based care in obesity medicine is often challenging because of the long duration of treatment required to see favorable outcomes; AI predictive models might quantify the health benefits of obesity care sooner. Regarding obesity research, AI may help detect clinically meaningful patterns related to the disease of obesity and its complications [[28], [29], [30], [31]]. Applicable AI methodologies include:

- Machine learning: Involves the development of algorithms and statistical models that enable computers to learn from input data, beyond the machine's initial programming (See Fig. 1).
- Deep learning: Uses artificial neural networks with multiple layers to model and solve complex problems through progressively higher-level feature extraction from raw input.
- Multimodal or multitask AI models: Capable of integrating diverse input data types (e.g., tabular plus text, image, video, or audio) and generating diverse output tasks (e.g., text generation, object detection, time series prediction, and speech recognition).
- Synthetic data generation: Creation of new data using mathematical models or algorithms, instead of collecting it from real-world sources. Such mimicked data can train machine learning models, test data analysis pipelines, or generate realistic scenarios for simulation and modeling. Synthetic data provides a cost-effective alternative to collecting real data and avoids the privacy concerns that may arise with the use of real patient data.
- Human-in-the-loop: Persons are involved in the AI-mediated decision-making process (i.e., collaboration between humans and machines).
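The synthetic data generation approach listed above can be illustrated with a minimal Python sketch. It assumes entirely hypothetical distributions (age, body mass index, and a made-up relationship linking body mass index and age to type 2 diabetes) that are not derived from any real population; the names `SyntheticPatient` and `generate_synthetic_cohort` are illustrative only. Because every record is drawn from a mathematical model rather than copied from a chart, no real patient information is exposed.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticPatient:
    patient_id: str           # synthetic identifier, not linked to any real person
    age_years: int
    bmi: float                # body mass index, kg/m^2
    has_type2_diabetes: bool


def generate_synthetic_cohort(n: int, seed: int = 0) -> list[SyntheticPatient]:
    """Draw n synthetic records from simple, hypothetical distributions."""
    rng = random.Random(seed)  # seeded so the same cohort can be regenerated
    cohort = []
    for i in range(n):
        age = rng.randint(18, 80)
        # Hypothetical BMI distribution, clipped to a plausible range.
        bmi = min(max(rng.gauss(31.0, 6.0), 16.0), 70.0)
        # Hypothetical model: diabetes probability rises with BMI and age.
        p_diabetes = min(0.05 + 0.01 * max(bmi - 25.0, 0.0) + 0.002 * (age - 18), 0.9)
        cohort.append(SyntheticPatient(
            patient_id=f"SYN-{i:05d}",
            age_years=age,
            bmi=round(bmi, 1),
            has_type2_diabetes=rng.random() < p_diabetes,
        ))
    return cohort


cohort = generate_synthetic_cohort(1000, seed=42)
print(cohort[0])
```

Seeding the random generator makes the cohort reproducible, which helps when the same synthetic data set is used to test an analysis pipeline repeatedly. Practical synthetic data generators typically fit their models to real data distributions and add formal privacy checks; this sketch does neither.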

5.6. Predictive modeling and precision medicine [[32], [33], [34], [35]]

AI may help identify patients at risk for obesity and obesity-related complications, including risk assessment in younger individuals [36,37]. AI predictive modeling that integrates patient data (e.g., genetic profile, family history, medical history, environmental and behavioral background, and laboratory evaluation) may help predict the best management plan for patients with obesity.
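As an illustration of predictive modeling, the following Python sketch fits a logistic regression by batch gradient descent. Logistic regression is chosen here as one common, simple predictive-modeling technique for clarity; it is not necessarily the method used in the cited studies. The two features (a scaled body mass index and a family history flag) and the eight training records are hypothetical toy values, not clinical data, and the resulting risk estimates have no clinical meaning.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping any real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this record: predicted probability minus label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient.
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict_risk(w, b, x):
    """Predicted probability of the outcome for one feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical toy data: [(BMI - 30) / 5, family history (1 = yes)] -> complication (1 = yes).
X = [[-1.0, 0], [-0.5, 0], [0.0, 1], [0.5, 0], [1.0, 1], [1.5, 1], [-1.2, 1], [0.8, 0]]
y = [0, 0, 1, 0, 1, 1, 0, 1]

w, b = train_logistic_regression(X, y)
print(f"risk, higher BMI + family history: {predict_risk(w, b, [1.5, 1]):.2f}")
print(f"risk, lower BMI, no family history: {predict_risk(w, b, [-1.0, 0]):.2f}")
```

A real model would be trained and validated on large, representative clinical data sets, and its performance (including across demographic subgroups) would need to be checked before any clinical use.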

Digital education and communication tools in the field of endocrinology include chatbots, interactive robots, and augmented reality. Genomic medicine helps augment these tools via precision and personalization of education, communication, diagnosis, and therapy of endocrine conditions such as obesity [38]. For example, regarding pediatric patients and younger adults with obesity, deoxyribonucleic acid sequencing or chromosomal structure testing may reveal underlying rare genetic causes of obesity [39,40], allowing for more targeted treatment. Setmelanotide is approved by the US Food and Drug Administration for chronic weight management in adult and pediatric patients ≥6 years of age with obesity due to pro-opiomelanocortin (POMC), proprotein convertase subtilisin/kexin type 1 (PCSK1), or leptin receptor (LEPR) deficiency, or with Bardet-Biedl syndrome (BBS) [41]. AI integration of patient and family clinical data, along with genetic and other testing, may assist the clinician with more targeted therapies, provide the patient and family greater access to applicable education, and possibly reduce obesity stigma by clearly identifying genetic etiologies of the disease of obesity.

Patient care may also be improved through AI-derived tools that improve the efficiency of obesity-related diagnostic tools (e.g., eating disorder screening questionnaires) [42], improve treatment recommendations regarding meals to reduce the risk of obesity [43], better estimate resting energy expenditure [44], and generally help facilitate improved health literacy and weight reduction, contingent on engagement and contextualization [45,46]. A caveat is that AI-mediated interventions, such as the delivery of precision nutrition, may disproportionately benefit those in higher socioeconomic strata, potentially widening inequities among different populations with obesity [47].

5.7. Robotics

Machine learning shows promise in aiding decision-making in bariatric surgery and precision medicine [48] and in predicting outcomes after bariatric surgery [49]. While this is likely to change with advancing technology, current reports suggest that robotic surgery increases operating room time and cost without significant generalizable improvements in patient outcomes. Exceptions may include use in patients with severe obesity or in revisional bariatric procedures. Applied artificial intelligence may help provide enhanced cues during surgery, augmenting the surgeon's judgment and skill and potentially improving patient outcomes [15].

5.8. Personalized nutrition and physical activity plan

AI analysis that integrates personal patient data (e.g., age, sex, gender, body mass index, body composition metrics, medical history, and laboratory results, as well as nutritional and physical activity behaviors) can enhance personalized health recommendations. Specifically, AI algorithms can analyze a patient's food intake and provide feedback on more healthful dietary adjustments. Similarly, AI algorithms can analyze a person's physical activity levels and provide healthful physical exercise recommendations. Machine learning can integrate data on patient responses to recommendations and interventions to continually refine those recommendations over time toward the goal of better health outcomes. Specifically:

“AI chatbots should be designed to be human-like, personalized, contextualized, immersive, and enjoyable to enhance user experience, engagement, behavior change, and weight loss. These require the integration of health metrics (e.g., based on self-reports and wearable trackers), personality and preferences (e.g., based on goal achievements), circumstantial behaviors (e.g., trigger-based overconsumption), and emotional states (e.g., chatbot conversations and wearable stress detectors) to deliver personalized and effective recommendations for weight loss” [6].

5.9. Behavior coaching

AI assessment of a patient's individual behaviors, actions, thoughts, and emotions may help identify patterns that can direct personalized coaching. AI-mediated, patient-centered recommendations may better help patients achieve their goals. Through an assessment of patient data, AI predictive modeling may identify the areas most in need of improvement and the interventions most likely to achieve favorable health outcomes. Such an assessment may include and integrate input data from: (a) self-reports via questionnaires, (b) medical health records, (c) self-monitoring devices and health trackers, and (d) conversational and wearable stress detectors that may provide insight into behaviors, preferences, and emotional states. Once the data are assimilated and integrated, virtual coaching assistants may provide personalized answers to patient questions, contribute individualized feedback, relay evidence-based guidance, and provide general support. Through natural language conversations with users, AI chatbots can provide real-time adaptive recommendations that address decision-making and self-regulation skills, progress feedback toward weight loss goals, and emotional support with motivation and validation. Overall, AI chatbots may help optimize weight loss and can deliver personalized and effective recommendations for weight loss programs [6].

Consistent with the general principle that optimal behavior modification involves continuous monitoring and engagement [50], AI reinforcement learning via continuously monitored digital data may help optimize both weight loss and weight loss maintenance [51]. AI chatbots programmed with simulated empathy may further help promote long-term weight management [52].
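Reinforcement learning can take many forms; one of the simplest is the multi-armed bandit, sketched below in Python as an epsilon-greedy agent that learns which coaching prompt a user responds to most often. This is a swapped-in illustrative technique, not necessarily the approach of the cited work [51]. The class name, the prompt names, the engagement "reward", and the simulated response rates are all hypothetical.

```python
import random


class EpsilonGreedyCoach:
    """Epsilon-greedy bandit that learns which (hypothetical) coaching prompt works best."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon                        # fraction of turns spent exploring
        self.counts = {a: 0 for a in self.actions}    # times each prompt was sent
        self.values = {a: 0.0 for a in self.actions}  # running mean reward per prompt
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)                 # explore
        return max(self.actions, key=lambda a: self.values[a])  # exploit best estimate

    def update(self, action, reward):
        """Incrementally update the mean reward for the prompt that was sent."""
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Simulation with hypothetical engagement rates (reward 1 = user logged a meal or walk).
true_rates = {"step_goal_reminder": 0.1, "meal_logging_prompt": 0.7, "encouragement_message": 0.3}
coach = EpsilonGreedyCoach(true_rates, epsilon=0.1, seed=7)
env = random.Random(11)
for _ in range(5000):
    prompt = coach.choose()
    reward = 1.0 if env.random() < true_rates[prompt] else 0.0
    coach.update(prompt, reward)
print(coach.counts)
```

The occasional exploratory turns let the agent keep re-checking prompts that once performed poorly, which matters if a user's preferences drift over time; the continuous feedback loop is what links this sketch to the continuously monitored data described above.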

Finally, AI gamification techniques may help make behavior coaching more engaging and fun (e.g., setting up challenges, establishing rewards, and creating other incentives to motivate individuals to achieve their goals). Such promising emergent AI-related technologies are being developed for the management of childhood obesity [53]. Among younger individuals with obesity, the benefits of AI-mediated gaming extend to reduced caloric consumption and improved cardiopulmonary function [54,55].

5.10. Policies and procedures & standard operating procedures

In a prior Obesity Medicine Association Clinical Practice Statement, specific policies and procedures [11], as well as standard operating procedures, were identified that apply to medical practices in general and to obesity medicine specifically. AI chatbots can assist in generating initial drafts of many of these documents.

6. What are illustrative challenges and limitations of artificial intelligence? (See Fig. 11)

Fig. 11.

Illustrative limitations of artificial intelligence (AI). All these limitations may apply to AI, depending on the AI; the same limitations may apply to humans, depending on the human.

Fig. 11 lists some limitations of AI. Of note, almost all these limitations may also apply to human counterparts, depending on the person or persons.

6.1. Dependence on data

AI algorithms rely on data that is accessible, accurate, complete, objective, and unbiased. Optimal AI integration of the totality of patient data also depends on the interoperability and compatibility of devices, databases, and platforms applicable to patient data. AI will not be able to “intelligently” talk to the patient in a comprehensive way, if the patient's devices, databases, and digital/computing platforms do not “talk” to one another. Thus, a major challenge for data acquisition is the establishment of universal standards for data communication and integration.
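One example of a widely used standard for health data exchange is HL7 FHIR (Fast Healthcare Interoperability Resources); the text above does not name a specific standard, so FHIR is used here only as an illustration. The Python sketch below packages a single body weight reading from a hypothetical connected scale as a FHIR Observation, coded with LOINC 29463-7 (body weight) and UCUM units, so that a FHIR-capable electronic health record could interpret it without device-specific translation. The function name, patient identifier, and timestamp are hypothetical, and the resource has not been validated against the full FHIR vital-signs profile.

```python
import json


def body_weight_observation(patient_id: str, weight_kg: float, measured_at: str) -> dict:
    """Build a minimal FHIR R4 Observation for one body weight measurement."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{
            "coding": [{
                "system": "http://terminology.hl7.org/CodeSystem/observation-category",
                "code": "vital-signs",
                "display": "Vital Signs",
            }]
        }],
        # LOINC code identifying what was measured.
        "code": {"coding": [{"system": "http://loinc.org", "code": "29463-7",
                             "display": "Body weight"}]},
        "subject": {"reference": f"Patient/{patient_id}"},  # hypothetical patient ID
        "effectiveDateTime": measured_at,                   # ISO 8601 timestamp
        # UCUM-coded quantity so the unit is machine-readable, not free text.
        "valueQuantity": {"value": weight_kg, "unit": "kg",
                          "system": "http://unitsofmeasure.org", "code": "kg"},
    }


obs = body_weight_observation("example-123", 104.3, "2024-03-01T08:15:00Z")
print(json.dumps(obs, indent=2))
```

The design point is that meaning travels with the data: standardized codes for the measurement and its unit let separate devices, databases, and platforms "talk" to one another.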

Additionally, compromised input data diminish the value of AI assessments and recommendations (i.e., “garbage in, garbage out” or GIGO). Even when the underlying data are accurate, AI may prioritize responses in ways that differ from the perspective of clinicians. For example, if a chatbot is asked for a diagnosis based solely on demographics and symptoms, then the response may be limited to the most common and most likely causes. However, clinicians are routinely challenged with evaluating common symptoms within the context of common causes, balanced against the possibility that the same common symptom represents an uncommon, severe, life-threatening condition that, if not appropriately diagnosed, may lead to severe adverse outcomes such as death. Thus, when it comes to diagnoses, clinicians prioritize the common, but do not discount the catastrophic. For example, strictly based upon the data, a headache may most likely be due to mental stress, migraine, or sinusitis; however, a headache may also be due to a life-threatening brain tumor or aneurysm. Chest pain in a young female may commonly be due to costochondritis, but may also be due to angina, pericarditis, pulmonary embolism, or aortic dissection. Nausea and vomiting may commonly be due to viral gastroenteritis, but may also be due to central nervous system lesions, cancer, or a more serious gastrointestinal condition. Unless the chatbot is preprogrammed to provide both the common and the catastrophic, a clinician or patient prompting a chatbot for a diagnosis should include not only demographics, signs, and symptoms, but also a request for less common diagnoses that may be serious and/or life-threatening. In other words, the accuracy and completeness of a chatbot's responses are limited by the accuracy and completeness of the question asked by whoever is prompting it.
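The prompting strategy described above can be made routine with a small template. The Python sketch below uses a hypothetical helper, `build_differential_prompt`, that assembles a text prompt asking a chatbot for both the most likely causes and the serious, less common causes of a presentation. It assumes no particular chatbot or API, and its wording is illustrative only. A more complete prompt does not guarantee a complete answer, so the chatbot's output still requires clinical verification.

```python
from typing import Optional, Sequence


def build_differential_prompt(
    age_years: int,
    sex: str,
    symptoms: Sequence[str],
    signs: Optional[Sequence[str]] = None,
) -> str:
    """Compose a chatbot prompt that asks for the common AND the catastrophic."""
    lines = [
        f"Patient: {age_years}-year-old {sex}.",
        f"Symptoms: {', '.join(symptoms)}.",
    ]
    if signs:
        lines.append(f"Signs: {', '.join(signs)}.")
    # Explicitly request both tiers of the differential diagnosis.
    lines += [
        "1. List the most common and most likely causes.",
        "2. Separately list less common causes that may be serious or "
        "life-threatening and should not be missed.",
        "3. For each serious cause, state which findings would raise concern.",
    ]
    return "\n".join(lines)


print(build_differential_prompt(28, "female", ["chest pain"]))
```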

6.2. Lack of common sense

AI lacks the general knowledge possessed by humans, which may limit the ability of AI to make decisions based on historical, current, and future context. For example, patient-centered dynamics based upon family, friends, culture, work, and other external considerations may have practical implications that render AI recommendations impractical or unfeasible. A lack of common sense may also help explain occasional nonsensical AI responses (AI “hallucinations”). AI in medicine relies upon its assimilation and interpretation of data, including prior publications. AI chatbots may therefore sometimes respond with nonsensical conclusions based upon irrational and erroneous data associations (e.g., seemingly bizarre responses derived from selecting and conjoining unrelated text from the reference list of a publication related to the question). This potential for AI hallucinations supports the need to verify the output of chatbots [1]. That said, while humans may help discover and correct the failings of AI, AI may likewise help discover and correct the failings of humans.

6.3. Lack of ethical consideration

While many AI systems have ethical programming, AI does not weigh ethical considerations in the same way humans do. Some have advocated that if AI is to be integrated into clinical practice, then at minimum, AI integration should be disclosed to patients, potentially with informed consent depending on the use and the applicable situation (https://www.medpagetoday.com/opinion/second-opinions/102987?xid=nl_secondopinion_2023-02-07&eun=g1174638d0r).

6.4. Lack of creativity

While AI can assimilate its programmed and acquired data to create novel output, AI lacks human creativity that extends beyond programmed and acquired data.

6.5. Complexity and cost

Building and maintaining complex AI systems can be costly and time consuming, limiting accessibility for smaller organizations. Currently, the frontier of AI systems is being advanced predominantly by companies with the necessary resources, with systems designed by a relatively small number of model developers, whose financial incentives could create conflicts of interest [7]. Another “cost” to consider is the time and effort patients and clinicians must invest, not only to acquire data input, but also to follow through on AI-directed recommendations (i.e., user burden).

6.6. Security and privacy risks