Abstract

Human infants acquire language with notable ease compared to adults, but the neural basis of their remarkable brain plasticity for language remains little understood. Applying a scaling analysis of neural oscillations to address this question, we show that newborns’ electrophysiological activity exhibits increased long-range temporal correlations after stimulation with speech, particularly in the prenatally heard language, indicating the early emergence of brain specialization for the native language.

Newborns' brain activity shows increased temporal organization after hearing speech in the prenatal language, suggesting learning.

INTRODUCTION

Human infants acquire language with amazing ease. This feat may begin early, possibly even before birth (1–5), as hearing is operational by 24 to 28 weeks of gestation (6). The intrauterine environment acts as a low-pass filter, attenuating frequencies above 600 Hz (2, 7). As a result, individual speech sounds are suppressed in the low-pass–filtered prenatal speech signal, but prosody, i.e., the melody and rhythm of speech, is preserved. Fetuses already learn from this prenatal experience (5, 8): Newborns prefer their mother’s voice over other female voices (1) and show a preference for the language their mother spoke during pregnancy over other languages (3). After birth, as infants get exposed to the full-band speech signal, they become attuned to the fine details of the sound patterns of their native language by the end of the first year of life (9–13). What neural mechanisms allow the developing brain to learn from language experience remains, however, poorly understood. Here, we asked whether stimulation with speech may induce dynamical changes able to support learning in newborn infants’ brain activity, and whether this modulation is specific to the language heard prenatally.

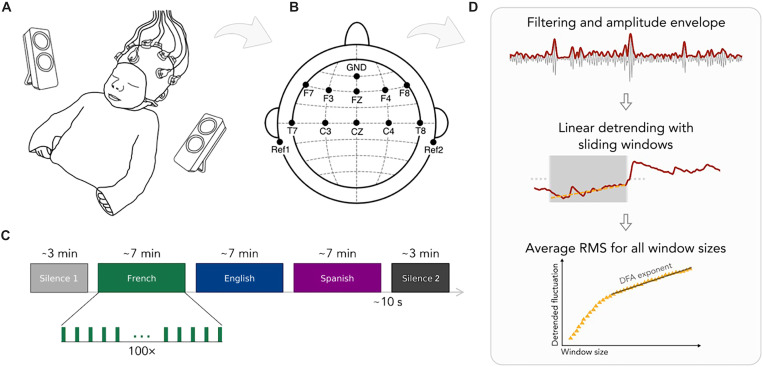

We measured the neural activity of prenatally French-exposed newborns (n = 49; mean age: 2.39 days; range 1 to 5 days; 19 girls) using electroencephalography (EEG) over 10 frontal, temporal, and central electrode sites while infants were at rest in their hospital bassinets (Fig. 1, A and B). We first measured resting state activity for 3 min (silence 1). Then, infants heard speech in three different languages (French, Spanish, and English) in 7-min blocks. Last, resting state activity was measured again for 3 min (silence 2; Fig. 1C). The order of the languages was pseudo-randomized and counterbalanced across participants such that 17 infants heard French, 18 infants heard English, and 14 infants heard Spanish as the last block before silence 2. Besides the prenatally heard language, French, we chose Spanish and English as unfamiliar languages to test the effects of prenatal experience. Spanish is rhythmically similar to French, while English is rhythmically different (14). Behaviorally, newborns can discriminate rhythmically different languages, even if both are unfamiliar to them, but they cannot distinguish rhythmically similar ones (15). The speech stimuli consisted of natural recordings of translation-equivalent sentences in the three languages from the children’s story “Goldilocks and the Three Bears,” recorded in mild infant-directed speech and matched for acoustic properties (table S1).

Fig. 1. Illustration of the experimental paradigm and the analysis pipeline.

(A) The experimental setup used in the study. (B) EEG channel locations. (C) The experimental design. (D) The detrended fluctuation analysis (DFA).

By comparing resting states before and after linguistic stimulation, this paradigm allowed us to address two questions. First, we asked whether language exposure affects neural dynamics in the infant brain: Plastic changes immediately following exposure to speech may underlie infants’ ability to learn about the sound patterns they hear (16, 17). Our focus was not the newborn brain’s responses to the different languages themselves, a question that has received ample attention in the literature (18–22), including analyses of data from this study (23, 24). Rather, we asked whether exposure to speech produces lasting changes in neural dynamics that could support learning and memory.

Second, by testing whether these plastic changes occur after exposure to all languages or only after the language heard prenatally, we asked whether prenatal experience already shapes neural circuitry. If prenatal experience already plays a role, then newborns may show greater plastic changes after exposure to the language heard prenatally than after unfamiliar languages. As an especially stringent test, we compared the native language not to one, but to two unfamiliar languages, including a rhythmically similar language, which newborns cannot discriminate from the native language behaviorally (15).

We expected changes in neural dynamics specifically at low frequencies, as the prenatal speech signal is low-pass filtered and mainly consists of low-frequency information, i.e., prosody (2, 7, 25). According to the embedded neural oscillations model (26, 27), oscillations between 1 and 3 Hz, i.e., the delta band, underlie the processing of large prosodic units, such as utterances and phrases; oscillations between 4 and 8 Hz, i.e., the theta band, underlie the processing of syllables; while oscillations above 35 Hz, i.e., the gamma band, are related to the processing of phonemes. We thus predicted that prenatal effects would specifically target the delta and theta bands, as the linguistic units corresponding to those frequency bands are the ones present in the prenatal signal. To assess changes in neural dynamics induced by speech, we conducted two analyses: (i) detrended fluctuation analysis (DFA) and (ii) a comparison of the autocorrelation function of the fluctuations of electrophysiological activity before and after stimulation.

DFA (28) is a robust method for measuring the statistical self-similarity and temporal correlations of time series data. Specifically, DFA measures the scaling properties of temporal signals, quantified by the strength of long-range temporal correlations (LRTCs). The strength of LRTCs is given by the scaling exponent α, i.e., the exponent of the power law relating the average fluctuations of the signal to the timescale (window size) over which they are measured. DFA has been successfully applied to distinguish between healthy and pathological neural activity (29, 30) and to identify the onset of sleep (31).
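Written compactly, the power law described above relates the fluctuation function F(t) to the window size t (the notation F(t) follows Materials and Methods). Taking logarithms turns α into the slope of a straight line, which is why it can be estimated by linear regression on a log-log plot:

$$
F(t) \propto t^{\alpha}
\quad\Longleftrightarrow\quad
\log F(t) = \alpha \log t + c,
$$

where c is a constant. For example, doubling the window size multiplies F by 2^α, i.e., by about 1.41 for uncorrelated activity (α = 0.5) and by about 1.62 for α = 0.7.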

The scaling exponent, α, is an estimate of the Hurst parameter, which indicates whether a process generating a given time series has “memory” in the sense that the state at a given moment does or does not relate to prior states. A value of α = 0.5 indicates an uncorrelated process, in which the current state does not depend on prior states, while α < 0.5 indicates an anticorrelated process, in which previous states are avoided, leading to smaller fluctuations at larger timescales than would be expected by chance. Scaling exponents 0.5 < α < 1 indicate self-affine stationary processes with positively correlated memory, i.e., the system is more likely to enter states that it has visited before. Human adult EEG typically exhibits Hurst exponents of ~0.70 (32), i.e., it is a self-affine, positively correlated process.

Here, we hypothesize that newborn brain processes, as revealed by EEG, show evidence of language learning, i.e., lasting changes in brain dynamics after exposure to language. Specifically, when a brain state that was already activated by previous language experience is triggered again, newborns’ brain processes maintain this state, resulting in an increase in the value of α. Further, such an increase would be expected specifically for the prenatally heard, i.e., previously experienced, language and not for unfamiliar languages, since the brain states that unfamiliar languages trigger would not be similarly privileged.

RESULTS

To calculate DFA, EEG recordings from the resting state periods were preprocessed using standard pipelines (fig. S1) for infant auditory EEG data (33–35). Subsequently, signals were band-pass filtered between 4 and 8 Hz to obtain theta oscillations and between 30 and 60 Hz to obtain gamma oscillations. (The delta band could not be included in the analysis, as the 3-min duration of the resting state periods did not provide sufficient data for the calculations.) We then extracted the amplitude envelope of the oscillations and performed DFA (28, 36). For each channel of each participant, we selected window sizes equally spaced on a logarithmic scale. For each size, we split the cumulative sum of the envelope (the signal profile) into windows with 50% overlap, detrended each window through a least-squares linear fit, and calculated the SD of the detrended signal. We then obtained the fluctuation function as the average SD of the detrended signal across windows, as a function of window size. The scaling exponent was obtained as the slope of a linear fit to this function on a log-log scale (see Materials and Methods).

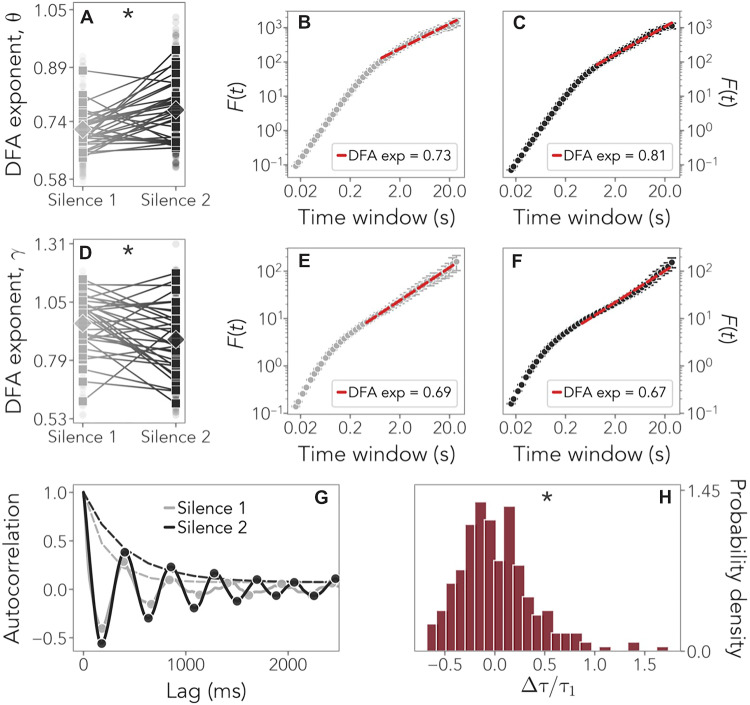

As the scaling distributions show (Fig. 2, B to F), oscillations exhibited power law scaling on timescales between 0.86 and 20 s in theta and between 0.43 and 20 s in gamma. To test whether LRTCs become stronger after stimulation with language, we applied a linear mixed-effects model to the DFA exponents with Resting State Period (silence 1/silence 2) as a fixed factor and Participants as a random factor (see Materials and Methods for model selection). In theta, scaling exponents showed a statistically significant increase from silence 1 (α = 0.76 ± 0.06) to silence 2 (α = 0.81 ± 0.08; slope = 0.05, P = 0.0005) (table S2). By contrast, in gamma, a significant decrease was observed (silence 1: α = 0.95 ± 0.13; silence 2: α = 0.88 ± 0.16; slope = −0.07, P = 0.01) (table S3).

Fig. 2. DFA exponents and temporal correlations.

(A to C) Comparison of the average DFA exponents in the theta band during silence 1 (gray) and silence 2 (black) in channel F8 as an example. Squares indicate individual DFA exponents, and diamonds indicate the average. Statistically significant results are indicated by an asterisk (*). (A) P = 0.0005. (D to F) DFA exponents in the gamma band. (D) P = 0.01. (G) Comparison of the autocorrelation function in channel F8 during silence 1 (gray) and silence 2 (black) and the fits of the corresponding exponential envelopes (dashed lines) whose decay defines the autocorrelation time. (H) Distribution of the relative change in the autocorrelation times, obtained for all channels and all subjects. Its significantly positive skewness suggests that stronger temporal correlations are present after language stimulation. P < 0.00001 for skewness; P = 0.00002 for kurtosis.

These results support our hypothesis and have two implications. First, they reveal that exposure to speech rapidly increases long-range correlations in neural activity, highlighting how language experience may shape the brain and contribute to learning. As in adults (32), newborns’ electrophysiological activity showed the properties of a self-affine stationary process with positively correlated memory, with exponents of ~0.7 to 0.8 before stimulation. Long-range correlations were strengthened after several minutes of stimulation, providing evidence for learning. Second, as predicted, LRTCs were enhanced in the theta band, which is associated with the syllabic rate, i.e., with speech units experienced in utero, but not in the gamma band, which actually showed a slight decrease in long-range correlations. This increase in neural resources in theta as compared to gamma oscillations may be related to the greater importance of prosodic units such as syllables in infants’ representation of speech at birth, due to their prevalence in the prenatal signal, as suggested by behavioral results (37–39) and theoretical models (40).

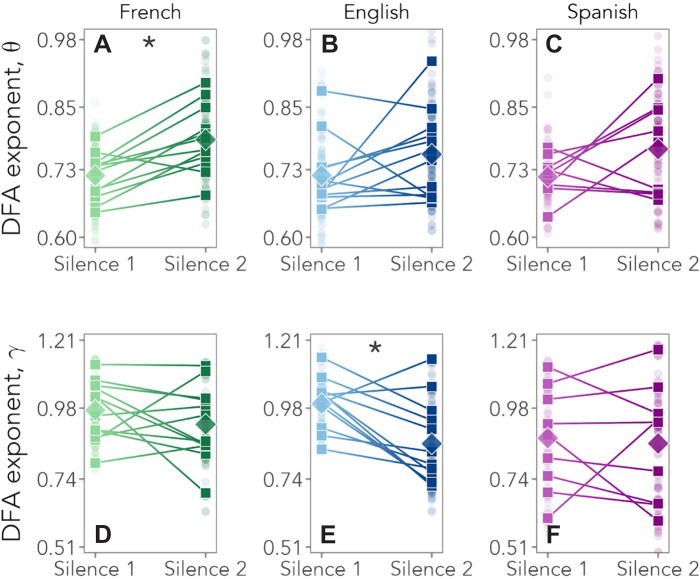

To test the role of prenatal experience directly, we compared changes in LRTCs as a function of the last language heard before silence 2 (Fig. 3). A linear mixed-effects model with Resting State Period (silence 1/silence 2) as a fixed factor and Participants as a random factor showed that in the theta band, only infants who listened to French last showed a significant increase in scaling exponents from silence 1 (α = 0.76 ± 0.05) to silence 2 (α = 0.82 ± 0.07; slope = 0.065, P = 0.0003) (table S2). Those exposed to Spanish [slope = 0.05, not significant (n.s.)] or English (slope = 0.04, n.s.) last did not. In the gamma band, no change was observed for newborns exposed last to French (slope = −0.04, n.s.) or Spanish (slope = −0.01, n.s.), while a significant decrease from silence 1 (α = 0.99 ± 0.08) to silence 2 (α = 0.86 ± 0.14) was found for those exposed last to English (slope = −0.14, P = 0.003) (table S3).

Fig. 3. DFA exponents as a function of the language heard last before silence 2.

(A to C) DFA exponents in the theta band for participants who heard French (green), English (blue), and Spanish (violet) as the last language before silence 2. (A) P = 0.0003. (D to F) DFA exponents in the gamma band. (E) P = 0.003. For further statistical details, see tables S2 to S5.

As a second approach, we estimated the autocorrelation function of the fluctuations of the EEG signal. The autocorrelations exhibited an oscillatory decay (Fig. 2G), and we computed the corresponding autocorrelation time by fitting their exponential envelope (see Materials and Methods). This allowed us to compare temporal correlations before and after language stimulation. The positive skewness (1.05, P < 0.00001) and kurtosis (2.19, P = 0.00002) of the distribution of the relative change in autocorrelation times (Fig. 2H) suggest that language stimulation indeed leads to increased temporal correlations and therefore to sustained activity.

DISCUSSION

Together, these results provide the most compelling evidence to date that language experience already shapes the functional organization of the infant brain, even before birth. Exposure to speech leads to rapid but lasting changes in neural dynamics, enhancing LRTCs and thereby increasing infants’ sensitivity to previously heard stimuli. This facilitatory effect is specific to the language and the frequency band experienced prenatally. These results converge with observations of increased power in the electrophysiological activation of the newborn brain after linguistic stimulation (24) and suggest that the prenatal period lays the foundations for further language development. It is important to note, however, that this impact is not deterministic: Children exposed to a language early in life remain capable of acquiring it even in the absence of prenatal experience with it, e.g., preterm infants (41), immigrant children (42), international adoptees (43–45), and children who received cochlear implants (46).

Whether the facilitatory effect of prenatal experience is specific to the speech domain remains an open question. Behaviorally, it has been shown that newborns recognize music they had been exposed to prenatally, so they show behavioral evidence of learning in auditory domains other than language (47, 48). Future neuroimaging studies will be necessary to test whether this learning is similarly accompanied by changes in neural temporal dynamics of the type we observed here for language.

From a broader perspective, our findings document power law scaling of neural activity during language processing in the newborn brain. This statistical property is a hallmark of critical phenomena, and it has been suggested that criticality in the brain is linked to states of optimal information transmission and storage (49–52). The newborn brain may thus already be in an optimal state for the efficient processing of speech and language, underpinning human infants’ unexpected language learning abilities.

MATERIALS AND METHODS

Experimental design

The EEG data from this study were acquired as part of a larger project that aimed to investigate speech perception and processing during the first 2 years of life (23, 24).

Participants

The protocol for this study was approved by the CER Paris Descartes ethics committee, Université Paris Descartes, Paris, France (now Université Paris Cité). All parents gave written informed consent before participation and were present during the testing session. We recruited participants at the maternity ward of the Robert Debré Hospital in Paris, and we tested them during their hospital stay. The inclusion criteria were as follows: (i) being born full-term and healthy, (ii) having a birth weight >2800 g, (iii) having an Apgar score >8 at 5 and 10 min after birth, (iv) being at most 5 days old, and (v) being born to French native speaker mothers who spoke French at least 80% of the time during the last trimester of the pregnancy according to self-report. We recruited a total of 54 newborns and excluded 5 participants due to fussiness and crying (n = 4) or technical problems (n = 1), resulting in 49 newborns who completed the study. Of these, we excluded 16 participants due to bad data quality in the silence 1 or silence 2 periods (see the “Data analysis” section for data quality criteria). Thus, electrophysiological data from 33 newborns (age 2.55 ± 1.33 days; range 1 to 5 days; 16 girls and 17 boys) were included in the final analysis. Among these participants, 12 infants heard French as the last stimulation block, while 12 heard English, and 9 heard Spanish.

Stimuli

We tested infants in five blocks: at rest (silence 1), during three blocks of speech stimulation with three different languages, and again at rest (silence 2; Fig. 1C). While at rest, no stimulus was presented. During speech stimulation, the following three languages were presented in separate blocks: the infants’ native language (French), a rhythmically similar unfamiliar language (Spanish), and a rhythmically different unfamiliar language (English). Stimuli consisted of sentences taken from the story “Goldilocks and the Three Bears” and its translation equivalents in the other two languages. Three sets of sentences were used, where each set comprised the translation of a single sentence into the three languages (English, French, and Spanish). The translations were created so as to match sentence duration across languages within the same set both in number of syllables and in absolute duration. All sentences were recorded in mild infant-directed speech by a female native speaker of each language (a different speaker for each language), at a sampling rate of 44.1 kHz. There were no significant differences across the three languages in terms of minimum and maximum pitch, pitch range, and average pitch (see the Supplementary Materials and table S1 for details). The intensity of all recordings was adjusted to 77 dB. Further details of the stimuli are available in (23).

Procedure

Newborns were tested in a dimmed, quiet room at the Robert Debré Hospital in Paris. During the recording session, newborns were comfortably at rest, mostly naturally asleep in their hospital bassinets (Fig. 1, A and B), as is common in neuroimaging studies on newborn auditory and speech perception (53–56). The speech stimuli were delivered bilaterally through two loudspeakers positioned on each side of the bassinet using the experimental software E-Prime. The sound volume was set to a comfortable conversational level (~65 to 70 dB). We presented the participants with one sentence per language, repeated 100 times. The experiment consisted of five blocks: one initial resting state block (silence 1), three language blocks, and one final resting state block (silence 2; Fig. 1C). Each resting state block lasted 3 min, while each language block lasted around 7 min. An interstimulus interval of random duration (between 1 and 1.5 s) was introduced between sentence repetitions, and an interblock interval of 10 s was introduced between language blocks (Fig. 1C). The order of the languages was pseudo-randomized and counterbalanced across participants. The entire recording session lasted about 27 min.
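The text states only that the language order was "pseudo-randomized and counterbalanced." As an illustration of what such a scheme can look like, the sketch below is a hypothetical one, not the procedure used in the study: it cycles through all 3! = 6 possible orders of the three languages, reshuffling each cycle with a seeded random generator, so every order is used equally often (up to one participant). The names `assign_orders` and `LANGUAGES` are illustrative.

```python
import itertools
import random
from collections import Counter

LANGUAGES = ("French", "Spanish", "English")


def assign_orders(n_participants, seed=0):
    """Hypothetical counterbalancing: shuffled passes through all 6 language orders.

    Each pass contains every permutation once, so order counts never differ
    by more than one, while the sequence across participants stays unpredictable.
    """
    orders = list(itertools.permutations(LANGUAGES))
    rng = random.Random(seed)
    assignment = []
    while len(assignment) < n_participants:
        batch = orders[:]
        rng.shuffle(batch)
        assignment.extend(batch)
    return assignment[:n_participants]


orders = assign_orders(49)
# Number of participants hearing each language last, i.e., just before silence 2.
last_language = Counter(order[-1] for order in orders)
```

Under this scheme each language ends up last for roughly a third of participants; exclusions after testing, as reported above, would then make the final group sizes unequal.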

Data acquisition

We recorded EEG data with active electrodes, using a Brain Products actiCAP and actiCHamp acquisition system (Brain Products GmbH, Gilching, Germany). We used a 10-channel layout to acquire cortical responses from the following scalp positions: F7, F3, FZ, F4, F8, T7, C3, CZ, C4, and T8 (Fig. 1B). We chose these recording locations because this is where auditory and speech perception–related neural responses are typically observed in newborns and young infants (34, 35) (channels T7 and T8 were previously labeled T3 and T4, respectively). We used two electrodes, one placed on each mastoid, for online reference and a ground electrode placed on the forehead. Data were referenced online to the average of the two mastoid channels. Data were recorded at a sampling rate of 500 Hz and filtered online with a high cutoff filter at 100 Hz, a low cutoff filter at 0.1 Hz, and an 8-kHz (−3 dB) anti-aliasing filter. The electrode impedances were kept below 140 kΩ.

Data analysis

We preprocessed and analyzed the data using custom Python and R scripts.

Preprocessing (fig. S1). First, we filtered the raw EEG signals with a 50-Hz notch filter to eliminate power line noise. Next, we band-pass filtered the denoised EEG signals between 1 and 100 Hz using a zero phase-shift Chebyshev filter. We then submitted the recordings to a rejection process to exclude noisy and artifact-contaminated data. We first identified channels with amplitudes exceeding ±200 μV. If such amplitudes occurred in the first or last 30 s of the 3-min resting periods, we rejected only the compromised data segment; otherwise, we rejected the whole channel. This ensured at least 150 s of continuous artifact-free recording, as needed for the DFA. We then rejected channels whose power spectrum (in decibels) deviated from the participant’s average power spectrum by more than 4.5 × 10⁻⁵. Last, the procedure was validated by visual inspection to remove any residual artifacts. Participants who had fewer than five valid channels after channel rejection were not included in the data analysis (n = 15). On average, participants contributed 9 clean channels of the 10 total channels (SD 1.5 for silence 1 and 0.9 for silence 2). All data preprocessing and rejection were conducted in batch using the above-defined criteria before statistical analysis.
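The filtering and amplitude-rejection steps above can be sketched with SciPy as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the notch quality factor, the Chebyshev type (I), its order and its passband ripple are not reported and are chosen here for illustration only, and the helper names `filter_channel` and `trim_or_reject` are hypothetical. The edge rule assumes a 30-s segment is cut only when every supra-threshold sample lies inside it, and that artifacts at both edges (which would leave only 120 s) reject the channel.

```python
import numpy as np
from scipy import signal

FS = 500.0            # sampling rate reported under Data acquisition (Hz)
AMP_LIMIT_UV = 200.0  # amplitude threshold (±200 μV)
EDGE_S = 30.0         # edge segment that may be cut instead of rejecting the channel (s)
MIN_CLEAN_S = 150.0   # minimum artifact-free duration required for DFA (s)


def filter_channel(x, fs=FS):
    """Remove 50-Hz line noise, then band-pass 1 to 100 Hz with zero phase shift.

    ASSUMPTION: notch Q = 30 and a 4th-order Chebyshev type I design with
    0.5 dB ripple are illustrative; the paper does not report these values.
    """
    b, a = signal.iirnotch(50.0, Q=30.0, fs=fs)
    x = signal.filtfilt(b, a, np.asarray(x, dtype=float))
    sos = signal.cheby1(4, 0.5, [1.0, 100.0], btype="bandpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos, x)  # forward-backward pass: no phase shift


def trim_or_reject(x, fs=FS):
    """Apply the amplitude rule: return the usable samples, or None if rejected.

    ASSUMPTION: an edge segment is cut only when all supra-threshold samples
    fall inside it; artifacts anywhere else, or at both edges, reject the channel.
    """
    x = np.asarray(x, dtype=float)
    bad = np.flatnonzero(np.abs(x) > AMP_LIMIT_UV)
    if bad.size == 0:
        return x
    edge = int(EDGE_S * fs)
    if bad.max() < edge:                # artifacts confined to the first 30 s
        kept = x[edge:]
    elif bad.min() >= len(x) - edge:    # artifacts confined to the last 30 s
        kept = x[: len(x) - edge]
    else:
        return None
    return kept if len(kept) >= MIN_CLEAN_S * fs else None
```

The spectral-deviation criterion and the visual inspection are not reproduced here.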

DFA. DFA was performed on the silence 1 and silence 2 blocks. The signal was filtered in the theta (4 to 8 Hz) and low gamma (30 to 60 Hz) bands. The delta band (1 to 3 Hz), although theoretically relevant, could not be included in the analysis: The 3-min duration of the resting state periods did not provide sufficient data for fitting the estimate of the DFA exponent in this slow band.

The signals were filtered with a finite impulse response filter, following standard practice (28), since it avoids introducing artifactual long-range correlations, unlike other filtering procedures. The order of the filter was varied across bands so as to cover at least two cycles of the lowest frequency of the band and was thus set to 2 * (sampling frequency/lowest frequency).
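A minimal sketch of this band-limiting step, followed by the amplitude-envelope extraction described next, is given below. It applies the stated order rule, 2 × (sampling frequency/lowest frequency), which gives 250 for theta and about 33 for gamma at 500 Hz. The window-method design with SciPy's default Hamming window and zero-phase forward-backward application are assumptions, since the text specifies only an FIR filter of that order; `band_envelope` is a hypothetical helper name.

```python
import numpy as np
from scipy import signal


def band_envelope(x, fs, f_lo, f_hi):
    """Band-pass a signal with an FIR filter and return its amplitude envelope.

    The filter order follows the rule stated in the text, 2 * fs / f_lo samples
    (two cycles of the lowest frequency in the band).
    ASSUMPTIONS: window-method design with scipy's default Hamming window and
    zero-phase application by forward-backward filtering.
    """
    order = int(round(2 * fs / f_lo))
    taps = signal.firwin(order + 1, [f_lo, f_hi], pass_zero=False, fs=fs)
    filtered = signal.filtfilt(taps, [1.0], np.asarray(x, dtype=float))
    # Amplitude envelope = modulus of the analytic signal (Hilbert transform).
    return np.abs(signal.hilbert(filtered))
```

For a channel `eeg` sampled at 500 Hz, `band_envelope(eeg, 500.0, 4.0, 8.0)` would give the theta envelope and `band_envelope(eeg, 500.0, 30.0, 60.0)` the low gamma envelope.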

DFA was then performed on the amplitude envelope of the filtered signals, i.e., on the amplitude extracted from the analytic representation of the signal, with the following steps (28, 36). The cumulative sum of the time series was computed to create the signal profile. A set of window sizes, T, equally spaced on a base 2 logarithmic scale between a lower bound of eight samples and the length of the signal, was selected. For each window length t ∈ T, the signal profile was split into a set W of time series of length t with 50% overlap. For each window w ∈ W, the linear trend was removed (using a least-squares fit) to create the detrended signal w_detrend, and its SD, σ(w_detrend), was calculated. The fluctuation function F was then calculated as the mean SD of the detrended signals over all identically sized windows, as a function of the window size: F(t) = mean(σ(w_detrend(t))). The fluctuation function was plotted for all window sizes, T, on logarithmic scales. The DFA exponent, α, is the slope of the trend line in the range of timescales of interest and can be estimated using linear regression. The lower bound for the linear regression fit was chosen by comparing the fluctuation function of the signal with that of a white noise surrogate filtered with the same filter as the data: The lower bound was placed where the expected white noise scaling (α = 0.5) started to appear (28). As expected, this lower bound depended on the frequency band: The lower the frequency band, the higher the bound. The upper bound was set at 20 s, i.e., approximately 13% of the minimum signal length (150 s).
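The steps listed above translate fairly directly into NumPy. The sketch below is an illustration under assumptions, not the authors' code: the number of log2-spaced window sizes (`n_sizes`) is not reported and is set arbitrarily, the fitting range is passed in seconds, and `dfa` is a hypothetical helper name. A white-noise input should yield α ≈ 0.5, the reference scaling used above to place the lower fitting bound.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dfa(signal_1d, fs, fit_range_s, min_window=8, n_sizes=30, overlap=0.5):
    """Detrended fluctuation analysis following the steps listed in the text.

    Returns (window sizes in samples, fluctuation function F, exponent alpha).
    fit_range_s is the (lower, upper) fitting range in seconds.
    ASSUMPTION: n_sizes (number of log2-spaced window sizes) is not reported
    in the paper; 30 is an arbitrary choice.
    """
    x = np.asarray(signal_1d, dtype=float)
    # Signal profile. Subtracting the mean only adds a linear trend to the
    # profile, which the per-window detrending removes anyway.
    profile = np.cumsum(x - x.mean())
    sizes = np.unique(np.round(
        np.logspace(np.log2(min_window), np.log2(len(x)), n_sizes, base=2)).astype(int))
    fluct = np.empty(len(sizes))
    for k, size in enumerate(sizes):
        step = max(1, int(size * (1 - overlap)))              # 50% overlap by default
        windows = sliding_window_view(profile, size)[::step]  # (n_windows, size)
        # Least-squares linear trend fitted to all windows at once.
        design = np.column_stack([np.ones(size), np.arange(size)])
        coef, *_ = np.linalg.lstsq(design, windows.T, rcond=None)
        residuals = windows.T - design @ coef
        fluct[k] = residuals.std(axis=0).mean()  # mean SD over windows of this size
    lo, hi = fit_range_s
    mask = (sizes >= lo * fs) & (sizes <= hi * fs)
    alpha = np.polyfit(np.log10(sizes[mask]), np.log10(fluct[mask]), 1)[0]
    return sizes, fluct, alpha
```

Applied to a theta envelope sampled at 500 Hz, a call such as `dfa(theta_env, 500.0, (0.86, 20.0))` would use the theta fitting range reported in Results.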

The DFA exponent can be interpreted as an estimate of the Hurst parameter (28). The time series is uncorrelated if α = 0.5. If 0.5 < α < 1, positive correlations are present in the time series; i.e., the series shows larger fluctuations on longer timescales than expected by chance. If 0 < α < 0.5, the time series is anticorrelated; i.e., fluctuations are smaller in larger time windows than expected by chance.
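The interpretation rules above can be written as a small lookup, shown here as a sketch. Because an estimated exponent is never exactly 0.5, the function treats values within a tolerance `tol` of 0.5 as uncorrelated; that tolerance, and the helper name `describe_alpha`, are illustrative assumptions rather than part of the method.

```python
def describe_alpha(alpha, tol=0.05):
    """Label a DFA exponent using the regimes described in the text.

    ASSUMPTION: tol is an illustrative tolerance for treating an estimate as
    equal to 0.5; it is not a criterion used in the paper.
    """
    if abs(alpha - 0.5) <= tol:
        return "uncorrelated"
    if 0 < alpha < 0.5:
        return "anticorrelated"
    if 0.5 < alpha < 1:
        return "positively correlated"
    return "outside the 0 < alpha < 1 range discussed here"
```

With this function, the group means reported in Results (for example, 0.76 and 0.81 in theta) fall in the positively correlated regime.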

Autocorrelation times. The connected autocorrelation function $C_i(t)$ of the $i$th channel of the EEG signal is defined as $C_i(t) = \langle x_i(t_0)\, x_i(t_0 + t) \rangle - \langle x_i(t_0) \rangle \langle x_i(t_0 + t) \rangle$, where $\langle \cdot \rangle$ denotes an average over all times $t_0$ and $x_i(t)$ is the signal of the $i$th channel. $C_i(t)$ describes the temporal correlations of the fluctuations of $x_i(t)$. We found that $C_i(t)$ typically displays damped oscillations, decaying with an exponential envelope $C_i(t) \propto \exp(-t/\tau_i)$, where $\tau_i$ is the autocorrelation time.
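The definition above and the envelope fit can be sketched as follows. The autocorrelation function implements the connected definition directly, averaging over all available start times t0 for each lag. For the decay time, the sketch swaps in a simpler technique than the maximum likelihood fit described next: a least-squares line through log|C| at the crests (local maxima of |C|) of the damped oscillation. The crest threshold `min_rel` and the helper names are assumptions made for illustration.

```python
import numpy as np
from scipy.signal import find_peaks


def connected_autocorrelation(x, max_lag):
    """C(t) = <x(t0) x(t0 + t)> - <x(t0)><x(t0 + t)>, averaged over t0, for lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    c = np.empty(max_lag + 1)
    for lag in range(max_lag + 1):
        a, b = x[: n - lag], x[lag:]
        c[lag] = np.mean(a * b) - a.mean() * b.mean()
    return c


def autocorrelation_time(c, dt, min_rel=0.05):
    """Estimate tau from an exponential envelope C(t) ∝ exp(-t / tau).

    SWAPPED-IN TECHNIQUE: least-squares line through log|C| at the local maxima
    of |C|, not the maximum likelihood fit used in the paper. Crests below
    min_rel * C(0) are ignored because noise dominates them in real data
    (min_rel is an assumption).
    """
    c = np.asarray(c, dtype=float)
    peaks, _ = find_peaks(np.abs(c), height=min_rel * abs(c[0]))
    if len(peaks) < 2:
        raise ValueError("need at least two envelope crests to fit a decay")
    slope, _ = np.polyfit(peaks * dt, np.log(np.abs(c[peaks])), 1)
    return -1.0 / slope
```

For a 500-Hz recording, `autocorrelation_time(connected_autocorrelation(x, 1500), dt=1 / 500)` would estimate τ from lags up to 3 s.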

We estimated, for the $i$th channel of the $n$th subject, the autocorrelation times in silence 1, $\tau_{i,n}^{(1)}$, and silence 2, $\tau_{i,n}^{(2)}$, by fitting the exponential envelope of $C_{i,n}(t)$ with standard maximum likelihood methods. We then computed the relative change in autocorrelation time, $\Delta\tau_{i,n} = (\tau_{i,n}^{(2)} - \tau_{i,n}^{(1)}) / \tau_{i,n}^{(1)}$. If $\Delta\tau_{i,n} > 0$, the corresponding EEG activity displays enhanced temporal correlations after stimulation. To assess whether autocorrelation times increase on average across channels and subjects, we estimated the empirical probability distribution of the relative change, $p(s) = \frac{1}{N} \sum_{i,n} \delta(s - \Delta\tau_{i,n})$, where $N$ is the number of channel-subject pairs and $\delta$ is the Dirac delta function. A positive skewness of $p(s)$ suggests that activity in silence 2 has longer correlation times than activity in silence 1.
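Once the autocorrelation times are available for both resting periods, the remaining computation is short. The sketch below assumes the standard definition of relative change, (τ2 − τ1)/τ1, for each channel-subject pair, and summarizes the resulting distribution with its sample skewness and excess kurtosis. The choice of SciPy's `skewtest` (D'Agostino) and `kurtosistest` (Anscombe-Glynn) for the p-values is an assumption for illustration, as the paper does not name the tests it used; the helper names are hypothetical.

```python
import numpy as np
from scipy import stats


def relative_change(tau_silence1, tau_silence2):
    """Delta tau = (tau2 - tau1) / tau1, element-wise over channel-subject pairs."""
    tau1 = np.asarray(tau_silence1, dtype=float)
    tau2 = np.asarray(tau_silence2, dtype=float)
    return (tau2 - tau1) / tau1


def distribution_shape(delta):
    """Sample skewness and excess kurtosis of Delta tau, with p-values.

    ASSUMPTION: scipy's skewtest and kurtosistest are used for illustration;
    the paper does not report which tests produced its P values.
    """
    delta = np.asarray(delta, dtype=float)
    return {
        "skewness": stats.skew(delta),
        "p_skewness": stats.skewtest(delta).pvalue,
        "excess_kurtosis": stats.kurtosis(delta),  # Fisher definition: 0 for a Gaussian
        "p_kurtosis": stats.kurtosistest(delta).pvalue,
    }
```

The histogram of the output of `relative_change` corresponds to the empirical distribution p(s); `skewtest` needs at least 8 values, which all channel-subject pairs together easily provide.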

Statistical analysis

Two sets of statistical analyses were conducted: one to assess the overall impact of speech stimulation on neural dynamics and one to assess whether speech in different languages had different impacts. For the first analysis, the DFA exponents were entered into linear mixed-effects models to test whether the DFA exponents of the signals differed significantly before and after speech stimulation. We built and compared all possible models, and model selection was based on the Akaike Information Criterion (57). The models were implemented using the lme4 and lmerTest packages in R (58), and the parameters were estimated by maximizing the log-likelihood. In both the theta and the gamma bands, the best-fitting model included Resting State Period (silence 1/silence 2) as a fixed factor and Participant as a random factor.
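The selection rule itself is simple once each candidate model has been fitted: AIC = 2k − 2 ln L, where L is the maximized likelihood and k the number of estimated parameters, and the model with the lowest AIC is retained. The sketch below shows only this bookkeeping, not the mixed-model fitting done in lme4. When candidate models differ in their fixed effects, the log-likelihoods compared this way should be maximum likelihood rather than REML values. The log-likelihoods in the usage line are made up for illustration, and the helper names are hypothetical.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion: AIC = 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def select_by_aic(fits):
    """Return the name of the candidate model with the lowest AIC.

    fits maps a model name to (maximized log-likelihood, number of estimated
    parameters, counting fixed effects, variance components and residual variance).
    """
    return min(fits, key=lambda name: aic(*fits[name]))


# Hypothetical values, for illustration only.
best = select_by_aic({
    "random intercept only": (-10.0, 3),
    "period + random intercept": (-7.0, 4),
})
```

Here the second model wins (AIC 22 versus 26): its gain of 3 log-likelihood units outweighs the penalty of 2 for its extra parameter.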

For the second analysis, linear mixed-effects models were run, dividing the subjects into three groups on the basis of the last language heard before silence 2. For participants who heard French last, the best-fitting model in the theta band included Resting State Period (silence 1/silence 2) as a fixed factor and Participant as a random factor. The same model was also the best-fitting one in the gamma band for participants who heard English last. For all other comparisons, the best-fitting model included only Participant as a random factor.

Acknowledgments

Funding: This work was supported by ERC Consolidator Grant “BabyRhythm” no. 773202 (J.G.), FARE grant no. R204MPRHKE (J.G. and S.S.S.), and European Union Next Generation EU NRRP M6C2 - Investment 2.1 (J.G.).

Author contributions: Conceptualization: J.G. and S.S.S. Performing the experiment: M.C.O.B. Data analysis: B.M., G.N., G.B., and R.G. Supervision: J.G. and S.S.S. Funding: J.G. and S.S.S. Writing—original draft: all authors. Writing—review and editing: all authors.

Competing interests: The authors declare that they have no competing interests.

Data and materials availability: All data and code needed to evaluate the conclusions in the paper are present in the paper, in the Supplementary Materials, and at https://zenodo.org/record/8358768 as well as https://osf.io/g2rvc/.

Supplementary Materials

This PDF file includes:

Fig. S1

Tables S1 to S5

REFERENCES AND NOTES

- 1.A. J. DeCasper, W. P. Fifer, Of human bonding: Newborns prefer their mothers’ voices. Science 208, 1174–1176 (1980). [DOI] [PubMed] [Google Scholar]

- 2.J. P. Lecanuet, C. Granier-Deferre, Speech stimuli in the fetal environment, in Developmental Neurocognition: Speech and Face Processing in the First Year of Life (Kluwer Academic Press, 1993), pp. 237–248. [Google Scholar]

- 3.C. Moon, R. P. Cooper, W. P. Fifer, Two-day-olds prefer their native language. Infant Behav. Dev. 16, 495–500 (1993). [Google Scholar]

- 4.C. Moon, H. Lagercrantz, P. K. Kuhl, Language experienced in utero affects vowel perception after birth: A two-country study. Acta Paediatr. 102, 156–160 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.J. Gervain, The role of prenatal experience in language development. Curr. Opin. Behav. Sci. 21, 62–67 (2018). [Google Scholar]

- 6.J. J. Eggermont, J. K. Moore, Morphological and functional development of the auditory nervous system, in Human Auditory Development (Springer, 2012), pp. 61–105. [Google Scholar]

- 7.R. M. Abrams, K. J. Gerhardt, The acoustic environment and physiological responses of the fetus. J. Perinatol. 20, S31–S36 (2000). [DOI] [PubMed] [Google Scholar]

- 8.C. M. Moon, W. P. Fifer, Evidence of transnatal auditory learning. J. Perinatol. 20, S37–S44 (2000). [DOI] [PubMed] [Google Scholar]

- 9.J. F. Werker, R. C. Tees, Phonemic and phonetic factors in adult cross-language speech perception. J. Acoust. Soc. Am. 75, 1866–1878 (1984). [DOI] [PubMed] [Google Scholar]

- 10.J. F. Werker, Perceptual beginnings to language acquisition. Appl. Psycholinguist. 39, 703–728 (2018). [Google Scholar]

- 11.D. Swingley, Infants’ learning of speech sounds and word forms, in Oxford Handbook of the Mental Lexicon (Oxford Univ. Press, 2022). [Google Scholar]

- 12.P. K. Kuhl, Brain mechanisms in early language acquisition. Neuron 67, 713–727 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.C. Nallet, J. Gervain, Neurodevelopmental preparedness for language in the neonatal brain. Dev. Psychol. 3, 41–58 (2021). [Google Scholar]

- 14.F. Ramus, M. Nespor, J. Mehler, Correlates of linguistic rhythm in the speech signal. Cognition 73, 265–292 (1999). [DOI] [PubMed] [Google Scholar]

- 15.F. Ramus, M. D. Hauser, C. Miller, D. Morris, J. Mehler, Language discrimination by human newborns and by cotton-top tamarin monkeys. Science 288, 349–351 (2000). [DOI] [PubMed] [Google Scholar]

- 16.F. Homae, H. Watanabe, T. Nakano, G. Taga, Large-scale brain networks underlying language acquisition in early infancy. Front. Psychol. 2, 93 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.F. Homae, H. Watanabe, T. Otobe, T. Nakano, T. Go, Y. Konishi, G. Taga, Development of global cortical networks in early infancy. J. Neurosci. 30, 4877–4882 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.L. May, J. Gervain, M. Carreiras, J. F. Werker, The specificity of the neural response to speech at birth. Dev. Sci. 21, e12564 (2018). [DOI] [PubMed] [Google Scholar]

- 19.L. May, K. Byers-Heinlein, J. Gervain, J. F. Werker, Language and the newborn brain: Does prenatal language experience shape the neonate neural response to speech? Front. Psychol. 2, 222 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. M. Peña, A. Maki, D. Kovacic, G. Dehaene-Lambertz, H. Koizumi, F. Bouquet, J. Mehler, Sounds and silence: An optical topography study of language recognition at birth. Proc. Natl. Acad. Sci. U.S.A. 100, 11702–11705 (2003).

- 21. Y. Sato, Y. Sogabe, R. Mazuka, Development of hemispheric specialization for lexical pitch-accent in Japanese infants. J. Cogn. Neurosci. 22, 2503–2513 (2010).

- 22. P. Vannasing, O. Florea, B. González-Frankenberger, J. Tremblay, N. Paquette, D. Safi, F. Wallois, F. Lepore, R. Béland, M. Lassonde, A. Gallagher, Distinct hemispheric specializations for native and non-native languages in one-day-old newborns identified by fNIRS. Neuropsychologia 84, 63–69 (2016).

- 23. M. C. O. Ortiz Barajas, R. Guevara Erra, J. Gervain, The origins and development of speech envelope tracking during the first months of life. Dev. Cogn. Neurosci. 48, 100915 (2021).

- 24. M. C. O. Ortiz Barajas, R. Guevara, J. Gervain, Neural oscillations and speech processing at birth. iScience 10.1016/j.isci.2023.108187 (2023).

- 25. K. J. Gerhardt, R. M. Abrams, C. C. Oliver, Sound environment of the fetal sheep. Am. J. Obstet. Gynecol. 162, 282–287 (1990).

- 26. A. L. Giraud, D. Poeppel, Cortical oscillations and speech processing: Emerging computational principles and operations. Nat. Neurosci. 15, 511–517 (2012).

- 27. L. Meyer, The neural oscillations of speech processing and language comprehension: State of the art and emerging mechanisms. Eur. J. Neurosci. 48, 2609–2621 (2018).

- 28. R. Hardstone, S.-S. Poil, G. Schiavone, R. Jansen, V. V. Nikulin, H. D. Mansvelder, K. Linkenkaer-Hansen, Detrended fluctuation analysis: A scale-free view on neuronal oscillations. Front. Physiol. 3, 450 (2012).

- 29. S. Monto, S. Vanhatalo, M. D. Holmes, J. M. Palva, Epileptogenic neocortical networks are revealed by abnormal temporal dynamics in seizure-free subdural EEG. Cereb. Cortex 17, 1386–1393 (2007).

- 30. T. Montez, S.-S. Poil, B. F. Jones, I. Manshanden, J. P. Verbunt, B. W. van Dijk, A. B. Brussaard, A. van Ooyen, C. J. Stam, P. Scheltens, Altered temporal correlations in parietal alpha and prefrontal theta oscillations in early-stage Alzheimer disease. Proc. Natl. Acad. Sci. U.S.A. 106, 1614–1619 (2009).

- 31. J. W. Kim, H.-B. Shin, P. A. Robinson, Quantitative study of the sleep onset period via detrended fluctuation analysis: Normal vs. narcoleptic subjects. Clin. Neurophysiol. 120, 1245–1251 (2009).

- 32. S. Lahmiri, Generalized Hurst exponent estimates differentiate EEG signals of healthy and epileptic patients. Physica A 490, 378–385 (2018).

- 33. I. Winkler, G. P. Háden, O. Ladinig, I. Sziller, H. Honing, Newborn infants detect the beat in music. Proc. Natl. Acad. Sci. U.S.A. 106, 2468–2471 (2009).

- 34. G. Stefanics, G. P. Háden, I. Sziller, L. Balázs, A. Beke, I. Winkler, Newborn infants process pitch intervals. Clin. Neurophysiol. 120, 304–308 (2009).

- 35. B. Tóth, G. Urbán, G. P. Háden, M. Márk, M. Török, C. J. Stam, I. Winkler, Large-scale network organization of EEG functional connectivity in newborn infants. Hum. Brain Mapp. 38, 4019–4033 (2017).

- 36. C.-K. Peng, S. V. Buldyrev, S. Havlin, M. Simons, H. E. Stanley, A. L. Goldberger, Mosaic organization of DNA nucleotides. Phys. Rev. E 49, 1685–1689 (1994).

- 37. J. Bertoncini, C. Floccia, T. Nazzi, J. Mehler, Morae and syllables: Rhythmical basis of speech representations in neonates. Lang. Speech 38, 311–329 (1995).

- 38. J. Bertoncini, J. Mehler, Syllables as units in infant speech perception. Infant Behav. Dev. 4, 247–260 (1981).

- 39. R. Bijeljac-Babic, J. Bertoncini, J. Mehler, How do 4-day-old infants categorize multisyllabic utterances? Dev. Psychol. 29, 711–721 (1993).

- 40. J. Mehler, E. Dupoux, T. Nazzi, G. Dehaene-Lambertz, Coping with linguistic diversity: The infant’s viewpoint, in Signal to Syntax: Bootstrapping from Speech to Grammar in Early Acquisition, J. L. Morgan, K. Demuth, Eds. (Lawrence Erlbaum Associates Inc, 1996), pp. 101–116.

- 41. T. M. Luu, B. R. Vohr, W. Allan, K. C. Schneider, L. R. Ment, Evidence for catch-up in cognition and receptive vocabulary among adolescents born very preterm. Pediatrics 128, 313–322 (2011).

- 42. J. S. Johnson, E. L. Newport, Critical period effects in second language learning: The influence of maturational state on the acquisition of English as a second language. Cogn. Psychol. 21, 60–99 (1989).

- 43. V. A. Ventureyra, C. Pallier, H.-Y. Yoo, The loss of first language phonetic perception in adopted Koreans. J. Neurolinguistics 17, 79–91 (2004).

- 44. L. J. Pierce, D. Klein, J.-K. Chen, A. Delcenserie, F. Genesee, Mapping the unconscious maintenance of a lost first language. Proc. Natl. Acad. Sci. U.S.A. 111, 17314–17319 (2014).

- 45. J. Choi, M. Broersma, A. Cutler, Early phonology revealed by international adoptees’ birth language retention. Proc. Natl. Acad. Sci. U.S.A. 114, 7307–7312 (2017).

- 46. A. Kral, A. Sharma, Developmental neuroplasticity after cochlear implantation. Trends Neurosci. 35, 111–122 (2012).

- 47. P. G. Hepper, An examination of fetal learning before and after birth. Ir. J. Psychol. 12, 95–107 (1991).

- 48. S. Ullal-Gupta, C. M. Vanden Bosch der Nederlanden, P. Tichko, A. Lahav, E. E. Hannon, Linking prenatal experience to the emerging musical mind. Front. Syst. Neurosci. 7, 48 (2013).

- 49. G. Barzon, G. Nicoletti, B. Mariani, M. Formentin, S. Suweis, Criticality and network structure drive emergent oscillations in a stochastic whole-brain model. J. Phys. Complex. 3, 025010 (2022).

- 50. W. L. Shew, D. Plenz, The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100 (2013).

- 51. M. A. Muñoz, Colloquium: Criticality and dynamical scaling in living systems. Rev. Mod. Phys. 90, 031001 (2018).

- 52. J. Hidalgo, J. Grilli, S. Suweis, M. A. Muñoz, J. R. Banavar, A. Maritan, Information-based fitness and the emergence of criticality in living systems. Proc. Natl. Acad. Sci. U.S.A. 111, 10095–10100 (2014).

- 53. D. Perani, M. C. Saccuman, P. Scifo, D. Spada, G. Andreolli, R. Rovelli, C. Baldoli, S. Koelsch, Functional specializations for music processing in the human newborn brain. Proc. Natl. Acad. Sci. U.S.A. 107, 4758–4763 (2010).

- 54. F. Homae, H. Watanabe, T. Nakano, K. Asakawa, G. Taga, The right hemisphere of sleeping infant perceives sentential prosody. Neurosci. Res. 54, 276–280 (2006).

- 55. M. Cheour, O. Martynova, R. Näätänen, R. Erkkola, M. Sillanpää, P. Kero, A. Raz, M.-L. Kaipio, J. Hiltunen, O. Aaltonen, J. Savela, H. Hämäläinen, Speech sounds learned by sleeping newborns. Nature 415, 599–600 (2002).

- 56. I. Winkler, E. Kushnerenko, J. Horváth, R. Čeponienė, V. Fellman, M. Huotilainen, R. Näätänen, E. Sussman, Newborn infants can organize the auditory world. Proc. Natl. Acad. Sci. U.S.A. 100, 11812–11815 (2003).

- 57. G. Claeskens, N. L. Hjort, Model Selection and Model Averaging (Cambridge Univ. Press, 2008).

- 58. D. Bates, M. Mächler, B. Bolker, S. Walker, Fitting linear mixed-effects models using lme4. arXiv:1406.5823 [stat.CO] (23 June 2014).

Supplementary Materials

Fig. S1

Tables S1 to S5