Abstract

The screen image synthesis (SIS) meter was originally proposed as a high-speed measurement tool that fuses the data measured at multiple sample-rotational angles into a whole-field measurement result. However, it suffered from stray-light noise and lacked the capability of spectrum measurement. In this study, we propose an SIS system embedded with a snapshot hyperspectral technology based on a dispersion image of a sparse-sampling screen (SSS). Each captured photo is transformed and calibrated into hyperspectral data at a specific sample-rotational angle. After the hyperspectral data at all sample-rotational angles are captured, an SIS image-fusion process is applied to obtain the whole-field hyperspectral data. By applying the SSS to the SIS meter, we not only create a screen image synthesis hyperspectral meter but also effectively address the issue of stray-light noise. In the experiment, we assess its correctness by comparing the hyperspectral values with those of a one-dimensional spectrum goniometer (ODSG). We also show the 2D correlated color temperature (CCT) distribution and compare it with the ODSG result. The experimental results demonstrate the feasibility of the system as both a spectrum distribution meter and a CCT distribution meter.

Subject terms: Optics and photonics, Optical techniques

Introduction

Intensity distribution meters and bidirectional scattering distribution function (BSDF) meters are essential tools in optical design1–7, optical modeling8–14, and color estimation15,16. The conventional technique for intensity distribution measurement, the goniophotometer, involves time-consuming point-by-point scanning15–20. To overcome this limitation and improve measurement speed, image-based technologies have emerged as promising alternatives21–27. Notable high-speed technologies include the image-sphere system28,29, conoscopy30–32, and the screen image synthesis (SIS) system33–35.

The image-sphere system utilizes a camera and a reflective sphere to capture the emitted light from a source28,29. By scattering the light using the reflective sphere, the system obtains a snapshot of the light distribution in all directions. However, the fixed size of the sphere restricts the far-field distance and limits the sample size. Moreover, the scattering of light from the spherical surface introduces two types of stray-light noise36. First, scattering from one part of the spherical surface tends to project onto other parts, leading to cross-talk noise. Second, scattering from one part of the spherical surface can hit the sample and reflect onto other parts, resulting in sample-reflecting-stray-light (SRSL) noise. Conoscopy, on the other hand, is a microscope-based technique that captures the intensity distribution of a small light source within a single plane30–32. A camera records the light distribution, providing a snapshot of the source. However, suppressing SRSL noise in conoscopy is a complex task that often requires specific lens design, coatings, and immersion oil operation.

In contrast, the SIS system has demonstrated its ability to measure intensity distribution as well as BSDF33–35. This system employs a screen and a camera to capture photos as the sample rotates at different angles, generating a whole-field measurement result. The adjustable distance between the screen and the sample offers flexibility in sample size, making the SIS system versatile and suitable for a wide range of samples and applications. The flat screen of the SIS system minimizes cross-talk noise. However, SRSL noise remains considerable due to the large solid-angle occupancy of the screen.

Furthermore, the conventional SIS system lacks spectral or color information. This leads to measurement errors caused by metamerism, which depends on the spectral distribution of the light source. To address this limitation, we replace the original screen of the SIS system with a black screen containing an array of holes. This modification effectively suppresses SRSL noise and serves as a sparse-sampling screen (SSS). Additionally, a grating is placed in front of the camera, working together with the SSS to enable snapshot hyperspectral technology. This is equivalent to embedding an integral field spectroscopy (IFS) snapshot hyperspectral technique inside the SIS system37–40.

Another solution could be a compact snapshot hyperspectral imaging module that rotates around the sample to gather all angular responses from the sample. It may leverage advanced snapshot hyperspectral solutions41, such as the coded-aperture technique42–45 or the Chip-side technology46–52. However, because the intensity distribution must be measured at a far-field distance, the compact size of the input plane means that its angular coverage is small, which makes whole-field measurement a time-consuming process. As a result, the SIS aided by snapshot hyperspectral technology provides a better solution: it captures a large angular coverage and suppresses SRSL noise at the same time.

In this paper, we develop an SIS hyperspectral (SISH) meter and demonstrate it experimentally. It can benefit color science53–55, material identification56,57, and optical engineering58,59. Because of its sparse-sampling nature, it is compatible with data-stream improvement techniques such as compressed sensing60–63 or deep-learning approaches64,65.

SIS hyperspectral system

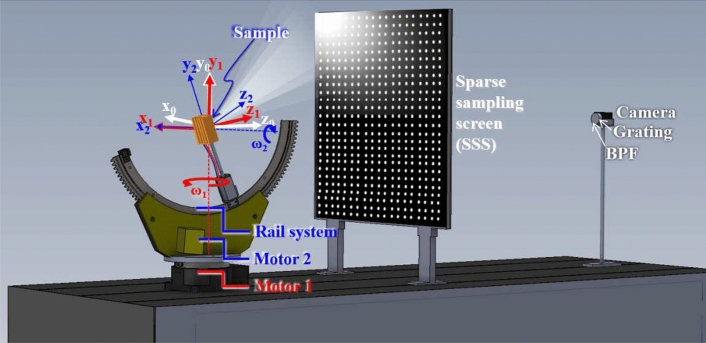

Figure 1 shows that the SISH system is composed of a snap-shot hyperspectral system and a sample-rotational system. The snap-shot hyperspectral system is composed of a camera, a grating, a band pass filter (BPF) and a sparse-sampling screen (SSS). The irradiance captured by the CMOS sensor of the camera corresponds to the radiance from each position of the screen. The sample-rotational system is composed of Motor 1, Motor 2, a rail system and a sample mount. A Cartesian coordinate system is defined in the snap-shot hyperspectral system. The sample-rotational system is driven by two motors. Motor 1 rotates all components of the sample-rotational system about the red axis. A rail system is fixed on the loading side of Motor 1 and is driven by Motor 2, which rotates the load components about the blue axis. is the Cartesian coordinate system defined on the loading side of Motor 1. When Motor 1 rotates, all the components loaded by Motor 1 rotate about the -axis. is the Cartesian coordinate system defined on the loading side of the rail system. When Motor 2 rotates, the sample rotates about the -axis. Motor 1 rotates from to with a step of . Motor 2 rotates from −150° to 180° with a step of 30°. Together, the two motors rotate to 60 angle combinations to cover the solid angle surrounding the sample. After parameter optimization and system calibration, the image reconstruction algorithm is used to obtain the 2-D light distribution.

Figure 1.

The architecture of the SIS hyperspectral system, which is composed of a sample-rotational system and a snap-shot hyperspectral system. It captures the spectrum distribution over solid angle.

Snap-shot hyperspectral technique

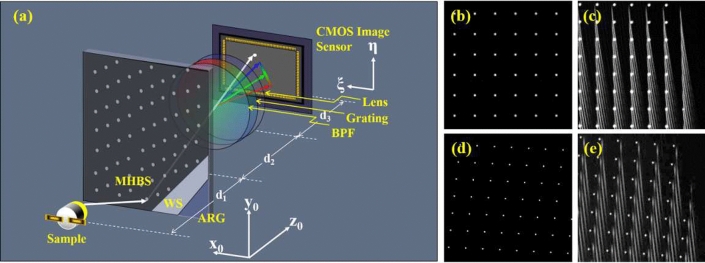

Figure 2a shows the schematic diagram of the snap-shot hyperspectral system. The camera consists of a CMOS image sensor and a lens, and it forms the image of the SSS on the CMOS image sensor. A grating mounted in front of the lens disperses the image. The SSS is used to suppress the SRSL noise and to acquire the spectrum by spatial multiplexing. The SSS is composed of a multi-hole black stick (MHBS), a white stick (WS), and an AR-coated glass (ARG) with size 601 (H) (W) (D) (mm). The WS scatters light with a Lambertian distribution. The band pass filter (BPF) in front of the grating limits the detection band to 390–710 nm, to remove the noise caused by field curvature aberration. Both the MHBS and the WS are made of polyvinyl chloride (PVC). The distance between neighboring holes is 10 mm, and the hole diameter is 0.8 mm. This decreases the scattering reflection of the screen to . The SRSL noise is suppressed accordingly. Figure 2b shows a design with a square array of holes. The total number of holes in the MHBS is 2,401. However, serious distortion aberration of the off-axis dispersion image bends the spectrum image. The neighboring first-order spectra overlap with each other, as shown in Fig. 2c. The spectrum detection of these off-axis holes thus fails. To mitigate the overlapping problem, we calculate the gradient of all the first-order spectra and increase the distance between the holes along the direction orthogonal to the gradient. Figure 2d shows the new design. Because of the increased distance, the total area covered by the holes is expanded. Some of the holes in the new design are located outside the SSS and are removed, so the total number of holes in the new design decreases to 2233. Figure 2e shows the dispersion image of the new design, in which the off-axis spectrum overlapping problem is resolved.

Figure 2.

(a) The setup of the snap-shot hyperspectral system; (b) the holes distributed in a square grid; (c) the dispersed light of the square-grid holes overlaps; (d) the improved hole distribution; (e) the dispersed light of the improved hole distribution is separated.
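As a rough cross-check of the screen geometry quoted above, the following Python sketch computes the fraction of a square grid cell occupied by one circular hole, using the stated 10 mm pitch and 0.8 mm hole diameter. It assumes the original uniform square grid of Fig. 2b (the redesigned layout of Fig. 2d has larger, non-uniform spacing), and the function name `open_area_fraction` is ours for illustration, not part of the original work.

```python
import math


def open_area_fraction(hole_diameter_mm, pitch_mm):
    """Fraction of one square grid cell that is occupied by a circular hole."""
    hole_area = math.pi * (hole_diameter_mm / 2.0) ** 2
    cell_area = pitch_mm ** 2
    return hole_area / cell_area


# Values quoted in the text: 0.8 mm holes on a 10 mm square grid (assumed uniform).
fraction = open_area_fraction(0.8, 10.0)

# 2,401 holes in a square array correspond to an integer number of holes per side.
holes_per_side = math.isqrt(2401)

print(f"open-area fraction: {fraction:.4%}")
print(f"holes per side: {holes_per_side}")
```

Under these assumptions the holes occupy roughly 0.5% of the screen area, and the 2,401 holes correspond to a 49 × 49 array.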

For the imaging system shown in Fig. 2a, the object distance is , and the image distance is . The light beam is dispersed by the grating with the dispersion angle . The corresponding shift of the dispersed point is given by Eq. (1):

| 1 |

In the linear space invariant region of the image system, the spectrum can be treated as a convolution of the image of the hole and the spectrum curve. Thus, the spectral resolvance (V) is limited by the hole size, and is written as

| 2 |

The designed spectral resolution (R) is calculated from the spectral resolvance

| 3 |

This shows that a smaller hole size and a longer object distance () lead to higher spectral resolvance. In this paper, the CMOS image sensor has 5 million pixels and the pixel pitch is m; the lens has a focal length of 5 mm; mm; mm. The image of the hole occupies pixels on the CMOS image sensor. The designed spectral resolution is 5 nm. The distance between the sample and the SSS is 500 mm, and the longest distance between the sample and the diagonal edge of the SSS is 612 mm. Therefore, the angular resolution is .

Spectrum retrieval process

is the hyperspectral data on the SSS, and it is retrieved from a 2D photo , where is the position of the sampling hole on the SSS, and is the coordinate on the CMOS image sensor. The point spread function of the optical system is sufficiently small compared with the size of the sampling hole, and the location of the dispersed image of the sampling hole located at is expressed as a quadratic function of . The relation between the 2D photo and the hyperspectral data can be expressed as

| 4 |

We pass laser beams with wavelengths of 457 nm, 532 nm, and 671 nm sequentially through a diffuser, and the scattered light illuminates the SSS. We capture the dispersed image of the SSS at each wavelength to get , , and . Because the three dispersed images are arrays of discrete points, it is easy to measure the position corresponding to each sampling hole located at . Using the three photos, we have six equations to solve for the coefficients, i.e., . Then, we can transform a captured photo into the hyperspectral raw data

| 5 |
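The three-wavelength calibration described above can be sketched as follows. The sketch assumes that, for one sampling hole, each sensor coordinate of the dispersed spot is a quadratic function of wavelength, so that the three laser lines give three equations per coordinate (six in total) and determine the six coefficients exactly. The pixel positions below are made-up illustrative numbers, and the helper names `fit_quadratic` and `evaluate` are hypothetical.

```python
def fit_quadratic(wavelengths, positions):
    """Return (c0, c1, c2) of p(w) = c0 + c1*w + c2*w**2 through three points.

    Uses the expanded Lagrange form, which is exact for three distinct wavelengths.
    """
    c0 = c1 = c2 = 0.0
    for i in range(3):
        j, k = (i + 1) % 3, (i + 2) % 3
        wi, wj, wk = wavelengths[i], wavelengths[j], wavelengths[k]
        weight = positions[i] / ((wi - wj) * (wi - wk))
        c2 += weight
        c1 -= weight * (wj + wk)
        c0 += weight * wj * wk
    return c0, c1, c2


def evaluate(coeffs, wavelength):
    """Evaluate the fitted quadratic at a given wavelength (nm)."""
    c0, c1, c2 = coeffs
    return c0 + c1 * wavelength + c2 * wavelength ** 2


# Laser wavelengths from the text (nm).
laser_nm = [457.0, 532.0, 671.0]

# Hypothetical measured spot positions (pixels) of ONE sampling hole.
spot_x = [812.0, 871.5, 983.0]
spot_y = [640.0, 641.2, 644.1]

coeff_x = fit_quadratic(laser_nm, spot_x)  # 3 equations -> 3 coefficients
coeff_y = fit_quadratic(laser_nm, spot_y)  # 3 equations -> 3 coefficients

# Predicted sensor position of the same hole at an intermediate wavelength.
print(evaluate(coeff_x, 550.0), evaluate(coeff_y, 550.0))
```

Repeating this fit for every sampling hole gives, under the stated assumption, the pixel path along which the spectrum of that hole can be read out of a captured photo.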

In the calibration process, we used a white-light LED as the standard light source. We took a picture and calculated the hyperspectral raw data using Eq. (5). Then we shifted a spectrometer (ISUZU OPTICS, ISM-Lux, corrected by ITRI-CMS) two-dimensionally to measure the spectrum distribution, i.e., . Thus, the calibration function was

| 6 |

Therefore, when the hyperspectral meter is applied to measure an arbitrary light source, the calibrated hyperspectral data are:

| 7 |
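A minimal sketch of this kind of calibration is given below. It assumes a per-band ratio form, in which the calibration function is the reference spectrum of the standard source divided by its hyperspectral raw data, and the calibrated result is the raw data of the sample multiplied by that function. This ratio form is our assumption for illustration; the spectra are made-up numbers and the function names are hypothetical.

```python
def calibration_function(reference_spectrum, raw_standard, floor=1e-12):
    """Per-band ratio between the reference spectrum and the raw data.

    `floor` guards against division by zero in bands with no raw signal.
    """
    return [ref / max(raw, floor)
            for ref, raw in zip(reference_spectrum, raw_standard)]


def apply_calibration(raw_sample, calibration):
    """Scale the raw data of an arbitrary source by the calibration function."""
    return [raw * c for raw, c in zip(raw_sample, calibration)]


# Hypothetical four-band example for one sampling hole.
reference = [0.2, 0.8, 1.0, 0.6]          # spectrometer reading of the standard LED
raw_standard = [10.0, 50.0, 40.0, 20.0]   # raw counts of the same LED
raw_sample = [5.0, 25.0, 60.0, 10.0]      # raw counts of a sample under test

calibration = calibration_function(reference, raw_standard)
calibrated = apply_calibration(raw_sample, calibration)
print(calibrated)
```

With this form, applying the calibration to the raw data of the standard source itself returns the reference spectrum, which is a convenient sanity check.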

Image reconstruction algorithm of the SISH system

Because the imaging system is static, the sample should be rotated in steps to fill all detection angles. The rotational angles of Motor 1() and Motor 2 () are expressed as functions of integer indices i and j respectively,

| 8 |

| 9 |

When the sample rotates, we need to map the detected hyperspectral distribution on the screen in Cartesian coordinates, i.e. , to the captured hyperspectral data at the sample-rotational angle in spherical coordinates, i.e. . The Cartesian coordinates attached to the screen, Motor 1, and Motor 2 (as well as the sample) are expressed as the span of the bases , , and , i.e., , , and , respectively. The origin of the coordinate frames is located at the rotational center of the sample. For the rotations of Motors 1 and 2, the rotational matrices are expressed as follows:

| 10 |

and

| 11 |

where the subscripts B0 and B1 indicate that they are used as the basis of the corresponding rotational matrices. Using Eqs. (10) and (11), the intensity distribution captured with the Cartesian coordinates attached to the screen is transferred to the Cartesian coordinates attached to the sample35

| 12 |

The Cartesian coordinates are mapped to spherical coordinates (Fig. 3) as follows:

| 13 |

| 14 |
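The chain of two rotations followed by a Cartesian-to-spherical conversion can be illustrated with the sketch below. The choice of rotation axes (Motor 1 about y, Motor 2 about z), the order of the rotations, and the convention that the zenith angle is measured from the z-axis are all assumptions made for this example and may differ from the conventions of the actual system.

```python
import math


def rot_y(angle):
    """Rotation matrix about the y-axis (assumed axis of Motor 1)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(angle):
    """Rotation matrix about the z-axis (assumed axis of Motor 2)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matvec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def rotate_to_sample_frame(v, alpha, beta):
    """Apply the Motor 1 rotation, then the Motor 2 rotation (assumed order)."""
    return matvec(rot_z(beta), matvec(rot_y(alpha), v))


def to_spherical(v):
    """Return (zenith, azimuth) in radians for a nonzero Cartesian vector."""
    x, y, z = v
    r = math.sqrt(x * x + y * y + z * z)
    zenith = math.acos(max(-1.0, min(1.0, z / r)))
    azimuth = math.atan2(y, x)
    return zenith, azimuth


# A screen point on the optical axis, with Motor 1 turned by 90 degrees.
zenith, azimuth = to_spherical(
    rotate_to_sample_frame([0.0, 0.0, 1.0], math.radians(90.0), 0.0))
print(math.degrees(zenith), math.degrees(azimuth))
```

In practice the same mapping would be applied to every sampling-hole position on the screen for each pair of motor angles.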

After the images for different rotational angles are captured at specific and , Eqs. (4)–(7) are used to calculate the hyperspectral data . Subsequently, Eqs. (8)–(14) are used to calculate the hyperspectral data , where and are the zenith angle and the azimuth angle of the spherical coordinates, respectively. For a forward-illumination sample, 30 pictures with various values of and are used to reconstruct the whole-field hyperspectral data. This is expressed as follows:

| 15 |

where is the number of repetitions in the superposition process. Equation (15) means that in all rotational angles are summed and then divided by . The calculation of uses the same simulation codes as . We replace with an all-ones matrix, in which every entry is equal to one. The all-ones matrix is mapped to the spherical coordinates using Eqs. (8)–(12) to produce , which is the area occupied by . Then we sum of all shots to calculate the number of repetitions

| 16 |
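The fusion step, in which overlapping shots are summed and divided by the number of repetitions, can be sketched as below. Each shot is represented here as a dictionary from an angular grid cell to a value, and the presence of a cell in a shot plays the role of the mapped all-ones matrix. This data layout and the function name `fuse_shots` are our own simplifications for illustration.

```python
def fuse_shots(shots):
    """Average overlapping shots cell by cell.

    Each shot maps (zenith_index, azimuth_index) -> value for the cells it covers.
    Returns the fused map and the per-cell repetition count.
    """
    total = {}
    count = {}
    for shot in shots:
        for cell, value in shot.items():
            total[cell] = total.get(cell, 0.0) + value
            count[cell] = count.get(cell, 0) + 1  # coverage mask summed over shots
    fused = {cell: total[cell] / count[cell] for cell in total}
    return fused, count


# Two hypothetical shots that overlap in one cell.
shot_a = {(0, 0): 2.0, (1, 0): 4.0}
shot_b = {(1, 0): 6.0, (2, 0): 1.0}

fused, repetitions = fuse_shots([shot_a, shot_b])
print(fused)
print(repetitions)
```

For hyperspectral data the value stored per cell would be a whole spectrum rather than a single number, but the counting logic stays the same.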

Table 1 lists the functions used for the hyperspectral retrieval and the SIS fusion. The hyperspectral retrieval process uses the captured photo to calculate , which is the hyperspectral data on the SSS. The SIS fusion process maps to spherical coordinates, taking into account the rotational angles of the sample and , and gets . Then all are fused to get .

Table 1.

The function list.

| Process | Function | Description |
|---|---|---|
| Hyperspectral retrieval | | Captured photo |
| | | Hyperspectral raw data of a standard light source |
| | | Hyperspectral raw data of a standard light source measured by a standard spectrometer |
| | | Calibration function |
| | | Hyperspectral data calculated from in Cartesian coordinate |
| SIS fusion | | The rotational angle of motor1 and motor2 |
| | | The rotational matrix of motor1 and motor2 |
| | | Hyperspectral data calculated from in spherical coordinate |
| | | The area occupied by |
| | | The number of repetitions |
| | | Whole field hyperspectral data |

System performance estimation methods

The distribution of the correlated color temperature (CCT) is key to photometric measurements, and it is convenient to show the whole measurement result using a 2D azimuthal equidistant projection. We therefore evaluate the system performance not only with the hyperspectral measurement but also with the CCT distribution. The normalized correlation coefficient (NCC) is used to quantify the system performance of the hyperspectral measurement66. The NCC is defined as

| 17 |

where and are the spectra measured by the SISH and the one-dimensional spectrum goniometer (ODSG), respectively. and are the mean values of and , respectively. m is the number of spectral bands, and its value is 55 in this paper. For the performance of the color distribution measurement, we need to calculate the color coordinates and the CCT distribution. According to McCamy's formula67,

| 18 |

where , and are the corresponding color coordinates in the CIE 1931 x–y chromaticity coordinate system68. The CCT is then calculated using the cubic polynomial approximation69

| 19 |
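For reference, the widely used published form of McCamy's approximation can be evaluated as in the sketch below, where n = (x − 0.3320)/(0.1858 − y) and CCT = 449n³ + 3525n² + 6823.3n + 5520.33. We assume this standard form here; it may be written with a different sign convention in other sources, which gives the same result. The function name is ours.

```python
def mccamy_cct(x, y):
    """Approximate CCT (kelvin) from CIE 1931 chromaticity coordinates (x, y)."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n ** 3 + 3525.0 * n ** 2 + 6823.3 * n + 5520.33


# Chromaticity of CIE standard illuminants D65 and A (CIE 1931, 2-degree observer).
cct_d65 = mccamy_cct(0.3127, 0.3290)
cct_a = mccamy_cct(0.4476, 0.4074)
print(round(cct_d65), round(cct_a))
```

The two standard illuminants give values close to their nominal color temperatures of about 6500 K and 2856 K, which is a quick way to check an implementation.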

Finally, we use the average difference (AD) and the difference standard deviation (DSD) to evaluate the CCT measurement performance. Unlike the unitless NCC, which evaluates the similarity of two curves, the AD and the DSD are in units of color temperature (K) and convey color accuracy and color precision, respectively. The AD is defined as

| 20 |

where N is the total number of measurements of . is the CCT distribution or the color coordinate distribution based on the SISH, and is the CCT distribution or the color coordinate distribution based on the ODSG. The DSD is defined as

| 21 |
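The three figures of merit used in this section can be sketched in a few lines of Python. The sketch assumes the zero-mean form of the NCC (the means are subtracted before correlating, as the definition of the mean values suggests) and a population standard deviation (division by N) for the DSD; both are our assumptions, and all numbers below are made up for illustration.

```python
import math


def ncc(a, b):
    """Zero-mean normalized correlation coefficient of two equal-length curves."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    numerator = sum(p * q for p, q in zip(da, db))
    denominator = math.sqrt(sum(p * p for p in da) * sum(q * q for q in db))
    return numerator / denominator


def average_difference(sish, odsg):
    """Mean of the point-by-point difference (accuracy)."""
    diffs = [s - o for s, o in zip(sish, odsg)]
    return sum(diffs) / len(diffs)


def difference_std(sish, odsg):
    """Population standard deviation of the point-by-point difference (precision)."""
    diffs = [s - o for s, o in zip(sish, odsg)]
    mean = sum(diffs) / len(diffs)
    return math.sqrt(sum((d - mean) ** 2 for d in diffs) / len(diffs))


# Hypothetical spectra: the second is a scaled copy, so the curve shapes match.
spectrum_sish = [0.10, 0.40, 1.00, 0.60, 0.20]
spectrum_odsg = [2.0 * v for v in spectrum_sish]

# Hypothetical CCT curves in kelvin.
cct_sish = [5000.0, 5100.0, 5200.0, 5300.0]
cct_odsg = [5020.0, 5080.0, 5230.0, 5270.0]

print(ncc(spectrum_sish, spectrum_odsg))
print(average_difference(cct_sish, cct_odsg), difference_std(cct_sish, cct_odsg))
```

Because the NCC ignores overall scale and offset, it measures only the similarity of curve shape, whereas the AD and the DSD retain the unit of the compared quantity.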

Experiment and performance evaluation

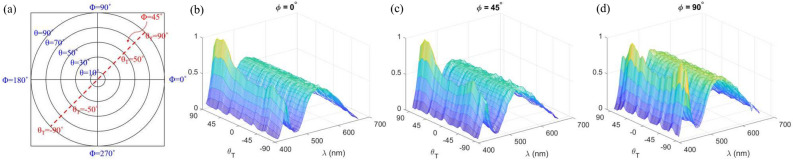

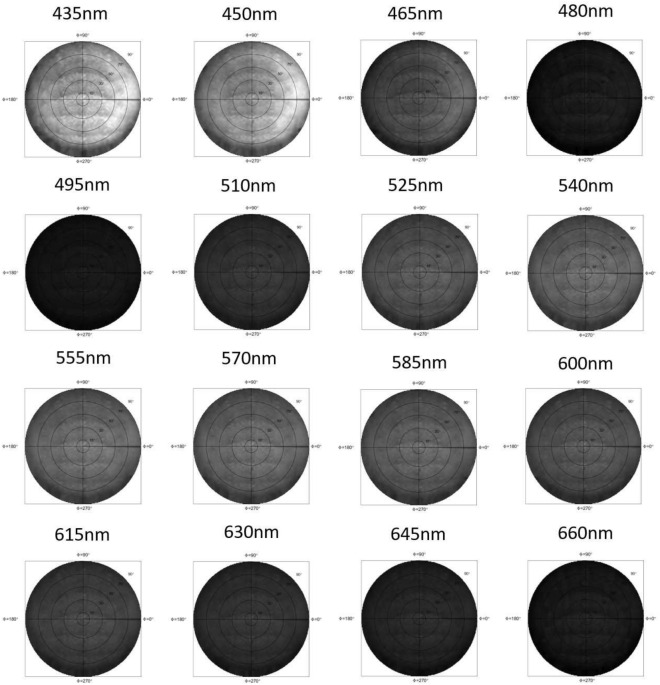

In the experimental process, we capture the spectrum distribution of different samples by using the SIS with the aid of the SSS, as shown in Fig. 1. At each rotational angle , a 2D photo is taken. Then, calibrated hyperspectral data are calculated by putting into Eqs. (4)–(7). They are then mapped to the spherical coordinates and expressed as by using Eqs. (8)–(12). We count the number of repetitions at each position, and then apply these variables to Eq. (15) to calculate . The calculation software is MATLAB. For clarity, we draw on a disc using the azimuthal equidistant projection, as shown in Fig. 3. The direction of is normal to the samples. In the experiment, since the measuring angular resolution is , we interpolate the measurement result onto a spherical-coordinate grid with the angular resolution . The calculation produces a total of spectrum curves. Figure 3b–d show the spectrum distributions , , and extracted from . For a specific azimuth angle and , the coordinate is used to combine the distributions of two specific azimuth angles, and , into a single axis. Thus, the angle denotes the spherical coordinate . Because we used LED light sources as the samples, there is almost no backward light, and we only need to discuss the intensity distribution with . In Fig. 3b–d, the sample is a lab-packaged LED with a hemisphere package. The color distribution of LEDs in the “Hemisphere” package should be radially symmetrical. However, we deliberately made a “Bias Hemisphere” package to change the color distribution. As a result, the spectrum distributions in the three figures are obviously different. Figure 4 shows the per-three-bands image of the “Bias Hemisphere” package. The blue band (435–460 nm) is obviously affected by the bias package, but the other bands appear to be unaffected. Because the blue light is emitted by a small die and passes through the phosphor, its smaller etendue makes it easier to be shaped by the hemispherical package lens. The other wavelength bands are re-emitted by the phosphor, and their larger etendue makes them hard to shape with the hemispherical package lens.

Figure 3.

(a) The coordinates in the azimuthal equidistant projection and the coordinate for the one-dimensional expression, and (b–d) the hyperspectral data of a bias hemisphere package LED measured by the SISH along the azimuth angle , , and , respectively.

Figure 4.

The per-three-band image shows the intensity distribution of different wavelength bands.
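The azimuthal equidistant projection used to draw the whole-field data on a disc maps each direction to a point whose radius is proportional to the zenith angle and whose polar angle equals the azimuth angle. A minimal sketch is given below; the choice of degrees as the radial unit and the function name are assumptions made for illustration.

```python
import math


def azimuthal_equidistant(zenith_deg, azimuth_deg):
    """Project a direction onto the disc: radius = zenith angle, angle = azimuth."""
    azimuth = math.radians(azimuth_deg)
    u = zenith_deg * math.cos(azimuth)
    v = zenith_deg * math.sin(azimuth)
    return u, v


# The sample normal maps to the disc centre; the horizon maps to radius 90.
centre = azimuthal_equidistant(0.0, 0.0)
edge = azimuthal_equidistant(90.0, 0.0)
oblique = azimuthal_equidistant(45.0, 90.0)
print(centre, edge, oblique)
```

Because the radius grows linearly with the zenith angle, equal angular steps from the sample normal appear as equally spaced rings on the disc.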

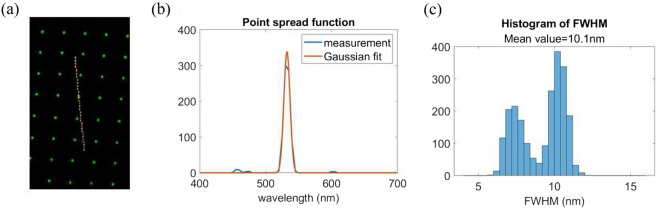

To evaluate the system performance, we used a laser beam with a wavelength of 532 nm to illuminate a diffuser and captured the image . A smoothing process was applied in which each pixel was averaged with its 8 surrounding pixels to get a new pixel value. The image after the smoothing process is shown in Fig. 5a. It shows that the hole distribution is sparse enough to separate the spectrum of each hole and ensures that the angular resolution follows the design value, i.e., to . However, because a continuous temporal spectrum makes the images of the hole at different wavelengths overlap each other, the spectral resolution should be evaluated by the full width at half maximum (FWHM) of the point spread function (PSF) of the wavelength. The spectrum distribution corresponding to a hole location is calculated by Eq. (5). Thus, the path of a spectrum distribution in the photo is illustrated with a yellow dashed line in the figure. The PSF of the wavelength can be measured along the spectrum path, as shown by the blue curve in Fig. 5b. We apply Gaussian fitting with fitting confidence and get the orange curve. The FWHM of the fitted Gaussian function is calculated accordingly. Figure 5c shows the statistics of the FWHM over all 2233 points. Because the field-curvature aberration is induced by the camera lens, the focusing depth changes with (x0, y0). This makes the FWHM vary from 5.6 to 12 nm, and the average FWHM is 10.1 nm. This is the spectral resolution of the experimental results, and it is about twice the theoretical spectral resolution calculated by Eq. (3).

Figure 5.

The measurement of the spectral resolution: (a) the captured image; (b) the point spread function of wavelength; (c) the statistical result of the FWHM over all 2233 points.
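Once a Gaussian has been fitted to the PSF along the spectrum path, its FWHM follows directly from the fitted standard deviation through FWHM = 2·sqrt(2·ln 2)·σ. The sketch below shows this conversion and checks it against the Gaussian itself; the fitting step is not reproduced here, and the function names are ours.

```python
import math

FWHM_FACTOR = 2.0 * math.sqrt(2.0 * math.log(2.0))  # about 2.3548


def gaussian(x, amplitude, center, sigma):
    """Gaussian profile used to model the PSF along the spectrum path."""
    return amplitude * math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))


def fwhm_from_sigma(sigma):
    """Full width at half maximum of a Gaussian with standard deviation sigma."""
    return FWHM_FACTOR * sigma


def sigma_from_fwhm(fwhm):
    """Inverse conversion, e.g. for the average FWHM reported in the text."""
    return fwhm / FWHM_FACTOR


# The reported average FWHM of 10.1 nm corresponds to this Gaussian sigma (nm).
sigma_nm = sigma_from_fwhm(10.1)
print(sigma_nm)

# Check: half a FWHM away from the centre the profile drops to half its peak.
half_value = gaussian(532.0 + 10.1 / 2.0, 1.0, 532.0, sigma_nm)
print(half_value)
```

For the reported average FWHM of 10.1 nm, the equivalent Gaussian standard deviation is about 4.3 nm.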

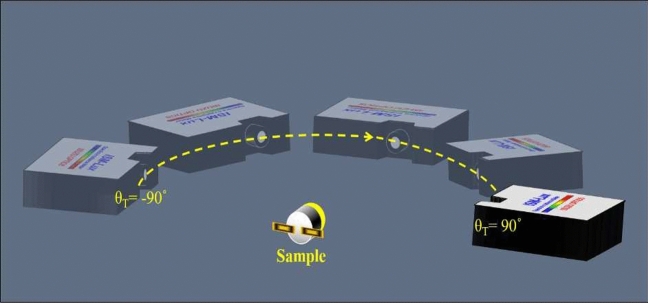

We used the spectrum meter (ISUZU OPTICS, ISM-Lux, corrected by ITRI-CMS) as a standard device to build an ODSG. It rotates around the sample to measure the reference spectrum of the sample every 2 degrees, as shown in Fig. 6.

Figure 6.

The one-dimensional spectrum goniometer (ODSG).

We tested four samples with the SISH system and the ODSG. The four samples are lab-packaged LEDs in the form of “Bias Hemisphere”, “Hemisphere extended height coating (Hemisphere EHC)”, “Conformal without lens” and “Cup”. The “Cup” and “Conformal without lens” packages are conventional techniques to achieve uniform CCT distribution67. The “Hemisphere EHC” was later proposed to achieve better uniformity of the CCT distribution70. However, to show the value of the SISH meter, which can be applied to check the quality of design or manufacture, we deliberately changed the package parameters. For the lab-packaged “Bias Hemisphere”, we offset the lens from the center to simulate poor package quality. For the lab-packaged “Hemisphere EHC”, we deliberately chose an extended height of 1 mm to produce poor CCT uniformity.

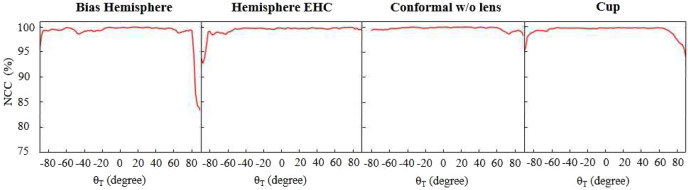

In the experiment, for were taken by the ODSG, and for were taken by the SISH meter. Then we calculated the NCC values between the measured results of the and the . Figure 7 shows that, for all types of packages, the NCC values are higher than in the detection region . The system performance gets worse at large because the intensity at large is weak, which induces noise and degrades the NCC values. In particular, for the “Bias Hemisphere” package in the region , the weak signal and dramatic spectrum variation lead to a drop in NCC. We also note that, in the package of conformal without lens, the signals with are so weak that the ODSG detects only noise. This makes the comparison values within these regions untrustworthy, so we removed all the system evaluation within this region.

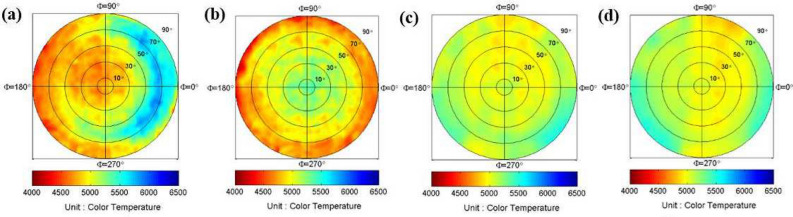

Figure 7.

The NCC value between the and the for .

Because a single CCT value is calculated from a 1D spectrum, the 3D hyperspectral data can be transformed into a 2D CCT distribution and thus be shown in a single 2D picture, which is sensitive to spectrum variation. Besides, color distribution is an important parameter for the lighting and display manufacturing industries. So, we used Eqs. (18) and (19) to calculate the spatial distribution of the CCT. Figure 8 shows the 2D azimuthal equidistant projection of the CCT, which was used to compare the CCT uniformity of different packages. Although the “Hemisphere EHC” was reported as having the best CCT uniformity71, Fig. 8 shows that “conformal w/o lens” and “cup” have better CCT uniformity. This is because the uniformity depends strongly on package parameters and package quality, and we deliberately changed the package parameters to degrade the CCT uniformity of “Bias Hemisphere” and “Hemisphere EHC”. The “Bias Hemisphere” package shows higher CCT on the right side, as shown in Fig. 8a. Since short wavelengths contribute to higher CCT, this picture is consistent with the wavelength distribution shown in Fig. 3b–d.

Figure 8.

The 2D azimuthal equidistant projection of CCT.

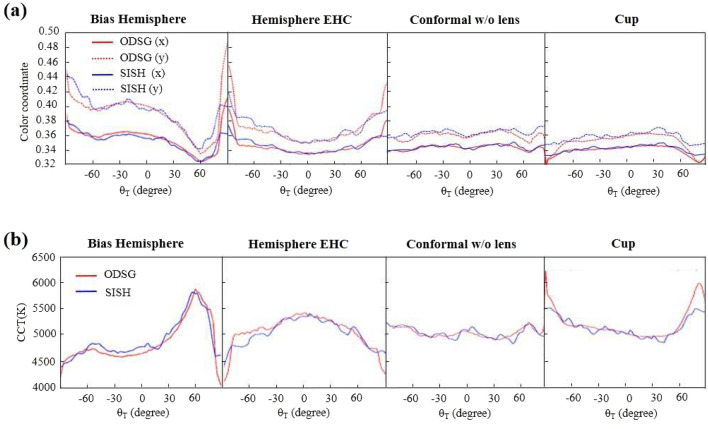

Figure 9a shows the color coordinates measured along the angle , where the red curves are measured by the ODSG and the blue curves are measured by the SISH. Most SISH curves coincide with the ODSG curves within the detection region , except for some regions within . The errors there are inaccuracies induced by the weak irradiance as gets larger. Figure 9b shows the CCT calculated from the color coordinates. Therefore, the mismatch between the ODSG and the SISH appears in regions similar to those of Fig. 9a. Table 2 shows the AD and the DSD calculated from the CCT curves within . The maximum values of |AD| and |DSD| are smaller than 69 K.

Figure 9.

The comparison of (a) color coordinates, and (b) CCT.

Table 2.

The AD and the DSD calculated from the CCT within . .

| | Bias hemisphere | Hemisphere EHC | Conformal w/o lens | Cup |
|---|---|---|---|---|
| AD | −68.6 K | 52.0 K | 5.1 K | 30.8 K |
| DSD | 65.7 K | 52.6 K | 54.8 K | 50.5 K |

Conclusion

The lighting and display manufacturing industries need high-throughput measurement of either color accuracy or spectrum distribution. The SIS meter was proposed to make high-speed whole-field light-distribution measurements. However, it suffered from stray-light noise and lacked the capability for spectrum measurement. We proposed a snapshot hyperspectral technology to build an SISH meter. The screen of the SIS meter was replaced by an SSS, whose 2233 holes sample the intensity distribution sparsely. This decreases the scattering reflection of the screen to , and the SRSL noise is suppressed accordingly. In the proposed snap-shot hyperspectral system, the designed spectral resolution is 5 nm, and the designed angular resolution is to .

In the spectrum retrieval process, laser beams with wavelengths of 457 nm, 532 nm, and 671 nm were used as light sources to get the dispersed images of the SSS, i.e., , , and . Then, the three images were used to solve the quadratic equations that retrieve the hyperspectral raw data from a captured photo .

In the experiment, the measured spectral resolution is 10.1 nm. We compared the hyperspectral data and the CCT distribution measured by the SISH with those measured by the ODSG. Four types of LED packages were used as samples. For all types of packages, the NCC values of the hyperspectral data are higher than in the detection region . The AD and DSD calculated from the CCT curves within have maximum absolute values below 69 K.

Acknowledgements

The authors acknowledge support from the Ministry of Science and Technology of Taiwan (Grant numbers MOST 111-2221-E-008-028-MY3, MOST 111-2221-E-008-100-, MOST 111-2221-E-008-033- and NSTC 112-2218-E-008-008-MBK).

Author contributions

Y.W.Y. conceived the experiments and served as the chief author. M.L. conducted the experiment and analyzed the results. T.H.Y. contributed to the calibration procedure, participated in authoring the paper and advised on the data analysis. C.H.C., P.D.H., C.S.W. and C.C.L. participated in authoring the paper and reconfirming the experimental results. T.X.L. contributed to paper modification. C.C.S. served as the team leader, advised on the technical direction, and submitted the paper. All authors reviewed the manuscript.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Acuna PC, et al. Impact of the geometrical and optical parameters on the performance of a cylindrical remote phosphor LED. IEEE Photon. J. 2015;7:1–14. doi: 10.1109/jphot.2015.2468679. [DOI] [Google Scholar]

- 2.Ryckaert J, et al. Selecting the optimal synthesis parameters of quantum dots for a hybrid remote phosphor white led for general lighting applications. Opt. Exp. 2017;25:A1009. doi: 10.1364/OE.25.0A1009. [DOI] [PubMed] [Google Scholar]

- 3.Chang S-I, et al. Microlens array diffuser for a light-emitting diode backlight system. Opt. Lett. 2006;31:3016–3018. doi: 10.1364/ol.31.003016. [DOI] [PubMed] [Google Scholar]

- 4.Lin RJ, Tsai M-S, Sun C-C. Novel optical lens design with a light scattering freeform inner surface for led down light illumination. Opt. Exp. 2015;23:16715. doi: 10.1364/oe.23.016715. [DOI] [PubMed] [Google Scholar]

- 5.min Zhu Z, Liu H, ming Chen S. The design of diffuse reflective free-form surface for indirect illumination with high efficiency and uniformity. IEEE Photon. J. 2015;7:1–10. doi: 10.1109/jphot.2015.2424402. [DOI] [Google Scholar]

- 6.Chen K-J, et al. An investigation of the optical analysis in white light-emitting diodes with conformal and remote phosphor structure. J. Display Technol. 2013;9:915–920. doi: 10.1109/jdt.2013.2272644. [DOI] [Google Scholar]

- 7.Krehel M, Grobe LO, Wittkopf S. A hybrid data-driven BSDF model to predict light transmission through complex fenestration systems including high incident directions. J. Facade Des. Eng. 2017;4:79–89. doi: 10.3233/fde-161191. [DOI] [Google Scholar]

- 8.Acuña P, et al. Power and photon budget of a remote phosphor led module. Opt. Exp. 2014;22:A1079–A1092. doi: 10.1364/oe.22.0a1079. [DOI] [PubMed] [Google Scholar]

- 9.Murray-Coleman J, Smith A. The automated measurement of BRDFS and their application to luminaire modeling. J. Illumin. Eng. Soc. 1990;19:87–99. doi: 10.1080/00994480.1990.10747944. [DOI] [Google Scholar]

- 10.Chien W-T, Sun C-C, Moreno I. Precise optical model of multi-chip white LEDs. Opt. Exp. 2007;15:7572–7577. doi: 10.1364/oe.15.007572.

- 11.Moreno I, Sun C-C. Modeling the radiation pattern of LEDs. Opt. Exp. 2008;16:1808–1819. doi: 10.1364/oe.16.001808.

- 12.Audenaert J, Durinck G, Leloup FB, Deconinck G, Hanselaer P. Simulating the spatial luminance distribution of planar light sources by sampling of ray files. Opt. Exp. 2013;21:24099–24111. doi: 10.1364/oe.21.024099.

- 13.Zhu Z, Wang Z, Zhang F. Design method of diffuse transmission free-form surface combined with collimation lens array. Light. Res. Technol. 2020;53:333–343. doi: 10.1177/1477153520958457.

- 14.Lin RJ, Sun C-C. Free-form thin lens design with light scattering surfaces for practical LED down light illumination. Opt. Eng. 2016;55:055102. doi: 10.1117/1.oe.55.5.055102.

- 15.Harrison VGW. A goniophotometer for gloss and colour measurements. J. Sci. Instrum. 1947;24:21–24. doi: 10.1088/0950-7671/24/1/303.

- 16.Jacobs VA, et al. Rayfiles including spectral and colorimetric information. Opt. Exp. 2015;23:A361–A370. doi: 10.1364/oe.23.00a361.

- 17.Hünerhoff D, Grusemann U, Höpe A. New robot-based gonio reflectometer for measuring spectral diffuse reflection. Metrologia. 2006;43:S11. doi: 10.1088/0026-1394/43/2/s03.

- 18.Obein G, Bousquet R, Nadal ME. New NIST reference gonio spectrometer. Opt. Diagn. (SPIE) 2005;5880:241–250. doi: 10.1117/12.621516.

- 19.Rabal AM, et al. Automatic gonio-spectrophotometer for the absolute measurement of the spectral BRDF at in-and out-of-plane and retroreflection geometries. Metrologia. 2012;49:213. doi: 10.1088/0026-1394/49/3/213.

- 20.Leloup FB, Forment S, Dutré P, Pointer MR, Hanselaer P. Design of an instrument for measuring the spectral bidirectional scatter distribution function. Appl. Opt. 2008;47:5454–5467. doi: 10.1364/ao.47.005454.

- 21.De Wasseige C, Defourny P. Retrieval of tropical forest structure characteristics from bi-directional reflectance of SPOT images. Remote Sens. Environ. 2002;83:362–375. doi: 10.1016/s0034-4257(02)00033-0.

- 22.Papas, M., de Mesa, K. & Jensen, H. W. A physically-based BSDF for modeling the appearance of paper. In Computer Graphics Forum. Vol. 33. 133–142. 10.1111/cgf.12420. (Wiley Online Library/Wiley, 2014).

- 23.Mazikowski A, Trojanowski M. Measurements of spectral spatial distribution of scattering materials for rear projection screens used in virtual reality systems. Metrol. Meas. Syst. 2013;20:443–452. doi: 10.2478/mms-2013-0038.

- 24.Wu H-HP, Lee Y-P, Chang S-H. Fast measurement of automotive headlamps based on high dynamic range imaging. Appl. Opt. 2012;51:6870–6880. doi: 10.1364/ao.51.006870.

- 25.Berrocal E, Sedarsky DL, Paciaroni ME, Meglinski IV, Linne MA. Laser light scattering in turbid media part I: Experimental and simulated results for the spatial intensity distribution. Opt. Exp. 2007;15:10649–10665. doi: 10.1364/oe.15.010649.

- 26.Tohsing K, Schrempf M, Riechelmann S, Schilke H, Seckmeyer G. Measuring high-resolution sky luminance distributions with a CCD camera. Appl. Opt. 2013;52:1564–1573. doi: 10.1364/ao.52.001564.

- 27.Leroux, T. Fast contrast vs. viewing angle measurements for LCDs. In Proceedings of the 13th International Display Research Conference (Eurodisplay 93). Vol. 447 (1993).

- 28.Ward, G. J. Measuring and modeling anisotropic reflection. In Proceedings of the 19th Annual Conference on Computer Graphics and Interactive Techniques. 265–272. 10.1145/133994.134078 (1992).

- 29.Kostal, H., Kreysar, D. & Rykowski, R. Application of imaging sphere for BSDF measurements of arbitrary materials. In Frontiers in Optics, FMJ6. 10.1364/fio.2008.fmj6 (Optica Publishing Group, 2008).

- 30.Becker ME. Evaluation and characterization of display reflectance. Displays. 1998;19:35–54. doi: 10.1016/s0141-9382(98)00029-8.

- 31.Becker ME. Display reflectance: Basics, measurement, and rating. J. Soc. Inf. Display. 2006;14:1003–1017. doi: 10.1889/1.2393025.

- 32.Hung C-H, Tien C-H. Phosphor-converted LED modeling by bidirectional photometric data. Opt. Exp. 2010;18:A261–A271. doi: 10.1364/oe.18.00a261.

- 33.Yu Y-W, et al. Bidirectional scattering distribution function by screen imaging synthesis. Opt. Exp. 2012;20:1268–1280. doi: 10.1364/oe.20.001268.

- 34.Yu Y-W, et al. Total transmittance measurement using an integrating sphere calibrated by a screen image synthesis system. Opt. Contin. 2022;1:1451–1457. doi: 10.1364/optcon.463039.

- 35.Yu Y-W, et al. Scatterometer and intensity distribution meter with screen image synthesis. IEEE Photon. J. 2020;12:1–12. doi: 10.1109/jphot.2020.3025485.

- 36.McClain, C. & Meister, G. Mission Requirements for Future Ocean-Colour Sensors. 10.25607/OBP-104 (2012).

- 37.Adam G, et al. Observations of the Einstein cross 2237+030 with the TIGER integral field spectrograph. Astron. Astrophys. 1989;208:L15–L18.

- 38.Bacon R, et al. 3D spectrography at high spatial resolution. I. Concept and realization of the integral field spectrograph TIGER. Astron. Astrophys. Suppl. 1995;113:347.

- 39.Bodkin, A. et al. Snapshot hyperspectral imaging: The hyperpixel array camera. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XV. Vol. 7334. 164–174. 10.1117/12.818929 (SPIE, 2009).

- 40.Cao X, Du H, Tong X, Dai Q, Lin S. A prism-mask system for multispectral video acquisition. IEEE Trans. Pattern Anal. Mach. Intell. 2011;33:2423–2435. doi: 10.1109/tpami.2011.80.

- 41.Gao L, Wang LV. A review of snapshot multidimensional optical imaging: Measuring photon tags in parallel. Phys. Rep. 2016;616:1–37. doi: 10.1016/j.physrep.2015.12.004.

- 42.Xu N, et al. Snapshot hyperspectral imaging based on equalization designed DOE. Opt. Exp. 2023;31:20489–20504. doi: 10.1364/oe.493498.

- 43.Xie H, et al. Dual camera snapshot hyperspectral imaging system via physics-informed learning. Opt. Lasers Eng. 2022;154:107023. doi: 10.1016/j.optlaseng.2022.107023.

- 44.Kim T, Lee KC, Baek N, Chae H, Lee SA. Aperture-encoded snapshot hyperspectral imaging with a lensless camera. APL Photon. 2023. doi: 10.1063/5.0150797.

- 45.Zhao R, Yang C, Smith RT, Gao L. Coded aperture snapshot spectral imaging fundus camera. Sci. Rep. 2023;13:12007. doi: 10.21203/rs.3.rs-2515559/v1.

- 46.Goossens T, Geelen B, Lambrechts A, Van Hoof C. Vignetted-aperture correction for spectral cameras with integrated thin-film Fabry–Perot filters. Appl. Opt. 2019;58:1789–1799. doi: 10.1364/ao.58.001789.

- 47.Lambrechts, A. et al. A CMOS-compatible, integrated approach to hyper-and multispectral imaging. In 2014 IEEE International Electron Devices Meeting. 10–5. 10.1109/iedm.2014.7047025 (IEEE, 2014).

- 48.Wang S-W, et al. 128 channels of integrated filter array rapidly fabricated by using the combinatorial deposition technique. Appl. Phys. B. 2007;88:281–284. doi: 10.1007/s00340-007-2726-3.

- 49.Walls K, et al. Narrowband multispectral filter set for visible band. Opt. Exp. 2012;20:21917–21923. doi: 10.1364/oe.20.021917.

- 50.Gupta N, Ashe PR, Tan S. Miniature snapshot multispectral imager. Opt. Eng. 2011;50:033203. doi: 10.1117/1.3552665.

- 51.Geelen, B., Tack, N. & Lambrechts, A. A snapshot multispectral imager with integrated tiled filters and optical duplication. In Advanced Fabrication Technologies for Micro/Nano Optics and Photonics VI. Vol. 8613. 173–185. 10.1117/12.2004072 (SPIE, 2013).

- 52.Geelen, B., Tack, N. & Lambrechts, A. A compact snapshot multispectral imager with a monolithically integrated per-pixel filter mosaic. In Advanced Fabrication Technologies for Micro/Nano Optics and Photonics VII. Vol. 8974. 80–87. 10.1117/12.2037607 (SPIE, 2014).

- 53.Ma S-H, Chen L-S, Huang W-C. Effects of volume scattering diffusers on the color variation of white light LEDs. J. Display Technol. 2014;11:13–21. doi: 10.1109/jdt.2014.2354434.

- 54.Zheng L, et al. Research on a camera-based microscopic imaging system to inspect the surface luminance of the micro-LED array. IEEE Access. 2018;6:51329–51336. doi: 10.1109/access.2018.2869778.

- 55.Liu Z, et al. Micro-light-emitting diodes with quantum dots in display technology. Light Sci. Appl. 2020;9:83. doi: 10.1038/s41377-020-0268-1.

- 56.Nguyen TAN, et al. Controlling the electron concentration for surface-enhanced Raman spectroscopy. ACS Photon. 2021;8:2410–2416. doi: 10.1021/acsphotonics.1c00611.

- 57.Li M, Jwo K-W, Yi W. A novel spectroscopy-based method using monopole antenna for measuring soil water content. Measurement. 2021;168:108459. doi: 10.1016/j.measurement.2020.108459.

- 58.Yang T-H, et al. Noncontact and instant detection of phosphor temperature in phosphor-converted white LEDs. Sci. Rep. 2018;8:296. doi: 10.1038/s41598-017-18686-z.

- 59.Boo H, et al. Metasurface wavefront control for high-performance user-natural augmented reality waveguide glasses. Sci. Rep. 2022;12:5832. doi: 10.1038/s41598-022-09680-1.

- 60.Emadi A, Wu H, de Graaf G, Wolffenbuttel R. Design and implementation of a sub-nm resolution microspectrometer based on a linear-variable optical filter. Opt. Exp. 2012;20:489–507. doi: 10.1364/OE.20.000489.

- 61.Bao J, Bawendi MG. A colloidal quantum dot spectrometer. Nature. 2015;523:67–70. doi: 10.1038/nature14576.

- 62.Wang Z, et al. Single-shot on-chip spectral sensors based on photonic crystal slabs. Nat. Commun. 2019;10:1020. doi: 10.1038/s41467-019-08994-5.

- 63.Cao X, et al. Computational snapshot multispectral cameras: Toward dynamic capture of the spectral world. IEEE Signal Process. Mag. 2016;33:95–108. doi: 10.1109/msp.2016.2582378.

- 64.Huang L, Luo R, Liu X, Hao X. Spectral imaging with deep learning. Light Sci. Appl. 2022;11:61. doi: 10.1038/s41377-022-00743-6.

- 65.Rao S, Huang Y, Cui K, Li Y. Anti-spoofing face recognition using a metasurface-based snapshot hyperspectral image sensor. Optica. 2022;9:1253–1259. doi: 10.1364/optica.469653.

- 66.Kudenov MW, Jungwirth ME, Dereniak EL, Gerhart GR. White-light Sagnac interferometer for snapshot multispectral imaging. Appl. Opt. 2010;49:4067–4076. doi: 10.1364/ao.49.004067.

- 67.McCamy CS. Correlated color temperature as an explicit function of chromaticity coordinates. Color Res. Appl. 1992;17:142–144. doi: 10.1002/col.5080170211.

- 68.CIE 015:2018 Colorimetry. 4th Ed. 10.25039/TR.015.2018.

- 69.Sun C-C, Lee T-X, Ma S-H, Lee Y-L, Huang S-M. Precise optical modeling for LED lighting verified by cross correlation in the midfield region. Opt. Lett. 2006;31:2193–2195. doi: 10.1364/ol.31.002193.

- 70.Collins III, W. D., Krames, M. R., Verhoeckx, G. J. & van Leth, N. J. M. Using electrophoresis to produce a conformally coated phosphor-converted light emitting semiconductor. In US Patent 6576488 (2003).

- 71.Sun C-C, et al. High uniformity in angular correlated-color-temperature distribution of white LEDs from 2800 K to 6500 K. Opt. Exp. 2012;20:6622–6630. doi: 10.1364/oe.20.006622.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.