Abstract

High resolution magnetic resonance (MR) images are desired in many clinical applications, yet acquiring such data with an adequate signal-to-noise ratio requires a long time, making them costly and susceptible to motion artifacts. A common way to partly achieve this goal is to acquire MR images with good in-plane resolution and poor through-plane resolution (i.e., large slice thickness). For such 2D imaging protocols, aliasing is also introduced in the through-plane direction, and these high-frequency artifacts cannot be removed by conventional interpolation. Super-resolution (SR) algorithms which can reduce aliasing artifacts and improve spatial resolution have previously been reported. State-of-the-art SR methods are mostly learning-based and require external training data consisting of paired low resolution (LR) and high resolution (HR) MR images. However, due to scanner limitations, such training data are often unavailable. This paper presents an anti-aliasing (AA) and self super-resolution (SSR) algorithm that needs no external training data. It takes advantage of the fact that the in-plane slices of those MR images contain high frequency information. Our algorithm consists of three steps: 1) We build a self AA (SAA) deep network followed by 2) an SSR deep network, both of which can be applied along different orientations within the original images, and 3) recombine the multiple orientations output from Steps 1 and 2 using Fourier burst accumulation. We perform our SAA+SSR algorithm on a diverse collection of MR data without modification or preprocessing other than N4 inhomogeneity correction, and demonstrate significant improvement compared to competing SSR methods.

Keywords: self super-resolution, deep network, MRI, CNN, aliasing

1. Introduction

High resolution (HR) magnetic resonance images (MRI) provide more anatomical details and enable more precise analyses, and are therefore highly desired in clinical and research applications [8]. However, in reality MR images are usually acquired with high in-plane resolution and lower through-plane resolution (slice thickness) to save acquisition time. Thus in these images, the high frequency information in the through-plane direction is missing. Some MRI protocols acquire 3D images as stacks of 2D images, which introduce aliasing that appears as high-frequency artifacts in the images. Interpolation is frequently used (both on the scanner and in postprocessing) to improve the digital resolution of acquired images, but this process does not restore any high frequency information. The partial volume artifacts that remain in these images make them appear blurry and degrade image analysis performance as well [2,8].

To address this problem, a number of super-resolution (SR) algorithms have been developed, including neighbor embedding regressions [11], random forests (RF) [9], and convolutional neural networks (CNNs) [5–7]. Generally, CNN methods need paired atlas images to learn the transformation from low resolution (LR) to high resolution (HR). They work well with natural images, but a lack of adequate training data (an LR/HR atlas) is a major problem when applying these approaches to MRI. There are two reasons for the lack of adequate training data. First, acquisition of HR data with isotropic voxels is time consuming—potentially taking hours, depending on the desired resolution—in order to also achieve adequate signal-to-noise ratio. Such long acquisitions are prohibitive from a subject comfort point of view and are also highly prone to motion artifacts. Second, MR images have no standardized tissue contrast, so application of an SR approach trained from a given atlas may not readily apply to a new subject from scan that has different contrast properties. It is therefore desirable that any SR approach for MRI not require the use of an external atlas.

To avoid the requirement of external training data, researchers have developed self super-resolution (SSR) methods [3,4,14,16]. SSR methods use the mapping between the high in-plane resolution images and simulated lower resolution images, to estimate high resolution through-plane images. Previous SSR methods [4,14,16] have achieved good results on medical images. Jog et al. [4] built an SSR framework that extracts training patches from the LR MRI and blurred LR2 images, trains a RF regressor, and applies the trained regressor to LR2 images in different directions. The resultant images are LR, but have low resolution in different directions. Thus, each of them contributes high frequency information to a different region of Fourier space. Finally, these images are combined through Fourier burst accumulation (FBA) [1] to obtain an HR image. We have previously reported [16] a method that replaces the RF framework of Jog et al. [4] with the state-of-art SR deep network EDSR [7]. This approach applies the trained network to the original LR image instead of the LR2 images as in Jog et al. [4]. Weigert et al. [14] reported an SSR method for 3D fluorescence microscopy images based on a U-net and showed improved segmentation. None of these previous works address anti-aliasing (AA).

In this paper, we report an approach for applying both anti-aliasing (AA) and super-resolution (SR) by building the first self AA (SAA) method in conjunction with an SSR deep network. We build upon our own [16] framework and the work of Jog et al. [4], with two major differences. First, the previous approaches constructed the LR2 data by applying a truncated sinc in -space simulating the incomplete signal in -space of LR images for 3D MRI. However, for 2D MRI, this process does not simulate aliasing artifacts and therefore cannot provide training data for removing aliasing. We therefore modify this filtering to suit our desired deep networks. Second, we build two deep networks, one for SAA and one for SSR.

2. Method

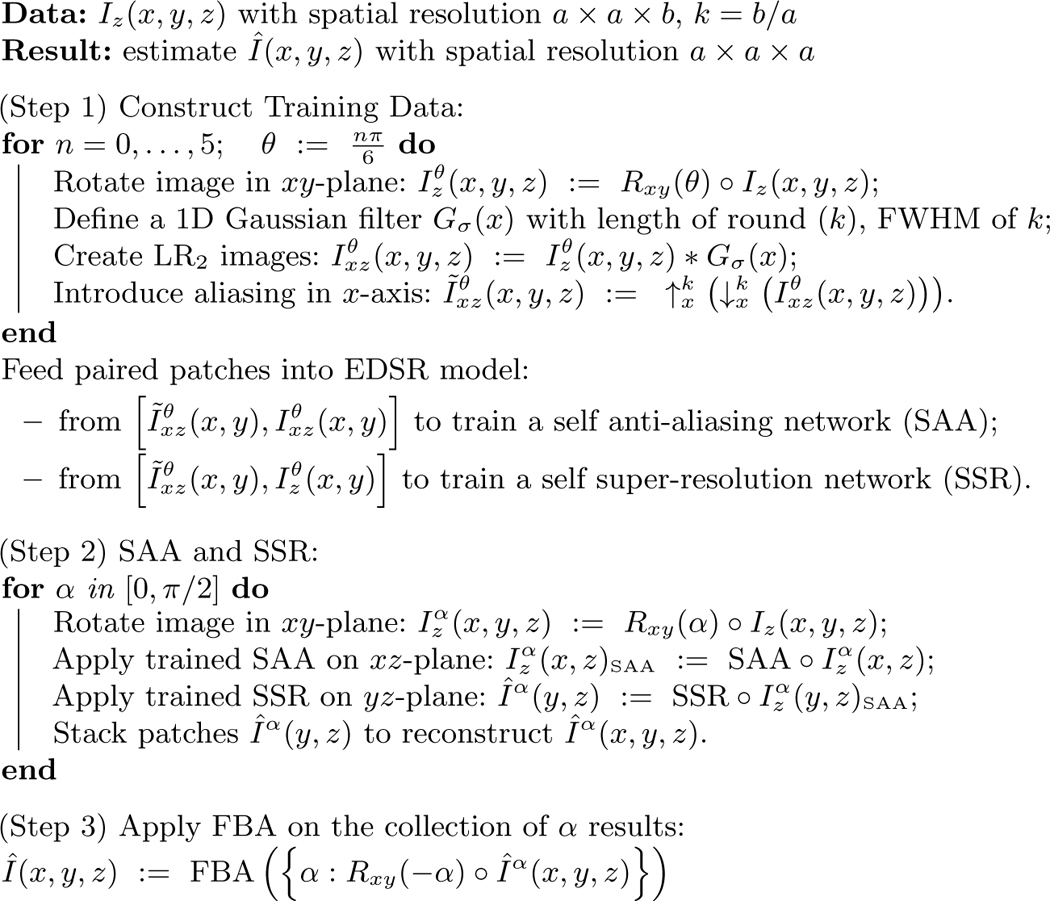

Our algorithm needs no preprocessing step other than N4 inhomogeneity correction [12] to make the image intensity homogeneous. The pseudo code is shown in Algorithm 1, and we refer to our algorithm as Synthetic Multi-Orientation Resolution Enhancement (SMORE). Consider an input LR image having slice thickness equal to the slice separation. The spatial resolution (approximate full-width at half-maximum) and voxel separation of this image is assumed to be where . Without loss of generality, we assume that the axial slices are HR slices. We model this image as a low-pass filtered and downsampled version of the HR image which has spatial resolution and voxel separation . Our first step is to apply cubic b-spline (BSP) interpolation to the input image yielding which has the same spatial resolution as the input but voxel separation . Aliasing exists in the direction in this image because the Nyquist criterion is not satisfied (unless the actual frequency content in the direction is very low, which we assume is not the case in normal anatomies.) We denote the ratio of the resolutions as , which need not be an integer. Similar to Jog et al. [4], the idea behind the algorithm is that 2D axial slices can be thought of as HR slices, whereas sagittal slices and coronal slices are LR slices. Blurring axial slices in the -direction produces with resolution of which we can use with as training data. Any trained system can then be applied to or to generate HR sagittal and coronal slices. We choose an state-of-art deep network model EDSR [7] as it won the Ntire 2017 super-resolution challenge [10]. We describe the steps of SMORE in details below.

Training Data Extraction:

To construct our training data, we desire aliased LR slices that accurately simulate the resolution and have aliasing in the -axis. For 2D MRI, we need to model the slice selection procedure, thus we use a 1D Gaussian filter in the image domain with a length round() and full-width at half-maximum (FWHM) of . The filtered image has the desired LR components without aliasing. To introduce aliasing, the image is downsampled by factor of using linear interpolation to simulate the large slice thickness. We denote this image as . To complete the training pair we upsample this image by a factor using BSP interpolation to generate LR2 which can be represented as ,

Algorithm 1:

SMORE Pseudocode

|

but for brevity denoted as . To increase the training samples, we rotate in the -plane by and repeat this process to yield . In this paper we use six rotations where for , but this generalizes for any number and arrangement of rotations.

EDSR Model:

We train two networks, one for SAA and one for SSR. 1) To train the SAA network, patch pairs are extracted from axial slices in and (i.e., aliased LR2 and LR, respectively). We train a deep network SR model, EDSR, to remove this aliasing. We use small patches to enhance edges without structural specificity so that this network can better preserve pathology. Additionally, small patches allow for more training samples. 2) To train our SSR network, patch pairs are extracted from axial slices in and . These patch pairs train another EDSR model to learn how to remove aliasing and improve resolution. Although training needs to be done for every subject, we have found that fine-tuning a pre-trained model is accurate and fast. In practice, training the two models for one subject based on pre-trained models from an arbitrary data set takes less than 40 minutes in total for a Tesla K40 GPU.

Applying the Networks:

Our trained SSR network can be applied to LR coronal and sagittal slices of to remove aliasing and improve resolution. However, experimentally we discovered that if we apply our SSR network to patches of a sagittal slice , and subsequently reconstruct a 3D image, then the result only removes aliasing in sagittal slices. To address this, we apply our SAA network to coronal slices to remove aliasing there, and then apply our SSR network to sagittal slices. Subsequently, the aliasing in both the coronal and sagittal planes of our SMORE result are removed. We repeat this procedure by applying SAA in sagittal slices and then SSR to the coronal slices to produce another image. As long as SAA and SSR are applied to orthogonal image planes, we can do this for any rotation in the -plane. The list of SAA and SSR results are finally combined by taking the maximum value for each voxel in -space for all rotations . This is the variant of Fourier burst accumulation (FBA) [1], which assumes that high values in -space indicate signal while low values indicate blurring. Since aliasing artifacts appears as high values in -space, this assumption of FBA necessitates our SAA network. Our presented results use only two values, 0 and .

3. Experiments

Evaluation on simulated LR data:

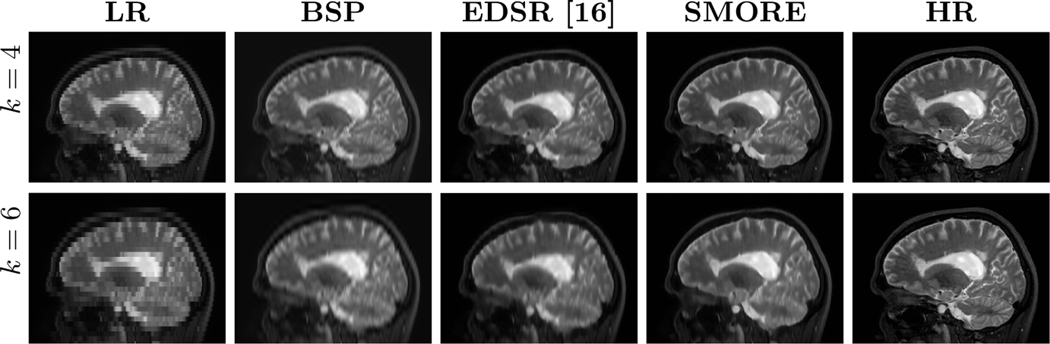

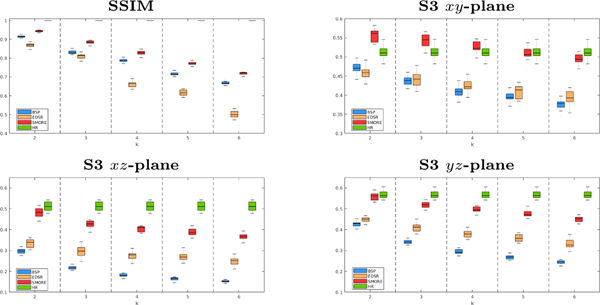

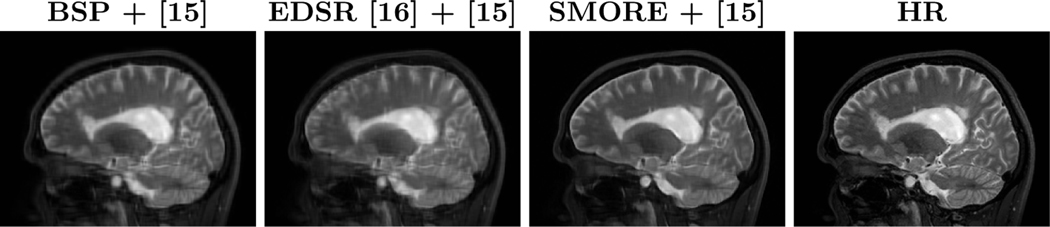

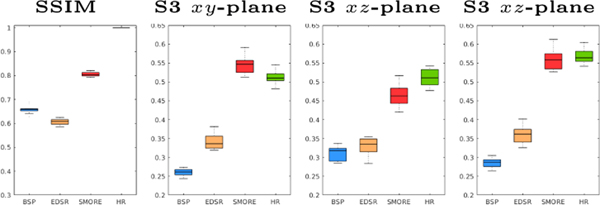

We compare SMORE to our previous work [16], which uses a different way of training data simulation and uses EDSR to do SSR on MRI without SAA, on T2-weighted images from 14 multiple sclerosis subjects imaged on a 3T Philips Achieva scanner with acquired resolution of mm. These images serve as our ground truth HR images, which are blurred and downsampled by factor in the -axis to simulate thick-slice MR images. The thick-slice LR MR images, and the results of cubic B-spline interpolation (BSP), our competing MR variant of EDSR [16], and our proposed SMORE algorithm are shown in Fig. 1 for and 6. Visually, SMORE has significantly better through-plane resolution than BSP and EDSR. For SMORE, the lesions near the ventricle are well preserved when . With , the large lesions are still well preserved but smaller lesions are not as well preserved. The Structural SIMilarity (SSIM) index is computed between each method and the mm ground truth. And the mean value masked over non-background voxels is shown in Fig. 2. We also compute the sharpness index S3 [13], a no-reference 2D image quality assessment, along each cardinal axis with the results also shown in Fig. 2. Our proposed algorithm, SMORE, significantly outperforms the competing methods.

Fig. 1.

Sagittal views of the mm LR image, the cubic B-spline (BSP) interpolated image, an MR variant of EDSR [16], our proposed method SMORE, and the HR ground truth image with lesions anterior and posterior of the ventricle.

Fig. 2.

For , we have evaluation of BSP (blue), an MR variant of EDSR [7] (yellow), our proposed method SMORE (red), and the ground truth (green).

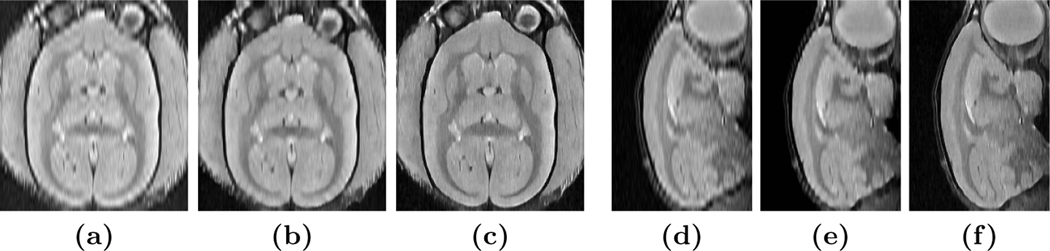

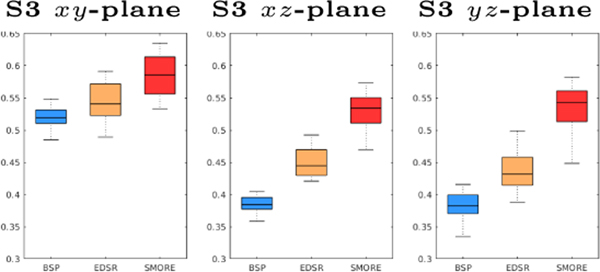

Evaluation on acquired LR data:

We applied BSP, our previous MR variant of EDSR [16], and our proposed SMORE method on eight PD-weighted MR images of marmosets. Each image has a resolution of mm (thus ), with HR in coronal plane. Results are shown in Fig. 3. We observe severe aliasing on the axial and sagittal plane of the input images, with an example shown in Fig. 3(a) and (d). Although there is no ground truth, visually SMORE removes the aliasing and gives a significantly sharper image (see Figs. 3(c) and (f)). To evaluate the sharpness, we use the S3 sharpness measure [13] on these results (see Fig. 4).

Fig. 3.

Experiment on mm LR marmoset PD MRI, showing axial views of (a) BSP interpolated image, (b) MR variant of EDSR [16], (c) SMORE, and sagittal views of (d) BSP, (e) MR variant of EDSR, and finally (f) SMORE.

Fig. 4.

S3 evaluation for the marmoset data, with BSP (blue), an MR variant of EDSR (yellow), and our proposed method SMORE (red).

Application to multi-view image reconstruction:

Woo et al. [15] presented a multi-view HR image reconstruction algorithm that reconstructs a single HR image from three orthogonally acquired LR images. The original algorithm used BSP interpolated LR images as input. We compare using BSP for this reconstruction with the MR variant of EDSR [16] and our proposed method SMORE. We use the same data as in Sec. ??, which have ground truth HR images. Three simulated LR images with resolution of , , and are generated for each data set. Thus = 6 and the input images are severely aliased. We apply each of BSP, EDSR, and SMORE to these three images and then apply our implementation of the reconstruction algorithm [15]. Example results for each of these three approaches are shown in Fig. 6. SSIM is computed for each reconstructed image to its mm ground truth HR image, with the mean of SSIM over non-background voxels being shown in Fig. 5. We also compute the sharpness index S3 along each cardinal axis with the results also shown in Fig. 5. Our proposed algorithm, SMORE, significantly outperforms the competing methods.

Fig. 6.

Sagittal views of the reconstructed image [15] using three inputs () from results of BSP, MR variant of EDSR [16], our proposed method SMORE, and the HR ground truth image.

Fig. 5.

SSIM and S3 for the reconstruction result using three inputs (). We have results from BSP (blue), an MR variant of EDSR (yellow), our proposed method SMORE (red), and the ground truth (green).

4. Conclusion and Discussion

This paper presents a self anti-aliasing (SAA) and self super-resolution (SSR) algorithm that can resolve high resolution information from MR images with thick slices and remove aliasing artifacts without any external training data. It needs no preprocessing step other than inhomogeneity correction like N4. The results are significantly better than competing SSR methods, and can be applied to multiple data sets without any modification or parameter tuning. Future work will include an evaluation of its impact on more applications such as skull stripping and lesion segmentation.

Acknowledgments

This work was supported by the NIH/NIBIB under grant R01-EB017743. Support was also provided by the National Multiple Sclerosis Society grant RG-1507-05243 and by the Intramural Research Program of the National Institute of Neurological Disorders and Stroke.

References

- 1.Delbracio M, Sapiro G: Hand-Held Video Deblurring Via Efficient Fourier Aggregation. IEEE Transactions on Computational Imaging 1(4), 270–283 (2015) [Google Scholar]

- 2.Greenspan H: Super-resolution in medical imaging. The Computer Journal 52(1), 43–63 (2008) [Google Scholar]

- 3.Huang JB, Singh A, Ahuja N: Single Image Super-Resolution From Transformed Self-Exemplars. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (June 2015) [Google Scholar]

- 4.Jog A, Carass A, Prince J: Self super-resolution for magnetic resonance images. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 553–560. Springer; (2016) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Kim J, Kwon Lee J, Lee K: Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1646–1654 (2016) [Google Scholar]

- 6.Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z, et al. : Photo-realistic single image super-resolution using a generative adversarial network. arXiv preprint arXiv:1609.04802 (2016)

- 7.Lim B, Son S, Kim H, Nah S, Lee K: Enhanced deep residual networks for single image super-resolution. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (2017) [Google Scholar]

- 8.Lüsebrink F, Wollrab A, Speck O: Cortical thickness determination of the human brain using high resolution 3 T and 7 T MRI data. Neuroimage 70, 122–131 (2013) [DOI] [PubMed] [Google Scholar]

- 9.Schulter S, Leistner C, Bischof H: Fast and accurate image upscaling with super-resolution forests. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3791–3799 (2015) [Google Scholar]

- 10.Timofte R, Agustsson E, Van Gool L, Yang MH, Zhang L, Lim B, Son S, Kim H, Nah S, Lee K, et al. : Ntire 2017 challenge on single image super-resolution: Methods and results. In: Computer Vision and Pattern Recognition Workshops (CVPRW), 2017 IEEE Conference on. pp. 1110–1121. IEEE; (2017) [Google Scholar]

- 11.Timofte R, De Smet V, Van Gool L: A+: Adjusted anchored neighborhood regression for fast super-resolution. In: Asian Conference on Computer Vision. pp. 111–126. Springer; (2014) [Google Scholar]

- 12.Tustison NJ, Avants BB, Cook PA, Zheng Y, Egan A, Yushkevich PA, Gee JC: N4itk: improved n3 bias correction. IEEE transactions on medical imaging 29(6), 1310–1320 (2010) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Vu CT, Chandler DM: S3: A spectral and spatial sharpness measure. In: Advances in Multimedia, 2009. MMEDIA’09. First International Conference on. pp. 37–43. IEEE; (2009) [Google Scholar]

- 14.Weigert M, Royer L, Jug F, Myers G: Isotropic reconstruction of 3d fluorescence microscopy images using convolutional neural networks. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 126–134. Springer; (2017) [Google Scholar]

- 15.Woo J, Murano EZ, Stone M, Prince JL: Reconstruction of high-resolution tongue volumes from MRI. IEEE Transactions on Biomedical Engineering 59(12), 3511–3524 (2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zhao C, Carass A, Dewey BE, Prince JL: Self super-resolution for magnetic resonance images using deep networks. IEEE International Symposium on Biomedical Imaging (ISBI) (2018) [Google Scholar]