Abstract

The COVID-19 pandemic influenced teaching and learning in higher education. The transformation towards digital education challenged faculty and students. This research examines the online learning readiness of students in a Higher Education Institution in Mexico. Specifically, we investigated how much prior digital skills, as well as use of the digital resources made available by the university, influenced their academic achievement in distance learning settings. Seven dimensions of online learning readiness were selected to evaluate the students' preparation for the online learning process. Questionnaires were applied before the start and at the end of digital courses. Follow-up tools were offered to support the students, and two groups were observed: users and non-users of the digital resources. Students who used the support developed significantly better critical thinking, problem-solving, and time organization skills than non-users. In addition, although the evaluations were not significantly different, the lowest averages were found in the non-user group. Our results indicate that prior training in the use of digital tools is essential for the success of online education; in the same way, timely follow-up with technical and pedagogical assistance is necessary for developing competencies. Training more autonomous and independent students capable of distance learning in a global world urgently demands experts in digital education. Educational institutions must embrace new technologies and teaching methods to meet the ever-changing needs of students. This research is expected to promote constructive discussions and facilitate informed decisions concerning the creation of future educational models.

Keywords: Digital competencies, Educational innovation, Higher education, Blended learning, Digital education

1. Introduction

Distance learning (often called remote learning) encompasses all forms of education where the learner is physically separated from the instructor or institution [1]. It includes traditional correspondence, video conferencing, satellite broadcasts, and other forms of technology-enabled communication [2]. Distance learning can be conducted synchronously or asynchronously and may or may not involve online technologies [3]. It is also related to online learning (or e-learning), a mode of education delivered through online platforms such as learning management systems, web conferencing tools, and educational apps [4,5]. In this sense, online learning can be perceived as an evolution of distance learning [6]. It allows students to access course materials, participate in discussions, and complete assignments from anywhere with an internet connection. Online learning can also be synchronous (students and instructors engage in real-time interactions), asynchronous (students access materials and complete assignments on their own time), or a mix of both [7,8].

Online learning in higher education (HE) levels has grown significantly in the last two decades. In the United States, in 2019, 17.5 % of all students enrolled in degree-granting postsecondary institutions were in exclusively distance education [9]. This statistic differs for other countries. For example, while for some countries such as Spain, Colombia, Sweden, and Germany, the percentage of students exclusively enrolled in distance HE was higher than 15 % (15.4–25 %), in other countries, such as Japan, Slovenia, Poland, Estonia, and Belgium, this figure was close to 0 % (0%–1%); at the same time, in Turkey, Chile, Italy, and Norway only 1.1–8.7 % of students were exclusively in distance-education programs [10]. In Mexico, according to the National Association of Universities and Institutions of HE [11], during the 2019–2020 academic year, 21.5 % of all university students were enrolled in a distance or non-schooled career. This data does not include students whose studies are carried out mainly in person but with one or more courses online.

The COVID-19 pandemic profoundly impacted online learning [12]. With the closure of universities and the need for social distancing, distance learning became the norm for many students and faculty, and digital technologies played a crucial role in enabling this transition [13,14]. The pandemic highlighted both the benefits and challenges of online learning [15]. On the one hand, it demonstrated the potential for technology to enable flexible and accessible learning opportunities for students who may not have been able to attend classes in person [16,17]. On the other hand, it also highlighted the importance of face-to-face interactions and the social and emotional aspects of learning that may be more difficult to replicate in online environments [18,19]. Overall, it accelerated the adoption of online learning and highlighted the importance of digital technologies in education, as well as the need for HE institutions to be prepared to respond quickly to changing circumstances and to ensure that all students have equitable access to technology and learning resources [20–22].

Online learning also demands greater self-regulation and more support from faculty than face-to-face courses [23]. Recent evaluations of online learning courses suggest that online learning methods are less effective when students lack sufficient preparation or knowledge of digital tools [24]. Similarly, students' learning outcomes in online courses have been related to several aspects, among which readiness for online work [25] is fundamental. Finding ways to prepare students to be successful in an online learning environment has been of great interest to those who design and manage online courses [23,26], particularly given the situation experienced in 2020 with the COVID-19 pandemic.

Online learning requires more independent and autonomous students, capable of organizing, communicating, and managing technology to carry out individual and collaborative work. According to Pearson's 2020 Global Learning Survey, 88 % of people believe that distance-learning experiences are here to stay [27]. Currently, students from all over the world study or have studied at least partially in some distance modality, so understanding the needs of students in these study methodologies will be essential.

2. Aim of this work

Aware of the need to understand the needs of students in distance or online modalities, this work reports the results of applying a test to the students of an HE institution in Mexico to evaluate online learning readiness in seven dimensions: organization of time, self-regulation of learning, interest and motivation, willingness to work at a distance, study habits, literacy skills, and technological skills. At the end of the test, the students receive, according to the results obtained, a series of didactic resources (videos, readings, infographics) to reinforce the skills that need improvement. Our research is based on two specific research objectives.

1. To know how students evaluate themselves concerning their preparation, knowledge, and abilities to take subjects in a distance modality, and

2. To assess if there is a change in students' self-evaluation after reviewing support resources focused on developing and strengthening their abilities to take subjects in a distance modality.

Thus, the objective of this work is to analyze the impact of previous and systematic digital skills gained through didactic resources on the student's academic achievement. Academics can use the results of this work in many areas of knowledge as a reference for evaluating distance learning readiness as a prerequisite for distance learning to face a new reality such as the one lived in 2020 because of COVID-19.

This manuscript comprises a literature review on online learning readiness, including a Theoretical framework and a Methodology section, where this work's population and sampling, research approach, and research design are presented. Results and Discussion of the results section follow separately, ending with a conclusions section including recommendations and future work.

3. Literature review

Online education provides access to learning experiences without geographical limits [28]. It improves educational opportunities for more people by incorporating technology with flexibility and the ability to promote varied interactions in an academic environment [29]. Designing online learning experiences in HE implies students and faculty have specific skills and abilities to interact in a virtual environment.

3.1. Competencies to interact in virtual environments

Some essential digital skills for HE include: A) the ability to navigate and use online learning management systems such as Blackboard, Canvas, and Moodle to visualize and understand content; B) the knowledge and use of software tools to create content, such as Adobe Creative Suite or Canva; C) the use of academic databases and scholarly journals to stay updated; D) the knowledge and use of R or SPSS to analyze data and draw conclusions; and finally, E) the use of digital tools to collaborate with peers and instructors, such as Google Docs or Microsoft Teams [30–32]. Incorporating digital skills into HE can enhance student engagement, provide new opportunities for learning and collaboration, and better prepare students for the modern workforce [33].

The proficiency in applying those abilities confidently, critically, and responsibly in a defined context (i.e., education) is a digital competence [34]. Since 2006, digital competence has been one of the eight necessary competencies in the EU for lifelong learning [35]. HE institutions can support the development of digital competencies by providing training and resources for students and faculty, incorporating technology into the curriculum, and promoting it as a core competence for all graduates [36,37].

Digital competencies are essential components of online learning readiness since online learning often requires learners to use technology and digital tools to complete coursework and interact with instructors and peers [38]. Studies indicate that students with low levels of online learning readiness show substantial deficiencies in their degree of participation and depth of learning, which lead to major academic failures, such as low grades, failed subjects, or even dropping out [39–42]. Therefore, in any online HE program, students' levels of preparation for online learning must be evaluated to provide them with guidance and timely help so that they can complete their online program [43].

3.2. Online learning readiness

Online learning readiness refers to an individual's ability and willingness to participate and succeed in an online learning environment. Warner et al. [44] first described the concept, focusing on three aspects: 1) students' preferences for the delivery format as opposed to the face-to-face modality, 2) students' confidence in the use of technology for learning, and 3) the ability to participate in self-directed learning activities. Since then, many other researchers have also studied this concept and defined the dimensions of online learning [45–47]. Some factors that may affect online learning readiness are the following [48]:

• To have equipment and software affordance (computer, reliable internet connection, and access to relevant software and applications).

• Self-discipline, time management, and working independently (setting goals, managing time effectively, and staying motivated).

• Basic digital literacy skills (navigating the internet, using email, and online learning platforms).

• To feel comfortable communicating and collaborating with instructors and peers only online.

• Support networks (including family and friends) and access to technical support and academic advising, which may better prepare students for online learning.

To assess online learning readiness, institutions may use online readiness assessments or self-assessment tools [5]. These tools can help individuals identify their strengths and weaknesses and provide guidance on improving their online learning readiness. Additionally, institutions can provide resources such as technical support, academic advising, and online learning orientation programs to support students in developing the skills and support network necessary for success in online learning [45].

3.3. Theoretical framework

With the sudden shift towards online learning, assessing the student's ability to engage effectively in this mode of instruction has become essential. To do this, one must understand the theories and concepts underpinning studies in online learning readiness. This evaluation is necessary to adapt to the new normal in education.

3.4. Assessment of online learning environment

In the ever-changing world of online education, assessing prospective students' readiness is crucial. Ensuring they have the necessary competencies is paramount for their success [49]. Tools such as the Online Learning Readiness Questionnaire (OLRQ) and the Online Learning Readiness Scale (OLRS) can be used to assess one's preparedness for online learning. The OLRQ was developed by Penn State University and is licensed under Creative Commons for public use [49]. This assessment evaluates crucial factors for successful online learning, such as technological proficiency, self-regulation, motivation, and learning aptitude. These factors are distributed in five topics (in the order each section appears on the OLRQ): goal-setting, self-determination for learning, self-discipline for learning, internet self-efficacy, and technology self-efficacy [49]. It is an essential and reliable tool for assessing students' readiness and competence in effectively participating in digital learning environments. The OLRS [45] focuses on five domains of online learning readiness: self-directed learning, motivation for learning, computer/Internet self-efficacy, learner control, and online communication self-efficacy. Scales such as the Tertiary Students' Readiness for Online Learning (TSROL) [50], the Online Learning Strategies Scale (OLSS) [51], and others [52,53] have also been developed. Using such scales can provide valuable insights into students' readiness levels for online learning, capturing various aspects of their readiness and identifying areas where additional support may be needed.

3.5. Digital skills and study habits

Digital literacy refers to the ability to use the internet to locate, assess, exchange, and produce information and content [54]. Digital literacy skills, such as information literacy and media literacy (such as reading and writing), are essential for succeeding in online learning [55]. Considering the COVID-19 pandemic and the resulting lockdowns, many higher education institutions are now prioritizing digital literacy readiness [54]. Moreover, a framework of 21st-century digital skills has been recently studied [56]. One such framework elaborated on seven core skills supported by Information and Communication Technologies (ICT): technical, information management, communication, collaboration, creativity, critical thinking, and problem-solving [57]. The conceptual foundation that guides research on online learning readiness also involves other dimensions, which may include social presence (examining students' ability to engage in collaborative learning, form meaningful connections, and actively participate in online discussions and group activities) [58], study habits (considering individual differences in learning styles and preferences) [59], and prior experience and support systems. Studies have found that student access to technology, along with their technology skills, lifestyle factors, teaching presence, cognitive presence, social presence, and study habits, determines online learning readiness [60].

3.5.1. Technology acceptance model

The Technology Acceptance Model (TAM) evaluates how people adopt technology based on usefulness and ease of use [61]. It can be used to understand student attitudes toward online learning and factors involved in adopting teleworking, such as training, trust, personal flexibility, and electronic communication [62]. The model has also been extended to consumer behavior patterns like online banking, e-shopping, and telemedicine [63–67]. Studies have shown that faculty members have concerns about student success, reputation, technical support, workload, and class enrollment in online courses [68]. E-learning self-efficacy and subjective norms are critical constructs in explaining the causal process of the TAM model [69]. Performance expectancy, effort expectancy, and social influence positively affect online learning readiness [70]. Research shows that online acceptance is crucial for improving people's behavioral intention toward technology [71]. Studies have shown that factors like perceived usefulness, ease of use, and emotional engagement positively affect students' willingness to learn online [45]. However, technological barriers and lack of institutional support can also affect this willingness [72].

3.5.2. Self-regulated learning

Self-regulated learning (SRL) refers to the feelings and actions through which learners set goals, manage time, sustain motivation, and use strategies to control their behaviors to achieve goals in a certain environment [73,74]. Self-regulated learning theories are conceptual frameworks for understanding the cognitive, motivational, and emotional aspects of learning. Several models focus on learners' ability to set goals, manage their time effectively, monitor their progress, and regulate their learning [75]. These theories provide valuable insights into the self-regulatory skills necessary for successful engagement in online learning [76]. During the COVID-19 outbreak, learners had to study online and were required to adapt to new learning environments. SRL and motivation are considered key determinants influencing academic achievement [76]. Understanding the roles of these two factors is essential for stakeholders in higher education since we have been exposed to fully online teaching and learning for almost two years [77]. Lin & Hsieh [78] found that successful online learners make their own decisions to meet their needs at their own pace and according to their existing knowledge and learning goals.

3.6. Supporting students in their online learning readiness

Taking courses specifically designed to enhance online learning readiness has been found to positively impact students' preparedness for online learning [79]. These courses should familiarize students with the technical tools, communication platforms, and learning strategies essential for successful online learning experiences. Research demonstrated that students who participated in an online learning orientation course reported lower anxiety levels [80]. Preparation in digital knowledge before taking online classes is also a critical point in determining the success of any online course, including the danger of deviations (tricks) in the digital expertise of “digital natives” due to digital growth based on electronic games or the increased use of the internet as a tool for services and information source [81].

These findings underscore the significance of targeted interventions in enhancing students' readiness and promoting a smoother transition to online learning environments. In addition, some researchers have found students' online learning readiness to correlate positively with their learning achievement [82]. Under these frameworks, we examined the effectiveness of preparatory courses in enhancing students' online learning readiness. We explored the relationships between the intervention, students' attitudes, and motivation, and how these affect the resulting readiness outcomes. We employed some of the constructs already mentioned in this theoretical framework and developed a questionnaire for understanding the potential benefits of preparatory courses in supporting students' transition and success in online learning environments.

4. Methodology

This project had two main objectives; the first one was to know how students self-evaluate regarding their preparation to take courses online, and the second one was to evaluate whether, over some time, this self-evaluation changed explicitly due to the impact of a strengthening strategy based on several educational resources.

4.1. Population and sampling

Convenience sampling was employed to select 196 students (98 men and 98 women), to whom the self-assessment instruments were applied. All students were in their first three semesters and were enrolled in online classes at the university's different campuses nationwide. The students who participated in the study were between 18 and 21 years old.

4.2. Ethical considerations

The complete self-evaluation and follow-up project considered ethical aspects: the confidentiality of the students' data and responses, and the absence of academic judgments and categorization based on the results. The center of the strategy is to support students in strengthening their skills and having a better college career. A group of pedagogical and learning psychology experts from the Digital Education area of Tecnologico de Monterrey was convened for the survey's design. After integrating the descriptions with their indicators, the validity and reliability of the survey were evaluated through a qualitative review based on expert judgment [83]. For this, a general review script was designed using Google Forms to record comments (data not shown). In the second evaluation, a differentiating question was added to identify the students who consulted the digital resources provided by the university.

The approval of the Institutional Research Ethics Committee of Tecnologico de Monterrey was sought. However, there was no number attached to the approval since ethical review and approval were waived for this study for the following reason: the data collected from the workshop was originally for the sole purpose of meeting the ViceRectory for Academic Affairs and Educational Innovation's objectives, i.e., providing effective, educative digital resources for the students of Tecnologico de Monterrey. The data were not collected for human subject research following the local legislation and institutional requirements. Additionally, the data were collected anonymously without identifiers. Written informed consent to participate in this study was not required from the participants, as the study involves only the analysis of non-identifiable private information in accordance with national legislation and institutional requirements.

4.3. Research approach

The study was conducted in 2020, at the beginning and end of the fall semester, with a quantitative approach and a descriptive scope incorporating a pre-experimental pre-post-test design. The students received an email invitation to carry out the self-assessment to determine their level of preparation for online learning. After answering the instrument, each student received a series of resources to reinforce those dimensions in which they had self-assessed at a low performance level (“I need to improve” or “moderately prepared”). The second application, made at the end of the semester, was similar to the first survey but included two additional questions regarding the resources’ usefulness and the final grade.

4.4. Instrument design

In the design of the self-assessment instrument, seven dimensions to evaluate the preparation of the student in the online learning process were selected.

(1) Technological ability: The ability to search for information, process, and communicate efficiently through any digital device.

(2) Reading and writing skills: Reading and writing comprehension skills to process information through digital devices.

(3) Study habits: Methods and strategies for studying learning contents.

(4) Willingness to work remotely: Intention to work remotely using different electronic means.

(5) Interest and motivation: Emotional state that drives the student to undertake and maintain a behavior with a specific objective. In this case, it is a predisposition to act in a particular direction.

(6) Self-regulation of learning: Ability to control the learning pace (self-management).

(7) Organization of time: Planning and organization of the time devoted to the study of the learning contents and development of activities (individual and collaborative).

These dimensions were considered after reviewing multiple self-assessment instruments and selecting those of interest for Tecnologico de Monterrey. The final version of the instrument entitled "Do you feel prepared?" integrated the seven dimensions mentioned in the experimental design. A Likert scale was employed using four levels of achievement: 1) I need to improve, 2) moderately prepared, 3) prepared, and 4) highly prepared.
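As an illustration only, the assignment rule described in the research approach (resources are sent for every dimension self-assessed at one of the two lowest levels) could be sketched as follows. The data layout, the function name `dimensions_to_reinforce`, and the sample student are assumptions made for this sketch; they are not taken from the tooling actually used in the study.

```python
# Hypothetical sketch: names and data layout are assumptions, not the study's tooling.

# Four achievement levels of the instrument, coded 1 (lowest) to 4 (highest).
LEVELS = {
    1: "I need to improve",
    2: "moderately prepared",
    3: "prepared",
    4: "highly prepared",
}

# The seven dimensions of the self-assessment instrument.
DIMENSIONS = [
    "Technological ability",
    "Reading and writing skills",
    "Study habits",
    "Willingness to work remotely",
    "Interest and motivation",
    "Self-regulation of learning",
    "Organization of time",
]


def dimensions_to_reinforce(self_assessment, threshold=2):
    """Return the dimensions rated at or below `threshold` (levels 1 and 2 by default)."""
    return [d for d in DIMENSIONS if self_assessment[d] <= threshold]


# Invented example student (not real data from the study).
student = {
    "Technological ability": 4,
    "Reading and writing skills": 3,
    "Study habits": 3,
    "Willingness to work remotely": 3,
    "Interest and motivation": 2,
    "Self-regulation of learning": 3,
    "Organization of time": 1,
}

for dimension in dimensions_to_reinforce(student):
    print(f"Send resources for: {dimension} ({LEVELS[student[dimension]]})")
```

In this sketch, a student who rated a dimension as "prepared" or "highly prepared" receives no resources for that dimension.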

4.5. Research design

The process and the experience the student would have to participate in this project were designed. The strategy focused on curating and designing educational resources that could support the student in improving their preparation for online study. It began with the search for open educational resources that would help develop and strengthen the dimensions of online learning readiness. Subsequently, some were chosen and shared with the students due to their quality, duration, and relevance for the HE student. In total, there were three resources for each dimension.

When the resources were ready, the students received an email explaining the project and inviting them to answer an instrument to detect deficiencies. This was done before the start of the Fall term. Afterward, students could study the provided resources independently and attend their digital classes regularly. At the end of the term, those students who chose to participate in the study answered the instrument again, including the two new questions regarding the usefulness of the resources and the final grade.

4.6. Data analysis and evaluation of the results

The instrument's internal consistency was evaluated using Cronbach's alpha coefficient, which is calculated from the variances of the item responses and of the total score [84]. Statistical analysis was performed using the MINITAB® software (Pennsylvania, United States) for data processing.
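For readers unfamiliar with the coefficient, a minimal sketch of the standard formula, alpha = k/(k − 1) × (1 − Σ item variances / variance of total scores), is given below, where k is the number of items (here, the seven dimensions). The function name, the use of sample variances, and the response matrix are assumptions for illustration; the study's values were obtained with MINITAB, whose exact settings are not reported here.

```python
from statistics import variance  # sample variance (n - 1 denominator)


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Invented responses (not study data): 6 respondents x 7 dimensions, levels 1-4.
sample_responses = [
    [4, 3, 3, 2, 2, 3, 3],
    [3, 3, 2, 2, 1, 2, 2],
    [4, 4, 4, 3, 3, 4, 3],
    [2, 3, 2, 1, 2, 2, 2],
    [3, 4, 3, 3, 2, 3, 4],
    [4, 4, 3, 4, 3, 3, 3],
]

alpha = cronbach_alpha(sample_responses)
print(f"Cronbach's alpha: {alpha:.3f}")
print("Acceptable (>= 0.70)" if alpha >= 0.70 else "Below the 0.70 threshold")
```

When every respondent gives the same score to all items, the items are perfectly consistent and the function returns 1.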

5. Results

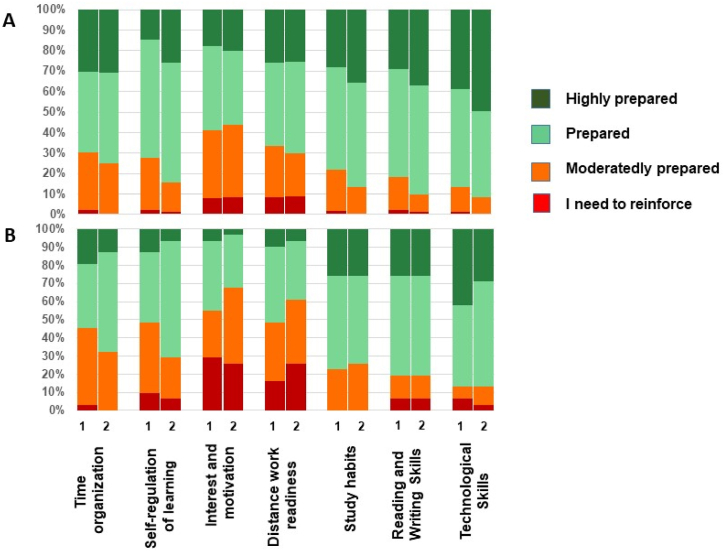

To find out how the students self-assessed their level of preparation to face a digital course, a descriptive statistical analysis was carried out using bar graphs (Fig. 1). Of the 196 students who participated in the study, 165 indicated that they consulted the institution's digital resources during the course, and 31 did not use them. The instrument's internal consistency was evaluated using Cronbach's alpha coefficient. In the first self-evaluation (before the beginning of the course), a Cronbach's alpha coefficient of 0.746 was obtained, and in the second (after the term ended), a coefficient of 0.769 was observed. According to the literature, the minimum acceptable value for the Cronbach coefficient is 0.70 [84], so we determined that the current version of the instrument has acceptable internal consistency.

Fig. 1.

Four Level Dimension Graph for the student's online learning readiness self-evaluation. A. Distribution of answers from students who consulted the digital resources provided by the University (n = 165). B. Distribution of answers from students who did not consult the resources (n = 31). The first column (1) in each pair reflects the answers of the first survey (at the beginning of the fall term) and the second (2) of the final survey (at the end of the fall term).

Fig. 1A shows the results of the 165 students who consulted the resources. For each of the seven dimensions, the students' initial (first column) and final (second column) self-evaluation results are shown. As can be seen, the dimensions in which the students considered themselves well prepared before starting the course were technological skills (38.79 % answered "highly prepared" and 47.88 % "prepared"), reading and writing habits (29.09 % "highly prepared" and 52.73 % "prepared"), and study habits (27.88 % "highly prepared" and 50.30 % "prepared"). The dimensions in which these students self-evaluated the lowest before the course were interest and motivation (7.88 % answered "I need to reinforce" and 33.33 % "moderately prepared"), willingness to work at a distance (8.48 % "I need to reinforce" and 24.85 % "moderately prepared"), and finally the organization of time (2.42 % "I need to reinforce" and 27.88 % "moderately prepared").

As can be seen, the dimensions in which the students considered themselves well or highly prepared after taking this course (second column in each pair) were technological skills (49.70 % answered "highly prepared" and 41.82 % "prepared"), reading and writing habits (36.97 % "highly prepared" and 53.33 % "prepared"), and study habits (36.97 % "highly prepared" and 53.33 % "prepared"). The dimensions in which these students self-evaluated the lowest after the course were interest and motivation (8.48 % answered "I need to reinforce" and 35.15 % "moderately prepared"), willingness to work at a distance (9.09 % "I need to reinforce" and 20.61 % "moderately prepared"), and finally the organization of time (2.42 % "I need to reinforce" and 27.88 % "moderately prepared").

By contrast, Fig. 1B shows the same survey results for the 31 students who did not consult the resources. As can be seen, the dimensions in which the students considered themselves well prepared before starting the course were very similar to those of the previous group: technological skills (41.94 % answered "highly prepared" and 45.16 % "prepared"), reading and writing habits (25.81 % "highly prepared" and 54.84 % "prepared"), and study habits (25.81 % "highly prepared" and 51.61 % "prepared"). The dimensions in which this second group of students self-evaluated the lowest before the course were interest and motivation (29.03 % answered "I need to reinforce" and 25.81 % "moderately prepared"), willingness to work at a distance (16.13 % "I need to reinforce" and 32.26 % "moderately prepared"), and finally self-regulation of learning (9.68 % "I need to reinforce" and 38.71 % "moderately prepared").

The dimensions in which the students considered themselves well prepared after the course were technological skills (41.94 % answered "highly prepared" and 45.16 % "prepared"), reading and writing habits (25.81 % "highly prepared" and 54.84 % "prepared"), and study habits (25.81 % "highly prepared" and 51.61 % "prepared"). The dimensions in which this second group of students self-evaluated the lowest after the course were interest and motivation (25.81 % answered "I need to reinforce" and 41.94 % "moderately prepared"), willingness to work at a distance (25.81 % "I need to reinforce" and 35.48 % "moderately prepared"), and finally the organization of time (32.26 % "moderately prepared").

To determine the effect of the course on the development of the seven dimensions studied and the impact of the digital resources, we conducted a finer-grained analysis of the data from the self-assessment survey. First, we added the percentages of "highly prepared" and "prepared" as a positive evaluation and the percentages of "I need to reinforce" and "moderately prepared" as a negative evaluation. Thus, we can compare each opinion and its variation before and after the course. This analysis allows us to evaluate the impact of the use of the digital resources made available by the institution. Table 1 shows the percentages of change in each of the opinions before and after taking the course for users and non-users of the resources. For the positive opinion, a negative percentage implies that the readiness level decreased after the course, and a positive variation indicates that the readiness level increased.
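The aggregation described above can be sketched with a short calculation. The example uses the percentages reported in the text for interest and motivation among non-users; the function names and the dictionary layout are assumptions introduced for this illustration, not the authors' procedure in MINITAB.

```python
# Illustrative sketch; helper names and data layout are assumptions.
NEGATIVE_LEVELS = ("I need to reinforce", "moderately prepared")
POSITIVE_LEVELS = ("prepared", "highly prepared")


def grouped_share(distribution, levels):
    """Sum the percentages of the given answer levels."""
    return sum(distribution.get(level, 0.0) for level in levels)


def variation(before, after, levels):
    """Change, in percentage points, of the grouped share between the two surveys."""
    return round(grouped_share(after, levels) - grouped_share(before, levels), 2)


# Percentages reported in the text for non-users, interest and motivation.
before = {"I need to reinforce": 29.03, "moderately prepared": 25.81}
after = {"I need to reinforce": 25.81, "moderately prepared": 41.94}

negative_change = variation(before, after, NEGATIVE_LEVELS)
print(f"Change in negative opinion: {negative_change:+.2f}")  # +12.91
```

Because the four answer levels sum to 100 %, the change in positive opinion is the same magnitude with the opposite sign, up to rounding of the reported percentages.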

Table 1.

Variation in the percentage of positive ("highly prepared" and "prepared") or negative ("I need to reinforce" and "moderately prepared") answers for each online learning readiness dimension before and after the fall term, for students who used digital resources and those who did not.

| Dimension | Users of Digital Resources: Negative Opinion | Users of Digital Resources: Positive Opinion | Non-Users of Digital Resources: Negative Opinion | Non-Users of Digital Resources: Positive Opinion |
|---|---|---|---|---|
| Technological Skills | −4.84 | +4.84 | +0.01 | −12.91 |
| Reading and Writing Skills | −8.49 | +8.49 | 0.00 | 0.00 |
| Study Habits | −8.48 | +8.48 | +3.23 | −3.23 |
| Willingness to Work Remotely | −3.63 | +3.63 | +12.90 | −12.91 |
| Interest and Motivation | +2.42 | −2.42 | +12.91 | −12.90 |
| Self-Regulation of Learning | −12.01 | +12.11 | −19.36 | +19.36 |
| Time Organization | −1.83 | +5.50 | −16.14 | +12.91 |

Table 1 shows that, among users of the resources, negative opinions on technological skills decreased by 4.84 %, a percentage that was added to positive opinions; for students who did not use digital resources, there was no significant variation in either opinion between before and after the course. Opinions on reading and writing skills behaved very similarly: negative opinions decreased by 8.49 % among users of the resources, and there was no variation among non-users. Regarding study habits, users of the resources showed a notable improvement (+8.49 %), whereas non-users felt that their deficiency in study habits increased (+3.23 %). Willingness to work remotely gained favorable opinions among resource users; in contrast, negative opinions increased by 12.90 % among those who did not use digital resources. Regarding interest and motivation, negative opinions increased in both groups: by only 2.42 % among users, but by 12.91 % among non-users of digital resources. Interestingly, positive opinions on self-regulation of learning increased in both groups: by 12.11 % among resource users and by 19.36 % among non-users, the largest increase in a favorable opinion. Similarly, positive opinions on time organization increased by 5.50 % and 12.91 % among users and non-users, respectively.

5.1. Statistical analyses

We were interested in analyzing whether there was a statistically significant difference between the results of group 1 (users of digital resources) and those of group 2 (non-users of digital resources) in the second self-assessment (after the course). First, a Kolmogorov-Smirnov normality test was performed [85], and the analysis showed that the data did not follow a normal distribution (data not shown). Subsequently, the Mann-Whitney test was chosen. This non-parametric test compares the medians of two independent samples; here, the two groups are defined by a single variable, namely whether or not the students used the educational resources [86].

Therefore, groups 1 (n1) and 2 (n2) were compared to determine in which dimensions there was a significant difference. The comparison used a 95 % confidence level, so dimensions with a p-value below 0.05 were considered statistically different (Table 2).
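The comparison described above can be sketched as follows. The source does not state which software was used, so this is a minimal pure-Python illustration of a two-sided Mann-Whitney U test using the normal approximation with tie and continuity corrections. The function name and the Likert-coded responses are hypothetical and are not data from the study.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U statistic of sample x, approximate p-value).
    """
    n1, n2 = len(x), len(y)
    combined = sorted(list(x) + list(y))

    # Assign each distinct value the average of the ranks it occupies.
    ranks = {}
    i = 0
    while i < len(combined):
        j = i
        while j < len(combined) and combined[j] == combined[i]:
            j += 1
        ranks[combined[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    rank_sum_x = sum(ranks[v] for v in x)
    u1 = rank_sum_x - n1 * (n1 + 1) / 2

    # Variance of U under the null hypothesis, corrected for ties.
    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    sigma = math.sqrt(max(variance, 0.0))
    if sigma == 0:
        return u1, 1.0  # all observations identical: no evidence of a difference

    mu = n1 * n2 / 2
    z = max(abs(u1 - mu) - 0.5, 0.0) / sigma  # continuity correction
    p_value = math.erfc(z / math.sqrt(2))  # two-sided tail probability
    return u1, p_value


# Hypothetical responses coded 1 ("I need to reinforce") to 4 ("highly prepared").
users = [4, 4, 3, 4, 3, 4, 2, 4, 3, 4]
non_users = [2, 3, 1, 2, 3, 2, 4, 1, 2, 3]

u_stat, p = mann_whitney_u(users, non_users)
print(f"U = {u_stat}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

For real analyses, a library routine such as `scipy.stats.mannwhitneyu` is usually preferable, since it can also compute exact p-values for small samples.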

Table 2.

Statistical analyses of the answers of each online learning readiness dimension before and after the fall semester from students who used digital resources and those who did not use them.

Mann-Whitney test. Difference: median of n1 (users) minus median of n2 (non-users). Confidence achieved: 95 %.

| Dimension | p-value |
|---|---|
| Time organization | 0.080 |
| Self-regulation of learning | 0.007 |
| Interest and motivation | 0.003 |
| Willingness to remote work | 0.001 |
| Study habits | 0.110 |
| Reading and writing skills | 0.103 |
| Technological skills | 0.037 |

With the consultation of resources as the grouping variable, the analysis identified a significant difference between the two groups in four of the seven dimensions: self-regulation of learning, interest and motivation, willingness to work at a distance, and technological skills.
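The decision rule applied to Table 2 can be reproduced in a few lines. The p-values below are copied from Table 2; the variable names are illustrative assumptions.

```python
ALPHA = 0.05  # significance threshold for a 95 % confidence level

# p-values reported in Table 2 (Mann-Whitney, users vs. non-users).
p_values = {
    "Time organization": 0.080,
    "Self-regulation of learning": 0.007,
    "Interest and motivation": 0.003,
    "Willingness to remote work": 0.001,
    "Study habits": 0.110,
    "Reading and writing skills": 0.103,
    "Technological skills": 0.037,
}

# Keep the dimensions whose p-value falls below the threshold.
significant = [name for name, p in p_values.items() if p < ALPHA]

for name in significant:
    print(f"{name}: p = {p_values[name]:.3f}")
```

Filtering at 0.05 leaves the same four dimensions named in the text; time organization, study habits, and reading and writing skills stay above the threshold.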

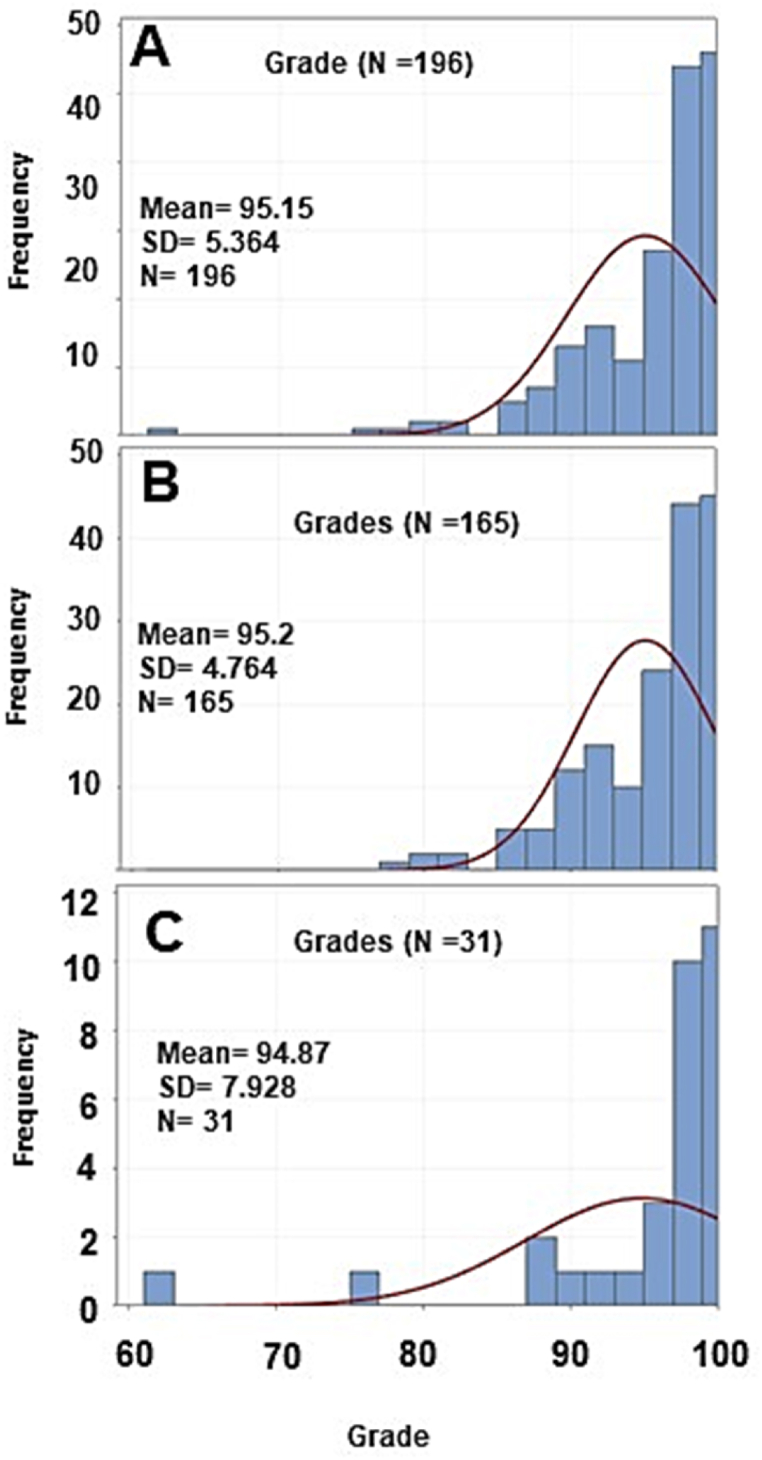

5.2. Performance indicators

After analyzing the instrument results, we investigated how much prior digital skills, as well as use of the digital resources made available by the university, influenced academic achievement. Fig. 2 shows the students' final grades for the fall semester of 2020. Fig. 2A shows the grades of the total sample (n = 196), with a mean of 95.15. The average grade of the 165 students who used digital resources was 95.2 (Fig. 2B), and the average of the 31 students who did not use the resources was 94.87 (Fig. 2C). However, the frequency curve for the non-user group clearly showed a distribution with lower grades.
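As a quick consistency check on these figures, the overall mean should equal the average of the two group means weighted by group size. The sketch below uses only the values reported above; the variable names are illustrative.

```python
# Group sizes and mean final grades reported in the text.
n_users, mean_users = 165, 95.2
n_non_users, mean_non_users = 31, 94.87

n_total = n_users + n_non_users

# Weighted average of the group means, using group sizes as weights.
pooled_mean = (n_users * mean_users + n_non_users * mean_non_users) / n_total

print(f"n = {n_total}, pooled mean = {pooled_mean:.2f}")
```

The group sizes add up to 196, and the weighted mean rounds to 95.15, which matches the mean reported for the total sample.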

Fig. 2.

Grades of students after a semester of digital courses. A) Total sample of students, B) Students that took the digital resources available from the institution, and C) Students that did not take the digital resources.

6. Discussion

To succeed in various fields, including higher education, possessing digital literacy has become increasingly necessary in today's digital age [87,88]. The ability to effectively use technology and digital tools has become crucial to success [80–91] and is applicable across diverse fields and disciplines. Being born between the mid-1990s and the mid-2000s (Z generation, often referred to as “digital natives”) does not automatically mean that one has the necessary digital skills to navigate and use technology effectively [92]. While the Z generation may be familiar with digital technology, they still need to develop these skills to use technology effectively and responsibly [93]. Moreover, not all members of the Z generation have equal access to digital technology and digital literacy training. Differences in socio-economic status, geographic location, and cultural background can affect access to digital technology and digital literacy resources. Therefore, it is essential to provide digital literacy training and resources to all learners, regardless of their background or level of digital experience [94].

During 2020 and 2021, the challenge of transforming most face-to-face courses into online courses due to the COVID-19 pandemic raised concern about how well Faculty and students were prepared to face a sudden shift to distance education. What tools do we have to face this challenge? The present manuscript focused on answering this question by analyzing online learning readiness and following up with digital tools to support the students who required them. Even today, all the factors studied in this work are necessary for successful online learning. Learners need the technological ability to use digital tools proficiently, access online resources, participate in online discussions, and complete assignments [95,96]. Online learning often involves reading and writing assignments and participating in online discussions. Learners need strong reading and writing skills to understand the course material and communicate their ideas effectively [97,98]. They must develop effective study habits, such as setting goals, managing time, staying organized, and being self-directed and motivated [99,100].

When comparing the results of the student users between the first and second evaluations, a substantial improvement was observed in six of the seven dimensions. The group obtained positive increases in the dimensions of time organization and self-regulation of learning. In contrast, Zinchenko et al. [101] found that students had more difficulty organizing their time during the pandemic than older adults. Older adults can independently adapt and find self-regulation strategies [102]. Thus, the improvement observed in this work may result from using the resources and from taking other subjects online. However, it could also result from maturing as an independent student by taking the entire semester online; this positively impacted their self-assessment and, more than that, their growth and development as independent individuals.

On the contrary, the only category with a slight decrease was the willingness to work remotely. This result may be a consequence of the fatigue of students who studied online throughout the semester. Other works have also encountered this [103]. It may be attributed to all the barriers faced during the pandemic, which also impacted social connections and healthy habits [104]. The willingness to study at a distance is essential to students’ success and satisfaction with online learning [71].

The students who did not consult the resources decreased their self-evaluation in the “highly prepared” category in five dimensions (organization of time, self-regulation of learning, interest and motivation, willingness to work remotely, and technological ability). They remained the same in study habits and reading and writing skills. Additionally, the students who did not consult the resources obtained negative results in motivation and in willingness to work remotely. When we compared the results of both groups, it was clear that while the group that did consult the tools improved their abilities to take online courses, the other group showed a decrease. Thus, the available tools may have had a positive effect. Support strategies for online learning readiness dimensions, such as self-regulated learning, have also had positive effects in other contexts [105].

To evaluate the impact that the use of resources could have on the students' academic performance, the final grade averages were compared; there was no significant difference between the students who used the resources and those who did not (data not shown). However, the lowest scores belonged to the second group. Studies have found a positive correlation between the use of digital resources and academic achievement [106,107], as well as a positive effect of digital resources on student engagement and motivation, which can, in turn, lead to improved academic performance [108]. For example, the study by Chen & Jang [109] found that digital storytelling increased student motivation and engagement, improving academic performance. However, it is essential to note that using digital resources alone is insufficient to improve academic performance. The effective use of digital resources requires careful planning, appropriate instructional design, and ongoing support and feedback [110]. The context must be considered as well, and the resources must be used in an effective and meaningful way.

Digital resources allow students to access learning materials more conveniently and flexibly, collaborate with their peers, and interact with course content. Offering digital resources to students is an effective way to enhance their skills in studying remotely and increase their achievements in this mode of learning. The aim is to support students in developing their competencies in distance learning and to promote their success in this mode. Therefore, a timely intervention by HE institutions to ensure the online preparation of their students can mean better academic results and be reflected in greater student satisfaction with their courses and better self-perception [111].

To tackle the issue of dropouts in higher education, digital resources can be a helpful tool [112]. By offering students flexible learning options, such as accessing course materials and participating in discussions remotely, universities can enhance learning accessibility and improve student retention rates. In addition, digital resources can cater to personalized learning, making education more tailored to the needs and preferences of individual students [113]. However, it's important to note that more than digital resources are needed to solve the complex issue of HE dropouts. Other factors, such as academic and social support, student motivation, and financial barriers, must also be addressed by universities to achieve long-lasting results [114].

7. Conclusion

The teaching-learning process is not static but dynamic and constantly evolving in response to changes in technology, society, and the environment. As technology advances and society changes, educators must adapt their teaching methods and learning materials to ensure students receive a relevant and practical education. For example, the widespread availability of digital tools and online resources has transformed how educators deliver instruction and students access and engage with learning materials. Similarly, societal and environmental changes such as the COVID-19 pandemic have necessitated a shift towards remote and online learning, presenting new challenges and opportunities for educators and students.

Universities can measure and improve digital skills among students by conducting digital skills assessments that evaluate students' skills and identify areas where they need improvement. Digital skills assessments can take many forms, such as online quizzes, self-assessments, or project-based assessments. Universities can also offer digital skills training (workshops, online courses, or peer-to-peer learning opportunities) to help students develop the skills they need to succeed in the digital age. Embedding digital skills into the curriculum ensures that HE students develop the skills they need to succeed in their fields. This can be done by providing access to digital tools and resources, such as online libraries, digital media production facilities, and software programs. However, while digital resources can contribute to developing students’ abilities to study in a distance environment and improve their academic success, universities must also consider other factors to reduce dropouts in distance settings.

This research has some limitations. Because all the courses that students took during the semester were online due to the pandemic, the students' self-assessment of their abilities to study online may have been influenced by a sudden saturation caused by the enforced online modality. Nevertheless, this type of study will be beneficial for the discussion of future educational designs.

Recommendations and future research avenues

To ensure that the teaching-learning process remains effective and relevant, HE institutions must stay up-to-date with the latest technological and societal trends and assess and adapt their instructional strategies and materials to meet the evolving needs of students. This requires a commitment to ongoing professional development and a willingness to experiment with new teaching methods and technologies to create an engaging and effective student learning experience.

One aspect of this work to consider for future research is defining an instrument or mechanism to evaluate the students' skills for taking their subjects online and comparing these results with the students' own self-evaluation. Another aspect to be assessed in future research is why students score lower in interest and motivation and in willingness to work remotely. A third aspect to consider is whether these results are consistent with those of students from other educational levels, such as baccalaureate and postgraduate.

Funding

No funding was received to produce this work.

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Data availability

The data associated with the study has not been deposited into a publicly available repository. Data will be made available on request.

CRediT authorship contribution statement

Maribell Reyes-Millán: Conceptualization. Myriam Villareal-Rodríguez: Conceptualization. M. Estela Murrieta-Flores: Formal analysis, Investigation, Methodology, Writing – original draft. Ligia Bedolla-Cornejo: Conceptualization. Patricia Vázquez-Villegas: Investigation, Writing – original draft, Writing – review & editing. Jorge Membrillo-Hernández: Investigation, Writing – review & editing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

We thank Faculty and students who have made valuable contributions to this study. We acknowledge the financial support of the Writing Lab, Institute for the Future of Education, Tecnologico de Monterrey, Mexico.

References

- 1. Joaquin J.J.B., Biana H.T., Dacela M.A. The Philippine higher education sector in the time of COVID-19. Frontiers in Education. 2020;5 doi: 10.3389/feduc.2020.576371.

- 2. Pregowska A., Masztalerz K., Garlińska M., Osial M. A worldwide journey through distance education—from the post office to virtual, augmented and mixed realities, and education during the COVID-19 pandemic. Educ. Sci. 2021;11(3):118. doi: 10.3390/educsci11030118.

- 3. Kokorina L.V., Potreba N.A., Zharykova M.V., Horlova O.V. Distance learning tools for the development of foreign language communicative competence. Linguistics and Culture Review. 2021;5(S4):1016–1034. doi: 10.21744/lingcure.v5nS4.1738.

- 4. Badaru K.A., Adu E.O. Platformisation of education: an analysis of South African universities' learning management systems. Research in Social Sciences and Technology. 2022;7(2):66–86. doi: 10.46303/ressat.2022.10.

- 5. Veluvali P., Surisetti J. Learning management system for greater learner engagement in higher education—a review. Higher Education for the Future. 2022;9(1):107–121. doi: 10.1177/23476311211049855.

- 6. Coman C., Țîru L.G., Meseșan-Schmitz L., Stanciu C., Bularca M.C. Online teaching and learning in higher education during the coronavirus pandemic: students' perspective. Sustainability. 2020;12(24) doi: 10.3390/su122410367.

- 7. Fabriz S., Mendzheritskaya J., Stehle S. Impact of synchronous and asynchronous settings of online teaching and learning in higher education on students' learning experience during COVID-19. Front. Psychol. 2021;12 doi: 10.3389/fpsyg.2021.733554.

- 8. Mohammadi G. Teachers' CALL professional development in synchronous, asynchronous, and bichronous online learning through project-oriented tasks: developing CALL pedagogical knowledge. Journal of Computers in Education. 2023 doi: 10.1007/s40692-023-00260-4.

- 9. U.S. Department of Education. 2021. Digest of Education Statistics. National Center for Education Statistics. https://nces.ed.gov/programs/digest/d21/tables/dt21_311.15.asp

- 10. OECD. Organisation for Economic Co-operation and Development; 2021. The State of Higher Education: One Year into the COVID-19 Pandemic. https://www.oecd-ilibrary.org/education/the-state-of-higher-education_83c41957-en

- 11. ANUIES. 2020. Anuario estadístico 2019–2020. Estadísticas de la Educación Superior. http://www.anuies.mx/informacion-y-servicios/informacion-estadistica-de-educacion-superior/anuario-estadistico-de-educacion-superior

- 12. Xie X., Zang Z., Ponzoa J.M. The information impact of network media, the psychological reaction to the COVID-19 pandemic, and online knowledge acquisition: evidence from Chinese college students. Journal of Innovation & Knowledge. 2020;5(4):297–305. doi: 10.1016/j.jik.2020.10.005.

- 13. Al Lily A.E., Ismail A.F., Abunasser F.M., Alhajhoj Alqahtani R.H. Distance education as a response to pandemics: coronavirus and Arab culture. Technol. Soc. 2020;63 doi: 10.1016/j.techsoc.2020.101317.

- 14. Ferri F., Grifoni P., Guzzo T. Online learning and emergency remote teaching: opportunities and challenges in emergency situations. Societies. 2020;10(4):86. doi: 10.3390/soc10040086.

- 15. Maatuk A.M., Elberkawi E.K., Aljawarneh S., Rashaideh H., Alharbi H. The COVID-19 pandemic and E-learning: challenges and opportunities from the perspective of students and instructors. J. Comput. High Educ. 2022;34(1):21–38. doi: 10.1007/s12528-021-09274-2.

- 16. Bozkurt A., Jung I., Xiao J., Vladimirschi V., Schuwer R., Egorov G., Lambert S., Al-Freih M., Pete J., Don Olcott J., Rodes V., Aranciaga I., Bali M., Alvarez A.J., Roberts J., Pazurek A., Raffaghelli J.E., Panagiotou N., Coëtlogon P. de.…Paskevicius M. A global outlook to the interruption of education due to COVID-19 pandemic: navigating in a time of uncertainty and crisis. Asian Journal of Distance Education. 2020;15(1) Article 1.

- 17. Chen J., Hughes S., Ranade N. Reimagining student-centered learning: accessible and inclusive syllabus design during and after the COVID-19 pandemic. Comput. Compos. 2023;67 doi: 10.1016/j.compcom.2023.102751.

- 18. Adnan M., Anwar K. Online learning amid the COVID-19 pandemic: students' perspectives. Journal of Pedagogical Sociology and Psychology. 2020;2(1):45–51. doi: 10.33902/JPSP.

- 19. Gómez-Rey P., Fernández-Navarro F., Vázquez-De Francisco M.J. Identifying key variables on the way to wellbeing in the transition from face-to-face to online higher education due to COVID-19: evidence from the Q-sort technique. Sustainability. 2021;13(11) doi: 10.3390/su13116112. Article 11.

- 20. Chung E., Noor N.M., Mathew V.N. Are you ready? An assessment of online learning readiness among university students. Int. J. Acad. Res. Prog. Educ. Dev. 2020;9(1):301–317.

- 21. Fernández-Batanero J.M., Montenegro-Rueda M., Fernández-Cerero J., Tadeu P. Online education in higher education: emerging solutions in crisis times. Heliyon. 2022;8(8) doi: 10.1016/j.heliyon.2022.e10139.

- 22. Hodges C.B., Moore S., Lockee B.B., Trust T., Bond M.A. 2020. The Difference between Emergency Remote Teaching and Online Learning. https://vtechworks.lib.vt.edu/handle/10919/104648

- 23. Liu J.C. Evaluating online learning orientation design with a readiness scale. Online Learn. 2019;23(4) doi: 10.24059/olj.v23i4.2078.

- 24. Yeşilyurt F. The learner readiness for online learning: scale development and university students' perceptions. International Online Journal of Education and Teaching. 2021;8(1):29–42.

- 25. Zou C., Li P., Jin L. Online college English education in Wuhan against the COVID-19 pandemic: student and teacher readiness, challenges and implications. PLoS One. 2021;16(10) doi: 10.1371/journal.pone.0258137.

- 26. Lieberman M. 2017. Welcome Aboard. Inside Higher Ed. https://www.insidehighered.com/digital-learning/article/2017/09/13/orientation-programs-set-online-learners-success

- 27. Pearson. 2022. The Pearson Global Learner Survey. https://plc.pearson.com/en-US/insights/pearson-global-learner-survey

- 28. Conrad D. Deep in the hearts of learners: insights into the nature of online community. J. Distance Educ. 2002;17(1):1–19.

- 29. Moore J.L., Dickson-Deane C., Galyen K. e-Learning, online learning, and distance learning environments: are they the same? Internet High Educ. 2011;14(2):129–135. doi: 10.1016/j.iheduc.2010.10.001.

- 30. Abduvakhidov A.M., Mannapova E.T., Akhmetshin E.M. Digital development of education and universities: global challenges of the digital economy. Int. J. InStruct. 2021;14(1):743–760. doi: 10.29333/iji.2021.14145a.

- 31. López-Meneses E., Sirignano F.M., Vázquez-Cano E., Ramírez-Hurtado J.M. University students' digital competence in three areas of the DigCom 2.1 model: a comparative study at three European universities. Australas. J. Educ. Technol. 2020;36(3) doi: 10.14742/ajet.5583. Article 3.

- 32. Rubach C., Lazarides R. Addressing 21st-century digital skills in schools – development and validation of an instrument to measure teachers' basic ICT competence beliefs. Comput. Hum. Behav. 2021;118 doi: 10.1016/j.chb.2020.106636.

- 33. D'Angelo C. In: Technology and the Curriculum: Summer 2018. Power Learning Solutions. Power R., editor. 2018. The impacts of technology integration. https://pressbooks.pub/techandcurriculum/chapter/engagement-and-success/

- 34. Brolpito A. European Training Foundation; 2018. Digital Skills and Competence, and Digital and Online Learning; p. 72. https://www.etf.europa.eu/en/publications-and-resources/publications/digital-skills-and-competence-and-digital-and-online

- 35. Svensson M., Baelo R. Teacher students' perceptions of their digital competence. Procedia - Social and Behavioral Sciences. 2015;180:1527–1534. doi: 10.1016/j.sbspro.2015.02.302.

- 36. Cabero-Almenara J., Guillén-Gámez F.D., Ruiz-Palmero J., Palacios-Rodríguez A. Digital competence of higher education professor according to DigCompEdu. Statistical research methods with ANOVA between fields of knowledge in different age ranges. Educ. Inf. Technol. 2021;26(4):4691–4708. doi: 10.1007/s10639-021-10476-5.

- 37. Gleason B., Manca S. Curriculum and instruction: pedagogical approaches to teaching and learning with Twitter in higher education. Horizon. 2019;28(1):1–8. doi: 10.1108/OTH-03-2019-0014.

- 38. Istifci I., Goksel N. The relationship between digital literacy skills and self-regulated learning skills of open education faculty students. English as a Foreign Language International Journal. 2022;2(1) doi: 10.56498/164212022.

- 39. Cigdem H., Ozturk M. Critical components of online learning readiness and their relationships with learner achievement. Turk. Online J. Dist. Educ. 2016;0(0) doi: 10.17718/tojde.09105.

- 40. Guglielmino L., Guglielmino P. In: Preparing Learners for E-Learning. Piskurich G.M., editor. John Wiley & Sons; 2004. Identifying learners who are ready for e-learning and supporting their success; pp. 18–33.

- 41. Kirmizi Ö. The influence of learner readiness on student satisfaction and academic achievement in an online program at higher education. TOJET - Turkish Online J. Educ. Technol. 2015;14(1) n/a.

- 42. Kruger-Ross M.J., Waters R.D. Predicting online learning success: applying the situational theory of publics to the virtual classroom. Comput. Educ. 2013;61:176–184. doi: 10.1016/j.compedu.2012.09.015.

- 43. Torun E.D. Online distance learning in higher education: E-learning readiness as a predictor of academic achievement. Open Prax. 2019;12(2):191. doi: 10.5944/openpraxis.12.2.1092.

- 44. Warner D., Christie G., Choy S. 1998. Readiness of VET Clients for Flexible Delivery Including On-Line Learning; p. 65. https://www.voced.edu.au/content/ngv:35337 Australian National Training Authority.

- 45. Hung M.-L., Chou C., Chen C.-H., Own Z.-Y. Learner readiness for online learning: scale development and student perceptions. Comput. Educ. 2010;55(3):1080–1090. doi: 10.1016/j.compedu.2010.05.004.

- 46. Smith P.J. Learning preferences and readiness for online learning. Educ. Psychol. 2005;25(1):3–12. doi: 10.1080/0144341042000294868.

- 47. Smith P.J., Murphy K.L., Mahoney S.E. Towards identifying factors underlying readiness for online learning: an exploratory study. Dist. Educ. 2003;24(1):57–67. doi: 10.1080/01587910303043.

- 48. Mosa A., Mahrin M. N. bin, Ibrrahim R. Technological aspects of E-learning readiness in higher education: a review of the literature. Comput. Inf. Sci. 2016;9(1) doi: 10.5539/cis.v9n1p113. Article 1.

- 49. Toland S. Lindenwood University; 2022. A Mixed-Method Study on the Online Learner Readiness Questionnaire Instrument at a Midwest University [Doctor of Education. https://www.proquest.com/openview/fdc6a6b605843a27a161e182c4c6b12a/1?pq-origsite=gscholar&cbl=18750&diss=y

- 50. Pillay H., Irving K., Tones M. Validation of the diagnostic tool for assessing Tertiary students' readiness for online learning. High Educ. Res. Dev. 2007;26(2):217–234. doi: 10.1080/07294360701310821.

- 51. Tsai M.-J. Seventh IEEE International Conference on Advanced Learning Technologies; 2007. A Pilot Study of the Development of Online Learning Strategies Scale (OLSS) ICALT 2007), 108–110.

- 52. Alem F., Plaisent M., Bernard P., Chitu O. Student online readiness assessment tools: a systematic review approach. Electron. J. e Learn. 2014;12(4) Article 4.

- 53. Gast B. 2018. Student Readiness in an Asynchronous Online Environment: A Correlational Study on Student Success [Doctor of Education. https://www.proquest.com/openview/4f626110b4d9dd57e0b7ecc9aba25156/1?pq-origsite=gscholar&cbl=18750 Edgewood College]

- 54. Udeogalanya V. Aligning digital literacy and student academic success: lessons learned from COVID-19 pandemic. Int. J. Hyg. Environ. Med. 2022;8(Issue 02) https://ijhem.com/details&cid=90

- 55. Park H., Kim H.S., Park H.W. A scientometric study of digital literacy, ICT literacy, information literacy, and media literacy. Journal of Data and Information Science. 2021;6(2):116–138. doi: 10.2478/jdis-2021-0001.

- 56. Belchior-Rocha H., Mauritti R., Monteiro J.P., Carneiro L. 21ST century skills and digital skills, are one and the same thing? EDULEARN20 Proceedings. 2020:2752–2758. doi: 10.21125/edulearn.2020.0831.

- 57. van Laar E., van Deursen A.J.A.M., van Dijk J.A.G.M., de Haan J. Determinants of 21st-century skills and 21st-century digital skills for workers: a systematic literature review. Sage Open. 2020;10(1) doi: 10.1177/2158244019900176.

- 58. Rochmawati R. 2023. pp. 835–847. (Social Presence in Online Learning: Meta Analysis).

- 59. Tabang M.P., Caballes D.G. Grade 10 students' online learning readiness and e-learning engagement in a science high school during pandemic. International Journal of Humanities and Education Development (IJHED) 2022;4(3) doi: 10.22161/jhed.4.3.28. Article 3.

- 60. Walia P., Tulsi P., Kaur A. IEEE Learning With MOOCS (LWMOOCS); 2019. Student Readiness for Online Learning in Relation to Gender and Stream of Study. 21–25.

- 61. Farahat T. Applying the technology acceptance model to online learning in the Egyptian universities. Procedia - Social and Behavioral Sciences. 2012;64:95–104. doi: 10.1016/j.sbspro.2012.11.012.

- 62. Pérez Pérez M., Martínez Sánchez A., De Luis Carnicer P., José Vela Jiménez M. A technology acceptance model of innovation adoption: the case of teleworking. Eur. J. Innovat. Manag. 2004;7(4):280–291. doi: 10.1108/14601060410565038.

- 63. Pikkarainen T., Pikkarainen K., Karjaluoto H., Pahnila S. Consumer acceptance of online banking: an extension of the technology acceptance model. Internet Res. 2004;14(3):224–235. doi: 10.1108/10662240410542652.

- 64. Ha S., Stoel L. Consumer e-shopping acceptance: antecedents in a technology acceptance model. J. Bus. Res. 2009;62(5):565–571. doi: 10.1016/j.jbusres.2008.06.016.

- 65.Hu P.J., Chau P.Y.K., Sheng O.R.L., Tam K.Y. Examining the technology acceptance model using physician acceptance of telemedicine technology. J. Manag. Inf. Syst. 1999;16(2):91–112. doi: 10.1080/07421222.1999.11518247. [DOI] [Google Scholar]

- 66.Kamal S.A., Shafiq M., Kakria P. Investigating acceptance of telemedicine services through an extended technology acceptance model (TAM). Technol. Soc. 2020;60 doi: 10.1016/j.techsoc.2019.101212. [DOI] [Google Scholar]

- 67.Koufaris M. Applying the technology acceptance model and flow theory to online consumer behavior. Inf. Syst. Res. 2002;13(2):205–223. doi: 10.1287/isre.13.2.205.83. [DOI] [Google Scholar]

- 68.Wingo N.P., Ivankova N.V., Moss J.A. Faculty perceptions about teaching online: exploring the literature using the technology acceptance model as an organizing framework. Online Learn. 2017;21(1):15–35. [Google Scholar]

- 69.Park S.Y. An analysis of the technology acceptance model in understanding university students' behavioral intention to use e-learning. Journal of Educational Technology & Society. 2009;12(3):150–162. [Google Scholar]

- 70.Pham L.T., Dau T.K.T. Online learning readiness and online learning system success in Vietnamese higher education. The International Journal of Information and Learning Technology. 2022;39(2):147–165. doi: 10.1108/IJILT-03-2021-0044. [DOI] [Google Scholar]

- 71.Abuhassna H., Al-Rahmi W.M., Yahya N., Zakaria M.A.Z.M., Kosnin A. Bt M., Darwish M. Development of a new model on utilizing online learning platforms to improve students' academic achievements and satisfaction. International Journal of Educational Technology in Higher Education. 2020;17(1):38. doi: 10.1186/s41239-020-00216-z. [DOI] [Google Scholar]

- 72.Liang L., Zhong Q., Zuo M., Luo H., Wang Z. What drives rural students' online learning continuance intention: an SEM approach. 2021 International Symposium on Educational Technology (ISET). 2021:112–116. [DOI] [Google Scholar]

- 73.Akhter H., Abdul Rahman A.A., Jafrin N., Mohammad Saif A.N., Esha B.H., Mostafa R. Investigating the barriers that intensify undergraduates' unwillingness to online learning during COVID-19: a study on public universities in a developing country. Cogent Education. 2022;9(1) doi: 10.1080/2331186X.2022.2028342. [DOI] [Google Scholar]

- 74.Suwanwong P., Jamiat N., Phairot E. 2022. Undergraduates' Self-Regulated Learning and Motivation during the Covid-19 Situation. 1–10. [Google Scholar]

- 75.Panadero E. A review of self-regulated learning: six models and four directions for research. Front. Psychol. 2017;8 doi: 10.3389/fpsyg.2017.00422. https://www.frontiersin.org/articles/10.3389/fpsyg.2017.00422 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Karatas K., Arpaci I. The role of self-directed learning, metacognition, and 21st century skills predicting the readiness for online learning. Contemporary Educational Technology. 2021;13(3) doi: 10.30935/cedtech/10786. [DOI] [Google Scholar]

- 77.Tang Y.M., Chen P.C., Law K.M.Y., Wu C.H., Lau Y., Guan J., He D., Ho G.T.S. Comparative analysis of Student's live online learning readiness during the coronavirus (COVID-19) pandemic in the higher education sector. Comput. Educ. 2021;168 doi: 10.1016/j.compedu.2021.104211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Lin B., Hsieh C. Web-based teaching and learner control: a research review. Comput. Educ. 2001;37(3):377–386. doi: 10.1016/S0360-1315(01)00060-4. [DOI] [Google Scholar]

- 79.Küsel J., Martin F., Markic S. University students' readiness for using digital media and online learning—comparison between Germany and the USA. Educ. Sci. 2020;10(11) doi: 10.3390/educsci10110313. Article 11. [DOI] [Google Scholar]

- 80.Abdous M. Influence of satisfaction and preparedness on online students' feelings of anxiety. Internet High Educ. 2019;41:34–44. doi: 10.1016/j.iheduc.2019.01.001. [DOI] [Google Scholar]

- 81.Ng W. Can we teach digital natives digital literacy? Comput. Educ. 2012;59(3):1065–1078. doi: 10.1016/j.compedu.2012.04.016. [DOI] [Google Scholar]

- 82.Ismail I., Syukri S., Sukmawati A. Scrutinizing university students' online learning readiness and learning achievement. Pegem Journal of Education and Instruction. 2022;12(3) doi: 10.47750/pegegog.12.03.29. Article 3. [DOI] [Google Scholar]

- 83.Salazar Gómez E., Tobón Tobón S., Juárez Hernández L.G. Diseño y validación de una rúbrica de evaluación de las competencias digitales desde la socioformación. Apuntes Universitarios. 2018;8(3):24–42. [Google Scholar]

- 84.Oviedo H.C., Campo-Arias A. Aproximación al uso del coeficiente alfa de Cronbach. Rev. Colomb. Psiquiatr. 2005;34(4):572. [Google Scholar]

- 85.Rubio Hurtado M.J., Berlanga Silvente V. Com aplicar les proves paramètriques bivariades t de Student i ANOVA en SPSS. Cas pràctic. REIRE. Rev. Innovació Recer. Educ. 2012;5(2):83–100. [Google Scholar]

- 86.Dietrichson A. 2019. Prueba de Shapiro-Wilks | Métodos Cuantitativos. https://bookdown.org/dietrichson/metodos-cuantitativos/test-de-normalidad.html [Google Scholar]

- 87.Alenezi M. Digital learning and digital institution in higher education. Educ. Sci. 2023;13(1):88. doi: 10.3390/educsci13010088. [DOI] [Google Scholar]

- 88.França R.P., Borges Monteiro A.C., Arthur R., Iano Y. In: Trends in Deep Learning Methodologies. Piuri V., Raj S., Genovese A., Srivastava R., editors. Academic Press; 2021. Chapter 3—an overview of deep learning in big data, image, and signal processing in the modern digital age; pp. 63–87. [DOI] [Google Scholar]

- 89.Coiro J. Toward a multifaceted heuristic of digital reading to inform assessment, research, practice, and policy. Read. Res. Q. 2021;56(1):9–31. doi: 10.1002/rrq.302. [DOI] [Google Scholar]

- 90.Falloon G. From digital literacy to digital competence: the teacher digital competency (TDC) framework. Educ. Technol. Res. Dev. 2020;68(5):2449–2472. doi: 10.1007/s11423-020-09767-4. [DOI] [Google Scholar]

- 91.Fischer G., Lundin J., Lindberg J.O. Rethinking and reinventing learning, education and collaboration in the digital age—from creating technologies to transforming cultures. The International Journal of Information and Learning Technology. 2020;37(5):241–252. doi: 10.1108/IJILT-04-2020-0051. [DOI] [Google Scholar]

- 92.Jiménez-Hernández D., González-Calatayud V., Torres-Soto A., Martínez Mayoral A., Morales J. Digital competence of future secondary school teachers: differences according to gender, age, and branch of knowledge. Sustainability. 2020;12(22):9473. doi: 10.3390/su12229473. [DOI] [Google Scholar]

- 93.Tejedor G., Segalàs J., Barrón Á., Fernández-Morilla M., Fuertes M., Ruiz-Morales J., Gutiérrez I., García-González E., Aramburuzabala P., Hernández À. Didactic strategies to promote competencies in sustainability. Sustainability. 2019;11(7):2086. doi: 10.3390/su11072086. [DOI] [Google Scholar]

- 94.van Rijmenam M. 2022. How to Ensure Digital Literacy Amongst Gen Alpha. Dr Mark Van Rijmenam, CSP - the Digital Speaker | Strategic Futurist. https://www.thedigitalspeaker.com/ensure-digital-literacy-amongst-gen-alpha/ [Google Scholar]

- 95.Fleming E.C., Robert J., Sparrow J., Wee J., Dudas P., Slattery M.J. A digital fluency framework to support 21st-century skills. Change. 2021;53(2):41–48. doi: 10.1080/00091383.2021.1883977. [DOI] [Google Scholar]

- 96.Kumalasari D., Purwantara S., Aw S., Supardi S., Hendrastomo G. Online learning implementation in the faculty of social sciences during the covid-19 pandemic. J. Soc. Stud. 2022;18(2) doi: 10.21831/jss.v18i2.46870. Article 2. [DOI] [Google Scholar]

- 97.Asad M.M., Hussain N., Wadho M., Khand Z.H., Churi P.P. Integration of e-learning technologies for interactive teaching and learning process: an empirical study on higher education institutes of Pakistan. J. Appl. Res. High Educ. 2020;13(3):649–663. doi: 10.1108/JARHE-04-2020-0103. [DOI] [Google Scholar]

- 98.Barus I.R.G., Simanjuntak M.B., Resmayasari I. Reading literacies through evieta-based learning material: students' perceptions (study case taken from vocational school – IPB university). Journal of Advanced English Studies. 2021;4(1) doi: 10.47354/jaes.v4i1.98. Article 1. [DOI] [Google Scholar]

- 99.Zhu M. Enhancing MOOC learners' skills for self-directed learning. Dist. Educ. 2021;42(3):441–460. doi: 10.1080/01587919.2021.1956302. [DOI] [Google Scholar]

- 100.Zhu M., Bonk C.J., Doo M.Y. Self-directed learning in MOOCs: exploring the relationships among motivation, self-monitoring, and self-management. Educ. Technol. Res. Dev. 2020;68(5):2073–2093. doi: 10.1007/s11423-020-09747-8. [DOI] [Google Scholar]

- 101.Zinchenko Y.P., Morosanova V., Kondratyuk N., Fomina T.G. Conscious self-regulation and self-organization of life during the COVID-19 pandemic. Psychology in Russia: State of the Art. 2020;13(4):168–182. doi: 10.11621/pir.2020.0411. [DOI] [Google Scholar]

- 102.Kooij D.T.A.M. The impact of the covid-19 pandemic on older workers: the role of self-regulation and organizations. Work, Aging and Retirement. 2020;6(4):233–237. doi: 10.1093/workar/waaa018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Gonzalez-Ramirez J., Mulqueen K., Zealand R., Silverstein S., Mulqueen C., BuShell S. Emergency online learning: college students' perceptions during the COVID-19 pandemic. Coll. Student J. 2021;55(1):29–46. [Google Scholar]

- 104.Mosleh S.M., Shudifat R.M., Dalky H.F., Almalik M.M., Alnajar M.K. Mental health, learning behaviour and perceived fatigue among university students during the COVID-19 outbreak: a cross-sectional multicentric study in the UAE. BMC Psychology. 2022;10(1):47. doi: 10.1186/s40359-022-00758-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Wong J., Baars M., Davis D., Van Der Zee T., Houben G.-J., Paas F. Supporting self-regulated learning in online learning environments and MOOCs: a systematic review. Int. J. Hum. Comput. Interact. 2019;35(4–5):356–373. doi: 10.1080/10447318.2018.1543084. [DOI] [Google Scholar]

- 106.Noor U., Younas M., Saleh Aldayel H., Menhas R., Qingyu X. Learning behavior, digital platforms for learning and its impact on university student's motivations and knowledge development. Front. Psychol. 2022;13 doi: 10.3389/fpsyg.2022.933974. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 107.Valverde-Berrocoso J., Acevedo-Borrega J., Cerezo-Pizarro M. Educational technology and student performance: a systematic review. Frontiers in Education. 2022;7 doi: 10.3389/feduc.2022.916502. [DOI] [Google Scholar]

- 108.Chiu T.K.F., Sun J.C.-Y., Ismailov M. Investigating the relationship of technology learning support to digital literacy from the perspective of self-determination theory. Educ. Psychol. 2022;42(10):1263–1282. doi: 10.1080/01443410.2022.2074966. [DOI] [Google Scholar]

- 109.Chen K.-C., Jang S.-J. Motivation in online learning: testing a model of self-determination theory. Comput. Hum. Behav. 2010;26(4):741–752. doi: 10.1016/j.chb.2010.01.011. [DOI] [Google Scholar]

- 110.Singh J., Steele K., Singh L. Combining the best of online and face-to-face learning: hybrid and blended learning approach for COVID-19, post vaccine, & post-pandemic world. J. Educ. Technol. Syst. 2021;50(2):140–171. doi: 10.1177/00472395211047865. [DOI] [Google Scholar]

- 111.Wei H.-C., Chou C. Online learning performance and satisfaction: do perceptions and readiness matter? Dist. Educ. 2020;41(1):48–69. doi: 10.1080/01587919.2020.1724768. [DOI] [Google Scholar]

- 112.Mubarak A.A., Cao H., Zhang W. Prediction of students' early dropout based on their interaction logs in online learning environment. Interact. Learn. Environ. 2022;30(8):1414–1433. doi: 10.1080/10494820.2020.1727529. [DOI] [Google Scholar]

- 113.Alamri H.A., Watson S., Watson W. Learning technology models that support personalization within blended learning environments in higher education. TechTrends. 2021;65(1):62–78. doi: 10.1007/s11528-020-00530-3. [DOI] [Google Scholar]

- 114.Samuel R., Burger K. Negative life events, self-efficacy, and social support: risk and protective factors for school dropout intentions and dropout. J. Educ. Psychol. 2020;112(5):973–986. doi: 10.1037/edu0000406. [DOI] [Google Scholar]

Data Availability Statement

The datasets generated and analyzed during the current study have not been deposited in a publicly available repository. They are available from the corresponding author upon reasonable request.