Summary

The need for efficient computational screening of molecular candidates that possess desired properties frequently arises in various scientific and engineering problems, including drug discovery and materials design. However, the enormous search space containing the candidates and the substantial computational cost of high-fidelity property prediction models make screening practically challenging. In this work, we propose a general framework for constructing and optimizing a high-throughput virtual screening (HTVS) pipeline that consists of multi-fidelity models. The central idea is to optimally allocate the computational resources to models with varying costs and accuracy to optimize the return on computational investment. Based on both simulated and real-world data, we demonstrate that the proposed optimal HTVS framework can significantly accelerate virtual screening without any degradation in terms of accuracy. Furthermore, it enables an adaptive operational strategy for HTVS, where one can trade accuracy for efficiency.

Keywords: high-throughput screening, HTS, high-throughput virtual screening pipeline, HTVS, optimal computational campaign, optimal decision-making, optimal screening, return on computational investment, ROCI

Graphical abstract

Highlights

-

•

The problem of optimal virtual screening is mathematically formalized

-

•

The proposed framework maximizes the ROCI

-

•

The proposed framework is generally applicable to any virtual screening task

The bigger picture

Screening large pools of molecular candidates to identify those with specific design criteria or targeted properties is demanding in various science and engineering domains. While a high-throughput virtual screening (HTVS) pipeline can provide efficient means to achieving this goal, its design and operation often rely on experts’ intuition, potentially resulting in suboptimal performance. In this paper, we fill this critical gap by presenting a systematic framework that can maximize the return on computational investment (ROCI) of such HTVS campaigns. Based on various scenarios, we empirically validate the proposed framework and demonstrate its potential to accelerate scientific discoveries through optimal computational campaigns, especially in the context of virtual screening.

The proposed framework can be used to design the optimal operational policy for a given high-throughput virtual screening campaign—e.g., for drug or material screening—to maximize the return on computational investment and accelerate scientific discoveries.

Introduction

In various real-world scientific and engineering applications, the need for screening a large set of candidates to prioritize a small subset that satisfies certain criteria or possesses targeted properties arises fairly frequently. For example, since the coronavirus disease 2019 (COVID-19) outbreak, there have been significant concurrent efforts among various groups of scientists to identify or develop drugs that can provide a potential cure for this extremely infectious disease. One such notable effort is IMPECCABLE (integrated modeling pipeline for COVID cure by assessing better leads)1 whose operational objective is to optimize the number of promising ligands that potentially lead to the successful discovery of drug molecules. To this aim, IMPECCABLE utilized deep learning-based surrogates for predicting docking scores and multi-scale biophysics-based computational models for computing docking poses of compounds. Built on the strength of massive parallelism on exascale computing platforms combined with RADICAL-Cybertools (RCT) managing heterogeneous workflows, IMPECCABLE identified promising leads targeted at COVID-19.

Considering that severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) responsible for COVID-19 is known to rapidly mutate itself to create more infectious and deadlier variants,2 such a drug screening process to identify effective anti-viral drug candidates against a specific variant may have to be repeated as new variants emerge. However, considering that there are about compounds that can be considered for drug design in chemical space theoretically3 and the astronomical amount of computation that was devoted to the screening of drug candidates in IMPECCABLE1 to screen candidates, this is without question a Herculean task that requires enormous resources and one that cannot be routinely repeated.

While different in scale and complexity, such high-throughput virtual screening (HTVS) pipelines have been widely utilized in various fields, including biology,4,5,6,7,8 chemistry,1,9,10,11,12,13 engineering,14 and materials science.15,16 However, the construction of such HTVS pipelines and the strategies for operating them heavily rely on expert intuition, often resulting in heuristic methods without systematic considerations for increasing the return on computational investment (ROCI), a ratio of the number of desired candidates to computational resource investment in this context. It has remained a fundamental challenge to find a general strategy to better construct or modify a screening pipeline and identify its operational policy resulting in a higher ROCI despite an enormous search space.

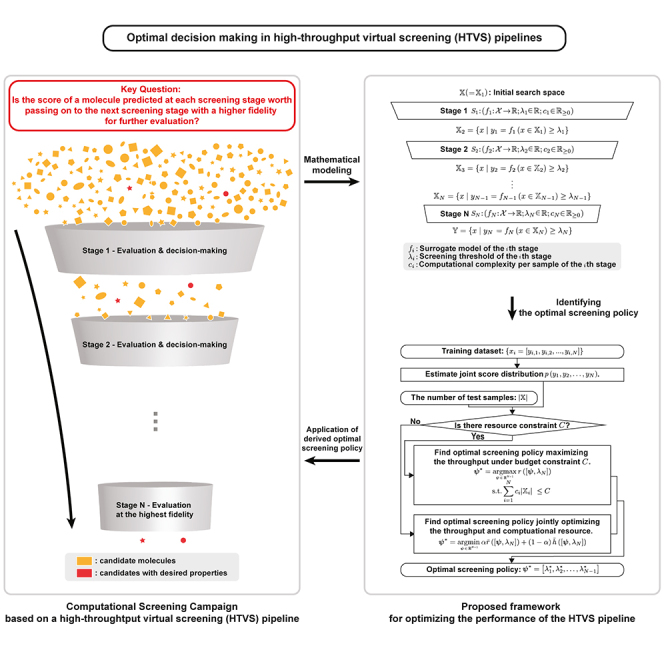

In general, HTVS pipelines consist of multiple stages, where each stage consists of a scoring function that evaluates the property of the molecules with a different accuracy/fidelity and computational cost (e.g., one of the multi-fidelity computational models or surrogate models of a high-fidelity computational model). This is illustrated on the left side of Figure 1. At each stage in the pipeline, the molecular candidate is evaluated to determine whether the evaluation result appears promising enough to warrant passing it to the next—often more computationally expensive but more accurate—stage without unnecessarily wasting computational resources and time. In this way, the HTVS pipeline narrows down the number of candidate molecules, while sensibly allocating the available resources for investigating those that are promising and more likely to possess the desired property. The most promising candidates that remain at the end of screening may proceed to experimental validation, which is often more laborious, costly, and time-consuming. For example, an HTVS pipeline8 based on multi-fidelity surrogate models combined with an experimental platform successfully selected and reported a novel non-covalent inhibitor, MCULE-5948770040. The reported inhibitor has been identified by screening over 6.5 million molecules, and it has been shown to inhibit the SARS-CoV-2 main protease. HTVS pipelines have been also widely used for materials screening. For example, a first-principles high-throughput screening pipeline for non-linear optical materials16 consisting of several computational predictors, based on density functional theory (DFT) calculations as well as data transformation and extraction methods, successfully identified deep-ultraviolet non-linear optical crystals that were reported in previous studies.17,18,19,20,21,22,23

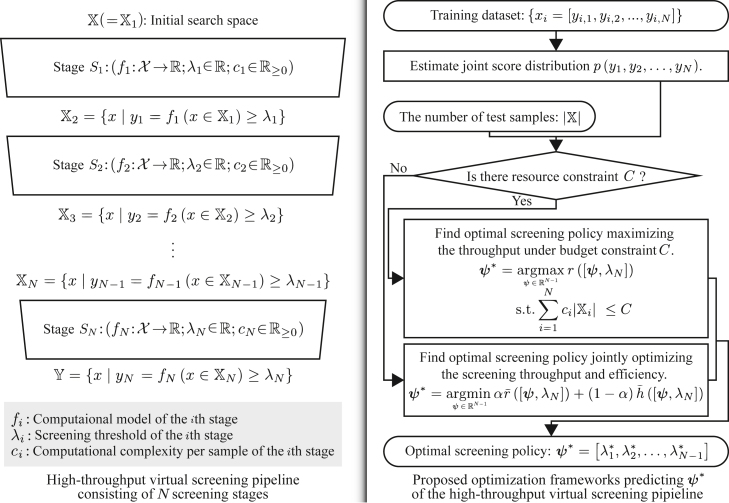

Figure 1.

Illustration of a general HTVS pipeline (left) that consists of N screening stages for rapid and reliable identification of a set of candidate molecules that likely possess the desired properties from a huge original set

Stage evaluates all the molecules , which passed the previous stage , via computational model . passes the sample x to the next stage if . Otherwise, it dcards the molecule. The proposed optimization framework shown on the right side predicts optimal screening policy that yields either the maximal screening throughput under a resource budget constraint C or strikes the optimal balance between the screening throughput and efficiency according to the screening campaign scenario.

Although previous studies have demonstrated the advantages of constructing an HTVS pipeline for rapid screening of a huge set of molecules to narrow down the most promising molecular candidates that are likely to possess the desired properties, the problem of optimal decision-making for maximally increasing the ROCI in such screening pipelines has not been extensively investigated to date. For example, how should one decide whether or not to pass a molecular candidate at hand to the next stage, given the score of the current stage? More specifically, in the HTVS example shown on the left side of Figure 1, how do we optimally determine the screening threshold of each stage for a given HTVS structure to maximize the ROCI? Furthermore, if we were to modify the HTVS structure or construct it from scratch by interconnecting multi-fidelity computational or surrogate models, what would be an advantageous structure of such an HTVS pipeline that is likely to lead to a higher ROCI? This requires selecting the proper subset of the available multi-fidelity models, arranging them in the optimal order, and then exploring the interrelations among their predictive outcomes to make optimal operational decisions for the constructed HTVS pipeline.

To answer the aforementioned questions, we propose a computational framework for the optimization of HTVS pipelines that consist of multiple computational models with different costs and fidelity. The key idea is to estimate the joint probability distribution of predictive scores that result from the different stages constituting the HTVS pipeline, based on which we optimize the screening threshold values. We consider two optimization scenarios. First, we consider the case where the total computational budget is fixed, and the goal is to maximize the screening throughput defined as the number of promising candidates with the desired property within the given budget. Second, we assume that the computational budget is liquid and consider the case where we aim to balance the screening throughput and screening efficiency defined as a ratio of the number of promising candidates to the total computational consumption. We demonstrate the performance of the proposed HTVS pipeline optimization framework based on both simulated data as well as real data. In the simulated example, the joint distribution of the predictive scores from the multi-fidelity models at different stages is assumed to be known, based on which we extensively evaluate the performance of the proposed approach under various scenarios. As a second example, we consider the problem of screening for long non-coding RNAs (lncRNAs). In this example, we first construct an HTVS pipeline by interconnecting existing lncRNA prediction algorithms with varying costs and accuracy and apply our proposed framework for performance optimization. Both examples clearly demonstrate the advantages of our proposed scheme, which leads to a substantial reduction of the total computational cost at virtually no degradation in overall screening performance. Furthermore, we show that the proposed framework enables one to make an informed decision to balance the trade-off between screening efficiency and screening throughput, where one could trade accuracy for higher efficiency, and vice versa.

Results

In this section, we first formally describe the operational process of a general HTVS pipeline and provide a high-level overview of the proposed optimization framework. Two different scenarios will be considered. In the first scenario, the objective is to maximize the expected throughput of the HTVS pipeline under a fixed computational budget. In the second scenario, the objective is to jointly optimize the screening throughput and the computational efficiency of the pipeline. We validate the proposed optimization framework based on both synthetic and real data. First, we evaluate the performance of our optimization framework based on a four-stage HTVS pipeline, where the joint probability distribution of the predictive scores is assumed to be known. Next, we construct an HTVS pipeline for lncRNAs by interconnecting existing lncRNA prediction algorithms with different prediction accuracy and computational complexity. In this example, the joint distribution of the predictive scores from the different algorithms at different stages is learned from training data, based on which the proposed HTVS optimal framework is used to identify the optimal screening policy.

Overview of the proposed HTVS pipeline optimization framework

We assume that an HTVS pipeline consists of N screening stages , , connected in series as shown in Figure 1 (left), where is a computational model for predicting the property of interest for a given molecule and is the screening threshold. The average computational cost per sample for associated with the ith stage is denoted by . For simplicity, we will use and interchangeably. At each stage , the corresponding surrogate model is used to evaluate the property of all molecules that passed the previous screening stage , where is given by:

| (Equation 1) |

By definition, we have , which contains the entire set of molecules to be screened. At stage , every molecule whose property score is below the threshold is discarded such that only the remaining molecules that meet or exceed this threshold are passed on to the next stage . We assume that all molecules in at each stage are batch processed to select the set of molecules that will be passed to the subsequent stage , as it is often done in practice.24,25,26

Although every stage in the screening pipeline performs a down-selection of the molecules by assessing their molecular property based on the computational model and comparing it against the threshold , we assume only the threshold values of the first stages will need to be determined while the threshold for the last screening stage is predetermined. This reflects how such screening pipelines are utilized in real-world scenarios. For example, in the IMPECCABLE pipeline,1 as well as in many other computational drug discovery pipelines, potentially effective lead compounds that pass the earlier stages based on efficient but less accurate models will be assessed using computationally expensive yet highly accurate molecular dynamics (MD) simulations to evaluate the binding affinity against the target. Only the molecules whose binding affinity estimated by the MD simulations exceeds a reasonably high threshold set by domain experts may be further assessed experimentally as further steps are time consuming and labor intensive. Similarly, in a materials screening pipeline, the last screening stage may involve expensive calculations based on DFT, a quantum mechanical modeling scheme that is widely used for predicting material properties.27,28,29,30,31,32,33,34,35

Based on this setting, our primary objective is to predict the optimal screening policy that leads to the optimal operation of the HTVS pipeline. We consider two different scenarios. In the first scenario, we assume that the total computational budget for screening the candidate molecules is fixed, where the design goal would then be to identify the optimal screening policy that maximizes the screening throughput, namely, the percentage (or number) of potential molecules that meet or exceed the qualification in the last stage (i.e., ). In the second scenario, we consider the case when the computational budget is not fixed and where the goal is to design the optimal policy that balances the screening throughput and screening efficiency (i.e., a ratio of the screening throughput to the required computational resource). This is done by defining and optimizing the objective function, which is a convex combination of the number of desired samples that we will miss and the overall computational cost.

Figure 1 (right) shows a flowchart summarizing the proposed approach for identifying the optimal screening policy for the optimal operation of a given HTVS pipeline under the two screening scenarios described above. First, we estimate the joint distribution of the predictive scores from the N stages based on the available training data. In the case where the probability density function (PDF) is known a priori, this PDF estimation step will not be required. Given , we can predict the optimal screening policy that leads to the optimal operational performance of the HTVS pipeline. Specifically, in the case where the total computational budget C is fixed, we find the optimal policy that maximizes the screening throughput of the pipeline—i.e., the proportion of molecules that pass the last (and the most stringent/accurate) screening stage that meet the condition —under the budget constraint C. The formal definition of the given optimization problem is shown in Equation 4. Otherwise, we predict optimal screening policy that jointly optimizes the screening throughput and screening efficiency based on a weighted objective function of the screening throughput and the computational efficiency. The formal definition of this joint optimization problem can be found in Equation 6. In this case, the balancing weight α can be used to trade throughput for computational efficiency, or vice versa. We note that the training dataset is only used for estimating the PDF and not (directly) for finding the optimal screening policy. In fact, the optimal policy is determined by a function of up to three parameters: the joint score distribution , (the number of potential molecules to be screened), and the total computational budget C (in the first screening scenario, where the computational budget is assumed to be limited).

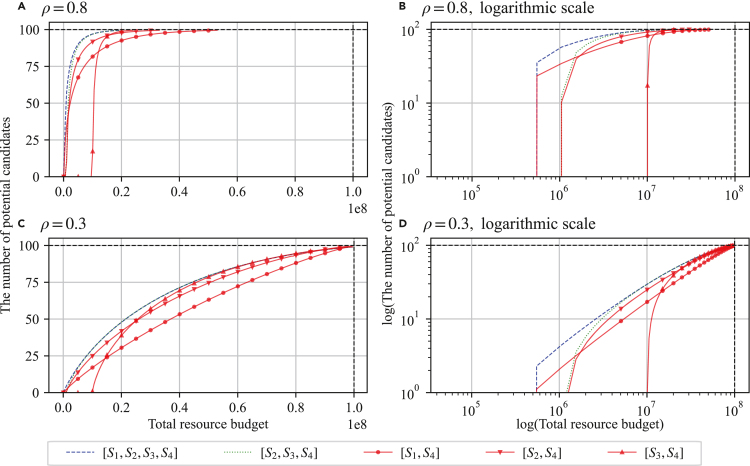

Figure 4.

Performance evaluation of the optimized lncRNA HTVS pipeline

The number of potential candidates (i.e., lncRNAs) detected by the HTVS pipeline is shown under various computational budget constraints (x axis). Various different pipeline structures were tested, and the results show that the proposed optimization framework leads to reliable performance regardless of the structure used.

Comprehensive performance analysis of the HTVS pipeline optimization framework

For comprehensive performance analysis of the proposed HTVS pipeline optimization framework, we consider a synthetic HTVS pipeline with four stages (i.e., ), where the joint PDF of the predictive scores from all stages is assumed to be known. We vary the correlation levels between the scores from neighboring stages to investigate the overall impact on the performance of the optimized HTVS pipeline.

Specifically, we assume that the computational cost for screening a single molecule is 1 at stage , 10 at , 100 at , and at . As the per-molecule screening cost is fairly different across stages, the given setting for the synthetic HTVS pipeline allows us to clearly see the impact and significance of optimal decision-making on the overall screening throughput and efficiency of the screening pipeline.

Here, we consider the case when we have complete knowledge of the joint score distribution . The score distribution is assumed to be a multivariate uni-modal Gaussian distribution , where the covariance matrix is a Toeplitz matrix defined as follows:

| (Equation 2) |

where ρ is the correlation between neighboring stages and for . We assumed that the score correlation is lower between stages that are further apart, which is typically the case in real screening pipelines that consist of multi-fidelity models.

The primary objective of the HTVS pipeline is to maximize the number of potential candidates (i.e., screening throughput) that satisfy the final screening criterion (i.e., ) based on the highest-fidelity model at stage . The total number of all candidate molecules in the initial set is assumed to be . We assume that we are given as prior information set by a domain expert, which results in 100 promising molecules (among in ) that satisfy the final screening criterion (i.e., ). We validate the proposed HTVS optimization framework for two cases: first, for , where the neighboring stages yield scores that are highly correlated, and next, for where the correlation is very low. Performance analysis results based on various other covariance matrices can be found in the supplemental information.

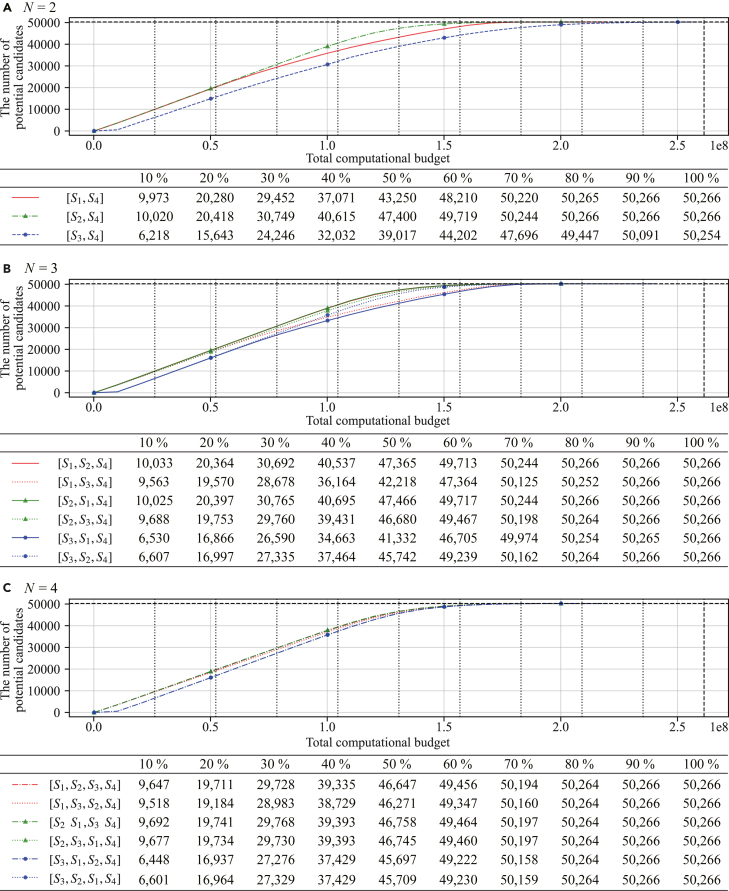

Performance of the optimized HTVS pipeline under computational budget constraint

Figure 2 shows the performance evaluation results for different HTVS pipeline structures optimized via the proposed framework under a fixed computational resource budget. The total number of the desirable candidates detected by the pipeline is shown as a function of the available computational budget for two cases: (1) HTVS pipelines that consist of highly correlated stages (, Figures 2A and 2B) and (2) HTVS pipelines comprised of stages with low correlation (, Figures 2C and 2D). Note that Figures 2B and 2D, respectively, show identical contents of Figures 2A and 2C in a logarithmic scale for a better distinction between the curves with similar performance. The black horizontal and vertical dashed lines depict the total number of true candidates that meet the screening criterion (100 in this simulation) and the total computational budget required when screening all molecules in only based on the last stage (i.e., the highest-fidelity and most computationally expensive model), respectively. Figure 2 shows the performance of the best-performing stage pipeline and that of the best-performing pipeline. In addition, the performance of all stage pipelines is shown for comparison.

Figure 2.

Performance assessment of the optimized HTVS pipelines

The number of candidate molecules that meet the desired screening criterion is shown as a function of the available computational budget. Results are shown for the case when the stages are highly correlated (A and B, ) as well as when they have low correlation (C and D, ). Note that (B and D), respectively, show identical contents of (A and C) in a logarithmic scale. The performance of the best-performing four-stage pipeline and the best-performing three-stage pipeline is shown. For comparison, we also show the performance of all two-stage pipelines. Note that only the best-performing configurations are shown for .

First, as shown in Figures 2A and 2B, the performance curves of the pipelines consisting of only two stages (shown in red with different shapes of markers) demonstrate how each of the lower-fidelity stages – improves the screening performance when combined with the highest-fidelity stage and performance optimized by our proposed framework. As shown in Figures 2A and 2B, the correlation between the lower-fidelity/lower-complexity stage , , at the beginning of the HTVS pipeline and highest-fidelity/highest-complexity stage at the end of the pipeline has a significant impact on the slope of the performance curve. For example, in the two-stage pipeline , where the two stages are highly correlated to each other, we can observe the steepest performance improvement as the available computational budget increases. On the other hand, for the two-stage pipeline , which consists of less correlated stages, the performance improvement is more moderate in comparison as the available computational budget increases. Note that the minimum required computational budget to screen all candidates is larger for the pipeline compared with that for , which is due to the assumption that all candidates are batch processed at each stage. For example, with the minimum budget needed by pipeline to screen all candidates, the other pipelines and are capable of completing the screening and detecting more than of the desirable candidates. Nevertheless, the detection performance improves with the increasing computational budget for all two-stage pipelines.

It is important to note that we can in fact simultaneously attain the advantage of using a lower-complexity stage (e.g., ) that allows a “quick-start” with a small budget as well as the merit of using a higher-complexity stage (e.g., ) for rapid performance improvement with the budget increase by constructing a multi-stage HTVS pipeline and optimally allocating the computational resources according to our proposed optimization framework. This can be clearly seen in the performance curve for the four-stage pipeline (blue dashed line). The optimized four-stage pipeline consistently outperforms all other pipelines across all budget levels. Specifically, the optimized pipeline quickly evaluated all the molecular candidates in through the most efficient stage and sharply improved the screening performance through the utilization of more complex yet also more accurate subsequent stages in the HTVS pipeline in a resource-optimized manner. For example, the optimized four-stage pipeline detected of the desirable candidates that meet the target criterion at only of the total computational cost that would be required if one used only the last stage (which we refer to as the “original cost”). To detect of the desired candidates, the optimized four-stage pipeline would need only about of the original cost.

Among all three-stage pipelines (i.e., ), pipeline yielded the best performance when performance-optimized using our proposed optimization framework (green dotted line in Figures 2A and 2B). As we can see in Figures 2A and 2B, the screening performance sharply increases as the available computational budget increases, thanks to the high correlation between and the prior stages and . However, due to the higher computational complexity of compared with that of , the optimized pipeline required a higher minimum computational budget for screening all candidate molecules compared with the minimum budget needed by a pipeline that begins with . Despite this fact, when the first stage in this three-stage HTVS pipeline is replaced by the more efficient , our simulation results (see Figure S41 in the supplemental information) show that the screening performance improves relatively moderately as the budget increases. Empirically, when all stages are relatively highly correlated to each other, the best strategy for constructing the HTVS pipeline appears to place the stages in increasing order of complexity and optimally allocate the computational resources to maximize the ROCI. In fact, this observation is fairly intuitive and also in agreement with how screening pipelines are typically constructed in real-world applications.

Figures 2C and 2D show the performance evaluation results of the HTVS pipelines, where the screening stages are less correlated to each other (). Results are shown for different pipeline configurations, where the screening policy is optimized using the proposed framework to maximize the ROCI. Overall, the performance trends were nearly identical to those shown in Figures 2A and 2B, although the overall performance is lower compared with the high correlation scenario () as expected. While the screening performance of the optimized HTVS pipeline is not as good as the high-correlation scenario, the multi-stage HTVS pipeline with the optimized screening policy still provides a much better trade-off between the computational cost for screening and the detection performance. For example, if we were to use only the highest-fidelity model in for screening, the only way to trade accuracy for reduced resource requirements would be to randomly sample the candidate molecules from and screen the selected candidates. The performance curve, in this case, would be a straight line connecting and , below most of the performance curves for the optimized pipeline approach shown in Figures 2C and 2D. As in the previous case (), the best pipeline configuration was to interconnect all four stages, where the stages are connected to each other in increasing order of complexity.

Performance of the HTVS pipeline jointly optimized for screening throughput and efficiency

Table 1 shows the performance of the various HTVS pipeline configurations, where the screening policy was jointly optimized for both screening throughput and computational efficiency. The joint optimization problem is formally defined in Equation 6, and α was set to 0.5 in these simulations. As a reference, the first row (configuration ) shows the performance of solely relying on the last stage for screening the molecules without utilizing a multi-stage pipeline. The effective cost is defined as the total computational cost divided by the total number of molecules detected by the screening pipeline that satisfy the target criterion (i.e., average computational cost per detected candidate molecule or reciprocal of screening efficiency). The computational savings of a given pipeline configuration is calculated by comparing its effective cost to that of the reference configuration (i.e., ). As we can see in Table 1, our proposed HTVS pipeline optimization framework was able to significantly improve the overall screening performance across all pipeline configurations in a highly robust manner. For example, for , the optimized pipelines consistently led to computational savings ranging from to compared with the reference, while detecting of the desired candidates that meet the target criterion. Although the overall efficiency of the HTVS pipelines slightly decreases when the neighboring stages are less correlated (), the pipelines were nevertheless effective in saving computational resources. As shown in Table 1, the optimized HTVS pipelines detected of all desired candidate molecules with computational savings ranging between and .

Table 1.

Performance comparison of various HTVS pipeline structures jointly optimized via the proposed framework ()

| Configuration | High correlation |

Low correlation |

||||||

|---|---|---|---|---|---|---|---|---|

| Potential candidates | Total cost | Effective cost |

Computational savings (%) | Potential candidates | Total cost | Effective cost |

Computational savings (%) | |

| 100 | 100 | |||||||

| 94 | 89 | |||||||

| 96 | 90 | |||||||

| 98 | 92 | |||||||

| 97 | 94 | |||||||

| 98 | 94 | |||||||

| 97 | 94 | |||||||

| 98 | 94 | |||||||

| 99 | 94 | |||||||

| 99 | 94 | |||||||

| 99 | 96 | |||||||

| 99 | 96 | |||||||

| 99 | 96 | |||||||

| 99 | 96 | |||||||

| 99 | 96 | |||||||

| 99 | 96 | |||||||

For further evaluation of the proposed framework, we performed additional experiments based on the four-stage pipeline . In this experiment, we first investigated the impact of α on the screening performance. Next, we compared the performance of the optimal screening policy with the performance of a baseline policy that mimics a typical screening scenario in real-world applications (e.g., see Saadi et al.1). The baseline policy selects the top candidate molecules at each stage and passes them to the next stage while discarding the rest. This baseline screening policy is agnostic of the joint score distribution of the multiple stages in the HTVS and aims to reduce the overall computational cost by passing only the top candidates to subsequent stages that are more costly. Similar strategies are in fact often adopted in practice due to their simplicity. In our simulations, we assumed the proportion is uniform across the screening stages. The performance evaluation results are summarized in Table 2. When the neighboring stages were highly correlated (), the optimized pipelines detected 100, 99, and 96 candidate molecules at a total cost of , , and , respectively. Interestingly, when α was reduced from 0.75 to 0.25 (i.e., trading accuracy for higher efficiency), the number of detected candidate molecules decreased only by 4 (i.e., from 100 to 96), while leading to an additional computational savings of 8 percentage points (i.e., from to ). On the other hand, the performance of the baseline screening policy was highly unpredictable and very sensitive to the choice of . For example, although the baseline with found all the potential candidates, the effective cost of the baseline was significantly higher than that of the proposed optimized pipeline with . For , the baseline detected 98 potential candidates (out of 100) with a total cost of , which was higher than the total cost of the optimized pipeline that detected 99 potential candidates. The baseline pipelines with and 0.25 selected and of the potential candidates, respectively. Considering that the primary goal of such a pipeline is to detect the largest number of potential candidates, these results clearly show that this baseline screening scheme that mimics conventional screening pipelines resulted in unreliable screening performance even when the neighboring stages were highly correlated to each other. While the baseline may lead to reasonably good performance for certain , it is important to note that we cannot determine the optimal in advance as the approach is agnostic to the relationships between different stages. As a result, the application of this baseline screening pipeline may significantly degrade the screening performance in practice. When the correlation between the neighboring stages was low (), the overall performance of the proposed pipeline degraded as expected. In this case, the pipeline jointly optimized for screening throughput as well as screening efficiency with α set to 0.75, 0.5, and 0.25 detected 99, 96, and 86 potential candidates with the computational cost of , , and , respectively. As in the high correlation case, the performance of the baseline scheme significantly varied and was sensitive to the choice of .

Table 2.

Performance comparison between the proposed pipeline jointly optimized for throughput and computational efficiency (with various α) and the baseline pipeline (with different screening ratio ) in terms of the total number of detecteded potential candidates after screening and the computational cost induced

| approach | High correlation |

Low correlation |

||||||

|---|---|---|---|---|---|---|---|---|

| Potential candidates | Total cost | Effective cost |

Computational savings (%) | Potential candidates | Total cost | Effective cost |

Computational savings (%) | |

| Proposed () | 100 | 99 | ||||||

| Proposed () | 99 | 96 | ||||||

| Proposed () | 96 | 86 | ||||||

| Baseline () | 100 | 93 | ||||||

| Baseline () | 98 | 69 | ||||||

| Baseline () | 78 | 28 | ||||||

| Baseline () | 26 | 6 | ||||||

Performance evaluation of the optimized HTVS pipeline for screening lncRNAs

In recent years, interest in lncRNAs have been constantly increasing in relevant research communities, as there is growing evidence that lncRNAs and their roles in various biological processes are closely associated with the development of complex and often hard-to-treat diseases including Alzheimer’s disease,36,37,38 cardiovascular diseases,39,40 and several types of cancer.41,42,43,44 RNA sequencing techniques are nowadays routinely used to investigate the main functional molecules and their molecular interactions responsible for the initiation, progression, and manifestation of such complex diseases. Consequently, the accurate detection of lncRNA transcripts from a potentially huge number of sequenced RNA transcripts is a fundamental step in studying lncRNA-disease association. While several lncRNA prediction algorithms have been developed so far,45,46,47,48 each with its own pros and cons, no HTVS pipeline has been proposed to date for fast and reliable screening of lncRNAs.

To bridge the gap based on the proposed HTVS optimization framework, we first construct diverse HTVS pipeline structures by interconnecting four state-of-the-art lncRNA prediction algorithms—CPC2 (coding potential calculator 2),47 CPAT (Coding Potential Assessment Tool),45 PLEK (predictor of lncRNAs and messenger RNAs based on an improved k-mer scheme),46 and LncFinder.48 Then, we estimate the joint probability distribution of the scores from the given algorithms. The estimated score distribution is then used to derive the optimal screening policies in two different optimization scenarios. The performance of the optimized HTVS pipelines is comprehensively compared and analyzed from diverse perspectives.

In what follows, we describe the details of these steps and present the performance evaluation results of the optimal computational screening on real RNA transcripts in the GENCODE database.49

Dataset and preprocessing

We collected the nucleotide sequences of Homo sapiens RNA transcripts from GENCODE version 38,49 which consists of lncRNA sequences and protein-coding sequences. We filtered out sequences that contain any unknown nucleotides (other than A, U, C, or G) and sequences whose length exceeds nt. This resulted in lncRNA sequences and protein-coding sequences. Next, we applied a clustering algorithm CD-hit50 to lncRNAs and protein-coding RNAs, respectively, to remove redundant sequences. We finally obtained a set of RNA transcripts consisting of lncRNA sequences and protein-coding sequences.

Construction of the lncRNA HTVS pipeline

For the construction of the lncRNA screening pipeline, we selected four state-of-the-art lncRNA prediction algorithms that have been shown to achieve good prediction performance: CPC2,47 CPAT,45 PLEK,46 and LncFinder.48

Table 3 summarizes the performance of the individual algorithm based on the GENCODE dataset, preprocessed as described previously. We assessed the accuracy, sensitivity, and specificity of the respective lncRNA prediction algorithms. For algorithm CPAT, which yields confidence scores between 0 and 1 rather than a binary output, we set the decision boundary to 0.5 for lncRNA classification. As shown in Table 3, LncFinder achieved the accuracy, sensitivity, and specificity of 0.8329, 0.7062, and 0.9678, respectively, outperforming all other algorithms in terms of accuracy and sensitivity. However, LncFinder also had the highest computational cost among the compared algorithms, where processing an RNA transcript required ms on average. CPAT was the second-best performer among the four in terms of accuracy and sensitivity. Furthermore, CPAT also achieved the highest specificity. CPC2 and PLEK were less accurate compared with LncFinder and CPAT in terms of accuracy, sensitivity, and specificity. Despite their high computational efficiency, both CPC2 and CPAT also outperformed PLEK based on overall accuracy.

Table 3.

Performance of the four individual lncRNA prediction algorithms that constitute the lncRNA HTVS pipeline

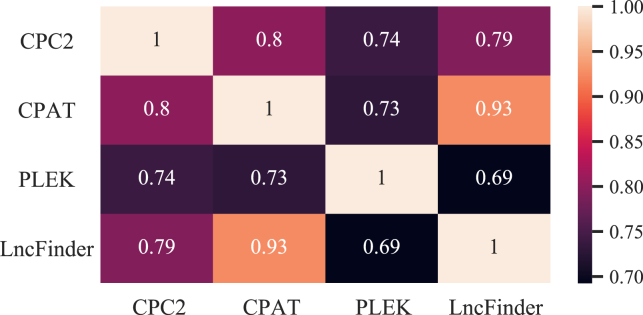

As we previously observed from the performance assessment results based on the synthetic pipeline, the efficacy of the optimized HTVS pipeline is critically dependent on the interrelation between the stages constituting the pipeline. The proposed HTVS optimization framework aims to exploit the correlation structure across different screening stages to find the optimal screening policy either maximizing the screening throughput under a given budget constraint or striking the optimal balance between the screening throughput and screening efficiency depending on the optimization scenario under consideration. Here, we placed LncFinder—the most accurate and the most computationally costly algorithm among the four—in the final stage. In the first three stages in the HTVS pipeline, we placed CPC2, CPAT, and PLEK, in order of increasing computational complexity. After constructing the screening pipeline, we computed Pearson’s correlation coefficient between the predictive output scores obtained from different algorithms. As shown in Figure 3, CPAT showed the highest correlation with LncFinder in the last stage (with a correlation coefficient of 0.93), the highest among the first three stages in the screening pipeline.

Figure 3.

Heatmap showing Pearson’s correlation coefficient between different stages

CPAT had the highest correlation to LncFinder. While PLEK was computationally more complex compared with CPAT, it showed a relatively lower correlation to LncFinder.

To apply our proposed HTVS pipeline optimization framework, we first estimated the joint probability distribution of the predictive scores generated by the four different lncRNA prediction algorithms—CPC2 (), CPAT (), PLEK (), and LncFinder ()—via the expectation-maximization (EM) algorithm.51 For training, of the preprocessed GENCODE data were used. Note that all the computational lncRNA identification algorithms considered in this study output protein-coding probabilities, hence a higher output value corresponds to a higher probability for a given transcript to be protein coding. Since our goal was to identify the lncRNAs, we multiplied the output scores generated by the algorithms by such that higher values represent higher chances of being lncRNA transcripts. The screening threshold for the LncFinder in the last stage of the HTVS pipeline was set to , which leads to the optimal overall performance of LncFinder with a good balance between sensitivity and specificity.

Performance of the optimized lncRNA HTVS pipeline under computational budget constraint

Figure 4 shows the performance of the optimized lncRNA HTVS pipelines with various pipeline structures, where Figures 4A–4C illustrate the optimized pipelines with 2, 3, and 4 screening stages, respectively. The black horizontal dashed line indicates the total number of desired candidates (i.e., the total number of functional lncRNAs in the test set) and the black vertical dashed line shows the total computational cost (referred to as the original cost as before) that would be needed for screening all candidates based on the last stage LncFinder alone, without using the HTVS pipeline. Black vertical dotted lines are located at intervals of of this original cost. Underneath each dotted line, the number of potential candidates (i.e., true functional lncRNAs) detected by each optimized HTVS pipeline is shown (see the columns in the table aligned with the dotted lines in the plots).

As before, we assumed that the candidates are batch processed at each stage. As a result, for a given pipeline structure, the computational cost of the first stage determines the minimum computational resources needed to start screening. The correlation between the neighboring stages was closely related to the slope of the corresponding performance curve, which is a phenomenon that we already noticed before based on synthetic pipelines. For example, at of the original cost, the pipelines starting with PLEK (i.e., ) showed the worst performance among the tested pipelines in terms of the throughput. Specifically, , , , , and detected , , , , and lncRNAs, respectively. On the other hand, pipelines starting with either CPC2 or CPAT (i.e., or ) detected to lncRNAs at the same cost. In addition, pipelines , , , , , , , , , , and including the second stage associated with CPAT that is highly correlated to the last stage LncFinder showed the steepest performance improvement. As a result, all HTVS pipelines that include CPAT were able to identify nearly all true lncRNAs (i.e., ) at only of the original cost, regardless of which stage CPAT was placed in the pipeline.

While the structure of the HTVS pipeline impacts the overall screening performance, Figure 4 shows that our proposed optimization framework alleviated the performance dependency on the underlying structure by optimally exploiting the relationships across different stages. For example, even though the optimized pipeline outperformed the optimized pipeline , which additionally included PLEK (i.e., ), the performance gap was not very significant. The maximum difference between the two pipeline structures in terms of the detected lncRNAs was when the computational budget was set at of the original cost. However, when considering that PLEK () was computationally much more expensive compared with CPC2 () and CPAT () and also had a lower correlation with LncFinder (), the throughput difference of was only about of the total lncRNAs in the test dataset, which is relatively small. Moreover, this throughput difference was drastically reduced as the available computational resources increased. For example, when the computational budget was set at of the original cost the throughput difference between the two pipelines was only 50.

In practice, real-world HTVS pipelines may involve various types of screening stages using multi-fidelity surrogate models. The computational complexity and the fidelity of such surrogate models may differ significantly, and the structure of the pipeline may vastly vary depending on the domain experts designing the pipeline. Considering these factors, an important advantage of our proposed HTVS pipeline optimization framework is its capability to consistently attain efficient and accurate screening performance that may weather the effect of potentially suboptimal design choices in constructing real-world HTVS pipelines.

Performance of the lncRNA HTVS pipeline jointly optimized for screening throughput and efficiency

We evaluated the performance of the lncRNA HTVS pipelines, jointly optimized for both screening throughput and efficiency based on the proposed framework with . The results for various pipeline configurations are shown in Table 4. On average, the optimized HTVS pipeline detected lncRNAs out of total lncRNAs in the test dataset. The average effective cost was . Pipeline configurations that include CPAT () achieved relatively higher computational savings (ranging from to ) compared with those without (ranging from to ). As we have previously observed, our proposed optimization framework was effective in maintaining its screening throughput and efficiency even when the pipeline included a stage (e.g., PLEK) that is less correlated with the last and the highest-fidelity stage (i.e., LncFinder). In fact, the inclusion of a less-correlated stage in the HTVS pipeline does not significantly degrade the average screening performance. This is because the proposed optimization framework enables one to select the optimal threshold values that can sensibly combine the benefits of the most computationally efficient stages (such as CPC2 and CPAT in this case) as well as the highest-fidelity stage (LncFinder), thereby maximizing the expected ROCI. Similar observations can be made regarding the ordering of the multiple screening stages, as Table 4 shows that the average performance does not significantly depend on the actual ordering of the stages when the screening threshold values are optimized via our proposed framework. For example, when using all four stages in the HTVS pipeline (), the optimized pipeline detected lncRNAs on average and with consistent computational savings ranging between and . We also evaluated the accuracy of the potential candidates screened by the optimized HTVS pipeline based on four performance metrics: accuracy (ACC), sensitivity (SN), specificity (SP), and F1 score. Interestingly, all configurations except for outperformed LncFinder in terms of ACC. In terms of SN, the optimized pipeline achieved an average sensitivity of 0.9177. All pipeline configurations resulted in higher specificity compared with LncFinder. Besides, pipeline configurations that include consistently outperformed LncFinder in terms of the F1 score.

Table 4.

Performance evaluation of the lncRNA HTVS pipeline jointly optimized for throughput and efficiency ()

| Configuration | Potential candidates | Total cost (ms) | Effective cost |

Computational savings (%) | Accuracy | Sensitivity | Specificity | F1 |

|---|---|---|---|---|---|---|---|---|

| 0.8440 | 0.9264 | 0.7936 | 0.8186 | |||||

| 0.8429 | 0.9075 | 0.8034 | 0.8144 | |||||

| 0.8624 | 0.9215 | 0.8262 | 0.8357 | |||||

| 0.8450 | 0.8876 | 0.8188 | 0.8131 | |||||

| 0.8600 | 0.9216 | 0.8222 | 0.8333 | |||||

| 0.8442 | 0.9120 | 0.8026 | 0.8164 | |||||

| 0.8600 | 0.9216 | 0.8222 | 0.8334 | |||||

| 0.8602 | 0.9230 | 0.8218 | 0.8338 | |||||

| 0.8444 | 0.9124 | 0.8026 | 0.8166 | |||||

| 0.8600 | 0.9231 | 0.8214 | 0.8336 | |||||

| 0.8591 | 0.9228 | 0.8200 | 0.8326 | |||||

| 0.8587 | 0.9215 | 0.8203 | 0.8321 | |||||

| 0.8591 | 0.9229 | 0.8200 | 0.8326 | |||||

| 0.8591 | 0.9230 | 0.8200 | 0.8327 | |||||

| 0.8589 | 0.9228 | 0.8197 | 0.8324 | |||||

| 0.8589 | 0.9229 | 0.8197 | 0.8325 |

Finally, we compared the performance of the optimized pipeline with that of the baseline approach that selects the top of the incoming candidates for the next stage, where is a parameter to be determined by a domain expert. For this comparison, we considered the four-stage pipeline . The optimal screening policy was found based on our proposed framework using three different values of . The baseline screening approach was evaluated based on four different levels of . The performance assessment results are summarized in Table 5. As shown in Table 5, the baseline approach detected fewer lncRNAs for all values of compared with the optimized pipeline. Specifically, the jointly optimized pipeline detected , , and lncRNAs at a cost of (), (), and (), respectively. On the other hand, the baseline approach with detected only lncRNAs at a total cost of . For and , the baseline scheme detected only and lncRNAs, respectively. In terms of the four quality metrics (ACC, SN, SP, and F1), the optimized pipeline outperformed the baseline scheme in terms of ACC, SN, and F1. The optimized pipeline resulted in lower SP compared with the baseline. However, it should be noted that the potential candidates detected by the optimized HTVS pipeline are remarkably higher compared with the baseline approach. This is clearly reflected in the much lower SN of the baseline approach, as shown in Table 5. As a result, the baseline approach tends to achieve significantly lower ACC and F1 compared with the optimal screening scheme.

Table 5.

Performance of the four-stage lncRNA HTVS pipeline jointly optimized for throughput and efficiency is compared with that of the baseline screening approach

| Approach | Potential candidates | Total cost (ms) | Effective cost |

Computational savings (%) | Accuracy | Sensitivity | Specificity | F1 |

|---|---|---|---|---|---|---|---|---|

| Proposed () | 0.8553 | 0.9249 | 0.8126 | 0.8292 | ||||

| Proposed () | 0.8591 | 0.9228 | 0.8200 | 0.8326 | ||||

| Proposed () | 0.8650 | 0.9143 | 0.8348 | 0.8373 | ||||

| Baseline () | 0.8366 | 0.7761 | 0.8737 | 0.7801 | ||||

| Baseline () | 0.7170 | 0.2866 | 0.9807 | 0.4348 | ||||

| Baseline () | 0.6318 | 0.0330 | 0.9986 | 0.0638 |

Discussion

In this work, we proposed a general mathematical framework for identifying the optimal screening policy that can either maximize the ROCI of an HTVS pipeline under a given computational resource constraint or strike the balance between the screening throughput and efficiency depending on the optimization scenarios. The need for screening a large set of molecules to detect potential candidates that possess the desired properties frequently arises in various science and engineering domains, although the design and operation of such screening pipelines strongly depend on expert intuitions and ad hoc approaches. We aimed to rectify this problem by taking a principled approach to HTVS, thereby maximizing the screening performance of a given HTVS pipeline, reducing the performance dependence on the pipeline configuration, and enabling quantitative comparison between different HTVS pipelines based on their optimal achievable performance.

We considered two scenarios for HTVS performance optimization in this study: first, maximizing the detection of potential candidate molecules that possess the desired property under a constrained computational budget and second, jointly optimizing the screening throughput and efficiency of the HTVS pipeline when there is no fixed computational budget for the screening operation. For both scenarios, we have thoroughly tested the performance of our proposed HTVS pipeline optimization framework. Comprehensive performance assessment based on synthetic HTVS pipelines as well as real lncRNA screening pipelines showed clear advantages of the proposed framework.

On the synthetic HTVS pipeline dataset, the optimized pipelines were able to remarkably maximize the screening throughput under a given resource constraint as shown in Figure 2. For example, one of the best-performing HTVS pipelines, , optimized via the proposed framework detected of the promising candidates that retain the desired property at the cost of only of the original cost that will be needed for screening all the initial candidates with the highest-fidelity model alone (i.e., ) when computational resources were constrained. When the HTVS pipelines were jointly optimized for throughput and computational efficiency, it discovered at least of the true potential candidates while saving up to of the original computational resource requirement (see Tables 1 and 2).

As shown in Figure 4, the optimized HTVS pipelines built for a real-world lncRNA screening campaign consistently demonstrated their practical efficacy in terms of maximizing the ROCI. All the optimized pipelines including CPAT, which is reasonably correlated to LncFinder, identified at least of the true lncRNAs at of the original computational cost. As illustrated in Table 4, the jointly optimized HTVS pipelines for throughput and computational efficiency selected of the true lncRNAs and saved a computational resource of compared with the original computational cost on average. Besides, the simulation results summarized in Table 5 demonstrate that the pipeline optimized via the proposed framework clearly outperformed the agnostic baseline approach.

Overall, not only does the HTVS optimization framework remove the guesswork in the operation of HTVS pipelines to maximize the throughput, enhance the screening accuracy, and minimize the computational cost, it also leads to reliable and consistent screening performance across a wide variety of HTVS pipeline structures. This is a significant benefit of the proposed framework that is of practical importance since it makes the overall screening performance robust to variations and potentially suboptimal design choices in constructing real-world HTVS pipelines. As there can be infinite different ways of building an HTVS pipeline in real scientific and engineering applications, it is important to note that our proposed optimization framework can guarantee near-optimal screening performance for any reasonable design choice regarding the HTVS pipeline configuration.

In addition, the comprehensive simulation results in diverse setups (see Figures S1–S48 in the supplemental information) have provided insights into how one can better design an HTVS pipeline when one should construct an HTVS pipeline from scratch. First, if one is allowed to analyze the interrelations of the screening stages with respect to the highest-fidelity model of interest prior, it might be generally effective not to include the screening stages that are less correlated to the highest-fidelity screening stage unless necessary. In other words, it might be computationally beneficial to exclude the screening stages with a lower ratio of correlation to their computational complexity. Second, one of the effective strategies for ordering the screening stages of an HTVS pipeline is to place them in increasing order of computational complexity. Finally, care has to be taken when deciding the number of screening stages used for constructing an HTVS pipeline as its optimality is a complicated function of diverse factors including the computational complexity, order, and correlation of the screening stages in the HTVS pipeline and the positive sample ratio. However, it should be also noted that, as demonstrated, the proposed framework can alleviate the performance degradation from the suboptimal designs.

While the HTVS optimization framework presented in this paper is fairly general and may be applied to various molecular screening problems, there are interesting open research problems for extending its capabilities even further. For example, how can we design an optimal screening policy when the structure of the HTVS pipeline is not linear (i.e., sequential)? It would be interesting to investigate how one may design optimal screening policies for pipelines whose structure is a directed acyclic graph. Another interesting problem is how to construct an ideal screening pipeline if only a high-fidelity model is available and its lower-fidelity surrogates need to be designed/learned from this high-fidelity model from scratch. This problem has been recently investigated in a preliminary setting for the case of screening redox-active organic materials.52 Further research is needed to generalize this result and enable joint optimization of multiple surrogate models to optimize the performance of the overall HTVS pipeline. Finally, we can envision designing a dynamic screening policy—e.g., based on reinforcement learning—where there is no predetermined “pipeline structure” but a candidate molecule is dynamically steered to the next stage based on the screening outcome of the previous stage. In this case, instead of first learning the relations between different stages and then deriving the optimal screening policy—as we did in this study—we may learn, apply, and enhance the screening policy in a dynamic and adaptive manner, which may be better suited for specific applications.

Experimental procedures

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Dr. Byung-Jun Yoon (bjyoon@ece.tamu.edu).

Materials availability

This study did not generate new unique reagents.

Simulation environment

We optimized the screening policy—for both optimization problems defined in Equations 4 and 6—using the differential evolution optimizer54 in the Scipy Python package (version 1.7.0). We performed all simulations on Ubuntu (version 20.04.2 LTS) installed on Oracle VM VirtualBox (version 6.1.22) that runs on a workstation equipped with an Intel i7-8809G CPU and 32 GB RAM.

Learning the output relations across multiple stages in an HTVS pipeline

As shown in Figure 1, the proposed optimization framework that identifies the optimal screening policy takes a two-phase approach. In the first phase, we estimate the joint score distribution . Based on the estimated score distribution, we find the optimal screening policy that maximizes the screening performance. To ensure good screening performance, accurate estimation of the joint score distribution is crucial. In this study, we performed a spectral estimation under the assumption that the joint score distribution follows a multivariate Gaussian mixture model and estimated the parameters via the EM scheme.51

Finding the optimal screening policy under computational budget constraint

A formal objective is to find the optimal screening policy that leads to the maximal number of desired candidates whose property at the highest fidelity satisfies a given condition (i.e., ) under a given computational resource constraint C. The interrelation between the property scores computed based on all stages is captured by their joint score distribution . Based on the joint score distribution , we define reward function which is a function of N screening thresholds of the stages , , as follows:

| (Equation 3) |

We can find the optimal screening policy to be applied to the first stages () that maximizes the reward under a given computational resource constraint C by solving the constrained optimization problem shown below:

| (Equation 4) |

where is the number of molecules that passed the previous stages from to . Formally, is defined as:

| (Equation 5) |

where denotes the marginal score distribution for , which can be obtained by marginalizing over to .

Joint optimization of the screening policy for screening throughput and efficiency

In many real-world screening problems, including drug or material screening, the total computational budget for screening may not be fixed, and one may want to jointly optimize for both screening throughput as well as computational efficiency of screening. In such a scenario, we can formulate a joint optimization problem to find the best screening policy that strikes the optimal balance between throughput and efficiency:

| (Equation 6) |

The weight parameter determines the relative importance between the relative reward function and the normalized total cost function defined as follows:

| (Equation 7) |

| (Equation 8) |

| (Equation 9) |

Note that is the marginal score distribution for , which is obtained by marginalizing over to .

Acknowledgments

This work was supported in part by the Department of Energy (DOE) award DE-SC0019303, the National Science Foundation (NSF) award 1835690, and the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (RS-2023-00279241). Portions of this research were conducted with the advanced computing resources provided by Texas A&M High-Performance Research Computing (HPRC). S.J. acknowledges ASCR Surrogates Benchmark Initiative project (DE-SC0021352), ECP CANDLE, and ExaWorks (Brookhaven National Laboratory under contract DE-SC0012704).

Author contributions

B.-J.Y., S.J., and F.J.A. initiated the project. H.-M.W. and B.-J.Y. proposed the method and designed the experiments. H.-M.W. implemented the algorithms, performed the simulations, analyzed the results, and wrote the initial manuscript. H.-M.W., X.Q., L.T., S.J., F.J.A., E.R.D., and B.-J.Y. discussed the results and edited the manuscript. H.-M.W. and B.-J.Y. revised the manuscript.

Declaration of interests

B.-J.Y. is a member of the Advisory Board of Patterns.

Published: November 3, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.patter.2023.100875.

Supplemental information

Data and code availability

-

•

All raw RNA sequences that were used in this study are publicly available at GENCODE: https://www.gencodegenes.org/human/release_38.html.49

-

•

Python source code and the pre-processed RNA sequences are publicly available at Zenodo: https://doi.org/10.5281/zenodo.8392685.53

References

- 1.Saadi A.A., Alfe D., Babuji Y., Bhati A., Blaiszik B., Brace A., Brettin T., Chard K., Chard R., Clyde A., et al. 50th International Conference on Parallel Processing. 2021. IMPECCABLE: integrated modeling pipeline for COVID cure by assessing better leads; pp. 1–12. [Google Scholar]

- 2.Roy B., Dhillon J., Habib N., Pugazhandhi B. Global variants of covid-19: Current understanding. J. Biomed. Sci. 2021;8:8–11. [Google Scholar]

- 3.Bohacek R.S., McMartin C., Guida W.C. The art and practice of structure-based drug design: a molecular modeling perspective. Med. Res. Rev. 1996;16:3–50. doi: 10.1002/(SICI)1098-1128(199601)16:1<3::AID-MED1>3.0.CO;2-6. [DOI] [PubMed] [Google Scholar]

- 4.Rieber N., Knapp B., Eils R., Kaderali L. Rnaither, an automated pipeline for the statistical analysis of high-throughput rnai screens. Bioinformatics. 2009;25:678–679. doi: 10.1093/bioinformatics/btp014. [DOI] [PubMed] [Google Scholar]

- 5.Studer M.H., DeMartini J.D., Brethauer S., McKenzie H.L., Wyman C.E. Engineering of a high-throughput screening system to identify cellulosic biomass, pretreatments, and enzyme formulations that enhance sugar release. Biotechnol. Bioeng. 2010;105:231–238. doi: 10.1002/bit.22527. [DOI] [PubMed] [Google Scholar]

- 6.Hartmann A., Czauderna T., Hoffmann R., Stein N., Schreiber F. Htpheno: an image analysis pipeline for high-throughput plant phenotyping. BMC Bioinf. 2011;12:148–149. doi: 10.1186/1471-2105-12-148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Sikorski K., Mehta A., Inngjerdingen M., Thakor F., Kling S., Kalina T., Nyman T.A., Stensland M.E., Zhou W., de Souza G.A., et al. A high-throughput pipeline for validation of antibodies. Nat. Methods. 2018;15:909–912. doi: 10.1038/s41592-018-0179-8. [DOI] [PubMed] [Google Scholar]

- 8.Clyde A., Galanie S., Kneller D.W., Ma H., Babuji Y., Blaiszik B., Brace A., Brettin T., Chard K., Chard R., et al. High-throughput virtual screening and validation of a sars-cov-2 main protease noncovalent inhibitor. J. Chem. Inf. Model. 2022;62:116–128. doi: 10.1021/acs.jcim.1c00851. [DOI] [PubMed] [Google Scholar]

- 9.Martin R.L., Simon C.M., Smit B., Haranczyk M. In silico design of porous polymer networks: high-throughput screening for methane storage materials. J. Am. Chem. Soc. 2014;136:5006–5022. doi: 10.1021/ja4123939. [DOI] [PubMed] [Google Scholar]

- 10.Cheng L., Assary R.S., Qu X., Jain A., Ong S.P., Rajput N.N., Persson K., Curtiss L.A. Accelerating electrolyte discovery for energy storage with high-throughput screening. J. Phys. Chem. Lett. 2015;6:283–291. doi: 10.1021/jz502319n. [DOI] [PubMed] [Google Scholar]

- 11.Chen J.J.F., Visco D.P., Jr. Developing an in silico pipeline for faster drug candidate discovery: Virtual high throughput screening with the signature molecular descriptor using support vector machine models. Eur. J. Med. Chem. 2017;140:31–41. [Google Scholar]

- 12.Filer D.L., Kothiya P., Setzer R.W., Judson R.S., Martin M.T. tcpl: the toxcast pipeline for high-throughput screening data. Bioinformatics. 2017;33:618–620. doi: 10.1093/bioinformatics/btw680. [DOI] [PubMed] [Google Scholar]

- 13.Rebbeck R.T., Singh D.P., Janicek K.A., Bers D.M., Thomas D.D., Launikonis B.S., Cornea R.L. Ryr1-targeted drug discovery pipeline integrating fret-based high-throughput screening and human myofiber dynamic ca 2+ assays. Sci. Rep. 2020;10:1791–1813. doi: 10.1038/s41598-020-58461-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Tran A., Wildey T., McCann S. smf-bo-2cogp: A sequential multi-fidelity constrained bayesian optimization framework for design applications. J. Comput. Inf. Sci. Eng. 2020;20 [Google Scholar]

- 15.Yan Q., Yu J., Suram S.K., Zhou L., Shinde A., Newhouse P.F., Chen W., Li G., Persson K.A., Gregoire J.M., Neaton J.B. Solar fuels photoanode materials discovery by integrating high-throughput theory and experiment. Proc. Natl. Acad. Sci. USA. 2017;114:3040–3043. doi: 10.1073/pnas.1619940114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zhang B., Zhang X., Yu J., Wang Y., Wu K., Lee M.-H. First-principles high-throughput screening pipeline for nonlinear optical materials: Application to borates. Chem. Mater. 2020;32:6772–6779. doi: 10.1021/acsami.0c15728. [DOI] [PubMed] [Google Scholar]

- 17.Chen C., Wang Y., Xia Y., Wu B., Tang D., Wu K., Wenrong Z., Yu L., Mei L. New development of nonlinear optical crystals for the ultraviolet region with molecular engineering approach. J. Appl. Phys. 1995;77:2268–2272. [Google Scholar]

- 18.Shi G., Wang Y., Zhang F., Zhang B., Yang Z., Hou X., Pan S., Poeppelmeier K.R. Finding the next deep-ultraviolet nonlinear optical material: Nh4b4o6f. J. Am. Chem. Soc. 2017;139:10645–10648. doi: 10.1021/jacs.7b05943. [DOI] [PubMed] [Google Scholar]

- 19.Zhang B., Shi G., Yang Z., Zhang F., Pan S. Fluorooxoborates: beryllium-free deep-ultraviolet nonlinear optical materials without layered growth. Angew. Chem., Int. Ed. Engl. 2017;56:3916–3919. doi: 10.1002/anie.201700540. [DOI] [PubMed] [Google Scholar]

- 20.Luo M., Liang F., Song Y., Zhao D., Xu F., Ye N., Lin Z. M2b10o14f6 (m= ca, sr): Two noncentrosymmetric alkaline earth fluorooxoborates as promising next-generation deep-ultraviolet nonlinear optical materials. J. Am. Chem. Soc. 2018;140:3884–3887. doi: 10.1021/jacs.8b01263. [DOI] [PubMed] [Google Scholar]

- 21.Mutailipu M., Zhang M., Zhang B., Wang L., Yang Z., Zhou X., Pan S. Srb5o7f3 functionalized with [b5o9f3] 6- chromophores: Accelerating the rational design of deep-ultraviolet nonlinear optical materials. Angew. Chem. 2018;57:6095–6099. doi: 10.1002/anie.201802058. [DOI] [PubMed] [Google Scholar]

- 22.Wang Y., Zhang B., Yang Z., Pan S. Cation-tuned synthesis of fluorooxoborates: Towards optimal deep-ultraviolet nonlinear optical materials. Angew. Chem. 2018;57:2150–2154. doi: 10.1002/anie.201712168. [DOI] [PubMed] [Google Scholar]

- 23.Zhang Z., Wang Y., Zhang B., Yang Z., Pan S. Cab5o7f3: A beryllium-free alkaline-earth fluorooxoborate exhibiting excellent nonlinear optical performances. Inorg. Chem. 2018;57:4820–4823. doi: 10.1021/acs.inorgchem.8b00531. [DOI] [PubMed] [Google Scholar]

- 24.Wilmer C.E., Leaf M., Lee C.Y., Farha O.K., Hauser B.G., Hupp J.T., Snurr R.Q. Large-scale screening of hypothetical metal–organic frameworks. Nat. Chem. 2011;4:83–89. doi: 10.1038/nchem.1192. [DOI] [PubMed] [Google Scholar]

- 25.Gupta S., Parihar D., Shah M., Yadav S., Managori H., Bhowmick S., Patil P.C., Alissa S.A., Wabaidur S.M., Islam M.A. Computational screening of promising beta-secretase 1 inhibitors through multi-step molecular docking and molecular dynamics simulations-pharmacoinformatics approach. J. Mol. Struct. 2020;1205 [Google Scholar]

- 26.Kim D.Y., Ha M., Kim K.S. A universal screening strategy for the accelerated design of superior oxygen evolution/reduction electrocatalysts. J. Mater. Chem. A Mater. 2021;9:3511–3519. [Google Scholar]

- 27.Liu T., Kim K.C., Kavian R., Jang S.S., Lee S.W. High-density lithium-ion energy storage utilizing the surface redox reactions in folded graphene films. Chem. Mater. 2015;27:3291–3298. [Google Scholar]

- 28.Kim K.C., Liu T., Lee S.W., Jang S.S. First-principles density functional theory modeling of li binding: thermodynamics and redox properties of quinone derivatives for lithium-ion batteries. J. Am. Chem. Soc. 2016;138:2374–2382. doi: 10.1021/jacs.5b13279. [DOI] [PubMed] [Google Scholar]

- 29.Kim S., Kim K.C., Lee S.W., Jang S.S. Thermodynamic and redox properties of graphene oxides for lithium-ion battery applications: a first principles density functional theory modeling approach. Phys. Chem. Chem. Phys. 2016;18:20600–20606. doi: 10.1039/c6cp02692c. [DOI] [PubMed] [Google Scholar]

- 30.Liu T., Kim K.C., Lee B., Chen Z., Noda S., Jang S.S., Lee S.W. Self-polymerized dopamine as an organic cathode for li-and na-ion batteries. Energy Environ. Sci. 2017;10:205–215. [Google Scholar]

- 31.Park J.H., Liu T., Kim K.C., Lee S.W., Jang S.S. Systematic molecular design of ketone derivatives of aromatic molecules for lithium-ion batteries: First-principles dft modeling. ChemSusChem. 2017;10:1584–1591. doi: 10.1002/cssc.201601730. [DOI] [PubMed] [Google Scholar]

- 32.Kang J., Kim K.C., Jang S.S. Density functional theory modeling-assisted investigation of thermodynamics and redox properties of boron-doped corannulenes for cathodes in lithium-ion batteries. J. Phys. Chem. C. 2018;122:10675–10681. [Google Scholar]

- 33.Sood P., Kim K.C., Jang S.S. Electrochemical and electronic properties of nitrogen doped fullerene and its derivatives for lithium-ion battery applications. J. Energy Chem. 2018;27:528–534. doi: 10.1002/cphc.201701171. [DOI] [PubMed] [Google Scholar]

- 34.Sood P., Kim K.C., Jang S.S. Electrochemical properties of boron-doped fullerene derivatives for lithium-ion battery applications. ChemPhysChem. 2018;19:753–758. doi: 10.1002/cphc.201701171. [DOI] [PubMed] [Google Scholar]

- 35.Zhu Y., Kim K.C., Jang S.S. Boron-doped coronenes with high redox potential for organic positive electrodes in lithium-ion batteries: a first-principles density functional theory modeling study. J. Mater. Chem. A Mater. 2018;6:10111–10120. [Google Scholar]

- 36.Ng S.-Y., Lin L., Soh B.S., Stanton L.W. Long noncoding rnas in development and disease of the central nervous system. Trends Genet. 2013;29:461–468. doi: 10.1016/j.tig.2013.03.002. [DOI] [PubMed] [Google Scholar]

- 37.Tan L., Yu J.-T., Hu N., Tan L. Non-coding rnas in alzheimer’s disease. Mol. Neurobiol. 2013;47:382–393. doi: 10.1007/s12035-012-8359-5. [DOI] [PubMed] [Google Scholar]

- 38.Luo Q., Chen Y. Long noncoding rnas and alzheimer’s disease. Clin. Interv. Aging. 2016;11:867–872. doi: 10.2147/CIA.S107037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Congrains A., Kamide K., Oguro R., Yasuda O., Miyata K., Yamamoto E., Kawai T., Kusunoki H., Yamamoto H., Takeya Y., et al. Genetic variants at the 9p21 locus contribute to atherosclerosis through modulation of anril and cdkn2a/b. Atherosclerosis. 2012;220:449–455. doi: 10.1016/j.atherosclerosis.2011.11.017. [DOI] [PubMed] [Google Scholar]

- 40.Xue Z., Hennelly S., Doyle B., Gulati A.A., Novikova I.V., Sanbonmatsu K.Y., Boyer L.A. A g-rich motif in the lncrna braveheart interacts with a zinc-finger transcription factor to specify the cardiovascular lineage. Mol. Cell. 2016;64:37–50. doi: 10.1016/j.molcel.2016.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Yang G., Lu X., Yuan L. Lncrna: a link between rna and cancer. Biochim. Biophys. Acta. 2014;1839:1097–1109. doi: 10.1016/j.bbagrm.2014.08.012. [DOI] [PubMed] [Google Scholar]

- 42.Shi X., Sun M., Liu H., Yao Y., Kong R., Chen F., Song Y. A critical role for the long non-coding rna gas5 in proliferation and apoptosis in non-small-cell lung cancer. Mol. Carcinog. 2015;54:E1–E12. doi: 10.1002/mc.22120. [DOI] [PubMed] [Google Scholar]

- 43.Peng W.-X., Koirala P., Mo Y.-Y. Lncrna-mediated regulation of cell signaling in cancer. Oncogene. 2017;36:5661–5667. doi: 10.1038/onc.2017.184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Carlevaro-Fita J., Lanzós A., Feuerbach L., Hong C., Mas-Ponte D., Pedersen J.S., Johnson R. Cancer lncrna census reveals evidence for deep functional conservation of long noncoding rnas in tumorigenesis. Commun. Biol. 2020;3:1–16. doi: 10.1038/s42003-019-0741-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Wang L., Park H.J., Dasari S., Wang S., Kocher J.-P., Li W. Cpat: Coding-potential assessment tool using an alignment-free logistic regression model. Nucleic Acids Res. 2013;41:e74. doi: 10.1093/nar/gkt006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Li A., Zhang J., Zhou Z. Plek: a tool for predicting long non-coding rnas and messenger rnas based on an improved k-mer scheme. BMC Bioinf. 2014;15:311–410. doi: 10.1186/1471-2105-15-311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Kang Y.-J., Yang D.-C., Kong L., Hou M., Meng Y.-Q., Wei L., Gao G. Cpc2: a fast and accurate coding potential calculator based on sequence intrinsic features. Nucleic Acids Res. 2017;45:W12–W16. doi: 10.1093/nar/gkx428. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Han S., Liang Y., Ma Q., Xu Y., Zhang Y., Du W., Wang C., Li Y. Lncfinder: an integrated platform for long non-coding rna identification utilizing sequence intrinsic composition, structural information and physicochemical property. Briefings Bioinf. 2019;20:2009–2027. doi: 10.1093/bib/bby065. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Frankish A., Diekhans M., Jungreis I., Lagarde J., Loveland J.E., Mudge J.M., Sisu C., Wright J.C., Armstrong J., Barnes I., et al. Gencode 2021. Nucleic Acids Res. 2021;49:D916–D923. doi: 10.1093/nar/gkaa1087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Li W., Godzik A. Cd-hit: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 2006;22:1658–1659. doi: 10.1093/bioinformatics/btl158. [DOI] [PubMed] [Google Scholar]

- 51.Dempster A.P., Laird N.M., Rubin D.B. Maximum likelihood from incomplete data via the em algorithm. J. Roy. Stat. Soc. B. 1977;39:1–22. [Google Scholar]

- 52.Woo H.-M., Allam O., Chen J., Jang S.S., Yoon B.-J. Optimal high-throughput virtual screening pipeline for efficient selection of redox-active organic materials. iScience. 2022;26:105735. doi: 10.1016/j.isci.2022.105735. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Woo H.-M., Qian X., Jha S., Alexander F., Dougherty E., Yoon B.-J. Zenodo; 2023. Alexpecial/OCC: Patterns. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Storn R., Price K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 1997;11:341–359. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

-

•

All raw RNA sequences that were used in this study are publicly available at GENCODE: https://www.gencodegenes.org/human/release_38.html.49

-

•

Python source code and the pre-processed RNA sequences are publicly available at Zenodo: https://doi.org/10.5281/zenodo.8392685.53