Abstract

Background: Expression proteomics involves the global evaluation of protein abundances within a system. In turn, differential expression analysis can be used to investigate changes in protein abundance upon perturbation to such a system.

Methods: Here, we provide a workflow for the processing, analysis and interpretation of quantitative mass spectrometry-based expression proteomics data. This workflow utilizes open-source R software packages from the Bioconductor project and guides users end-to-end and step-by-step through every stage of the analyses. As a use-case we generated expression proteomics data from HEK293 cells with and without a treatment. Of note, the experiment included cellular proteins labelled using tandem mass tag (TMT) technology and secreted proteins quantified using label-free quantitation (LFQ).

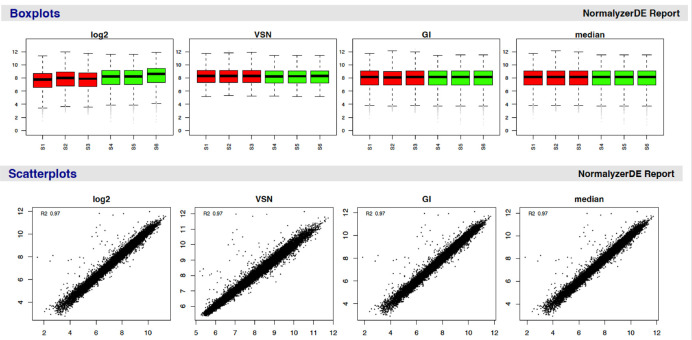

Results: The workflow explains the software infrastructure before focusing on data import, pre-processing and quality control. This is done individually for TMT and LFQ datasets. The application of statistical differential expression analysis is demonstrated, followed by interpretation via gene ontology enrichment analysis.

Conclusions: A comprehensive workflow for the processing, analysis and interpretation of expression proteomics is presented. The workflow is a valuable resource for the proteomics community and specifically beginners who are at least familiar with R who wish to understand and make data-driven decisions with regards to their analyses.

Keywords: Bioconductor, QFeatures, proteomics, shotgun proteomics, bottom-up proteomics, differential expression, mass spectrometry, quality control, data processing, limma

Introduction

Proteins are responsible for carrying out a multitude of biological tasks, implementing cellular functionality and determining phenotype. Mass spectrometry (MS)-based expression proteomics allows protein abundance to be quantified and compared between samples. In turn, differential protein abundance can be used to explore how biological systems respond to a perturbation. Many research groups have applied such methodologies to understand mechanisms of disease, elucidate cellular responses to external stimuli, and discover diagnostic biomarkers (see Refs. 1– 3 for recent examples). As the potential of proteomics continues to be realised, there is a clear need for resources demonstrating how to deal with expression proteomics data in a robust and standardised manner.

The data generated during an expression proteomics experiment are complex, and unfortunately there is no one-size-fits-all method for the processing and analysis of such data. The reason for this is two-fold. Firstly, there are a wide range of experimental methods that can be used to generate expression proteomics data. Researchers can analyse full-length proteins (top-down proteomics) or complete an enzymatic digestion and analyse the resulting peptides. This proteolytic digestion can be either partial (middle-down proteomics) or complete (bottom-up proteomics). The latter approach is most commonly used as peptides have a more favourable ionisation capacity, predictable fragmentation patterns, and can be separated via reversed phase liquid chromatography, ultimately making them more compatible with MS. 4 Within bottom-up proteomics, the relative quantitation of peptides can be determined using one of two approaches: (1) label-free or (2) label-based quantitation. Moreover, the latter can be implemented with a number of different peptide labelling chemistries, for example, using tandem mass tag (TMT), stable-isotope labelling by amino acids in cell culture (SILAC), isobaric tags for relative and absolute quantitation (iTRAQ), among others. 5 MS analysis can also be used in either data-dependent or data-independent acquisition (DDA or DIA) mode. 6 , 7 Although all of these experimental methods typically result in a similar output, a matrix of quantitative values, the data are different and must be treated as such. Secondly, data processing is dependent upon the experimental goal and biological question being asked.

Here, we provide a step-by-step workflow for processing, analysing and interpreting expression proteomics data derived from a bottom-up experiment using DDA and either LFQ or TMT label-based peptide quantitation. We outline how to process the data starting from a peptide spectrum match (PSM)- or peptide- level .txt file. Such files are the outputs of most major third party search software (e.g. Proteome Discoverer, MaxQuant, FragPipe). We begin with data import and then guide users through the stages of data processing including data cleaning, quality control filtering, management of missing values, imputation, and aggregation to protein-level. Finally, we finish with how to discover differentially abundant proteins and carry out biological interpretation of the resulting data. The latter will be achieved through the application of gene ontology (GO) enrichment analysis. Hence, users can expect to generate lists of proteins that are significantly up- or downregulated in their system of interest, as well as the GO terms that are significantly over-represented in these proteins.

Using the R statistical programming environment 8 we make use of several state-of-the-art packages from the open-source, open-development Bioconductor project 9 to analyse use-case expression proteomics datasets 10 from both LFQ and label-based technologies.

Package installation

In this workflow we make use of open-source software from the R Bioconductor 9 project. The Bioconductor initiative provides R software packages dedicated to the processing of high-throughput complex biological data. Packages are open-source, well-documented and benefit from an active community of developers. We recommend users to download the RStudio integrated development environment (IDE) which provides a graphical interface to R programming language.

Detailed instructions for the installation of Bioconductor packages are documented on the Bioconductor Installation page. The main packages required for this workflow are installed using the code below.

if (!require("BiocManager", quietly = TRUE)) { install.packages("BiocManager") } BiocManager::install(c("QFeatures", "ggplot2", "stringr" "NormalyzerDE", "corrplot", "Biostrings", "limma", "impute", "dplyr", "tibble", "org.Hs.eg.db", "clusterProfiler", "enrichplot"))

After installation, each package must be loaded before it can be used in the R session. This is achieved via the library function. For example, to load the QFeatures package one would type library("QFeatures") after installation. Here we load all packages included in this workflow.

library("QFeatures") library("ggplot2") library("stringr") library("dplyr") library("tibble") library("NormalyzerDE") library("corrplot") library("Biostrings") library("limma") library("org.Hs.eg.db") library("clusterProfiler") library("enrichplot")

The use-case: exploring changes in protein abundance in HEK293 cells upon perturbation

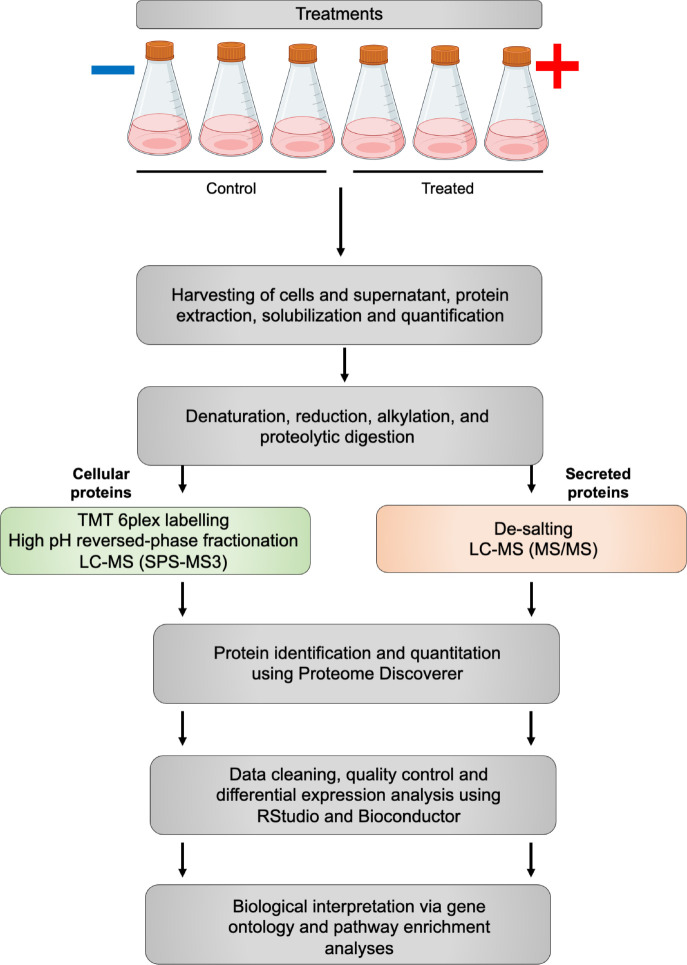

As a use-case, we analyse two quantitative proteomics datasets derived from a single experiment. The aim of the experiment was to reveal the differential abundance of proteins in HEK293 cells upon a particular treatment, the exact details of which are anonymised for the purpose of this workflow. An outline of the experimental method is provided in Figure 1.

Figure 1. A schematic summary of the experimental protocol used to generate the use-case data.

Briefly, HEK293 cells were either (i) left untreated, or (ii) provided with the treatment of interest. These two conditions are referred to as ‘control’ and ‘treated’, respectively. Each condition was evaluated in triplicate. At 96-hours post-treatment, samples were collected and separated into cell pellet and supernatant fractions containing cellular and secreted proteins, respectively. Both fractions were denatured, alkylated and digested to peptides using trypsin.

The supernatant fractions were de-salted and analysed over a two-hour gradient in an Orbitrap Fusion™ Lumos™ Tribrid™ mass spectrometer coupled to an UltiMate™ 3000 HPLC system (Thermo Fisher Scientific). LFQ was achieved at the MS1 level based on signal intensities. Cell pellet fractions were labelled using TMT technology before being pooled and subjected to high pH reversed-phase peptide fractionation giving a total of 8 fractions. As before, each fraction was analysed over a two-hour gradient in an Orbitrap Fusion™ Lumos™ Tribrid™ mass spectrometer coupled to an UltiMate™ 3000 HPLC system (Thermo Fisher Scientific). To improve the accuracy of the quantitation of TMT-labelled peptides, synchronous precursor selection (SPS)-MS3 data acquisition was employed. 11 , 12 Of note, TMT labelling of cellular proteins was achieved using a single TMT6plex. Hence, this workflow will not include guidance on multi-batch TMT effects or the use of internal reference scaling. For more information about the use of multiple TMTplexes users are directed to Refs. 13, 14.

The cell pellet and supernatant datasets were handled independently and we take advantage of this to discuss the processing of TMT-labelled and LFQ proteomics data. In both cases, the raw MS data were processed using Proteome Discoverer v2.5 (Thermo Fisher Scientific). While the focus in the workflow presented below is differential protein expression analysis, the data processing and quality control steps described here are applicable to any TMT or LFQ proteomics dataset. Importantly, however, the experimental aim will influence data-guided decisions and the considerations discussed here likely differ from those of spatial proteomics, for example.

Downloading the data

The files required for this workflow can be found deposited to the ProteomeXchange Consortium via the PRIDE 15 , 16 partner repository with the dataset identifier PXD041794, Zenodo at http://doi.org/10.5281/zenodo.7837375 and at the Github repository https://github.com/CambridgeCentreForProteomics/f1000_expression_proteomics/. Users are advised to download these files into their current working directory. In R the setwd function can be used to specify a working directory, or if using RStudio one can use the Session -> Set Working Directory menu.

The infrastructure: QFeatures and SummarizedExperiments

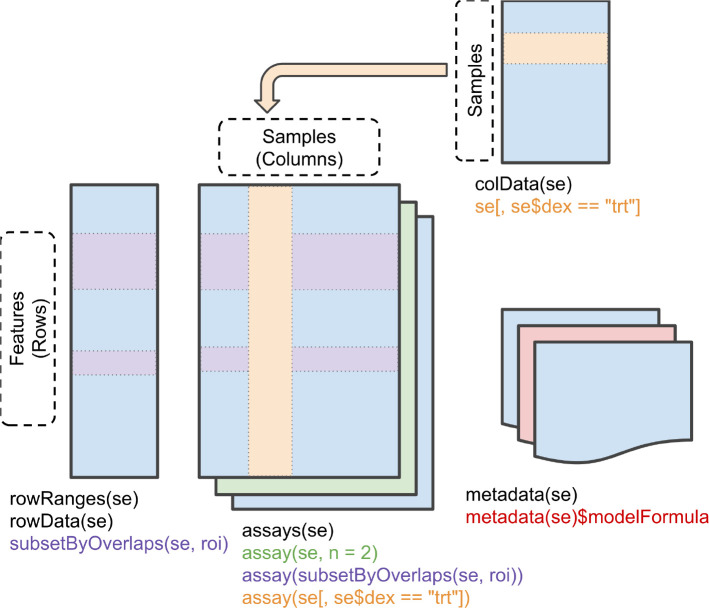

To be able to conveniently track each step of this workflow, users should make use of the Quantitative features for mass spectrometry, or QFeatures, Bioconductor package. 17 Prior to utilising the QFeatures infrastructure, it is first necessary to understand the structure of a SummarizedExperiment 18 object as QFeatures objects are based on the SummarizedExperiment class. A SummarizedExperiment, often referred to as an SE, is a data container and S4 object comprised of three components: (1) the colData (column data) containing sample metadata, (2) the rowData containing data features, and (3) the assay storing quantitation data, as illustrated in Figure 2. The sample metadata includes annotations such as condition and replicate, and can be accessed using the colData function. Data features, accessed via the rowData function, represent information derived from the identification search. Examples include peptide sequence, master protein accession, and confidence scores. Finally, quantitative data is stored in the assay slot. These three independent data structures are neatly stored within a single SummarizedExperiment object.

Figure 2. A graphic representation of the SummarizedExperiment (SE) object structure.

Figure reproduced from the SummarizedExperiment package 18 vignette with permission.

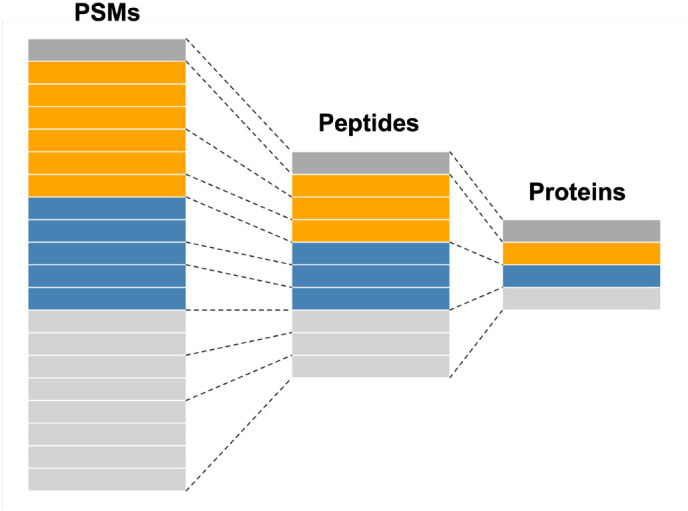

A QFeatures object holds each level of quantitative proteomics data, namely (but not limited to) the PSM, peptide and protein-level data. Each level of the data is stored as its own SummarizedExperiment within a single QFeatures object. The lowest level data e.g. PSM, is first imported into a QFeatures object before aggregating upward towards protein-level ( Figure 3). During this process of aggregation, QFeatures maintains the hierarchical links between quantitative levels whilst allowing easy access to all data levels for individual proteins of interest. This key aspect of QFeatures will be exemplified throughout this workflow. Additional guidance on the use of QFeatures can be found in Ref. 17. For visualisation of the data, all plots are generated using standard ggplot functionality, but could equally be produced using base R.

Figure 3. A graphic representation of the QFeatures object structure showing the relationship between assays.

Figure modified from the QFeatures 17 vignette with permission.

Processing and analysing quantitative TMT data

First, we provide a workflow for the processing and quality control of quantitative TMT-labelled data. As outlined above, the cell pellet fractions of triplicate control and treated HEK293 cells were labelled using a TMT6plex. Labelling was as outlined in Table 1.

Table 1. TMT labelling strategy in the use-case experiment.

| Sample name | Condition | Replicate | Tag |

|---|---|---|---|

| S1 | Treated | 1 | TMT128 |

| S2 | Treated | 2 | TMT127 |

| S3 | Treated | 3 | TMT131 |

| S4 | Control | 1 | TMT129 |

| S5 | Control | 2 | TMT126 |

| S6 | Control | 3 | TMT130 |

Identification search of raw data

The first processing step in any MS-based proteomics experiment involves an identification search using the raw data. The aim of this search is to identify which peptide sequences, and therefore proteins, correspond to the raw spectra output from the mass spectrometer. Several third-party software exist to facilitate identification searches of raw MS data but ultimately the output of any search is a list of PSMs, peptides and protein identifications along with their corresponding quantification data.

The use-case data presented here was processed using Proteome Discoverer 2.5 and additional information about this search is provided in an appendix in the GitHub repository https://github.com/CambridgeCentreForProteomics/f1000_expression_proteomics. Further, we provide template workflows for both the processing and consensus steps of the Proteome Discoverer identification runs. It is also possible to determine several of the key parameter settings during the preliminary data exploration. This step will be particularly important for those using publicly available data without detailed knowledge of the identification search parameters. For now, we simply export the PSM-level .txt file from the Proteome Discoverer output.

Importing data into R and creating a QFeatures object

Data cleaning, exploration and filtering at the PSM-level is performed in R using QFeatures. The function readQFeatures is used to import the PSM-level .txt file. As the cell pellet TMT data we will use is derived from one TMT6plex, only one PSM-level .txt file needs to be imported. This file should be stored within the users working directory.

The columns containing quantitative data also need to be identified before import. To check the column names we use names and read.delim (the equivalent for a .csv file would be read.csv). In the current experiment the order of TMT labels was randomised in an attempt to minimise the effect of TMT channel leakage. For ease of grouping and simplification of downstream visualisation, samples are re-ordered during the import step. This is done by creating a vector containing the sample column names in their correct order. If samples are already in the desired order, the vector can be created by simply indexing the quantitative columns.

## Locate the PSM .txt file cp_psm <- "cell_pellet_tmt_results_psms.txt" ## Identify columns containing quantitative data cp_psm %>% read.delim() %>% names()

## [1] "PSMs.Workflow.ID" "PSMs.Peptide.ID" ## [3] "Checked" "Tags" ## [5] "Confidence" "Identifying.Node.Type" ## [7] "Identifying.Node" "Search.ID" ## [9] "Identifying.Node.No" "PSM.Ambiguity" ## [11] "Sequence" "Annotated.Sequence" ## [13] "Modifications" "Number.of.Proteins" ## [15] "Master.Protein.Accessions" "Master.Protein.Descriptions" ## [17] "Protein.Accessions" "Protein.Descriptions" ## [19] "Number.of.Missed.Cleavages" "Charge" ## [21] "Original.Precursor.Charge" "Delta.Score" ## [23] "Delta.Cn" "Rank" ## [25] "Search.Engine.Rank" "Concatenated.Rank" ## [27] "mz.in.Da" "MHplus.in.Da" ## [29] "Theo.MHplus.in.Da" "Delta.M.in.ppm" ## [31] "Delta.mz.in.Da" "Ions.Matched" ## [33] "Matched.Ions" "Total.Ions" ## [35] "Intensity" "Activation.Type" ## [37] "NCE.in.Percent" "MS.Order" ## [39] "Isolation.Interference.in.Percent" "SPS.Mass.Matches.in.Percent" ## [41] "Average.Reporter.SN" "Ion.Inject.Time.in.ms" ## [43] "RT.in.min" "First.Scan" ## [45] "Last.Scan" "Master.Scans" ## [47] "Spectrum.File" "File.ID" ## [49] "Abundance.126" "Abundance.127" ## [51] "Abundance.128" "Abundance.129" ## [53] "Abundance.130" "Abundance.131" ## [55] "Quan.Info" "Peptides.Matched" ## [57] "XCorr" "Number.of.Protein.Groups" ## [59] "Percolator.q.Value" "Percolator.PEP" ## [61] "Percolator.SVMScore"

## Store location of quantitative columns in a vector in the desired order abundance_ordered <- c("Abundance.128", "Abundance.127", "Abundance.131", "Abundance.129", "Abundance.126", "Abundance.130")

Now that the necessary file and its quantitative data columns have been identified, we can pass this to the readQFeatures function and provide these two pieces of information. We also specify that the file is tab-delimited by including sep = “\t” (similarly you would use sep = “,” for a .csv file). Of note, the readQFeatures function can also take fnames as an argument to specify a column to be used as the row names of the imported object. Whilst previous QFeatures vignettes used the “Sequence” or “Annotated.Sequence” as row names, we advise against this because of the presence of PSMs matched to the same peptide sequence with different modifications. In such cases, multiple rows would have the same name forcing the readQFeatures function to output a “making assay row names unique” message and add an identifying number to the end of each duplicated row name. These sequences would then be considered as unique during the aggregation of PSM to peptide, thus resulting in two independent peptide-level quantitation values rather than one. Therefore, we do not pass a fnames argument and the row names automatically become indices. Finally, we pass the name argument to indicate the type of data added.

## Create QFeatures cp_qf <- readQFeatures(table = cp_psm, ecol = abundance_ordered, sep = "\t", name = "psms_raw")

Accessing the QFeatures infrastructure

As outlined above, a QFeatures data object is a list of SummarizedExperiment objects. As such, an individual SummarizedExperiment can be accessed using the standard double bracket nomenclature, as demonstrated in the code chunk below.

## Index using position cp_qf[[1]]

## class: SummarizedExperiment ## dim: 48832 6 ## metadata(0): ## assays(1): ” ## rownames(48832): 1 2 … 48831 48832 ## rowData names(55): PSMs.Workflow.ID PSMs.Peptide.ID … Percolator.PEP ## Percolator.SVMScore ## colnames(6): Abundance.128 Abundance.127 … Abundance.126 ## Abundance.130 ## colData names(0):

## Index using name cp_qf[["psms_raw"]]

## class: SummarizedExperiment ## dim: 48832 6 ## metadata(0): ## assays(1): ” ## rownames(48832): 1 2 … 48831 48832 ## rowData names(55): PSMs.Workflow.ID PSMs.Peptide.ID … Percolator.PEP ## Percolator.SVMScore ## colnames(6): Abundance.128 Abundance.127 … Abundance.126 ## Abundance.130 ## colData names(0):

A summary of the data contained in the slots is printed to the screen. To retrieve the rowData, colData or assay data from a particular SummarizedExperiment within a QFeatures object users can make use of the rowData, colData and assay functions. For plotting or data transformation it is necessary to convert to a data.frame or tibble.

## Access feature information with rowData ## The output should be converted to data.frame/tibble for further processing cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% summarise(mean_intensity = mean(Intensity))

## # A tibble: 1 x 1 ## mean_intensity ## <dbl> ## 1 13281497.

Adding metadata

Having imported the data, each sample is first annotated with its TMT label, sample reference and condition. As this information is experimental metadata, it is added to the colData slot. It is also useful to clean up sample names such that they are short, intuitive and informative. This is done by editing the colnames. These steps may not always be necessary depending upon the identification search output.

## Clean sample names colnames(cp_qf[["psms_raw"]]) <- paste0("S", 1:6) ## Add sample info as colData to QFeatures object cp_qf$label <- c("TMT128", "TMT127", "TMT131", "TMT129", "TMT126", "TMT130") cp_qf$sample <- paste0("S", 1:6) cp_qf$condition <- rep(c("Treated", "Control"), each = 3) ## Verify colData(cp_qf)

## DataFrame with 6 rows and 3 columns ## label sample condition ## <character> <character> <character> ## S1 TMT128 S1 Treated ## S2 TMT127 S2 Treated ## S3 TMT131 S3 Treated ## S4 TMT129 S4 Control ## S5 TMT126 S5 Control ## S6 TMT130 S6 Control

## Assign the colData to first assay as well colData(cp_qf[["psms_raw"]]) <- colData(cp_qf)

Preliminary data exploration

As well as cleaning and annotating the data, it is always advisable to check that the import worked and that the data looks as expected. Further, preliminary exploration of the data can provide an early sign of whether the experiment and subsequent identification search were successful. Importantly, however, the names of key parameters will vary depending on the software used, and will likely change over time. Users will need to be aware of this and modify the code in this workflow accordingly.

## Check what information has been imported cp_qf[["psms_raw"]] %>% rowData() %>% colnames()

## [1] "PSMs.Workflow.ID" "PSMs.Peptide.ID" ## [3] "Checked" "Tags" ## [5] "Confidence" "Identifying.Node.Type" ## [7] "Identifying.Node" "Search.ID" ## [9] "Identifying.Node.No" "PSM.Ambiguity" ## [11] "Sequence" "Annotated.Sequence" ## [13] "Modifications" "Number.of.Proteins" ## [15] "Master.Protein.Accessions" "Master.Protein.Descriptions" ## [17] "Protein.Accessions" "Protein.Descriptions" ## [19] "Number.of.Missed.Cleavages" "Charge" ## [21] "Original.Precursor.Charge" "Delta.Score" ## [23] "Delta.Cn" "Rank" ## [25] "Search.Engine.Rank" "Concatenated.Rank" ## [27] "mz.in.Da" "MHplus.in.Da" ## [29] "Theo.MHplus.in.Da" "Delta.M.in.ppm" ## [31] "Delta.mz.in.Da" "Ions.Matched" ## [33] "Matched.Ions" "Total.Ions" ## [35] "Intensity" "Activation.Type" ## [37] "NCE.in.Percent" "MS.Order" ## [39] "Isolation.Interference.in.Percent" "SPS.Mass.Matches.in.Percent" ## [41] "Average.Reporter.SN" "Ion.Inject.Time.in.ms" ## [43] "RT.in.min" "First.Scan" ## [45] "Last.Scan" "Master.Scans" ## [47] "Spectrum.File" "File.ID" ## [49] "Quan.Info" "Peptides.Matched" ## [51] "XCorr" "Number.of.Protein.Groups" ## [53] "Percolator.q.Value" "Percolator.PEP" ## [55] "Percolator.SVMScore"

## Find out how many PSMs are in the data cp_qf[["psms_raw"]] %>% dim()

## [1] 48832 6

original_psms <- cp_qf[["psms_raw"]] %>% nrow() %>% as.numeric()

We can see that the original data includes 48832 PSMs across the 6 samples. It is also useful to make note of how many peptides and proteins the raw PSM data corresponds to, and to track how many we remove during the subsequent filtering steps. This can be done by checking how many unique entries are located within the “Sequence” and “Master.Protein.Accessions” for peptides and proteins, respectively. Of note, searching for unique peptide sequences means that the number of peptides does not include duplicated sequences with different modifications.

## Find out how many peptides and master proteins are in the data original_peps <- cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Sequence) %>% unique() %>% length() %>% as.numeric() original_prots <- cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Master.Protein.Accessions) %>% unique() %>% length() %>% as.numeric() print(c(original_peps, original_prots))

## [1] 25969 5040

Hence, the output of the identification search contains 48832 PSMs corresponding to 25969 peptide sequences and 5040 master proteins. Finally, we confirm that the identification search was carried out as expected. For this, we print summaries of the key search parameters using the table function for discrete parameters and summary for those which are continuous. This is also helpful for users who are analysing publicly available data and have limited knowledge about the identification search parameters.

## Check missed cleavages cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Number.of.Missed.Cleavages) %>% table()

## . ## 0 1 2 ## 46164 2592 76

## Check precursor mass tolerance cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Delta.M.in.ppm) %>% summary()

## Min. 1st Qu. Median Mean 3rd Qu. Max. ## -8.9300 -0.6000 0.3700 0.6447 1.3100 9.6700

## Check fragment mass tolerance cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Delta.mz.in.Da) %>% summary()

## Min. 1st Qu. Median Mean 3rd Qu. Max. ## -0.0110400 -0.0004100 0.0002500 0.0006812 0.0010200 0.0135100

## Check PSM confidence allocations cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% pull(Confidence) %>% table()

## . ## High ## 48832

Experimental quality control checks

Experimental quality control of TMT-labelled quantitive proteomics data takes place in two steps: (1) assessment of the raw mass spectrometry data, and (2) evaluation of TMT labelling efficiency.

Quality control of the raw mass spectrometry data

Having taken an initial look at the output of the identification search, it is possible to create some simple plots to inspect the raw mass spectrometry data. Such plots are useful in revealing problems that may have occurred during the mass spectrometry run but are far from extensive. Users who wish to carry out a more in-depth evaluation of the raw mass spectrometry data may benefit from use of the Spectra Bioconductor package which allows for visualisation and exploration of raw chromatograms and spectra, among other features. 19

The first plot we generate looks at the delta precursor mass, that is the difference between observed and estimated precursor mass, across retention time. Importantly, exploration of this raw data feature can only be done when using the raw data prior to recalibration. For users of Proteome Discoverer, this means using the spectral files node rather than the spectral files recalibration node.

## Generate scatter plot of mass accuracy cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = RT.in.min, y = Delta.M.in.ppm)) + geom_point(size = 0.5, shape = 4) + geom_hline(yintercept = 5, linetype = "dashed", color = "red") + geom_hline(yintercept = -5, linetype = "dashed", color = "red") + labs(x = "RT (min)", y = "Delta precursor mass (ppm)") + scale_x_continuous(limits = c(0, 120), breaks = seq(0, 120, 20)) + scale_y_continuous(limits = c(-10, 10), breaks = c(-10, -5, 0, 5, 10)) + ggtitle("PSM retention time against delta precursor mass") + theme_bw()

Since we applied a precursor mass tolerance of 10 ppm during the identification search, all of the PSMs are within ppm. Ideally, however, we want the majority of the data to be within ppm since smaller delta masses correspond to a greater rate of correct peptide identifications. From the graph we have plotted we can see that indeed the majority of PSMs are within ppm. If users find that too many PSMs are outside of the desired ppm, it is advisable to check the calibration of the mass spectrometer.

The second quality control plot of raw data is that of MS2 ion inject time across the retention time gradient. Here, it is desirable to achieve an average MS2 injection time of 50 ms or less, although the exact target threshold will depend upon the sample load. If the average ion inject time is longer than desired, then the ion transfer tube and/or front end optics of the instrument may require cleaning.

## Generate scatter plot of ion inject time across retention time cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = RT.in.min, y = Ion.Inject.Time.in.ms)) + geom_point(size = 0.5, shape = 4) + geom_hline(yintercept = 50, linetype = "dashed", color = "red") + labs(x = "RT (min)", y = "Ion inject time (ms)") + scale_x_continuous(limits = c(0, 120), breaks = seq(0, 120, 20)) + scale_y_continuous(limits = c(0, 60), breaks = seq(0, 60, 10)) + ggtitle("PSM retention time against ion inject time") + theme_bw()

From this plot we can see that whilst there is a high density of PSMs at low inject times, there are also many data points found at the 50 ms threshold. This indicates that by increasing the time allowed for ions to accumulate in the ion trap, the number of PSMs could also have been increased. Finally, we inspect the distribution of PSMs across both the ion injection time and retention time by plotting histograms.

## Plot histogram of PSM ion inject time cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = Ion.Inject.Time.in.ms)) + geom_histogram(binwidth = 1) + labs(x = "Ion inject time (ms)", y = "Frequency") + scale_x_continuous(limits = c(-0.5, 52.5), breaks = seq(0, 50, 5)) + ggtitle("PSM frequency across ion injection time") + theme_bw()

## Plot histogram of PSM retention time cp_qf[["psms_raw"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = RT.in.min)) + geom_histogram(binwidth = 1) + labs(x = "RT (min)", y = "Frequency") + scale_x_continuous(breaks = seq(0, 120, 20)) + ggtitle("PSM frequency across retention time") + theme_bw()

The four plots that we have generated look relatively standard with no obvious problems indicated. Therefore, we continue by evaluating the quality of the processed data.

Checking the efficiency of TMT labelling

The most fundamental data quality control step in a TMT experiment is to check the TMT labelling efficiency. TMT labels react with amine groups present at the peptide N-terminus as well as the side chain of lysine (K) residues. Of note, lysine residues can be TMT modified regardless of whether they are present at the C-terminus of a trypic peptide or internally following miscleavage.

To evaluate the TMT labelling efficiency, a separate identification search of the raw data was completed with lysine (K) and peptide N-termini TMT labels considered as dynamic modifications rather than static. No additional residues (S or T) were evaluated for labelling in the search. This allows the search engine to assess the presence of both the modified (TMT labelled) and unmodified (original) forms of each peptide. The relative proportions of modified and unmodified peptides can then be used to calculate the TMT labelling efficiency. To demonstrate how to check for TMT labelling efficiency, only two of the eight fractions were utilised for this search.

As we will only look at TMT efficiency at the PSM-level, here we upload the .txt file directly as a SummarizedExperiment rather than a QFeatures object. This is done using the readSummarizedExperiment function and the same arguments as those in readQFeatures.

## Locate the PSM .txt file tmt_psm <- "cell_pellet_tmt_efficiency_psms.txt" ## Identify columns containing quantitative data tmt_psm %>% read.delim() %>% names()

## [1] "PSMs.Workflow.ID" "PSMs.Peptide.ID" ## [3] "Checked" "Tags" ## [5] "Confidence" "Identifying.Node.Type" ## [7] "Identifying.Node" "Search.ID" ## [9] "Identifying.Node.No" "PSM.Ambiguity" ## [11] "Sequence" "Annotated.Sequence" ## [13] "Modifications" "Number.of.Proteins" ## [15] "Master.Protein.Accessions" "Master.Protein.Descriptions" ## [17] "Protein.Accessions" "Protein.Descriptions" ## [19] "Number.of.Missed.Cleavages" "Charge" ## [21] "Original.Precursor.Charge" "Delta.Score" ## [23] "Delta.Cn" "Rank" ## [25] "Search.Engine.Rank" "Concatenated.Rank" ## [27] "mz.in.Da" "MHplus.in.Da" ## [29] "Theo.MHplus.in.Da" "Delta.M.in.ppm" ## [31] "Delta.mz.in.Da" "Ions.Matched" ## [33] "Matched.Ions" "Total.Ions" ## [35] "Intensity" "Activation.Type" ## [37] "NCE.in.Percent" "MS.Order" ## [39] "Isolation.Interference.in.Percent" "SPS.Mass.Matches.in.Percent" ## [41] "Average.Reporter.SN" "Ion.Inject.Time.in.ms" ## [43] "RT.in.min" "First.Scan" ## [45] "Last.Scan" "Master.Scans" ## [47] "Spectrum.File" "File.ID" ## [49] "Abundance.126" "Abundance.127" ## [51] "Abundance.128" "Abundance.129" ## [53] "Abundance.130" "Abundance.131" ## [55] "Quan.Info" "Peptides.Matched" ## [57] "XCorr" "Number.of.Protein.Groups" ## [59] "Contaminant" "Percolator.q.Value" ## [61] "Percolator.PEP" "Percolator.SVMScore"

## Read in as a SummarizedExperiment tmt_se <- readSummarizedExperiment(table = tmt_psm, ecol = abundance_ordered, sep = "\t") ## Clean sample names colnames(tmt_se) <- paste0("S", 1:6) ## Add sample info as colData to QFeatures object tmt_se$label <- c("TMT128", "TMT127", "TMT131", "TMT129", "TMT126", "TMT130") tmt_se$sample <- paste0("S", 1:6) tmt_se$condition <- rep(c("Treated", "Control"), each = 3)

## Verify

colData(tmt_se)

## DataFrame with 6 rows and 3 columns ## label sample condition ## <character> <character> <character> ## S1 TMT128 S1 Treated ## S2 TMT127 S2 Treated ## S3 TMT131 S3 Treated ## S4 TMT129 S4 Control ## S5 TMT126 S5 Control ## S6 TMT130 S6 Control

Information about the presence of labels is stored within the ‘Modifications’ feature of the rowData. Using this information, the TMT labelling efficiency of the experiment is calculated using the code chunks below. Users should alter this code if TMTpro reagents are being used such that “TMT6plex” is replaced by “TMTpro”.

First we consider the efficiency of peptide N-termini TMT labelling. We use the grep function to identify PSMs which are annotated as having an N-Term TMT6plex modification. We then calculate the number of PSMs with this annotation as a proportion of the total number of PSMs.

## Count the total number of PSMs tmt_total <- length(tmt_se) ## Count the number of PSMs with an N-terminal TMT modification nterm_labelled_rows <- grep("N-Term\\(TMT6plex\\)", rowData(tmt_se)$Modifications) nterm_psms_labelled <- length(nterm_labelled_rows) ## Calculate N-terminal TMT labelling efficiency efficiency_nterm <- (nterm_psms_labelled / tmt_total) * 100 efficiency_nterm %>% round(digits = 1) %>% print()

## [1] 96.8

Secondly, we consider the TMT labelling efficiency of lysine (K) residues. As mentioned above, lysine residues can be TMT labelled regardless of their position within a peptide. Hence, we here calculate lysine labelling efficiency on a per lysine residue basis.

## Count the number of lysine TMT6plex modifications in the PSM data k_tmt <- str_count(string = rowData(tmt_se)$Modifications, pattern = "K[0-9]{1,2}\\(TMT6plex\\)") %>% sum() %>% as.numeric() ## Count the number of lysine residues in the PSM data k_total <- str_count(string = rowData(tmt_se)$Sequence, pattern = "K") %>% sum() %>% as.numeric() ## Determine the percentage of TMT labelled lysines efficiency_k <- (k_tmt / k_total) * 100 efficiency_k %>% round(digits = 1) %>% print()

## [1] 98.5

Users should aim for an overall TMT labelling efficiency >90% in order to achieve reliable quantitation. In cases where labelling efficiency is towards the lower end of the acceptable range, TMT labels should be set as dynamic modifications during the final identification search, although this will increase the search space and time as well as influencing false discovery rate (FDR) calculations. A summary of the current advice from Thermo Fisher is provided in Table 2. Where labelling efficiency is calculated as being between categories, how to progress is ultimately decided by the user.

Table 2. ThermoFisher search strategy recommendations based on TMT labelling efficiency.

| N-term efficiency | K efficiency | Suggested search method |

|---|---|---|

| >98% | >98% | Both modifications as ’static’ |

| 85-95% | >98% | N-terminal modification ’dynamic’ and K modification ’static’ |

| <84% | <84% | Data not suitable for quantitation |

Since the use-case data has a sufficiently high TMT labelling efficiency, we can continue to use the output of the identification search. This search considered TMT labelling of lysines as a static modification whilst N-terminal labelling was kept as dynamic, to investigate the presence of protein N-terminal modifications.

Basic data cleaning

Being confident that the experiment and identification search were successful, we can now begin with some basic data cleaning. However, we also want to keep a copy of the raw PSM data. Therefore, we first create a second copy of the PSM SummarizedExperiment, called “psms_filtered”, and add it to the QFeatures object. This is done using the addAssay function. All changes made at the PSM-level will then only be applied to this second copy, so that we can refer back to the original data if needed.

## Extract the "psms_raw" SummarizedExperiment data_copy <- cp_qf[["psms_raw"]] ## Add copy of SummarizedExperiment cp_qf <- addAssay(x = cp_qf, y = data_copy, name = "psms_filtered") ## Verify cp_qf

## An instance of class QFeatures containing 2 assays: ## [1] psms_raw: SummarizedExperiment with 48832 rows and 6 columns ## [2] psms_filtered: SummarizedExperiment with 48832 rows and 6 columns

Of note, manually adding an assay (or SummarizedExperiment) to the QFeatures object does not automatically generate links between these assays. We will manually add the explicit links later, after we complete data cleaning and filtering.

The cleaning steps included in this section are non-specific and should be applied to all quantitative proteomics datasets. The names of key parameters will vary in data outputs from alternative third party software, however, and users should remain aware of both terminology changes over time as well as the introduction of new filters. All data cleaning steps are completed in the same way. We first determine how many rows, here PSMs, meet the conditions for removal. This is achieved by using the dplyr::count function. The unwanted rows are removed using the filterFeatures function. Since we only wish to apply the filters to the “psms_filtered” level, we specify this by using the i = argument. If this argument is not used, filterFeatures will remove features from all assays within a QFeatures object.

Removing PSMs not matched to a master protein

The first common cleaning step we carry out is the removal of PSMs that have not been assigned to a master protein during the identification search. This can happen when the search software is unable to resolve conflicts caused by the presence of the isobaric amino acids leucine and isoleucine. Before implementing the filter, it is useful to find out how many PSMs we expect to remove. This is easily done by using the dplyr::count on the master protein column. Any master proteins that return TRUE will be removed by filtering. If this returns no TRUE values, users should move on to the next filtering step without removing rows as this will introduce an error.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Master.Protein.Accessions == "")

## # A tibble: 2 x 2 ## ‘Master.Protein.Accessions == ""‘ n ## <lgl> <int> ## 1 FALSE 48660 ## 2 TRUE 172

For users who wish to explicitly track the process of data cleaning, the code chunk below demonstrates how to print a message containing the number of features removed.

paste("Removing", length(which(rowData( cp_qf[["psms_filtered"]])$Master.Protein.Accessions == "")), "PSMs without a master protein accession") %>% message()

## Removing 172 PSMs without a master protein accession

This code could be adapted to each cleaning and filtering step. To maintain simplicity of this workflow, we will not print explicit messages at each step. Instead, the decision to do so is left to the user.

## Remove PSMs without a master protein accession using filterFeatures cp_qf <- cp_qf %>% filterFeatures(~ !Master.Protein.Accessions == "", i = "psms_filtered")

Removing PSMs matched to a contaminant protein

Next we remove PSMs corresponding to contaminant proteins. Such proteins can be introduced intentionally as reagents during sample preparation, as is the case for digestive enzymes, or accidentally, as seen with human keratins derived from skin and hair. Since these proteins do not contribute to the biological question being asked and it is standard practice to remove them from the data. This is done by using a carefully curated, sample-specific contaminant database. Critically, the database used for filtering should be the same one that was used during the identification search. Whilst it is possible to remove contaminants using the filterFeatures function on a contaminants annotation column (as per the QFeatures processing vignette), we demonstrate how to filter using only contaminant protein accessions for users who do not have contaminant annotations within their identification data.

For this experiment, a contaminant database from Ref. 20 was used. The .fasta file for this database is available at the Hao Group’s Github Repository for Protein Contaminant Libraries for DDA and DIA Proteomics and specifically can be found at https://github.com/HaoGroup-ProtContLib/Protein-Contaminant-Libraries-for-DDA-and-DIA-Proteomics/tree/main/Universal%20protein%20contaminant%20FASTA. Here, we import this file using the fasta.index function from the Biostrings package. 21 This function requires a file path to the .fasta file and then asks users to specify the sequence type. In this case we have amino acid sequences so pass seqtype = "AA". The function returns a data.frame with one row per FASTA entry. We then can extract the protein accessions from the fasta file. Users will need to alter the below code according to the contaminant file used.

## Load Hao group .fasta file used in search cont_fasta <- "220813_universal_protein_contaminants_Haogroup.fasta" conts <- Biostrings::fasta.index(cont_fasta, seqtype = "AA") ## Extract only the protein accessions (not Cont_ at the start) cont_acc <- regexpr("(?<=\\_).*?(?=\\|)", conts$desc, perl = TRUE) %>% regmatches(conts$desc, .)

Now we have our contaminant list by accession number, we can identify and remove PSMs with any contaminant protein within their “Protein.Accessions”. Importantly, filtering on “Protein.Accessions” ensures the removal of PSMs which matched to a protein group containing a contaminant protein, even if the contaminant protein is not the group’s master protein.

## Define function to find contaminants find_cont <- function(se, cont_acc) { cont_indices <- c() for (i in 1:length(cont_acc)) { cont_protein <- cont_acc[i] cont_present <- grep(cont_protein, rowData(se)$Protein.Accessions) output <- c(cont_present) cont_indices <- append(cont_indices, output) } cont_psm_indices <- cont_indices } ## Store row indices of PSMs matched to a contaminant-containing protein group cont_psms <- find_cont(cp_qf[["psms_filtered"]], cont_acc) ## If we find contaminants, remove these rows from the data if (length(cont_psms) > 0) cp_qf[["psms_filtered"]] <- cp_qf[["psms_filtered"]][-cont_psms, ]

At this point, users can also remove any additional proteins which may not have been included in the contaminant database. For example, users may wish to remove human trypsin (accession P35050) should it appear in their data.

Several third party softwares also have the option to directly annotate which fasta file (here, the human proteome or contaminant database) a PSM is derived from. In such cases, filtering can be simplified by removing PSMs annotated as contaminants in the output file.

Removing PSMs which lack quantitative data

Now that we are left with only PSMs matched to proteins of interest, we filter out PSMs which cannot be used for quantitation. This includes some PSMs which lack quantitative information altogether. In outputs derived from Proteome Discoverer this information is included in the “Quan.Info” column where PSMs are annotated as having “NoQuanLabels”. For users who have considered both lysine and N-terminal TMT labels as static modifications, the data should not contain any PSMs without quantitative information. However, since the use-case data was derived from a search in which N-terminal TMT modifications were dynamic, the data does include this annotation. Users are reminded that column names are typically software-specific as the “Quan.Info” column is found only in outputs derived from Proteome Discoverer. However, the majority of alternative third party softwares will have an equivalent column containing the same information.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Quan.Info == "NoQuanLabels")

## # A tibble: 2 x 2 ## ‘Quan.Info == "NoQuanLabels"‘ n ## <lgl> <int> ## 1 FALSE 47241 ## 2 TRUE 228

## Drop these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ !Quan.Info == "NoQuanLabels", i = "psms_filtered")

This point in the workflow is a good time to check whether there are any other annotations within the “Quan.Info” column. For example, if there are any PSMs which have been “ExcludedByMethod”, this indicates that a PSM-level filter was applied in Proteome Discoverer during the identification search. If this is the case, users should determine which filter has been applied to the data and decide whether to remove the PSMs which were “ExcludedByMethod” (thereby applying the pre-set threshold) or leave them in (disregard the threshold).

## Are there any remaining annotations in the Quan.Info column? cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Quan.Info) %>% table()

## . ## ## 47241

In the above code chunk we see there are no remaining annotations in the “Quan.Info” column so we can continue.

Removing PSMs which are not unique to a protein

The next step is to consider which PSMs are to be used for quantitation. There are two ways in which a PSM can be considered as unique. The first and most pure form of uniqueness comes from a PSM corresponding to a single protein only. This results in the PSM being allocated to one protein and one protein group. However, it is common to expand the definition of unique to include PSMs that map to multiple proteins within a single protein group. That is PSMs which are allocated to more than one protein but only one protein group. This distinction is ultimately up to the user. By contrast, PSMs corresponding to razor and shared peptides are linked to multiple proteins across multiple protein groups. In this workflow, the final grouping of peptides to proteins will be done based on master protein accession. Therefore, differential expression analysis will be based on protein groups, and we here consider unique as any PSM linked to only one protein group. This means removing PSMs where “Number.of.Protein.Groups” is not equal to 1.

In the below code chunk we count the number of PSMs linked to more than 1 protein group.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Number.of.Protein.Groups != 1)

## # A tibble: 2 x 2 ## ‘Number.of.Protein.Groups != 1‘ n ## <lgl> <int> ## 1 FALSE 44501 ## 2 TRUE 2740

We again use the filterFeatures function to retain PSMs linked to only 1 protein group and discard any PSMs linked to more 1 group.

## Remove these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ Number.of.Protein.Groups == 1, i = "psms_filtered")

Additional considerations regarding protein isoforms

Users searching against a database that includes protein isoforms must take extra caution when defining ‘unique’ PSMs. A PSM that corresponds to a single protein when data is searched against the proteome without isoforms may correspond to multiple proteins once additional isoforms are included. As a result, PSMs or peptides that were previously mapped to one protein and one protein group could instead be mapped to multiple proteins and one protein group. These PSMs would be filtered out by defining ‘unique’ as corresponding to only one protein and one protein group, but would be retained if the definition was expanded to multiple proteins and one protein group. Users should be aware of these possibilities and select their filtering strategy based on the biological question of interest.

Removing PSMs that are not rank 1

Another filter that is important for quantitation is that of PSM rank. Since individual spectra can have multiple candidate peptide matches, Proteome Discoverer uses a scoring algorithm to determine the probability of a PSM being incorrect. Once each candidate PSM has been given a score, the one with the lowest score (lowest probability of being incorrect) is allocated rank 1. The PSM with the second lowest probability of being incorrect is rank 2, and so on. For the analysis, we only want rank 1 PSMs to be retained.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Rank != 1)

## # A tibble: 2 x 2 ## ‘Rank != 1‘ n ## <lgl> <int> ## 1 FALSE 43426 ## 2 TRUE 1075

## Drop these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ Rank == 1, i = "psms_filtered")

The majority of search engines, including SequestHT, also provide their own PSM rank. To be conservative and ensure accurate quantitation, we also only retain PSMs that have a search engine rank of 1.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Search.Engine.Rank != 1)

## # A tibble: 2 x 2 ## ‘Search.Engine.Rank != 1‘ n ## <lgl> <int> ## 1 FALSE 43153 ## 2 TRUE 273

## Drop these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ Search.Engine.Rank == 1, i = "psms_filtered")

Removing ambiguous PSMs

Finally, we retain only unambiguous PSMs. Since there are several candidate peptides for each spectra, Proteome Discoverer allocates each PSM a level of ambiguity to indicate whether it was possible to determine a definite PSM or whether one had to be selected from a number of candidates. The allocation of PSM ambiguity takes place during the process of protein grouping and the definitions of each ambiguity assignment are given below in Table 3.

Table 3. Definitions of PSM ambiguity categories based on Proteome Discoverer outputs.

| PSM category | Definition |

|---|---|

| Unambiguous | The only candidate PSM |

| Selected | PSM was selected from a group of candidates |

| Rejected | PSM was rejected from a group of candidates |

| Ambiguous | Two or more candidate PSMs could not be distinguished |

| Unconsidered | PSM was not considered suitable |

Importantly, depending upon the software being used, output files may already have excluded some of these categories. It is still good to check before proceeding with the data.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(PSM.Ambiguity != "Unambiguous")

## # A tibble: 1 x 2 ## ‘PSM.Ambiguity != "Unambiguous"‘ n ## <lgl> <int> ## 1 FALSE 43153

## No PSMs to remove so proceedAssessing the impact of non-specific data cleaning

Now that we have finished the non-specific data cleaning, we can pause and check to see what this has done to the data. We determine the number and proportion of PSMs, peptides, and proteins lost from the original dataset.

## Determine number and proportion of PSMs removed psms_remaining <- cp_qf[["psms_filtered"]] %>% nrow() %>% as.numeric() psms_removed <- original_psms - psms_remaining psms_removed_prop <- ((psms_removed / original_psms) * 100) %>% round(digits = 2) ## Determine number and proportion of peptides removed peps_remaining <- cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Sequence) %>% unique() %>% length() %>% as.numeric() peps_removed <- original_peps - peps_remaining peps_removed_prop <- ((peps_removed / original_peps) * 100) %>% round(digits = 2) ## Determine number and proportion of proteins removed prots_remaining <- cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Master.Protein.Accessions) %>% unique() %>% length() %>% as.numeric() prots_removed <- original_prots - prots_remaining prots_removed_prop <- ((prots_removed / original_prots) * 100) %>% round(digits = 2)

## Print as a table data.frame("Feature" = c("PSMs", "Peptides", "Proteins"), "Number lost" = c(psms_removed, peps_removed, prots_removed), "Percentage lost" = c(psms_removed_prop, peps_removed_prop, prots_removed_prop))

## Feature Number.lost Percentage.lost ## 1 PSMs 5679 11.63 ## 2 Peptides 1565 6.03 ## 3 Proteins 452 8.97

PSM quality control filtering

The next step is to take a look at the data and make informed decisions about in-depth filtering. Here, we focus on three key quality control filters for TMT data: 1) average reporter ion signal-to-noise (S/N) ratio, 2) percentage co-isolation interference, and 3) percentage SPS mass match. It is possible to set thresholds for these three parameters during the identification search. However, specifying thresholds prior to exploring the data could lead to unnecessarily excessive data exclusion or the retention of poor quality PSMs. We suggest that users set the thresholds for all three aforementioned filters to 0 during the identification search, thus allowing maximum flexibility during data processing. In all cases, quality control filtering represents a trade-off between ensuring high quality data and losing potentially informative data. This means that the thresholds used for such filtering will likely depend upon the initial quality of the data and the number of PSMs, as well as the experimental goal being stringent or exploratory.

Quality control: Average reporter ion signal-to-noise

Intensity measurements derived from a small number of ions tend to be more variable and less accurate. Therefore, reporter ion spectra with peaks generated from a small number of ions should be filtered out to ensure accurate quantitation and avoid stochastic ion effects. When using an orbitrap analyser, as was the case in the collection of the use-case data, the number of ions is proportional to the S/N value of a peak. Hence, the average reporter ion S/N ratio can be used to filter out quantification based on too few ions.

To determine an appropriate reporter ion S/N threshold we need to understand the original, unfiltered data. Here, we print a summary of the average reporter S/N before plotting a simple histogram to visualise the data. The default threshold for average reporter ion S/N when filtering within Proteome Discoverer is 10, or 1 on the base-10 logarithmic scale displayed here. We include a line to show where this threshold would be on the data distribution.

## Get summary information cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Average.Reporter.SN) %>% summary()

## Min. 1st Qu. Median Mean 3rd Qu. Max. NA’s ## 0.3 84.2 215.8 321.8 450.3 3008.2 140

## Plot histogram of reporter ion signal-to-noise cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = log10(Average.Reporter.SN))) + geom_histogram(binwidth = 0.05) + geom_vline(xintercept = 1, linetype = "dashed", color = "red") + labs(x = "log10(average reporter SN)", y = "Frequency") + ggtitle("Average reporter ion S/N") + theme_bw()

From the distribution of the data it is clear that applying such a threshold would not result in dramatic data loss. Whilst we could set a higher threshold for more stringent analysis, this would lead to unnecessary data loss. Therefore, we keep PSMs with an average reporter ion S/N threshold of 10 or more. We also remove PSMs that have an NA value for their average reporter ion S/N since their quality cannot be guaranteed. This is done by including na.rm = TRUE.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Average.Reporter.SN < 10)

## # A tibble: 3 x 2 ## ‘Average.Reporter.SN < 10‘ n ## <lgl> <int> ## 1 FALSE 42066 ## 2 TRUE 947 ## 3 NA 140

## Drop these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ Average.Reporter.SN >= 10, na.rm = TRUE, i = "psms_filtered")

Quality control: Isolation interference

A second data-dependent quality control parameter which should be considered is the isolation interference. The first type of interference that occurs during a TMT experiment is reporter ion interference, also known as cross-label isotopic impurity. This type of interference arises from manufacturing-level impurities and experimental error. The former should be reduced somewhat by the inclusion of lot-specific correction factors in the search set-up and users should ensure that these corrections are applied. In Proteome Discoverer this means setting “Apply Quan Value Corrections” to “TRUE” within the reporter ions quantifier node. The second form of interference is co-isolation interference which occurs during the MS run when multiple labelled precursor peptides are co-isolated in a single data acquisition window. Following fragmentation of the co-isolated peptides, this results in an MS2 or MS3 reporter ion peak (depending upon the experimental design) derived from multiple precursor peptides. Hence, co-isolation interference leads to inaccurate quantitation of the identified peptide. This problem is reduced by filtering out PSMs with a high percentage isolation interference value. As was the case for reporter ion S/N, Proteome Discoverer has a suggested default threshold for isolation interference - 50% for MS2 experiments and 75% for SPS-MS3 experiments.

Again, we get a summary and visualise the data using the code chunk below.

## Get summary information cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Isolation.Interference.in.Percent) %>% summary()

## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 0.000 0.000 8.385 12.637 21.053 84.379

## Plot histogram of co-isolation interference cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = Isolation.Interference.in.Percent)) + geom_histogram(binwidth = 2) + geom_vline(xintercept = 75, linetype = "dashed", color = "red") + labs(x = "Isolation inteference (%)", y = "Frequency") + ggtitle("Co-isolation interference %") + theme_bw()

Looking at the data, very few PSMs have an isolation interference above the suggested threshold, and hence minimal data will be lost. Again, we choose to apply the standard threshold with the understanding that decreasing the threshold would result in greater data loss. Importantly, we are able to apply relatively standard thresholds here as the preliminary exploration did not expose any problems with the experimental data (in terms of labelling or MS analysis). If users have reason to believe the data is of poorer quality then more stringent thresholding should be considered.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(Isolation.Interference.in.Percent > 75)

## # A tibble: 2 x 2 ## ‘Isolation.Interference.in.Percent > 75‘ n ## <lgl> <int> ## 1 FALSE 42007 ## 2 TRUE 59

## Remove these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ Isolation.Interference.in.Percent <= 75, na.rm = TRUE, i = "psms_filtered")

Quality control: SPS mass match

The final quality control filter that we will apply is a percentage SPS mass match threshold. SPS mass match is a metric which has been introduced by Proteome Discoverer versions 2.3 and above to quantify the percentage of SPS-MS3 fragments that can still be explicitly traced back to the precursor peptide. This parameter is of particular importance given that quantitation is based on the SPS-MS3 spectra. Unfortunately, the SPS Mass Match percentage is currently only a feature of Proteome Discoverer (2.3 and above) and will not be available to users of other third party software.

We follow the same format as before to investigate the SPS Mass Match (%) distribution of the data. The default threshold within Proteome Discoverer is a SPS Mass Match above 65%. In reality, since SPS Mass Match is only reported to the nearest 10%, removing PSMs annotated with a value below 65% means removing those with 60% or less. Hence, only PSMs with 70% SPS Mass Match or above would be retained. We can see how many PSMs would be lost based on such thresholds using the code chunk below.

## Get summary information cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(SPS.Mass.Matches.in.Percent) %>% summary()

## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 0.00 50.00 70.00 64.31 80.00 100.00

## Plot histogram of SPS mass match % cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% ggplot(aes(x = SPS.Mass.Matches.in.Percent)) + geom_histogram(binwidth = 10) + geom_vline(xintercept = 65, linetype = "dashed", color = "red") + labs(x = "SPS mass matches (%)", y = "Frequency") + scale_x_continuous(breaks = seq(0, 100, 10)) + ggtitle("SPS mass match %") + theme_bw()

From the summary and histogram we can see that the distribution of SPS Mass Matches is much less skewed than that of average reporter ion S/N or isolation interference. This means that whilst the application of thresholds on average reporter ion S/N and isolation interference led to minimal data loss, attempting to impose a threshold on SPS Mass Match represents a much greater trade-off between data quality and quantity. For simplicity, here we choose to use the standard threshold of 65%.

## Find out how many PSMs we expect to lose cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% dplyr::count(SPS.Mass.Matches.in.Percent < 65)

## # A tibble: 2 x 2 ## ‘SPS.Mass.Matches.in.Percent < 65‘ n ## <lgl> <int> ## 1 FALSE 21697 ## 2 TRUE 20310

## Drop these rows from the data cp_qf <- cp_qf %>% filterFeatures(~ SPS.Mass.Matches.in.Percent >= 65, na.rm = TRUE, i = "psms_filtered")

Assessing the impact of data-specific filtering

As we did after the non-specific cleaning steps, we check to see how many PSMs, peptides and proteins have been removed throughout the in-depth data-specific filtering.

## Summarize the effect of data-specific filtering ## Determine the number and proportion of PSMs removed psms_remaining_2 <- cp_qf[["psms_filtered"]] %>% nrow() %>% as.numeric() psms_removed_2 <- psms_remaining - psms_remaining_2 psms_removed_prop_2 <- ((psms_removed_2 / original_psms) * 100) %>% round(digits = 2) ## Determine number and proportion of peptides removed peps_remaining_2 <- rowData(cp_qf[["psms_filtered"]])$Sequence %>% unique() %>% length() %>% as.numeric() peps_removed_2 <- peps_remaining - peps_remaining_2 peps_removed_prop_2 <- ((peps_removed_2 / original_peps) * 100) %>% round(digits = 2) ## Determine number and proportion of proteins removed prots_remaining_2 <- cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Master.Protein.Accessions) %>% unique() %>% length() %>% as.numeric() prots_removed_2 <- prots_remaining - prots_remaining_2 prots_removed_prop_2 <- ((prots_removed_2 / original_prots) * 100) %>% round(digits = 2) ## Print as a table data.frame("Feature" = c("PSMs", "Peptides", "Proteins"), "Number lost" = c(psms_removed_2, peps_removed_2, prots_removed_2), "Percentage lost" = c(psms_removed_prop_2, peps_removed_prop_2, prots_removed_prop_2))

## Feature Number.lost Percentage.lost ## 1 PSMs 21456 43.94 ## 2 Peptides 10162 39.13 ## 3 Proteins 1299 25.77

Managing missing data

Having finished the data cleaning at the PSM-level, the final step is to deal with missing data. Missing values represent a common challenge in quantitative proteomics and there is no consensus within the literature on how this challenge should be addressed. Indeed, missing values fall into different categories based on the reason they were generated, and each category is best dealt with in a different way. There are three main categories of missing data: missing completely at random (MCAR), missing at random (MAR) and missing not at random (MNAR). Within proteomics, values which are MCAR arise due to technical variation or stochastic fluctuations and emerge in a uniform, intensity-independent distribution. Examples include values for peptides which cannot be consistently identified or are unable to be efficiently ionised. By contrast, MNAR values are expected to occur in an intensity-dependent manner due to the presence of peptides at abundances below the limit of detection. 17 , 22 , 23 In many cases this is due to the biological condition being evaluated, for example the cell type or treatment applied.

To simplify this process, we consider the management of missing data in three steps. The first step is to determine the presence and pattern of missing values within the data. Next, we filter out data which exceed the desired proportion of missing values. This includes removing PSMs with a greater number of missing values across samples than we deem acceptable, as well as whole samples in cases where the proportion of missing values is substantially higher than the average. Finally, imputation can be used to replace any remaining NA values within the dataset. This final step is optional and can equally be done prior to filtering if the user wishes to impute all missing values without removing any PSMs, although this is not recommended. Further, whilst it is possible to complete such steps at the peptide- or protein-level, we advise management of missing values at the lowest data level to minimise the effect of implicit imputation during aggregation.

Exploring the presence of missing values

First, to determine the presence of missing values in the PSM-level data we use the nNA function within the QFeatures infrastructure. This function will return the absolute number and percentage of missing values both per sample and as an average. Importantly, alternative third-party software may output missing values in formats other than NA, such as zero, or infinite. In such cases, missing values can be converted directly into NA values through use of the zeroIsNA or infIsNA functions within the QFeatures infrastructure.

## Determine whether there are any NA values in the data cp_qf[["psms_filtered"]] %>% assay() %>% anyNA()

## [1] TRUE

## Determine the amount and distribution of NA values in the data cp_qf[["psms_filtered"]] %>% nNA()

## $nNA ## DataFrame with 1 row and 2 columns

## nNA pNA ## <integer> <numeric> ## 1 4 0.00307262 ## ## $nNArows ## DataFrame with 21697 rows and 3 columns ## name nNA pNA ## <character> <integer> <numeric> ## 1 13 0 0 ## 2 20 0 0 ## 3 25 0 0 ## 4 26 0 0 ## 5 29 0 0 ## ... ... ... ... ## 21693 48786 0 0 ## 21694 48792 0 0 ## 21695 48797 0 0 ## 21696 48810 0 0 ## 21697 48819 0 0 ## ## $nNAcols ## DataFrame with 6 rows and 3 columns ## name nNA pNA ## <character> <integer> <numeric> ## 1 S1 0 0.00000000 ## 2 S2 2 0.00921786 ## 3 S3 0 0.00000000 ## 4 S4 1 0.00460893 ## 5 S5 1 0.00460893 ## 6 S6 0 0.00000000

We can see that the data only contains 0.003% missing values, corresponding to 4 NA values. This low proportion is due to a combination of the TMT labelling strategy and the stringent PSM quality control filtering. In particular, co-isolation interference when using TMT labels often results in very low quantification values for peptides which should actually be missing or ‘NA’. Nevertheless, we continue and check for sample-specific bias in the distribution of NAs by plotting a simple histogram. We also use colour to indicate the condition of each sample as to check for condition-specific bias.

## Plot histogram to visualize the distribution of NAs nNA(cp_qf[["psms_filtered"]])$nNAcols %>% as_tibble() %>% mutate(Condition = rep(c("Treated", "Control"), each = 3)) %>% ggplot(aes(x = name, y = pNA, group = Condition, fill = Condition)) + geom_bar(stat = "identity", position = "dodge") + geom_hline(yintercept = 0.002, linetype = "dashed", color = "red") + labs(x = "Sample", y = "Missing values (%)") + theme_bw()

The percentage of missing values is sufficiently low that none of the samples need be removed. Further, there is no sample- or condition-specific bias in the data. We can get more information about the PSMs with NA values using the code below.

## Find out the range of missing values per PSM nNA(cp_qf[["psms_filtered"]])$nNArows$nNA %>% table()

## . ## 0 1 ## 21693 4

From this output we can see that the maximum number of NA values per PSM is one. This information is useful to know as it may inform the filtering strategy in the next step.

## Get indices of rows which contain NA rows_with_na_indices <- which(nNA(cp_qf[["psms_filtered"]])$nNArows$nNA != 0) ## Subset rows with NA rows_with_na <- cp_qf[["psms_filtered"]][rows_with_na_indices, ] ## Inspect rows with NA assay(rows_with_na)

## S1 S2 S3 S4 S5 S6 ## 12087 11.0 17.0 13.3 22.1 NA 30.6 ## 30824 45.0 NA 43.1 66.7 69.7 62.1 ## 30846 34.3 NA 47.9 56.8 65.5 57.2 ## 44791 22.8 28.7 19.6 NA 3.8 12.2

Filtering out missing values

First we apply some standard filtering. Typically, it is desirable to remove features, here PSMs, with greater than 20% missing values. We can do this using the filterNA function in QFeatures, as outlined below. We pass the function the SummarizedExperiment and use the pNA = argument to specify the maximum proportion of NA values to allow.

## Check how many PSMs we will remove nNA(cp_qf[["psms_filtered"]])$nNArows %>% as_tibble() %>% dplyr::count(pNA >= 20)

## # A tibble: 1 x 2 ## ‘pNA >= 20‘ n ## <lgl> <int> ## 1 FALSE 21697

Although the use-case data does not contain any PSMs with >20% missing values, we demonstrate how to apply the desired filter below.

## Remove PSMs with more than 20 % (0.2) NA values cp_qf <- cp_qf %>% filterNA(pNA = 0.2, i = "psms_filtered")

Since previous exploration of missing data did not reveal any sample with an excessive number of NA values, we do not need to remove any samples from the analysis.

Although not covered here, users may wish to carry out condition-specific filtering in cases where the exploration of missing values revealed a condition- specific bias, or where the experimental question requires. This would be the case, for example, if one condition was transfected to express proteins of interest whilst the control condition lacked these proteins. Filtering of both conditions together could, therefore, lead to the removal of proteins of interest.

Imputation (optional)

The final step is to consider whether to impute the remaining missing values within the data. Imputation refers to the replacement of missing values with probable values. Since imputation requires complex assumptions and can have substantial effects on downstream statistical analysis, we here choose to skip imputation. This is reasonable given that we only have 3 missing values at the PSM-level, and that some of these will likely be removed by aggregation. A more in-depth discussion of imputation will be provided below in the LFQ workflow.

Summary of PSM data cleaning

Thus far we have checked that the experimental data we are using is of high quality by visualising the raw data and calculating TMT labelling efficiency. We then carried out non-specific data cleaning, data-specific filtering steps and management of missing data. Here, we present a combined summary of these PSM processing steps.

## Determine final number of PSMs, peptides and master proteins psms_final <- cp_qf[["psms_filtered"]] %>% nrow() %>% as.numeric() psms_removed_total <- original_psms - psms_final psms_removed_total_prop <- ((psms_removed_total / original_psms) * 100) %>% round(digits = 2) peps_final <- cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Sequence) %>% unique() %>% length() %>% as.numeric() peps_removed_total <- original_peps - peps_final peps_removed_total_prop <- ((peps_removed_total / original_peps) * 100) %>% round(digits = 2) prots_final <- cp_qf[["psms_filtered"]] %>% rowData() %>% as_tibble() %>% pull(Master.Protein.Accessions) %>% unique() %>% length() %>% as.numeric() prots_removed_total <- original_prots - prots_final prots_removed_total_prop <- ((prots_removed_total / original_prots) * 100) %>% round(digits = 2) ## Print as table data.frame("Feature" = c("PSMs", "Peptides", "Proteins"), "Number lost" = c(psms_removed_total, peps_removed_total, prots_removed_total), "Percentage lost" = c(psms_removed_total_prop, peps_removed_total_prop, prots_removed_total_prop), "Number remaining" = c(psms_final, peps_final, prots_final))

## Feature Number.lost Percentage.lost Number.remaining ## 1 PSMs 27135 55.57 21697 ## 2 Peptides 11727 45.16 14242 ## 3 Proteins 1751 34.74 3289

Logarithmic transformation of quantitative data

Once satisfied that the PSM-level data is clean and of high quality, the PSM-level quantitative data is log transformed. log2 transformation is a standard step when dealing with quantitative proteomics data since protein abundances are dramatically skewed towards zero. Such a skewed distribution is to be expected given that the majority of cellular proteins present at any one time are of relatively low abundance, whilst only a few highly abundant proteins exist. To perform the logarithmic transformation and generate normally distributed data we pass the PSM-level data in the QFeatures object to the logTransform function, as per the below code chunk.

## log2 transform quantitative data cp_qf <- logTransform(object = cp_qf, base = 2, i = "psms_filtered", name = "log_psms") ## Verify cp_qf

## An instance of class QFeatures containing 3 assays: ## [1] psms_raw: SummarizedExperiment with 48832 rows and 6 columns ## [2] psms_filtered: SummarizedExperiment with 21697 rows and 6 columns ## [3] log_psms: SummarizedExperiment with 21697 rows and 6 columns

Aggregation of PSMs to proteins

For the aggregation itself we use the aggregateFeatures function and provide the base level from which we wish to aggregate, the log PSM-level data in this case. We also tell the function which column to aggregate, which is specified by the fcol argument. We will first aggregate from PSM to peptide to create explicit QFeatures links. This means grouping by PSM “Sequence”.

As well as grouping PSMs according to their peptide sequence, the quantitative values for each PSM must be aggregated into a single peptide-level value. The default aggregation method within aggregateFeatures is the robustSummary function from the MsCoreUtils package. 19 This method is a form of robust regression and is described in detail elsewhere. 24 Nevertheless, the user must decide which aggregation method is most appropriate for their data and biological question. Further, an understanding of the selected method is critical given that aggregation is a form of implicit imputation and has substantial effects on the downstream data. Indeed, aggregation methods have different ways of dealing with missing data, either by removal or propagation. Options of aggregation methods within the aggregateFeatures function include MsCoreUtils::medianPolish , MsCoreUtils::robustSummary , base::colMeans , base::colSums , and matrixStats::colMedians . Users should also be aware that some methods have specific input requirements. For example, robustSummary assumes that intensities have already been log transformed.

Aggregating using robust summarisation

Here, we use robustSummary to aggregate from PSM to peptide-level. This method is currently considered to be state-of-the-art as it is more robust against outliers than other aggregation methods. 24 , 25 We also include na.rm = TRUE to exclude any NA values prior to completing the summarisation.

## Aggregate PSM to peptide cp_qf <- aggregateFeatures(cp_qf, i = "log_psms", fcol = "Sequence", name = "log_peptides", fun = MsCoreUtils::robustSummary, na.rm = TRUE)

## Your quantitative and row data contain missing values. Please read the ## relevant section(s) in the aggregateFeatures manual page regarding the ## effects of missing values on data aggregation.

## Verify

cp_qf

## An instance of class QFeatures containing 4 assays: ## [1] psms_raw: SummarizedExperiment with 48832 rows and 6 columns ## [2] psms_filtered: SummarizedExperiment with 21697 rows and 6 columns ## [3] log_psms: SummarizedExperiment with 21697 rows and 6 columns ## [4] log_peptides: SummarizedExperiment with 14242 rows and 6 columns

We are now left with a QFeatures object holding the PSM and peptide-level data in their own SummarizedExperiments. Importantly, an explicit link has been maintained between the two levels and this makes it possible to gain information about all PSMs that were aggregated into a peptide.

Considerations for aggregating non-imputed data

If users did not impute prior to aggregation, NA values within the PSM-level data may have propagated into NaN values. This is because peptides only supported by PSMs containing missing values would not have any quantitative value to which a sum or median function, for example, can be applied. Therefore, we check for NaN and convert back to NA values to facilitate compatibility with downstream processing.

## Confirm the presence of NaN assay(cp_qf[["log_peptides"]]) %>% is.nan() %>% table()

## . ## FALSE ## 85452

## Replace NaN with NA assay(cp_qf[["log_peptides"]])[is.nan(assay(cp_qf[["log_peptides"]]))] <- NA

Next, using the same approach as above, we use the aggregateFeatures function to assemble the peptides into proteins. As before, we must pass several arguments to the function. Namely, the QFeatures object i.e. cp_qf, the data level we wish to aggregation from i.e. log_peptides, the column of the rowData defining how to aggregate the features i.e. by "Master.Protein.Accessions" and a name for the new data level e.g. "log_proteins". We again choose to use robustSummary as our aggregation method and we pass na.rm = TRUE to ignore NA values. Users can type ?aggregateFeatures to see more information. Users should be aware that peptides are grouped by their master protein accession and, therefore, downstream differential expression analysis will consider protein groups rather than individual proteins.

## Aggregate peptides to protein cp_qf <- aggregateFeatures(cp_qf, i = "log_peptides", fcol = "Master.Protein.Accessions", name = "log_proteins", fun = MsCoreUtils::robustSummary, na.rm = TRUE)