Abstract

Objective:

Using outpatient neurology clinic case logs completed by medical students on neurology clerkships, we examined the impact of outpatient clinical encounter volume per student on outcomes of knowledge assessed by the National Board of Medical Examiners (NBME) Clinical Neurology Subject Examination and clinical skills assessed by the Objective Structured Clinical Examination (OSCE).

Methods:

Data from 394 medical students from July 2008 to June 2012, representing 9,791 patient encounters, were analyzed retrospectively. Pearson correlations were calculated examining the relationship between numbers of cases logged per student and performance on the NBME examination. Similarly, correlations between cases logged and performance on the OSCE, as well as on components of the OSCE (history, physical examination, clinical formulation), were evaluated.

Results:

There was a correlation between the total number of cases logged per student and NBME examination scores (r = 0.142; p = 0.005) and OSCE scores (r = 0.136; p = 0.007). Total number of cases correlated with the clinical formulation component of the OSCE (r = 0.172; p = 0.001) but not the performance on history or physical examination components.

Conclusion:

The volume of cases logged by individual students in the outpatient clinic correlates with performance on measures of knowledge and clinical skill. In measurement of clinical skill, seeing a greater volume of patients in the outpatient clinic is related to improved clinical formulation on the OSCE. These findings may affect methods employed in assessment of medical students, residents, and fellows.

There has been an emphasis at medical schools and residency programs on providing increased outpatient exposure.1 It remains to be seen how to best evaluate these experiences during training to ensure their adequacy. An easily applicable method of measuring clinical experience is via case logs of patient encounters. Case logs provide objective data at low cost, provide a measure of volume and breadth of experience, and can be scored quickly. Pitfalls include that they rely on self-reporting, are potentially time-consuming for the individual completing them, and may provide no specific information on the trainees' medical knowledge or clinical acumen. Establishing validity for the use of case logs has consequences for medical student and residency training.

One previous study evaluating case logs as assessment tools in neurology clerkships has been published, showing no correlation between the total number of inpatient and outpatient cases recorded per student and performance on an internally developed written examination.2 Additional studies evaluating student performance in other specialties have found no correlation between number of patient encounters documented in case logs and either National Board of Medical Examiners (NBME) clinical subject examination scores or internally developed examinations.3,4 While case logs have long played an important role in the assessment of surgical trainees, there is a paucity of data on their use in the evaluation of trainees from non–procedurally oriented specialties.

METHODS

This project was submitted to the University of Chicago Institutional Review Board and was deemed exempt from review.

Students at the University of Chicago Pritzker School of Medicine completing a required 4-week neurology clerkship are assigned to University of Chicago Medical Center (UCMC) or NorthShore University Health System (NSUHS), a community-based teaching affiliate. During the approximately week-long outpatient clinical portion of the clerkship, students are required to keep case logs of outpatient encounters. Students obtain a sticker from the patients' clinic encounter sheets and place it in a logbook maintained by the student. Six students (1.5%) did not log any cases. These students were not included in analyses. Students are requested to document diagnosis or chief complaint of each patient encounter, and 98% (n = 9,600) of logged patient encounters included this. Additional components of our preexisting assessment methods include the NBME Clinical Subject Examination, a nationally available standardized examination of knowledge in neurology, and the Neurology Objective Structured Clinical Examination (OSCE), an internally developed examination of clinical skill in neurology that we have previously described.5,6 The OSCE score is derived from 3 components. The first 2 are scored by standardized patients (SP) via completion of a checklist evaluating performance of key elements of history taking and physical examination. The third component is a clinical formulation scored by faculty raters using a checklist reviewing an online write-up completed by students after evaluating the SP. The write-up includes the history, physical examination, assessment, and management plan.

Case logs, NBME clinical subject examination scores, and OSCE scores for all 394 students (362 third year, 32 fourth year) who logged cases from July 2008 to June 2012 were analyzed. Prior to analysis, data were anonymized. Pearson correlations were performed to evaluate the total number of patient encounters recorded by a given student and his or her score on the NBME subject examination. A similar analysis was performed to test for correlation of patient encounters with overall OSCE scores. Additional analyses evaluating the number of patient encounters per student and performance on the 3 distinct components of the OSCE score were performed.
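As an illustration of the correlation analysis described above, the following Python sketch computes a Pearson coefficient and the t statistic used to test it against zero. It is not the authors' analysis code: the function names and the toy data are assumptions made for this example, and the p-value uses a large-sample normal approximation rather than an exact Student t test.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for the null hypothesis rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def approx_two_sided_p(t):
    """Two-sided p-value from a normal approximation to the t distribution.

    Reasonable only for large samples; an exact test would use Student's t.
    """
    return math.erfc(abs(t) / math.sqrt(2))


# Made-up toy data (NOT study data): cases logged and exam scores for 5 students.
toy_cases = [18, 22, 25, 29, 33]
toy_scores = [74, 79, 76, 81, 80]
toy_r = pearson_r(toy_cases, toy_scores)

# Summary values reported in this article: r = 0.142 among n = 394 students.
t_nbme = correlation_t(0.142, 394)
p_nbme = approx_two_sided_p(t_nbme)

print(f"toy r = {toy_r:.3f}")
print(f"t = {t_nbme:.2f}, approximate two-sided p = {p_nbme:.4f}")
```

With a sample of this size, even a weak correlation of r = 0.142 yields a t statistic near 2.8, which is why a small coefficient can still reach statistical significance.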

Finally, an exploratory analysis was performed evaluating the breadth of clinical presentations encountered by students in the outpatient neurology clinic. Case logs were reviewed and all recorded chief complaints/diagnoses were tabulated and classified into the following categories: seizure, cerebrovascular disease, peripheral/neuromuscular, spinal disease/neck/back pain, CNS demyelinating disease/neuroimmunology, headaches, sleep disorders, neuro-oncology, movement disorders, dementia, other neurodegenerative disorders, and other. If more than one diagnosis or chief complaint was listed, the first one listed was used in the tabulation.
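The tabulation rule described above (classify each log entry by its first listed diagnosis or chief complaint) can be sketched in Python as follows. The category names come from the text, but the keyword lists, the separator characters, and the sample entries are hypothetical and chosen only for illustration; the actual classification was done by manual review of the case logs.

```python
from collections import Counter

# Category names are taken from the text. The keyword lists are hypothetical
# examples, not the criteria actually applied when the logs were reviewed.
CATEGORY_KEYWORDS = {
    "seizure": ["seizure", "epilepsy"],
    "cerebrovascular disease": ["stroke", "transient ischemic attack"],
    "peripheral/neuromuscular": ["neuropathy", "myasthenia"],
    "spinal disease/neck/back pain": ["back pain", "neck pain", "radiculopathy"],
    "CNS demyelinating disease/neuroimmunology": ["multiple sclerosis", "optic neuritis"],
    "headaches": ["headache", "migraine"],
    "sleep disorders": ["sleep apnea", "narcolepsy", "insomnia"],
    "neuro-oncology": ["glioma", "meningioma", "brain tumor"],
    "movement disorders": ["parkinson", "tremor", "dystonia"],
    "dementia": ["dementia", "alzheimer"],
    "other neurodegenerative disorders": ["amyotrophic lateral sclerosis", "huntington"],
}


def first_listed(entry, separators=(";", ",")):
    """Return the first diagnosis or chief complaint in a free-text log entry.

    The separators are an assumption about how multiple items were written.
    """
    text = entry.strip().lower()
    for sep in separators:
        text = text.split(sep)[0]
    return text.strip()


def classify(entry):
    """Map a log entry to a category using only its first listed item."""
    first = first_listed(entry)
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in first for keyword in keywords):
            return category
    return "other"


def tabulate(entries):
    """Count log entries per category."""
    return Counter(classify(entry) for entry in entries)


# Hypothetical log entries, not taken from the study data.
sample_entries = [
    "Migraine; insomnia",
    "Parkinson disease, dementia",
    "Stroke follow-up",
    "Dizziness",
]
counts = tabulate(sample_entries)
for category, count in counts.items():
    print(category, count)
```

Because only the first listed item is used, an entry such as "Parkinson disease, dementia" counts toward movement disorders and not toward dementia, which mirrors the rule stated in the text.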

RESULTS

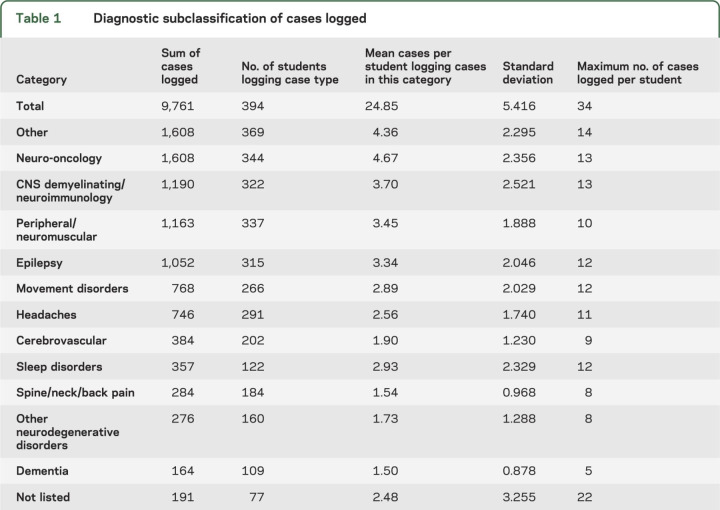

Students logged a mean of 24.8 cases (range 6–34, SD 5.4). Hierarchical analysis found no difference (p = 0.3) in the number of cases recorded by third-year (mean = 24.9, SD = 5.4) and fourth-year (mean = 24.7, SD = 6.1) students. Comparison between the 2 clinical sites, UCMC (306 students) and NSUHS (88 students), demonstrated a difference in the number of cases seen. Students assigned to NSUHS recorded a mean of 26.9 (SD 5.0) cases per student compared to 24.3 (SD 5.4) at UCMC (p = 0.02). The difference in total number of cases logged per student is likely due at least in part to the number of days spent in the outpatient clinic at the 2 respective sites. The mean number of days in the outpatient clinic for students assigned to UCMC was 4.8 in comparison to 6.3 for students assigned to NSUHS. This small statistically significant difference in length of the ambulatory rotation was not associated with a difference in NBME scores between students assigned to the 2 sites (p = 0.77). However, there was a modestly greater mean OSCE score in students assigned to the UCMC clinical site (t = 2.3; df = 392, p = 0.02).

The students logged a range of case types (table 1). The most frequently logged categories were other (n = 1,608 cases), which consisted of a variety of neurologic conditions including behavioral problems, infections, congenital abnormalities, and psychogenic symptoms, and neuro-oncology (n = 1,608 cases). The least frequently encountered categories were dementia (n = 164) and other neurodegenerative disorders (n = 276).

Table 1.

Diagnostic subclassification of cases logged

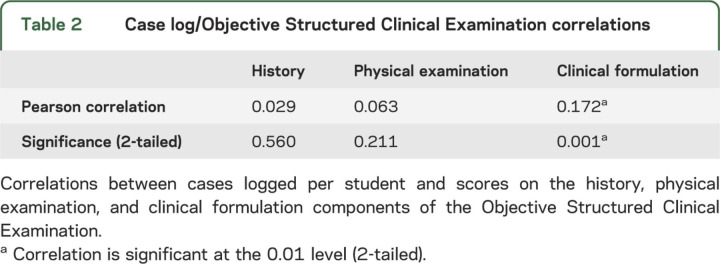

The mean NBME score for all students was 77.77 (range 55–99). A significant relationship was demonstrated between the number of patients recorded via case logs and NBME subject examination scores (r = 0.142, p = 0.005). The mean NBME subject examination score in the cohort of students logging the lowest quartile of cases (6–21 cases) was 76.23, the second quartile (22–26 cases) was 77.61, the third quartile (27–29 cases) was 78.78, and the fourth quartile (30–34 cases) was 78.61. Thus, a difference of 2.38 points on the mean NBME subject examination score exists between the lowest case logging quartile and the highest. This 2.38-point difference in average absolute NBME score between students seeing fewer vs more cases in the outpatient clinic corresponds to a difference of about 10 percentile points in the score distributions reported by the NBME, representing a modest but meaningful difference in student performance. A similar relationship was also found with regard to number of cases recorded and OSCE scores (r = 0.136, p = 0.007). When evaluating the relationship between patients recorded per student via case logs and scores on the 3 distinct components of the OSCE, a correlation was found only on the faculty-graded clinical written formulation component (r = 0.172, p = 0.001) (table 2).
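The quartile comparison above can be sketched in Python as follows. The case-count ranges and the four quartile means are the values reported in the text; the helper names and the toy records are assumptions made for illustration and are not study data.

```python
from statistics import mean

# Case-count ranges for each quartile, as reported in the text.
QUARTILE_RANGES = [(6, 21), (22, 26), (27, 29), (30, 34)]


def quartile_index(cases_logged):
    """Return the 0-based quartile whose range contains the case count."""
    for index, (low, high) in enumerate(QUARTILE_RANGES):
        if low <= cases_logged <= high:
            return index
    raise ValueError(f"case count {cases_logged} is outside the reported range")


def mean_score_by_quartile(records):
    """Mean score per quartile for (cases_logged, score) pairs.

    Returns None for a quartile that contains no students.
    """
    bins = [[] for _ in QUARTILE_RANGES]
    for cases, score in records:
        bins[quartile_index(cases)].append(score)
    return [mean(scores) if scores else None for scores in bins]


# Made-up toy records (NOT study data): (cases logged, exam score).
toy_records = [(10, 70), (20, 74), (24, 77), (28, 80), (29, 78), (32, 81)]
toy_means = mean_score_by_quartile(toy_records)

# Quartile means reported in the text, lowest to highest case-logging quartile.
reported_means = [76.23, 77.61, 78.78, 78.61]
gap = round(reported_means[-1] - reported_means[0], 2)

print("toy quartile means:", toy_means)
print("reported highest-minus-lowest gap:", gap)
```

Note that the reported means rise from the first to the third quartile and then level off, so the 2.38-point gap describes the extremes and not a strictly monotonic trend.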

Table 2.

Case log/Objective Structured Clinical Examination correlations

DISCUSSION

We present an evaluation of the correlations, among medical students, between patient encounters documented via case logs and performance on national standardized examinations of knowledge and validated examinations of clinical skill in a specialty. Outpatient clinical experiences occurred either at an academic medical center or a community medical center, making findings relevant to a range of clinical education settings. While these findings specifically reflect our analyses of medical students in a neurology clerkship, it would be reasonable to hypothesize that similar relationships between volume of cases seen and performance on standardized examinations of knowledge and clinical skill would be present in other medical student clerkships and non-procedure-oriented residency/fellowship programs. Of particular interest is that the correlation between number of cases recorded per student and performance on the OSCE was due exclusively to performance on the written clinical formulation component of the evaluation, reflecting students' skills in reporting and analyzing clinical findings. There was no relationship between case volume and the components of the OSCE that evaluate thoroughness of history taking and physical examination. We hypothesize that by directly observing expert clinicians evaluating patients with a wide range of complaints and underlying pathologies, trainees gain valuable experience in formulating their own thoughts and developing management plans with respect to clinical encounters. By understanding the correlations between volume of patients encountered and students' outcomes on specific performance measures, we can work on tailoring the outpatient experience to best achieve our educational goals. Because the outpatient experience represents only a single component of a clerkship that also has inpatient components, our study does not lend insight into whether a similar correlation is noted with inpatient volume.

The exploratory analysis evaluating the breadth of cases students encountered in neurology clinic reveals exposure to a broad range of clinical symptoms and disorders. These findings do not reflect the prevalence of neurologic disorders at the population level but are likely influenced by a number of factors, including prevalence of these disorders at an institutional/departmental level as well as the interest, enthusiasm, and availability of faculty within specific subspecialties to provide clinical education to students in the clinic. Prior evaluation of categories of cases encountered by students on neurology clerkships employed different methodology and included inpatient clinical experiences, making direct comparison difficult.2 Comparison to neurology resident outpatient exposure is also difficult for similar reasons.7,8

Despite the positive aspects of case logs, many clerkships do not employ them. There is additional work required of trainees to complete the logs.9,10 The logs rely on student accuracy in recording patient encounters, which can be underestimated or overestimated. While case logs can be used to tally the types of patients encountered, they do not reflect the depth of experience. However, our results demonstrate that the volume of cases logged is predictive of educational outcomes on standardized examinations of medical knowledge. Of potentially greater significance is the relationship between volume of cases logged and skill in the formulation of a clinical assessment and management plan. Our ability to evaluate this skill may provide us with a means to assess individuals as they progress from trainees to independent clinicians.

ACKNOWLEDGMENT

The authors thank Marla Scofield for collecting the data.

GLOSSARY

- NBME: National Board of Medical Examiners
- NSUHS: NorthShore University Health System
- OSCE: Objective Structured Clinical Examination
- SP: standardized patients
- UCMC: University of Chicago Medical Center

AUTHOR CONTRIBUTIONS

Drs. Albert, Brorson, and Lukas contributed to the conceptualization and design of the work. Drs. Amidei and Lukas performed the statistical analyses. Drs. Albert, Brorson, Amidei, and Lukas contributed to the analysis and interpretation of the data. Drs. Albert and Lukas contributed to drafting of the manuscript. Drs. Albert, Brorson, Amidei, and Lukas contributed to revising the manuscript critically for intellectual content. Drs. Albert, Brorson, Amidei, and Lukas gave final approval of the version to be published.

STUDY FUNDING

No targeted funding reported.

DISCLOSURE

D. Albert reports no disclosures relevant to the manuscript. J. Brorson has received compensation for consulting work for CVS-Caremark, Inc., and for National Peer Review Corporation. C. Amidei reports no disclosures relevant to the manuscript. R. Lukas received honoraria from American Physician Institute for delivering CME Board review courses. Go to Neurology.org for full disclosures.

REFERENCES

- 1. Knowles JH. The professor and the outpatient department: 1966. Acad Med 2002;77:708.

- 2. Poisson S, Gelb D, Oh M, Gruppen L. Experience may not be the best teacher, patient logs do not correlate with clerkship performance. Neurology 2009;72:699–704.

- 3. Greenberg LW, Getson P. Clinical experiences of medical students in a pediatric clerkship and performance on the NBME subject test: are they related? Med Teach 1999;21:420–423.

- 4. Neumayer L, McNamara RM, Dayton M, Kim B. Does volume of patients seen in an outpatient setting impact test scores? Am J Surg 1998;175:511–514.

- 5. Lukas RV, Adesoye T, Smith S, Blood A, Brorson JR. Student assessment by objective structured examination in a neurology clerkship. Neurology 2012;79:681–685.

- 6. Blood A, Brorson J, Lukas R. The neurology clerkship objective structured clinical examination (OSCE). MedEdPORTAL; 2013. Available at: www.mededportal.org/publication/9368. Accessed May 14, 2013.

- 7. Bhattacharya P, Van Stavern R, Madhaven R. Automated data mining: an innovative and efficient web-based approach to maintaining resident case logs. J Grad Med Educ 2010;2:566–570.

- 8. Ances B. The more things change, the more they stay the same: a case report of neurology residency experiences. J Neurol 2012;259:1321–1325.

- 9. Bardes CL, Wenderoth S, Lemise R, Ortanez P, Storey-Johnson C. Specifying student-patient encounters, web-based case logs, and meeting standards of the Liaison Committee on Medical Education. Acad Med 2005;80:1127–1132.

- 10. Gill DJ, Freeman WD, Thoresen P, Corboy JR. Residency training the neurology resident case log: a national survey of neurology residents. Neurology 2007;68:E32–E33.