Abstract

Automatically detecting the playing styles of musical instruments could assist in the development of intelligent software for music coaching and training. However, the respective methodologies are still at an early stage, and the range of playing techniques that can be identified is limited. This is partly due to the scarcity of complete, real-world datasets of instrument playing styles, which are essential for developing and training robust machine learning models.

To address this issue, in this data article we introduce a multimodal dataset consisting of 549 video samples in MP4 format and their respective audio samples in WAV format, covering nine different electric guitar techniques in total. The samples are recorded by a recruited guitar player using a smartphone. The recording setup is designed to closely resemble real-world conditions, making the dataset valuable for developing intelligent software applications that assess the playing style of guitar players. Furthermore, to capture the diversity that may occur in a real scenario, different exercises are performed for each technique using three different electric guitars and three different amplifier simulations produced by an amplifier simulation profiler. In addition to the audio and video samples, we also provide the MuseScore files of the exercises, making the dataset extendable to more guitar players in the future.

Finally, to demonstrate the effectiveness of our dataset in developing robust machine learning models, we design a Support Vector Machine (SVM) and a Convolutional Neural Network (CNN) for classifying the guitar techniques using the audio files of the dataset. The code for the experiments is publicly available in the dataset's repository.

Keywords: Electric guitar recordings, Music information retrieval, Machine learning, Audio signal processing

Specifications Table

| Subject | Computer Science / Applied Machine Learning |
|---|---|
| Specific subject area | Electric Guitar Playing Technique Classification and Recognition |
| Data format | Raw |
| Type of data | .wav (audio files), .mp4 (video files), .pdf (guitar exercises), .mscz (guitar exercises), .json (train/test splits) |
| Data collection | A total of 549 video samples, representing nine distinct guitar techniques, are recorded over a one-month period in a home studio setup using an Android smartphone. The setup includes three different guitars and three amplifier simulations, selected to capture a diverse range of sounds. The audio WAV files are extracted from the original MP4 video recordings using FFmpeg. |
| Data source location | Institute of Informatics and Telecommunications, NCSR ‘Demokritos’, 27 Neapoleos Str. & Patriarchou Grigoriou E, Ag. Paraskevi 153 41, Athens, Greece. |
| Data accessibility | Repository name: guitar_style_dataset; Data identification number: 10.5281/zenodo.10075352; Direct URL to data: https://zenodo.org/doi/10.5281/zenodo.10075351 |

1. Value of the Data

- The dataset covers a wide range of electric guitar techniques, thereby contributing to the development of machine learning models for intelligent software applications. Incorporating more techniques increases the variety and realism of the data and supports statistical models that are more robust, accurate, and reliable in classifying electric guitar techniques.

- Due to the difficulty of accurately reproducing and distinguishing techniques with similar timbres, most works so far have been restricted to modeling easily distinguishable techniques, such as bend, slide, hammer-on, pull-off, and vibrato. Our dataset fills a gap among electric guitar datasets by expanding the range of techniques to include alternate picking, legato, tapping, and sweep picking.

- The dataset is multimodal, since both video and audio streams are provided. This enables researchers to experiment with new deep learning architectures that exploit all available data modalities.

- Finally, the MuseScore files can be used by other guitar players for educational purposes or to expand the dataset in the future.

2. Background

While numerous datasets are available for various music information retrieval tasks, such as music genre recognition (e.g., the GTZAN dataset), guitar transcription (e.g., GuitarSet [1]), and instrument and playing technique recognition (e.g., the Extended playing techniques dataset [2]), only a few datasets focus exclusively on guitar playing technique recognition, which limits research in this specific domain.

Among the most significant datasets dedicated to guitar playing technique recognition are the Guitar Playing Techniques dataset [3] and the IDMT-SMT-GUITAR database [4]. The Guitar Playing Techniques dataset includes a range of fundamental techniques: normal playing, muting, vibrato, pull-off, hammer-on, sliding, and bending. The IDMT-SMT-GUITAR database features three plucking styles, namely finger-style, picked, and muted, along with five expression styles: bending, slide, vibrato, harmonics, and dead notes.

As evident from the above, existing datasets for instrument playing techniques have several limitations. First, few such datasets are available, and those specifically tailored to guitar playing techniques are even scarcer. Second, the existing guitar playing technique datasets often cover a limited number of techniques. Third, they rarely provide accompanying video data, which constrains the analysis of how a technique is executed. To address these limitations, the dataset we introduce covers a wider range of guitar playing techniques, adding classes such as alternate picking, legato, sweep picking, and tapping, and includes video recordings for all incorporated techniques.

3. Data Description

3.1. Classes description

The dataset consists of nine classes of guitar playing techniques. The techniques are alternate picking, legato, tapping, sweep picking, vibrato, hammer-on, pull-off, slide, and bend, each with its own characteristic style and execution. Further description for each of these classes is provided below.

Alternate picking: continuously alternating between downward and upward strokes of the guitar strings using a pick.

Legato: playing the notes in a smooth manner, transitioning from one note to the next without leaving any audible space in between.

Tapping: using the fingertips of both the fretting and picking hand on the fretboard to fret notes.

Sweep Picking: using a 'sweeping' motion of the pick across strings resulting in a fast, fluid, and smooth sound.

Vibrato: repeatedly moving a string back and forth using the fretting hand, while holding a note, causing the pitch of the note to fluctuate.

Hammer-on: playing a note and then sharply bringing down a finger of the fretting hand onto the same string at a higher fret than the first note played, without re-plucking the string.

Pull-off: removing a finger of the fretting hand from a previously played note, so that the string keeps ringing and produces a lower note.

Slide: smoothly moving the fretting hand along a string, across multiple frets, while keeping the string pressed down, resulting in a seamless transition between notes.

Bend: stretching a string with the fretting hand after a note has been played, to increase the pitch of the played note.

3.2. Dataset statistics

In this section we provide general information about the dataset with regard to its size and the average duration of each of the nine guitar techniques. The total size of the dataset (folder “data”, see Section 3.3) is 13.6 GB. Table 1 reports the total size of each group of files, and Table 2 the average duration and number of recordings per class.

Table 1.

Total size of each group of files.

| Data description | Number of files | Size | Type of data |
|---|---|---|---|
| Audio data | 549 | 1.2 GB | wav |
| Video data | 549 | 13 GB | mp4 |
| Guitar exercises | 18 | 196 KB | mscz |
| Guitar exercises | 18 | 727 KB | pdf |
| Class mapping | 1 | 90 KB | |
| Train/test splits | 3 | 208 KB | json |

Table 2.

Average duration and number of recordings per class.

| Technique | Avg. duration (s) | Recordings |
|---|---|---|
| Alternate picking | 6.3 | 81 |
| Legato | 5.2 | 81 |
| Tapping | 5.0 | 81 |
| Sweep picking | 5.7 | 36 |
| Vibrato | 8.9 | 54 |
| Hammer-on | 28.7 | 54 |
| Pull-off | 23.4 | 54 |
| Slide | 23.7 | 54 |
| Bend | 3.3 | 54 |
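The per-class figures in the table of average durations and recording counts can be cross-checked with a few lines of arithmetic. The Python sketch below multiplies each class's average duration by its number of recordings, giving roughly 6,294 s (about 1.75 h) of audio in total; since the averages are rounded, this is approximate. It also estimates the raw PCM size at 48 kHz stereo. The 16-bit sample depth is our assumption and is not stated in the article, but under it the estimate lands close to the 1.2 GB reported for the audio files.

```python
# Average duration (s) and number of recordings per class, copied from the statistics table.
STATS = {
    "Alternate picking": (6.3, 81),
    "Legato": (5.2, 81),
    "Tapping": (5.0, 81),
    "Sweep picking": (5.7, 36),
    "Vibrato": (8.9, 54),
    "Hammer-on": (28.7, 54),
    "Pull-off": (23.4, 54),
    "Slide": (23.7, 54),
    "Bend": (3.3, 54),
}


def total_recordings(stats):
    """Total number of recordings over all classes."""
    return sum(n for _, n in stats.values())


def total_duration_s(stats):
    """Approximate total duration: per-class average duration times recording count."""
    return sum(avg * n for avg, n in stats.values())


def pcm_bytes(duration_s, sample_rate=48_000, channels=2, bytes_per_sample=2):
    """Raw PCM payload size, ignoring WAV headers (16-bit samples are an assumption)."""
    return duration_s * sample_rate * channels * bytes_per_sample


if __name__ == "__main__":
    dur = total_duration_s(STATS)
    print(total_recordings(STATS))             # 549
    print(f"{dur:.1f} s = {dur / 3600:.2f} h")  # 6293.7 s = 1.75 h
    print(f"{pcm_bytes(dur) / 1e9:.2f} GB")     # 1.21 GB
```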

3.3. Final data format and file structure

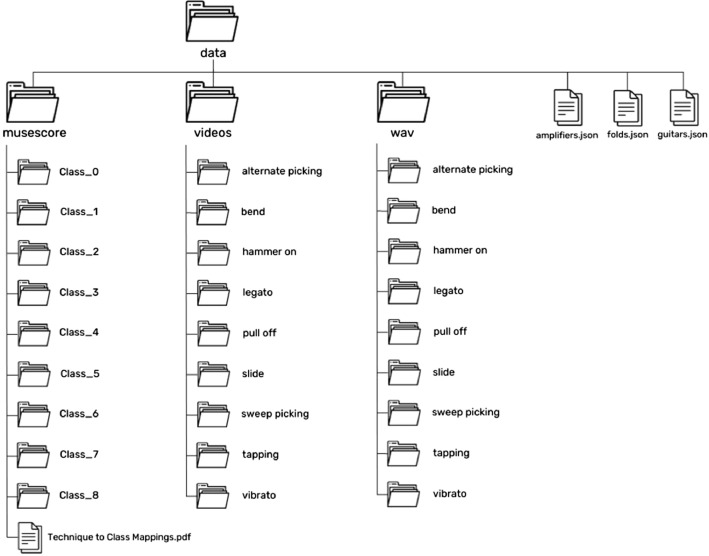

The entire dataset is openly accessible in the repository's data folder, which contains three JSON files and three subfolders: the videos folder contains nine class folders with the corresponding MP4 files; the wav folder contains nine class folders with the corresponding WAV files; and the musescore folder contains nine class folders with the MuseScore files of the exercises used for each technique, together with the associated PDF files. Fig. 1 illustrates the layout of the data directory.

Fig. 1.

Data folder layout.

The filenames of the video and audio samples also contain metadata describing the class, guitar, amplifier, and exercise of each file, in that exact order, separated by underscores. For example, in the file “class_0_carvin_dc-modern_1.wav”, “class_0” corresponds to the alternate picking technique, “carvin” to the guitar, and “dc-modern” to the type of amplifier simulation. The number at the end of the filename indicates the exercise played.
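Because the metadata is encoded positionally in the filename, it can be recovered without a separate index. The following Python sketch splits a WAV or MP4 filename on underscores into class index, guitar, amplifier, and exercise number. It assumes that guitar and amplifier names never contain underscores themselves (true of the “carvin” and “dc-modern” example) and that the class and exercise fields are integers; `SampleInfo` and `parse_sample_name` are illustrative names, not part of the dataset's code. Mapping class indices to technique names should rely on the class-mapping file distributed with the dataset.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class SampleInfo:
    class_id: int   # index in the "class_<id>" prefix, e.g. 0 = alternate picking
    guitar: str     # e.g. "carvin"
    amplifier: str  # e.g. "dc-modern"
    exercise: int   # trailing exercise number


def parse_sample_name(path: str) -> SampleInfo:
    """Parse 'class_<id>_<guitar>_<amplifier>_<exercise>.<ext>' (WAV or MP4)."""
    stem = Path(path).stem  # drop directories and the file extension
    parts = stem.split("_")
    # Assumes guitar and amplifier names never contain underscores ("dc-modern" uses a hyphen).
    if len(parts) != 5 or parts[0] != "class":
        raise ValueError(f"unexpected file name: {path!r}")
    _, class_id, guitar, amplifier, exercise = parts
    return SampleInfo(int(class_id), guitar, amplifier, int(exercise))


if __name__ == "__main__":
    print(parse_sample_name("wav/class_0_carvin_dc-modern_1.wav"))
    # SampleInfo(class_id=0, guitar='carvin', amplifier='dc-modern', exercise=1)
```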

The three JSON files correspond to the three experiments performed to demonstrate the effectiveness of the dataset in developing machine learning models for electric guitar technique recognition, as described in Section 4.3. The folds.json file is used for the first experiment, the guitars.json file for the second, and the amplifiers.json file for the third. Each file lists the file names of the recordings in the train and test sets of each fold.

3.4. Classification results

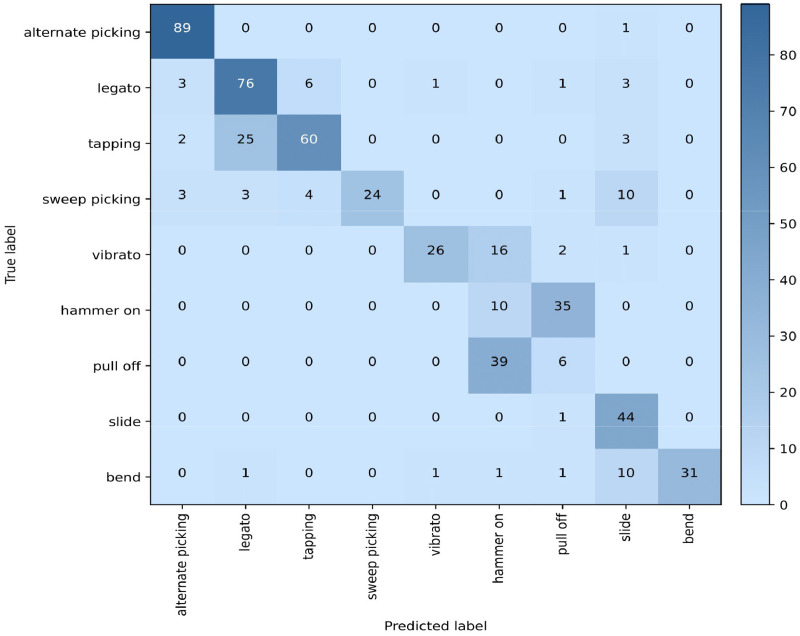

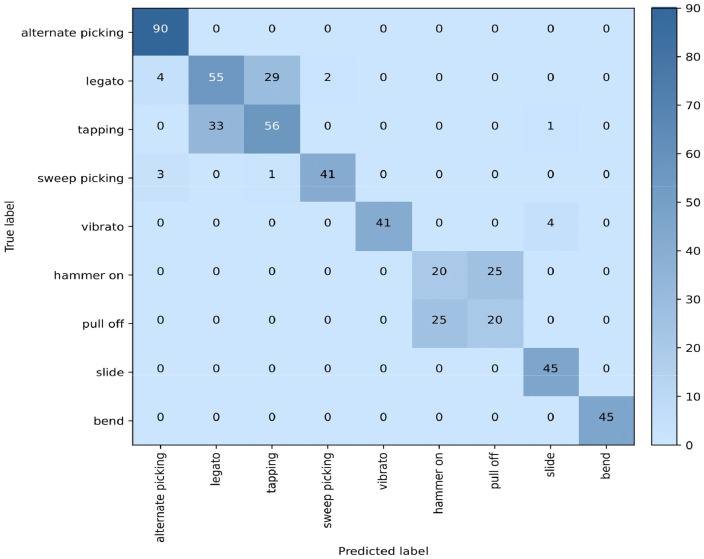

To evaluate the effectiveness of our dataset in developing accurate machine learning models for classifying guitar techniques, we design a Support Vector Machine (SVM) [5] and a Convolutional Neural Network (CNN) classifier. The methodology and configuration of the experiments, as well as the architecture of the two models, are described in Section 4.3. In this subsection we present the results obtained for each of the three experiments and for each model separately. Table 3 summarizes the results, and Figs. 2 and 3 show the aggregated confusion matrices of the first experiment for the SVM and the CNN approach, respectively.

Table 3.

Classification results.

| Data split | Method | Accuracy (%) | F1-score (%) (std) |
|---|---|---|---|
| Experiment 1 | SVM | 67.8 | 62.3 (11.6) |
| Experiment 2 | SVM | 84.2 | 81.2 (2.0) |
| Experiment 3 | SVM | 83.4 | 81.9 (5.0) |
| Experiment 1 | CNN | 76.5 | 76.9 (8.0) |
| Experiment 2 | CNN | 81.1 | 83.1 (1.2) |
| Experiment 3 | CNN | 80.7 | 82.3 (3.7) |

Fig. 2.

Experiment 1 (SVM) - Aggregated Confusion Matrix across all folds.

Fig. 3.

Experiment 1 (CNN) - Aggregated Confusion Matrix across all folds.
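The aggregated confusion matrices of Figs. 2 and 3 pool the predictions of all folds of Experiment 1. A minimal NumPy sketch of that kind of summary follows: it builds one confusion matrix per fold, sums them, and reports pooled accuracy along with the mean and standard deviation of the per-fold F1-score. The article does not state how the F1-score is averaged over classes or whether accuracy is pooled or averaged over folds, so the macro averaging and pooled accuracy used here are assumptions, and the function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def macro_f1(cm):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN); empty classes count as 0."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = 2 * tp + fp + fn
    f1 = np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)
    return float(f1.mean())


def summarize_folds(fold_predictions, n_classes=9):
    """fold_predictions: list of (y_true, y_pred) pairs, one per fold."""
    cms = [confusion_matrix(t, p, n_classes) for t, p in fold_predictions]
    aggregated = np.sum(cms, axis=0)  # pooled confusion matrix across folds
    accuracy = np.trace(aggregated) / aggregated.sum()
    f1s = np.array([macro_f1(cm) for cm in cms])
    return aggregated, float(accuracy), float(f1s.mean()), float(f1s.std())
```

Summing the per-fold matrices weights each fold by its test-set size, which is what a single "aggregated" matrix shows; the F1 standard deviation, in contrast, describes the spread between folds.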

4. Experimental Design, Materials and Methods

4.1. Experimental design

To compile this dataset, a guitar player is recruited to perform specific exercises for each of the nine classes. The guitarist carefully chooses the exercises to maximize the variety of the resulting sounds. The exercises are performed using three distinct guitars and three amplifier simulations. The recordings are made indoors, in a household environment, using a smartphone. This setup aims to replicate the scenario of a student learning guitar at home with an application, developed on the basis of this dataset, that helps them master various playing techniques. The data is initially recorded in video format, and the corresponding audio files are subsequently extracted from the videos. The goal is to generate valuable data for developing educational applications that teach guitar playing techniques. Besides audio, the dataset therefore includes video files, facilitating the development of applications that assess the student's body posture and hand placement and provide comprehensive guidance. Finally, the MuseScore files of the exercises are provided, enabling others to record and add data generated in a similar manner.

4.2. Materials and methods (e.g. data gathering process, guitars, recording devices, tools)

The Xiaomi Redmi Note 8 smartphone is used to capture the video stream and generate the MP4 video samples of the dataset. Subsequently, from these video samples we extract the audio stream in a stereo WAV format with a sampling rate of 48000 Hz, using the FFmpeg software. In total we utilize three different electric guitars, all of them equipped with humbucker pickups. These include the River West Guitars (RWG), the Carvin DC-400, and the Palm Bay Cyclone P7-XR. These three guitars provide a range of sounds for our study, allowing us to analyze the guitar-playing techniques across multiple contexts. To simulate three different amplifiers and further enhance the sound, we use an amplifier simulation profiler, Avid's Eleven Rack. More specifically, by selecting the amplifier simulations named SL100, Plexiglass, and DC-Modern, we simulate Soldano, Vox, and Marshall amplifiers, respectively. Below we describe in more detail the adopted procedure for generating the samples of each class.
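The audio extraction step can be scripted along the following lines. This is a sketch, not the authors' exact command: the article states only that FFmpeg produces stereo 48 kHz WAV files, so the 16-bit PCM codec (`pcm_s16le`) and the helper names are assumptions. The `-vn`, `-acodec`, `-ar`, and `-ac` options are standard FFmpeg flags that drop the video stream and set the audio codec, sample rate, and channel count; running `extract_audio` requires the `ffmpeg` executable on the `PATH`.

```python
import subprocess
from pathlib import Path


def ffmpeg_extract_cmd(video, wav, sample_rate=48_000, channels=2):
    """Build an FFmpeg command that writes the audio of an MP4 file to a WAV file."""
    return [
        "ffmpeg",
        "-y",                     # overwrite an existing output file
        "-i", str(video),         # input MP4 recording
        "-vn",                    # discard the video stream
        "-acodec", "pcm_s16le",   # uncompressed 16-bit PCM (assumed sample format)
        "-ar", str(sample_rate),  # 48 kHz, as stated in the article
        "-ac", str(channels),     # stereo
        str(wav),
    ]


def extract_audio(video, wav):
    """Run FFmpeg; requires the ffmpeg executable on PATH."""
    Path(wav).parent.mkdir(parents=True, exist_ok=True)
    subprocess.run(ffmpeg_extract_cmd(video, wav), check=True)
```

Returning the argument list separately from running it keeps the command easy to inspect and avoids shell quoting issues with file names.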

Alternate picking, Legato, Tapping: Three exercises are executed for each of these three techniques, each one starting from three distinct positions. Consequently, for every combination of amplifier and guitar, the guitarist performs nine takes of each technique.

Sweep picking: Two exercises are performed. The first exercise is started from three different positions, whereas the second is performed only as written. This means that for each unique combination of amplifier and guitar, the guitarist performs four takes.

Vibrato: Three exercises are performed. The first exercise consists of notes played only with vibrato, starting from the second string and ending on the fifth string. The second and third exercises are licks that appear frequently in recorded guitar music. Therefore, the guitarist performs six takes for each unique combination of amplifier and guitar.

Hammer-on, Pull-off: One exercise is performed for each of these two techniques, starting once on each string. In this case, the guitarist performs six takes for each unique combination of amplifier and guitar.

Slide: To use as much of the guitar's fretboard as possible, we perform a major scale in ascending and descending order starting from the second fret. This exercise is repeated on all strings. Therefore, the guitarist performs six takes for each unique combination of amplifier and guitar.

Bend: This technique is the most challenging. In related publications, the bends adopted span at most two semitones. To achieve the widest possible range for this technique, we write an exercise in which every note is played with a bend, with intervals ranging from a semitone up to three tones. Hence, the guitarist performs six takes for each unique combination of amplifier and guitar.
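The per-class recording counts follow directly from these exercise descriptions: the number of takes per guitar/amplifier combination, multiplied by the 3 × 3 = 9 combinations. The short Python sketch below reproduces the counts in the dataset statistics and the total of 549 recordings. For vibrato, splitting the six takes into four (strings two to five) for the first exercise plus one per lick is our inference from the text; for bend, only the total of six takes is stated.

```python
# Three guitars x three amplifier simulations.
COMBINATIONS = 3 * 3

# Takes per guitar/amplifier combination, as described for each class.
TAKES_PER_COMBINATION = {
    "Alternate picking": 3 * 3,  # 3 exercises x 3 starting positions
    "Legato": 3 * 3,
    "Tapping": 3 * 3,
    "Sweep picking": 3 + 1,      # exercise 1 from 3 positions, exercise 2 once as written
    "Vibrato": 4 + 1 + 1,        # inferred: strings 2-5 for exercise 1, plus two licks
    "Hammer-on": 6,              # one start per string
    "Pull-off": 6,
    "Slide": 6,                  # major scale repeated on every string
    "Bend": 6,                   # total stated in the text
}


def recordings_per_class():
    """Recordings per class = takes per combination x number of combinations."""
    return {name: takes * COMBINATIONS for name, takes in TAKES_PER_COMBINATION.items()}


if __name__ == "__main__":
    counts = recordings_per_class()
    print(counts["Alternate picking"], counts["Sweep picking"], counts["Vibrato"])  # 81 36 54
    print(sum(counts.values()))  # 549
```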

4.3. Machine learning experiments

In this subsection we present the adopted training setup for the experiments, and the two different approaches. The first approach involves the development of an SVM classifier, and the second approach a Convolutional Neural Network. In the experiments we use only the audio WAV files of the dataset, resampled to a sampling rate of 8000 Hz.
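Both approaches start from 8 kHz audio cut into 1 s segments. One way to do this in Python is shown below, using SciPy's polyphase resampler (`scipy.signal.resample_poly`), which converts 48 kHz to 8 kHz exactly with an up/down factor of 1/6. The article does not specify how the stereo channels are combined, which resampling filter is used, or what happens to a trailing partial segment; averaging the channels and dropping the remainder are assumptions of this sketch, and the function names are illustrative.

```python
import numpy as np
from scipy.io import wavfile
from scipy.signal import resample_poly


def to_mono_8k(audio, sample_rate=48_000, target_rate=8_000):
    """Downmix (n_samples, n_channels) audio to mono, then resample with a polyphase filter."""
    x = np.asarray(audio, dtype=np.float64)
    if x.ndim == 2:
        x = x.mean(axis=1)  # simple channel average (assumed downmix)
    g = int(np.gcd(sample_rate, target_rate))
    return resample_poly(x, target_rate // g, sample_rate // g)  # 48 kHz -> 8 kHz: up=1, down=6


def segment(x, sample_rate=8_000, seg_seconds=1.0):
    """Split a 1-D signal into non-overlapping segments; a trailing partial segment is dropped."""
    n = int(round(seg_seconds * sample_rate))
    usable = (len(x) // n) * n
    return x[:usable].reshape(-1, n)


def load_segments(path):
    """Read a dataset WAV file and return an (n_segments, 8000) array."""
    rate, data = wavfile.read(path)  # stereo files give data of shape (n_samples, 2)
    return segment(to_mono_8k(data, sample_rate=rate))
```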

Support Vector Machine (SVM): In this approach, the audio WAV files of the dataset are split into 1 s segments. A 136-dimensional feature vector is extracted from each segment using the pyAudioAnalysis [6] library, by computing the coordinate-wise mean and standard deviation of the segment's twenty 68-dimensional short-term feature vectors. The short-term feature vectors are extracted with a non-overlapping 50 ms window. These 136-dimensional feature vectors are the input to the SVM model. We use the Radial Basis Function (RBF) kernel, and for each experiment the C and gamma parameters are estimated with a grid search over the sets [0.1, 1, 10, 50, 100, 1000] and [0.0001, 0.001, 0.01, 0.1, 1, 10], respectively.
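The segment-level features and the hyperparameter search described above can be sketched with NumPy and scikit-learn. `segment_vector` turns a 68 × 20 matrix of short-term features (68 features for each of the 20 non-overlapping 50 ms frames in a 1 s segment) into the 136-dimensional vector of per-feature means followed by per-feature standard deviations. The 68 short-term features are presumably pyAudioAnalysis's 34 base features plus their deltas, but the sketch takes the matrix as given rather than calling the library. `fit_svm` runs a grid search over the C and gamma values listed in the text. Standardizing the features before the RBF kernel and using 3-fold inner cross-validation are our assumptions, not details from the article.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def segment_vector(short_term):
    """Map a (68, 20) short-term feature matrix (68 features x 20 frames of 50 ms)
    to a 136-dim vector: per-feature means followed by per-feature standard deviations."""
    st = np.asarray(short_term, dtype=float)
    return np.concatenate([st.mean(axis=1), st.std(axis=1)])


# C and gamma grids as listed in the text; "svc__" addresses the SVC step of the pipeline.
PARAM_GRID = {
    "svc__C": [0.1, 1, 10, 50, 100, 1000],
    "svc__gamma": [0.0001, 0.001, 0.01, 0.1, 1, 10],
}


def fit_svm(X, y, cv=3):
    """Grid-search an RBF SVM; feature standardization and 3-fold inner CV are assumptions."""
    pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
    search = GridSearchCV(pipeline, PARAM_GRID, cv=cv)
    search.fit(X, y)
    return search
```

RBF kernels compare Euclidean distances, so features on very different scales (e.g. energies versus spectral frequencies) would otherwise dominate; this is why the sketch places a scaler in the same pipeline, where it is refit on each training split.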

Convolutional Neural Networks (CNN): In this case, the inputs to the model are mel-spectrograms extracted from the 1 s audio segments with a Short-Time Fourier Transform window of 50 ms and no overlap, using 128 mel-frequency bins. These parameters yield a 2D representation of size 20 × 128 (time frames × frequency bins) for each 1 s audio segment. The CNN consists of 4 convolutional layers followed by a 3-layer linear classifier, mapping the input spectrograms to a 9-dimensional output vector corresponding to the nine electric guitar techniques. Each convolutional layer uses 5 × 5 kernels with a stride of 1 × 1 and padding of 2, which keeps the spatial dimensions unchanged. The number of channels doubles in each convolutional layer, from 32 to 256. Each convolution is followed by 2D Batch Normalization and a LeakyReLU activation. Additionally, the spatial dimensions are halved after each convolutional layer by 2D Max Pooling with a 2 × 2 kernel. The output of the last convolutional layer is a feature map of size 256 × 1 × 8, which, when flattened, yields a 2048-dimensional feature vector. Three linear layers map this 2048-dimensional vector to the final 9-dimensional vector. Each linear layer consists of a linear mapping followed by a LeakyReLU activation, and their output dimensions are 1048, 256, and 9.
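The tensor sizes stated above can be checked with the standard output-size formulas for convolution, floor((n + 2p - k) / s) + 1, and for non-overlapping max pooling. The Python sketch below traces a 20 × 128 input through the four conv/pool stages and confirms the 256 × 1 × 8 feature map and its 2048-dimensional flattening; note that the time axis goes 20 → 10 → 5 → 2 → 1 because pooling rounds down. It also derives the 20 time frames from 8 kHz audio and a 50 ms non-overlapping window (400 samples), assuming the STFT adds no padding at the segment edges. The function names are illustrative.

```python
def conv2d_out(size, kernel=5, stride=1, padding=2):
    """Output length of one convolution axis: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def maxpool2d_out(size, kernel=2):
    """Output length of one max-pooling axis with stride equal to the kernel (floor mode)."""
    return (size - kernel) // kernel + 1


def frames_per_segment(sample_rate=8_000, segment_s=1.0, window_s=0.05):
    """Non-overlapping STFT frames per segment (hop == window, no edge padding assumed)."""
    return int(round(segment_s * sample_rate)) // int(round(window_s * sample_rate))


def trace_cnn(time_frames=20, mel_bins=128, channels=(32, 64, 128, 256)):
    """Return the (channels, time, freq) shape after each conv + pool stage."""
    t, f = time_frames, mel_bins
    shapes = []
    for c in channels:
        t, f = conv2d_out(t), conv2d_out(f)        # 5x5, stride 1, padding 2: size unchanged
        t, f = maxpool2d_out(t), maxpool2d_out(f)  # 2x2 pooling halves each axis (rounding down)
        shapes.append((c, t, f))
    return shapes


if __name__ == "__main__":
    for shape in trace_cnn(frames_per_segment()):
        print(shape)
    # (32, 10, 64)
    # (64, 5, 32)
    # (128, 2, 16)
    # (256, 1, 8)  -> flattened: 256 * 1 * 8 = 2048
```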

To minimize dependencies between the train and test sets arising from particularities such as the exercise, the guitar, or the amplifier type, we conduct three independent experiments, each representing a different "leave-one-out" validation setup:

Experiment 1: We generate 5 folds of train/test splits. Each fold is created by holding out one exercise and placing it in the test set. For instance, in the alternate picking class, we keep 8 of the 9 exercises in the training set and place the remaining one in the test set for each fold. The other classes are treated similarly to obtain the 5 folds. In each fold, the training set consists of 441 guitar recordings and the remaining 108 belong to the test set. The purpose of this experiment is to validate the ability of the models to generalize independently of the exercise.

Experiment 2: For the second experiment, we split the dataset by placing all recordings of a particular guitar in the test set of each fold. We therefore create 3 train/test splits, each corresponding to the guitar whose recordings constitute the test set. Each training set consists of 366 recordings, and each test set of the remaining 183. This experiment validates the ability of the recognition methods to generalize independently of the guitar type.

Experiment 3: For the third and last experiment, we split the dataset by removing all recordings of a particular amplifier from the training set of each fold. Hence, as in the case of the guitars, we create 3 train/test splits, with 366 training and 183 test recordings per fold. This experiment validates the ability of the machine learning methods to generalize independently of the amplifier type.
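The official splits for Experiments 2 and 3 are provided in guitars.json and amplifiers.json. For sanity checks, or to regenerate equivalent splits, a leave-one-group-out partition can be derived from the filename metadata alone, as in the following Python sketch. It assumes the underscore-separated naming scheme described in Section 3.3 (class, guitar, amplifier, exercise); `field_value` and `leave_one_group_out` are hypothetical helper names.

```python
from collections import defaultdict
from pathlib import Path

# Position of each metadata field in "class_<id>_<guitar>_<amplifier>_<exercise>".
FIELD_INDEX = {"guitar": 2, "amplifier": 3}


def field_value(file_name, field):
    """Read the guitar or amplifier name from a dataset file name."""
    return Path(file_name).stem.split("_")[FIELD_INDEX[field]]


def leave_one_group_out(file_names, field):
    """Return {held_out_value: (train_files, test_files)}, one split per guitar or amplifier."""
    groups = defaultdict(list)
    for name in file_names:
        groups[field_value(name, field)].append(name)
    splits = {}
    for held_out, test_files in groups.items():
        train_files = [n for n in file_names if field_value(n, field) != held_out]
        splits[held_out] = (train_files, test_files)
    return splits
```

Since every class has the same number of takes for each guitar/amplifier combination, each guitar (and each amplifier simulation) accounts for 549 / 3 = 183 recordings, which matches the 366/183 train/test sizes reported above.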

Limitations

The main limitation of the dataset is that the recordings were produced by a single guitar player, which may hinder the development of large-scale machine learning models that capture the variety of playing styles across players. By providing the MuseScore files, we encourage future users of the dataset to add more samples that capture a diverse range of individual playing styles.

Ethics Statement

Our study does not involve experiments on animals or humans. Therefore, we confirm that our research strictly adheres to the ethical guidelines for authors provided by Data in Brief.

CRediT authorship contribution statement

Alexandros Mitsou: Conceptualization, Data curation, Writing – review & editing, Writing – original draft, Resources. Antonia Petrogianni: Methodology, Software, Writing – review & editing, Writing – original draft. Eleni Amvrosia Vakalaki: Methodology, Software, Writing – review & editing, Writing – original draft. Christos Nikou: Methodology, Software, Writing – review & editing, Writing – original draft. Theodoros Psallidas: Data curation, Software, Writing – review & editing, Writing – original draft. Theodoros Giannakopoulos: Conceptualization, Methodology, Supervision, Writing – review & editing.

Acknowledgments


This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Contributor Information

Alexandros Mitsou, Email: amitsou@iit.demokritos.gr.

Antonia Petrogianni, Email: a.petrogianni@iit.demokritos.gr.

Eleni Amvrosia Vakalaki, Email: evakalaki@iit.demokritos.gr.

Christos Nikou, Email: chrisnick@iit.demokritos.gr.

Theodoros Psallidas, Email: tpsallidas@iit.demokritos.gr.

Theodoros Giannakopoulos, Email: tyianak@iit.demokritos.gr.

Data Availability

Guitar Style Dataset (Original data) (Zenodo).

References

1. Xi Q., Bittner R.M., Pauwels J., Ye X., Bello J.P. GuitarSet: a dataset for guitar transcription. ISMIR, September 2018, pp. 453–460.

2. Lostanlen V., Andén J., Lagrange M. Extended playing techniques: the next milestone in musical instrument recognition. Proceedings of the 5th International Conference on Digital Libraries for Musicology, September 2018, pp. 1–10.

3. Su L., Yu L.F., Yang Y.H. Sparse cepstral, phase codes for guitar playing technique classification. ISMIR, October 2014, pp. 9–14.

4. Kehling C., Abeßer J., Dittmar C., Schuller G. Automatic tablature transcription of electric guitar recordings by estimation of score- and instrument-related parameters. DAFx, September 2014, pp. 219–226.

5. Noble W.S. What is a support vector machine? Nat. Biotechnol. 2006;24(12):1565–1567. doi: 10.1038/nbt1206-1565.

6. Giannakopoulos T. pyAudioAnalysis: an open-source Python library for audio signal analysis. PLoS One. 2015;10(12). doi: 10.1371/journal.pone.0144610.
