Abstract

Purpose

To train an explainable deep learning model for patient reidentification in chest radiograph datasets and assess changes in model-perceived patient identity as a marker for emerging radiologic abnormalities in longitudinal image sets.

Materials and Methods

This retrospective study used a set of 1 094 537 frontal chest radiographs and free-text reports from 259 152 patients obtained from six hospitals between 2006 and 2019, with validation on the public ChestX-ray14, CheXpert, and MIMIC-CXR datasets. A deep learning model was trained for patient reidentification and assessed on patient identity confirmation, retrieval of patient images from a database based on a query image, and radiologic abnormality prediction in longitudinal image sets. The representation learned was incorporated into a generative adversarial network, allowing visual explanations of the relevant features. Performance was evaluated with sensitivity, specificity, F1 score, Precision at 1, R-Precision, and area under the receiver operating characteristic curve (AUC) for normal and abnormal prediction.

Results

Patient reidentification was achieved with a mean F1 score of 0.996 ± 0.001 (2 SD) on the internal test set (26 152 patients) and F1 scores of 0.947–0.993 on the external test data. Database retrieval yielded a mean Precision at 1 score of 0.976 ± 0.005 at 299 × 299 resolution on the internal test set and Precision at 1 scores between 0.868 and 0.950 on the external datasets. Patient sex, age, weight, and other factors were identified as key model features. Longitudinal change in the learned identity representation predicted abnormality with an AUC of 0.73 ± 0.01, versus an AUC of 0.58 ± 0.01 for age prediction error.

Conclusion

The image features used by a deep learning patient reidentification model for chest radiographs corresponded to intuitive human-interpretable characteristics, and changes in these identifying features over time may act as markers for an emerging abnormality.

Keywords: Conventional Radiography, Thorax, Feature Detection, Supervised Learning, Convolutional Neural Network, Principal Component Analysis

Supplemental material is available for this article.

© RSNA, 2023

See also the commentary by Raghu and Lu in this issue.


Summary

The authors present a visually interpretable neural network model for patient reidentification from chest radiographs, identify key features determining the patient identity decision, and demonstrate its potential use as a longitudinal patient health biomarker.

Key Points

■ A neural network model for patient reidentification from chest radiographs was trained on a large dataset of longitudinal images and assessed on the tasks of patient confirmation and database image retrieval; the model achieved Precision at 1 scores of 0.976 on the internal test set and 0.868–0.950 on the external test data without fine-tuning.

■ The model was combined with a generative adversarial network to allow visual exploration of the identity-relevant features learned by the model, and human expert analysis showed that these features corresponded with intuitive factors, such as sex, age, body weight, and body habitus.

■ The degree of change in patient identity representation in longitudinal image sets may serve as a marker for abnormality and may help improve performance over single-image abnormality prediction from the learned feature representation.

Introduction

Machine learning has made rapid progress in medical imaging applications across a range of image processing and diagnostic tasks. Recent work has demonstrated clinical applications of deep learning methods in chest radiograph triage (1,2) and abnormality classification (3,4), offering potential benefits to overstretched health services. Ongoing development depends in part on the availability of public datasets of anonymized patient images to provide larger and more diversified data for training and evaluation of models on a reproducible basis. To ensure patient confidentiality when sharing imaging data, great efforts are made to anonymize Digital Imaging and Communications in Medicine (DICOM) file metadata and identifying text in images (5–7); however, recent work has demonstrated that computer vision algorithms can identify potentially sensitive patient information directly from chest radiograph image data, such as patient sex (8), age (9–11), ethnicity (12), and identity matches in public databases (13). The black-box nature of many models can obscure the prediction mechanism, making such information leakage difficult to mitigate. This difficulty raises questions for the research community regarding patient confidentiality measures but also opens new avenues for patient health evaluation. For example, on chest radiographs, patient chronologic age prediction error has been shown to act as a marker for cardiovascular health (14), and estimated biologic age has been shown to improve predictive performance in a multivariable risk factor model of cardiovascular and all-cause patient mortality (15). The relative benefits of new machine learning developments versus potential privacy concerns should therefore be carefully considered (16).

In this work, we aimed to introduce and evaluate an explainable deep learning approach to patient reidentification in chest radiograph datasets; we evaluated its ability to reidentify patients in several large public datasets and attempted to visually interpret the identity-relevant anatomic features learned by the model. Although reidentification has recently been successfully demonstrated by Packhäuser et al (13), using the National Institutes of Health ChestX-ray14 dataset (17), with identification of relevant image areas via gradient-weighted class activation mapping (18), we aimed to achieve a more detailed understanding of the predictive mechanism using explanation through synthetic counterfactual images (19,20) generated by a generative adversarial network (GAN) (21) to explore the principal features in the learned identity representation. We further investigated whether change in identity representation over time in a normative model setting acts as a longitudinal marker for emergence of abnormalities and whether incorporating this longitudinal signal can improve on the Patient Contrastive Learning of Representations (22) approach of prediction directly from the identity representation of individual images.

Materials and Methods

Datasets

For this retrospective study, we used 2 775 902 chest radiograph DICOM files gathered by six UK hospitals between 2006 and 2019 following national governance (Governance Arrangements for Research Ethics Committees) and National Health Service data opt-out procedures; the data comprised nonidentifiable information previously collected from patients as part of their care and support. All available chest radiograph DICOM files were extracted from the hospitals’ central picture archiving and communication systems for this period, thus representing the range of specialties and populations served by the participating institutions rather than being sourced from a particular clinical setting (eg, emergency medicine). Images were automatically labeled for 37 radiologic findings by natural language processing of the original radiologist’s free-text report (Appendix S1). After excluding images from patients younger than 16 years (n = 105 543), nonfrontal chest radiographs (n = 18 299), corrupt DICOM files (n = 13), derived images (ie, generated from annotations of a primary image and saved in the picture archiving and communication systems by the reporting radiologist) (n = 165 190), images lacking radiologist reports (n = 590 320), and images lacking a pseudoanonymized patient identification number or for which only one scan was available (n = 802 000), we retained 1 094 537 images from 259 152 unique patients for model training and evaluation (Fig 1). These images were split by patient identity into training (85% [929 169 images]), validation (5% [55 448 images]), and test (10% [109 920 images]) sets.
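Splitting by patient identity, rather than by image, keeps every image of a given patient in a single subset, so test-set patients are never seen during training. A minimal sketch of such a split follows; the function name `split_by_patient`, the fixed seed, and the choice to apply the 85/5/10 proportions to patients (so that image proportions only approximately match) are assumptions for illustration, not the study's code.

```python
import random
from collections import defaultdict


def split_by_patient(image_patient_ids, train_frac=0.85, val_frac=0.05, seed=0):
    """Assign each image index to 'train', 'val' or 'test' with no patient in two splits.

    image_patient_ids: one (hypothetical, pseudonymized) patient ID per image.
    The fractions apply to patients; the test split receives the remainder.
    """
    patients = sorted(set(image_patient_ids))  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(patients)
    n_train = int(train_frac * len(patients))
    n_val = int(val_frac * len(patients))

    split_of = {}
    for rank, pid in enumerate(patients):
        if rank < n_train:
            split_of[pid] = "train"
        elif rank < n_train + n_val:
            split_of[pid] = "val"
        else:
            split_of[pid] = "test"

    # Every image inherits the split of its patient.
    splits = defaultdict(list)
    for image_index, pid in enumerate(image_patient_ids):
        splits[split_of[pid]].append(image_index)
    return dict(splits)
```

Because assignment is made per patient, the reported image percentages (85%, 5%, 10%) would hold only approximately, as patients contribute different numbers of images.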

Figure 1:

Flowchart of data collection inclusion and exclusion criteria and training splits for the internal dataset. Derived images refers to images saved by the reporting radiologist in the picture archiving and communication system derived from an original primary image (eg, saved with image annotations or contrast enhancements made during analysis). DICOM = Digital Imaging and Communications in Medicine, ID = identification, y.o. = years old.

For external test data, we used three publicly available datasets: National Institutes of Health ChestX-ray14 (17) (https://nihcc.app.box.com/v/ChestXray-NIHCC), comprising 112 120 images from 30 805 patients at the National Institutes of Health Clinical Center from 1992 through 2015; CheXpert (23), comprising 224 316 images from 65 240 patients at Stanford Hospital from 2002 through 2017; and PhysioNet’s (24) MIMIC-CXR (25), comprising 377 110 images from 65 379 patients at the Beth Israel Deaconess Medical Center Emergency Department from 2011 through 2016. After removing nonfrontal radiographs and patients with only single images, ChestX-ray14 contained 91 222 images from 12 801 patients, CheXpert contained 158 237 images from 31 744 patients, and MIMIC-CXR contained 218 262 images from 35 258 patients. Abnormality labels were extracted from the provided free-text reports for MIMIC-CXR using our natural language processing system, while for ChestX-ray14 and CheXpert, the sets of 14 natural language processing–derived labels provided in each case were used.

Reidentification Model

To train our patient reidentification models, we adopted a deep metric learning approach to cluster images by patient identity in a low-dimensional feature representation. EfficientNet-B3 (26) was used as a convolutional neural network (CNN) backbone outputting 1536 features from its final AdaptiveAvgPool2d layer, followed by a fully connected head projecting the features into an N-dimensional representation; dimensionalities ranging from four to 128 were investigated. For each experiment, the CNN layers were initialized with the public ImageNet-pretrained weights, and the fully connected head was initialized with Xavier initialization. The model was trained end to end to optimize triplet loss (27) with online triplet mining, using an Adam optimizer with an initial learning rate of 1e-3, weight decay of 1e-4, and a global learning rate decay factor of 0.5 applied after five epochs without improvement. Training was terminated after 10 epochs without validation set loss improvement, and the model with the lowest validation loss was used to report test set results. Code was implemented in PyTorch, version 1.8.0 (Linux Foundation), with the triplet loss function and the online batch miner for sampling valid triplets within each training minibatch implemented from the PyTorch Metric Learning library (https://kevinmusgrave.github.io/pytorch-metric-learning). Models were trained on all eight V100 graphics processing units (GPUs) of an Nvidia DGX-1 server with a total GPU memory of 256 GB.
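The triplet objective pulls embeddings of the same patient together and pushes different patients at least a margin apart: for an anchor a, positive p, and negative n, the per-triplet loss is max(0, d(a, p) − d(a, n) + margin). The NumPy sketch below evaluates this over all valid triplets in a minibatch and averages over the triplets with nonzero loss, one common form of online mining. It is illustrative only: the study used the PyTorch Metric Learning implementation, and the margin value and L2 normalization shown here are assumptions, as the text reports neither.

```python
import numpy as np


def pairwise_euclidean(emb):
    """Matrix of Euclidean distances between all rows of emb."""
    sq = np.sum(emb ** 2, axis=1)
    d2 = sq[:, None] - 2.0 * emb @ emb.T + sq[None, :]
    return np.sqrt(np.maximum(d2, 0.0))  # clip tiny negatives caused by round-off


def batch_all_triplet_loss(emb, labels, margin=0.2, normalize=True):
    """Mean triplet margin loss over all valid in-batch triplets with positive loss.

    emb: (B, N) embeddings; labels: (B,) patient IDs.
    margin and normalize are assumed values, not taken from the study.
    """
    emb = np.asarray(emb, dtype=float)
    if normalize:  # optional L2 normalization onto the unit sphere
        emb = emb / np.maximum(np.linalg.norm(emb, axis=1, keepdims=True), 1e-12)
    labels = np.asarray(labels)
    dist = pairwise_euclidean(emb)

    same = labels[:, None] == labels[None, :]
    pos = same & ~np.eye(len(labels), dtype=bool)  # (anchor, positive): same patient, other image
    neg = ~same                                    # (anchor, negative): different patient

    # loss[a, p, n] = d(a, p) - d(a, n) + margin, kept only for valid triplets
    loss = dist[:, :, None] - dist[:, None, :] + margin
    valid = pos[:, :, None] & neg[:, None, :]
    loss = np.where(valid, np.maximum(loss, 0.0), 0.0)

    active = loss > 0.0  # triplets that still violate the margin
    return float(loss[active].mean()) if active.any() else 0.0
```

This brute-force version uses O(B³) memory, so it suits only small minibatches; it is meant to show the arithmetic of the objective rather than an efficient miner.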

After training, we calculated the Euclidean distances (ie, the straight-line distances between two image representations) in the representation space for 10 000 pairs of same-identity images and 10 000 pairs of different-identity images from the validation set. These distances were used as the predictor in a logistic regression model of the probability that two images belong to the same patient.
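With a single predictor, the fitted model reduces to P(same patient | d) = σ(b0 + b1·d), where b1 is expected to be negative. The sketch below fits it by Newton-Raphson iteration as a stand-in for a library routine; the function names and the synthetic distances in the usage check are hypothetical.

```python
import numpy as np


def sigmoid(z):
    # Numerically stable logistic function: 1 / (1 + exp(-z)) == 0.5 * (1 + tanh(z / 2))
    return 0.5 * (1.0 + np.tanh(0.5 * z))


def fit_distance_logistic(distances, same_patient, n_iter=100, tol=1e-10):
    """Fit P(same patient | d) = sigmoid(b0 + b1 * d) by Newton-Raphson."""
    d = np.asarray(distances, dtype=float)
    y = np.asarray(same_patient, dtype=float)
    X = np.column_stack([np.ones_like(d), d])  # intercept column plus distance
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = sigmoid(X @ beta)
        grad = X.T @ (y - p)                                          # gradient of log-likelihood
        hess = X.T @ (X * (p * (1.0 - p))[:, None]) + 1e-9 * np.eye(2)  # information matrix (tiny ridge)
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def same_patient_probability(beta, distances):
    return sigmoid(beta[0] + beta[1] * np.asarray(distances, dtype=float))
```

Applying the 0.50 decision boundary described in the statistical analysis then amounts to `same_patient_probability(beta, d) >= 0.5`.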

GAN-based Model Explainability

We incorporated our pretrained patient identity model into a GAN (21), allowing us to generate hypothetical images conditional on a particular patient identity and thus visually explore the representation space. We based our generative model on the official PyTorch StyleGAN2 (28) implementation (https://github.com/NVlabs/stylegan2-ada-pytorch), adapted for training with our reidentification model as an auxiliary classifier, as shown in Figure 2. During training, a target identity representation r sampled from the training data was concatenated to the random latent noise input w, and the mean squared difference between the target identity and the model-perceived identity representation of the generated image was added to the standard GAN realism loss term. The GAN model was trained at 256 × 256 resolution over 25 million training image exposures, and the resulting generated image quality was evaluated with the Fréchet inception distance score.
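The generator objective described above can be sketched as a sum of two terms. The example assumes that the "standard GAN realism loss term" is the non-saturating logistic loss, softplus(−D(G(w, r))), used by StyleGAN2; the weighting `identity_weight` and the 512-dimensional noise in the usage check are hypothetical, since the text reports neither. Arrays stand in for network outputs, so the sketch shows only the loss arithmetic, not training.

```python
import numpy as np


def conditioned_input(w, r):
    """Concatenate latent noise w (B, Dw) with the target identity representation r (B, Dr)."""
    return np.concatenate([w, r], axis=1)


def generator_loss(d_fake_logits, r_target, r_perceived, identity_weight=1.0):
    """Non-saturating logistic GAN loss plus identity-consistency mean squared error.

    d_fake_logits:   discriminator logits for generated images G(w, r)
    r_target:        identity representations the generator was conditioned on
    r_perceived:     R(G(w, r)), output of the frozen reidentification network
    identity_weight: hypothetical weighting; not reported in the study
    """
    realism = np.mean(np.logaddexp(0.0, -np.asarray(d_fake_logits, dtype=float)))  # softplus(-D)
    identity = np.mean((np.asarray(r_perceived, dtype=float) - np.asarray(r_target, dtype=float)) ** 2)
    return realism + identity_weight * identity, realism, identity
```

When the frozen network perceives exactly the requested identity, the identity term vanishes and only the realism term drives the generator.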

Figure 2:

Graph summarizing the method. 1, The reidentification network R is trained with triplet loss to cluster images from the same patient in an N-dimensional embedding space. 2, The test dataset is projected into the embedding space; we show a two-dimensional (2D) t-distributed stochastic neighbor embedding (t-SNE) projection of real 32-dimensional embeddings for 14 test set patients with more than eight available images, illustrating the interclass separation and intraclass dispersion in representations. The t-SNE plot is for visualization purposes only; the original N-dimensional embeddings were used for downstream tasks. t-SNE was performed with the scikit-learn Python library (perplexity, 30; 250 iterations). 3, An auxiliary classifier generative adversarial network (AC-GAN) is trained to generate images conditional on a particular identity representation by using the trained network R with frozen weights as an auxiliary classifier. 4, Downstream tasks demonstrated in this work are database image retrieval from a query image through nearest neighbors in the embedding space, longitudinal abnormality prediction in a patient via distance from an anchor image known to be free of abnormality, and visual explanation of identifying features used by the model via synthetic image interpolations along the derived principal component vectors of the embedding space. PCA = principal component analysis.

To visually interpret the main features used by the identity prediction model, we first ran a principal component analysis (PCA) of the identity embeddings of our full training set, thus identifying the most identity-informative vectors in the learned representation. Seed images were then generated with the GAN from randomly selected identity representations with random noise inputs w, and alternative versions were generated by adding each of the top principal component (PC) vectors to, and subtracting it from, the original identity representation while keeping w constant; hence, the pose was held constant while the synthetic patient was visualized with the features associated with each PC exaggerated independently. For each of the top eight PCs, three radiologists (two senior radiologists with > 20 years of clinical experience [C.E.H., V.G.] and one radiologist in training [C.H., academic track]) were separately presented with 16 synthetic image interpolations and were asked to report any commonalities observed in the changes in each image set and to what physical attributes or anatomy, if any, these changes corresponded.
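The PCA and interpolation steps can be sketched as follows: the PCs come from the singular value decomposition of the mean-centered embedding matrix, and each interpolation moves a seed identity r along one PC in both directions. Scaling each step by the standard deviation along that component, and the particular scale values, are assumptions for illustration; the call to the generator is omitted.

```python
import numpy as np


def identity_pca(embeddings):
    """PCA of identity embeddings via SVD of the mean-centered data matrix.

    Returns (mean, components, explained_variance_ratio, component_std);
    rows of `components` are unit-length PC directions, largest variance first.
    """
    X = np.asarray(embeddings, dtype=float)
    mean = X.mean(axis=0)
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    var = s ** 2 / (len(X) - 1)  # variance of the data along each PC
    return mean, vt, var / var.sum(), np.sqrt(var)


def pc_interpolations(r, components, component_std, k, scales=(-3, -1.5, 0, 1.5, 3)):
    """Identity vectors r + s * std_k * pc_k, moving along PC k in both directions.

    Each row would be passed to a (hypothetical) generator G(w, r') with the same
    noise w, so that only identity-related features change between images.
    """
    r = np.asarray(r, dtype=float)
    step = component_std[k] * components[k]
    return np.stack([r + s * step for s in scales])
```

Holding w fixed while varying only r mirrors the design described above, in which pose is kept constant and one identity direction is exaggerated at a time.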

Abnormality Prediction Model

To evaluate longitudinal change in identity representation as an abnormality predictor, we trained a normative model using only training set images with no pathologic radiologic findings (263 497 images from 94 000 patients), operating at 256 × 256 resolution with a 32-dimensional fully connected head. The internal validation set and all test sets (still comprising both normal and abnormal images) were filtered to patients whose first image was normal, with subsequent images being either normal or abnormal. The Euclidean distance in the identity representation space between the first (normal) patient image and each subsequent image in the series was then used as the predictive parameter for normal or abnormal classification of that subsequent image. On the validation set, we also fit logistic regression models to predict abnormality directly from either the 1536-dimensional CNN features (the Patient Contrastive Learning of Representations approach) or the 32-dimensional identity representation of the image being classified, along with two-factor models combining each single-image prediction with the longitudinal distance measure. The performance of each of these models was assessed on the internal and external test sets.
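A sketch of the longitudinal predictor, assuming each patient series is supplied as time-ordered embeddings with binary abnormality labels (0 = normal, 1 = abnormal): the score for each follow-up image is its Euclidean distance from the first (normal) image, and AUC is computed in its Mann-Whitney form as the probability that a randomly chosen abnormal image scores higher than a randomly chosen normal one. The data layout and function names are hypothetical.

```python
import numpy as np


def longitudinal_scores(series):
    """Distance of each follow-up image from the patient's first (normal) image.

    series: iterable of (embeddings [T, D] in time order, labels [T]) per patient.
    Series whose first image is abnormal are skipped, mirroring the filtering step.
    """
    scores, labels = [], []
    for emb, lab in series:
        if lab[0] != 0:
            continue
        emb = np.asarray(emb, dtype=float)
        scores.extend(np.linalg.norm(emb[1:] - emb[0], axis=1))
        labels.extend(lab[1:])
    return np.array(scores), np.array(labels)


def roc_auc(scores, labels):
    """AUC as P(score of a random abnormal image > score of a random normal image); ties count 1/2."""
    s = np.asarray(scores, dtype=float)
    y = np.asarray(labels).astype(bool)
    pos, neg = s[y], s[~y]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))
```

The two-factor models would add the single-image logistic regression prediction as a second column alongside this distance before fitting a further logistic regression; that step is not shown.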

We compared these reidentification-based approaches with two alternative models using the same CNN backbone and training data. First, a fully supervised classifier was trained to predict normal or abnormal status from a single image using the abnormality labels for the training data. Second, a fully supervised normative age regression model was trained to predict patient age in years from a single image; the absolute age prediction error for an image was then evaluated as an alternative single-factor predictor of abnormality. The alternative models were evaluated using the same filtered test sets used for longitudinal abnormality prediction for fair comparison.

Statistical Analysis

Our performance metrics for patient database image retrieval were Precision at 1 (the proportion of query images for which the nearest neighbor embedding in the database represents another image of the same patient) and mean R-Precision (for each query image, the proportion of its K nearest neighbors that share the same patient identity, where K is the number of images of that patient in the query database), the latter accounting for the varying number of images for each patient in the test sets. For each retrieval test, we took five randomly selected sets of 1000 query images from the relevant test set and reported the mean and SD of the relevant performance metrics. For binary patient confirmation, we reported standard binary classification measures (sensitivity, specificity, F1 score) to assess the logistic regression model trained on the validation set identity embeddings, using a fixed probability decision boundary of 0.50. A patient confirmation set was constructed from each test set by randomly sampling an image of each patient, together with a second image of the same patient and an image of a different patient; this produced equal numbers of same-identity and different-identity image pairs for each patient in the test set. For abnormality prediction, we reported area under the receiver operating characteristic curve (AUC) values for the specified model for prediction of normal or abnormal status. CIs for AUC measures were generated by bootstrapping the relevant test set over 10 000 repetitions. Where statistical comparisons are made in the text (eg, Precision at 1 at different model dimensionality), we tested for statistical significance of the difference in means with two-sided paired t tests, reporting P values for a null hypothesis of no difference in means. All performance measures are reported for the internal and external test sets. We used a 5% significance threshold for tests unless otherwise specified in the specific result. Statistical measures were calculated with the scikit-learn library, version 1.0.2, in Python, version 3.7.15 (Python Software Foundation).
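Precision at 1, R-Precision, and the bootstrap CIs can be computed as in the following NumPy sketch. It assumes brute-force nearest-neighbor search (which would need chunking for very large databases), that each query image has been excluded from the database it is searched against, and a percentile bootstrap; none of these details is specified in the text, and the function names are illustrative.

```python
import numpy as np


def retrieval_metrics(query_emb, query_ids, db_emb, db_ids):
    """Precision at 1 and mean R-Precision for nearest-neighbor retrieval.

    Assumes each query image has already been removed from the database.
    """
    q = np.asarray(query_emb, dtype=float)
    db = np.asarray(db_emb, dtype=float)
    qid, dbid = np.asarray(query_ids), np.asarray(db_ids)
    # Squared Euclidean distances give the same neighbor ordering as distances.
    sq_dist = np.sum(q ** 2, axis=1)[:, None] - 2.0 * q @ db.T + np.sum(db ** 2, axis=1)[None, :]
    order = np.argsort(sq_dist, axis=1, kind="stable")  # nearest neighbors first

    p_at_1 = np.mean(dbid[order[:, 0]] == qid)
    r_prec = []
    for i in range(len(q)):
        k = int(np.sum(dbid == qid[i]))  # images of this patient in the database
        if k > 0:
            r_prec.append(np.mean(dbid[order[i, :k]] == qid[i]))
    return float(p_at_1), float(np.mean(r_prec))


def bootstrap_ci(scores, labels, metric_fn, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for metric_fn(scores, labels), resampling test items."""
    rng = np.random.default_rng(seed)
    s, y = np.asarray(scores), np.asarray(labels)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, len(s), len(s))
        if y[idx].min() == y[idx].max():
            continue  # a single-class resample has no defined AUC
        stats.append(metric_fn(s[idx], y[idx]))
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)
```

For abnormality prediction, `metric_fn` would be an AUC function over the scores and labels of the filtered longitudinal test set.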

Results

Dataset Characteristics

Summary characteristics of the internal data and external test sets are shown in Figure 3, with distributions of patient age, images per patient, and time elapsed between the first and last scan for each of the datasets. We noted the variation between datasets in mean patient age (internal, 63.8 years; MIMIC-CXR, 63.7 years; ChestX-ray14, 48.3 years; CheXpert, 61.1 years), mean scans per patient (internal, 4.22; MIMIC-CXR, 6.19; ChestX-ray14, 7.13; CheXpert, 4.98), and mean period covered by scans (internal, 2.44 years; MIMIC-CXR, 1.13 years; ChestX-ray14, 1.80 years; CheXpert, 0.97 years). Additional demographic information for the datasets is presented in Appendix S2.

Figure 3:

Graphs of summary statistics of the datasets used after removal of nonfrontal images and patients with only single images. Left: Patient distribution by sex. Middle: Number of scans per patient (y-axis log scale). Right: Distribution of time separations between first and last images per patient (y-axis log scale).

Patient Reidentification in Large Datasets

Table 1 presents a sensitivity analysis of model database retrieval performance versus (a) dimensionality of the representation at a fixed image resolution of 256 × 256 and (b) resolution of the training data at a fixed representation dimensionality of 128. Increasing representation dimensionality significantly improved performance up to 16–32 dimensions (P < .001), with no significant improvement thereafter; peak mean Precision at 1 was 0.970 ± 0.010, with an R-Precision of 0.904 ± 0.009, at 128 dimensions. Increasing image resolution steadily improved mean performance, reaching a Precision at 1 of 0.981 ± 0.007 and an R-Precision of 0.947 ± 0.010 at 512 × 512; the improvement in R-Precision between 299 × 299 and 512 × 512 was significant (P = .009), whereas the improvement in Precision at 1 was not (P = .11).

Table 1:

Image Retrieval Performance Metrics Versus Dimensionality of the Embedding Space at Fixed 256 × 256 Image Resolution and Model Resolution with Fixed Representation Dimensionality of 128

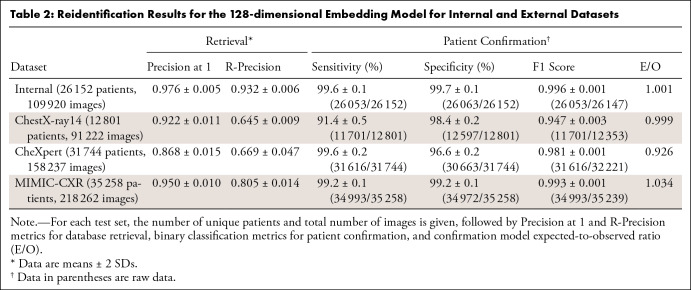

Table 2 presents patient reidentification results for all test sets for the 128-dimensional model operating at 299 × 299 resolution. For our internal test set of 26 152 patients, we achieved a Precision at 1 of 0.976 ± 0.005 and an R-Precision of 0.932 ± 0.006, indicating strong clustering performance. Without model fine-tuning, Precision at 1 on the external test datasets was 0.950 ± 0.010 for MIMIC-CXR, 0.922 ± 0.011 for ChestX-ray14, and 0.868 ± 0.015 for CheXpert. Visual examples of successful image retrievals and false-positive matches are shown in Appendix S3; some of the false-positive matches are attributable to poorly aligned or cropped images.

Table 2:

Reidentification Results for the 128-dimensional Embedding Model for Internal and External Datasets

Because a larger number of candidate patients makes identifying the correct patient from a query more challenging, we produced results for subsets of increasing patient count, up to the maximum available for each dataset, to provide a fairer comparison. Figure 4 shows mean Precision at 1 values for each dataset for patient counts from 1000 up to the maximum available in each case. As expected, performance diminished with increasing database size, but the internal dataset and MIMIC-CXR gave stronger results than ChestX-ray14 and CheXpert. We also compared our model performance against the performance figures quoted in Packhäuser et al (13), using the ChestX-ray14 test set provided in their associated GitHub repository, comprising 24 223 images from 1424 patients; we achieved a mean Precision at 1 of 0.982 at 299 × 299 resolution versus their reported values of 0.976 at 224 × 224 resolution and 0.995 at 512 × 512 resolution.

Figure 4:

Graph of Precision at 1 versus number of patients in test set based on random samples of patients of increasing number from each of the internal and external datasets. Performance on the test sets from Packhäuser et al (13) is marked against the performance figures quoted in that work. Chest14 = ChestX-ray14.

Results for binary patient confirmation for the image pairs generated from each test dataset are shown in Table 2, using a fixed probability decision threshold of 0.50. The F1 score for the internal dataset was 0.996 ± 0.001, with MIMIC-CXR slightly weaker at 0.993 ± 0.001, followed by CheXpert at 0.981 ± 0.001 and ChestX-ray14 at 0.947 ± 0.003.

Identity Representation Decomposition and Visualization

We trained our implementation of StyleGAN2 to generate synthetic images at 256 × 256 resolution conditional on points sampled from our pretrained 16-dimensional identity representation. The final model achieved a Fréchet inception distance score of 3.92 (lower scores indicate closer agreement between the feature distributions of generated and real images), indicating a high level of generated image realism. Example synthetic images are shown in Appendix S4. Figure 5 shows an example of a single synthetic base image with interpolations along each of the top eight PC vectors, along with consensus observations of the main anatomic changes and corresponding high-level semantic features by the three reviewing radiologists based on the full set of 16 interpolations (see Figs S6–S13). We found that the reidentification model relied on intuitive, human-meaningful features. For example, the first PC was confidently associated with patient sex, with increased breast tissue and a narrower rib cage consistently seen. Other features appeared to correspond to patient age (PC 2); rib angulation (PC 3); body mass index (PC 4); and patient build, thoracic volume, and change in habitus (PCs 5–8). Appendix S5 provides additional evidence for this correspondence, with histograms of the MIMIC-CXR representations projected onto each PC, stratified by the available patient information (age, sex, abnormality presence, and ethnicity), that match the visual observations of the radiologists; we also demonstrate that, as would be expected, these attributes can be predicted from the learned feature representation by logistic regression with high performance (patient age > 66 years: AUC, 0.92 ± 0.00; sex: AUC, 0.97 ± 0.00; ethnicity [White vs racial minority groups]: AUC, 0.88 ± 0.00).

Figure 5:

Chest radiographs of identity-relevant features via generative adversarial network interpolations for the first eight principal components (PCs) of the training data identity embeddings. Each row is labeled with the PC number and explained variance ratio in the validation set. In each interpolation, the central image is a shared synthetic randomly generated image; the left and right columns show images generated from the same input perturbed along the named PC vector in opposite directions to exaggerate and minimize the features associated with that PC. Radiologists’ consensus comments and provisional semantic identification based on the commonalities observed in the full sets of 16 interpolations for each component (shown in full in the supplemental material) are shown alongside each component.

Abnormality Prediction in Longitudinal Image Series

Table 3 shows abnormality prediction performance for the longitudinal distance measure, along with the effect of combining this longitudinal measure with direct logistic regression prediction using either the 32-dimensional identity representation head or the 1536-dimensional CNN features. Because anteroposterior versus posteroanterior patient positioning is correlated with image abnormality, we performed separate analyses on each dataset for all image series, posteroanterior-only series, and anteroposterior-only series to control for the model responding to a change in patient view rather than to abnormalities directly. Table 4 shows comparison results from a fully supervised classifier and indirect prediction from an age prediction error model. We observed a positive predictive effect for longitudinal identity change in all cases, and combining the distance-based and feature-based predictions into a two-factor model improved mean AUC in most cases. The combined model performed best on MIMIC-CXR with the 1536-dimensional features, with an AUC of 0.83 ± 0.00 versus the 0.85 ± 0.01 achieved by the fully supervised classifier.

Table 3:

Abnormality Prediction in Longitudinal Image Sets Where the First Chronologic Image Is Labeled Normal and the Status of Subsequent Patient Images Is Classified

Table 4:

Alternative Abnormality Prediction Model Performance

Age prediction error was a consistently weaker abnormality predictor than longitudinal identity change, with a peak AUC of 0.58 ± 0.01 on MIMIC-CXR versus 0.73 ± 0.01 for identity change. Figure 6 shows receiver operating characteristic curves for abnormality prediction stratified by the number of abnormal findings recorded in the image. In all cases, we observed progressively higher AUC with increasing abnormality count.

Figure 6:

Graphs of receiver operating characteristic curves for abnormal versus normal image detection stratified by number of findings in the abnormal image, comparing identity representation drift against age prediction error as markers. For each test set, results are shown for all data, posteroanterior (PA) radiographs only, and anteroposterior (AP) radiographs only to account for correlation in patient view versus abnormality status. The area under the receiver operating characteristic curve (AUC) increases with abnormality count consistently for both markers, but identity representation change outperforms age prediction error in all cases. Chest14 = ChestX-ray14, MIMIC = MIMIC-CXR.

Discussion

In this study, we aimed to introduce and evaluate an interpretable patient reidentification model for chest radiographs, explain the features used by the model, and investigate its use in longitudinal abnormality prediction. As assessed on image retrieval in a test database of 26 152 patients, our internal model achieved a Precision at 1 of 0.976 ± 0.005 and an R-Precision of 0.932 ± 0.006. The model generalized without fine-tuning to external public datasets, with mean Precision at 1 of 0.950 ± 0.010 for MIMIC-CXR, 0.922 ± 0.011 for ChestX-ray14, and 0.868 ± 0.015 for CheXpert. This variation may result from the differing clinical settings of the data sources (eg, MIMIC-CXR is an emergency medicine setting) or from image preprocessing: ChestX-ray14 and CheXpert are supplied not as DICOM files but as PNG and JPG files, respectively, produced by unknown preprocessing pipelines. Our work confirms the high patient reidentification performance achievable for chest radiographs reported by Packhäuser et al (13), achieving similar performance levels at 256 × 256 resolution, even though their model was trained specifically on the ChestX-ray14 dataset and had a higher parameter count (ResNet-50 [23 million parameters] versus EfficientNet-B3 [12 million parameters]). We showed that most identity-discriminative features are captured at relatively coarse levels of detail, with little performance improvement at image resolutions above 256 × 256, and that a compact representation of 32 dimensions is sufficient to capture the discriminative feature set.

We also demonstrated that GAN-based synthetic image generation conditional on the learned identity representation allows intuitive exploration of the features used by the identification model and a different level of semantic insight as compared with heat map methods such as GradCAM. The main factors of variation in the representation are shown to correspond to logical demographic and anatomic features familiar to a clinician (eg, patient sex, age, and body mass index and habitus), rather than involving superhuman levels of image detail. A similar approach to feature visualization could offer benefits in improving clinical acceptance of artificial intelligence tools, for which concerns over the black-box decision-making process have held back adoption. Investigating feature decomposition in other classification and diagnostic tasks is a promising area for further work.

In addition to demonstrating patient confirmation and image retrieval, we investigated whether longitudinal changes in patient identity representation, within a normative model framework given an initial normal reference image, could act as a marker for the emergence of radiologic abnormalities. We found that the single-parameter Euclidean distance predicted abnormality with an AUC of up to 0.73 ± 0.01 on MIMIC-CXR, significantly outperforming an alternative indirect single-parameter method, normative age prediction model error, which achieved an AUC of 0.58 ± 0.01 (P < .001). As expected, this simple distance measure was weaker than a model trained to predict abnormality directly from lower-level features; however, including it as an additional model parameter alongside the full single-image identity representation improved classification performance, with a peak AUC of 0.83 ± 0.00 (MIMIC-CXR posteroanterior images) versus the 0.85 ± 0.01 achieved by a fully supervised custom-trained abnormality classifier. This performance is remarkably close, given that the identity model was trained with no exposure to abnormal images. The proposed reidentification model may also have forensic applications in confirming unknown patient identity, as well as implications for patient confidentiality in notionally de-identified public datasets, both of which warrant further investigation.

Our study had several limitations. We lacked widely time-separated images because images were gathered over limited periods for each dataset. Such images would allow exploration of reidentification performance over a larger portion of the life span. We also considered only frontal chest radiographs because lateral images were lacking in the available training data; incorporating lateral images would likely improve overall reidentification results. Although we subjectively identified the learned identity features through expert visual analysis and available metadata labels, a study with more comprehensive patient metadata (eg, patient heights and weights) would allow quantitative confirmation of our findings. Confirming clinical use of this patient reidentification model as a longitudinal patient health biomarker would require a prospective randomized trial. Such a trial could also address potential sources of bias arising from the retrospective design (eg, ascertainment bias toward patients presenting at the hospitals considered rather than the underlying population). We were also unable to investigate reidentification as a mortality predictor because clinical outcomes and other patient risk factors were unavailable for our data. Although our results indicate potential for a forensic patient identification role, demonstrating a practical use case would require a follow-up study examining appropriate thresholds for false-positive matches and true-negative findings as a function of database size.
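To illustrate why a forensic matching threshold must be tuned against database size, the sketch below accepts a match only when the nearest gallery embedding lies within a fixed distance. It then estimates how often queries from patients who are *not* in the gallery are wrongly matched. Random Gaussian vectors stand in for identity embeddings, an assumption made purely for illustration, since real embeddings are not isotropic Gaussians. The dimension, threshold, and function names are likewise hypothetical. With a fixed threshold, the false-match rate rises as the gallery grows, which is the trade-off such a follow-up study would need to quantify.

```python
import math
import random


def nearest(query, gallery):
    """Index and distance of the gallery embedding closest to the query."""
    best_i, best_d = -1, math.inf
    for i, g in enumerate(gallery):
        d = math.dist(query, g)  # Euclidean distance (Python 3.8+)
        if d < best_d:
            best_i, best_d = i, d
    return best_i, best_d


def match_or_reject(query, gallery, threshold):
    """Report the nearest gallery index only if it lies within `threshold`; otherwise None."""
    i, d = nearest(query, gallery)
    return i if d <= threshold else None


def false_match_rate(n_gallery, n_queries, threshold, dim=8, seed=0):
    """Fraction of out-of-gallery queries wrongly matched (toy Gaussian embeddings)."""
    rng = random.Random(seed)
    gallery = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_gallery)]
    false = 0
    for _ in range(n_queries):
        q = [rng.gauss(0, 1) for _ in range(dim)]  # query from a patient absent from the gallery
        if match_or_reject(q, gallery, threshold) is not None:
            false += 1
    return false / n_queries
```

For example, comparing `false_match_rate(10, 200, 2.0)` with `false_match_rate(2000, 200, 2.0)` shows the false-match rate increasing with gallery size at an unchanged threshold.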

In conclusion, our study demonstrated that patient reidentification using frontal chest radiographs is possible with high performance, even in large datasets, and that the deep learning model relies on intuitive macroscopic features such as patient sex, age, and body habitus. We also showed that changes in identity representation from a healthy baseline are associated with the emergence of abnormalities. This offers a promising approach to longitudinal health evaluation that warrants further exploration. Finally, we advocate further integration of generative models into medical imaging algorithms as a powerful tool for model explainability and for fostering greater acceptance among key stakeholders, such as clinicians, patients, and regulatory authorities.

Supported by the Wellcome Trust (research grant) and by the Engineering and Physical Sciences Research Council (student funding).

Disclosures of conflicts of interest: M.S.M. Wellcome Trust funding was paid to the institution under a research grant to the PI (Giovanni Montana); the trust and its employees had no active role in the study. EPSRC student funding was received by the author as a monthly PhD researcher stipend via the MathSys center for doctoral training at Warwick University. EPSRC had no direct role in the study. C.E.H. No relevant relationships. C.H. National Institute for Health and Care Research (NIHR) Academic Clinical Fellow (ACF). V.G. Grant/contract from Siemens Healthineers to institution; book royalties from Oxford University Press, Imaging for Radiotherapy; support for travel to WHO IARC meeting, Lyon, France, May 2023, as speaker; Chair, Academic Committee, Royal College of Radiologists, UK; senior deputy editor of Radiology. G.M. No relevant relationships.

Abbreviations:

- AUC = area under the receiver operating characteristic curve
- CNN = convolutional neural network
- DICOM = Digital Imaging and Communications in Medicine
- GAN = generative adversarial network
- PC = principal component
- PCA = PC analysis

References

- 1. Annarumma M, Withey SJ, Bakewell RJ, Pesce E, Goh V, Montana G. Automated Triaging of Adult Chest Radiographs with Deep Artificial Neural Networks. Radiology 2019;291(1):272.

- 2. Kolossváry M, Raghu VK, Nagurney JT, Hoffmann U, Lu MT. Deep Learning Analysis of Chest Radiographs to Triage Patients with Acute Chest Pain Syndrome. Radiology 2023;306(2):e221926.

- 3. Majkowska A, Mittal S, Steiner DF, et al. Chest Radiograph Interpretation with Deep Learning Models: Assessment with Radiologist-adjudicated Reference Standards and Population-adjusted Evaluation. Radiology 2020;294(2):421–431.

- 4. Seah JCY, Tang CHM, Buchlak QD, et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: a retrospective, multireader multicase study. Lancet Digit Health 2021;3(8):e496–e506.

- 5. Noumeir R, Lemay A, Lina JM. Pseudonymization of radiology data for research purposes. J Digit Imaging 2007;20(3):284–295.

- 6. Aryanto KYE, Oudkerk M, van Ooijen PM. Free DICOM de-identification tools in clinical research: functioning and safety of patient privacy. Eur Radiol 2015;25(12):3685–3695.

- 7. Willemink MJ, Koszek WA, Hardell C, et al. Preparing medical imaging data for machine learning. Radiology 2020;295(1):4–15.

- 8. Yang CY, Pan YJ, Chou Y, et al. Using deep neural networks for predicting age and sex in healthy adult chest radiographs. J Clin Med 2021;10(19):10.

- 9. Karargyris A, Kashyap S, Wu JT, Sharma A, Moradi M, Syeda-Mahmood T. Age prediction using a large chest x-ray dataset. In: Mori K, Hahn HK, eds. Proceedings Volume 10950: Medical Imaging 2019: Computer-Aided Diagnosis. SPIE Medical Imaging; 2019.

- 10. Sabottke CF, Breaux MA, Spieler BM. Estimation of age in unidentified patients via chest radiography using convolutional neural network regression. Emerg Radiol 2020;27(5):463–468.

- 11. MacPherson M, Muthuswamy K, Amlani A, Hutchinson C, Goh V, Montana G. Assessing the performance of automated prediction and ranking of patient age from chest x-rays against clinicians. In: Wang L, Dou Q, Fletcher PT, Speidel S, Li S, eds. Medical Image Computing and Computer Assisted Intervention (MICCAI 2022). Springer; 2022:255–265.

- 12. Gichoya JW, Banerjee I, Bhimireddy AR, et al. AI recognition of patient race in medical imaging: a modelling study. Lancet Digit Health 2022;4(6):e406–e414.

- 13. Packhäuser K, Gündel S, Münster N, Syben C, Christlein V, Maier A. Deep learning-based patient re-identification is able to exploit the biometric nature of medical chest X-ray data. Sci Rep 2022;12(1):14851.

- 14. Ieki H, Ito K, Saji M, et al. Deep learning-based age estimation from chest X-rays indicates cardiovascular prognosis. Commun Med (Lond) 2022;2(1):159.

- 15. Raghu VK, Weiss J, Hoffmann U, Aerts HJWL, Lu MT. Deep Learning to Estimate Biological Age From Chest Radiographs. JACC Cardiovasc Imaging 2021;14(11):2226–2236.

- 16. Seastedt KP, Schwab P, O’Brien Z, et al. Global healthcare fairness: We should be sharing more, not less, data. PLOS Digit Health 2022;1(10):e0000102.

- 17. Wang X, Peng Y, Lu L, Lu Z, Bagheri M, Summers RM. ChestX-Ray8: hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Institute of Electrical and Electronics Engineers; 2017:3462–3471.

- 18. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV). Institute of Electrical and Electronics Engineers; 2017:618–626.

- 19. Lang O, Gandelsman Y, Yarom M, et al. Explaining in style: training a GAN to explain a classifier in StyleSpace. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). Institute of Electrical and Electronics Engineers; 2021:693–702.

- 20. Singla S, Pollack B, Chen J, Batmanghelich K. Explanation by progressive exaggeration. In: International Conference on Learning Representations; 2020. https://openreview.net/pdf?id=H1xFWgrFPS.

- 21. Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. In: Ghahramani Z, Welling M, Cortes C, Lawrence N, Weinberger KQ, eds. Advances in Neural Information Processing Systems 27 (NIPS 2014). 2014:2672–2680. https://papers.nips.cc/paper_files/paper/2014/hash/5ca3e9b122f61f8f06494c97b1afccf3-Abstract.html.

- 22. Diamant N, Reinertsen E, Song S, Aguirre AD, Stultz CM, Batra P. Patient contrastive learning: A performant, expressive, and practical approach to electrocardiogram modeling. PLOS Comput Biol 2022;18(2):e1009862.

- 23. Irvin J, Rajpurkar P, Ko M, et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In: AAAI-19/IAAI-19/EAAI-19 Proceedings. AAAI Press; 2019:590–597.

- 24. Goldberger AL, Amaral LAN, Glass L, et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 2000;101(23):E215–E220.

- 25. Johnson AEW, Pollard TJ, Berkowitz SJ, et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci Data 2019;6(1):317.

- 26. Tan M, Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. In: Chaudhuri K, Salakhutdinov R, eds. Proceedings of the 36th International Conference on Machine Learning. 2019;97:6105–6114. https://proceedings.mlr.press/v97/tan19a.html.

- 27. Hoffer E, Ailon N. Deep metric learning using triplet network. In: Feragen A, Pelillo M, Loog M, eds. International Workshop on Similarity-Based Pattern Recognition. Springer; 2015:84–92.

- 28. Karras T, Laine S, Aittala M, Hellsten J, Lehtinen J, Aila T. Analyzing and improving the image quality of StyleGAN. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Institute of Electrical and Electronics Engineers; 2020:8107–8116.