Abstract

The effectiveness of an intervention depends on the degree to which a program is implemented as described and on the behavior analyst’s ability to individualize the program based on client-specific factors. LeBlanc et al. (2020) found that training clinicians to use enhanced data sheets, which represent both the antecedent arrangement and the response, resulted in greater procedural integrity than standard data sheets. Enhanced data collection systems may also represent potential error patterns, which may be used to modify the intervention program. The current study compared naïve participants’ accuracy in predicting a client’s performance when that performance was represented on standard and enhanced data sheets. Participants consistently identified position and stimulus biases on enhanced data sheets; however, performance did not differ across data collection methods when accurate responding or unsystematic responding was shown. These findings suggest that enhanced measurement may help behavior analysts identify error patterns during instruction.

Key words: Data sheet, error analysis, errors, measurement, position bias, stimulus bias

The demand for behavior analysts continues to increase across the United States (Behavior Analyst Certification Board [BACB], 2022a), as does the number of behavior analysts certified each year (BACB, 2022b). Of these certificants, over 70% work with individuals with autism spectrum disorder (ASD; BACB, 2022b). Although the role of behavior analysts may differ based on the setting, domain of practice, or specialty area, a recent survey by Hajiaghamohseni et al. (2021) found that nearly half (i.e., 47.9%) of Board Certified Behavior Analyst (BCBA) respondents reported having a caseload of 12 or more consumers/clients. In addition, 59.3% reported serving as a direct supervisor of at least one registered behavior technician (RBT). These findings suggest that large caseloads and RBT supervision requirements may result in behavior analysts being present for only a minority of a client’s direct intervention.

When behavior analysts are unable to attend a client’s sessions physically or remotely, they may be required to make treatment decisions based solely on data collected by the RBT. In behavior analytic practice, a data collection system must allow for the recording of the relevant dimension of the dependent variable, such as the rate, latency, or magnitude of the response (Ledford & Gast, 2018). In skill acquisition programs, an RBT might instead record trial-based data, which typically indicate whether a target response occurred under some prearranged antecedent condition. The target response is typically defined based on this antecedent-response relation and summarized with a derivative measure such as the percentage of correct responses.

Behavior analysts have long recognized the potential limitations of derivative measures such as percent correct responding in representing a client’s discriminated performance (Iversen, 2021; Johnson & Sidman, 1993; McIlvane et al., 2002; Sidman, 1980, 1987). In particular, the learner may achieve performances at some criterion; however, the controlling variables may remain undefined (Mackay, 1991; McIlvane, 2013; Sidman, 1980, 1987). This point cannot be emphasized enough: behavior analysts, given their focus on contingencies, are uniquely poised to identify and remediate instances in which the conditions that control a client’s response do not align with those intended by the behavior analyst (see stimulus control topography coherence; McIlvane & Dube, 2003; Ray, 1969). To determine the variables controlling some response, behavior analysts may arrange conditions that allow for the measurement of restricted or faulty sources of stimulus control (Dickson et al., 2006; Dube & McIlvane, 1999; McIlvane et al., 2000). As an alternative, data collection systems may include the measurement of errors and subsequent error analyses (e.g., Grow et al., 2011; Hannula et al., 2020; Schneider et al., 2018), which may also be more feasibly incorporated into behavior analytic practice.

Data collection systems may allow for the recording of the specific response topography emitted and subsequent analysis of the controlling relations (e.g., Grow et al., 2011; Schneider et al., 2018). Depending on the type and pervasiveness of the error pattern, this information may be invaluable. Consider, for example, a client who touches a picture of a cat when presented with the auditory stimulus “cat,” but selects the same picture when “dog” is presented. Although the first instance may suggest control by the relevant stimulus, the second would diminish our confidence in that controlling relation. Measurement systems that represent the second performance only as an error may not only hinder the behavior analyst’s ability to remediate the error but also limit their ability to identify the tenuous form of stimulus control exerted by the auditory stimulus “cat” (see additional examples by Ray & Sidman, 1970). Finally, because this example involves an auditory–visual conditional discrimination, additional information regarding the client’s performance is needed to determine the controlling variable(s). For example, the described performance may represent a stimulus bias (e.g., only selecting the cat); however, it is also possible that the client’s responding is controlled by position in the comparison array (e.g., if the cat happened to appear in the same position on both trials) or by some other variable (e.g., win–stay response patterns; Grow et al., 2011). Quickly identifying the restricted or faulty source of stimulus control may be critical to remediating the error.

Simultaneous matching-to-sample (MTS) arrangements, commonly used in auditory–visual and visual–visual conditional discrimination training procedures, have received significant attention because such arrangements are susceptible to producing responding under faulty stimulus control (Grow & LeBlanc, 2013). Indeed, two articles have provided explicit guidelines for implementing instruction using MTS arrangements because of these concerns (Green, 2001; Grow & LeBlanc, 2013). Green (2001) provided nine guidelines, including (1) presenting a unique sample but identical comparisons across trials; (2) arranging an array of at least three comparison stimuli; (3) presenting each sample the same number of times within a session; and (4) varying the position of the correct comparison across trials. Grow and LeBlanc (2013) expanded upon the recommendations of Green (2001) and included an example data collection system that incorporated each of the four guidelines outlined above. This data collection system was subsequently evaluated in a training comparison by LeBlanc et al. (2020).

LeBlanc et al. (2020) trained 40 interventionists providing behavior analytic services to children with ASD to conduct an auditory–visual conditional discrimination training program using an MTS arrangement. All participants were exposed to video instruction and either an enhanced or a traditional/standard data sheet. The enhanced data sheet was similar to the data sheet provided by Grow and LeBlanc (2013) in that the sample and comparison array were shown for each trial of the session (see example in Figure 1). In contrast, the standard data sheet listed each target stimulus with space for the interventionist to record whether the response was correct or incorrect and the type of prompt presented across trials. The standard data sheet neither represented nor allowed the interventionist to record data on the comparison array or the order in which the sample stimuli were presented (see example in Figure 1). The authors found that, compared to interventionists exposed to the traditional data sheet, those exposed to the enhanced data sheet were more likely to (1) rotate the target comparison stimulus across trials; (2) present each sample stimulus an equal number of times; and (3) rotate the comparison array and the position of the correct comparison across trials. This finding suggests that the type of data collection system may not only determine what interventionists can record about the dependent variable but also promote greater procedural integrity.

Fig. 1.

Standard and Enhanced Data Sheet Examples for the Perfect Discrimination Condition

The findings of LeBlanc et al. (2020) support the superiority of enhanced data sheets, and enhanced data collection systems may offer several other benefits. In particular, the conspicuous representation of several sources of stimulus control (e.g., stimulus biases, position biases) on enhanced data sheets might allow instructors to identify these sources of control more accurately than they could with the standard data sheet. The current study extended the work of LeBlanc et al. (2020) by evaluating the effects of enhanced and standard data sheets on participants’ identification of three (Exp. I) or five (Exp. II) response patterns, thereby further evaluating the clinical utility of enhanced data collection systems, which may allow for effective modification of interventions when BCBAs cannot attend client appointments. Participants with no prior experience with error analysis were shown completed data sheets and asked to predict the client’s next response for each target stimulus. Experiment I required participants to identify stimulus biases, position biases, and perfect discriminations across both data sheets. Experiment II included two additional conditions: (1) a sample-specific condition, in which the response pattern differed across sample stimuli; and (2) an unsystematic responding condition, which served as a second control condition alongside the perfect discrimination condition.

Experiment I

Method

Participants, Setting, and Materials

Participant demographic information is shown in Table 1. Participants included five undergraduate students between 18 and 25 years old. All participants were enrolled in a special education practicum course as part of an applied behavior analysis and developmental disabilities minor sequence. The study took place during a guest lecture on the topic of error analysis. Eleven students attended the lecture, all of whom completed the task. Before the guest lecture, 5 of the 11 students provided consent for the use of their data, and the experimenters included only these participants’ data in the study.

Table 1.

Participant Demographic Information

| Participant | Age | Major | Minor | Employed as RBT |
|---|---|---|---|---|
| Experiment I | | | | |
| 501 | 18–25 | Elementary education | ABA & DD | No |
| 502 | 18–25 | Psychology | ABA & DD | No |
| 503 | 18–25 | Exercise science | ABA & DD | No |
| 504 | 18–25 | Exercise science | ABA & DD | Yes |
| 505 | 18–25 | Psychology | ABA & DD | No |
| Experiment II | | | | |
| 601 | 18–25 | Environmental science | None | No |
| 602 | 18–25 | Psychology | None | No |
| 603 | 18–25 | Marine biology | None | No |
| 604 | 18–25 | Undecided | None | No |

Note. ABA = applied behavior analysis; DD = developmental disabilities; RBT = registered behavior technician

Each participant used their personal laptop during the study. All tasks were presented using Testable (www.testable.org; Rezlescu et al., 2020), a browser-based platform for hosting psychological and behavioral tasks. Participants also completed a brief demographic survey reporting their student status, age, major, and minor, and whether they were employed as a behavioral line therapist or RBT.

Dependent Variable and Response Measurement

Correct responding was defined as the participant selecting the comparison stimulus consistent with the response pattern depicted on the completed data sheet. Testable automatically recorded each response and produced a comma-separated values (CSV) output file upon completion of the task. Using this output file, the experimenter calculated the percentage of correct responses for each response pattern by dividing the number of correct responses by the total number of trials in that condition (i.e., nine trials) and multiplying by 100. The experimenters did not collect reliability or procedural integrity data because task presentation and data collection were automated in Testable.
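The percent-correct calculation described above can be sketched in Python. This is a minimal illustration, not the authors’ analysis procedure: the field names `pattern`, `data_sheet`, and `correct` are hypothetical stand-ins and are not the column names of Testable’s actual output file, and the example rows are invented input rather than study data. With a three-comparison array, chance-level responding corresponds to about one correct response in every three trials (roughly 33.3%).

```python
import csv
import io
from collections import defaultdict


def percent_correct_by_condition(rows):
    """Return {(pattern, data_sheet): percent correct} from per-trial rows.

    Each row is a dict with hypothetical 'pattern', 'data_sheet', and
    'correct' ("1" or "0") fields; real Testable output uses its own columns.
    """
    tallies = defaultdict(lambda: [0, 0])  # (pattern, sheet) -> [correct, trials]
    for row in rows:
        key = (row["pattern"], row["data_sheet"])
        tallies[key][0] += int(row["correct"])
        tallies[key][1] += 1
    # Percent correct = (correct responses / trials in that condition) x 100
    return {key: 100 * hits / trials for key, (hits, trials) in tallies.items()}


# Hypothetical input (not study data): nine position bias trials per data sheet.
example_csv = (
    "pattern,data_sheet,correct\n"
    + "position_bias,enhanced,1\n" * 9
    + "position_bias,standard,1\n" * 3
    + "position_bias,standard,0\n" * 6
)
scores = percent_correct_by_condition(csv.DictReader(io.StringIO(example_csv)))
```

Here `scores` maps the enhanced sheet to 100.0 and the standard sheet to about 33.3, the chance level for a three-choice array.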

General Procedure

The study began with a general overview of identity matching as a fundamental skill repertoire, MTS procedures, and the standard and enhanced data sheets used by LeBlanc et al. (2020). The experimenter then showed the participants a PowerPoint slideshow representing a nine-trial session of an identity-matching task, with data recorded on both the standard and enhanced data sheets (see Figure 1). The comparison array included the textual stimuli Dog, Cat, and Bird. The data sheets adhered to the guidelines for simultaneous discrimination training procedures described by Green (2001) and Grow and LeBlanc (2013). In particular, (1) each stimulus was presented as a sample three times in a semi-random order; (2) the comparison array changed across trials; (3) the correct comparison appeared in each position on an equal number of trials (i.e., three trials); and (4) each textual stimulus was correct in each position once. Each slide also included a picture showing the sample textual stimulus presented above a horizontal array of three comparison textual stimuli, with the experimenter’s finger pointing to the correct comparison. All trials showed accurate performance consistent with the perfect discrimination condition. The experimenter never mentioned any other potential response patterns (e.g., stimulus or position biases) at any point during the study.
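The four arrangement rules above can be expressed as a small session generator. The sketch below is a hypothetical Python illustration, not a description of how the authors built their sessions: the function name `build_session` is invented, and the rule that consecutive trials never share an identical comparison array is an assumed operationalization of “semi-random” order and of the array changing across trials. It shifts each sample’s correct position on each of its three presentations, fills the other two positions with the remaining stimuli in random order, and reshuffles the trial order until no two consecutive trials present the same array.

```python
import random
from collections import Counter

STIMULI = ("Dog", "Cat", "Bird")  # textual stimuli from the identity-matching task


def build_session(seed=None):
    """Return a hypothetical nine-trial session as (sample, comparison_array) tuples."""
    rng = random.Random(seed)
    trials = []
    for i, sample in enumerate(STIMULI):
        for rep in range(3):
            # Shift the correct position on each presentation so that every
            # stimulus is correct once in each position (left, middle, right).
            correct_pos = (i + rep) % 3
            array = [s for s in STIMULI if s != sample]
            rng.shuffle(array)  # randomize the two distractor positions
            array.insert(correct_pos, sample)
            trials.append((sample, tuple(array)))
    # Assumed semi-random rule: no identical arrays on consecutive trials.
    while True:
        rng.shuffle(trials)
        if all(a[1] != b[1] for a, b in zip(trials, trials[1:])):
            return trials


session = build_session(seed=1)
for sample, array in session:
    print(f"Sample: {sample:<4}  Comparisons: {' | '.join(array)}")
```

Because each sample’s correct position cycles through all three positions, every position is automatically correct on exactly three of the nine trials.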

After presenting this overview, the experimenter sent the Testable link to all participants, who then completed a six-trial pretraining session: three perfect discrimination trials with the enhanced data sheet and three with the standard data sheet. This pretraining was designed to acclimate the participants to the task and allow them to ask questions before beginning the experiment. Participants were required to respond correctly on all pretraining trials before progressing to the experimental task.

For the experimental task, participants were presented with previously completed standard or enhanced data sheets depicting one of three response patterns (i.e., stimulus bias, position bias, or perfect discrimination). Both data sheets resembled those used by LeBlanc et al. (2020), differing only in that spaces for recording probe-trial performance and the type of prompt used were omitted because these components were not germane to the research question. On the standard data sheet, plus and minus signs represented correct and incorrect responses, respectively. On the enhanced data sheet, the correct comparison stimulus appeared in bold font, and the client’s response corresponding to the experimental condition was circled in red on each trial (Figure 1). The participant’s selection did not produce differential consequences; each response simply produced the next trial. After participants completed the task, the experimenter described the purpose of the study, provided additional examples of the response patterns and data sheets, and answered any questions.

Independent Variables

The experimenters arranged three patterns of responding in the standard and enhanced data sheet conditions: (1) position bias, (2) stimulus bias, and (3) perfect discrimination. On each trial, the participant viewed a completed nine-trial session depicted on one of the data sheets, along with the sample (e.g., Bird) and comparison array (i.e., Bird, Cat, and Dog) for the client’s next trial. The sample was one of the three textual stimuli used in the identity-matching task, and the comparison array included all three textual stimuli presented side by side. The experimenters instructed participants to select the comparison stimulus they predicted the client would select given the pattern of responding on the data sheet. Example data sheets for the position and stimulus bias conditions are shown in Fig. 2, and an example for the perfect discrimination condition is shown in Fig. 1. Participants could emit one response on each trial. Three consecutive trials appeared for each response pattern and data sheet combination (e.g., three trials with a stimulus bias toward Dog on the enhanced data sheet) such that the participant was required to respond to each sample stimulus (i.e., Dog, Cat, and Bird). Each participant experienced all response patterns across both standard and enhanced data sheets, completing nine trials for each response pattern and data sheet combination, for a total of 54 trials. Participants were considered to have identified a response pattern if they met the performance criterion of responding correctly on at least 80% of trials in a given condition (i.e., at least eight of nine trials).
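The 80% criterion and the trial totals reported for both experiments can be checked with a few lines of arithmetic. The following is a hypothetical Python sketch; the function names are illustrative only. Integer arithmetic is used so that the comparison at the criterion boundary is not affected by floating-point rounding.

```python
def meets_criterion(correct, total=9, criterion_pct=80):
    """Return True if correct/total is at least criterion_pct percent."""
    return correct * 100 >= criterion_pct * total


def minimum_correct(total=9, criterion_pct=80):
    """Smallest number of correct trials that satisfies the criterion."""
    return (criterion_pct * total + 99) // 100  # integer ceiling division


def total_trials(n_patterns, n_sheets=2, trials_per_combination=9):
    """Trials per participant: response patterns x data sheets x nine trials."""
    return n_patterns * n_sheets * trials_per_combination


print(minimum_correct())  # 8  (80% of 9 trials is 7.2, so 8 correct trials are needed)
print(total_trials(3))    # 54 (Experiment I: 3 patterns x 2 sheets x 9 trials)
print(total_trials(5))    # 90 (Experiment II: 5 patterns x 2 sheets x 9 trials)
```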

Fig. 2.

Examples of Position Bias (Exp. I and II), Stimulus Bias (Exp. I and II), Sample-Specific (Exp. II), and Unsystematic Responding (Exp. II) Conditions. Note. The enhanced (left) and standard (right) data sheets are shown for an example of each response pattern.

Position Bias

In this condition, the client’s performance showed responding exclusively to one position in the array. Testable presented participants with standard and enhanced data sheets representing a left-side, middle, or right-side bias. Correct responding required that the participant select the comparison stimulus in the same position as that shown on the completed data sheet, regardless of the sample stimulus.

Stimulus Bias

In this condition, the client’s performance, depicted on both standard and enhanced data sheets, suggested control exclusively by a particular textual stimulus (e.g., Cat). A correct response required the selection of that textual stimulus (e.g., Cat) on each trial, regardless of the sample stimulus or the position of the biased stimulus in the array.

Perfect Discrimination

The completed standard and enhanced data sheets depicted accurate client performance across all trials. Correct responding required that the participant select the comparison stimulus identical to the sample stimulus. This condition served as a control condition for the task as participants were expected to respond with high accuracy in both the enhanced and standard data sheet conditions.
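The response counted as correct in each of the three conditions can be written as three simple rules. The following is a minimal Python sketch, assuming that a comparison array is an ordered left-to-right tuple of the three textual stimuli and that a position is indexed 0 (left), 1 (middle), or 2 (right); the function names are hypothetical.

```python
from typing import Sequence


def predict_position_bias(array: Sequence[str], biased_position: int) -> str:
    """Position bias: the comparison in the biased position, regardless of sample."""
    return array[biased_position]


def predict_stimulus_bias(array: Sequence[str], biased_stimulus: str) -> str:
    """Stimulus bias: the same textual stimulus, wherever it appears in the array."""
    if biased_stimulus not in array:
        raise ValueError(f"{biased_stimulus!r} is not in the comparison array")
    return biased_stimulus


def predict_perfect_discrimination(sample: str, array: Sequence[str]) -> str:
    """Perfect discrimination: the comparison identical to the sample."""
    if sample not in array:
        raise ValueError(f"{sample!r} is not in the comparison array")
    return sample


# Hypothetical next trial: sample Bird, comparisons Cat | Bird | Dog.
sample, array = "Bird", ("Cat", "Bird", "Dog")
print(predict_position_bias(array, biased_position=2))      # Dog (right-side bias)
print(predict_stimulus_bias(array, biased_stimulus="Cat"))  # Cat
print(predict_perfect_discrimination(sample, array))       # Bird
```

On the same trial, the three patterns can call for three different selections, which is why the participant must discriminate which pattern the completed data sheet depicts.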

Experimental Design

Each participant experienced the experimental conditions in a random order within an alternating treatments design (Barlow & Hayes, 1979). This design was selected because each task presented a unique response pattern on the assigned data sheet, and performance in one condition was not expected to influence performance in any other condition (i.e., carryover effects were not expected).

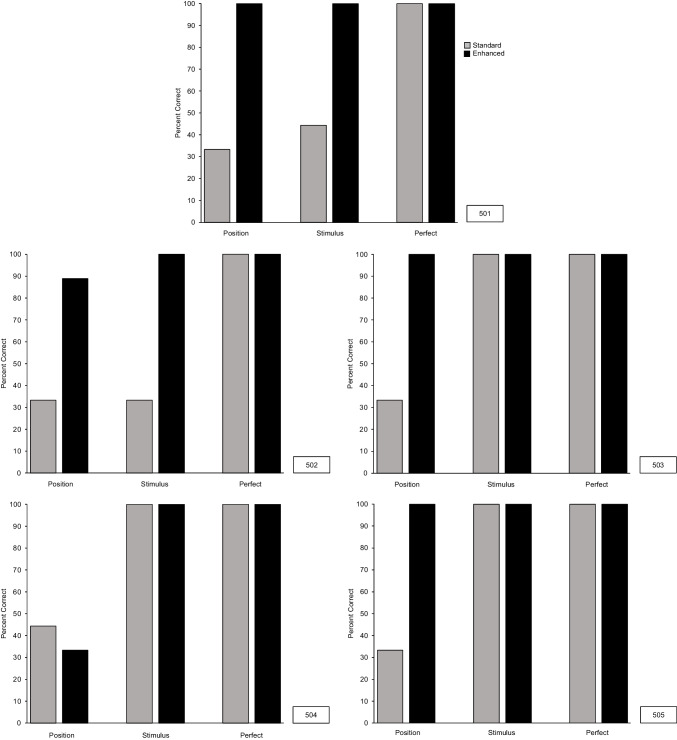

Results

Results from Experiment I are shown in Figure 3. All participants identified the response pattern on every trial in the perfect discrimination condition with both the standard and enhanced data sheets. When presented with any response pattern on the enhanced data sheet, all participants performed above criterion except Participant 504, who responded at chance levels when presented with the position bias. With the standard data sheet, three participants performed above criterion when presented with a stimulus bias; however, all participants responded at chance levels when presented with a position bias. Overall, participants’ performance with the enhanced data sheet exceeded their performance with the standard data sheet for both error patterns.

Fig. 3.

Prediction of Response Patterns across Recording Methods for Experiment I

Experiment II

In this experiment, two additional conditions were included: (1) sample-specific and (2) unsystematic responding. All other experimental procedures were identical to those of Experiment I. Experiment II sought to determine whether enhanced data sheets would facilitate naïve participants’ identification of a more complex (i.e., sample-specific) error pattern.

Method

Participants and Setting

Participants included four undergraduate students between 18 and 25 years old recruited through a university-based psychology research pool. Each received one participation credit toward their psychology course requirements. Participant demographic information is shown in Table 1. None of the participants reported being employed as a behavioral line therapist or RBT. The experimenter conducted all procedures during one-on-one appointments using teleconferencing software (i.e., Zoom). The participant and experimenter kept their video cameras on throughout the study, and the participant shared their screen during the experimental procedures so that the experimenter could confirm that the task ran as intended.

General Procedures

Experiment II included the same procedures and conditions as Experiment I, with the addition of two response patterns. The experimenters arranged the tasks using Testable, which also recorded the participants’ performance. Each of the five response patterns appeared on both enhanced and standard data sheets (nine trials per combination), for a total of 90 trials. To reduce the likelihood of fatigue, the task was separated into two 45-trial sessions, and participants were given a brief break after the first 45 trials before completing the last 45 trials.

Independent Variables

Experiment II added one test condition (sample-specific) and one control condition (unsystematic responding).

Sample-Specific

In this condition, the client’s performance suggested control by position for two of the textual stimuli and accurate responding for the third. For example, when Bird and Cat were presented as sample stimuli, the client’s responding suggested control by the left and middle positions, respectively. However, when Dog was presented, the client always selected Dog in the comparison array. The controlling relations changed across three-trial sessions. This error pattern was selected because it has been used as an example by several researchers (see Mackay, 1991; Sidman, 1980, 1987).
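The example above can be expressed as a separate rule for each sample stimulus, which is what distinguishes this condition from the session-wide position and stimulus biases. The following is a hypothetical Python sketch: the rule table `EXAMPLE_RULES` encodes only the Bird/Cat/Dog example from the text (left position for Bird, middle position for Cat, accurate matching for Dog), positions are indexed 0 (left) to 2 (right), and the function name is invented for illustration.

```python
from typing import Dict, Sequence, Union

# An int is a controlling position; "match" means accurate identity matching.
Rule = Union[int, str]
# Hypothetical rule table for the example in the text.
EXAMPLE_RULES: Dict[str, Rule] = {"Bird": 0, "Cat": 1, "Dog": "match"}


def predict_sample_specific(sample: str, array: Sequence[str],
                            rules: Dict[str, Rule]) -> str:
    """Predict the client's selection when the controlling relation depends on the sample."""
    rule = rules[sample]
    if rule == "match":
        return sample       # this sample controls accurate responding
    return array[rule]      # this sample's responding is controlled by a position


array = ("Cat", "Bird", "Dog")
for sample in ("Bird", "Cat", "Dog"):
    print(sample, "->", predict_sample_specific(sample, array, EXAMPLE_RULES))
# Bird -> Cat   (left position)
# Cat -> Bird   (middle position)
# Dog -> Dog    (accurate match)
```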

Unsystematic

The unsystematic responding condition served as a second control condition in which there was no consistent pattern of responding. In this condition, the experimenters ensured that the client’s performance was inconsistent across trials and stimuli, such that no more than two responses to any single position or stimulus occurred for each sample. This was done in two phases. First, the experimenters randomized the order of the client’s responses using a random number generator. Next, the first and second authors adjusted the resulting response pattern as needed to ensure that no systematic patterns remained.
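The stated constraint can be checked, and random responses generated, in code. The sketch below is an automated stand-in, not the authors’ procedure: in place of the manual second phase, it simply redraws random selections until, for every sample, no single position or stimulus is selected more than twice (rejection sampling). The fixed nine-trial session `SESSION` is a hypothetical example that follows the arrangement described in the Method, and all names are invented for illustration.

```python
import random
from collections import Counter, defaultdict

# Hypothetical nine-trial session: (sample, left-to-right comparison array).
SESSION = [
    ("Dog", ("Dog", "Cat", "Bird")), ("Cat", ("Bird", "Cat", "Dog")),
    ("Bird", ("Cat", "Dog", "Bird")), ("Dog", ("Bird", "Dog", "Cat")),
    ("Cat", ("Dog", "Bird", "Cat")), ("Bird", ("Bird", "Cat", "Dog")),
    ("Dog", ("Cat", "Bird", "Dog")), ("Cat", ("Cat", "Dog", "Bird")),
    ("Bird", ("Dog", "Bird", "Cat")),
]


def is_unsystematic(session, responses, max_repeats=2):
    """True if, for every sample, no position and no stimulus is chosen more than max_repeats times."""
    picks = defaultdict(list)  # sample -> list of (position, stimulus) selections
    for (sample, array), choice in zip(session, responses):
        picks[sample].append((array.index(choice), choice))
    for selections in picks.values():
        positions = Counter(pos for pos, _ in selections)
        stimuli = Counter(stim for _, stim in selections)
        if max(positions.values()) > max_repeats or max(stimuli.values()) > max_repeats:
            return False
    return True


def unsystematic_responses(session, seed=None):
    """Draw random selections, redrawing until the constraint holds."""
    rng = random.Random(seed)
    while True:
        responses = [rng.choice(array) for _, array in session]
        if is_unsystematic(session, responses):
            return responses


responses = unsystematic_responses(SESSION, seed=7)
```

The same check rejects a left-side bias or perfect discrimination, since either produces three identical selections (of a position or of a stimulus) for at least one sample.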

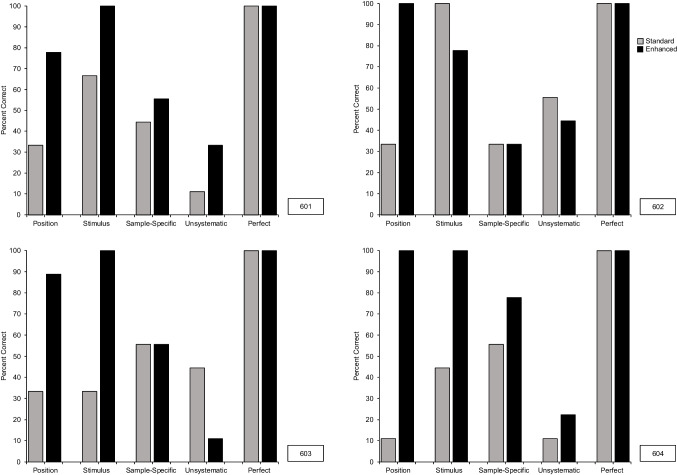

Results

Results from Experiment II are shown in Figure 4. All participants responded with perfect accuracy when presented with the perfect discrimination response pattern on both enhanced and standard data sheets. In contrast, participants’ performance remained at chance levels when presented with unsystematic response patterns on both data sheets. Similar to Experiment I, performance with the enhanced data sheet met criterion for three of four participants in the position and stimulus bias conditions; Participants 601 and 602 responded just below criterion in the position and stimulus bias conditions, respectively. Intermediate performances (i.e., above chance- but below criterion-level responding) were observed for three of four participants in the sample-specific condition when presented on the enhanced data sheet. The remaining participant (602) exhibited chance-level responding with both data sheets in the sample-specific condition. Nevertheless, this same participant responded above criterion in the stimulus bias condition on the standard data sheet, which was the only instance in which responding on the standard data sheet met criterion. Overall, performance was generally higher with the enhanced data sheet across response patterns.

Fig. 4.

Prediction of Response Patterns across Recording Methods for Experiment II

General Discussion

The purpose of the current study was to compare the effects of standard and enhanced data sheets on naïve participants’ identification of error patterns in an MTS arrangement. The results of both experiments provide evidence of the utility of enhanced data sheets for identifying certain error patterns that clients may exhibit during skill acquisition programs. Across both experiments, seven of nine participants identified the position bias using the enhanced data sheet on at least 80% of trials, five of whom did so on every trial. In contrast, all participants in both experiments performed at chance levels when the same error pattern was presented on the standard data sheet. In the stimulus bias condition, eight of nine participants across Experiments I and II correctly identified the stimulus bias on all trials when it was presented on the enhanced data sheet. Performance on the standard data sheet was variable across both experiments, ranging from chance levels to 100% correct. In Experiment II, participants did not identify the sample-specific performance on either data sheet. Finally, the type of data sheet did not affect performance in either experiment when perfect discrimination or unsystematic responding was depicted. All participants predicted the response pattern with perfect accuracy in the perfect discrimination condition (Experiments I and II) and responded at chance levels in the unsystematic responding condition (Experiment II). Overall, these findings illustrate the benefits of enhanced data sheets for identifying error patterns; when error patterns were absent (e.g., perfect discrimination) or unpredictable (e.g., unsystematic responding), however, the two recording methods were similarly effective.

Across Experiments I and II, participants’ performance on the standard data sheet remained at chance levels in the position bias condition, whereas seven of nine participants identified the same error pattern on the enhanced data sheet. This finding is perhaps unsurprising because, on the standard data sheet, the position bias appeared as a single correct response for each stimulus, consistent with chance-level responding. This was always the case in the position bias condition because each stimulus was correct once in each position, in line with the recommendations of Green (2001). This arrangement likely appeared similar to the unsystematic responding condition in Experiment II. In addition, because participants predicted the client’s next response, the lack of detail on the standard data sheet did not allow them to identify which position controlled the response, so they would have had to guess the correct position. In contrast, the enhanced data sheet displayed the sample, the position of each comparison stimulus, and the client’s response, making the position bias more conspicuous. Nevertheless, two participants performed just below criterion (601) or at chance levels (504) even with the enhanced data sheet.
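The reasoning above can be illustrated by collapsing an enhanced record into the sample-by-sample correct counts that a standard data sheet retains. The following is a hypothetical Python sketch: `SESSION` is an invented nine-trial session that follows the arrangement described in the Method (each stimulus correct once in each position), and `standard_sheet` is an illustrative function name. Any position bias yields exactly one correct response per sample, whereas a stimulus bias concentrates all correct responses on one sample, a pattern that remains visible on the standard data sheet even though the errors themselves do not.

```python
from collections import Counter

# Hypothetical session: each stimulus is the correct comparison once in each
# position (0 = left, 1 = middle, 2 = right), following Green (2001).
SESSION = [
    ("Dog", ("Dog", "Cat", "Bird")), ("Cat", ("Bird", "Cat", "Dog")),
    ("Bird", ("Cat", "Dog", "Bird")), ("Dog", ("Bird", "Dog", "Cat")),
    ("Cat", ("Dog", "Bird", "Cat")), ("Bird", ("Bird", "Cat", "Dog")),
    ("Dog", ("Cat", "Bird", "Dog")), ("Cat", ("Cat", "Dog", "Bird")),
    ("Bird", ("Dog", "Bird", "Cat")),
]


def standard_sheet(session, responses):
    """Collapse an enhanced record to what a standard sheet shows: correct trials per sample."""
    tally = Counter()
    for (sample, _), choice in zip(session, responses):
        tally[sample] += int(choice == sample)  # a plus only when the choice matches
    return dict(tally)


left_bias = [array[0] for _, array in SESSION]  # always selects the left comparison
cat_bias = ["Cat" for _ in SESSION]             # always selects Cat
print(standard_sheet(SESSION, left_bias))  # {'Dog': 1, 'Cat': 1, 'Bird': 1}
print(standard_sheet(SESSION, cat_bias))   # {'Dog': 0, 'Cat': 3, 'Bird': 0}
```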

Across both experiments, participants reliably predicted the client’s response pattern in the stimulus bias condition when presented with the enhanced data sheet, with the exception of near-criterion performance by 602. Participants responded less accurately when stimulus biases were depicted on the standard data sheet; however, four participants performed above criterion. This finding is of particular interest because the client’s overall accuracy was represented at chance levels, yet all correct responses occurred for a single target stimulus. Although we might conclude that the client is exhibiting a stimulus bias, this conclusion may also represent a Type I error. That is, because the participant does not know which stimulus the client selected on incorrect trials, it is possible that the client emitted a different error. Thus, the performance of these four participants may reflect carryover effects produced by previous exposure to the stimulus bias condition on the enhanced data sheet. Future studies might control for potential carryover effects by using a group design similar to that of LeBlanc et al. (2020) or by requiring participants to complete all conditions with one data sheet before the other, counterbalancing the order of exposure across participants.

In Experiment II, the addition of the sample-specific condition allowed the experimenters to evaluate participants’ identification of distinct error patterns for each sample stimulus. This condition was intended to represent a client’s performance under faulty stimulus control for two of the three targets. It differed from the conditions in Experiment I, in which the position and stimulus biases represented error patterns emitted across all trials within a session; in the sample-specific condition, the response pattern differed across sample stimuli. Only 604’s performance approached criterion. These findings suggest that naïve participants using enhanced data sheets identified response patterns that were consistent across trials, whereas additional training may be needed to produce similar performance with sample-specific response patterns.

The current findings suggest that even naïve participants with no history of error analysis were more likely to identify the controlling relations when a client’s performance was represented on an enhanced data sheet. Because derivative measures such as percent correct responding may not allow for the evaluation of controlling relations (Sidman, 1980, 1987), behavior analysts may need to rely on data collection methods that thoroughly represent both the instructional arrangement and the client’s response, as the enhanced data sheets in the current study did. This work extends that of LeBlanc et al. (2020) by illustrating additional benefits of robust data collection methods. It should be noted, however, that participants’ performance in the current study did not differ when standard and enhanced data sheets depicted perfect performance or unsystematic responding. This finding suggests that enhanced data sheets may be particularly relevant for clients who exhibit pervasive error patterns. Nevertheless, the higher levels of procedural integrity observed by LeBlanc et al. (2020) for participants using enhanced data sheets might suggest that these data sheets exemplify best practice, with the representation of possible unintended sources of stimulus control serving as an additional promising feature.

Although both experiments demonstrate the clinical utility of enhanced data sheets for identifying error patterns, certain limitations should be mentioned. First, we recruited participants naïve to error or stimulus control analyses in an attempt to rule out prior history with the data collection methods and research questions. Nevertheless, Experiment I included one participant who reportedly worked as an RBT, and all participants in that experiment were pursuing a minor in applied behavior analysis and developmental disabilities. None of the participants in Experiment II worked as an RBT or reported pursuing a minor as part of their degree. However, participants’ performances were largely consistent across experiments, suggesting that their differing histories of behavior analytic practice or coursework did not differentially affect their performance. Future research might include other relevant populations (e.g., Board Certified Behavior Analysts) to further evaluate the effects of clinical or instructional history on similar tasks. Relatedly, a client’s response pattern may be more discriminable to someone conducting the instructional session than when the same performance is represented on completed data sheets. In the current study, participants observed only completed data sheets, which was intended to align with the experience of behavior analysts who cannot attend all instructional sessions and must make clinical decisions based on data alone. Future research might include a live model to determine whether this additional stimulus condition influences participants’ accuracy in identifying response patterns, although this arrangement may more accurately reflect the experience of an RBT than that of a supervising behavior analyst.

The current study included only a few of the error patterns that clients may exhibit in clinical settings. Other response patterns may further illustrate the importance of refined data collection systems. For instance, molecular win–stay response patterns, characterized by “following” the last reinforced response across trials (Grow et al., 2011), may require rigorous data collection systems and additional instruction before a behavior analyst can reliably identify them. Future research might consider how other measures of discriminated performance may allow behavior analysts to identify complex controlling conditions.
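
Because win–stay errors depend on trial order and on which comparison was just reinforced, they cannot be recovered from a session total. The following minimal sketch assumes each trial record stores the correct comparison (`target`) and the comparison selected (`selected`); these names, the hypothetical session, and the operational definition are our own assumptions, not necessarily those used by Grow et al. (2011). Here, a win–stay opportunity is any trial that follows a reinforced trial and whose correct comparison differs from the one just reinforced, so that a win–stay selection cannot be confused with a correct response.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    target: str    # comparison that was correct (and reinforced if selected)
    selected: str  # comparison the client actually selected


def percent_correct(trials: List[Trial]) -> float:
    """Session summary of the kind a standard data sheet yields."""
    return 100 * sum(t.selected == t.target for t in trials) / len(trials)


def win_stay_proportion(trials: List[Trial]) -> Optional[float]:
    """Proportion of win-stay opportunities on which the client re-selected
    the comparison reinforced on the immediately preceding trial.
    Returns None when the session contains no opportunities."""
    opportunities = 0
    win_stays = 0
    for prev, curr in zip(trials, trials[1:]):
        prev_reinforced = prev.selected == prev.target
        if prev_reinforced and curr.target != prev.target:
            opportunities += 1
            if curr.selected == prev.target:
                win_stays += 1
    return win_stays / opportunities if opportunities else None


# Hypothetical session for illustration only.
session = [
    Trial(target="dog", selected="dog"),
    Trial(target="cat", selected="dog"),  # repeats the reinforced "dog"
    Trial(target="cat", selected="cat"),
    Trial(target="cup", selected="cat"),  # repeats the reinforced "cat"
    Trial(target="cup", selected="cup"),
    Trial(target="dog", selected="dog"),
]

print(f"Percent correct: {percent_correct(session):.1f}%")
print(f"Win-stay proportion: {win_stay_proportion(session):.2f}")
```

In this hypothetical session, a moderate percent correct (66.7%) coexists with win–stay selections on two of three opportunities, a pattern visible only when the sequence of targets and selections is recorded.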

The focus of the current study was on error patterns that may develop during instruction using MTS arrangements. Although MTS may represent an invaluable resource for behavior analysts working with individuals with limited verbal repertoires (McIlvane et al., 2002, 2016), it may account for only a small proportion of instruction, whether across clients or for a particular client. Instruction targeting topographically distinct responses may allow for less refined data collection systems simply because there are fewer potential sources of faulty stimulus control. Indeed, some research suggests that estimated (Ferguson et al., 2019) or discontinuous (Najdowski et al., 2009) data collection may be as accurate as continuous (i.e., trial-by-trial) measurement. Additional research is needed to identify the conditions under which each of these systems is appropriate. For example, estimated or discontinuous systems, much like the standard data sheets in the current study, may not allow for the analysis of responding under restricted stimulus control. Nevertheless, behavior analysts should be prepared to select data collection methods appropriate for a given client and skill. In doing so, they may consider client-specific variables, features of the task or setting, and the feasibility of the data collection system (Ledford & Gast, 2018).

Finally, the current study evaluated participants’ identification of response patterns; however, the prevalence of certain error patterns remains unclear. Additional research should describe the development or amelioration of error patterns (e.g., Schneider et al., 2018) or further evaluate arrangements prone to produce restricted stimulus control (e.g., Dittlinger & Lerman, 2011; Singh & Solman, 1990). Although recent examples exist (Hannula et al., 2020; Scott et al., 2021), additional work will help guide evidence-based interventions in behavior analytic practice.

The current findings extend LeBlanc et al. (2020) by demonstrating the advantages of enhanced data sheets for identifying error patterns in an MTS procedure. The experimenters identified differences across standard and enhanced data sheets when stimulus and position biases were exhibited; however, no participant predicted the client’s response pattern in the sample-specific condition using either data sheet. These findings suggest that behavior analysts must consider how particular instructional arrangements (e.g., MTS) and response patterns may require enhanced data collection systems to support the specific needs of their clients.

Data Availability

The data sets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Declarations

Conflicts of Interest

The authors declare that they have no conflict of interest.

Ethical Approval and Informed Consent

This research was approved by the Institutional Review Board at the University of North Carolina Wilmington, and informed consent was obtained before participation began.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Barlow DH, Hayes SC. Alternating treatments design: One strategy for comparing the effects of two treatments in a single subject. Journal of Applied Behavior Analysis. 1979;12(2):199–210. doi: 10.1901/jaba.1979.12-199.

- Behavior Analyst Certification Board. U.S. employment demand for behavior analysts: 2010–2021. 2022a.

- Behavior Analyst Certification Board. BACB certificant data. 2022b. https://www.bacb.com/bacb-certificant-data/

- Dickson CA, Wang SS, Lombard KM, Dube WV. Overselective stimulus control in residential school students with intellectual disabilities. Research in Developmental Disabilities. 2006;27:618–631. doi: 10.1016/j.ridd.2005.07.004.

- Dittlinger LH, Lerman DC. Further analysis of picture interference when teaching word recognition to children with autism. Journal of Applied Behavior Analysis. 2011;44(2):341–349. doi: 10.1901/jaba.2011.44-341.

- Dube WV, McIlvane WJ. Reduction of stimulus overselectivity with nonverbal differential observing responses. Journal of Applied Behavior Analysis. 1999;32(1):25–33. doi: 10.1901/jaba.1999.32-25.

- Ferguson JL, Milne CM, Cihon JH, Dotson A, Leaf JB, McEachin J, Leaf R. An evaluation of estimation data collection to trial-by-trial data collection during discrete trial teaching. Behavioral Interventions. 2019;35:178–191. doi: 10.1002/bin.1705.

- Green G. Behavior analytic instruction for learners with autism: Advances in stimulus control technology. Focus on Autism & Other Developmental Disabilities. 2001;16:72–85. doi: 10.1177/108835760101600203.

- Grow LL, Carr JE, Kodak TM, Jostad CM, Kisamore AN. A comparison of methods for teaching receptive labeling to children with autism spectrum disorders. Journal of Applied Behavior Analysis. 2011;44(3):475–498. doi: 10.1901/jaba.2011.44-475.

- Grow L, LeBlanc L. Teaching receptive language skills: Recommendations for instructors. Behavior Analysis in Practice. 2013;6(1):56–75. doi: 10.1007/BF03391791.

- Hajiaghamohseni Z, Drasgow E, Wolfe K. Supervision behaviors of board certified behavior analysts with trainees. Behavior Analysis in Practice. 2021;14:97–109. doi: 10.1007/s40617-020-00492-1.

- Hannula C, Jimenez-Gomez C, Wu W, Brewer AT, Kodak T, Gilroy SP, Hutsell BA, Alsop B, Podlesnik CA. Quantifying errors of bias and discriminability in conditional-discrimination performance in children diagnosed with autism spectrum disorder. Learning & Motivation. 2020;71:1–16. doi: 10.1016/j.lmot.2020.101659.

- Iversen IH. Sidman or statistics? Journal of the Experimental Analysis of Behavior. 2021;115(1):102–114. doi: 10.1002/jeab.660.

- Johnson C, Sidman M. Conditional discrimination and equivalence relations: Control by negative stimuli. Journal of the Experimental Analysis of Behavior. 1993;59(2):333–347. doi: 10.1901/jeab.1993.59-333.

- LeBlanc LA, Sump LA, Leaf JB, Cihon J. The effects of standard and enhanced data sheets and brief video training on implementation of conditional discrimination training. Behavior Analysis in Practice. 2020;13:53–62. doi: 10.1007/s40617-019-00338-5.

- Ledford JR, Gast DL, editors. Single case research methodology: Applications in special education and behavioral sciences. 3rd ed. Routledge; 2018.

- Mackay HA. Conditional stimulus control. In: Iversen IH, Lattal KA, editors. Experimental analysis of behavior. Elsevier Science; 1991. pp. 301–350.

- McIlvane WJ. Simple and complex discrimination learning. In: Madden GJ, editor. APA handbook of behavior analysis. American Psychological Association; 2013. pp. 129–163.

- McIlvane WJ, Dube WV. Stimulus control topography coherence theory: Foundations and extensions. The Behavior Analyst. 2003;26(2):195–213. doi: 10.1007/BF03392076.

- McIlvane WJ, Gerard CJ, Kledaras JB, Mackay HA, Lionello-DeNolf KM. Teaching stimulus-stimulus relations to minimally verbal individuals: Reflections on technology and future directions. European Journal of Behavior Analysis. 2016;17(1):49–68. doi: 10.1080/15021149.2016.1139363.

- McIlvane WJ, Kledaras JB, Callahan TC, Dube WV. High-probability stimulus control topographies with delayed S+ onset in a simultaneous discrimination procedure. Journal of the Experimental Analysis of Behavior. 2002;77(2):189–198. doi: 10.1901/jeab.2002.77-189.

- McIlvane WJ, Serna RW, Dube WV, Stromer R. Stimulus control topography coherence and stimulus equivalence: Reconciling test outcomes with theory. In: Leslie JC, Blackman D, editors. Experimental and applied analysis of human behavior. Context Press; 2000. pp. 85–110.

- Najdowski AC, Chilingaryan V, Bergstrom R, Granpeesheh D, Balasanyan S, Aguilar B, Tarbox J. Comparison of data-collection methods in behavioral intervention programs for children with pervasive developmental disorders: A replication. Journal of Applied Behavior Analysis. 2009;42(4):827–832. doi: 10.1901/jaba.2009.42-827.

- Ray BA. Selective attention: The effects of combining stimuli which control incompatible behavior. Journal of the Experimental Analysis of Behavior. 1969;12(4):539–550. doi: 10.1901/jeab.1969.12-539.

- Ray BA, Sidman M. Reinforcement schedules and stimulus control. In: Schoenfeld WN, editor. The theory of reinforcement schedules. Appleton-Century-Crofts; 1970. pp. 187–214.

- Rezlescu C, Danaila I, Miron A, Amariei C. More time for science: Using Testable to create and share behavioral experiments faster, recruit better participants, and engage students in hands-on research. Progress in Brain Research. 2020;253:243–262. doi: 10.1016/bs.pbr.2020.06.005.

- Schneider KA, Devine B, Aguilar G, Petursdottir AI. Stimulus presentation order in receptive identification tasks: A systematic replication. Journal of Applied Behavior Analysis. 2018;51(3):634–646. doi: 10.1002/jaba.459.

- Scott AP, Kodak T, Cordeiro MC. Do targets with persistent responses affect the efficiency of instruction? The Analysis of Verbal Behavior. 2021;37(2):217–225. doi: 10.1007/s40616-021-00163-4.

- Sidman M. A note on the measurement of conditional discrimination. Journal of the Experimental Analysis of Behavior. 1980;33(2):285–289. doi: 10.1901/jeab.1980.33-285.

- Sidman M. Two choices are not enough. Behavior Analysis. 1987;22(1):11–18.

- Singh NN, Solman RT. A stimulus control analysis of the picture-word problem in children who are mentally retarded: The blocking effect. Journal of Applied Behavior Analysis. 1990;23(4):525–532. doi: 10.1901/jaba.1990.23-525.