Abstract

Background:

Deformable image registration (DIR) is an essential technique in many radiation oncology applications. However, conventional DIR approaches typically take several minutes to register one pair of 3D CT images, and the resulting deformable vector fields (DVFs) are specific to the pair of images used, making them less appealing for clinical application.

Purpose:

A deep learning-based DIR method using CT images is proposed for lung cancer patients to address these common drawbacks of conventional DIR approaches and, in turn, to accelerate related applications such as contour propagation, dose deformation, and adaptive radiotherapy (ART).

Methods:

A deep neural network based on VoxelMorph was developed to generate DVFs using CT images collected from 114 lung cancer patients. Two models were trained, one with the weighted mean absolute error (wMAE) loss and one with both the wMAE loss and an optional structural similarity index measure (SSIM) loss (i.e., the wMAE model and the M+S model). In total, 192 pairs of initial CTs and verification CTs were included in the training dataset, and another 10 independent pairs of CTs were included in the testing dataset. The verification CTs were usually taken 2 weeks after the initial CTs. Synthetic CTs were generated by warping the initial CTs according to the DVFs generated by the pre-trained models. The image quality of the synthetic CTs was evaluated by measuring the similarity between the verification CTs and the synthetic CTs generated by the proposed methods and by conventional DIR approaches, respectively. The per-voxel absolute CT-number-difference volume histogram (CDVH) and MAE were used as evaluation metrics. The time needed to generate the synthetic CTs was also recorded and compared quantitatively. Contours were propagated using the derived DVFs and evaluated with SSIM. Forward dose calculations were performed on the synthetic CTs and the corresponding verification CTs. Dose volume histograms (DVHs) were generated from the dose distributions on both the verification CTs and the synthetic CTs generated by the two models. Clinically relevant DVH indices were derived for comparison. The resulting dose distributions were also compared using 3D Gamma analysis with criteria of 3mm/3%/10% and 2mm/2%/10%, respectively.

Results:

The two models (wMAE and M+S) achieved inference times of 263.7±163 / 265.8±190 ms and MAEs of 13.15±3.8 / 17.52±5.8 HU on the testing dataset, respectively. Average contour SSIM scores of 0.987±0.006 and 0.988±0.004 were achieved by the two proposed models, respectively. For both models, the CDVH of a typical patient showed that less than 5% of the voxels had a per-voxel absolute CT-number difference larger than 55 HU. The dose distribution calculated on a typical synthetic CT showed differences of ≤2cGy[RBE] for clinical target volume (CTV) D95 and D5, within ±0.06% for total lung V5, ≤1.5cGy[RBE] for heart and esophagus Dmean, and ≤6cGy[RBE] for cord Dmax, compared to the dose distribution calculated on the verification CT. Good average 3D Gamma passing rates (>96% for 3mm/3%/10% and >94% for 2mm/2%/10%) were also observed.

Conclusion:

A deep neural network-based DIR approach was proposed and shown to be reasonably accurate and efficient for registering the initial CTs and verification CTs of lung cancer patients.

Keywords: deformable image registration, 3D lung CT images, deep neural networks

1. Introduction

Image registration aims to find the spatial relationship between two or more sets of images and is usually formalized as the optimization of a function balancing the similarity between images (in intensity, topology, or both)1. Compared to rigid image registration (RIR), deformable image registration (DIR) attempts to find the voxel-specific spatial relationship between two or more sets of images. Therefore, DIR has far more flexibility than RIR and can be used in more complicated clinical scenarios, such as images with large anatomical changes. DIR has been used extensively in radiation therapy1 for tasks such as automatic segmentation2,3, mathematical modeling4–7, functional imaging8–10, and dose deformation11–16.

Over the years, many conventional DIR approaches have been developed and adopted clinically. They can be broadly divided into two categories: parametric6,7,17 and non-parametric models18–21. A parametric model represents the DVFs as a linear combination of basis functions. The B-spline model22–25 is an example of such a parametric model: the displacement at each voxel is determined locally by control points within a limited distance, which significantly reduces the computation time and memory required. For example, Shekhar et al.26 proposed a DIR framework for auto-segmentation consisting of a B-spline-based transformation model, a mean squared difference-based image similarity measure, and a downhill simplex algorithm as the optimization scheme. It achieved mean squared differences of less than 120HU and 135HU for lung and abdomen patients, respectively. Yet, the method can only be used for CTs acquired with either breath-holding or respiratory gating, which limits its application in clinics. In contrast, non-parametric models such as demons-based18–21 methods calculate a transformation vector for every voxel, achieving more accurate DVFs but requiring more computation time and memory than parametric models. For example, Reed et al.27 achieved an average mean displacement of 1.3 mm in auto-segmentation for 10 patients using an accelerated "demons" algorithm,28 which adds an HU number gradient similarity term and a transformation error term to the demons energy function and uses the limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) algorithm29 to automatically determine the number of iterations, thus accelerating the algorithm. However, it also requires the patients to have a similar body mass index (BMI)29, which potentially limits its clinical application.
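To make the idea of a DVF built as a linear combination of basis functions concrete, the following is a minimal 1D sketch, assuming uniform cubic B-spline basis functions centred on a regular grid of control points. The function names `cubic_bspline` and `bspline_displacement` are illustrative and do not come from any of the cited methods; a 3D DVF would use a tensor product of such basis functions along each axis, with one coefficient set per displacement component.

```python
import numpy as np


def cubic_bspline(t):
    """Uniform cubic B-spline basis beta3(t); zero for |t| >= 2 (local support)."""
    t = np.abs(np.asarray(t, dtype=float))
    out = np.zeros_like(t)
    inner = t < 1.0
    outer = (t >= 1.0) & (t < 2.0)
    out[inner] = 2.0 / 3.0 - t[inner] ** 2 + 0.5 * t[inner] ** 3
    out[outer] = (2.0 - t[outer]) ** 3 / 6.0
    return out


def bspline_displacement(x, coeffs, spacing):
    """1D displacement u(x) = sum_k c_k * beta3((x - k * spacing) / spacing)."""
    x = np.asarray(x, dtype=float)
    k = np.arange(len(coeffs))
    # basis[i, k]: weight of control point k at sample position x[i]
    basis = cubic_bspline((x[:, None] - k[None, :] * spacing) / spacing)
    return basis @ np.asarray(coeffs, dtype=float)


if __name__ == "__main__":
    xs = np.linspace(10.0, 50.0, 5)   # sample positions (mm)
    coeffs = np.zeros(8)              # control points at 0, 10, ..., 70 mm
    coeffs[4] = 3.0                   # displace only the control point at 40 mm
    print(bspline_displacement(xs, coeffs, spacing=10.0))
```

Because each basis function vanishes beyond two control-point spacings, changing one coefficient only moves nearby positions; this locality is what keeps B-spline models cheap in time and memory.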

Modern radiation therapy is increasingly sophisticated, with beam delivery techniques such as intensity modulation and/or volumetric modulation, including intensity-modulated X-ray-based radiation therapy (IMRT)30–33, volumetric modulated arc therapy (VMAT)34, and intensity-modulated proton therapy (IMPT)35–43. IMPT offers distinct advantages in terms of highly conformal target coverage and superior protection of organs at risk (OARs), owing to its high flexibility at the beamlet level in treatment planning and dose delivery35–38. However, it is also extremely sensitive to proton beam range uncertainties, patient setup uncertainties, and intra- and inter-fractional anatomical changes.38,44–78 The concept of adaptive radiotherapy (ART)14,79,80 has been introduced to account for anatomical changes during the treatment course. In ART, patients require periodic verification imaging during treatment to capture their internal anatomical changes. However, the potential gain of ART comes at the cost of increased clinical workload, such as CT deformation, contour propagation, and dose deformation. These clinical tasks all depend on the availability and quality of DIR. Unfortunately, conventional DIR approaches typically take minutes to register one pair of 3D CTs, and the resulting DVFs do not generalize to other CT images, even similar ones or ones from the same patient, which greatly limits their application in ART, a very time-sensitive process. Moreover, re-planning is significantly more frequent in IMPT than in IMRT/VMAT (for example, for head and neck cancer, 20–25% for IMRT/VMAT versus 45–50% for IMPT), making the same tasks even more labor intensive in proton clinics. Consequently, undesired treatment breaks, which allow tumor cell repopulation, might occur at busy clinics due to insufficient resources.

Recently, several deep learning-based methods have been developed to speed up DIR in medical image analysis81–83. Yang et al.81 proposed a two-step deep learning framework for predicting the momentum parameterization of the large deformation diffeomorphic metric mapping (LDDMM) model. The framework consists of two auto-encoder networks with the same architecture: the first estimates the initial patch-wise momentum and the second further refines it. Although this method is much faster than conventional DIR approaches, its computational complexity is higher than that of a typical single-step deep learning network. Besides, because it cascades two networks, errors may accumulate as the network gets deeper. Balakrishnan et al.82 proposed a UNet-like model termed VoxelMorph to learn the DVFs from pairs of magnetic resonance images (MRIs) (i.e., moving images and fixed images); the generated DVFs and moving images then go through a non-learnable spatial transformation to form warped images that resemble the fixed images. VoxelMorph achieves performance comparable to state-of-the-art conventional DIR methods while being orders of magnitude faster, and it has therefore been widely used in medical image analysis. Most of these methods were proposed for MRIs, which typically have high resolution and rich anatomical information, whereas the image modality commonly used in radiation therapy is CT, which has a relatively low resolution. Vos et al.83 proposed a deep learning image registration (DLIR) framework for unsupervised affine and deformable image registration. It uses convolutional layers to predict the B-spline control points in each of the three directions; the DVFs are then generated from the estimated control points by B-spline interpolation, implemented with transposed convolutions.
Although DLIR can be applied to both MRIs and CTs, it requires a large training dataset when used for CT images. Moreover, the trained model can only be used for 4D CTs, considering only intra-fractional anatomical changes, which limits its clinical use. Another deep learning-based DIR approach was proposed by Zhao et al.84, cascading multiple Volume Tweening Networks (VTNs) to recursively generate coarse-to-fine DVFs. Typically, the more cascades there are, the more accurate the generated DVFs are. However, the number of cascades is bounded by the GPU memory, and a large amount of data is required to train such a large-scale network, which is challenging for tasks involving medical images.

To address the aforementioned challenges of applying deep learning-based DIR approaches to CTs (e.g., dependence on large training datasets, restriction to 4D CTs, and the requirement of high resolution, which CTs do not offer), we propose several additional loss terms in the objective function of VoxelMorph, as well as a random masking strategy that greatly improves the quality of the synthetic CT images (a similar idea was adopted by He et al.93 to significantly accelerate training and improve classification accuracy), yielding an efficient, accurate, and generalizable deep learning-based DIR method for CTs.

The contributions can be summarized as follows:

We proposed a novel VoxelMorph-based framework for inter-fractional lung DIR. Unlike conventional DIR approaches, whose results are specific to the images used, our framework can be applied to images of new, independent patients once the model is trained, making it more practical and versatile.

A new random masking strategy was proposed to significantly reduce artifacts in the deformed images caused by the intrinsically lower resolution of CT images compared with MRIs. In addition, we investigated the roles of different loss terms in model training and used a weighted mean absolute error (wMAE) loss and an optional structural similarity index measure (SSIM) loss to bridge the gap between CT images and MRIs, the latter having been well studied in deep learning-based DIR. This further improves the image quality of the deformed CTs.

Dedicated pre- and post-processing methods are proposed to standardize all the CT images used in this work. As a demonstration, we constructed a novel, diversified inter-fractional lung CT dataset consisting of approximately 200 pairs of such standardized CT images collected from patients treated with proton therapy and photon therapy. Such a dataset can be used to evaluate the performance not only of DIR approaches but also of other related tasks. Moreover, the proposed pre- and post-processing methods can be applied to other medical images (e.g., head and neck CT images, MRIs, etc.).

Our methods achieved state-of-the-art performance in terms of time efficiency, reconstructed image quality, negligible differences between the dose distributions calculated on the ground-truth and deformed CTs, and good Gamma passing rates.

2. Methods

To address the drawbacks of conventional DIR approaches, such as low accuracy and long computation time, we train a deep learning-based model to generate deformable vector fields (DVFs) using VoxelMorph, a general-purpose library of deep learning-based tools for registration and deformation, and we add two loss terms that focus on voxel-level similarity and structure-level similarity, respectively. We also introduce a new training strategy that alleviates artifacts in low-resolution images (i.e., CT images), yielding accurately warped CT images. Section 2.1 describes the data collection and pre-processing. Section 2.2 introduces the overall structure of the proposed method, and section 2.3 details its training and validation processes. The data analysis is described in section 2.4.

2.1. Data Pre-processing

The initial CT and verification CT of 114 lung cancer patients treated at our institution were retrospectively selected, among which the CT images from 104 patients were used for training and 10 were used for testing. Each patient had one initial CT and 1–4 verification CTs, forming a training dataset of 192 pairs of CT images and a testing dataset of 10 pairs of CT images. In the training dataset, 101 pairs were collected from 67 patients treated with photon therapy whereas the other 91 pairs were collected from 37 patients treated with proton therapy. Among the 10 testing patients, 7 patients were treated with photon therapy, while the other three were treated with proton therapy.

As the collected CT images were acquired at various times and on various CT simulators, they may differ due to anatomical changes and the different simulator configurations. To ensure a consistent dataset, data pre-processing was conducted as follows. First, we used the iterative metal artifact reduction (iMAR) algorithm85, which is integrated into the commercial software of the CT simulator, to remove artifacts caused by metal implants. Then, rigid registration and center-cropping were applied to all the CT images. We first randomly picked one CT image in which the regions of interest (ROIs) were roughly located in the center of the 3D volume and regarded it as the reference CT. We then rigidly registered all other CTs to the reference CT using the Insight Toolkit (ITK)86, so that all CTs had the same resolution of 2×1.26×1.26 mm³, the same dimension size, etc. Next, we center-cropped all CTs to a dimension size of 136×384×384 to exclude non-informative areas and to reduce the memory burden during training. We manually selected a fixed cropping region instead of using the BODY contour, since the BODY contour varied from patient to patient and, in some proton plans, contained the digital couch. Last, we normalized all CT numbers to values approximately between 0 and 1 by adding a uniform shift of 1,000 and dividing by a fixed denominator of 3,000. The pre-processed initial CTs and verification CTs were then used for model training and validation. The workflow of both the data pre-processing and post-processing steps is shown in Figure 1.
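The intensity normalization and its inverse (needed later when post-processing the synthetic CTs for dose calculation) follow directly from the stated shift of 1,000 and denominator of 3,000. The NumPy sketch below also shows a fixed-window crop to 136×384×384; because the crop region was selected manually, the corner argument `start` is a hypothetical input, and the ITK-based rigid registration and resampling steps are not reproduced.

```python
import numpy as np

HU_SHIFT = 1000.0   # uniform shift stated in the text
HU_SCALE = 3000.0   # fixed denominator stated in the text


def normalize_hu(ct_hu):
    """Map CT numbers (HU) to roughly [0, 1]: (HU + 1000) / 3000."""
    return (np.asarray(ct_hu, dtype=np.float32) + HU_SHIFT) / HU_SCALE


def denormalize_hu(ct_norm):
    """Inverse mapping back to HU, as used when post-processing synthetic CTs."""
    return np.asarray(ct_norm, dtype=np.float32) * HU_SCALE - HU_SHIFT


def crop_fixed(volume, start, shape=(136, 384, 384)):
    """Crop a fixed (z, y, x) window whose corner `start` is chosen by hand (hypothetical)."""
    if any(s < 0 or s + n > d for s, n, d in zip(start, shape, volume.shape)):
        raise ValueError("crop window falls outside the volume")
    return volume[tuple(slice(s, s + n) for s, n in zip(start, shape))]
```

Keeping the forward and inverse mappings as a pair makes it straightforward to undo every pre-processing step before forward dose calculation.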

Figure 1.

Illustration of the data pre-processing and post-processing steps, showing the raw data, the CT images after RIR was applied to the raw data, and the images used in model training and validation.

2.2. Overview of the proposed framework

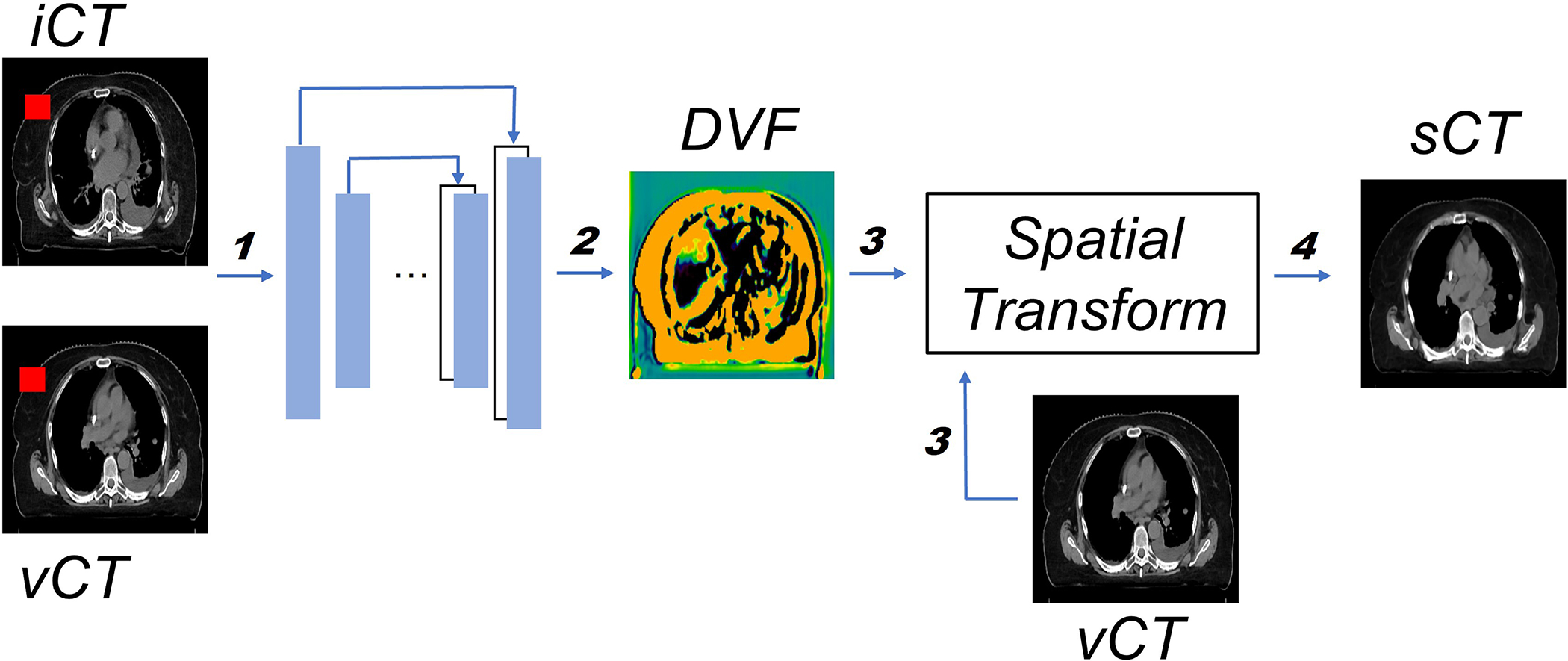

An overview of the proposed framework is illustrated in Figure 2, and the detailed model architecture is shown in Figure 3. The input to the model is a pair of CT images, the initial CT and the verification CT, taken at different time points (usually several weeks apart). The backbone of the model is VoxelMorph, a UNet-like deep neural network architecture whose output is the DVFs. Finally, the initial CT undergoes a spatial transformation based on the derived DVFs to form the final output, the synthetic CT (Fig. 2).

Figure 2.

Overview of the proposed workflow. The inputs consist of both the initial CT and the verification CT (step 1). Both the initial CT and the DVF generated by the model (step 2) go through the spatial transformation (step 3) to obtain the final output, the synthetic CT (step 4). The training and inference paths are indicated by bold numbers. Note that the random mask (red rectangle in both the initial CT and the verification CT) is only applied in the training stage. More details about the random mask are given in section 2.3.

Figure 3.

Details of the neural network architecture. Every block represents one layer, the value on the left is the input image size, whereas the value on the top indicates the number of feature maps.

Since the 3D lung CT images differ in resolution and dimension size from the MRIs used in the original VoxelMorph model, the kernel size, stride, and other parameters were changed accordingly to make the CT images and the network compatible. Specifically (Fig. 3), 3D convolutional layers with a kernel size of 3 and a stride of 1 are used in both the encoder and decoder. A LeakyReLU87 layer with a negative slope of 0.2 is applied right after each convolutional layer. The convolutional layers, together with down-sampling across layers, allow the network to capture hierarchical features from the input CT image pairs. The decoder then learns the DVFs from both the hierarchical features extracted by each encoder layer and the previous decoder layer. To deal with the odd number of feature maps in the deepest layer of the encoder and decoder, we randomly duplicated one of the feature maps and concatenated it with the original feature maps (the second layer in the decoder), so that the number of feature maps is consistent in the encoder and decoder. Finally, the output of the network, i.e., the DVFs, is applied to the initial CT to generate the synthetic CT through a spatial transform, in which the sampling location of each voxel is first calculated and the intensity is then obtained by linear interpolation. The quality of the synthetic CT is evaluated with the verification CT as the ground truth.
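The spatial transform step can be illustrated with a small NumPy sketch of dense warping with trilinear interpolation: each output voxel samples the moving image (here, the initial CT) at its own position plus the displacement. This is not the VoxelMorph/PyTorch implementation used in this work; the function name `warp_trilinear`, the (3, D, H, W) DVF layout in voxel units, and the clamping of out-of-range locations to the volume border are assumptions made for illustration.

```python
import numpy as np


def warp_trilinear(moving, dvf):
    """Warp a 3D volume with a dense displacement field.

    moving: array (D, H, W); dvf: array (3, D, H, W) of displacements in voxels.
    Output voxel p samples `moving` at p + dvf[:, p] with trilinear interpolation;
    locations outside the volume are clamped to the border (an assumption).
    """
    shape = moving.shape
    grid = np.stack(np.meshgrid(*[np.arange(n) for n in shape], indexing="ij"))
    loc = grid + dvf
    lo, hi, frac = [], [], []
    for axis, n in enumerate(shape):
        coord = np.clip(loc[axis], 0, n - 1)
        base = np.floor(coord).astype(int)
        lo.append(base)
        hi.append(np.minimum(base + 1, n - 1))
        frac.append(coord - base)
    out = np.zeros(shape, dtype=float)
    # Accumulate the weighted contributions of the 8 corners around each sample point.
    for cz in (0, 1):
        wz = frac[0] if cz else 1.0 - frac[0]
        iz = hi[0] if cz else lo[0]
        for cy in (0, 1):
            wy = frac[1] if cy else 1.0 - frac[1]
            iy = hi[1] if cy else lo[1]
            for cx in (0, 1):
                wx = frac[2] if cx else 1.0 - frac[2]
                ix = hi[2] if cx else lo[2]
                out += wz * wy * wx * moving[iz, iy, ix]
    return out
```

The same routine can warp structure bitmaps as well as CT intensities, since interpolation is applied voxel by voxel independently of what the values represent.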


2.3. Training and validation protocols of the proposed framework

As shown in Figures 2 and 3, the base architecture is VoxelMorph, a UNet-like structure originally proposed for DIR of brain MRI images. Although vanilla VoxelMorph works well for brain MRI, its performance degrades greatly when applied directly to lung CT images, for several reasons: 1) MRI images typically have a much higher resolution than CT images, which lets the model capture more voxel-wise detail; 2) the number of MRI images used in the previous study is much larger than the number of CT images used in this study, so the model can easily overfit the smaller CT dataset; 3) the lung disease site shows larger variation across patients, and within one patient due to inter- and intra-fractional anatomical changes, than the brain. Therefore, to address these challenges, we proposed a new training strategy and a new loss function.

In the training stage, we proposed to use a weighted per-voxel HU mean absolute error (wMAE) loss to measure the voxel-wise similarity between the ground-truth verification CT and the synthetic CT. The wMAE loss is defined as:

| (1) |

where the HU number of each voxel is used to weight its absolute error. Unlike a plain MAE loss, the weight of the similarity loss of each voxel is proportional to the HU number of that voxel. Hence, voxels in structures with higher HU numbers, e.g., bone, are assigned larger weights than other voxels. This, together with the other loss terms, helps suppress high-HU artifacts.
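Because Equation (1) did not survive in this text, the sketch below is only one plausible reading of "weights proportional to the HU number", not the authors' exact loss. It assumes both volumes are already normalized with (HU + 1000) / 3000, so that the ground-truth value of each voxel is non-negative and increases with HU, and it normalizes the weights to sum to 1; the function name `weighted_mae` is hypothetical.

```python
import numpy as np


def weighted_mae(fixed, warped, eps=1e-8):
    """HU-weighted mean absolute error between ground truth and synthetic CT.

    Assumes both inputs are normalized with (HU + 1000) / 3000 and uses the
    ground-truth value of each voxel as its weight, so high-HU voxels such as
    bone count more than lung or air voxels.
    """
    fixed = np.asarray(fixed, dtype=float)
    warped = np.asarray(warped, dtype=float)
    weights = np.clip(fixed, 0.0, None)   # keep weights non-negative
    return float(np.sum(weights * np.abs(fixed - warped)) / (np.sum(weights) + eps))
```

One consequence of this particular choice is that a voxel at −1000 HU receives zero weight, so errors in air would be ignored entirely; the published Equation (1) may handle this differently.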

Following VoxelMorph, we also applied a smooth loss term to the generated DVFs to keep them physically realistic. The smooth loss term is defined in Equation (2) as follows:

| (2) |

where the spatial gradient of the displacement at each voxel is penalized. To simplify the computation, the spatial gradients were approximated by differences between neighboring voxels.
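A minimal NumPy sketch of such a smoothness penalty, assuming a DVF stored as an array of shape (3, D, H, W): forward differences between neighboring voxels approximate the spatial gradients, and their mean squared values are averaged over the three axes. This mirrors the diffusion-type regularizer used in VoxelMorph as we understand it, but the exact normalization in Equation (2) is not recoverable here, and the function name `smoothness_loss` is illustrative.

```python
import numpy as np


def smoothness_loss(dvf):
    """Diffusion-style regularizer on a DVF of shape (3, D, H, W).

    Spatial gradients are approximated by forward differences between
    neighboring voxels; the mean squared difference along each axis is
    averaged over the three axes.
    """
    dvf = np.asarray(dvf, dtype=float)
    dz = np.diff(dvf, axis=1)
    dy = np.diff(dvf, axis=2)
    dx = np.diff(dvf, axis=3)
    return float((np.mean(dz ** 2) + np.mean(dy ** 2) + np.mean(dx ** 2)) / 3.0)
```

A constant (rigid translation) field gives zero penalty, while fields that change abruptly between neighboring voxels are penalized. Per the text, Equation (3) adds this term to the wMAE loss with a weight of 0.01 during training.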

Then, we combined all loss terms to obtain the objective function as follows:

| (3) |

where the smooth loss term is multiplied by a weight, which was set to 0.01 in training. The model trained with Equation (3) as the objective function is referred to as the wMAE model.

Since a lung CT image typically contains multiple structures with distinct HU numbers, it is challenging to recover all structures simultaneously. Thus, we further extended the wMAE model by applying a structure loss term to the clinical target volume (CTV) and each of five organs at risk (OARs), namely the esophagus, heart, left lung, right lung, and cord. Specifically, the contours of the CTV and OARs were converted to bitmaps, with 1 indicating voxels within the ROIs and 0 indicating voxels outside the ROIs. Then, the structure bitmaps of the synthetic CT were generated by warping the structure bitmaps of the initial CT with the generated DVF. Last, we used the structural similarity index measure (SSIM)88 as the structure loss to compare the structure bitmaps of the verification CT and the synthetic CT. SSIM was used because it considers not only the structural similarity between corresponding structures but also their luminance and contrast, whereas the commonly used Dice similarity coefficient (DSC)22,89,90 only considers volume overlap. SSIM is more appropriate for lung CT images, since they often contain multiple structures spanning a large range of HU numbers, which potentially leads to diverse luminance and contrast. SSIM is defined in Equation (4) as follows:

| (4) |

where the two arguments denote the structure bitmaps in the verification CT and in the synthetic CT, respectively. The expression is evaluated over the structures considered in this study, using the mean and standard deviation of the voxels in each structure, and two small constants ensure stability when the denominator approaches 0. An SSIM value of 1 indicates the best agreement and a value of 0 indicates the worst agreement. Finally, we combined all loss terms to obtain the objective function as follows:

| (5) |

where the two loss-term weights were set to 0.01 and 0.1 in training. The model trained with Equation (5) as the objective function is referred to as the M+S model.
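The structure term can be sketched as an SSIM computed once over each pair of structure bitmaps (verification CT vs. synthetic CT) and averaged over the structures. Since Equation (4) is not reproduced in this text, the global (non-windowed) form and the constants k1 = 0.01 and k2 = 0.03 (the defaults of the standard SSIM definition by Wang et al.) are assumptions; the standard formula also uses the covariance of the two bitmaps. `global_ssim` and `structure_ssim` are illustrative names.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed once over a whole bitmap (no sliding window)."""
    x = np.asarray(x, dtype=float).ravel()
    y = np.asarray(y, dtype=float).ravel()
    c1 = (k1 * data_range) ** 2   # stabilizing constants for near-zero denominators
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))


def structure_ssim(fixed_masks, warped_masks):
    """Average SSIM over paired structure bitmaps (e.g., the CTV and five OARs)."""
    return float(np.mean([global_ssim(f, w) for f, w in zip(fixed_masks, warped_masks)]))
```

To use it as a loss to be minimized, one would typically take 1 − SSIM; how the SSIM term actually enters Equation (5) is not stated here.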

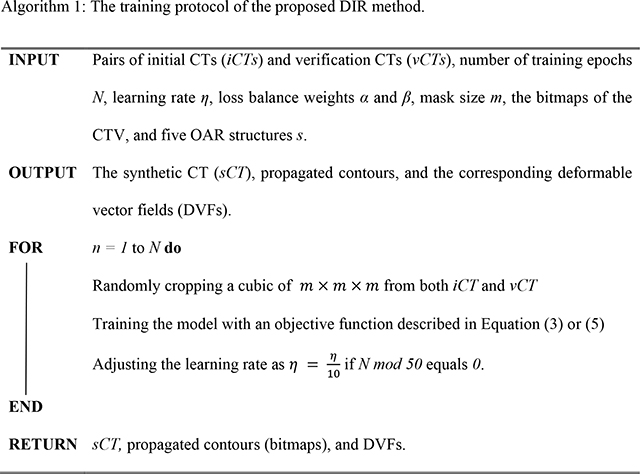

Considering the limited but diverse lung CT images used in this study and the small dimension size of each CT image, the model tends either to overfit the dataset easily or to fail to capture detailed voxel information. Thus, we introduced a training strategy, random masking, to address this issue. In the training stage, for each batch, we randomly masked out a cube from both the initial CT and the verification CT. An illustration of the random mask strategy is shown in Figure 4.

Figure 4.

Illustration of the random mask strategy used in the model training. In each batch, the value of the voxels enclosed by the random mask will be set to 0.

Since the voxels in the masked cube are completely hidden from the network in a given batch, the network returns higher losses for the masked cube, which in turn makes the model assign higher weights to the masked region and yield images with better fine details in the next batch to reduce the loss. As training proceeds over batches, the model goes through all the voxels and eventually produces good deformations for the entire image dataset. Moreover, the random mask can also be considered a form of data augmentation that inserts different noise (i.e., the masked cube) into each batch, thus alleviating the risk of overfitting. Through an empirical study, we found that a random mask with a size of 5 best balanced accuracy and time cost, so a size of 5 was used in the following experiments. An Adam optimizer with an initial learning rate of 1e-4 was used for training, and the hyperparameters associated with the Adam optimizer were and . The models were implemented with the PyTorch (https://pytorch.org/) deep learning library and trained on four A100 GPUs with a batch size of 4. The proposed iterative training loop is summarized in Algorithm 1.
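The random mask step can be sketched as zeroing one randomly placed cube in each training pair. The sketch assumes that the cube is placed at the same location in both the initial CT and the verification CT, that "size 5" means 5 voxels per side, and that the cube lies fully inside the volume; `random_cube_mask` is a hypothetical helper and would be applied only during training.

```python
import numpy as np


def random_cube_mask(fixed, moving, size=5, rng=None):
    """Zero out the same randomly placed size^3 cube in both volumes (training only)."""
    rng = np.random.default_rng() if rng is None else rng
    # Choose a corner so that the whole cube fits inside the volume.
    corner = [int(rng.integers(0, n - size + 1)) for n in fixed.shape]
    region = tuple(slice(c, c + size) for c in corner)
    fixed, moving = fixed.copy(), moving.copy()   # leave the inputs untouched
    fixed[region] = 0.0
    moving[region] = 0.0
    return fixed, moving
```

Drawing a new corner for every batch means that, over many iterations, different parts of the anatomy are hidden from the network.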

In the validation stage, the random mask was not applied to the test pairs of lung CT images. The model produced the DVFs for each test CT image pair and then generated the synthetic CT as described above. Moreover, the bitmaps of the CTV and any given OAR contours in the synthetic CT images were generated by warping the corresponding structure bitmaps from the initial CT images based on the generated DVFs. The details of the validation steps are shown in Algorithm 2.

2.4. Data Analysis

Both the trained wMAE and M+S models were validated on the testing dataset, which comprised 10 independent patients and underwent the same data pre-processing and post-processing described above. For image quality evaluation on the testing dataset, we directly measured the similarity between the ground-truth CTs, i.e., the verification CTs, and the synthetic CTs, which were derived by deforming the initial CTs with the derived DVFs, using the per-voxel absolute CT-number-difference volume histogram (CDVH) and MAE as evaluation metrics.
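The two image-quality metrics can be sketched as follows, assuming both CTs are given in HU on the same voxel grid. The CDVH is read here as a cumulative histogram (percentage of voxels whose absolute difference exceeds each threshold), which matches how the results quote "5% of the voxels" above a given HU difference; the function names are illustrative.

```python
import numpy as np


def mae_hu(ground_truth_hu, synthetic_hu):
    """Mean absolute CT-number difference in HU."""
    gt = np.asarray(ground_truth_hu, dtype=float)
    syn = np.asarray(synthetic_hu, dtype=float)
    return float(np.mean(np.abs(gt - syn)))


def cdvh(ground_truth_hu, synthetic_hu, thresholds_hu):
    """Percentage of voxels whose absolute HU difference exceeds each threshold."""
    diff = np.abs(np.asarray(ground_truth_hu, dtype=float)
                  - np.asarray(synthetic_hu, dtype=float)).ravel()
    return np.array([100.0 * np.mean(diff > t) for t in thresholds_hu])


def hu_difference_at_volume(ground_truth_hu, synthetic_hu, volume_percent=5.0):
    """Absolute HU difference exceeded by `volume_percent` % of the voxels."""
    diff = np.abs(np.asarray(ground_truth_hu, dtype=float)
                  - np.asarray(synthetic_hu, dtype=float))
    return float(np.percentile(diff, 100.0 - volume_percent))
```

Plotting `cdvh` over a range of thresholds gives a curve analogous to a DVH, with HU difference in place of dose.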

Four conventional DIR approaches (fast symmetric force, diffeomorphic, log domain diffeomorphic, and symmetric log domain diffeomorphic) were also compared. For these conventional methods, we used the DIR algorithms included in the open-source image registration library Plastimatch91 to register the initial CTs to the verification CTs. For a fair comparison, the same pre-processing procedure was used for the conventional DIR approaches.

For the dosimetric evaluation, we post-processed both the synthetic CTs and the verification CTs by inverting all the pre-processing steps, so that they had the same configurations as their corresponding original CTs. Then, forward dose calculations of the original plan were performed on the synthetic CTs and the verification CTs. The resulting dose distributions were compared using 3D Gamma analysis. DVHs were also generated for the two dose distributions, and clinically relevant DVH indices were compared for selected structures: D95 and D5 (the minimum dose covering the most highly irradiated 95% and 5% of the structure's volume, respectively) for the CTV, V5 (the percentage of the volume receiving at least 5Gy [RBE]) for the total lung, Dmean (mean dose) for the heart, Dmax (maximum dose) for the cord, and Dmean for the esophagus. The clinically relevant DVH indices were also statistically analyzed using the paired Student's t-test, with a P-value < 0.05 considered statistically significant.
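For intuition about the Gamma criteria, below is a brute-force 1D sketch of a global gamma pass rate. It assumes the three numbers in 3mm/3%/10% are the distance-to-agreement, the dose-difference criterion relative to the maximum reference dose, and a low-dose cutoff below which reference points are not scored. Clinical 3D tools also interpolate the evaluated dose and search in three dimensions, so this is a simplification, not the software used in the study; `gamma_pass_rate_1d` is an illustrative name.

```python
import numpy as np


def gamma_pass_rate_1d(ref_dose, eval_dose, spacing_mm, dta_mm=3.0,
                       dd_percent=3.0, cutoff_percent=10.0):
    """Global 1D gamma pass rate (%), brute-force search over all evaluated points.

    The dose-difference criterion and low-dose cutoff are relative to the
    reference maximum; only reference points at or above the cutoff are scored.
    """
    ref = np.asarray(ref_dose, dtype=float)
    ev = np.asarray(eval_dose, dtype=float)
    d_max = ref.max()
    if d_max <= 0:
        raise ValueError("reference dose must have a positive maximum")
    pos = np.arange(ref.size) * spacing_mm
    dd_abs = dd_percent / 100.0 * d_max
    scored = np.flatnonzero(ref >= cutoff_percent / 100.0 * d_max)
    passed = 0
    for i in scored:
        dist2 = ((pos - pos[i]) / dta_mm) ** 2
        dose2 = ((ev - ref[i]) / dd_abs) ** 2
        if np.sqrt(np.min(dist2 + dose2)) <= 1.0:   # gamma <= 1 passes
            passed += 1
    return 100.0 * passed / scored.size
```

A 1 mm shift of an otherwise identical profile still passes everywhere under 3 mm DTA, while a uniform 20% dose error fails everywhere. For the paired Student's t-test on DVH indices, `scipy.stats.ttest_rel` can be used if SciPy is available.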

3. Results

Sections 3.1 and 3.2 report the evaluation of the image quality of the synthetic CTs. Sections 3.3 and 3.4 present the dosimetric evaluation on the synthetic CTs.

3.1. Evaluation of the synthetic CT quality

Table 1 compares the HU MAE and time cost of the proposed approaches and four conventional DIR approaches (fast symmetric force, diffeomorphic, log domain diffeomorphic, and symmetric log domain diffeomorphic). As shown in Table 1, the proposed methods achieved better image quality, as indicated by a much smaller MAE than the conventional methods, with a mean time cost below 300 milliseconds, whereas all conventional DIR approaches yielded worse quality (larger MAE) and much longer computation times (at least 1,000 times longer than our methods).

Table 1.

Comparison of the MAE and time cost of the proposed approaches and four conventional DIR approaches. Each cell reports the mean ± standard deviation over the 10 test patients.

| Method | MAE (HU) | Time cost (s) |
|---|---|---|
| wMAE | 13.15±3.8 | (263.7±163)×10⁻³ |
| M+S | 17.52±5.8 | (265.8±190)×10⁻³ |
| FSF | 56.4±18.1 | 280.3±129.8 |
| DM | 100.4±25.5 | 283.5±125.2 |
| LD | 249.0±59.4 | 290.9±101.5 |
| SLD | 400.96±69.4 | 304.3±97.2 |

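Abbreviations: wMAE, model trained with the weighted MAE loss; M+S, model trained with both the weighted MAE and SSIM losses; FSF, fast symmetric force; DM, diffeomorphic; LD, log domain diffeomorphic; SLD, symmetric log domain diffeomorphic.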
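The "at least 1,000 times" statement can be checked from the mean time costs in Table 1 by taking the least favourable pairing, i.e., the fastest conventional method against the slowest proposed model. This short calculation uses the means only and ignores the large standard deviations.

```python
# Mean time costs from Table 1, in seconds.
proposed = {"wMAE": 263.7e-3, "M+S": 265.8e-3}
conventional = {"FSF": 280.3, "DM": 283.5, "LD": 290.9, "SLD": 304.3}

# Least favourable pairing: fastest conventional method vs. slowest proposed model.
min_speedup = min(conventional.values()) / max(proposed.values())
print(f"Minimum speed-up (means only): {min_speedup:.0f}x")
```

With these values the minimum ratio is about 1,055, consistent with the statement in the text.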

Furthermore, we derived the per-voxel absolute CT-number-difference volume histogram (CDVH), with the absolute CT number difference (in HU) on the horizontal axis and the normalized volume (in %) on the vertical axis (Figure 5), to characterize statistically the per-voxel absolute CT number difference between the verification CT and the synthetic CT. As shown in the figure, the majority of voxels had a small per-voxel absolute CT number difference (close to 0) between the verification CT and the synthetic CT. Only 5% of the voxels had a per-voxel absolute CT number difference larger than 46.7538 HU for the model trained with the weighted MAE only and 54.6117 HU for the model trained with both the weighted MAE and SSIM.

Figure 5.

The absolute per-voxel CT number difference volume histogram for a typical patient. The x-axis represents the absolute HU difference between the verification CT and the synthetic CT, and the y-axis represents the percentage of the volume. For both models, more than 70% of the volume is exactly reconstructed.

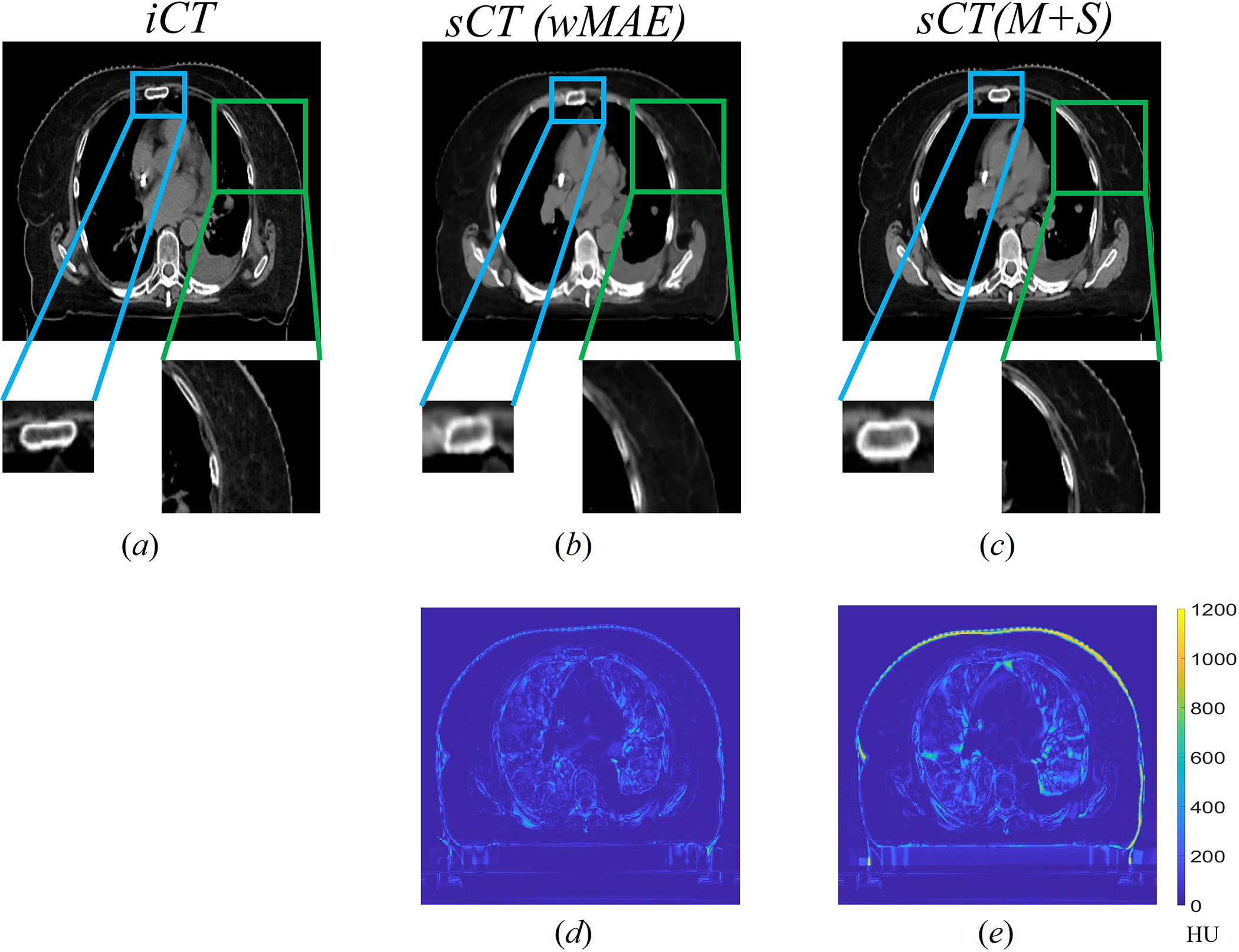

Figure 6 compares a typical CT slice of the verification CT (a) with the corresponding synthetic CTs generated by the wMAE model (b) and the M+S model (c). The differences between the verification CT slice and the synthetic CT slices are shown in Fig. 6(d) and (e), where brighter colors indicate greater differences. Overall, both synthetic CTs matched the verification CT well, and no obvious artifacts were identified. There were, however, some discrepancies between the synthetic CTs and the verification CT in some soft tissue regions. Comparing the two synthetic CTs, the one generated by the wMAE model achieved a lower average MAE and had much smoother edges (skin), although its image quality appeared slightly worse than that of the M+S model, since the sternum was blurred (blue rectangle in Figure 6) and the high-Z material in muscle was barely recovered (green rectangle in Figure 6).

Figure 6.

Comparison of a typical slice between the verification CT slice (a) and the corresponding synthetic CTs generated by the model trained with only wMAE (wMAE) (b) and the model trained with both wMAE and SSIM (M+S) (c). The CT HU display window level and width were −125HU and 1300HU, respectively. The edge of the sternum was more blurred in the synthetic CT generated by the wMAE model. The high-Z material was partially recovered in the synthetic CT generated by the M+S model, whereas the wMAE model did not recover it. Panels (d) and (e) show the absolute difference of the slice between the verification CT and the synthetic CTs generated by the wMAE and M+S models, respectively.

3.2. Evaluation of the propagated contours

Similar to the procedure in section 3.1, we generated a new set of contours by propagating the contours from the initial CT to the synthetic CT based on the DVFs derived by both trained models. We then measured the similarity between the propagated contours and the ground-truth contours on the verification CT using SSIM. The detailed results are shown in Table 2.

Table 2.

Comparison of the SSIMs of the selected structures generated by the models trained with wMAE and M+S, respectively. A higher SSIM value indicates better agreement between the propagated and ground-truth structures.

| Structure | wMAE | M+S |
|---|---|---|
| CTV | 0.989±0.013 | 0.993±0.009 |
| Right lung | 0.973±0.011 | 0.975±0.008 |
| Left lung | 0.976±0.008 | 0.977±0.007 |
| Esophagus | 0.997±0.001 | 0.997±0.001 |
| Heart | 0.987±0.005 | 0.989±0.005 |
| Cord | 0.998±0.001 | 0.998±0.001 |
| Average | 0.987±0.006 | 0.988±0.004 |

Table 2 shows that both proposed models generated propagated contours in excellent agreement with the ground-truth contours for the selected structures (CTV, right lung, left lung, esophagus, heart, and cord). The average SSIM scores were 0.987±0.006 for the wMAE model and 0.988±0.004 for the M+S model. The M+S model achieved SSIM scores equal to or higher than those of the wMAE model for all structures, with identical scores for the esophagus and cord. We also calculated the Dice similarity coefficients (DSCs) of the selected structures; the DSC considers only the overlap between the ground-truth and propagated structures. Both the left and right lungs had low DSCs (approximately 0.75) due to inter-fractional anatomy changes and irregular respiratory patterns, whereas the SSIM scores were not greatly affected by these anatomy changes.
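The contrast between DSC and SSIM on contour masks can be made concrete. The sketch below is a minimal NumPy example, not the authors' evaluation code. It computes the DSC, 2|A∩B|/(|A|+|B|), and a mean SSIM over uniform cubic windows. The window size of 7 and the standard constants C1 = (0.01L)², C2 = (0.03L)² with L = 1 are assumptions. Windows in which both masks are identical and uniform (for example, pure background) score exactly 1. This is one reason a volume-averaged SSIM can stay high while the DSC of a shifted structure drops. `sliding_window_view` is convenient for small test volumes; for full CT volumes a box filter would be far more memory-efficient.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dice(a, b):
    """Dice similarity coefficient of two binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def ssim_mean(x, y, win=7, data_range=1.0):
    """Mean SSIM over all valid uniform win^3 windows (assumed settings)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    axes = tuple(range(-x.ndim, 0))                     # the window axes
    xw = sliding_window_view(x, (win,) * x.ndim)
    yw = sliding_window_view(y, (win,) * y.ndim)
    mx, my = xw.mean(axis=axes), yw.mean(axis=axes)     # local means
    vx = (xw ** 2).mean(axis=axes) - mx ** 2            # local variances
    vy = (yw ** 2).mean(axis=axes) - my ** 2
    cov = (xw * yw).mean(axis=axes) - mx * my           # local covariance
    s = ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
    return float(s.mean())
```

For example, shifting a 10×10×10 cube by two voxels along one axis lowers its DSC to 0.8.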

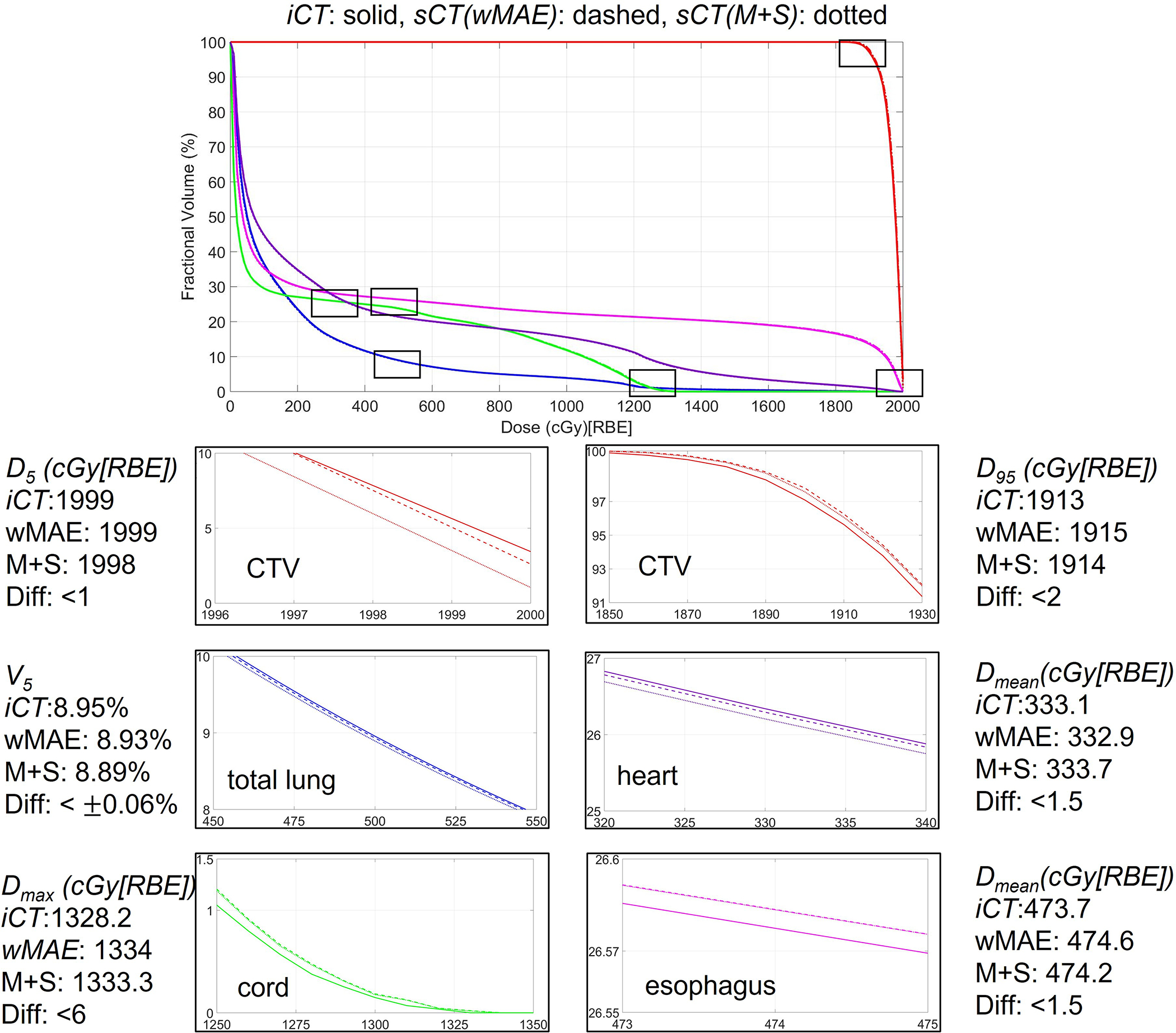

3.4. Comparison of the dose volume histograms (DVHs) and the clinically relevant DVH indices

We performed the forward dose calculation of the original plan on the ground-truth CT and the sCTs. We then generated dose volume histograms (DVHs) from these dose distributions for every testing patient. Figure 7 compares the DVHs generated from the dose distributions calculated on the ground-truth CT and the corresponding sCTs for a typical photon patient. The red, blue, purple, green, and magenta curves represent the CTV, total lung, heart, cord, and esophagus, respectively. The solid, dashed, and dotted lines represent the DVHs generated from the dose distributions calculated on the ground-truth CT and the sCTs of the two models, respectively. Zoomed-in subfigures and DVH index differences are also provided for better visualization. Visually, the DVH curves calculated on the ground-truth CT and the sCTs overlapped completely, with negligible differences visible only in the zoomed-in regions.
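A cumulative DVH and common DVH indices can be derived from a dose grid and a structure mask. The sketch below assumes dose in Gy on the same grid as a binary structure mask, with equal voxel volumes. Dx is taken as the (100 − x)th percentile of the structure dose, and Vx as the percentage of voxels receiving at least x Gy. The function names are hypothetical. Clinical systems typically add partial-volume handling at structure boundaries, which this sketch omits.

```python
import numpy as np

def cumulative_dvh(dose, structure_mask, bin_width=0.1):
    """Percentage of the structure receiving at least each dose level (Gy)."""
    d = dose[structure_mask.astype(bool)].ravel()
    levels = np.arange(0.0, d.max() + bin_width, bin_width)
    volume_pct = np.array([(d >= lvl).mean() * 100.0 for lvl in levels])
    return levels, volume_pct

def d_x(dose, structure_mask, x_percent):
    """Minimum dose received by the hottest x% of the structure, e.g. D95."""
    d = dose[structure_mask.astype(bool)].ravel()
    return float(np.percentile(d, 100.0 - x_percent))

def v_x(dose, structure_mask, dose_gy):
    """Percentage of the structure receiving at least dose_gy, e.g. V20 for lung."""
    d = dose[structure_mask.astype(bool)].ravel()
    return float((d >= dose_gy).mean() * 100.0)
```

Evaluating the same indices on the dose computed on the ground-truth CT and on each sCT gives paired values per patient, which is the kind of data summarized in Figure 8.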

Figure 7.

Comparison of the dose volume histograms (DVHs) of one typical patient, derived from the dose distributions calculated on the ground-truth CT and on the corresponding sCTs from the two models proposed in this study. In each figure, the solid, dashed, and dotted lines represent the DVHs generated from the dose distributions calculated on the ground-truth CT and the sCTs of the two models, respectively.

Figure 8 compares boxplots of the clinically relevant DVH indices of the ten testing patients. The indices were derived from the dose distributions calculated on the ground-truth CT and on the corresponding sCTs from the two proposed models. P-values are shown at the top of the boxplots. For all selected structures, the clinically relevant DVH indices derived from the dose distributions calculated on the sCTs were very similar to those calculated on the ground-truth CT.
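The statistical test behind the p-values in Figure 8 is not specified in this section. Purely as an illustration of one option for ten paired DVH indices (the ground-truth CT vs. the sCT for the same patient), the sketch below implements an exact two-sided paired permutation (sign-flip) test. This is a swapped-in technique; the paper may have used a different test. With n = 10 pairs there are only 2^10 = 1024 sign patterns, so the null distribution can be enumerated exactly. For much larger n, a random subset of sign patterns would be used instead.

```python
import itertools
import numpy as np

def paired_permutation_pvalue(a, b):
    """Exact two-sided sign-flip permutation test for paired samples.

    a, b: per-patient DVH indices (e.g. CTV D95 on the ground-truth CT and on the sCT).
    Under H0 each paired difference is equally likely to carry either sign.
    """
    diff = np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)
    observed = abs(diff.mean())
    n_extreme = 0
    n_total = 0
    for signs in itertools.product((1.0, -1.0), repeat=diff.size):
        stat = abs(np.mean(diff * np.asarray(signs)))
        if stat >= observed - 1e-12:    # small tolerance guards round-off ties
            n_extreme += 1
        n_total += 1
    return n_extreme / n_total
```

Identical index sets give p = 1.0. A constant, same-sign difference across all ten patients gives the smallest attainable value, 2/1024.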

Figure 8.

Comparison of the boxplots of the clinically relevant DVH indices of the ten testing patients, from the dose distributions calculated on the ground-truth CT and on the corresponding sCTs derived from the two proposed models (wMAE and M+S). The top of each boxplot shows the p-values of two statistical tests: the ground-truth CT vs. the wMAE sCT, and the ground-truth CT vs. the M+S sCT.

3.5. Comparison of the dose distributions using 3D Gamma analysis

We also used 3D Gamma analysis, with criteria of 3%/3mm/10% and 2%/2mm/10%, to compare the dose distributions calculated on the ground-truth CT and on the corresponding sCTs from the two proposed models (Table 3). For 3%/3mm/10%, the average 3D Gamma passing rate was above 98% for the wMAE model and above 96% for the M+S model. For 2%/2mm/10%, the average passing rate was above 97% for the wMAE model and above 94% for the M+S model. When the 10th testing patient was excluded, the wMAE model's average passing rate over the remaining nine patients was above 99% for 3%/3mm/10% and above 98% for 2%/2mm/10%. Moreover, the average 3D Gamma passing rate for patients treated with photon therapy was approximately 3% higher than that for patients treated with proton therapy. The wMAE model also obtained a higher average 3D Gamma passing rate than the M+S model.
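3D Gamma analysis combines a dose-difference criterion with a distance-to-agreement (DTA) criterion. For each reference voxel, γ = min over evaluated points of sqrt(r²/DTA² + ΔD²/(δ·Dmax)²), and the voxel passes when γ ≤ 1. The sketch below is a simplified brute-force version under stated assumptions, not the analysis software used in the study:

- dose differences use global normalization to the reference maximum;
- the low-dose cutoff (the "10%" in 3%/3mm/10%) is a percentage of that maximum;
- both doses are on the same grid;
- there is no sub-voxel interpolation, so the result is slightly more conservative than interpolating tools.

Because γ ≤ 1 requires r ≤ DTA, the search only needs offsets inside the DTA sphere when computing a passing rate. The function name and signature are hypothetical.

```python
import numpy as np

def gamma_pass_rate(ref, evl, spacing_mm, dose_pct=3.0, dta_mm=3.0, cutoff_pct=10.0):
    """Global 3D gamma passing rate on a shared voxel grid (no interpolation)."""
    ref = np.asarray(ref, dtype=np.float64)
    evl = np.asarray(evl, dtype=np.float64)
    d_max = ref.max()
    dd = dose_pct / 100.0 * d_max                      # global dose criterion
    # Voxel half-widths that cover the DTA sphere along each axis
    half = [int(np.ceil(dta_mm / s)) for s in spacing_mm]
    # NaN padding marks positions outside the evaluated volume
    padded = np.pad(evl, [(h, h) for h in half], mode="constant", constant_values=np.nan)
    best = np.full(ref.shape, np.inf)                  # running minimum of gamma^2
    nz, ny, nx = ref.shape
    for dz in range(-half[0], half[0] + 1):
        for dy in range(-half[1], half[1] + 1):
            for dx in range(-half[2], half[2] + 1):
                r2 = (dz * spacing_mm[0]) ** 2 + (dy * spacing_mm[1]) ** 2 + (dx * spacing_mm[2]) ** 2
                if r2 > dta_mm ** 2:
                    continue                           # gamma > 1 outside the DTA sphere
                shifted = padded[half[0] + dz: half[0] + dz + nz,
                                 half[1] + dy: half[1] + dy + ny,
                                 half[2] + dx: half[2] + dx + nx]
                g2 = r2 / dta_mm ** 2 + ((shifted - ref) / dd) ** 2
                np.fmin(best, g2, out=best)            # fmin ignores NaN (out-of-volume)
    evaluated = ref >= cutoff_pct / 100.0 * d_max      # low-dose threshold
    return float(np.mean(best[evaluated] <= 1.0))
```

Calling it with `dose_pct=2.0, dta_mm=2.0` gives the stricter 2%/2mm/10% variant.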

Table 3.

The 3D Gamma passing rates between the dose distributions calculated on the ground-truth CT and on the corresponding sCTs of the 10 testing patients, with criteria of 3%/3mm/10% and 2%/2mm/10%, for the wMAE and M+S models.

| Patient | 3%/3mm/10% (wMAE / M+S) | 2%/2mm/10% (wMAE / M+S) |
|---|---|---|
| #1 | 0.999 / 0.998 | 0.997 / 0.996 |
| #2* | 0.996 / 0.965 | 0.98 / 0.926 |
| #3 | 1.0 / 0.991 | 0.999 / 0.977 |
| #4 | 1.0 / 0.999 | 0.999 / 0.999 |
| #5 | 0.995 / 0.943 | 0.978 / 0.913 |
| #6* | 0.966 / 0.976 | 0.921 / 0.934 |
| #7 | 1.0 / 0.979 | 1.0 / 0.970 |
| #8 | 1.0 / 0.979 | 1.0 / 0.970 |
| #9 | 0.999 / 0.924 | 0.991 / 0.811 |
| #10* | 0.921 / 0.924 | 0.899 / 0.902 |
| Average | 0.986±0.026 / 0.963±0.029 | 0.977±0.036 / 0.945±0.062 |
| Average (photon) | 0.995±0.012 / 0.974±0.029 | 0.987±0.029 / 0.955±0.067 |
| Average (proton) | 0.971±0.043 / 0.944±0.020 | 0.953±0.047 / 0.907±0.007 |

Patients marked with an asterisk (*) were treated with proton therapy.

3.6. Ablation Study

Figure 9 shows the sCTs generated by models trained with different loss terms, together with the corresponding iCT. Figure 9 (a) and (b) show the sCTs generated by the models trained with only the SSIM loss term and only the MSE loss term, respectively; the setting in Figure 9 (b) is the one adopted by the original VoxelMorph model. Figure 9 (c) presents the sCT generated by the model trained with both the MSE and SSIM loss terms. Figure 9 (d) presents the sCT from the model trained with the weighted MAE loss term without the random mask strategy, and Figure 9 (e) is the iCT. Figure 9 (f)–(i) show the absolute differences between the sCTs and the iCT. None of the models in Figure 9 generated sCTs with better details than the results in Figure 6; for example, none of them reproduced the rectum or the cord correctly. We further evaluated the quality of the sCTs from the different models quantitatively. The sCTs shown in Figure 9 (a), (b), (c), and (d) achieved MAEs of 52.73 HU, 26.12 HU, 19.42 HU, and 22.64 HU, respectively (Table 4). In contrast, the wMAE and M+S models proposed in this work achieved MAEs of 13.15 HU and 17.52 HU, respectively (Table 1).

Figure 9.

Sample slices of the sCTs generated by the models trained with different loss terms, and the corresponding iCT (e). Panels (a) and (b) show sample slices of the sCTs generated by the models trained with only the SSIM loss term and only the MSE loss term, respectively. Panel (c) shows the sCT generated by the model trained with both the MSE and SSIM loss terms. Panel (d) shows the sCT generated by the model trained with the weighted MAE loss term without the random mask strategy. The CT display window level and width were −120 HU and 1300 HU, respectively. Panels (f)–(i) show the corresponding absolute differences between the sCTs and the iCT.

Table 4.

The MAE of the sCT generated by models trained with different loss terms.

| Loss terms | MAE (HU) |
|---|---|
| SSIM loss only | 52.73 |
| MSE loss only | 26.12 |
| MSE + SSIM | 19.42 |
| wMAE w/o random mask | 22.64 |

4. Discussion

In this study, we developed a VoxelMorph-based deep neural network for fast and accurate DIR in the radiotherapy of lung cancer. We trained the proposed network in two configurations, yielding two models, and validated both models comprehensively on the CT images of lung cancer patients. Although this study focused on lung cancer, the methods could potentially be generalized to other disease sites.

To alleviate the potential overfitting caused by limited data and low resolution, we introduced a random mask training strategy. We also added loss terms to the objective function (i.e., the weighted MAE and SSIM terms) to improve the quality of the sCT. An empirical study showed that a random mask of size 5×5×5 yielded the best performance. A mask that is too small cannot sufficiently mitigate the blur in the images. A mask that is too large may help mitigate the blur, but it introduces uncertainty into model training and can slow down or even collapse the training of the neural network. As for the loss terms, we used the weighted MAE to enforce voxel-to-voxel similarity, with a per-voxel weight proportional to the HU number. This helps to reduce high-frequency artifacts (e.g., artifacts in bone structures). Compared with the MSE loss term used by the original VoxelMorph model, the weighted MAE loss term greatly improves the quality of the sCT. The other additional loss term is SSIM, which enforces structural similarity. Dice similarity coefficients (DSCs)22,89,90 have been used extensively to evaluate structural similarity. SSIM, unlike the DSC, considers not only structural similarity but also the luminance and contrast of the images. This makes SSIM well suited to lung CT images, which often contain multiple structures with large variations in HU number and therefore diverse luminance and contrast.
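The two training ingredients described above can be illustrated with a short NumPy sketch; it is not the authors' code. The text states only that the per-voxel weight is proportional to the HU number and that the mask is 5×5×5. The following choices are therefore all assumptions:

- an HU offset of 1024, which keeps air weights near zero but non-negative;
- a small epsilon added to every weight;
- normalization by the sum of the weights;
- applying the mask by setting one randomly placed cube of a training input to a fill value.

`hu_weights`, `weighted_mae`, and `apply_random_mask` are hypothetical names.

```python
import numpy as np

def hu_weights(target_hu, offset=1024.0, eps=1e-3):
    """Per-voxel weights increasing linearly with HU (assumed form of 'proportional to HU').

    The offset maps air (about -1000 HU) to a near-zero weight and dense bone
    (about +1000 HU) to a weight of about 2.
    """
    return np.clip(target_hu + offset, 0.0, None) / offset + eps

def weighted_mae(pred_hu, target_hu):
    """Weighted mean absolute error: errors in dense tissue count more than in air."""
    w = hu_weights(target_hu)
    return float(np.sum(w * np.abs(pred_hu - target_hu)) / np.sum(w))

def apply_random_mask(volume, size=5, rng=None, fill=0.0):
    """Return a copy of `volume` with one randomly placed size^3 cube set to `fill`.

    Hypothetical augmentation: where and how often the paper applies its
    5x5x5 mask is not described in this section.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(volume, dtype=np.float64, copy=True)
    corner = [int(rng.integers(0, n - size + 1)) for n in out.shape]
    out[tuple(slice(c, c + size) for c in corner)] = fill
    return out
```

Under this weighting, a 100 HU error in bone is penalized roughly 80 times more than the same error in air. This matches the stated aim of suppressing artifacts in bone structures.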

We trained the proposed neural network in two configurations: one with only the weighted MAE loss term (wMAE model) and one with both the weighted MAE and SSIM loss terms (M+S model). During validation, the M+S model yielded slightly better sCT image quality than the wMAE model. The dosimetric evaluation, however, showed the opposite result. It compared the clinically relevant DVH indices, and performed 3D Gamma analysis, between the dose distributions calculated on the ground-truth CT and on the corresponding sCTs. The wMAE model had slightly better agreement of the DVH indices with the ground truth and a higher 3D Gamma passing rate. This indicates that sCT quality needs to be evaluated thoroughly and cannot rely on a single criterion. It further suggests that high image quality, although clinically relevant in radiation therapy, does not necessarily lead to favorable results in dose calculation. How to further improve the dosimetric performance of the proposed network is an interesting and challenging direction for future research.
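The two configurations differ only in their objective. The sketch below shows both, under assumptions not stated in this section:

- the weighted MAE uses an assumed HU-proportional weighting (offset 1024 HU);
- SSIM is computed on HU values linearly rescaled to [0, 1] over an assumed range of −1024 to 3071 HU, with uniform 7×7×7 windows;
- the SSIM term enters as (1 − SSIM), scaled by a hypothetical weight `ssim_weight`.

NumPy is used only for readability. In training, the same expressions would be written with the differentiable tensor operations of the deep-learning framework. VoxelMorph-style models usually also add a DVF smoothness penalty, which is omitted here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def normalize_hu(hu, lo=-1024.0, hi=3071.0):
    """Linearly rescale HU to [0, 1] over an assumed range."""
    return np.clip((hu - lo) / (hi - lo), 0.0, 1.0)

def weighted_mae(pred_hu, target_hu, offset=1024.0, eps=1e-3):
    """MAE with per-voxel weights growing linearly with the target HU (assumed form)."""
    w = np.clip(target_hu + offset, 0.0, None) / offset + eps
    return float(np.sum(w * np.abs(pred_hu - target_hu)) / np.sum(w))

def mean_ssim(x, y, win=7, data_range=1.0):
    """Mean SSIM over all valid uniform win^3 windows."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    axes = tuple(range(-x.ndim, 0))
    xw = sliding_window_view(x, (win,) * x.ndim)
    yw = sliding_window_view(y, (win,) * y.ndim)
    mx, my = xw.mean(axis=axes), yw.mean(axis=axes)
    vx = (xw ** 2).mean(axis=axes) - mx ** 2
    vy = (yw ** 2).mean(axis=axes) - my ** 2
    cov = (xw * yw).mean(axis=axes) - mx * my
    s = ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
    return float(s.mean())

def training_loss(pred_hu, target_hu, use_ssim, ssim_weight=100.0):
    """wMAE configuration when use_ssim is False, M+S configuration otherwise."""
    loss = weighted_mae(pred_hu, target_hu)
    if use_ssim:
        # (1 - SSIM) is zero for identical images and grows as structure diverges
        loss += ssim_weight * (1.0 - mean_ssim(normalize_hu(pred_hu), normalize_hu(target_hu)))
    return loss
```

Because mean SSIM never exceeds 1, the M+S objective is always at least as large as the wMAE objective for the same prediction. The extra term only adds pressure toward structural agreement.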

The 3D Gamma passing rates reported in Table 3 are promising. They exceed the clinical requirements suggested by the American Association of Physicists in Medicine (AAPM) Task Group (TG) 218 report92, which suggests that the generated sCTs could be reliably used in clinical applications such as ART. Nevertheless, further improvement is needed, and several factors may contribute to the less-than-ideal results in some cases. One factor is the different physical characteristics of proton and photon therapy: as shown in Table 3, proton therapy can be more sensitive than photon therapy to the same variations in HU numbers. Another possible factor is the challenging CT dataset used in this study. The two CT images of each pair were acquired at least several weeks apart, during which inter-fractional anatomy changes can be large, irregular, and unpredictable. In addition, the tumor may grow or shrink and the patient's weight may change between the two scans. These changes introduce additional ambiguity for DIR, because information may appear in or disappear from one image relative to the other. Figure 10 compares one CT slice through the middle of the tumor across the ground-truth CT and the sCTs for a case with relatively poor performance (the 10th testing patient in Table 3); the circle highlights the CTV in each subfigure. The CTV in the generated sCTs has larger high-density regions than in the ground-truth CT. This is possibly because the patient had a very aggressive tumor phenotype, with substantial tumor growth between the two CT acquisitions. It may explain the worse agreement of the DVH indices and the lower 3D Gamma passing rates for this patient. The rates were 92.11% at 3mm/3%/10% and 89.92% at 2mm/2%/10% for the wMAE model, and 92.30% at 3mm/3%/10% and 90.21% at 2mm/2%/10% for the M+S model.
Moreover, the proposed DIR approach may have limitations for more challenging disease sites with complex shapes, such as ovarian cancer94, and for registration between different imaging modalities95. Further investigation is needed to address these issues.

Figure 10.

Comparison of one CT slice through the middle of the CTV across the ground-truth CT and the sCTs for a case with relatively poor performance of the proposed methods (the 10th testing patient in Table 3). The circle highlights the CTV in each subfigure.

5. Conclusion

A deep neural network-based DIR approach was proposed and shown to be accurate and efficient for registering the initial and verification CTs of lung cancer patients.

Acknowledgments

This research was supported by the National Cancer Institute (NCI) Career Developmental Award K25CA168984, Arizona Biomedical Research Commission Investigator Award, the Lawrence W. and Marilyn W. Matteson Fund for Cancer Research, and the Kemper Marley Foundation.

Footnotes

Conflicts of Interest Notification

Terence T. Sio provides strategic and scientific recommendations as a member of the Advisory Board and speaker for Novocure, Inc., Catalyst Pharmaceuticals, Inc. and Galera Pharmaceutics, which are not in any way associated with the content presented in this manuscript.

Ethical considerations

This research was approved by the Mayo Clinic Arizona institutional review board (IRB, 13-005709). Informed consent was waived under the IRB protocol. Only CT image and dose-volume data were used in this study. All patient-related health information was removed prior to analysis and publication.

Reference

- 1.Oh S, Kim S. Deformable image registration in radiation therapy [published online ahead of print 2017/07/18]. Radiat Oncol J. 2017;35(2):101–111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Thor M, Petersen JB, Bentzen L, Hoyer M, Muren LP. Deformable image registration for contour propagation from CT to cone-beam CT scans in radiotherapy of prostate cancer [published online ahead of print 2011/07/20]. Acta oncologica (Stockholm, Sweden). 2011;50(6):918–925. [DOI] [PubMed] [Google Scholar]

- 3.Hautvast G, Lobregt S, Breeuwer M, Gerritsen F. Automatic contour propagation in cine cardiac magnetic resonance images [published online ahead of print 2006/11/23]. IEEE Trans Med Imaging. 2006;25(11):1472–1482. [DOI] [PubMed] [Google Scholar]

- 4.Söhn M, Birkner M, Yan D, Alber M. Modelling individual geometric variation based on dominant eigenmodes of organ deformation: implementation and evaluation [published online ahead of print 2005/12/08]. Phys Med Biol. 2005;50(24):5893–5908. [DOI] [PubMed] [Google Scholar]

- 5.Nguyen TN, Moseley JL, Dawson LA, Jaffray DA, Brock KK. Adapting liver motion models using a navigator channel technique [published online ahead of print 2009/05/29]. Med Phys. 2009;36(4):1061–1073. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Budiarto E, Keijzer M, Storchi PR, et al. A population-based model to describe geometrical uncertainties in radiotherapy: applied to prostate cases [published online ahead of print 2011/01/25]. Phys Med Biol. 2011;56(4):1045–1061. [DOI] [PubMed] [Google Scholar]

- 7.Oh S, Jaffray D, Cho YB. A novel method to quantify and compare anatomical shape: application in cervix cancer radiotherapy [published online ahead of print 2014/05/03]. Phys Med Biol. 2014;59(11):2687–2704. [DOI] [PubMed] [Google Scholar]

- 8.Guerrero T, Sanders K, Noyola-Martinez J, et al. Quantification of regional ventilation from treatment planning CT [published online ahead of print 2005/06/07]. International journal of radiation oncology, biology, physics. 2005;62(3):630–634. [DOI] [PubMed] [Google Scholar]

- 9.Yaremko BP, Guerrero TM, Noyola-Martinez J, et al. Reduction of normal lung irradiation in locally advanced non-small-cell lung cancer patients, using ventilation images for functional avoidance [published online ahead of print 2007/04/03]. International journal of radiation oncology, biology, physics. 2007;68(2):562–571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Yamamoto T, Kabus S, von Berg J, Lorenz C, Keall PJ. Impact of four-dimensional computed tomography pulmonary ventilation imaging-based functional avoidance for lung cancer radiotherapy [published online ahead of print 2010/07/22]. International journal of radiation oncology, biology, physics. 2011;79(1):279–288. [DOI] [PubMed] [Google Scholar]

- 11.Qi XS, Santhanam A, Neylon J, et al. Near Real-Time Assessment of Anatomic and Dosimetric Variations for Head and Neck Radiation Therapy via Graphics Processing Unit-based Dose Deformation Framework [published online ahead of print 2015/04/08]. International journal of radiation oncology, biology, physics. 2015;92(2):415–422. [DOI] [PubMed] [Google Scholar]

- 12.Sharma M, Weiss E, Siebers JV. Dose deformation-invariance in adaptive prostate radiation therapy: implication for treatment simulations [published online ahead of print 2012/12/04]. Radiotherapy and oncology : journal of the European Society for Therapeutic Radiology and Oncology. 2012;105(2):207–213. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Velec M, Moseley JL, Eccles CL, et al. Effect of breathing motion on radiotherapy dose accumulation in the abdomen using deformable registration [published online ahead of print 2010/08/25]. International journal of radiation oncology, biology, physics. 2011;80(1):265–272. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Yan D, Vicini F, Wong J, Martinez A. Adaptive radiation therapy [published online ahead of print 1997/01/01]. Phys Med Biol. 1997;42(1):123–132. [DOI] [PubMed] [Google Scholar]

- 15.Schaly B, Kempe JA, Bauman GS, Battista JJ, Van Dyk J. Tracking the dose distribution in radiation therapy by accounting for variable anatomy [published online ahead of print 2004/04/09]. Phys Med Biol. 2004;49(5):791–805. [DOI] [PubMed] [Google Scholar]

- 16.Christensen GE, Carlson B, Chao KS, et al. Image-based dose planning of intracavitary brachytherapy: registration of serial-imaging studies using deformable anatomic templates [published online ahead of print 2001/08/23]. International journal of radiation oncology, biology, physics. 2001;51(1):227–243. [DOI] [PubMed] [Google Scholar]

- 17.Yan D, Jaffray DA, Wong JW. A model to accumulate fractionated dose in a deforming organ [published online ahead of print 1999/05/29]. International journal of radiation oncology, biology, physics. 1999;44(3):665–675. [DOI] [PubMed] [Google Scholar]

- 18.Vercauteren T, Pennec X, Perchant A, Ayache N. Non-parametric diffeomorphic image registration with the demons algorithm. Paper presented at: International Conference on Medical Image Computing and Computer-Assisted Intervention2007. [DOI] [PubMed] [Google Scholar]

- 19.Zhong H, Kim J, Li H, Nurushev T, Movsas B, Chetty IJ. A finite element method to correct deformable image registration errors in low-contrast regions [published online ahead of print 2012/05/15]. Phys Med Biol. 2012;57(11):3499–3515. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Gu X, Dong B, Wang J, et al. A contour-guided deformable image registration algorithm for adaptive radiotherapy [published online ahead of print 2013/02/28]. Phys Med Biol. 2013;58(6):1889–1901. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Nithiananthan S, Schafer S, Mirota DJ, et al. Extra-dimensional Demons: a method for incorporating missing tissue in deformable image registration [published online ahead of print 2012/09/11]. Med Phys. 2012;39(9):5718–5731. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Lawson JD, Schreibmann E, Jani AB, Fox T. Quantitative evaluation of a cone-beam computed tomography-planning computed tomography deformable image registration method for adaptive radiation therapy [published online ahead of print 2008/05/02]. J Appl Clin Med Phys. 2007;8(4):96–113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Kierkels RGJ, den Otter LA, Korevaar EW, et al. An automated, quantitative, and case-specific evaluation of deformable image registration in computed tomography images [published online ahead of print 2017/11/29]. Phys Med Biol. 2018;63(4):045026. [DOI] [PubMed] [Google Scholar]

- 24.Mencarelli A, van Kranen SR, Hamming-Vrieze O, et al. Deformable image registration for adaptive radiation therapy of head and neck cancer: accuracy and precision in the presence of tumor changes [published online ahead of print 2014/08/26]. Int J Radiat Oncol Biol Phys. 2014;90(3):680–687. [DOI] [PubMed] [Google Scholar]

- 25.Jacobson TJ, Murphy MJ. Optimized knot placement for B-splines in deformable image registration [published online ahead of print 2011/09/21]. Med Phys. 2011;38(8):4579–4582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Shekhar R, Lei P, Castro-Pareja CR, Plishker WL, D’Souza WD. Automatic segmentation of phase-correlated CT scans through nonrigid image registration using geometrically regularized free-form deformation. Medical Physics. 2007;34(7):3054–3066. [DOI] [PubMed] [Google Scholar]

- 27.Reed VK, Woodward WA, Zhang LF, et al. Automatic Segmentation of Whole Breast Using Atlas Approach and Deformable Image Registration. Int J Radiat Oncol. 2009;73(5):1493–1500. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Wang H, Dong L, O’Daniel J, et al. Validation of an accelerated ‘demons’ algorithm for deformable image registration in radiation therapy. Physics in Medicine and Biology. 2005;50(12):2887–2905. [DOI] [PubMed] [Google Scholar]

- 29.Nocedal J Updating Quasi-Newton Matrices with Limited Storage. Mathematics of Computation. 1980;35(151):773–782. [Google Scholar]

- 30.Ong CL, Verbakel WF, Cuijpers JP, Slotman BJ, Lagerwaard FJ, Senan S. Stereotactic radiotherapy for peripheral lung tumors: a comparison of volumetric modulated arc therapy with 3 other delivery techniques. Radiotherapy and oncology. 2010;97(3):437–442. [DOI] [PubMed] [Google Scholar]

- 31.McGrath SD, Matuszak MM, Yan D, Kestin LL, Martinez AA, Grills IS. Volumetric modulated arc therapy for delivery of hypofractionated stereotactic lung radiotherapy: A dosimetric and treatment efficiency analysis. Radiotherapy and Oncology. 2010;95(2):153–157. [DOI] [PubMed] [Google Scholar]

- 32.Chan OS, Lee MC, Hung AW, Chang AT, Yeung RM, Lee AW. The superiority of hybrid-volumetric arc therapy (VMAT) technique over double arcs VMAT and 3D-conformal technique in the treatment of locally advanced non-small cell lung cancer–A planning study. Radiotherapy and Oncology. 2011;101(2):298–302. [DOI] [PubMed] [Google Scholar]

- 33.Chun SG, Hu C, Choy H, et al. Impact of intensity-modulated radiation therapy technique for locally advanced non-small-cell lung cancer: a secondary analysis of the NRG Oncology RTOG 0617 randomized clinical trial. Journal of clinical oncology. 2017;35(1):56–62. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Liu W, Patel SH, Shen JJ, et al. Robustness quantification methods comparison in volumetric modulated arc therapy to treat head and neck cancer. Practical radiation oncology. 2016;6(6):e269–e275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.van de Water TA, Bijl HP, Schilstra C, Pijls-Johannesma M, Langendijk JA. The potential benefit of radiotherapy with protons in head and neck cancer with respect to normal tissue sparing: a systematic review of literature [published online ahead of print 2011/02/26]. Oncologist. 2011;16(3):366–377. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Lin A, Swisher-McClure S, Millar LB, et al. Proton therapy for head and neck cancer: current applications and future directions. Translational Cancer Research. 2012;1(4):255–263. [Google Scholar]

- 37.Frank SJ, Cox JD, Gillin M, et al. Multifield optimization intensity modulated proton therapy for head and neck tumors: a translation to practice [published online ahead of print 2014/05/29]. International journal of radiation oncology, biology, physics. 2014;89(4):846–853. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Schild SE, Rule WG, Ashman JB, et al. Proton beam therapy for locally advanced lung cancer: A review [published online ahead of print 2014/10/11]. World J Clin Oncol. 2014;5(4):568–575. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Bhangoo RS, DeWees TA, Yu NY, et al. Acute Toxicities and Short-Term Patient Outcomes After Intensity-Modulated Proton Beam Radiation Therapy or Intensity-Modulated Photon Radiation Therapy for Esophageal Carcinoma: A Mayo Clinic Experience [published online ahead of print 2020/10/22]. Adv Radiat Oncol. 2020;5(5):871–879. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Bhangoo RS, Mullikin TC, Ashman JB, et al. Intensity Modulated Proton Therapy for Hepatocellular Carcinoma: Initial Clinical Experience [published online ahead of print 2021/08/20]. Adv Radiat Oncol. 2021;6(4):100675. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Yang Y, Muller OM, Shiraishi S, et al. Empirical Relative Biological Effectiveness (RBE) for Mandible Osteoradionecrosis (ORN) in Head and Neck Cancer Patients Treated With Pencil-Beam-Scanning Proton Therapy (PBSPT): A Retrospective, Case-Matched Cohort Study [published online ahead of print 2022/03/22]. Front Oncol. 2022;12:843175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Yu NY, DeWees TA, Liu C, et al. Early Outcomes of Patients With Locally Advanced Non-small Cell Lung Cancer Treated With Intensity-Modulated Proton Therapy Versus Intensity-Modulated Radiation Therapy: The Mayo Clinic Experience [published online ahead of print 2020/06/13]. Adv Radiat Oncol. 2020;5(3):450–458. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Yu NY, DeWees TA, Voss MM, et al. Cardiopulmonary Toxicity Following Intensity-Modulated Proton Therapy (IMPT) vs. Intensity-Modulated Radiation Therapy (IMRT) for Stage III Non-Small Cell Lung Cancer. Clinical Lung Cancer. 2022. doi: 10.1016/j.cllc.2022.07.017. [DOI] [PubMed] [Google Scholar]

- 44.Lomax AJ. Intensity modulated proton therapy and its sensitivity to treatment uncertainties 1: the potential effects of calculational uncertainties [published online ahead of print 2008/02/12]. Phys Med Biol. 2008;53(4):1027–1042. [DOI] [PubMed] [Google Scholar]

- 45.Lomax AJ. Intensity modulated proton therapy and its sensitivity to treatment uncertainties 2: the potential effects of inter-fraction and inter-field motions [published online ahead of print 2008/02/12]. Phys Med Biol. 2008;53(4):1043–1056. [DOI] [PubMed] [Google Scholar]

- 46.Lomax AJ, Pedroni E, Rutz H, Goitein G. The clinical potential of intensity modulated proton therapy [published online ahead of print 2004/10/07]. Zeitschrift fur medizinische Physik. 2004;14(3):147–152. [DOI] [PubMed] [Google Scholar]

- 47.Pflugfelder D, Wilkens JJ, Oelfke U. Worst case optimization: a method to account for uncertainties in the optimization of intensity modulated proton therapy [published online ahead of print 2008/03/28]. Phys Med Biol. 2008;53(6):1689–1700. [DOI] [PubMed] [Google Scholar]

- 48.Unkelbach J, Bortfeld T, Martin BC, Soukup M. Reducing the sensitivity of IMPT treatment plans to setup errors and range uncertainties via probabilistic treatment planning [published online ahead of print 2009/02/25]. Med Phys. 2009;36(1):149–163. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Fredriksson A, Forsgren A, Hardemark B. Minimax optimization for handling range and setup uncertainties in proton therapy [published online ahead of print 2011/04/28]. Med Phys. 2011;38(3):1672–1684. [DOI] [PubMed] [Google Scholar]

- 50.Liu W, Zhang X, Li Y, Mohan R. Robust optimization in intensity-modulated proton therapy. Med Phys. 2012;39:1079–1091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Liu W, Li Y, Li X, Cao W, Zhang X. Influence of robust optimization in intensity-modulated proton therapy with different dose delivery techniques [published online ahead of print 2012/07/05]. Med Phys. 2012;39(6):3089–3101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Chen W, Unkelbach J, Trofimov A, et al. Including robustness in multi-criteria optimization for intensity-modulated proton therapy [published online ahead of print 2012/01/10]. Phys Med Biol. 2012;57(3):591–608. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Liu W, Liao Z, Schild SE, et al. Impact of respiratory motion on worst-case scenario optimized intensity modulated proton therapy for lung cancers [published online ahead of print 2014/11/22]. Pract Radiat Oncol. 2015;5(2):e77–86. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Unkelbach J, Alber M, Bangert M, et al. Robust radiotherapy planning [published online ahead of print 2018/11/13]. Phys Med Biol. 2018;63(22):22TR02. [DOI] [PubMed] [Google Scholar]

- 55.An Y, Liang J, Schild SE, Bues M, Liu W. Robust treatment planning with conditional value at risk chance constraints in intensity-modulated proton therapy [published online ahead of print 2017/01/04]. Med Phys. 2017;44(1):28–36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.An Y, Shan J, Patel SH, et al. Robust intensity-modulated proton therapy to reduce high linear energy transfer in organs at risk [published online ahead of print 2017/10/05]. Med Phys. 2017;44(12):6138–6147. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Liu C, Patel SH, Shan J, et al. Robust Optimization for Intensity Modulated Proton Therapy to Redistribute High Linear Energy Transfer from Nearby Critical Organs to Tumors in Head and Neck Cancer [published online ahead of print 2020/01/29]. International journal of radiation oncology, biology, physics. 2020;107(1):181–193. [DOI] [PubMed] [Google Scholar]

- 58.Liu C, Yu NY, Shan J, et al. Technical Note: Treatment planning system (TPS) approximations matter - comparing intensity-modulated proton therapy (IMPT) plan quality and robustness between a commercial and an in-house developed TPS for nonsmall cell lung cancer (NSCLC) [published online ahead of print 2019/09/10]. Med Phys. 2019;46(11):4755–4762. [DOI] [PubMed] [Google Scholar]

- 59.Liu C, Schild SE, Chang JY, et al. Impact of Spot Size and Spacing on the Quality of Robustly Optimized Intensity Modulated Proton Therapy Plans for Lung Cancer [published online ahead of print 2018/03/20]. International journal of radiation oncology, biology, physics. 2018;101(2):479–489. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Liu W, Inventor. System and Method For Robust Intensity-modulated Proton Therapy Planning. 09/February/2014, 2014. [Google Scholar]

- 61.Liu W, ed Robustness quantification and robust optimization in intensity-modulated proton therapy. Springer; 2015. Rath A, Sahoo N, eds. Particle Radiotherapy: Emerging Technology for Treatment of Cancer. [Google Scholar]

- 62.Liu W, Frank SJ, Li X, et al. Effectiveness of robust optimization in intensity-modulated proton therapy planning for head and neck cancers [published online ahead of print 2013/05/03]. Med Phys. 2013;40(5):051711. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63. Liu W, Frank SJ, Li X, Li Y, Zhu RX, Mohan R. PTV-based IMPT optimization incorporating planning risk volumes vs robust optimization [published online ahead of print 2013/02/08]. Med Phys. 2013;40(2):021709.

- 64. Liu W, Schild SE, Chang JY, et al. Exploratory Study of 4D versus 3D Robust Optimization in Intensity Modulated Proton Therapy for Lung Cancer [published online ahead of print 2016/01/05]. Int J Radiat Oncol Biol Phys. 2016;95(1):523–533.

- 65. Shan J, Sio TT, Liu C, Schild SE, Bues M, Liu W. A novel and individualized robust optimization method using normalized dose interval volume constraints (NDIVC) for intensity-modulated proton radiotherapy [published online ahead of print 2018/11/06]. Med Phys. 2018. doi: 10.1002/mp.13276.

- 66. Shan J, An Y, Bues M, Schild SE, Liu W. Robust optimization in IMPT using quadratic objective functions to account for the minimum MU constraint [published online ahead of print 2017/11/18]. Med Phys. 2018;45(1):460–469.

- 67. Feng H, Shan J, Ashman JB, et al. 4D robust optimization in small spot intensity-modulated proton therapy (IMPT) for distal esophageal carcinoma. Med Phys.

- 68. Feng H, Sio TT, Rule WG, et al. Beam angle comparison for distal esophageal carcinoma patients treated with intensity-modulated proton therapy [published online ahead of print 2020/10/16]. J Appl Clin Med Phys. 2020;21(11):141–152.

- 69. Li Y, Liu W, Li X, Quan E, Zhang X. Toward a thorough Evaluation of IMPT Plan Sensitivity to Uncertainties: Revisit the Worst-Case Analysis with An Exhaustively Sampling Approach. Med Phys. 2011;38(6):3853.

- 70. Liu C, Bhangoo RS, Sio TT, et al. Dosimetric comparison of distal esophageal carcinoma plans for patients treated with small-spot intensity-modulated proton versus volumetric-modulated arc therapies [published online ahead of print 2019/05/22]. J Appl Clin Med Phys. 2019;20(7):15–27.

- 71. Liu C, Sio TT, Deng W, et al. Small-spot intensity-modulated proton therapy and volumetric-modulated arc therapies for patients with locally advanced non-small-cell lung cancer: A dosimetric comparative study [published online ahead of print 2018/10/18]. J Appl Clin Med Phys. 2018;19(6):140–148.

- 72. Tryggestad EJ, Liu W, Pepin MD, Hallemeier CL, Sio TT. Managing treatment-related uncertainties in proton beam radiotherapy for gastrointestinal cancers [published online ahead of print 2020/03/17]. J Gastrointest Oncol. 2020;11(1):212–224.

- 73. Zaghian M, Cao W, Liu W, et al. Comparison of linear and nonlinear programming approaches for “worst case dose” and “minmax” robust optimization of intensity-modulated proton therapy dose distributions [published online ahead of print 2017/03/17]. J Appl Clin Med Phys. 2017;18(2):15–25.

- 74. Zaghian M, Lim G, Liu W, Mohan R. An Automatic Approach for Satisfying Dose-Volume Constraints in Linear Fluence Map Optimization for IMPT [published online ahead of print 2014/12/17]. J Cancer Ther. 2014;5(2):198–207.

- 75. Matney J, Park PC, Bluett J, et al. Effects of respiratory motion on passively scattered proton therapy versus intensity modulated photon therapy for stage III lung cancer: are proton plans more sensitive to breathing motion? [published online ahead of print 2013/10/01]. Int J Radiat Oncol Biol Phys. 2013;87(3):576–582.

- 76. Matney JE, Park PC, Li H, et al. Perturbation of water-equivalent thickness as a surrogate for respiratory motion in proton therapy [published online ahead of print 2016/04/14]. J Appl Clin Med Phys. 2016;17(2):368–378.

- 77. Liu W, Patel SH, Harrington DP, et al. Exploratory study of the association of volumetric modulated arc therapy (VMAT) plan robustness with local failure in head and neck cancer [published online ahead of print 2017/05/16]. J Appl Clin Med Phys. 2017;18(4):76–83.

- 78. Li H, Zhang X, Park P, et al. Robust optimization in intensity-modulated proton therapy to account for anatomy changes in lung cancer patients [published online ahead of print 2015/02/25]. Radiother Oncol. 2015;114(3):367–372.

- 79. Bibault JE, Arsene-Henry A, Durdux C, et al. [Adaptive radiation therapy for non-small cell lung cancer] [published online ahead of print 2015/09/05]. Cancer Radiother. 2015;19(6–7):458–462.

- 80. Yan D, Wong J, Vicini F, et al. Adaptive modification of treatment planning to minimize the deleterious effects of treatment setup errors [published online ahead of print 1997/04/01]. Int J Radiat Oncol Biol Phys. 1997;38(1):197–206.

- 81. Yang X, Kwitt R, Styner M, Niethammer M. Quicksilver: Fast predictive image registration - A deep learning approach [published online ahead of print 2017/07/15]. Neuroimage. 2017;158:378–396.

- 82. Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, Dalca AV. VoxelMorph: A Learning Framework for Deformable Medical Image Registration [published online ahead of print 2019/02/05]. IEEE Trans Med Imaging. 2019. doi: 10.1109/TMI.2019.2897538.

- 83. de Vos BD, Berendsen FF, Viergever MA, Sokooti H, Staring M, Isgum I. A deep learning framework for unsupervised affine and deformable image registration [published online ahead of print 2018/12/24]. Med Image Anal. 2019;52:128–143.

- 84. Zhao S, Dong Y, Chang EI, Xu Y. Recursive cascaded networks for unsupervised medical image registration. Paper presented at: Proceedings of the IEEE/CVF International Conference on Computer Vision; 2019.

- 85. Subhas N, Primak AN, Obuchowski NA, et al. Iterative metal artifact reduction: evaluation and optimization of technique [published online ahead of print 2014/08/31]. Skeletal Radiol. 2014;43(12):1729–1735.

- 86. Avants BB, Tustison NJ, Stauffer M, Song G, Wu BH, Gee JC. The Insight ToolKit image registration framework. Front Neuroinform. 2014;8.

- 87. Maas AL, Hannun AY, Ng AY. Rectifier nonlinearities improve neural network acoustic models. Paper presented at: Proc. ICML; 2013.

- 88. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity [published online ahead of print 2004/09/21]. IEEE Trans Image Process. 2004;13(4):600–612.

- 89. Sorensen TA. A method of establishing groups of equal amplitude in plant sociology based on similarity of species content and its application to analyses of the vegetation on Danish commons. Biol Skr. 1948;5:1–34.

- 90. Dice LR. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302.

- 91. Sharp GC, Li R, Wolfgang J, et al. Plastimatch: an open source software suite for radiotherapy image processing. Paper presented at: Proceedings of the XVIth International Conference on the Use of Computers in Radiotherapy (ICCR); Amsterdam, Netherlands; 2010.

- 92. Miften M, Olch A, Mihailidis D, et al. Tolerance limits and methodologies for IMRT measurement-based verification QA: recommendations of AAPM Task Group No. 218. Med Phys. 2018;45(4):e53–e83.

- 93. He K, Chen X, Xie S, Li Y, Dollár P, Girshick R. Masked Autoencoders Are Scalable Vision Learners. Paper presented at: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 18–24 June 2022.

- 94. Nag M, Liu J, Liu L, et al. Body location embedded 3D U-Net (BLE-U-Net) for ovarian cancer ascites segmentation on CT scans. Vol 12567. SPIE; 2023.

- 95. Liu L, Liu J, Nag MK, et al. Improved Multi-modal Patch Based Lymphoma Segmentation with Negative Sample Augmentation and Label Guidance on PET/CT Scans. Cham; 2022.