Abstract

Objective To evaluate the effectiveness of an educational intervention in adolescent health designed for general practitioners in accordance with evidence-based practice in continuing medical education.

Design Randomized controlled trial with baseline testing and follow-up at 7 and 13 months.

Setting The intervention was delivered in local community settings to general practitioners in metropolitan Melbourne, Australia.

Participants A total of 108 self-selected general practitioners.

Intervention A multifaceted educational program (2.5 hours per week for 6 weeks) in the principles of adolescent health care, followed 6 weeks later by a 2-hour session of case discussion and debriefing.

Outcome measures Objective ratings of videotaped consultations with standardized adolescent patients and self-completion questionnaires were used to measure general practitioners' knowledge, skill, and self-perceived competency; satisfaction with the program; and self-reported change in practice.

Results 103 of 108 physicians (95%) completed all phases of the intervention and evaluation protocol. The intervention group showed significantly greater improvements than the control group in all outcomes at the 7-month follow-up (all P≤0.03), except for the standardized patients' rating of rapport and satisfaction (P=0.12). Of the 48 participants who rated the program, 46 (96%) found it appropriate and relevant. At the 13-month follow-up, most improvements were sustained, the standardized patients' rating of confidentiality fell slightly, and the objective assessment of competence improved further. Of the 52 participants remaining at the 13-month follow-up, 51 (98%) reported a change in practice attributable to the intervention.

Conclusions General practitioners were willing to complete continuing medical education in adolescent health and its evaluation. The intervention, designed with evidence-based educational strategies, was effective and efficient in achieving large, sustained improvements in knowledge, skill, and self-perceived competency.

Summary points

Firm evidence exists that the lack of confidence, knowledge, and skills of general practitioners in adolescent health contributes to barriers in delivering health care to young people

Evidence-based strategies in continuing medical education were used in the design of a training program to address the needs of general practitioners and young people

Most interested general practitioners attended and completed the 6-week, 15-hour training program and the evaluation protocol covering 13 months

General practitioners completing the training made substantial gains in knowledge, clinical skill, and self-perceived competency compared with the randomly allocated control group of practitioners.

These gains were sustained at 12 months and were further improved in the objective measure of clinical competence in conducting a psychosocial interview

The patterns of health need in youth have changed markedly in the past three decades. Studies in the United Kingdom, North America, and Australia have shown that young people experience barriers to accessing health services.1,2,3,4,5 With the rise in rates of a range of youth health problems such as depression, eating disorders, drug and alcohol use, unplanned pregnancy, chronic illness, and suicide, it is clear that the accessibility and quality of health services to youth need to improve.3,6

General practitioners provide the most accessible primary health care for adolescents in the Australian health care system.7 Yet, in a survey of 1,000 general practitioners in the state of Victoria, Veit and associates found that 80% reported inadequate undergraduate training in consultation skills and psychosocial diseases, and 87% wanted continuing medical education in these areas.4,8 These findings agreed with those of comparable studies.9,10,11

Evidence-based strategies for helping physicians learn and change their practice are at the forefront of continuing medical education design.12,13,14 In response to the identified gap in training, an evidence-based educational intervention was designed to improve the knowledge, skill, and self-perceived competency of general practitioners in adolescent health. We report the results of a randomized controlled trial evaluating the intervention, with follow-up at 7 and 13 months after the baseline assessment.

PARTICIPANTS AND METHODS

The Divisions of General Practice are Australian regional organizations that survey needs and educate general practitioners in their zone. Metropolitan Melbourne has 15 divisions. Advertisements inviting participation in the intervention and evaluation were placed in 14 of the 15 divisional and state college newsletters and mailed individually to all division members. The course was free, and continuing medical education points were available. Respondents were sent intervention details and the evaluation protocol and asked to return a signed consent form. Divisions and physicians were excluded if they had previously received a course in adolescent health care from this institution.

RANDOMIZATION

Consenting physicians were grouped into eight geographic clusters by practice location to minimize contamination and to maximize the efficiency of intervention delivery. Clusters (classes) of similar size were randomly allocated to an intervention or control group by an independent researcher.
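A minimal sketch of cluster-level allocation follows. It assumes an equal 4:4 split of the eight classes; the text says only that clusters of similar size were randomly allocated, and the class labels and seed are hypothetical. Allocating whole classes rather than individual physicians is what limits contamination between arms.

```python
import random

def allocate_clusters(cluster_ids, seed=None):
    """Randomly assign whole clusters to two arms of equal size (assumed 1:1)."""
    rng = random.Random(seed)          # independent, reproducible generator
    shuffled = list(cluster_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "intervention": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }

# Hypothetical labels for the eight geographic classes.
classes = [f"class_{i}" for i in range(1, 9)]
arms = allocate_clusters(classes, seed=2000)
print(arms)
```

Every physician then inherits the arm of their class, which is why the analysis must allow for clustering.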

SAMPLE SIZE

Sample size estimation was based on the minimum desirable change in knowledge score. Seventy-four participants were required to detect an effect size of 0.67 in a simple random sample, with a power of 80% and a significance level of 5%. This figure was inflated to 148 to allow for randomization by cluster (ρ=0.05) and 20% attrition.
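The arithmetic behind these figures can be sketched as follows. The sketch assumes a two-sided test, the usual normal-approximation formula for comparing two means, and an average class size of 13 physicians in the design effect 1 + (m − 1)ρ. None of these assumptions is stated in the text, although a class size of 13 reproduces the reported 148 exactly. The normal approximation gives 35 per arm (70 in total), slightly fewer than the 74 reported, so the authors' calculation may have included a small-sample correction not described here.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Normal-approximation n per arm for comparing two means;
    effect_size is in standard-deviation units (Cohen's d)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # two-sided test assumed
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)

def inflate_for_clusters_and_attrition(n_total, cluster_size, icc, attrition):
    """Multiply by the design effect 1 + (m - 1) * rho, then divide by retention."""
    design_effect = 1 + (cluster_size - 1) * icc
    inflated = n_total * design_effect / (1 - attrition)
    # round() guards against floating-point noise such as 147.99999999999997
    return math.ceil(round(inflated, 6))

print(n_per_group(0.67))                                        # 35
print(inflate_for_clusters_and_attrition(74, 13, 0.05, 0.20))   # 148
```

With m = 13 the design effect is 1.6, so 74 × 1.6 / 0.8 = 148.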

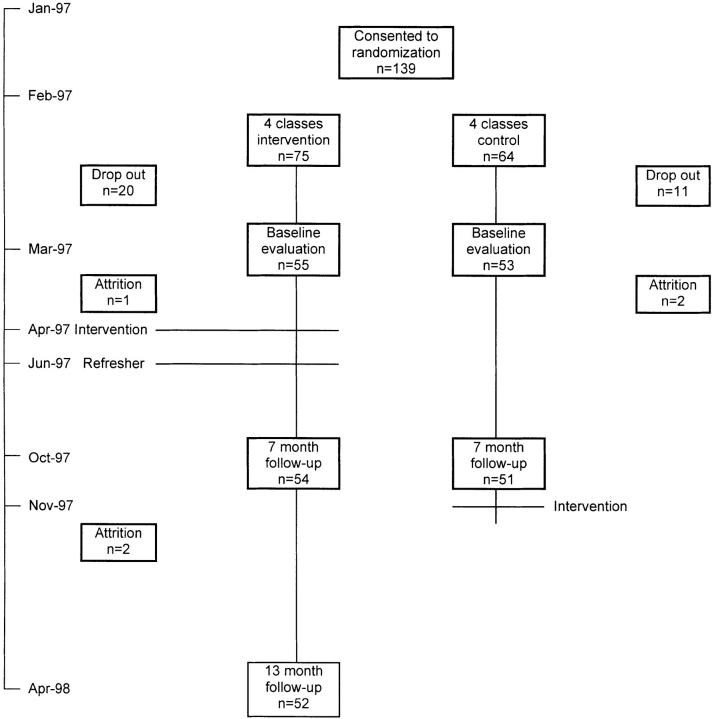

Figure 1.

Recruitment and protocol timelines

INTERVENTION

The objectives, content, and instructional design of the multifaceted intervention are detailed in the box. A panel consisting of young people, general practitioners, college education and quality assurance staff, adolescent health experts, and a state youth and family government officer participated in the design.15 The curriculum included evidence-based primary and secondary educational strategies, such as role playing with feedback, modeling practice with opinion leaders, and using checklists.12,16

The intervention and evaluation protocols are shown in Figure 1. The 6-week program was delivered concurrently to all intervention classes by one of us (LAS), starting 1 month after baseline testing.

MEASURES

The instruments used in the evaluation are summarized in table 1. Parallel objective and self-reported ratings of knowledge, skill, and competency were used so that the consistency of findings across methods could be checked.17,18 Participants' satisfaction with the course and their self-reported change in practice were evaluated at 13 months. Any other training or education obtained in adolescent health or related areas was noted.

Table 1. Evaluation measures, their content, inter-item reliability, and intraclass correlation within randomization groups estimated at baseline

| Evaluation measures | Content* | Cronbach α | Intraclass correlation |
|---|---|---|---|
| Skills | | | |
| Patients' rating | | | |
| Satisfaction and rapport | 7 | 0.95 | 0.01 |
| Confidentiality discussion | 1 | C | 0.07 |
| Observer's rating | | | |
| Competency† | 13 | 0.95 | 0.05 |
| Content of risk assessment† | 22 items | C | 0.09 |
| Self-perceived competency | | | |
| Comfort | | | |
| Clinical process | 11 | 0.88 | <0.01 |
| Substantive issues | 10 | 0.93 | 0.01 |
| Self-perceived knowledge and skill | | | |
| Clinical process | 11 | 0.90 | 0.04 |
| Substantive issues | 10 | 0.94 | 0.05 |
| General practitioner's self-score on interview | 6 | 0.93 | <0.01 |
| Knowledge | | | |
| Self-completion knowledge test | 41 items | C | <0.01 |

*Likert scales unless stated otherwise

†Assessments from viewing taped consultations

Clinical skills

Seven female drama students were trained to simulate a depressed 15-year-old girl exhibiting health risk behavior. Case details and performances were standardized according to published protocols19,20,21 and varied for each testing period. Physicians were given 30 minutes to interview the patient in a consulting room at this institution. An unattended camera videotaped the consultation.

The standardized patients were trained in the use of a validated rating chart21 to assess, first, their own rapport and satisfaction and, second, a discussion about confidentiality. They completed these evaluations after the interview while still in role. They were blind to the intervention status of the physicians, and no physician had the same patient for successive interviews.

Two independent observers, blind to participants' status, assessed the taped consultations in the three testing periods. A physician in adolescent health care coded three items in the scale that related to medical decision making. A trained nonmedical researcher assessed all other items. The chart was developed from two validated instruments for assessing adolescent health consultations21 and general practice consultations.22,23 Marks for competency and content of the health risk assessment were summarized into a percentage score. The same observers were used in all three testing periods.

Self-perceived competency

Two questionnaires were developed for the physicians to rate their comfort and their knowledge or skill with process issues, including the clinical approach to adolescents and their families, and substantive issues of depression, suicide risk assessment, alcohol and drug issues, eating disorders, sexual history taking, and sexual abuse. In addition, physicians rated their consultation with the standardized patient on a validated chart,21 itemizing their self-perceived knowledge and skill.

Knowledge

Knowledge was assessed with short answer and multiple choice items developed to reflect the workshop topics. The items were pretested and refined for contextual and content validity. The course tutor, blind to group status, awarded a summary score.

ANALYSIS

Statistical analysis was performed using a commercial software package (STATA; Stata Corporation, College Station, TX), with the individual as the unit of analysis. Factor analysis with varimax rotation was used to identify two domains within the comfort and self-perceived knowledge or skill items: process and substantive issues. The internal consistency for all scales was estimated using the Cronbach α. Reproducibility within and between raters and the intraclass correlation of baseline scores within each teaching group were estimated using one-way analyses of variance.
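For reference, Cronbach's α for a scale of k items is k/(k − 1) × (1 − sum of item variances / variance of the total score). A minimal sketch in plain Python, using a small hypothetical data set of three respondents answering two items:

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """rows: one list of k item scores per respondent."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*rows))                  # transpose to per-item lists
    item_var_sum = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical Likert responses: three respondents, two items.
responses = [[2, 3], [4, 5], [3, 3]]
print(round(cronbach_alpha(responses), 3))
```

Population or sample variances give the same α, because the n versus n − 1 factor cancels in the ratio.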

The effect of this intervention was evaluated by the regression of gain scores (7-month score minus baseline) on the intervention status, with an adjustment made for baseline and potential confounding variables. Robust standard errors were used to allow for randomization by cluster. The sustainability of outcome changes in the intervention group between the 7- and 13-month assessments was evaluated using paired t tests.
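A minimal sketch of this kind of model follows, assuming NumPy. It fits ordinary least squares of the gain score on intervention status and baseline score, with cluster-robust (sandwich) standard errors computed over classes. The simulated data, the 12-point effect, and the omission of the other confounders are hypothetical and purely illustrative. The finite-sample factor G/(G − 1) × (n − 1)/(n − k) follows the convention Stata uses for clustered standard errors, but this is not the authors' code.

```python
import numpy as np

def ols_cluster_robust(y, X, clusters):
    """OLS coefficients with cluster-robust (sandwich) standard errors."""
    n, k = X.shape
    xtx_inv = np.linalg.inv(X.T @ X)
    beta = xtx_inv @ (X.T @ y)
    resid = y - X @ beta
    meat = np.zeros((k, k))
    labels = np.unique(clusters)
    for g in labels:
        in_g = clusters == g
        score = X[in_g].T @ resid[in_g]   # summed score contribution of cluster g
        meat += np.outer(score, score)
    G = len(labels)
    # Finite-sample factor G/(G-1) * (n-1)/(n-k), as in Stata's clustered SEs.
    factor = G / (G - 1) * (n - 1) / (n - k)
    vcov = factor * xtx_inv @ meat @ xtx_inv
    return beta, np.sqrt(np.diag(vcov))

# Hypothetical simulated trial: 8 classes of 13 physicians, classes 0-3 treated.
rng = np.random.default_rng(0)
cluster = np.repeat(np.arange(8), 13)
treated = (cluster < 4).astype(float)
baseline = rng.normal(50, 10, size=cluster.size)
class_effect = rng.normal(0, 2, size=8)[cluster]
gain = (2 + 12 * treated - 0.2 * (baseline - 50)
        + class_effect + rng.normal(0, 8, size=cluster.size))

X = np.column_stack([np.ones_like(baseline), treated, baseline])
beta, se = ols_cluster_robust(gain, X, cluster)
print(f"adjusted intervention effect on gain: {beta[1]:.1f} (robust SE {se[1]:.1f})")
```

Adjusting the gain score for baseline means that the coefficient on `treated` is the between-group difference in improvement at the same starting score.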

RESULTS

Participants

Newsletters and mailed advertisements to 2,415 general practitioners resulted in 264 expressions of interest; 139 physicians gave written consent to be randomly assigned to either an intervention group or a control group. Attrition after notification of group allocation left 55 physicians (73% of those allocated) in the intervention group and 53 (83%) in the control group, with an average of 13.5 (range 12-15) physicians in each class.

The age and country of graduation of the physicians in this study were similar to those of the national general practitioner workforce.24,25 Female physicians were overrepresented (50% in this study vs 19% and 33% in other reports).25,26 Table 2 describes the randomized groups. The groups were imbalanced in age, sex, languages other than English spoken, average weekly hours of consulting, type of practice, and college examinations.

Table 2. Demographic characteristics of general practitioners by intervention group*

| Characteristic | Intervention group (n = 54) | Control group (n = 51) |
|---|---|---|
| Male | 24 (44) | 28 (55) |
| Age (years) | | |
| 25-34 | 13 (24) | 10 (20) |
| 35-44 | 20 (37) | 16 (31) |
| 45-54 | 18 (33) | 15 (29) |
| 55+ | 3 (6) | 10 (20) |
| Language other than English spoken | 14 (26) | 24 (47) |
| Average hours consulting/week | | |
| <20 | 17 (31) | 10 (20) |
| 20-40 | 29 (54) | 22 (44) |
| 40-60 | 8 (15) | 18 (36) |
| Patients seen in an average week | | |
| <50 | 14 (26) | 9 (18) |
| 51-100 | 15 (28) | 13 (26) |
| 101-150 | 18 (33) | 16 (32) |
| >150 | 7 (13) | 12 (24) |
| Adolescents as % of all patients seen per week | | |
| <10 | 24 (45) | 21 (41) |
| 10-30 | 22 (40) | 22 (43) |
| >30 | 8 (15) | 8 (16) |
| Age (years) of oldest child | | |
| No children | 3 (6) | 9 (18) |
| ≤10 | 19 (35) | 12 (24) |
| 11-20 | 21 (39) | 10 (20) |
| ≥20 | 11 (20) | 19 (38) |
| Vocational registration | 51 (94) | 46 (90) |
| College exams taken | 25 (46) | 15 (29) |
| Previous training in adolescent health | 15 (28) | 15 (29) |
| Type of practice | | |
| Solo | 4 (7) | 13 (25) |
| Group | 43 (80) | 24 (47) |
| Community health center | 0 | 4 (8) |
| Extended hours | 0 | 2 (4) |
| Other | 7 (13) | 8 (16) |
| Appointments/hour | | |
| ≤4 | 32 (59) | 33 (65) |
| 5-6 | 9 (17) | 4 (8) |
| ≥6 | 8 (15) | 8 (16) |
| Other booking systems | 5 (9) | 6 (12) |

*Values are numbers (percentages) of general practitioners

Compliance

Of 54 physicians in the intervention group, 44 attended all six tutorials, 8 missed one, and 2 missed three. One practitioner abandoned the course and the evaluation protocol. Of the 108 participants at baseline, 103 (95%) completed the entire evaluation protocol (figure).

Measures

The evaluation scales showed satisfactory internal consistency and low association with class membership (table 1). Satisfactory inter-rater agreement was achieved on the competency scale (n=70; ρ=0.70). The intrarater consistency for both medical and nonmedical raters was also satisfactory (n=20; ρ=0.80 and 0.91, respectively).
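The intraclass correlations here and in table 1 come from one-way analyses of variance. A minimal sketch of ICC(1) follows, with each group given as a list of scores. For inter-rater agreement a group is the pair of ratings of one consultation; for clustering a group is one class. The adjusted group size n0 handles unequal group sizes, and the toy ratings are hypothetical.

```python
def icc_oneway(groups):
    """ICC(1) from a one-way ANOVA; groups is a list of lists of scores."""
    scores = [x for g in groups for x in g]
    n_total, n_groups = len(scores), len(groups)
    grand_mean = sum(scores) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    # Effective group size; equals the common size when groups are balanced.
    n0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (n0 - 1) * ms_within)

# Hypothetical ratings: each inner list is two raters' scores for one consultation.
pairs = [[60, 62], [70, 69], [55, 57], [80, 79]]
print(round(icc_oneway(pairs), 3))
```

Values near 1 indicate that most variance lies between consultations rather than between raters. Values near 0 for classes, as in table 1, indicate little clustering of baseline scores.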

Effect of the intervention

Table 3 describes baseline measures and the effect of the intervention at the 7-month follow-up. All analyses were adjusted for age, sex, language other than English, average weekly hours of consulting, practice type, and college examinations. Physicians who reported education in related areas during follow-up (67% of the control group and 41% of the intervention group) were identified, and the difference analysis was adjusted for this extraneous training and for baseline score, although the extraneous training did not affect any outcome. The study groups were similar in all measures at baseline. The intervention group showed significantly greater improvement than the control group at the 7-month follow-up in all outcomes except the standardized patients' rating of rapport and satisfaction.

Table 3. Multiple regression analyses of baseline scores and differences in scores on continuous outcome measures evaluating the educational intervention at 7-month follow-up*

| Scores | No.† | Baseline mean (95% CI) | Difference at 7-month follow-up, mean (95% CI) | Effect size | P value |
|---|---|---|---|---|---|
| Skills | | | | | |
| Standardized patients' rapport and satisfaction | | | | | |
| Control | 50 | 67.9 (61.4 to 74.5) | -0.5 (-6.1 to 5.0) | -0.02 | 0.12 |
| Intervention | 54 | 67.9 (64.9 to 70.9) | 6.0 (2.6 to 9.5) | 0.54 | |
| Standardized patients' confidentiality | | | | | |
| Control | 50 | 35.2 (29.3 to 41.1) | 4.0 (-10.3 to 18.3) | 0.19 | <0.01 |
| Intervention | 54 | 42.2 (31.0 to 53.4) | 53.5 (49.3 to 57.8) | 1.28 | |
| Observer competence | | | | | |
| Control | 50 | 51.8 (45.9 to 57.6) | 2.6 (-3.0 to 8.1) | 0.12 | 0.01 |
| Intervention | 54 | 48.8 (46.2 to 51.4) | 15.3 (11.1 to 19.5) | 1.55 | |
| Observer risk assessment | | | | | |
| Control | 50 | 53.3 (49.4 to 57.2) | 0.5 (-3.0 to 4.1) | 0.04 | 0.03 |
| Intervention | 53 | 50.7 (44.2 to 57.2) | 9.9 (5.8 to 14.0) | 0.41 | |
| Self-perceived competency | | | | | |
| Comfort (process) | | | | | |
| Control | 49 | 71.1 (66.4 to 75.8) | 0.2 (-3.5 to 4.0) | 0.01 | 0.03 |
| Intervention | 54 | 71.8 (69.7 to 73.9) | 7.1 (4.7 to 9.4) | 0.89 | |
| Comfort (substantive) | | | | | |
| Control | 50 | 58.1 (52.3 to 63.9) | 0.3 (-5.1 to 5.6) | 0.01 | <0.01 |
| Intervention | 54 | 60.5 (56.1 to 64.8) | 15.8 (13.8 to 17.8) | 0.97 | |
| Knowledge and skill (process) | | | | | |
| Control | 50 | 65.9 (60.4 to 71.5) | 0.7 (-4.0 to 5.3) | 0.03 | <0.01 |
| Intervention | 53 | 66.3 (63.6 to 69.1) | 15.6 (12.1 to 19.2) | 1.54 | |
| Knowledge and skill (substantive) | | | | | |
| Control | 50 | 52.1 (44.5 to 59.7) | 2.8 (-2.0 to 7.6) | 0.10 | <0.01 |
| Intervention | 54 | 57.5 (53.8 to 61.2) | 20.6 (18.2 to 22.9) | 1.50 | |
| Doctors' self-rating on taped consultation | | | | | |
| Control | 49 | 56.6 (52.7 to 60.5) | 3.1 (0.6 to 5.6) | 0.22 | <0.01 |
| Intervention | 54 | 56.9 (55.7 to 58.1) | 17.8 (15.9 to 19.7) | 4.01 | |
| Knowledge test | | | | | |
| Control | 49 | 33.3 (31.6 to 35.0) | 3.1 (0.6 to 5.6) | 0.51 | <0.01 |
| Intervention | 54 | 32.8 (31.6 to 34.0) | 14.6 (13.0 to 16.2) | 3.31 | |

*Models include gender, age group, language other than English, type of practice, average hours worked per week, and college exams taken. Difference scores are also adjusted for baseline score and training obtained elsewhere over the 7-month period. Robust standard errors allowed for cluster randomization. All scores are out of 100.

†Numbers vary because of missing values in the rating forms of some participants

Program satisfaction

Of 53 physicians, 48 assessed the contextual validity and applicability of the course, and 46 of these (96%) rated it positively.

13-Month follow-up of the intervention group

The intervention effect was sustained in most measures and improved further in the independent raters' assessment of competence (table 4). The unadjusted standardized patients' rating of the confidentiality discussion fell at the 13-month assessment but remained significantly higher than at baseline. Of the 52 participants remaining at the 13-month follow-up, 51 (98%) reported a change in practice that they attributed to the intervention.

Table 4. Change in unadjusted percentage scores for the intervention group (n = 54)*

| Scores | Baseline | 7-month follow-up | 13-month follow-up | P value† | P value‡ |
|---|---|---|---|---|---|
| Skills | | | | | |
| Standardized patients' rapport and satisfaction | 68.6 (63.5 to 73.7) | 76.0 (71.7 to 80.2) | 75.9 (71.4 to 80.5) | <0.01 | 1.00 |
| Standardized patients' confidentiality | 42.5 (34.4 to 50.6) | 92.7 (89.1 to 96.3) | 84.4 (78.4 to 90.5) | <0.01 | 0.01 |
| Observer competence | 51.0 (46.3 to 55.8) | 65.3 (60.3 to 70.3) | 70.7 (66.3 to 75.0) | <0.01 | 0.02 |
| Observer risk assessment | 51.2 (47.9 to 54.5) | 61.3 (58.4 to 64.3) | 61.4 (58.3 to 64.4) | <0.01 | 1.00 |
| Self-perceived competency | | | | | |
| Comfort | | | | | |
| Process | 71.3 (67.8 to 74.8) | 78.1 (74.8 to 81.5) | 80.0 (77.3 to 82.7) | <0.01 | 0.12 |
| Substantive | 59.6 (55.4 to 63.9) | 74.9 (71.7 to 78.0) | 75.5 (72.4 to 78.7) | <0.01 | 0.58 |
| Self-perceived knowledge and skill | | | | | |
| Process | 66.6 (63.4 to 69.7) | 80.8 (78.1 to 83.5) | 81.9 (79.2 to 84.6) | <0.01 | 0.27 |
| Substantive | 56.7 (52.8 to 60.6) | 76.3 (73.2 to 79.5) | 76.3 (73.0 to 79.6) | <0.01 | 0.99 |
| General practitioner self-rating on taped consultation | 55.6 (50.6 to 60.6) | 72.1 (68.7 to 75.6) | 71.0 (67.3 to 74.7) | <0.01 | 0.59 |
| Knowledge test | 33.5 (31.5 to 35.4) | 48.0 (46.1 to 49.9) | 47.7 (45.8 to 49.6) | <0.01 | 0.71 |

*Values are mean (95% CI) unless stated otherwise. P values are from paired t tests.

†Baseline to 13 months

‡7 to 13 months
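The within-group comparisons in table 4 use paired t tests. A minimal sketch that computes the paired t statistic and its degrees of freedom in plain Python, on hypothetical scores for four physicians. The two-sided P value would then come from the t distribution, for example via `scipy.stats.ttest_rel(after, before)`, which returns the same statistic.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(before, after):
    """Paired t statistic for after - before, with n - 1 degrees of freedom."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    se = stdev(diffs) / sqrt(n)      # standard error of the mean difference
    return mean(diffs) / se, n - 1

# Hypothetical percentage scores for four physicians at 7 and 13 months.
month7 = [60.0, 65.0, 70.0, 75.0]
month13 = [61.0, 67.0, 71.0, 77.0]
t, df = paired_t(month7, month13)
print(f"t = {t:.2f} on {df} df")
```

Pairing each physician with themselves removes between-physician variation, which is why small average changes can still reach significance.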

DISCUSSION

A six-session course in adolescent health, designed with evidence-based strategies in physician education, brought substantial gains in the knowledge, skills, and self-perceived competency of the intervention group of general practitioners compared with the control group in all outcomes except the standardized patients' rating of rapport and satisfaction. The changes were generally sustained over 12 months, and the independent observers' rating of competence improved further. Almost all participants reported a change in their practice since the intervention.

These results are better than those reported in a review of 99 randomized controlled trials evaluating continuing medical education (published from 1974 to 1995).12 Although more than 60% of those trials had positive outcomes, the effects were small to moderate and usually occurred in only one or two outcome measures. In keeping with the recommendations of this review, we adopted a rigorous design, a clearly defined target group, and several methods of evaluating competence. Perhaps more importantly, the intervention design incorporated three further elements: the use of evidence-based educational strategies, a comprehensive preliminary analysis of needs, and assurance of the content validity of the curriculum by involving young people and general practitioners.

The study participants clearly represented a highly motivated group of practitioners. This self-selection bias was unavoidable but reflected the reality that only interested physicians would seek special skills in this domain. This aspect also conforms to the adult learning principle of providing education where a self-perceived need and a desire for training exist.12,26,27 We have, therefore, established that the intervention is effective with motivated practitioners.

Physicians with an interest in a topic are generally thought to have high levels of knowledge and skill, with little room for improvement. This was not the case in the present study. Baseline measures were often low, and improvements were large, confirming the need for professional development in adolescent health care. The retention rate was excellent, possibly due in part to the role of a general practitioner in the program design, recruitment, and tutoring.

The question remains whether improved competency in a controlled test setting translates to improved performance in clinical practice.28 High competency ratings are not necessarily associated with high performance, but low competency is usually associated with low performance.16,29,30

The standardized patients' rating of rapport and satisfaction with the physician was the only outcome measure apparently unresponsive to the intervention. Actors' ratings and character portrayal were standardized, and sex bias was controlled for by using only female actors. Even with these precautions, three actors scored differently from the rest, one had fewer encounters with physicians, and the subjective nature of the rating scale probably contributed to large individual variation. A trend toward improvement in the intervention group was noted, but the study lacked sufficient power to detect a difference. In other settings, the validity and reliability of competency assessments with standardized patients have been shown to increase with the number of consultations examined.31,32 Pragmatically, it was not feasible to measure multiple consultations in this study.

Inter-rater measurement error was minimized by using the same raters through all three periods of testing. The independent observer and patient were blind to study status but may have recognized the intervention group at the 7-month follow-up because of the learned consultation styles. Other measures of competency were included to accommodate this unavoidable source of error.

This study shows the potential of general practitioners to respond to the changing health needs of youth following brief training, based on a needs analysis and best evidence-based educational practice. Further study should address the extent to which these changes in physicians' competence translate to health gains for their young patients.

Box. Goals, content, and instructional design of the intervention in adolescent health care principles for general practitioners

Acknowledgments

We thank all participating general practitioners and Helen Cahill (Youth Research Centre, Melbourne University) for her role in planning and facilitating the communication workshops and training the standardized patients, David Rosen (University of Michigan School of Medicine, Ann Arbor, MI) for advice and supervision in training the standardized patients, and Sarah Croucher (Centre for Adolescent Health) for her role as an observer in the evaluation.

Funding: The Royal Australian College of General Practitioners Trainee Scholarship and Research Fund and the National Health and Medical Research Council

A version of this paper was originally published in the BMJ 2000;320:224-229

References

1. Donovan C, Mellanby AR, Jacobson LD, et al. Teenagers' views on the general practice consultation and provision of contraception: the adolescent working group. Br J Gen Pract 1997;47:715-718.

2. Oppong-Odiseng ACK, Heycock EG. Adolescent health services—through their eyes. Arch Dis Child 1997;77:115-119.

3. Ginsburg KR, Slap GB. Unique needs of the teen in the health care setting. Curr Opin Pediatr 1996;8:333-337.

4. Veit FCM, Sanci LA, Young DYL, et al. Adolescent health care: perspectives of Victorian general practitioners. Med J Aust 1995;163:16-18.

5. McPherson A, Macfarlane A, Allen J. What do young people want from their GP? [letter] Br J Gen Pract 1996;46:627.

6. Bearinger LH, Gephart J. Interdisciplinary education in adolescent health. J Paediatr Child Health 1993;29(suppl):S10-S15.

7. Bennett DL. Adolescent health in Australia: an overview of needs and approaches to care. Sydney: Australian Medical Association; 1984.

8. Veit FCM, Sanci LA, Coffey CMM, et al. Barriers to effective primary health care for adolescents. Med J Aust 1996;165:131-133.

9. Blum R. Physicians' assessment of deficiencies and desire for training in adolescent care. J Med Educ 1987;62:401-407.

10. Blum RW, Bearinger LH. Knowledge and attitudes of health professionals toward adolescent health care. J Adolesc Health Care 1990;11:289-294.

11. Resnick MD, Bearinger L, Blum R. Physician attitudes and approaches to the problems of youth. Pediatr Ann 1986;15:799-807.

12. Davis DA, Thomson MA, Oxman AD, et al. Changing physician performance: a systematic review of the effect of continuing medical education strategies. JAMA 1995;274:700-705.

13. Davis DA, Thomson MA, Oxman AD, et al. Evidence for the effectiveness of CME: a review of 50 randomized controlled trials. JAMA 1992;268:1111-1117.

14. Oxman AD, Thomson MA, Davis DA, et al. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. Can Med Assoc J 1995;153:1423-1431.

15. Owen JM. Program evaluation forms and approaches. St Leonards (Australia): Allen & Unwin; 1993.

16. Davis D, Fox R, eds. The physician as learner: linking research to practice. Chicago: American Medical Association; 1994.

17. Greene JC, Caracelli VJ, eds. Advances in mixed-method evaluation: the challenges and benefits of integrating diverse paradigms. San Francisco (CA): Jossey-Bass; 1997. New Directions for Evaluation No. 74.

18. Masters GN, McCurry D. Competency-based assessment in the professions. Canberra: Australian Government Publishing Service; 1990.

19. Norman GR, Neufeld VR, Walsh A, et al. Measuring physicians' performances by using simulated patients. J Med Educ 1985;60:925-934.

20. Woodward CA, McConvey GA, Neufeld V, et al. Measurement of physician performance by standardized patients: refining techniques for undetected entry in physicians' offices. Med Care 1985;23:1019-1027.

21. Rosen D. The adolescent interview project. In: Johnson J, ed. Adolescent medicine residency training resources. Elk Grove Village (IL): American Academy of Pediatrics; 1995:1-15.

22. Royal Australian College of General Practitioners college examination handbook for candidates 1996. South Melbourne: Royal Australian College of General Practitioners; 1996.

23. Hays RB, van der Vleuten C, Fabb WE, et al. Longitudinal reliability of the Royal Australian College of General Practitioners certification examination. Med Educ 1995;29:317-321.

24. Bridges-Webb C, Britt H, Miles DA, et al. Morbidity and treatment in general practice in Australia 1990-1991. Med J Aust 1992;157:S1-S57.

25. The general practices profile study: a national survey of Australian general practices. Clifton Hill (Australia): Campbell Research & Consulting; 1997.

26. Knowles M. The adult learner: a neglected species. Houston (TX): Gulf Publishing Company; 1990.

27. Ward J. Continuing medical education, part 2: needs assessment in continuing medical education. Med J Aust 1988;148:77-80.

28. Norman GR. Defining competence: a methodological review. In: Neufeld VR, Norman GR, eds. Assessing clinical competence. New York (NY): Springer Publishing; 1985:15-35.

29. Rethans JJ, Sturmans F, Drop R, et al. Does competence of general practitioners predict their performance? Comparison between examination setting and actual practice. BMJ 1991;303:1377-1380.

30. Pieters HM, Touw-Otten FWWM, De Melker RA. Simulated patients in assessing consultation skills of trainees in general practice vocational training: a validity study. Med Educ 1994;28:226-233.

31. Colliver JA, Swartz MH. Assessing clinical performance with standardized patients. JAMA 1997;278:790-791.

32. Colliver JA. Validation of standardized-patient assessment: a meaning for clinical competence. Acad Med 1995;70:1062-1064.