Abstract

The assessment of functional effect of amino acid variants is a critical biological problem in proteomics for clinical medicine and protein engineering. Although natively occurring variants offer insights into deleterious variants, high-throughput deep mutational experiments enable comprehensive investigation of amino acid variants for a given protein. However, these mutational experiments are too expensive to dissect millions of variants on thousands of proteins. Thus, computational approaches have been proposed, but they heavily rely on hand-crafted evolutionary conservation, limiting their accuracy. Recent advancement in transformers provides a promising solution to precisely estimate the functional effects of protein variants on high-throughput experimental data. Here, we introduce a novel deep learning model, namely Rep2Mut-V2, which leverages learned representation from transformer models. Rep2Mut-V2 significantly enhances the prediction accuracy for 27 types of measurements of functional effects of protein variants. In the evaluation of 38 protein datasets with 118,933 single amino acid variants, Rep2Mut-V2 achieved an average Spearman’s correlation coefficient of 0.7. This surpasses the performance of six state-of-the-art methods, including the recently released methods ESM, DeepSequence and EVE. Even with limited training data, Rep2Mut-V2 outperforms ESM and DeepSequence, showing its potential to extend high-throughput experimental analysis for more protein variants to reduce experimental cost. In conclusion, Rep2Mut-V2 provides accurate predictions of the functional effects of single amino acid variants of protein coding sequences. This tool can significantly aid in the interpretation of variants in human disease studies.

Keywords: Functional effect, Deep learning, Single amino acid variant, Precise estimation, High-throughput experiments


1. Introduction

Proteins play fundamental roles in carrying out diverse cellular functions. Their biological activities could be affected by numerous variants. Although most of these variants have negligible effects on protein functions, a small fraction of native amino acid variants of human proteins are closely associated with human diseases [1]. Additionally, synthetically introduced variants are crucial in protein engineering to design proteins with specific characteristics. In both applications, accurately estimating the functional effects of millions of protein variants is a fundamental and challenging problem.

High-throughput experimental approaches, deep mutational scanning[2] and the recently developed GigaAssay[3], are proposed to address this challenge [4], [5]. These methods introduce thousands of variants of a given protein and measure the variants’ functional effects on the biological activities of the protein, overcoming the limitation of interpreting limited, natively occurring variants of proteins. These high-throughput experimental approaches have successfully and comprehensively investigated the functional effects of various variants for tens of proteins, including influenza Hemagglutinin[6] and influenza A virus PA polymerase subunit[7], nonstructural protein 5A (NS5A) inhibitor for Hepatitis C virus[8], Hsp90[9], beta-lactamase TEM-1[10], [11], [12], [13], a bacterial DNA methyltransferase M.HaeIII[14], BRCA1[15], hYAP65 WW domain[16], a Tn5 transposon-derived kinase APH(3′)II[17], yeast transcription factor Gal4[18], human tumor suppressor p53[18], PDZ domain[19], Saccharomyces cerevisiae poly(A)-binding protein Pab1[20], yeast ubiquitin gene[21], [22], [23] and a tRNA gene[24], β-glucosidase enzyme Bgl3 from Streptomyces sp.[25], FAS/CD95[26], 20 ParD-ParE TA family members[27], murine ubiquitination factor E4B[28] and HIV Tat[3]. However, as indicated by the list, each experiment typically focused on one protein/gene due to the high cost of the methods. It is difficult, if not impossible, to extend these methods to examine variants of thousands of proteins or to investigate all possible multiple variants of a given protein.

Thus, computational approaches were proposed to overcome this limitation. These approaches can be classified into four groups. The first group, which includes PolyPhen-2[29], SIFT[30] and SNAP2[31], usually relies on evolutionary conservation of homologous sequences[32], while the second group, which includes CADD[33], integrates diverse annotations in the inference of mutational effects. The third group, which includes EVmutation[34] and DeepSequence[35], considers epistatic couplings between amino acids[36], [37]. DeepSequence also uses a variational auto-encoder to detect latent features in sequences to predict a variant’s functional effect. The fourth group, which includes transformer models such as Evolutionary Scale Modeling (ESM) [38], [39], has recently been used to automatically capture higher-order hidden information behind the sequences. The transformer models were trained on millions of available protein sequences, and provide a novel way to predict the mutational effects of protein variants without the need for hand-crafted features. However, few studies have investigated how learned features from transformers perform on high-throughput experimental datasets with various measurements of the functional effects of protein variants.

Here, we propose a deep learning method, named Rep2Mut-V2, to use protein sequences as the sole input to accurately predict 27 types of measurements of mutational effects of protein variants. Rep2Mut-V2 is an improvement of our previous model Rep2Mut [40] which was designed to predict the transcriptional activity of HIV Tat protein (GigaAssay [3]). In an assessment of 38 protein datasets, Rep2Mut-V2 demonstrated superior performance when compared to six existing methods. Rep2Mut-V2 exhibits great potential to assist the investigation of mutational effects for more proteins, aiding in the interpretation of protein variants and human disease studies. Our tool is publicly available at https://github.com/qgenlab/Rep2Mut.

2. Materials and methods

2.1. Datasets

A total of 38 protein datasets, comprising 118,933 single amino acid variants (Table 1), were used to evaluate our method and the state-of-the-art methods. Each of these datasets investigates one protein, and the number of variants per dataset ranges from 313 (on the YAP1 dataset) to 12,236 (on the BF520 dataset) with a median of 1,725, as shown in Table 1. Each dataset is also associated with a specific functional measurement, such as transcriptional activities, fitness, CRIPT, MIC score, etc. [35]. The 38 datasets encompass a total of 27 distinct functional measurements. Most of the datasets were generated by deep mutational scanning and collected by Riesselman et al. [35], while the HIV Tat data was generated by GigaAssay[3].

Table 1.

The 38 datasets for testing the model. Among them, fitness is used as the measurement for six datasets, and these six datasets are BF520, BG505, P84126, POLG_HCVJF, TIM_SULSO, and TIM_THEMA. “#variants”: the number of variants; “Seq length”: the length of protein sequences.

| Dataset names | Short dataset names | #variants | Seq length |
|---|---|---|---|
| AMIE_PSEAE_Whitehead | AMIE | 4,507 | 346 |
| B3VI55_LIPSTSTABLE | B3VI55_LIPSTSTABLE | 6,541 | 439 |
| B3VI55_LIPST_Whitehead2015 | B3VI55_LIPST | 6,327 | 439 |
| BF520_env_Bloom2018 | BF520 | 12,236 | 852 |
| BG505_env_Bloom2018 | BG505 | 12,217 | 860 |
| BG_STRSQ_hmmerbit | BG_STRSQ | 2,635 | 501 |
| BLAT_ECOLX_Ostermeier2014 | BLAT_2014 | 4,595 | 286 |
| BLAT_ECOLX_Palzkill2012 | BLAT_2012 | 4,789 | 286 |
| BLAT_ECOLX_Ranganathan2015 | BLAT_2015 | 4,788 | 286 |
| BLAT_ECOLX_Tenaillon2013 | BLAT_2013 | 949 | 286 |
| BRCA1_HUMAN_BRCT | BRCA1_BRCT | 1,185 | 1,863 |
| BRCA1_HUMAN_RING | BRCA1_RING | 492 | 1,863 |
| CALM1_HUMAN_Roth2017 | CALM1_Roth2017 | 1,730 | 149 |
| DLG4_RAT_Ranganathan2012 | DLG4_RAT | 1,558 | 724 |
| GAL4_YEAST_Shendure2015 | GAL4 | 1,104 | 881 |
| HG_FLU_Bloom2016 | HG_FLU | 10,337 | 565 |
| HSP82_YEAST_Bolon2016 | HSP82 | 4,104 | 709 |
| IF1_ECOLI_Kishony | IF1_ECOLI | 1,312 | 72 |
| MK01_HUMAN_Johannessen | MK01 | 5,463 | 360 |
| MTH3_HAEAESTABILIZED_Tawfik2015 | MTH3 | 1,719 | 330 |
| P84126_THETH_b0 | P84126 | 1,519 | 254 |
| PABP_YEAST_Fields2013-singles | PABP | 1,187 | 577 |
| PA_FLU_Sun2015 | PA_FLU | 1,848 | 716 |
| POLG_HCVJF_Sun2014 | POLG_HCVJF | 1,631 | 3,033 |
| PTEN_HUMAN_Fowler2018 | PTEN | 3,014 | 403 |
| RASH_HUMAN_Kuriyan | RASH | 3,078 | 189 |
| RL401_YEAST_Bolon2013 | RL401_2013 | 1,160 | 76 |
| RL401_YEAST_Bolon2014 | RL401_2014 | 1,294 | 76 |
| RL401_YEAST_Fraser2016 | RL401_2016 | 1,168 | 76 |
| SUMO1_HUMAN_Roth2017 | SUMO1 | 1,329 | 101 |
| TIM_SULSO_b0 | TIM_SULSO | 1,519 | 248 |
| TIM_THEMA_b0 | TIM_THEMA | 1,519 | 252 |
| TPK1_HUMAN_Roth2017 | TPK1_2017 | 2,608 | 243 |
| TPMT_HUMAN_Fowler2018 | TPMT_2018 | 2,659 | 245 |
| UBC9_HUMAN_Roth2017 | UBC9 | 2,281 | 159 |
| UBE4B_MOUSE_Klevit2013-singles | UBE4B | 603 | 1,173 |
| YAP1_HUMAN_Fields2012-singles | YAP1 | 313 | 504 |
| HIV_Tat | HIV_Tat | 1,615 | 86 |

2.2. Deep learning framework to predict functional effects of protein variants

Rep2Mut-V2 is a deep learning-based method to estimate various functional effects of protein variants. Rep2Mut-V2 uses a pair of protein sequences as input, i.e., a wildtype (WT) sequence and a mutated sequence with a substitution of an amino acid at a position of interest (Fig. 1). Rep2Mut-V2 feeds the WT and mutated sequences into ESM to learn the representation of the mutated position [38] (denoted as “ESM-f” to distinguish it from ESM predictions). ESM-f is composed of multiple transformer layers and is trained on millions of protein sequences with the masked language modeling objective. It endeavors to learn multiple levels of protein knowledge such as biochemical properties and evolutionary information. ESM-f has several different releases, and ESM-1v, used in Rep2Mut-V2, comprises 34 transformer layers trained on the UniRef90 dataset [41]. The 33rd layer of ESM-1v generates a 1,280-element vector which is used to represent either WT or variant information for the position of interest in the protein sequence. Each of the representation vectors is then used as the input of a fully connected neural network layer with a vector of 128 elements as output (Layers 1 and 2 in Fig. 1). After that, the two 128-dimension vectors are merged using an entry-wise product and then fed into Layer 3 to estimate the functional effect of a variant. The entry-wise product (or Hadamard product) takes two matrices of the same dimensions as inputs and generates another matrix of the same dimensions by multiplying corresponding entries. For example, given two matrices $A$ and $B$ with $m \times n$ dimensions, the entry-wise product is $(A \circ B)_{i,j} = A_{i,j} B_{i,j}$, where $0 < i \le m$ and $0 < j \le n$. Additionally, a PReLU activation function [42] and a dropout rate of 0.2 are applied to the fully connected neural network layers to avoid overfitting.
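The prediction head described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the released implementation: the ESM representation vectors are taken as given inputs, Layer 1 is assumed to process the WT vector and Layer 2 the variant vector, each PReLU is assumed to have a single learnable slope (initialized here to 0.25), the weight initialization is arbitrary, and dropout is omitted because it is inactive at prediction time. The names `init_params`, `forward`, and `count_parameters` are hypothetical.

```python
import math
import random

EMBED_DIM = 1280   # size of each ESM representation vector (from the text)
HIDDEN_DIM = 128   # output size of Layers 1 and 2 (from the text)


def make_linear(n_in, n_out, rng):
    """Random weights and biases for one fully connected layer (illustrative init)."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-bound, bound) for _ in range(n_out)]
    return weights, biases


def init_params(seed=0):
    """Assumed layout: two 1280->128 layers, two PReLU slopes, one 128->1 layer."""
    rng = random.Random(seed)
    return {
        "layer1": make_linear(EMBED_DIM, HIDDEN_DIM, rng),  # assumed: WT branch
        "layer2": make_linear(EMBED_DIM, HIDDEN_DIM, rng),  # assumed: variant branch
        "layer3": make_linear(HIDDEN_DIM, 1, rng),
        "slope1": 0.25,  # assumed single PReLU slope per branch
        "slope2": 0.25,
    }


def linear(layer, x):
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def prelu(x, slope):
    """PReLU: identity for positive inputs, learned slope for negative inputs."""
    return [v if v > 0 else slope * v for v in x]


def forward(params, wt_vec, mut_vec):
    """Predict one functional-effect score from a WT/variant vector pair."""
    h_wt = prelu(linear(params["layer1"], wt_vec), params["slope1"])
    h_mut = prelu(linear(params["layer2"], mut_vec), params["slope2"])
    merged = [a * b for a, b in zip(h_wt, h_mut)]  # entry-wise (Hadamard) product
    return linear(params["layer3"], merged)[0]


def count_parameters(params):
    total = 2  # the two PReLU slopes
    for name in ("layer1", "layer2", "layer3"):
        weights, biases = params[name]
        total += sum(len(row) for row in weights) + len(biases)
    return total
```

Under these assumptions the head has 2 × (1,280 × 128 + 128) + 2 + (128 + 1) = 328,067 trainable parameters, which agrees with the count reported in the caption of Fig. 1.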

Fig. 1.

The architecture of Rep2Mut-V2 to predict mutational effect from protein sequences. The model consists of 328,067 trainable parameters. “ESM” is used to generate representation vectors only; therefore, we denote it as “ESM-f” to distinguish from ESM prediction.

2.3. Training and testing Rep2Mut-V2

Three different strategies are used to evaluate Rep2Mut-V2: The first strategy is ten-fold cross-validation. With this strategy, Rep2Mut-V2 is assessed in two steps: a leave-one dataset-out pretraining step, and a cross-validation fine-tuning step. Initially, 36 of 37 non-GigaAssay datasets are used to pretrain the deep learning framework to capture shared information across proteins. During this step, layers 1 and 2 are shared across the datasets, and each dataset has its own specific layer 3. The framework was pretrained using 10 epochs with a batch size of 256 and a learning rate of 1e-5. After that, the remaining dataset is randomly split into 10 groups, and each group contains ∼10% variants. Each time, 90% of the variants of the dataset are used for fine-tuning, and the other 10% are for testing. The fine-tuning process is conducted with a batch size of 8, and a learning rate of 1e-4 for layer 3, and 5e-6 for layers 1 and 2. Both pretraining and fine-tuning processes use the Adam optimizer [43] and MSE loss function in the back-propagation process. MSE is defined in Eq. (1) where n is the number of variants, Yi are the observed activities and Ŷ are the predicted activities.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right)^2 \tag{1}$$

To compare Rep2Mut-V2 with other methods and to avoid the influence of random split of datasets, the fine-tuning process was repeated 50 times, and the final evaluation was based on the averaged performance.
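The MSE loss and one round of the ten-fold split can be sketched as follows. This is an illustrative sketch only: the function names `mse` and `ten_fold_splits` and the use of a seed to vary the random split between repetitions are assumptions, and the actual fine-tuning step is left out.

```python
import random


def mse(observed, predicted):
    """Mean squared error between observed and predicted activities."""
    n = len(observed)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(observed, predicted)) / n


def ten_fold_splits(n_variants, seed, n_folds=10):
    """Yield (train, test) index lists; each fold holds roughly 10% of the variants."""
    indices = list(range(n_variants))
    random.Random(seed).shuffle(indices)
    folds = [indices[k::n_folds] for k in range(n_folds)]
    for k in range(n_folds):
        test = folds[k]
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train, test
```

Repeating the procedure with different seeds (for example, `seed` in `range(50)`) gives independent random splits whose test performance can then be averaged.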

The second strategy is few-shot learning. The few-shot learning model is trained on a small fraction of variants, but can be used to accurately predict the functional effects of a wide array of variants. Our few-shot learning process is evaluated on six datasets with fitness measurement (in Table 1), as other measurements are used on relatively fewer available datasets. During few-shot learning, we use 5 out of 6 fitness datasets for pretraining, as we did previously. Then, 30% of the variants of the remaining dataset are used for the fine-tuning, and the remaining 70% are used for testing. The testing is repeated five times on each of 6 fitness datasets.

The third strategy is zero-shot learning, where a new dataset that is not used in the training process is used for testing. It allows the use of our model without further training. To evaluate zero-shot performance, we train the model using 5 out of 6 fitness datasets, and test our model on the 6th dataset. This process is repeated six times, once for each fitness dataset.
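The few-shot and zero-shot protocols share the same leave-one-dataset-out structure and differ only in how much of the held-out dataset is used for fine-tuning. A minimal sketch of the bookkeeping, using the 30%/70% proportions stated above, is given below; the function names and the seeded shuffle are assumptions, and model training itself is not shown.

```python
import random

# The six fitness datasets listed in the caption of Table 1.
FITNESS_DATASETS = ["BF520", "BG505", "P84126", "POLG_HCVJF", "TIM_SULSO", "TIM_THEMA"]


def leave_one_dataset_out(datasets):
    """Yield (pretraining datasets, held-out dataset) for every dataset in turn."""
    for held_out in datasets:
        yield [d for d in datasets if d != held_out], held_out


def few_shot_split(n_variants, fine_tune_fraction=0.3, seed=0):
    """Split variant indices of the held-out dataset into fine-tuning and test sets.

    A fraction of 0.0 corresponds to the zero-shot setting: nothing is used for
    fine-tuning and every variant is used for testing.
    """
    indices = list(range(n_variants))
    random.Random(seed).shuffle(indices)
    cut = round(fine_tune_fraction * n_variants)
    return indices[:cut], indices[cut:]
```

For example, `few_shot_split(1519)` on a dataset with 1,519 variants reserves 456 variants for fine-tuning and 1,063 for testing.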

2.4. Estimate mutational effects of protein variants with state-of-the-art methods

We evaluated the performance of Rep2Mut-V2 against six published methods, which are described below.

ESM: The first published method is ESM [38], [39], a pretrained model to estimate protein’s activity and functions. The ESM approach estimates the functional effects of protein variants through the following process: Given a protein sequence, ESM produces a representation vector of each position; Then, an additional layer is added to calculate a probability vector of all amino acid types for each position in the sequence [39]; after that, given a position of interest, the amino acid in the wildtype protein serves as a reference state and is compared to the mutated amino acid type. The variant effect is then calculated using the logarithmic ratio of the probability between the mutated amino acid and the WT amino acid [39] as shown below:

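$$\text{score} = \sum_{t \in T}\left[\log p\left(x_t = x_t^{mt} \mid x_{\setminus T}\right) - \log p\left(x_t = x_t^{wt} \mid x_{\setminus T}\right)\right] \tag{2}$$

where $p(x_t \mid x_{\setminus T})$ is a probability vector for a position of interest $t$, $T$ is the set of mutated positions for a variant, $x_{\setminus T}$ is the masked input sequence, $x_t^{mt}$ and $x_t^{wt}$ represent the mutant and wildtype amino acids, $p(x_t = x_t^{mt} \mid x_{\setminus T})$ is the probability assigned to the mutated amino acid $x_t^{mt}$, and $p(x_t = x_t^{wt} \mid x_{\setminus T})$ is the probability assigned to the wildtype.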
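The log-ratio scoring can be illustrated with a short sketch. It assumes the per-position amino acid probabilities have already been obtained from the language model with the mutated positions masked; the dictionary layout, the function name `log_odds_score`, and the numbers in the usage note are hypothetical.

```python
import math


def log_odds_score(position_probs, wildtype, mutant):
    """Sum of log p(mutant) - log p(wildtype) over the mutated positions.

    position_probs: {position: {amino_acid: probability}} from the masked model
    wildtype, mutant: {position: amino_acid} for the mutated positions only
    """
    return sum(
        math.log(position_probs[t][mutant[t]]) - math.log(position_probs[t][wildtype[t]])
        for t in mutant
    )
```

With hypothetical probabilities of 0.6 for the wildtype residue and 0.15 for the mutant residue at a single position, the score is log(0.15 / 0.6) = log(0.25), a negative value indicating that the model considers the substitution less likely than the wildtype.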

ESM released two versions (ESM-v1 and ESM-v2), each with several pretrained models. For ESM-v1, we employed two models in this evaluation: esm1v_t33_650M_UR90S_1 [39] (denoted as ESM-M2) for protein sequences of 1,024 amino acids or fewer, and esm1_t34_670M_UR50S [38] (denoted as ESM-M1) for all datasets because it can handle protein sequences longer than 1,024 amino acids. ESM-2 [44] (denoted as ESM-v2) uses more parameters: 36 layers with up to 15 billion parameters vs 33 layers used in ESM-M1 and ESM-M2. The ESM-v2 model used is esm2_t36_3B_UR50D.

DeepSequence: DeepSequence [35] is a deep latent-variable model. It uses the concept of variational autoencoders (VAE) [45] to extract latent factors from a protein (or RNA) sequence, and can capture higher-order correlations in biological sequence families. Although DeepSequence is a generative model, predicting a variant’s effect on each protein sequence requires additional training. Given a sequence, we used a multiple sequence alignment (MSA) tool to generate MSA sequences. As recommended by DeepSequence, we used EVcouplings from the website v2.evcouplings.org with a bit score threshold of 0.5 bits/residue for the MSA. These MSA sequences were then used to retrain DeepSequence for predicting mutational effects.

SIFT: Sorting Intolerant From Tolerant (SIFT) [30] is a long-established tool to predict a variant’s effect on protein function. It uses substitution tolerance of a protein position to estimate the variant’s effect. Given a sequence, SIFT usually collects a set of related sequences and aligns them against the target protein. Then, it calculates the degree of conservation of amino acids and uses this to estimate a score that specifies whether a variant is tolerated or deleterious.

CPT: Cross-protein transfer (CPT) [46] uses various features to predict a variant’s effect. These features include scores from EVE and ESM-1v, MSAs, structural features from AlphaFold2, as well as amino acid descriptors such as charge, polarity, hydrophobicity, size, local flexibility, and so on. Based on these features, CPT uses a linear regression algorithm to train a model on five human proteins (CALM1, MTHR, SUMO1, UBC9, and TPK1). The model is mainly evaluated on human proteins for clinical variant interpretation. The variant’s predictions on human proteins were downloaded and used for performance evaluation.

VariPred: VariPred [47] is another ESM-based approach to predict pathogenicity of amino acid variants. Its manuscript was released on bioRxiv after our initial submission. It uses ESM to generate vector representations for predicting a variant’s pathogenicity. Its prediction outcome is binary: 1 denotes pathogenic and 0 means not pathogenic. We also extracted VariPred’s predictions for human proteins from our 38 datasets and evaluated its performance.

EVE: evolutionary model of variant effect (EVE) [48], like DeepSequence, utilizes evolutionary information to predict the clinical significance of human variants. It uses multiple sequence alignment (MSA) as input to train a Bayesian Variational autoencoder (VAE), and estimates an evolutionary index to distinguish variant sequences and wild-type sequences.

2.5. Evaluation measurements

We use the Spearman's correlation coefficient (SRCC) to measure the performance of each tested method across the 38 datasets. For the implementation, we used the Python package scipy to calculate the SRCC between the predicted and experimental estimates. Specifically, we let X and Y be experimental and predicted estimates for a list of variants. SRCC is calculated using Eq. (3).

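$$r_s = \frac{\operatorname{cov}\left(R(X), R(Y)\right)}{\sigma_{R(X)}\,\sigma_{R(Y)}} \tag{3}$$

where $R(X)$ is the ranking of items in $X$, $\operatorname{cov}(R(X), R(Y))$ is the covariance of $R(X)$ and $R(Y)$, $\sigma_{R(X)}$ is the standard deviation of $R(X)$, and $\sigma_{R(Y)}$ is the standard deviation of $R(Y)$.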
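For readers who want to see the computation spelled out, the following standard-library sketch computes the SRCC as the Pearson correlation of the ranks, assigning tied values the average of their ranks. It is an illustration rather than the code used in this study, which relied on scipy; the function names are hypothetical, and the sketch does not handle constant inputs (zero rank variance).

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based ranks start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(x, y):
    """Covariance of the ranks divided by the product of their standard deviations."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)
```

The normalization by n cancels between the covariance and the two standard deviations, so the sums are left unnormalized. Because only ranks enter the calculation, the SRCC is unchanged by any monotonic rescaling of the predicted or experimental values.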

3. Results

3.1. Evaluation of Rep2Mut-V2 under zero-shot and few-shot strategies

Rep2Mut-V2 was first evaluated under three strategies: zero-shot transfer learning, few-shot learning and leave-one position-out cross-validation. The evaluation was conducted on the six fitness datasets (as detailed in Table 1), because other measurements were not available for more datasets and different measurements may not be comparable.

The zero-shot learning model was tested on a dataset after being trained on other fitness datasets. Through leave-one dataset-out cross-validation, the results in Table 2 reveal that Rep2Mut-V2 achieved better performance on three of the six datasets when compared with ESM, and on four of the six datasets when compared with DeepSequence.

Table 2.

The performance on the six fitness datasets. The values are Spearman’s rank correlation coefficients.

| Dataset | One position out (no transfer) | Zero-shot transfer | Few-shot transfer | DeepSequence | ESM-M1 | ESM-M2 |
|---|---|---|---|---|---|---|
| BF520 | 0.632 | 0.735 | 0.789 | 0.003 | 0.37 | 0.469 |
| BG505 | 0.627 | 0.738 | 0.776 | 0.179 | 0.37 | 0.481 |
| P84126 | 0.564 | 0.45 | 0.71 | 0.588 | 0.569 | 0.536 |
| POLG_HCVJF | 0.61 | 0.440 | 0.651 | 0.372 | 0.069 | |
| TIM_SULSO | 0.557 | 0.495 | 0.622 | 0.551 | 0.608 | 0.595 |
| TIM_THEMA | 0.47 | 0.467 | 0.608 | 0.446 | 0.468 | 0.48 |

Few-shot learning usually fine-tunes a model on a small fraction of variants of a dataset and then tests the model on other variants of the dataset, after being pretrained on other datasets. In our evaluation, we pretrained our few-shot learning model on five of six datasets, and fine-tuned the model using 10% of the variants of the remaining testing dataset. The model was assessed on 90% of the variants of the testing dataset. The results are presented in Table 2 which shows that Rep2Mut-V2 outperforms DeepSequence and ESM on all six datasets.

We further assessed Rep2Mut-V2 using leave-one position-out cross-validation, because mutated positions might be correlated with fitness measurements. In our cross-validation, training and testing data were split based on mutated positions, rather than randomly. As presented in Table 2, Rep2Mut-V2 still generates better results than state-of-the-art models on most datasets. Rep2Mut-V2 outperformed DeepSequence, ESM-1 and ESM-2 by average SRCC improvements of 0.269, 0.265 and 0.112 respectively.
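A position-based split can be sketched as follows. The sketch assumes each variant is written as wildtype residue, 1-based position, mutant residue (for example `K41A`); this notation, the example variants, and the function name are hypothetical and serve only to show that all variants at a held-out position leave the training set together.

```python
from collections import defaultdict


def leave_one_position_out(variants):
    """Yield (position, train indices, test indices), holding out one position at a time."""
    by_position = defaultdict(list)
    for index, variant in enumerate(variants):
        position = int(variant[1:-1])  # strip the wildtype and mutant residue letters
        by_position[position].append(index)
    for position in sorted(by_position):
        test = by_position[position]
        held_out = set(test)
        train = [i for i in range(len(variants)) if i not in held_out]
        yield position, train, test
```

Compared with a random split, this prevents the model from seeing any substitution at the tested position during training, so the evaluation cannot benefit from position-specific effects.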

3.2. Evaluation of Rep2Mut-V2 with ten-fold cross-validation

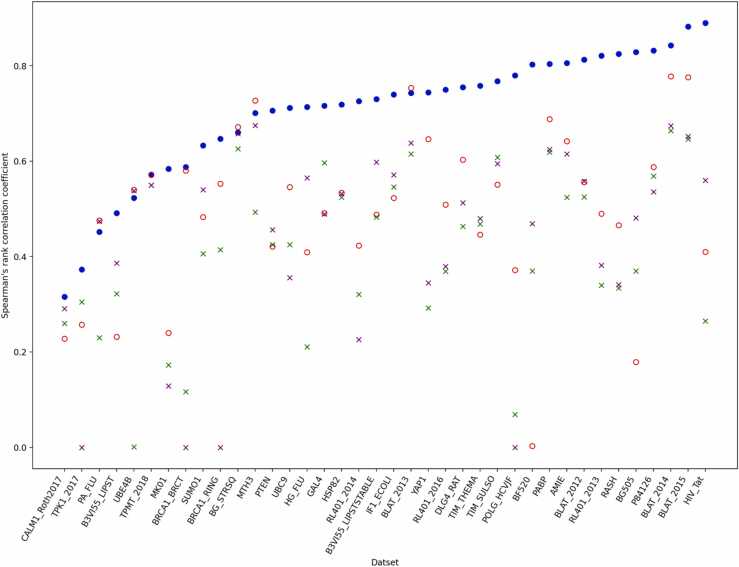

The performance of Rep2Mut-V2 on the 38 datasets under ten-fold cross-validation is presented in Table 3 and Fig. 2, together with the predictions made by SIFT, ESM and DeepSequence on the 38 datasets. The results generated by CPT and VariPred on human proteins are also provided in Table 3, considering that the developers of CPT and VariPred mainly assessed the predictions of variants’ effect on human proteins.

Table 3.

The performance (Spearman’s rank correlation) achieved by Rep2Mut-V2, DeepSequence, two ESM predictions (i.e., ESM-M1: esm1_t34_670M_UR50S, and ESM-M2: esm1v_t33_650M_UR90S_1), SIFT, CPT and VariPred. Na: Not available, because ESM-M2 cannot process a protein sequence longer than 1024 amino acids. DeepSequence's results are mainly borrowed from [35], except for the last dataset. The results of CPT and EVE were downloaded from https://huggingface.co/spaces/songlab/CPT and https://evemodel.org/ respectively.

| Dataset names | Rep2Mut-V2 | DeepSequence | ESM-M1 | ESM-M2 | SIFT | CPT | VariPred | EVE | ESM-v2 |
|---|---|---|---|---|---|---|---|---|---|
| AMIE | 0.806 | 0.642 | 0.524 | 0.615 | 0.317 | | | | 0.685 |
| B3VI55_LIPSTSTABLE | 0.73 | 0.488 | 0.483 | 0.598 | 0.257 | | | | 0.632 |
| B3VI55_LIPST | 0.491 | 0.232 | 0.322 | 0.386 | 0.139 | | | | 0.39 |
| BF520 | 0.803 | 0.003 | 0.37 | 0.469 | 0.35 | | | | 0.131 |
| BG505 | 0.829 | 0.179 | 0.37 | 0.481 | 0.355 | | | | 0.093 |
| BG_STRSQ | 0.66 | 0.672 | 0.626 | 0.658 | 0.506 | | | | 0.649 |
| BLAT_2014 | 0.843 | 0.778 | 0.664 | 0.674 | 0.563 | | | | 0.611 |
| BLAT_2012 | 0.813 | 0.556 | 0.525 | 0.558 | 0.458 | | | | 0.53 |
| BLAT_2015 | 0.882 | 0.776 | 0.646 | 0.652 | 0.488 | | | | 0.589 |
| BLAT_2013 | 0.743 | 0.754 | 0.615 | 0.638 | 0.494 | | | | 0.587 |
| BRCA1_BRCT | 0.588 | 0.580 | 0.117 | | 0.354 | 0.54 | 0.429 | 0.586 | 0.502 |
| BRCA1_RING | 0.647 | 0.553 | 0.414 | | 0.399 | 0.55 | 0.466 | 0.567 | 0.505 |
| CALM1_Roth2017 | 0.316 | 0.228 | 0.26 | 0.291 | 0.199 | 0.307 | 0.077 | 0.233 | 0.276 |
| DLG4_RAT | 0.755 | 0.603 | 0.463 | 0.513 | 0.313 | | | | 0.46 |
| GAL4 | 0.716 | 0.491 | 0.597 | 0.489 | 0.067 | | | | 0.696 |
| HG_FLU | 0.714 | 0.409 | 0.211 | 0.565 | 0.357 | | | | 0.558 |
| HSP82 | 0.719 | 0.534 | 0.524 | 0.532 | 0.245 | | | | 0.482 |
| IF1_ECOLI | 0.74 | 0.523 | 0.546 | 0.571 | 0.3 | | | | 0.576 |
| MK01 | 0.584 | 0.240 | 0.173 | 0.129 | 0.051 | 0.184 | 0.075 | | 0.189 |
| MTH3 | 0.701 | 0.727 | 0.493 | 0.675 | 0.247 | | | | 0.608 |
| P84126 | 0.832 | 0.588 | 0.569 | 0.536 | 0.262 | | | | 0.595 |
| PABP | 0.804 | 0.688 | 0.619 | 0.625 | 0.278 | | | | 0.628 |
| PA_FLU | 0.452 | 0.476 | 0.23 | 0.474 | 0.439 | | | | 0.19 |
| POLG_HCVJF | 0.78 | 0.372 | 0.069 | | 0.572 | | | | 0.134 |
| PTEN | 0.706 | 0.421 | 0.425 | 0.456 | 0.251 | 0.54 | | | 0.317 |
| RASH | 0.825 | 0.466 | 0.334 | 0.341 | 0.21 | 0.456 | 0.182 | 0.477 | 0.439 |
| RL401_2013 | 0.821 | 0.490 | 0.34 | 0.382 | 0.38 | | | | 0.55 |
| RL401_2014 | 0.726 | 0.423 | 0.321 | 0.226 | 0.431 | | | | 0.432 |
| RL401_2016 | 0.75 | 0.509 | 0.369 | 0.379 | 0.394 | | | | 0.57 |
| SUMO1 | 0.633 | 0.483 | 0.406 | 0.54 | 0.42 | 0.641 | 0.318 | | 0.468 |
| TIM_SULSO | 0.768 | 0.551 | 0.608 | 0.595 | 0.329 | | | | 0.632 |
| TIM_THEMA | 0.758 | 0.446 | 0.468 | 0.48 | 0.236 | | | | 0.47 |
| TPK1_2017 | 0.373 | 0.257 | 0.305 | | 0.255 | 0.387 | 0.224 | 0.231 | 0.403 |
| TPMT_2018 | 0.572 | 0.571 | 0.55 | 0.55 | 0.257 | 0.593 | | 0.357 | 0.503 |
| UBC9 | 0.712 | 0.546 | 0.425 | 0.356 | 0.432 | 0.585 | 0.316 | | 0.556 |
| UBE4B | 0.523 | 0.540 | 0.001 | 0.538 | 0.334 | | | | 0.347 |
| YAP1 | 0.744 | 0.646 | 0.292 | 0.345 | 0.147 | 0.55 | 0.463 | 0.616 | 0.486 |
| HIV_Tat | 0.89 | 0.41 | 0.265 | 0.56 | 0.265 | | | | 0.053 |

Fig. 2.

The performance (Spearman’s rank correlation) of Rep2Mut-V2 on different datasets compared to two state-of-the-art methods. Blue filled circles: Rep2Mut-V2, red empty circles: DeepSequence, green crosses: ESM-M1, and purple crosses: ESM-M2.

Fig. 2 illustrates the significant improvement of our Rep2Mut-V2 approach over DeepSequence and ESM. Rep2Mut-V2 outperformed DeepSequence on 33 datasets, while DeepSequence showed better performance on five datasets. The SRCC improvement by Rep2Mut-V2 ranges from 0.001 (on TPMT_2018) to 0.8 (on BF520), averaging 0.242. In contrast, the SRCC improvement by DeepSequence ranges from 0.011 (on BLAT_2013) to 0.026 (on MTH3), averaging 0.018. Notably, our improvement is substantially higher than that achieved by DeepSequence. For instance, on the BG505 dataset, Rep2Mut-V2 yielded an SRCC of 0.829, while DeepSequence’s SRCC is 0.179, as shown in Fig. 3(a1, a2). On this dataset, DeepSequence’s prediction is almost random compared to the experimental estimation, and Rep2Mut-V2’s prediction closely aligns with the experimental estimation with an average error of approximately 0.171. On another dataset, BLAT_2015, the SRCCs of Rep2Mut-V2 and DeepSequence were 0.882 and 0.776, respectively (Fig. 3(b1, b2)). Rep2Mut-V2 demonstrates a 0.106 improvement over DeepSequence on this dataset.
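The per-dataset comparison behind these summary figures is simple arithmetic on Table 3. As a worked check, the sketch below recomputes the gap on the five datasets where DeepSequence scores higher, using the (Rep2Mut-V2, DeepSequence) SRCC pairs copied from Table 3; the variable names are illustrative.

```python
# (Rep2Mut-V2, DeepSequence) SRCC pairs from Table 3 for the five datasets
# on which DeepSequence has the higher score.
pairs = {
    "BG_STRSQ": (0.660, 0.672),
    "BLAT_2013": (0.743, 0.754),
    "MTH3": (0.701, 0.727),
    "PA_FLU": (0.452, 0.476),
    "UBE4B": (0.523, 0.540),
}

# Gap in favor of DeepSequence, rounded to the precision of the table.
gaps = {name: round(ds - rep, 3) for name, (rep, ds) in pairs.items()}
smallest = min(gaps, key=gaps.get)
largest = max(gaps, key=gaps.get)
mean_gap = round(sum(gaps.values()) / len(gaps), 3)
```

The gaps are 0.012, 0.011, 0.026, 0.024 and 0.017, so the smallest is on BLAT_2013, the largest is on MTH3, and the mean is 0.018, in line with the values quoted above.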

Fig. 3.

Comparison of the estimation of mutational effects by Rep2Mut-V2 and two state-of-the-art methods (DeepSequence and ESM). a: BG505 dataset, b: BLAT_2015. 1: Rep2Mut-V2, 2: DeepSequence, 3: ESM.

When compared against ESM, Rep2Mut-V2 notably outperformed ESM-M1 on all 38 datasets: the improvement in SRCC ranges from 0.034 (on BG_STRSQ) to 0.711 (on POLG_HCVJF), with an average SRCC improvement of 0.29. Furthermore, Rep2Mut-V2 demonstrated better performance than ESM-M2 on 36 datasets, with a median SRCC improvement of 0.209. The SRCC improvement ranged from 0.002 (on BG_STRSQ) to 0.50 (on RL401_2014). The datasets of PA_FLU and UBE4B were exceptions where ESM-M2 outperformed Rep2Mut-V2 by 0.022 and 0.015, respectively. Overall, however, Rep2Mut-V2’s predictions outperform those of ESM. For example, on the BG505 dataset in Fig. 3 (a1, a3), Rep2Mut-V2 achieved an SRCC of 0.829, while ESM-M2 scored 0.481; Rep2Mut-V2’s SRCC is 0.348 higher than that of ESM-M2. Similarly, on the BLAT_2015 dataset (Fig. 3 (b1, b3)), ESM-M2 (SRCC: 0.652) performed worse than Rep2Mut-V2 (SRCC: 0.882). Recently, ESM released its second version (ESM-v2), and we tested the performance of ESM-v2 in predicting functional effects, as shown in Table 3. ESM-v2 achieved results similar to those of ESM-M1 and ESM-M2 and performed worse than Rep2Mut-V2.

When compared to SIFT and EVE, Rep2Mut-V2 exhibited a consistent trend of performance improvement, outperforming both SIFT and EVE on all tested datasets. The SRCC improvement over SIFT ranged from 0.013 (PA_FLU) to 0.649 (GAL4), with an average improvement of 0.378. The SRCC improvement over EVE ranged from 0.002 (BRCA1_BRCT) to 0.348 (RASH), averaging 0.142.

In the evaluation against VariPred, Rep2Mut-V2 excelled, surpassing VariPred on all 11 human datasets. The SRCC improvement ranged from 0.149 (TPK1_2017) to 0.643 (RASH), averaging 0.319. Similarly, Rep2Mut-V2 improved the prediction of variants’ effects on 8 out of 11 human datasets when compared to CPT, with the SRCC improvement ranging from 0.009 (CALM1_Roth2017) to 0.4 (MK01).

In summary, the estimation of Rep2Mut-V2 is substantially superior to DeepSequence, ESM, SIFT, CPT, VariPred and EVE. The average SRCC of Rep2Mut-V2 is 0.703, while DeepSequence, ESM-M1, ESM-M2, ESM-v2, SIFT, CPT, VariPred, and EVE had average SRCC scores of 0.496, 0.412, 0.496, 0.461, 0.325, 0.484, 0.283 and 0.438 respectively. Rep2Mut-V2 significantly outperforms the state-of-the-art methods.

3.3. Performance of Rep2Mut-V2 on smaller training data

The above assessment of Rep2Mut-V2 used 90% of the dataset for training and 10% for testing. However, it is generally expensive and time consuming to generate more variant data through wet-lab experiments. Therefore, we tested Rep2Mut-V2’s performance with fewer variants for training. In detail, we used 30% of the variants from a dataset for fine-tuning Rep2Mut-V2 and the remaining 70% of the variants for testing.

We compared this Rep2Mut-V2 model to DeepSequence and ESM. As illustrated in Fig. 4, this Rep2Mut-V2 model still outperformed DeepSequence on 26 datasets and ESM on 29 datasets. Compared to Rep2Mut-V2 with 90% of the variants for training, the performance of this Rep2Mut-V2 model decreased by 0.077 points on average. This robust performance demonstrates Rep2Mut-V2’s ability to generate accurate predictions for variant analysis, especially in cases where only a limited number of variants have been characterized by wet-lab experiments. This offers a better solution for analyzing the functional effects of numerous variants while reducing the intensive financial and human resources required in wet-lab experiments.

Fig. 4.

The performance (Spearman’s rank correlation) of Rep2Mut-V2 compared to DeepSequence, ESM-M1 and ESM-M2. Rep2Mut-V2 was trained with fewer variants.

4. Discussion

Rep2Mut-V2 was evaluated on 118,933 single amino acid variants from 38 protein datasets with 27 types of measurements of functional effects. The evaluation was conducted under various cross-validation strategies, and the performance of Rep2Mut-V2 was compared against six existing methods. The results consistently highlighted the superiority of Rep2Mut-V2 in accurately predicting the mutational effects upon protein variants. Even using limited variants for training, Rep2Mut-V2 maintained superior performance over existing methods. Notably, Rep2Mut-V2 relies solely on protein sequences and does not require protein 3D structures for precise prediction. Given the availability of millions of protein sequences compared to the limited number of proteins with experimental 3D structures, Rep2Mut-V2 proves to be a highly valuable tool, especially for those proteins which have experimentally determined functional effects only for a small fraction of variants.

It is important to note that Rep2Mut-V2 uses representation vectors generated from an ESM framework as input, but its prediction performance is higher than that of both ESM and DeepSequence. This success is partially attributed to the fact that ESM prediction relies solely on representation vectors of WT sequences, whereas our method uses representation vectors of both WT sequences and mutant sequences as inputs. Our design allows the model to learn the difference between WT and mutant vectors for accurate prediction. On the other hand, DeepSequence depends on evolutionary data generated from multiple sequence alignments to infer mutational effects. Its performance is thus limited by the availability of similar sequences to refine the model.

Our method has some limitations. First, our method was designed to predict the functional effects of single amino acid variants and is not currently equipped to handle high-order variants. We are presently extending our framework to address the prediction of functional effects of double/triple variants, although there are limited datasets with high-order variants. Second, we tested transfer learning with Rep2Mut-V2. However, transfer learning generally treats the contribution of each dataset equally, while the reliability of the measured functional effects differs across those datasets. This uniform treatment may mislead transfer learning. To overcome this, a potential solution is to weight the contribution of each dataset to shared layers (in Fig. 1) based on the experimental reliability of the measurement of functional effects. Unfortunately, with only 38 experimental protein datasets, it is hard to arrive at a robust conclusion. As the functional effects of more protein variants are experimentally determined through high-throughput methods, transfer learning could effectively learn shared information across proteins and then substantially enhance the prediction of variants’ effects. We therefore expect both limitations to be mitigated as more datasets become available.
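The proposed weighting can be sketched as a reliability-weighted training loss. This is only an illustration of the idea and was not evaluated in this study: the dataset names, the reliability scores (which could, for instance, come from replicate agreement) and the function names are all hypothetical.

```python
def mse(predicted, target):
    """Mean squared error for one dataset."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


def weighted_multi_dataset_loss(batches, reliability):
    """Average per-dataset MSE, weighted by a reliability score per dataset.

    batches:     {dataset_name: (predicted_scores, measured_scores)}
    reliability: {dataset_name: non-negative weight}; hypothetical values.
    """
    total_weight = sum(reliability[name] for name in batches)
    if total_weight <= 0:
        raise ValueError("at least one dataset needs a positive weight")
    weighted_sum = sum(
        reliability[name] * mse(pred, target)
        for name, (pred, target) in batches.items()
    )
    return weighted_sum / total_weight


# Two made-up datasets; "assay_A" is assumed to be the more reliable one.
batches = {
    "assay_A": ([1.0, 2.0], [1.0, 4.0]),  # MSE = 2.0
    "assay_B": ([0.0, 0.0], [1.0, 1.0]),  # MSE = 1.0
}
uniform = weighted_multi_dataset_loss(batches, {"assay_A": 1.0, "assay_B": 1.0})
weighted = weighted_multi_dataset_loss(batches, {"assay_A": 0.9, "assay_B": 0.3})
print(uniform, weighted)
```

With uniform weights both datasets pull equally on the shared layers; with reliability weights, errors on the more trusted dataset dominate the gradient signal.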

5. Conclusion

In this study, we proposed and tested Rep2Mut-V2 across 38 protein datasets with various effect measurements. Our approach was compared with six existing methods, and the evaluation demonstrates that our approach can achieve much better performance on most of the datasets. By relying solely on protein sequences, our approach achieved accurate prediction of functional effects even with a limited number of variants for training. These observations strongly suggest that Rep2Mut-V2 has the potential to study mutational effects across a broader spectrum of proteins, thereby benefiting human disease studies.

Declaration of Competing Interest

The authors declare that there is no conflict of interest.

Acknowledgements

We thank Mr. Bryce Forrest for testing the performance on several datasets, Ms. Zahra Moradpour Sheikhkanloo for helping with data collection, and Dr. James Raymond for proofreading. This research was funded by National Institute of General Medical Sciences grant number P20GM121325. ZZ was partially supported by National Institutes of Health grants (R01LM012806, R01LM012806-07S1, U01AG079847) and the Cancer Prevention and Research Institute of Texas grant (CPRIT RP180734).

Author statement

Q.L. conceived and supervised the study; H.D. developed the algorithms; H.D. and Q.L. investigated the performance; Z.Z. was involved in data analysis; H.D. and Q.L. wrote the manuscript; and Z.Z. revised the manuscript. All authors have read and agreed to the published version of the manuscript.

References

- 1. Lappalainen T., MacArthur D.G. From variant to function in human disease genetics. Science. 2021;373:1464–1468. doi: 10.1126/science.abi8207.

- 2. Fowler D.M., Fields S. Deep mutational scanning: a new style of protein science. Nat Methods. 2014;11:801–807. doi: 10.1038/nmeth.3027.

- 3. Benjamin R., Giacoletto C.J., FitzHugh Z.T., Eames D., Buczek L., Wu X., Newsome J., Han M.V., Pearson T., Wei Z., et al. GigaAssay – an adaptable high-throughput saturation mutagenesis assay platform. Genomics. 2022;114 doi: 10.1016/j.ygeno.2022.110439.

- 4. Gasperini M., Starita L., Shendure J. The power of multiplexed functional analysis of genetic variants. Nat Protoc. 2016;11:1782–1787. doi: 10.1038/nprot.2016.135.

- 5. Starita L.M., Ahituv N., Dunham M.J., Kitzman J.O., Roth F.P., Seelig G., Shendure J., Fowler D.M. Variant interpretation: functional assays to the rescue. Am J Hum Genet. 2017;101:315–325. doi: 10.1016/j.ajhg.2017.07.014.

- 6. Doud M.B., Bloom J.D. Accurate measurement of the effects of all amino-acid mutations on influenza hemagglutinin. Viruses. 2016;8 doi: 10.3390/v8060155.

- 7. Wu N.C., Olson C.A., Du Y., Le S., Tran K., Remenyi R., Gong D., Al-Mawsawi L.Q., Qi H., Wu T.-T., et al. Functional constraint profiling of a viral protein reveals discordance of evolutionary conservation and functionality. PLOS Genet. 2015;11 doi: 10.1371/journal.pgen.1005310.

- 8. Qi H., Olson C.A., Wu N.C., Ke R., Loverdo C., Chu V., Truong S., Remenyi R., Chen Z., Du Y., et al. A quantitative high-resolution genetic profile rapidly identifies sequence determinants of hepatitis C viral fitness and drug sensitivity. PLOS Pathog. 2014;10 doi: 10.1371/journal.ppat.1004064.

- 9. Mishra P., Flynn J.M., Starr T.N., Bolon D.N.A. Systematic mutant analyses elucidate general and client-specific aspects of Hsp90 function. Cell Rep. 2016;15:588–598. doi: 10.1016/j.celrep.2016.03.046.

- 10. Jacquier H., Birgy A., Le Nagard H., Mechulam Y., Schmitt E., Glodt J., Bercot B., Petit E., Poulain J., Barnaud G., et al. Capturing the mutational landscape of the beta-lactamase TEM-1. Proc Natl Acad Sci. 2013;110:13067–13072. doi: 10.1073/pnas.1215206110.

- 11. Firnberg E., Labonte J.W., Gray J.J., Ostermeier M. A comprehensive, high-resolution map of a gene’s fitness landscape. Mol Biol Evol. 2014;31:1581–1592. doi: 10.1093/molbev/msu081.

- 12. Stiffler M.A., Hekstra D.R., Ranganathan R. Evolvability as a function of purifying selection in TEM-1 β-lactamase. Cell. 2015;160:882–892. doi: 10.1016/j.cell.2015.01.035.

- 13. Deng Z., Huang W., Bakkalbasi E., Brown N.G., Adamski C.J., Rice K., Muzny D., Gibbs R.A., Palzkill T. Deep sequencing of systematic combinatorial libraries reveals β-lactamase sequence constraints at high resolution. J Mol Biol. 2012;424:150–167. doi: 10.1016/j.jmb.2012.09.014.

- 14. Rockah-Shmuel L., Tóth-Petróczy Á., Tawfik D.S. Systematic mapping of protein mutational space by prolonged drift reveals the deleterious effects of seemingly neutral mutations. PLOS Comput Biol. 2015;11 doi: 10.1371/journal.pcbi.1004421.

- 15. Starita L.M., Young D.L., Islam M., Kitzman J.O., Gullingsrud J., Hause R.J., Fowler D.M., Parvin J.D., Shendure J., Fields S. Massively parallel functional analysis of BRCA1 RING domain variants. Genetics. 2015;200:413–422. doi: 10.1534/genetics.115.175802.

- 16. Araya C.L., Fowler D.M., Chen W., Muniez I., Kelly J.W., Fields S. A fundamental protein property, thermodynamic stability, revealed solely from large-scale measurements of protein function. Proc Natl Acad Sci. 2012;109:16858–16863. doi: 10.1073/pnas.1209751109.

- 17. Melnikov A., Rogov P., Wang L., Gnirke A., Mikkelsen T.S. Comprehensive mutational scanning of a kinase in vivo reveals substrate-dependent fitness landscapes. Nucleic Acids Res. 2014;42:e112. doi: 10.1093/nar/gku511.

- 18. Kitzman J.O., Starita L.M., Lo R.S., Fields S., Shendure J. Massively parallel single-amino-acid mutagenesis. Nat Methods. 2015;12:203–206. doi: 10.1038/nmeth.3223.

- 19. McLaughlin Jr R.N., Poelwijk F.J., Raman A., Gosal W.S., Ranganathan R. The spatial architecture of protein function and adaptation. Nature. 2012;491:138–142. doi: 10.1038/nature11500.

- 20. Melamed D., Young D.L., Gamble C.E., Miller C.R., Fields S. Deep mutational scanning of an RRM domain of the Saccharomyces cerevisiae poly(A)-binding protein. RNA. 2013;19:1537–1551. doi: 10.1261/rna.040709.113.

- 21. Roscoe B.P., Thayer K.M., Zeldovich K.B., Fushman D., Bolon D.N.A. Analyses of the effects of all ubiquitin point mutants on yeast growth rate. J Mol Biol. 2013;425:1363–1377. doi: 10.1016/j.jmb.2013.01.032.

- 22. Roscoe B.P., Bolon D.N.A. Systematic exploration of ubiquitin sequence, E1 activation efficiency, and experimental fitness in yeast. J Mol Biol. 2014;426:2854–2870. doi: 10.1016/j.jmb.2014.05.019.

- 23. Mavor D., Barlow K., Thompson S., Barad B.A., Bonny A.R., Cario C.L., Gaskins G., Liu Z., Deming L., Axen S.D., et al. Determination of ubiquitin fitness landscapes under different chemical stresses in a classroom setting. eLife. 2016;5 doi: 10.7554/eLife.15802.

- 24. Li C., Qian W., Maclean C.J., Zhang J. The fitness landscape of a tRNA gene. Science. 2016;352:837–840. doi: 10.1126/science.aae0568.

- 25. Romero P.A., Tran T.M., Abate A.R. Dissecting enzyme function with microfluidic-based deep mutational scanning. Proc Natl Acad Sci. 2015;112:7159–7164. doi: 10.1073/pnas.1422285112.

- 26. Julien P., Miñana B., Baeza-Centurion P., Valcárcel J., Lehner B. The complete local genotype–phenotype landscape for the alternative splicing of a human exon. Nat Commun. 2016;7 doi: 10.1038/ncomms11558.

- 27. Aakre C.D., Herrou J., Phung T.N., Perchuk B.S., Crosson S., Laub M.T. Evolving new protein-protein interaction specificity through promiscuous intermediates. Cell. 2015;163:594–606. doi: 10.1016/j.cell.2015.09.055.

- 28. Starita L.M., Pruneda J.N., Lo R.S., Fowler D.M., Kim H.J., Hiatt J.B., Shendure J., Brzovic P.S., Fields S., Klevit R.E. Activity-enhancing mutations in an E3 ubiquitin ligase identified by high-throughput mutagenesis. Proc Natl Acad Sci. 2013;110:E1263–E1272. doi: 10.1073/pnas.1303309110.

- 29. Adzhubei I.A., Schmidt S., Peshkin L., Ramensky V.E., Gerasimova A., Bork P., Kondrashov A.S., Sunyaev S.R. A method and server for predicting damaging missense mutations. Nat Methods. 2010;7:248–249. doi: 10.1038/nmeth0410-248.

- 30. Ng P.C., Henikoff S. SIFT: Predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003;31:3812–3814. doi: 10.1093/nar/gkg509.

- 31. Hecht M., Bromberg Y., Rost B. Better prediction of functional effects for sequence variants. BMC Genom. 2015;16 doi: 10.1186/1471-2164-16-S8-S1.

- 32. Mann J.K., Barton J.P., Ferguson A.L., Omarjee S., Walker B.D., Chakraborty A., Ndung’u T. The fitness landscape of HIV-1 Gag: advanced modeling approaches and validation of model predictions by in vitro testing. PLOS Comput Biol. 2014;10 doi: 10.1371/journal.pcbi.1003776.

- 33. Kircher M., Witten D.M., Jain P., O’Roak B.J., Cooper G.M., Shendure J. A general framework for estimating the relative pathogenicity of human genetic variants. Nat Genet. 2014;46:310–315. doi: 10.1038/ng.2892.

- 34. Hopf T.A., Ingraham J.B., Poelwijk F.J., Schärfe C.P.I., Springer M., Sander C., Marks D.S. Mutation effects predicted from sequence co-variation. Nat Biotechnol. 2017;35:128–135. doi: 10.1038/nbt.3769.

- 35. Riesselman A.J., Ingraham J.B., Marks D.S. Deep generative models of genetic variation capture the effects of mutations. Nat Methods. 2018;15:816–822. doi: 10.1038/s41592-018-0138-4.

- 36. Figliuzzi M., Jacquier H., Schug A., Tenaillon O., Weigt M. Coevolutionary landscape inference and the context-dependence of mutations in beta-lactamase TEM-1. Mol Biol Evol. 2016;33:268–280. doi: 10.1093/molbev/msv211.

- 37. Lapedes A., Giraud B., Jarzynski C. Using sequence alignments to predict protein structure and stability with high accuracy. arXiv Prepr arXiv. 2012;1207:2484.

- 38. Rives A., Meier J., Sercu T., Goyal S., Lin Z., Liu J., Guo D., Ott M., Zitnick C.L., Ma J., et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc Natl Acad Sci. 2021;118 doi: 10.1073/pnas.2016239118.

- 39. Meier, J.; Rao, R.; Verkuil, R.; Liu, J.; Sercu, T.; Rives, A. Language Models Enable Zero-Shot Prediction of the Effects of Mutations on Protein Function; Synthetic Biology, 2021.

- 40. Derbel H., Giacoletto C.J., Benjamin R., Chen G., Schiller M.R., Liu Q. Accurate prediction of transcriptional activity of single missense variants in HIV tat with deep learning. IJMS. 2023;24:6138. doi: 10.3390/ijms24076138.

- 41. Suzek B.E., Huang H., McGarvey P., Mazumder R., Wu C.H. UniRef: comprehensive and non-redundant uniprot reference clusters. Bioinformatics. 2007;23:1282–1288. doi: 10.1093/bioinformatics/btm098.

- 42. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV); IEEE: Santiago, Chile, December 2015; pp. 1026–1034.

- 43. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization 2017.

- 44. Lin, Z.; Akin, H.; Rao, R.; Hie, B.; Zhu, Z.; Lu, W.; Smetanin, N.; Verkuil, R.; Kabeli, O.; Shmueli, Y.; et al. Evolutionary-Scale Prediction of Atomic-Level Protein Structure with a Language Model. 2023.

- 45. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes 2013.

- 46. Jagota M., Ye C., Albors C., Rastogi R., Koehl A., Ioannidis N., Song Y.S. Cross-protein transfer learning substantially improves disease variant prediction. Genome Biol. 2023;24 doi: 10.1186/s13059-023-03024-6.

- 47. Lin, W.; Wells, J.; Wang, Z.; Orengo, C.; Martin, A.C.R. VariPred: Enhancing Pathogenicity Prediction of Missense Variants Using Protein Language Models; Bioinformatics, 2023.

- 48. Frazer J., Notin P., Dias M., Gomez A., Min J.K., Brock K., Gal Y., Marks D.S. Disease variant prediction with deep generative models of evolutionary data. Nature. 2021;599:91–95. doi: 10.1038/s41586-021-04043-8.