Abstract

This article was migrated. The article was marked as recommended.

Effective training programs in clinical and translational research (CTR) are critical to the development of the research workforce. The evolution of global CTR competency frameworks motivates many CTR institutions to align their training offerings with these professional standards. Guidelines for integrating competency-based frameworks and assessments into rigorous program evaluations are needed in order to promote the quality and impact of these training programs. These guidelines provide practical suggestions for how to ensure that subjective and objective assessments of CTR knowledge and skill can be effectively integrated in the evaluations used to improve these essential training programs. The approach presented here necessarily involves the systematic and deliberate incorporation of these particular types of assessments into comprehensive evaluation plans. While these guidelines are broadly applicable to the work of those charged with developing, administering and evaluating CTR training programs, they have been specifically designed for use by program directors.

Keywords: clinical and translational research, workforce development, competency-based assessment, competency framework, program evaluation, program improvement, logic model

Introduction

Clinical and translational research in the United States is supported by numerous federal, industrial and academic organizations, and many other stakeholder groups (Callard, Rose and Wykes, 2012; Martinez et al., 2012; Trochim, Rubio and Thomas, 2013; Joosten et al., 2015). The NIH National Center for Advancing Translational Sciences (NCATS) is distinctive among them, as it funds a broad network of research support centers, Clinical and Translational Science Awards (CTSAs), embedded in over 50 research institutions located across the country (NCATS, 2018). A key strategic goal of these CTSAs is the development of the clinical and translational workforce through dedicated research training programs (NCATS, 2017). Clinical and translational research (CTR) training programs provide highly valued instruction on relevant research skills and demand rigorous evaluations that demonstrate their impact on the development of the research workforce (Bonham et al., 2012; Calvin-Naylor et al., 2017).

CTSA programs offer a variety of training options, typically in the form of short-term programs, short courses or one-time workshops. These training programs are often tailored to the needs of professional degree students on research tracks, postdoctoral fellows, residents, or early career faculty. They often provide education and training in a variety of core competencies, including study design, communication, teamwork, and research ethics, to name only a few areas of study.

Rigorous evaluations of CTR programs periodically require measuring how trainees demonstrate and apply research skills and acquired competencies (Misso et al., 2016). Medical education programs are often subject to quality control, quality management, and quality assurance by regulators. However, no analogous formal mechanism exists for evaluating CTR programs. Instead, the responsibility for evaluating CTR education and training programs often resides with small groups of investigators, research managers and administrators with little experience measuring research competencies per se. This work provides concrete steps they can take to integrate competency assessments into evaluation plans implemented by CTR training programs (Centers for Disease Control and Prevention, 1999; Trochim, Rubio and Thomas, 2013).

In this paper, we provide twelve guidelines for evaluating research education and training programs to better understand learner attainment of skills and knowledge in the clinical and translational sciences. The guidelines discussed in this paper have been adapted to the role of CTR training program directors. To ensure the relevance of these guidelines to this role, the authors carefully considered the typical demographics, job duties, motivations, knowledge, skills and experiences of an administrator charged with guiding the evaluation and quality improvement of these education and training programs.

Guidelines for using competency assessments in program evaluation

Review team roles and expertise related to trainees’ professional development

The responsibility for evaluating CTR training programs is often shared among personnel in a number of positions and roles. The collaborative review of these roles can be facilitated by creating personas, which are defined as archetypes with distinctive needs, goals, technical skills and professional characteristics (Adlin and Pruitt, 2010). Creating a persona that defines who will be conducting evaluations can help program teams and stakeholders discuss and negotiate changes to the ways this work is distributed and coordinated. Table 1 provides examples of personas of clinical research professionals who are likely to share responsibilities for administering a CTR training program. This process can be carried out by CTR program leads and administrators to help focus collaborative efforts on measuring the research knowledge and skills of researchers.

Table 1. Professional roles involved with evaluating Clinical and Translational Research (CTR) training programs.

| Persona | CTR Investigator | CTR Training Program Director | CTR Training Program Administrator |
|---|---|---|---|
| Associated professional responsibilities | Junior investigators or senior research fellows | Research department supervisor or supervisor of training programs for research team members and junior investigators | Research and regulatory support or program manager |
| Professional motivation | Wants to provide rigorous training for research teams who are required to complete research training. | Wants to use assessments of clinical research skill to revamp educational programs. | Wants to provide consistent training and professional development experiences for research teams. |
| Understanding of best practices in evaluating learning | Expertise in program evaluation, use of logic models and postsecondary teaching | Understanding of learning outcome assessment, CTR competency frameworks and postsecondary teaching | Understanding of survey administration, data management and use of observation checklists |
| Responsibility for competency assessment administration, analysis and reporting | Identifying validated competency assessments and interpreting the results with stakeholders | Developing assessment forms, communicating with CTR trainees and developing results reports for stakeholders | Communicating instructions to CTR trainees and instructors, monitoring administration of assessment forms and managing the resultant data |

Integrate competency frameworks into evaluation planning

Ideally, evaluators should be involved in the process of developing training programs to identify learning outcomes. However, early involvement may not always be possible due to contextual constraints. At a minimum, evaluators should be involved in mapping any existing CTR training curriculum to competency-based education (CBE) frameworks (Dilmore, Moore and Bjork, 2013). It may be necessary to partner with subject matter experts who understand CBE during this mapping process. There are multiple evidence-based competency frameworks applicable to CTR education and training (NCATS, 2011; Calvin-Naylor et al., 2017; Sonstein et al., 2018). Table 2 shows training opportunities that have been mapped to one domain of an established CTR competency framework (Joint Task Force, 2018).

Table 2. Sample Training Offerings for Scientific Concepts and Research Design.

| Developing Research Questions | Choosing an Appropriate Study Design | Selecting Valid Instruments | Determining an Adequate Number of Study Participants |
|---|---|---|---|
| Developing and Writing Research Questions, Aims & Hypotheses | Experimental & Observational Study Designs | Finding Tests & Measurement Instruments: Library Research Guide | Hypothesis Testing: Significance level, power, and basic sample size calculation |
| Formulating Research Questions, Hypotheses and Objectives | Introduction to Clinical and Translational Research: Study Population and Study Design | Measuring assessment validity and reliability | Introduction to Power in Significance Tests |
| The use of hypothesis testing in the social sciences | The Qualitative Research Process: Study Designs for Health Services Research | Community engaged approaches to measuring study team dynamics | Best practices in participant recruitment |

The outputs of this mapping process should be shared with programmatic stakeholders to facilitate the collection of their feedback about the breadth and depth of the existing or potential CTR training opportunities. Collecting stakeholder feedback about the content of CTR training programs is an essential first step in many guides to evaluating health research training programs, including the U.S. Centers for Disease Control and Prevention’s (CDC) guide for the evaluation of public health programs (Centers for Disease Control and Prevention, 1999).
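One way to prepare a crosswalk for stakeholder review is to store it as a simple mapping and flag competencies with few mapped offerings. The sketch below is a minimal illustration in Python. The competency names and offering titles come from Table 2, but the lists are deliberately partial, and the `crosswalk` structure, the `coverage_gaps` function and the threshold of two offerings are assumptions made for this example rather than part of any published framework.

```python
# Hypothetical crosswalk: competency -> training offerings mapped to it.
# Names are taken from Table 2; the lists are intentionally incomplete
# so that the gap check below has something to report.
crosswalk = {
    "Developing Research Questions": [
        "Developing and Writing Research Questions, Aims & Hypotheses",
        "Formulating Research Questions, Hypotheses and Objectives",
    ],
    "Choosing an Appropriate Study Design": [
        "Experimental & Observational Study Designs",
    ],
    "Selecting Valid Instruments": [
        "Measuring assessment validity and reliability",
    ],
}


def coverage_gaps(mapping, minimum=2):
    """Return competencies with fewer than `minimum` mapped offerings."""
    return {
        competency: len(offerings)
        for competency, offerings in mapping.items()
        if len(offerings) < minimum
    }


gaps = coverage_gaps(crosswalk)
for competency, count in gaps.items():
    print(f"{competency}: {count} offering(s) mapped")
```

A summary of this kind could accompany the full crosswalk when it is circulated, so that stakeholders can comment on thinly covered competencies first.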

Engage stakeholders in identifying critical knowledge and skill outcomes

As soon as work on an evaluation plan has begun, evaluators should engage program stakeholders to help identify the most important knowledge and skills taught to CTR trainees. Evaluators can partner with instructional designers and other stakeholder groups to develop relevant and measurable lists of competencies. When identifying which specific stakeholder groups to involve in this phase of the evaluation planning process, it is important to include those with divergent views about which CTR skills are in greatest need of development and assessment (Callard, Rose and Wykes, 2012; Martinez et al., 2012; Trochim, Rubio and Thomas, 2013; Joosten et al., 2015).

Diverse stakeholder feedback can be systematically collected and synthesized using standard survey methods, interviews, focus groups and Delphi panels ( Brandon, 1998; Geist, 2010). Evaluators should collect stakeholder opinions about short- and long-term outcomes, including those regarding participant learning and behaviors ( Kirkpatrick and Kirkpatrick, 2006). The collection of data on all types of programmatic outcomes, but particularly including the knowledge and skills accrued through the program, is necessary for the development of rigorous program evaluations ( Centers for Disease Control and Prevention, 1999; Trochim, Rubio and Thomas, 2013). Evaluators should take care to consider all program characteristics relevant to key outcomes, most particularly those affecting the learning environment in which learners and their instructors are expected to work.

Develop models depicting the links between program operations and outcomes

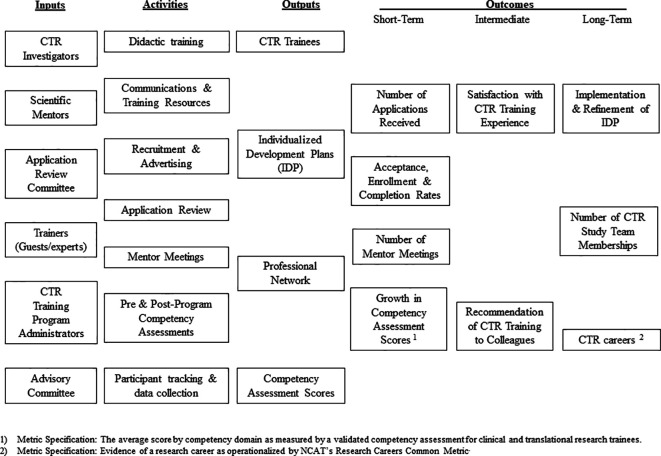

Logic models should be created in order to enrich and advance conversations with stakeholders and other administrators about the operation and impact of a CTR training program. Logic models are figures that typically depict the relationship between key programmatic A) inputs, B) activities, C) outputs and D) outcomes, often using itemized lists arranged into columns under each of these headers (McLaughlin and Jordan, 1999). Some also include references to important contextual or environmental factors affecting key programmatic goals. The choice of which elements to represent in the model should be informed by the need to visualize links between programmatic operations and skills development that would be of greatest interest to key stakeholders. Many funders ask that logic models be included in program proposals, and the production of these figures is standard practice in the evaluation of training programs in the health sciences (Centers for Disease Control and Prevention, 1999; 2018).

Whenever possible, logic models for CTR training programs should include the identification of short-, intermediate-, and long-term goals. The acquisition of critical research knowledge and skills is often represented as an output or short-term outcome of these training programs in logic models. In contrast, distant impacts, such as the production of research grants, peer-reviewed publications and career advancement, are often represented as intermediate- or long-term outcomes. To enhance the efficiency of the model-making process, use competency domains, each of which covers a set of related competencies, as outcomes in these figures rather than numerous specific competencies. Exemplars of logic models that include lists of CTR competency domains have been published and can be used to inform the development of logic models for similar programs (Rubio et al., 2010). Figure 1 shows a logic model that can be used as a basic template for enabling the planning, implementation and evaluation of a CTR training program.

Figure 1. Sample Logic Model for an Evaluation of a CTR Training Program.

Distinguish programmatic outcomes used for formative and summative evaluation

The choice of when to measure outcomes should be informed by the intent to use the results for formative or summative evaluations ( Newman et al., 1995; Yudkowsky, Park and Downing, 2019). Formative evaluation is typically conducted during the development or improvement of a program or course, whereas summative evaluation involves making judgments about the efficacy of a program or course at its conclusion. The results of formative evaluations are often used to improve programs and projects during their implementation. Outcomes chosen for the purpose of formative evaluation are often represented as short- or intermediate-term outcomes in logic models. The results of summative evaluations are used to produce valid and objective measures of programmatic impact at the end of the implementation process. Footnotes can be added to logic models to differentiate the use of certain metrics for these two distinct purposes, as shown in the template above ( Figure 1).

Measures of knowledge and skill can be used for both the formative and summative evaluation of CTR training programs. The results of relevant pre-program assessment tools, and of assessments conducted during the course of a program, enable formative evaluation when they are used to improve the experience of the currently participating trainees. For example, the results of subjective or objective skill assessment tools can be shared with respondents to inform development of individualized training plans or used to inform modifications to training curricula to address perceived or objectively-measured gaps in knowledge and skill. The results of post-program skill assessment tools enable summative evaluation when compared to relevant benchmarks, including the results of pre-program skill assessment tools or measures of skill acquisition produced by similar training programs ( Newman et al., 1995; Centers for Disease Control and Prevention, 1999).
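The comparison of post-program results against a pre-program benchmark can be sketched in a few lines of Python. This is only an illustration: the scores are invented, the `paired_gains` helper is a name introduced here, and a simple mean of paired differences stands in for whatever analysis a given evaluation plan specifies.

```python
from statistics import mean

# Hypothetical pre- and post-program scores (0-100) for five trainees,
# listed in the same trainee order. These values are invented.
pre_scores = [55, 60, 48, 70, 62]
post_scores = [68, 66, 59, 74, 71]


def paired_gains(pre, post):
    """Return each trainee's post-minus-pre change in score."""
    if len(pre) != len(post):
        raise ValueError("pre and post must list the same trainees")
    return [after - before for before, after in zip(pre, post)]


gains = paired_gains(pre_scores, post_scores)
mean_gain = mean(gains)
print(f"Individual gains: {gains}")
print(f"Mean gain: {mean_gain:.1f} points")
```

Used formatively, the individual gains could inform individualized training plans; used summatively, the mean gain could be compared with a benchmark agreed with stakeholders.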

Select validated assessment tools to measure critical knowledge or skills

The validation of knowledge or skill assessment tools requires that evidence be marshalled to advance the claim that an assessment tool actually measures what it was designed to measure (Kane, 1992). Peer-reviewed publications that demonstrate the validity of a competency-based assessment will include the results of tests suggesting that the assessment tool provides reliable and accurate measures of knowledge or understanding among the level of learners targeted by the training program. The use of validated competency-based assessment tools for program evaluation lends credibility to the work and typically requires fewer resources than developing assessments locally.

Several validated tools for assessing the CTR skills of investigators and research professionals have been published in recent years (Bakken, Sheridan and Carnes, 2003; Streetman et al., 2006; Bates et al., 2007; Ellis et al., 2007; Mullikin, Bakken and Betz, 2007; Lowe et al., 2008; Cruser et al., 2009; Cruser et al., 2010; Lipira et al., 2010; Murphy et al., 2010; Poloyac et al., 2011; Robinson et al., 2013; Ameredes et al., 2015; Awaisu et al., 2015; Robinson et al., 2015; Sonstein et al., 2016; Jeffe et al., 2017; Patel et al., 2018; Hornung et al., 2019). When choosing between validated assessment tools, it is critical to select those that are most closely aligned with the competency framework chosen for a given CTR program and that have been validated using learners with similar credentials and research experience to those participating in that program. Be sure to obtain all the necessary permissions from the creators of any validated assessment tools before using the instruments for evaluation purposes.

Subjective vs. objective assessment tools

The design and purpose of a CTR training program may require the use of subjective and objective assessment tools. Subjective assessment tools, through which participants rate their own knowledge or skills, can provide valid measures of self-confidence in one’s abilities, but have not been shown to correlate with the results of objective measures (Hodges, Regehr and Martin, 2001; Davis et al., 2006). Subjective and objective assessments of CTR knowledge and skill can be used simultaneously, but only the results of the latter type should be used to justify claims about the actual knowledge and skills currently possessed by CTR participants.

Clinical and translational research training programs that confer any level of certification that is formally recognized by professional institutions or organizations may require that objective assessment tools be used to verify the actual research capabilities of the graduates. In these cases, the specific assessment tools that should be used by CTR training programs may have already been identified by these associated professional groups. When multiple or conflicting assessment tools are required by these groups, conversations with programmatic stakeholders will be needed before any final determination about the use of competency-based assessment tools can be made.

Estimate the time and effort required for implementing an evaluation plan

Evaluation plans take many different forms, but all plans detail how evaluation data will be collected, analyzed, reported and used ( Trochim, Rubio and Thomas, 2013). The costs of implementing rigorous evaluation plans can be substantial, so it is essential that they are accurately estimated and budgeted for. Some evaluation activities, such as the administration of publicly-available skill assessment tools using free online platforms, have comparatively low costs. The costs of other evaluations, such as those involving focus groups, can be considerably higher.

The effort required for each step of the evaluation plan can be estimated in a basic table ( Table 3). When reviewing an evaluation plan, carefully consider the risks and benefits of proposed assessment tools and choose those that are feasible to administer given the available financial and human resources. Collaborate with stakeholders to ensure that key evaluation activities are aligned with other project timelines and plans that guide the allocation of financial, human, and institutional resources needed to implement a CTR training program ( Centers for Disease Control and Prevention, 1999).

Table 3. Example evaluation activities and time required for an evaluation of CTR training.

| Evaluation Activities | Hours |
|---|---|
| Evaluation Planning | |
| Develop competency crosswalk for program components | 4 |
| Draft logic model with short, intermediate & long-term outcomes | 4 |
| Draft and submit IRB application | 8 |
| Data Collection | |
| Institutional records of participant affiliations | 2 |
| Competency assessment administration | 4 |
| Focus group administration | 8 |
| Focus group transcription | 16 |
| Data Analysis | |
| Cleaning and management of all quantitative data | 4 |
| Quantitative analysis of competency assessment data | 8 |
| Qualitative coding of focus group data | 16 |
| Qualitative coding of participant research projects | 2 |
| Reporting | |
| Draft Stakeholder Reports | 40 |
| Total: | 116 hrs. (~3 weeks) |
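The arithmetic behind the total in Table 3 can be reproduced with a short Python sketch, which may be a convenient starting point for programs adapting the estimate to their own activities. The hours are copied from Table 3; the 40-hour work week used to convert hours into weeks is an assumption made here, as is the `hours` dictionary layout.

```python
# Hours per activity, copied from Table 3 and grouped by phase.
hours = {
    "Evaluation Planning": [4, 4, 8],
    "Data Collection": [2, 4, 8, 16],
    "Data Analysis": [4, 8, 16, 2],
    "Reporting": [40],
}

HOURS_PER_WEEK = 40  # assumption: one full-time evaluator

subtotals = {phase: sum(items) for phase, items in hours.items()}
total_hours = sum(subtotals.values())
weeks = total_hours / HOURS_PER_WEEK

for phase, subtotal in subtotals.items():
    print(f"{phase}: {subtotal} hrs")
print(f"Total: {total_hours} hrs (about {weeks:.1f} weeks)")
```

Under these assumptions the phases sum to 16, 30, 30 and 40 hours, which gives the 116 hours reported in the table, or roughly 2.9 full-time weeks.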

Train evaluation team members to collect assessment data in reliable ways

Once an evaluation plan has been developed, and a formal evaluation team has been assembled, it is important that team members understand the steps required for reliable data collection using competency-based assessments. For example, use of a CTR assessment of regulatory compliance may require that all persons administering the assessment tool be consistent in their use of the instrument as well as in their subsequent scoring of individuals’ performance. Even objective scoring systems include risks related to subjective interpretations (Van der Vleuten et al., 2010). Research has shown that individuals in apparent positions of power may influence or dissuade respondents from giving honest responses on tests of their knowledge or skills (Taut and Brauns, 2003; Van der Vleuten et al., 2010). Therefore, it is essential that the appropriate team members receive training in, and demonstrate their understanding of, validity, reliability, evaluation ethics, conflicts of interest, potentially hegemonic or biasing practices, and reporting procedures.
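One way an evaluation team might check whether two raters are scoring consistently is to compute an agreement statistic on a shared set of trainee performances. The sketch below uses Cohen's kappa, which is a common choice but is not prescribed by the guidelines above; the `cohens_kappa` function and the pass/fail ratings are hypothetical and written only for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical scores."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(
        (counts_a[c] / n) * (counts_b[c] / n)
        for c in set(counts_a) | set(counts_b)
    )
    if expected == 1:
        return 1.0  # both raters used a single identical category
    return (observed - expected) / (1 - expected)


# Invented ratings of the same eight trainee performances.
rater_1 = ["pass", "pass", "fail", "pass", "fail", "pass", "fail", "pass"]
rater_2 = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass"]
kappa = cohens_kappa(rater_1, rater_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

A low value on a pilot set of ratings would suggest that raters need further calibration before the assessment is administered to trainees.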

Use technology platforms that best facilitate data collection, analysis and reporting

Because no single technology platform specifically designed for CTR evaluation currently exists, evaluators must make use of existing platforms that are not tailored to CTR. To maintain consistency, accuracy and accessibility of the assessment results, CTR evaluators should use one platform to administer, analyze and report survey results whenever possible. For example, the same platforms used by clinical and translational researchers to collect research study data, such as REDCap™ (Harris et al., 2009), Qualtrics®, and SurveyMonkey®, can also be used to conduct evaluations of CTR skills. If necessary, the resultant data can also be extracted from these platforms so that further analyses can be performed.

Many statistical analysis programs familiar to clinical and translational researchers, such as Stata, SAS and R, can also be used for rigorous validity tests. These software programs can conduct exploratory and confirmatory factor analyses (Levine, 2005; Osborne and Costello, 2005), which are commonly used to identify and to validate the accuracy of the competency domains that structure many competency-based assessments. While there are many valuable validity tests (Kane, 1992), these are the ones most commonly used to validate skill assessments. Software programs for qualitative analysis, such as Dedoose® or NVivo®, can be used to conduct qualitative evaluations of CTR programs (Comeau et al., 2017).

Consult with subject matter experts to interpret assessment results

The results of competency-based assessments of CTR skill may not be readily interpretable, particularly when no established criteria or rubric is associated with the assessment tool. In fact, many validated assessment tools do not prescribe how the resultant scores should be interpreted by respondents to better understand their own training needs or by training program administrators to enable programmatic improvements. In these cases, it is important to consult with subject matter experts in clinical and translational research, psychometrics and statistical analysis while conducting analyses of the assessment results.

The need to consult with these types of subject matter experts is particularly acute when both subjective and objective assessment tools are used. Subjective tests of knowledge and skill have been shown to be poorly correlated with objective measures (Hodges, Regehr and Martin, 2001; Davis et al., 2006). There is evidence suggesting that while subjective measures of CTR knowledge and skill often increase between pre- and post-program tests, the scores obtained through objective tests do not increase at a similar rate (Ellis et al., 2007; Cruser et al., 2010). Measurement and educational experts can help ensure that assessment results are interpreted in ways that are justified by the design and administration of the assessment instrument.
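When a program collects both kinds of measure from the same trainees, a first look at how well they agree can be taken with a correlation coefficient before the results are discussed with measurement experts. The Python sketch below computes Pearson's r from scratch; the `pearson_r` function and both score lists are hypothetical, and the choice of Pearson's r is an assumption of this example rather than a recommendation drawn from the studies cited above.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with 2+ values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Invented data: self-rated skill (1-5) and objective test score (0-100)
# for the same six trainees, in the same order.
self_rated = [4, 5, 3, 4, 2, 5]
objective = [62, 58, 70, 65, 60, 55]

r = pearson_r(self_rated, objective)
print(f"Correlation between self-rated and objective scores: {r:.2f}")
```

A weak or negative coefficient would be consistent with the literature cited above and would be a reason to report the two measures separately rather than combining them.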

Collect stakeholder feedback about options for programmatic improvement

An essential step of program evaluation involves sharing of evaluation results with stakeholder groups in order to facilitate collection of feedback about programmatic improvement ( Wandersman et al., 2000). For example, in the four overlapping and iterative phases of the Plan, Do, Check, and Act (PDCA) quality improvement cycle, the third stage typically involves studying the outcomes of a given initiative in ways that enable the articulation of what was learned through the implementation process ( Juran and DeFeo, 2010; Kleppinger and Ball, 2010). The involvement of stakeholders in this step of the process is critical to the rigorous evaluation of any CTR training program ( Trochim, Rubio and Thomas, 2013).

Reports of evaluation results should be customized to speak to stakeholder subgroups whenever it is not possible or productive to share the same report with all of them. For example, stakeholders with distinctive interests in the scientific content or pedagogical approach of a CTR training program may be most interested in reports showing how the results of competency-based assessment tools are being used to help participants identify and address their personal research learning challenges (Chatterji, 2003). In contrast, stakeholders who value training programs as an institutional resource enabling the CTR enterprise may be more interested in the research careers or achievements of participants (Frechtling and Sharp, 2002). Whenever possible, thoroughly document stakeholder feedback so that it can be used to inform future discussions about programmatic improvement and impact.

Conclusion

The guidelines presented here are intended to support the work of all clinical research professionals who are charged with the administration and evaluation of CTR training programs. In particular, this work fulfills a need for guidelines that clinical research investigators and administrators can follow to integrate competency assessment tools into their evaluation plans. Doing so will better enable research centers to collaborate with programmatic stakeholders efficiently and effectively in order to measure and improve the quality and impact of CTR training using the results of competency-based assessments of research knowledge and skill.

Take Home Messages

- Effective training programs in clinical and translational research are critical to the development of the research workforce.
- Guidelines for integrating competency-based frameworks and assessments into program evaluations are needed to promote the quality and impact of research training programs.
- The stakeholders of clinical and translational research training programs should be routinely consulted throughout evaluation processes that involve competency frameworks and assessments.
- The systematic incorporation of competency-based approaches into evaluation plans facilitates the work of those developing, administering and evaluating research training programs.
- The use of validated competency assessments for programmatic evaluation is essential to the collection of reliable and relevant performance metrics.

Notes On Contributors

All of the co-authors contributed to the development of the guidelines presented in this work, informed the conclusions it advances and participated in all rounds of revisions required for submission.

Elias Samuels PhD, is the Manager of Evaluation at the Michigan Institute for Clinical and Health Research at the University of Michigan. ORCID ID: https://orcid.org/0000-0002-6725-3382

Phillip Anton Ianni PhD, is a Postdoctoral Research Fellow at the Michigan Institute for Clinical and Health Research at the University of Michigan. ORCID ID: https://orcid.org/0000-0003-1264-7322

Haejung Chung MA, is the Manager of Instructional Design and Technology at the Tufts Clinical and Translational Science Institute at Tufts University.

Brenda Eakin MS, is an Instructional Designer at the Michigan Institute for Clinical and Health Research at the University of Michigan. ORCID ID: https://orcid.org/0000-0002-7972-8621

Camille Martina PhD, is a Research Associate Professor in the departments of Public Health Sciences and of Emergency Medicine at the University of Rochester. ORCID ID: https://orcid.org/0000-0003-0523-7448

Susan Lynn Murphy ScD OTR/L, is an Associate Professor in the Department of Physical Medicine and Rehabilitation at the University of Michigan. ORCID ID: https://orcid.org/0000-0001-7924-0012

Carolynn Jones, DNP, MSPH, RN, FAAN is Associate Clinical Professor in the College of Medicine at The Ohio State University and Co-Director of Workforce Development for The Ohio State Center for Clinical Translational Science. ORCID ID: https://orcid.org/0000-0002-0669-7860

Acknowledgments

This work was made possible through the thoughtful guidance of Vicki L. Ellingrod, Pharm.D., Sarah E. Peyre, Ed.D., and the Development, Implementation and Assessment of Novel Training in Domain-Based Competencies (DIAMOND) study team. All tables and figures included in the manuscript were created by the authors.

[version 2; peer review: This article was migrated, the article was marked as recommended]

Declarations

The author has declared that there are no conflicts of interest.

Ethics Statement

This work does not constitute human subjects research and so no IRB review was required. The work contains only the opinions and individual work of the authors.

External Funding

This work was funded by the National Center for Advancing Translational Sciences – NIH (1-U01TR002013-01).

Bibliography/References

- Adlin T. and Pruitt J.(2010) The essential persona lifecycle: Your guide to building and using personas. Burlington, MA: Morgan Kauffman Publishers. [Google Scholar]

- Ameredes B. T., Hellmich M. R., Cestone C. M., Wooten K. C., et al. (2015) The Multidisciplinary Translational Team (MTT) Model for Training and Development of Translational Research Investigators. Cts-Clinical and Translational Science. 8(5), pp.533–541. 10.1111/cts.12281 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Awaisu A., Kheir N., Alrowashdeh H. A., Allouch J. N., et al. (2015) Impact of a pharmacy practice research capacity-building programme on improving the research abilities of pharmacists at two specialised tertiary care hospitals in Qatar: A preliminary study. Journal of Pharmaceutical Health Services REsearch. 6(3), pp.155–164. 10.1111/jphs.12101 [DOI] [Google Scholar]

- Bakken L. Sheridan J. and Carnes M.(2003) Gender differences among physician-scientists in self-assessed abilities to perform clinical research. Acad Med. 78(12), pp.1281–1286. 10.1097/00001888-200312000-00018 [DOI] [PubMed] [Google Scholar]

- Bates I., Ansong D., Bedu-Addo G., Agbenyega T., et al. (2007) Evaluation of a learner-designed course for teaching health research skills in Ghana. BMC Med Educ. 7, p.18. 10.1186/1472-6920-7-18 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonham A. Califf R. Gallin E. and Lauer M.(2012) Appendix E Discussion Paper: Developing a Robust Clinical Trials Workforce.In: Envisioning a Transformed Clinical Trials Enterprise in the United States: Establishing an Agenda for 2020: Workshop Summary. Washington DC: Institute of Medicine, National Academies Press. [PubMed] [Google Scholar]

- Brandon P. R. (1998) Stakeholder participation for the purpose of helping ensure evaluation validity: Bridging the gap between collaborative and non-collaborative evaluations. American Journal of Evaluation. 19(3), pp.325–337. 10.1016/S1098-2140(99)80215-X [DOI] [Google Scholar]

- Callard F., Rose D. and Wykes T. (2012) Close to the bench as well as the bedside: Involving service users in all phases of translational research. Health Expectations. 15(4), pp.389–400. 10.1111/j.1369-7625.2011.00681.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calvin-Naylor N., Jones C., Wartak M., Blackwell K., et al. (2017) Education and training of clinical and translational study investigators and research coordinators: a competency-based approach. Journal of Clinical and Translational Science. 1(1), pp.16–25. 10.1017/cts.2016.2 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Centers for Disease Control and Prevention (1999) Framework for program evaluation in public health. Morbidity and Mortality Weekly Report, 48(RR-12). Available at: https://stacks.cdc.gov/view/cdc/5204 (Accessed: September 17, 2019). [Google Scholar]

- Centers for Disease Control and Prevention (2018) Step 2B: Logic Models. Available at: https://www.cdc.gov/oralhealth/state_programs/pdf/logic_models.pdf (Accessed: July 19, 2019). [Google Scholar]

- Chatterji M. (2003) Designing and using tools for educational assessment. Boston: Allyn & Bacon. [Google Scholar]

- Comeau D. L., Escoffery C., Freedman A., Ziegler T. R., et al. (2017) Improving clinical and translational research training: a qualitative evaluation of the Atlanta Clinical and Translational Science Institute KL2-mentored research scholars program. Journal of Investigative Medicine. 65(1), pp.23–31. 10.1136/jim-2016-000143 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cruser D., Brown S. K., Ingram J. R., Podawiltz A. L., et al. (2010) Learning outcomes from a biomedical research course for second year osteopathic medical students. Osteopathic Medicine and Primary Care. 4(4). 10.1186/1750-4732-4-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cruser D., Dubin B., Brown S. K., Bakken L. L., et al. (2009) Biomedical research competencies for osteopathic medical students. Osteopath Med Prim Care. 3, p.10. 10.1186/1750-4732-3-10 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis D., Mazmanian P. E., Fordis M., Van Harrison R., et al. (2006) Accuracy of physician self-assessment compared with observed measures of competence: A systematic review. JAMA. 296(9), pp.1094–1102. 10.1001/jama.296.9.1094 [DOI] [PubMed] [Google Scholar]

- Dilmore T. C., Moore D. W. and Bjork Z. (2013) Developing a competency-based educational structure within clinical and translational science. Clin Transl Sci. 6(2), pp.98–102. 10.1111/cts.12030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ellis J. J., McCreadie S. R., McGregory M. and Streetman D. S. (2007) Effect of pharmacy practice residency training on residents’ knowledge of and interest in clinical research. American Journal of Health-System Pharmacy. 64(19), pp.2055–2063. 10.2146/ajhp070063 [DOI] [PubMed] [Google Scholar]

- Frechtling J. and Sharp L. (2002) User-friendly handbook for project evaluation. National Science Foundation. Available at: https://www.nsf.gov/pubs/2002/nsf02057/nsf02057.pdf (Accessed: September 17, 2019). [Google Scholar]

- Geist M. (2010) Using the Delphi method to engage stakeholders: A comparison of two studies. Evaluation and Program Planning. 33(2), pp.147–154. 10.1016/j.evalprogplan.2009.06.006 [DOI] [PubMed] [Google Scholar]

- Harris P. A., Taylor R., Thielke R., Payne J., et al. (2009) Research electronic data capture (REDCap)-a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics. 42(2), pp.377–381. 10.1016/j.jbi.2008.08.010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hodges B., Regehr G. and Martin D. (2001) Difficulties in recognizing one’s own incompetence: Novice physicians who are unskilled and unaware of it. Academic Medicine. 76, pp.S87–S89. 10.1097/00001888-200110001-00029 [DOI] [PubMed] [Google Scholar]

- Hornung C., Ianni P. A., Jones C. T., Samuels E. M., et al. (2019) Indices of clinical research coordinators’ competence. Journal of Clinical and Translational Science. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jeffe D., Rice T. K., Boyington J. E. A., Rao D. C., et al. (2017) Development and evaluation of two abbreviated questionnaires for mentoring and research self-efficacy. Ethnicity and Disease. 27(2), pp.179–188. 10.18865/ed.27.2.179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Joint Task Force (2018) Joint Task Force for Clinical Trial Competency Blog. Available at: https://www.clinicaltrialcompetency.org/blog/ (Accessed: August 31, 2018). [Google Scholar]

- Joosten Y., Israel T. L., Williams N. A., Boone L. R., et al. (2015) Community engagement studios: A structured approach to obtaining meaningful input from stakeholders to inform research. Academic Medicine. 90(12), p.1646. 10.1097/ACM.0000000000000794 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Juran J. and DeFeo J. (2010) Juran’s Quality Handbook: The complete guide to performance excellence. 6th edn. New York: McGraw Hill. [Google Scholar]

- Kane M. (1992) An argument-based approach to validity. Psychological Bulletin. 112(3), pp.527–535. 10.1037/0033-2909.112.3.527 [DOI] [Google Scholar]

- Kirkpatrick D. and Kirkpatrick J. (2006) Evaluating training programs: The four levels. Berrett-Koehler Publishers. [Google Scholar]

- Kleppinger C. and Ball L. (2010) Building quality in clinical trials with use of a quality system approach. Clinical Infectious Diseases. 51, pp.S111–S116. 10.1086/653058 [DOI] [PubMed] [Google Scholar]

- Levine T. (2005) Confirmatory factor analysis and scale validation in communication research. Communication Research Reports. 22(4), pp.335–338. 10.1080/00036810500317730 [DOI] [Google Scholar]

- Lipira L., Jeffe D. B., Krauss M., Garbutt J., et al. (2010) Evaluation of clinical research training programs. Clin Transl Sci. 3(5), pp.243–248. 10.1111/j.1752-8062.2010.00229.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lowe B., Hartmann M., Wild B., Nikendei C., et al. (2008) Effectiveness of a 1-year resident training program in clinical research: a controlled before-and-after study. J Gen Intern Med. 23(2), pp.122–128. 10.1007/s11606-007-0397-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martinez L., Russell B., Rubin C. L., Leslie L. K., et al. (2012) Clinical and translational research and community engagement: Implications for researcher capacity building. Clinical and Translational Science. 5(4), pp.329–332. 10.1111/j.1752-8062.2012.00433.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- McLaughlin J. and Jordan G. B. (1999) Logic models: a tool for telling your program’s performance story. Evaluation and Program Planning. 22, pp.65–72. 10.1016/S0149-7189(98)00042-1 [DOI] [Google Scholar]

- Misso M., Ilic D., Haines T. P., Hutchinson A. M., et al. (2016) Development, implementation and evaluation of a clinical research engagement and leadership capacity building program in a large Australian health care service. BMC Medical Education. 16(1), p.13. 10.1186/s12909-016-0525-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mullikin E., Bakken L. and Betz N. (2007) Assessing the research self-efficacy in physician scientists: The clinical research appraisal inventory. Journal of Clinical Assessment. 88(9), pp.1340–1345. [Google Scholar]

- Murphy S., Kalpakjian C. Z., Mullan P. B. and Clauw D. J. (2010) Development and Evaluation of the University of Michigan’s Practice-Oriented Research Training (PORT) Program. American Journal of Occupational Therapy. 64(5), pp.796–803. 10.5014/ajot.2010.08161 [DOI] [PubMed] [Google Scholar]

- NCATS (2011) Core Competencies for Clinical and Translational Research. Available at: https://clic-ctsa.org/sites/default/files/CTSA_Core_Competencies_final_2011.pdf (Accessed: September 16, 2019). [Google Scholar]

- NCATS (2017) Strategic Goal 3: Develop and foster innovative translational training and a highly skilled, creative and diverse translational science workforce. Available at: https://ncats.nih.gov/strategicplan/goal3 (Accessed: September 16, 2019). [Google Scholar]

- NCATS (2018) About NCATS. Available at: https://ncats.nih.gov/about (Accessed: 2019). [Google Scholar]

- Newman D., Scheirer M. A., Shadish W. R. and Wye C. (1995) Guiding principles for evaluators. New Directions for Program Evaluation. 66, pp.19–26. 10.1002/ev.1706 [DOI] [Google Scholar]

- Osborne J. and Costello A. (2005) Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research, and Evaluation. 10(7), pp.1–9. [Google Scholar]

- Patel M., Tomich D., Kent T. S., Chaikof E. L., et al. (2018) A program for promoting clinical scholarship in general surgery. Journal of Surgical Education. 75(4), pp.854–860. [DOI] [PubMed] [Google Scholar]

- Poloyac S. M., Empey K. M., Rohan L. C., Skledar S. J., et al. (2011) Core competencies for research training in the clinical pharmaceutical sciences. Am J Pharm Educ. 75(2), p.27. 10.5688/ajpe75227 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robinson G., Moore C., McTigue K., Rubio D., et al. (2015) Assessing competencies in a master of science in clinical research program: The comprehensive competency review. Clinical and Translational Science. 8, pp.770–775. 10.1111/cts.12322 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robinson G., Switzer G. E., Cohen E., Primack B., et al. (2013) A shortened version of the Clinical Research Appraisal Inventory: CRAI-12. Academic Medicine. 88(9), pp.1340–1345. 10.1097/ACM.0b013e31829e75e5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rubio D., Schoenbaum E. E., Lee L. S., Schteingart D. E., et al. (2010) Defining translational research: implications for training. Acad Med. 85(3), pp.470–475. 10.1097/ACM.0b013e3181ccd618 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sonstein S., Brouwer R. N., Gluck W., Kolb R., et al. (2018) Leveling the joint task force core competencies for clinical research professionals. Therapeutic Innovation and Regulatory Science. 10.1177/2168479018799291 [DOI] [PubMed] [Google Scholar]

- Sonstein S., Silva H., Jones C., Calvin-Naylor N., et al. (2016) Global self-assessment of competencies, role relevance, and training needs among clinical research professionals. Clinical Researcher. 30(6), pp.38–45. [Google Scholar]

- Streetman D. S., McCreadie S. R., McGregory M. and Ellis J. J. (2006) Evaluation of clinical research knowledge and interest among pharmacy residents: survey design and validation. Am J Health Syst Pharm. 63(23), pp.2372–2377. 10.2146/ajhp060099 [DOI] [PubMed] [Google Scholar]

- Taut S. and Brauns D. (2003) Resistance to evaluation: A psychological perspective. Evaluation. 9(3), pp.703–719. 10.1177/13563890030093002 [DOI] [Google Scholar]

- Trochim W., Rubio D. and Thomas V. (2013) Evaluation Key Function Committee for the CTSA Consortium. Evaluation guidelines for the Clinical and Translational Science Awards (CTSAs). Clinical and Translational Science. 6(4), pp.303–309. 10.1111/cts.12036 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Van der Vleuten C., Schuwirth L. W., Scheele F., Driessen E. W., et al. (2010) The assessment of professional competence: Building blocks for theory development: Best practice and research. Clinical Obstetrics and Gynaecology. 24(6), pp.703–719. 10.1016/j.bpobgyn.2010.04.001 [DOI] [PubMed] [Google Scholar]

- Wandersman A., Imm P., Chinman M. and Kaftarian S. (2000) Getting to outcomes: a results-based approach to accountability. Evaluation and Program Planning. 30, pp.389–395. 10.1016/S0149-7189(00)00028-8 [DOI] [Google Scholar]

- Yudkowsky R., Park Y. and Downing S. (2019) Assessment in health professions education. 2nd edn. Routledge. 10.4324/9781315166902 [DOI] [Google Scholar]