Abstract

The goal of this study was to determine whether the sensory nature of a target influences the roles of vision and proprioception in the planning of movement distance. Two groups of subjects made rapid elbow extension movements, either toward a visual target or toward the index fingertip of the unseen opposite hand. Visual feedback of the reaching index fingertip was only available before movement onset. Using a virtual reality display, we randomly introduced a discrepancy between actual and virtual (cursor) fingertip location. When subjects reached toward the visual target, movement distance varied with changes in visual information about initial hand position. For the proprioceptive target, movement distance varied mostly with changes in proprioceptive information about initial position. The effect of target modality was already present at the time of peak acceleration, indicating that this effect includes feedforward processes. Our results suggest that the relative contributions of vision and proprioception to motor planning can change, depending on the modality in which task-relevant information is represented.

Keywords: Motor planning, Multisensory integration, Coordinate systems, Sensory maps, Reference frame

Introduction

It has been well established that information about initial hand position is essential for making accurate reaching movements (Desmurget et al. 1995; Ghez et al. 1995; Prablanc et al. 1979; Prodoehl et al. 2003). However, the relative contributions of vision and proprioception in providing such information remain unclear. The important role of vision in determining hand position prior to reaching movements is supported by studies that have compared movement accuracy between movements made with and without visual information (Brown et al. 2003a, b; Desmurget et al. 1995, 1997; Elliott et al. 1991; Prablanc et al. 1979). In addition, distorting visual feedback of initial hand position produces systematic effects on movement endpoints (Holmes and Spence 2005; Rossetti et al. 1995; Sainburg et al. 2003; Sober and Sabes 2003). This line of research has emphasized the important role of vision in defining the initial position of the hand for planning reaching movements.

The importance of proprioception in providing information about initial arm configuration has been demonstrated by the occurrence of configuration-dependent movement errors in proprioceptively deafferented patients (Ghez et al. 1995). Nougier et al. (1996) directly assessed the importance of proprioceptive information about initial limb configuration by mechanically perturbing the arm posture in deafferented patients prior to movement onset. Following the perturbation, movement distance reflected the visually displayed target distance relative to the initial position. This suggested that movements are planned as a displacement that is adjusted to the proprioceptively-derived hand position, rather than an absolute final position. Supporting an important role of proprioception in determining starting hand position, Larish et al. (1984) showed that when the arm muscles of neurologically intact subjects were vibrated prior to movements, final position was systematically and predictably altered. These findings demonstrate an essential role of proprioception in providing initial configuration information for planning movements. While it is clear that both vision and proprioception play important roles in providing information about initial limb conditions, the two modalities provide spatial information in quite different coordinate frameworks, and it is still not well understood how both signals are combined in the planning of goal-directed arm movements.

Multisensory integration in the control of reaching movements toward visual targets has been a topic of growing interest in the past decade and has been investigated in conditions where visual and proprioceptive signals of initial hand position are dissociated experimentally. In a seminal experiment, Rossetti et al. (1995) used optical prisms to create a visuo-proprioceptive mismatch during motor preparation. In this study, movement direction was not determined on the sole basis of initial visual or proprioceptive signals, but rather on the basis of a weighted combination of both. More recently, our laboratory employed a virtual-reality environment to create a visuo-proprioceptive mismatch and thereby investigated the contribution of each modality to the control of both movement direction and distance (Sainburg et al. 2003). In that study, we manipulated starting hand location while visual start location remained constant. Regardless of hand start location, movement direction remained constant, in accord with the visually displayed start location. In contrast, when hand start location was varied such that the projection of the new position onto the target line was increased or reduced, movement amplitude was proportionally adjusted. Thus, visual information played a primary role in directional control while proprioception played a substantial role in distance control. Because these effects were already present at peak velocity, we suggested that they might reflect planning processes.

In a follow-up study, starting hand position remained constant, and visual start location was varied (Lateiner and Sainburg 2003). This design allowed us to assess how visual information of initial hand position affected movement planning. In this case, initial movement direction, but not distance, varied according to the visual start location. A similar study by Sober and Sabes (2003) confirmed that visual information of initial hand position was predominantly used to determine initial movement direction. Taken together, these findings support the idea that vision contributes predominantly to direction planning when movements are performed toward visual targets. Proprioception appears to play a more substantial role in planning movement distance. The fact that each sensory modality contributes differentially to direction and distance planning is not surprising, given the evidence that movement direction and extent appear to be controlled through distinct neural processes, as reflected by both behavioral (Blouin et al. 1993; Bock and Arnold 1993; Ghez et al. 1999; Krakauer et al. 2000; Paillard 1996; Rosenbaum 1980; Soechting and Flanders 1989b; Vindras et al. 2005) and neurophysiological studies (Desmurget et al. 2004; Fu et al. 1995; Georgopoulos et al. 1983; Messier and Kalaska 2000; Riehle and Requin 1989; Turner and Anderson 1997).

Relatively few studies have investigated how the sensory nature of a target might influence the planning and control of goal-directed arm movements. Flanders and Cordo (1989) and Adamovich et al. (1998) both observed that the accuracy of motor responses was similar for visual and proprioceptive targets. In fact, Adamovich et al. (1998) suggested that planning strategies might be similar irrespective of target modality. In contrast, Sober and Sabes (2005) showed recently that the planning of movement direction depends directly on the sensory nature of the target. These authors tested whether the contributions of visual and proprioceptive information of starting hand position varied with the modality in which the target was presented. A visuo-proprioceptive mismatch was introduced before subjects reached either to a visual target or to their unseen opposite hand. When reaching for a visual target, vision dominated the direction planning process, but when reaching for a proprioceptive target, proprioception was dominant. Sober and Sabes (2005) focused on movement direction, but did not examine the planning of movement distance. Because we have shown that the planning of distance and direction are two aspects of movement planning that appear to rely differently on visual and proprioceptive information about hand position (Bagesteiro et al. 2006; Lateiner and Sainburg 2003; Sainburg et al. 2003), Sober and Sabes' findings might not generalize to the control of movement distance.

The current study assessed the effect of target modality on both the feedforward and feedback control of movement distance during single-joint targeted movements. By restricting movements to the elbow joint, we were able to eliminate demands for direction planning, as well as to differentiate planning from online processes. This is because the feedforward and feedback contributions to the control of rapid, single-joint elbow movements have previously been well characterized (Bagesteiro et al. 2006; Brown and Cooke 1981; Ghez 1979; Gielen et al. 1985; Gordon and Ghez 1987a, b; Gottlieb et al. 1989; Prodoehl et al. 2003; Sainburg and Schaefer 2004). When subjects reach for visual targets located at different distances, peak acceleration and peak velocity scale with, and thus predict, movement distance. When a perturbation such as a load is unexpectedly applied to the arm near movement initiation, peak acceleration is unaffected by feedback mechanisms, indicating that it reflects feedforward processes. In contrast, acceleration duration (time to peak velocity) is substantially modified through feedback mechanisms (Brown and Cooke 1981). Similarly, in a targeted force task, when assessed across movements to a single force target, time to peak force has been shown to scale inversely with peak force, presumably compensating for errors in movement planning (Gordon and Ghez 1987b). Taken together, this line of evidence suggests that force or acceleration amplitude reflects feedforward processes, whereas time to peak force or acceleration is substantially influenced by online error correction mechanisms.

In the present study, two groups of participants aimed either at a visual or a proprioceptive target, using rapid elbow extension movements. A mismatch was randomly introduced between visual and proprioceptive information of initial hand position. An original feature of the current paradigm is that we varied both initial visual and proprioceptive information of starting hand position with equal frequencies to prevent any bias toward a single sensory modality or even veridical conditions. This design allowed a direct assessment of both visual and proprioceptive contributions to motor performance, without making assumptions such as that visual and proprioceptive contributions must sum up to 100%. That is, we allowed the possibility of over-additive or under-additive interactions of visual and proprioceptive information of hand position.

We hypothesized that distance planning would rely on the modality that is used for target localization. This is based on the idea that the nervous system attempts to minimize errors that arise from transformations between visual and proprioceptive reference systems (Adamovich et al. 1998; Sober and Sabes 2005; Soechting and Flanders 1989a). This hypothesis leads to two predictions: (1) when reaching movements are performed toward a visual target, vision of initial hand position should strongly influence movement distance; (2) when reaching movements are performed toward a proprioceptive target, vision of initial hand position should not influence distance control because distance can be determined on the sole basis of proprioceptive information.

Methods

Subjects

Twelve neurologically intact adults, with normal or corrected-to-normal vision, participated in the present study. All subjects were right-handed, as indicated by laterality scores on a 12-item version of the Edinburgh Inventory (Oldfield 1971), and were naive to the purpose of the experiment. Subjects, 160–178 cm tall, were randomly assigned to one of two groups. One group of three females and three males, 20 to 37 years old (mean and SD = 26 ± 6), performed reaching movements toward a visual target. The other group of three females and three males, 20 to 34 years old (mean and SD = 26 ± 6), reached toward a proprioceptive target. Informed consent was solicited prior to the paid participation, which was approved by the Biomedical Institutional Review Board of the Pennsylvania State University (IRB # 15084).

Experimental set-up

Figure 1a illustrates the set-up used for this experiment. Subjects sat with the right arm supported over a horizontal surface, positioned just below shoulder height, by a frictionless air-jet system. A visual display was projected on a horizontal back-projection screen located above the arm. A mirror, positioned parallel to and below this screen, reflected the visual display, so as to give the illusion that the display was in the same horizontal plane as the fingertip. A bib, running from the subjects’ neck to the edge of the mirror, blocked the view of the shoulder and the upper-arm. This set-up assured that subjects could not see their arms during the experimental session and the virtual reality environment allowed manipulation of the visual feedback of the right index fingertip position. All that subjects could see was a green start circle, a cursor representing the fingertip position and, possibly, a visual target depending on the experimental condition.

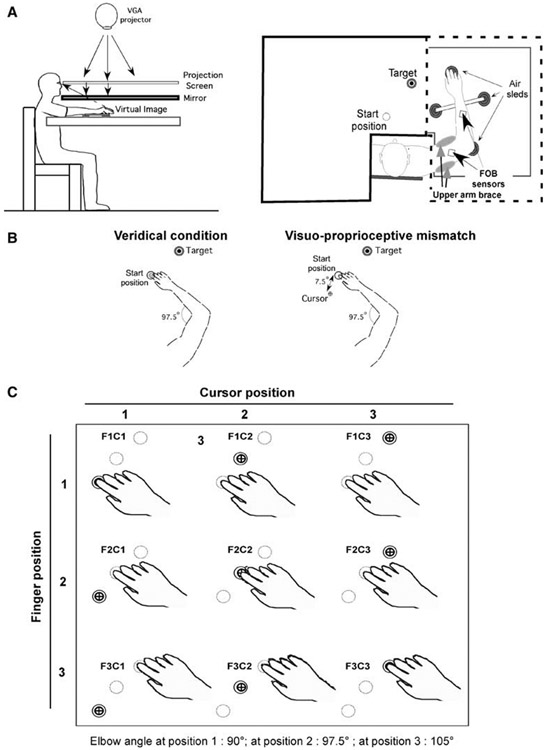

Fig. 1.

a Experimental set-up. In the right illustration, the projection screen is omitted for clarity. b Top view of a reaching movement performed toward a visual target. Subjects could not see their arm, depicted in dashed line for clarity. c Illustration of the nine experimental conditions. The hand and the dashed start circles were not visible to the subjects

For the right arm, all joints distal to the elbow were immobilized. The index finger was splinted in an extended position, the wrist joint was immobilized using an adjustable splint and the upper arm was immobilized by a brace (shoulder angle of 55°), restricting arm movements to the elbow joint (see Fig. 1a). Therefore, index fingertip position and elbow angle were linearly related. In addition, movements of the trunk and scapula were restricted using a butterfly-shaped chest restraint attached to the subject’s chair. Position and orientation of each limb segment were sampled using the Flock of Birds® (FoB – Ascension Technology) electro-magnetic six-degree-of-freedom movement recording system. A sensor was attached to the upper arm segment via an adjustable plastic cuff, while another sensor was fixed to the air sled where the forearm was fitted. The sensors were positioned approximately at the center of each arm segment. A stylus rigidly attached to a FoB sensor was used to digitize the positions of the following three landmarks: (1) a point located on the distal phalange of the right index finger, 43 cm away from the elbow joint (i.e., from landmark 2); (2) the lateral epicondyle of the humerus; (3) the acromion, directly posterior to the acromio-clavicular joint. Landmark 1 was chosen so that movements were similar irrespective of each subject’s forearm length (same starting and target positions). The recorded coordinates of the right index fingertip were used to project a cursor (cross hair, 1.4 cm diameter) onto the screen. Screen redrawing occurred at 85 Hz, fast enough to maintain the cursor centred on the moving fingertip when visual feedback was given. Digital data were collected at 103 Hz using a Macintosh computer. Custom computer algorithms for experiment control and data analysis were written in REAL BASIC™ (REAL Software, Inc.), C and IGOR Pro™ (WaveMetrics, Inc.).

Experimental task

The experimental session consisted of 90 elbow joint extension movements from a starting location corresponding to 90, 97.5 or 105° of elbow angle, toward a target positioned at 122.5° of elbow angle. Thus, three different starting positions were employed, requiring three different target distances in the various experimental conditions. In our study, 32.5, 25 and 17.5° of elbow excursion corresponded to 20.2, 15.1 and 10.3 cm of movement distance, respectively. Subjects were instructed to move the hand to the target using a “single, uncorrected, rapid motion”. One group of subjects was asked to reach with the right index fingertip to a visual target consisting of a visual circle (2.0 cm diameter) displayed on the screen. The other group of subjects was requested to reach with the right index fingertip toward their unseen left index fingertip. In this case, before each reaching movement, the left arm of the subject was gently guided by an experimenter toward a tactile landmark (corresponding precisely to the visual target position used for the other group) placed below the mirror. The left arm was then released by the experimenter and the subject had to actively maintain the contact between the left index fingertip and the landmark. There were no instructions about eye movements in the present study, and eye movements were not recorded.

At the beginning of each trial, a green start circle (2.0 cm diameter) was displayed. The cursor, which provided visual feedback about the right index fingertip position, had to be held in the start circle for 300 ms (see Fig. 1b). At the presentation of an audiovisual “go” signal, subjects had to reach for the (visual or proprioceptive) target. The cursor was blanked at the “go” signal, so visual feedback of the right index fingertip was only available before movement onset. Once the reaching movement was completed, the reaching (right) arm, and the target (left) arm for the group reaching toward a proprioceptive target, were brought to a random position for a short rest. Note that when subjects reached with their right hand toward their unseen left fingertip, the two hands never came into contact. Between trials, cursor feedback was only provided when the fingertip was within a 3 cm radius of the center of the start circle. This was done to prevent adaptation to altered visual feedback. Although movements were restricted to the elbow joint, subjects could easily perform the movement. Subjects were given 30 practice trials to become familiar with the requirements of the task, which all subjects described as easy and comfortable.

The schematic shown in Fig. 1b depicts the relationship of the subjects’ finger position to the cursor position during two tested conditions. The left illustration represents a veridical trial, during which fingertip and cursor positions matched accurately while the fingertip was to be positioned in the start circle. The experimental manipulation of this study consisted in the random introduction of a discrepancy between actual and seen fingertip location in starting position (e.g., −7.5° of elbow angle as shown on the right illustration of Fig. 1b). In this condition, two plausible results might occur: (1) if subjects use the proprioceptive information of fingertip position, they will reach accurately to the target; (2) if subjects use the visual information of initial fingertip position (i.e., cursor location), they will overshoot the target by 7.5°.
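The two outcomes described above can be read as the extremes of a simple linear mixing model, sketched here for illustration only. The function name `predicted_endpoint`, the `visual_weight` parameter, and the assumption that the two weights sum to one are hypothetical and are not part of the study's analysis, which deliberately avoids that assumption. The example also assumes that the −7.5° mismatch places the cursor 7.5° farther from the target than the fingertip, as the predicted overshoot implies.

```python
TARGET_DEG = 122.5  # target elbow angle used in the task


def predicted_endpoint(finger_start_deg, cursor_start_deg, visual_weight):
    """Predict the final elbow angle under a hypothetical linear mixing model.

    The planned excursion is taken from a weighted estimate of the starting
    position (visual_weight = 1: vision only; 0: proprioception only), but it
    is executed from the actual fingertip position.
    """
    if not 0.0 <= visual_weight <= 1.0:
        raise ValueError("visual_weight must lie between 0 and 1")
    estimated_start = (visual_weight * cursor_start_deg
                       + (1.0 - visual_weight) * finger_start_deg)
    planned_excursion = TARGET_DEG - estimated_start
    return finger_start_deg + planned_excursion


# Mismatch example: fingertip at 97.5 deg, cursor displayed at 90 deg.
finger_deg, cursor_deg = 97.5, 90.0
for weight in (0.0, 0.5, 1.0):
    overshoot = predicted_endpoint(finger_deg, cursor_deg, weight) - TARGET_DEG
    print(f"visual weight {weight:.1f}: overshoot {overshoot:.2f} deg")
```

With a visual weight of 1 the sketch reproduces the 7.5° overshoot of prediction (2), and with a weight of 0 it reproduces the accurate reach of prediction (1).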

Figure 1c shows that three conditions (F1C1, F2C2 and F3C3) were “veridical” conditions while in six experimental conditions, a visuo-proprioceptive mismatch was introduced (7.5 or 15° of elbow angle, in either direction). Within the 90 trials, nine different conditions (i.e., 3 cursor starting positions × 3 fingertip starting positions) were interspersed in a pseudo-random manner, and the same experimental condition was never presented twice in a row. The design of this study produced ten trials for each of the conditions tested. Subjects had no prior information about the mismatch.
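One way to build such a trial list is sketched below. This is not the authors' implementation (their experiment control code was written in REAL BASIC and C); the function name `make_trial_sequence`, the weighted-draw strategy, and the restart-on-dead-end rule are assumptions chosen only to satisfy the two stated constraints: ten trials per condition and no condition presented twice in a row.

```python
import random
from itertools import product


def make_trial_sequence(n_per_condition=10, seed=None):
    """Return a shuffled list of condition labels with no immediate repeats."""
    rng = random.Random(seed)
    # Nine conditions: 3 fingertip start positions x 3 cursor start positions.
    conditions = [f"F{f}C{c}" for f, c in product((1, 2, 3), repeat=2)]
    n_trials = n_per_condition * len(conditions)

    while True:  # restart if the draw runs into a dead end
        remaining = {cond: n_per_condition for cond in conditions}
        sequence = []
        while len(sequence) < n_trials:
            candidates = [
                cond for cond, count in remaining.items()
                if count > 0 and (not sequence or cond != sequence[-1])
            ]
            if not candidates:
                break  # only the previous condition is left: start over
            # Weight by remaining count so that no condition piles up late.
            weights = [remaining[cond] for cond in candidates]
            choice = rng.choices(candidates, weights=weights, k=1)[0]
            sequence.append(choice)
            remaining[choice] -= 1
        else:
            return sequence


trials = make_trial_sequence(seed=1)
print(len(trials), trials[:6])
```

Drawing in proportion to the remaining counts keeps dead ends rare, so the outer loop seldom has to restart.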

Data analysis

The 3D positions of the right fingertip, elbow, and shoulder were calculated from sensor position and orientation data. Then, elbow and shoulder angles were calculated from these data. All kinematic data were low pass filtered at 8 Hz (third order, no-lag, dual pass Butterworth), and differentiated to yield velocity and acceleration values. Each trial usually started with the hand at zero velocity, but small oscillations of the hand sometimes occurred within the start circle. In this case, the onset of movement was defined by the last minimum (below 8% maximum tangential hand velocity) prior to the maximum in the hand’s tangential velocity profile. Movement termination was defined as the first minimum (below 8% peak velocity) following peak velocity. Visual inspection was performed on every single trial to ensure that movement onset, peak acceleration, peak velocity and movement termination were correctly determined.
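The onset and termination rules can be sketched as follows. The function name `find_movement_bounds` and the handling of flat segments (a sample counts as a minimum when it is no larger than either neighbor) are assumptions; the 8% threshold comes from the text. The sketch takes an already filtered tangential speed profile as input and does not reproduce the Butterworth filtering step.

```python
def find_movement_bounds(speed, threshold_fraction=0.08):
    """Return (onset, peak, termination) sample indices of a speed profile.

    Onset: last local minimum below the threshold before peak speed.
    Termination: first local minimum below the threshold after peak speed.
    """
    peak_idx = max(range(len(speed)), key=speed.__getitem__)
    limit = threshold_fraction * speed[peak_idx]

    def is_local_min(i):
        left = speed[i - 1] if i > 0 else float("inf")
        right = speed[i + 1] if i < len(speed) - 1 else float("inf")
        return speed[i] <= left and speed[i] <= right

    onset_idx = 0  # fall back to the first sample if no minimum qualifies
    for i in range(peak_idx - 1, -1, -1):  # walk backward from the peak
        if speed[i] < limit and is_local_min(i):
            onset_idx = i
            break

    end_idx = len(speed) - 1  # fall back to the last sample
    for i in range(peak_idx + 1, len(speed)):  # walk forward from the peak
        if speed[i] < limit and is_local_min(i):
            end_idx = i
            break

    return onset_idx, peak_idx, end_idx


# Toy profile (m/s) with a small oscillation before the main movement.
profile = [0.0, 0.02, 0.0, 0.0, 0.2, 0.6, 1.0, 0.7, 0.3, 0.05, 0.0, 0.0, 0.03]
print(find_movement_bounds(profile))
```

As in the study, automatically detected events would still need visual inspection on every trial.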

The following measures of task performance were analyzed: peak acceleration, peak velocity, time to peak acceleration, time to peak velocity, movement duration and distance traveled up to peak tangential acceleration, up to peak tangential velocity and up to movement termination. Movement distance was calculated as the 2D distance between the start and the final locations of the fingertip. Distances at peak acceleration and peak velocity were similarly determined between the start location of the fingertip and its location at peak acceleration and peak velocity, respectively.
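A minimal sketch of these distance measures, assuming the fingertip path is available as a list of (x, y) positions and that the event indices have already been determined. The function and key names are hypothetical.

```python
import math


def distances_at_landmarks(path_xy, onset_idx, landmark_indices):
    """Straight-line 2D distance from the start location to each landmark.

    path_xy: sequence of (x, y) fingertip positions, one per sample.
    landmark_indices: mapping from a label (e.g. "peak_velocity") to the
    sample index at which that event occurs.
    """
    start = path_xy[onset_idx]
    return {
        label: math.dist(start, path_xy[idx])
        for label, idx in landmark_indices.items()
    }


# Toy path in cm; indices 1, 2 and 3 stand in for the three events.
path = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0), (9.0, 12.0)]
events = {"peak_acceleration": 1, "peak_velocity": 2, "movement_end": 3}
print(distances_at_landmarks(path, 0, events))
```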

For each group of subjects, dependent measures of movement distance, velocity, acceleration and time were submitted to 3 × 3 [Cursor (Start position C1, C2, C3) × Fingertip (Start position F1, F2, F3)] analyses of variance with repeated measures. To study the effect of vision of initial position, the effect of modifying the starting cursor location, while the starting hand position remained constant, was analyzed. To study the effect of proprioception of initial hand position, we analyzed the effect of varying the starting hand location while the starting cursor location remained constant (see Fig. 1c). In order to assess the relative contributions of vision (i.e., initial cursor position) to distance control, movement distance was determined, within each subject, for each experimental condition, and was then averaged across start position for the cursor or the fingertip (e.g., F1C1, F2C1 and F3C1 conditions were averaged to obtain the mean movement distance when the cursor was in start position C1). We then calculated the difference between the mean movement distances observed when the cursor was in start position 1 and 3 (C1–C3; see Fig. 2b) to assess the effect of changes of initial visual information of hand position across the range of experimental manipulations. Similarly, the difference F1–F3 was used to assess the effect of initial proprioceptive information on movement kinematics. In addition, the mean difference under veridical conditions (i.e., between F1C1 and F3C3 conditions) was calculated within each subject. The ratio of mismatched to veridical mean differences [e.g., (C1–C3)/(F1C1–F3C3)] was then calculated to yield the percent contribution of each modality to distance control. The contributions were then averaged across subjects. This analysis was performed for movement distance up to peak acceleration, peak velocity and movement end to assess the contributions of vision and proprioception throughout the movement.
For all analyses, statistical significance was tested using an alpha value of 0.05 and Tukey’s method was used for post-hoc analysis.
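The contribution ratio described above can be illustrated with a short sketch. As a simplifying assumption, it applies the ratio to the group-mean movement distances reported in Table 1 (visual target) rather than to individual subjects' data, so it only approximates the within-subject analysis. The names `TABLE1_DISTANCE_CM` and `contributions` are hypothetical.

```python
# Group-mean movement distances (cm) as printed in Table 1 (visual target),
# keyed by (cursor start position, fingertip start position).
TABLE1_DISTANCE_CM = {
    (1, 1): 23.91, (2, 1): 21.1, (3, 1): 17.8,
    (1, 2): 21.8, (2, 2): 19.1, (3, 2): 16.7,
    (1, 3): 20.1, (2, 3): 17.8, (3, 3): 14.7,
}

POSITIONS = (1, 2, 3)


def contributions(distance):
    """Return (vision, proprioception) contributions as fractions."""
    def cursor_mean(c):
        # Average over fingertip positions for a fixed cursor position.
        return sum(distance[(c, f)] for f in POSITIONS) / len(POSITIONS)

    def finger_mean(f):
        # Average over cursor positions for a fixed fingertip position.
        return sum(distance[(c, f)] for c in POSITIONS) / len(POSITIONS)

    # Veridical difference: F1C1 minus F3C3.
    veridical_range = distance[(1, 1)] - distance[(3, 3)]
    vision = (cursor_mean(1) - cursor_mean(3)) / veridical_range
    proprioception = (finger_mean(1) - finger_mean(3)) / veridical_range
    return vision, proprioception


vision, proprioception = contributions(TABLE1_DISTANCE_CM)
print(f"vision: {vision:.0%}, proprioception: {proprioception:.0%}")
```

On these group means the ratios come out at roughly 60% for vision and 37% for proprioception. Because each ratio is computed separately, nothing forces the two values to sum to 100%.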

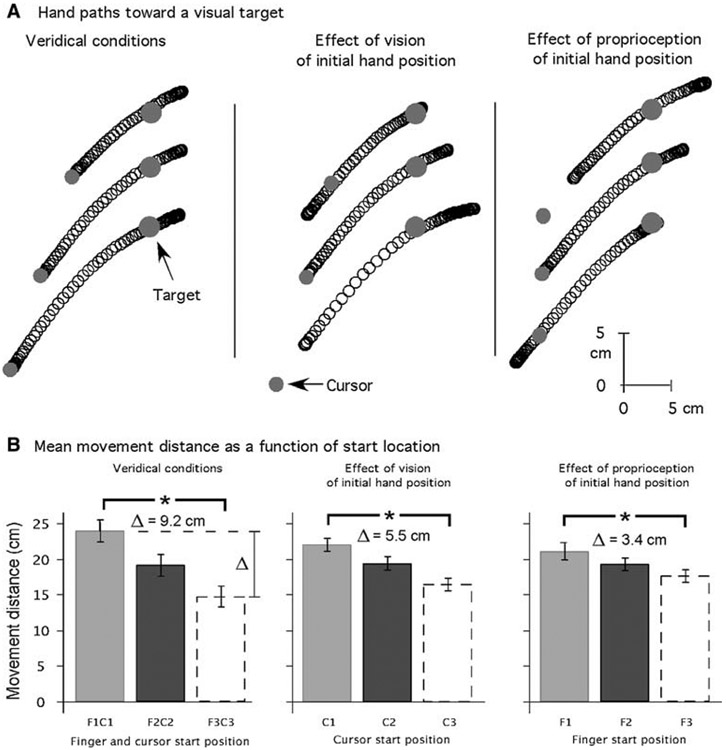

Fig. 2.

Top view of reaching movements toward a visual target. a Representative hand paths under the different conditions. b Averaged movement distance as a function of starting location under veridical and visuo-proprioceptive mismatch conditions. Error bars represent between-subject standard errors

Results

Experiment 1: When reaching toward a visual target, visual information of initial position predominantly influences movement planning

Figure 2a displays typical hand paths for movements under veridical conditions, and illustrates the results of experiment 1. When subjects reached for a visual target with their unseen arm, they overshot the target by 4.0 cm on average in veridical conditions. This is consistent with our previous studies of unseen planar movements under low friction conditions (e.g., Bagesteiro et al. 2006; Lateiner and Sainburg 2003; Sainburg and Schaefer 2004; Sainburg et al. 2003). More importantly, Fig. 2 shows that movement distance varied most with changes in the visual information of initial hand position. The ANOVA revealed significant effects of initial cursor position (i.e., vision; F2, 40 = 156.7; P < 0.001) and hand position (i.e., proprioception; F2, 40 = 59.3; P < 0.001), with no significant interaction between these factors (F4, 40 = 0.7; P = 0.60). Movement distance significantly increased as the cursor was displayed further from the target, independently of actual hand position (see Table 1). Our analysis of contributions (see Methods) indicated that visual information of initial hand position accounted for 60 ± 12% (mean ± between-subject SD) of movement distance control. Initial finger position significantly affected movement distance, but proprioceptive information of hand position contributed only 37 ± 11%.

Table 1.

Kinematic data of reaching toward a visual target

| | C1F1 | C2F1 | C3F1 | C1F2 | C2F2 | C3F2 | C1F3 | C2F3 | C3F3 |
|---|---|---|---|---|---|---|---|---|---|
| Movement distance (cm) | 23.91 ± 2.1 | 21.1 ± 2.6 | 17.8 ± 2.5 | 21.8 ± 2.4 | 19.1 ± 2.0 | 16.7 ± 2.0 | 20.1 ± 2.5 | 17.8 ± 1.7 | 14.7 ± 1.8 |
| Movement duration (ms) | 587 ± 97 | 594 ± 116 | 545 ± 80 | 587 ± 107 | 584 ± 85 | 542 ± 113 | 558 ± 82 | 527 ± 64 | 520 ± 96 |
| Peak velocity (m/s) | 1.06 ± 0.17 | 0.93 ± 0.13 | 0.80 ± 0.16 | 0.95 ± 0.14 | 0.84 ± 0.12 | 0.77 ± 0.14 | 0.87 ± 0.11 | 0.84 ± 0.12 | 0.71 ± 0.11 |
| Time to peak velocity (ms) | 252 ± 36 | 244 ± 36 | 232 ± 37 | 243 ± 45 | 233 ± 43 | 222 ± 38 | 239 ± 42 | 227 ± 29 | 215 ± 35 |
| Peak acceleration (m/s²) | 7.9 ± 2.4 | 6.8 ± 1.6 | 6.2 ± 2.0 | 7.1 ± 1.9 | 6.5 ± 1.7 | 6.4 ± 1.8 | 6.6 ± 1.5 | 6.8 ± 1.7 | 5.9 ± 1.6 |
| Time to peak acceleration (ms) | 137 ± 51 | 138 ± 56 | 117 ± 57 | 137 ± 57 | 120 ± 52 | 115 ± 48 | 123 ± 48 | 124 ± 40 | 110 ± 56 |

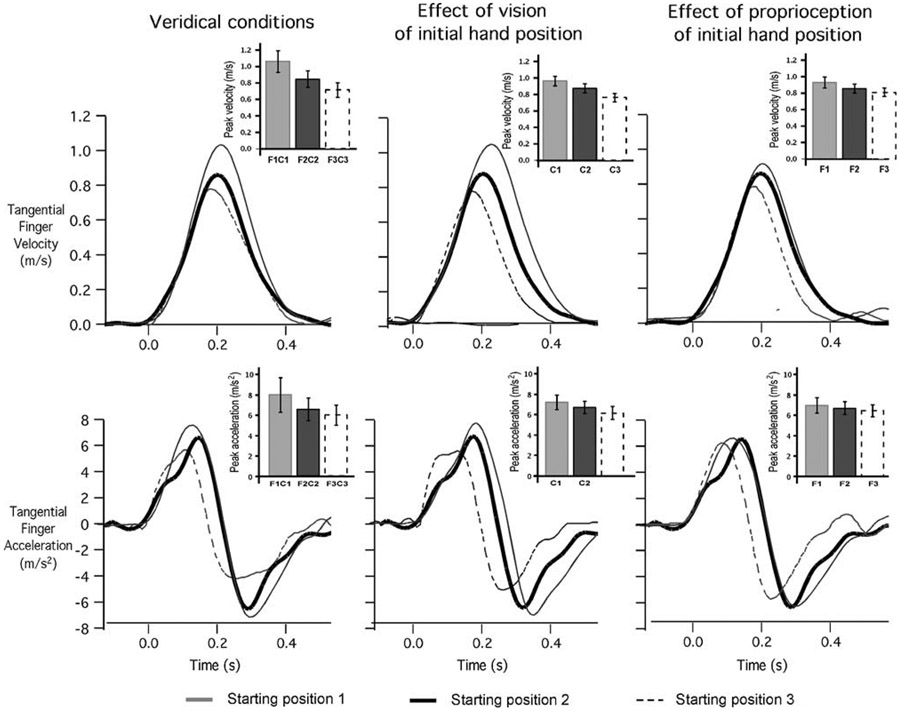

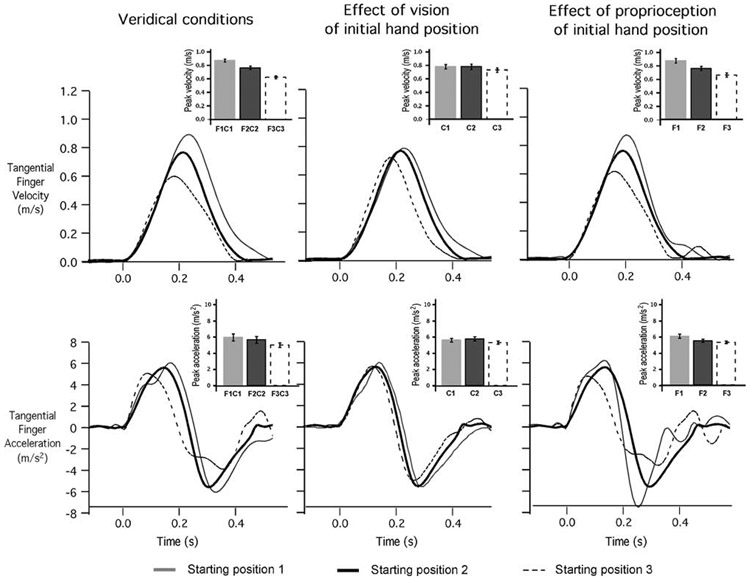

The effects of vision and proprioception observed on the distance of the rapid reaching movements (mean movement duration = 560 ± 93 ms) toward visual targets were already present at the time of peak velocity (mean = 234 ± 38 ms). Figure 3 shows representative velocity and acceleration profiles. We observed that peak velocity varied according to the cursor start location (F2, 40 = 58.5; P < 0.001). Post hoc analysis (Tukey’s method; P < 0.05) revealed that as the distance between the visual target and the cursor increased, peak velocity increased (means = 0.76 ± 0.13 and 0.96 ± 0.14 m/s for cursor positions C3 and C1, respectively; mean of within-subject differences = 0.20 m/s). Peak velocity also scaled with the distance between the visual target and the actual starting hand position (F2, 40 = 21.2; P < 0.001; means = 0.81 ± 0.11 and 0.93 ± 0.15 m/s for finger positions F3 and F1, respectively; Tukey’s method; P < 0.05), but the mean difference, considered to be an indicator of the proprioceptive contribution, was reduced (mean = 0.12 m/s). In fact, visual information of initial hand position contributed 60 ± 12% of the movement distance traveled up to peak velocity, while proprioceptive information of hand position accounted for only 37 ± 12%. There was no significant interaction between visual and proprioceptive starting hand positions (F4, 40 = 2.0; P = 0.10).

Fig. 3.

Representative velocity (top) and acceleration (bottom) profiles of reaching movements toward a visual target under the experimental conditions. Profiles are synchronized to movement onset for the sake of clarity. Insets represent averaged peak velocity and peak acceleration as a function of condition. Error bars represent between-subject standard errors

For movements toward a visual target, peak acceleration varied according to the cursor start location (F2, 40 = 10.5; P < 0.001). When the cursor was at starting position C1, peak acceleration was significantly greater than when the cursor was initially at position C3 (Tukey’s method; P < 0.05). The effect of proprioceptive information of initial finger position on peak acceleration was marginally significant (F2, 40 = 2.8; P = 0.07) and there was no significant interaction between visual and proprioceptive information of starting hand position (F4, 40 = 1.8; P = 0.54). In summary, the analysis of peak acceleration suggests that the distance of the unseen movements performed toward visual targets was mainly planned on the basis of visual information of starting hand position.

Experiment 2: When reaching toward a proprioceptive target, mainly proprioceptive information of initial position influences movement planning

Figure 4 shows that subjects were fairly accurate when asked to move their right index fingertip toward their left index fingertip. In veridical conditions, they overshot the target-fingertip by 1.5 cm on average, which was substantially more accurate than movements made toward visual targets (F2, 20 = 5.4; P < 0.05). The better accuracy was due to neither lower movement speeds nor longer movement durations, as peak velocity (mean = 0.81 ± 0.21 m/s) and movement duration (mean = 536 ± 82 ms) were not statistically different for movements toward proprioceptive and visual targets (there was neither a significant main effect nor a significant interaction; P > 0.05). When reaching toward a proprioceptive target, variability of movement distance (mean = 2.0 cm) was not significantly different from that measured when reaching toward a visual target.

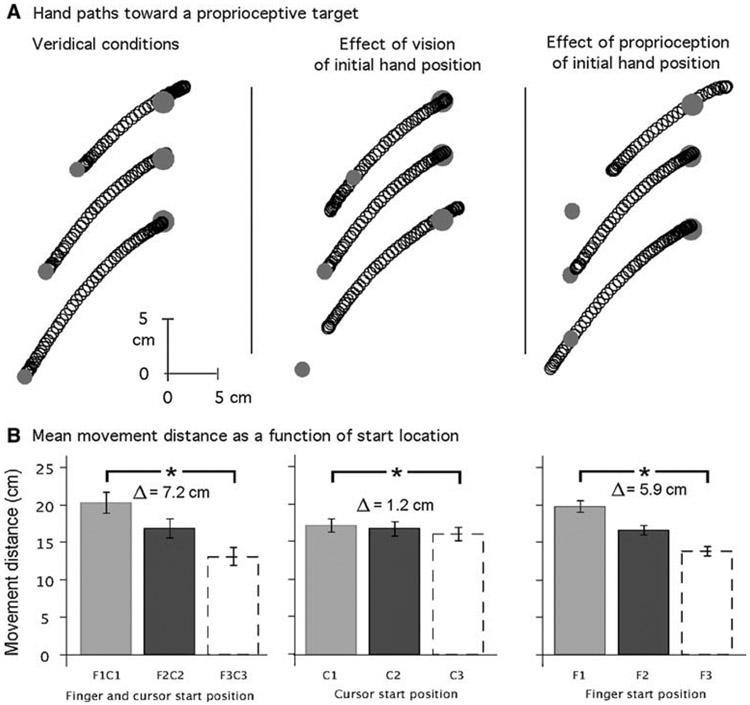

Fig. 4.

Reaching movements toward a proprioceptive target, viewed from above. a Representative hand paths under the different conditions. b Averaged movement distance as a function of experimental conditions. Error bars represent between-subject standard errors

In striking contrast to what was observed when subjects reached toward visual targets, vision of the cursor starting position did not play a substantial role in the control of movement distance (see Fig. 4 and Table 2). The ANOVA revealed significant effects of initial cursor position (i.e., vision; F2, 40 = 9.7; P < 0.001) and hand position (i.e., proprioception; F2, 40 = 219.3; P < 0.001), with no significant interaction between these factors (F4, 40 = 0.3; P = 0.85). However, post hoc analysis revealed that movement distance mainly varied with initial finger position. Indeed, proprioceptive information of hand position contributed 82 ± 10% of the distance moved toward the proprioceptive target. Concerning the role of vision in distance control, movement extent was significantly, but only slightly, greater when the cursor was in starting position C1 compared to C3 (Tukey’s method; P < 0.05). In fact, the relative contribution of visual information to movement distance, as compared with veridical conditions, was only 17 ± 12%.

Table 2.

Kinematic data of reaching toward a proprioceptive target

| | C1F1 | C2F1 | C3F1 | C1F2 | C2F2 | C3F2 | C1F3 | C2F3 | C3F3 |
|---|---|---|---|---|---|---|---|---|---|
| Movement distance (cm) | 20.2 ± 1.8 | 19.8 ± 2.0 | 19.0 ± 2.5 | 16.9 ± 1.7 | 16.8 ± 2.0 | 15.9 ± 2.1 | 14.6 ± 1.7 | 13.7 ± 1.8 | 13.1 ± 2.0 |
| Movement duration (ms) | 560 ± 76 | 515 ± 64 | 529 ± 66 | 503 ± 68 | 510 ± 86 | 504 ± 67 | 472 ± 68 | 458 ± 54 | 455 ± 62 |
| Peak velocity (m/s) | 0.87 ± 0.13 | 0.89 ± 0.12 | 0.83 ± 0.14 | 0.77 ± 0.12 | 0.76 ± 0.11 | 0.72 ± 0.12 | 0.68 ± 0.10 | 0.66 ± 0.10 | 0.62 ± 0.10 |
| Time to peak velocity (ms) | 245 ± 33 | 238 ± 34 | 235 ± 34 | 233 ± 37 | 230 ± 41 | 230 ± 39 | 214 ± 34 | 206 ± 31 | 208 ± 35 |
| Peak acceleration (m/s²) | 5.9 ± 1.3 | 6.2 ± 1.1 | 5.8 ± 1.2 | 5.5 ± 1.2 | 5.6 ± 1.2 | 5.1 ± 1.3 | 5.3 ± 1.2 | 5.4 ± 1.3 | 5.0 ± 1.2 |
| Time to peak acceleration (ms) | 143 ± 46 | 129 ± 57 | 121 ± 51 | 124 ± 50 | 119 ± 45 | 120 ± 55 | 102 ± 48 | 97 ± 38 | 101 ± 46 |

Figure 5 shows that peak velocity varied more with the finger start location than with the cursor start location. Our ANOVA revealed a significant effect of vision (F2, 40 = 10.4; P < 0.001) on peak velocity, which was greater when the cursor was positioned at C1 (mean = 0.77 ± 0.12 m/s), as compared with C3 (mean = 0.73 ± 0.12 m/s; Tukey’s method; P < 0.05). However, the mean difference was only 0.04 m/s. In contrast, peak velocity varied substantially with finger position (F2, 40 = 151.1; P < 0.001), such that the average difference in peak velocity for starting finger position F1 (mean = 0.87 ± 0.13 m/s) and F3 (mean = 0.65 ± 0.10 m/s) was 0.22 m/s (Tukey’s method; P < 0.05). Proprioceptive information of hand position accounted for 82 ± 10% of the movement distance traveled up to peak velocity, while visual information of starting hand position only contributed 17 ± 12%. There was no significant interaction between visual and proprioceptive information of starting hand position (F4, 40 = 0.5; P = 0.72).

Fig. 5.

Representative velocity and acceleration profiles of reaching movements toward a proprioceptive target under the experimental conditions. Insets represent averaged peak velocity and peak acceleration as a function of experimental conditions. Error bars represent between subject standard errors

When subjects reached for proprioceptive targets, peak acceleration varied mostly with finger start location, as illustrated by Fig. 5 (bottom row). Indeed, there was a significant effect of finger start location on peak acceleration (F2, 40 = 11.6; P < 0.001), which was greater when the finger position was F1 (mean = 6.0 ± 1.2 m/s²) compared to F3 (mean = 5.2 ± 1.2 m/s²; Tukey’s method; P < 0.05). Peak acceleration also varied with cursor starting location (F2, 40 = 3.7; P < 0.05). However, post hoc analysis revealed that this was not due to a monotonic change across cursor start locations. Peak acceleration was significantly, but not substantially, greater when initial cursor position was C2 (mean = 5.7 ± 1.2 m/s²) as compared to C3 (mean = 5.3 ± 1.2 m/s²; Tukey’s method; P < 0.05), while there was no significant difference between peak acceleration when the cursor was positioned at C3 or C1 (mean = 5.6 ± 1.3 m/s²; Tukey’s method; P > 0.05). Proprioceptive information of hand position contributed 76 ± 23% of the movement distance traveled up to peak acceleration, while visual information of starting hand location accounted for 20 ± 10%. There was no significant interaction between visual and proprioceptive information of starting hand position (F4, 40 = 0.2; P = 0.95).

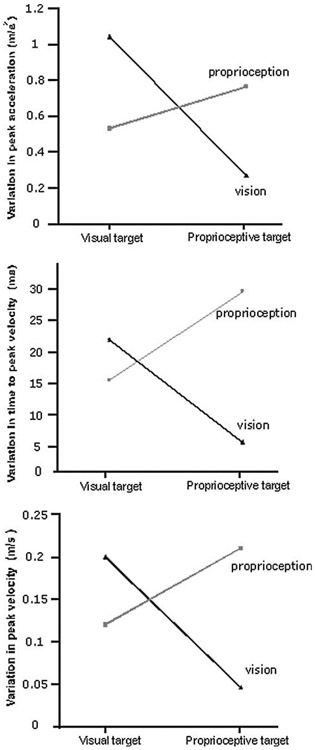

Figure 6 summarizes our results for reaching movements toward proprioceptive and visual targets, showing the interaction between the sensory contributions to distance control and the nature of the target. It can be seen that the patterns of interaction looked very similar whether assessed at peak acceleration or peak velocity. When subjects reached for a proprioceptive target, movement kinematics relied mostly on proprioceptive information of hand position. When subjects reached for a visual target, movements were controlled mainly on the basis of visual information of starting position.

Fig. 6.

Mean sensory (visual and proprioceptive) contributions to peak acceleration, time to peak velocity and peak velocity variation when reaching toward visual and proprioceptive targets

Discussion

The present study investigated the contributions of visual and proprioceptive information of hand position to the planning of movement extent toward visual and proprioceptive targets. By presenting various discrepancies between visual and proprioceptive information about initial hand position, we were able to examine the relative roles of each modality in reaching toward targets. In veridical conditions, movements made toward proprioceptive targets were more accurate than those made toward visual targets, suggesting that the coding of information under each target condition might be different. We observed that the distance of movements made toward visual targets varied predominantly with visual information about initial hand position. Peak acceleration, a measure reflecting feedforward mechanisms, varied largely with initial visual information, supporting recent results (Bagesteiro et al. 2006). We conclude that the planning of movement distance toward visual targets was predominantly based on visual information of starting hand location. In contrast, when subjects reached toward proprioceptive targets, peak acceleration varied more with proprioceptive than with visual information. Taken together, our results indicate that the roles of visual and proprioceptive information about initial hand position vary with the modality in which the target is presented.

An interesting finding of the present study was that our calculations of visual and proprioceptive contributions to planning summed up to approximately 100%. This was not necessary, as it is plausible that visuo-proprioceptive integration could be under-additive or over-additive. For example, van Beers et al. (1996) showed that the contribution of vision and proprioception was different than the sum of the separately assessed contributions. These authors showed that the variance of hand localization with visual and proprioceptive information was smaller than expected from a simple additive or exclusive model. In examining the relative roles of each modality for planning movement direction, Sober and Sabes (2003, 2005) employed a model that was based on the assumption that the contribution of each modality summed to 100%. The current experiment was designed to address this discrepancy in the literature. It is plausible that this discrepancy is explained by the difference between the processes that determine sensory integration for conscious reporting of hand location and those used for planning movements. Our data suggest that visual and proprioceptive contributions do sum to nearly 100%, supporting the model of Sober and Sabes (2003). While our data differed slightly from a 100% sum (from 96 to 99%), this variation was consistent with the amplitude of our computed standard errors, and likely reflected measurement error.
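For the proprioceptive target condition, the 96 to 99% range quoted above can be recovered directly from the mean contributions reported in the Results. The arithmetic below uses only those mean values and ignores the associated variability (± terms):

$$
\underbrace{82\% + 17\% = 99\%}_{\text{at peak velocity}}, \qquad \underbrace{76\% + 20\% = 96\%}_{\text{at peak acceleration}}
$$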

Differential contributions of vision and proprioception to two stages of motor planning

Previous studies examining the planning of reaching movements have supported the view of a two-stage process in which a general kinematic plan is first determined by comparing initial hand position to target position. Following this stage, a dynamic transformation process generates motor commands that result in the forces required to produce the desired motion. The idea that these two stages of planning are different is supported by studies demonstrating differential use of sensory information for each stage of planning (Lateiner and Sainburg 2003; Sainburg et al. 2003; Sober and Sabes 2003, 2005).

In a previous study, we provided substantial support for this two-stage model, using a proprioceptive/visual mismatch design similar to that used in the current study (Sainburg et al. 2003). Our results indicated that subjects planned the direction of movement based on visually detected start position, but adapted that plan to the actual position of the hand in order to accurately produce the intended direction from a variety of different limb configurations. Inverse dynamics analysis revealed substantial changes in joint torque patterns across the different starting limb configurations. These different joint torque strategies reflected the changes necessary to produce the same direction movements from different limb configurations. While both visual and proprioceptive information were used in planning movements, each modality played a dominant role in a different stage of planning. Because the direction of movement was perfectly adapted to the direction of the target relative to the cursor start location, this direction plan was entirely determined by visual information about hand location. However, because joint torque profiles were modified across different limb configurations in order to maintain the same movement direction, limb configuration information must have been accurately assessed through proprioceptive channels. These findings imply that both modalities provide separate but accurate information about visual or proprioceptive start position at different stages in the planning process.

While supported by the model of Sober and Sabes (2003), our findings contradicted the idea that information from each modality is fused to provide a weighted average position, as suggested by van Beers et al. (1996, 1999). If that were the case, direction would have been influenced by hand location, and dynamic transformations would have been inaccurate, resulting in variations in movement direction across limb configurations. In a recent study, we extended these findings to the planning of movement distance during a single-joint task (Bagesteiro et al. 2006). In agreement with our current findings for visual targets, when subjects reached from different starting locations, they adjusted acceleration amplitudes to the intended distance between the target and the displayed cursor location. In line with our multi-joint study (Sainburg et al. 2003), this must have required variations in the dynamic strategy that resulted in different torque profiles to produce the same amplitude displacement. These findings extend previous findings in deafferented patients indicating a substantial role of proprioception in anticipating the effects of limb dynamics, a process that likely requires some sort of inverse dynamics computation (Sainburg et al. 1993, 1995, 1999).

In contrast to the previously reviewed findings for reaching toward visual targets, Sober and Sabes (2005) recently showed that when proprioceptive targets were presented, proprioceptive information of starting hand position played the major role in both stages of planning. Our current empirical findings complement the model of Sober and Sabes (2005) and extend these findings to the planning of movement distance, a process which has previously been associated with neural processes that are distinct from those underlying direction planning (Desmurget et al. 2004; Favilla et al. 1989; Fu et al. 1995; Rosenbaum 1980; Sainburg et al. 2003; Soechting and Flanders 1989b). Indeed, our current results indicate a dominant role of visual information of starting hand position in planning the distance of movements toward visual targets, and a dominant role of proprioceptive information in distance planning toward proprioceptive targets.

In our previous studies (Lateiner and Sainburg 2003; Sainburg et al. 2003), the planning of direction seemed to occur within extrinsic coordinates. This was supported by the fact that subjects produced the same initial movement direction from a variety of starting limb configurations. It is plausible that when reaching toward proprioceptive targets, the kinematic plan is specified in intrinsic coordinates. However, our current experimental design did not allow us to differentiate between coordinate systems because intrinsic and extrinsic coordinates are linearly related for single-joint movements. Thus, further research is necessary in order to understand the reference frames used in planning reaching movements toward proprioceptive targets.
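The linear relation mentioned above can be illustrated with a simple geometric sketch. Assuming, for illustration only, that the forearm and hand behave as a rigid segment of length $r$ rotating about a fixed elbow axis (the symbols $r$, $s$ and $\Delta\theta$ are introduced here and are not taken from the study), the path length $s$ traveled by the fingertip is proportional to the change in elbow angle $\Delta\theta$ in radians:

$$
s = r\,\Delta\theta
$$

Under this assumption, any change in the intrinsic variable (joint angle) maps onto a proportional change in the extrinsic variable (fingertip displacement along its arc), so a plan specified in one coordinate system cannot be distinguished from a plan specified in the other on the basis of movement distance alone.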

The persistent role of visual information in distance planning

It has previously been proposed that intermodality transformations of sensory information introduce a substantial source of errors in motor planning due to the heterogeneous nature of visual and proprioceptive coordinate systems (Adamovich et al. 1998; Darling and Miller 1993; McIntyre et al. 2000; Soechting and Flanders 1989a, b; Sober and Sabes 2005). This idea suggests that under conditions in which subjects are able to plan a movement based entirely on a single modality, the contributions of other modalities would be minimized. While our findings for visual target reaching appear to support this hypothesis, our findings for reaching to proprioceptive targets do not. When subjects reached to a visual target, movements were primarily controlled on the basis of visual information of hand position, consistent with several previous findings (Baraduc and Wolpert 2002; Flanagan and Rao 1995; Gentilucci et al. 1994; Scheidt et al. 2005; Wolpert et al. 1995). However, because proprioception is critical in transforming the kinematic plan into muscle level commands, it is difficult to conceive how this information could be disregarded. On the other hand, under our proprioceptive target condition, vision should not be necessary for any stage of planning. Nevertheless, under this condition, visual information persisted in playing a significant, yet small, role in distance planning, as reflected by variations in peak acceleration. This suggests that visual information persists in contributing to distance planning, even when this information is apparently unnecessary to complete the task. It is plausible that the persistence of visual contributions when reaching to visual targets might result from an implicit internal visualization of task space that imposes some degree of intermodality integration. In support of this idea, Desmurget et al. (1995, 1997) previously demonstrated that visual information about initial hand position improved the accuracy of movements made toward both visual and proprioceptive targets. The current study confirms and extends these results, by indicating a persistent role of visual information of starting hand position in the planning of movement distance toward proprioceptive targets.

Acknowledgments

We would like to thank Ewelina Styczynska for help in data collection. This research was funded by the National Institutes of Health (NICHD Grant R01HD39311).

References

- Adamovich SV, Berkinblit MB, Fookson O, Poizner H (1998) Pointing in 3D space to remembered targets. I. Kinesthetic versus visual target presentation. J Neurophysiol 79:2833–2846

- Bagesteiro LB, Sarlegna FR, Sainburg RL (2006) Differential influence of vision and proprioception on control of movement distance. Exp Brain Res 171:358–370

- Baraduc P, Wolpert DM (2002) Adaptation to a visuomotor shift depends on the starting posture. J Neurophysiol 88:973–981

- van Beers RJ, Sittig AC, Denier van der Gon JJ (1996) How humans combine simultaneous proprioceptive and visual position information. Exp Brain Res 111:253–261

- van Beers RJ, Sittig AC, Denier van der Gon JJ (1999) Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol 81:1355–1364

- Blouin J, Teasdale N, Bard C, Fleury M (1993) Directional control of rapid arm movements: the role of the kinetic visual feedback system. Can J Exp Psychol 47:678–696

- Bock O, Arnold K (1993) Error accumulation and error correction in sequential pointing movements. Exp Brain Res 95:111–117

- Brown SH, Cooke JD (1981) Responses to force perturbations preceding voluntary human arm movements. Brain Res 220:350–355

- Brown LE, Rosenbaum DA, Sainburg RL (2003a) Limb position drift: implications for control of posture and movement. J Neurophysiol 90:3105–3118

- Brown LE, Rosenbaum DA, Sainburg RL (2003b) Movement speed effects on limb position drift. Exp Brain Res 153:266–274

- Darling WG, Miller GF (1993) Transformations between visual and kinesthetic coordinate systems in reaches to remembered object locations and orientations. Exp Brain Res 93:534–547

- Desmurget M, Rossetti Y, Prablanc C, Stelmach GE, Jeannerod M (1995) Representation of hand position prior to movement and motor variability. Can J Physiol Pharmacol 73:262–272

- Desmurget M, Rossetti Y, Jordan M, Meckler C, Prablanc C (1997) Viewing the hand prior to movement improves accuracy of pointing performed toward the unseen contralateral hand. Exp Brain Res 115:180–186

- Desmurget M, Grafton ST, Vindras P, Gréa H, Turner RS (2004) The basal ganglia network mediates the planning of movement amplitude. Eur J Neurosci 19:2871–2880

- Elliott D, Carson RG, Goodman D, Chua R (1991) Discrete vs. continuous visual control of manual aiming. Hum Mov Sci 10:393–418

- Favilla M, Hening W, Ghez C (1989) Trajectory control in targeted force impulses VI. Independent specification of response amplitude and direction. Exp Brain Res 75:280–294

- Flanagan JR, Rao AK (1995) Trajectory adaptation to a nonlinear visuomotor transformation: evidence of motion planning in visually perceived space. J Neurophysiol 74:2174–2178

- Flanders M, Cordo PJ (1989) Kinesthetic and visual control of a bimanual task: specification of direction and amplitude. J Neurosci 9:447–453

- Fu QG, Flament D, Coltz JD, Ebner TJ (1995) Temporal encoding of movement kinematics in the discharge of primate primary motor and premotor neurons. J Neurophysiol 73:836–854

- Gentilucci M, Jeannerod M, Tadary B, Decety J (1994) Dissociating visual, kinesthetic coordinates during pointing movements. Exp Brain Res 102:359–366

- Georgopoulos AP, DeLong MR, Crutcher MD (1983) Relations between parameters of step-tracking movements and single cell discharge in the globus pallidus and subthalamic nucleus of the behaving monkey. J Neurosci 3:1586–1598

- Ghez C (1979) Contributions of central programs to rapid limb movement in the cat. In: Wilson VJ, Asanuma H (eds) Integration in the nervous system. Igaku-Shoin, Tokyo, New York

- Ghez C, Gordon J, Ghilardi MF (1995) Impairments of reaching movements in patients without proprioception. II. Effects of visual information on accuracy. J Neurophysiol 73:361–372

- Ghez C, Krakauer J, Sainburg RL, Ghilardi MF (1999) Spatial representations and internal models of limb dynamics in motor learning. In: Gazzaniga MS (ed) The new cognitive neurosciences. The MIT Press, Cambridge

- Gielen CCAM, van den Oosten K, Pull ter Gunne F (1985) Relation between EMG activation patterns and kinematic properties of aimed arm movements. J Mot Behav 17:421–442

- Gordon J, Ghez C (1987a) Trajectory control in targeted force impulses. II. Pulse height control. Exp Brain Res 67:241–252

- Gordon J, Ghez C (1987b) Trajectory control in targeted force impulses. III. Compensatory adjustments for initial errors. Exp Brain Res 67:253–269

- Gottlieb GL, Corcos DM, Agarwal GC (1989) Organizing principles for single-joint movements. I. A speed-insensitive strategy. J Neurophysiol 62:342–357

- Holmes NP, Spence C (2005) Visual bias of unseen hand position with a mirror: spatial and temporal factors. Exp Brain Res 166:489–497

- Krakauer JW, Pine ZM, Ghilardi MF, Ghez C (2000) Learning of visuomotor transformations for vectorial planning of reaching trajectories. J Neurosci 20:8916–8924

- Larish DD, Volp CM, Wallace SA (1984) An empirical note on attaining a spatial target after distorting the initial conditions of movement via muscle vibration. J Mot Behav 16:76–83

- Lateiner JE, Sainburg RL (2003) Differential contributions of vision and proprioception to movement accuracy. Exp Brain Res 151:446–454

- McIntyre J, Stratta F, Droulez J, Lacquaniti F (2000) Analysis of pointing errors reveals properties of data representations and coordinate transformations within the central nervous system. Neural Comput 12:2823–2855

- Messier J, Kalaska JF (2000) Covariation of primate dorsal premotor cell activity with direction and amplitude during a memorized-delay reaching task. J Neurophysiol 84:152–165

- Nougier V, Bard C, Fleury M, Teasdale N, Cole J, Forget R, Paillard J, Lamarre Y (1996) Control of single-joint movements in deafferented patients: evidence for amplitude coding rather than position control. Exp Brain Res 109:473–482

- Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9:97–113

- Paillard J (1996) Fast and slow feedback loops for the visual correction of spatial errors in a pointing task: a reappraisal. Can J Physiol Pharmacol 74:401–417

- Prablanc C, Echallier JF, Jeannerod M, Komilis E (1979) Optimal response of eye and hand motor systems in pointing at a visual target. II. Static and dynamic visual cues in the control of hand movement. Biol Cybern 35:183–187

- Prodoehl J, Gottlieb GL, Corcos DM (2003) The neural control of single degree-of-freedom elbow movements. Effect of starting joint position. Exp Brain Res 153:7–15

- Riehle A, Requin J (1989) Monkey primary motor and premotor cortex: single-cell activity related to prior information about direction and extent of an intended movement. J Neurophysiol 61:534–549

- Rosenbaum DA (1980) Human movement initiation: specification of arm, direction, and extent. J Exp Psychology 109:444–474

- Rossetti Y, Desmurget M, Prablanc C (1995) Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol 74:457–463

- Sainburg RL, Schaefer SY (2004) Interlimb differences in control of movement extent. J Neurophysiol 92:1374–1383

- Sainburg RL, Poizner H, Ghez C (1993) Loss of proprioception produces deficits in interjoint coordination. J Neurophysiol 70:2136–2147

- Sainburg RL, Ghilardi MF, Poizner H, Ghez C (1995) Control of limb dynamics in normal subjects and patients without proprioception. J Neurophysiol 73:820–835

- Sainburg RL, Ghez C, Kalakanis D (1999) Intersegmental dynamics are controlled by sequential anticipatory, error correction, and postural mechanisms. J Neurophysiol 81:1045–1056

- Sainburg RL, Lateiner JE, Latash ML, Bagesteiro LB (2003) Effects of altering initial position on movement direction and extent. J Neurophysiol 89:401–415

- Scheidt RA, Conditt MA, Secco EL, Mussa-Ivaldi FA (2005) Interaction of visual and proprioceptive feedback during adaptation of human reaching movements. J Neurophysiol 93:3200–3213

- Sober SJ, Sabes PN (2003) Multisensory integration during motor planning. J Neurosci 23:6982–6992

- Sober SJ, Sabes PN (2005) Flexible strategies for sensory integration during motor planning. Nature Neurosci 8:490–497

- Soechting JF, Flanders M (1989a) Errors in pointing are due to approximations in sensorimotor transformations. J Neurophysiol 62:595–608

- Soechting JF, Flanders M (1989b) Sensorimotor representations for pointing to targets in three-dimensional space. J Neurophysiol 62:582–594

- Turner RS, Anderson ME (1997) Pallidal discharge related to the kinematics of reaching movements in two dimensions. J Neurophysiol 77:1051–1074

- Vindras P, Desmurget M, Viviani P (2005) Error parsing in visuomotor pointing reveals independent processing of amplitude and direction. J Neurophysiol 94:1212–1224

- Wolpert DM, Ghahramani Z, Jordan MI (1995) Are arm trajectories planned in kinematic or dynamic coordinates? An adaptation study. Exp Brain Res 103:460–470