Abstract

Background:

To ensure that residents are appropriately trained in the era of the 80-hour work-week, training programs have restructured resident duties and hired advanced practice providers (APPs). However, the effect of APPs on surgical training remains unknown.

Study Design:

We created a survey using a modified Delphi technique to examine the interaction between residents and APPs across practice settings: inpatient, outpatient, and the operating room (OR). We identified four domains: administrative tasks, clinical experience, operative experience, and overall impressions. We administered the survey to residents in 7 surgical training programs at a single institution and assessed internal reliability with Cronbach’s alpha.

Results:

Fifty residents responded (77% participation rate). The majority reported that APPs reduced the time spent on administrative tasks such as completing documentation (96%) and answering pages (88%). For clinical experience, 62% of residents felt that APPs had no impact on the amount of time spent evaluating consult patients, and 80% reported no difference in the number of bedside procedures performed. However, 77% of residents reported a reduction in the time spent counseling patients. When APPs worked in the inpatient setting, 90% of residents reported leaving the OR less frequently to manage patients. When APPs were present in the OR, 34% of residents felt they were less likely to perform key parts of the case. Cronbach’s alpha showed excellent-to-good reliability for the administrative tasks (0.96), clinical experience (0.76), operative experience (0.69), and overall impressions (0.66) domains.

Conclusions:

Most residents report that the integration of APPs has decreased the administrative burden. The reduction in patient counseling may be an unrecognized and unintended consequence of implementing APPs. The perceived effect on operative experience depends on the APPs’ role.

Keywords: Surgery Residency, Advanced Practice Providers, Physician Assistants, Nurse Practitioners, Mid-level providers, Education, Training

Introduction

Surgical training programs provide residents with the opportunity to hone their technical skills and build their medical knowledge, while relying on them to fulfill non-clinical, service-related tasks. These administrative responsibilities have historically accounted for one-fifth of resident duty hours.1 However, the advent of the 80-hour work-week forced many residency programs to re-evaluate the role and responsibilities of trainees. Consequently, many training programs have hired advanced practice providers (APPs) in an effort to strike a new balance between education and service, and this trend of increased utilization of APPs on surgical services is likely to continue.2,3

One intention of implementing APPs, typically nurse practitioners (NPs) and physician assistants (PAs), was to offset the downstream effects of resident duty-hour restrictions.4–6 The integration of APPs into the patient care team changes the structure and dynamics of a surgical service. How APPs have impacted the operative and clinical experience of residents remains poorly characterized. APPs may enhance training if residents have greater operative and clinical exposure, or APPs may detract from education if redundancy or competition is created within services.

Our objective was to characterize how APPs affect the resident training experience. To do this, we created and administered a resident survey examining the impact of advanced practice providers within four domains: administrative tasks, clinical experience, operative experience, and overall impressions.

Methods

Survey Design

We used a modified Delphi technique in three rounds to design the survey. In the first round, we obtained consensus on the key interactions between residents and APPs. In the second round, survey questions were finalized to capture these interactions. Lastly, in the third round, we piloted the finalized survey on a group of non-clinical personnel.

The Delphi method is a well-described technique used to identify the shared views of a pre-defined group.7 Traditionally, Delphi methodology solicits responses from participants anonymously; we modified the process to allow for in-person meetings. We recruited residents across surgical specialties to participate. Invited participants were at various stages of training (postgraduate years [PGY] 2–4). The final panel consisted of four residents from the general, orthopaedic, and vascular surgery training programs. All of these trainees had experience rotating on services with and without APPs.

First round

In the first round, we asked the panel to list how APPs are utilized in their respective departments. We advised panelists to consider all the settings in which APPs have been implemented (e.g. clinics, operating rooms, inpatient wards). The panel identified service-specific variation in the roles APPs occupy within their departments. Next, we asked panelists to define the key interactions between residents and APPs in each role or setting. Each participant nominated five interactions (e.g. pager coverage during didactic lectures, triage of new patient consultations, critical care procedure supervision). We grouped these interactions thematically to define domains. Consensus was reached that all interactions fit into one of four domains: administrative tasks, clinical experience, operative experience, and overall impressions.

Second round

In the second round, we asked panelists to generate question stems to explore the resident experience within the four domains. A total of 50 question stems were generated and voted on. Questions that were redundant, or that captured a nuanced aspect of training specific to a single surgical specialty or subspecialty, were removed by consensus vote. We used a 5-point Likert scale to capture responses for most questions, and added one optional open-ended question at the end of the survey to allow for general impressions and unprompted comments. The final survey contained 23 questions.

Third round

In the third round, we piloted the 23-item survey with a cohort of 10 graduate students from non-clinical fields. The panel then reviewed each question with the pilot cohort, asking the students about their interpretation of the question stems, the wording, and the appropriateness of the Likert scale. We incorporated their feedback to clarify the language of the questions; no questions were removed.

Human Subjects Protection

This study was conducted in accordance with the requirements of the Center for the Protection of Human Subjects at Dartmouth College. Because all responses were de-identified prior to analysis and reporting and participation was entirely voluntary, the need for signed consent was waived.

Data Collection

The target population included all surgical residents at Dartmouth-Hitchcock Medical Center in Lebanon, New Hampshire. Days and times for mandatory resident educational conferences (e.g. grand rounds, morbidity & mortality conference, and resident didactic sessions) were identified for all surgical specialties and subspecialties, including general surgery, neurosurgery, orthopaedic surgery, otolaryngology (ENT), plastic surgery, urology, and vascular surgery. We administered the survey at these conferences from February 20 to February 27, 2017. For surgical specialties or subspecialties that did not have a conference scheduled during the survey administration period, we left surveys in residents’ mailboxes and designated a drop-off area for completed surveys. Resident participation was voluntary.

Analysis

We used descriptive statistics to analyze the survey results and performed a stratified analysis by training level (intern vs. junior resident vs. senior resident). We report the results of the stratified analysis for items where a trend by training level was noted. We used chi-square and Fisher exact tests and report p-values where appropriate. We grouped the questions within each domain into a scale to assess internal reliability, and calculated Cronbach’s alpha, a measure of internal reliability, for each scale. We performed all statistical analyses using Stata version 14 (StataCorp, College Station, Texas).
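For readers less familiar with Cronbach’s alpha, the standard formula for a scale of k items is alpha = k/(k - 1) × (1 - sum of the item variances / variance of the total scale score). The Python sketch below illustrates that formula only; it is not the Stata routine used in this study, and the function name `cronbach_alpha` and the toy response matrices are hypothetical rather than survey data.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for one scale (hypothetical helper, for illustration).

    responses[r][i] is respondent r's score on item i (e.g., a 1-5 Likert code).
    Assumes every respondent answered every item in the scale.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must have a score for every item")

    # Variance of each item across respondents.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total (summed) scale score.
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")

    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Toy data (not from the survey): items that move together give alpha = 1,
# items that vary independently of one another give alpha = 0.
consistent = [[1, 1, 1], [3, 3, 3], [5, 5, 5]]
unrelated = [[1, 1], [1, 3], [3, 1], [3, 3]]
print(round(cronbach_alpha(consistent), 2))  # 1.0
print(round(cronbach_alpha(unrelated), 2))   # 0.0
```

Population variance is used here for simplicity; using sample variance for both the items and the total gives the same alpha, because the n - 1 correction cancels in the ratio.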

Results

All surgical residents at our institution were invited to participate. A total of 50 residents completed the survey (77% participation rate). Forty percent of participants were senior residents (PGY 4 or higher), 36% were junior residents (PGY 2–3), and 22% were in their first year of training (Table 1).

Table 1:

Characteristics of Survey Participants

| Variable | n (%) |
|---|---|
| Training Year | |
| Intern (PGY1) | 11 (22) |
| Junior Resident (PGY2–3) | 18 (36) |
| Senior Resident (PGY4+) | 20 (40) |
| Unknown | 1 (2) |
| Surgical Training Program | |
| General surgery | 14 (28) |
| Neurosurgery | 5 (10) |
| Orthopaedic surgery | 13 (26) |
| Otolaryngology | 2 (4) |
| Plastic surgery | 1 (2) |
| Urology | 8 (16) |
| Vascular surgery | 5 (10) |
| Unknown | 2 (4) |

PGY, postgraduate year.

Resident Familiarity in Working with APPs

Most residents had some experience working with APPs on inpatient services: 32% reported having inpatient APPs on less than half of their rotations, 28% reported having APPs about half the time, and 22% reported having APPs more than half the time. An additional 14% reported having APPs on all their inpatient rotations, and 4% of residents had not rotated on a service with an inpatient APP.

Similarly, most residents (96%) had some experience working with APPs in the outpatient setting: 36% reported that less than half of the clinics they covered had implemented APPs, 34% reported about half, 24% reported more than half, and 2% reported that APPs were present in all clinics. Far fewer residents had experience with APPs in the operating room; 36% reported that they had no interaction with operative APPs. Fifty-six percent reported that APPs were scrubbed in less than half the time, and a minority (8%) reported that APPs were scrubbed in more frequently. This minority group primarily represented surgical subspecialties such as orthopaedics and plastic surgery.

Administrative Tasks

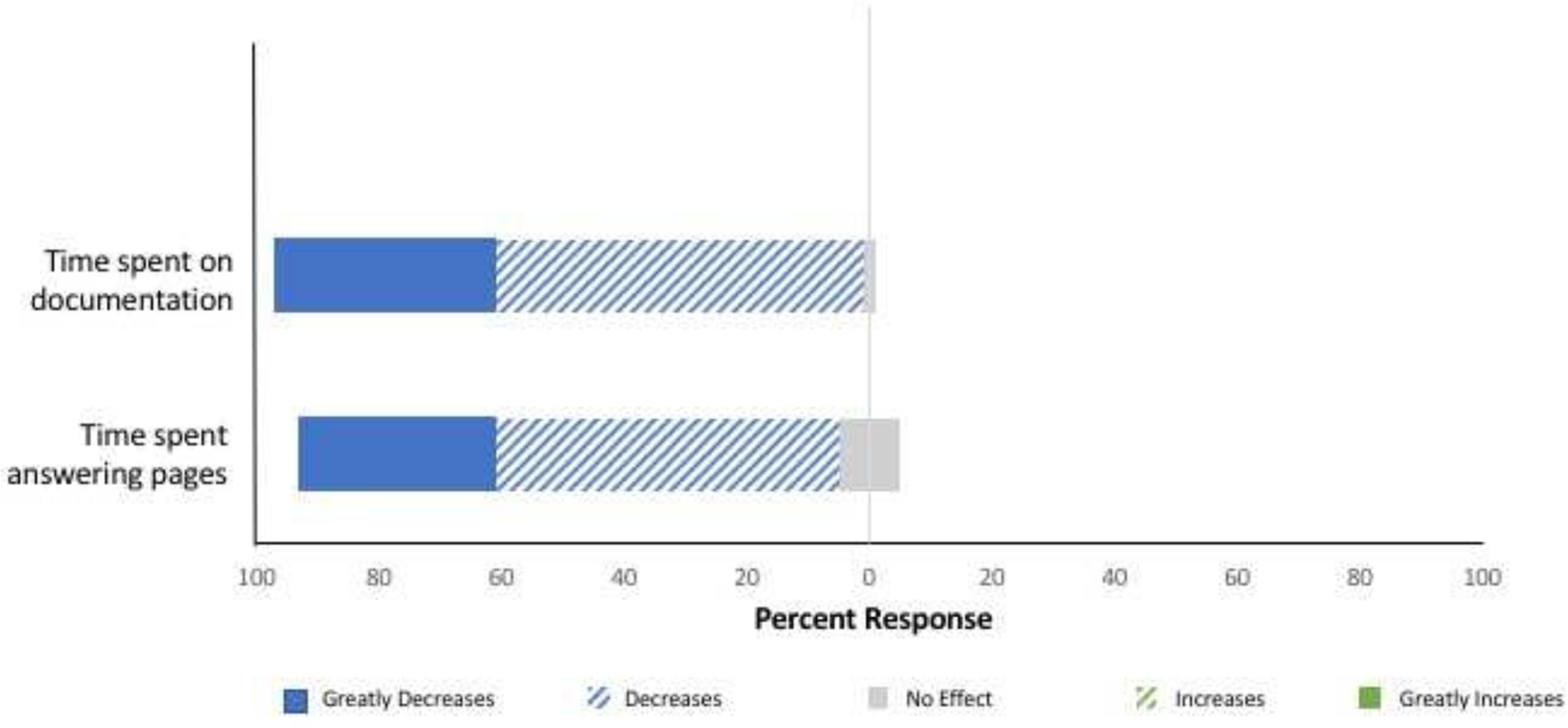

Almost all residents reported that APPs reduced the time trainees spent on administrative tasks (Figure 1). Most (96%) felt APPs decrease or greatly decrease the time spent placing orders within the electronic medical record system, completing daily progress notes, and writing discharge summaries. The majority (88%) also reported a reduction in the time spent answering pages.

Figure 1.

Effect of APPs on Administrative Tasks

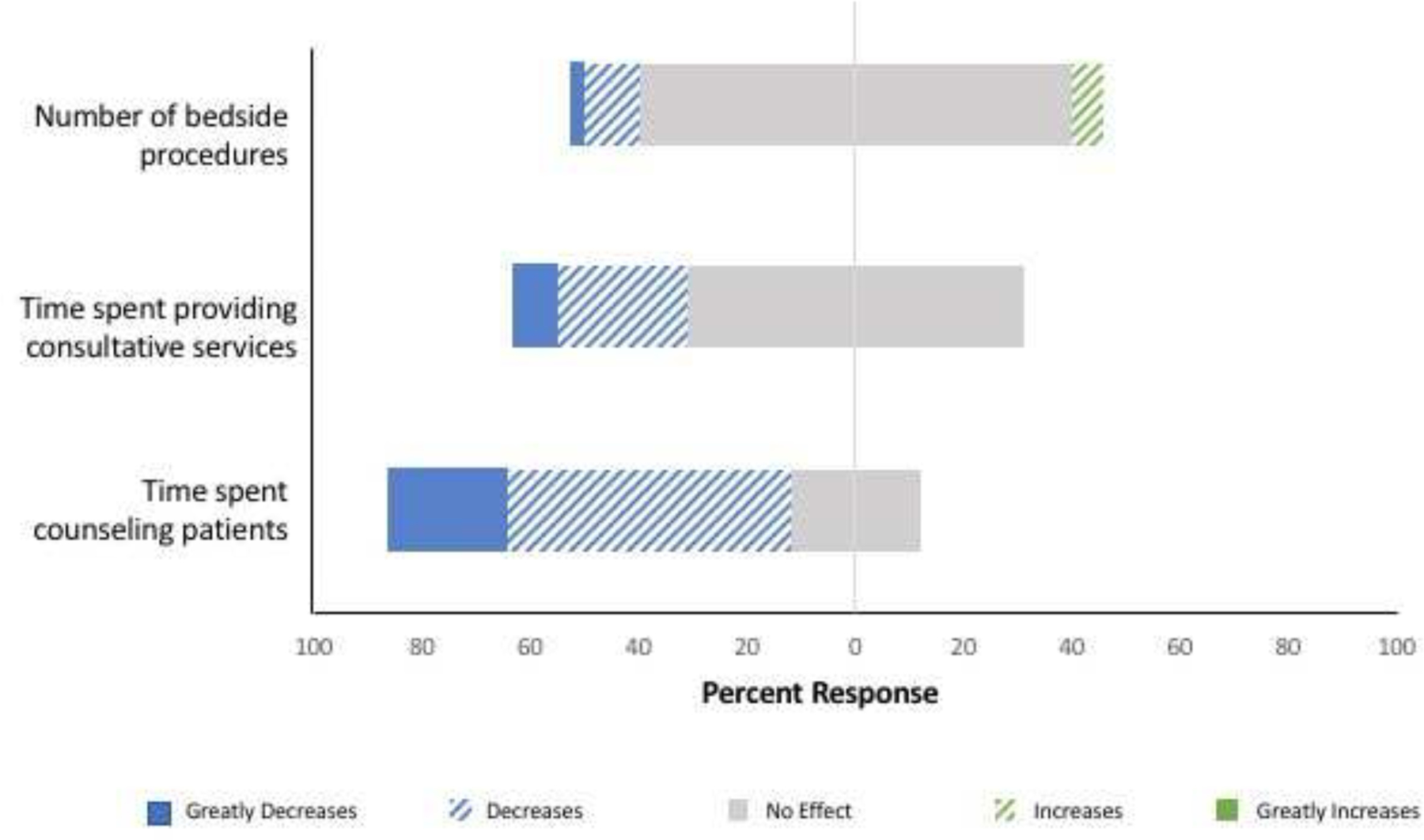

Clinical Experience

More than half of the surveyed residents (62%) reported that APPs on inpatient surgical services had no impact on the amount of time spent evaluating consult patients (Figure 2). Similarly, 80% of residents reported no difference in the number of bedside procedures (e.g. central line placements or bedside ultrasounds) performed when APPs were part of the care team. However, most residents (77%) did report a reduction in the amount of time spent counseling patients. On stratified analysis, no significant difference was noted by training level, with 91% of interns, 72% of junior residents, and 74% of senior residents reporting less time spent counseling patients when inpatient APPs were a part of the team (p=0.56).
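The training-level comparisons reported here contrast a dichotomized response (for example, reporting less time counseling patients versus not) across three groups, which forms a 2 × 3 contingency table. As an illustration of the Pearson chi-square test named in the Methods, the sketch below computes the statistic and p-value with only the Python standard library, relying on the fact that a chi-square distribution with (2 - 1)(3 - 1) = 2 degrees of freedom has survival function exp(-x/2). The function name and the counts in the usage line are hypothetical; the sketch does not reproduce the study’s reported p-values, and it does not implement the Fisher exact test that is conventionally preferred when expected cell counts are small (a common rule of thumb is below 5), which is why it also returns the smallest expected count.

```python
import math


def chi_square_2x3(table: list[list[int]]) -> tuple[float, float, float]:
    """Pearson chi-square test of independence for a 2 x 3 table (hypothetical helper).

    Rows: dichotomized response (e.g., agree / do not agree).
    Columns: training level (intern, junior, senior).
    Returns (statistic, p_value, smallest_expected_count).
    """
    if len(table) != 2 or any(len(row) != 3 for row in table):
        raise ValueError("expected a 2 x 3 table of counts")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    n = sum(row_totals)

    statistic = 0.0
    smallest_expected = math.inf
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total x column total / n.
            expected = row_totals[i] * col_totals[j] / n
            smallest_expected = min(smallest_expected, expected)
            statistic += (observed - expected) ** 2 / expected

    # df = (2 - 1) * (3 - 1) = 2; the chi-square(2) survival function is exp(-x / 2).
    p_value = math.exp(-statistic / 2)
    return statistic, p_value, smallest_expected


# Hypothetical counts, not study data: row 0 = "agree", row 1 = "do not agree".
stat, p, min_exp = chi_square_2x3([[8, 12, 10], [3, 6, 11]])
print(f"chi2 = {stat:.2f}, p = {p:.3f}, smallest expected count = {min_exp:.1f}")
```

In this made-up example the smallest expected count is 4.4, so an exact test would be the safer choice, consistent with the Methods’ use of Fisher exact tests where appropriate.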

Figure 2.

Effect of APPs on residents

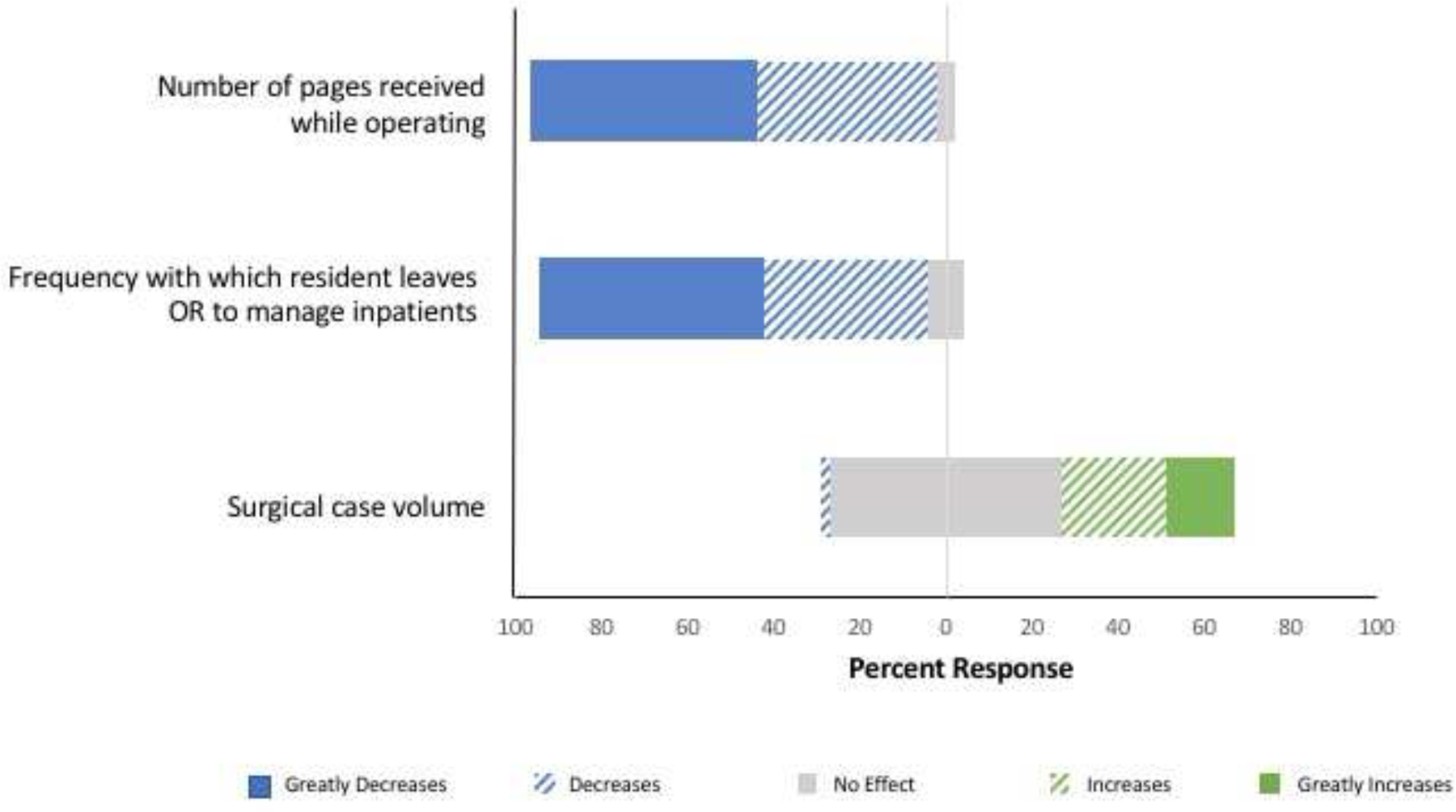

Operative Experience

Among residents who had interacted with APPs in the OR, 63% felt that the presence of APPs in the operating room did not affect their chance to perform key aspects of the case. Conversely, 34% reported that an APP in the OR decreased the resident’s chance of performing key parts of the case. A stratified analysis by training level showed that, compared with senior residents, interns and junior residents were more likely to report that APPs decreased or greatly decreased the resident’s chance of performing key aspects of the case (67% of interns, 31% of junior residents, and 17% of senior residents), although this did not reach statistical significance (p=0.07).

Nearly all surveyed residents (94%) reported that inpatient APPs decreased or greatly decreased the number of pages received in the OR (Figure 3). Ninety percent of trainees reported a reduction in how often they needed to leave the OR to manage patient issues. Overall, 52% of residents reported an increase in uninterrupted OR time when APPs were on inpatient teams, with similar responses across all training levels (55% of interns, 61% of junior residents, and 42% of senior residents, p=0.67). A higher proportion of interns (55%) and junior residents (56%) reported an increase in case volume compared with senior residents (22%), although this did not reach statistical significance (p=0.14).

Figure 3.

Effect of inpatient APPs on residents

Overall Impressions

Most residents (88%) felt that APPs make their workload lighter or much lighter (Table 2). The majority (86%) also felt that APPs enhance or greatly enhance patient care. Fewer residents (64%) felt APPs enhanced the outpatient experience, and nearly one-third (34%) reported APPs had no impact on their clinic experience.

Table 2:

Overall Resident Perceptions of NP/PAs, Based on Each Respondent’s Cumulative Experience

| Question and response | n (%) |
|---|---|
| Overall, how does the presence of NP/PAs affect your workload? | |
| Much Lighter | 5 (10) |
| Lighter | 39 (78) |
| No Impact | 6 (12) |
| Heavier | 0 (0) |
| Much Heavier | 0 (0) |
| Overall, what impact do NP/PAs have on your education? | |
| Greatly Detracts | 1 (2) |
| Detracts | 2 (4) |
| No Impact | 23 (47) |
| Enhances | 19 (39) |
| Greatly Enhances | 4 (8) |
| How do you feel NP/PAs affect patient care? | |
| Greatly Detracts | 0 (0) |
| Detracts | 1 (2) |
| No Impact | 6 (12) |
| Enhances | 25 (50) |
| Greatly Enhances | 18 (36) |

NP, nurse practitioner; PA, physician assistant.

Residents were divided on the impact APPs had on their education: 47% reported that APPs enhance or greatly enhance their education, 47% reported no impact, and 6% reported that APPs detract or greatly detract from it (Table 2). Despite this, the majority (84%) felt that hiring more APPs would enhance the resident training experience. More than half of trainees (63%) felt that the ideal relationship between residents and APPs is that of peers. This response appeared to become more common as residents progressed in their training, with 45% of interns, 67% of junior residents, and 70% of senior residents reporting that a peer relationship with APPs is ideal, although this did not reach statistical significance (p=0.37). A minority (37%) reported that APPs should be under the supervision of residents.
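Several percentages in this section collapse the 5-point Likert scale into three categories (for example, “enhances or greatly enhances”). The Python sketch below shows that collapsing using the education counts from Table 2; the helper name `collapse_likert` is hypothetical. The education item sums to 49 responses rather than 50, so its percentages are computed with 49 as the denominator, which matches the values shown in Table 2.

```python
def collapse_likert(counts: dict[str, int],
                    groups: dict[str, tuple[str, ...]]) -> dict[str, float]:
    """Percentage of respondents in each collapsed category (hypothetical helper).

    The denominator is the number of respondents who answered this item.
    """
    answered = sum(counts.values())
    return {name: 100 * sum(counts[level] for level in levels) / answered
            for name, levels in groups.items()}


# Counts from Table 2 ("Overall, what impact do NP/PAs have on your education?").
education = {"Greatly Detracts": 1, "Detracts": 2, "No Impact": 23,
             "Enhances": 19, "Greatly Enhances": 4}
groups = {"detracts": ("Greatly Detracts", "Detracts"),
          "no impact": ("No Impact",),
          "enhances": ("Enhances", "Greatly Enhances")}

print({name: round(pct) for name, pct in collapse_likert(education, groups).items()})
# {'detracts': 6, 'no impact': 47, 'enhances': 47}
```

Applying the same helper to the workload counts in Table 2 (5 “much lighter” plus 39 “lighter” out of 50) gives the 88% reported above.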

Internal Reliability

Cronbach’s alpha showed excellent reliability for the administrative tasks domain (0.96), and good reliability for clinical experience (0.76), operative experience (0.69), and overall impressions (0.66) domains.

Qualitative Responses

Nearly a third of residents completed the optional open-ended question which asked: “[Is there] anything else you would like to tell us regarding NP/PAs and surgical training?” Two major themes emerged from this question. The primary theme was that APPs enhance patient care and add value by providing continuity of care to inpatients. Trainees recognized that continuity is otherwise difficult to maintain with residents who rotate between surgical services. The second theme was specific to robotic surgery cases, where trainees felt APPs within the OR could enhance the residents’ operative experience.

Discussion

We found that the majority of residents in a multi-specialty cohort felt that APPs reduced their workload, with the greatest influence on administrative tasks. Many residents also described an enhanced operative experience on surgical services where APPs were part of the inpatient team. On these services, residents felt that interruptions in the operating room were minimized: they received fewer pages and needed to leave the OR less frequently. However, when the APPs’ role extended into the OR, a third of residents felt that their chance to perform key parts of the case was reduced. Most residents had limited interaction with APPs in the OR. Only a few residents reported scrubbing with APPs for more than half of their cases, and these responses were from residents in the orthopaedic and plastic surgery programs.

Most residents reported minimal impact of APPs on their inpatient and outpatient clinical experiences. Many residents noted no change in the time spent providing consultation services or performing bedside procedures. This should be viewed positively, as these are integral parts of residency training. However, we did note a substantial reduction in the time that residents spent counseling patients. Gaining experience with, and ultimately becoming proficient in, thoughtful and comprehensive patient counseling is an important part of training, so a reduction in this educational activity must be interpreted cautiously. The Accreditation Council for Graduate Medical Education (ACGME) core competencies include interpersonal and communication skills and professionalism. The successful integration of APPs onto surgical services gives residents an opportunity to work alongside APPs as members of an interdisciplinary team. This can foster camaraderie, interpersonal relationships, and professional communication skills, as suggested by nearly two-thirds of residents (63%) identifying a peer relationship as the ideal relationship between residents and APPs. However, our findings also indicate that there may be missed opportunities to engage with patients directly and to develop the skills needed to counsel patients effectively. Therefore, surgical services that have employed APPs to assist with patient care should consider how this may change residents’ experience with patient counseling.

Overall, our findings suggest that residents feel patient care is enhanced by APPs and that APPs play an important and beneficial role on the surgical team. Furthermore, the qualitative data from our survey indicate that residents recognize and value the continuity of care that APPs provide to patients.

New competency demands and a concomitant reduction in resident duty hours make training surgical residents challenging. The ACGME requires that surgical trainees not only demonstrate competency in technical skills and medical knowledge, but also become proficient in systems-based practice and engage in practice-based learning and improvement.1,8 To achieve these objectives, many training programs have increased their utilization of advanced practice providers in various settings. Despite the rapidly increasing employment of APPs, few investigators have assessed how APPs might affect resident training.9

Prior studies have evaluated the care delivered by APPs versus residents and found that patient outcomes are comparable, readmission rates are similar,10 there is no difference in mortality,9,11 and hospital length of stay may even be reduced with APPs.10,12,13 However, the widespread implementation of APPs is associated with increased financial costs.14,15 To justify these costs, the integration of APPs onto surgical services must help residents meet and exceed competencies. In addition, any change to the structure of a surgical service may have unintended consequences. We must therefore thoroughly evaluate our current training paradigm to understand what trade-offs may have been made to incorporate APPs into surgical practice.

Objective data such as case logs, in-service exam scores, and resident work hours may provide some insight into the impact of APPs.12,16 Spisso and colleagues quantified the time saved by residents when APPs were introduced to the trauma service at the University of California Davis Medical Center.13 Similarly, work from Christmas and Huynh noted a reduction in resident work hours with the integration of APPs onto the trauma surgery service.12,16 These studies provide important objective data, but they are limited to single surgical services and do not capture the interaction between residents and APPs.

A few investigators have attempted to define the interpersonal interactions between residents and APPs. Kahn et al. conducted a survey of surgery residents to evaluate their experience with APPs in the critical care unit.17 Those residents reported enhanced patient care and a reduction in clinical workload. However, the investigators also found that residents felt APPs detracted from the training experience, particularly when nurses preferentially contacted APPs about patient matters. Buch and colleagues conducted a single-institution survey of residents and APPs to evaluate communication.18 They noted a discrepancy between APPs’ and residents’ views of the contributions that APPs make to clinical education, and of the role of APPs in the hierarchy of the training program. These studies highlight interesting aspects of the resident-APP relationship, but do not consider the global resident experience.

We sought to address these limitations by evaluating the effect of APPs on surgical training across services and specialties, with particular attention to the operative experience. At our institution, 40% of residents felt that their case volume increased on services with inpatient APPs, while 54% reported no impact. Responses appeared to vary by training year: interns and junior residents were more likely than senior residents to report an increase in case volume, although this difference did not reach statistical significance. Junior trainees are also more likely to be responsible for administrative tasks and thus more likely to benefit from the reduction in non-clinical responsibilities afforded by APPs. In addition, our findings speak to an improved operative experience,19–21 as residents reported less frequent interruptions while operating when APPs were part of the inpatient team. The ability to be present and engaged in operative learning is vital for surgical training.

There are limitations to our study. This survey was conducted at a single academic institution, and therefore our findings may have limited generalizability. APPs were also utilized in different capacities across surgical services; however, the roles APPs occupy at our institution are reflective of the full spectrum in which APPs are employed at other surgical programs. This survey was not limited to general surgery trainees. We felt that the training goals and challenges of all surgical programs are similar, and thus restricting the survey to a single program was not necessary. However, the training needs of ENT, orthopaedic, neurosurgery, plastic surgery, vascular surgery, and urology residents may differ from those of general surgery residents, so a closer look at APPs within general surgery training may be warranted. Lastly, our objective was to elicit the subjective experience of surgical residents, and we did not evaluate objective measures such as American Board of Surgery In-Training Examination (ABSITE) scores or case logs.

Conclusions

Most surgical residents felt that APPs reduced their workload, most notably by reducing administrative tasks. The operative experience was enhanced in most instances, with most residents reporting a reduction in interruptions. Moreover, most residents felt that hiring more APPs for the inpatient setting would improve both training and patient care. However, we must be mindful of the potential unintended consequences of integrating APPs onto surgical services, such as a reduction in direct patient counseling by residents. While objective measures such as case volume and in-service exam scores allow for a comparative assessment of programs, these measures fail to capture the full experience of the resident. Our findings suggest that APPs have enhanced surgical residents’ training experience. Further qualitative work is needed to evaluate this important topic, both within individual programs and nationally.

Footnotes

Disclosure Information: Nothing to disclose.

Presented at the 98th Annual Meeting of the New England Surgical Society, Bretton Woods, NH, September 2017.

References:

- 1. Podnos YD, Williams RA, Jimenez JC, et al. Reducing the noneducational and nonclinical workload of the surgical resident; defining the role of the health technician. Curr Surg 2003;60:529–532.

- 2. Shea JA, Willett LL, Borman KR, et al. Anticipated consequences of the 2011 duty hours standards: views of internal medicine and surgery program directors. Acad Med 2012;87:895–903.

- 3. Philibert I, Friedmann P, Williams WT. New requirements for resident duty hours. JAMA 2002;288:1112–1114.

- 4. Hawkins CM, Bowen MA, Gilliland CA, et al. The impact of nonphysician providers on diagnostic and interventional radiology practices: operational and educational implications. J Am Coll Radiol 2015;12:898–904.

- 5. Sansbury LB, Klabunde CN, Mysliwiec P, Brown ML. Physicians’ use of nonphysician healthcare providers for colorectal cancer screening. Am J Prev Med 2003;25:179–186.

- 6. Colvin L, Cartwright A, Collop N, et al. Advanced practice registered nurses and physician assistants in sleep centers and clinics: a survey of current roles and educational background. J Clin Sleep Med 2014;10:581–587.

- 7. Hsu C-C, Sandford BA. The Delphi technique: making sense of consensus. Pract Assess Res Eval 2007;12:1–8.

- 8. Sidhu RS, Grober ED, Musselman LJ, Reznick RK. Assessing competency in surgery: where to begin? Surgery 2004;135:6–20.

- 9. Johal J, Dodd A. Physician extenders on surgical services: a systematic review. Can J Surg 2017;60:172–178.

- 10. Morris DS, Reilly P, Rohrbach J, et al. The influence of unit-based nurse practitioners on hospital outcomes and readmission rates for patients with trauma. J Trauma Acute Care Surg 2012;73:474–478.

- 11. Broers C, Hogeling-Koopman J, Burgersdijk C, et al. Safety and efficacy of a nurse-led clinic for post-operative coronary artery bypass grafting patients. Int J Cardiol 2006;106:111–115.

- 12. Christmas AB, Reynolds J, Hodges S, et al. Physician extenders impact trauma systems. J Trauma 2005;58:917–920.

- 13. Spisso J, O’Callaghan C, McKennan M, Holcroft JW. Improved quality of care and reduction of housestaff workload using trauma nurse practitioners. J Trauma 1990;30:660–663; discussion 663–665.

- 14. George BP, Probasco JC, Dorsey ER, Venkatesan A. Impact of 2011 resident duty hour requirements on neurology residency programs and departments. Neurohospitalist 2014;4:119–126.

- 15. McClellan CM, Cramp F, Powell J, Benger JR. A randomised trial comparing the cost effectiveness of different emergency department healthcare professionals in soft tissue injury management. BMJ Open 2013;3(1).

- 16. Huynh TT, Blackburn AH, McMiddleton-Nyatui D, et al. An initiative by midlevel providers to conduct tertiary surveys at a level I trauma center. J Trauma 2010;68:1052–1058.

- 17. Kahn SA, Davis SA, Banes CT, et al. Impact of advanced practice providers (nurse practitioners and physician assistants) on surgical residents’ critical care experience. J Surg Res 2015;199:7–12.

- 18. Buch KE, Genovese MY, Conigliaro JL, et al. Non-physician practitioners’ overall enhancement to a surgical resident’s experience. J Surg Educ 2008;65:50–53.

- 19. Ferguson CM, Kellogg KC, Hutter MM, Warshaw AL. Effect of work-hour reforms on operative case volume of surgical residents. Curr Surg 2005;62:535–538.

- 20. Hope WW, Griner D, Van Vliet D, et al. Resident case coverage in the era of the 80-hour workweek. J Surg Educ 2011;68:209–212.

- 21. Condren AB, Divino CM. Effect of 2011 Accreditation Council for Graduate Medical Education duty-hour regulations on objective measures of surgical training. J Surg Educ 2015;72:855–861.