Abstract

Important challenges in retinal microsurgery include prolonged operating time, inadequate force feedback, and poor depth perception due to a constrained top-down view of the surgery. The introduction of robot-assisted technology could potentially deal with such challenges and improve the surgeon’s performance. Motivated by such challenges, this work develops a strategy for autonomous needle navigation in retinal microsurgery aiming to achieve precise manipulation, reduced end-to-end surgery time, and enhanced safety. This is accomplished through real-time geometry estimation and chance-constrained Model Predictive Control (MPC) resulting in high positional accuracy while keeping scleral forces within a safe level. The robotic system is validated using both open-sky and intact (with lens and partial vitreous removal) ex vivo porcine eyes. The experimental results demonstrate that the generation of safe control trajectories is robust to small motions associated with head drift. The mean navigation time and scleral force for MPC navigation experiments are 7.208 s and 11.97 mN, which can be considered efficient and well within acceptable safe limits. The resulting mean errors along lateral directions of the retina are below 0.06 mm, which is below the typical hand tremor amplitude in retinal microsurgery.

I. INTRODUCTION

Retinal microsurgery requires precise manipulation of the delicate and non-regenerative tissue of the retina, while using high precision surgical tools. Its requirement for micrometer accuracy often exceeds the capability of even the most experienced surgeons. The success of retinal microsurgery relies on a clear view of the surgical workspace and precise hand-eye coordination while managing physiological hand tremor. Riviere and Jensen [1] have reported that the root mean square amplitude of hand tremor in retinal microsurgery is around 0.182 mm while the required positioning accuracy of subretinal injection can be as small as 0.025 mm [2]. Risks that may lead to damage of the retina and sclerotomy port must be reduced to avoid irreversible complications to the eye tissue. One challenge in retinal microsurgery is prolonged surgery duration. Loriga et al. [3] have shown a positive association between postoperative pain and duration of surgery. Experimentally induced light toxicity increases significantly after 13 minutes [4]. It is also thought that muscular and mental fatigue develop with prolonged operating times and exacerbate concerns about fine motor control and hand tremor [5]. Given the physical limitations of the human surgeon and the demand for short surgery duration, robot-assisted technology for autonomy and safety is necessary.

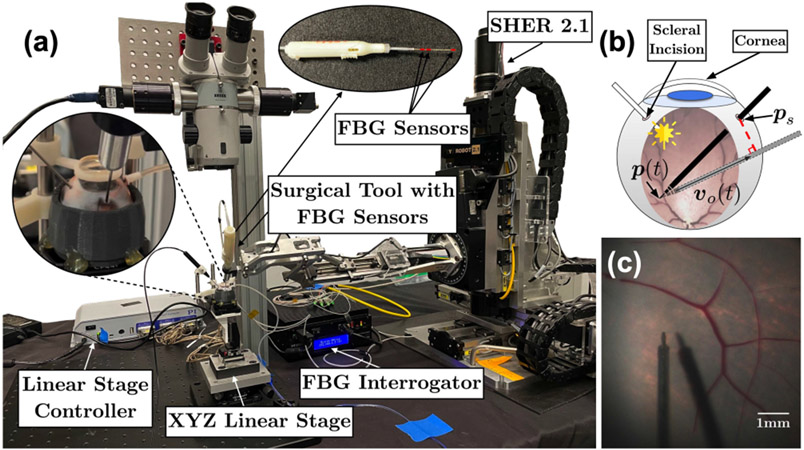

Various works have focused on robotic applications for retinal microsurgery. In these studies, artificial eye phantoms are commonly used due to their fixed and simple geometry. However, the porcine eyes used in this study pose more challenges than artificial eye phantoms, since biological tissues have more irregular and unpredictable geometry. Furthermore, the additional physical constraints applied to the Remote-Center-of-Motion (RCM) at the scleral incision point (Fig. 1b) make the robotic system more complicated. In this work, we implement both open-sky and intact ex vivo porcine eye experiments. We show that with this experimental setup (Fig. 1a), we can achieve high image resolution and a field of view approaching that of a stereo microscope (Fig. 1c).

Fig. 1.

Robotic system overview: (a) Scleral force measurement setup. (b) Scleral incision point and cornea. The red-dashed line is set to zero as the scleral constraint. (c) The top-down view of an intact porcine eye.

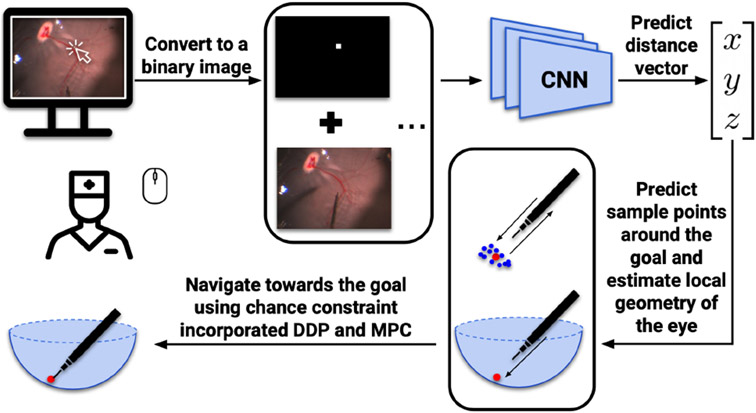

Our previous work [6], [7] demonstrated that autonomous navigation was possible using predicted depth information and optimal control. We used a deep neural network to assess depth perception, and predict the distance vector based on a top-down microscope image and a user-defined goal position, Fig. 2. Furthermore, we proposed a method [8] that combined real-time geometry estimation and chance constraint to generate a safer autonomous navigation process. However, all these works were validated using only silicone eye phantoms. Moreover, we only dealt with the simplest situation in which the eye was static.

Fig. 2.

Workflow of autonomous navigation process using MPC.

The present work extends and improves prior results in several ways. First, we demonstrate improved (in terms of completion time, accuracy, and safety) autonomous navigation. To better estimate the local geometry of the retina, we add a regularization term to the geometry fitting equation. We improve our chance constraint formulation by linearizing all ellipsoid parameters. Through the use of MPC and chance constraint incorporated Differential Dynamic Programming (DDP) [9], the safety and navigation speed are thereby guaranteed. This could potentially relieve the surgeon of the burden of navigating the tool to the desired position, and allow the surgeon to focus on the main surgical objectives. Second, we evaluate the robotic system in a more realistic setting using both open-sky and intact ex vivo porcine eyes. Third, we show that the system is sufficiently robust to navigate to the updated goal position during simulated small head drift. To demonstrate the effectiveness of our scleral constraint, we measure the scleral force using a surgical tool integrated with Fiber Bragg Grating (FBG) sensors (Fig. 1a) developed by He et al. [10]. The results show that the scleral force can be maintained at a safe level.

II. RELATED WORK

A. Hand Tremor and Scleral Force Reduction

Numerous studies have focused on reducing hand tremor and scleral forces. Our group from Johns Hopkins University [11], [12] has developed a co-manipulation system called the Steady-Hand Eye Robot (SHER) that measures the force applied by the user to filter hand tremor. Gonenc et al. [13] integrated a force-sensing motorized micro-forceps with the hand-held device Micron tool to reduce hand tremor. Ida et al. [14] designed a teleoperated system to enable microcannulation experiments on an ex vivo porcine eye. To detect scleral force, He et al. [10] developed a multi-functional force-sensing instrument that measured both the scleral and tip forces. A recurrent neural network was further designed and trained to predict future safety status [15].

B. Depth Estimation

Research on depth estimation in image guided retinal surgery relies on the feedback of features generated by the light source. Zhou et al. [16] have developed a method to measure the distance between the instrument and surface based on projection patterns of the spotlight in the microscope camera. Koyama et al. [17] proposed a shadow-based autonomous positioning using two robotic manipulators. They automated the motion of the light guide to ensure that the shadow of the instrument was always inside the microscopic view. Our prior work [6], [7], [8] demonstrated that a deep neural network can be trained to predict depth with high accuracy. The work of others has utilized OCT [18], [19], [20], [21], which can provide significantly higher resolution than a microscope camera, but at a higher cost.

C. Head Drift or Eye Movement

Head drift or eye movement is an underappreciated problem during intraocular surgery. It may significantly increase the challenge or even prevent successful procedure completion. Severe intraoperative complications may result and diminish surgical outcomes [22]. McCannel et al. [23] reported that in 20 out of 230 vitreoretinal surgery cases major head movement occurred. Notably, 18 out of 37 snoring patients moved their heads substantially during the surgery.

III. PROBLEM SETUP

To achieve the autonomous navigation process in Fig. 2, we first define the tool-tip state of the surgical tool attached to the robot end-effector as , where and (3) are the tool-tip position and orientation, and are the linear and angular velocity relative to robot base frame. The control inputs are defined as , where and are translational forces and joint torques. The full state can be determined by robot joint encoders and forward kinematics.

Second, we use a convolutional neural network to achieve depth perception. The input of our network combines a RGB top-down image of the current surgical scene and a binary goal position image defined by users. The observed top-down image from a fixed microscope camera at time is defined as . The user is able to specify a 2-D goal position by clicking directly on the displayed real-time image. We convert this goal position into a binary image and attach as an additional layer along with to form the input. The output is a 3-D vector defined by , which is a vector predicted by the network from the current tool-tip position to the user-defined goal position. is the predicted distance between tool-tip and goal position.
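The input construction described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `make_network_input`, the square shape of the goal marker, and its `goal_radius` are assumptions made for the example; only the idea of stacking a binary goal image onto the RGB frame as an additional layer comes from the text.

```python
import numpy as np

def make_network_input(rgb_image, goal_xy, goal_radius=2):
    """Stack an RGB frame with a binary goal mask into an (H, W, 4) array.

    goal_xy is the clicked pixel as (column, row). The square marker and
    its radius are illustrative assumptions, not values from the paper.
    """
    height, width, _ = rgb_image.shape
    goal_mask = np.zeros((height, width), dtype=rgb_image.dtype)
    gx, gy = goal_xy
    # Clamp the marker to the image bounds.
    r0, r1 = max(gy - goal_radius, 0), min(gy + goal_radius + 1, height)
    c0, c1 = max(gx - goal_radius, 0), min(gx + goal_radius + 1, width)
    goal_mask[r0:r1, c0:c1] = 1
    # Append the mask as a fourth channel next to R, G, and B.
    return np.concatenate([rgb_image, goal_mask[..., None]], axis=-1)
```

A network consuming this array would need a first convolution that accepts four input channels instead of three.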

Third, we generate a collision free tool-tip trajectory using chance constraint incorporated DDP. The cost function and constraints over time interval are defined as:

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |

where is a unit vector representing the current orientation of surgical tool. is the scleral incision point. The translational motion of the instrument at this point will cause a net force applied to the sclera, which could damage the sclera. The scleral constraint closes the gap between the current and desired orientation of the tool shaft (Fig. 1b), and guarantees the range of movement within a small threshold. is the estimated ellipsoid center, is the level of confidence, and is a length parameter defined as:

| (6) |

where is the projection point that projects the corresponding sample point onto the estimated ellipsoid surface along the direction from pointed to the sample point. For example, if the estimated geometry is a sphere instead of an ellipsoid, is equal to the radius.
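The projection step can be illustrated with a short sketch. It assumes an axis-aligned ellipsoid described by its center and three semi-axes, and it assumes the length parameter is the distance from the center to the projection point, which is consistent with the sphere remark above but is not confirmed by (6). The name `project_to_ellipsoid` is hypothetical.

```python
import math

def project_to_ellipsoid(center, semi_axes, sample):
    """Project `sample` onto an axis-aligned ellipsoid along the ray from `center`.

    Returns the projection point and its distance from the center.
    Axis alignment is a simplifying assumption for this sketch.
    """
    direction = [s - c for s, c in zip(sample, center)]
    # Value of the normalized quadratic form at the sample point.
    q = math.sqrt(sum((d / a) ** 2 for d, a in zip(direction, semi_axes)))
    if q == 0.0:
        raise ValueError("sample coincides with the ellipsoid center")
    scale = 1.0 / q  # scaling that places the point exactly on the surface
    projection = [c + scale * d for c, d in zip(center, direction)]
    length = scale * math.sqrt(sum(d * d for d in direction))
    return projection, length
```

For a sphere, all three semi-axes are equal and the returned length equals the radius for every sample point.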

The stop position of the tool-tip should be immediately above the goal position, without contacting the retinal surface. We summarize the process (Fig. 2) as follows: 1) Define a 2-D goal position through mouse clicking. 2) Collect over 200 sample points around the goal position based on predictions of the network. 3) Estimate the local retinal geometry as an ellipsoid using the weighted least squares method. 4) Use DDP-based MPC to navigate the surgical tool to the goal position autonomously and safely.

IV. TECHNICAL APPROACH

A. Experimental Setup

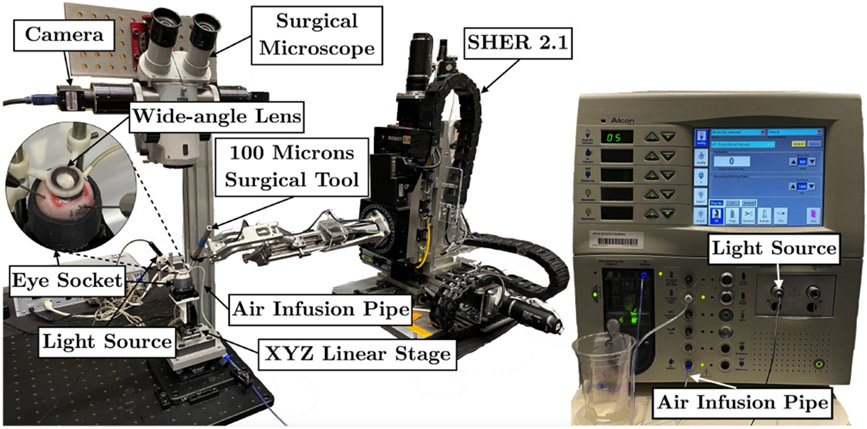

Both open-sky and intact porcine eye experimental setups contain a surgical robot manipulator (SHER 2.0 for open-sky eye and SHER 2.1 for intact eye experiments) with a surgical needle attached to its end-effector, a Q-522 Q-motion XYZ linear stage from Physik Instrumente (PI), an E-873.3QTU linear stage controller (PI), and a microscope camera to obtain the current image. The light source for the open-sky porcine eye experiments is on the right, while for the intact porcine eye experiments we use a brighter light source on the left to enhance visual feedback inside the eye. An Accurus 800CS vitrectomy machine from Alcon Surgical is used to remove the lens and vitreous humor. The vitrectomy infusion maintains the intraocular pressure for intact eyes, avoiding eye collapse during experiments. A 3D-printed eye socket is used to secure and stabilize the eye. A wide-angle lens is used to provide a wide field of view and to simulate the distortion caused by the human lens (Fig. 3). Balanced saline solution is used to fill the gap between the lens and the cornea. The outer diameter of the tool-tip is 0.1 mm.

Fig. 3.

Intact eye experimental setup. The vitrectomy machine provides the light source and air infusion to maintain the intraocular pressure.

B. Eye Preparation

The most time-consuming part before data collection is the preparation of suitable intact eyes that allow a clear top-down camera view. Retinal detachment and corneal opacity are two of the main obstacles in carrying out the experiments. While the intact eye allows immediate capture of a clear view through the lens and vitreous humor directly, the view is lost relatively quickly. As it requires one hour to collect 150 trajectories for one eye, we remove the lens and leave most of the vitreous humor inside the eye. Maintaining corneal clarity remains a challenge that is mitigated by keeping the cornea wet and using the freshest eyes obtainable.

C. Data Collection

A total of 1800 trajectories are collected from 12 different porcine eyes (150 for each) for the open-sky eye experiments, and 2100 trajectories are collected from 14 different porcine eyes for the intact eye experiments. Since the geometry of fresh cadaveric porcine eyes varies from eye to eye, we collect the training data manually. To collect one trajectory for each experiment, we start the surgical tool from a random position and navigate it to a random goal position in a straight line. Both current surgical scene images and tool-tip positions are recorded at 15 fps. We manually label the tool-tip stop position in the last frame of each trajectory, and generate the binary goal image based on it. The direction and distance vector labels are calculated by subtracting the last-frame tool-tip position from those of the other frames.
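The labeling step can be sketched as below. The sign convention is an assumption: the sketch returns vectors pointing from each frame's tool-tip position to the final position, matching the network output defined in Section III; the helper name `distance_vector_labels` is hypothetical.

```python
import numpy as np

def distance_vector_labels(tool_tip_positions):
    """Compute per-frame labels from one recorded trajectory.

    tool_tip_positions: (T, 3) array of XYZ tool-tip positions in mm,
    where the last row is the manually confirmed stop position.
    Returns (T, 3) vectors toward the stop position and (T,) distances.
    """
    positions = np.asarray(tool_tip_positions, dtype=float)
    goal = positions[-1]
    vectors = goal - positions               # assumed sign: frame -> goal
    distances = np.linalg.norm(vectors, axis=1)
    return vectors, distances
```

The last frame always receives a zero vector, so the network sees examples of the stop condition in every trajectory.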

D. Network Training

Our network is built on ResNet-18 [24] and follows our previous work [7], [8]. 75% of the collected trajectories are randomly selected for training and the rest are used as a validation dataset. We discretize the continuous XYZ coordinates into 854, 887, and 360 bins for both experiments to better label distance vectors. Each bin represents 0.02 mm. The cross-entropy loss is used as the loss function.
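The discretization can be sketched as follows. With 0.02 mm bins, the three axes span 17.08 mm, 17.74 mm, and 7.20 mm. The lower bound of each axis range (`lower_mm`) is not stated in the text and is a hypothetical parameter here, as are the function names.

```python
import numpy as np

BIN_WIDTH_MM = 0.02
NUM_BINS = np.array([854, 887, 360])  # X, Y, Z bin counts from the text

def to_bins(vector_mm, lower_mm):
    """Map a continuous XYZ vector (mm) to per-axis class indices."""
    offsets = (np.asarray(vector_mm, float) - np.asarray(lower_mm, float)) / BIN_WIDTH_MM
    indices = np.floor(offsets).astype(int)
    # Values outside the covered range fall into the first or last bin.
    return np.clip(indices, 0, NUM_BINS - 1)

def from_bins(indices, lower_mm):
    """Map class indices back to bin-center coordinates (mm)."""
    return np.asarray(lower_mm, float) + (np.asarray(indices) + 0.5) * BIN_WIDTH_MM
```

Decoding to bin centers bounds the quantization error by half a bin, i.e. 0.01 mm per axis.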

E. Regularized Geometry Fitting

We use weighted least squares ellipsoid estimation for local retinal geometry fitting. The ellipsoid fitting is a continuation of works by Li [25] and Reza [26]. We add a regularized term to the generalized ellipsoid equation, which fits better to our model with some prior information:

| (7) |

where , , is the regularization gain, , , , and are the ellipsoid center position and radius based on prior information. Let:

Equation (7) can be written with weight matrix as:

| (8) |

where , and is the distance variance calculated from the validation dataset.

Using the Lagrange multiplier method, is solved as an eigenvalue and eigenvector problem. We can rewrite (7) as:

| (9) |

where is the estimated center of ellipsoid, and is a lower triangular matrix.

Then (5) can be expressed as:

| (10) |
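To illustrate how a regularization term pulls a weighted least squares fit toward prior geometry, the sketch below fits a sphere rather than a general ellipsoid. This is a deliberately simplified stand-in, not the formulation in (7)-(9): it uses the algebraic sphere model, a Tikhonov-style penalty toward a prior center and radius, and per-point weights that would plausibly be inverse variances. All names are hypothetical.

```python
import numpy as np

def fit_sphere_regularized(points, weights, prior_center, prior_radius, gain):
    """Weighted, regularized algebraic sphere fit.

    Model: |p|^2 = 2 c.p + (r^2 - |c|^2), linear in
    theta = [cx, cy, cz, r^2 - |c|^2].
    Minimizes sum_i w_i (A_i theta - b_i)^2 + gain * |theta - theta_prior|^2.
    """
    p = np.asarray(points, dtype=float)      # (N, 3) sample points
    w = np.asarray(weights, dtype=float)     # (N,) per-point weights
    c0 = np.asarray(prior_center, dtype=float)
    A = np.hstack([2.0 * p, np.ones((len(p), 1))])
    b = np.sum(p * p, axis=1)
    theta_prior = np.append(c0, prior_radius ** 2 - c0 @ c0)
    AtW = A.T * w                            # applies the diagonal weight matrix
    lhs = AtW @ A + gain * np.eye(4)
    rhs = AtW @ b + gain * theta_prior
    theta = np.linalg.solve(lhs, rhs)
    center = theta[:3]
    radius = np.sqrt(theta[3] + center @ center)
    return center, radius
```

With `gain = 0` the fit depends only on the data; as `gain` grows, the estimate approaches the prior center and radius, which helps when the sample points cover only a small patch of the surface.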

F. Chance Constraint Incorporated DDP and MPC

It is difficult to incorporate the chance constraint into the cost function directly. However, Blackmore et al. [27] have proved that a linear chance constraint is equivalent to a deterministic constraint on the mean of x, denoted as:

| (11) |

where is a multivariate random variable with fixed covariance, is the level of confidence, , and is the standard error function.
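The conversion from a linear chance constraint to a deterministic one can be sketched numerically. The sketch assumes a Gaussian random variable and a constraint of the form P(aᵀx ≤ b) ≥ confidence; the exact symbols and sign conventions of (11) may differ. It uses the standard normal quantile, which equals √2 · erf⁻¹(2 · confidence − 1). Function names are hypothetical.

```python
import math
from statistics import NormalDist

def chance_constraint_margin(a, covariance, confidence):
    """Back-off c such that a^T mean <= b - c ensures P(a^T x <= b) >= confidence.

    Assumes x is Gaussian with the given covariance (list of lists).
    """
    n = len(a)
    variance = sum(a[i] * covariance[i][j] * a[j] for i in range(n) for j in range(n))
    # Standard normal quantile, equal to sqrt(2) * erfinv(2 * confidence - 1).
    return NormalDist().inv_cdf(confidence) * math.sqrt(variance)

def satisfies_chance_constraint(a, b, mean, covariance, confidence):
    """Check the deterministic constraint on the mean."""
    projected_mean = sum(ai * mi for ai, mi in zip(a, mean))
    return projected_mean <= b - chance_constraint_margin(a, covariance, confidence)
```

A higher confidence level or a larger covariance increases the margin, so the planned mean trajectory is kept further from the constraint boundary.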

Instead of approximating the nonlinear constraint at the center only, as in our previous work [8], we linearize (10) at both and . and can be derived by running geometry estimation several times. Then (10) can be written as:

| (12) |

where is the element of matrix:

where derives from , derives from , then:

| (13) |

where , and . Let and , then (13) is equal to:

where is a combination of and . If , then .

MPC is then implemented by generating trajectories using chance constraint incorporated DDP along the actual navigation track. The time horizon is set to 5 s. Based on each trajectory generated by DDP, we navigate towards the predicted goal for a small step. Then we predict the goal position once again with the current top-down image and use this newly predicted goal to generate a new trajectory using DDP. We keep navigating the surgical tool in small steps and repeat the whole process until the predicted distance is less than 0.1 mm.
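The receding-horizon loop described above can be sketched as follows. The callables `predict_goal_vector` (standing in for the network) and `plan_trajectory` (standing in for chance constraint incorporated DDP) are hypothetical placeholders supplied by the caller, and the iteration cap is an added safeguard rather than part of the described method.

```python
import math

def receding_horizon_navigation(state, predict_goal_vector, plan_trajectory,
                                stop_distance_mm=0.1, max_iterations=1000):
    """Replan after every small step until the predicted distance is small.

    predict_goal_vector(state) -> (dx, dy, dz) in mm, from tool-tip to goal.
    plan_trajectory(state, goal_vector) -> non-empty list of future states.
    Returns the final state and the number of steps executed.
    """
    for iteration in range(max_iterations):
        goal_vector = predict_goal_vector(state)
        if math.hypot(*goal_vector) < stop_distance_mm:
            return state, iteration
        trajectory = plan_trajectory(state, goal_vector)
        # Execute only the first step of the plan, then predict and replan.
        state = trajectory[0]
    raise RuntimeError("goal not reached within max_iterations")
```

Executing only the first step of each plan is what lets the controller absorb prediction updates, including a goal that moves during navigation.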

G. Optical Flow

We simulate the head drift by moving the XYZ linear stage randomly along the XY axes. Since our goal position is selected via mouse click on the monitor, the clicked goal on the screen will not move along with the linear stage movement. We must therefore track the goal position movement based on the top-down images. Optical flow is a feasible way to solve this. We first capture a 300 × 160 pixel image area before and after the linear stage movement. Then we use the Shi-Tomasi Corner Detector [28] to detect relevant features in both images. Then we use the Lucas-Kanade method [29] to derive the resulting motion between the two images. The goal position on the screen and the RCM point are updated based on these calculations.
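Once features have been tracked between the two images, the goal update reduces to estimating a single image-plane displacement. The sketch below assumes the tracked point arrays come from a tracker such as OpenCV's `cv2.goodFeaturesToTrack` followed by `cv2.calcOpticalFlowPyrLK`, which return points shaped (N, 1, 2) and a per-point status flag. Taking the median displacement is an assumption made here for robustness to outliers; the text does not state how the motion is aggregated.

```python
import numpy as np

def shift_goal(goal_xy, prev_points, next_points, tracked):
    """Shift a clicked goal by the median displacement of tracked features.

    prev_points, next_points: feature locations before and after the motion,
    shaped (N, 2) or (N, 1, 2). tracked: per-feature flags, nonzero if the
    feature was found in the second image.
    """
    prev_points = np.asarray(prev_points, dtype=float).reshape(-1, 2)
    next_points = np.asarray(next_points, dtype=float).reshape(-1, 2)
    keep = np.asarray(tracked).reshape(-1).astype(bool)
    if not keep.any():
        raise ValueError("no features were tracked")
    # Median is less sensitive than the mean to a few bad matches.
    displacement = np.median(next_points[keep] - prev_points[keep], axis=0)
    return np.asarray(goal_xy, dtype=float) + displacement, displacement
```

The same displacement, converted from pixels to millimeters, could be applied to the RCM point.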

H. Scleral Force Measurement

We use a surgical tool integrated with Fiber Bragg Grating (FBG) sensors (Fig. 1a) to measure the scleral force during the navigation process. Previous work [10] has related the raw wavelength data of the FBGs to the scleral force along the XY axes. The measurement starts from the initial position and ends by retracting the surgical tool to the initial position again. Before each trial, we rebias the force reading at the initial position. After that, we run some preliminary navigation tests to make sure that the force reading returns to the original level when we retract the tool (i.e., the force reading should be close to 0 mN before and after each test). In this work, we want to show that by using scleral constraint incorporated control, we can keep the scleral forces at the scleral incision point within a safe limit. We set this safe limit to 115 mN based on mean forces using manual manipulators in in vivo rabbit experiments performed by two retina specialists [30].
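The rebiasing and safety check can be sketched as below. It assumes the scleral force magnitude is the Euclidean norm of the X and Y components after subtracting the reading at the initial position; the function name and the returned dictionary layout are hypothetical.

```python
import math

SAFE_LIMIT_MN = 115.0  # safe limit used in this work, from [30]

def scleral_force_summary(fx_mn, fy_mn, bias_xy=(0.0, 0.0)):
    """Summarize a force trace given X and Y components in mN.

    bias_xy is the reading at the initial position, subtracted from every
    sample (rebiasing). Returns mean and peak magnitude plus a limit check.
    """
    magnitudes = [math.hypot(fx - bias_xy[0], fy - bias_xy[1])
                  for fx, fy in zip(fx_mn, fy_mn)]
    peak = max(magnitudes)
    return {
        "mean": sum(magnitudes) / len(magnitudes),
        "max": peak,
        "within_limit": peak <= SAFE_LIMIT_MN,
    }
```

Restricting the input trace to the samples between navigation start and goal arrival gives a mean comparable to the per-trial means reported later.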

V. EXPERIMENTS

A. Open-sky Porcine Eye Experiments

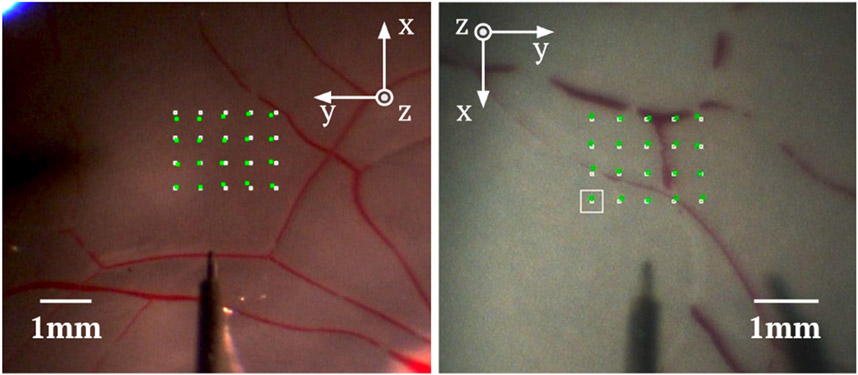

We used the open-sky porcine eye to evaluate the performance of our network with and without a chance constraint. For each trajectory, we navigated the surgical tool directly towards the goal until the tool-tip was 2 mm away from the goal position. Then we followed the DDP-generated trajectory to reach the goal. Two eyes were tested. For each eye, we navigated the surgical tool to 20 pre-defined positions autonomously. The 20 positions were selected from a 60 × 80 pixel area in the camera view (Fig. 4). The actual size of the selected test area was 1.5 × 2.0 mm. Depth perception performance was evaluated based on the number of trajectories that hit the retinal surface. This was done by assessing light reflection patterns around the contact area in the recorded video.

Fig. 4.

Experimental results for the open-sky eye (left) and the intact eye (right). The white points are pre-defined goal positions and the green points are actual landing positions. The white square is the current goal position. The directions of XY axes are different due to the utilization of different SHERs for the two experiments.

B. Intact Porcine Eye Experiments

In the intact porcine eye experiments, we first navigated to 20 pre-defined goal positions (within a 60 × 80 pixel area) without using the chance constraint. We used this result as a baseline. Then we navigated to the same 20 pre-defined goal positions using MPC. The actual size of the test area was 1.32 × 1.76 mm due to the different magnification of the microscope. The tool was considered to have reached the goal if the norm of the network prediction was smaller than 0.1 mm. For comparison, we added a second stop condition and repeated the experiment: the tool was also stopped if the norm of the network prediction was smaller than 0.3 mm and the norm of the tool-tip error along the XY axes was smaller than 2.3 pixels. Finally, we tested the performance of our system in the presence of modeled head drift using the two stop conditions mentioned above. We controlled the XYZ linear stage to move randomly along the XY axes for a small distance during each navigation process. The movement range for each axis was [−0.25 mm, 0.25 mm]. Head drift was only simulated within this small range because larger motions would move the working area out of the fixed camera view. In addition, the scleral forces applied to the scleral wall could become large enough to bend the surgical tool. When there was residual vitreous humor in the eye, we were unable to evaluate the performance of depth perception using the reflection of light. In this instance we assessed tool-to-retina contact by retinal surface deformation at the user-defined goal position.
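The pixel-to-millimeter scales implied by the two test areas, and the two stop conditions, can be written out as a short sketch. The scales follow directly from the stated areas (1.5 mm over 60 pixels and 1.32 mm over 60 pixels); the function names and the `relaxed` flag are hypothetical.

```python
import math

def mm_per_pixel(length_mm, length_px):
    """Image scale from a known physical length and its pixel length."""
    return length_mm / length_px

OPEN_SKY_MM_PER_PX = mm_per_pixel(1.5, 60)   # 0.025 mm per pixel
INTACT_MM_PER_PX = mm_per_pixel(1.32, 60)    # 0.022 mm per pixel

def should_stop(predicted_vector_mm, xy_error_px, relaxed=False):
    """Evaluate the stop conditions described in the text.

    Strict condition: predicted distance below 0.1 mm.
    Relaxed condition (optional): predicted distance below 0.3 mm and
    XY tool-tip error in the image below 2.3 pixels.
    """
    distance = math.hypot(*predicted_vector_mm)
    if distance < 0.1:
        return True
    return relaxed and distance < 0.3 and xy_error_px < 2.3
```

At the intact-eye scale, the 2.3 pixel threshold corresponds to about 0.051 mm in the image plane.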

C. Scleral Force Measurement

We navigated the FBG-sensor-integrated tool to 10 pre-defined positions to measure the scleral force during the MPC navigation. To evaluate the head drift motion, we navigated to a fixed position 10 times. Each time, we randomly moved the linear stage for a small distance along the XY axes. We increased the movement range for each axis to [−0.5 mm, 0.5 mm] to make the change of force more visible. All goal positions were manually defined above the retinal surface.

VI. RESULTS

For the best network model of the open-sky eye experiments, the mean training errors for the XYZ axes were 0.028, 0.016, and 0.042 mm and the mean validation errors for the XYZ axes were 0.058, 0.044, and 0.087 mm respectively. For the best network model of the intact eye experiments, the mean training errors for the XYZ axes were 0.049, 0.041, and 0.054 mm and the mean validation errors for the XYZ axes were 0.152, 0.102, and 0.157 mm respectively. The relationship between distance groups and prediction errors is plotted in Fig. 5. A trend was evident: the closer the tool was to the retina, the smaller the prediction errors. The validation errors for the intact eye were larger than those for the open-sky eye due to increased blur in the top-down training images. This was unavoidable because the view through the intact cornea degraded progressively over the time required for data collection. Despite corneal opacity, the prediction accuracy over the last few millimeters remained usable. Fig. 5 shows that the error there was in an acceptable range of approximately 0.05 mm. The mean X and Y errors in Table I are the mean errors between pre-defined goal positions and actual tool-tip stop positions in the top-down image (Fig. 4).

Fig. 5.

Relationship between distance groups and validation errors by three axes. (top) Open-sky eye (450 trajectories). (bottom) Intact eye (525 trajectories).

TABLE I.

Experimental results.

| evaluation items (units) | # of tested goal positions | mean X error (mm) | mean Y error (mm) | mean time duration (s) | # of trajectories that hit the retina |
|---|---|---|---|---|---|
| open-sky-eye1-without-chance-constraint | 20 | 0.0513 | 0.0463 | N/A | 10 |
| open-sky-eye1-chance-constraint (Fig. 4) | 20 | 0.0525 | 0.0463 | N/A | 7 |
| open-sky-eye2-without-chance-constraint | 20 | 0.0413 | 0.0475 | N/A | 11 |
| open-sky-eye2-chance-constraint | 20 | 0.0600 | 0.0400 | N/A | 5 |
| intact-eye3-without-chance-constraint | 20 | 0.0286 | 0.0429 | 36.194 | 10 |
| intact-eye3-MPC-100μm-stop-condition (Fig. 4) | 20 | 0.0253 | 0.0374 | 10.569 | 0 |
| intact-eye3-MPC-300μm-stop-condition | 20 | 0.0220 | 0.0220 | 7.208 | 0 |
| intact-eye3-head-drift-100μm-stop-condition | 20 | 0.0352 | 0.0385 | 15.021 | 0 |
| intact-eye3-head-drift-300μm-stop-condition | 20 | 0.0451 | 0.0440 | 10.809 | 0 |

A. Open-sky Porcine Eye Experiments

The last column of Table I shows that using the chance constraint reduced the number of trajectories that hit the retina, from 10 to 7 for the first eye and from 11 to 5 for the second. Although tool-to-retina contact occurred during these experiments, it was not sufficient to cause retinal tissue deformation or damage. The chance constraint method rendered the autonomous navigation process safer, but in some cases this came at the cost of a small reduction in accuracy along the XY axes.

B. Intact Porcine Eye Experiments

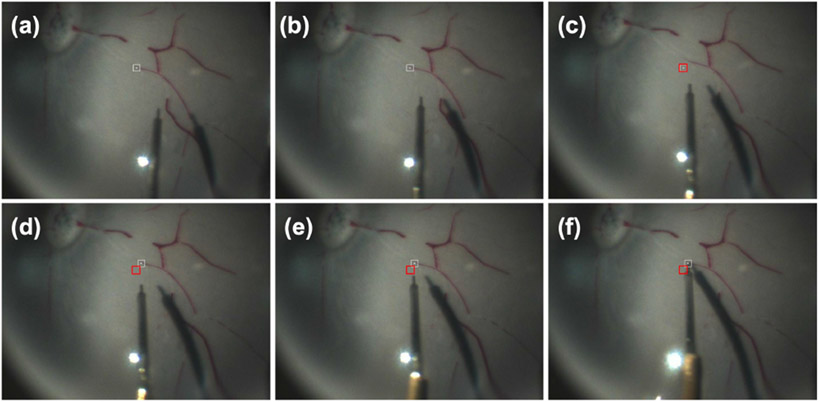

The results of the intact eye experiments showed that using MPC can almost eliminate the collision risk between the tool-tip and the retinal surface. This was because the MPC kept predicting the goal position until the tool-tip was very close to the goal. This differed from the open-sky eye experiments, where the last prediction occurred at 2 mm from the goal position. This also contributed to smaller mean errors along the XY axes. In addition, the mean time duration was reduced significantly, from 36.194 s to 7.208 s, by using MPC. Fig. 6 shows one trial of the head drift simulation. The last two rows in Table I show that the surgical tool was able to track the updated goal positions following head drift in all 40 trials. The mean navigation time with head drift was longer than that of MPC without head drift. This may result from the time spent relocating the updated goal, and from changes in the camera view and the relative shadow position. Experiments with the 0.1 mm stop condition had a longer mean time duration than those with the 0.3 mm stop condition because, in some trials, the tool paused for the last few seconds waiting for the network prediction to meet the stop condition.

Fig. 6.

(a)-(f) Head drift simulation results. The head drift happens between (b) and (c). The white square in (a)-(c) is the original user-defined goal, and the white square in (d)-(f) is the updated goal. The red square represents the original goal after the head drift. In (c) the overlap occurs when the head drift happens, but the goal has not been updated.

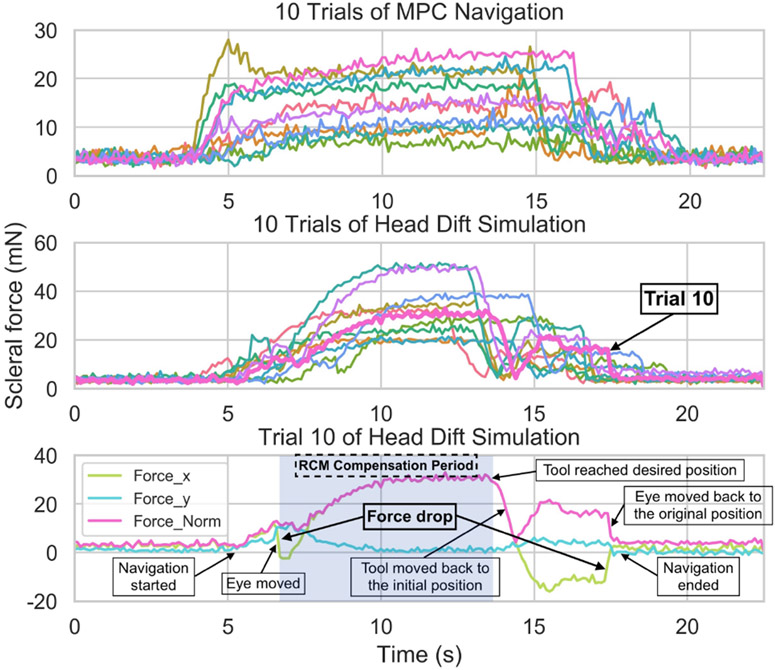

C. Scleral Force Measurement

Fig. 7 shows the experimental results from the scleral force measurements. As mentioned above, we set the safe limit of the scleral force to 115 mN based on the work of Urias et al. [30]. The results showed that no measured forces exceeded 30 mN for MPC navigation or 60 mN during head drift motion. The mean scleral forces were 11.97 mN and 21.75 mN respectively. Taking trial 10 of the head drift simulation as an example, the scleral force changed drastically along one direction when the eye moved. Since we updated the RCM point based on the movement of the eye, the scleral force dropped slightly afterwards. This RCM compensation lasted until we reached the goal (the light blue area in Fig. 7). As the tool moved towards the goal, it also rotated to reach the desired orientation. This further increased the scleral force until the goal was reached. Subsequently, we retracted the tool to the initial position using the original RCM point. Finally, we moved the eye back to the original position, which explains the large change of scleral force at the end of the trial. The mean scleral force was calculated from the start of navigation to the moment the tool reached the goal.

Fig. 7.

Results of scleral force measurement. (Top) Scleral force of 10 trials of MPC navigation. (Middle) Scleral force of 10 trials of head drift simulation. (Bottom) Trial 10 of head drift simulation.

VII. CONCLUSIONS

In this work, we test our improved robotic system using both open-sky and intact ex vivo porcine eyes. The results demonstrate mean errors along the XY axes smaller than 0.06 mm during experiments involving biological tissues. Moreover, by using chance constraint incorporated MPC, a safer, faster, and more accurate navigation process is obtained. We also demonstrate that our system is sufficiently robust to track the updated goal accurately during simulated head drift experiments. However, we only simulate head drift within a limited range. More work is required to refine performance, especially during head movement. Future work will focus on experiments that use intact porcine eyes for the surgically relevant task of subretinal injection.

ACKNOWLEDGMENT

This work was supported by the U.S. National Institutes of Health under grant numbers 2R01EB023943-04A1 and 1R01EB025883-01A1, and partially by JHU internal funds.

References

- [1] Riviere CN and Jensen PS, “A study of instrument motion in retinal microsurgery,” in Proceedings of the 22nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Cat. No. 00CH37143), vol. 1. IEEE, 2000, pp. 59–60.

- [2] Zhou M, Huang K, Eslami A, Roodaki H, Zapp D, Maier M, Lohmann CP, Knoll A, and Nasseri MA, “Precision needle tip localization using optical coherence tomography images for subretinal injection,” in 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018, pp. 4033–4040.

- [3] Loriga B, Di Filippo A, Tofani L, Signorini P, Caporossi T, Barca F, De Gaudio AR, Rizzo S, and Adembri C, “Postoperative pain after vitreo-retinal surgery is influenced by surgery duration and anesthesia conduction.” Minerva anestesiologica, vol. 85, no. 7, pp. 731–737, 2018.

- [4] Williamson TH, Introduction to Vitreoretinal Surgery. Springer, 2008.

- [5] Slack P, Coulson C, Ma X, Webster K, and Proops D, “The effect of operating time on surgeons’ muscular fatigue,” The Annals of The Royal College of Surgeons of England, vol. 90, no. 8, pp. 651–657, 2008.

- [6] Kim JW, He C, Urias M, Gehlbach P, Hager GD, Iordachita I, and Kobilarov M, “Autonomously navigating a surgical tool inside the eye by learning from demonstration,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 7351–7357.

- [7] Kim JW, Zhang P, Gehlbach P, Iordachita I, and Kobilarov M, “Towards autonomous eye surgery by combining deep imitation learning with optimal control,” Proceedings of machine learning research, vol. 155, p. 2347, 2021.

- [8] Zhang P, Kim JW, and Kobilarov M, “Towards safer retinal surgery through chance constraint optimization and real-time geometry estimation,” in 2021 60th IEEE Conference on Decision and Control (CDC). IEEE, 2021, pp. 5175–5180.

- [9] Mayne D, “A second-order gradient method for determining optimal trajectories of non-linear discrete-time systems,” International Journal of Control, vol. 3, no. 1, pp. 85–95, 1966.

- [10] He X, Balicki M, Gehlbach P, Handa J, Taylor R, and Iordachita I, “A multi-function force sensing instrument for variable admittance robot control in retinal microsurgery,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 1411–1418.

- [11] He X, Roppenecker D, Gierlach D, Balicki M, Olds K, Gehlbach P, Handa J, Taylor R, and Iordachita I, “Toward clinically applicable steady-hand eye robot for vitreoretinal surgery,” in ASME International Mechanical Engineering Congress and Exposition, vol. 45189. American Society of Mechanical Engineers, 2012, pp. 145–153.

- [12] Üneri A, Balicki MA, Handa J, Gehlbach P, Taylor RH, and Iordachita I, “New steady-hand eye robot with micro-force sensing for vitreoretinal surgery,” in 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics. IEEE, 2010, pp. 814–819.

- [13] Gonenc B, Gehlbach P, Handa J, Taylor RH, and Iordachita I, “Motorized force-sensing micro-forceps with tremor cancelling and controlled micro-vibrations for easier membrane peeling,” in 5th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics. IEEE, 2014, pp. 244–251.

- [14] Ida Y, Sugita N, Ueta T, Tamaki Y, Tanimoto K, and Mitsuishi M, “Microsurgical robotic system for vitreoretinal surgery,” International journal of computer assisted radiology and surgery, vol. 7, no. 1, pp. 27–34, 2012.

- [15] He C, Patel N, Iordachita I, and Kobilarov M, “Enabling technology for safe robot-assisted retinal surgery: Early warning for unsafe scleral force,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 3889–3894.

- [16] Zhou M, Wu J, Ebrahimi A, Patel N, He C, Gehlbach P, Taylor RH, Knoll A, Nasseri MA, and Iordachita I, “Spotlight-based 3d instrument guidance for retinal surgery,” in 2020 International Symposium on Medical Robotics (ISMR). IEEE, 2020, pp. 69–75.

- [17] Koyama Y, Marinho MM, Mitsuishi M, and Harada K, “Autonomous coordinated control of the light guide for positioning in vitreoretinal surgery,” IEEE Transactions on Medical Robotics and Bionics, vol. 4, no. 1, pp. 156–171, 2022.

- [18] Ourak M, Smits J, Esteveny L, Borghesan G, Gijbels A, Schoevaerdts L, Douven Y, Scholtes J, Lankenau E, Eixmann T, et al., “Combined oct distance and fbg force sensing cannulation needle for retinal vein cannulation: in vivo animal validation,” International journal of computer assisted radiology and surgery, vol. 14, no. 2, pp. 301–309, 2019.

- [19] Weiss J, Rieke N, Nasseri MA, Maier M, Eslami A, and Navab N, “Fast 5dof needle tracking in ioct,” International journal of computer assisted radiology and surgery, vol. 13, no. 6, pp. 787–796, 2018.

- [20] Kang JU and Cheon GW, “Demonstration of subretinal injection using common-path swept source oct guided microinjector,” Applied Sciences, vol. 8, no. 8, p. 1287, 2018.

- [21] Cheon GW, Huang Y, Cha J, Gehlbach PL, and Kang JU, “Accurate real-time depth control for cp-ssoct distal sensor based handheld microsurgery tools,” Biomedical optics express, vol. 6, no. 5, pp. 1942–1953, 2015.

- [22] Brogan K, Dawar B, Lockington D, and Ramaesh K, “Intraoperative head drift and eye movement: two under addressed challenges during cataract surgery,” Eye, vol. 32, no. 6, pp. 1111–1116, 2018.

- [23] McCannel CA, Olson EJ, Donaldson MJ, Bakri SJ, Pulido JS, and Donna M, “Snoring is associated with unexpected patient head movement during monitored anesthesia care vitreoretinal surgery,” Retina, vol. 32, no. 7, pp. 1324–1327, 2012.

- [24] He K, Zhang X, Ren S, and Sun J, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [25] Li Q and Griffiths JG, “Least squares ellipsoid specific fitting,” in Geometric modeling and processing, 2004. proceedings. IEEE, 2004, pp. 335–340.

- [26] Reza A and Sengupta AS, “Least square ellipsoid fitting using iterative orthogonal transformations,” Applied Mathematics and Computation, vol. 314, pp. 349–359, 2017.

- [27] Blackmore L, Ono M, and Williams BC, “Chance-constrained optimal path planning with obstacles,” IEEE Transactions on Robotics, vol. 27, no. 6, pp. 1080–1094, 2011.

- [28] Shi J et al., “Good features to track,” in 1994 Proceedings of IEEE conference on computer vision and pattern recognition. IEEE, 1994, pp. 593–600.

- [29] Lucas BD, Kanade T, et al., An iterative image registration technique with an application to stereo vision. Vancouver, 1981, vol. 81.

- [30] Urias MG, Patel N, Ebrahimi A, Iordachita I, and Gehlbach PL, “Robotic retinal surgery impacts on scleral forces: in vivo study,” Translational Vision Science & Technology, vol. 9, no. 10, pp. 2–2, 2020.