Abstract

Subset selection is a valuable tool for interpretable learning, scientific discovery, and data compression. However, classical subset selection is often avoided due to selection instability, lack of regularization, and difficulties with post-selection inference. We address these challenges from a Bayesian perspective. Given any Bayesian predictive model , we extract a family of near-optimal subsets of variables for linear prediction or classification. This strategy deemphasizes the role of a single “best” subset and instead advances the broader perspective that often many subsets are highly competitive. The acceptable family of subsets offers a new pathway for model interpretation and is neatly summarized by key members such as the smallest acceptable subset, along with new (co-) variable importance metrics based on whether variables (co-) appear in all, some, or no acceptable subsets. More broadly, we apply Bayesian decision analysis to derive the optimal linear coefficients for any subset of variables. These coefficients inherit both regularization and predictive uncertainty quantification via . For both simulated and real data, the proposed approach exhibits better prediction, interval estimation, and variable selection than competing Bayesian and frequentist selection methods. These tools are applied to a large education dataset with highly correlated covariates. Our analysis provides unique insights into the combination of environmental, socioeconomic, and demographic factors that predict educational outcomes, and identifies over 200 distinct subsets of variables that offer near-optimal out-of-sample predictive accuracy.

Keywords: education, linear regression, logistic regression, model selection, penalized regression

1. Introduction

Subset and variable selection are essential components of regression analysis, prediction, and classification. By identifying subsets of important covariates, the analyst can acquire simpler and more interpretable summaries of the data, improved prediction or classification, reduced estimation variability among the selected covariates, lower storage requirements, and insights into the factors that determine predictive accuracy (Miller, 1984). Classical subset selection is expressed as the solution to the constrained least squares problem

| (1) |

where is an -dimensional response, is an matrix of covariates, and is the -dimensional vector of unknown coefficients. Traditionally, the goal is to determine (i) the best subset of each size , (ii) an estimate of the accompanying nonzero linear coefficients, and (iii) the best subset of any size. Subset selection has garnered additional attention recently, in part due to the algorithmic advancements from Bertsimas et al. (2016) and the detailed comparisons of Hastie et al. (2020). More broadly, variable selection has been deployed as a tool for interpretable machine learning, including for highly complex and nonlinear models (e.g., Ribeiro et al., 2016; Afrabandpey et al., 2020).

Although often considered the “gold standard” of variable selection, subset selection remains underutilized due to several critical limitations. First, subset selection is inherently unstable: it is common to obtain entirely distinct subsets under perturbations or resampling of the data. This instability undermines the interpretability of a single “best” subset, and is a significant motivating factor for the proposed methods. Second, the solutions to (1) are unregularized. While it is advantageous to avoid overshrinkage, Hastie et al. (2020) showed that the lack of any regularization in (1) leads to deteriorating performance relative to penalized regression in low-signal settings. Third, inference about requires careful adjustment for selection bias, which limits the available options for uncertainty quantification. Finally, solving (1) is computationally demanding even for moderate , which has spawned many algorithmic advancements spanning multiple decades (e.g., Furnival and Wilson, 2000; Gatu and Kontoghiorghes, 2006; Bertsimas et al., 2016). For these reasons, penalized regression techniques that replace the -penalty with convex or nonconvex yet computationally feasible alternatives (Fan and Lv, 2010) are often preferred.

From a Bayesian perspective, (1) is usually translated into a predictive model via a Gaussian (log-) likelihood and a sparsity (log-) prior. Indeed, substantial research efforts have been devoted to both sparsity (e.g., Ishwaran and Rao, 2005) and shrinkage (e.g., Polson and Scott, 2010) priors for . Yet the prior alone cannot select subsets: the prior is the component of the data-generating process that incorporates prior beliefs, information, or regularization, while selection is ultimately a decision problem (Lindley, 1968). For instance, Barbieri and Berger (2004) and Liang et al. (2013) applied sparsity priors to obtain posterior inclusion probabilities, which were then used for marginal selection and screening, respectively. Jin and Goh (2020) selected subsets using marginal likelihoods, but required conjugate priors for Gaussian linear models. Hahn and Carvalho (2015) more fully embraced the decision analysis approach for variable selection, and augmented a squared error loss with an -penalty for linear variable selection. Alternative loss functions have been proposed for seemingly unrelated regressions (Puelz et al., 2017), graphical models (Bashir et al., 2019), nonlinear regressions (Woody et al., 2020), functional regression (Kowal and Bourgeois, 2020), time-varying parameter models (Huber et al., 2020), and a variety of Kullback-Leibler approximations to the likelihood (Goutis and Robert, 1998; Nott and Leng, 2010; Tran et al., 2012; Piironen et al., 2020).

Despite the appropriate deployment of decision analysis for selection, each of these Bayesian methods relies on -penalization or forward search. As such, they are restricted to limited search paths that cannot fully solve the (exhaustive) subset selection problem. More critically, these Bayesian approaches—as well as most classical ones—are unified in their emphasis on selecting a single “best” subset. However, in practice it is common for many subsets (or models) to achieve near-optimal predictive performance, known as the Rashomon effect (Breiman, 2001). This effect is particularly pronounced for correlated covariates, weak signals, or small sample sizes. Under these conditions, it is empirically and theoretically possible for the “true” covariates to be predictively inferior to a proper subset (Wu et al., 2007). As a result, the “best” subset is not only less valuable but also less interpretable. Reporting a single subset—or a small number of subsets along a highly restricted search path—obscures the likely presence of many distinct yet equally-predictive subsets of variables.

We advance an agenda that instead curates and summarizes a family of optimal or near-optimal subsets. This broader analysis alleviates the instability issues of a single “best” subset and provides a more complete predictive picture. The proposed approach operates within a decision analysis framework and is compatible with any Bayesian model for prediction or classification. Naturally, should represent the modeler’s beliefs about the data-generating process and describe the salient features in the data. Several key developments are required:

-

We derive optimal (in a decision analysis sense) linear coefficients for any subset of variables.

Crucially, these coefficients inherit regularization and uncertainty quantification via , but avoid the overshrinkage induced by -penalization. As such, these point estimators resolve multiple limitations of classical subset selection and (-penalized) Bayesian decision-analytic variable selection, and further are adapted to both regression and classification problems. Next,

-

We design a modified branch-and-bound algorithm for efficient exploration over the space of candidate subsets.

The search process is a vital component of subset selection, and our modular framework is broadly compatible with other state-of-the-art search algorithms (e.g., Bertsimas et al., 2016). Until now, these algorithms have not been fully deployed or adapted for Bayesian subset selection. Additionally,

-

We leverage the predictive distribution under to collect the acceptable family of near-optimal subsets.

A core feature of the acceptable family is that it is defined using out-of-sample metrics and predictive uncertainty quantification, yet is computed using in-sample posterior functionals from a single model fit of . Hence, we maintain computational scalability and coherent uncertainty quantification that avoids data reuse. Lastly,

-

We summarize the acceptable family with key member subsets, such as the “best” (in terms of cross-validation error) predictor and the smallest acceptable subset, along with new (co-) variable importance metrics that measure the frequency with which variables (co-) appear in all, some, or no acceptable subsets.

Unlike variable importances based on effect size, this inclusion-based metric effectively measures how many “predictively plausible” explanations (i.e., near-optimal subsets) contain each (pair of) covariate(s) as a member. Notably, each of these developments is presented for both prediction and classification.

The importance of curating and exploring a collection of subsets has been acknowledged previously. Existing approaches are predominantly frequentist, including fence methods (Jiang et al., 2008), Rashomon sets (Semenova and Rudin, 2019), bootstrapped confidence sets (Lei, 2019), and subsampling-based forward selection (Kissel and Mentch, 2021). Although the acceptable family has appeared previously for Bayesian decision analysis (Kowal, 2021; Kowal et al., 2021), it was applied only along the -path which does not enumerate a sufficiently rich collection of competitive subsets. Further, previous applications of the acceptable family did not address points 1., 2., and 4. above.

The paper is outlined as follows. Section 2 contains the Bayesian subset search procedures, the construction of acceptable families, the (co-) variable importance metrics, and the predictive uncertainty quantification. Section 3 details the simulation study. The methodology is applied to a large education dataset in Section 4. We conclude in Section 5. The Appendix provides additional algorithmic details, simulation studies, and results from the application. An R package is available online at https://github.com/drkowal/ BayesSubsets. Although the education dataset (Children’s Environmental Health Initiative, 2020) cannot be released due to privacy protections, access to the dataset can occur through establishing affiliation with the Children’s Environmental Health Initiative (contact cehi@nd.edu).

2. Methods

2.1. Predictive decision analysis

Decision analysis establishes the framework for extracting actions, estimators, and predictions from a Bayesian model (e.g., Bernardo and Smith, 2009). These tools translate probabilistic models into practical decision-making and can be deployed to summarize or interpret complex models. However, additional methodology is needed to convert subset selection into a decision problem, and further to evaluate and collect many near-optimal subsets.

Let denote any Bayesian model with a proper posterior distribution and posterior predictive distribution

where denotes the parameters of the model and is the observed data. Informally, defines the distribution of future or unobserved data conditional on the observed data and according to the model . Decision analysis evaluates each action based on a loss function that enumerates the cost of each action when is realized. Examples include point prediction (e.g., squared error loss) or classification (e.g., cross-entropy loss), interval estimation (e.g., minimum length subject to coverage), and selection among a set of hypotheses (e.g., 0–1 loss). Since is unknown yet modeled probabilistically under , an optimal action minimizes the posterior predictive expected loss

| (2) |

with the expectation taken under the Bayesian model . The operation in (2) averages the predictive loss over the possible realizations of according to the posterior probability under and then minimizes the resulting quantity over the action space.

Yet without careful specification of the loss function , (2) does not provide a clear pathway for subset selection. To see this, let denote the predictive variable at covariate . For point prediction of under squared error loss, the optimal action is

i.e., the posterior predictive expectation at . Similarly, for classification of under cross-entropy loss (see (10)), the optimal action is the posterior predictive probability . For a generic model , there is not necessarily a closed form for : these actions are computed separately for each with no clear mechanism for inducing sparsity or specifying distinct subsets. Hence, additional techniques are needed to supply actions that are not only optimal but also selective and interpretable.

Note that we use observation-driven rather than parameter-driven loss functions. Unlike the parameters , which are unobservable and model-specific, the predictive variables are observable and directly comparable across distinct Bayesian models. A decision analysis based on operates on the same scale and in the same units as the data that have been—and will be—observed, which improves interpretability. Perhaps most important, an observation-driven decision analysis enables empirical evaluation of the selected actions.

2.2. Subset search for linear prediction

The subset search procedure is built within the decision framework of (2). For any Bayesian model , we consider linear actions with , which offer both interpretability and the capacity for selection. Let denote the linear action with zero coefficients for all , where is a subset of active variables. For prediction, we assemble the aggregate and weighted squared error loss

| (3) |

where is the predictive variable at for each and is a weighting function. The covariate values can be distinct from the observed covariate values , for example to evaluate the action for a different population.

The loss in (3) evaluates linear coefficients for any given subset by accumulating the squared error loss over the covariate values . Since (3) depends on , the loss inherits a joint predictive distribution from . The loss is decoupled from the Bayesian model : the linear action does not require a linearity assumption for . The weights can be used to target actions locally, which provides a sparse and local linear approximation to . For example, we might parametrize the weighting function as with range parameter in order to weight based on proximity to some particular of interest (Ribeiro et al., 2016). Alternatively, can be specified via a probability model for the likelihood of observing each covariate value, including as a simple yet useful example, especially when using the observed covariate values ; this is our default choice.

Crucially, the optimal action can be solved directly for any subset :

Lemma 1 Suppose for . The optimal action (2) for the loss (3) is given by the nonzero entries

| (4) |

| (5) |

with zeros for indices , where , and is the matrix of the active covariates in for subset .

Proof It is sufficient to observe that , where the first term is a (finite) constant that does not depend on . The remaining steps constitute a weighted least squares solution. ■

The consequence of Lemma 1 is that the optimal action for each subset is simply the (weighted) least squares solution based on pseudo-data —i.e., a “fit to the fit” from . The advantages of can be substantial: the Bayesian model propagates regularization (e.g., shrinkage, sparsity, or smoothness) to the point predictions , which typically offers sizable improvements in estimation and prediction relative ordinary (weighted) least squares. This effect is especially pronounced in the presence of high-dimensional (large ) or correlated covariates. The optimal action may be non-unique if is noninvertible, in which case the inverse in (5) can be replaced by a generalized inverse.

At this stage, the Bayesian model is only needed to supply the pseudo-response variable ; different choices of will result in distinct values of and therefore distinct actions . An illuminating special case occurs for linear regression:

Corollary 2 For the linear regression model with and any set of covariate values and weights , the optimal action (2) under (3) for the full set of covariates is , where .

Depending on the choice of and may be non-unique. Corollary 2 links the optimal action to the model parameters: the posterior expectation is also the optimal action under the parameter-driven squared error loss . Similarly, a linear model for implies that , so the optimal action (5) for any subset is intrinsically connected to the (regression) model parameters. This persists for other regression models as well. By contrast, these restrictions also illustrate the generality of (3)-(5): the optimal linear actions are derived explicitly under any model (with ) and using any set of covariate values , active covariates , and weighting functions .

The critical remaining challenge is optimization—or at least evaluation and comparison—among the possible subsets . Our strategy emerges from the observation that there may be many subsets that achieve near-optimal predictive performance, often referred to as the Rashomon effect (Breiman, 2001). The goal is to collect, characterize, and compare these near-optimal subsets of linear predictors. Hence, there are two core tasks: (i) identify candidate subsets and (ii) filter to include only those subsets that achieve near-optimal predictive performance. These tasks must overcome both computational and methodological challenges—similar to classical (non-Bayesian) subset selection—which we resolve in the subsequent sections.

An exhaustive enumeration of all possible subsets presents an enormous computational burden, even for moderate . Although tempting, it is misguided to consider direct optimization over all possible subsets of ,

| (6) |

for the aggregate squared error loss (3). To see this—and find suitable alternatives—consider the following result:

Lemma 3 Let and , and suppose . For any point predictors and , we have

| (7) |

Proof Since is finite and does not depend on , the ordering of and will be identical whether using or . ■

Notably, is the key constituent in optimizing the predictive squared error loss (3), while is simply the usual residual sum-of-squares (RSS) with replacing .

Now recall the optimization of (6). For any subset , the optimal action is the least squares solution (5) with pseudo-data . However, RSS in linear regression is ordered by nested subsets: whenever . By Lemma 3, it follows that the solution of (6) is simply

for . As with (5), a generalized inverse can be substituted if necessary. The main consequence is that the optimal actions in (4) and (6) alone cannot select variables or subsets: (4) provides the optimal action for a given subset , while (6) trivially returns the full set of covariates. Despite the posterior predictive expectation in (6), this optimality is only valid in-sample and is unlikely to persist for out-of-sample prediction. Hence, this optimality is unsatisfying.

Yet Lemma 3 provides a path forward. Rather than fixing a subset in (4) or optimizing over all subsets in (6), suppose we compare among all subsets up to size . Equivalently, this constraint can be representation as an -penalty augmentation to the loss function (3), i.e.,

| (8) |

In direct contrast with previous approaches for Bayesian variable selection via decision analysis (e.g., Hahn and Carvalho, 2015; Woody et al., 2020; Kowal et al., 2021) we do not use convex relaxations to -penalties, which create unnecessarily restrictive search paths and introduce additional bias in the coefficient estimates.

Under the loss (3), the solution to (8) reduces to

| (9) |

using the “fit-to-the-fit” arguments from Lemma 1 and the RSS ordering results from Lemma 3. In particular, the solution resembles classical subset selection (1), but uses the fitted values from instead of the data and further generalizes to include possibly distinct covariates and weights .

Because of the representation in (9), we can effectively solve (8) by leveraging existing algorithms for subset selection. However, our broader interest in the collection of near-optimal subsets places greater emphasis on the search and filtering process. For any two subsets and of equal size , Lemma 3 implies that if and only if . Therefore, we can order the linear actions from (4) among all equally-sized subsets simply by ordering the values of . This result resembles the analogous scenario in classical linear regression on : subsets of fixed size maintain the same ordering whether using RSS or information criteria such as AIC, BIC, or Mallow’s . Here, the criterion of interest is the posterior predictive expected RSS, , and the RSS reduction occurs with the model fitted values serving as pseudo-data.

Because of this RSS-based ordering among equally-sized linear actions, we can leverage the computational advantages of the branch-and-bound algorithm (BBA) for efficient subset exploration (Furnival and Wilson, 2000). Using a tree-based enumeration of all possible subsets, BBA avoids an exhaustive subset search by carefully eliminating non-competitive subsets (or branches) according to RSS. BBA is particularly advantageous for (i) selecting covariates, (ii) filtering to subsets for each size , and (iii) exploiting the presence of covariates that are almost always present for low-RSS models (Miller, 1984). In the present setting, the key inputs to the algorithm are the covariates , the pseudo-data , and the weights . In addition, we specify the maximum number of subsets to return for each size . As increases, BBA returns more subsets—with higher RSS—yet computational efficiency deteriorates. We consider default values of and and compare the results in Section 4. An efficient implementation of BBA is available in the leaps package in R. Note that our framework is also compatible with many other subset search algorithms (e.g., Bertsimas et al., 2016).

In the case of moderate to large , we screen to covariates using the original model . Specifically, we select the covariates with the largest absolute (standardized) linear regression coefficients. When is a nonlinear model, we use the optimal linear coefficients on the full subset of (standardized) covariates. The use of marginal screening is common in both frequentist (Fan and Lv, 2008) and Bayesian (Bondell and Reich, 2012) high-dimensional linear regression models, with accompanying consistency results in each case. Here, sceening is motivated by computational scalability and interpretability: BBA is quite efficient for and , while the interpretation of a subset of covariates—acting jointly to predict accurately—is muddled as the subset size increases. By default, we fix . We emphasize that although this screening procedure relies on marginal criteria, it is based on a joint model that incorporates all covariates. In that sense, our screening procedure resembles the most popular Bayesian variable selection strategies based on posterior inclusion probabilities (Barbieri and Berger, 2004) or hard-thresholding (Datta and Ghosh, 2013).

2.3. Subset search for logistic classifiers

Classification and binary regression operate on {0, 1}, rendering the squared error loss (3) unsuitable. Consider a binary predictive functional where . In this framework, binarization can come from one of two sources. Most common, the data are binary , paired with the identity functional , and is a Bayesian classification (e.g., probit or logistic regression) model. Less common, non-binary data can be modeled via and paired with a functional that maps to {0, 1}.For example, we may be interested in selecting variables to predict exceedance of a threshold, , for some based on real-valued data . The latter case is an example of targeted prediction (Kowal, 2021), which customizes posterior summaries or decisions for any functional . This approach is distinct from fitting separate models to each empirical functional —which is still compatible with the first setting above—and instead requires only a single Bayesian reference model for all target functionals .

For classification or binary prediction of , we replace the squared error loss (3) with the aggregate and weighted cross-entropy loss,

| (10) |

where is the predictive variable at under and . The cross-entropy is also the deviance or negative log-likelihood of a series of independent Bernoulli random variables each with success probability for . However, (10) does not imply a distributional assumption for the decision analysis; all distributional assumptions are encapsulated within , including the posterior predictive distribution of . As before, is the linear action with zero coefficients for all , where is a subset of active variables.

The optimal action (2) is obtained for each subset by computing expectations with respect to the posterior predictive distribution under and minimizing the ensuing quantity. As in the case of squared error loss, key simplifications are available:

| (11) |

where is the posterior predictive expectation of under . The representation in (11) is quite useful: it is the negative log-likelihood for a logistic regression model with pseudo-data . Standard algorithms and software, such as iteratively reweighted least squares (IRLS) in the glm package in R, can be applied to solve (11) for any subset .

Instead of fitting a logistic regression to the observed binary variables , the optimal action under cross-entropy (10) fits to the posterior predictive probabilities under . For a well-specified model , these posterior probabilities can be more informative than the binary empirical functionals : the former lie on a continuum between the endpoints zero and one. Furthermore, for non-degenerate models with , the optimal action (11) resolves the issue of separability, which is a persistent challenge in classical logistic regression.

Despite the efficiency of IRLS for a fixed subset , the computational savings of BBA for subset search rely on the RSS from a linear model. As such, solving (11) for all or many subsets incurs a much greater computational cost. Yet IRLS is intrinsically linked with RSS. At convergence, IRLS obtains a weighted least squares solution

| (12) |

where for weights , fitted probabilities , and pseudo-data

| (13) |

with . By design, the weighted least squares objective associated with (12) is a second-order Taylor approximation to the predictive expected cross-entropy loss:

| (14) |

The weighted least squares approximation in (14) summons BBA for subset search. Hosmer et al. (1989) adopted this strategy for subset selection in classical logistic regression on . Here, both the goals and the optimization criterion are distinct: we are interested in curating a collection of near-optimal subsets—rather than selecting a single “best” subset—and the weighted least squares objective (14) inherits the fitted probabilities from the Bayesian model along with the weights .

Ideally, we might apply BBA directly based on the covariates , the pseudo-data , and the weights . However, both and depend on and therefore are subset-specific. As a result, BBA cannot be applied without significant modifications. A suitable alternative is to construct subset-invariant psuedo-data and weights by replacing with the corresponding estimate from the full model, . Specifically, let

| (15) |

both of which depend on rather than the individual subsets . The pseudo-data is defined similarly to in (13), where the second term now cancels. Finally, BBA subset search can be applied using the covariates , the pseudo-data , and the weights . As for squared error, we restrict each subset size to or for all subsets of size . Despite the critical role of the weighted least squares approximation in (14) for subset search, all further evaluations and comparisons rely on the exact cross-entropy loss (10).

2.4. Predictive evaluations for identifying near-optimal subsets

The BBA subset search filters the possible subsets to the best subsets for each size according to posterior predictive expected loss using the weighted squared error loss for prediction (Section 2.2) or the cross-entropy loss for classification (Section 2.3). However, as noted below Lemma 3, further comparisons based on these expected losses are trivial: the full set always obtains the minimum, which precludes variable selection. Selection of a single “best” subset of each size invites additional difficulties: if multiple subsets perform similarly—which is common for correlated covariates—then selecting subset will not be robust or stable against perturbations of the data. Equally important, restricting to subset is blind to competing subsets that offer similar predictive performance yet may differ substantially in the composition of covariates; see Sections 3–4. These challenges persist for both classical and Bayesian approaches.

We instead curate and explore a collection of near-optimal subsets. The notion of “near-optimal” derives from the acceptable family of Kowal (2021). Informally, the acceptable family uses out-of-sample predictive metrics to gather those predictors that match or nearly match the performance of the best out-of-sample predictor with nonnegligible posterior predictive probability under . The out-of-sample evaluation uses a modified -fold cross-validation procedure. Let denote the th validation set, where each data point appears in (at least) one validation set, . By default, we use validation sets that are equally-sized, mutually exclusive, and selected randomly from . Define an evaluative loss function for the optimal linear coefficients of subset , and let denote the collection of subsets obtained from the BBA filtering process. Typically, the evaluative loss is identical to the loss used for optimization, but this restriction is not required. For each data split and each subset , the out-of-sample empirical and predictive losses are

| (16) |

respectively, where is estimated using only the training data and is the posterior predictive distribution at conditional only on the training data. Averaging across all data splits, we obtain and .

The distinction between the empirical loss and the predictive loss is important. The empirical loss is a point estimate of the risk under predictions from based on the data . By comparison, the predictive loss provides a distribution of the out-of-sample loss based on the model . Both are valuable: is entirely empirical and captures the classical notion of -fold cross-validation, while leverages the Bayesian model to propagate the uncertainty from the model-based data-generating process.

For any two subsets and , consider the percent increase in out-of-sample predictive loss from to :

| (17) |

Since inherits a predictive distribution under , we can leverage the accompanying uncertainty quantification to determine whether the predictive performances of and are sufficiently distinguishable. In particular, we are interested in comparisons to the best subset for out-of-sample prediction,

| (18) |

so that is the optimal linear action associated with the subset that minimizes the empirical -fold cross-validated loss. Unlike the RSS-based in-sample evaluations from Section 2.2, the subset can—and usually will—differ from the full set , which enables variable selection driven by out-of-sample predictive performance.

Using as an anchor, the acceptable family broadens to include near-optimal subsets:

| (19) |

With (19), we collect all subsets that perform within a margin of the best subset, , with probability at least . Equivalently, a subset is acceptable if and only if there exists a lower posterior prediction interval for that includes . Hence, unacceptable subsets are those for which there is insufficient predictive probability (under ) that the out-of-sample accuracy of is within a predetermined margin of the best subset. The acceptable family is nonempty, since , and is expanded by increasing or decreasing . By default, we select and and assess robustness in the simulation study (see also Kowal, 2021; Kowal et al., 2021).

Within the acceptable family, we isolate two subsets of particular interest: the best subset from (18) and the smallest acceptable subset,

| (20) |

When is nonunique, so that multiple subsets of the same minimal size are acceptable, we select from those subsets the one with the smallest empirical loss . By definition, is the smallest set of covariates that satisfies the near-optimality condition in (19). As noted by Hastie et al. (2009) and others, selection based on minimum cross-validated error, such as , often produces models or subsets that are more complex than needed for adequate predictive accuracy. The acceptable family , and in particular , exploits this observation to provide alternative—and often much smaller—subsets of variables.

To ease the computational burden, we adapt the importance sampling algorithm from Kowal (2021) to compute (19) and all constituent quantities (see Appendix A). Crucially, this algorithm is based entirely on the in-sample posterior distribution under model , which avoids both (i) the intensive process of re-fitting for each data split and (ii) data reuse that adversely affects downstream uncertainty quantification. Briefly, the algorithm uses the complete data posterior as a proposal for the training data posterior . The importance weights are then computed using the likelihood under model . Similar algorithms have been deployed for Bayesian model selection (Gelfand et al., 1992), evaluating prediction distributions (Vehtari and Ojanen, 2012), and -based Bayesian variable selection (Kowal et al., 2021).

In the case of new (out-of-sample) covariate values , the predictive loss may be defined without cross-validation: , where is the predictive variable at for each . The empirical loss is undefined without a corresponding observation of the response variable for each . Hence, we modify the acceptable family (19) to instead reference the full subset in place of , which is no longer defined. When is a linear model, Corollary 2 implies that the corresponding reference is simply the posterior predictive expectation under .

2.5. Co-variable importance

Although it is common to focus on a single subset for selection, the acceptable family provides a broad assortment of competing explanations: linear actions with distinct sets of covariates that all provide near-optimal predictive accuracy. Hence, we seek to further summarize beyond and to identify (i) which covariates appear in any acceptable subset, (ii) which covariates appear in all acceptable subsets, and (iii) which covariates appear together in the same acceptable subsets.

For covariates and , the sample proportion of joint inclusion in achieves each of these goals:

| (21) |

and measures (co-) variable importance. Naturally, (21) is generalizable to more than two covariates, but is particularly interesting for a single covariate: is the proportion of acceptable subsets to which covariate belongs. When , covariate belongs to at least one acceptable subset. Such a result does not imply that covariate is necessary for accurate prediction, but rather that covariate is a component of at least one near-optimal linear subset. When , we refer to covariate as a keystone covariate: it belongs to all acceptable subsets and therefore is deemed essential. For highlights not only the covariates that co-occur, but also the covariates that rarely appear together. It is particularly informative to identify covariates and such that and are both large yet is small. In that case, covariates and are both important yet also redundant, as might be expected for highly correlated variables.

Although is defined based on linear predictors for each subset , the variable importance metric applies broadly to (possibly nonlinear) Bayesian models and a variety of evaluative loss functions , and can be targeted locally via the weights . The inclusion-based metric can be extended to incorporate effect size, which is a more common strategy for variable importance. Related, Dong and Rudin (2019) aggregated model-specific variable importances across many “good models”. Alternative approaches use leave-one-covariate-out predictive metrics (e.g., Lei et al., 2018), but are less appealing in the presence of correlated covariates.

2.6. Posterior predictive uncertainty quantification

A persistent challenge in classical subset selection is the lack of accompanying uncertainty quantification. Given a subset selected using the data , familiar frequentist and Bayesian inferential procedures applied to are in general no longer valid. In particular, we cannot simply proceed as if only the selected covariates were supplied from the onset. Such analyses are subject to selection bias (Miller, 1984).

A crucial feature of our subset filtering (Section 2.2 and Section 2.3) and predictive evaluation (Section 2.4) techniques is that, despite the broad searching and the out-of-sample targets, these quantities all remain in-sample posterior functionals under . There is no data re-use or model re-fitting: every requisite term is a functional of the complete data posterior or from a single Bayesian model. Hence, the posterior distribution under remains a valid facilitator of uncertainty quantification.

We elicit a posterior predictive distribution for the action by removing the expectation in (2):

| (22) |

which no longer integrates over and hence propagates the posterior predictive uncertainty. For the squared error loss (3), the predictive action is

| (23) |

akin to (5), where . The linear coefficients inherit a posterior predictive distribution from and can be computed for any subset . Similar computations are available for the cross-entropy loss (10). In both cases, draws from the posterior predictive distribution are sufficient for uncertainty quantification of . Under the usual assumption that , these draws are easily obtained by repeatedly sampling from the posterior and from the likelihood.

The predictive actions are computable for any subset , include those selected based on the predictive evaluations in Section 2.4. Let denote a subset identified based on the posterior (predictive) distribution under , such as or . The predictive action using this subset in (23) is a posterior predictive functional. Unlike for a generic subset , the predictive action is a functional of both and , which factors into the interpretation.

A similar projection-based approach is developed by Woody et al. (2020) for Gaussian regression. In place of in (22), Woody et al. (2020) suggest , which is a middle ground between (2) and (22): it integrates over the uncertainty of given model parameters , but preserves uncertainty due to . For example, under the linear regression model , the analogous result in (23) is ; when , this simplifies to the regression coefficient . Both approaches have their merits, but we prefer (22) because of the connection to the observable random variables rather than the model-specific parameters .

3. Simulation study

We conduct an extensive simulation study to evaluate competing subset selection techniques for prediction accuracy, uncertainty quantification, and variable selection. Prediction for real-valued data is discussed here; classification for binary data is presented in Appendix B. Although we do evaluate individual subsets from the proposed framework—namely, and —we are more broadly interested in the performance of the acceptable family of subsets. In particular, the acceptable family is designed to collect many subsets that offer near-optimal prediction; both cardinality and aggregate predictive accuracy are critical.

The simulation designs feature varying signal-to-noise ratios (SNRs) and dimensions , including , with correlated covariates and sparse linear signals. Covariates are generated from marginal standard normal distributions with for and . The columns are randomly permuted and augmented with an intercept. The true linear coefficients are constructed by setting and fixing nonzero coefficients, with equal to 1 and equal to −1, and the rest at zero. The data are generated independently as with and . We consider and for low and high SNR. Evaluations are conducted over 100 simulated datasets.

First, we evaluate point prediction accuracy across competing collections of near-optimal subsets. Each collection is built from the same Gaussian linear regression model with horseshoe priors (Carvalho et al., 2010) and estimated using bayeslm (Hahn et al., 2019). The collections are generated from different candidate sets based on distinct search methods: the proposed BBA method (bbound(bayes)), the adaptive lasso search (lasso (bayes)) proposed by Hahn and Carvalho (2015) and Kowal et al. (2021), and both forward (forward(bayes)) and backward (backward(bayes)) search. For each collection of candidate subsets, we compute the acceptable families for , and the observed covariate values ; variations of these specifications are presented in Appendix C. These methods differ only in the search process: if a particular subset is identified by more than one of these competing methods, the corresponding point predictions—based on the optimal action in (5)—will be identical.

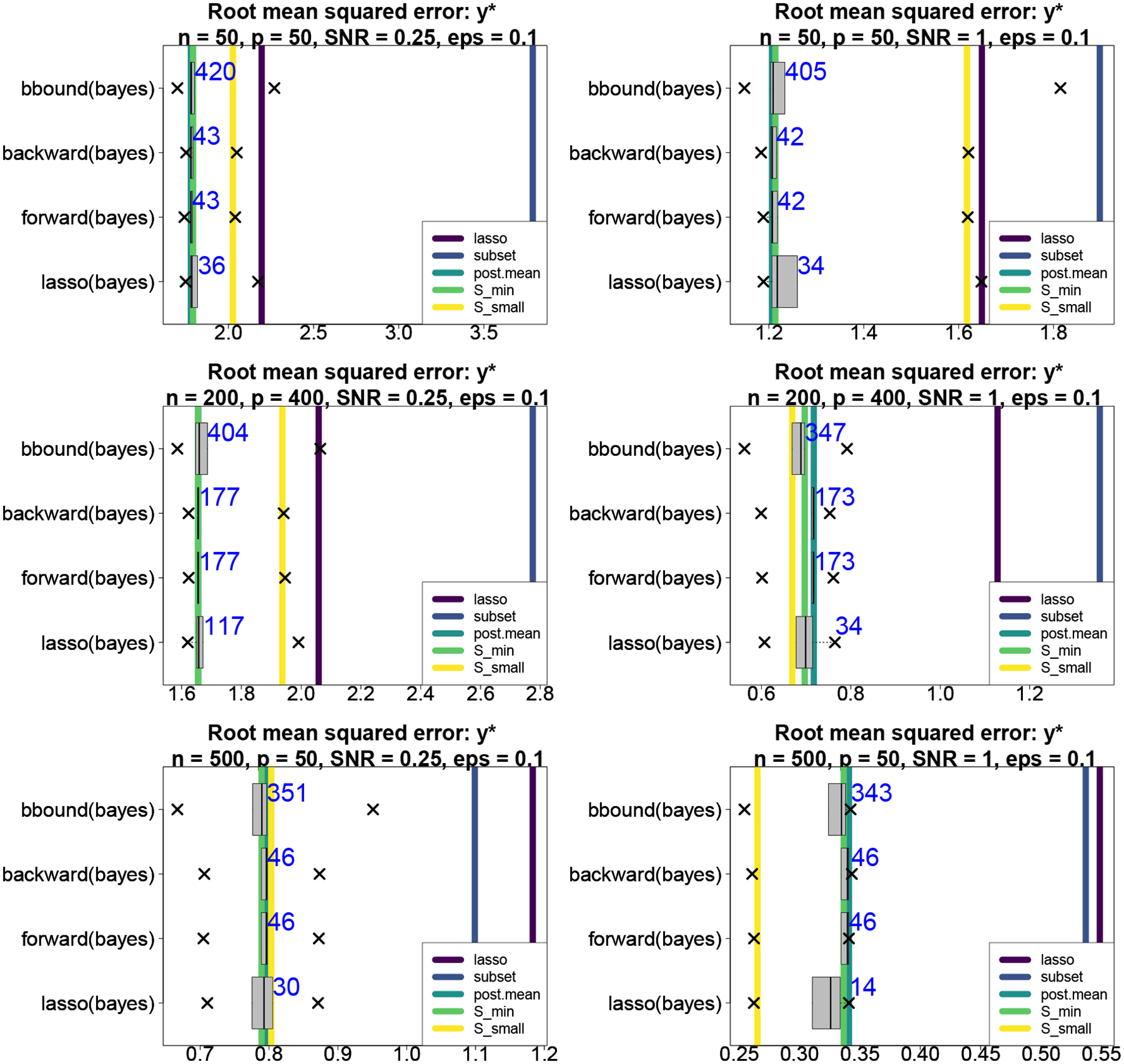

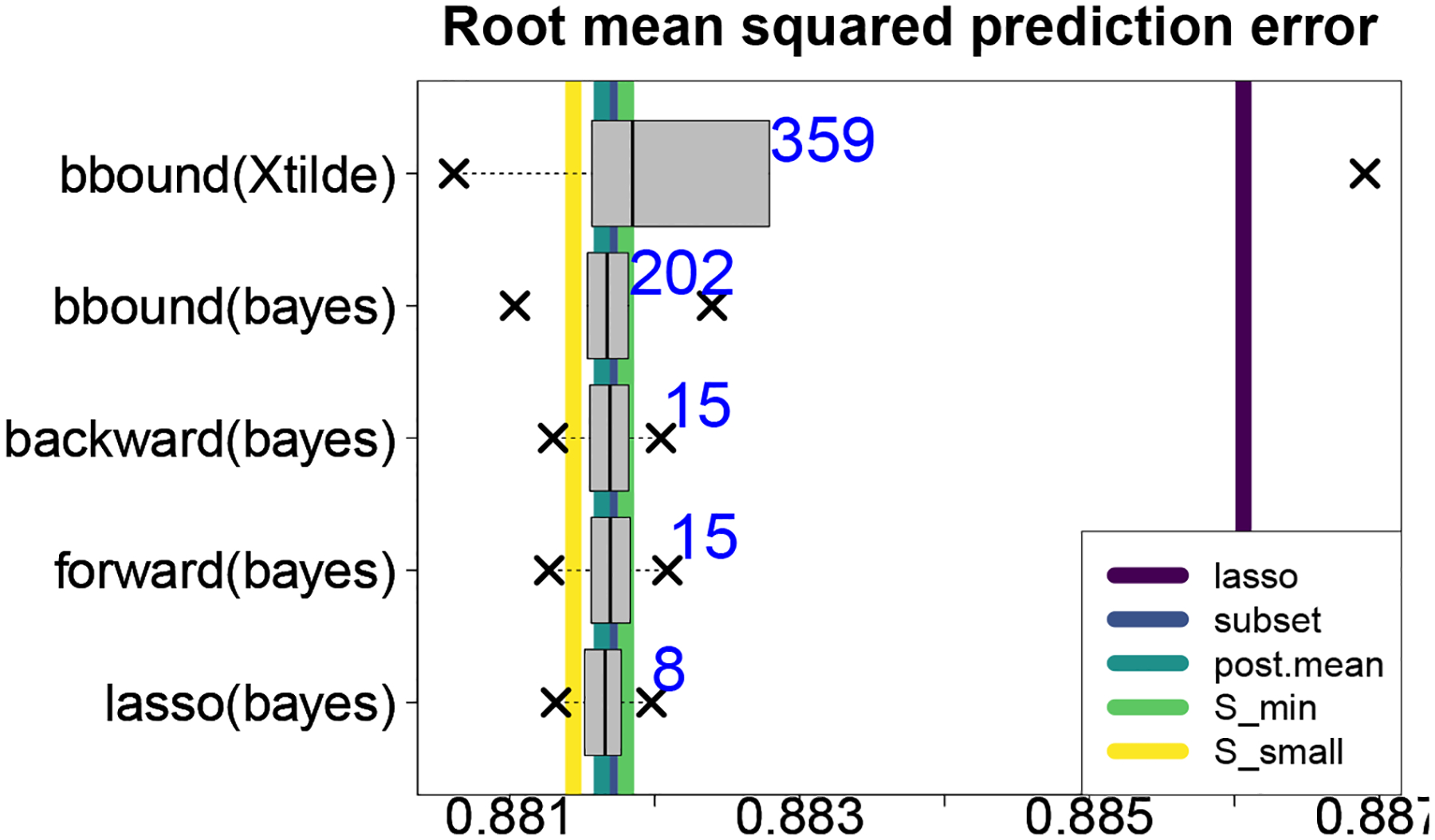

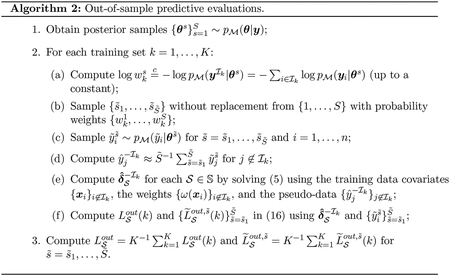

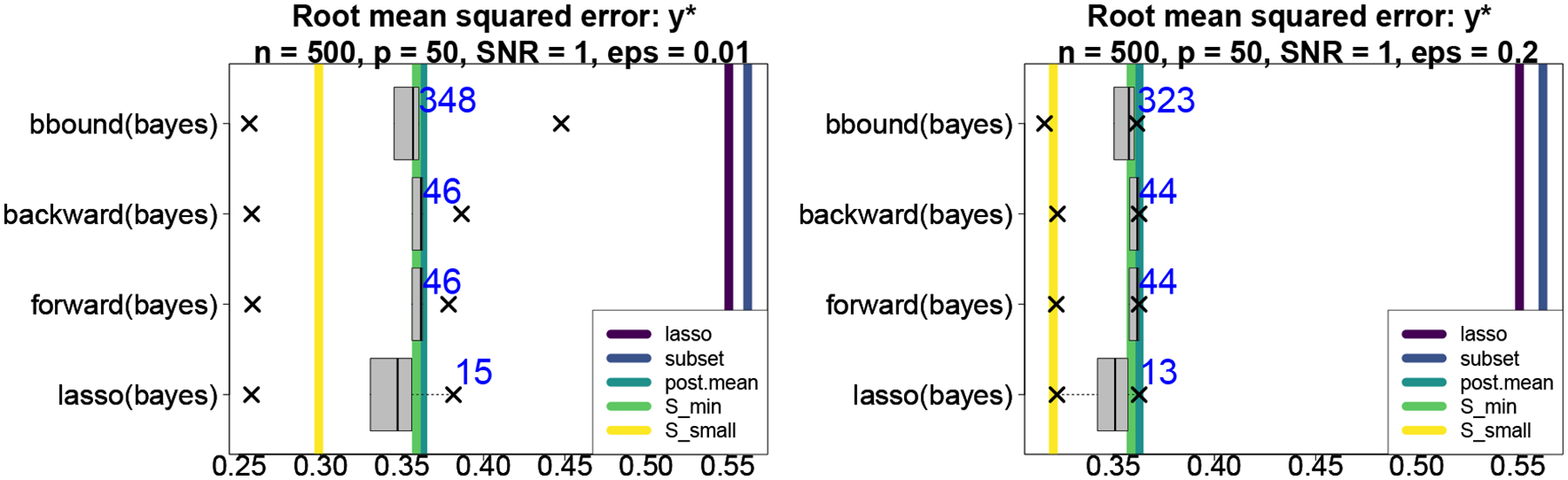

The competing collections of near-optimal subsets are evaluated in aggregate. At each simulation, we compute the qth quantile of the root mean squared errors (RMSEs) for the true mean across all subsets within that acceptable family, and then average that quantity across all simulations. For example, describes the predictive performance if we were to use the worst acceptable subset at each simulation, as determined by an oracle. These quantities summarize the distribution of RMSEs within each acceptable family; we report the values for and present the results as boxplots in Figure 1. Most notably, the proposed bbound(bayes) strategy produces up to 10 times the number of acceptable subsets as the other methods, yet crucially maintains equivalent predictive performance. Naturally, this expanded collection of subsets also produces a minimum RMSE that outperforms the remaining methods. Clearly, the proposed approach is far superior at collecting more subsets that provide near-optimal predictive accuracy.

Figure 1:

Root mean squared errors (RMSEs) for predicting across configurations. The boxplots summarize the RMSE quantiles for the subsets within each acceptable family, while the vertical lines denote RMSEs of competing methods. The average size of each acceptable family is annotated. The proposed BBA search returns vastly more subsets that remain highly competitive, while performs very well and substantially outperforms classical subset selection.

Figure 1 also summarizes the point prediction accuracy for several competing methods. First, we include the usual point estimate under given by the posterior expectation of the regression coefficients (post.mean), which does not include any variable or subset selection. Next, we compute point predictions for the key acceptable subsets and using the proposed bbound(bayes) approach; the competing search strategies produced similar results and are omitted. Among frequentist estimators, we use the adaptive lasso (lasso; Zou, 2006) with chosen by 10-fold cross-validation and the one-standard-error rule (Hastie et al., 2009). In addition, we include classical subset selection (subset) implemented using the leaps package in R with the final subset determined by AIC. When , we screen to the first 35 predictors that enter the model in the aforementioned adaptive lasso. Forward (forward) and backward (backward) selection were also considered, but were either noncompetitive or performed similarly to the adaptive lasso and are omitted from this plot.

Most notably, the predictive performance of is excellent, especially for high SNRs, and substantially outperforms the frequentist methods in all settings. Because is overly conservative—i.e., it selects too many variables (see Table 1)—it performs nearly identically to post.mean. Although and post.mean outperform when the SNR is low and the sample size is small, note that is not targeted exclusively for optimal predictive performance; rather, it represents the smallest subset that obtains near-optimal performance. Lastly, the regularization induced by is greatly beneficial: every member of each acceptable family decisively outperforms classical subset selection across all scenarios.

Table 1:

True positive rates (TPR) and true negative rates (TNR) for Gaussian synthetic data with active covariates including the intercept. The selection performance of is excellent and similar to the the adaptive lasso. Classical subset, forward, and backward selection are too aggressive, while the posterior interval-based selection is too conservative.

| , , SNR = 0.25 | |||||||

|---|---|---|---|---|---|---|---|

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 0.22 | 0.96 | 0.93 | 0.53 | 0.06 | 0.51 | 0.22 |

| TNR | 0.98 | 0.06 | 0.10 | 0.63 | 1.00 | 0.81 | 0.98 |

| , , SNR = 1 | |||||||

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 0.54 | 0.95 | 0.89 | 0.72 | 0.16 | 0.77 | 0.34 |

| TNR | 0.92 | 0.06 | 0.11 | 0.64 | 1.00 | 0.78 | 0.99 |

| , , SNR = 0.25 | |||||||

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 0.36 | 0.79 | 0.78 | 0.67 | 0.17 | 0.75 | 0.34 |

| TNR | 1.00 | 0.52 | 0.54 | 0.96 | 1.00 | 0.95 | 1.00 |

| , , SNR = 1 | |||||||

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 0.97 | 0.96 | 0.95 | 0.95 | 0.75 | 0.98 | 0.93 |

| TNR | 0.99 | 0.57 | 0.60 | 0.96 | 1.00 | 0.95 | 1.00 |

| , , SNR = 0.25 | |||||||

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 0.84 | 0.96 | 0.95 | 0.94 | 0.59 | 0.99 | 0.86 |

| TNR | 0.98 | 0.83 | 0.83 | 0.83 | 1.00 | 0.70 | 0.98 |

| , , SNR = 1 | |||||||

| lasso | forward | backward | subset | posterior HPD | |||

| TPR | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

| TNR | 1.00 | 0.84 | 0.83 | 0.83 | 1.00 | 0.67 | 0.99 |

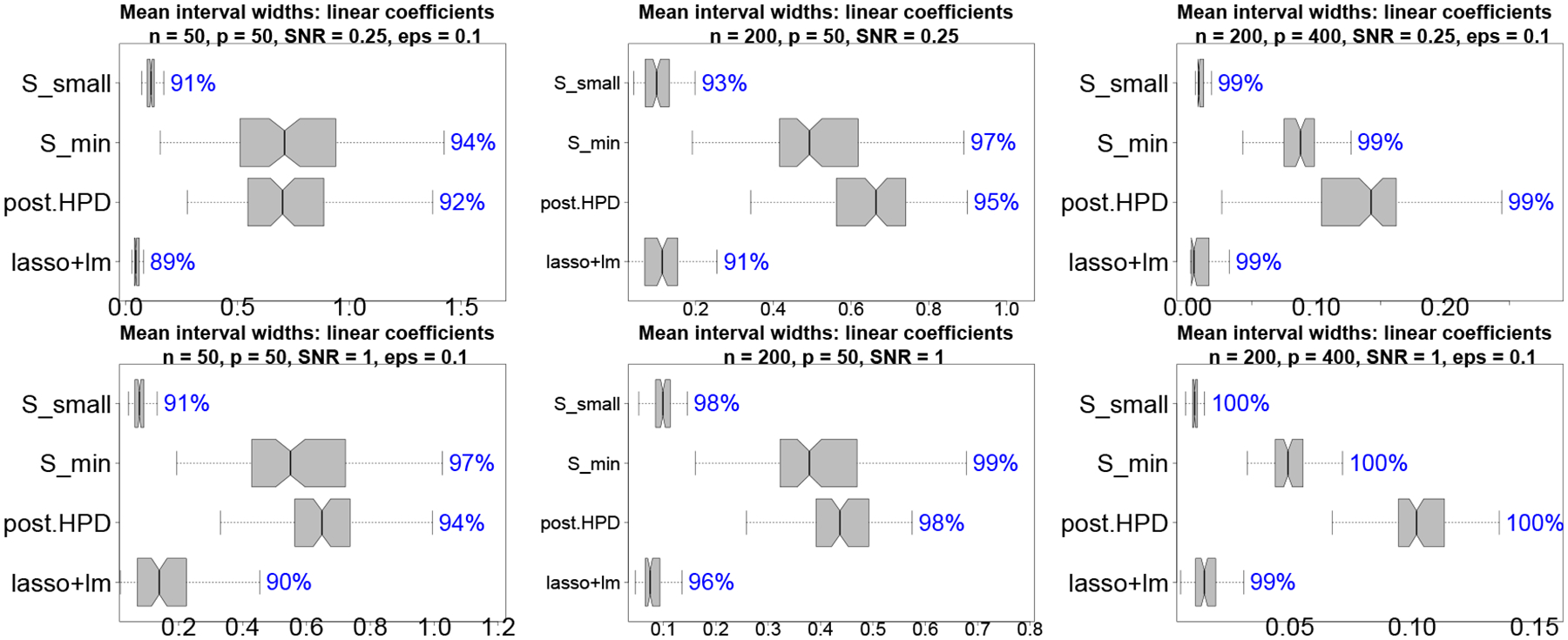

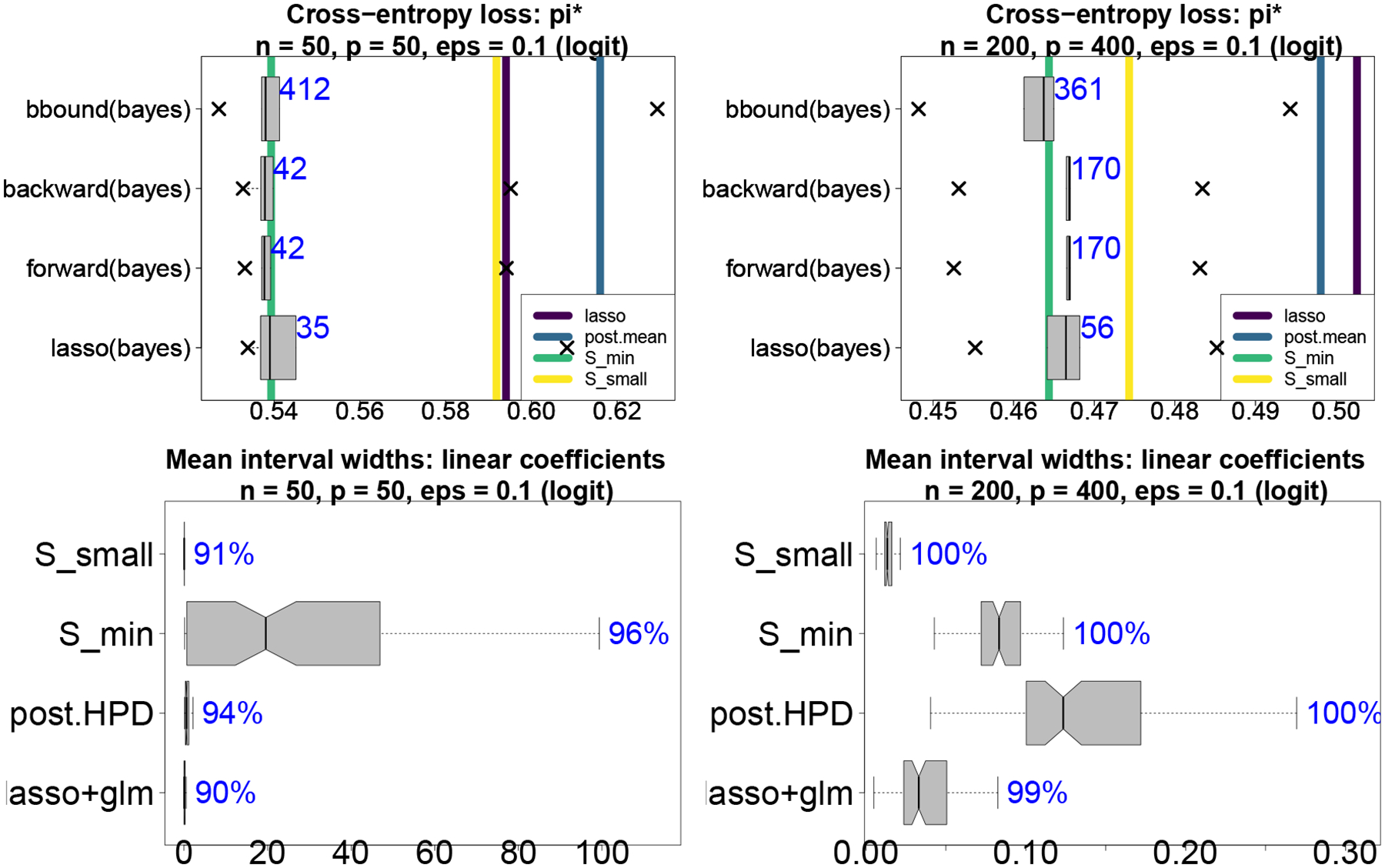

Next, we compare uncertainty quantification for the regression coefficients . We compute 90% intervals using the predictive action from (23) for and based on the proposed bbound(bayes) procedure. In addition, we compute 90% highest posterior density (HPD) credible intervals for under (post.HPD) and 90% frequentist confidence intervals using Zhao et al. (2021), which computes confidence intervals from a linear regression model that includes only the variables selected by the (adaptive) lasso (lasso+lm). The 90% interval estimates are evaluated and compared in Figure 2 using interval widths and empirical coverage. The intervals from using (22) are uniformly better (i.e., narrower) than the usual posterior HPD intervals under . In addition, the intervals from Zhao et al. (2021) are highly competitive, despite ignoring selection bias. In all cases, the intervals are overly conservative and achieve the nominal empirical coverage.

Figure 2:

Mean interval widths (boxplots) with empirical coverage (annotations) for . Non-overlapping notches indicate significant differences between medians. The proposed intervals based on are significantly narrower than the usual HPD intervals under yet maintain the empirical nominal 90% coverage.

Lastly, marginal variable selection is evaluated using true positive rates and true negative rates in Table 1. In addition to and , we include the common Bayesian selection technique that includes a variable when the 95% HPD interval for excludes zero (posterior HPD). The selection performance of is excellent and similar to the the adaptive lasso. Classical subset, forward, and backward selection are too aggressive, while the posterior interval-based selection is too conservative. Hence, despite the primary focus on the collection of near-optimal subsets, the smallest acceptable subset is a key member with excellent prediction, uncertainty quantification, and marginal selection performance.

The appendix contains simulation studies for classification (Appendix B) and variations for , and the distributions of and (Appendix C). All results are qualitatively similar to those presented here.

4. Subset selection for predicting educational outcomes

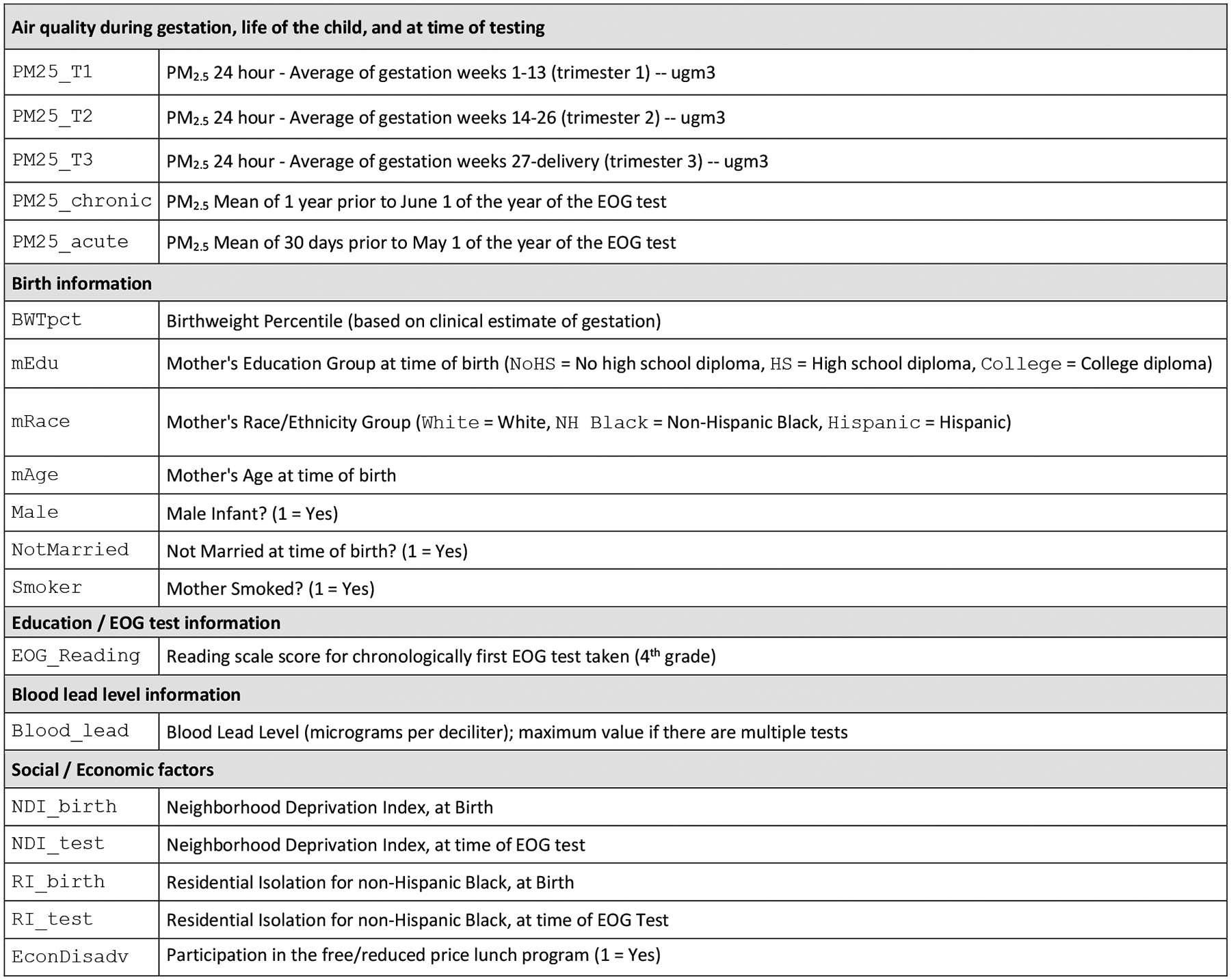

Childhood educational outcomes are affected by adverse environmental exposures, such as poor air quality and lead, as well as social stressors, such as poverty, racial residential isolation, and neighborhood deviation. We study childhood educational development using end-of-grade reading test scores for a large cohort of fourth grade children in North Carolina (Children’s Environmental Health Initiative, 2020). The reading scores are accompanied by a collection of student-level information detailed in Figure 3, which includes air quality exposures, birth information, blood lead measurements, and social and economic factors (see also Kowal et al., 2021). The goal is to identify subsets of these variables and interactions that offer near-optimal prediction of reading scores as well as accurate classification of at-risk students.

Figure 3:

Variables in the NC education dataset. Data are restricted to individuals with 37–42 weeks gestation, mother’s age 15–44, Blood_lead ≤80, birth order ≤4, no current limited English proficiency, and residence in NC at time of birth and time of 4th grade test.

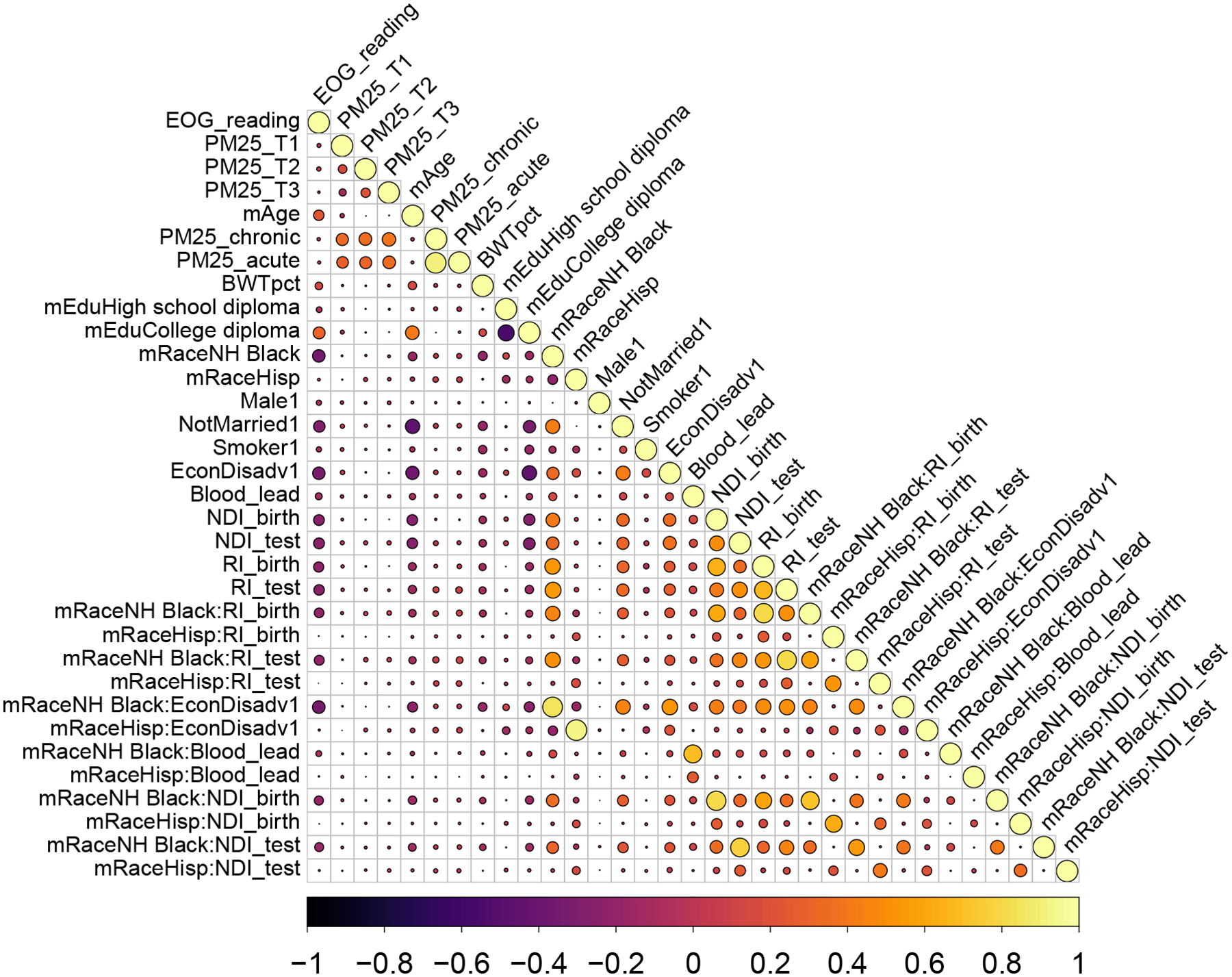

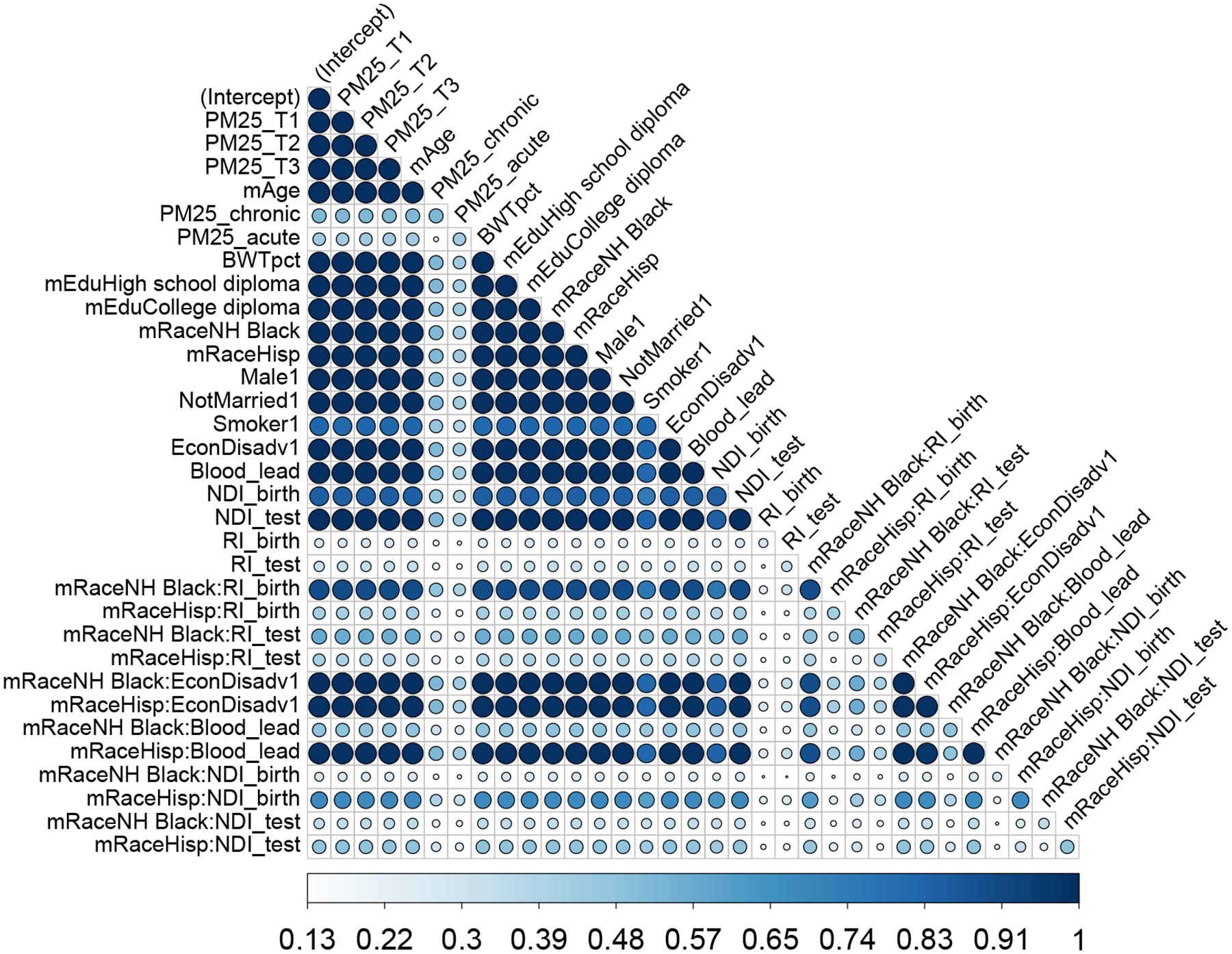

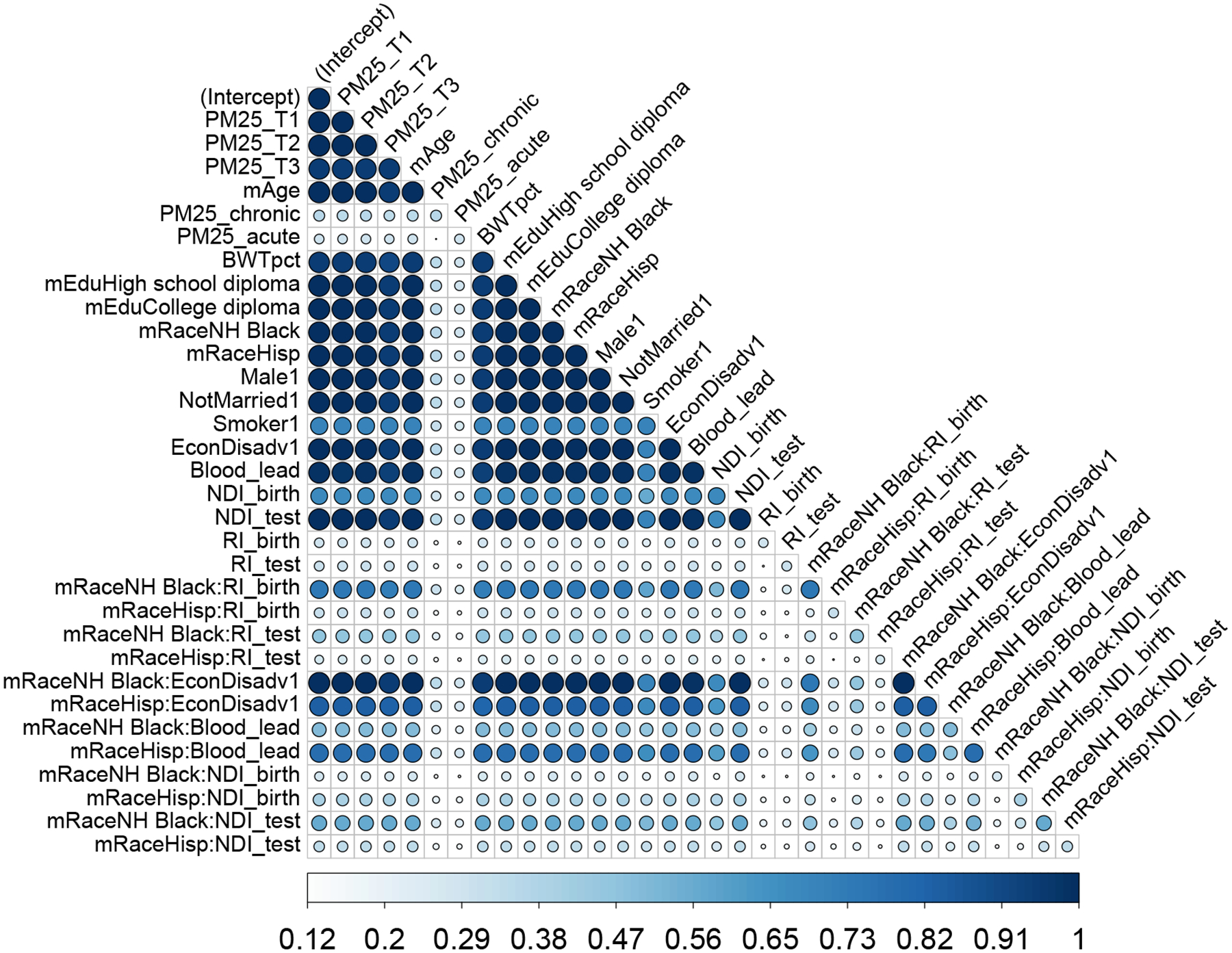

A prominent feature of the data is the correlation among the covariates. After centering and scaling the continuous covariates to mean zero and standard deviation 0.5, we augment the variables in Figure 3 (excluding the test scores) with interactions between race and the social and economic factors. The resulting dimensions are and . Figure E.1 displays the pairwise correlations among the covariates and the response variable. There are strong associations among the air quality exposures as well as among race and the social and economic factors. Due to the dependences among variables, it is likely that distinct subsets of similar predictive ability can be obtained by interchanging among these correlated covariates. Hence, it is advantageous to collect and study the near-optimal subsets.

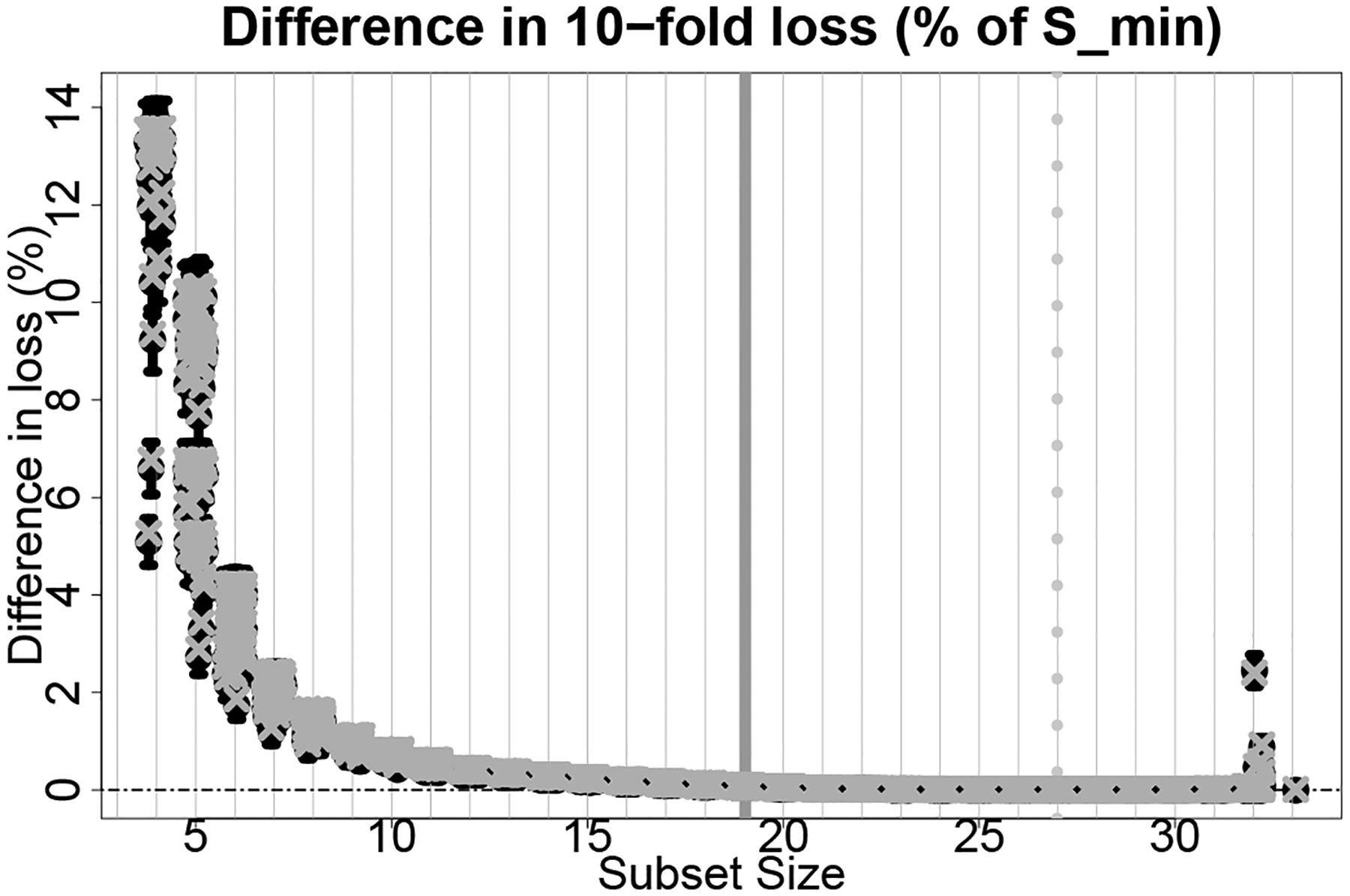

We compute acceptable families for (i) the reading scores under squared error loss and (ii) the indicator under cross-entropy loss, where is the 0.1-quantile of the reading scores (see Appendix D). While task (i) broadly considers the spectrum of educational outcomes via reading scores, task (ii) targets at-risk students in the bottom 10% of reading ability. Acceptable families and accompanying quantities for both tasks can be computed using the same Bayesian model : we focus on Gaussian linear regression with horseshoe priors. The acceptable families are computed using the proposed BBA search with and ; results for other values, , and are noted, while alternative target covariates are in Section 4.2.

4.1. Subset selection for predicting reading scores

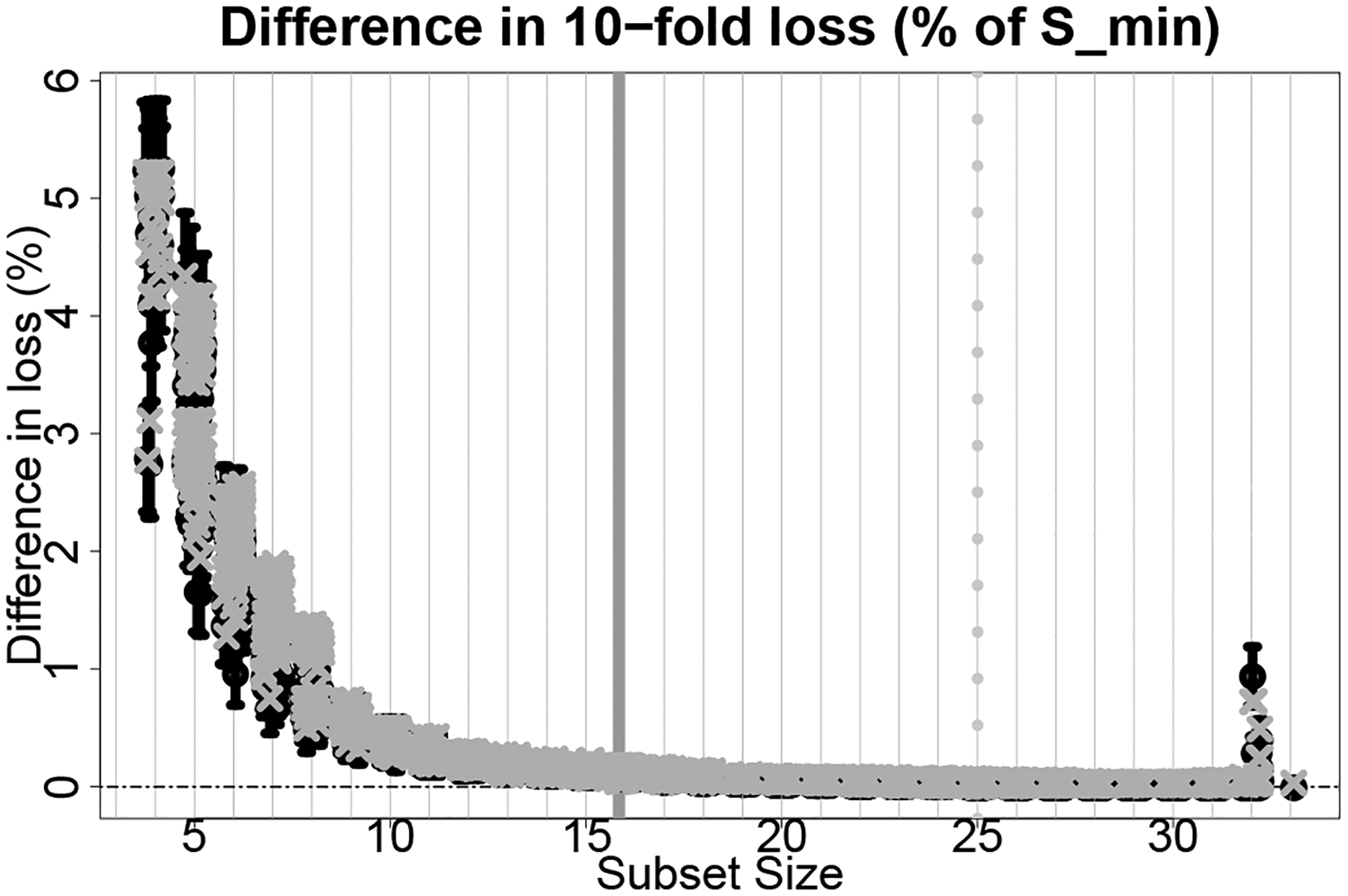

First, we predict reading scores using a linear model for and squared error loss for . Since acceptable family is defined based on in (19), we summarize its distribution in Figure 4. For each , we display 80% intervals, expectations, and the empirical analog . The smaller subsets of sizes four to six demonstrate clear separation for certain subsets. Along with the intercept and the race indicators, these subsets include mEdu (college diploma), EconDisadv, and mEdu (completed high school) in sequence. However, larger subsets are needed to procure near-optimal predictions for smaller margins, such as . While the best subset includes nearly all of the covariates, many subsets with achieve within of the accuracy of .

Figure 4:

The 80% intervals (bars) and expected values (circles) for with (x-marks) under squared error loss for each subset size with . We annotate (dashed gray line) and (solid gray line) for and and jitter the subset sizes for clarity of presentation.

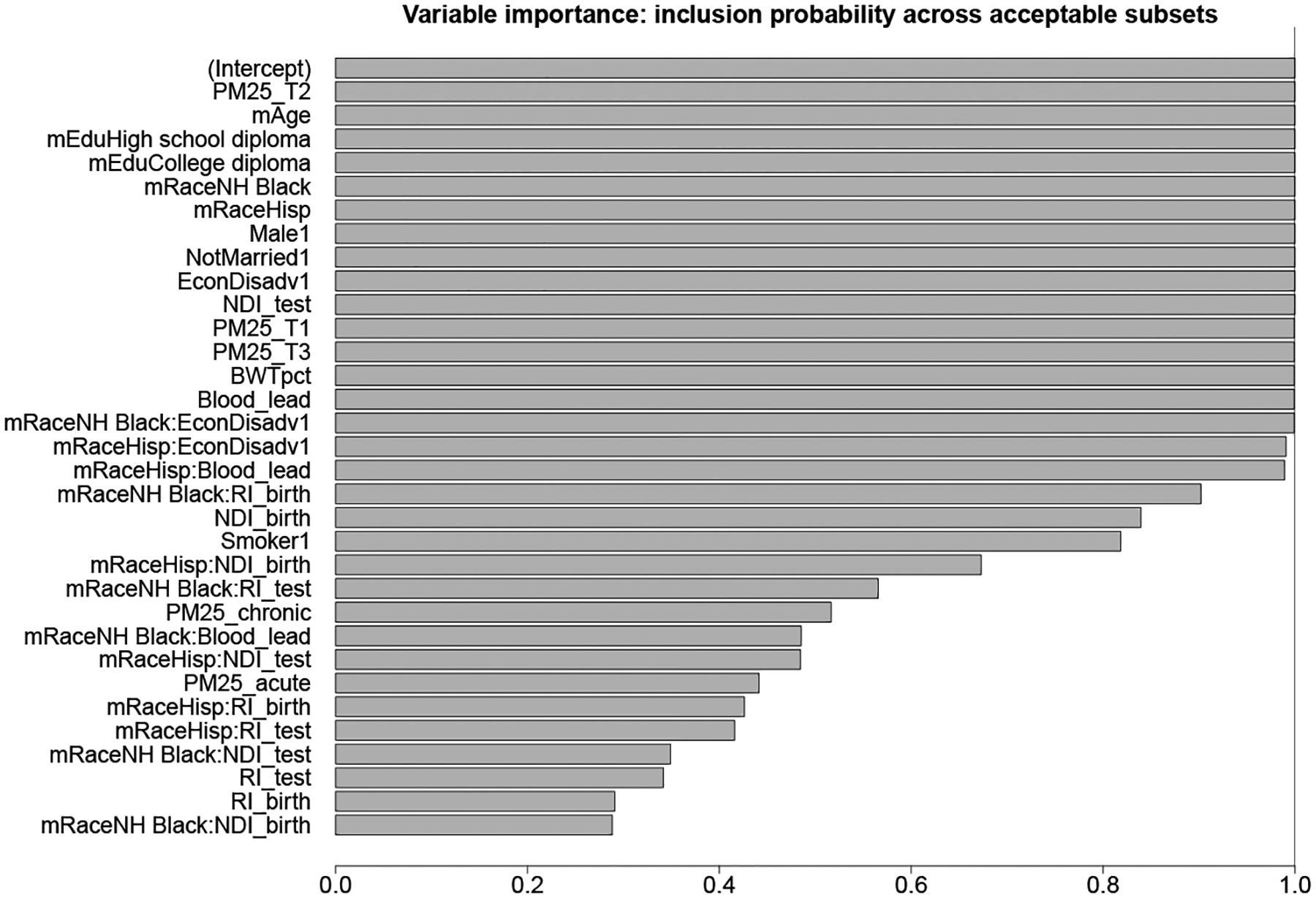

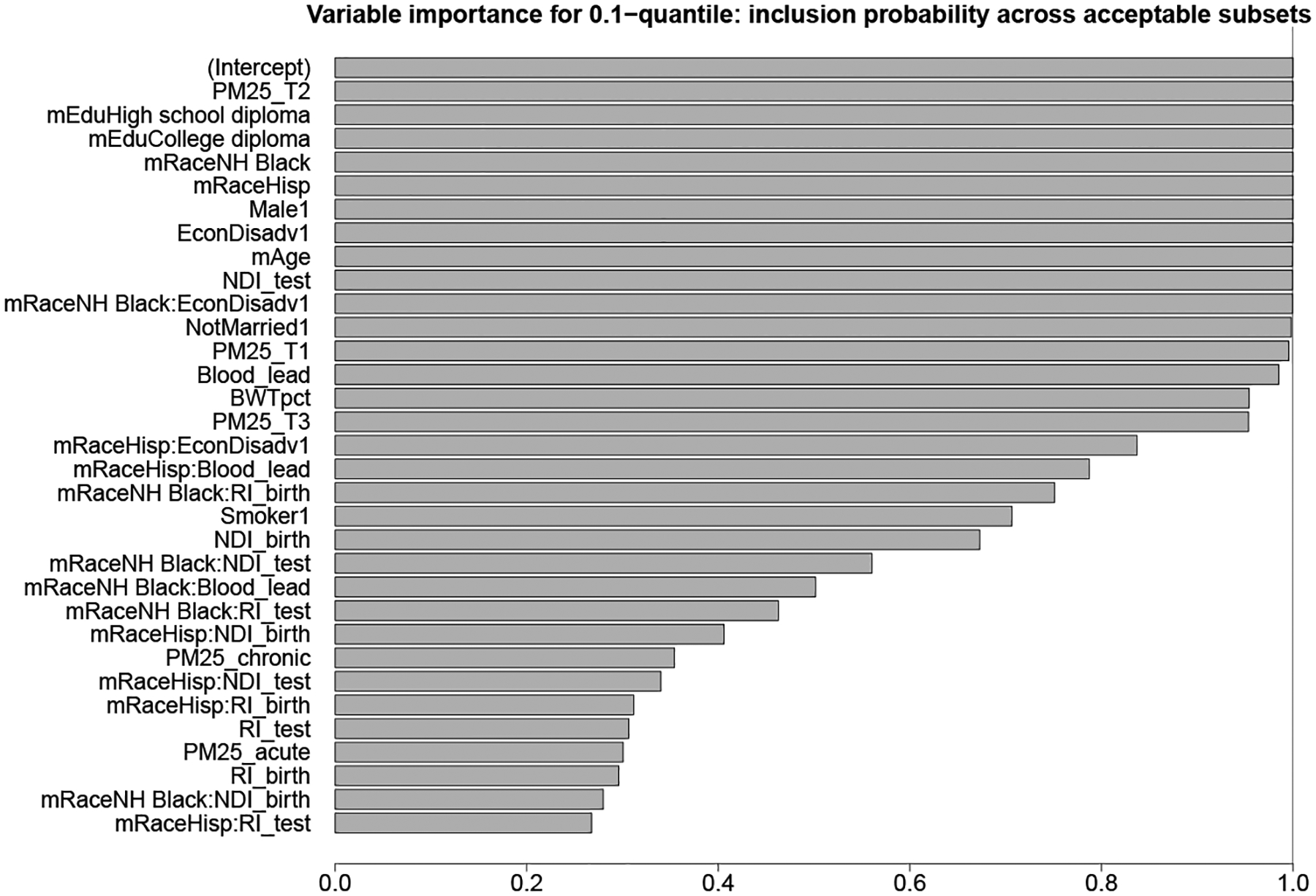

Among the candidate subsets identified from the BBA search, there are acceptable subsets. We summarize via the co-variable importance metrics and in Figures 5 and E.2, respectively. Unlike many variable importance metrics that measure effect sizes, instead quantifies whether each covariate is a component of all, some, or no competitive subsets. There are many keystone covariates that appear in (nearly) all acceptable subsets, including environmental exposures (prenatal air quality and blood lead levels), economic and social factors (EconDisadv, mother’s education level, neighborhood deprivation at time of test), and demographic information (race, gender), among others.

Figure 5:

Variable importance for prediction. There are several tiers: variables appear in (nearly) all, many (> 70%), some (> 30%), or no acceptable subsets.

Interestingly, chronic and acute exposure each belong to nearly 50% of acceptable subsets (Figure 5), yet rarely appear in the same acceptable subset (Figure E.2). The pairwise correlations (Figure E.1) offer a reasonable explanation: these variables are weakly correlated with reading scores but highly correlated with one another. Similar results persist for neighborhood deprivation and racial residential isolation both at birth and time of test. Moreover, this analysis was conducted after removing one acute outlier (50% larger than all other values). When that outlier is kept in the data, acute no longer belongs to any acceptable subset, while for chronic increases. These results are encouraging: the acceptable family identifies redundant yet distinct predictive explanations, but prefers the more stable covariate in the presence of outliers.

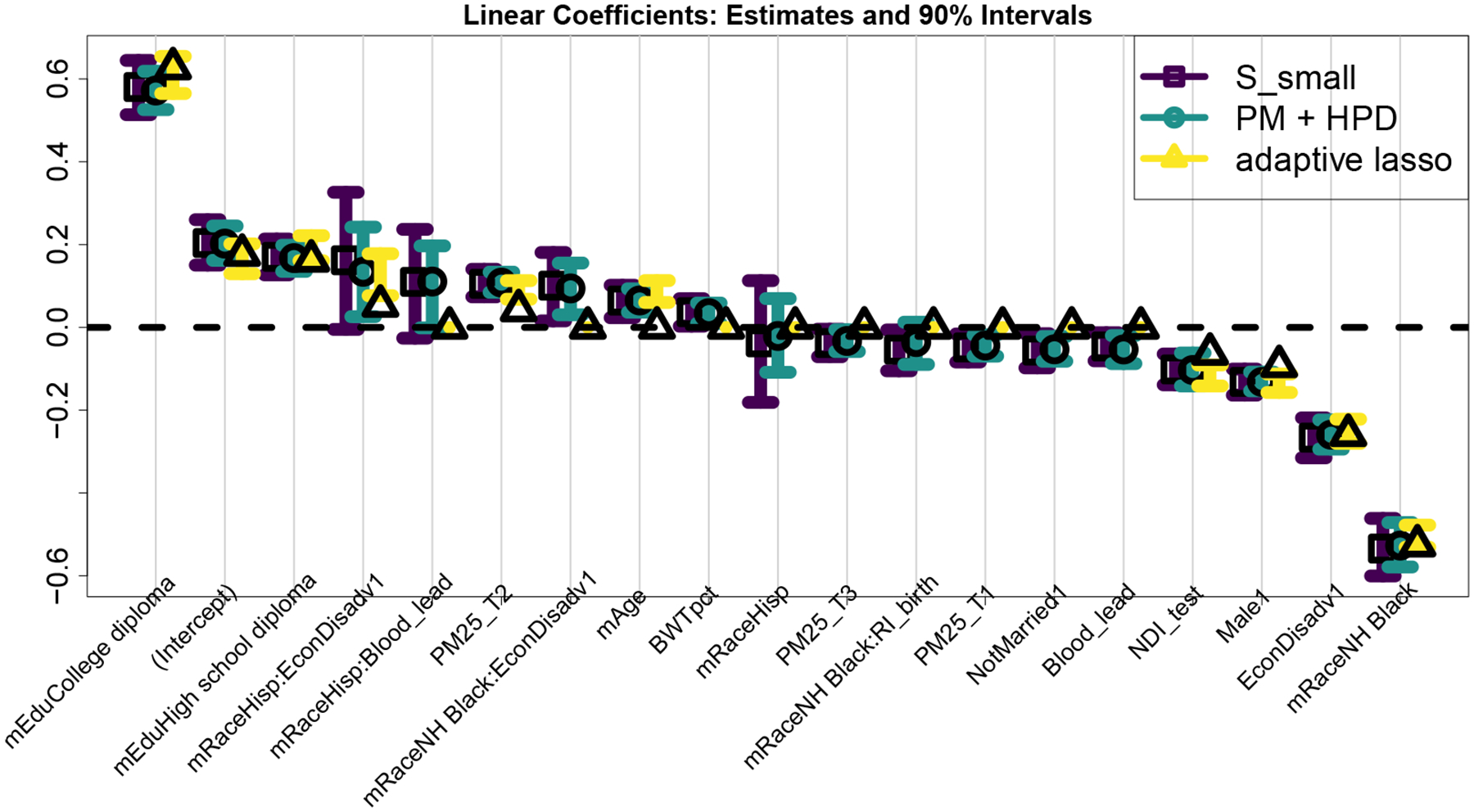

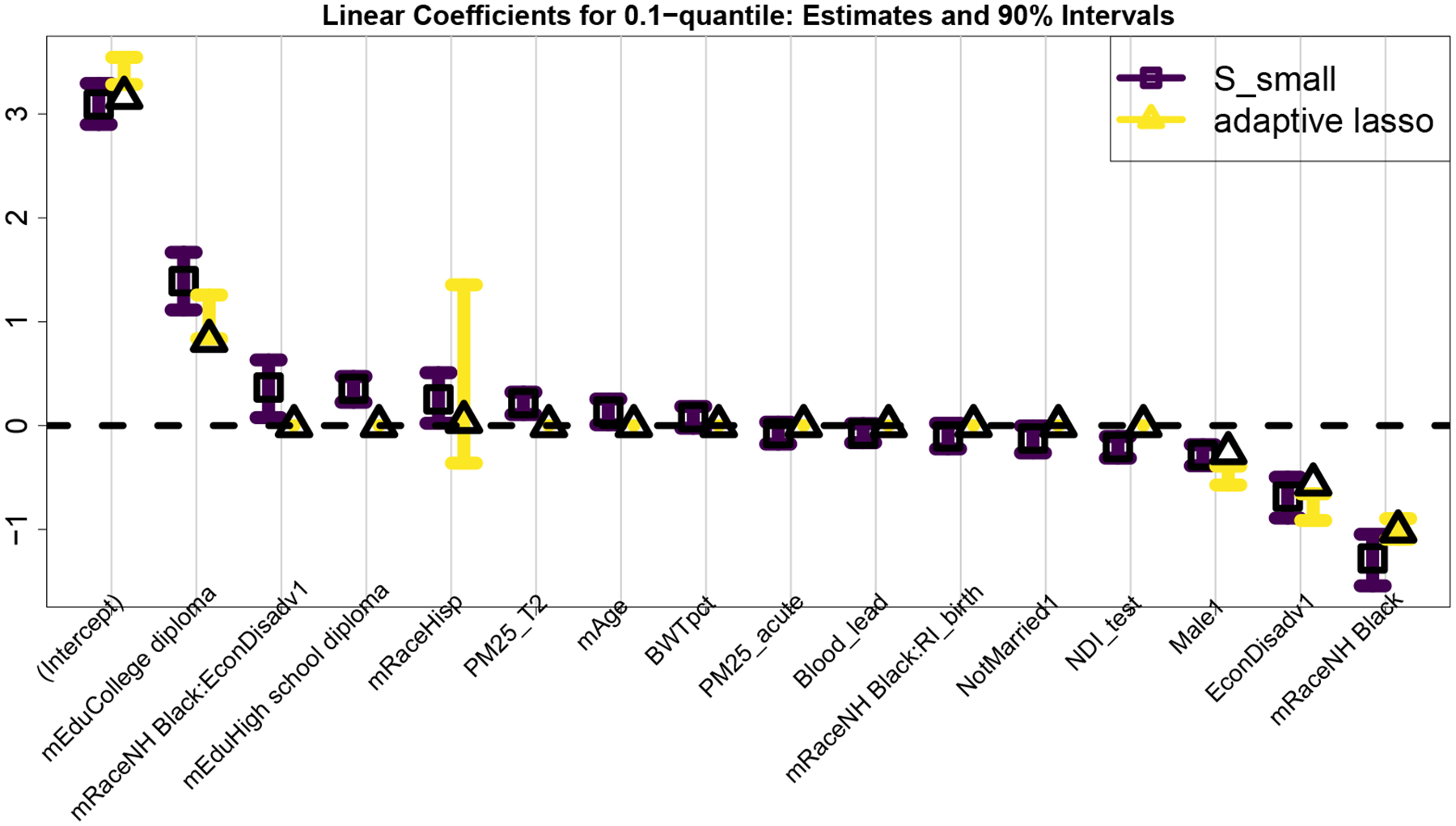

Next, we analyze the smallest acceptable subset and incorporate uncertainty quantification for the accompanying linear coefficients. The selected covariates are displayed in Figure 6 alongside the point and 90% intervals based on the proposed approach, the Bayesian model , and the adaptive lasso. The estimates and intervals for covariates excluded from are identically zero for the proposed approach (and, in this case, the adaptive lasso as well), while the estimates and HPD intervals from are dense for all covariates. Despite the encouraging simulation results for the Zhao et al. (2021) frequentist intervals, these intervals often exclude the adaptive lasso point estimates, which undermines interpretability.

Figure 6:

Estimated linear effects and 90% intervals for the variables in based on the proposed approach, model , and the adaptive lasso.

The estimates from and are similar with anticipated directionality: higher mother’s education levels, lower blood lead levels in the child, less neighborhood deprivation, and absence of economic disadvantages predict higher reading scores. Prenatal air quality exposure is significant: due to seasonal effects, the 1st and 3rd trimester exposures have negative coefficients, while the 2nd trimester has a positive effect. Naturally, these effects can only be interpreted jointly. Among interaction terms, the negative effect of NH Black × RI_birth suggests the lower reading scores among non-Hispanic Black students are accentuated by racial residential isolation in the neighborhood of the child’s birth. Since we do not force all main effects into each subset, does not contain an estimated effect of RI_birth for other race groups. Other interactions, such as the positive effects of Hisp and NH Black by EconDisadv and Hisp × Blood_lead, must also be interpreted carefully: the vast majority of Hispanic and non-Hispanic Black students belong to the EconDisadv group and have much higher blood lead levels on average, while each of NH Black, EconDisadv, and Blood_lead has a strong negative main effect.

The results are not particularly sensitive to or . When , there are candidate subsets and acceptable subsets. The variable importance metrics broadly agree with Figures 5 and E.2, while —and therefore Figure 6—is unchanged. When (and as before), the acceptable family reduces slightly to 977 members and differs only in the addition of Smoker and Hisp × NDI_test.

4.2. Out-of-sample prediction

We evaluate the predictive capabilities of the proposed approach for 20 training/testing splits of the NC education data. The same competing methods are adopted from Section 3, including the distinct search strategies for collecting near-optimal subsets. Since and are reasonably robust to , we select for computational efficiency. In addition, we include the acceptable family defined by setting to be the testing data covariate values (bbound(Xtilde)), which is otherwise identical to bbound(bayes). Root mean squared prediction errors (RMSPEs) are used for evaluation.

The results are presented in Figure 7. Among single subset methods, outperforms all competitors—including the classical forward and backward estimators and the smallest acceptable subsets from lasso(bayes), forward(bayes), and backward (bayes) discussed in Section 3 (not shown). The adaptive lasso selects fewer variables and is not competitive. Among search methods, Figure 7 confirms the results from the simulation study: the proposed BBA strategy (bbound (bayes)) identifies 10–25 times the number of subsets as the other search strategies, yet does not sacrifice any predictive accuracy in this expanded collection. Clearly, bbound(bayes) provides a more complete predictive picture, as the competing search strategies omit a massive number of subsets that do offer near-optimal prediction. The acceptable family based on the out-of-sample covariates bbound(Xtilde) is much larger, which is reasonable: the subsets are computed and evaluated on covariates for which the accompanying response variables are not available. This collection of subsets sacrifices some predictive accuracy relative to the other search strategies, yet still outperforms the adaptive lasso—yet another testament to the importance of the regularization induced by .

Figure 7:

Root mean squared prediction errors (RMSPEs) across 20 training/testing splits of the NC education data. The boxplots summarize the RMSPE quantiles for the subsets within each acceptable family, while the vertical lines denote RMSPEs of competing methods. The average size of each acceptable family is annotated. The proposed BBA search returns vastly more subsets that remain highly competitive, while the accompanying subset performs best overall.

Although the RMSPE differences appear to be small, minor improvements are practically relevant: even a single point on a standardized test score can be the difference between progression to the next grade level (on the low end) or eligibility for intellectually gifted programs (on the high end). More generally, education data are prone to weak signals and small effect sizes, which are conditions under which many methods may offer similar predictive performance. Nonetheless, our primary goal is not to substantially improve prediction, but rather to identify and analyze a large collection of near-optimal subsets.

The importance of the broader BBA search strategy is further highlighted in comparison lasso(bayes), which uses a lasso search path for decision analytic Bayesian variable selection (Hahn and Carvalho, 2015; Kowal et al., 2021). In particular, lasso(bayes) generates only candidate subsets and acceptable subsets. By comparison, bbound(bayes) returns more than 100 times the number of candidate subsets and acceptable subsets. Figure 7 shows that the subsets omitted by lasso(bayes) yet discovered by bbound(bayes) are indeed near-optimal. Further, the restrictive search path of lasso(bayes) does not guarantee greater stability: the smallest acceptable subsets for bbound(bayes) and lasso(bayes) are nearly identical, yet the interquartile range of across the 20 training/testing splits under bbound(bayes) is 0, while the same quantity under lasso(bayes) is 2. Indeed, by this metric, bbound(bayes) actually provides the most stable smallest acceptable subset across all search strategies considered.

5. Discussion

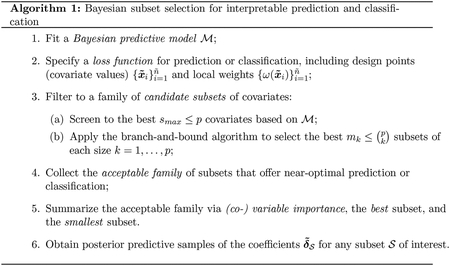

We developed decision analysis tools for Bayesian subset search, selection, and (co-) variable importance. The proposed strategy is outlined in Algorithm 1. Building from a Bayesian predictive model , we derived optimal linear actions for any subset of covariates. We explored the space of subsets using an adaptation of the branch-and-bound algorithm. After filtering to a manageable collection of promising subsets, we identified the acceptable family of near-optimal subsets for linear prediction or classification. The acceptable family was summarized by a new (co-) variable importance metric—the frequency with which variables (co-) appear in all, some, or no acceptable subsets—and individual member subsets, including the “best” and smallest subsets. Using the posterior predictive distribution from , we developed point and interval estimates for the linear coefficients of any subset. Simulation studies demonstrated better prediction, interval estimation, and variable selection for the proposed approach compared to existing Bayesian and frequentist selection methods—even for high-dimensional data with .

We applied these tools to a large education dataset to study the factors that predict educational outcomes. The analysis identified several keystone covariates that appeared in (almost) every near-optimal subset, including environmental exposures, economic and social factors, and demographic information. The co-variable importance metrics highlighted an interesting phenomenon where certain pairs of covariates belonged to many acceptable subsets, yet rarely appeared in the same acceptable subset. Hence, these variables are effectively interchangeable for prediction, which provides valuable context for interpreting their respective effects. We showed that the smallest acceptable subset offers excellent prediction of end-of-grade reading scores and classification of at-risk students using substantially fewer covariates. The corresponding linear coefficients described new and important effects, for example that greater racial residential isolation among non-Hispanic Black students is predictive of lower reading scores. However, our results also caution against overreliance on any particular subset: we identified over 200 distinct subsets of variables that offer near-optimal out-of-sample predictive accuracy.

Future work will attempt to generalize these tools via the loss functions and the actions. Alternatives to squared error and cross-entropy loss can be incorporated with an IRLS approximation strategy similar to Section 2.3, which would maintain the methodology and algorithmic infrastructure from the proposed approach. Similarly, the class of parametrized actions can be expanded to include nonlinear predictors, such as trees or additive models, with acceptable families constructed in the same way.

Acknowledgments

Research was sponsored by the Army Research Office (W911NF-20-1-0184) and the National Institute of Environmental Health Sciences of the National Institutes of Health (R01ES028819). The content, views, and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Office, the North Carolina Department of Health and Human Services, Division of Public Health, the National Institutes of Health, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Appendix A. Approximations for out-of-sample quantities

The acceptable family in (19) is based on the out-of-sample predictive discrepancy metric and the best subset in (18). These quantities both depend on the out-of-sample empirical and predictive losses and , respectively, in (16). Hence, it is required to compute (i) the optimal action on the training data, , and (ii) samples from the out-of-sample predictive distribution . Because of the simplifications afforded by (4)-(5) and (11), computing only requires the out-of-sample expectations or for the classification setting. For many models , there is a further simplification that , where often has an explicit form (such as in regression models). Absent such simplifications, the expectations can be computed by averaging the draws of .

Although these terms can be computed by repeatedly re-fitting the Bayesian model for each training/validation split , such an approach is computationally demanding. Instead, we apply a sampling-importance resampling (SIR) algorithm to approximate these out-of-sample quantities. Notably, the SIR algorithm requires only a single fit of model to the complete data—which is already needed for posterior inference—and therefore contributes minimally to the computational cost of the aggregate analysis.

The details are provided in Algorithm 2. By construction, the samples are from the out-of-sample predictive distribution . Based on Algorithm 2, it is straightforward to compute draws of for any , as well as the best subset in (18). Therefore, the acceptable family is readily computable for any . By default, we use SIR samples.

Algorithm 2 recycles computations: steps 1–2(d) are shared among all subsets and loss functions . Hence, the algorithm is efficient even when the number of candidate subsets is large—and notably does not require re-fitting the Bayesian model . Modifications for classification (Section 2.3) are straightforward: steps (c), (d), and (e) are replaced by for and for ; and compute by solving (11) using the training data covariates , the weights , and the pseudo-data .

Appendix B. Simulation study for classification

The synthetic data-generating process for classification mimics that for prediction: the only difference is that the data are generated as with and as before. For the Bayesian model , we use a logistic regression model with horseshoe priors and estimated using rstanarm (Goodrich et al., 2018). The competing estimators are constructed similarly as before, now using cross-entropy loss (10) for the proposed approach and the logistic likelihood for the adaptive lasso.

The classification performance is evaluated using cross-entropy loss for in Figure B.1 (top), using the same competing search methods as in Figure 1. As in the regression case, the proposed bbound(bayes) search procedure returns vastly more subsets in the acceptable family, yet maintains excellent classification performance within this collection. In addition, classification based on and substantially outperform the adaptive lasso. We also include the 90% interval comparisons in Figure B.1 (bottom), which confirm the results from the prediction scenario: and Zhao et al. (2021) (modified for the logistic case) achieve the nominal coverage with the narrowest intervals.

Figure B.1:

Top: cross-entropy loss for . The boxplots summarize the cross-entropy quantiles for the subsets within each acceptable family, while the vertical lines denote cross-entropy of competing methods. The average size of each acceptable family is annotated. Bottom: Mean interval widths (boxplots) with empirical coverage (annotations) for .

Appendix C. Simulation study for sensitivity analysis

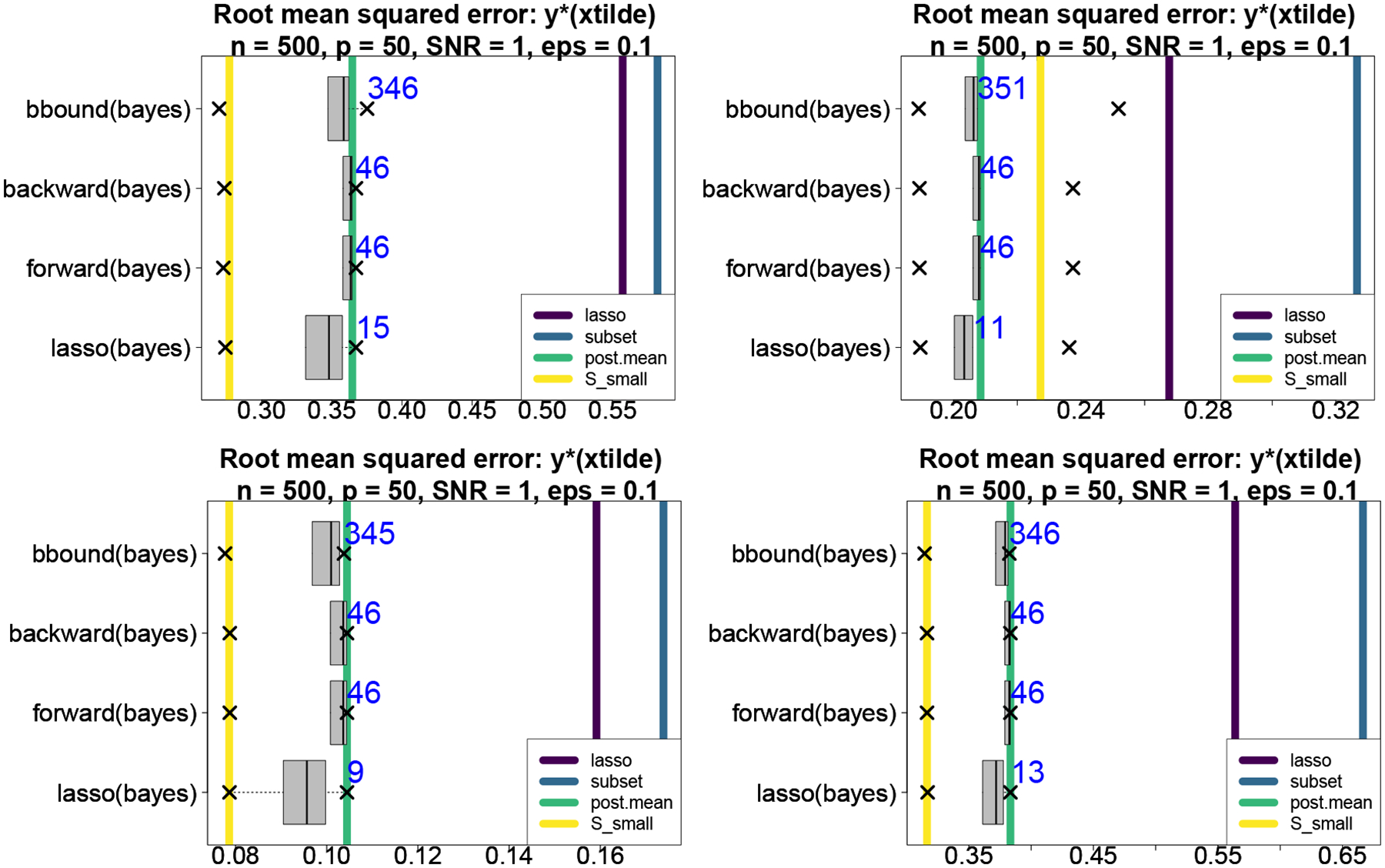

We revisit the comparisons in Figure 1 to consider sensitivities to and . In Figure C.1, we report results for the acceptable families with and ; the frequentist estimators and post.mean are unchanged from Figure 1. Compared also to the analogous case in Figure 1 with , we see the expected ordering in the cardinalities of the acceptable families: larger values of produce a more stringent criterion and therefore fewer acceptable subsets. Notably, the main results are not sensitive to the choice of , and performs exceptionally well in all cases.

Figure C.1:

Root mean squared errors (RMSEs) for predicting . The boxplots summarize the RMSE quantiles for the subsets within each acceptable family; here, the acceptable families use (left) and (right). The vertical lines denote RMSEs of competing methods and the average size of each acceptable family is annotated. The results suggest only minor sensitivity to the choice of .

Next, we again modify the comparisons in Figure 1 to evaluate prediction at a new collection of covariates . We allow variations in the covariate data-generating process—either correlated standard normals from Section 3 or iid Uniform(0,1)—and whether the observed covariates follow the same data-generating process as the target covariates . These configurations generate four scenarios, all with . The competing acceptable families are each generated using this choice of and the point predictions are evaluated for for . The results are in Figure C.2. Naturally, performance worsens across the board when observed and target covariates differ in either their values or their distributions. When the target covariates are iid uniform (top right, bottom left), all methods actually perform better—likely due to the light-tailed (bounded) and independent covariate values. Yet perhaps most remarkably, the relative performance among the methods remains consistent, while outperforms all competitors in all but one scenario—and decisively outperforms the frequentist methods in all cases.

Appendix D. Subset selection for classifying at-risk students

We now focus the analysis on at-risk students via the functional under cross-entropy loss, where is the 0.1-quantile of the reading scores. The posterior predictive variables derive from the same model as in Section 4, which eliminates the need for additional model specification, fitting, and diagnostics. However, it is also possible to fit a separate logistic regression model to the empirical functionals , which we do for the logistic adaptive lasso competitor.

Figure C.2:

Root mean squared errors (RMSEs) for predicting for covariates when the observed covariates are correlated standard normals (top) or iid uniforms (bottom) and when the target covariates have the same distribution (left) or a different distribution (right) from . The boxplots summarize the RMSE quantiles for the subsets within each acceptable family. The vertical lines denote RMSEs of competing methods and the average size of each acceptable family is annotated.

It is most informative to study how the acceptable families differ for targeted classification relative to prediction. The comparative distributions of (Figures 4 and D.1) clearly show that classification admits smaller subsets capable of matching the performance of within .

The variable importance metrics (Figures D.2 and E.3) identify the same keystone covariates as in the prediction setting. However, although there are many more acceptable subsets for classification than for prediction , each is generally much smaller for classification. Indeed, the smallest acceptable subset for classification is smaller, , and is accompanied by 21 acceptable subsets of this size. In aggregate, these results suggest that near-optimal linear classification of at-risk students is achievable for a broader variety of smaller subsets of covariates.

Lastly, the selected covariates from are plotted with 90% intervals in Figure D.3. Compared to the prediction version, for classification replaces 1st trimester exposure with acute exposure and omits the interactions Hisp × Blood_lead and Hisp × EconDisadv, but is otherwise the same. The posterior expectations under are excluded because they are not targeted for prediction of and therefore are not directly comparable. However, there is some disagreement between and the adaptive lasso, the latter of which excludes several keystone covariates and again suffers from inconsistency between the point and interval estimates.

Figure D.1:

The 80% intervals (bars) and expected values (circles) for with (x-marks) under cross-entropy for each subset size with . We annotate (dashed gray line) and (solid gray line) and jitter the subset sizes for clarity of presentation.

Figure D.2:

Variable importance for classification of the 0.1-quantile .

Figure D.3:

Estimated linear effects and 90% intervals for the variables in based on the proposed approach and the adaptive lasso.

Appendix E. Supporting figures

These figures include: pairwise correlations among the covariate (Figure E.1) and co-variable importance for prediction (Figure E.2) and classification (Figure E.3).

Figure E.1:

Pairwise correlations among the covariates in the NC education data.

Figure E.2:

Co-variable importance for prediction. For certain pairs of variables (chronic and acute PM2.5 exposure; neighborhood deprivation and racial residential isolation both at birth and time of test), it is common for one—but not both—to appear in an acceptable subset.

Figure E.3:

Co-variable importance for classification of the 0.1-quantile.

References

- Afrabandpey Homayun, Peltola Tomi, Piironen Juho, Vehtari Aki, and Kaski Samuel. A decision-theoretic approach for model interpretability in Bayesian framework. Machine Learning, 109(9):1855–1876, 2020. ISSN 1573–0565. [Google Scholar]

- Barbieri Maria Maddalena and Berger James O.. Optimal predictive model selection. Annals of Statistics, 32(3):870–897, 2004. ISSN 00905364. doi: 10.1214/009053604000000238. [DOI] [Google Scholar]

- Bashir Amir, Carvalho Carlos M., Hahn P. Richard, and Jones M. Beatrix. Post-processing posteriors over precision matrices to produce sparse graph estimates. Bayesian Analysis, 14(4):1075–1090, 2019. ISSN 19316690. doi: 10.1214/18-BA1139. [DOI] [Google Scholar]

- Bernardo José M and Smith Adrian F M. Bayesian theory, volume 405. John Wiley and Sons, Inc., 2009. ISBN 047031771X. [Google Scholar]

- Bertsimas Dimitris, King Angela, and Mazumder Rahul. Best subset selection via a modern optimization lens. Annals of statistics, 44(2):813–852, 2016. ISSN 0090–5364. [Google Scholar]

- Bondell Howard D. and Reich Brian J.. Consistent high-dimensional Bayesian variable selection via penalized credible regions. Journal of the American Statistical Association, 107(500):1610–1624, 2012. ISSN 01621459. doi: 10.1080/01621459.2012.716344. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Breiman Leo. Statistical Modeling: The Two Cultures (with comments and a rejoinder by the author). Statistical Science, 16(3):199–231, 2001. ISSN 0883–4237. doi: 10.1214/ss/1009213726. [DOI] [Google Scholar]

- Carvalho Carlos M., Polson Nicholas G., and Scott James G.. The horseshoe estimator for sparse signals. Biometrika, 97(2):465–480, 2010. ISSN 00063444. doi: 10.1093/biomet/asq017. [DOI] [Google Scholar]

- Children’s Environmental Health Initiative. Linked Births, Lead Surveillance, Grade 4 End-Of-Grade (EoG) Scores [Data set], 2020. URL 10.25614/COHORT_2000. [DOI] [Google Scholar]

- Datta Jyotishka and Ghosh Jayanta K. Asymptotic properties of Bayes risk for the horseshoe prior. Bayesian Analysis, 8(1):111–132, 2013. [Google Scholar]

- Dong Jiayun and Rudin Cynthia. Variable importance clouds: A way to explore variable importance for the set of good models. arXiv preprint arXiv:1901.03209, 2019. [Google Scholar]

- Fan Jianqing and Lv Jinchi. Sure independence screening for ultrahigh dimensional feature space. Journal of the Royal Statistical Society. Series B: Statistical Methodology, 70(5): 849–911, 2008. ISSN 13697412. doi: 10.1111/j.1467-9868.2008.00674.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan Jianqing and Lv Jinchi. A selective overview of variable selection in high dimensional feature space. Statistica Sinica, 20(1):101–148, 2010. ISSN 10170405. [PMC free article] [PubMed] [Google Scholar]

- Furnival George M and Wilson Robert W. Regressions by leaps and bounds. Technometrics, 42(1):69–79,2000. ISSN 0040–1706. [Google Scholar]

- Gatu Cristian and Kontoghiorghes Erricos John. Branch-and-bound algorithms for computing the best-subset regression models. Journal of Computational and Graphical Statistics, 15(1):139–156, 2006. ISSN 1061–8600. [Google Scholar]

- Gelfand AE, Dey DK, and Chang H. Model determination using predictive distributions, with implementation via sampling-based methods (with discussion). Bayesian Statistics 4, 4: 147–167, 1992. ISSN 01621459. [Google Scholar]

- Goodrich Ben, Gabry Jonah, Ali Imad, and Brilleman Sam. rstanarm: Bayesian applied regression modeling via Stan., 2018. URL http://mc-stan.org/.

- Goutis Constantinos and Robert Christian P.. Model choice in generalised linear models: A Bayesian approach via Kullback-Leibler projections. Biometrika, 85(1):29–37, 1998. ISSN 00063444. doi: 10.1093/biomet/85.1.29. [DOI] [Google Scholar]

- Hahn P. Richard and Carlos M Carvalho. Decoupling shrinkage and selection in bayesian linear models: A posterior summary perspective. Journal of the American Statistical Association, 110(509):435–448, 2015. ISSN 1537274X. doi: 10.1080/01621459.2014.993077. [DOI] [Google Scholar]

- Hahn P. Richard, He Jingyu, and Lopes Hedibert F.. Efficient Sampling for Gaussian Linear Regression With Arbitrary Priors. Journal of Computational and Graphical Statistics, 28 (1):142–154, 2019. ISSN 15372715. doi: 10.1080/10618600.2018.1482762. [DOI] [Google Scholar]

- Hastie Trevor, Tibshirani Robert, and Friedman Jerome. The Elements of Statistical Learning, volume 2. Springer, 2009. ISBN 0387848584. [Google Scholar]

- Hastie Trevor, Tibshirani Robert, and Tibshirani Ryan. Best Subset, Forward Stepwise or Lasso? Analysis and Recommendations Based on Extensive Comparisons. Statistical Science, 35(4):579–592, 2020. ISSN 0883–4237. [Google Scholar]

- Hosmer David W, Jovanovic Borko, and Lemeshow Stanley. Best subsets logistic regression. Biometrics, pages 1265–1270, 1989. ISSN 0006–341X. [Google Scholar]

- Huber Florian, Koop Gary, and Onorante Luca. Inducing Sparsity and Shrinkage in Time-Varying Parameter Models. Journal of Business and Economic Statistics, pages 1–48, 2020. ISSN 15372707. doi: 10.1080/07350015.2020.1713796. [DOI] [Google Scholar]