Abstract

Recent experimental developments in genome-wide RNA quantification hold considerable promise for systems biology. However, rigorously probing the biology of living cells requires a unified mathematical framework that accounts for single-molecule biological stochasticity in the context of technical variation associated with genomics assays. We review models for a variety of RNA transcription processes, as well as the encapsulation and library construction steps of microfluidics-based single-cell RNA sequencing, and present a framework to integrate these phenomena by the manipulation of generating functions. Finally, we use simulated scenarios and biological data to illustrate the implications and applications of the approach.


1. INTRODUCTION

In his classic systems biology textbook1, D. J. Wilkinson notes that “Improvements in experimental technology are enabling quantitative real-time imaging of expression at the single-cell level, and improvement in computing technology is allowing modelling and stochastic simulation of such systems at levels of detail previously impossible. The message that keeps being repeated is that the kinetics of biological processes at the intra-cellular level are stochastic, and that cellular function cannot be properly understood without building that stochasticity into in silico models”. From this perspective, systems biology studies control over randomness, and the ways in which living cells exploit variability to grow and function. Counterintuitively, this stochastic weltanschauung relies on mental models that are inherently deterministic: differentiation landscapes2–6, gene expression manifolds7, cellular state graphs8,9, gene regulatory networks10,11, and kinetic parameters12. Analysis of experimental data therefore requires reconciling underlying deterministic structure with biological stochasticity and experimental technical variability, or noise. In particular, distinguishing technical noise from biological stochasticity involves the statistical modeling of experimental readouts, expected noise sources, and the signal-to-noise ratio, and requires consideration of the theoretical and computational tractability of the model.

How can we model these features—latent deterministic structure, biological stochasticity, and technical noise—in a way that balances our models’ ability to adequately describe available data with our own ability to adequately understand the mathematical behavior and biological interpretation of our models? Answering this question is particularly challenging in the context of single-cell genomics, where datasets are large and sparse, the signal-to-noise ratio is low, and stochasticity is one of the defining features of the underlying biophysics13–15. Here, we explain why many naïve approaches to understanding the stochastic systems biology of single cells fall short, and describe a theoretical framework that can serve as an alternative. Our framework extends recent work on the mechanistic modeling of single-cell RNA count distributions16–21, and addresses both how models can be efficiently fit to single-cell data, and what features of the underlying biology we can hope to learn.

After introducing the general framework, we illustrate its consequences through a series of vignettes. In each case, we consider modeling particular aspects of biological and technical noise, and ask: (1) What do our models help us learn about the underlying biology? and (2) What could go wrong if we ignored these features of our data? We find that certain kinds of noise must be carefully modeled, others are poorly identifiable, and still others cannot be identified at all and can be safely ignored.

2. SYSTEMS BIOLOGY AND SINGLE-CELL GENOMICS

2.1. Standard approaches to systems biology

If an experiment has ample controls and provides a readout with a high signal-to-noise ratio in the relevant variables, coarse-grained, moment-based models can be ideal. For example, investigations of cell growth have effectively used least-squares regression to fit scaling relationships between cell volume and molecular abundance that hold on average22,23. Analogously, experiments leveraging the integration of multiple fluorescent reporters have successfully decomposed molecular noise sources into intrinsic and extrinsic components24, leading to numerous analytical25–28 and experimental29–31 extensions that leverage the lower moments of poorly-characterized biological drivers to describe or delimit the system variability. These approaches, which have found application to new experimental techniques, have origins in the Onsager and Langevin theories of the early twentieth century32, which specify the moment behaviors of near-equilibrium statistical thermodynamic systems using Gaussian terms.

Alongside studying biology on a gene-by-gene basis, considerable effort has been dedicated to the discovery of regulatory networks. This problem is considerably more challenging: the number of candidate network modules rapidly grows with the number and size of motifs of interest, and simple moment-based models risk distorting key qualitative features, such as multistability. From the perspective of statistics, network inference requires specifying or bypassing likelihood functions for joint gene expression, which may combine various noise sources in addition to the “signal” of regulation. Typical ways of addressing this challenge include33,34:

The purely descriptive approach, which interprets an expression correlation matrix as a graph, but does not provide an easily interpretable way to extract its “signal.”

Thresholding, which bins the unknown observed distribution to obtain a known, but lower-information distribution, as with binarization used to construct Boolean networks35 or implement the phixer algorithm36.

Distributional assertion, which fits static observations by assuming statistics or observations are Gaussian, as in a variety of popular Bayesian34, information-theoretic37, and regression-based38 methods; this assumption may39 or may not40 provide accurate results.

The dynamic approach, which fits a time-dependent trajectory to data by assuming Gaussian residuals; this assumption may reflect stochastic differential equation dynamics41 or isotropic observation noise added to a latent process42–44.

This overview is far from exhaustive, but it demonstrates a key theme: relatively robust signal, such as the lower moments or the absence/presence of gene expression, can be treated using fairly simple models that rely on highly optimized, well-understood methods and algorithms developed in the context of signal processing and dynamical systems analysis. Which simple model may perform best is not known a priori, and heavily depends on the task33. Ideally, methods are benchmarked on simulated39,45 or well-characterized “gold standard”33,46 datasets to glean partial insight about their performance and limitations. In this framework, improving the signal-to-noise ratio requires either designing more precise readouts or sacrificing a portion of the obtained data.

2.2. The challenge of single-cell data

Advances in sequencing technologies, most dramatically the rapid commercialization and adoption of single-cell RNA sequencing (scRNA-seq), which can profile millions of cells on a genome-wide scale47,48, have been heralded as a promising frontier for systems biology49–51. This potential is more striking yet due to simultaneous advances in multiomics, or the measurement of multiple modalities (transient and non-coding RNA species, DNA methylation, chromatin accessibility, surface protein abundance) in individual cells52,53, facilitating “integrated” analysis54–56. The “big data” from single-cell sequencing have thus served as substrate for a plethora of investigations which are, at first glance, analogous to the research program of systems biology at large: the identification of cell types; their aggregation into trajectories; the discovery of gene modules that consistently differ between cell types or throughout a differentiation trajectory; and the visualization of low-dimensional summaries reflecting some component of the data structure.

To identify these coarse-grained motifs in the structure of single-cell datasets, it is common practice to analyze cell–cell graphs, constructed from measures of expression similarity, to attempt to construct cliques (cell types), shortest paths (trajectories), and neighborhood-preserving low-dimensional embeddings (visualizations). In addition, relatively simple parametric distributions are widely used, with the Gaussian assumption popular for the lower moments (e.g., to compute measures of differential expression), and the lognormal or negative binomial used to describe count distributions57,58. Standard single-cell RNA sequencing data provide snapshots of processes, rendering dynamical analysis fairly complex, but it is common to fit a “pseudotemporal” curve through the dataset by minimizing a Gaussian error term between this curve and some transformation of the cells’ expression levels59,60.

Here, however, the underlying assumptions break down. Single-cell data are intrinsically and qualitatively different from readouts of typical systems biology experiments, with drastic implications for analysis. Single-cell data are large and sparse, with a preponderance of technical noise effects, poorly characterized batch- and gene-level biases, and low per-cell copy numbers13–15. Improving the signal-to-noise ratio by designing more targeted experiments is challenging, as commercial technology is designed to quantify molecules on a genome-wide scale. More problematically, typical distributional assumptions and data transformations risk losing a considerable amount of signal in the low-copy number regime. This challenge informs part of the broader discussion of the relative roles of data analysis and mechanistic hypotheses in genomics19,20,61, since analyses that are not constrained by mechanism or theory may contradict existing knowledge.

More specific critiques have considered whether various analyses are appropriate or excessively heavy-handed. For example, sparsity has led to ad hoc procedures to “correct” the data, which may in turn lead to incorrect conclusions62–64. Normalization and log-transformation, which attempt to remove technical biases and prepare the data for dimensionality reduction, rely on assumptions, such as high copy numbers and homogeneity, that are routinely violated in single-cell datasets65,66. Dimensionality reduction risks distorting both local and global relationships between data points19,67,68. Finally, the use of cell–cell graphs constructed from noisy data reifies relationships which may not reflect those in the original tissue, and risks introducing hard-to-diagnose errors into downstream analysis19,69. Although these issues span the entire process of analysis, all, at least partially, trace back to uncomfortable compromises in the treatment of uncertainty and variation in a regime unforgiving of approximations.

2.3. Stochastic modeling of intracellular network dynamics

Stochasticity is, then, mandatory, and we ignore it at our own risk. Therefore, we advocate for probabilistic alternatives to the “extraction” of signal from scRNA-seq datasets. Since biology is stochastic, the noise is the signal. To quantify and characterize the components of deterministic mental models—differentiation landscapes, kinetic parameters, and similar low-dimensional abstractions70—in a principled way, we need to combine them with stochastic terms which result from specific hypotheses about the underlying biophysics and chemistry20, or risk confirmation bias19.

The development of stochastic models offers advantages beyond loss function book-keeping. If multiomic data are available, there is typically a self-consistent way to extend the models accordingly71. Although likelihoods induced by stochastic processes are challenging to analyze and implement, they provide appealing statistical properties. When the data are sufficiently informative, full distributions provide better estimates than moments40. When they are not, probabilistic approaches are appropriately conservative, as they report, rather than elide, the parameter degeneracies. A thorough mathematical understanding of model behaviors—i.e., precisely which parameters are identifiable and which are degenerate, as well as how much data must be collected—enables the design of informative experiments20,72. Finally, the use of mechanistic models, parametrized by rate constants, allows us to draw conclusions about the mechanistic bases and effects of perturbations73.

These principles have guided fluorescence-based single-cell transcriptomics for nearly twenty years. To obtain as much information as possible from entire copy-number distributions40,74, the field has developed a considerable arsenal of theoretical tools75,76 and solution strategies77–79. It is, then, particularly natural to build scRNA-seq models that extend processes consistent with fluorescence imaging: this approach allows us to leverage existing theory, as well as encode the intuition that technology-dependent effects should be independent from biological ones. A particularly popular class of models involves the bursty production of RNA and its Markovian degradation73,80, which can be analyzed in the chemical master equation (CME) framework81,82. The key theoretical points have already been applied in the context of single-cell sequencing; for example, the Poisson, Poisson-gamma, and Poisson-beta distributions, which are common in sequencing analyses58,63,83,84, are three of the limiting distributions induced by this class of models20,80,85. However, this possible mechanistic basis is only rarely84,86–88 invoked in the development of analysis methods.

2.4. Outlook

Unfortunately, we cannot simply apply existing methods from fluorescence transcriptomics; the scale and chemistry of single-cell technologies create additional desiderata. General CME solutions are computationally prohibitive and challenging to scale to thousands of genes89, requiring careful study of narrow model classes with tractable solutions17,20. In addition, connecting biological models to observations requires explicitly representing the experimental process. The existing models for fluorescence data are sophisticated79, but cannot be directly applied to sequencing data. Although a variety of models have been proposed for technical noise in single-cell technologies13,14,90,91, their chemical foundations, as well as implications for biological parameter identifiability, have been understudied21.

In light of this lacuna, we seek to produce a mathematical framework that (1) integrates biological and technical variability in a coherent, modular way; (2) scales to large, multimodal data; (3) can be used to simulate datasets and make testable, quantitative predictions; and (4) affords a thorough mathematical analysis of its components, if not the entire model.

3. STOCHASTIC MODELING OF SINGLE-CELL BIOLOGY

Constructing a general-purpose framework for the stochastic modeling of single-cell biology necessitates working at a relatively high level of abstraction, since we would in principle like to account for a range of processes with one formalism. In this section, we motivate our abstract formalism using a collection of concrete, biologically relevant examples.

One of the simplest models of transcription is the constitutive model, which assumes RNA is produced at a constant rate20,92. It is defined by the chemical reactions

$$\varnothing \xrightarrow{k} X, \qquad X \xrightarrow{\gamma} \varnothing, \tag{6}$$

where $X$ is a single species of RNA, $k$ is the (constant) transcription rate, and $\gamma$ is the degradation rate. The CME that corresponds to this system is

$$\frac{dP(x,t)}{dt} = k\,P(x-1,t) + \gamma\,(x+1)\,P(x+1,t) - (k + \gamma x)\,P(x,t), \tag{7}$$

where $P(x,t)$ is the probability that the system has $x$ RNA at time $t$ (with $P(-1,t) \equiv 0$). Solving the above master equation allows us to compare its predictions with experimental scRNA-seq data. There are several theoretical approaches for doing this, including using a special ansatz85, the Poisson representation93, the Doi-Peliti path integral17,94–96, and operator techniques97, but we would like to highlight a straightforward method that we know works for far more general problems. The idea is to consider a certain transformed version of the probability distribution, which satisfies a partial differential equation (PDE) instead of a differential-difference equation. This PDE, for a large class of biologically relevant systems, can then be solved using the method of characteristics98, which converts the problem of solving a PDE into integrating a system of ordinary differential equations (ODEs). This is mathematically equivalent to using certain path integral methods17,20,99.

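Define the generating functions (GFs)

$$G(z,t) = \sum_{x=0}^{\infty} P(x,t)\,z^{x}, \qquad \psi(u,t) = \log G(u+1,t), \tag{8}$$

where $z$ is on the complex unit circle and $u = z - 1$. It is easy to show that $G$ and $\psi$ satisfy the PDEs

$$\frac{\partial G}{\partial t} = k\,(z-1)\,G - \gamma\,(z-1)\,\frac{\partial G}{\partial z}, \qquad \frac{\partial \psi}{\partial t} = k\,u - \gamma\,u\,\frac{\partial \psi}{\partial u}. \tag{9}$$

We can use the method of characteristics to find that

$$\psi(u,t) = \psi_0\big(U(t)\big) + k\int_0^t U(s)\,ds, \qquad \frac{dU}{ds} = -\gamma\,U, \tag{10}$$

where the ODE has initial condition $U(0) = u$, and where $\psi_0$ is the initial (log-) generating function of the system. In order to determine $P(x,t)$ from $G(z,t)$, we can use an inverse Fourier transform:

$$P(x,t) = \frac{1}{2\pi i}\oint \frac{G(z,t)}{z^{x+1}}\,dz = \frac{1}{2\pi}\int_0^{2\pi} G\big(e^{i\theta},t\big)\,e^{-ix\theta}\,d\theta,$$

where we integrate over all $z$ on the complex unit circle. In practice, this step is done numerically using an inverse fast Fourier transform.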
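As a minimal sketch of the whole pipeline for the constitutive model, the snippet below assumes the cell starts with zero RNA ($\psi_0 = 0$), so that the characteristic integral in Equation 10 has the closed form $\psi(u,t) = (k/\gamma)(1 - e^{-\gamma t})\,u$. It evaluates $G = e^{\psi}$ at $n$ equally spaced points on the unit circle and inverts it with an FFT. The function name `constitutive_pmf` and the default grid size are illustrative choices, not part of the original method; the grid must exceed the largest count with non-negligible probability, or the result aliases. NumPy's forward `np.fft.fft` uses the kernel $e^{-2\pi i m x/n}$, which is exactly the discretized form of the inversion integral above.

```python
import numpy as np


def constitutive_pmf(k, gamma, t, n_max=64):
    """P(x, t) for the constitutive model, assuming zero RNA at t = 0 (psi_0 = 0)."""
    # Grid z_m = exp(2 pi i m / n) on the unit circle, and u = z - 1
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    u = z - 1
    # Characteristic U(s) = u exp(-gamma s), so k * int_0^t U(s) ds is closed-form
    psi = k * u * (1 - np.exp(-gamma * t)) / gamma
    G = np.exp(psi)
    # P(x) = (1/n) sum_m G(z_m) exp(-2 pi i m x / n): NumPy's forward FFT kernel
    return np.real(np.fft.fft(G)) / n_max


p = constitutive_pmf(k=10.0, gamma=1.0, t=2.0)
```

Because $\psi$ is linear in $u$, the output should coincide with a Poisson distribution of mean $(k/\gamma)(1 - e^{-\gamma t})$, which makes a convenient sanity check for the numerical inversion.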

The constitutive model, which produces Poisson distributions at steady state, is too simple for single-cell biology20. Fortunately, the technique we have just described can be adapted to predict the behavior of substantially more complex models.

Multiple types of RNA.

One possible generalization of the constitutive model is to so-called monomolecular systems17,85, which allow phenomena like RNA splicing to be accommodated. An example is the addition of splicing to the constitutive model:

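$$\varnothing \xrightarrow{k} X_1, \qquad X_1 \xrightarrow{\beta} X_2, \qquad X_2 \xrightarrow{\gamma} \varnothing, \tag{11}$$

where $X_1$ and $X_2$ denote unspliced and spliced RNA, and $\beta$ is the splicing rate. In general, any number of production, conversion, and degradation reactions can be modeled:

$$\varnothing \xrightarrow{k_i} X_i, \qquad X_i \xrightarrow{\beta_{ij}} X_j, \qquad X_i \xrightarrow{\gamma_i} \varnothing, \qquad i, j = 1, \dots, n. \tag{12}$$

Using the same technique we described earlier, the probability $P(\mathbf{x},t)$ that the system is in state $\mathbf{x} = (x_1, \dots, x_n)$ at time $t$ can be shown to correspond to the generating function

$$\psi(\mathbf{u},t) = \psi_0\big(\mathbf{U}(t)\big) + \sum_{i=1}^{n} k_i \int_0^t U_i(s)\,ds, \qquad \frac{d\mathbf{U}}{ds} = A\,\mathbf{U}, \qquad \mathbf{U}(0) = \mathbf{u}, \tag{13}$$

where $\mathbf{u} = \mathbf{z} - \mathbf{1}$, and the matrix $A$ is defined via

$$A_{ij} = \beta_{ij} - \delta_{ij}\Big(\gamma_i + \sum_{l=1}^{n} \beta_{il}\Big), \tag{14}$$

and where $\beta_{ii} = 0$ by convention.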
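For the splicing model, the matrix of Equation 14 is $A = \begin{pmatrix} -\beta & \beta \\ 0 & -\gamma \end{pmatrix}$, so the characteristics are $\mathbf{U}(s) = e^{As}\mathbf{u}$ and, for an initially empty cell, $\psi(\mathbf{u},t) = \mathbf{k}^\top A^{-1}(e^{At} - I)\,\mathbf{u}$ with $\mathbf{k} = (k, 0)$. The sketch below assumes $\beta, \gamma > 0$ (so that $A$ is invertible), evaluates this expression with `scipy.linalg.expm` on a two-dimensional grid of the unit torus, and applies a 2D FFT to recover the joint distribution of unspliced and spliced counts. The function name and grid size are hypothetical.

```python
import numpy as np
from scipy.linalg import expm


def splicing_pmf(k, beta, gamma, t, n_max=64):
    """Joint P(x1, x2, t) for 0 -> X1 -> X2 -> 0, assuming no molecules at t = 0."""
    # Matrix A of Equation 14 for the splicing model
    A = np.array([[-beta, beta],
                  [0.0, -gamma]])
    kvec = np.array([k, 0.0])  # production only into the unspliced species
    # int_0^t exp(A s) ds = A^{-1} (exp(A t) - I), valid because A is invertible
    M = np.linalg.solve(A, expm(A * t) - np.eye(2))
    coef = kvec @ M  # psi(u, t) = coef . u, linear in u
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    u1, u2 = np.meshgrid(z - 1, z - 1, indexing="ij")
    G = np.exp(coef[0] * u1 + coef[1] * u2)
    # 2D inversion: axis 0 indexes x1 (unspliced), axis 1 indexes x2 (spliced)
    return np.real(np.fft.fft2(G)) / n_max**2


P = splicing_pmf(k=10.0, beta=2.0, gamma=1.0, t=50.0)
```

Since $\psi$ is linear in $\mathbf{u}$ here, the joint distribution is a product of Poisson distributions; at long times their means approach $k/\beta$ and $k/\gamma$.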

Multiple gene states.

Although the monomolecular model is a step forward, it still does not account for nontrivial transcription rate dynamics. One possibility is that there are multiple gene states, as in the telegraph model76,97,100:

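$$\mathrm{off} \xrightarrow{k_{\text{on}}} \mathrm{on}, \qquad \mathrm{on} \xrightarrow{k_{\text{off}}} \mathrm{off}, \qquad \mathrm{on} \xrightarrow{k_{\text{init}}} \mathrm{on} + X, \qquad X \xrightarrow{\gamma} \varnothing. \tag{15}$$

Writing $P_{\text{off}}(x,t)$ and $P_{\text{on}}(x,t)$ for the probabilities of observing $x$ RNA while the gene is off or on, and introducing gene-state variables $v_0$ and $v_1$, the corresponding three-variable generating function is

$$G(v_0, v_1, z, t) = \sum_{x=0}^{\infty} \big[v_0\,P_{\text{off}}(x,t) + v_1\,P_{\text{on}}(x,t)\big]\,z^{x} = G_0\big(V_0(t), V_1(t), 1 + U(t)\big), \tag{16}$$

where $G_0$ is the initial generating function, and the characteristics satisfy $dV_0/ds = k_{\text{on}}(V_1 - V_0)$, $dV_1/ds = k_{\text{off}}(V_0 - V_1) + k_{\text{init}}\,U V_1$, and $dU/ds = -\gamma U$, with $V_0(0) = v_0$, $V_1(0) = v_1$, and $U(0) = z - 1$. If we want to marginalize over gene state, which we usually do since it is not observable, we can set $v_0 = v_1 = 1$. Notice that the relevant ODEs are now nonlinear (Riccati-type), which makes them difficult to solve by hand. In general, considering multiple gene states, or other kinds of added complexity like autocatalytic reactions, yields nonlinear characteristic ODEs. This is no obstacle for numerical integration, however.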
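To make the last point concrete, the sketch below integrates nonlinear characteristics for the telegraph model numerically. It assumes the gene-state formulation $G(v_0, v_1, z, t) = \sum_x [v_0 P_{\text{off}}(x,t) + v_1 P_{\text{on}}(x,t)]\,z^x$, whose characteristics obey $dV_0/ds = k_{\text{on}}(V_1 - V_0)$, $dV_1/ds = k_{\text{off}}(V_0 - V_1) + k_{\text{init}} U V_1$, and $dU/ds = -\gamma U$. It further assumes the cell starts in the off state with no RNA, so that $G_0 = v_0$, and it sets $v_0 = v_1 = 1$ to marginalize over gene state. The use of `scipy.integrate.solve_ivp` (which accepts complex initial states for explicit Runge-Kutta methods), the tolerances, and the names are illustrative choices.

```python
import numpy as np
from scipy.integrate import solve_ivp


def telegraph_pmf(k_on, k_off, k_init, gamma, t, n_max=128):
    """Marginal P(x, t) for the telegraph model, assuming gene off and no RNA at t = 0."""
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    u = z - 1

    def rhs(s, y):
        v0, v1 = y[:n_max], y[n_max:]
        U = u * np.exp(-gamma * s)  # dU/ds = -gamma U, solved in closed form
        dv0 = k_on * (v1 - v0)
        dv1 = k_off * (v0 - v1) + k_init * U * v1  # bilinear term: nonlinear system
        return np.concatenate([dv0, dv1])

    # v0 = v1 = 1 marginalizes over gene state; complex dtype enables complex integration
    y0 = np.ones(2 * n_max, dtype=complex)
    sol = solve_ivp(rhs, (0.0, t), y0, method="RK45", rtol=1e-9, atol=1e-11)
    G = sol.y[:n_max, -1]  # G_0(v0, v1, z) = v0, so G(1, 1, z, t) = V0(t)
    return np.real(np.fft.fft(G)) / n_max


P = telegraph_pmf(k_on=0.5, k_off=1.0, k_init=20.0, gamma=1.0, t=30.0)
```

At long times the mean of the result should approach $k_{\text{init}}k_{\text{on}}/[\gamma(k_{\text{on}} + k_{\text{off}})]$, and the distribution should approach the familiar Poisson-beta steady state of the telegraph model.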

Gene regulation.

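Another possibility we would like to account for is nontrivial gene regulation. In previous work20, we considered two models of transcription rate variation: the gamma Ornstein–Uhlenbeck (Γ-OU) model, which assumes variation is due to changes in the mechanical state of DNA; and the Cox–Ingersoll–Ross (CIR) model, which assumes it is due to fluctuations in the concentration of an abundant regulator molecule. Analyzing them can be mathematically challenging, since the discrete stochastic dynamics of RNA production and degradation are coupled to the continuous stochastic process that controls the transcription rate. Fortunately, both models and many generalizations of them can be solved using the method of characteristics. For example, the CIR model (assuming two RNA species) is defined by a stochastic differential equation (SDE)81 and three reactions:

$$dK_t = \kappa\,(a\theta - K_t)\,dt + \sqrt{2\kappa\theta K_t}\,dW_t, \qquad \varnothing \xrightarrow{K_t} X_1, \qquad X_1 \xrightarrow{\beta} X_2, \qquad X_2 \xrightarrow{\gamma} \varnothing, \tag{17}$$

where $K_t$ is the transcription rate, $W_t$ is a standard Wiener process, $\kappa$ is the mean-reversion rate, and $a$ and $\theta$ are the shape and scale of the stationary gamma distribution of $K_t$. At steady state, after marginalizing over $K_t$, its solution is20

$$\psi(\mathbf{u}) = \kappa a \theta \int_0^{\infty} \phi(s)\,ds, \qquad \frac{d\phi}{ds} = U_1 - \kappa\,\phi + \kappa\theta\,\phi^2, \qquad \phi(0) = 0, \tag{18}$$

where $U_1$ and $U_2$ satisfy $dU_1/ds = \beta\,(U_2 - U_1)$ and $dU_2/ds = -\gamma U_2$, with $\mathbf{U}(0) = \mathbf{u}$.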

Thus, it is straightforward to couple dynamics defined on different types of state spaces: categorical (e.g., gene states), continuous (e.g., transcription rates), and discrete (e.g., RNA counts), using the generating function approach. In all cases, one obtains a generating function solution in terms of a finite set of (possibly nonlinear) ODEs. The total number of ODEs is equal to the total number of degrees of freedom.
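To illustrate how a continuous degree of freedom simply adds one more characteristic, the sketch below evaluates a stationary CIR solution for the unspliced marginal. It assumes the rate dynamics $dK = \kappa(a\theta - K)\,dt + \sqrt{2\kappa\theta K}\,dW$ and the Riccati characteristic $d\phi/ds = U_1 - \kappa\phi + \kappa\theta\phi^2$, $\phi(0) = 0$, with $\psi = \kappa a\theta \int_0^\infty \phi\,ds$. Setting $u_2 = 0$ marginalizes over spliced RNA, so $U_1(s) = u_1 e^{-\beta s}$. The integral is accumulated alongside $\phi$ up to a finite cutoff `s_max`, assumed long enough for the characteristics to decay; the names, tolerances, and cutoff are hypothetical choices.

```python
import numpy as np
from scipy.integrate import solve_ivp


def cir_unspliced_pmf(kappa, a, theta, beta, n_max=128, s_max=60.0):
    """Stationary unspliced-RNA P(x) under a CIR-modulated transcription rate (sketch)."""
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    u1 = z - 1  # u2 = 0 marginalizes over spliced RNA, so U2(s) = 0

    def rhs(s, y):
        phi = y[:n_max]
        U1 = u1 * np.exp(-beta * s)  # dU1/ds = -beta U1 when U2 = 0
        dphi = U1 - kappa * phi + kappa * theta * phi**2  # Riccati characteristic
        return np.concatenate([dphi, phi])  # second block accumulates int_0^s phi

    y0 = np.zeros(2 * n_max, dtype=complex)
    sol = solve_ivp(rhs, (0.0, s_max), y0, rtol=1e-9, atol=1e-12)
    psi = kappa * a * theta * sol.y[n_max:, -1]
    return np.real(np.fft.fft(np.exp(psi))) / n_max


P = cir_unspliced_pmf(kappa=1.0, a=3.0, theta=2.0, beta=1.0)
```

As a check, the mean should be $a\theta/\beta$ and the variance $a\theta/\beta + a\theta^2/[\beta(\beta + \kappa)]$, the standard result for a Poisson process driven by a rate whose autocorrelation decays exponentially at rate $\kappa$.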

One feature of single-cell biology that is challenging to capture using this approach is feedback. For example, proteins expressed by a gene may affect the transcription rate of that gene. Although exact solutions for systems involving feedback are available in certain simple cases101–104, particularly when there is only one chemical species, more general results have proven elusive. From the point of view of our approach, including chemical reactions that involve feedback yields generating function PDEs which are not first order, and cannot be solved in terms of ODEs via the method of characteristics (as explored in more detail in the supplemental information).

Transient effects.

In the context of development or reprogramming, we are especially interested in using single-cell genomics data to study transient processes. In particular, certain cell types or subtypes (like neural progenitor cells) only exist for a certain window of time, and by collecting single-cell data we are taking a snapshot of many cells, each of which may be in a different part of the process. How does this affect observed RNA counts?

Different cells being observed at different times means we are not interested in $P(x,t)$ itself, but in $P(x,t)$ averaged over some distribution $f(t)$ that indicates how likely we are to sample different times. The shape of the sampling distribution depends on when cells tend to exit a given state (e.g., by differentiating into a different cell type). Nontrivial sampling distributions are compatible with our generating function approach, since averaging a distribution over $t$ amounts to averaging its generating function. For a model with one discrete species, we can write the full generating function as

$$G(z) = \int_0^{\infty} f(t)\,G(z,t)\,dt,$$

i.e., we can obtain it by integrating the generating function that captures intrinsic noise against the sampling distribution.
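As a hedged illustration, suppose (hypothetically) that cells leave the state at a constant rate $\lambda$, so that the sampled age is exponential, $f(t) = \lambda e^{-\lambda t}$, and that each cell follows the constitutive model from zero RNA at $t = 0$. After the substitution $x = \lambda t$, the average over $f$ can be computed with Gauss-Laguerre quadrature (`numpy.polynomial.laguerre.laggauss`, which integrates against $e^{-x}$), followed by the same FFT inversion as before. The function name and node count are illustrative.

```python
import numpy as np


def age_averaged_pmf(k, gamma, lam, n_max=64, n_nodes=60):
    """Constitutive-model P(x) averaged over a hypothetical age density lam * exp(-lam t)."""
    # Gauss-Laguerre rule: int_0^inf e^{-x} g(x) dx ~ sum_i w_i g(x_i)
    nodes, weights = np.polynomial.laguerre.laggauss(n_nodes)
    t = nodes / lam  # change of variables x = lam * t
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    u = z - 1
    # G(z, t) for each quadrature age (rows) and grid point (columns), with psi_0 = 0
    G_t = np.exp(np.outer(1 - np.exp(-gamma * t), k * u / gamma))
    G = weights @ G_t  # quadrature of int f(t) G(z, t) dt
    return np.real(np.fft.fft(G)) / n_max


P = age_averaged_pmf(k=10.0, gamma=1.0, lam=1.0)
```

The result is a mixture of Poisson distributions; its mean should equal $\int_0^\infty f(t)\,(k/\gamma)(1 - e^{-\gamma t})\,dt = k/(\lambda + \gamma)$.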

Technical noise.

In single-cell genomics experiments, we do not directly observe a given cell’s RNA counts, but those numbers filtered through a noisy sequencing process21. In microfluidics-based sequencing, noise can come from some combination of droplets not capturing all molecules (especially types of RNA with low copy numbers), errors in amplification, and reads not being uniquely identifiable. We would like to account for these kinds of technical noise in a way that is both principled, and compatible with our generating function approach to modeling intrinsic noise.

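Consider a simple example, in which the relevant biology is described by the one-species constitutive model (Equation 7), and each RNA molecule is observed independently with probability $p$. The probability $P_{\text{obs}}(y)$ of observing $y$ molecules of RNA, given a biological distribution $P(x)$, is

$$P_{\text{obs}}(y) = \sum_{x=y}^{\infty} \binom{x}{y}\,p^{y}(1-p)^{x-y}\,P(x). \tag{19}$$

The corresponding generating function is

$$G_{\text{obs}}(z) = \sum_{y=0}^{\infty} P_{\text{obs}}(y)\,z^{y} = \sum_{x=0}^{\infty} P(x)\,(1 - p + pz)^{x} = G\big(1 + p(z-1)\big), \qquad \psi_{\text{obs}}(u) = \psi(pu), \tag{20}$$

i.e., the result is the same as without technical noise, except that $u$ is replaced by $pu$. In general, including technical noise requires us to replace the usual factor $z^x$ with $G_{\text{tech}}(z \mid x) = \sum_{y} P(y \mid x)\,z^{y}$, the generating function associated with the observation model:

$$G_{\text{obs}}(z) = \sum_{x=0}^{\infty} P(x)\,G_{\text{tech}}(z \mid x). \tag{21}$$

For certain common observation models, like the Bernoulli model just described, or a Poisson noise model, we can say more: since

$$G_{\text{tech}}(z \mid x) = \big[h(z)\big]^{x} \tag{22}$$

for some function $h$ (e.g., $h(z) = 1 - p + pz$ for Bernoulli sampling, or $h(z) = e^{\lambda(z-1)}$ if each molecule independently yields a Poisson number of observations with mean $\lambda$), including technical noise amounts to replacing $z$ with $h(z)$, so that $G_{\text{obs}}(z) = G\big(h(z)\big)$ is a composition of generating functions. We typically assume that all technical noise models satisfy Equation 22 for some $h$.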
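Because Equation 22 reduces technical noise to the substitution $z \to h(z)$, observation models can be swapped as plain functions. The sketch below assumes a Poisson biological distribution (the constitutive steady state) and compares Bernoulli capture, $h(z) = 1 - p + pz$, with one possible Poisson noise model, $h(z) = e^{\lambda(z-1)}$. All function names are hypothetical.

```python
import numpy as np


def pmf_from_gf(gf, n_max):
    """Invert a univariate probability generating function sampled on the unit circle."""
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    return np.real(np.fft.fft(gf(z))) / n_max


def poisson_gf(mu):
    return lambda z: np.exp(mu * (z - 1))


def bernoulli_capture(p):
    # each molecule is observed independently with probability p
    return lambda z: 1 - p + p * z


def poisson_noise(lam):
    # each molecule yields an independent Poisson(lam) number of observations
    return lambda z: np.exp(lam * (z - 1))


def observed_pmf(bio_gf, h, n_max=256):
    """Equation 22: technical noise acts by composing the biological GF with h."""
    return pmf_from_gf(lambda z: bio_gf(h(z)), n_max)


P_bern = observed_pmf(poisson_gf(20.0), bernoulli_capture(0.3))
P_pois = observed_pmf(poisson_gf(20.0), poisson_noise(2.0))
```

Bernoulli thinning of a Poisson distribution with mean $\mu$ yields a Poisson distribution with mean $p\mu$, whereas Poisson noise yields a Neyman type A distribution with mean $\lambda\mu$; both facts give easy sanity checks on the composition.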

4. RESULTS

4.1. Theoretical framework for stochastic systems biology

We are ready to present our general framework for stochastic systems biology, which accommodates all of the sources of stochasticity described in the preceding section: intrinsic noise, transient effects, and technical noise. In order to balance the amount of biology our models can capture with the mathematical tractability of those models, we restrict our analysis to a fairly general class of systems that can be solved using the method of characteristics. For such systems, we can obtain likelihoods by integrating characteristic ODEs, using the obtained characteristics to construct the generating function, and then doing an inverse (fast) Fourier transform.

This class of systems permits gene state interconversion, as well as the production and processing of RNA and proteins, which could be treated as discrete or continuous variables depending on their concentration. We allow zero- and first-order reactions, including state-dependent bursting, interconversion, degradation, and catalysis. However, we disallow higher-order reactions (e.g., binding reactions such as $X_1 + X_2 \rightarrow X_3$), including feedback-based regulation like protein-promoter binding. Therefore, our analysis focuses on Markovian systems that possess categorical degrees of freedom, corresponding to gene states; discrete ones, corresponding to low-copy number molecular species; and continuous ones, corresponding to transcription rates or high-concentration species. This class of reactions is schematically represented in Figure 1a; crucially, it consists of distinct “upstream” and “downstream” components.

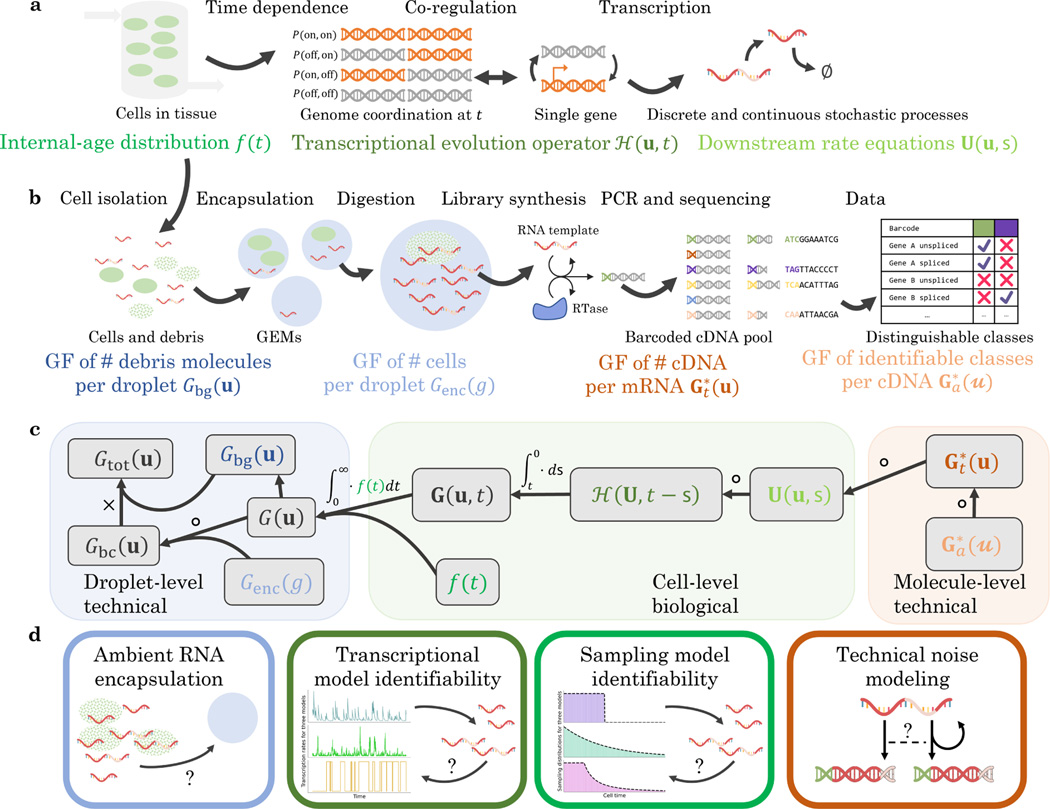

Figure 1.

The biophysical and chemical phenomena of interest, as well as the relationships between their generating functions.

a. The biological phenomena of interest: cell influx and efflux into a tissue observed by sequencing; the time-dependent transcriptional regulation of one or more genes; downstream continuous and discrete processes.

b. The technical phenomena of interest: the encapsulation of cells and cell debris; cDNA library construction; the loss of information in transcript identification (GF: generating function; RTase: reverse transcriptase).

c. The structure of the full generating function of the system in a and b: to obtain the solution, we variously compose, integrate, and multiply the generating functions of the constituent processes.

d. The stochastic and statistical properties of four components of the full system: the background debris, the transcriptional regulation, the cell/tissue relationship, and the technical noise mechanism.

Given all of a model’s possible reactions, one can write down a corresponding master equation that keeps track of how microstate probabilities change with time:

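$$\frac{\partial P(g,\mathbf{x},\mathbf{y},t)}{\partial t} = \mathcal{F}[P](g,\mathbf{x},\mathbf{y},t), \tag{27}$$

where each microstate consists of $g$, the categorical dimension; $\mathbf{x}$, the discrete dimensions; and $\mathbf{y}$, the continuous dimensions. The generally complicated function $\mathcal{F}$ aggregates all reaction rates. Master equations like Equation 27 typically consist of an infinite system of coupled ODEs, and hence are difficult to solve in general. This is one reason we chose a particular class of systems: to solve Equation 27 using the method of characteristics, and hence determine a given model’s predictions, all we need to do is solve (a finite number of) ODEs satisfied by the characteristics and GF.

The $N$-dimensional generating function $\mathbf{G} = (G_1, \dots, G_N)$ of the system, with one component per gene state, is a function of spectral variables $\mathbf{u}$ and $\mathbf{w}$, and is defined by

$$G_g(\mathbf{u},\mathbf{w},t) = \sum_{\mathbf{x}} \int P(g,\mathbf{x},\mathbf{y},t) \prod_i (u_i + 1)^{x_i}\,e^{\mathbf{w}\cdot\mathbf{y}}\,d\mathbf{y}, \qquad g = 1, \dots, N. \tag{28}$$

Equation 27 can be converted into a PDE satisfied by $\mathbf{G}$:

$$\frac{\partial \mathbf{G}}{\partial t} = Q(t)\,\mathbf{G} + \boldsymbol{\Phi}(\mathbf{v},t) \circ \mathbf{G} + J\,\big[C\,\mathbf{v} + D\,(\mathbf{v} \otimes \mathbf{v})\big], \tag{29}$$

where $\circ$ is the Hadamard/elementwise matrix product, $J = \partial\mathbf{G}/\partial\mathbf{v}$ is the Jacobian matrix of the generating function, and $\mathbf{v} = (\mathbf{u},\mathbf{w})$ combines the discrete and continuous degrees of freedom. The time-dependent matrix $Q(t)$ contains the kinetics of state transitions, whereas the operator $\boldsymbol{\Phi}$ describes the drift and bursty production processes, which may depend on state. Therefore, the operator $Q(t) + \operatorname{diag}\boldsymbol{\Phi}(\mathbf{v},t)$ aggregates the upstream components of the system. The matrix $C$ contains interconversion, degradation, and mean reversion-like terms, whereas $D$ contains the catalysis and square-root noise terms. $Q$, $\boldsymbol{\Phi}$, $C$, and $D$ encode a quasi-linear, deterministic, and first-order $N$-component system of partial differential equations in spectral variables.

Applying the method of characteristics to solve Equation 29 tells us that the downstream part of the system is fully determined by a set of characteristics $\mathbf{V}(s)$, which are defined by the ODEs

$$\frac{d\mathbf{V}}{ds} = C\,\mathbf{V} + D\,(\mathbf{V} \otimes \mathbf{V}), \qquad \mathbf{V}(0) = \mathbf{v}, \tag{30}$$

where $s$ is an integration variable, and the initial condition $\mathbf{v}$ is the point at which the generating function is evaluated. Meanwhile, the generating function can be determined from

$$\frac{d\mathbf{g}}{d\tau} = \Big[Q(\tau) + \operatorname{diag}\boldsymbol{\Phi}\big(\mathbf{V}(t-\tau),\tau\big)\Big]\,\mathbf{g}, \qquad \mathbf{G}(\mathbf{v},t) = \mathbf{g}(t), \tag{31}$$

which has initial condition $\mathbf{g}(0) = \mathbf{G}_0\big(\mathbf{V}(t)\big)$, where $\mathbf{G}_0$ is the generating function of the initial distribution. The upstream components describe how molecule production occurs, and hence depend on $\mathbf{V}$; their influence on the final answer is through Equation 31, which accumulates them along the characteristics.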

The detailed form of Equation 27 is complicated, and the arithmetic exercise of converting it into Equation 29 is tedious. We show how to construct the biological master equation in Section 6.1, write it out in full in Section 6.2, and discuss at a high level how to solve it using our generating function approach in Section 6.3. The terms of the full master equation are annotated in Table S1, and the solution process is described in more detail in supplemental information.

In special cases, the ODEs we obtain can be solved exactly. For example, whenever the quadratic (catalysis and square-root noise) terms vanish, the downstream ODE system is linear and can be solved analytically by eigendecomposition. If, in addition, only a single gene state is present, the state-transition term vanishes and the upstream component can be evaluated by numerical integration16. Finally, in the simplest case of an upstream operator that is linear in the spectral variables, we obtain an analytically tractable system equivalent to a deterministic system of reaction rate equations17,85.
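A minimal sketch of the second special case, under stated assumptions: a single gene state; a linear downstream system $d\mathbf{V}/ds = C\mathbf{V}$ solved by eigendecomposition (assuming $C$ is diagonalizable); and, as a hypothetical upstream term, geometrically distributed bursts of mean size $b$ arriving at rate $k$ into species 1, so that the upstream contribution is $k[B(V_1) - 1]$ with burst generating function $B(z) = 1/[1 - b(z-1)]$. The stationary log-GF is then the one-dimensional integral $\psi(\mathbf{u}) = \int_0^\infty k[B(V_1(s)) - 1]\,ds$, evaluated here with `scipy.integrate.quad` on its real and imaginary parts. Function names are illustrative.

```python
import numpy as np
from scipy.integrate import quad


def downstream_characteristics(C, v):
    """Return s -> V(s) solving dV/ds = C V, V(0) = v, via eigendecomposition."""
    evals, evecs = np.linalg.eig(C)  # assumes C is diagonalizable
    coeffs = np.linalg.solve(evecs, v)  # expand v in the eigenbasis
    return lambda s: evecs @ (coeffs * np.exp(evals * s))


def bursty_log_gf(u, k, b, C):
    """Stationary log-GF for one gene state with hypothetical geometric bursts into species 1."""
    V = downstream_characteristics(C, np.asarray(u, dtype=complex))

    def integrand(s):
        # k (B(V_1) - 1) with B(z) = 1 / (1 - b (z - 1)), written in u-coordinates
        return k * (1.0 / (1.0 - b * V(s)[0]) - 1.0)

    re, _ = quad(lambda s: integrand(s).real, 0.0, np.inf)
    im, _ = quad(lambda s: integrand(s).imag, 0.0, np.inf)
    return re + 1j * im


C = np.array([[-2.0, 2.0],   # splicing-model matrix with beta = 2, gamma = 1
              [0.0, -1.0]])
val = bursty_log_gf([np.exp(0.3j) - 1, 0.0], k=3.0, b=4.0, C=C)
```

For the unspliced marginal ($u_2 = 0$), $V_1(s) = u_1 e^{-\beta s}$ and the integral reduces to the negative binomial log-GF $-(k/\beta)\log(1 - b u_1)$, which provides a closed-form check on the numerics.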

Although this formulation nominally describes a single gene, it may be exploited to represent multi-gene systems. Conceptually, this strategy entails constructing a model where the transcription of multiple species is controlled by a common regulator. We discuss potential candidate models in Section 6.4; these models instantiate hypotheses to produce state-transition kinetics and upstream production terms that represent co-regulation.

To explain the observation of transient processes, such as the simultaneous capture of progenitor and descendant cells from a differentiation process, we take inspiration from previous work in sequencing86 as well as chemical reactor modeling105,106, and extend the theoretical framework originally proposed in our recent RNA velocity analysis19. In brief, the simplest model that accounts for such desynchronization proposes that cells enter a tissue, receive a signal that triggers changes in transcriptional rates, and leave at some later point. Sequencing is the observation of cells within the tissue; to find the distribution of RNA counts, we need to condition on the distribution of times since receiving the signal.

As we discuss in Section 6.5, this latter distribution is not arbitrary, and reflects the kinetics of cell entry and exit. In the parlance of chemical reaction engineering, the times are drawn from $f(t)$, the internal-age distribution induced by those kinetics105,106. This model affords a particularly simple representation of the generating function:

$$G(\mathbf{v}) = \int_0^{\infty} f(t) \sum_{g=1}^{N} G_g(\mathbf{v},t)\,dt, \tag{32}$$

where we marginalize over the gene state, which is typically not observable. Conveniently, this model possesses time symmetry: even though the cells within the tissue are all out of equilibrium, the tissue as a whole is at steady state.

We consider the technical noise phenomena shown in Figure 1b, i.e., the encapsulation of cells and background debris into droplets, as well as the stochasticity in cDNA library construction and sequencing. Under the assumption of independent encapsulation, the generating function of molecule count distributions on a per-droplet level takes the following form:

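$$G_{\text{droplet}}(\mathbf{z}) = G_{\text{cells}}\big(G(\mathbf{z})\big)\,G_{\text{bg}}(\mathbf{z}), \tag{33}$$

where $G(\mathbf{z})$ is the per-cell generating function of Equation 32, $G_{\text{cells}}$ is the generating function of the number of cells per droplet, and $G_{\text{bg}}$ is the generating function of background molecules per droplet, which depends on the entire cell population (Section 6.6). Finally, to represent sequencing variability and uncertainty, we evaluate the generating function at a set of transformed coordinates:

$$G_{\text{obs}}(\mathbf{z}) = G_{\text{droplet}}(\tilde{\mathbf{z}}), \qquad \tilde{z}_i = B\big(A_i(\mathbf{z})\big), \tag{34}$$

where $B$ reflects the distribution of cDNA produced per molecule of RNA (e.g., Bernoulli, as in Tang et al.107,108), whereas $A_i$ reflects the distribution of ambiguous sequenced fragments, which depends on transformed variables (Section 6.7 and supplemental information).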
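To see how encapsulation and sequencing compose, the sketch below assumes a deliberately simple, hypothetical set of component models for a single gene: Poisson cell loading with mean $\lambda$ cells per droplet, Poisson RNA counts with mean $\mu$ per cell, Poisson ambient molecules with mean $b$ per droplet (ignoring the dependence of the background on the cell population), and Bernoulli capture with probability $p$ as the only sequencing transformation, so that $\tilde{z} = 1 - p + pz$. None of these choices is claimed to be the model used in the text.

```python
import numpy as np


def droplet_pmf(lam_cells, mu_cell, b_bg, p_capture, n_max=256):
    """Observed per-droplet count distribution for one gene under hypothetical components."""
    z = np.exp(2j * np.pi * np.arange(n_max) / n_max)
    zt = 1 - p_capture + p_capture * z  # transformed coordinate (Bernoulli capture)

    def G_cell(s):   # Poisson RNA counts per cell
        return np.exp(mu_cell * (s - 1))

    def G_cells(s):  # Poisson number of cells per droplet
        return np.exp(lam_cells * (s - 1))

    def G_bg(s):     # Poisson ambient molecules per droplet
        return np.exp(b_bg * (s - 1))

    # Encapsulation (cells composed with per-cell GF, times background), then sequencing
    G_obs = G_cells(G_cell(zt)) * G_bg(zt)
    return np.real(np.fft.fft(G_obs)) / n_max


P = droplet_pmf(lam_cells=0.1, mu_cell=50.0, b_bg=2.0, p_capture=0.5)
```

Under these assumptions the mean count is $p(\lambda\mu + b)$ and the probability of observing zero molecules is $\exp\{\lambda(e^{-p\mu} - 1) - pb\}$; with a small loading rate $\lambda$, most droplets are empty but still carry ambient counts.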

The full generating function of the molecule distribution is given by the composition and integration of the model components, as shown in Figure 1c. To evaluate this generating function, it is necessary to specify all components that make up the model. In the analysis below, we take advantage of the modularity of the system definition to investigate four kinds of modeling choices, their statistical implications, and their compatibility with sequencing data. Specifically, we treat the subsystems illustrated in Figure 1d: background noise in single droplets, stochastic transcription rate models, sampling from a transient process, and variation in technical noise.

4.2. Empty droplets

One of the first steps in scRNA-seq data analysis is cell quality control, which excludes from further analysis the cell barcodes that appear to originate from empty droplets57. For computational tractability, this procedure typically relies on “hard” assignment: barcodes associated with a total molecule count above some threshold are treated as cells, whereas barcodes below the threshold are treated as empty droplets. A threshold is necessary because even “empty” droplets contain ambient RNA. This ambient RNA, which appears to originate from cells lysed in the preparation process, contaminates empty and cell-containing droplets alike57.

The observation of ambient RNA resulting in unwanted molecule counts has led to the development of statistical methods for removing this source of noise, either by estimating and subtracting it109 or by incorporating it into a stochastic model110–112. Conceptually, Equation 33 reflects the latter approach: each droplet contains a random number of cells, each described by the biological generating function, as well as background molecules, whose generating function depends on the biological one. To accurately model the background counts, we need to propose and justify a specific functional form for the background generating function. Fortunately, empty droplets offer a direct view of this background: under the assumption that empty and cell-containing droplets are similarly susceptible to contamination, the former provide a reasonable estimate of ambient distributions in the latter109.

The simplest model holds the background to be equivalent to a “pseudo-bulk” experiment, with molecules randomly sampled from the lysed cell population. If each cell is equally likely to contribute to the pool of free RNA, and molecules diffuse into droplets by a simple independent arrival process, the background distribution should be Poisson, with the mean for each species proportional to its mean in the original cell population, as in, e.g., Fleming et al.110 This functional form immediately induces a set of testable predictions: the distributions are not only Poisson but mutually independent Poisson, with no meaningful statistical structure remaining between the transcripts of a single gene or between different genes, as illustrated in Fig. 2a.
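The pseudo-bulk predictions can be checked on simulated data before turning to real datasets. In this sketch, the per-gene means, the dilution factor, and the number of droplets are all hypothetical. Each simulated empty droplet receives independent Poisson counts with means proportional to the population means. Under this model the per-gene Fano factors should sit near 1 and the pairwise correlations near 0, which is the behavior Figure 2 tests. The columns could equally stand for the nascent and mature species of one gene.

```python
import numpy as np

rng = np.random.default_rng(0)

n_genes, n_empty = 50, 20_000
# Hypothetical per-gene means in cell-containing droplets.
cell_means = rng.lognormal(mean=1.0, sigma=1.0, size=n_genes)
# Pseudo-bulk assumption: ambient mean proportional to the population mean.
ambient_scale = 0.2                     # hypothetical dilution factor
ambient_means = ambient_scale * cell_means

# Each empty droplet receives independent Poisson counts for every gene.
empty = rng.poisson(ambient_means, size=(n_empty, n_genes))

fano = empty.var(axis=0, ddof=1) / empty.mean(axis=0)
corr = np.corrcoef(empty, rowvar=False)
off_diagonal = corr[~np.eye(n_genes, dtype=bool)]

print(np.median(fano), np.abs(off_diagonal).max())
```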

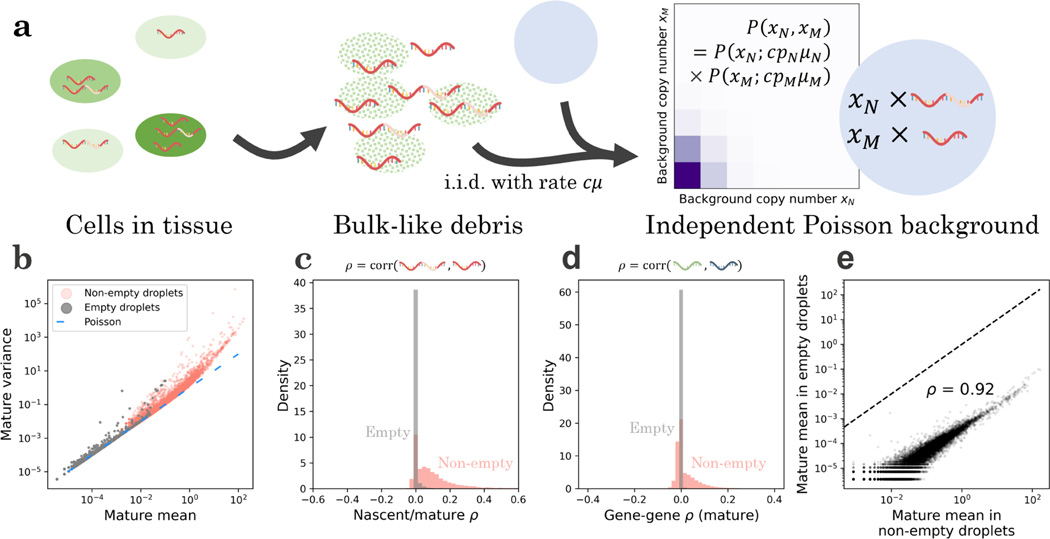

Figure 2.

The pseudo-bulk model of background noise is quantitatively consistent with counts from the pbmc_1k_v3 dataset.

a. The simplest explanatory model for background noise invokes the lysis of cells (green), which creates a pool of RNA that reflects the overall transcriptome composition but retains none of the cell-level information. If the loose RNA molecules diffuse into droplets (blue) according to a memoryless and independent arrival process, the resulting background distribution (purple: higher probability mass; white: lower probability mass) observed in empty droplets should be a series of mutually independent Poisson distributions, with the mean controlled by the composition in non-empty droplets.

b. The mature transcriptome in empty droplets has a mean–variance relationship near identity (gray points, n = 12,298), consistent with Poisson statistics (blue line); the non-empty droplets demonstrate considerable overdispersion (red points, n = 17,393).

c. The mature and nascent transcripts in empty droplets have sample correlation coefficients near zero, consistent with distributional independence (gray histogram, n = 9,362); the non-empty droplets demonstrate nontrivial statistical relationships (red histogram, n = 14,365).

d. The mature transcripts of different genes in empty droplets have sample correlation coefficients near zero, consistent with distributional independence (gray histogram, n = 75,614,253); the non-empty droplets demonstrate nontrivial statistical relationships (red histogram, n = 151,249,528).

e. When both are nonzero, the mature count mean in empty droplets is highly correlated with the mean in the non-empty droplets, consistent with the pseudo-bulk interpretation (black points, n = 12,107; dashed line: identity).

To characterize the accuracy of these predictions, we inspected six datasets (Table S2) pseudoaligned with kallisto | bustools113, and compared the data for barcodes passing bustools quality control to data for barcodes which were filtered out. As a shorthand, we call the former “non-empty” and the latter “empty” droplets, keeping in mind that this identification is approximate. We fully describe the analysis procedure in Section 6.8.2, illustrate the results for the human blood dataset pbmc_1k_v3, and display the results for all datasets in supplemental information.

As shown in Figure 2b, data from non-empty droplets are substantially overdispersed relative to Poisson, whereas data from empty droplets are largely consistent with the Poisson identity mean–variance relationship. However, a small number of relatively high-expression genes are overdispersed. In addition, intra-gene (Figure 2c) and inter-gene (Figure 2d) correlations are typically nontrivial in non-empty droplets, but consistently near zero for empty droplets, supporting distributional independence of the background counts. Finally, the mean expression in empty droplets is highly correlated with mean expression in non-empty droplets, albeit lowered by approximately four orders of magnitude (Figure 2e), supporting the assumption that the original cells are lysed in a uniform fashion.

To characterize the deviations from the pseudo-bulk model, we identified the genes that demonstrated overdispersion in empty droplets (Table S3). A considerable fraction of these genes were associated with mitochondria or blood cells. For example, of the 21 annotated genes overdispersed in the empty droplets of the mouse neuron dataset neuron_1k_v3, nine were mitochondrial (mt-Nd1, mt-Nd2, mt-Co1, mt-Co2, mt-Atp6, mt-Co3, mt-Nd3, mt-Nd4, and mt-Cytb), three coded for hemoglobin subunits (Hba-a1, Hba-a2, and Hbb-bs), and two coded for blood cell-specific proteins (Bsg, Vwf)114,115. On the other hand, of the 10 annotated genes overdispersed in the empty droplets of the desai_dmso dataset, generated from cultured mouse embryonic stem cells116, six (mt-Nd1, mt-Co2, mt-Atp6, mt-Co3, mt-Nd4, mt-Cytb) were mitochondrial and none were blood cell-specific114 (Table S4).

Since overdispersion implies that contamination involves non-independent encapsulation of these molecules, the results suggest that the cell-free debris contains, among other structures, entire mitochondria or erythrocytes, when these are present in the source tissue. These membrane-bound structures may diffuse into droplets, then lyse and release all of their contents at once. In other words, empty droplets do not merely have disproportionately high mitochondrial content, as has been noted previously110,117,118; they have nontrivially distributed mitochondrial content, which can hint at the mechanism of its incorporation and improve interpretation where simple thresholds may be misleading118. We hypothesize that cases where the model fails can be leveraged to discover more complicated forms of contamination, such as molecular aggregates112.
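One simple way to flag genes that violate the Poisson prediction is Fisher's index-of-dispersion test, sketched below. This is an illustrative choice and not necessarily the procedure described in Section 6.8.2. The Bonferroni correction and the significance level are assumptions. The synthetic “clumped” gene is also an assumption: it arrives in rare packets of 25 molecules, mimicking the release of an organelle's entire contents.

```python
import numpy as np
from scipy.stats import chi2

def dispersion_test(counts, alpha=0.05):
    """Flag columns (genes) whose counts are overdispersed relative to Poisson.

    Uses Fisher's index-of-dispersion statistic D = (n - 1) * var / mean,
    which is approximately chi-squared with n - 1 degrees of freedom under
    a Poisson null; a Bonferroni correction is applied across genes.
    Returns the dispersion index (var / mean) and a boolean flag per gene.
    """
    counts = np.asarray(counts, dtype=float)
    n_droplets, n_genes = counts.shape
    mean = counts.mean(axis=0)
    var = counts.var(axis=0, ddof=1)
    stat = (n_droplets - 1) * var / mean
    p_values = chi2.sf(stat, df=n_droplets - 1)   # one-sided: excess variance only
    return stat / (n_droplets - 1), p_values < alpha / n_genes

rng = np.random.default_rng(1)
poisson_gene = rng.poisson(0.5, size=5000)
# Hypothetical packaged contaminant: rare clumps carrying 25 molecules each.
clumped_gene = rng.poisson(0.02, size=5000) * 25
index, flagged = dispersion_test(np.column_stack([poisson_gene, clumped_gene]))
print(index, flagged)
```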

In addition, we examined the total UMI counts in empty droplets, which should be Poisson (Fano factor = 1) if each individual gene’s distribution is independent Poisson. For the human blood dataset shown in Figure 2, the empty droplets had substantial overdispersion (Fano ≈ 43), which decreased, but did not disappear (Fano ≈ 7.6), once the 53 significantly overdispersed genes were excluded. This result suggests that, although the pseudo-bulk model is approximately valid, some residual variance, possibly due to variability in per-droplet capture rates, is present and needs to be modeled to fully describe the stochasticity in single-cell datasets.
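Under one hypothetical mechanism, the residual overdispersion in total counts has a simple quantitative signature. Suppose every gene's ambient mean in a droplet is multiplied by a common droplet-level capture factor c with mean 1 and variance v. Then the total count T satisfies T | c ~ Poisson(cM), where M is the summed mean, so Var(T) = M + vM² and the Fano factor is 1 + vM. The sketch below assumes a gamma-distributed c and arbitrary parameter values, and compares simulation with this formula.

```python
import numpy as np

rng = np.random.default_rng(2)
n_droplets = 100_000
gene_means = np.full(20, 0.1)          # hypothetical per-gene ambient means
total_mean = gene_means.sum()          # M = 2.0

def fano(x):
    return x.var(ddof=1) / x.mean()

# Pure pseudo-bulk model: independent Poisson genes, so the total is Poisson.
totals_poisson = rng.poisson(gene_means, size=(n_droplets, gene_means.size)).sum(axis=1)

# Hypothetical droplet-level capture variability: c ~ Gamma with mean 1, variance v.
v = 0.5
capture = rng.gamma(shape=1 / v, scale=v, size=n_droplets)
totals_variable = rng.poisson(capture[:, None] * gene_means).sum(axis=1)

print(fano(totals_poisson), fano(totals_variable), 1 + v * total_mean)
```

Because the excess Fano factor vM grows with the total mean, even modest capture variability can produce visible overdispersion in totals while leaving each low-count gene close to Poisson.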

4.3. Noise-corrupted candidate models of transcriptional variation

A considerable fraction of the variability in single-cell datasets arises from cell-to-cell and time-dependent variation in the transcription rates. These sources of variation control distribution shapes. By carefully analyzing candidate models, we can characterize the prospects for model selection: for example, if different models produce nearly identical distributions, selection is impossible and the choice of model is somewhat arbitrary. More interestingly, such analysis can guide the design of experiments: models may be indistinguishable based on some kinds of data, but not others20. This perspective has guided the interest in characterizing noise behaviors74,119: distributions provide strictly more information than averages, and allow us to distinguish between regulatory mechanisms. Similarly, multivariate distributions provide more information than marginal distributions. Obtaining different data (multiple molecular modalities) is qualitatively more useful than obtaining more data (a larger number of cells) or better data (observations less corrupted by noise).

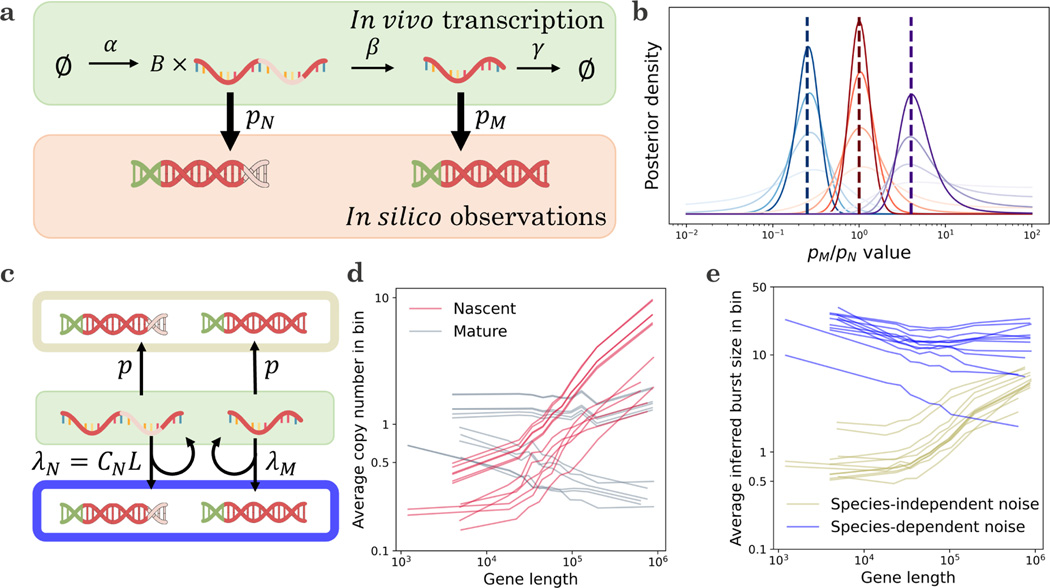

We illustrate this key point using the simple model system depicted in Figure 3a, which features intrinsic, extrinsic, and technical noise. A continuous stochastic process drives the rate of transcription of nascent RNA. We consider three possibilities for this process: the gamma Ornstein–Uhlenbeck process, which models DNA winding and relaxation; the Cox–Ingersoll–Ross process, which models the fluctuations in a high-copy number activator20; and the telegraph process, which models variation due to random exposure of the locus to transcriptional initiation76,97,100. All three transcription rate models are described by three parameters20,100. After a Markovian delay, nascent RNA are converted to mature RNA; after another Markovian delay, the mature RNA are degraded. When the system reaches steady state, it is sequenced; each biological molecule has a fixed probability of being observed in the final dataset. We seek to use imperfect count data to fit parameters and distinguish models. We fully describe the procedures in Section 6.8.3.

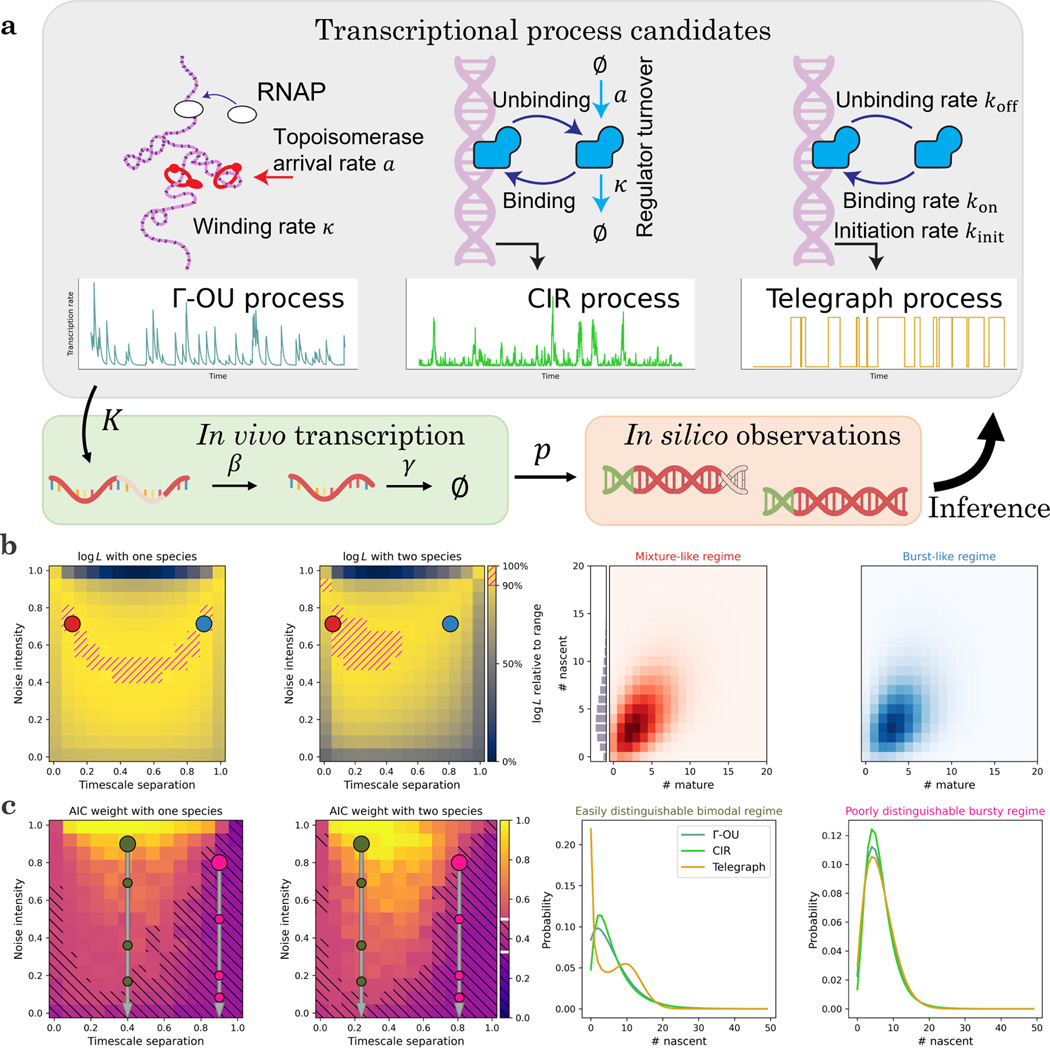

Figure 3.

The stochastic analysis of biological and technical phenomena facilitates the identification and inference of transcriptional models.

a. A minimal model that accounts for intrinsic (single-molecule), extrinsic (cell-to-cell), and technical (experimental) variability: one of three time-varying transcriptional processes generates molecules, which are spliced and degraded at constant rates and observed with a fixed probability. Given a set of observations, we can use statistics to narrow down the range of consistent models.

b. Given a particular model, parameter regimes indistinguishable using a single modality become distinguishable with two. The mixture-like and burst-like regimes both produce negative binomial marginal distributions, but have different correlation structures (Left: data likelihoods over the parameter space, computed from 200 simulated cells; Γ-OU ground truth; red point: true parameter set in the mixture-like regime; color: log-likelihood of data, yellow is higher, 90th percentile marked with magenta hatching; blue: an illustrative parameter set in a burst-like parameter regime with a similar nascent marginal but drastically different joint structure. Right: nascent marginal and joint distributions at the points indicated on the left. Nascent distributions nearly overlap).

c. Given a location in parameter space, models are easier to distinguish using multiple modalities. However, performance varies widely with the location in parameter space and the specific candidate models: for example, the telegraph model has a well-distinguishable bimodal limit when the process autocorrelation is slower than RNA dynamics. In addition, all else held equal, drop-out noise effectively decreases the noise intensity, lowering identifiability (Left: Γ-OU Akaike weights under Γ-OU ground truth, average of n = 50 replicates using 200 simulated cells; color: Akaike weight of correct model, yellow is higher, regions with weight < 0.5 marked with black hatching; large circles: illustrative parameter sets; smaller circles: distributions obtained by applying 50%, 75%, and 85% dropout to illustrative parameter sets while keeping the averages constant. Right: the three candidate models’ nascent marginal distributions at the large points indicated on the left).

Even if we have perfect information about the true averages of the transcriptional strength and the molecular species, the systems can exhibit a wide variety of distribution shapes and statistical behaviors. This variety can be summarized by a two-dimensional parameter space, which was introduced in Fig. 2 of Gorin and Vastola et al.20 The “timescale separation” governs the relative timescales of the transcriptional and molecular processes; if it is high, the transcriptional process is faster than RNA turnover. The “noise intensity” governs the variability in the transcriptional process: if it is high, the process exhibits substantial variability that translates to overdispersion in the RNA distributions. The bottom edge of this parameter space produces Poisson distributions of RNA, the top left corner produces Poisson mixtures whose mixing law is the stationary law of the transcription rate, and the top right corner yields bursty dynamics that do not typically have simple analytical solutions20.

Although these regimes reflect very different transcriptional kinetics, they can produce indistinguishable distributions. The first panel of Figure 3b shows the likelihood landscape of a dataset generated from the gamma Ornstein–Uhlenbeck (Γ-OU) transcriptional model, evaluated using the nascent marginal. The mixture-like true parameters are indicated by a red point and the top decile of likelihoods is indicated by hatching. The Γ-OU model’s transcription rate has a gamma stationary distribution, which produces approximately Poisson-gamma, or negative binomial, RNA marginals in this regime. However, the bursty regime, indicated by a blue point, also yields a negative binomial-like marginal20, preventing us from identifying the kinetics.
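The Poisson-gamma claim in the mixture limit can be verified numerically. If a cell's count is Poisson with a gamma-distributed mean (shape a, scale θ), the marginal is negative binomial with shape a and success probability 1/(1 + θ) in SciPy's parameterization. The sketch below integrates the mixture directly and compares it with `scipy.stats.nbinom`; the values of a and θ are arbitrary.

```python
import numpy as np
from scipy.integrate import quad
from scipy.stats import gamma, nbinom, poisson

a, theta = 2.0, 3.0   # hypothetical gamma shape and scale of the Poisson mean

def poisson_gamma_pmf(n):
    """P(n) = integral over r of Poisson(n | r) * Gamma(r | a, theta)."""
    integrand = lambda r: poisson.pmf(n, r) * gamma.pdf(r, a, scale=theta)
    value, _ = quad(integrand, 0.0, np.inf, limit=200)
    return value

n = np.arange(30)
mixture = np.array([poisson_gamma_pmf(k) for k in n])
# Negative binomial with the same shape and success probability 1 / (1 + theta)
closed_form = nbinom.pmf(n, a, 1.0 / (1.0 + theta))
print(np.abs(mixture - closed_form).max())
```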

On the other hand, if we evaluate likelihoods using the entire two-species dataset, we obtain the landscape in the second panel of Figure 3b: the symmetry is broken, and the parameters can be localized to the mixture-like regime. The source of this improved performance is evident from examining the distributions, shown in the third and fourth panels of Figure 3b. The nascent marginals are essentially identical; no amount of purely nascent count data can distinguish between them. However, the bivariate distributions show subtle differences, such as higher nascent/mature correlations in the true regime, which can be used for inference. This approach is analogous to Fig. 4b of Gorin et al.21, where bivariate data are used to disambiguate parameter sets that would otherwise be indistinguishable due to the degeneracies of steady-state distributions.

In addition, the timescale separation and noise intensity determine model distinguishability. To quantify this, we use the Akaike weight, which transforms log-likelihood differences into model probabilities120. For example, with three candidate models, an Akaike weight near 1/3 means the models are indistinguishable; if the correct model’s weight is near 1, we can confidently identify the model from the data. The first panel of Figure 3c shows the average Akaike weight landscape of datasets generated from the Γ-OU model, computed using the nascent distribution at the same coordinate. We indicate the region where the correct model’s weight falls below 1/2 by hatching. As the Akaike weight may be interpreted as a posterior model probability120, this somewhat arbitrary threshold corresponds to even odds of choosing the correct model, on average.
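For reference, the sketch below computes Akaike weights from each candidate's maximized log-likelihood and parameter count, using the standard AIC-based definition. The log-likelihood values in the example are made up. Because all three transcription models here have three parameters, the weights reduce to normalized maximized likelihoods.

```python
import numpy as np

def akaike_weights(log_likelihoods, n_params):
    """Akaike weights from maximized log-likelihoods and parameter counts.

    AIC_i = 2 k_i - 2 log L_i;  w_i = exp(-dAIC_i / 2) / sum_j exp(-dAIC_j / 2),
    where dAIC_i is measured relative to the smallest AIC.
    """
    aic = 2 * np.asarray(n_params, float) - 2 * np.asarray(log_likelihoods, float)
    delta = aic - aic.min()          # subtract the minimum for numerical stability
    rel = np.exp(-0.5 * delta)
    return rel / rel.sum()

# Three hypothetical candidate fits, each with three parameters.
print(akaike_weights([-1000.0, -1000.0, -1000.0], [3, 3, 3]))  # each weight is 1/3
print(akaike_weights([-1000.0, -1002.0, -1003.0], [3, 3, 3]))
```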

The intermediate regime, indicated by a large olive green point, tends to yield fairly high Akaike weights, consistent with the two-model case explored in Fig. 3a of Gorin and Vastola et al.20 On the other hand, the burst-like regime, indicated by a large pink point, provides considerably less ability to distinguish the models. As expected, the situation improves somewhat when using bivariate data (second panel of Figure 3c): the Akaike weights increase throughout the parameter space, and the bursty regime data move closer to even odds for model selection.

To illustrate the source of the identifiability challenges, we plot the nascent marginals of the models at the two points. In the intermediate regime, the Γ-OU and CIR models yield moderately different distributions, whereas the telegraph model is immediately distinguishable by its bimodality (third panel of Figure 3c). In contrast, in the bursty regime, the distributions are all unimodal and less identifiable (fourth panel of Figure 3c); the Γ-OU and telegraph marginals are particularly similar, as they converge to the same negative binomial limit20.

Interestingly, this formulation fully characterizes the effect of certain forms of technical noise. If the transcriptional and observed molecular averages are fixed, but the experiment fails to capture some molecules, the distributions are identical to those obtained by deflating the transcriptional noise intensity. In other words, even though technical noise affects the molecules, its theoretical effects are indistinguishable from decreasing the variability of the transcriptional process. As the noise levels increase, the RNA distributions are pushed toward the indistinguishable Poisson limit at the bottom edge of the reduced parameter space. We quantify how rapidly the information degrades by plotting smaller circles on the first and second panels of Figure 3c to indicate the effect of 50%, 75%, and 85% dropout, in that order from top to bottom.
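In the mixture limit, this equivalence can be read directly from the generating function. Substituting g → 1 − q + qg into the negative binomial PGF (1 − θ(g − 1))^(−a) yields (1 − qθ(g − 1))^(−a): the shape parameter is unchanged and the scale θ is deflated to qθ. The sketch below confirms this numerically by binomial thinning, with hypothetical values of a, θ, and q (here 75% dropout). Note that the excess Fano factor shrinks from θ to qθ.

```python
import numpy as np
from scipy.stats import binom, nbinom

a, theta = 2.0, 3.0    # hypothetical gamma shape and scale in the mixture limit
q = 0.25               # hypothetical capture probability (75% dropout)

n = np.arange(400)
true_pmf = nbinom.pmf(n, a, 1.0 / (1.0 + theta))   # mean a * theta = 6

# Binomial thinning: each molecule is observed independently with probability q.
m = np.arange(60)
observed = np.array([(true_pmf * binom.pmf(j, n, q)).sum() for j in m])

# Prediction from the PGF substitution: same shape a, scale q * theta.
predicted = nbinom.pmf(m, a, 1.0 / (1.0 + q * theta))
print(np.abs(observed - predicted).max())

# The Fano factor drops from 1 + theta to 1 + q * theta: less apparent noise.
print(1 + theta, 1 + q * theta)
```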

4.4. Distributions obtained from a transient process

Due to the interest in understanding developmental processes, the characterization of transient process dynamics is a key problem in single-cell analysis. The use of mechanistic models with multimodal data, which we emphasize here, was pioneered in the context of the RNA velocity framework, which attempts to exploit the causal relationship between nascent and mature RNA to fit transient processes86. However, the implementations proposed so far use relatively simple noise models59,86,121, which do not recapitulate the bursty transcription observed in living cells. As discussed in our recent analysis of RNA velocity methods19, this leads us to hold some reservations about the robustness and appropriate interpretation of results obtained by this class of methods.

The inference of transient dynamics from snapshot data is a formidable problem due to a combination of theoretical and practical factors. Most fundamentally, it is not precisely clear what a snapshot is: how does a single measurement simultaneously capture the early and late states in a differentiation process? To develop an explanatory model, we take inspiration from the existing work on cyclostationary processes122,123, cell cycle ensemble measurement modeling124–126, Markov chain occupation measure theory127–129, and chemical reactor engineering105,106. In the typical stochastic modeling context, we fit count data using stationary distributions, obtained as the long-time limit of a transient distribution. By the ergodic theorem130–132, this distribution, when it exists, coincides with the occupation measure, i.e., the distribution of observations drawn from a single trajectory over a sufficiently long time horizon, rather than from multiple trajectories at once. Conveniently, the ergodic limit has time symmetry with respect to measurement: the distribution does not depend on the timing of the experiment. In the transient case, we cannot take these limits. However, we can retain time symmetry by proposing that the experiment samples cells at almost surely finite times since the beginning of the process. Therefore, we conceptualize data as coming from a set of cells, such that each cell’s time is sampled from the internal-age distribution, and its counts are drawn from the transient distribution at that time, which is not typically available in closed form. This formulation yields Equation 32, which requires specifying the internal-age distribution.
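The generative reading of this formulation can be sketched as a two-step Monte Carlo procedure: draw each cell's age from the internal-age distribution, then draw its counts from the transient distribution at that age. The toy system below is hypothetical: a gene switched on at t = 0 transcribes at rate k and degrades at rate γ, and cell ages follow an exponential distribution with rate λ. It was chosen because its snapshot mean, k/(λ + γ), has a closed form to check against.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical toy system: a gene switched on at t = 0 with no prior RNA,
# transcribing at rate k and degrading at rate gamma; counts at age t are
# Poisson with mean (k / gamma) * (1 - exp(-gamma t)).
k, gamma = 10.0, 1.0
exit_rate = 0.5            # rate of the exponential internal-age distribution
n_cells = 200_000

# Each cell i contributes one snapshot: draw its age t_i, then its count.
ages = rng.exponential(scale=1.0 / exit_rate, size=n_cells)
counts = rng.poisson((k / gamma) * (1.0 - np.exp(-gamma * ages)))

print(counts.mean(), k / (exit_rate + gamma))
```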

We illustrate some of the challenges and implications using the model system shown at the bottom of Figure 4a. The underlying transient structure involves transitions through three cell types, each characterized by a particular transcriptional burst size. The transient transcription process produces nascent and mature RNA trajectories for each cell; however, we only obtain a single data point per trajectory. Even if we have perfect information about the cell times, it is far from clear that we can accurately reconstruct the transcriptional dynamics from snapshot data (center of Figure 4a).

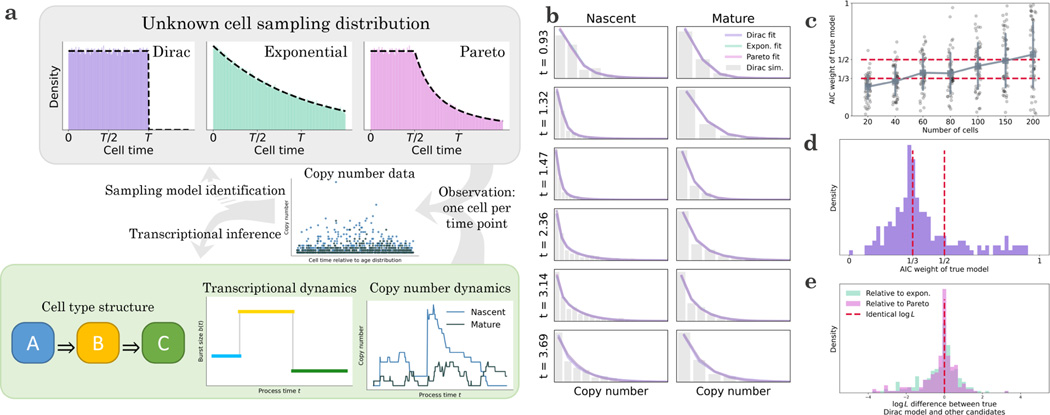

Figure 4.

Given ordered and labeled snapshot data obtained from a transient differentiation process, we can typically fit the copy number data, but identifying the mechanism of the snapshot is more challenging.

a. A minimal model that accounts for the observation of transient differentiation processes in scRNA-seq: cells enter a “reactor” and receive a signal to begin transitioning from cell type A through B and to C. The change in cell type is accompanied by a step change in the burst size, which leads to variation in the nascent and mature RNA copy numbers over time. Given information about the cell type abundances and the cells’ time along the process, we may fit a dynamic process to snapshot data and attempt to identify the underlying reactor type, which determines the probability of observing a cell at a particular time since the beginning of the process.

b. In spite of the considerable differences between the reactor architectures, they produce nearly identical molecular count marginals (histogram: data simulated from the Dirac model, 200 cells; colored lines: analytical distributions at the maximum likelihood transcriptional parameter fits for each of the three reactor models. Analytical distributions nearly overlap).

c. The true reactor model may be identified from molecule count data, but statistical performance is typically poor (points: Akaike weight values for n = 50 independent rounds of simulation and inference under a single set of parameters; blue markers and vertical lines: mean and standard deviation at each number of cells; blue line connects markers to summarize the trends; red lines: the Akaike weight values 1/3, which carries no information for model selection, and 1/2, which gives even odds for the correct model; two-species data generated from the Dirac model; uniform horizontal jitter added).

d. The reactor models are poorly identifiable across a range of parameters, and rarely produce Akaike weights above 1/2 (histogram: Akaike weight values for n = 200 independent rounds of parameter generation, simulation, and inference under the true Dirac model; red lines: the Akaike weight values 1/3 and 1/2; two-species data for 200 cells generated from the Dirac model; parameters were restricted to the low-expression regime for both species).

e. The challenges in reactor identification arise because all three models produce similar likelihoods (histograms: likelihood differences between candidate models and the true Dirac model for n = 200 independent rounds of parameter generation, simulation, and inference; red line: no likelihood difference; two-species data for 200 cells generated from the Dirac model; parameters were restricted to the low-expression regime for both species).

In addition, we wish to know whether we can identify the mechanism of the snapshot collection. We can imagine cells entering and exiting the observed tissue in multiple ways, which correspond to different choices of the age distribution. Some natural choices are uniform, which implies that cells stay in the tissue for a deterministic time86; decreasing over time, so cells can exit immediately; or uniform, then decreasing, so cells must stay in the tissue for some duration but are free to leave afterward. These choices can be modeled by Dirac, exponential, and Pareto residence-time distributions. In the parlance of chemical reactor engineering, these configurations are known as the plug flow reactor, the continuously-stirred tank reactor, and the laminar flow reactor, respectively. The corresponding age distributions, known as reactor internal-age distributions, are well known in the chemical engineering literature105,106, and are shown at the top of Figure 4a. It is not a priori obvious that the configurations are mutually distinguishable from count data. If they are not, the choice of age distribution is immaterial for inference.
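The internal-age distributions follow from the residence-time distributions through a standard steady-flow relation: the internal-age density is I(t) = S(t)/τ̄, where S is the residence-time survival function and τ̄ is the mean residence time (`T` in the code). The sketch below applies this relation to the three reactor models. It assumes the textbook laminar-flow form, a Pareto distribution with shape 2 and scale τ̄/2, which has mean τ̄; the value of τ̄ is arbitrary. The resulting densities are uniform on [0, τ̄] for plug flow, exponential for the stirred tank, and flat up to τ̄/2 followed by a t⁻² decay for laminar flow. Each should integrate to 1.

```python
import numpy as np
from scipy.integrate import quad
from scipy.stats import expon, pareto

T = 2.0  # hypothetical mean residence time

# Residence-time survival functions S(t) = P(residence time > t).
survival = {
    "plug flow (Dirac)": lambda t: 1.0 if t < T else 0.0,
    "stirred tank (exponential)": lambda t: expon.sf(t, scale=T),
    # Laminar flow: Pareto with shape 2 and scale T/2, whose mean is T.
    "laminar flow (Pareto)": lambda t: pareto.sf(t, 2.0, scale=T / 2),
}

def internal_age_density(sf, t):
    """Steady-state internal-age density I(t) = S(t) / (mean residence time)."""
    return sf(t) / T

totals = {}
for name, sf in survival.items():
    # Integrate piecewise so the quadrature sees the kinks at T/2 and T.
    pieces = [(0.0, T / 2), (T / 2, T), (T, np.inf)]
    totals[name] = sum(
        quad(lambda t: internal_age_density(sf, t), lo, hi)[0] for lo, hi in pieces
    )
    print(name, totals[name])
```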

We generated snapshot data from the Dirac model and fit it under all three models. To efficiently evaluate snapshot distributions, we designed an algorithm that essentially “recycles” intermediate computations for trapezoidal quadrature. The method is fully described in Section 6.8.4. As shown in Figure 4b, despite only having access to a single observation per time point, all models yield results visually close to the true marginals. However, despite these superficial similarities, quantitative model identification is possible: for the simulated dataset shown, the true Dirac model achieves an Akaike weight of ≈ 79%, whereas the exponential and Pareto models both achieve ≈ 10%. Decreasing the dataset size substantially degrades identifiability (Figure 4c). Even at larger sizes, the spread is considerable; for example, a 150-cell dataset gives approximately even odds (Akaike weight > 1/2) on average, but individual realizations vary from confidently correct (weight ≈ 1) to confidently wrong (weight ≈ 0).
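The idea of reusing expensive evaluations across quadrature rules can be illustrated in a few lines. This hypothetical sketch is not the algorithm of Section 6.8.4. The time-dependent distribution P(n | t) of a toy Poisson transient is computed once on a shared time grid. The snapshot distribution for any candidate age density is then a single matrix-vector product with trapezoidal weights. The toy model, grid, and parameters are assumptions.

```python
import numpy as np
from scipy.special import gammaln, xlogy

# Hypothetical toy transient: a gene switched on at t = 0 with no prior RNA,
# so counts at age t are Poisson with mean (k / gamma) * (1 - exp(-gamma t)).
k, gamma, T = 10.0, 1.0, 5.0
t = np.linspace(0.0, 10 * T, 10_001)        # shared time grid
mu = (k / gamma) * (1.0 - np.exp(-gamma * t))
n = np.arange(60)

# Expensive step, done once: P(n | t) on the grid (rows: n, columns: t).
# The Poisson pmf is written out so that mu = 0 at t = 0 is handled exactly.
P = np.exp(xlogy(n[:, None], mu[None, :]) - mu[None, :] - gammaln(n[:, None] + 1))

# Trapezoidal quadrature weights for the uniform grid.
h = t[1] - t[0]
trap = np.full(t.size, h)
trap[[0, -1]] = h / 2

def snapshot_pmf(age_density):
    """Reuse the cached P(n | t) for any internal-age density on the grid."""
    w = trap * age_density(t)
    w /= w.sum()             # renormalize for truncation and discretization error
    return P @ w

uniform = snapshot_pmf(lambda s: (s <= T) / T)            # plug flow
exponential = snapshot_pmf(lambda s: np.exp(-s / T) / T)  # stirred tank
print((n * uniform).sum(), (n * exponential).sum())
```

Evaluating another candidate reactor only requires a new weight vector. The cached matrix `P` is never recomputed, which is what makes comparing several age distributions cheap.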

To understand the robustness of model identifiability, we generated 200 synthetic datasets at random parameter values, constrained to have fairly low expression. We observed poor identifiability, with even or better odds for the correct model in only 20% of the cases (Figure 4d). This performance appears to be attributable to quantitative similarities between all three models’ likelihoods. As shown in Figure 4e, given data of this quality, we cannot even narrow the scope down to two models, as neither of the alternative models performs conspicuously worse than the true Dirac configuration. Therefore, it is possible to fit snapshot data approximately equally well using a variety of models; candidate age distributions are identifiable in principle, but challenging to distinguish from any particular dataset. This simulated analysis implies that the details of the reactor configuration may not matter much, providing a basis for omitting this model identification problem for real data.

4.5. Variability in library construction

To properly interpret single-cell data, we need to exercise caution regarding technical noise behaviors and consider multiple candidate models. However, before fitting distributions, we must fully characterize the models and understand which of their parameters are actually identifiable with the data at hand. For example, the two-species models explored in Section 4.3 produce distributional forms that are closed under the assumption that nascent and mature RNA share a single observation probability, i.e., the magnitude of the observation probability is impossible to identify from count data alone. Interestingly, when the observation probabilities are allowed to differ (that is, when nascent and mature RNA may be observed with different probabilities), what we can learn about technical noise heavily depends on the form of the biological noise. For example, under slow transcriptional variation (as in the mixture and Poisson limits of the models explored in Section 4.3), the RNA distributions contain no identifiable information whatsoever about the technical noise, regardless of the amount of data. On the other hand, if transcription is bursty, the distributions depend on the ratio of the nascent and mature observation probabilities, but not on their absolute values (Section 6.8.5). This theoretical result calls for further investigation: how much information can we obtain in practice, given finite data?
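The Poisson-limit statement is easy to check. In the constitutive model, with our notation k for the transcription rate, β for the splicing rate, and γ for the degradation rate, steady-state nascent and mature counts are independent Poisson variables with means k/β and k/γ. Bernoulli capture simply rescales those means, so any two parameter sets that give the same observed means produce identical data distributions. The sketch below constructs two such hypothetical parameter sets with very different capture probabilities.

```python
import numpy as np
from scipy.stats import poisson

def observed_joint_pmf(k, beta, gamma, lam_n, lam_m, n_max=40):
    """Joint pmf of observed nascent/mature counts in the constitutive (Poisson) limit.

    At steady state, nascent ~ Poisson(k / beta) and mature ~ Poisson(k / gamma)
    independently; Bernoulli capture scales each mean by its capture probability.
    """
    x = np.arange(n_max)
    pn = poisson.pmf(x, lam_n * k / beta)
    pm = poisson.pmf(x, lam_m * k / gamma)
    return np.outer(pn, pm)

# Two hypothetical parameter sets with very different capture probabilities
# but identical observed means (2 nascent, 5 mature).
joint_1 = observed_joint_pmf(k=10.0, beta=5.0, gamma=2.0, lam_n=1.0, lam_m=1.0)
joint_2 = observed_joint_pmf(k=40.0, beta=10.0, gamma=2.0, lam_n=0.5, lam_m=0.25)
print(np.abs(joint_1 - joint_2).max())   # identical up to rounding
```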

To understand the prospects for distinguishing parameters, we consider the simple model system shown in Figure 5a, which involves bursty transcription with a fixed average burst size, splicing, degradation, and molecular capture with species-specific probabilities. To characterize how much information about the ratio of observation probabilities we can identify from count data, we simulated 200 datasets at the ratio values 1/4, 1, and 4, and calculated their likelihoods over the interval (10⁻², 10²). We repeated this analysis using synthetic datasets with 20, 50, 100, and 200 cells, and plotted the average of the posterior distributions for each condition. As shown in Figure 5b, color-coded by the ground truth and intensity-coded by the number of cells, the posteriors are, on average, consistent with the true value. However, even with perfect information about the averages and the nascent RNA distribution, the uncertainty is considerable; at larger dataset sizes, we can typically localize the ratio to within an order of magnitude, but not much further.
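The posterior averaging behind Figure 5b can be sketched generically. We place a grid over the ratio that is uniform in log₁₀ space, which amounts to a log-uniform prior (our assumption). Each dataset's log-likelihoods are normalized with a log-sum-exp, and the resulting posteriors are averaged across replicates. The likelihood surfaces below are hypothetical Gaussian stand-ins, not the bursty-model likelihood.

```python
import numpy as np
from scipy.special import logsumexp

# Grid over the ratio of observation probabilities, uniform in log10 space.
log10_ratio = np.linspace(-2.0, 2.0, 401)

def grid_posterior(log_lik):
    """Normalize log-likelihoods on the grid into a posterior (flat prior in log10)."""
    return np.exp(log_lik - logsumexp(log_lik))

# Stand-in likelihood surfaces: NOT the bursty-model likelihood, just curves
# peaked near a hypothetical true log10 ratio, with replicate-to-replicate scatter.
rng = np.random.default_rng(4)
true_log10, width = np.log10(4.0), 0.5
posteriors = [
    grid_posterior(-0.5 * ((log10_ratio - (true_log10 + rng.normal(0, width))) / width) ** 2)
    for _ in range(200)
]
average_posterior = np.mean(posteriors, axis=0)
print((log10_ratio * average_posterior).sum(), true_log10)
```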

Figure 5.

Technical noise models may be identified from count data, either by direct application of statistics or by imposing informal priors about the biological variability.

a. A minimal model that accounts for non-homogeneous noise: transcriptional events occur at a constant frequency, generating geometrically-distributed bursts with a fixed mean size; the molecules are spliced and degraded at constant rates. Nascent and mature molecules are observed with distinct, species-specific probabilities.

b. Given information about the nascent distribution and the mature mean, it is possible to use joint distributions to obtain information about the ratio of observation probabilities (curves: average posterior likelihoods, computed from 200 independent synthetic datasets; color: true value of the ratio, blue: 1/4, red: 1, purple: 4; dashed lines: location of each true value; color intensity: from lightest to darkest, synthetic datasets with 20, 50, 100, and 200 cells).

c. Two models considered in Gorin et al.21: the species-independent bias model for length dependence in averages, which proposes that nascent and mature RNA are sampled with equal probabilities, and the species-dependent bias model, which proposes that the nascent RNA sampling rate scales with length (top, gold: kinetics of species-independent model; bottom, blue: kinetics of species-dependent model; center, green: the source RNA molecules used to template cDNA).

d. A variety of single-cell datasets produce consistent and counterintuitive length-dependent trends in nascent RNA observations (lines: average per-species gene expression, binned by gene length; red: nascent RNA observations; gray: mature RNA statistics; data for 2,500 genes analyzed in Gorin et al.21).

e. Fits to the species-independent model show a strong positive gene length dependence for inferred burst sizes, whereas fits to the species-dependent model show a modest negative gene length dependence, which is more coherent with orthogonal data (lines: average per-gene burst size inferred by Monod161, binned by gene length; gold: results for species-independent model; blue: results for species-dependent model; data for genes analyzed in Gorin et al.21 after goodness-of-fit).

Given the statistical challenges illustrated by simulations, we speculate that it may be more fruitful to use prior information about biology and physical intuition about sequencing to construct technical noise models. For example, in a recent paper21, we fit models that represent two competing hypotheses (Figure 5c). The first has identical, gene-specific observation probabilities for the nascent and mature species. In this model, the inferred burst size is the product of the true burst size and the shared observation probability, as these two parameters are not mutually identifiable. The second has a gene length-dependent technical noise term for the nascent species, which coarsely represents a higher rate of priming for long molecules with abundant intronic poly(A) tracts, and a shared genome-wide term for the mature species, which represents priming at the poly(A) tail. In this model, the inferred burst size is scaled by the genome-wide mature observation probability rather than by a gene-specific one.

These models attempt to explain the trend summarized in Figure 5d: across a wide range of datasets, nascent RNA averages exhibit a pronounced length dependence133 not evident in mature RNA134. The first model explains the trend by a species-independent bias, as the burst size and the gene-specific observation probability control nascent as well as mature RNA levels. Conversely, the second model explains it by a species-dependent bias. Both models produce fair fits to the data (as demonstrated, e.g., by the low rate of rejection by goodness-of-fit in Sections S7.4 and S7.5.2 of Gorin et al.21).

However, the trends in the resulting inferred parameters are strikingly different: the species-independent bias model predicts that longer genes have a higher product of burst size and observation probability. Ascribing this trend to the burst size (longer genes have higher burst sizes) contradicts burst size trends from fluorescence microscopy135. Ascribing it to the observation probability (longer genes have higher sampling probabilities) is physically unrealistic, because mature RNA molecules are depleted of the internal poly(A) tracts necessary for priming136. On the other hand, the species-dependent model predicts a modest negative relationship between length and burst size, which is more coherent with orthogonal data.

This technical noise model is a relatively simplistic low-order approximation, since all genes share the same mature molecule capture rate and the same length scaling of the nascent capture rate. Nevertheless, it foregrounds a key modeling principle: in the absence of prior information, biological parameters need to be fit on a gene-by-gene basis, but technical noise should be described by a common genome-wide model that varies in a mechanistic rather than arbitrary way. In sum, the mathematics enable us to define and fit systems, but to understand whether the fits are sensible, we need to contextualize them and compare them with previous results and physical intuition.

5. DISCUSSION

The results we have derived provide a blueprint for the holistic modeling of single-cell biology and sequencing experiments. First, we have outlined a generic mathematical framework for treating stochasticity in living cells. By exploiting the generating function representation, we reduce discrete, continuous, and mixed reactions to operators in a system of differential equations. These ODEs can be straightforwardly solved via numerical integration to compute model properties, including likelihoods. This approach recapitulates and subsumes a wide range of previous results16,17,20,21,75,76,85,100,137,138.
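
As a minimal sketch of this numerical route, consider the one-species bursty model: bursts at frequency $k$ with geometric sizes of mean $b$, and degradation at rate $\gamma$ (the symbols and parameter values are our assumptions). With $u = z - 1$, the method of characteristics gives $\ln G(u) = k \int_0^\infty \frac{b\,u e^{-\gamma s}}{1 - b\,u e^{-\gamma s}}\,ds$, which we integrate as an ODE at every point of a grid on the unit circle and then invert with the fast Fourier transform. The exact answer is negative binomial, which serves as a check.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.stats import nbinom

k, b, gamma = 2.0, 5.0, 1.0   # burst frequency, mean burst size, degradation rate
n_grid = 256                  # grid size for Fourier inversion

# Evaluate the PGF on the unit circle: z_j = exp(2*pi*i*j/n_grid), u = z - 1.
z = np.exp(2j * np.pi * np.arange(n_grid) / n_grid)
u = z - 1.0

def rhs(s, log_g):
    # Along the characteristic U(s) = u * exp(-gamma * s), the burst source
    # contributes k * (burst PGF(U) - 1) = k * b * U / (1 - b * U).
    U = u * np.exp(-gamma * s)
    return k * b * U / (1.0 - b * U)

# exp(-gamma * s) < 1e-13 at s = 30 / gamma, so the integral has converged.
sol = solve_ivp(rhs, (0.0, 30.0 / gamma), np.zeros(n_grid, dtype=complex),
                rtol=1e-10, atol=1e-12)
G = np.exp(sol.y[:, -1])

# Inverse DFT: P(n) = (1/N) * sum_j G(z_j) * z_j^(-n).
pmf = np.real(np.fft.fft(G)) / n_grid

ref = nbinom.pmf(np.arange(n_grid), k / gamma, 1.0 / (1.0 + b))
print(np.max(np.abs(pmf - ref)))
```

Here `solve_ivp` only performs the quadrature along a closed-form characteristic; when the characteristics have no closed form, the same pattern applies with the characteristic ODEs appended to the state vector.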

By treating the discrete and continuous degrees of freedom on equal footing, our approach makes certain otherwise challenging problems straightforward to solve, as illustrated in Section 6.8.1. By making simplifying assumptions—chiefly, the assumption of independent and identically distributed sampling—we reduce the modeling of technical variation to the composition of generating functions. Our framework may be used in its current form, or as a substrate for developing more sophisticated models of transcriptional regulation and sequencing that subsume it in turn. This process simply involves instantiating hypotheses, converting them into probabilistic models, and constructing model solutions using a procedure analogous to the one presented in Figure 1c.
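
Under the independent and identically distributed sampling assumption, technical noise enters a model only through its generating function. A minimal sketch, using the hypothetical helper `with_capture` and illustrative rates, wraps any multivariate PGF with species-specific capture probabilities; the constitutive nascent-to-mature model, whose steady state is a product of Poisson distributions with means $k/\beta$ and $k/\gamma$, provides a closed-form check.

```python
import numpy as np

def with_capture(pgf, capture_probs):
    """Return the PGF of observed counts given the PGF of true counts,
    assuming each molecule of species i is captured independently with
    probability capture_probs[i]: G_obs(z) = G(1 + C * (z - 1))."""
    C = np.asarray(capture_probs, dtype=float)

    def observed_pgf(z):
        z = np.asarray(z)
        return pgf(1.0 + C * (z - 1.0))

    return observed_pgf

# Constitutive nascent -> mature model with hypothetical rates.
k, beta, gamma = 10.0, 2.0, 0.5

def constitutive_pgf(z):
    zn, zm = z[..., 0], z[..., 1]
    return np.exp(k / beta * (zn - 1.0) + k / gamma * (zm - 1.0))

observed = with_capture(constitutive_pgf, [0.3, 0.1])

# The composed PGF should equal independent Poissons with thinned means.
rng = np.random.default_rng(1)
z = np.exp(2j * np.pi * rng.random((5, 2)))  # random points on the unit torus
expected = np.exp(0.3 * k / beta * (z[:, 0] - 1.0) + 0.1 * k / gamma * (z[:, 1] - 1.0))
err = np.max(np.abs(observed(z) - expected))
print(err)
```

Because `with_capture` returns another PGF, further i.i.d. stages can be stacked by nesting calls, which mirrors the composition of generating functions described above.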

We believe this framework constitutes a productive vision for the interpretation of large datasets, but many technological and mathematical challenges remain. For example, the library construction biases depend on molecule-specific factors that we do not yet fully understand, because their effect is heavily convolved with biological variability. In Figure 5, we considered two extreme cases, where the noise strength/length scaling is either unconstrained or forced to be identical for all genes. We anticipate that careful investigation of technical biases will be necessary to construct models that constrain them based on RNA chemistry, while allowing for gene-to-gene and droplet-to-droplet variability.

In Section 6.7 and supplemental information, we discuss the challenges associated with modeling ambiguous species, motivated by the limitations of short-read sequencing for distinguishing between spliced and unspliced forms of the same RNA gene product139. It is worth noting that even the spliced/unspliced binary is a convenient simplification primarily adopted because of data availability86,113; we stress that a truly comprehensive treatment requires defining intermediate states19, their relationships, and their mutually indistinguishable classes. These computational foundations do not yet exist, although we have attempted a partial solution in recent work16 and outlined some promising directions in supplemental information. Therefore, despite our immediate interest in bivariate RNA distributions, our framework is designed to generalize to other modalities as they become practical to quantify. In addition, although we focus on Markovian systems here, non-Markovian processing can be represented by appropriately defining 140, which suggests avenues for the treatment of systems with molecular memory141,142.

The full generating function solutions we have outlined here are typically not computable directly. By construction, the generating function needs to be evaluated on a grid; Fourier inversion produces a grid of microstate probabilities, which needs to be quite large to avoid artifacts138. If the grid dimension is $N_i$ for each discrete species $i$, the overall state space size is $\prod_i N_i$. Even in the simplest case, where we only quantify and fit discrete counts, evaluating the probability mass function for $n$ discrete species requires storing and inverting an $n$-dimensional array, which is usually far too large to be practical (e.g., Fig. S5b of our prior work on bursty models16).
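
The grid-size requirement can be illustrated with a closed-form generating function (a negative binomial with hypothetical parameters). Inverting on a grid that is too small aliases probability mass from high counts onto low counts; the second half of the sketch computes the memory needed to store a joint grid when every species needs the same resolution.

```python
import numpy as np
from scipy.stats import nbinom

r, b = 2.0, 20.0  # hypothetical NB shape and mean burst size (mean r * b = 40)

def invert_on_grid(n_grid):
    # Evaluate the closed-form PGF (1 - b(z - 1))^(-r) at the n_grid roots of
    # unity and invert with the DFT. Probability mass at counts >= n_grid is
    # not lost: it wraps around (aliases) onto lower counts.
    z = np.exp(2j * np.pi * np.arange(n_grid) / n_grid)
    G = (1.0 - b * (z - 1.0)) ** (-r)
    return np.real(np.fft.fft(G)) / n_grid

errors = {}
for n_grid in (32, 512):
    exact = nbinom.pmf(np.arange(n_grid), r, 1.0 / (1.0 + b))
    errors[n_grid] = np.max(np.abs(invert_on_grid(n_grid) - exact))
print(errors)

# The joint grid is the product of the per-species grid sizes.
grid_sizes = [512, 512, 512, 512]  # four hypothetical discrete species
n_states = int(np.prod(grid_sizes))
print(n_states, "states,", n_states * 16 / 1e9, "GB as complex128")
```

A 512-point grid is ample for one species here, yet four such species already require roughly a terabyte for a single complex-valued joint grid.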

When applicable, the generating function approach has numerical advantages over the stochastic simulation algorithm (SSA)143–145, which approximates distributions by the empirical distributions of trajectories, and finite state projection (FSP)78, which directly integrates a version of the master equation confined to a finite state space. Specifically, if we only care about a particular species $i$, we can evaluate its marginal using a grid of size $N_i$ with $O(N_i \log N_i)$ time complexity. In the worst-case scenario, FSP requires a grid of size $\prod_j N_j$ with $O\big((\prod_j N_j)^3\big)$ time complexity, as evaluating a particular marginal requires explicitly evaluating the probabilities for the entire grid, then marginalizing. Similarly, SSA requires explicitly simulating the entire system to obtain the marginals, and has the drawback of the usual inverse square root Monte Carlo convergence146,147. In addition, FSP is not compatible with the generating function manipulations used to represent technical noise, SSA is relatively challenging to adapt to time-dependent rates148, and neither FSP nor SSA is readily compatible with continuous stochastic processes (although exact20 and approximate20,149,150 hybrid schemes can be constructed with some work). In the future, it may be possible to bypass the “curse of dimensionality”, that is, the reliance on grid evaluation, altogether by training neural networks to predict probability distributions, but this approach is as yet in its infancy151–154 and will require considerable further development to apply to general systems.
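
For comparison, a minimal SSA sketch for the same kind of bursty model estimates the distribution from independent trajectories; the rates are illustrative, `ssa_bursty` and `tv_distance` are hypothetical helpers, and stopping at `t_end = 10` (ten degradation lifetimes) only approximates the steady state. The total variation distance to the exact negative binomial should shrink roughly as the inverse square root of the number of simulated cells, so halving the error costs about four times as many simulations.

```python
import numpy as np
from scipy.stats import nbinom

def ssa_bursty(k, b, gamma, t_end, rng):
    """One Gillespie trajectory of the bursty model; returns the count at t_end.

    Bursts arrive at rate k with geometric sizes of mean b on {0, 1, 2, ...};
    each molecule is degraded at rate gamma.
    """
    t, n = 0.0, 0
    while True:
        total = k + gamma * n
        t += rng.exponential(1.0 / total)  # waiting time to the next reaction
        if t > t_end:
            return n
        if rng.random() < k / total:
            # numpy's geometric draws from {1, 2, ...}; shift to {0, 1, ...}
            n += rng.geometric(1.0 / (1.0 + b)) - 1
        else:
            n -= 1

k, b, gamma, t_end = 2.0, 5.0, 1.0, 10.0
rng = np.random.default_rng(0)
samples = np.array([ssa_bursty(k, b, gamma, t_end, rng) for _ in range(2000)])

def tv_distance(samples):
    # Total variation distance between the empirical PMF and the exact
    # negative binomial steady state (shape k/gamma, mean burst size b).
    support = np.arange(samples.max() + 1)
    empirical = np.bincount(samples, minlength=support.size) / samples.size
    exact = nbinom.pmf(support, k / gamma, 1.0 / (1.0 + b))
    tail = nbinom.sf(support[-1], k / gamma, 1.0 / (1.0 + b))
    return 0.5 * (np.abs(empirical - exact).sum() + tail)

for n_cells in (125, 500, 2000):
    print(n_cells, tv_distance(samples[:n_cells]))
```

Unlike the generating function route, every estimate here requires simulating the full system, and the residual error is stochastic rather than controlled by a grid size.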

Nevertheless, SSA and FSP are substantially more general than the approach we outline here. The simulation- and matrix-based methods only require a list of reactions, whereas the generating function methods also require those reactions to produce readily solvable partial differential equations. We have omitted phenomena which would be trivial to treat using FSP and SSA, such as regulation involving feedback. (In principle, one can always construct “synthetic likelihoods” for inference by fitting a function approximator to the results of stochastic simulations, even for highly nonlinear and chaotic systems155–157.) To our knowledge, these phenomena, which are mathematically analogous to adding multi-molecular interaction terms, cannot be directly treated with the method of characteristics. Instead, a mathematically precise treatment of them requires perturbative methods77 or fairly complicated special function manipulations101–104, which do not easily generalize. We illustrate the challenges in supplemental information, using the example of downstream species catalyzing gene state transitions.

On the other hand, there are a number of ways to treat systems involving feedback approximately. Approaches like the linear-mapping approximation158 permit the derivation of approximate but accurate generating functions for such systems, which can then be used in standard inference pipelines. Alternatively, using only the results presented here, the net effect of feedback can be captured in the time-dependence of certain parameters (e.g., burst sizes) if dynamics are sufficiently chaotic, or if the time scale of feedback is slow compared to other system time scales.

We have, until now, stressed applications to “snapshot” single-cell data from dissociated tissues, but our framework may be extended to spatial single-cell data: for instance, we can define transcriptional parameters that depend on the cell’s coordinates in the tissue. In this case, the typical systems biology goals translate to fitting a time- and space-dependent function that governs these parameters. However, the generating function formulation relies on the assumption of cells being stochastically independent; it is far from clear that this should hold for densely sampled spatial data, and more sophisticated alternatives, such as agent-based models, may be needed159,160.

Despite these challenges, the framework is already quantitatively useful. To fully “explain” a dataset, we need to fit gene-specific transcriptional mechanisms, genome-wide technical noise and co-expression parameters, and cell type structure, while controlling for potential misspecification. However, at this time, it may be more fruitful to focus on narrower questions, using assumptions, orthogonal data, or simulated benchmarking to justify omitting some parts of the problem19. We have applied this “bottom-up” approach to single-cell data, considering, in turn, the estimation of transcriptional kinetics and technical noise21,161, the identification of transcriptional models20, the analysis of co-regulation patterns16, and the determination of nuclear transport kinetics140. Conversely, it may be valuable to apply a “top-down” approach, augmenting an existing method with biophysically meaningful noise, as we have proposed in the context of transient processes19 and neural network dimensionality reduction71.

We anticipate that making meaningful progress on the stochastic modeling project championed by Wilkinson will require extended “real contact”162 between systems biology, genomics, and mathematics. The general framework we propose, which unifies a variety of previous work, represents one step towards this synthesis. The role of mathematics here is key; as Wilkinson noted, the stochastic systems biology of single cells cannot be “properly understood” without stochastic mathematical models.

RESOURCE AVAILABILITY

Lead Contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Lior Pachter (lpachter@caltech.edu).

Materials Availability

This study did not generate new materials.

Data and Code Availability

This paper analyzes existing, publicly available data. The accession numbers for the datasets are listed in the key resources table. Pseudoaligned count matrices in the mtx format have been deposited at the Zenodo package 8132976. The data, Monod fits, and analysis scripts used to generate Figure 5d-e, originating from Gorin et al.21, were previously deposited as the Zenodo package 7388133.

All original code has been deposited at https://github.com/pachterlab/GVP_2023 and the Zenodo package 8132976, and is publicly available as of the date of publication. DOIs are listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited Data | | |
| H. sapiens peripheral blood 10x v3 scRNA-seq data | 178 | pbmc_1k_v3 |
| M. musculus heart 10x v3 scRNA-seq data | 179 | heart_1k_v3 |
| M. musculus neuron 10x v3 scRNA-seq data | 180 | neuron_1k_v3 |
| M. musculus cultured embryonic stem cells treated with DMSO 10x v2 scRNA-seq data | Desai et al. | desai_dmso |
| H. sapiens peripheral blood 10x v2 scRNA-seq data (technical replicate of pbmc_1k_v3) | 181 | pbmc_1k_v2 |
| M. musculus neuron 10x v3 snRNA-seq data | 182 | brain_nuc_5k_v3 |
| Supporting data for GP_2021_3 | Gorin and Pachter | Zenodo: dataset 7388133 |
| Software and Algorithms | | |
| Python | python.org | 3.9.1 |
| NumPy | numpy.org | 1.22.1 |
| SciPy | scipy.org | 1.7.3 |
| pandas | pandas.pydata.org | 1.2.4 |
| kallisto \| bustools | Melsted and Booeshaghi et al. | 0.26.0 |
| Monod | Gorin and Pachter | 2.5.0 |
| Other | | |
| Count matrices for all datasets | This manuscript | Zenodo: dataset 8132976 |
| Custom analysis notebooks | This manuscript | GitHub: https://github.com/pachterlab/GVP_2023 (version of record deposited at Zenodo: dataset 8132976) |

6. METHODS

6.1. Master equation models of transcription

We are interested in continuous-time stochastic processes that combine categorical, nonnegative discrete, and (usually nonnegative) continuous degrees of freedom. To solve these systems, we begin by separately defining their allowed transitions and converting them to master equation forms.

The categorical variable, denoted by $s$, represents the instantaneous state of a multi-state gene. By assuming that the state interconversions are Markovian and independent of all other components of the system, we can define $k_{ij}$, the rate of transitioning from state $i$ to state $j$:

$$ i \xrightarrow{\;k_{ij}\;} j \tag{35} $$

These rates can be summarized in the state transition matrix $K$, such that $K_{ij} = k_{ij}$ for $i \neq j$ and $K_{ii} = -\sum_{j \neq i} k_{ij}$ to enforce the conservation of probability. This set of transitions can be represented by a master equation involving finitely many ODEs, which tracks the probabilities $p_i(t)$ of each state $i$ at a time $t$:

$$ \frac{d p_j(t)}{dt} = \sum_i p_i(t)\, K_{ij}, \quad \text{i.e.,} \quad \frac{d\mathbf{p}(t)}{dt} = K^\top \mathbf{p}(t). \tag{36} $$

As this system is expressed in terms of a differential equation for an arbitrary time $t$, the relation holds for time-dependent $K(t)$. For simplicity, we assume that $K(t)$ is deterministic.
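
For a constant transition matrix, the gene-state master equation is a linear ODE system with solution $\mathbf{p}(t) = e^{K^\top t}\,\mathbf{p}(0)$, where $K$ holds the switching rates off the diagonal and each row sums to zero (our notation). A minimal sketch for a hypothetical three-state gene computes the transient state probabilities and the stationary distribution with SciPy.

```python
import numpy as np
from scipy.linalg import expm, null_space

# Hypothetical three-state gene: rates[i, j] is the rate of switching i -> j.
rates = np.array([[0.0, 1.0, 0.0],
                  [0.5, 0.0, 2.0],
                  [0.0, 3.0, 0.0]])

# Transition matrix: off-diagonal entries are the rates, and each diagonal
# entry makes its row sum to zero, which conserves total probability.
K = rates - np.diag(rates.sum(axis=1))

p0 = np.array([1.0, 0.0, 0.0])  # start in state 0 with certainty

def state_probabilities(t):
    # Solution of dp/dt = K^T p for a constant K.
    return expm(K.T * t) @ p0

p_t = state_probabilities(2.0)
print(p_t, p_t.sum())

# Stationary distribution: the normalized null vector of K^T.
pi = null_space(K.T)[:, 0]
pi = pi / pi.sum()
print(pi)
```

For this chain, detailed balance gives the stationary distribution $(3, 6, 4)/13$, which the null-space computation reproduces. If the rates are time-dependent, the matrix exponential no longer applies directly, and the right-hand side $K(t)^\top \mathbf{p}$ can instead be passed to a numerical integrator such as `scipy.integrate.solve_ivp`.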