Abstract

Neuroaesthetic research has focused on neural predictive processes involved in the encounter with art stimuli or the related evaluative judgements, and it has been mainly conducted unimodally. Here, with electroencephalography, magnetoencephalography and an affective priming protocol, we investigated whether and how the neural responses to non-representational aesthetic stimuli are top-down modulated by affective representational (i.e. semantically meaningful) predictions between audition and vision. Also, the neural chronometry of affect processing of these aesthetic stimuli was investigated. We hypothesized that the early affective components of crossmodal aesthetic responses are dependent on the affective and representational predictions formed in another sensory modality resulting in differentiated brain responses, and that audition and vision indicate different processing latencies for affect. The target stimuli were aesthetic visual patterns and musical chords, and they were preceded by a prime from the opposing sensory modality. We found that early auditory-cortex responses to chords were more affected by valence than the corresponding visual-cortex ones. Furthermore, the assessments of visual targets were more facilitated by affective congruency of crossmodal primes than the acoustic targets. These results indicate, first, that the brain uses early affective information for predictively guiding aesthetic responses; second, that an affective transfer of information takes place crossmodally, mainly from audition to vision, impacting the aesthetic assessment.

This article is part of the theme issue ‘Art, aesthetics and predictive processing: theoretical and empirical perspectives’.

Keywords: neuroaesthetics, sensory multimodality, affective predictive processing, electroencephalography, magnetoencephalography, aesthetic emotion

1. Introduction

In the past two decades, a framework known as predictive processing (PP) has emerged across several scientific fields as a major account of human brain and mental functioning [1–3]. The core idea of PP is that the human brain constantly tries to predict the incoming sensory stimulations [1,2,4]. This predictive activity is supposed to unfold in a hierarchical fashion, with higher-level neural processes trying to predict the activity of the lower-level neural circuits, until the prediction error (i.e. the discrepancy between predicted and incoming information) is minimized across the hierarchy [1–3]. According to PP accounts of emotions, the emotional content is generated by active top-down interoceptive inference, namely by a continuously updating model of the causes of the internal physiological condition of the body (interoception) [5]. In this inference process, the anterior insular cortex has been hypothesized to be crucial in comparing the expected condition and the experienced one while continuously attempting to minimize the discrepancy, or prediction error, between the two states [5]. Importantly, the top-down influence on lower-level neural circuits, as hypothesized by PP theory, should not necessarily be understood as linear, because the neural pathways are most likely hierarchically organized as bidirectional information loops where ascending and descending connections coexist [6].

In relation to art, PP has recently been applied to identify mechanisms for the induction of positive appraisal and pleasure (i.e. aesthetic appreciation) of artistic stimuli. Aesthetic appreciation is a complex phenomenon, likely to be an outcome of several affective and cognitive processes spanning different temporal scales, from an evaluation of a simple feature, such as a painted line, to the experience of a state of fascination and immersion that can last for several hours (e.g. [7]). Common experiential features associated with aesthetic appreciation are, for example, awe, beauty and harmony [8–12]. By means of neuroimaging methodologies, aesthetic appreciation has been investigated mainly in relation to single sensory modalities [13–15]. For instance, certain features of music, like its (un)predictability, have been linked to groove (the drive to move when listening to music and the pleasure associated with it) and chills (bodily changes such as goose bumps or shivers down the spine occurring when experiencing strong pleasure while listening to music) [16,17]. However, aesthetic experiences are only very rarely unimodal (i.e. relating to a single sensory modality): the experience of a musical piece, for example, does not just involve the processing of the auditory signal but also, as a rule, multiple other co-occurring stimuli such as the surrounding environment (e.g. a stadium or a music hall), its lighting (such as that employed in a pop/rock concert), or even the outfit and the gestures of the artist (for a review of related literature, see [18]). Experimental evidence confirms that aesthetic appreciation is enhanced by audio-visual information [19] or even by eye contact between the artist and the audience [20]. Here, multimodality might occur, namely a situation where at least two perceptual modalities are simultaneously integrated, or crossmodality, that is, a situation where at least two sensory inputs are altering each other in inducing an experience [21].
In relation to this, a recent account suggests the importance of both global features (in audition, the whole sound, distinct from its individual components, such as instruments, harmony or structure; in vision, the combination and arrangement of features, distinct from individual lines, forms or shapes) and unisensorial low-level features (e.g. timbre, pitch, spatial frequency or saturation) for aesthetic appreciation [8]. Global (more than low-level) features might enable the formation of multi- and crossmodal aesthetic appreciation consisting of emotions, judgements and individual preferences [22–24]. In summary, aesthetic appreciation can be either a domain-general process (independent of the sensory modality) or a domain-specific process (dependent on the sensory modality), which is modulated by central processing (e.g. memory or attention) [7]. However, neuroimaging studies of aesthetic appreciation have mostly ignored the fact that aesthetic appreciation can also be multi- and crossmodally generated, and the related processing stages of affective crossmodality are not yet fully understood.

According to the PP framework, for affective stimuli that convey information on the levels of semantic, lexical, conceptual or propositional information, such as words or semantically representative images, the emotional experience is top-down modulated by the past experience and learning of those meanings. When it comes to aesthetic appreciation, however, several neurophysiological studies explicitly investigating aesthetic evaluative judgements have focused on non-representative stimuli and on the role of sensory features in the judgements, rather than on the top-down modulation by higher level neural processes that are hypothesized in the PP framework. This is because non-representative stimuli, such as musical chords or black-white visual patterns, are by themselves able to induce affective associations simply based on their physical features, such as symmetry/asymmetry for visual patterns or consonance/dissonance for musical chords [25–31] (for a review, see [32]). These features induce neural responses in the sensory cortices according to fast, automatic constrained reactions (e.g. [16,33]) and might even modulate several affect-related neural responses: a posterior sustained negativity [25,34–37], a late frontocentral negativity [34], a right-predominant early negativity and a late positive potential (LPP) [38].

Non-representative stimuli have been instrumental for crossmodal affect research: a previous experiment with the crossmodal affective paradigm successfully evidenced an interaction between audition and vision in which valence and arousal were transferred from the auditory (primes) to the visual (targets) modality independent of the task (rating the arousal or the valence of the targets; note, however, that arousal was transferred only when explicitly probed) [39]. In another study, visually induced emotions primed the cognitive rating of musical targets (judging musical tuning, appropriateness of the musical continuations etc.), as evidenced by faster reaction times, although these effects were visible mainly in music experts [40]. In an opposite crossmodal configuration, oppositely valenced musical chords (varying in their consonance/dissonance) influenced the semantic processing of visually presented emotional words and modulated the neural N400 response in the brain, even in musically non-expert participants [41]. Such modulations, though, did not directly test the PP hypothesis applied to aesthetics since they do not allow us to understand whether the aesthetic ratings and related brain responses to non-representative aesthetic stimuli (e.g. consonant musical chords) might be top-down modulated by the semantic information conveyed by representative stimuli (e.g. graphical representations of emotionally evocative content, such as a traffic accident).

The affective valence of aesthetic stimuli seems to be lateralized in the cerebral hemispheres [22,42], in line with the valence model assigning negative emotions to the right and positive valence to the left hemisphere [43,44]. Other models for emotional lateralization in the brain also exist (approach-avoidance and behavioural inhibition system—behavioural activation system; [42,45]), although conclusive evidence for any of the models has not been achieved in reference to aesthetic appreciation.

Being motivated by multi- and crossmodal affect formation in a PP framework, we investigated if the early neurophysiological components of aesthetic affect are influenced by the previously formed crossmodal predictions. We built upon our previous work on crossmodal affective responses by applying the crossmodal affective priming protocol where affective stimuli from a sensory modality facilitate the affective (or aesthetic) responses to stimuli conveyed by another modality [39–41,46]. Priming [47] together with high-temporal resolution methods, such as electroencephalography (EEG) and magnetoencephalography (MEG), has been methodologically recognized in the context of PP [4]. Here, we aimed to determine how the temporal formation of affective neural chronometry within each sensory modality evolves by means of high temporal resolution methods such as EEG and MEG.

For testing whether auditorily and visually induced affect (primes) predict the aesthetic judgement of the subsequent auditory or visual stimuli (targets), we chose to apply visual and acoustic primes which have been applied in multiple psychological and neuropsychological studies and are thus known to be reliably emotionally evocative and comparable in their valence and arousal ratings [48–50]. The acoustic and visual target stimuli were chosen as they represent features (consonance and dissonance, symmetry and asymmetry) from the corresponding sensory modality which are known to be associated with a positive or negative valence [25–31] (for a review, see [32]). Affect formation in relation to aesthetic stimuli was operationalized by asking the participants for their liking assessment of the stimuli, a procedure which has been applied in various empirical neuroaesthetic studies (cf. e.g. [10,38]) and can be accomplished at a rapid pace while incorporating self-referential information beyond mere emotion recognition [46,51]. Thus, the applied bi-directional crossmodal affective priming protocol entails the use of primes and targets from two sensory modalities, always resulting in pairs of one being auditory, the other being visual, and vice versa (crossmodal bi-directionality). Also, the prime-target pairs always resulted in either affectively congruent pairs (prime and target stimuli are of similar valence), or affectively incongruent pairs (negative and positive valence stimulus pairs).

The crossmodal affect formation and its bidirectionality were investigated as follows: first, we assessed if an affective prediction formed with acoustic or visual stimuli influences the assessment of the subsequent aesthetic stimulus of another sensory modality, and whether this effect is visible in the neurophysiological correlates. Second, we investigated the chronometry of affect emergence by comparing evoked response latencies in the context of crossmodal prediction. Third, we studied whether vision and audition differ in their valence responsivity to neutral, positive and negative valence types (primes), and whether the affective prediction indicates any hemispheric lateralization depending on the valence (primes and targets). Finally, to investigate the spatio-temporal distribution of aesthetic affect formation, we conducted a source localization for the primes and targets in reference to the identified event-related potential (ERP) and event-related field (ERF) components.

First and foremost, we hypothesized that the early affective components of crossmodal aesthetic responses are dependent on the affective predictions formed in another sensory modality, such that emotionally incongruent and congruent stimulus pairs demonstrate significant differences in their brain responses (i.e. an affective congruency effect). Furthermore, when comparing the chronometry of the evoked responses, auditory modality was expected to indicate an earlier modulation of the ERP and ERF components during primes (in the region of N1, or earlier) than the visual modality. Regarding the differentiation of valence in each sensory modality and brain hemisphere, the literature is contradictory, yet we expected that a differentiation in both modalities is made between emotional and neutral stimuli, rather than between all three valence types (i.e. an effect of valence). That is, non-neutral affective information was expected to be more potent in guiding affective prediction, and thus being more emphasized as a neurophysiological correlate of PP. Whether the affect-related PP would be valence dependently lateralized to one of the brain hemispheres remained exploratory (i.e. an effect of hemisphere).

2. Methods

(a) . Participants

Data were analysed from 20 healthy participants, right-handed, with normal hearing and normal or corrected-to-normal vision; the sample included 11 females (mean age 25.3, with a standard deviation of 3.7) and nine males (mean age 25.9, with a standard deviation of 5.2). Participants fulfilling one or more of the following criteria were excluded: neural or psychiatric disorders, severe physical limitations, professional musicians and art experts, conservatory or art-history students. Participants were also excluded if they had a hearing impairment, were pregnant, suffered from claustrophobia, had a head circumference larger than 60 cm, were under medication or carried metallic implants or piercings that could not be taken out. Participation in the study was voluntary, and the participants received a voucher corresponding to a value of 200 DKK as a compensation for their time. The study was conducted according to the Helsinki Declaration II, and it was granted the ethical approval of the Ethics Committee of Central Region Denmark (55441). Each participant signed an informed consent before taking part in the study.

(b) . Stimuli

An affective priming protocol with crossmodally paired affective prime and target stimuli was applied. The visual prime stimuli were from the databank of the International Affective Picture System (IAPS) [52]. Each stimulus has been rated for an average arousal and valence during the development of the stimuli. The visual prime stimuli applied in this study had a medium arousal (mean 5.42, 5.84 and 3.18) and high, low and medium valence (mean 7.08, 2.42 and 4.88) for positive, negative and neutral primes correspondingly [52]. The acoustic primes were from the databank of the International Affective Digital Sounds (IADS) and had a similar arousal (5.92, 6.84 and 5.02) and high, low and medium valence (mean 6.9, 2.65 and 4.70) for positive, negative and neutral stimuli, correspondingly [53]. Unlike the target stimuli, both prime types were semantically representational; that is, they had either a culturally learned or biologically relevant positive or negative representation (e.g. sounds and images of cute animals for positive valence, or a visual close-up of an infected eye for negative valence). See the electronic supplementary material, tables S2–S7 for further details on the primes. As the IADS sounds are originally 6000 ms long, they were shortened for the purpose of the study by cutting either from the beginning or from the end of the sample, depending on which editing retained the recognizability of the semantic information of the IADS sounds. The editing was based on the evaluation of two of the authors (M.T., E.B.), and it was conducted with the sound editing program Audacity [54]. Acoustic target stimuli were musical chords representing positive affect (high on valence, happiness and liking) and negative affect (low on valence, happiness and liking) as reported in a study investigating the affective associations to musical chords [28,29].
The visual target stimuli were geometric black and white patterns, which were demonstrated in a previous study to be associated with specific aesthetic values of beautiful and not beautiful, and they were thus categorized as positive and negative stimuli [26]. See the electronic supplementary material, table S8 and figures S1–S2 for further details on the targets.

(c) . Procedure

(i) . Trial structure

During the MEG/EEG experiment the participants were presented with a visual fixation cue of 500 ms indicating the start of an experimental trial, followed by a prime (IAPS picture or IADS sound). The prime was presented for 300 ms on its own, and it was followed by a target stimulus, which was either visual or acoustic, depending on the prime, always resulting in crossmodal stimulus pairs. The prime and target overlapped, the prime being visible or audible for an accumulated duration of 1500 ms. After the target stimulus had been heard or seen for 1200 ms, a fixation cross appeared on the screen for 1800 ms, during which the participants were expected to give their answers, if they had not already done so. An inter-trial interval averaging 100 ms (randomly varying between 50 and 150 ms to reduce any oscillatory background brain activity) was used before the next trial started (figure 1). In both modalities, there were 12 different primes for each category of negative, positive and neutral valence and 18 different target stimuli for each category of negative and positive valence. Half of the prime stimuli in each category were combined with one-third of the target stimuli in each category, resulting in six trials per condition, which were repeated 10 times, resulting in 60 trials per condition overall. The order of the presentation of the stimuli and the specific prime-target stimulus combinations were randomized for each participant. All in all, there were 720 trials per participant, and the total duration of the MEG/EEG recording was approximately 40 min. See the electronic supplementary material, table S1 for further details on the trials.
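The trial arithmetic and timing described above can be checked with a short sketch. The number of conditions (12) is not stated explicitly in the text; it is inferred here as two prime-target directions by three prime valences by two target valences, which is an assumption that happens to reproduce the reported 720 trials. All variable names are illustrative.

```python
from itertools import product

# Factor levels taken from the text; the full crossing is an assumption.
DIRECTIONS = ["visual prime -> acoustic target", "acoustic prime -> visual target"]
PRIME_VALENCES = ["negative", "positive", "neutral"]
TARGET_VALENCES = ["negative", "positive"]

PAIRS_PER_CONDITION = 6   # six prime-target combinations per condition (from the text)
REPETITIONS = 10          # each combination repeated 10 times (from the text)

conditions = list(product(DIRECTIONS, PRIME_VALENCES, TARGET_VALENCES))
trials_per_condition = PAIRS_PER_CONDITION * REPETITIONS
total_trials = len(conditions) * trials_per_condition

# Nominal event times within one trial, in ms relative to fixation-cue onset.
timeline_ms = {
    "fixation_cue_onset": 0,
    "prime_onset": 500,                        # after the 500 ms fixation cue
    "target_onset": 500 + 300,                 # prime is presented alone for 300 ms
    "prime_and_target_offset": 500 + 1500,     # prime lasts 1500 ms in total
    "response_window_end": 500 + 1500 + 1800,  # fixation cross shown for 1800 ms
}

print(len(conditions), trials_per_condition, total_trials)
```

Under these assumptions the target duration (1200 ms) equals the gap between target onset and the shared offset of prime and target, consistent with the described overlap.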

Figure 1.

Timing of the stimulus presentation. (a) The prime is an IAPS image, and the target is a musical chord. (b) The prime is an IADS sound, and the target is a geometric pattern. ITI, Inter-trial interval.

(ii) . Experimental task

Before the experiment started, participants were trained during 10 practice trials in providing their affective judgement of liking of the target stimuli as fast and accurately as possible via a button press (binary forced choice). Participants reported their behavioural answer via right-hand index and middle finger button-press. The buttons were marked with the letter N for No and with the letter Y for Yes. Each practice trial had different stimuli than the ones used in the actual experiment.

(iii) . Magnetoencephalography and electroencephalography procedure

The study took place in an electromagnetically shielded and acoustically attenuated room (Vacuumschmelze GmbH & Co. KG). The MEG dewar was adjusted to a seated position, and the participants were instructed to sit still during the continuous MEG/EEG recording. The acoustic stimuli were delivered through pneumatic headphones (Etymotic ER-30) and controlled with the Neurobehavioral Systems Presentation v16 (Neurobehavioral Systems). Before the beginning of the MEG/EEG recording, the sound intensity levels of each participant were determined by applying an adaptive staircase test, and the stimuli were delivered 50 dB above the individual hearing threshold. The viewing distance for the visual stimuli to the screen was about 1 m, with a visual angle of 11 degrees.
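As a rough illustration of the viewing geometry, the sketch below converts the reported visual angle into a physical stimulus extent. It assumes that the 11 degrees refer to the full angle subtended by a stimulus centred on the line of sight and that the distance is exactly 1 m; neither detail is specified in the text, and the function name is hypothetical.

```python
import math

def stimulus_extent_m(distance_m, visual_angle_deg):
    """Extent of a centred stimulus subtending the given full visual angle."""
    half_angle_rad = math.radians(visual_angle_deg) / 2.0
    return 2.0 * distance_m * math.tan(half_angle_rad)

# Values from the text: about 1 m viewing distance, 11 degrees visual angle.
extent = stimulus_extent_m(distance_m=1.0, visual_angle_deg=11.0)
print(f"{extent * 100:.1f} cm")
```

With these assumed inputs the stimulus would span a little over 19 cm on the screen.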

(d) . Magnetoencephalography and electroencephalography acquisition

The neurophysiological data were acquired using a whole head MEG system (ElektaNeuromag TRIUX, Elekta Oy, Helsinki, Finland) comprising 102 MEG sensor triplets, each containing one magnetometer and two orthogonal planar gradiometers. The EEG was recorded using a 75-channel EEG with unipolar passive electrodes (EasyCap GmbH with a MEG Triux-suitable BrainCap). The electrodes were positioned on the cap according to the extended 10–20 system, and the cap was equipped with vertical and horizontal electrooculogram (EOG) leads, a ground electrode and head position indicator coils for localizing the head in the MEG device coordinates. The data were referenced to the FcZ electrode and recorded at a sampling rate of 1000 Hz; a high-pass filter of 0.1 Hz and a low-pass filter of 330 Hz were applied online. Two bipolar EOG and one bipolar electrocardiogram electrodes were used to record eye and heart artefacts.

(i) . Magnetic resonance imaging

T1-weighted structural magnetic resonance (MR) scans of each individual were carried out with a CE-approved 3T Siemens Skyra scanner with an inversion time of 1100 ms, repetition time of 2300 ms, echo time of 2.61 ms, flip angle of 9°, slice thickness of 1 mm and field of view of 240 × 256 mm.

3. Data analysis

(a) . Magnetoencephalography and electroencephalography pre-processing

Movement correction and external noise removal were performed by applying the spatio-temporal signal separation method (Taulu & Simola [55]) with MaxFilter (Elekta). Also using the MaxFilter software, the data were down-sampled from 1000 Hz (signed 32-bit integer format) to 250 Hz (32-bit floating-point format). The reference for online recording of the EEG was FcZ, and the offline reference was based on an average across all channels.

Drifts and muscular artefacts were reduced with a high-pass filter with half-amplitude cut-off at 0.05 Hz and a low-pass filter with half-amplitude cut-off at 25 Hz. The filtering was applied on the continuous data using a zero-phase finite impulse response filter. Bad EEG channels with no signal, or only receiving high amplitude noise, were corrected by replacing the signal with an interpolation of the signal from neighbouring channels by using the default weighted neighbour method in FieldTrip (Oostenveld et al. [56]). On average, 4.5 bad EEG channels (range 0–12) were corrected per participant. Subsequently, component and artefact identification was performed by applying independent component analysis (ICA). Vertical and horizontal eye-movement and heartbeat artefacts were visually inspected, and one artefact component per artefact type, clearly visible above the noise level, was projected back to the channels and subtracted from the contaminated channel waveforms. ICA correction was performed separately on the EEG, MEG magnetometer and MEG gradiometer data. On average, 1.6 components were applied for correction of the EEG, 2.0 components for the MEG magnetometer and 1.8 components for correction of the MEG gradiometer channel waveforms. After artefact correction, the EEG data were re-referenced to the average across all EEG channels. Remaining noisy trials exceeding ± 100 µV in the EEG, ± 2000 fT in the magnetometers and ± 400 fT cm−1 in the gradiometers were automatically detected and rejected. A few participants were excluded from further analysis owing to excessive artefacts or noise in either the EEG, MEG or both. As a result, 19 participants were included in the EEG analysis, and 17 in the MEG analysis.
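The automatic trial rejection step can be illustrated with a minimal sketch. It assumes that the thresholds apply to the absolute amplitude of any sample in any channel of an epoch (the text does not say whether absolute or peak-to-peak amplitude was used), and it uses plain nested lists with hypothetical names rather than the FieldTrip routines of the actual analysis.

```python
# Rejection limits from the text, one per sensor type.
LIMITS = {"eeg_uV": 100.0, "mag_fT": 2000.0, "grad_fT_per_cm": 400.0}

def exceeds_limit(epoch, limit):
    """True if any sample in any channel of the epoch exceeds +/- limit."""
    return any(abs(sample) > limit for channel in epoch for sample in channel)

def reject_noisy(epochs, limit):
    """Keep only the epochs whose amplitudes stay within +/- limit."""
    return [epoch for epoch in epochs if not exceeds_limit(epoch, limit)]

# Toy EEG data: two epochs, each with 2 channels x 4 samples (values in microvolts).
clean = [[1.0, -20.0, 35.0, 5.0], [0.0, 12.0, -8.0, 3.0]]
noisy = [[1.0, -20.0, 135.0, 5.0], [0.0, 12.0, -8.0, 3.0]]

kept = reject_noisy([clean, noisy], LIMITS["eeg_uV"])
print(len(kept))
```

In this toy case only the first epoch survives, because the second contains a 135 µV sample.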

(b) . Sensor-level analysis

After pre-processing the data, ERP and ERF responses were analysed separately for primes and targets. For both the ERP and ERF analyses, the single-trial data were epoched into time windows starting 100 ms before and ending 400 ms after stimulus onset. The epoched trials were baseline corrected in relation to the 100 ms pre-stimulus period. Based on grand averages across the experimental conditions, measured for acoustic and visual stimuli separately, clusters of four EEG and four MEG peak sensors indicating the strongest measured evoked response amplitudes above each hemisphere in the temporal and occipital locations were selected for sensor-level statistical analysis of category-specific effects. Individual average evoked response amplitudes were measured for each subject and condition in a 30 ms time window centred on the measured peak latencies in the grand averages. See the electronic supplementary material, table S9 for the exact gradiometers and EEG channels that were used for the analysis.
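The epoching, baseline correction and windowed amplitude measurement described above can be sketched as follows for a single channel. This is an illustrative reconstruction, not the authors' FieldTrip code: the 4 ms sample spacing follows from the 250 Hz rate after down-sampling, the 108 ms peak latency is the auditory N1 latency reported in table 1, and the toy signal and function names are hypothetical.

```python
SFREQ_HZ = 250                   # sampling rate after down-sampling (from the text)
STEP_MS = 1000 // SFREQ_HZ       # 4 ms between samples
TMIN_MS, TMAX_MS = -100, 400     # epoch limits relative to stimulus onset

def epoch_times():
    """Sample times of one epoch in ms, from TMIN_MS to TMAX_MS inclusive."""
    return list(range(TMIN_MS, TMAX_MS + 1, STEP_MS))

def baseline_correct(epoch, times):
    """Subtract the mean of the pre-stimulus samples (t < 0) from every sample."""
    pre = [v for v, t in zip(epoch, times) if t < 0]
    baseline = sum(pre) / len(pre)
    return [v - baseline for v in epoch]

def mean_amplitude(epoch, times, peak_ms, width_ms=30):
    """Mean amplitude in a window of width_ms centred on peak_ms."""
    half = width_ms / 2
    window = [v for v, t in zip(epoch, times) if peak_ms - half <= t <= peak_ms + half]
    return sum(window) / len(window)

times = epoch_times()
# Toy epoch: constant offset of 2.0 plus a 5.0 deflection between 96 and 120 ms.
raw = [2.0 + (5.0 if 96 <= t <= 120 else 0.0) for t in times]
corrected = baseline_correct(raw, times)
n1_amplitude = mean_amplitude(corrected, times, peak_ms=108)
print(len(times), n1_amplitude)
```

Baseline correction removes the constant offset, so the windowed mean reflects only the deflection around the assumed peak latency.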

(c) . Statistical analysis of event-related fields and event-related potentials

R statistical software package (version 1.1.423) was used to conduct a two-way analysis of variance (ANOVA) with the factors valence (positive, negative, neutral) and hemisphere (left, right). The dependent variables were P50, N1, P2 amplitudes for the acoustic primes and targets, and C1, P1 and N1 amplitudes for the visual primes and targets. For testing crossmodal congruency effects, an ANOVA model with the factors congruency (positive congruent, positive incongruent, negative congruent, negative incongruent) and hemisphere (left, right) was applied by inspecting the target amplitudes. The analyses were performed separately for each modality. Regarding the ERF components, only amplitudes of the gradiometers were analysed since they provide a better signal-to-noise ratio [57]. The signal from the MEG magnetometers was used for the subsequent source localization. Bonferroni corrected post-hoc tests were applied to identify significant main effects and interactions.
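The Bonferroni adjustment used for the post hoc tests can be illustrated with a minimal sketch. The p-values below are hypothetical and only stand in for the three pairwise comparisons among the valence levels; the original analysis was run in R, so this Python version is an illustration of the correction rule, not the authors' code.

```python
def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical uncorrected p-values for three pairwise comparisons
# (negative vs neutral, negative vs positive, positive vs neutral).
raw_p = [0.004, 0.020, 0.300]
adjusted = bonferroni(raw_p)
significant = [p < 0.05 for p in adjusted]
print(significant)
```

Note that a comparison with an uncorrected p of 0.020 no longer passes the 0.05 threshold once three comparisons are accounted for.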

(d) . Source reconstruction

Source space analysis on the MEG magnetometer ERFs was carried out using Statistical Parametric Mapping software (SPM8 version r6313, Wellcome Trust Centre for Neuroimaging, London, UK). Group level source statistics was performed with one-sample t-tests on the cluster-level with family-wise error correction at an alpha of 0.05. Furthermore, anatomical areas were estimated using the MNI to Harvard-Oxford conversion tool from the Yale BioImage Suite Package (Lacadie et al. [58]). Three-dimensional rendering of the source estimates was performed with MRIcroG (Rorden et al. [59]).

(e) . Statistical analysis of the affective assessment of the target stimuli

The per cent liking of the target stimuli was calculated for each affective priming condition for each of the 20 participants. Non-parametric statistics were applied since the liking ratings were not normally distributed. The Wilcoxon signed-rank test was applied to compare the average per cent liking between the positive and negative target stimuli and between the affectively congruent and incongruent target stimuli.
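A minimal sketch of the behavioural measures follows. It computes the per cent liking from binary responses and the rank sums underlying the Wilcoxon signed-rank test (zero differences dropped, tied differences given average ranks). The data are hypothetical, the function names are illustrative, and the p-value computation is deliberately omitted; an established routine such as `scipy.stats.wilcoxon` would normally be used for the full test.

```python
def percent_liking(responses):
    """responses: list of booleans, True meaning a 'Yes' (liked) button press."""
    return 100.0 * sum(responses) / len(responses)

def signed_rank_sums(x, y):
    """Return (W+, W-): rank sums of positive and negative paired differences."""
    diffs = sorted((a - b for a, b in zip(x, y) if a != b), key=abs)
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(diffs):
        j = i
        # Extend j over the run of differences with the same absolute value.
        while j + 1 < len(diffs) and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        average_rank = (i + j) / 2 + 1   # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus

# Hypothetical per cent liking for five participants in two conditions.
congruent = [70.0, 65.0, 80.0, 55.0, 60.0]
incongruent = [60.0, 66.0, 70.0, 50.0, 60.0]

liking = percent_liking([True, True, False, True])
w_plus, w_minus = signed_rank_sums(congruent, incongruent)
print(liking, w_plus, w_minus)
```

The smaller of the two rank sums is conventionally taken as the test statistic; here the differences mostly favour the congruent condition.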

4. Results

(a) . Differentiation of valence in early evoked responses

For the auditory primes, the N1 and P2 amplitudes were larger for the negative compared to the positive and neutral valence (figure 2 and table 1), as indicated by significant effects of valence and valence by hemisphere interactions. For the visual primes, the evoked response amplitudes did not differ significantly, except for a larger C1 amplitude measured above the left hemisphere for the positive compared to neutral valence (figure 2 and table 1). No further significant results were observed for the primes. See the electronic supplementary material, figure S3 for the equivalent gradiometer waveforms.

Figure 2.

Waveforms of acoustic and visual primes. Panels (a,b) show the EEG waveforms for the prime responses to the different valences of the auditory modality on the left and right hemispheres (acoustic primes). The auditory responses are based on four temporal channels in each hemisphere and the visual responses on four occipital channels, accordingly. Panels (c,d) contain the EEG waveforms for the prime responses to the different valences of the visual modality on the left and right hemispheres (visual primes). The grey-highlighted bars indicate the latency of the evoked responses, P50, N1 and P2 for audition, and C1, P1 and N1 for vision. Similarly, the time course of the latencies is colour-coded in the source localization brain images in each graph (larger figures of the source localization results with the corresponding labels of the anatomical regions can be found in the electronic supplementary material, figures S5–S6 and tables S10–S11). The x-axis indicates time in milliseconds (ms), and the y-axis indicates the evoked response in microvolts (µV) for EEG electrodes.

Table 1.

Statistical results of the evoked responses to the auditory and visual primes. (The table contains the statistically significant results for the evoked responses to the auditory and visual primes. The component / latency (ms) column indicates the time point where the global peak of the corresponding component was measured in reference to the stimulus onset in milliseconds. The results are presented separately for each identified component for EEG electrodes and MEG gradiometers (measurement). The statistical results are based on a two-way analysis of variance (ANOVA), where valence (neg, negative; neut, neutral; and pos, positive) and left hemisphere (LH) and right hemisphere (RH) were applied as two factors to test whether there are statistically significant differences in the evoked responses. A plus-symbol next to the valence type indicates the directionality of the component in reference to other valences. All significant main effects and interactions are Bonferroni corrected post hoc comparisons (factors: valence, or interaction: valence * hemisphere). (d.f., degrees of freedom; F, statistical F-test; p, probability of the observed effect; d, Cohen's d indicating the magnitude of the found effect; HFe, statistical test after Mauchly's test of sphericity.).)

| stimulus modality | component / latency (ms) | measurement | factors | d.f. | F | p-value | post hoc | post hoc p-value | d |
|---|---|---|---|---|---|---|---|---|---|
| audition | N1 / 108 | EEG | valence | 2, 36 | 9.15 | <0.001 | +neg −neut | <0.001 | 0.85 |
| | | | | | | | +neg −pos | <0.001 | 0.67 |
| | N1m / 108 | MEG | valence | 2, 32 | HFe 0.78 | <0.001 | +neg −neut | <0.001 | 0.87 |
| | | | | | | | +neg −pos | <0.001 | 0.98 |
| | P2 / 200 | EEG | valence * hemisphere | 2, 36 | 3.99 | 0.03 | +neg neut * LH | 0.002 | 1.12 |
| | | | | | | | +neg neut * RH | 0.01 | 0.94 |
| | P2m / 248 | MEG | valence * hemisphere | 2, 32 | 13.31 | <0.001 | +neg neut | 0.001 | 0.79 |
| vision | C1 / 52 | EEG | valence * hemisphere | 2, 36 | 5.86 | 0.006 | +neut −pos * LH | 0.03 | 0.83 |

(b) . Crossmodal congruency effects on evoked responses

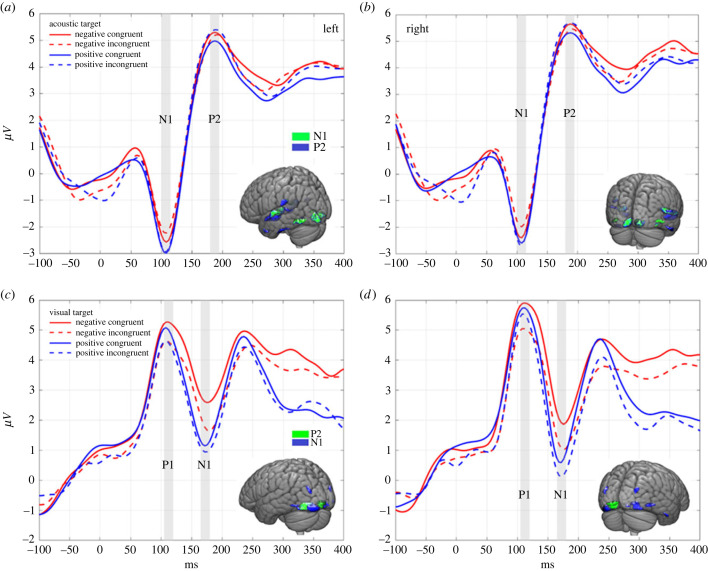

Effects of congruency and congruency by hemisphere interaction were observed from the ANOVA (figure 3 and table 2). For the visual targets, the P1 amplitudes were larger when the auditory prime valence was congruent compared to incongruent (figure 3 and table 2). Conversely, the visual target N1 amplitudes were larger when the auditory prime valence was incongruent compared to congruent (figure 3 and table 2). For the auditory targets, a larger P2m amplitude was observed for incongruent compared to congruent positive visual prime valence (figure 3 and table 2). No further significant results were observed for the targets. See the electronic supplementary material, figure S4 for the equivalent gradiometer waveforms.

Figure 3.

Acoustic and visual target responses. Panels (a,b) contain the EEG waveforms for the auditory target responses on the left and right hemispheres (acoustic targets). Panels (c,d) contain the EEG waveforms for the visual target responses for left and right hemispheres (visual targets). The grey-highlighted bars indicate the latency of the recognized evoked responses, N1 and P2 for audition, and P1 and N1 for vision. Similarly, the time course of the latencies is colour-coded in the source localization brain images in each graph (larger figures of the source localization results with the corresponding labels of the anatomical regions can be found in the electronic supplementary material, figures S5–S6 and tables S10–S11). The x-axis indicates time in milliseconds (ms), and the y-axis indicates the amplitude of the evoked response in microvolts (µV) for the EEG electrodes.

Table 2.

Statistical results of the crossmodal effects. (The table shows the statistically significant results for the evoked responses to auditory and visual targets measured with EEG and MEG. The component / latency (ms) column indicates the time point where the global peak of the corresponding component was measured in reference to the stimulus onset in milliseconds. The results are presented separately for each identified component for EEG electrodes and MEG gradiometers (measurement). The results are based on a two-way analysis of variance (ANOVA), where left and right hemisphere (LH and RH) and congruency (neg cong, negative congruency; neg incong, negative incongruency; pos cong, positive congruency; pos incong, positive incongruency) were applied as factors to test whether there are statistically significant differences in the amplitudes of the evoked responses. A plus symbol next to the congruency type indicates the directionality of the component in reference to other congruencies. All significant main effects and interactions are Bonferroni corrected post hoc comparisons. (d.f., degrees of freedom; F, statistical F-test; p, probability of the observed effect; d, Cohen's d indicating the magnitude of the found effect.).)

| stimulus modality | component / latency (ms) | measurement | factors | d.f. | F | p-value | post hoc | post hoc p-value | d |
|---|---|---|---|---|---|---|---|---|---|
| audition | P2m / 204 | MEG | congruency * RH | 3, 48 | 5.17 | 0.04 | +pos cong −neg incong | 0.05 | 0.07 |
| | | | | | | | +pos incong −pos cong | 0.02 | 0.31 |
| visual | P1 / 108 | EEG | congruency | 3, 54 | 4.33 | 0.01 | +neg cong −neg incong | <0.001 | 0.66 |
| | | | | | | | +neg cong −pos cong | 0.02 | 0.52 |
| | | | | | | | +pos incong −neg incong | 0.01 | 0.53 |
| visual | N1 / 188 | EEG | congruency | 3, 54 | 9.48 | <0.001 | +neg incong −neg cong | <0.001 | 0.71 |
| | | | | | | | +neg cong −pos cong | <0.001 | 1.1 |
| | | | | | | | +neg cong −pos incong | 0.01 | 0.5 |
| | | | | | | | +neg incong −pos cong | 0.04 | 0.46 |
| | | | | | | | +pos incong −pos cong | 0.03 | 0.48 |
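The component / latency column in the tables refers to the time point of the global peak of each component relative to stimulus onset. A minimal sketch of such a peak search is given below. The search windows, the sample values and the function name `peak_latency` are illustrative assumptions and are not taken from the article.

```python
def peak_latency(times_ms, amplitudes, window, polarity):
    """Return (latency_ms, amplitude) of the largest deflection of the given
    polarity ("positive" or "negative") inside an inclusive time window."""
    lo, hi = window
    candidates = [(t, a) for t, a in zip(times_ms, amplitudes) if lo <= t <= hi]
    if not candidates:
        raise ValueError("no samples fall inside the search window")
    if polarity not in ("positive", "negative"):
        raise ValueError("polarity must be 'positive' or 'negative'")
    pick = max if polarity == "positive" else min
    # Compare on amplitude only; the latency is carried along in the tuple
    return pick(candidates, key=lambda pair: pair[1])


# Illustrative (made-up) grand-average waveform sampled every 50 ms
times = [0, 50, 100, 150, 200, 250]
microvolts = [0.0, 0.5, -2.0, -0.5, 1.5, 0.3]
n1 = peak_latency(times, microvolts, (80, 150), "negative")
p2 = peak_latency(times, microvolts, (150, 250), "positive")
```

In practice the window would be chosen per component and modality, and the amplitude at the identified latency would then enter the ANOVA.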

(c) . Source reconstruction

The evoked responses to the acoustic stimuli were estimated to originate mainly in the left and right primary auditory cortices, as well as in the left middle and superior temporal gyri, and the right temporal and frontal poles (electronic supplementary material, figure S5 and table S10). The visual primes and targets demonstrated activity in the left and right visual association areas, in particular in that of the left hemisphere (electronic supplementary material, figure S6 and table S11). Also, in response to the visual primes, the left temporal pole and the right inferior temporal gyrus were activated (electronic supplementary material, figure S6 and table S11). As expected, the activated areas in response to the targets were anatomically more distributed than in response to the primes, activating next to the prime response areas also areas from the target sensory modality. In addition, in response to the targets of both modalities the right and left fusiform gyri were additional estimated sources. Also, the insular cortex was activated during the P2 latency of the acoustic targets (electronic supplementary material, figure S5 and table S10).

(d) . Affective assessment of the target stimuli

As expected, the auditory positive targets (M = 93%) received significantly more liking ratings than the negative auditory targets (M = 31%), Z20 = 3.9, p < 0.001. Also, among the visual targets the positive ones (M = 96%) obtained significantly more liking ratings than the negative ones (M = 15%), Z20 = 3.7, p < 0.001. The liking ratings consistently tended to shift towards chance level (50%) in all affectively incongruent compared to congruent conditions; however, there was no statistically significant effect of affective congruency (Mdiff = 2–14%, p = 0.083–0.512). All p-values are Bonferroni corrected.
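The Z statistics above are consistent with a paired non-parametric comparison of liking percentages. As a sketch only, and assuming a Wilcoxon signed-rank test with a normal approximation (the test used is not named in this section), the statistic could be computed as follows. The function name `wilcoxon_signed_rank_z` and the data are hypothetical, and no tie or continuity correction is applied to the variance.

```python
from math import sqrt


def wilcoxon_signed_rank_z(x, y):
    """Normal-approximation Z for the Wilcoxon signed-rank test on paired data.
    Zero differences are dropped; tied absolute differences get average ranks."""
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the run of tied absolute differences
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    sd = sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w_plus - expected) / sd


# Illustrative (made-up) per-participant liking percentages
liked_positive = [90, 95, 88, 92, 97]
liked_negative = [30, 33, 29, 35, 31]
z = wilcoxon_signed_rank_z(liked_positive, liked_negative)
```

A positive Z indicates that the first condition was liked more often than the second across participants.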

5. Discussion

In line with some PP views about experience and evaluation of aesthetic stimuli, we studied whether semantically meaningful (representative) primes would top-down modulate the responses to abstract (non-representative) targets when the prime-target pairs were considered as positively and negatively congruent or incongruent, correspondingly. Overall, the target-evoked responses in both modalities showed clear N1 and P2 (in audition) and P1 and N1 (in vision) evoked responses. The visual modality indicated clear modulatory effects of congruency during N1 and P1 responses. Specifically, during the visual N1 the negative congruency and incongruency differed from one another, indicating an increased attention towards negative affect. This can be interpreted such that the previously heard affectively representative stimuli were effectively drawn into top-down predicting the subsequent aesthetic visual targets, thus demonstrating not only a crossmodal affective priming, but also a cognitive-affective feedforward effect from non-aesthetic representative affect to aesthetic responses. In turn, the neural responses to the auditory targets, i.e. the musical chords, were modulated only during P2-latency, which is relatively late in a priming context, indicating that the visual affective primes were slower in assigning top-down predictions to abstract chords.

Traditionally, regardless of the sensory modality, relatively early components such as the N1, P100 and P200 have not been linked to affective processing, because they emerge so rapidly after an event onset. Rather, affect has been attributed to later responses, such as the LPP and the N400 [60]. Yet, this study indicates that, especially in the auditory modality, the earlier components are also responsive to affective modulation in reference to crossmodal representative primes (such as landscapes or cute animals, for positive affect). This is in line with the hypothesis provided by PP theory in relation to emotion. According to this theory, all emotional experience derives from the brain's effort to attribute causes to changes of internal physiological states and to minimize the prediction error in relation to expectations about one's own internal state in response to the world, originating from past experience. In this context, the PP account becomes even more relevant for understanding the aesthetic experience of artistic stimuli (including music): it has been hypothesized that artists intentionally create objects referring in ambivalent or unclear ways to past meanings in order to disrupt predictions in an optimal way, generating surprise, interest and hence, reward and motivation to engage further [61,62].

Our results can thus be interpreted to support a theoretical understanding of affect as a crucial part of perception already from the initial moments onwards [63]. Also, the results indicate that a transfer effect from representative (IAPS and IADS primes) to non-representative aesthetic stimuli (musical chords or black and white geometric patterns) takes place crossmodally, in particular from audition to vision. As this transfer takes place in a rather early perceptual stage, it can mean that apart from aiding the processing of evolutionarily evocative stimuli, such as distress vocalizations, swift affective processing also encodes less evocative affective information with different levels of semantic representation, including aesthetic affect too. These results support the idea that affect formation is understood as an emergent process, starting already pre-consciously, such that a valid self-report about one's emotional state reflects only a fraction of the emergent process of an emotion [64,65], and thus potentially highlighting the role of the very early affect elicitation and its incorporation into brain predictive processes from non-aesthetic affect to aesthetic affect.

To compare the affective chronometry in response to the primes within the sensory modalities, in the auditory modality a P50, N1/m and P2/m and in the visual modality a C1/C1m, P1/P1m and N1/N1m were analysed. Starting in the latency of the first 50 ms, the auditory modality clearly responded to the valence variation of the acoustic stimuli, indicated by a significant main effect of all three valences being present in the N1m/N1. The same trend continued in the P2/P2m, such that the auditory modality continued to be modulated by valence, with negative valence causing a higher peak. In comparison, the visual modality remained unresponsive to the modulation. Although one study has shown that the auditory P50 can be modulated by a distressed affective vocalization [66], we did not replicate this finding. Indeed, traditionally the only cognitive factor known to modulate the auditory P50 is attention [60], which might explain why the distress vocalization has been shown to cause a difference, while the more complex stimuli that were used here did not. The corresponding visual C1 is less clear in its waveform, which is rather typical since the component is often either missing or shows mixed polarities while being entangled with the subsequent P1 component [60], thus remaining an unreliable indication of an early response to the affective modulation. A potential reason for the difference between the responsiveness of the visual and auditory systems might lie in their anatomical-functional architecture and related processing stages. For example, it is known that the visual components in the latency of 100–250 ms are primarily influenced by stimulus features, such as location and contrast [60], aspects which were not controlled for in this study. Nevertheless, the components have also been reported to be influenced by arousal and visual selective attention [67]. Yet, since the arousal was aimed at being as similar as possible across the stimuli, no arousal-based modulation could take place with the stimuli that were applied in this study. Most importantly, during the visual target responses, the components listed above did demonstrate modulatory effects depending on the congruency, which can mean that auditorily induced affect directed the prediction of the following visual aesthetic affect. This finding should be explored further while controlling for the visual sensory features.

Regarding differences in the responsivity of the hemispheres, we found no clear sensory modality or valence-dependent dominance. The auditory negative left hemispheric interaction effect (P2 and P2m) of affect modulation is in line with the auditory P2 separating emotional from neutral vocalization first reported in 2008 [68], and also being related to the anticipation and perception of negative emotional sounds [69]. Instead, a clear main effect of congruency in the auditory modality during the P2 indicates that the right hemisphere differentiates between positive congruency and incongruency. This could mean that, in particular, the right hemisphere decodes the discrepancy related to positive affect. Nevertheless, this finding should be interpreted with caution as the P2 effect of lateralization of congruency would be fairly late to be caused by congruency alone. Indeed, perhaps clearer hemispheric effects could be found if the sensors were also inspected in reference to frontal and posterior sites, and possibly separating more nuanced emotions by linking them to perceptual and emotion-cognitive processes instead of merely inspecting differences in perception or influence of valence [42,68,70].

From a neuroscientific perspective, the classical view is that in both visual and auditory systems, basic physical features of the stimuli are processed at the lower levels of the pathway, in primary sensory regions, before constructing a coherent sensory integration and meaning formation in prefrontal areas through ventral and dorsal routes [71–75]. By contrast, the PP account implies a hierarchical dynamic interaction between various processing stages, with top-down neuronal signals, e.g. from prefrontal cortex, informing and weighting the precision of bottom-up sensory information in continuously updating feedback and feedforward circuits [4]. To provide an example, the individual visual features registered as an image on the retina acquire belief-like meanings coming from both the visual cortices and from the prefrontal cortex conveying memories and attributing meanings [76,77]. Although they do not provide further anatomical-temporal insight into the proposed multiple feedforward-feedback stages governing PP, the congruency effects detected here on the neuronal level are in line with the PP idea that priming effects have the potential to be task-relevant from the perspective of the individual. That is, rather than being mere memory traces or stimulus-processing effects, the effects detected here can be an indication of the aesthetic targets tapping into the potentially semi-hardwired emotionally evocative responses (a term used to describe a relatively stable conceptual architecture based on which an individual rapidly processes frequently encountered meaningful stimuli) induced by the primes (universally recognized emotional depictions), and thus guiding moment-to-moment processing [6].

The source localization results presented here firstly support the account that crossmodal emotion detection is distributed already during the early responses to the corresponding cortical areas. Moreover, they are in line with the proposal by Rauss & Pourtois [6] that the priming paradigm might serve to test PP as the effects of congruency can be detected already in the very early perceptual process on the neuronal level. However, only the acoustic stimuli indicated activity in the primary auditory cortex, while the visual stimuli activated the visual association areas. A further difference between the modalities was the activity in the left insula during the acoustic targets. The functioning of the insular cortex is not fully understood [78]; yet, the anterior part of the insular cortex has been linked to emotional awareness and the conscious experience of emotions [79,80], as well as to interoceptive predictive coding in the context of emotions [5]. Furthermore, targets of both sensory modalities activated the fusiform gyrus, which has been reported to respond to high-level visual processing, such as recognition of faces and objects [81]. Hence, our source localization findings align with what could have been expected based on theories of emotions that rely on interoceptive predictions [5], expanding those theories into the realm of aesthetic processing. Informed by the conceptualization of bottom-up and top-down processes in PP [6], we further highlight the differences in the mechanisms governing how top-down predictions related to priming might guide sensory processing of stimuli in the two sensory modalities, the auditory channel possibly being more affected by interoceptive processes at a lower hierarchical level than the visual one.

(a) . Limitations of the study

The results indicated significant affective congruency effects in the early cortical responses but only non-significant affective congruency tendencies in the liking ratings. This divergence between the neural and behavioural responses might be owing to the different perceptual processing stages. The early cortical responses indicate rather early-stage neural processing, whereas the liking ratings are influenced by later processing stages of evaluation. In support of this interpretation, a recent study using the same stimuli and measuring behavioural response times indicated that the response times were longer for affective incongruency compared to affective congruency [46]. In a laboratory, with multiple repetitions of the stimuli, one might argue that affect induction cannot be assumed to be as authentic as in a naturalistic environment; yet these methodological trade-offs are hard to circumvent in MEG or EEG studies. The sample size of our study is aligned with the number of participants in comparable previous M/EEG studies on crossmodal affective congruency (e.g. [69,82]). Regardless of the obtained robust effect sizes, the interpretation of the results should be made in reference to the sample size, which ideally could be larger, or alternatively the study could be replicated with a larger sample size. Nevertheless, we believe that our affective priming protocol can shed light on how the brain incorporates affective information and uses it to guide the predictions about the affective value of upcoming inputs.

6. Conclusion

Taken together, the results indicate that top-down modulation of aesthetic responses by semantically meaningful emotional primes takes place in a crossmodal situation. Such modulation is visible in particular in the early brain responses, being aligned with the latest PP accounts in the aesthetic domain. Moreover, the top-down modulation of aesthetic responses by affective primes, namely the incorporation of affect into predicting aesthetic responses, depends on the sensory modalities involved.

Acknowledgements

Also, we would like to thank Imre Lahdelma, Jose López Santacruz and David Quiroga-Martinez for their assistance during the data collection. Finally, we would like to thank Markus Butz for commenting on the manuscript.

Ethics

The study was conducted according to the Helsinki Declaration II, and it was granted ethical approval of the Ethics Committee of Central Region Denmark (55441).

Data accessibility

The contribution has been published in PsychArchives and assigned the following Persistent Identifier(s): The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13581); supplementary material for: The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13582); MEG and EEG data for: The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13580); starting on the 17 December 2023.

Data are also provided in electronic supplementary material [83].

Declaration of AI use

We have not used AI-assisted technologies in creating this article.

Authors' contributions

M.T.: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, visualization, writing—original draft, writing—review and editing; N.T.H.: data curation, formal analysis, methodology, software, visualization, writing—original draft, writing—review and editing; Y.S.: methodology, resources; P.V.: funding acquisition, supervision; E.B.: conceptualization, funding acquisition, methodology, project administration, resources, supervision, writing—original draft, writing—review and editing; T.J.: conceptualization, methodology, supervision, writing—original draft, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

This study was supported by the Center for Music in the Brain, funded by the Danish National Research Foundation (grant no. DNRF117). Also, we would like to thank Suvi Saarikallio and her project ‘Affect from Art’ (generously funded by Kone Foundation [grant no. 32881-9]) for supporting the initiation of this study financially as well as thematically and the University of Jyväskylä for granting a mobility grant for the first author for the duration of the data collection.

References

- 1.Clark A. 2013. EDITOR'S NOTE Whatever next ? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181-253. ( 10.1017/S0140525X12000477) [DOI] [PubMed] [Google Scholar]

- 2.Friston KJ, Daunizeau J, Kiebel SJ. 2009. Reinforcement learning or active inference ? PLoS ONE 4, 7. ( 10.1371/journal.pone.0006421) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Hutchinson JB, Barrett LF. 2019. The power of predictions : an emerging paradigm for psychological research. Curr. Dir. Psychol. Sci. 28, 280-291. ( 10.1177/0963721419831992) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Friston K. 2005. A theory of cortical responses. Phil. Trans. R. Soc. B 360, 815-836. ( 10.1098/rstb.2005.1622) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Seth AK. 2013. Interoceptive inference, emotion, and the embodied self. Trends Cogn. Sci. 17, 565-573. ( 10.1016/j.tics.2013.09.007) [DOI] [PubMed] [Google Scholar]

- 6.Rauss K, Pourtois G. 2013. What is bottom-up and what is top-down in predictive coding. Front. Psychol. 4, 1-8. ( 10.3389/fpsyg.2013.00276) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jacobsen T, Beudt S. 2017. Domain generality and domain specificity in aesthetic appreciation. New Ideas Psychol. 47, 97-102. ( 10.1016/j.newideapsych.2017.03.008) [DOI] [Google Scholar]

- 8.Brattico P, Brattico E, Vuust P. 2017. Global sensory qualities and aesthetic experience in music. Front. Neurosci. 11, 1-13. ( 10.3389/fnins.2017.00159) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Clewis RR. 2021. Why the sublime is aesthetic awe. J. Aesthetics Criticism 79, 301-314. ( 10.1093/jaac/kpab023) [DOI] [Google Scholar]

- 10.Jacobsen T, Schubotz RI, Ho L, Yves D. 2006. Brain correlates of aesthetic judgment of beauty. Neuroimage 29, 276-285. ( 10.1016/j.neuroimage.2005.07.010) [DOI] [PubMed] [Google Scholar]

- 11.Konečni VJ. 2005. The aesthetic trinity: awe, being moved, thrills. Bull. Psychol. Arts 5, 27-44. [Google Scholar]

- 12.Sun M, Ying H. 2023. Color's perceptual diversity and categorical harmony improve aesthetic experience. Psychol. Aesthetics, Creativity Arts ( 10.1037/aca0000583) [DOI] [Google Scholar]

- 13.Kesner L. 2014. The predictive mind and the experience of visual art work. Front. Psychol. 5, 1-13. ( 10.3389/fpsyg.2014.01417) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Vuust P, Dietz MJ, Witek M, Kringelbach ML. 2018. Now you hear it: a predictive coding model for understanding rhythmic incongruity. Ann. N Y Acad. Sci. 1423, 19-29. ( 10.1111/nyas.13622) [DOI] [PubMed] [Google Scholar]

- 15.Vuust P, Heggli OA, Friston KJ, Kringelbach ML. 2022. Music in the brain. Nat. Rev. Neurosci. 23, 287-305. ( 10.1038/s41583-022-00578-5) [DOI] [PubMed] [Google Scholar]

- 16.Brattico E. 2022. The empirical aesthetics of music. In The Oxford handbook of empirical aesthetics (eds Nadal M, Vartanian O), pp. 573-604. Oxford, UK: Oxford University Press. ( 10.1093/oxfordhb/9780198824350.013.26) [DOI] [Google Scholar]

- 17.Vander Elst OF, Vuust P, Kringelbach ML. 2021. Sweet anticipation and positive emotions in music, groove, and dance. Curr. Opin. Behav. Sci. 39, 79-84. ( 10.1016/j.cobeha.2021.02.016) [DOI] [Google Scholar]

- 18.Starr GG. 2015. Feeling beauty: the neuroscience of aesthetic experience. New York, NY: MIT Press. [Google Scholar]

- 19.Broughton MC, Dimmick J, Dean RT. 2021. Affective and cognitive responses to musical performances of early 20th century classical solo piano compositions. Music Percept. 38, 245-266. ( 10.1525/mp.2021.38.3.245) [DOI] [Google Scholar]

- 20.Antonietti A, Cocomazzi D, Iannello P. 2009. Looking at the audience improves music appreciation. J. Nonverbal Behav. 33, 89-106. ( 10.1007/s10919-008-0062-x) [DOI] [Google Scholar]

- 21.Spence C, Senkowski D, Röder B. 2009. Editorial: crossmodal processing. Exp. Brain Res. 198, 107-111. ( 10.1007/s00221-009-1973-4) [DOI] [PubMed] [Google Scholar]

- 22.Brattico E, Bogert B, Jacobsen T. 2013. Toward a neural chronometry for the aesthetic experience of music. Front. Psychol. 4, 1-21. ( 10.3389/fpsyg.2013.00206) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Changeux JP, Nalbantian S. 2017. A neurobiological theory of aesthetic experience and creativity. In Rencontres, pp. 31-48. Paris, France: Classiques Garnier. [Google Scholar]

- 24.Reybrouck M, Vuust P, Brattico E. 2018. Brain connectivity networks and the aesthetic experience of music. Brain Sci. 8, 107. ( 10.3390/brainsci8060107) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Bertamini M, Makin ADJ. 2014. Brain activity in response to visual symmetry. Symmetry 6, 975-996. ( 10.3390/sym6040975) [DOI] [Google Scholar]

- 26.Jacobsen T, Höfel L. 2002. Aesthetic judgement of novel graphic patterns: analyses of individual judgements. Percept. Mot. Skills 95, 755-766. ( 10.2466/pms.2002.95.3.755) [DOI] [PubMed] [Google Scholar]

- 27.Jacobsen T, Höfel LEA. 2003. Descriptive and evaluative judgment processes: behavioral and electrophysiological indices of processing symmetry and aesthetics. Cognitive, Affective Behav. Neurosci. 3, 289-299. ( 10.3758/CABN.3.4.289) [DOI] [PubMed] [Google Scholar]

- 28.Lahdelma I, Eerola T. 2016. Mild dissonance preferred over consonance in single chord perception. I-Perception 7, 2041669516655812. ( 10.1177/2041669516655812) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Lahdelma I, Eerola T. 2016. Single chords convey distinct emotional qualities to both naive and expert listeners. Psychol. Music 44, 37-54. ( 10.1177/0305735614552006) [DOI] [Google Scholar]

- 30.Leder H, Tinio PPL, Jacobsen T, Rosenberg R. 2019. Symmetry is not a universal law of beauty. Emp. Stud. Arts 37, 104-114. ( 10.1177/0276237418777941) [DOI] [Google Scholar]

- 31.Sollberger B, Rebe R, Eckstein D. 2003. Musical chords as affective priming context in a word-evaluation task. Music Percept. Interdiscip. J. 20, 263-282. ( 10.1525/mp.2003.20.3.263) [DOI] [Google Scholar]

- 32.Di Stefano N, Vuust P, Brattico E. 2022. Consonance and dissonance perception. A critical review of the historical sources, multidisciplinary findings, and main hypotheses. Phys. Life Rev. 43, 273-304. ( 10.1016/j.plrev.2022.10.004) [DOI] [PubMed] [Google Scholar]

- 33.Juslin PN, Västfjäll D. 2008. Emotional responses to music: the need to consider underlying mechanisms. Behav. Brain Sci. 31, 559-575. ( 10.1017/S0140525X08005293) [DOI] [PubMed] [Google Scholar]

- 34.Brattico E, Jacobsen T, De Baene W, Nakai N, Tervaniemi M. 2003. Electrical brain responses to descriptive versus evaluative judgments of music. Ann. N Y Acad. Sci. 999, 155-157. ( 10.1196/annals.1284.018) [DOI] [PubMed] [Google Scholar]

- 35.Höfel L, Jacobsen T. 2007. Electrophysiological indices of processing aesthetics: spontaneous or intentional processes? Int. J. Psychophysiol. 65, 20-31. ( 10.1016/j.ijpsycho.2007.02.007) [DOI] [PubMed] [Google Scholar]

- 36.Makin ADJ, Rampone G, Pecchinenda A, Bertamini M. 2013. Electrophysiological responses to visuospatial regularity. Psychophysiology 50, 1045-1055. ( 10.1111/psyp.12082) [DOI] [PubMed] [Google Scholar]

- 37.Rampone G, Makin ADJ, Tatlidil S, Bertamini M. 2019. NeuroImage representation of symmetry in the extrastriate visual cortex from temporal integration of parts: an EEG / ERP study. Neuroimage 193, 214-230. ( 10.1016/j.neuroimage.2019.03.007) [DOI] [PubMed] [Google Scholar]

- 38.Brattico E, Jacobsen T, De Baene W, Glerean E, Tervaniemi M. 2010. Cognitive versus affective listening modes and judgments of music - an ERP study. Biol. Psychol. 85, 393-409. ( 10.1016/j.biopsycho.2010.08.014) [DOI] [PubMed] [Google Scholar]

- 39.Armitage J, Eerola T. 2022. Cross-modal transfer of valence or arousal from music to word targets in affective priming? Auditory Percept. Cogn. 5, 192-210. ( 10.1080/25742442.2022.2087451) [DOI] [Google Scholar]

- 40.Timmers R, Crook H. 2014. Affective priming in music listening: emotions as a source of musical expectation. Music Percept. Interdiscip. J. 31, 470-484. ( 10.1525/mp.2014.31.5.470) [DOI] [Google Scholar]

- 41.Steinbeis N, Koelsch S. 2011. Affective priming effects of musical sounds on the processing of word meaning. J. Cogn. Neurosci. 23, 604-621. ( 10.1162/jocn.2009.21383) [DOI] [PubMed] [Google Scholar]

- 42.Demaree HA, Everhart DE, Youngstrom EA, Harrison DW. 2005. Brain lateralization of emotional processing: historical roots and a future incorporating ‘dominance’. Behav. Cogn. Neurosci. Rev. 4, 3-20. ( 10.1177/1534582305276837) [DOI] [PubMed] [Google Scholar]

- 43.Ehrlichman H. 1987. Hemispheric asymmetry and positive-negative affect. In Duality and the unity of the brain (ed. Ottoson D), Wenner-Gren Center International Symposium Series, vol 47, pp. 37-69. Boston, MA: Springer. [Google Scholar]

- 44.Silberman EK, Weingartner H. 1986. Hemispheric lateralization of functions related to emotion. Brain Cogn. 353, 322-353. ( 10.1016/0278-2626(86)90035-7) [DOI] [PubMed] [Google Scholar]

- 45.Davidson RJ. 1984. Affect, cognition, and hemispheric specialization. In Emotion, cognition, and behavior (eds Izard CE, Kagan J, Zajonc R), pp. 320-365. Cambridge, UK: Cambridge University Press. [Google Scholar]

- 46.Tiihonen M, Jacobsen T, Haumann NT, Saarikallio S, Brattico E. 2022. I know what I like when I see it : likability is distinct from pleasantness since early stages of multimodal emotion evaluation. PLoS ONE 17, 1-16. ( 10.1371/journal.pone.0274556) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Fazio RH. 2001. On the automatic activation of associated evaluations: an overview. Cogn. Emotion 15, 115-141. ( 10.1080/0269993004200024) [DOI] [Google Scholar]

- 48.Bradley MM, Lang PJ. 2000. Affective reactions to acoustic stimuli. Psychophysiology 37, 204-215. ( 10.1111/1469-8986.3720204) [DOI] [PubMed] [Google Scholar]

- 49.Branco D, Gonçalves ÓF, Badia SBI. 2023. A systematic review of international affective picture system (IAPS) around the World. Sensors 23, 3866. ( 10.3390/s23083866) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Gerdes ABM, Wieser MJ, Alpers GW. 2014. Emotional pictures and sounds: a review of multimodal interactions of emotion cues in multiple domains. Front. Psychol. 5, 1-13. ( 10.3389/fpsyg.2014.01351) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Brattico E. 2015. From pleasure to liking and back: bottom-up and top-down neural routes to the aesthetic enjoyment of music. Art, Aesthetics Brain 2013, 303-318. ( 10.1093/acprof:oso/9780199670000.003.0015) [DOI] [Google Scholar]

- 52.Lang PJ, Bradley MM, Cuthbert BN. 2008. International affective picture system (IAPS): affective ratings of pictures and instruction manual. Technical report A-8. Gainesville, FL: University of Florida. [Google Scholar]

- 53.Bradley MM, Lang PJ. 2007. The International affective digitized sounds (2nd edn; IADS-2): affective ratings of sounds and instruction manual. Technical report B-3. Gainesville, Fl: University of Florida. [Google Scholar]

- 54.Audacity Team. 2020. Audacity. See https://www.audacityteam.org/.

- 55.Taulu, Simola. 2006. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys. Med. Biol. 51, 1759–1768. ( 10.1088/0031-9155/51/7/008). [DOI] [PubMed]

- 56.Oostenveld R. 2011. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011, 1–9. ( 10.1155/2011/156869) [DOI] [PMC free article] [PubMed]

- 57. Haumann NT, Parkkonen L, Kliuchko M, Vuust P, Brattico E. 2016. Comparing the performance of popular MEG/EEG artifact correction methods in an evoked-response study. Comput. Intell. Neurosci. 2016, 1–11. (doi:10.1155/2016/7489108)

- 58. Lacadie CM, Fulbright RK, Rajeevan N, Constable T, Papademetris X. 2009. More accurate Talairach coordinates for neuroimaging using nonlinear registration. Neuroimage 42, 717–725. (doi:10.1016/j.neuroimage.2008.04.240)

- 59. Rorden C, Karnath H-O, Bonilha L. 2007. Improving lesion-symptom mapping. J. Cogn. Neurosci. 19, 1081–1088. (doi:10.1162/jocn.2007.19.7.1081)

- 60. Luck SJ. 2014. An introduction to the event-related potential technique, 2nd edn. Cambridge, MA: The MIT Press.

- 61. Moors A, Van de Cruys S, Pourtois G. 2021. Comparison of the determinants for positive and negative affect proposed by appraisal theories, goal-directed theories, and predictive processing theories. Curr. Opin. Behav. Sci. 39, 147–152. (doi:10.1016/j.cobeha.2021.03.015)

- 62. Van de Cruys S, Chamberlain R, Wagemans J. 2017. Tuning in to art: a predictive processing account of negative emotion in art. Behav. Brain Sci. 40, e377. (doi:10.1017/S0140525X17001868)

- 63. Pessoa L. 2013. The cognitive-emotional brain. Cambridge, MA: The MIT Press.

- 64. Lewis MD. 2000. Emotional self-organization on three time scales. In Emotion, development, and self-organization. Dynamic systems approaches to emotional development (eds Lewis MD, Granic I), pp. 37–69, 1st edn. Cambridge, UK: Cambridge University Press.

- 65. Scherer KR. 2009. Emotions are emergent processes: they require a dynamic computational architecture. Phil. Trans. R. Soc. B 364, 3459–3474. (doi:10.1098/rstb.2009.0141)

- 66. Parsons CE, Young KS, Joensson M, Brattico E, Hyam JA, Stein A, Green AL, Aziz TZ, Kringelbach ML. 2013. Ready for action: a role for the human midbrain in responding to infant vocalizations. Social Cogn. Affective Neurosci. 9, 977–984. (doi:10.1093/scan/nst076)

- 67. Vogel EK, Luck SJ. 2000. The visual N1 component as an index of a discrimination process. Psychophysiology 37, 190–203. (doi:10.1111/1469-8986.3720190)

- 68. Paulmann S, Kotz SA. 2008. Early emotional prosody perception based on different speaker voices. Neuroreport 19, 209–213. (doi:10.1097/WNR.0b013e3282f454db)

- 69. Yokosawa K, Pamilo S, Hirvenkari L, Hari R, Pihko E. 2013. Activation of auditory cortex by anticipating and hearing emotional sounds: an MEG study. PLoS ONE 8, e80284. (doi:10.1371/journal.pone.0080284)

- 70. Kotz SA, Paulmann S. 2007. When emotional prosody and semantics dance cheek to cheek: ERP evidence. Brain Res. 1151, 107–118. (doi:10.1016/j.brainres.2007.03.015)

- 71. Milner AD. 2012. Is visual processing in the dorsal stream accessible to consciousness? Proc. R. Soc. B 279, 2289–2298. (doi:10.1098/rspb.2011.2663)

- 72. Nelken I. 2008. Processing of complex sounds in the auditory system. Curr. Opin. Neurobiol. 18, 413–417. (doi:10.1016/j.conb.2008.08.014)

- 73. Rauschecker JP. 2015. Auditory and visual cortex of primates: a comparison of two sensory systems. Eur. J. Neurosci. 41, 579–585. (doi:10.1111/ejn.12844)

- 74. Rauschecker JP, Tian B. 2000. Mechanisms and streams for processing of ‘what’ and ‘where’ in auditory cortex. Proc. Natl Acad. Sci. USA 97, 11 800–11 806. (doi:10.1073/pnas.97.22.11800)

- 75. Wessinger CM, VanMeter J, Tian B, Van Lare J, Pekar J, Rauschecker JP. 2001. Hierarchical organization of the human auditory cortex revealed by functional magnetic resonance imaging. J. Cogn. Neurosci. 13, 1–7. (doi:10.1162/089892901564108)

- 76. Albright TD. 2012. On the perception of probable things: neural substrates of associative memory, imagery, and perception. Neuron 74, 227–245. (doi:10.1016/j.neuron.2012.04.001)

- 77. Seth AK, Friston KJ. 2016. Active interoceptive inference and the emotional brain. Phil. Trans. R. Soc. B 371, 20160007. (doi:10.1098/rstb.2016.0007)

- 78. Stephani C, Fernandez-Baca Vaca G, Maciunas R, Koubeissi M, Lüders HO. 2011. Functional neuroanatomy of the insular lobe. Brain Struct. Funct. 216, 137–149. (doi:10.1007/s00429-010-0296-3)

- 79. Critchley HD, Wiens S, Rotshtein P, Öhman A, Dolan RJ. 2004. Neural systems supporting interoceptive awareness. Nat. Neurosci. 7, 189–195. (doi:10.1038/nn1176)

- 80. Gu X, Hof PR, Friston KJ, Fan J. 2013. Anterior insular cortex and emotional awareness. J. Comparat. Neurol. 521, 3371–3388. (doi:10.1002/cne.23368)

- 81. Purves D, Augustine GJ, Fitzpatrick D, Hall WC, LaMantia A-S, White LE. 2012. Association cortex and cognition. In Neuroscience, 5th edn (eds Purves D, Augustine GJ, Fitzpatrick D, Hall WC, LaMantia A-S, White LE), pp. 587–624. Sunderland, MA: Sinauer Associates.

- 82. Wang X, Guo X, Chen L, Liu Y, Goldberg ME, Xu H. 2016. Auditory to visual cross-modal adaptation for emotion: psychophysical and neural correlates. Cereb. Cortex 321, 1337–1346. (doi:10.1093/cercor/bhv321)

- 83. Tiihonen M, Haumann NT, Shtyrov Y, Vuust P, Jacobsen T, Brattico E. 2023. The impact of crossmodal predictions on the neural processing of aesthetic stimuli. Figshare. (doi:10.6084/m9.figshare.c.6960059)

Associated Data

Data Availability Statement

The contribution has been published in PsychArchives, starting on 17 December 2023, and assigned the following Persistent Identifier(s): The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13581); supplementary material for: The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13582); MEG and EEG data for: The impact of crossmodal predictions on the neural processing of aesthetic stimuli (doi:10.23668/psycharchives.13580).

Data are also provided in electronic supplementary material [83].