Abstract

AI chatbots such as ChatGPT are revolutionizing AI capabilities, especially in text generation, and help expedite many tasks, but they also introduce new dilemmas. The detection of AI-generated text has become a subject of great debate given the known and unexpected limitations of AI text detectors. Thus far, much research in this area has focused on the detection of AI-generated text; the goal of this study, however, was to evaluate the opposite scenario: an AI-text detection tool's ability to discriminate human-generated text. Thousands of abstracts from several of the most well-known scientific journals, published from 1980 to 2023, were used to test the predictive capabilities of these detection tools. We found that the AI text detector erroneously identified up to 8% of the known real abstracts as AI-generated text. This further highlights the current limitations of such detection tools and argues for novel detectors or combined approaches that can address this shortcoming and minimize its unanticipated consequences as we navigate this new AI landscape.

Keywords: Generative AI, Machine learning, Text detection, Human-generated, Chat-GPT, GPT, LLM, Large Language Model

Highlights

- Little research has focused on an AI-text detection tool's ability to discriminate human-generated text.

- Abstracts from esteemed scientific journals were used to test the predictive capabilities of these detection tools, assessing abstracts from 1980 to 2023.

- An AI text detector erroneously identified up to 8% of the known real abstracts from prior to the existence of LLMs as AI-generated text with a high level of confidence.

- This work highlights the yet unexplored risk of mislabeling human-generated work as AI-generated and begins the discussion on the potential consequences of such a mistake.

Introduction

Artificial intelligence (AI) and the subdomain of Machine Learning (ML)1, 2, 3 have progressed rapidly over the past few years, notably in natural language processing4 and text generation.5 In November 2022, the world became enamored with ChatGPT, a large language model (LLM) created by OpenAI, for its ability to answer sophisticated questions and to write novel text. To achieve this, ChatGPT (i.e., Chat Generative Pre-trained Transformer) and similar tools are built upon Pretrained Foundation Models (PFMs), which ingest a large corpus of text to create a general representation of language that can be further tuned to specific applications.6 The tools excel at summarizing, translating, and synthesizing text, with numerous possible uses that come with new challenges.7 The scientific community and educators worldwide face questions of authorship8, 9, 10 and plagiarism as these tools are used in scientific publications and course assignments,11 while governments grapple with disinformation.12 This has led to a second wave of innovation to detect the presence of AI-generated text. Academia has led the charge with a diverse set of approaches (e.g., GLTR13 and RoBERTa-based approaches14) reviewed by Jawahar et al.15 and Guerrero and Alsmadi.16 In addition, numerous companies, including OpenAI, Copyleaks, and Originality.ai, are now selling AI-generated text detection tools. Given the rapidly evolving nature of this field, ongoing efforts must be made to understand the performance of these tools. Work by Gao et al. on bioRxiv (https://doi.org/10.1101/2022.12.23.521610) looked at AI-generated abstract detection by OpenAI’s own detection tool. However, we are as yet unaware of research focusing on the opposite scenario: how often do these detection tools flag work as AI-generated when it represents original, unaided creative thought from the author?
This has broad-reaching implications, with the possibility of career-altering allegations being made against students and researchers when their work was an original creation. We explore this topic by employing a freely available GPT detector API to analyze abstracts from 5 scientific journals from 1980 onward, a period that begins well before AI-aided text generation, to assess the detector's false-positive rate.

Methods

Data collection

Abstracts were sourced from PubMed using the Requests and BeautifulSoup Python libraries. These tools enabled us to navigate to a PubMed URL that filtered for the specified year range and journal, and iterated through each search result link to pull the abstract from the underlying HTML source code, using BeautifulSoup's find and find_all methods. The abstracts were then placed into Pandas dataframes in order to analyze and visualize the data, using Matplotlib.
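The extraction step described above can be illustrated with a minimal sketch. It deliberately swaps in Python's standard-library `html.parser` for BeautifulSoup so that it runs without extra dependencies; with BeautifulSoup, the corresponding lookup would be a `find` call on the parsed page. The element id `eng-abstract`, the class `AbstractExtractor`, and the function `extract_abstract` are assumptions made for illustration and are not taken from the study's code, so the actual PubMed markup should be checked before relying on them.

```python
from html.parser import HTMLParser


class AbstractExtractor(HTMLParser):
    """Collect the text inside the first <div> whose id equals target_id."""

    def __init__(self, target_id="eng-abstract"):  # id is an assumption
        super().__init__()
        self.target_id = target_id
        self.depth = 0        # > 0 while the parser is inside the target div
        self.done = False     # True once the target div has been closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done:
            return
        if self.depth > 0:
            if tag == "div":
                self.depth += 1   # nested div inside the target
        elif tag == "div" and dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth > 0 and tag == "div":
            self.depth -= 1
            if self.depth == 0:
                self.done = True

    def handle_data(self, data):
        if self.depth > 0:
            self.chunks.append(data)


def extract_abstract(html, target_id="eng-abstract"):
    """Return the whitespace-normalized abstract text, or '' if not found."""
    parser = AbstractExtractor(target_id)
    parser.feed(html)
    parser.close()
    # Chunks are joined with spaces, so inline markup that splits a word
    # (e.g. subscripts) will introduce a space in this simplified version.
    return " ".join(" ".join(parser.chunks).split())


sample_html = (
    '<html><body><div id="nav">menu</div>'
    '<div id="eng-abstract"><p><strong>Background:</strong> Foo   bar.</p>'
    "<div>Nested</div></div><div>footer</div></body></html>"
)
print(extract_abstract(sample_html))
```

In a full pipeline, the HTML string would come from an HTTP request for each search-result link, and the returned text would be appended to a table of abstracts keyed by journal and year range.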

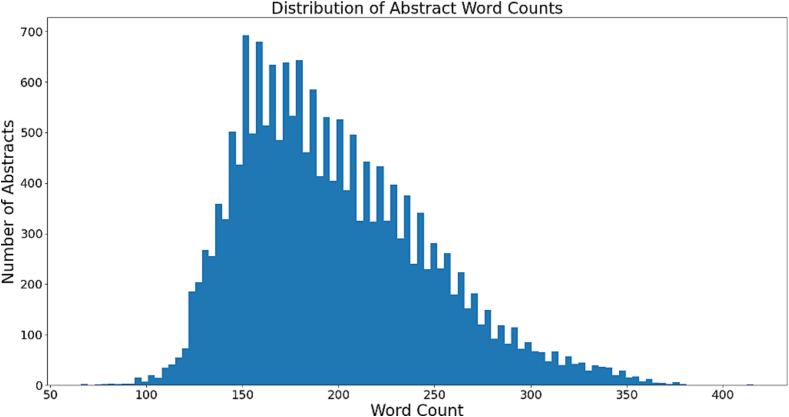

Five journals covering a range of disciplines were included in this study (Nature, Science, The New England Journal of Medicine, Radiology, and Archives of Pathology and Laboratory Medicine), and their abstracts were sampled in 5-year clusters from 1980 to the present. The final cluster ranged from 2020 to February 2023. Abstracts were sourced from the first 5 pages of search results for each journal. From these, 1600 abstracts were randomly selected for each 5-year range (1980–2023) across the aforementioned journals, for a total of 14,400 abstracts evaluated (9 year ranges × 1600). The query also specified a certain abstract length to meet the token restrictions of the model. The distribution of word counts is provided in Fig. 1.

Fig. 1.

The overall distribution of the word counts in the abstracts.
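The fixed-size sampling per year range described above can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the standard-library `random` module rather than the NumPy routine used in the study, and the function name `sample_per_range`, the `(year_range, abstract)` record layout, and the `seed` parameter are hypothetical.

```python
import random
from collections import defaultdict


def sample_per_range(records, per_range, seed=0):
    """Draw `per_range` abstracts without replacement from each year range.

    `records` is an iterable of (year_range, abstract) pairs (assumed layout).
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_range = defaultdict(list)
    for year_range, abstract in records:
        by_range[year_range].append(abstract)

    sampled = {}
    for year_range, abstracts in by_range.items():
        if len(abstracts) < per_range:
            raise ValueError(
                f"{year_range}: only {len(abstracts)} abstracts, need {per_range}"
            )
        sampled[year_range] = rng.sample(abstracts, per_range)
    return sampled
```

With 9 year ranges and `per_range=1600`, the result holds 9 × 1600 = 14,400 abstracts, matching the total stated above.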

AI detection model

Given that a GPT-4- or GPT-3-based AI detection API was not available to us at the time of this study, the RoBERTa-based detector model was sourced instead from OpenAI’s Hugging Face repository ("roberta-base-openai-detector"). The model was loaded using the pipeline function and the AutoTokenizer class from the transformers Python package. The model was then run locally on the full set of aggregated abstracts (as described above), and each abstract was given a probability score of 0–1.0 (i.e., the likelihood of the text being identified as AI-generated by this GPT-2-based approach). In addition, a very limited subset of the false-positive results from our main study (i.e., 10 real abstracts that were called AI-generated with >90% probability by the RoBERTa-based detector model) was also fed into a separate non-API GPT-4-based AI-detector method through the ChatGPT+ user interface. This separate GPT-4-based method was constructed through the following prompt: "Please act as an AI Detector, responding with a score from 0.00 to 1.00 on how likely the given text is generated by an AI, with 1.00 meaning absolutely written by a human and 0.00 meaning absolutely written by an AI." A summary of the results of these very limited runs (10 abstracts that were erroneously detected by the GPT-2-based method as AI-generated) is shown in Supplementary table 1.
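A minimal sketch of how such a detector might be loaded and its output converted into a single "probability of AI-generated" score is given below. It is not the study's code. The assumptions are: the model's two class labels are named `"Fake"` and `"Real"` (this should be verified against the model's `id2label` configuration), the pipeline accepts `truncation=True` to respect the model's token limit, and the helper names `load_detector`, `ai_probability`, and `score_abstracts` are hypothetical. The `transformers` import is deferred so that the pure conversion logic can be run without the package or a model download.

```python
def load_detector(model_id="roberta-base-openai-detector"):
    """Load the Hugging Face text-classification pipeline (third-party)."""
    from transformers import pipeline  # imported lazily on purpose
    return pipeline("text-classification", model=model_id)


def ai_probability(prediction, ai_label="Fake"):
    """Convert one pipeline prediction into P(AI-generated).

    The pipeline reports only the top label and its score. For a two-class
    model, the probability of the other class is 1 - score.
    ASSUMPTION: the AI-generated class is labeled "Fake".
    """
    score = float(prediction["score"])
    return score if prediction["label"] == ai_label else 1.0 - score


def score_abstracts(detector, abstracts):
    """Return P(AI-generated) for each abstract, truncating long inputs."""
    predictions = detector(list(abstracts), truncation=True)
    return [ai_probability(p) for p in predictions]
```

Typical use would be `scores = score_abstracts(load_detector(), abstracts)`, after which each score can be compared against the thresholds used in the analysis.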

Data analysis

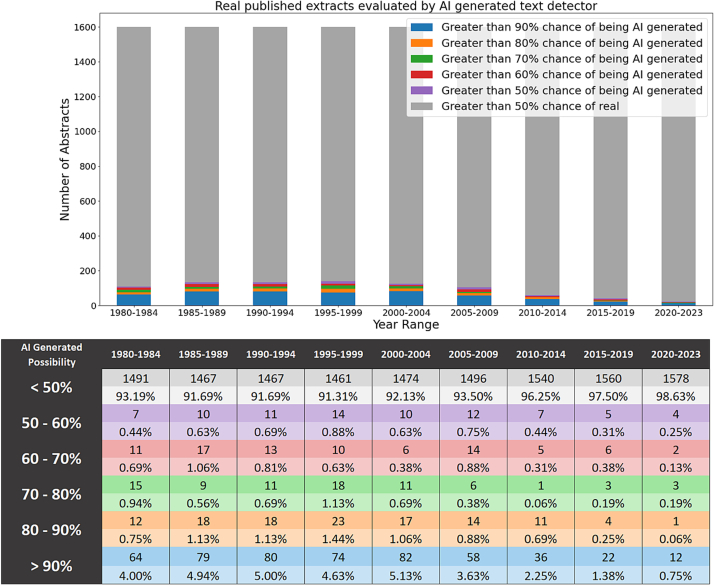

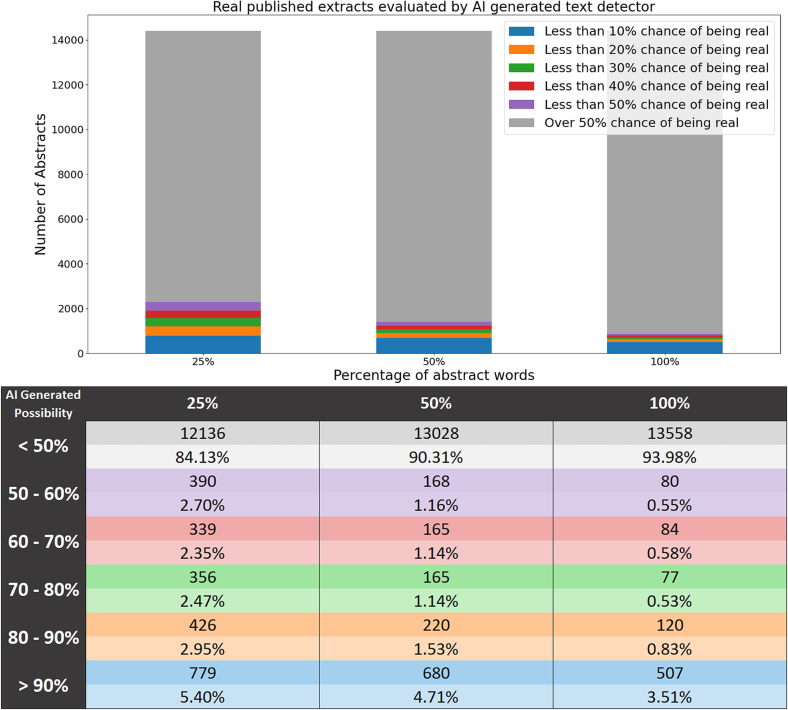

The AI detection output was segmented by 5-year time periods from 1980 to the present (2023) for each of the aforementioned journals. For each time period, the full-length abstracts were used to better represent the true predictive capability of the AI detection tool on these real abstracts. As noted above, to maintain the same sample size for each year range, 1600 abstracts per 5-year range were randomly selected using the NumPy Python package. A 50% probability was used as the base threshold for suspected AI generation, with additional analysis at 10% intervals up to >90% (i.e., >50%, >60%, >70%, >80%, and >90%), as shown in Fig. 2. In addition, a separate text dilution study was conducted to serve as a relatively predictable positive control (100% of the abstract, the first 50%, and the first 25% of each abstract, by word count), as shown in Fig. 3. The word count was compared by year range and journal using ANOVA. The statistical analysis was performed using R (cran.r-project.org).

Fig. 2.

The full-length abstracts evaluated by the AI-detection tool are shown in the bar chart above over year ranges, with each stack representing the AI detection model’s prediction of how likely an abstract is to be predicted as being AI generated. The table beneath the graphs shows corresponding details for each year range.

Fig. 3.

The effect of diluting the abstracts (i.e., comparing the full-length abstract, 100%, with its first half, 50%, and its first quarter, 25%) on the AI-detection tool’s outcomes. As expected, the AI-detection tool’s ability to accurately assign the real text as real diminishes with each dilution.
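The probability thresholds described in this section can be sketched as a simple binning routine. This is an illustration only: the bin labels mirror the intervals used in the analysis, but the boundary handling (lower bounds inclusive, top bin strictly greater than 90%) and the names `bin_label` and `bin_percentages` are assumptions, since the source does not state how scores that fall exactly on a boundary were assigned.

```python
from collections import Counter

BIN_LABELS = ["<50", "50-60", "60-70", "70-80", "80-90", ">90"]


def bin_label(p_ai):
    """Map a probability in [0, 1] to its threshold bin (assumed boundaries)."""
    if not 0.0 <= p_ai <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p_ai > 0.9:
        return ">90"
    for lower, label in ((0.8, "80-90"), (0.7, "70-80"),
                         (0.6, "60-70"), (0.5, "50-60")):
        if p_ai >= lower:
            return label
    return "<50"


def bin_percentages(scores):
    """Return the percentage of scores that fall in each bin."""
    scores = list(scores)
    if not scores:
        raise ValueError("no scores to bin")
    counts = Counter(bin_label(p) for p in scores)
    return {label: 100.0 * counts[label] / len(scores) for label in BIN_LABELS}
```

Applied to the scores of one year range or one journal, `bin_percentages` yields one column of percentages that sums to 100.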

Results

Through our AI-text detection approach using the RoBERTa-based detector model, we found that while most of these “real” abstracts were correctly predicted as non AI-generated (i.e., correctly assigned as human-generated), there was a subset of abstracts (∼5%–10%) that were being erroneously assigned as AI-generated text with a high degree of confidence (i.e., >80% confidence as seen in Fig. 2).

The 1600 randomly sampled real abstracts per year range (adding up to 14,400 total abstracts) were separated based on their likelihood of being called AI-generated text according to the AI detection tool, as shown in Fig. 2. The bar chart in Fig. 2 illustrates the trend of AI detection of such real text over time, along with the likelihood reported by the AI text detector. For example, in each bar, the “Greater than 90% chance of being AI-generated” segment represents the abstracts to which the AI detection tool assigned a greater than 90% likelihood of being AI-generated.

Additionally, the same set of 14,400 sampled abstracts was reviewed by journal for each of the 5 journals evaluated. Each column adds up to 100% (to within rounding) for each journal, as shown in Table 1.

Table 1.

The percentages of each journal separated by likelihood of being called AI-generated text. Arch Pathol Lab Med and NEJM noted above are the journals of Archives of Pathology & Laboratory Medicine and the New England Journal of Medicine, respectively.

| Probability of being AI-generated (%) | Arch Pathol Lab Med (%) | Nature (%) | Radiology (%) | Science (%) | NEJM (%) |
|---|---|---|---|---|---|
| <50 | 93.98 | 96.69 | 95.72 | 93.89 | 84.69 |
| 50–60 | 0.60 | 0.31 | 0.75 | 0.65 | 0.84 |
| 60–70 | 0.75 | 0.31 | 0.58 | 0.56 | 1.12 |
| 70–80 | 0.64 | 0.31 | 0.44 | 0.19 | 1.30 |
| 80–90 | 0.94 | 0.50 | 0.58 | 0.83 | 1.81 |
| >90 | 3.11 | 1.89 | 1.94 | 3.89 | 10.24 |

As noted earlier, a separate text dilution study was also conducted (Fig. 3) on these 14,400 abstracts to evaluate the effect of word count on the AI detection tool’s performance. This was done by comparing the full abstract (100%) with its first half (50%) and its first quarter (25%) by word count. As expected, the AI detection tool performed best on the full abstract when compared to its diluted counterparts.
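The dilution by word count can be sketched as below. This is a minimal illustration, assuming that words are separated by whitespace and that the kept word count is rounded down (with at least one word retained); the function name `dilute` is hypothetical and the study's exact rounding rule is not stated.

```python
def dilute(text, fraction):
    """Return the leading `fraction` of `text`, measured in words."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    words = text.split()
    # Round down, but keep at least one word for non-empty input.
    keep = max(1, int(len(words) * fraction))
    return " ".join(words[:keep])


abstract = "one two three four five six seven eight"
for fraction in (1.0, 0.5, 0.25):
    print(fraction, "->", dilute(abstract, fraction))
```

Each diluted version would then be scored by the detector in the same way as the full abstract, so that the three score distributions can be compared.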

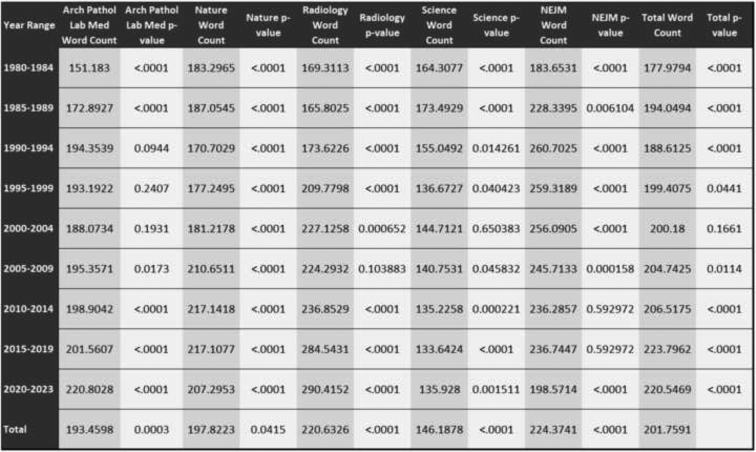

We also evaluated the average word count for each journal and year range, as well as the overall average for each. Using 2-way ANOVA, P-values were calculated comparing each year range’s mean word count with those of the other year ranges, and comparing the mean word counts between different journals (Fig. A1).

Discussion

In this study, we have demonstrated that up to 8.69% of the “real” abstracts had more than a 50% probability of being characterized as AI-generated text, and that up to 5.13% of all “real” abstracts were erroneously characterized as having a greater than 90% likelihood of being AI-generated. As a more concrete example, from 1990 to 1994, well before ChatGPT or the computational capabilities underlying LLMs existed, roughly 1 in 20 abstracts were flagged as AI-generated with >90% probability.
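Because every abstract from the pre-LLM year ranges is known to be human-written, any abstract flagged at a given threshold is a false positive, so the flagged fraction is itself the false-positive rate (FPR) at that threshold. A short worked form follows, assuming the 1600-abstract sample per year range described in the Methods; the count of roughly 80 abstracts is an illustration derived from "roughly 1 in 20", not a figure reported in the study.

```latex
\mathrm{FPR}(t)
  = \frac{\mathrm{FP}(t)}{\mathrm{FP}(t) + \mathrm{TN}(t)}
  = \frac{\#\{\text{human abstracts with } p_{\mathrm{AI}} > t\}}
         {\#\{\text{human abstracts}\}},
\qquad
\mathrm{FPR}(0.9)\Big|_{1990\text{--}1994}
  \approx \frac{1}{20} = 5\% \approx \frac{80}{1600}.
```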

However, the data also demonstrate a temporal dependence in detection. Trending the >90% AI-generated predicted probability by 5-year segment, a peak is seen in 2000–2004, followed by a steady decline that reaches only 0.75% in the 2020–2023 segment. The exact cause of these changes requires further study, but several factors are worth considering. First, the RoBERTa-based OpenAI detector used in this study was fine-tuned on GPT-2 output. The corpora used to train RoBERTa combine BookCorpus and English Wikipedia (the BERT training set), CC-News, OpenWebText, and Stories.14 GPT-2, in turn, was trained on WebText, a corpus created by following all outbound links from Reddit that received at least 3 karma points.17 Thus, the tuning draws on popular ideas shared on a social media site rather than scholarly works. However, this doesn’t fully explain the improving accuracy over time. The observed differences may also result from temporal misalignment, a phenomenon nicely summarized by Luu et al., in which the language model's training data and testing data differ enough in time that model performance is affected by the subtle evolution of language.18 However, neither factor fully explains all the observed trends in the data. If temporal misalignment were the only factor, we would expect even less accurate predictions in the earlier decades. From the early 2000s forward, when a more rapid change in accuracy was observed, the adoption of the internet was also rapidly accelerating, potentially catalyzing faster evolution of language or other additional factors and resulting in a more noticeable difference in performance over time.

To further interrogate the model, we also tested the effect of text length on the prediction, serving as a positive control with more text expected to provide more accurate predictions within the token limit of the model. Reassuringly, the accuracy of predictions was demonstrated to be directly related to the abstract lengths when provided with increasing aliquots of the abstracts (25%, 50%, or 100% of text), as shown in Fig. 3.

In addition, the results were variably influenced by the journal, regardless of the time period. Specifically, 4 of the journals, representing either specific disciplines (Radiology, Archives of Pathology & Laboratory Medicine) or a diverse array of subjects (Science, Nature), showed false-positive rates between 1.89% and 3.89% at the >90% level (Table 1), while The New England Journal of Medicine (NEJM) was an outlier at 10.24% for unknown reasons. We further analyzed the average word count of each set of journal abstracts and employed ANOVA to evaluate the statistical difference. When the average word count of all abstracts was compared with the average word count of the individual journals, each journal was found to be significantly different (P < .05). Nature was closest to the average with a P-value of .0415, with the remaining journals showing P-values of <.001 (Fig. A1). This suggests that similar detector performance is observed across journals with statistically significantly different abstract lengths, so abstract length is likely not the reason for the difference in the performance of NEJM. The format and structure of the abstracts are journal dependent, with NEJM, Archives of Pathology & Laboratory Medicine, and Radiology all using various subsections (e.g., context, objective, design, results, and conclusions), while Nature and Science employ a single-paragraph form. Still, this does not distinguish NEJM from the other journals. While the underlying cause remains unclear, it also highlights the importance of context for the tool's performance and demonstrates the potential for similar performance across multiple subject domains and abstract lengths.

The confidence in the probabilities also merits further discussion. The data demonstrate not only that certain real text is identified as AI-generated but that this occurs with a confidence exceeding 90%. While these tools exist in an academic realm, thoughtful interpretation and skepticism will likely remain the norm; however, as they begin to transition into a broader consumer market with multiple corporations offering this technology, such strongly confident predictions will warrant additional concern.

One fundamental limitation of this study is that it only evaluates a single AI-text detection model, which is not the most current technology available. OpenAI and other companies continue to develop more sophisticated methods to detect AI-generated text, but they have not, at the time of authoring this article, offered API access to these tools to facilitate large-scale analysis. Additionally, due to the expense of these tools, we were limited to using the freely available model. Ongoing assessment is needed, especially given GPT-3 and GPT-4’s much more extensive corpora19,20 compared with GPT-2, leading to more sophisticated text generation while also allowing training and tuning of more sophisticated detectors. Notably, a small sample of the real abstracts (10 random abstracts, 2 from each of the 5 journals) that were called AI-generated by the GPT-2-based model was also evaluated with a GPT-4 non-API-based method through the ChatGPT+ UI, which showed some interesting results, as noted in Supplementary table 1. Although, at first glance, this GPT-4-based AI-detector method was able to accurately identify the real abstracts as human-generated, it was also shown to be rather non-specific for this task, since a separate set of AI-generated abstracts fed into it were all also called human-generated (see Supplementary table 1). It is important to note that this GPT-4-based non-API approach, used on this very limited subset of abstracts, is not a validated method, and more sophisticated trained and validated AI detection LLM approaches in the future (e.g., GPT-4 or others) may be able to address many of the aforementioned shortcomings.

In light of these results, it is worth noting that the detection rate provided by these tools, especially in the 2020–2023 abstracts, must be interpreted with caution. While we could assume that AI text generators were not used to write the earlier abstracts, that assumption no longer applies in this time frame; thus, the true false-positive rate is less than or equal to the quoted value of 0.75%. Finally, as noted above, temporal misalignment likely amplifies the false-positive rate, especially within the older abstracts.

Looking forward, the role and impact of LLMs remain in their infancy. As the scientific and educational community grapples with these changes, distinguishing human versus AI creations will remain an important subject as AI-generated text’s potential for misuse and abuse looms large.21,22 There now exists an “arms race” not only to push the envelope of possibility but to continue to distinguish humans from AI.23 Drawing on lessons from medical diagnostic testing, we envision new methods emerging in which a highly sensitive screening test identifies content of concern and is then combined with highly specific diagnostic tests to confirm that suspicion and boost the overall performance. Additionally, standardizing and benchmarking the performance of these models in different domains and contexts remains important, as in the work by Uchendu et al. in creating TuringBench.24 While this innovation accelerates, it is essential not to lose sight of the pitfall of labeling something as AI when it is, in fact, human. The observations presented here are only the beginning of this narrative.
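The screening-plus-confirmation idea borrowed from diagnostic testing can be made concrete with the standard formulas for two tests applied in series, where text is labeled AI-generated only if both detectors flag it. The sketch below is illustrative: it assumes the two detectors err independently given the true class, the function name `serial_two_stage` is hypothetical, and the numeric values in the usage lines are made up and are not measurements of any real detector.

```python
def serial_two_stage(screen_sens, screen_spec, confirm_sens, confirm_spec):
    """Combined (sensitivity, specificity) for screen-then-confirm testing.

    A text is called AI-generated only if BOTH detectors flag it.
    ASSUMPTION: the detectors' errors are conditionally independent.
    """
    for value in (screen_sens, screen_spec, confirm_sens, confirm_spec):
        if not 0.0 <= value <= 1.0:
            raise ValueError("rates must be between 0 and 1")
    # AI text must be caught by both stages to be labeled AI-generated.
    combined_sens = screen_sens * confirm_sens
    # Human text is mislabeled only if both stages produce a false positive.
    combined_spec = 1.0 - (1.0 - screen_spec) * (1.0 - confirm_spec)
    return combined_sens, combined_spec


# Hypothetical detectors: a sensitive screen and a specific confirmer.
sens, spec = serial_two_stage(0.99, 0.90, 0.90, 0.99)
print(f"combined sensitivity={sens:.3f}, specificity={spec:.3f}")
```

Under these made-up inputs, the false-positive rate on human text falls from 10% for the screen alone to about 0.1% for the pair, at the cost of some sensitivity, which is the trade-off the paragraph above describes.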

Contributions

Hooman Rashidi (HR) and Tom Gorbett (TG) are the lead investigators and developed and validated the study whose results are highlighted within this manuscript. Brandon Fennell (BF), Bo Hu (BH), and Samer Albahra (SA), along with HR and TG, significantly contributed to the writing, review, statistical analysis, and editing of the manuscript. All authors reviewed the manuscript.

Declaration of interests

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests:

Dr. Hooman Rashidi serves as an Associate Editor for JPI.

Dr. Brandon Fennell has served as a reviewer for prior work published in JPI.

Contributor Information

Hooman H. Rashidi, Email: rashidh@ccf.org.

Tom Gorbett, Email: gorbetw@ccf.org.

Appendix A. Appendix

A.1. Word counts and p-values by year range and journal

Fig. A1.

Average word counts and p-values are graphed by year range and journal, using the random sample of 14,400 abstracts.

A.2. Information to Access Supporting Code on Github

Github repository link (placeholder) will be published and provided upon publication.

The following are the supplementary data related to this article:

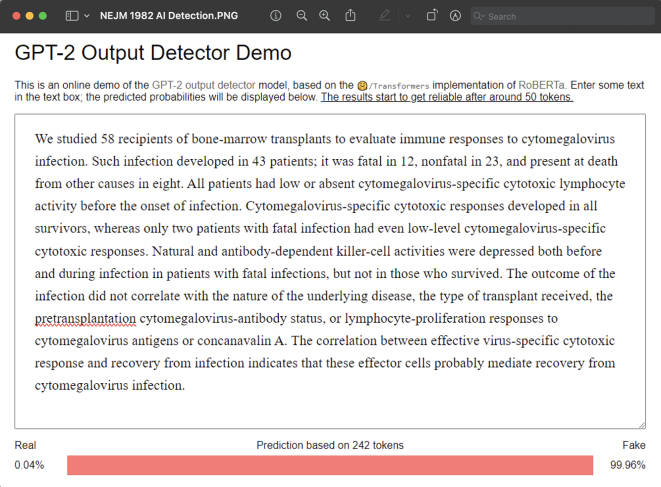

Supplementary Figure 1.

Image of a “real” NEJM abstract from 1982 which was assigned as AI-generated with 99.96% probability by the GPT-2 AI-detector tool.

Ten real abstracts from the 1980s from five well known journals that were falsely called as AI-generated with high probability in the main part of this study, were further evaluated using two different AI detection methods. One method evaluated these using the same OpenAI's GPT-2 AI-detection tool that was used within the main study while the other employed a GPT-4-based AI-detection method. As shown in the table, the 10 real abstracts that were falsely identified as AI-generated, were all being predicted as real by the GPT-4-based AI-detection method. However, this same GPT-4-based AI-detection method was also erroneously calling a separate set of ten AI-generated abstracts as real (i.e. human generated). The GPT-2 AI-detection tool did slightly better with these AI-generated abstracts, but most were still being falsely identified as real (i.e. human generated) abstracts.

Data availability

The datasets collected and analyzed during the current study will be made available on Github via a public repository upon publication of this work.

References

- 1. Rashidi H.H., Tran N.K., Betts E.V., Howell L.P., Green R. Artificial intelligence and machine learning in pathology: the present landscape of supervised methods. Acad Pathol. 2019;6. doi: 10.1177/2374289519873088.

- 2. Rashidi H.H., Bowers K.A., Reyes Gil M. Machine learning in the coagulation and hemostasis arena: an overview and evaluation of methods, review of literature, and future directions. J Thromb Haemost JTH. 2022;S1538-7836(22):18293. doi: 10.1016/j.jtha.2022.12.019.

- 3. Rashidi H.H., Albahra S., Robertson S., Tran N.K., Hu B. Common statistical concepts in the supervised machine learning arena. Front Oncol. 2023;13. doi: 10.3389/fonc.2023.1130229.

- 4. Nadkarni P.M., Ohno-Machado L., Chapman W.W. Natural language processing: an introduction. J Am Med Inform Assoc JAMIA. 2011;18:544–551. doi: 10.1136/amiajnl-2011-000464.

- 5. Zhou C., et al. A Comprehensive Survey on Pretrained Foundation Models: A History from BERT to ChatGPT. 2023. Preprint.

- 6. Bommasani R., et al. On the Opportunities and Risks of Foundation Models. 2021. Preprint at https://arxiv.org/abs/2108.07258v3.

- 7. Shen Y., et al. ChatGPT and other large language models are double-edged swords. Radiology. 2023;230163. doi: 10.1148/radiol.230163.

- 8. Stokel-Walker C. ChatGPT listed as author on research papers: many scientists disapprove. Nature. 2023;613:620–621. doi: 10.1038/d41586-023-00107-z.

- 9. Lee J.Y., Huh S. Can an artificial intelligence chatbot be the author of a scholarly article?

- 10. Thorp H.H. ChatGPT is fun, but not an author. Science. 2023;379:313. doi: 10.1126/science.adg7879.

- 11. Morrison R. Large Language Models and Text Generators: An Overview for Educators. 2022. Online submission. https://eric.ed.gov/?id=ED622163.

- 12. Stiff H., Johansson F. Detecting computer-generated disinformation. Int J Data Sci Anal. 2022;13:363–383.

- 13. Gehrmann S., Strobelt H., Rush A. GLTR: statistical detection and visualization of generated text. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: System Demonstrations. Association for Computational Linguistics; 2019. pp. 111–116.

- 14. Liu Y., et al. RoBERTa: A Robustly Optimized BERT Pretraining Approach. 2019. Preprint.

- 15. Jawahar G., Abdul-Mageed M., Lakshmanan L.V.S. Automatic Detection of Machine Generated Text: A Critical Survey. 2020. Preprint.

- 16. Guerrero J., Alsmadi I. Synthetic Text Detection: Systemic Literature Review. 2022. Preprint.

- 17. Radford A., et al. Language models are unsupervised multitask learners.

- 18. Luu K., Khashabi D., Gururangan S., Mandyam K., Smith N.A. Time Waits for No One! Analysis and Challenges of Temporal Misalignment. 2022. Preprint at http://arxiv.org/abs/2111.07408.

- 19. Brown T.B., et al. Language Models are Few-Shot Learners. 2020. Preprint at http://arxiv.org/abs/2005.14165.

- 20. OpenAI. GPT-4 Technical Report. 2023. Preprint.

- 21. Solaiman I., et al. Release Strategies and the Social Impacts of Language Models. 2019. Preprint.

- 22. Kreps S.E., McCain M., Brundage M. All the News that’s Fit to Fabricate: AI-Generated Text as a Tool of Media Misinformation. 2020. SSRN Scholarly Paper.

- 23. Heidt A. ‘Arms race with automation’: professors fret about AI-generated coursework. Nature. 2023. doi: 10.1038/d41586-023-00204-z.

- 24. Uchendu A., Ma Z., Le T., Zhang R., Lee D. TURINGBENCH: A Benchmark Environment for Turing Test in the Age of Neural Text Generation. 2021.
