Abstract

Objective

The study aimed to investigate otolaryngologists' knowledge, trust, acceptance, and concerns with clinical applications of artificial intelligence (AI).

Methods

This study used mixed methods with a survey and semistructured interviews. The survey was e‐mailed to American Rhinologic Society members, of whom a volunteer sample of 86 responded. Nineteen otolaryngologists were purposefully recruited and interviewed until thematic saturation was achieved.

Results

Seventy‐six respondents (10% response rate) completed the majority of the survey: 49% worked in academic settings and 43% completed residency 10 or fewer years ago. Of 19 interviewees, 58% worked in academic settings, and 47% completed residency 10 or fewer years ago. Familiarity: Only 8% of survey respondents reported having AI training in residency, although 72% had familiarity with general AI concepts; 0 interviewees had personal experience with AI in clinical settings. Expected uses: Of the surveyed otolaryngologists, 82% would use an AI‐based clinical decision aid and 74% were comfortable with AI proposing treatment recommendations. However, only 44% of participants would trust AI to identify malignancy and 53% to interpret radiographic images. Interviewees trusted AI for simple tasks, such as labeling septal deviation, more than complex ones, such as identifying tumors. Factors influencing AI adoption: 89% of survey participants would use AI if it improved patient satisfaction, 78% would be willing to use AI if experts and studies validated the technologies, and 73% would only use AI if it increased efficiency. Sixty‐one percent of survey respondents expected AI incorporation into clinical practice within 5 years. Interviewees emphasized that AI adoption depends on its similarity to their clinical judgment and to expert opinion. Concerns included nuanced or complex cases, poor design or accuracy, and the personal nature of physician‐patient relationships.

Conclusion

Few physicians have experience with AI technologies but expect rapid adoption in the clinic, highlighting the urgent need for clinical education and research. Otolaryngologists are most receptive to AI “augmenting” physician expertise and administrative capacity, with respect for physician autonomy and maintaining relationships with patients.

Level of Evidence

Level VI, descriptive or qualitative study.

Keywords: artificial intelligence, machine learning, chronic rhinosinusitis, qualitative research, patient satisfaction

In this mixed methods study, otolaryngologists were surveyed nationally and interviewed about their knowledge, trust, acceptance, and concerns with clinical applications of AI. Few physicians report experience with AI technologies but expect rapid adoption in the clinic, highlighting the urgent need for clinical education and research. Otolaryngologists are most receptive to AI “augmenting” physician expertise and administrative capacity, with respect for physician autonomy and maintaining relationships with patients.

1. INTRODUCTION

Twentieth century dreams for a machine that could think, and even replace the “intellectual functions of the physician,” initially gave way to disappointment due to early limitations of artificial intelligence (AI). 1 However, the advent of strategies to successfully handle “big data,” increased computing power, evolving industry needs, and novel AI approaches have prompted an entirely new scope for the possibilities and promise of AI. 2 In fact, recent research has identified numerous AI applications in aiding drug design, surgical skills development, and utilizing electronic health record (EHR) databases to calculate disease prognosis and to suggest personalized treatment recommendations. 3 In Otolaryngology, AI technologies are assisting with hearing loss management, cancer diagnosis, and real‐time identification of resection margins during oncologic procedures. 4 Beyond research applications, the development of novel clinical AI applications is proposed to target workflow to reach more patients and reduce nonclinical work for physicians.

However, the expanding capabilities of AI warrant consideration of their purposes and recognition of limitations. The ease with which new algorithms can be created from increasingly available data sources could easily result in the overproduction of digital health applications that may not be of interest to clinical end‐users. 5 Previous literature has explored obstacles to incorporating AI into medicine. 2 , 6 Despite theoretical expectations of improved accuracy or data processing efficiency, AI has not always shown benefits over the gold standard in randomized controlled trials. 7 In addition, AI algorithms and training data may not be high quality or representative, leading to biases, fitting to confounders instead of the correct features, and discrepancies between static collected data and a new and dynamic population. 8 , 9 These issues, both theoretical and observed, may make physicians hesitant to accept AI services given the high‐stakes nature of medical applications.

In preparation for the integration of AI services into healthcare, previous studies have explored physicians' perceptions of AI in specialties as varied as neurology, pathology, and surgery. 10 However, there is limited research regarding otolaryngologists' perspectives toward AI. This study aims to elucidate physicians' knowledge, trust, acceptance, and concerns with clinical applications of AI in Otolaryngology and Rhinology. This study uses a mixed methods approach with a concurrent triangulation design 11 ; the combination of survey and interview data investigates Otolaryngologists' experiences and opinions regarding the current role of AI in medicine.

2. MATERIALS AND METHODS

This research was approved by the Colorado Multiple Institutional Review Board (COMIRB# 21‐3462 and COMIRB# 20‐2509).

2.1. American Rhinologic Society member survey

We conducted a literature review to identify important themes regarding AI in medicine and needs derived from early work in other specialties. 2 , 6 , 9 Survey design was centered on these themes and their specific application to the field of Otolaryngology. Aside from demographic questions, we designed a set of 25 questions, each answered on a 5‐point Likert scale (strongly disagree to strongly agree), to assess understanding, trust, clinical application, and concerns. These questions were categorized into overarching themes of familiarity, expected uses and desires, and factors influencing AI adoption. The survey was e‐mailed twice in 2021 to members of the American Rhinologic Society (ARS), a subspecialty society within Otolaryngology‐Head and Neck Surgery consisting of fellowship‐trained and general Otolaryngologists with specific interests in Rhinology. Prior ARS member surveys have examined varied topics informing specialty needs, best practices, and future educational objectives, with response rates of 7%–12%. 12 , 13 , 14 , 15 Responses were anonymous.

2.2. Otolaryngologist interviews

To further explore the themes introduced in the ARS survey, 19 Otolaryngologists were purposefully recruited from varying backgrounds, practice settings, and locations, to capture a range of perspectives during one‐on‐one interviews; informed consent was received. Subjects were recruited until preliminary results indicated thematic saturation. 16 PhD‐level qualitative researchers (C.T. and M.M.) oversaw the development of the semistructured interview guide, which included AI Otolaryngology applications such as automated radiology interpretation or AI programs as clinical decision aids. Two trained interviewers (C.T. and A.A.) conducted approximately 1‐h long interviews in 2021, which were recorded, professionally transcribed (Landmark Associates, Inc., Phoenix, AZ), and stored securely.
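The idea of recruiting until thematic saturation can be illustrated with a short sketch. It loosely follows the base size, run length, and new information threshold concepts described in reference 16; the parameter values, the function name `saturation_point`, and the toy theme sets are assumptions for illustration only and do not reproduce the procedure or data used in this study.

```python
from typing import List, Optional, Set


def saturation_point(
    themes_per_interview: List[Set[str]],
    base_size: int = 4,
    run_length: int = 2,
    threshold: float = 0.05,
) -> Optional[int]:
    """Return the number of interviews at which saturation is reached, or None."""
    if len(themes_per_interview) < base_size + run_length:
        return None

    # Unique themes in the first `base_size` interviews form the denominator.
    base_themes: Set[str] = set().union(*themes_per_interview[:base_size])
    if not base_themes:
        return None

    # Slide a window ("run") of `run_length` interviews over the later data.
    for end in range(base_size + run_length, len(themes_per_interview) + 1):
        start = end - run_length
        seen_before_run = set().union(*themes_per_interview[:start])
        run_themes = set().union(*themes_per_interview[start:end])
        new_in_run = run_themes - seen_before_run
        # Saturation: the run adds little new information relative to the base.
        if len(new_in_run) / len(base_themes) <= threshold:
            return end
    return None


# Hypothetical theme codes per interview (not study data).
toy_interviews = [
    {"trust", "liability"},
    {"efficiency"},
    {"trust", "autonomy"},
    {"accuracy"},
    {"privacy"},
    {"trust"},
    {"efficiency", "liability"},
    {"autonomy"},
]
reached_at = saturation_point(toy_interviews)
```

In this toy example the fifth interview still contributes a new theme, so the first run is not saturated; the following run adds nothing new and the function reports saturation at that point. Stricter or looser thresholds change the stopping point, which is why the chosen parameters are usually reported alongside the result.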

2.3. Analysis

Analysis of ARS survey data was performed with R software version 4.0.5. As mean differences between groups (categorized by length of experience or by practice setting) were small and unremarkable, additional analysis was not undertaken. Review of interview comments and subsequent review of transcriptions was performed using rapid thematic analysis with a structured matrix 17 organized by respondent. The methods followed accepted guidelines for qualitative research. 18 A.A. created the summary matrix by assigning neutral domains to interview questions, testing on four transcripts, and refining to be more usable and clear. A.A. and C.M. identified themes and example quotations related to AI‐related openness, trust, familiarity, advantages, disadvantages or concerns, and possible applications.
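As an illustration of the kind of subgroup comparison described above, the following sketch summarizes 5‐point Likert responses by group. The study's analysis was performed in R; Python is used here only for illustration, and the response values, group labels, and function name are hypothetical rather than study data or the authors' code.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Assumed coding: 1 = strongly disagree ... 5 = strongly agree.
Response = Tuple[str, int]  # (subgroup label, Likert score)


def summarize_by_group(responses: List[Response]) -> Dict[str, Dict[str, float]]:
    """Return sample size, mean score, and percent agreement (score >= 4) per subgroup."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for group, score in responses:
        if not 1 <= score <= 5:
            raise ValueError(f"Likert score out of range: {score}")
        grouped[group].append(score)

    summary: Dict[str, Dict[str, float]] = {}
    for group, scores in grouped.items():
        agree = sum(1 for s in scores if s >= 4)
        summary[group] = {
            "n": len(scores),
            "mean": round(mean(scores), 2),
            "pct_agree": round(100 * agree / len(scores), 1),
        }
    return summary


# Hypothetical responses to a single survey item (not study data).
example = [
    ("academic", 4), ("academic", 3), ("academic", 4), ("academic", 3),
    ("private", 5), ("private", 2), ("private", 5), ("private", 1),
]
result = summarize_by_group(example)
mean_difference = round(result["academic"]["mean"] - result["private"]["mean"], 2)
```

In this made‐up example the two groups have similar means and identical percent agreement even though one group's answers are far more spread out, which is one reason a small mean difference alone does not describe how responses are distributed.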

3. RESULTS

3.1. Participant characteristics

The survey was e‐mailed twice to 840 members with a 10% response rate, consistent with prior ARS member surveys. The survey received 86 responses over a 1‐month period. Ten respondents answered only demographic questions and no survey questions, so these were not included in the analysis. Seven respondents did not finish the survey questions in their entirety but were included in the analysis. Fifty‐eight respondents completed all multiple choice questions and entered additional free‐text responses. Nearly half (49%) of the 76 survey respondents with analyzable data had a full‐time academic position, with 25% working in private practice with academic affiliation and 26% working solely in private practice (Table 1). Duration from training demonstrated a spread, where less than half (43%) of participants completed residency within the past 10 years, 21% completed residency 11–20 years ago, and 36% completed residency over 20 years ago. A majority of respondents (61%) perform over 100 sinus surgeries per year, with 20% performing between 51 and 100 sinus surgeries and 20% performing 50 or fewer sinus surgeries.
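The sample flow in this paragraph reduces to simple arithmetic. The sketch below recomputes it from the counts reported in the text and in Table 1; the variable names and the helper `pct` are illustrative.

```python
# Counts reported in the text.
members_emailed = 840
responses_received = 86
demographics_only = 10  # answered no survey questions; excluded from analysis

analyzable = responses_received - demographics_only


def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


raw_response_rate = pct(responses_received, members_emailed)
analyzable_rate = pct(analyzable, members_emailed)

# Practice-setting counts from Table 1, expressed as a share of analyzable respondents.
academic_share = pct(37, analyzable)
private_share = pct(39, analyzable)
```

Under these counts, the 10% response rate corresponds to all 86 responses received, whereas the 76 analyzable respondents represent roughly 9% of the members contacted.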

TABLE 1.

Characteristics of survey and interview participants.

| | Survey respondents (N = 76) | Interview participants (N = 19) |
|---|---|---|
| | N (%) | N (%) |
| Practice setting | | |
| Academic | 37 (49%) | 11 (58%) |
| Private | 39 (51%) | 8 (42%) |
| Residency completion | | |
| ≤10 years ago | 33 (43%) | 9 (47%) |
| 11–20 years ago | 16 (21%) | 9 (47%) |
| >20 years ago | 27 (36%) | 1 (5%) |
| Fellowship trained | Data not collected | 9 (47%) |
| Sinus surgeries per year | | |
| ≤50 | 15 (20%) | Data not collected |
| 51–100 | 15 (20%) | |
| >100 | 46 (61%) | |

For the focused one‐on‐one interviews, the 19 participants worked at a mixture of academic and private practices (Table 1). Approximately half the participants completed residency within the past 10 years, approximately half completed residency 11–20 years ago, and one participant completed residency more than 20 years ago. All participants reported sinus surgery as a significant part of their practice. Around half the participants (N = 9) were fellowship‐trained: six in Rhinology, two in Facial Plastics, and one in Neurotology.

3.2. Broad trends among subgroups

Among survey respondents practicing fewer than 20 years, there was a slight predominance of academic physicians, and among those with more than 20 years of experience, private practice was predominant. There were no major differences in responses by career setting or age group. However, for questions regarding physician comfort with using AI, academic physicians tended to answer neutrally or agree, whereas private practice physicians were more polarized, with some disagreeing and others strongly agreeing. For those same questions, physicians with less than 20 years of experience tended to answer neutrally, whereas more experienced physicians contributed the most to agreement. Two survey questions that elicited some disagreement, mostly from private practice physicians, asked whether practitioners would trust AI to correctly interpret radiographic images and whether practitioners would feel comfortable with AI aiding with direct treatment. In free‐text responses, private practice physicians who disagreed with those uses reported concerns with liability and with more inflexible insurance coverage.

3.3. Familiarity and trust

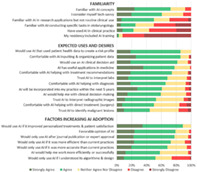

Most survey participants were aware of general AI applications but had limited education or experience with AI in clinical settings (Figure 1, top panel). Whereas 72% of respondents expressed familiarity with general AI concepts, there was a low level of familiarity with otolaryngology‐specific AI programs, research applications, or clinical uses. Few respondents (8%) had received AI training during residency, and only 22% could identify any current AI use in clinical practice. Respondents varied in their comfort with new technology: 61% agreed or strongly agreed that they were tech‐savvy, 29% were neutral, and only 11% did not consider themselves tech‐savvy.

FIGURE 1.

ARS member survey results: AI familiarity, uses, and adoption.

None of the interviewees had direct personal experience with using AI in clinical settings. Two participants had heard about clinical AI applications, another participant had taken machine learning courses, and only one participant had direct experience by using machine learning analytics in a research setting. Importantly, despite almost no familiarity with clinical AI, most physicians were open to learning about it or using it: some were “curious” or considered AI “interesting”; others stated that it “sounds great” or that they would “be totally for it.”

3.4. Expected uses and desires

The majority (78%) of survey respondents agreed that AI would have useful clinical applications. However, their comfort level varied depending on the specific role of AI in patient care. For example, most participants (86%) were comfortable or very comfortable with AI assistance for inputting and managing patient data or creating a health risk profile based on that data. However, there was some drop‐off in the proportion of respondents being comfortable or very comfortable about AI helping with diagnosis (66%), using patient labs for diagnostic purposes (68%), and providing treatment recommendations (74%). Physicians were least comfortable with AI's role in interpreting radiographic images (only 53% trusted AI for this task), identifying malignancy (44%), and participating in direct treatment, such as surgery (50%). However, in the role of augmenting physician capabilities, 82% agreed that they would use an AI clinical decision aid.

In free‐text responses, many survey respondents emphasized the potential of AI in a personalized approach to clinical decision‐making. Some physicians noted that AI “could be helpful in discovering subtypes” or endotypes in chronic rhinosinusitis, recommending allergy testing, and could use antibiotic history to influence therapy selection. Others suggested that AI‐aided “risk stratification,” “review[ing] databases,” “detecting patterns of care in large populations,” and “data collection for ongoing Q[uality] Assessments” would better place patients in the context of larger‐scale epidemiology and population patterns. In addition to AI facilitating “clinical decision support tools [in] routine practice,” respondents indicated potential to improve “patient education.” Together, these types of applications identify a comfort zone wherein physicians are aided by AI, but still maintain sufficient autonomy to express clinical judgment and contextualize any additional information.

The most easily imagined advantage indicated by physicians was a role for AI in “imaging evaluation,” in both pathology and radiology images, and therefore improved “differential diagnosis” and “diagnostic accuracy.” However, AI imaging interpretation could be used for more than diagnostic purposes; respondents suggested “preop planning” and “surgical navigation” would be of interest. Some physicians noted that AI could automate certain components of surgery, such as the segmentation of images in preoperative planning or live intraoperative video delineation of tumors or surgical anatomy.

Many physicians envision AI assisting with administrative duties through “documentation and ordering,” “writing notes,” and a “voice activated Electronic Medical Record.” Some respondents see AI going one step past data collection and helping with “filtration of relevant information” or “triage.” Ultimately, survey respondents hope for “improvement in office efficiency.”

Similarly, interview participants described specific tasks done by AI that they would find useful, with focuses on AI's role in interpreting radiographic images, identifying malignancy, and offering treatment recommendations (Table 2). Interviewees described greater comfort with using AI for simple objective measurements, such as percentage opacification of sinuses, rather than more complex interpretations.

TABLE 2.

Expected uses of AI from interview participants.

| Category | Expected uses |
|---|---|
| Interpreting radiographic images | Describing location, severity, and qualities of sinus inflammation, such as mucosal thickening and osteitis; labeling position of arteries and nerves; labeling septal deviation, concha bullosa, and dehiscence; detailing anatomic boundaries; drawing attention to anatomic abnormalities and variants |
| Identifying malignancy | Labeling likelihood of a finding being benign or malignant; differentiating between acute inflammatory changes and tumors; including Hounsfield Units for neoplastic processes |
| Treatment recommendations | Certain percentage of sinus opacification should trigger surgery recommendation |

3.5. Factors influencing AI adoption

Survey respondents generally reported a favorable opinion of AI (79% agreeing or strongly agreeing), but even more respondents (89%) agreed that they would use AI if it enabled personalized treatments and/or improved patient satisfaction. The most common concerns were that AI would prevent rapport‐building and “compassionate clinical care” that comes from personal relationships; these physicians stated that AI utility may be best for patient education and communication realms. Others worried that AI may not be adept at handling “corner cases” or scenarios that are complex, unexpected, or unique—precisely the cases where physicians may require the most help.

Otolaryngologists were uncertain about AI's ability to help them work more efficiently, with 58% agreeing, 38% neutral, and 4% disagreeing. They were similarly divided on the expected timeline of AI entry into clinical practice, specifically whether it would be incorporated into their practice within the next 5 years, with 61% agreeing, 32% neutral, and 8% disagreeing. In open‐ended answers, survey respondents expressed worry over “poorly designed algorithms,” “inaccuracy,” and current “lack of evidence.” Importantly, physicians valued efficiency more than accuracy or understanding AI algorithms and design. A total of 73% of respondents would not use AI software unless it improved efficiency, compared with 68% who required improved accuracy and 52% who required an understanding of AI design before using software. A smaller group indicated concern over AI's role in clinical decision‐making and over physicians developing “overreliance” on technology. A few respondents brought up unintended consequences of AI, such as inadequate protection of patient “privacy,” liability concerns, or insurance “denial of care based on AI.” Such concerns are widely held and generally come from those most familiar with AI. Finally, some respondents stated that they had no concerns regarding AI or that they had “poor knowledge” of AI. The vast majority (78%) of survey participants expressed willingness to use AI clinically if experts and journal‐published studies approved of AI technologies, suggesting that respondents may embrace AI if leaders in the field establish its legitimate value.

Interview participants expressed less overall concern regarding AI adoption (Table 3). Interviewees consistently valued two characteristics that trustworthy AI would have: agreement with their own clinical judgment and with expert analysis. Many participants would trust AI if it consistently matched their own assessment or that of a reliable radiologist, for example, in the case of interpreting radiographic images. Some participants want extensive testing on novel AI applications and proven reproducibility, with results in peer‐reviewed publications, and programmer explanations of methodology and algorithm design. One interviewee echoed survey respondents' concerns regarding AI's inability to handle atypical cases.

TABLE 3.

Advantages and concerns regarding AI, from semistructured interviews of practicing Otolaryngologists.

| Theme | Quotation | Participant |
|---|---|---|
| Advantages of AI for patients | “Data‐driven AI will be able to aid decision making in rhinology.” | Academic physician, 6+ years of experience |
| Advantages of AI for patients | “Cost savings.” | Private practice physician, ≤5 years of experience |
| Advantages of AI for patients | “Detecting patterns of care in large populations.” | Academic physician, 20+ years of experience |
| Advantages of AI for patients | “QoL [Quality of life] tools seamlessly integrated into surgical decision making.” | Private practice physician, 16+ years of experience |
| Advantages of AI for physicians | “Disease identification.” | Academic physician, ≤5 years of experience |
| Advantages of AI for physicians | “Imaging interpretation, automatic scoring, volumetric assessment of inflammation in CTs, identifying areas of risk for surgery.” | Private practice physician, 16+ years of experience |
| Advantages of AI for physicians | “Doing virtual sinus surgery using robot.” | Private practice physician, 20+ years of experience |
| Advantages of AI for physicians | “Patient data intake, risk profile, coding/billing.” | Private practice physician, 6+ years of experience |
| Advantages of AI for physicians | “Collate and interpret prior treatment data.” | Private practice physician, 11+ years of experience |
| Patient care concerns regarding AI | “I would never trust a computer over…the human bond from doctor‐patient relationships.” | Private practice physician, 11+ years of experience |
| Patient care concerns regarding AI | “[AI cannot accomplish] tailoring communication to suit individual patient needs and preferences, use of eye contact, provision of empathy.” | Academic physician, 6+ years of experience |
| Patient care concerns regarding AI | “If someone is a complex rhinologic patient…AI algorithm [cannot] correctly individualize therapy (yet).” | Academic physician, 11+ years of experience |
| Physician concerns regarding AI | “There is an art to surgical decisions in rhinology that AI cannot substitute or augment.” | Private practice physician, 6+ years of experience |
| Physician concerns regarding AI | “Learned dependence and therefore, deskilling.” | Private practice physician, 6+ years of experience |
| Physician concerns regarding AI | “Integrity of the datasets used for training AI models.” | Academic physician, ≤5 years of experience |
| Physician concerns regarding AI | “AI might have a mistake and who's responsible for that?” | Academic physician, 6+ years of experience |

4. DISCUSSION

4.1. Broad trends among subgroups

Consistent with past technological trends in medicine and surgical subspecialties, we expected younger physicians closer to their training periods to be more comfortable, educated, and willing to adopt AI into their practices, but there were few differences in answers among the career setting and experience subgroups. Notably, much of the agreement to questions about comfort with AI came from physicians with over 20 years of experience. This observation contradicts the concern that more experienced practitioners would be resistant to the adoption of new AI‐based technologies that may replace traditional approaches to care provision. Perhaps it may even reflect an experience‐based understanding of biases in clinical care, potential shortcomings of clinical guidelines and human intuition, and/or willingness to seek further aids to enhance patient outcomes.

4.2. Limited exposure to AI

Otolaryngologists reported limited familiarity with AI despite feeling comfortable with technology as a whole. Few otolaryngologists incorporate AI into their practice. AI‐related coursework is uncommon in residency and medical school, although augmented reality and virtual reality surgical tools are now being tested with medical students and surgical trainees. 19

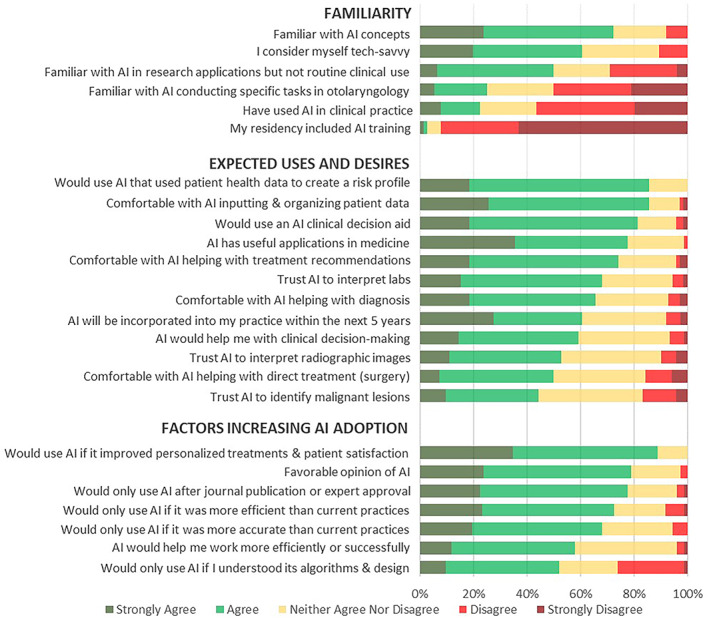

Limited exposure could also be due to the low availability of validated and accessible otolaryngology technology. The Food and Drug Administration (FDA) has approved over 500 AI‐enabled medical devices (Figure 2) to date, but the vast majority of these technologies are for radiology (70%) or cardiology (12%) purposes. 20 One of the few FDA‐approved otolaryngology devices making use of AI is the TruSeg software component of the TruDi image‐guided surgery system 21 ; TruSeg, which was released in fall 2021, can alert surgeons when compatible surgical tools approach certain anatomical structures, like the eyes. 22 Unlike AI devices in other fields, such as the IDx‐DR Diabetic Retinopathy Screening tool 23 or Cardiologs® interpretation tool, 24 there is limited research on outcomes using TruSeg at this time. This paucity of AI devices specific to otolaryngology may explain the low familiarity seen in survey and interview responses.

FIGURE 2.

AI or machine learning‐enabled medical devices authorized by FDA. 20

4.3. Concerns with AI development

Especially interesting given the lack of background education and understanding of AI product development, respondents consistently desired transparency on how an AI tool was developed and tested. They had lower trust in AI interpretation of pathology and radiology images but also found this task to be the biggest opportunity for future development. One path forward to build trust in image interpretation is systematic testing and validation. Open‐source software, public datasets, clear step‐by‐step protocols, and deliberate attempts to remove bias can encourage reproducibility and trust. 25 , 26 , 27

4.4. Support for and concerns with AI use

AI can be broadly categorized as applications to increase practice or administrative efficiency, clinical applications for physician augmentation, and autonomous applications. Most respondents believe AI can be useful in a broad sense; however, there was greater disagreement regarding specific tasks, such as reading radiology images. Respondents expressed greater hesitancy with applications that significantly impact patient well‐being; they disagreed on how sophisticated, or not, AI would be. Low‐risk tasks such as inputting health data were easily accepted by most respondents. On the other hand, tasks with potentially serious outcomes, such as participating in direct surgery or identifying malignancy, were less favored. The variation in support for certain tasks could also be because these have the most room for interpretation in current practice and rely on the experience and judgment of the individual physician. Tasks perceived to infringe on physician autonomy would, therefore, be met with greater hesitation.

Participants were generally enthusiastic for AI tools that would increase the efficiency of their practice. It is vital that any new technology does not add to the annoyance and burnout caused by EHR use. Numerous studies have noted that physicians spend as much—if not more—time interacting with a computer as they do with the patient. 28 Much of the time and effort spent on “desktop medicine” is low‐value, administrative work to comply with insurance requirements or government regulations, and does not make the best use of highly‐skilled physicians. However, EHR software outside of the United States is met with greater physician satisfaction, at least in part due to shorter clinical documentation and fewer requirements. 29 For AI to have a future as a desired practice efficiency tool, it must undergo dedicated user‐centered design that primarily fulfills physician needs rather than insurance, hospital, or regulatory priorities.

Participants worried that third‐party payers may use AI reports for screening purposes or denial of treatment. Previous reports have supported this concern: a recent investigation discovered that some insurance companies use unregulated AI algorithms to deny paying for treatment, even when in direct disagreement with physician recommendations. 30 Other hesitancy appears attributable to greater concern with liability. How do physicians justify a discrepancy between their assessment and an AI recommendation to patients, colleagues, or the courts? These worries are part of a general sentiment that nuance in clinical care, driven by years of experience, may be superseded by unequivocal “objective” assessments by an AI algorithm. Taken together, these concerns suggest that an initial role for AI might best be as a tool for the physician. Augmented intelligence, with the emphasis that the physician makes the ultimate assessment and decision, would then be more palatable. This measured approach to adoption is very similar to how image‐guided surgical technologies were initially regarded in Rhinology. 31 , 32

4.5. Limitations

Limitations of this study include selection bias due to nonrandom sampling of survey and interview participants as well as a low survey response rate. In addition, survey and interview questions do not align precisely, limiting comparisons. Furthermore, the survey's study population focused on rhinologists, whereas the interview's study population included otolaryngologists of varying subspecialties.

5. CONCLUSION

Despite generally positive feelings toward AI, very few physicians have training or exposure to AI technologies in residency or clinical practice. Clinicians expressing hesitance feel that the nuance and humanism of medicine will be lost with AI, but some physicians also express excitement over AI's potential to assist in current areas of difficulty. Many clinicians believe that AI will soon be incorporated into clinical Otolaryngology, so they emphasize the need for further education and research—for published studies, transparency in algorithm development, leaders in the field to test AI capabilities, and personal hands‐on use. Physicians are most ready to accept AI where it does not infringe on clinical judgment and intangibles such as experience or physician‐patient relationships, and where implementation is designed such that administrative burden is relieved and patient satisfaction is improved. Initial receptiveness appears to be in the role as “augmenting” the physician, where highest risk tasks are still performed in traditional physician‐patient interactions, perhaps assisted by AI applications.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

Asokan A, Massey CJ, Tietbohl C, Kroenke K, Morris M, Ramakrishnan VR. Physician views of artificial intelligence in otolaryngology and rhinology: A mixed methods study. Laryngoscope Investigative Otolaryngology. 2023;8(6):1468‐1475. doi: 10.1002/lio2.1177

REFERENCES

- 1. Schwartz WB. Medicine and the computer—the promise and problems of change. N Engl J Med. 1970;283:1257‐1264.

- 2. Rajkomar A, Dean J, Kohane I. Machine learning in medicine. N Engl J Med. 2019;380(14):1347‐1358. doi: 10.1056/NEJMra1814259

- 3. Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. Artificial Intelligence in Healthcare. Elsevier; 2020:25‐60. doi: 10.1016/B978-0-12-818438-7.00002-2

- 4. Karlıdağ T. Otorhinolaryngology and Artificial Intelligence. Turk Arch Otorhinolaryngol. 2019;57(2):59‐60. doi: 10.5152/tao.2019.36116

- 5. NIH strategic plan for data science. National Institutes of Health. 2018. Accessed March 15, 2023. https://datascience.nih.gov/sites/default/files/NIH_Strategic_Plan_for_Data_Science_Final_508.pdf

- 6. Rajpurkar P, Chen E, Banerjee O, Topol EJ. AI in health and medicine. Nat Med. 2022;28(1):31‐38. doi: 10.1038/s41591-021-01614-0

- 7. Brocklehurst P, Field D, Greene K, et al. Computerised interpretation of fetal heart rate during labour (INFANT): a randomised controlled trial. Lancet. 2017;389:1719‐1729. doi: 10.1016/s0140-6736(17)30568-8

- 8. Kelly CJ, Karthikesalingam A, Suleyman M, Corrado G, King D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 2019;17(1):195. doi: 10.1186/s12916-019-1426-2

- 9. Gudis DA, McCoul ED, Marino MJ, Patel ZM. Avoiding bias in artificial intelligence. Int Forum Allergy Rhinol. 2022;13:193‐195. doi: 10.1002/alr.23129

- 10. Esteva A, Chou K, Yeung S, et al. Deep learning‐enabled medical computer vision. NPJ Digit Med. 2021;4(1):5. doi: 10.1038/s41746-020-00376-2

- 11. Creswell JW, Plano Clark VL. Chapter 4: choosing a mixed methods design. In: Creswell JW, Plano Clark VL, eds. Designing and Conducting Mixed Methods Research. Sage; 2007:58‐88.

- 12. Gill AS, Levy JM, Wilson M, Strong EB, Steele TO. Diagnosis and Management of depression in CRS: a knowledge, attitudes and practices survey. Int Arch Otorhinolaryngol. 2021;25(1):e48‐e53. doi: 10.1055/s-0040-1701268

- 13. Grayson JW, McCormick JP, Thompson HM, Miller PL, Cho DY, Woodworth BA. The SARS‐CoV‐2 pandemic impact on rhinology research: a survey of the American Rhinologic Society. Am J Otolaryngol. 2020;41(5):102617. doi: 10.1016/j.amjoto.2020.102617

- 14. Riley CA, Zheng Z, Williams N, Smith TL, Orlandi RR, Tabaee A. Concordance of self‐reported practice patterns of American Rhinologic Society members with the International Consensus Statement of Allergy and Rhinology: rhinosinusitis. Int Forum Allergy Rhinol. 2020;10(5):665‐672. doi: 10.1002/alr.22533

- 15. Lee JT, DelGaudio J, Orlandi RR. Practice patterns in office‐based rhinology: survey of the American Rhinologic Society. Am J Rhinol Allergy. 2019;33(1):26‐35. doi: 10.1177/1945892418804904

- 16. Guest G, Namey E, Chen M. A simple method to assess and report thematic saturation in qualitative research. PLoS One. 2020;15(5):e0232076. doi: 10.1371/journal.pone.0232076

- 17. Averill JB. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qual Health Res. 2002;12(6):855‐866. doi: 10.1177/104973230201200611 [DOI] [PubMed] [Google Scholar]

- 18. O'Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245‐1251. doi: 10.1097/ACM.0000000000000388 [DOI] [PubMed] [Google Scholar]

- 19. Stepan K, Zeiger J, Hanchuk S, et al. Immersive virtual reality as a teaching tool for neuroanatomy. Int Forum Allergy Rhinol. 2017;7(10):1006‐1013. doi: 10.1002/alr.21986 [DOI] [PubMed] [Google Scholar]

- 20. U.S. Food and Drug Administration . Artificial Intelligence and Machine Learning (AI/ML)‐Enabled medical Devices. U.S. Food and Drug Administration. 2022. Accessed February 19, 2023. https://www.fda.gov/medical‐devices/software‐medical‐device‐samd/artificial‐intelligence‐and‐machine‐learning‐aiml‐enabled‐medical‐devices

- 21. Brenner MJ, Shenson JA, Rose AS, et al. New medical device and therapeutic approvals in otolaryngology: state of the art review 2020. OTO Open. 2021;5(4):2473974X211057035. doi: 10.1177/2473974X211057035 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Acclarent . Acclarent launches first ENT navigation technology powered by artificial intelligence. 2021. Accessed April 16, 2023. https://www.jnjmedtech.com/en‐US/news‐events/acclarent‐launches‐first‐ent‐navigation‐technology‐powered‐artificial‐intelligence

- 23. Savoy M. IDx‐DR for diabetic retinopathy screening. Am Fam Physician. 2020;101(5):307‐308. [PubMed] [Google Scholar]

- 24. Smith SW, Walsh B, Grauer K, et al. A deep neural network learning algorithm outperforms a conventional algorithm for emergency department electrocardiogram interpretation. J Electrocardiol. 2019;52:88‐95. doi: 10.1016/j.jelectrocard.2018.11.013 [DOI] [PubMed] [Google Scholar]

- 25. Muehlematter UJ, Daniore P, Vokinger KN. Approval of artificial intelligence and machine learning‐based medical devices in the USA and Europe (2015‐20): a comparative analysis. Lancet Digit Health. 2021;3(3):e195‐e203. doi: 10.1016/S2589-7500(20)30292-2 [DOI] [PubMed] [Google Scholar]

- 26. Collins GS, Moons KGM. Reporting of artificial intelligence prediction models. Lancet. 2019;393(10181):1577‐1579. doi: 10.1016/S0140-6736(19)30037-6 [DOI] [PubMed] [Google Scholar]

- 27. Collins GS, Dhiman P, Andaur Navarro CL, et al. Protocol for development of a reporting guideline (TRIPOD‐AI) and risk of bias tool (PROBAST‐AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 2021;11(7):e048008. doi: 10.1136/bmjopen-2020-048008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Strongwater S, Lee TH. Are EMRs to blame for physician burnout? NEJM Catalyst. 2016;2(5). [Google Scholar]

- 29. Downing NL, Bates DW, Longhurst CA. Physician burnout in the electronic health record era: are we ignoring the real cause? Ann Intern Med. 2018;169(1):50‐51. doi: 10.7326/M18-0139 [DOI] [PubMed] [Google Scholar]

- 30. Ross C, Herman B. Denied by AI: how Medicare advantage plans use algorithms to cut off care for seniors in need. STAT News. March 13, 2023. 2023. Accessed March 15, 2023. https://www.statnews.com/2023/03/13/medicare‐advantage‐plans‐denial‐artificial‐intelligence/

- 31. Beswick DM, Ramakrishnan VR. The utility of image guidance in endoscopic sinus surgery: a narrative review. JAMA Otolaryngol Head Neck Surg. 2020;146(3):286‐290. doi: 10.1001/jamaoto.2019.4161 [DOI] [PubMed] [Google Scholar]

- 32. Ramakrishnan VR, Orlandi RR, Citardi MJ, Smith TL, Fried MP, Kingdom TT. The use of image‐guided surgery in endoscopic sinus surgery: an evidence‐based review with recommendations. Int Forum Allergy Rhinol. 2013;3(3):236‐241. doi: 10.1002/alr.21094 [DOI] [PubMed] [Google Scholar]