Summary

Background

Deep learning has revolutionized digital pathology, allowing automatic analysis of hematoxylin and eosin (H&E) stained whole slide images (WSIs) for diverse tasks. WSIs are broken into smaller images called tiles, and a neural network encodes each tile. Many recent works use supervised attention-based models to aggregate tile-level features into a slide-level representation, which is then used for downstream analysis. Training supervised attention-based models is computationally intensive, architecture optimization of the attention module is non-trivial, and labeled data are not always available. Therefore, we developed an unsupervised and fast approach called SAMPLER to generate slide-level representations.

Methods

Slide-level representations of SAMPLER are generated by encoding the cumulative distribution functions of multiscale tile-level features. To assess effectiveness of SAMPLER, slide-level representations of breast carcinoma (BRCA), non-small cell lung carcinoma (NSCLC), and renal cell carcinoma (RCC) WSIs of The Cancer Genome Atlas (TCGA) were used to train separate classifiers distinguishing tumor subtypes in FFPE and frozen WSIs. In addition, BRCA and NSCLC classifiers were externally validated on frozen WSIs. Moreover, SAMPLER's attention maps identify regions of interest, which were evaluated by a pathologist. To determine time efficiency of SAMPLER, we compared runtime of SAMPLER with two attention-based models. SAMPLER concepts were used to improve the design of a context-aware multi-head attention model (context-MHA).

Findings

SAMPLER-based classifiers were comparable to state-of-the-art attention deep learning models in distinguishing subtypes of BRCA (AUC = 0.911 ± 0.029), NSCLC (AUC = 0.940 ± 0.018), and RCC (AUC = 0.987 ± 0.006) on FFPE WSIs (internal test sets). However, training SAMPLER-based classifiers was >100 times faster. SAMPLER models successfully distinguished tumor subtypes on both internal and external test sets of frozen WSIs. Histopathological review confirmed that SAMPLER-identified high attention tiles contained subtype-specific morphological features. The improved context-MHA distinguished subtypes of BRCA and RCC (BRCA-AUC = 0.921 ± 0.027, RCC-AUC = 0.988 ± 0.010) with increased accuracy on internal test FFPE WSIs.

Interpretation

Our unsupervised statistical approach is fast and effective for analyzing WSIs, with greatly improved scalability over attention-based deep learning methods. The high accuracy of SAMPLER-based classifiers and interpretable attention maps suggest that SAMPLER successfully encodes the distinct morphologies within WSIs and will be applicable to general histology image analysis problems.

Funding

This study was supported by the National Cancer Institute (Grant No. R01CA230031 and P30CA034196).

Keywords: Digital pathology, Deep learning, Unsupervised learning, Representation learning, Multiple instance learning, WSI representation

Research in context.

Evidence before this study

We searched for English, Persian, and French articles containing “deep learning” and “whole slide image” in Google Scholar and PubMed up to December 1, 2022. Several research and review articles were selected, and their computational pipelines were reviewed. All of them analyzed whole slide images (WSIs) by breaking them into a collection of tiles. While early studies trained predictors at the tile level, recent methods use attention-based deep learning models to combine tile-level information into a slide-level representation. These models require supervised training at the tile aggregation step and are computationally expensive. The attention architecture can also have a large impact on the accuracy of the trained predictive model. In contrast, we found only two self-supervised tile aggregation approaches for slide representation learning. They were computationally expensive and sensitive to stain variations unless special training strategies were used. We hypothesized that unsupervised statistical approaches are better suited for encoding the collection of heterogeneous morphologies within WSIs.

Added value of this study

We have developed SAMPLER, a statistical approach to construct slide-level representations from tile-level features. Our approach achieves accuracies comparable to state-of-the-art fully deep learning attention models on multiple benchmark classification tasks, but is > 100 times faster. This rapid speed is because the tile aggregation step in SAMPLER is unsupervised, so it does not require de novo training of a neural network nor substantial tuning of hyperparameters. Also, given slide-level cancer phenotype labels, SAMPLER outputs “attention maps” that highlight image regions informative of each phenotype. SAMPLER attention maps are more interpretable than traditional attention-based deep learning models, as the individual contribution of each deep learning feature at multiple image scales is reported. The statistical foundation of SAMPLER allows for theoretical formulation of the slide representation problem, and our derivations show that SAMPLER is optimal for classification tasks under moderate assumptions.

Implications of all the available evidence

Our work shows that the SAMPLER unsupervised approach to generation of slide representations is efficient and effective for encoding the different morphologies in WSIs. In contrast, fully supervised deep learning models, including attention models, are computationally expensive and may suffer training instability. SAMPLER-based logistic regression classifiers can be trained with little computation cost and are less susceptible to overfitting. Moreover, SAMPLER enables identification of regions of interest without training a classifier, providing interpretability advantages over attention models when training data are limited. While our study focuses on several benchmark tasks, we expect that SAMPLER will be broadly applicable to speed up and improve various image classification tasks in digital pathology.

Introduction

Pathology relies on inspecting H&E-stained tissue on glass slides under a microscope to diagnose, classify, and assess different types of cancer.1 Digital pathology enables pathologists to store, view, and analyze WSIs computationally, which has led to the development of deep learning models to automate and facilitate image analysis.2, 3, 4, 5 These models have achieved high accuracy in distinguishing cancerous versus non-cancerous tissue,6,7 as well as moderate to high accuracy in identifying cancer subtypes.6,8, 9, 10 Current deep learning models can also identify regions of interest in WSIs,7,11 a valuable tool for the diagnosis process.

The large size of WSIs presents challenges for deep learning-based predictive models.6,10,12 Therefore, WSIs are broken into smaller images called tiles, and each tile is encoded in a feature space by a neural network.6,9,10 Tile-level information is then aggregated to arrive at a slide-level prediction, generally using multiple instance learning (MIL) techniques in a weakly supervised learning paradigm. Early MIL techniques trained and quantified predictive models at the tile-level, then obtained slide-level predictions by placing equal importance on all tiles.6,7,10 Subsequent approaches clustered tiles and concatenated cluster-level feature representations.13, 14, 15 Recent studies use attention-based models to construct slide-level representation by aggregating weighted tile-level features,12,16, 17, 18, 19 e.g., via multi-head attention (MHA), hierarchical attention, dual attention, or convolutional block attention modules.12,16,20, 21, 22, 23, 24 Other important approaches have included multiscale attention and vision transformer models, which utilize the correlations across tiles to improve slide-level representations.20,25, 26, 27, 28 Fully CNN-based approaches have also been considered for attention-like functions, e.g., encoding of tiles by a CNN followed by an additional deep CNN,29 possibly with multi-scale tiling.30

Nonetheless, attention-based neural networks have weaknesses. Notably, they are computationally intensive because training is performed at the slide-level. This limits the range of network architectures that can be explored.31 The need for hyperparameter optimization exacerbates this problem since it requires training a multitude of models. Reliable model selection is a particular challenge for small datasets with insufficient data for a three-way data split (train/validation/test). Additionally, the variable number of tiles within WSIs requires special training procedures such as a batch size of one or a fixed number of random tiles to represent each slide. Such procedures can lead to training instability and long convergence times.32,33 Furthermore, attention-based models tend to have performance inferior to classical weakly supervised models on external validation datasets.31 The lack of robustness impedes reliable interpretation34,35 and hinders clinical utilization. Most attention-based models are trained in a supervised setting. Recent work has reported self-supervised learning for generating slide-level representations, but with lower accuracy compared with supervised models and with large performance drops on external datasets.36

To address these challenges, we propose a fully unsupervised statistical approach to construct slide-level representations from tile-level features, called “SAMpling of multiscale empirical distributions for LEarning Representations” (SAMPLER). SAMPLER is based on the concept that the distributions of tile-level deep learning features within a WSI should provide sufficient information to encode the distinct morphologies within a WSI and make clinical predictions, even if the feature distributions are specified only approximately. More precisely, the linear associations of deep learning features with morphologies7 suggest that the marginal distributions of the deep learning features across WSI tiles reflect the distribution of morphologies within the WSI. To quantify feature distributions, SAMPLER computes the empirical cumulative distribution function (CDF) of each tile-level deep learning feature, and then the quantile values of the CDFs make up the WSI's encoded representation. Similar to several recent attention-based models,28,37,38 SAMPLER uses multiple tile sizes to encode multiscale morphological features and spatial relations. Because the SAMPLER representation is based on quantile values, it does not require de novo training of a neural network. The SAMPLER approach also does not require substantial tuning of hyperparameters, other than a consideration of multiple tile sizes and the number of quantiles. These differences make SAMPLER orders of magnitude faster than attention-based models.

The manuscript is structured as follows. We first describe the SAMPLER approach. We compare accuracy of SAMPLER-based logistic regression classifiers with state-of-the-art fully deep learning models on tumor subtyping and survival analysis. Despite their simplicity, the SAMPLER-based classifiers were as effective as or better than state-of-the-art attention-based deep learning models. We confirm that attention maps generated using SAMPLER correctly identify histopathologically meaningful regions. We further show how SAMPLER can be used to improve the design of attention-based neural networks. We also find sufficient conditions for which SAMPLER is optimal. Motivated by these results, we then investigate more general concepts suggested by SAMPLER. For this, we examine the computational efficiency of SAMPLER, as well as a new SAMPLER-inspired attention model (context-MHA). Finally, we investigate the mathematical basis of SAMPLER, showing its theoretical optimality under moderate assumptions.

Methods

Dataset

We studied three cancers: Breast cancer (BRCA), Non-Small Cell Lung Carcinoma (NSCLC), and Renal Cell Carcinoma (RCC). We used two public datasets: The Cancer Genome Atlas (TCGA) and the Clinical Proteomic Tumor Analysis Consortium (CPTAC). The TCGA dataset was split into two groups: frozen (TCGA-FR) and FFPE diagnostic (TCGA-DX). All the data used from CPTAC were frozen (CPTAC-FR).

Tiling process

All WSIs were pre-processed, tiled, and passed through an InceptionV3 model pre-trained on ImageNet as described in Noorbakhsh J et al.6 All WSIs were tiled at 20X, except for diagnostic slides of TCGA-BRCA which were tiled at 20X and 40X. We used 512 × 512 pixel tiles with 50% overlap. We used overlapping tiles to guard against missing relevant morphologies close to edges of two adjacent tiles. If a tile (small) had at least 50% tissue, the medium (3X larger) and large (5X larger) tiles centered around the original tile were saved. Medium and large tiles were then down sampled by factors of 3 and 5, respectively, to yield tiles with 512 pixels on edge. We used multiple tile sizes to obtain a multiscale representation of a WSI. All tiles (small, medium, and large) were then passed through InceptionV3. The 2048 features of the global average pooling (GAP) layer of InceptionV3 were written to disk and are hereafter denoted as deep learning features. We also use the feature vectors of Zhang R et al.27 using a ResNet50 backbone for NSCLC subtyping, which are provided on the associated GitHub page. Preprocessing details are provided in Zhang R et al.27
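The tiling geometry described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names `tile_origins` and `centered_window` are hypothetical, the tissue-fraction filter is omitted, and the handling of windows that extend past the slide boundary is not specified in the text and is left to the caller.

```python
def tile_origins(width, height, tile=512, overlap=0.5):
    """Top-left corners of small tiles on a regular grid with fractional overlap."""
    stride = int(tile * (1 - overlap))  # 256 pixels for 512-pixel tiles at 50% overlap
    xs = range(0, width - tile + 1, stride)
    ys = range(0, height - tile + 1, stride)
    return [(x, y) for y in ys for x in xs]


def centered_window(x, y, tile=512, scale=3):
    """Bounding box (left, top, right, bottom) of a window `scale` times larger
    than the small tile at (x, y) and centered on it. The box may extend beyond
    the slide; padding or skipping such windows is an open choice here."""
    cx, cy = x + tile // 2, y + tile // 2
    half = (tile * scale) // 2
    return (cx - half, cy - half, cx + half, cy + half)


if __name__ == "__main__":
    x, y = tile_origins(4096, 4096)[40]
    print(centered_window(x, y, scale=3))  # medium window, 1536 pixels wide
    print(centered_window(x, y, scale=5))  # large window, 2560 pixels wide
```

Each medium or large window would then be downsampled by its scale factor so that all three tile sizes enter the network at 512 pixels on edge.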

Train/validation/test splits

We used patient level 10-fold cross-validation splits of Chen RJ et al.12 for subtyping tasks on TCGA-DX images. We used the train/validation/test split of Zhang R et al.27 as a secondary splitting for NSCLC subtyping. We used a 10-fold Monte Carlo cross validation for frozen slides of TCGA, breaking data into train (70%) and test (30%) sets at the patient level. A similar process was separately carried out for CPTAC-FR data as no hyper-parameter optimization was performed for models trained on frozen slides. For external validation, models trained on TCGA-FR images were applied to CPTAC-FR and vice-versa. For survival analysis on TCGA-DX images we used the 5-fold cross validation splits of Chen RJ et al.,12 splitting the data to train and validation sets.
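A patient-level Monte Carlo split of the kind used for frozen slides can be sketched as below. The helper name `patient_level_splits` and the seed are assumptions for illustration; the key property is that all slides from one patient land on the same side of each split.

```python
import numpy as np


def patient_level_splits(patient_ids, n_splits=10, test_frac=0.3, seed=0):
    """Yield (train_idx, test_idx) slide indices for repeated random splits,
    drawing whole patients (not individual slides) into the test set."""
    rng = np.random.default_rng(seed)
    patient_ids = np.asarray(patient_ids)
    patients = np.unique(patient_ids)
    n_test = int(round(test_frac * len(patients)))
    for _ in range(n_splits):
        test_patients = rng.choice(patients, size=n_test, replace=False)
        is_test = np.isin(patient_ids, test_patients)
        yield np.flatnonzero(~is_test), np.flatnonzero(is_test)


if __name__ == "__main__":
    ids = ["patient_%d" % (i // 2) for i in range(20)]  # two slides per patient
    for train_idx, test_idx in patient_level_splits(ids, n_splits=2):
        print(len(train_idx), len(test_idx))
```

Splitting on patients rather than slides avoids leakage when one patient contributes several WSIs.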

Computing slide-level representations via SAMPLER

SAMPLER generates slide-level representations by aggregating tile-level features. For each WSI, SAMPLER sorts and reports the values of each tile-level feature at several quantiles. We used 10 quantiles from the 5th percentile to the 95th percentile in steps of 10%. Suppose $X \in \mathbb{R}^{N \times D}$ is a WSI matrix composed of $N$ tiles each encoded in a $D$-dimensional feature space. SAMPLER represents the WSI as a $10 \times D$-dimensional feature vector by reporting the 10 quantiles of each feature.
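The quantile encoding described above amounts to a few lines of NumPy. The sketch below is illustrative only: the function names are hypothetical, and the ordering of the flattened vector (feature-major here) is an assumption, since the text does not specify it.

```python
import numpy as np

# 5th, 15th, ..., 95th percentiles: 10 quantiles in steps of 10%.
QUANTILES = np.linspace(0.05, 0.95, 10)


def sampler_representation(tile_features, quantiles=QUANTILES):
    """Encode an (N tiles x D features) matrix as a vector of length
    len(quantiles) * D holding the per-feature quantile values."""
    tile_features = np.asarray(tile_features, dtype=float)
    q = np.quantile(tile_features, quantiles, axis=0)  # shape (Q, D)
    return q.T.reshape(-1)  # feature-major: all quantiles of feature 0, then feature 1, ...


def multiscale_representation(features_per_scale, quantiles=QUANTILES):
    """Concatenate the single-scale encodings (e.g., small, medium, large tiles)."""
    return np.concatenate([sampler_representation(f, quantiles) for f in features_per_scale])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scales = [rng.normal(size=(300, 2048)) for _ in range(3)]
    print(multiscale_representation(scales).shape)
```

Because the encoding is a fixed summary statistic, slides with different numbers of tiles map to vectors of the same length without any training.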

Classification based on SAMPLER features using logistic regression

We used a two-phase approach. First, we used a t-test to remove features with p-values larger than a threshold ($p_{\mathrm{th}}$). Then, the remaining SAMPLER features were used to train a logistic regression classifier with lasso penalty (scikit-learn 1.1.1). We used a balanced class weight (class_weight = ‘balanced’). For TCGA-DX slides we tested two values of $p_{\mathrm{th}}$ (0.01 and 0.001), three values of C (10, 100, and 1000), two solvers (“saga”39 and “liblinear”40), and three values for max_iter (100, 1000, 10,000). We observed only minor differences in the area under the receiver operating characteristic curve (AUC) of these models across hyperparameter settings when max_iter > 100. Nonetheless, the test AUC of the model with the highest validation AUC is reported for FFPE slides. For frozen slides we set $p_{\mathrm{th}}$ = 0.001, C = 100, solver = “saga”, and max_iter = 1000. We additionally tested if applying principal component analysis (PCA) to features that passed the t-test improved runtime and AUC. Here, all principal component dimensions were kept.
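For a binary task, the two-phase procedure could look like the sketch below. It is a hedged illustration rather than the authors' code: the function name `fit_sampler_classifier` is hypothetical, class labels are assumed to be coded 0/1, and the t-test is SciPy's default two-sided equal-variance test because the text does not say which variant was used.

```python
import numpy as np
from scipy.stats import ttest_ind
from sklearn.linear_model import LogisticRegression


def fit_sampler_classifier(X_train, y_train, p_threshold=0.001, C=100,
                           solver="saga", max_iter=1000):
    """Phase 1: keep features whose two-sided t-test p-value is at most p_threshold.
    Phase 2: fit an L1-penalized (lasso) logistic regression on the kept features."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    _, pvals = ttest_ind(X_train[y_train == 0], X_train[y_train == 1], axis=0)
    keep = np.flatnonzero(pvals <= p_threshold)  # NaN p-values are dropped as well
    clf = LogisticRegression(penalty="l1", C=C, solver=solver,
                             max_iter=max_iter, class_weight="balanced")
    clf.fit(X_train[:, keep], y_train)
    return keep, clf


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 50))
    y = np.arange(200) % 2
    X[y == 1, :5] += 2.0  # only the first five features carry signal
    keep, clf = fit_sampler_classifier(X, y, solver="liblinear")
    print(len(keep), clf.score(X[:, keep], y))
```

The same `keep` indices must be applied to validation and test slides before calling `clf.predict_proba`, so that feature selection uses training data only.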

Survival analysis based on SAMPLER features using the Cox proportional hazards model

We used the Cox proportional hazards model with lasso penalty for two regularization penalty values () for survival analysis. We used the scikit-survival 0.22.1 Python package with n_iter = 1000 and default values for other parameters.

SAMPLER attention maps

Attention-based deep learning models assign an attention score to each tile. Similarly, we compute attention scores for each tile using SAMPLER representations, where tiles with high scores are informative of the slide-level label. Attention maps are obtained by stitching tile-level attention scores and averaging on overlapping regions. We first consider a binary class problem before generalizing to multiclass problems. The unnormalized attention score of tile $t$ of WSI $s$ in class $c_1$, denoted by $a^{c_1}_{s,t}$, is a weighted sum of feature-level attention scores:

$$a^{c_1}_{s,t} = \sum_{f=1}^{D} w_{f,q} \, \log \frac{P_{c_1}(X_{f,q} = x_{t,f})}{P_{c_2}(X_{f,q} = x_{t,f})}, \qquad q = F_{s,f}(x_{t,f})$$

where $x_{t,f}$ is the value of feature $f$ in tile $t$ (the row corresponding to $t$, and column $f$, in the WSI feature matrix $X$) and $q = F_{s,f}(x_{t,f})$ is the value of the empirical CDF of feature $f$ for slide $s$ at $x_{t,f}$. Let $X_{f,q}$ be the value of feature $f$ when the slide-level empirical CDF of a random WSI reaches $q$. $P_{c_1}$ and $P_{c_2}$ are the probability distribution functions (p.d.f.’s) of $X_{f,q}$ across all slides of classes $c_1$ and $c_2$, respectively. $w_{f,q}$ is the -log (p-value) of a hypothesis test where the null hypothesis assumes that the SAMPLER feature corresponding to $X_{f,q}$ does not differ between classes. We used the t-test throughout. We used linear interpolation to compute $w_{f,q}$ and the p.d.f. values when $q$ does not correspond to a SAMPLER feature. We used kernel density estimation with a Gaussian kernel to estimate the p.d.f. of $X_{f,q}$. For multiclass problems, to generate the attention map of a slide in class $c$, for each feature we compute the -log (p-value) of class $c$ versus the other classes to obtain $w_{f,q}$, and use the average of the p.d.f.’s of the classes other than $c$ instead of $P_{c_2}$. Unless otherwise stated, we use large tiles to show examples of regions with high or low attention.

$a^{c_1}_{s,t}$ can be construed as the log-likelihood ratio of tile $t$ in WSI $s$ belonging to class $c_1$, assuming (a) the log-likelihood ratio is a linear function of feature-level log-likelihood ratios, and (b) the decile-conditioned distributions are used to compute feature-level log-likelihood ratios (Supplementary File S1). It only remains to identify the weights that combine the feature-level log-likelihood ratios, for which we used the -log (p-value) of a hypothesis test, both for simplicity and to improve the stability of attention maps. As individual deep learning features correlate with interpretable morphologies,7 the linearity assumption is reasonable.
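A simplified, single-scale, binary-class version of this scoring scheme is sketched below to make the steps concrete: per-feature empirical CDF lookup, decile-conditioned log-likelihood ratios from Gaussian kernel density estimates, t-test-based weights, and linear interpolation between deciles. It is an interpretation of the text, not the authors' implementation. The function name, the array layout of the training representations, the small constant `eps` that guards the logarithms, and the clamping of CDF values outside the 5th to 95th percentile range (a side effect of `np.interp`) are all assumptions.

```python
import numpy as np
from scipy.stats import gaussian_kde, ttest_ind

QUANTILES = np.linspace(0.05, 0.95, 10)


def tile_attention_scores(tiles, reps_c1, reps_c2, quantiles=QUANTILES, eps=1e-12):
    """tiles: (N, D) tile features of one slide.
    reps_c1, reps_c2: (n_slides, D, Q) decile values of training slides per class.
    Returns one unnormalized score per tile; larger means more class-1-like."""
    tiles = np.asarray(tiles, dtype=float)
    n_tiles, n_feat = tiles.shape
    # Weight per (feature, decile): -log p-value of a two-sided t-test between classes.
    _, pvals = ttest_ind(reps_c1, reps_c2, axis=0)  # shape (D, Q)
    weights = -np.log(np.clip(pvals, eps, 1.0))
    scores = np.zeros(n_tiles)
    for f in range(n_feat):
        x = tiles[:, f]
        # Empirical CDF of feature f within this slide, evaluated at each tile's value.
        q = np.searchsorted(np.sort(x), x, side="right") / n_tiles
        # Log-likelihood ratio of x under each decile's class-conditional density.
        llr = np.empty((len(quantiles), n_tiles))
        for k in range(len(quantiles)):
            p1 = gaussian_kde(reps_c1[:, f, k])(x)
            p2 = gaussian_kde(reps_c2[:, f, k])(x)
            llr[k] = np.log(p1 + eps) - np.log(p2 + eps)
        # Interpolate weights and ratios from the decile grid to each tile's CDF level.
        for t in range(n_tiles):
            w_t = np.interp(q[t], quantiles, weights[f])
            scores[t] += w_t * np.interp(q[t], quantiles, llr[:, t])
    return scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    deciles = lambda mu: np.quantile(rng.normal(mu, 1, (200, 2)), QUANTILES, axis=0).T
    reps1 = np.stack([deciles(2.0) for _ in range(30)])
    reps2 = np.stack([deciles(0.0) for _ in range(30)])
    print(tile_attention_scores(rng.normal(2.0, 1, (200, 2)), reps1, reps2).mean())
```

The double loop is written for readability; a practical version would precompute the kernel density estimates once per class and vectorize the interpolation.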

Expert review of attention maps

A board-certified pathologist assessed SAMPLER's attention maps on all subtyping tasks. Original WSIs, overall attention maps, and example tiles with high and low attention were provided to the pathologist. Expert review (a) provided a description of morphological features in example tiles, (b) assessed whether tiles labeled as high/low attention contained morphological features informative of patient phenotype, and (c) assessed whether biologically relevant regions are correctly labeled as high attention in the overall attention maps.

Comparison with existing models

We compared SAMPLER with several different deep learning methods on subtyping, survival, and staging tasks using TCGA-DX data. Using splits of Chen RJ et al.,12 we compared SAMPLER with MIL,16 CLAM-SB,16 DeepAttnMISL,17 GCN-MIL,18 DS-MIL,19 and HIPT12 for BRCA, NSCLC, and RCC subtyping, and compared SAMPLER with SLPD-MIL,41 SLPD-DS-MIL,41 and SLPD-ViT41 for BRCA and NSCLC subtyping. Implementation details are provided in Chen RJ et al.12 and Yu Z et al.41 We reported the AUCs of these models from Chen RJ et al.12 and Yu Z et al.41 In addition, using splits of Zhang R et al.27 we compared SAMPLER with ABMIL,42 PT-MTA,43 MIL-RNN, DS-MIL,19 CLAM-SB,16 CLAM-MB,16 TransMIL,44 DGMIL,45 DTFD-MIL (AFS),46 DTFD-MIL (MaxS),46 MSG-Transformer,47 and MMIL-Transformer27 on NSCLC subtyping. Implementation details of the models are provided in Zhang R et al.27 For survival analysis, we compared SAMPLER with ABMIL,42 DeepAttnMISL,17 GCN-MIL,18 DS-MIL,19 and HIPT12 using splits of Chen RJ et al.12

SAMPLER-inspired context aware multi-head attention (context-MHA)

We propose context-MHA, a multi-head context-aware attention model whose architecture is motivated by the associations of SAMPLER features with morphological features. We used a multi-head attention model with 4 heads, each using 1 neuron with sigmoid activation. Total attention scores of each head were normalized to 1. Attention outputs were concatenated and passed through a 1-layer perceptron using ReLU activation, reducing feature dimensionality back to the original input size. This layer was followed by the classification layer. If a slide had more than Tmax (=1000 or 4000) tiles, Tmax tiles were randomly selected. We used Adam optimizer and categorical cross entropy for training. The model with the lowest validation loss across all training epochs (=50 or 100) was saved.

Lightweight attention model (tinyDeepMIL) for comparison to SAMPLER

Here we design an extremely lightweight attention model (tinyDeepMIL) to compare SAMPLER's computation cost with a basic and crude attention model. DeepMIL42 is a popular and foundational deep learning model for MIL, using a single attention mask for generating slide-level features. DeepMIL42 uses a multi-layer perceptron (MLP) for assigning attention weights to tiles. The attention weight of tile $t$ is:

$$a_t = \frac{\exp\left\{ w^{\top} \left( \tanh(V x_t^{\top}) \odot \mathrm{sigm}(U x_t^{\top}) \right) \right\}}{\sum_{j=1}^{N} \exp\left\{ w^{\top} \left( \tanh(V x_j^{\top}) \odot \mathrm{sigm}(U x_j^{\top}) \right) \right\}}$$

where $w$, $V$, and $U$ denote the weights of the perceptron layers used to compute attention weights, $x_t$ is the feature vector of tile $t$, i.e., the $t$-th row of $X$, and $\odot$ denotes elementwise matrix multiplication. The number of neurons to use in each layer is a hyperparameter. Since we use DeepMIL as a baseline for runtime comparisons, we set the hyperparameters such that the number of trainable parameters is minimized. We used one neuron with tanh activation and one neuron with sigmoid activation. Therefore, $w$ is a scalar, which we set to 1 to minimize the number of trainable parameters. We call this architecture tinyDeepMIL. tinyDeepMIL uses small and large tile-level representations. We used hyperparameters similar to context-MHA. Although tinyDeepMIL uses a crude attention module, its performance (BRCA: AUC = 0.917 ± 0.032, NSCLC: AUC = 0.936 ± 0.017, RCC: AUC = 0.984 ± 0.011) was comparable to more complex attention models and SAMPLER-based classifiers (Tables 1 and 2). Context-MHA and tinyDeepMIL use the same splits of Chen RJ et al.12
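The forward pass of such a minimal gated attention pooling step can be sketched in NumPy as follows. This shows inference only (no training loop), omits bias terms, and uses hypothetical names; `v` and `u` stand for the weights of the single tanh neuron and the single sigmoid neuron, and the scalar output weight is fixed to 1 as described above.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def tiny_gated_attention(X, v, u, w=1.0):
    """X: (N, D) tile features; v, u: (D,) weights of the tanh and sigmoid neurons.
    Returns softmax attention weights over tiles and the attention-pooled slide vector."""
    X = np.asarray(X, dtype=float)
    logits = w * np.tanh(X @ v) * sigmoid(X @ u)  # one gated score per tile
    logits = logits - logits.max()                # stabilize the softmax
    a = np.exp(logits)
    a = a / a.sum()                               # weights sum to 1 across tiles
    return a, a @ X


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(1000, 2048))
    v, u = rng.normal(scale=0.01, size=(2, 2048))
    a, slide_vec = tiny_gated_attention(X, v, u)
    print(a.sum(), slide_vec.shape)
```

With one neuron per branch, the attention module has only 2 × D trainable weights, which is what makes it a useful lower bound for runtime comparisons.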

Table 1.

SAMPLER WSI level classification.

| Tissue | Dataset | Magnification | Small | Medium | Large | Small + Medium + Large |
|---|---|---|---|---|---|---|
| BRCA | TCGA-DX | 20X | 0.899 ± 0.023 | 0.898 ± 0.035 | 0.899 ± 0.038 | 0.911 ± 0.029 |
| BRCA | TCGA-FR | 20X | 0.918 ± 0.029 | 0.918 ± 0.019 | 0.912 ± 0.023 | 0.932 ± 0.024 |
| BRCA | CPTAC-FR | 20X | 0.786 ± 0.066 | 0.768 ± 0.064 | 0.788 ± 0.098 | 0.797 ± 0.075 |
| NSCLC | TCGA-DX | 20X | 0.934 ± 0.023 | 0.937 ± 0.021 | 0.928 ± 0.025 | 0.938 ± 0.021 |
| NSCLC | TCGA-FR | 20X | 0.920 ± 0.008 | 0.907 ± 0.008 | 0.893 ± 0.017 | 0.927 ± 0.013 |
| NSCLC | CPTAC-FR | 20X | 0.867 ± 0.033 | 0.868 ± 0.031 | 0.877 ± 0.027 | 0.883 ± 0.027 |
| RCC | TCGA-DX | 20X | 0.984 ± 0.004 | 0.981 ± 0.009 | 0.982 ± 0.007 | 0.987 ± 0.006 |
| RCC | TCGA-FR | 20X | 0.989 ± 0.002 | 0.984 ± 0.003 | 0.977 ± 0.007 | 0.991 ± 0.002 |
| BRCA | TCGA-DX | 40X | 0.798 ± 0.033 | 0.826 ± 0.022 | 0.824 ± 0.020 | 0.850 ± 0.014 |

AUC performance of SAMPLER cancer subtype classification for breast cancer, non-small cell lung cancer, and renal cell carcinoma. AUCs are stratified by dataset source and image magnification. Results are shown for classifiers trained using one tile scale at a time (small, medium, or large, Fig. 1) or combining all 3 tile scales (small, medium, and large).

Table 2.

Comparison of SAMPLER with deep learning attention models.

| Architecture | BRCA | NSCLC | RCC |
|---|---|---|---|
| MIL | 0.778 ± 0.091 | 0.892 ± 0.042 | 0.959 ± 0.015 |
| CLAM-SB | 0.858 ± 0.067 | 0.928 ± 0.021 | 0.973 ± 0.017 |
| DeepAttnMISL | 0.784 ± 0.061 | 0.778 ± 0.045 | 0.943 ± 0.016 |
| GCN-MIL | 0.840 ± 0.073 | 0.831 ± 0.034 | 0.957 ± 0.012 |
| DS-MIL | 0.838 ± 0.074 | 0.920 ± 0.024 | 0.971 ± 0.016 |
| HIPT | 0.874 ± 0.060 | 0.952 ± 0.021 | 0.980 ± 0.013 |
| SAMPLER | 0.911 ± 0.029 | 0.940 ± 0.018 | 0.987 ± 0.006 |

Runtime comparison analysis

TCGA-FFPE NSCLC subtyping task was used for runtime comparisons. We used an environment with 4 logical cores of Intel(R) Xeon(R) Gold 6150 CPUs @ 2.70 GHz and 32 GB of RAM on a high-performance computing (HPC) platform. We considered 4 parallel processes, each using 1 logical core and 8 GB of RAM for computing SAMPLER features. SAMPLER features include all three scales (small, medium, and large). We used total runtimes to compare SAMPLER with context-MHA and tinyDeepMIL. SAMPLER's runtime is the total time to compute SAMPLER representations, train a model, and predict labels on validation and test WSIs across all 10 splits. We considered SAMPLER-based logistic regression classifiers with the following hyperparameters for runtime comparisons: no PCA initialization, C = 100, and solver = liblinear. For deep learning models we considered the small and large model for context-MHA and tinyDeepMIL and report the total run time for training a model and predicting labels on validation and test WSIs across all 10 splits. For attention based deep learning models we considered runtimes of models trained for 50 epochs as it provided a satisfactory balance between validation AUC and runtime.

Ethics

The study did not require ethical approval.

Statistics

Details on statistical tests are provided in the main text. All t-tests were two-tailed. All error bars indicate standard deviation.

Role of funders

The funders had no roles in study design, analysis, or preparation of the manuscript.

Results

SAMPLER overview

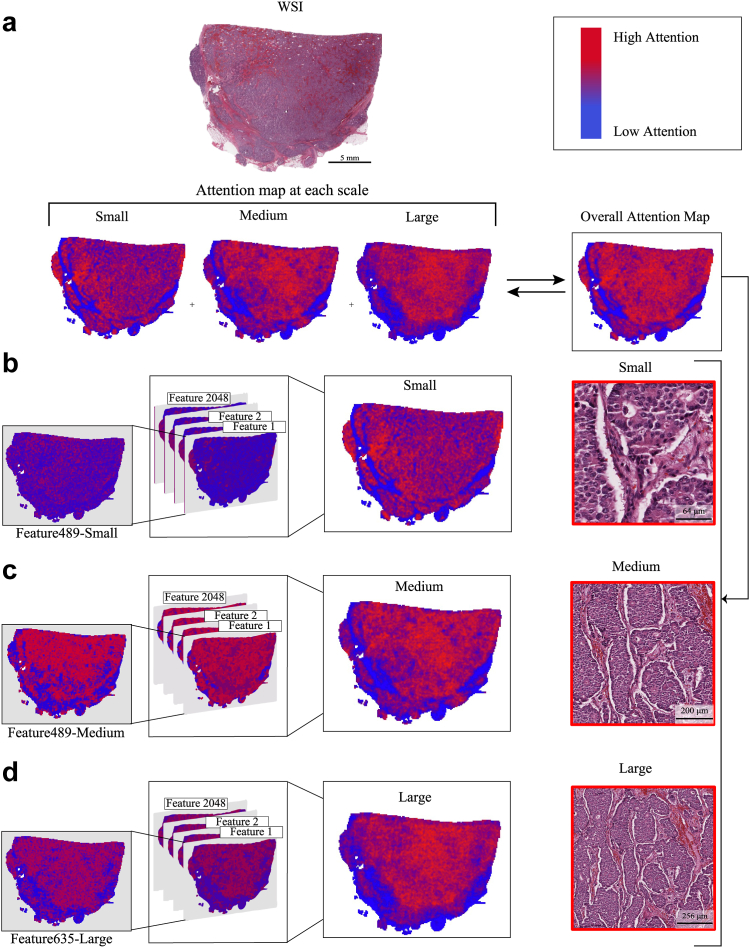

We propose an unsupervised statistical approach called SAMPLER to efficiently combine the feature vectors of tiles into a single slide-level feature vector, summarized in Fig. 1 (Methods). To achieve this, we consider a multi-scale tile that combines the contributions of sub-tiles of three sizes: 512 (small), 1536 (3 × 512, medium), and 2560 (5 × 512, large) pixels wide, respectively. Use of multiple sub-tile sizes provides flexibility in capturing morphological information at different scales. We employ InceptionV3 pre-trained on ImageNet as a backbone to generate a representation for each sub-tile in a 2048-dimensional feature space (Fig. 1a). Next, we encode each feature at the slide-level by specifying its decile distribution across tiles, i.e., for each feature at each sub-tile size, we report the value at which the cumulative distribution function (CDF) reaches the respective decile (Fig. 1b). Thus, SAMPLER represents each WSI by a 3 × 10 × 2048 = 61,440-dimensional feature vector. The WSI feature vector can then be used for downstream analysis.

Fig. 1.

SAMPLER representation for a whole slide image. a. An InceptionV3 backbone is used to compute the deep learning features for each tile in a WSI. The scale bar for the WSI denotes the length corresponding to 5 mm. Features are computed at 3 scales using sub-tiles outlined in red, purple, and black (small, medium, and large tiles, respectively). The scale bar denotes the length corresponding to 256 μm. b. For each feature, the distribution of values across tiles is computed. The decile values for each feature's CDF, concatenated across features and scales, form the WSI representation.

Effectiveness of SAMPLER for cancer subtype classification

To evaluate the effectiveness of SAMPLER, we examined its performance on several subtype classification tasks with a range of difficulties: distinguishing invasive ductal carcinoma (IDC) from invasive lobular carcinoma (ILC) in breast cancer (BRCA); distinguishing lung adenocarcinoma (LUAD) from lung squamous cell carcinoma (LUSC) in non-small cell lung carcinoma (NSCLC); and distinguishing clear cell (KIRC), papillary (KIRP) and chromophobe (KICH) subtypes in renal cell carcinoma (RCC). For each task, we analyzed 20X WSIs from three datasets: diagnostic formalin fixed paraffin embedded (FFPE) slides of The Cancer Genome Atlas (TCGA-DX), frozen slides of TCGA (TCGA-FR), and frozen slides of the Clinical Proteomic Tumor Analysis Consortium (CPTAC-FR) (Table 1).

SAMPLER-based logistic regression classifiers distinguished cancer subtypes, achieving AUCs > 0.75 across all tasks and tiling parameters (Table 1 and Supplementary Table S1). For most tasks, AUC values increased modestly when using all tile sizes (small, medium, and large model) compared to when using SAMPLER features from only the small tiles (Table 1 and Supplementary Table S1). These results suggest that SAMPLER features of the 512 × 512 pixel tiles are sufficient for distinguishing between classes in most cases, which is consistent with previous studies.48

Previous studies have shown that the magnification used for WSIs can affect the accuracy of classification models.48,49 Optimizations with respect to the magnification parameter and multiscale modeling are expected to be more impactful when models based on individual scales perform poorly.48 We therefore evaluated the effect of image magnification on prediction accuracy using the BRCA data, as it was a more challenging subtyping task than RCC and NSCLC subtyping. The multiscale model using small, medium, and large tiles had a higher AUC (AUC = 0.850 ± 0.014) than the single-scale model using small tiles (AUC = 0.798 ± 0.033) when tiling WSIs at 40X (p-value = 0.000229 [two-tailed t-test]). In contrast, at 20X, AUC values for all tiling approaches were high (≥0.898) and the addition of multiscale had a more limited effect (small, medium, and large vs small, p-value = 0.319 [two-tailed t-test]). This indicates that the multiscale encoding approach provides more robustness to variation in magnification parameters.

SAMPLER is as accurate as current fully deep learning attention models

We next compared the performance of SAMPLER to several state-of-the-art fully deep learning attention models (Methods). For the BRCA and RCC subtyping tasks, SAMPLER was the most accurate of all methods tested. For NSCLC subtyping, SAMPLER was the second-most accurate method (Table 2 and Supplementary Table S2). In all subtyping tasks SAMPLER had smaller AUC standard deviations than fully deep learning models, except for SLPD-MIL in NSCLC subtyping (SAMPLER AUC = 0.940 ± 0.018, SLPD-MIL AUC = 0.926 ± 0.017). We compared SAMPLER with additional fully deep learning models on NSCLC subtyping (Supplementary File S2), and SAMPLER performed comparably to state-of-the-art deep learning models.

To compare SAMPLER with deep learning models on challenging tasks, we performed survival analysis on several cancers (Methods, Supplementary Table S3). SAMPLER had the highest C-index for KIRC (0.696 ± 0.0251) and KIRP (0.740 ± 0.053), and the second highest C-index for IDC (0.564 ± 0.028). Interestingly, no approach consistently outperformed others in survival analysis across cancers.

SAMPLER attention maps are histopathologically meaningful

Attention maps are useful for showing which regions within a WSI are important to classification. In attention neural networks, attention layers identify tiles informative of slide labels by optimizing a weight function learned during training. Although SAMPLER does not use attention layers, we can generate analogous “attention” maps based on the differential statistics of SAMPLER features between classes in the training data. SAMPLER attention maps differ from attention maps of fully deep learning-based models, as they are not explicitly implemented as part of the classification rule and do not have trainable parameters. Still, like attention layers of deep learning models, they output tile scores prior to classification, and tiles with large weights are most indicative of a slide-level phenotype. Briefly, we generate SAMPLER attention maps at the tile level. For each tile, we define an attention score for each feature, where the feature scores are the log-likelihood ratios of decile conditioned feature distributions. We can also generate a weighted sum of feature scores to yield a tile-level score. The weights are assigned as proportional to log-transformed p-values of a hypothesis test on the SAMPLER feature (Methods). Scores can be further integrated across tile scales (small, medium, or large) to obtain overall attention maps (Methods).

Through this approach, SAMPLER can yield attention maps for each tile scale and each feature, providing interpretability (Fig. 2). We observed that attention maps from different scales can be similar, but are not necessarily identical (Fig. 2a). We also noticed that the attention map that combines all scales is smoother due to its superposition of signals (Fig. 2a). This is consistent with previous studies suggesting multiscale attention maps are smoother than single scale maps.47 Among the features that contribute most to successful classification, some can have spatially similar attention maps, while others differ (Fig. 2b, c, d and Supplementary Figure S1). This provides evidence that different groups of features represent distinct morphologies, each contributing separately to tissue classifications. Note that the feature attention maps are aggregated to form global attention maps (Fig. 2b, c, d). For the slide shown in Fig. 2, example tiles with high attention scores at small, medium, and large scales are shown in Fig. 2a. The large tile shows irregular sheets and nests of invasive ductal carcinoma, with less intervening stroma than would be seen in invasive lobular carcinoma. Progression to medium and small tiles more distinctly shows areas of lumen formation and the cohesive nature of the cells.

Fig. 2.

SAMPLER generates attention maps as a function of tile scale and individual features. a. SAMPLER outputs attention maps for each tile scale (small, medium, and large tiles), which are combined to obtain the overall attention map. The tiles with the highest attention score at each of the small, medium, and large scales are shown on the side; the attention maps independently identified the same region of the slide at all three tile scales. The scale bars denote the length corresponding to 5 mm, 64 μm, 256 μm, and 200 μm for the WSI, small, medium, and large tile, respectively. As shown on the color map, red/blue coloring indicates higher/lower attention. SAMPLER aggregates feature-level attention maps at each scale (b. small, c. medium, d. large) to obtain the attention map of each tiling scale. Features contributing most to prediction may have different attention maps, suggesting they identify distinct morphologies indicative of phenotype.

To further interpret SAMPLER-identified regions of interest, we generated attention maps for the BRCA, NSCLC, and RCC classification tasks in TCGA FFPE slides. Overall attention maps, tiles with high attention (red border), and tiles with low attention (blue border) were identified and were evaluated by a board-certified pathologist (Methods, Fig. 3). WSI morphologies known to be associated with the tumor subtype were observed in tiles with high attention scores. High attention tiles for IDC showed solid sheets of tumor cells with areas of glandular lumen formation, while low attention tiles had predominantly non-neoplastic fibrous tissue with only focal areas of tumor (Fig. 3a, top panel). High attention tiles for ILC showed typical features including single cells and cords infiltrating fibrous tissue with uniform cytology, while low attention tiles showed less tumor involvement and more abundant uninvolved adipose tissue (Fig. 3a, bottom panel). LUAD high attention tiles included carcinoma with glandular lumen formation diagnostic of adenocarcinoma, while low attention tiles showed non-neoplastic lung parenchyma (Fig. 3b, top panel). LUSC high attention tiles contained solid sheets of tumor with necrosis and focal keratin pearls, while low attention tiles showed non-neoplastic lung parenchyma (Fig. 3b, bottom panel). KIRC high attention tiles showed sheets and nests of tumor cells with clear cytoplasm, distinct nuclear membranes, and arborizing vasculature, while low attention tiles contained predominantly fibrous stroma (Fig. 3c, top panel). KIRP high attention tiles contained tumor with papillary architecture, and low attention tiles contained non-neoplastic kidney, fibrous stroma, and areas without tissue (Fig. 3c, middle panel). KICH high attention tiles showed sheets of tumor cells with clear to granular eosinophilic cytoplasm, distinct nuclear membranes, perinuclear halos and wrinkled hyperchromatic nuclei; low attention tiles had non-neoplastic kidney and fibrous stroma (Fig. 3c, bottom panel). The high attention tiles for each tumor type contained distinctive diagnostic features for classification by the pathologist (e.g., IDC vs. ILC), supporting their utility in classification by SAMPLER. SAMPLER high attention regions were concordant with pathologist evaluations in TCGA frozen slides as well (Supplementary Figure S2). Briefly, high attention tiles contained tumor with the above features specific to the tumor type, and low attention tiles generally had areas without tissue, or contained fibrous stroma, blood, or artifacts such as tissue folds.

Fig. 3.

Attention maps for (a) BRCA, (b) NSCLC, and (c) RCC subtype classification. (Left column) WSI H&E image. (Middle column) Attention map generated by combining scores from all features from all three tile scales. Red/blue coloring shows more/less informative regions. (Right column) Left panel indicates the highest attention tiles. Right panel indicates the lowest attention tiles. Scale bars denote the length corresponding to 5 mm and 256 μm for WSIs and example tiles, respectively. All examples are from TCGA-DX FFPE WSIs.

SAMPLER classifiers are robust across data sources

Next, we tested the ability of SAMPLER-based classifiers to generalize across different data sources, i.e., TCGA and CPTAC. This was to determine robustness to batch effects in sample acquisition, staining, and scanning, which are common constraints in the applicability of H&E-based predictive models. We applied models trained on TCGA-FR to CPTAC-FR data and vice versa. This was done for both the BRCA and NSCLC subtyping tasks. The RCC task was omitted because CPTAC RCC slides are all clear cell carcinomas.

SAMPLER classifiers generalized well to external datasets, with the AUC (0.803 ± 0.023) of the TCGA-BRCA trained model applied to CPTAC-BRCA being similar (p-value = 0.812 [two-tailed t-test]) to the internal test AUC (0.797 ± 0.075) of classifiers trained on CPTAC-BRCA (Fig. 4a). The AUC (0.792 ± 0.027) of the CPTAC-BRCA trained model applied to TCGA-BRCA was less than (p-value = 3.593e-10 [two-tailed t-test]) the internal test AUC (0.932 ± 0.024) of classifiers trained on TCGA-BRCA (Fig. 4b). However, it was similar to the internal test AUC for CPTAC-BRCA (p-value = 0.845 [two-tailed t-test]). We observed similar results for NSCLC subtyping for models trained on TCGA-FR then tested on CPTAC-FR and vice versa (Supplementary Figure S3).
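
The AUC comparisons above can be checked approximately from the reported summary statistics alone. The sketch below is a standard-library illustration under stated assumptions: an equal-variance (Student's) two-sample t-test, 10 splits per group (as in Fig. 4), and the reported ± values taken as sample standard deviations. The text does not state which t-test variant was used, and the function names are hypothetical. In practice, `scipy.stats.ttest_ind_from_stats` performs the same computation.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_t_test(mean1, sd1, n1, mean2, sd2, n2, steps=20000):
    """Equal-variance two-sample t-test from summary statistics.

    Returns (t, p). The p-value is 1 minus the t density integrated over
    [-|t|, |t|] with Simpson's rule, so it is not reliable for extremely
    small p-values (subtractive cancellation). `steps` must be even.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    t = (mean1 - mean2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    a = abs(t)
    h = 2 * a / steps
    total = t_pdf(-a, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(-a + i * h, df)
    return t, 1 - total * h / 3


# TCGA-trained model on CPTAC-BRCA vs. CPTAC-BRCA internal test AUC.
t, p = two_tailed_t_test(0.803, 0.023, 10, 0.797, 0.075, 10)
print(f"t = {t:.3f}, p = {p:.3f}")
```

Under these assumptions the sketch gives p ≈ 0.81, in line with the reported p-value of 0.812.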

Fig. 4.

External validation on frozen WSIs. a. AUC and standard deviation (error bar) from 10 splits of BRCA subtype classification of CPTAC-FR test WSIs (N = 33 patients in each split), using models trained on CPTAC-FR or TCGA-FR (left). Attention map and examples of high attention tiles within a CPTAC-FR WSI (ILC) for a model trained on CPTAC-FR (middle). Attention map and examples of high attention tiles within the same CPTAC-FR WSI for a model trained on TCGA-FR (right). Scale bars denote 5 mm and 256 μm for example WSIs and tiles, respectively. b. AUC and standard deviation (error bar) from 10 splits of BRCA subtype classification of TCGA-FR test WSIs (N = 87 patients in each split), using models trained on TCGA-FR or CPTAC-FR (left). Attention map and examples of high attention tiles within a TCGA-FR WSI (IDC) for a model trained on CPTAC-FR (middle). Attention map and examples of informative tiles within the same TCGA-FR WSI for a model trained on TCGA-FR (right). Scale bars denote 5 mm and 256 μm for example WSIs and tiles, respectively.

Expert evaluations confirmed that regions with high attention scores in CPTAC-BRCA WSIs based on TCGA-BRCA training data were biologically meaningful (Fig. 4a). Similarly, based on CPTAC-BRCA training data we were able to identify biologically relevant regions in TCGA-BRCA images (Fig. 4b). For example, we observed infiltrating cords and single cells with low grade cytologic features in the high attention tiles in Fig. 4a, and sheets and clusters of tumor cells with areas of lumen formation in Fig. 4b. Similar observations were made in NSCLC (Supplementary Figure S3). Overall, the results of the external validation and expert evaluations suggest that the classifiers and attention maps generated using SAMPLER are robust to variations across datasets.

SAMPLER concepts motivate architecture optimization of fully deep learning models

Typical attention-based deep learning models have multiple weaknesses including but not limited to the large number of trainable parameters and the fact that training at the slide-level may create training instability and overfitting in such models.31,50 The simple effectiveness of the SAMPLER representation for WSI analysis suggests that SAMPLER concepts can guide the design of improved attention models. We expect that such improved models would have fewer parameters than typical deep attention models but maintain predictive accuracy.

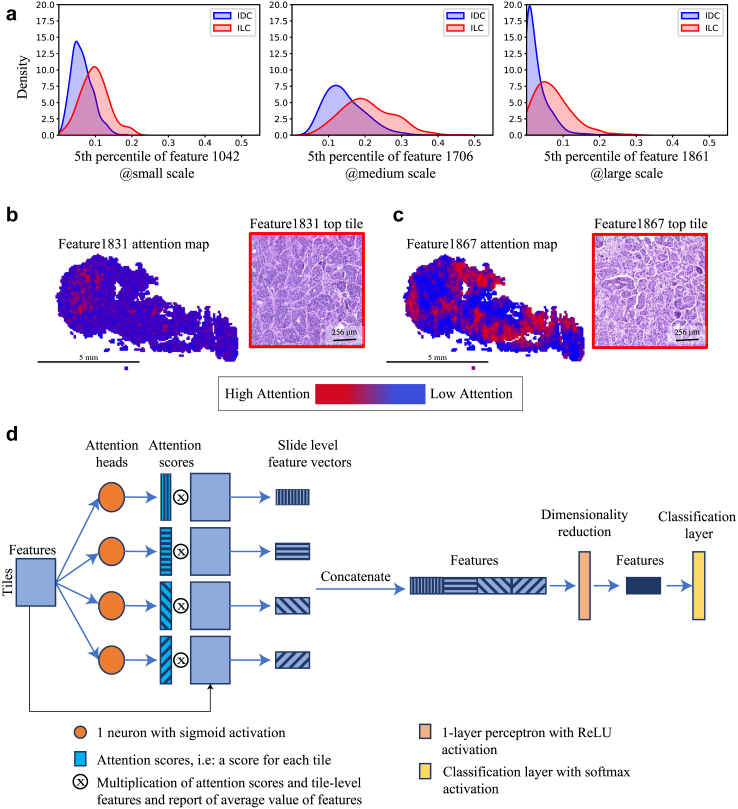

We have observed several common behaviors of the SAMPLER representation. First, some SAMPLER features are systematically higher or lower between classes (Fig. 5a), i.e., individual features can have linear rather than complex differences between cancer subtypes. This suggests that a 1-layer perceptron will be sufficient for identifying important tiles, as opposed to the multi-layer perceptrons42 used in typical attention models. Second, different features may correspond to different morphologies within a WSI. For example, in the classification of IDC/ILC, the regions salient to feature1831 and feature1837 differ (Fig. 5b and c), and the top tiles for these features show different morphologies (Fig. 5b and c). Specifically, feature1831 shows larger tumor clusters and darker staining compared with feature1837. This variability across features supports the need for multi-head attention in the deep learning model. Previous studies have shown that some features are strongly correlated and can be clustered into groups.6 This suggests that only a small number of attention heads are needed. Lastly, multiscale classifiers are more accurate than single scale classifiers, and multiscale attention maps better distinguish tumor regions than single scale maps. This suggests that multi-scale (i.e., context aware) representations are valuable for predictive accuracy and robustness to image noise.48,49

Fig. 5.

SAMPLER guides design of an improved attention model: a. Density plot of top SAMPLER features of each tile size for classification of IDC (blue) versus ILC (red). Individual feature at a specified quantile and tile size is shown. (left) feature1042 at percentile 5 from small tiles. (middle) feature1706 at percentile 55 from medium tiles. (right) feature1861 at percentile 5 from large tiles. b. Attention map of feature1831 using large tiles (left), and example tile with high attention score from attention map (right) c. Attention map of feature1867 using large tiles (left), and an example tile with high attention score from attention map (right). Scale bars denote 5 mm and 256 μm for WSIs and example tiles, respectively. d. Schematic representation of context-MHA architecture.

We propose an attention deep learning model called context aware multi-head attention architecture (context-MHA) (Fig. 5d) that takes into account the behaviors observed above. The model uses a 1-layer perceptron for calculating attention, uses a small number of heads (4) for multi-head attention, and uses a context-aware representation via multi-scale tiles. These simplifications yield a much lighter network that can be more easily trained and tested than standard attention models.
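
The attention pooling step of such a model can be sketched in plain Python as below. This is an illustrative forward pass only, not the context-MHA implementation: the function names and the random toy inputs are hypothetical, training is omitted, and representing context by concatenating features of the same region at several tile scales into each input vector is an assumption.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_attention_pool(tiles, head_weights, head_biases):
    """Pool a bag of tile vectors using single-layer attention heads.

    tiles:        list of N tile feature vectors of length D
    head_weights: list of K weight vectors of length D (one linear layer per head)
    head_biases:  list of K scalars
    Returns (slide vector of length K * D, K lists of N attention weights).
    """
    slide_vector, attention = [], []
    for w, b in zip(head_weights, head_biases):
        # One linear unit per head scores every tile (the "1-layer perceptron").
        scores = [sum(wi * xi for wi, xi in zip(w, x)) + b for x in tiles]
        a = softmax(scores)
        # Attention-weighted average of the tile vectors for this head.
        pooled = [sum(ai * x[d] for ai, x in zip(a, tiles)) for d in range(len(w))]
        slide_vector.extend(pooled)
        attention.append(a)
    return slide_vector, attention


# Toy usage with 4 heads, matching the number of heads stated above.
rng = random.Random(0)
toy_tiles = [[rng.gauss(0, 1) for _ in range(6)] for _ in range(50)]
toy_heads = [[rng.gauss(0, 0.1) for _ in range(6)] for _ in range(4)]
toy_vector, toy_attention = multi_head_attention_pool(toy_tiles, toy_heads, [0.0] * 4)
print(len(toy_vector), len(toy_attention))
```

Each head adds only D weights and one bias, which illustrates why a single-layer attention module with few heads has far fewer trainable parameters than a multi-layer attention network.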

We tested our context-MHA on TCGA-DX data. Compared with other deep learning models (see Table 2), context-MHA had the highest AUC for both the BRCA (AUC = 0.921 ± 0.027) and RCC (AUC = 0.987 ± 0.008) subtyping tasks. For the NSCLC subtyping task, it was second only to the HIPT model (context-MHA AUC = 0.946 ± 0.016, HIPT AUC = 0.952 ± 0.021). Context-MHA also had the lowest AUC deviations across all subtyping tasks. Context-MHA had slightly higher AUC than the SAMPLER classifiers on all subtyping tasks.

SAMPLER is computationally efficient

A major advantage of SAMPLER over fully deep learning attention models is its low computation cost. The computation cost of SAMPLER can be divided into two parts: (1) SAMPLER WSI level feature computation, and (2) classifier training followed by testing on the validation and test sets.

To evaluate part (1), we measured the time needed to compute WSI level representations from tile level features on the TCGA-DX NSCLC dataset. When we used all the tiles in the WSI, it took less than 135 min (Fig. 6a) (Methods). However, SAMPLER is based on the distribution of feature values over all tiles within the WSI, and these distributions can also be approximated by sampling only a subset of tiles to further reduce the runtime (Fig. 6a) (Methods). To establish an upper bound on the runtime, for our analyses we used all tiles in each WSI unless noted. Loading tile level features from disk accounts for more than half of the computation runtime. SAMPLER is also highly parallelizable, as each WSI is processed independently. The worst-case complexity of SAMPLER is a function of S, D, and H, the number of slides in the dataset, the dimensionality of the feature space, and the maximum number of sampled tiles in a WSI, respectively (Supplementary File S3). This theoretical result establishes the low computation cost of SAMPLER.
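
The WSI level feature computation can be illustrated with a minimal sketch that encodes each tile-level feature by quantiles of its empirical distribution over the tiles of a slide, optionally after subsampling tiles. The quantile grid (5th, 15th, ..., 95th percentiles) is an assumption inferred from the percentiles named in the Fig. 5 caption, and the function names and the `max_tiles` parameter are hypothetical; this is not the released SAMPLER code.

```python
import random


def percentile(sorted_values, pct):
    """Linearly interpolated percentile (0..100) of a pre-sorted list."""
    pos = pct * (len(sorted_values) - 1) / 100
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac


def slide_representation(tile_features, max_tiles=None, seed=0):
    """Encode one slide as per-feature quantiles of its tile features.

    tile_features: list of tiles, each a list of D feature values
    max_tiles:     optional cap on the number of tiles sampled per slide
    Returns a flat list of length D * 10.
    """
    if max_tiles is not None and len(tile_features) > max_tiles:
        tile_features = random.Random(seed).sample(tile_features, max_tiles)
    grid = [5 + 10 * k for k in range(10)]  # assumed grid: 5, 15, ..., 95
    n_features = len(tile_features[0])
    representation = []
    for d in range(n_features):
        # Sorting one feature across tiles gives its empirical CDF.
        column = sorted(tile[d] for tile in tile_features)
        representation.extend(percentile(column, pct) for pct in grid)
    return representation


# Toy slide: 101 tiles with 2 features each.
toy_slide = [[float(i), float(100 - i)] for i in range(101)]
print(slide_representation(toy_slide)[:10])
```

Because each slide is processed independently and no parameters are learned, this step can be run once per slide, in parallel, and the results cached for any downstream classifier.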

Fig. 6.

Runtime comparison of SAMPLER with attention-based deep learning models: a. Runtime of SAMPLER feature computation for lung WSIs of TCGA-DX (N = 1503). b. Runtimes of logistic regression models (train, validation, and test) under various hyperparameters, using small, medium, and large tiles. c. Runtime of context-MHA and tinyDeepMIL under various training hyperparameters using small and large tile-level features. Error bars denote standard deviation.

To evaluate part (2), we measured the time needed to train, validate, and test a SAMPLER-based classifier to distinguish LUAD vs. LUSC on the TCGA-DX NSCLC dataset. We used logistic regression for classification tasks and measured runtime across different training choices. We observed that the “liblinear” optimizer was faster than “saga” using raw SAMPLER features. We also tested a model in which we reduced the dimensionality of the SAMPLER representation by PCA before inputting it into the logistic regression classifier. We found that application of PCA drastically reduced runtime of “saga” (Fig. 6b). Interestingly, the choice between “liblinear” and “saga”, as well as the use of PCA, only had a marginal effect on the AUCs (Supplementary Files S4 and S5). For all tested parameters, PCA or “liblinear” used less than 30 s of runtime for training, validation, and testing (Fig. 6b and c, Supplementary Files S4 and S5).

We also compared runtimes for SAMPLER followed by logistic regression to runtimes of attention-based deep learning approaches. For the attention models, we analyzed our context-MHA model and a simple version of DeepMIL.42 For DeepMIL, we specifically set its hyperparameters to minimize trainable parameters, yielding a lightweight attention model we refer to as tinyDeepMIL (Methods). SAMPLER and logistic regression runtimes are orders of magnitude faster than both context-MHA and tinyDeepMIL (Fig. 6c). When sampling at most 1000 tiles per WSI to construct slide-level features, SAMPLER was 454 and 288 times faster than context-MHA and tinyDeepMIL, respectively. When sampling at most 4000 tiles, SAMPLER was 332 and 316 times faster than context-MHA and tinyDeepMIL, respectively. Moreover, when using all tiles for SAMPLER and constraining context-MHA and tinyDeepMIL to use only 4000 tiles per WSI, SAMPLER was still 114 and 109 times faster (Fig. 6c, Methods). A key reason for this speed advantage is that SAMPLER computes WSI level representations once and saves them, while an attention-based model performs this task every time an attention layer is being trained. Therefore, hyperparameter optimization of SAMPLER-based classifiers is much faster than that of fully deep learning models. For example, training and testing a logistic regression classifier (solver = liblinear, max_iter = 1000) is 43,000 times faster than tinyDeepMIL (1000 max tiles@50epochs). Runtimes of logistic regression classifiers under additional hyperparameters are provided in Supplementary Files S4 and S5.

SAMPLER is optimal under moderate conditions

Why does SAMPLER work, and under what conditions will it be applicable? We have found that SAMPLER is theoretically optimal for constructing slide-level representations that can recapitulate the Bayes classifier under moderate conditions. Supplementary File S3 proves this optimality for combining tile-level features into a slide-level representation, for a given and fixed tile-level feature extractor. Although information loss in the feature extraction step cannot be recovered by SAMPLER, independence of features and dense empirical sampling of feature CDFs are sufficient conditions to guarantee that SAMPLER can reconstruct the Bayes classifier. Moreover, the independence assumption can be relaxed for feature spaces encoding local tile interactions, and for an embedded feature space equipped with pairwise feature interactions. Two caveats are that our derivations do not provide information on the functional form of the SAMPLER-based Bayes classifier, and the optimality conditions of SAMPLER may be violated in practice. However, the strong performance of SAMPLER compared with fully deep learning models (Table 2) is likely due to two factors: (1) InceptionV3 features are linearly associated with phenotypes,7 and (2) empirical CDFs are smooth enough to be well-approximated by 10 deciles.

Discussion

We have presented SAMPLER to provide an unsupervised statistical approach for aggregating tile features into WSI representations. Remarkably, SAMPLER achieves performance comparable with state-of-the-art supervised attention-based deep learning models on various downstream tasks (Table 2, Supplementary Tables S2 and S3, and Supplementary File S2), suggesting it successfully encodes the heterogeneous morphological features in WSIs. At the same time, SAMPLER is computationally cheaper by at least two orders of magnitude than supervised models. While previous unsupervised models performed poorly on external test sets,36 SAMPLER-based classifiers performed robustly across datasets. Such prior studies used self-supervised objectives36 and auxiliary tasks51 to train models that output slide representations. In contrast, SAMPLER uses a fully unsupervised approach and encodes the empirical CDFs of individual features. SAMPLER can be used to simplify and increase the accuracy of attention-based deep learning architectures. Additionally, our theoretical results provide sufficient conditions under which SAMPLER is optimal, i.e., is sufficient to recapitulate the Bayes classifier.

A strength of SAMPLER is its provision of “attention” maps with robust interpretability that agree with pathologist evaluations. SAMPLER attention maps are robust because they are derived directly from the statistical distribution of features (i.e., the deep learning features outputted from pre-trained architectures) and do not rely on the estimated weights of a trained classifier. Because these attention maps do not require training, they have the potential to reveal predictive regions even for WSI datasets with too few slides to train attention-based deep learning models. SAMPLER attention maps are also decomposable into the contributions of each deep learning feature, which can further assist interpretability.7 Combined with the high accuracy of SAMPLER classifiers, the biological meaningfulness of these attention maps shows that SAMPLER representations successfully encode the distinct morphologies within WSIs. An advantage of SAMPLER over previous statistical models that encode each WSI using mean or median values of deep learning features7,52 is that SAMPLER yields feature-decomposable attention maps. Mean/median approaches have achieved high accuracies in certain tasks,7,52 but they do not output attention maps, which limits their interpretability.

It is worth emphasizing SAMPLER's advantages in computational efficiency over attention models. SAMPLER requires construction of WSI level representations only once. These representations can then be input to any downstream classifier; therefore, many classification models can be simultaneously trained and validated. Furthermore, since SAMPLER representations are at the WSI level, training using SAMPLER features is less expensive than approaches that use sets of tiles as inputs, such as attention-based deep learning models.

A myriad of attention architectures have been proposed in the literature, but architecture optimization and comparisons over architectures are often infeasible because such large models are expensive to train.31 In contrast, we were able to design a lightweight attention model (context-MHA) directly from concepts motivated by SAMPLER, and it performed better than the large but naive attention models in nearly all cases.

In summary, SAMPLER is an effective unsupervised approach for generating slide-level representations. We demonstrated its efficacy by training simple subtype classification models with accuracies comparable to state-of-the-art deep learning models, and by generating interpretable attention maps that identify regions of the WSI that are informative of tumor phenotype. SAMPLER facilitates the advancement of deep learning attention architectures and greatly reduces computational needs for slide-level classification. The simplicity, speed, and effectiveness of the SAMPLER representation support the value of unsupervised analysis to improve diverse research areas that have been limited by representation learning approaches, such as image similarity search.51 Such studies may catalyze further improvements to advance tissue image analysis and patient treatment.

Contributors

P.M. conceptualized the idea, analyzed the data, and wrote the paper. T.S. contributed to data analysis and provided pathological evaluations. A.F. conceptualized the idea, performed data analysis, provided the theoretical results, wrote the paper, and oversaw the work. J.C. oversaw the work, provided conceptual input, and wrote the paper. A.F. and P.M. have accessed and verified the underlying data. All authors read and approved the final version of the manuscript.

Data sharing statement

TCGA data are publicly available through the GDC portal (https://portal.gdc.cancer.gov) and CPTAC data are publicly available through the cancer imaging archive53 (https://www.cancerimagingarchive.net/). The code and SAMPLER representations of datasets analyzed in this study are publicly available at https://github.com/TheJacksonLaboratory/SAMPLER.

Declaration of interests

A.F. acknowledges research support from the JAX Scholar fellowship from The Jackson Laboratory. T.B.S. acknowledges effort as a consultant for Google on projects distinct from the current study. Other authors declare no competing interests.

Acknowledgements

The authors gratefully acknowledge the contribution of cyberinfrastructure high performance computing resources at The Jackson Laboratory. A.F. would like to thank The Jackson Laboratory for the JAX Scholar award. Research reported in this publication was supported by NIH grant R01CA230031 and NIH/NCI grant P30 CA034196.

Supplementary data related to this article can be found at https://doi.org/10.1016/j.ebiom.2023.104908.

SAMPLER's GitHub page: https://github.com/TheJacksonLaboratory/SAMPLER.

Contributor Information

Ali Foroughi pour, Email: ali.foroughipour@jax.org.

Jeffrey H. Chuang, Email: jeff.chuang@jax.org.

Appendix A. Supplementary data

References

- 1.Fischer A.H., Jacobson K.A., Rose J., Zeller R. Hematoxylin and eosin staining of tissue and cell sections. Cold Spring Harb Protoc. 2008;2008(5):pdb–rot4986. doi: 10.1101/pdb.prot4986. [DOI] [PubMed] [Google Scholar]

- 2.Zhang Z., Chen P., McGough M., et al. Pathologist-level interpretable whole-slide cancer diagnosis with deep learning. Nat Mach Intell. 2019;1(5):236–245. [Google Scholar]

- 3.Lin H., Chen H., Graham S., Dou Q., Rajpoot N., Heng P.A. Fast scannet: fast and dense analysis of multi-gigapixel whole-slide images for cancer metastasis detection. IEEE Trans Med Imag. 2019;38(8):1948–1958. doi: 10.1109/TMI.2019.2891305. [DOI] [PubMed] [Google Scholar]

- 4.Zhou X., Li C., Rahaman M.M., et al. A comprehensive review for breast histopathology image analysis using classical and deep neural networks. IEEE Access. 2020;8:90931–90956. [Google Scholar]

- 5.Li X., Li C., Rahaman M.M., et al. A comprehensive review of computer-aided whole-slide image analysis: from datasets to feature extraction, segmentation, classification and detection approaches. Artif Intell Rev. 2022;55(6):4809–4878. [Google Scholar]

- 6.Noorbakhsh J., Farahmand S., Foroughi Pour A., et al. Deep learning-based cross-classifications reveal conserved spatial behaviors within tumor histological images. Nat Commun. 2020;11(1):6367. doi: 10.1038/s41467-020-20030-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Foroughi Pour A., White B.S., Park J., Sheridan T.B., Chuang J.H. Deep learning features encode interpretable morphologies within histological images. Sci Rep. 2022;12(1):9428. doi: 10.1038/s41598-022-13541-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Zhang J., Hou C., Zhu W., et al. 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) IEEE; 2022. Attention multiple instance learning with Transformer aggregation for breast cancer whole slide image classification; pp. 1804–1809. [Google Scholar]

- 9.Wang Q., Zou Y., Zhang J., Liu B. Second-order multi-instance learning model for whole slide image classification. Phys Med Biol. 2021;66(14) doi: 10.1088/1361-6560/ac0f30. [DOI] [PubMed] [Google Scholar]

- 10.Coudray N., Ocampo P.S., Sakellaropoulos T., et al. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat Med. 2018;24(10):1559–1567. doi: 10.1038/s41591-018-0177-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Yang Z., Wang X., Xiang J., et al. The devil is in the details: a small-lesion sensitive weakly supervised learning framework for prostate cancer detection and grading. Virchows Arch. 2023;482(3):525–538. doi: 10.1007/s00428-023-03502-z. [DOI] [PubMed] [Google Scholar]

- 12.Chen R.J., Chen C., Li Y., et al. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022. Scaling vision transformers to gigapixel images via hierarchical self-supervised learning; pp. 16144–16155. [Google Scholar]

- 13.Yao J., Zhu X., Huang J. Medical image computing and computer assisted intervention–MICCAI 2019: 22nd international conference. Springer International Publishing; Shenzhen, China: 2019. Deep multi-instance learning for survival prediction from whole slide images; pp. 496–504. [Google Scholar]

- 14.Zhao L., Xu X., Hou R., et al. Lung cancer subtype classification using histopathological images based on weakly supervised multi-instance learning. Phys Med Biol. 2021;66(23) doi: 10.1088/1361-6560/ac3b32. [DOI] [PubMed] [Google Scholar]

- 15.Wang X., Chen H., Gan C., et al. Weakly supervised deep learning for whole slide lung cancer image analysis. IEEE Trans Cybern. 2019;50(9):3950–3962. doi: 10.1109/TCYB.2019.2935141. [DOI] [PubMed] [Google Scholar]

- 16.Lu M.Y., Williamson D.F., Chen T.Y., Chen R.J., Barbieri M., Mahmood F. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat Biomed Eng. 2021;5(6):555–570. doi: 10.1038/s41551-020-00682-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Yao J., Zhu X., Jonnagaddala J., Hawkins N., Huang J. Whole slide images based cancer survival prediction using attention guided deep multiple instance learning networks. Med Image Anal. 2020;65 doi: 10.1016/j.media.2020.101789. [DOI] [PubMed] [Google Scholar]

- 18.Zhao Y., Yang F., Fang Y., et al. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020. Predicting lymph node metastasis using histopathological images based on multiple instance learning with deep graph convolution; pp. 4837–4846. [Google Scholar]

- 19.Li B., Li Y., Eliceiri K.W. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2021. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning; pp. 14318–14328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Raza M., Awan R., Bashir R.M., Qaiser T., Rajpoot N.M. Mimicking a pathologist: dual attention model for scoring of gigapixel histology images. arXiv. 2023 doi: 10.48550/arXiv.2302.09682. preprint arXiv:2302.09682. [DOI] [Google Scholar]

- 21.Yan R., Shen Y., Zhang X., et al. Histopathological bladder cancer gene mutation prediction with hierarchical deep multiple-instance learning. Med Image Anal. 2023;87 doi: 10.1016/j.media.2023.102824. [DOI] [PubMed] [Google Scholar]

- 22.Wibawa M.S., Lo K.W., Young L.S., Rajpoot N. European conference on computer vision. Springer Nature Switzerland; Cham: 2022. Multi-scale attention-based multiple instance learning for classification of multi-gigapixel histology images; pp. 635–647. [Google Scholar]

- 23.Ijaz A., Raza B., Kiran I., et al. Modality specific CBAM-VGGNet model for the classification of breast histopathology images via transfer learning. IEEE Access. 2023;11:15750–15762. [Google Scholar]

- 24.Xiong C., Chen H., Sung J., King I. Diagnose like a pathologist: transformer-enabled hierarchical attention-guided multiple instance learning for whole slide image classification. arXiv. 2023 doi: 10.48550/arXiv.2306.05029. preprint arXiv:2301.08125. [DOI] [Google Scholar]

- 25.Fan M., Chakraborti T., Chang E.I., Xu Y., Rittscher J. Medical image computing and computer assisted intervention–MICCAI 2020: 23rd international conference, Lima, Peru, october 4–8, 2020, proceedings, Part V 23. Springer International Publishing; 2020. Microscopic fine-grained instance classification through deep attention; pp. 490–499. [Google Scholar]

- 26.Li C., Zhu X., Yao J., Huang J. 2022 26th international conference on pattern recognition (ICPR) IEEE; 2022. Hierarchical transformer for survival prediction using multimodality whole slide images and genomics; pp. 4256–4262. [Google Scholar]

- 27.Zhang R., Zhang Q., Liu Y., Xin H., Liu Y., Wang X. Multi-level multiple instance learning with transformer for whole slide image classification. arXiv. 2023 doi: 10.48550/arXiv.2306.05029. preprint arXiv:2306.05029. [DOI] [Google Scholar]

- 28.Cai H., Feng X., Yin R., et al. MIST: multiple instance learning network based on Swin Transformer for whole slide image classification of colorectal adenomas. J Pathol. 2023;259(2):125–135. doi: 10.1002/path.6027. [DOI] [PubMed] [Google Scholar]

- 29.Tellez D., Litjens G., van der Laak J., Ciompi F. Neural image compression for gigapixel histopathology image analysis. IEEE Trans Pattern Anal Mach Intell. 2019;43(2):567–578. doi: 10.1109/TPAMI.2019.2936841. [DOI] [PubMed] [Google Scholar]

- 30.Deng R., Cui C., Remedios L.W., et al. Cross-scale multi-instance learning for pathological image diagnosis. arXiv. 2023 doi: 10.48550/arXiv.2304.00216. preprint arXiv:2304.00216. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Ghaffari Laleh N., Muti H.S., Loeffler C.M., et al. Benchmarking weakly-supervised deep learning pipelines for whole slide classification in computational pathology. Med Image Anal. 2022;79 doi: 10.1016/j.media.2022.102474. [DOI] [PubMed] [Google Scholar]

- 32.Shallue C.J., Lee J., Antognini J., Sohl-Dickstein J., Frostig R., Dahl G.E. Measuring the effects of data parallelism on neural network training. J Mach Learn Res. 2019;20(112):1–49. [Google Scholar]

- 33.Ziyin L., Li B., Galanti T., Ueda M. The probabilistic stability of stochastic gradient descent. arXiv. 2023 doi: 10.48550/arXiv.2303.13093. preprint arXiv:2303.13093. [DOI] [Google Scholar]

- 34.Ilyas A., Santurkar S., Tsipras D., Engstrom L., Tran B., Madry A. Adversarial examples are not bugs, they are features. Adv Neural Inf Process Syst. 2019;32 [Google Scholar]

- 35.Nielsen I.E., Dera D., Rasool G., Ramachandran R.P., Bouaynaya N.C. Robust explainability: a tutorial on gradient-based attribution methods for deep neural networks. IEEE Signal Process Mag. 2022;39(4):73–84. [Google Scholar]

- 36.Tavolara T.E., Gurcan M.N., Niazi M.K. Contrastive multiple instance learning: an unsupervised framework for learning slide-level representations of whole slide histopathology images without labels. Cancers. 2022;14(23):5778. doi: 10.3390/cancers14235778. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Ding S., Wang J., Li J., Shi J. International conference on medical image computing and computer-assisted intervention. Springer Nature Switzerland; Cham: 2023. Multi-scale prototypical transformer for whole slide image classification; pp. 602–611. [Google Scholar]

- 38.Hou W., Yu L., Lin C., et al. H²-MIL: exploring hierarchical representation with heterogeneous multiple instance learning for whole slide image analysis. Proc AAAI Conf Artif Intell. 2022;36(1):933–941. [Google Scholar]

- 39.Defazio A., Bach F., Lacoste-Julien S. SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. Adv Neural Inf Process Syst. 2014;27 [Google Scholar]

- 40.Fan R.E., Chang K.W., Hsieh C.J., Wang X.R., Lin C.J. LIBLINEAR: a library for large linear classification. J Mach Learn Res. 2008;9:1871–1874. [Google Scholar]

- 41.Yu Z., Lin T., Xu Y. International conference on medical image computing and computer-assisted intervention. Springer Nature Switzerland; Cham: 2023. SLPD: slide-level prototypical distillation for WSIs; pp. 259–269. [Google Scholar]

- 42.Ilse M., Tomczak J., Welling M. Vol. 3. PMLR; 2018. Attention-based deep multiple instance learning; pp. 2127–2136. (International conference on machine learning). [Google Scholar]

- 43.Li W., Nguyen V.D., Liao H., Wilder M., Cheng K., Luo J. Medical image computing and computer assisted intervention–MICCAI 2019: 22nd international conference, Shenzhen, China, October 13–17, 2019, proceedings, Part I 22. Springer International Publishing; 2019. Patch transformer for multi-tagging whole slide histopathology images; pp. 532–540. [Google Scholar]

- 44.Shao Z., Bian H., Chen Y., Wang Y., Zhang J., Ji X. TransMIL: transformer based correlated multiple instance learning for whole slide image classification. Adv Neural Inf Process Syst. 2021 Dec 6;34:2136–2147. [Google Scholar]

- 45.Qu L., Luo X., Liu S., Wang M., Song Z. International conference on medical image computing and computer-assisted intervention. Springer Nature Switzerland; Cham: 2022. Distribution guided multiple instance learning for whole slide image classification; pp. 24–34. [Google Scholar]

- 46.Zhang H., Meng Y., Zhao Y., et al. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022. DTFD-MIL: double-tier feature distillation multiple instance learning for histopathology whole slide image classification; pp. 18802–18812. [Google Scholar]

- 47.Fang J., Xie L., Wang X., Zhang X., Liu W., Tian Q. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2022. Msg-transformer: exchanging local spatial information by manipulating messenger tokens; pp. 12063–12072. [Google Scholar]

- 48.D'Amato M., Szostak P., Torben-Nielsen B. A comparison between single-and multi-scale approaches for classification of histopathology images. Front Public Health. 2022;10 doi: 10.3389/fpubh.2022.892658. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Zhang H., Liu Z., Song M., Lu C. Hagnifinder: recovering magnification information of digital histological images using deep learning. J Pathol Inf. 2023;14 doi: 10.1016/j.jpi.2023.100302. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Lu M.Y., Chen R.J., Wang J., Dillon D., Mahmood F. Semi-supervised histology classification using deep multiple instance learning and contrastive predictive coding. arXiv. 2019 preprint arXiv:1910.10825. [Google Scholar]

- 51.Fashi P.A., Hemati S., Babaie M., Gonzalez R., Tizhoosh H.R. A self-supervised contrastive learning approach for whole slide image representation in digital pathology. J Pathol Inf. 2022;13 doi: 10.1016/j.jpi.2022.100133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Asilian Bidgoli A., Rahnamayan S., Dehkharghanian T., et al. Evolutionary deep feature selection for compact representation of gigapixel images in digital pathology. Artif Intell Med. 2022;132 doi: 10.1016/j.artmed.2022.102368. [DOI] [PubMed] [Google Scholar]

- 53.Clark K., Vendt B., Smith K., et al. The Cancer Imaging Archive (TCIA): maintaining and operating a public information repository. J Digit Imag. 2013;26:1045–1057. doi: 10.1007/s10278-013-9622-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data
