Abstract

Over the past decade, medical education has shifted from a time-based approach to a competency-based approach for surgical training. This transition presents many new systemic challenges. The Society for Improving Medical Professional Learning (SIMPL) was created to respond to these challenges through coordinated collaboration across an international network of medical educators. The primary goal of the SIMPL network was to implement a workplace-based assessment and feedback platform. To date, SIMPL has developed, implemented, and sustained a platform that represents the earliest and largest effort to support workplace-based assessment at scale. The SIMPL model for collaborative improvement demonstrates a potential approach to addressing other complex systemic problems in medical education.

Keywords: assessment, feedback, surgical education, collaboration, quality improvement

Mini Abstract: Over the past decade, medical education has shifted from a time-based approach to competency-based medical education (CBME) for surgical training. The Society for Improving Medical Professional Learning (SIMPL) was created to implement a workplace-based assessment and feedback platform through coordinated collaboration across an international network of medical educators and training programs. This Surgical Perspective article reviews the challenges of establishing a CBME platform that improves the quality of education provided to medical trainees while also improving the quality of care provided to patients.

Rising concern for patient safety, duty hour restrictions, and increased expectations for faculty supervision have led to a more regulated system of supervised training and evaluation within medical education.1 In response, organizations such as the Accreditation Council for Graduate Medical Education and the American Board of Surgery in the United States, the Royal College of Physicians and Surgeons of Canada, and the General Medical Council in the United Kingdom, among many others, have pursued implementation of a more competency-based medical education (CBME) system.2 At the same time, many local initiatives have tried to implement CBME within individual training programs. However, both the top-down and bottom-up approaches to implementing CBME have faced numerous challenges. SIMPL was designed to mitigate some of these challenges and implement CBME at scale.

THE SIMPL APPROACH

Founded by surgical educators, the Society for Improving Medical Professional Learning (SIMPL) was established as a nonprofit collaborative of training programs interested in improving the quality of care provided to patients by improving the quality of education provided to medical trainees.3 A natural starting point for their work was to help overcome barriers to implementing a CBME system, with a specific focus on competency assessment.

From the beginning, SIMPL members agreed to apply a collaborative, continuous quality improvement approach to implementing change. To support this approach, SIMPL members collectively developed a shared data infrastructure, which includes an assessment platform to collect performance ratings, a data registry for research, and advanced analytic tools to evaluate and inform educational practice. Under the governance of a volunteer group of medical educators, all SIMPL members agreed to share these collective resources, including their data, to support the larger mission.

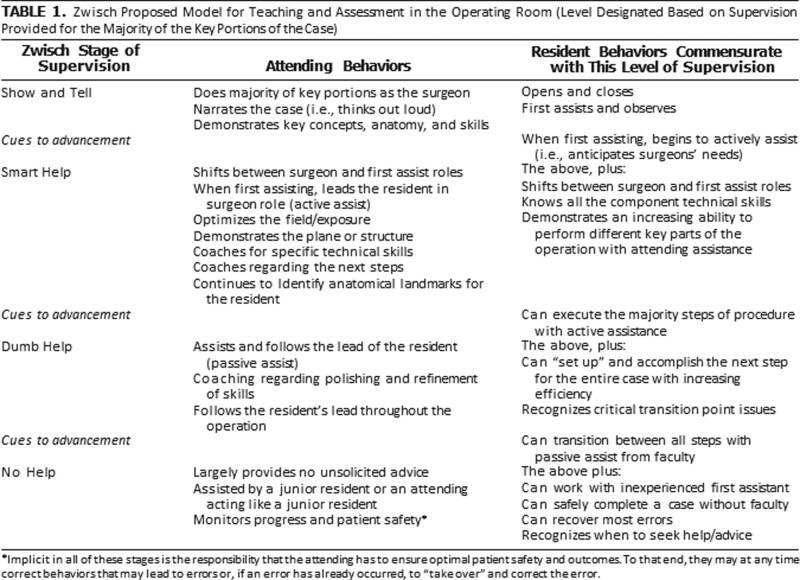

SIMPL’s work began in 2012, when surgical educators at Northwestern University launched a new workplace-based assessment (ie, SIMPL OR) designed specifically to evaluate and provide immediate feedback regarding operative performance.4 To support utilization, this assessment was implemented as a smartphone app. Raters used this app to assess trainee retrospective entrustment (autonomy) and prospective entrustment (performance) on a 4- and 5-point scale, respectively (Figure 1). Raters also classified cases by patient-related complexity (easiest 1/3, middle 1/3, hardest 1/3) relative to similar cases in their own practice (Figure 2). By 2014, 2 other residency programs had expressed interest in using SIMPL OR. Together, these programs incorporated SIMPL as a nonprofit educational quality improvement collaborative.
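The three ratings described above can be pictured as one small record per case. The following is a minimal sketch under stated assumptions, not the SIMPL app's actual data model: the class names, field names, and numeric codes are hypothetical, the four autonomy level names are those given for the Zwisch scale in the rater training section, and because the labels of the 5-point performance scale are not listed in this article, performance is stored as a plain integer from 1 to 5.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class Zwisch(IntEnum):
    """Autonomy levels named in the article; the numeric codes are assumptions."""
    SHOW_AND_TELL = 1
    ACTIVE_HELP = 2
    PASSIVE_HELP = 3
    SUPERVISION_ONLY = 4


class Complexity(Enum):
    """Patient-related complexity relative to similar cases in the rater's practice."""
    EASIEST_THIRD = "easiest 1/3"
    MIDDLE_THIRD = "middle 1/3"
    HARDEST_THIRD = "hardest 1/3"


@dataclass(frozen=True)
class OperativeRating:
    """Hypothetical record for one rated case (field names are assumptions)."""
    autonomy: Zwisch
    performance: int  # 5-point scale; level labels are not given in the article
    complexity: Complexity

    def __post_init__(self) -> None:
        # Reject values outside the 5-point performance scale.
        if not 1 <= self.performance <= 5:
            raise ValueError("performance must be between 1 and 5")


rating = OperativeRating(Zwisch.PASSIVE_HELP, 4, Complexity.MIDDLE_THIRD)
print(rating.autonomy.name, rating.performance, rating.complexity.value)
```

Keeping autonomy, performance, and complexity as separate fields mirrors the way the text treats them as distinct judgments made for the same case.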

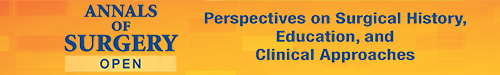

FIGURE 1. Zwisch scale of progressive autonomy4 (with permission).

FIGURE 2. Screenshots of the SIMPL evaluation workflow showing the Zwisch performance and Complexity scales3 (with permission).

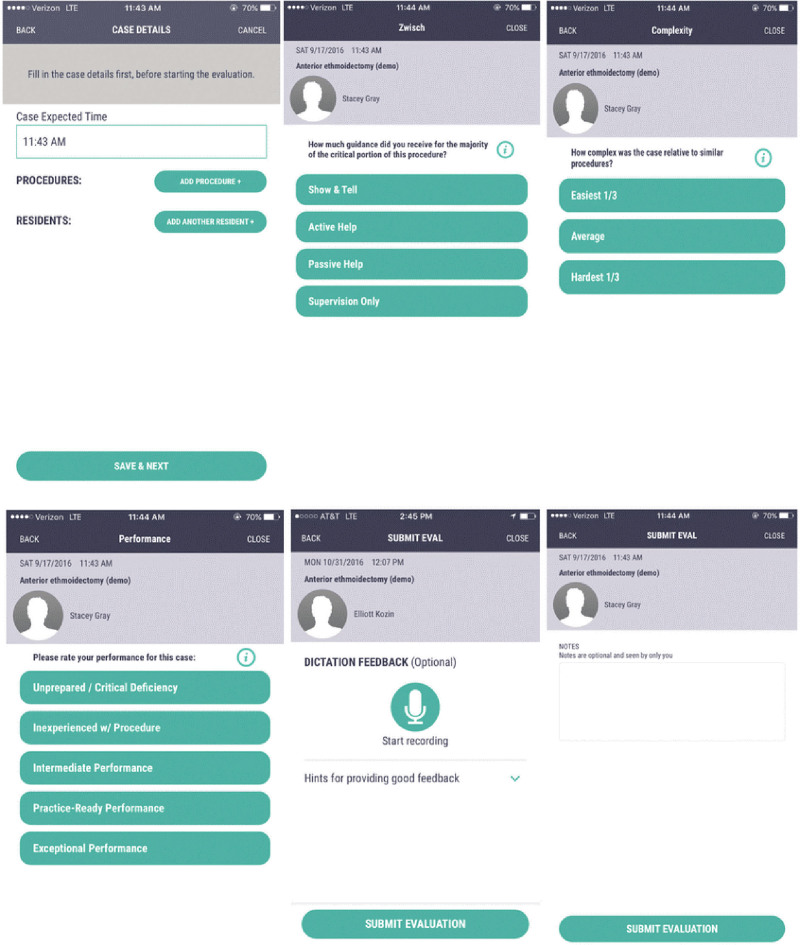

By 2015, SIMPL had developed an assessment for operative performance and transitioned into an expansion phase, adding more members (N=14) from across the United States. Research was also prioritized, with the American Board of Surgery funding the first SIMPL trial.5 As scaling and improvement efforts continued, more specialties (ie, pediatric surgery, urology, and otorhinolaryngology) joined the SIMPL collaborative, additional staff (ie, product managers and software developers) were hired, and dozens of papers collecting validity evidence were published.

In 2019, Sweden became the first international member to join the SIMPL collaborative, followed by several other programs on other continents. New features, such as data downloads, automatic case logging, and additional apps (eg, SIMPL bedside), were added. Partnerships expanded with foundations, associations, and medical boards. To date, SIMPL consists of 222 programs in 7 countries, includes 6000 trainees, and has collected over 600,000 trainee evaluations (Figure 3).3

FIGURE 3. Growth of SIMPL programs by year.3

SIMPL RATER TRAINING

To help standardize assessment in surgical training, SIMPL developed the Zwisch scale (Figure 1), which includes descriptors of entrustment behaviors by faculty. This was taught using videos of open and laparoscopic case scenarios demonstrating each Zwisch level, from “show and tell” to “active help” to “passive help” to “supervision only.”4 Initially, every member that joined SIMPL received a 4-hour seminar on assessment principles using these video examples. To streamline training, SIMPL compared an accelerated with an immersive training approach and found that 1 hour of training was sufficient.6 Following this study, SIMPL training was implemented as a 1-hour, in-person faculty and trainee session, with an additional hour to train the program coordinator and the supportive faculty and residents labeled “local champions.”7 In 2020, this approach was adapted to Zoom, where faculty and trainees are shown 6 brief operative videos illustrating both open and laparoscopic examples for the Zwisch scale levels. In all cases, these sessions were led by a volunteer group of experienced SIMPL educators labeled “SIMPL ambassadors” who had been trained to onboard new member programs.

SIMPL DATA SHARING

Hosting a large repository of potentially sensitive data requires substantial infrastructure. For example, the SIMPL data infrastructure includes automated data pipelines to facilitate downstream analytic, reporting, and research tools. The SIMPL data infrastructure is also cloud-based, which permits scaling as SIMPL grows. Given the sensitivity of the data it collects, SIMPL has also been designated an Agency for Healthcare Research and Quality Patient Safety Organization,8 which provides legal protections to use data for research, education, and quality improvement purposes. To support those uses, SIMPL has also ensured that registry data are Health Insurance Portability and Accountability Act (HIPAA), Family Educational Rights and Privacy Act, and Institutional Review Board compliant.9–11 Multiple layers of security and auditing further buttress these protections.

Given the quality improvement focus of SIMPL, data sharing is an essential activity. SIMPL is incorporated as a nonprofit organization to generate and share data for research, education, and quality improvement purposes. All programs that join SIMPL sign a network participation agreement that defines how data collected by the collaborative network are used. Importantly, all members retain the right to use their own data as they see fit and in compliance with their local policies and regulations. Data collected by SIMPL can also be shared, in de-identified format, with other SIMPL network members for multi-institutional research and educational improvement efforts. Any member of the SIMPL network can request data from the SIMPL data registry by submitting a Letter of Intent to the SIMPL Research Standing Committee (RSC), which is reviewed in accordance with RSC bylaws. Once a Letter of Intent is approved, the member must obtain approval from their institutional review board and sign a data use agreement prior to receiving data. SIMPL uses a robust approach to storing, managing, and governing SIMPL data, guided by documented principles and safeguards and enforced by the educators who volunteer their time on the SIMPL RSC.3 This process has been used to coordinate and share data for more than 58 SIMPL-related manuscripts in the peer-reviewed literature, generated through dozens of multi-institutional studies.
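The request process described above amounts to a sequence of gates that must all be cleared before data are released. The sketch below is illustrative only and assumes nothing about SIMPL's actual software: the `DataRequest` class, its field names, and its methods are hypothetical, and the only facts taken from the text are the three prerequisites (an RSC-approved Letter of Intent, institutional review board approval, and a signed data use agreement).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DataRequest:
    """Hypothetical tracker for one registry data request (names are assumptions)."""
    loi_approved_by_rsc: bool = False
    irb_approved: bool = False
    data_use_agreement_signed: bool = False

    def missing_steps(self) -> list[str]:
        """Return the prerequisites that are still outstanding, in process order."""
        steps = [
            ("Letter of Intent approved by the RSC", self.loi_approved_by_rsc),
            ("Institutional review board approval", self.irb_approved),
            ("Signed data use agreement", self.data_use_agreement_signed),
        ]
        return [label for label, done in steps if not done]

    def can_release_data(self) -> bool:
        """Data may be released only when no prerequisite is outstanding."""
        return not self.missing_steps()


request = DataRequest(loi_approved_by_rsc=True)
print(request.can_release_data())  # False: two steps remain
print(request.missing_steps())
```

Listing the outstanding steps, rather than returning only a yes or no answer, makes it easier for a requesting member to see what remains before data can be shared.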

LESSON LEARNED: THE IMPORTANCE OF TRUST

What initially began as a response to challenges around CBME ended up becoming one of medical education’s largest trust exercises. First, faculty and trainees are reluctant to use an assessment system unless they trust the value of the assessment process. SIMPL addresses these concerns by highlighting the supporting research. For instance, SIMPL ambassadors share the most current scientific evidence during the onboarding and training process with every member and institution. The scientific foundation is also visible as part of the assessment workflow. For example, if a rater does not complete an assessment in a timely fashion, they receive an email reminder that provides the evidence for how delays degrade the quality of feedback.12 Second, faculty, trainees, and institutions sometimes worry about how their data are going to be used; sharing data can elicit feelings of uncertainty, fear, and a loss of control. To address these concerns, SIMPL members and other stakeholders reinforce the collective goal, which is to improve the quality of patient care.

SIMPL IMPACT AND NEXT STEPS

The SIMPL network has been successful in helping advance CBME. For example, SIMPL ratings data are now being used to quantify competence across multiple specialties on multiple continents. SIMPL data are also being used, in partnership with national regulatory bodies, to support accreditation and certification policy updates that could help address competency gaps. Most importantly, by implementing a workplace assessment system at scale, SIMPL has demonstrated how a collaborative network of medical educators can collectively address difficult, systemic problems in a more sustainable way. Moving forward, we advocate for more efforts that use collaborative improvement to tackle challenges in medical education. As this piece of surgical history from the SIMPL network showcases: if you want to go far, go together.

Footnotes

ORCID ID: https://orcid.org/0000-0001-6812-2571

J.B.Z., S.S.S.-S., and B.C.G. have leadership positions, contracts, and grants with SIMPL. The remaining authors declare that they have nothing to disclose.

J.B.Z., S.P.H., N.A.R., S.S.S.-S., and B.C.G. drafted the article and were involved in editing all versions. P.C. assisted with the literature review process.

Surgical Perspective submitted for publication in Annals of Surgery Open.

REFERENCES

- 1. Fronza JS, Prystowsky JP, DaRosa D, et al. Surgical residents’ perception of competence and relevance of the clinical curriculum to future practice. J Surg Educ. 2012;69:792–797.

- 2. Van Melle E, Hall AK, Schumacher DJ, et al. Capturing outcomes of competency-based medical education: the call and the challenge. Med Teach. 2021;43:794.

- 3. PLSC. SIMPL – Improving the quality of surgical care by improving the quality of education. Available at: https://www.simpl.org. Accessed April 6, 2023.

- 4. DaRosa DA, Zwischenberger JB, Meyerson SL, et al. A theory-based model for teaching and assessing residents in the operating room. J Surg Educ. 2013;70:24–30.

- 5. Bohnen JD, George BC, Williams RG, et al.; Procedural Learning and Safety Collaborative (PLSC). The feasibility of real-time intraoperative performance assessment with SIMPL (System for Improving and Measuring Procedural Learning): early experience from a multi-institutional trial. J Surg Educ. 2016;73:e118–e130.

- 6. George BC, Teitelbaum EN, DaRosa DA, et al. Duration of faculty training needed to ensure reliable OR performance ratings. J Surg Educ. 2013;70:703–708.

- 7. George BC, Teitelbaum EN, Meyerson SL, et al. Reliability, validity, and feasibility of the Zwisch scale for the assessment of intraoperative performance. J Surg Educ. 2014;71:e90–e96.

- 8. Agency for Healthcare Research and Quality (AHRQ). Patient Safety Organization (PSO) Program. Available at: Resources | PSO (ahrq.gov). Accessed August 12, 2023.

- 9. CDC. Family Educational Rights and Privacy Act (FERPA). Available at: Family Educational Rights and Privacy Act (FERPA) | CDC. Accessed August 12, 2023.

- 10. CDC. Health Insurance Portability and Accountability Act of 1996 (HIPAA). Available at: https://www.cdc.gov/phlp/publications/topic/hipaa.html. Accessed August 12, 2023.

- 11. FDA. Institutional Review Boards (IRBs) and Protection of Human Subjects in Clinical Trials. Available at: https://www.fda.gov/about-fda/center-drug-evaluation-and-research-cder/institutional-review-boards-irbs-and-protection-human-subjects-clinical-trials. Accessed August 12, 2023.

- 12. Williams RG, Sanfey H, Chen XP, et al. A controlled study to determine measurement conditions necessary for a reliable and valid operative performance assessment: a controlled prospective observational study. Ann Surg. 2012;256:177–187.