Abstract

In the field of metastatic skeletal oncology imaging, the role of artificial intelligence (AI) is becoming more prominent. Bone metastasis typically indicates the terminal stage of various malignant neoplasms. Once identified, it necessitates a comprehensive revision of the initial treatment regimen, and palliative care is often the only resort. Given the gravity of the condition, the diagnosis of bone metastasis should be approached with utmost caution. AI techniques are being evaluated for their efficacy in a range of medical imaging tasks, including object detection, disease classification, region segmentation, and prognosis prediction. These methods offer a standardized solution to the frequently subjective challenge of image interpretation, a solution that is particularly needed in bone metastasis imaging. This review describes the basic imaging modalities used for bone metastasis, along with recent developments and current applications of AI in each of them. These concrete examples emphasize the importance of computer-aided systems in the clinical setting. The review culminates with an examination of the current limitations and prospects of AI in bone metastasis imaging. To establish the credibility of AI in this domain, further research is required to enhance reproducibility and attain a robust level of empirical support.

Keywords: Artificial intelligence, Deep learning, Bone metastasis, Medical imaging

1. Introduction

After lung and liver, bone is the third most common site of metastasis; bone metastases primarily originate from breast and prostate cancers, which together account for nearly 70% of the primary tumors [1], [2]. Bone metastases typically portend a grim short-term prognosis, as full eradication of such lesions is rarely achievable. Consequently, life expectancy is markedly reduced: on average, patients with bone metastases from melanoma survive for merely six months, those with breast cancer-associated bone metastases may expect to survive between 19 and 25 months, and those with prostate cancer have an estimated survival of up to 53 months [3]. Palliative care is often the last resort to slow disease progression and relieve symptoms.

Bone metastasis is commonly found in the axial skeleton due to its high content of red marrow [4]. Batson's research revealed that venous blood from the breast and pelvis flows into both the vena cava and the vertebral venous plexus, which runs from the pelvis to the epidural and peri-vertebral veins [5]. Drainage to the skeleton via the vertebral venous plexus may partially explain why breast and prostate cancers, as well as those originating from the kidney, thyroid, and lung, are prone to metastasizing to the axial skeleton, the limb girdles (pelvis and shoulder), and the proximal femur.

The range of skeletal-related events associated with bone metastasis is highly diverse, varying from a complete lack of symptoms to severe pain, reduced mobility, pathologic fractures, spinal cord compression, bone marrow depletion, and hypercalcemia. Hypercalcemia, in turn, can cause constipation, excessive urination, increased thirst, and fatigue, or cardiac arrhythmias and acute renal failure in advanced stages [6].

Hence, distinguishing metastatic lesions from primary bone tumors or benign bone lesions is crucial for the diagnosis, treatment, and management of cancer patients. Screening for bone metastases upon diagnosis of the primary tumor is important for choosing the most appropriate course of treatment. Conventional approaches for diagnosing bone metastasis include whole-body bone scintigraphy (WBS), computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET) [7]. However, no single modality offers a definitive solution to bone metastasis imaging. The radiological manifestation is related to the tumor-specific mechanisms of bone metastasis, which are still not completely understood. Biophysical stiffness, which shapes the dynamic interactions between tumor cells and the stroma, may influence metastatic transformation, and as a physical property it may be visible on various imaging modalities [8]. Bone constantly undergoes remodeling, maintaining a dynamic equilibrium between osteoclastic and osteoblastic activity. When a metastatic focus grows within the marrow, reactive changes in both osteoclastic and osteoblastic activity occur simultaneously. Traditional classifications divide the radiographic manifestations of bone metastases into three types (osteoblastic, osteolytic, and mixed), depending on the predominant way in which the metastases disrupt normal bone remodeling and affect radiotracer uptake [6], [9]. Thus, the imaging manifestations can be so varied that metastatic sites could easily be mistaken for skeletal changes of a different nature. Diagnosing bone metastasis requires years of clinical experience, and misdiagnosis is not uncommon.

Recent advances in artificial intelligence (AI) have shown promising results in detecting osseous metastases on various imaging modalities. The availability of labeled big data, together with markedly enhanced computing power and cloud storage, has catalyzed the deployment of AI, particularly in radiology. Machine learning-aided processing tasks have become an integral part of everyday medical practice, such as computer-assisted interpretation of electrocardiograms (ECGs), white-cell differential counts, and analysis of retinal photographs and cutaneous lesions [10]. Although these AI-driven tools are not perfect, they generally provide sufficiently accurate results for quick interpretation, which is especially valuable in settings where local expertise is lacking. Similarly, it is hoped that AI-assisted interpretation of bone metastasis imaging will achieve the same level of success under the oversight of a skilled healthcare professional. The advantages of using AI for bone metastasis detection are manifold. AI algorithms can process large amounts of data quickly and accurately, enabling faster and more reliable diagnoses. As an objective measure, an AI-aided automatic diagnostic system for bone metastasis reduces inter- and intra-observer variability, improves the consistency and reproducibility of diagnoses, and serves as a fail-safe against false negatives. A missed bone metastasis could misguide a series of clinical decisions, with devastating consequences. Moreover, AI can assist radiologists and oncologists in making treatment decisions by providing objective, quantitative information about the extent and severity of bone metastasis.

2. AI-assisted detection of bone metastases on whole-body bone scintigraphy (WBS)

Whole-body bone scintigraphy (WBS) with 99mTc-MDP is one of the most commonly used techniques for detecting cancer metastasis to the bone, known for its high sensitivity and its ability to examine the whole body [11]. The radioactive tracer preferentially accumulates in areas of increased osteoblastic activity, making WBS reliable for detecting metastases from a range of malignancies, such as breast, prostate, and lung cancer, with reasonable sensitivity (79–86%) and specificity (81–88%) [12].

Zhao et al. developed an AI model based on a deep neural network to assist nuclear medicine physicians in interpreting WBS for bone metastasis [13]. A total of 12,222 bone scintigraphy cases from patients with definitive diagnoses were included, with 9776 cases in the training cohort, 1223 in the validation cohort, and 1223 in the test set. Because potentially misleading cases, such as patients with a large bladder, sternotomy, or fracture, were not excluded, the dataset approximated real-world clinical practice better than those of previous studies [14], [15]. For the same workload of 400 cases, the AI model required 99.88% less time than experienced nuclear medicine physicians. The AI model was not only faster but also diagnostically better, with higher accuracy (93.50% vs. 89.00%) and sensitivity (93.50% vs. 85.00%) and comparable specificity (93.50% vs. 94.50%). Lesion size, lesion number, and adjacent diffuse signals were the primary factors contributing to false-negative results, while fractures, inflammation, degeneration, and postoperative changes were the primary causes of false-positive results. By consulting the AI results, physicians were able to correct their misdiagnoses and find false-negative lesions that were either too small or had insufficient radioactive uptake. Lung cancer was also analyzed for the first time as an individual subgroup, in which the model achieved a diagnostic accuracy of 93.36%. Using a multi-input deep convolutional neural network instead of traditional hand-crafted feature methods allowed the model to follow natural data distributions better, reduce reliance on physicians' subjective judgment, improve generalization, and more closely approximate the typical clinical environment.
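The accuracy, sensitivity, and specificity figures quoted above all derive from a 2 × 2 confusion matrix. The minimal Python sketch below shows the arithmetic; the function name `diagnostic_metrics` and the counts in the usage lines are hypothetical and are not taken from Zhao et al.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp/fn: metastatic cases called positive/negative by the reader or model.
    tn/fp: metastasis-free cases called negative/positive.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,   # share of all cases classified correctly
        "sensitivity": tp / (tp + fn),   # share of metastatic cases detected
        "specificity": tn / (tn + fp),   # share of metastasis-free cases correctly cleared
    }


# Hypothetical reading session: 100 positive and 100 negative scans.
metrics = diagnostic_metrics(tp=90, fp=15, tn=85, fn=10)
print(metrics)
```

These hypothetical counts give an accuracy of 0.875, a sensitivity of 0.90, and a specificity of 0.85. Because sensitivity is computed only over metastatic cases, a reader can gain sensitivity (fewer missed metastases) while specificity stays comparable, which is the pattern reported for the AI model versus physicians.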

Like the previous study, which focused solely on the AI model's ability to assist nuclear medicine physicians in detecting bone metastasis, Papandrianos et al. built a robust convolutional neural network (CNN)-based model to identify bone metastasis on WBS in prostate cancer patients [16]. Their model was constructed to solve a simple binary classification problem using unprocessed plain WBS images. As acquired from the scanner, the original images are stored in RGB format with three color channels; they nevertheless appear grayscale because they contain no actual color components. The authors applied a 2D CNN to each color channel and combined the three branches in the final dense layer of the network. The best-performing RGB-CNN model had an overall classification accuracy of 91.42% ± 1.64%, versus 89.78% to 93.06% for other architectures. The RGB mode thus performed comparably to, or better than, other state-of-the-art CNN architectures in the literature, such as ResNet50 [17], VGG16 [18], Inception V3, and Xception. An additional advantage of the less complex RGB-CNN model is that it can be trained on a relatively small dataset, contrary to the widely held view that CNNs work only when fed huge datasets. Papandrianos et al. also built a CNN model, trained on 408 samples, to detect bone metastasis on WBS in breast cancer patients [19]. Classification accuracy was higher with RGB images (92.50%) than in grayscale mode, and better than that of some other well-known CNN architectures such as ResNet50, VGG16, MobileNet, and DenseNet [20].

2D CNN modeling is a good fit for planar nuclear medicine scans such as WBS when a massive amount of labeled training data is available, so that the number of trainable parameters remains small relative to the labeled data. However, the use of deep learning on WBS is usually constrained by the time-consuming effort needed to create precise labels for large datasets. To overcome this constraint, Han et al. proposed a tandem 2D CNN classifier architecture integrating whole-body images and local patches, named “global–local unified emphasis” (GLUE), for WBS of prostate cancer patients [21]. When the training data were limited (a 10%:40%:50% training:validation:test split of 9113 WBS images in total), the GLUE models had significantly higher AUCs than a whole-body (WB)-based 2D CNN model (0.894–0.908 vs. 0.870–0.877), with comparable overall accuracy (GLUE: 0.900, WB: 0.889). The authors showed that creating local patches to increase the volume of sample data, and integrating these sliding patches with conventional whole-body input, could boost the performance of a 2D CNN in a limited-data setting while minimizing the risk of overfitting.
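The idea of enlarging a limited dataset by cutting a planar scan into local patches can be illustrated with a simple sliding window. The sketch below is not the GLUE implementation; the function `extract_patches`, the patch size, and the stride are hypothetical choices, and the image is a toy 2D list standing in for a whole-body scintigram.

```python
from typing import List, Tuple

Image = List[List[float]]


def extract_patches(image: Image, patch: int, stride: int) -> List[Tuple[int, int, Image]]:
    """Slide a patch x patch window over a 2D image, moving `stride` pixels at a time.

    Returns (top_row, left_col, window) for every window that fits entirely inside the image.
    """
    height, width = len(image), len(image[0])
    patches = []
    for r in range(0, height - patch + 1, stride):
        for c in range(0, width - patch + 1, stride):
            window = [row[c:c + patch] for row in image[r:r + patch]]
            patches.append((r, c, window))
    return patches


# Toy 8 x 4 "whole-body" image (tall and narrow, like an anterior WBS view).
toy = [[r * 4 + c for c in range(4)] for r in range(8)]
local = extract_patches(toy, patch=4, stride=2)
print(len(local), "overlapping local patches from one image")
```

With overlapping windows, each labeled scan yields several training samples; in a GLUE-style design these local inputs would be combined with the whole-body input rather than replacing it.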

To reduce the computational burden of conventional CNNs, Ntakolia et al. built a new WBS-based architecture with a significantly lower number of floating-point operations (FLOPs) and free parameters [22]. Multiscale feature extraction and the small number of free parameters improve the network's ability to generalize, even with a small training set. Their LB-FCN light model was employed to solve a three-class classification problem (malignant bone metastasis, degenerative changes, and normal) using WBS images from prostate cancer patients. The classification accuracy reached 97.41%, higher than that of any of the state-of-the-art networks tested on the same data (InceptionV3, 88.96%; ResNet50, 90.74%; Xception, 91.54%). LB-FCN light was at least ten times lighter than the other CNNs in terms of trainable free parameters and FLOPs, which allows it to run on mobile and embedded devices.
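Savings of this kind can be estimated from layer shapes. A common way for lightweight networks to cut trainable parameters and FLOPs is to replace a standard convolution with a depthwise separable one; the sketch below compares the two for a single layer. Whether LB-FCN light obtains its savings exactly this way is not stated in this review, so treat the factorization, the function names, and the layer sizes as illustrative assumptions. Bias terms are ignored, and cost is counted in multiply-accumulate operations (MACs); FLOPs are often reported as roughly twice the MACs.

```python
def standard_conv_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int):
    """Parameters and multiply-accumulates (MACs) of a k x k convolution, bias ignored."""
    params = k * k * c_in * c_out
    return params, params * h_out * w_out


def separable_conv_cost(k: int, c_in: int, c_out: int, h_out: int, w_out: int):
    """Same layer factorized into a k x k depthwise conv plus a 1 x 1 pointwise conv."""
    params = k * k * c_in + c_in * c_out  # depthwise filters + pointwise channel mixing
    return params, params * h_out * w_out


std = standard_conv_cost(k=3, c_in=64, c_out=128, h_out=32, w_out=32)
sep = separable_conv_cost(k=3, c_in=64, c_out=128, h_out=32, w_out=32)
print(f"standard: {std[0]} params, separable: {sep[0]} params, ratio {std[0] / sep[0]:.1f}x")
```

For this single hypothetical layer the factorized version needs about 8.4 times fewer parameters and MACs; stacking many such layers is how whole-network reductions of an order of magnitude become possible.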

As with other deep learning networks, the LB-FCN light model is a black box that offers no explanation for its decisions, whereas lesion-based analysis might shed light on the reasoning employed by an AI model. Liu et al. employed a 2D CNN model to conduct a per-group analysis based on lesion number in WBS [23]. A total of 14,972 visible bone lesions on 99mTc-MDP WBS images from 3352 patients were manually delineated and labeled as either benign or metastatic. Based on the number of lesions per WBS image, the cases were divided into three groups: few lesions (1–3), medium lesions (4–6), and extensive lesions (> 6). In the subgroup analysis, the CNN model achieved significantly higher accuracy in the extensive-lesions group (82.79%) than in the few-lesions (81.78%) and medium-lesions (78.03%) groups. This finding indicates that the CNN model's inference mechanism approximates, to a certain extent, the cognitive process that nuclear medicine physicians employ to identify metastatic lesions: a physician might find it challenging to evaluate an isolated lesion, but the likelihood of malignancy increases when multiple lesions occur in close proximity to one another. Although the internal mechanisms of the CNN remain opaque, the discernible patterns it recognizes could support its utility as a diagnostic instrument in clinical settings.
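The per-group analysis amounts to binning each image by its lesion count and computing accuracy within each bin. A minimal sketch using the group boundaries given above; the function names and the toy results are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def lesion_group(n_lesions: int) -> str:
    """Map a per-image lesion count to the few (1-3), medium (4-6), or extensive (>6) group."""
    if n_lesions < 1:
        raise ValueError("grouping applies only to images with at least one visible lesion")
    if n_lesions <= 3:
        return "few"
    if n_lesions <= 6:
        return "medium"
    return "extensive"


def per_group_accuracy(cases: Iterable[Tuple[int, bool]]) -> Dict[str, float]:
    """cases: (lesion count, whether the model's call was correct) for each image."""
    totals: Dict[str, int] = {}
    correct: Dict[str, int] = {}
    for n_lesions, is_correct in cases:
        group = lesion_group(n_lesions)
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


print(per_group_accuracy([(1, True), (2, False), (5, True), (9, True), (12, False)]))
```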

To avoid false negatives and reduce physicians' annotation workload, Liao et al. went beyond a classification problem and built an object detection model that labels the location of metastatic lesions on WBS images [24]. A total of 870 images, 450 of them with confirmed bone metastases, were used for training. The model was built on Faster R-CNN (a faster region-based convolutional neural network with a region proposal network) combined with an R50-FPN backbone (a ResNet50-based feature pyramid network). A set of 50 images (20 with bone metastases) was used for testing. The Dice similarity coefficient (DSC) was used to measure the similarity between predicted and reference bounding boxes, with values ranging from 0 (no overlap) to 1 (perfect overlap). The highest DSC of the object detection model was 0.6640, only 0.04 lower than the highest DSC among the physicians (0.7040), suggesting that the model's performance closely approximates that of a physician. Although the physicians' DSC may be underestimated, because annotation was performed blinded to any other clinical data, the result demonstrates that fully automated object detection can safely assist in the detection of bone metastases, providing a fail-safe against careless omissions.
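For two axis-aligned bounding boxes, the DSC is twice the intersection area divided by the sum of the two box areas. The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) in pixels; the names are hypothetical, and it is not Liao et al.'s evaluation code.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(box: Box) -> float:
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def box_dice(a: Box, b: Box) -> float:
    """Dice similarity coefficient of two boxes: 2 * intersection / (area_a + area_b)."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    total = box_area(a) + box_area(b)
    return 2.0 * intersection / total if total > 0 else 0.0


model_box = (0, 0, 10, 10)       # hypothetical predicted lesion box
physician_box = (5, 0, 15, 10)   # hypothetical reference box, shifted by half a width
print(box_dice(model_box, physician_box))
```

Two boxes that overlap by half their width give DSC = 0.5 (an IoU of 1/3, since DSC = 2·IoU/(1 + IoU)), which offers a feel for what the reported values of 0.6640 and 0.7040 mean.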

3. Automated detection of skeletal metastases on computed tomography (CT) and magnetic resonance imaging (MRI)

The CT scan, which has a sensitivity of 74% and specificity of 56%, can serve as a helpful reference during interventional diagnostic procedures. It can clearly display bone destruction and sclerotic deposits and detect any soft tissue extension of osseous metastases with ease [6]. Furthermore, CT scanning enables simultaneous assessment of bone and systemic staging, reducing the need for multiple imaging examinations for patients. MRI, on the other hand, has excellent contrast resolution for both bone and soft tissue and boasts a sensitivity and specificity of 95% and 90%, respectively. It is a radiation-free technique and is regarded as the preferred imaging method for bone marrow metastasis [25].

Radiomics, a branch of AI, entails converting digital medical images, which contain information on tumor pathophysiology, into measurable and quantifiable data. These mineable datasets can be integrated with clinical information and qualitative imaging findings to improve medical decision-making and establish prognostic correlations between imaging characteristics and patient outcomes. Kumar et al. outline the classic radiomics process in five steps: (1) acquiring and reconstructing images, (2) segmenting and rendering images, (3) extracting and qualifying features, (4) creating databases and sharing data, and (5) conducting ad hoc informatic analyses [26]. Hong et al. built a radiomics-based random forest (RF) model to differentiate benign bone islands from osteoblastic bone metastases on contrast-enhanced abdominal CT scans [27]. Bone islands are foci of compact bone within the more porous spongiosa, whereas osteoblastic metastasis is characterized by an excess of osteoid tissue with a high concentration of tumor cells and incomplete deposition of calcium salts. Owing to these distinct pathological features, osteoblastic metastasis tends to have a more variegated texture on CT (Fig. 1), which makes radiomics, specialized in quantitatively analyzing image heterogeneity, well suited to the task. The model was built on a training set of 177 patients, of whom 89 had benign bone islands and 88 had metastases. The trained RF model had an AUC of 0.96 (sensitivity, 80%; specificity, 96%; accuracy, 86%), comparable to that of musculoskeletal radiology experts (AUC, 0.95 and 0.96) and higher than that of an inexperienced radiologist (0.80). The RF model also outperformed single imaging features such as CT attenuation or lesion shape (AUC, 0.79 and 0.51, respectively). Likewise, Yin et al. developed a triple-classification multiparametric MRI-based radiomics model to preoperatively differentiate primary sacral chordoma, sacral giant cell tumor, and metastatic sacral tumor [28]. The best-performing radiomics model used features extracted from combined T2w FS (T2-weighted fat-suppressed) and CE T1w (contrast-enhanced T1-weighted) images, with an AUC of 0.773 and an accuracy of 0.711.
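Texture heterogeneity of the kind radiomics quantifies is often captured with gray-level co-occurrence matrix (GLCM) features. The minimal sketch below computes a normalized GLCM for a single horizontal offset and derives two classic features, contrast and homogeneity. It is a simplified illustration (no gray-level discretization, one direction only) with hypothetical function names; the specific features used by Hong et al. or Yin et al. are not reproduced here.

```python
from collections import Counter
from typing import Dict, List, Tuple

Glcm = Dict[Tuple[int, int], float]


def glcm_horizontal(image: List[List[int]]) -> Glcm:
    """Normalized co-occurrence frequencies of (pixel, right-hand neighbour) gray levels."""
    counts: Counter = Counter()
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[(left, right)] += 1
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def glcm_contrast(p: Glcm) -> float:
    """High when neighbouring pixels differ strongly (heterogeneous texture)."""
    return sum(v * (i - j) ** 2 for (i, j), v in p.items())


def glcm_homogeneity(p: Glcm) -> float:
    """Close to 1 when neighbouring pixels have similar gray levels (uniform texture)."""
    return sum(v / (1 + abs(i - j)) for (i, j), v in p.items())


uniform_roi = [[1, 1, 1], [1, 1, 1]]      # toy region with a single gray level
mottled_roi = [[0, 3, 0], [3, 0, 3]]      # toy region with alternating gray levels
for name, roi in (("uniform", uniform_roi), ("mottled", mottled_roi)):
    p = glcm_horizontal(roi)
    print(name, glcm_contrast(p), glcm_homogeneity(p))
```

The uniform toy region has zero contrast and homogeneity 1, while the mottled one has high contrast and low homogeneity; a classifier such as a random forest would receive many such features per lesion.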

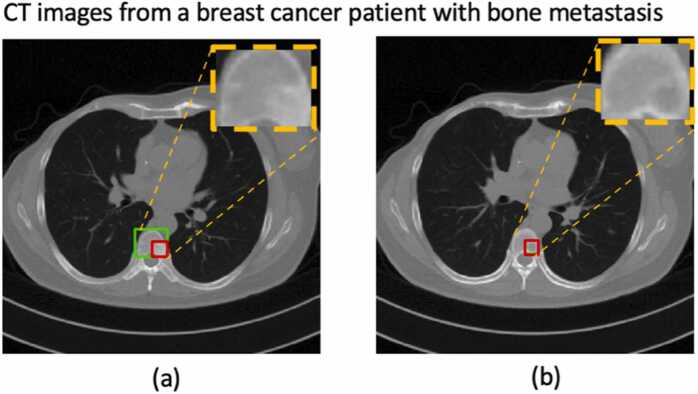

Fig. 1.

An example of automatic bone metastasis segmentation on CT images by YOLOv5 [38]; the green box was outlined by a nuclear medicine physician, and the red box was the segmented lesion as predicted by the algorithm. (a): the predicted lesion was smaller than the actual one; (b): the algorithm falsely segmented the lesion-free cross-section.

Using texture analysis, Acar et al. built a CT-based radiomic model to differentiate metastatic from completely responded sclerotic bone lesions in prostate cancer patients [29]. Using Ga-68 PSMA PET as the reference (absence of PSMA expression in a lesion was treated as a complete response to treatment), they compared textural features of metastatic and completely responded lesions on CT using various machine learning methods, including decision trees, discriminant analysis, support vector machines (SVM), k-nearest neighbors (KNN), and ensemble classifiers. The weighted KNN model had the highest accuracy (73.5%) and AUC (0.76) in differentiating metastatic from completely responded sclerotic lesions on CT. Twenty-five of the 35 acquired textural features differed significantly between the two groups; GLZLM_SZHGE and histogram-based kurtosis were the most important parameters for classification and were significantly higher in the completely responded group. In addition, the HU and mean HU levels were significantly higher in the healed sclerotic lesions than in the metastatic areas, possibly reflecting a surge in osteoblastic activity following a complete response to treatment. In a similar manner but on a different modality, Filograna et al. investigated in depth which features significantly predict metastasis in oncological patients with spinal metastases [30]. Predictors were selected from MRI-based radiomic features, 16 of which differed significantly between metastatic and non-metastatic vertebral bodies. Internal cross-validation showed an AUC of 0.814 for T1w images and 0.911 for T2w images. One morphological feature (center of mass shift) for both sequences, plus one histogram feature (minimum grey level) for T1w images and one textural feature (joint variance of the grey-level co-occurrence matrix) for T2w images, were the best predictors of metastasis.
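Weighted KNN classifies a lesion by letting its k nearest training lesions vote, with closer neighbours counting more. The sketch below uses inverse-distance weights on hypothetical two-dimensional feature vectors (for example, standardized kurtosis and GLZLM_SZHGE values); the weighting scheme, k, and the data are assumptions, not the configuration used by Acar et al.

```python
import math
from collections import defaultdict
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]


def weighted_knn_predict(train: List[Sample], query: Sequence[float], k: int = 3,
                         eps: float = 1e-12) -> str:
    """Return the label with the largest inverse-distance-weighted vote among k neighbours."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = defaultdict(float)
    for features, label in nearest:
        votes[label] += 1.0 / (math.dist(features, query) + eps)  # eps guards against d = 0
    return max(votes, key=votes.get)


train = [((0.0, 0.0), "metastatic"), ((3.0, 0.0), "responded"), ((0.0, 3.0), "responded")]
# A plain majority vote over k=3 would say "responded"; distance weighting favours
# the single very close metastatic neighbour.
print(weighted_knn_predict(train, (0.1, 0.0), k=3))
```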

Going one step beyond determining the nature of existing bone lesions, radiomic analysis may reveal prognostically valuable imaging features that are imperceptible to the naked eye. Wang et al. determined that texture features derived from the primary prostate tumor on pretreatment multiparametric prostate MRI (mp-MRI: T2w and DCE T1w), combined with clinicopathologic risk factors (free PSA level, Gleason score, and age), could predict bone metastasis in prostate cancer patients [31]. The integrated model yielded a specificity of 89.2% (AUC 0.916) in the training cohort, which was confirmed in the validation cohort. This work represents an interesting attempt to use radiomics to identify patients at high risk of bone metastasis. If proven reliable by further evidence, the model could select patients who may benefit from closer radiographic monitoring or early androgen deprivation therapy (ADT) or chemotherapy for a better prognosis. This matters because the prognosis of prostate cancer changes drastically once skeletal metastasis is diagnosed, and survival is highly variable depending on the metastatic tumor burden [32]. The number of bone metastases counted on bone scintigraphy, as a measure of disease extent, is a reported prognostic factor, and an image analysis of pelvic bone metastasis in prostate cancer patients by Hayakawa et al. suggested that shape-based features have prognostic value for overall survival (OS) [33]. Thus, early intervention against bone metastasis may have a profound clinical impact.

Beyond detecting osseous metastases with relatively high efficacy and extracting large amounts of advanced quantitative imaging features, radiomics might also be able to reveal underlying genetic alterations. The study of the relationship between the genetic makeup of tumors and their imaging characteristics is referred to as “radiogenomics”, in essence the intersection of genomics and radiology. Its main premise is that genetic variations or mutations in a tumor can influence its appearance on medical images. Several studies have investigated whether radiogenomics can predict the presence of epidermal growth factor receptor (EGFR) mutation in spinal metastases of primary lung adenocarcinoma. Ren et al. produced a nomogram (AUC 0.888) combining a multiparametric MRI-based radiomics signature (T2w, T2w-FS, and T1w images) with smoking status to preoperatively predict EGFR mutation in patients with thoracic spinal metastases from lung adenocarcinoma [34]. They essentially devised a convenient tool to calculate the individual probability of EGFR mutation, which affects the choice of treatment. Beyond the nomogram, they found that textural features related to matrix-based gray-level changes reflected intratumoral non-uniformity, implying that the intratumoral heterogeneity of spinal metastatic lesions was associated with EGFR status.

In line with Ren's work, Fan et al. conducted a radiogenomic analysis of thoracic spinal metastasis subregions to determine the presence of EGFR mutation [35]. Spinal metastases were divided into phenotypically consistent subregions (marginal, fragmental, and inner) based on population-level clustering that reflects the spatial heterogeneity of the metastatic tumor. Radiomics features were extracted from the T2w-FS and T1w images of both the subregions and the whole tumor. The radiomic signature derived from the inner subregions on T1w and T2w-FS images had the best detection capability in terms of AUC in both the training and test sets. These results suggest that the inner region, carrying more discriminative information on EGFR mutation, may hold more metastatic tumor cells, whereas the outer edge consists more of residual bone destruction from cancer-associated osteolysis; this is consistent with the heterogeneous distribution of metastatic tumor centered around the vessel-rich red marrow [36]. The inner subregion may therefore be more biologically aggressive than the others.
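A nomogram is usually a graphical front end for a regression model; for a binary outcome such as EGFR mutation status, this is typically logistic regression, in which a weighted sum of predictors is mapped to a probability. Assuming that structure, the sketch below shows how a radiomics score and smoking status could be combined into an individual probability. The intercept, coefficient values, and feature names are entirely hypothetical and are not those of Ren et al.

```python
import math
from typing import Dict


def logistic_probability(intercept: float, coefficients: Dict[str, float],
                         features: Dict[str, float]) -> float:
    """Probability from a logistic model: 1 / (1 + exp(-(b0 + sum(b_i * x_i))))."""
    linear_predictor = intercept + sum(coefficients[name] * value
                                       for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-linear_predictor))


# Hypothetical coefficients for illustration only.
coefs = {"rad_score": 2.0, "never_smoker": 1.0}
patient = {"rad_score": 0.5, "never_smoker": 1.0}
print(round(logistic_probability(-1.0, coefs, patient), 3))
```

A printed nomogram simply lays out each term of the linear predictor as a points scale, so reading it by hand and evaluating this function give the same probability.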

Bridging the gap between radiomics and more advanced deep learning algorithms, Lang et al. tried to differentiate spinal metastases originating from primary lung cancer from spinal metastases of other cancers using both radiomics and deep learning [37]. Radiomics analysis extracted histogram and texture features from three parametric maps derived from dynamic contrast-enhanced (DCE) MRI, which can assess tumor angiogenesis. These maps were used as inputs to a conventional convolutional neural network (CNN), and all 12 sets of DCE images were also fed into a convolutional long short-term memory (CLSTM) network. The CLSTM has the additional advantage of tracking changes in signal intensity between pre- and post-contrast DCE-MRI images. Without using predefined metrics, the CLSTM network using the entire sets of DCE images achieved an accuracy of 0.81, better than either the radiomics analysis (0.71) or the hot-spot ROI-based method (0.79). Although this accuracy was deemed acceptable, a better model is desirable, yet it may be difficult to develop because metastatic lesions are often accompanied by soft tissue masses. Their imaging appearance can also vary significantly with factors such as local myelofibrosis, infarction, edema, pathological compression fracture, and infection, which further complicates the differential diagnosis (Fig. 2).

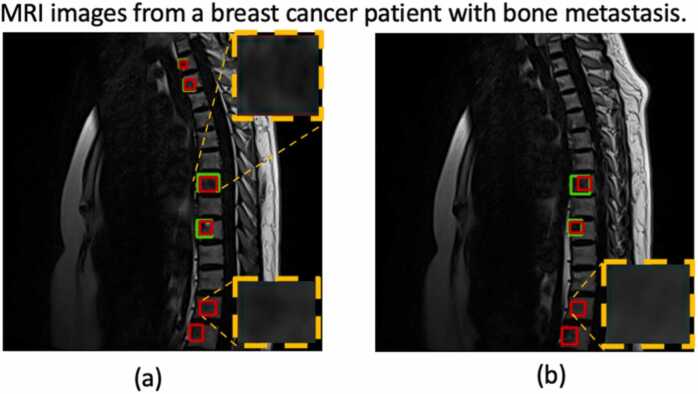

Fig. 2.

An example of automatic bone metastasis segmentation on MRI images by YOLOv5; the green box was outlined by a nuclear medicine physician, and the red box was the segmented lesion as predicted by the algorithm. Upper right zoomed area in (a): the predicted lesion matched the actual outline; lower right zoomed corner in (a) and zoomed box in (b): the algorithm falsely segmented these lesions as metastatic.

Because no single window setting can properly depict all bone metastases on CT scans, Noguchi et al. developed a deep learning-based algorithm (DLA) to automatically detect bone metastases in all scanned areas [39]. The training set comprised 269 thin-slice CT scans (≤ 1 mm thickness) with confirmed bone metastasis and 463 negative scans. The algorithm consisted of three CNNs: a 2D U-Net-based network for bone segmentation, a 3D U-Net-based network [40] for candidate region segmentation, and a 3D ResNet-based network to reduce the false-positive rate. The DLA achieved a lesion-based sensitivity of 89.8% (44 of 49) on the validation set, with 0.775 false positives per case, and 82.7% (62 of 75) on the test set, with 0.617 false positives per case. In subgroup analysis, lesion diameter correlated most clearly with sensitivity, possibly because smaller lesions offer fewer image features to extract. The authors also conducted an observer study with nine board-certified radiologists to evaluate the clinical efficacy of the algorithm. With the DLA, the radiologists' overall performance in terms of the mean weighted alternative free-response receiver operating characteristic figure of merit (wAFROC-FOM) improved from 0.746 to 0.899 (p < .001), and the mean interpretation time per case decreased from 168 to 85 s (p = .004). The radiologists' sensitivity for bone metastasis improved from 51.7% to 71.7% in lesion-based analysis and from 74.4% to 91.1% in case-based analysis. Radiologists aided by the DLA thus performed better in less time, making the DLA clinically useful as a diagnostic adjunct for the automatic detection of bone metastases on CT.
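Lesion-based sensitivity and false positives per case depend on how a detection is matched to a reference lesion. The sketch below assumes a simple, hypothetical rule (a predicted lesion centre counts as a hit if it lies within a fixed radius of an unmatched reference lesion, matched greedily); Noguchi et al.'s actual matching criterion is not described in this review, and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def match_lesions(predicted: List[Point], truth: List[Point], radius: float) -> Tuple[int, int, int]:
    """Greedily match predicted lesion centres to reference centres.

    Returns (true positives, false positives, false negatives) for one case.
    """
    unmatched = list(truth)
    tp = fp = 0
    for p in predicted:
        best = min(unmatched, key=lambda t: math.dist(p, t), default=None)
        if best is not None and math.dist(p, best) <= radius:
            unmatched.remove(best)  # each reference lesion can be hit only once
            tp += 1
        else:
            fp += 1
    return tp, fp, len(unmatched)


def lesion_sensitivity_and_fp_rate(cases, radius: float) -> Tuple[float, float]:
    """cases: list of (predicted centres, reference centres), one entry per CT scan."""
    tp = fp = fn = 0
    for predicted, truth in cases:
        a, b, c = match_lesions(predicted, truth, radius)
        tp, fp, fn = tp + a, fp + b, fn + c
    return tp / (tp + fn), fp / len(cases)


cases = [
    ([(10, 10, 10), (50, 50, 50)], [(11, 10, 10)]),  # one hit, one false positive
    ([], [(0, 0, 0)]),                                 # one missed lesion
]
print(lesion_sensitivity_and_fp_rate(cases, radius=5.0))
```

Under any such rule, 44 detected out of 49 reference lesions gives the reported lesion-based sensitivity of 89.8% (44/49 ≈ 0.898), while the false-positive figure is averaged over scans rather than lesions.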

There has been increasing emphasis on the value of volumetric measurements in assessing treatment response. The volume of bone metastasis assessed on diffusion-weighted MRI (DWI) correlates with established prognostic biomarkers and overall survival in metastatic castration-resistant prostate cancer [41]. To reduce the burden of manual delineation, Liu et al. established a deep learning model consisting of two cascaded 3D U-Net algorithms for automatic segmentation of the pelvic bone and detection of prostate cancer metastases on DWI and T1w images [42]. Cascading two 3D U-Net models has been widely adopted to improve accuracy and system stability, as in lymph node detection and prostate cancer segmentation [43], [44]. Three sets of patients were included in the training set: 349 patients with PI-RADS scores of 1–2 or biopsy-proven benign prostatic hyperplasia (set 1), 280 biopsy-proven prostate cancer patients without bone metastases (set 2), and 230 biopsy-proven prostate cancer patients with bone metastases (set 3). All three sets were used to train the first 3D U-Net to segment pelvic bony structures, and prostate cancer patients from sets 2 and 3, excluding those with primary bone tumors, definite benign findings such as hemangiomas and bone islands, or prior prostate cancer treatment, were selected to train the model detecting metastatic lesions. Using manual annotations by a physician as the reference standard, the mean DSC and volumetric similarity (VS) values for pelvic bone segmentation were above 0.85, and the Hausdorff distance (HD) values were < 15 mm. As a reliable foundation for the subsequent analysis, the pelvic bone volume of interest predicted by the first model was fed into the second network as a mask. This measure, together with rejecting all structures smaller than 0.2 cm³ in volume (i.e., smaller than the smallest annotated metastasis), effectively reduced the false-positive rate.

The patient-level AUCs for metastasis detection were 0.85 and 0.80 on DWI and T1w images, respectively, and the overlaps between manual and automated segmentation, as gauged by DSC, were 0.79 and 0.80 on DWI and T1w-IP images, respectively. In the external evaluation cohort of 63 patients, the model's AUCs for M-staging were 0.94 and 0.89 on DWI and T1w images, respectively. In subgroup analysis, the model performed best in patients with few metastases (≤ 5) in terms of recall and precision, supporting the utility of CNNs as an aid to M-staging in clinical practice. Using the pelvic bone as a proof of concept, this deep learning-based 3D U-Net network accurately segmented prostate cancer metastases on DWI and T1w images, paving the way for delineating skeletal metastasis on a broader scope.
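The two post-processing steps described above, restricting candidates to the predicted bone mask and discarding connected structures below a volume threshold, can be sketched with a breadth-first search over 6-connected voxels. This is a minimal illustration on nested lists, not Liu et al.'s code; the function name is hypothetical, and the voxel volume is assumed to be the product of the voxel spacings in millimetres divided by 1000 to give cm³.

```python
from collections import deque
from typing import List, Optional

Volume = List[List[List[int]]]  # binary mask indexed [z][y][x]

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def filter_small_components(lesions: Volume, voxel_cm3: float, min_cm3: float,
                            bone: Optional[Volume] = None) -> Volume:
    """Keep lesion voxels inside `bone` whose 6-connected component is >= min_cm3."""
    nz, ny, nx = len(lesions), len(lesions[0]), len(lesions[0][0])
    kept = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    seen = [[[False] * nx for _ in range(ny)] for _ in range(nz)]

    def candidate(z: int, y: int, x: int) -> bool:
        return bool(lesions[z][y][x]) and (bone is None or bool(bone[z][y][x]))

    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if seen[z][y][x] or not candidate(z, y, x):
                    continue
                component, queue = [], deque([(z, y, x)])
                seen[z][y][x] = True
                while queue:  # breadth-first flood fill of one connected structure
                    cz, cy, cx = queue.popleft()
                    component.append((cz, cy, cx))
                    for dz, dy, dx in NEIGHBOURS:
                        zz, yy, xx = cz + dz, cy + dy, cx + dx
                        if (0 <= zz < nz and 0 <= yy < ny and 0 <= xx < nx
                                and not seen[zz][yy][xx] and candidate(zz, yy, xx)):
                            seen[zz][yy][xx] = True
                            queue.append((zz, yy, xx))
                if len(component) * voxel_cm3 >= min_cm3:  # reject tiny structures
                    for cz, cy, cx in component:
                        kept[cz][cy][cx] = 1
    return kept


# Toy 1 x 1 x 6 volume: a 3-voxel structure and an isolated voxel, 0.1 cm³ per voxel.
mask = [[[1, 1, 1, 0, 1, 0]]]
print(filter_small_components(mask, voxel_cm3=0.1, min_cm3=0.2))
```

In practice an array library would do the labeling, but the logic is the same: a candidate survives only if it lies inside bone and its connected volume reaches the threshold.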

4. AI-assisted interpretation of positron emission tomography (PET) images

PET is an imaging technique that uses radioactive isotopes decaying by positron emission. An isotope such as fluorine-18 ([18F]) is bound to a biological compound to create a PET radiopharmaceutical that is injected intravenously and reveals functional processes in the body at the biochemical level. The standardized uptake value (SUV) quantifies tracer activity normalized to the injected dose and to a surrogate of the distribution volume, such as body weight or body surface area [45]. The bone-seeking radiopharmaceutical [18F]sodium fluoride localizes areas of increased bone turnover. [18F]fluoride outperforms the 99mTc-labeled diphosphonates used in WBS owing to its higher bone uptake, faster blood clearance, and higher target-to-background ratio. PET imaging with [18F]fluoride is highly sensitive and specific for diagnosing distant bone metastases (Fig. 3). CT and bone scintigraphy cannot distinguish active lesions from lesions burnt out by therapy, which creates prognostic ambiguity. PET-based imaging, specifically PET/CT, is a promising alternative: it can detect active bone metastases through increased [18F]fluoride uptake regardless of the morphological appearance of the bone, and can predict subsequent pathological fractures in patients with metastatic cancer [46]. Structural imaging techniques often have limited ability to detect metastatic disease at an early stage and to assess treatment response. Molecular imaging with PET, by contrast, aligns closely with the principles of precision medicine and personalized care, positioning it as a critical modality for tailoring treatment strategies to individual patients with bone metastasis.
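
As a worked example of the SUV definition above, the body-weight-normalized SUV divides the tissue activity concentration by the injected dose per unit body weight. The sketch below assumes activity in kBq/mL, dose in MBq, weight in kg, a tissue density of 1 g/mL, and decay correction of the dose to the scan time using the 18F half-life. The function name is hypothetical, and scanner software may apply decay correction differently.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, in minutes


def suv_bw(activity_kbq_per_ml, injected_dose_mbq, body_weight_kg,
           minutes_since_injection=0.0, half_life_min=F18_HALF_LIFE_MIN):
    """Body-weight-normalized SUV.

    SUV = tissue concentration / (decay-corrected dose / body weight).
    With kBq/mL, MBq and kg the factors of 1000 cancel; assuming a tissue
    density of 1 g/mL, the result is dimensionless.
    """
    # Fraction of injected activity remaining at scan time.
    decay = math.exp(-math.log(2) * minutes_since_injection / half_life_min)
    dose_at_scan_mbq = injected_dose_mbq * decay
    return activity_kbq_per_ml * body_weight_kg / dose_at_scan_mbq
```

For example, a lesion concentration of 5 kBq/mL in a 70 kg patient injected with 350 MBq gives an SUV of 1.0 without decay correction, and 2.0 if the scan is acquired one half-life after injection.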

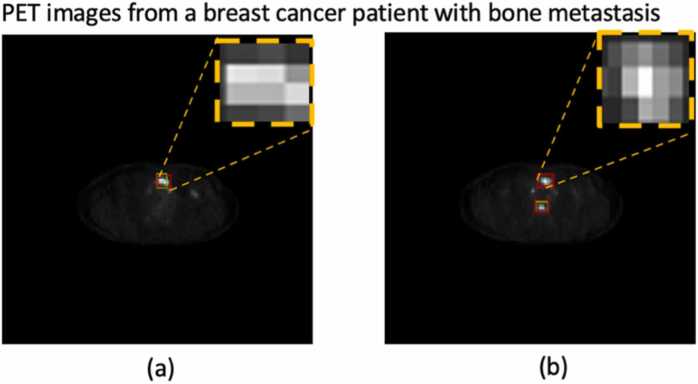

Fig. 3.

An example of automatic bone metastasis detection on PET images by YOLOv5. The green box was drawn by a nuclear medicine physician, and the red box marks the lesion predicted by the algorithm. (a) The predicted lesion matched the physician's outline; (b) the algorithm falsely detected the upper high-uptake lesion as metastatic.
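
Whether a predicted box "matches" the physician's box, as in panel (a), is usually quantified in object detection by intersection over union (IoU). The sketch below is illustrative only: the (x1, y1, x2, y2) box format and the 0.5 matching threshold are common conventions assumed here, not values reported for this figure, and both function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, ref_boxes, threshold=0.5):
    """A prediction counts as a hit if it overlaps any reference box enough."""
    return any(iou(pred_box, ref) >= threshold for ref in ref_boxes)
```

Under this convention, a detection like the one in panel (b), which overlaps no physician-drawn box, would be counted as a false positive.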

In prostate cancer, texture analysis combined with machine learning (a weighted k-nearest neighbors, KNN, algorithm) distinguished metastatic lesions from completely responded sclerotic areas on 68Ga-PSMA PET with good accuracy [29]; the weighted KNN classifier separated the two with an AUC of 0.76. Employing more advanced deep learning methods, Lin et al. constructed multiple deep neural network classifiers to identify bone metastases on SPECT [47]. They first cropped the original whole-body SPECT bone images with a curve-fitting approach to extract the thoracic region. Using the VGG, ResNet, and DenseNet architectures, they built two-way classifiers and tested them on three datasets: the original dataset and two augmented datasets (with and without normalization). Augmentation involved image manipulation, whereas normalization rescaled the radiation dose values in thoracic SPECT images to the interval 0–1. All classifiers performed better on the augmented datasets, whereas normalization did not improve overall performance. After fine-tuning the parameters and defining new network structures, the self-defined 21-layer VGG-based classifier SPECS V21 (SPECT ClaSsifier with VGG-21) performed best at identifying metastasis in augmented thoracic SPECT bone images, with an accuracy of 0.9807, precision of 0.9900, recall of 0.9830, specificity of 0.9890, F1 score of 0.9802, and AUC of 0.9933. False negatives occurred much more often in the augmented dataset with normalization, and the results suggest that dataset size is critical to the performance of deep learning-based SPECT image classification. Lower radionuclide uptake in elderly patients also contributed to misclassification of images with metastases.
The authors also noted that the algorithm could be improved by post-processing after classification to mitigate the effect of inter-patient differences in radiation dose.
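
The metrics reported for SPECS V21 all derive from the binary confusion matrix, and the normalization step can be read as min-max scaling. The sketch below assumes that label 1 means metastasis and that normalization rescales each image independently to [0, 1]. Both function names are hypothetical, and Lin et al.'s exact preprocessing may differ.

```python
import numpy as np


def minmax_normalize(image):
    """Rescale one image's intensities to [0, 1] (assumed per-image scaling)."""
    image = np.asarray(image, dtype=float)
    lo, hi = image.min(), image.max()
    return np.zeros_like(image) if hi == lo else (image - lo) / (hi - lo)


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, specificity and F1 (1 = metastasis)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    # Confusion-matrix counts.
    tp = int(np.sum(y_true & y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also called sensitivity
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": 2 * precision * recall / (precision + recall) if precision + recall else 0.0,
    }
```
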

To quantify the burden of bone metastasis, Lindgren Belal et al. introduced the PET15 index in prostate cancer patients with confirmed bone metastases; it is calculated by dividing the total volume of segmented suspicious uptake on PET by the skeletal volume measured on CT [48]. The skeletal volume was obtained by CNN-based automated segmentation of the thoracic and lumbar spine, sacrum, pelvis, ribs, scapulae, clavicles, and sternum on CT images, which together make up about 33% of the total skeletal volume [49]. This deep learning method was developed as the first step of a research series on the quantification of skeletal metastases. Its accuracy was validated in 5 cases with the Sørensen-Dice index (SDI), which measures the spatial overlap between the CNN-based and manual segmentations. With an SUV threshold of 15, only lesions with an SUV of 15 or more were segmented and counted toward the PET15 index, although this threshold appears somewhat arbitrary: a previous 18F-FDG PET/CT study reported a mean SUVmax of bone metastases of 5.5 ± 2.7 (range 0.4–30.4), well below this cutoff [50]. A manual PET index, reflecting a nuclear medicine physician's binary classification (benign or metastatic) of individual hotspots, was used to represent the clinical interpretation of PET/CT scans. Analogous to the bone scan index (BSI) from planar whole-body bone scans [51], the PET15 index, a quantitative imaging biomarker from PET/CT, was shown not only to serve as a surrogate for tumor burden but also to be significantly associated with overall survival (OS), with a concordance index of 0.70. A similar association was seen in a retrospective clinical study by Harmon et al., in which total functional burden, a metric derived from NaF-PET/CT and assessed after three cycles of androgen deprivation therapy or chemotherapy, predicted progression-free survival (PFS) in men with mCRPC [52].
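
Given an SUV volume on the same grid as the CT skeleton mask, the PET15 index reduces to a voxel ratio. In this sketch, resampling PET to the CT grid and counting only suprathreshold voxels that fall inside the segmented skeleton are assumptions, as is the function name; multiplying the returned ratio by 100 gives a percentage.

```python
import numpy as np


def pet15_index(suv, skeleton_mask, suv_threshold=15.0):
    """Fraction of the segmented skeletal volume with SUV >= threshold.

    suv: 3D SUV volume, assumed resampled to the CT grid.
    skeleton_mask: 3D boolean mask of the CNN-segmented bones.
    Voxel volume cancels out because both volumes share one grid.
    """
    skeleton = skeleton_mask.astype(bool)
    n_skeleton = skeleton.sum()
    if n_skeleton == 0:
        raise ValueError("empty skeleton mask")
    # Suprathreshold uptake restricted to the skeleton (assumption).
    hot = (suv >= suv_threshold) & skeleton
    return hot.sum() / n_skeleton
```

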
The BSI, the manual PET index, and the PET15 index were then compared. None of the pairwise differences in C-index was statistically significant, supporting the credibility of this fully automated measure of metastatic disease burden. Because the skeleton is a three-dimensional structure, the PET15 index may depict metastatic burden more accurately than the more widely accepted BSI, which is derived from two-dimensional planar images.
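
The concordance index (C-index) used to compare these biomarkers measures how often a higher biomarker value corresponds to shorter survival. Below is a sketch of Harrell's C-index for right-censored data, written as an O(n²) double loop for clarity. Ignoring pairs with tied survival times is one common convention and an assumption here; the function name is hypothetical.

```python
import numpy as np


def harrell_c_index(time, event, risk):
    """Harrell's concordance index for right-censored survival data.

    A pair (i, j) is comparable if subject i has the shorter time and an
    observed event. It is concordant if i also has the higher risk score
    (e.g. a higher PET15 index); tied risk scores count as 0.5.
    """
    time, event, risk = map(np.asarray, (time, event, risk))
    concordant, comparable = 0.0, 0
    n = len(time)
    for i in range(n):
        for j in range(n):
            if time[i] < time[j] and event[i]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    return concordant / comparable if comparable else float("nan")
```

A C-index of 0.5 corresponds to random ordering and 1.0 to perfect ordering, so the reported value of 0.70 sits between the two.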

A phase III randomized clinical trial showed that the automated bone scan index (aBSI), which overcomes reader subjectivity, is an independent predictive imaging biomarker of overall survival in castration-resistant prostate cancer with skeletal metastases [53]. This trial supports the concept that quantitative information extracted from an otherwise subjectively interpreted imaging study has substantial prognostic value. Aiming to create a PET/CT counterpart of the aBSI, Lindgren Belal et al. improved on the PET15 index and developed a deep learning-based PET index, an imaging biomarker that assesses whole-body skeletal tumor burden in prostate cancer patients to predict the course of the disease and inform clinical decision-making [54]. A total of 168 patients were included. A 3D U-Net was used to build a CNN that classifies each voxel as bone metastasis or background, forming a lesion mask. The PET index is calculated by summing the volume of all tracer uptake classified as metastatic by the model and dividing it by the total skeletal volume obtained with the CNN-based automated segmentation method described above. Free of reader subjectivity, the PET index expresses skeletal tumor burden as a percentage. In no case did the AI model miss a metastatic lesion that all readers agreed was present. Because false negatives may have more serious clinical consequences, a good model should avoid missing lesions even at the expense of a higher false-positive rate. Spearman correlation coefficients were calculated between the physicians' PET indices, the PET index, and the PET15 index: the PET index showed a fair to moderately strong correlation with the readers' PET indices in the same patients (mean r = 0.69), whereas the PET15 index correlated only fairly with the physicians' PET indices (mean r = 0.49).
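
Spearman's correlation, used above to compare the automated and reader PET indices, is the Pearson correlation of rank-transformed values. A minimal sketch using SciPy's `rankdata` (which assigns average ranks to ties); the function name `spearman_r` is hypothetical, and `scipy.stats.spearmanr` returns the same coefficient directly.

```python
import numpy as np
from scipy.stats import rankdata


def spearman_r(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx = rankdata(x)  # tied values receive the mean of their ranks
    ry = rankdata(y)
    return float(np.corrcoef(rx, ry)[0, 1])
```

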
The PET index was generally higher than the manual PET index, probably reflecting the model's tendency to segment individual lesions as larger volumes or its higher number of false positives. Depending on which physician served as the reference, the sensitivity for lesion detection was 65–76% for the AI model, 68–91% for the physicians, and 44–51% for the PET15 threshold method. These results mark a step toward an objective PET/CT imaging biomarker for skeletal metastases of prostate cancer, and a larger sample could further improve the model's performance. By quantifying tumor burden on PET/CT, the PET index has the potential to risk-stratify patients with metastatic prostate cancer and to provide prognostic information in the clinic.
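
Lesion-level sensitivity, as reported above, depends on how a "detected" lesion is defined. One simple, assumed criterion is shown below: each 3D connected component of the reference mask counts as detected if any of its voxels is predicted positive. The study's actual matching rule may be stricter, and the function name is hypothetical.

```python
import numpy as np
from scipy import ndimage


def lesion_sensitivity(reference_mask, predicted_mask):
    """Fraction of reference lesions (3D connected components) hit by the prediction.

    A reference lesion counts as detected if at least one of its voxels is
    predicted positive (an assumed, lenient hit criterion).
    """
    labels, n = ndimage.label(reference_mask)
    if n == 0:
        return float("nan")
    pred = predicted_mask.astype(bool)
    # Labels of reference lesions that overlap the prediction anywhere.
    hit_labels = np.unique(labels[pred & (labels > 0)])
    return len(hit_labels) / n
```
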

Previous semi-automatic and automatic AI-based PET segmentation approaches are hampered by low contrast and lesion heterogeneity [55]. To overcome these drawbacks, Moreau et al. compared deep learning approaches for segmenting bones and metastatic lesions on PET/CT images in metastatic breast cancer [56]. Both approaches used nnU-Net ("no-new-Net") [57], a convolutional neural network (CNN) implementation that incorporates recent refinements of the original U-Net such as leaky ReLU activation, instance normalization, and padded convolutions. The first used only expert lesion annotations as ground truth (U-NetL), whereas the second also used reference bone masks during training to constrain the network to the region containing lesions (U-NetBL). The models were tested on 24 breast cancer patients. U-NetBL-based bone segmentation achieved a mean DSC of 0.94 ± 0.03, whereas the traditional approach failed to separate metabolically active organs such as the heart and kidneys from the bone segmentation. Of the two methods, U-NetBL performed better for bone lesion segmentation, with a precision of 0.88 and a mean DSC of 0.61 ± 0.16. The authors acknowledged that this segmentation could be improved: large heterogeneity in PET SUVs tends to lower the DSC, because lesions with low 18F-FDG uptake (small SUVs) tend to be missed by the current architecture. Mirroring the PET15 index and the PET index, they also transposed the BSI to PET imaging, calculating the percentage of total skeletal mass occupied by metastases to quantify the metastatic bone burden of breast cancer. The automatic PET Bone index generated by their model showed good agreement with the ground truth.
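
Two of the nnU-Net components named above, instance normalization and leaky ReLU, are simple enough to show directly. This NumPy sketch is not nnU-Net's implementation: it omits the learnable scale and shift of instance normalization, and the epsilon of 1e-5 and negative slope of 0.01 are assumed defaults.

```python
import numpy as np


def instance_norm(x, eps=1e-5):
    """Normalize each (sample, channel) feature map over its spatial axes.

    x has shape (batch, channels, depth, height, width). Learnable scale and
    shift parameters are omitted in this sketch.
    """
    spatial_axes = tuple(range(2, x.ndim))
    mean = x.mean(axis=spatial_axes, keepdims=True)
    var = x.var(axis=spatial_axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def leaky_relu(x, negative_slope=0.01):
    """Pass positive values unchanged; scale negative values by a small slope."""
    return np.where(x > 0, x, negative_slope * x)
```

Unlike batch normalization, instance normalization computes statistics per image and per channel, which suits the small batch sizes typical of 3D medical segmentation.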

5. Summary and outlook

AI enables machines to perform functions traditionally associated with human intelligence, such as perception, reasoning, learning, and decision-making. In bone metastasis, AI can analyze images from modalities including WBS, CT, MRI, and PET/CT: it can detect subtle variations in bone texture, segment lesions, and derive quantitative data from images to serve as biomarkers. AI-based detection of bone metastases from various tumors has shown great promise for improving the diagnosis and treatment of cancer patients. Deep learning algorithms in particular have demonstrated high sensitivity and specificity in analyzing medical images and identifying osseous metastases. Going a step further, machine learning and deep learning methods using omics data as features, rather than medical images, have successfully predicted the onset of metastasis [58].

However, using AI for bone metastasis detection also faces challenges and limitations. Training and validating AI algorithms, especially deep learning algorithms, requires large and diverse datasets. Researchers can try to address this issue with strategies such as data augmentation, cross-modal image translation, multi-center collaboration, and semi-supervised or self-supervised models. As one attempt to address this problem, we previously proposed a multimodal approach that combined deep learning with cross-modal image fusion to detect cervical cancer, demonstrating the clinical efficacy of cross-modal image fusion [59]. Other notable challenges include the risk of bias and overfitting and the absence of standardized evaluation metrics for AI models. The lack of a standardized metric that applies across AI approaches remains a significant hurdle, and even when specific evaluation metrics exist, their appropriateness in the medical domain is often questioned. It is therefore imperative to develop evaluation metrics carefully adapted to medical imaging and the intricacies of its applications, which would greatly enhance the precision and trustworthiness of the algorithms used in this field. Moreover, the black-box nature of deep learning algorithms has attracted much controversy [60]: it may not be possible to understand how an output is determined, especially for deep neural networks (DNNs). This opacity has led to demands for "explainability", such as the European Union's General Data Protection Regulation requirement for transparency (deconvolution of an algorithm's black box) before an algorithm can be used for patient care [61]. These demands gave rise to "interpretable machine learning", an approach that aims to make the decision-making process of neural networks transparent.
Techniques such as heat maps and occlusion testing, in which a region of interest (ROI) is deliberately obscured to observe changes in the model's output, are used to trace the areas the DNN focuses on. These methods are particularly useful in clinical diagnosis because they show clinicians which parts of an image the AI considers most relevant to its prediction, offering insight into the AI's "thought process."
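
Occlusion testing, as described above, can be sketched in a few lines: slide a patch over the image, blank it out, and record how much the model's score drops. Here `score_fn` stands in for any trained classifier (a hypothetical callable returning, say, the predicted probability of metastasis), and the patch size, stride, and zero fill value are illustrative assumptions.

```python
import numpy as np


def occlusion_map(image, score_fn, patch=8, stride=8, fill=0.0):
    """Occlusion sensitivity: drop in model score when each patch is masked.

    image: 2D array. score_fn: callable mapping an image to a scalar score.
    Larger values mean the occluded region mattered more to the prediction.
    """
    base = score_fn(image)
    h, w = image.shape
    heat = np.zeros((h, w))
    counts = np.zeros((h, w))
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = np.array(image, dtype=float, copy=True)
            occluded[top:top + patch, left:left + patch] = fill
            drop = base - score_fn(occluded)
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches; pixels never occluded stay 0.
    return np.divide(heat, counts, out=np.zeros_like(heat), where=counts > 0)
```

Regions where occlusion causes large score drops are those the model relies on; ideally these coincide with the suspicious lesions rather than with irrelevant image content.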

Ultimately, major progress in artificial intelligence will come from systems that combine representation learning with complex reasoning. The exciting prospect ahead, albeit further off than many have forecast, is software that can digest massive datasets quickly, accurately, and inexpensively, and machines that can scrutinize images, discern intricate features, and identify patterns in nature beyond human capabilities. This capability will lay the foundation for truly data-driven, high-performance medicine and reduce our reliance on human resources. The symbiosis of human and machine intelligence will eventually take us well beyond the sum of its parts.

Declaration of Competing Interest

The authors disclose no conflicts.

Acknowledgements

This research was funded in part by the CAMS Innovation Fund for Medical Sciences (2020-I2M-C&T-A-015 to Y.M., CIFMS, 2021-I2M-1-051 to J.Z. and N.W., 2021-I2M-1-052 to Z.W., 2020-I2M-C&T-B-030 to J.Z.), National High Level Hospital Clinical Research Funding (2022-PUMCH-D-007 to T.J.Z. and N.W., 2022-PUMCH-C-033 to N.W.), and the Non-Profit Central Research Institute Fund of the Chinese Academy of Medical Sciences (2019PT320025).

References

- 1. Coleman R.E. Metastatic bone disease: clinical features, pathophysiology and treatment strategies. Cancer Treat Rev. 2001;27. doi: 10.1053/ctrv.2000.0210.

- 2. Cecchini M.G., Wetterwald A., van der Pluijm G., Thalmann G.N. Molecular and biological mechanisms of bone metastasis. EAU Updat Ser. 2005;3. doi: 10.1016/j.euus.2005.09.006.

- 3. Selvaggi G., Scagliotti G.V. Management of bone metastases in cancer: a review. Crit Rev Oncol Hematol. 2005;56. doi: 10.1016/j.critrevonc.2005.03.011.

- 4. Coleman R.E. Clinical features of metastatic bone disease and risk of skeletal morbidity. Clin Cancer Res. 2006;12:6243s–6249s. doi: 10.1158/1078-0432.CCR-06-0931.

- 5. Batson O.V. The function of the vertebral veins and their role in the spread of metastases. Ann Surg. 1940;112. doi: 10.1097/00000658-194007000-00016.

- 6. Cuccurullo V., Lucio Cascini G., Tamburrini O., Rotondo A., Mansi L. Bone metastases radiopharmaceuticals: an overview. Curr Radiopharm. 2013;6. doi: 10.2174/1874471011306010007.

- 7. O'Sullivan G.J. Imaging of bone metastasis: an update. World J Radiol. 2015;7. doi: 10.4329/wjr.v7.i8.202.

- 8. Emon B., Bauer J., Jain Y., Jung B., Saif T. Biophysics of tumor microenvironment and cancer metastasis - a mini review. Comput Struct Biotechnol J. 2018;16. doi: 10.1016/j.csbj.2018.07.003.

- 9. Migliorini F., Maffulli N., Trivellas A., Eschweiler J., Tingart M., Driessen A. Bone metastases: a comprehensive review of the literature. Mol Biol Rep. 2020;47. doi: 10.1007/s11033-020-05684-0.

- 10. Haug C.J., Drazen J.M. Artificial intelligence and machine learning in clinical medicine, 2023. N Engl J Med. 2023;388:1201–1208. doi: 10.1056/NEJMra2302038.

- 11. Van den Wyngaert T., Strobel K., Kampen W.U., Kuwert T., van der Bruggen W., Mohan H.K., et al. The EANM practice guidelines for bone scintigraphy. Eur J Nucl Med Mol Imaging. 2016;43. doi: 10.1007/s00259-016-3415-4.

- 12. Yang H.L., Liu T., Wang X.M., Xu Y., Deng S.M. Diagnosis of bone metastases: a meta-analysis comparing 18FDG PET, CT, MRI and bone scintigraphy. Eur Radiol. 2011;21. doi: 10.1007/s00330-011-2221-4.

- 13. Zhao Z., Pi Y., Jiang L., Xiang Y., Wei J., Yang P., et al. Deep neural network based artificial intelligence assisted diagnosis of bone scintigraphy for cancer bone metastasis. Sci Rep. 2020;10. doi: 10.1038/s41598-020-74135-4.

- 14. Horikoshi H., Kikuchi A., Onoguchi M., Sjöstrand K., Edenbrandt L. Computer-aided diagnosis system for bone scintigrams from Japanese patients: importance of training database. Ann Nucl Med. 2012;26. doi: 10.1007/s12149-012-0620-5.

- 15. Nakajima K., Nakajima Y., Horikoshi H., Ueno M., Wakabayashi H., Shiga T., et al. Enhanced diagnostic accuracy for quantitative bone scan using an artificial neural network system: a Japanese multi-center database project. EJNMMI Res. 2013;3:1–9. doi: 10.1186/2191-219X-3-83.

- 16. Papandrianos N., Papageorgiou E.I., Anagnostis A. Development of convolutional neural networks to identify bone metastasis for prostate cancer patients in bone scintigraphy. Ann Nucl Med. 2020;34. doi: 10.1007/s12149-020-01510-6.

- 17. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit. 2016. doi: 10.1109/CVPR.2016.90.

- 18. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. 3rd Int Conf Learn Represent (ICLR 2015), Conf Track Proc. 2015.

- 19. Papandrianos N., Papageorgiou E., Anagnostis A., Feleki A. A deep-learning approach for diagnosis of metastatic breast cancer in bones from whole-body scans. Appl Sci. 2020;10:997. doi: 10.3390/app10030997.

- 20. Huang G., Liu Z., van der Maaten L., Weinberger K.Q. Densely connected convolutional networks. Proc 30th IEEE Conf Comput Vis Pattern Recognit (CVPR 2017). 2017. doi: 10.1109/CVPR.2017.243.

- 21. Han S., Oh J.S., Lee J.J. Diagnostic performance of deep learning models for detecting bone metastasis on whole-body bone scan in prostate cancer. Eur J Nucl Med Mol Imaging. 2022;49. doi: 10.1007/s00259-021-05481-2.

- 22. Ntakolia C., Diamantis D.E., Papandrianos N., Moustakidis S., Papageorgiou E.I. A lightweight convolutional neural network architecture applied for bone metastasis classification in nuclear medicine: a case study on prostate cancer patients. Healthc. 2020;8. doi: 10.3390/healthcare8040493.

- 23. Liu Y., Yang P., Yong P., Jiang L., Zhong X., Cheng J., et al. Automatic identification of suspicious bone metastatic lesions in bone scintigraphy using convolutional neural network. BMC Med Imaging. 2021;21:1–9. doi: 10.1186/s12880-021-00662-9.

- 24. Liao C.W., Hsieh T.C., Lai Y.C., Hsu Y.J., Hsu Z.K., Chan P.K., et al. Artificial intelligence of object detection in skeletal scintigraphy for automatic detection and annotation of bone metastases. Diagnostics. 2023;13. doi: 10.3390/diagnostics13040685.

- 25. Talbot J.N., Paycha F., Balogova S. Diagnosis of bone metastasis: recent comparative studies of imaging modalities. Q J Nucl Med Mol Imaging. 2011;55:374–410.

- 26. Kumar V., Gu Y., Basu S., Berglund A., Eschrich S.A., Schabath M.B., et al. Radiomics: the process and the challenges. Magn Reson Imaging. 2012;30. doi: 10.1016/j.mri.2012.06.010.

- 27. Hong J.H., Jung J.Y., Jo A., Nam Y., Pak S., Lee S.Y., et al. Development and validation of a radiomics model for differentiating bone islands and osteoblastic bone metastases at abdominal CT. Radiology. 2021;299. doi: 10.1148/radiol.2021203783.

- 28. Yin P., Mao N., Zhao C., Wu J., Chen L., Hong N. A triple-classification radiomics model for the differentiation of primary chordoma, giant cell tumor, and metastatic tumor of sacrum based on T2-weighted and contrast-enhanced T1-weighted MRI. J Magn Reson Imaging. 2019;49. doi: 10.1002/jmri.26238.

- 29. Acar E., Leblebici A., Ellidokuz B.E., Başbinar Y., Kaya G.Ç. Full paper: machine learning for differentiating metastatic and completely responded sclerotic bone lesion in prostate cancer: a retrospective radiomics study. Br J Radiol. 2019;92. doi: 10.1259/bjr.20190286.

- 30. Filograna L., Lenkowicz J., Cellini F., Dinapoli N., Manfrida S., Magarelli N., et al. Identification of the most significant magnetic resonance imaging (MRI) radiomic features in oncological patients with vertebral bone marrow metastatic disease: a feasibility study. Radiol Med. 2019;124. doi: 10.1007/s11547-018-0935-y.

- 31. Wang Y., Yu B., Zhong F., Guo Q., Li K., Hou Y., et al. MRI-based texture analysis of the primary tumor for pre-treatment prediction of bone metastases in prostate cancer. Magn Reson Imaging. 2019;60. doi: 10.1016/j.mri.2019.03.007.

- 32. Gandaglia G., Karakiewicz P.I., Briganti A., Passoni N.M., Schiffmann J., Trudeau V., et al. Impact of the site of metastases on survival in patients with metastatic prostate cancer. Eur Urol. 2015;68. doi: 10.1016/j.eururo.2014.07.020.

- 33. Hayakawa T., Tabata K., Tsumura H., Kawakami S., Katakura T., Hashimoto M., et al. Size of pelvic bone metastasis as a significant prognostic factor for metastatic prostate cancer patients. Jpn J Radiol. 2020;38. doi: 10.1007/s11604-020-01004-5.

- 34. Ren M., Yang H., Lai Q., Shi D., Liu G., Shuang X., et al. MRI-based radiomics analysis for predicting the EGFR mutation based on thoracic spinal metastases in lung adenocarcinoma patients. Med Phys. 2021;48. doi: 10.1002/mp.15137.

- 35. Fan Y., Dong Y., Yang H., Chen H., Yu Y., Wang X., et al. Subregional radiomics analysis for the detection of the EGFR mutation on thoracic spinal metastases from lung cancer. Phys Med Biol. 2021;66. doi: 10.1088/1361-6560/ac2ea7.

- 36. Perrin R.G., Laxton A.W. Metastatic spine disease: epidemiology, pathophysiology, and evaluation of patients. Neurosurg Clin N Am. 2004;15. doi: 10.1016/j.nec.2004.04.018.

- 37. Lang N., Zhang Y., Zhang E., Zhang J., Chow D., Chang P., et al. Differentiation of spinal metastases originated from lung and other cancers using radiomics and deep learning based on DCE-MRI. Magn Reson Imaging. 2019;64. doi: 10.1016/j.mri.2019.02.013.

- 38. Karthi M., Muthulakshmi V., Priscilla R., et al. Evolution of yolo-v5 algorithm for object detection: automated detection of library books and performace validation of dataset. In: 2021 International Conference on Innovative Computing, Intelligent Communication and Smart Electrical Systems (ICSES). IEEE; 2021. pp. 1–6.

- 39. Noguchi S., Nishio M., Sakamoto R., Yakami M., Fujimoto K., Emoto Y., et al. Deep learning–based algorithm improved radiologists' performance in bone metastases detection on CT. Eur Radiol. 2022;32. doi: 10.1007/s00330-022-08741-3.

- 40. Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. 3D U-net: learning dense volumetric segmentation from sparse annotation. Lect Notes Comput Sci. 2016. doi: 10.1007/978-3-319-46723-8_49.

- 41. Perez-Lopez R., Lorente D., Blackledge M.D., Collins D.J., Mateo J., Bianchini D., et al. Volume of bone metastasis assessed with whole-body diffusion-weighted imaging is associated with overall survival in metastatic castration-resistant prostate cancer. Radiology. 2016;280. doi: 10.1148/radiol.2015150799.

- 42. Liu X., Han C., Cui Y., Xie T., Zhang X., Wang X. Detection and segmentation of pelvic bones metastases in MRI images for patients with prostate cancer based on deep learning. Front Oncol. 2021;11. doi: 10.3389/fonc.2021.773299.

- 43. Hu Y., Su F., Dong K., Wang X., Zhao X., Jiang Y., et al. Deep learning system for lymph node quantification and metastatic cancer identification from whole-slide pathology images. Gastric Cancer. 2021;24. doi: 10.1007/s10120-021-01158-9.

- 44. Zhu Y., Wei R., Gao G., Ding L., Zhang X., Wang X., et al. Fully automatic segmentation on prostate MR images based on cascaded fully convolution network. J Magn Reson Imaging. 2019;49. doi: 10.1002/jmri.26337.

- 45. Lin E.C., Alavi A. PET and PET/CT: a clinical guide. 2006.

- 46. Ming Y., Wu N., Qian T., Li X., Wan D.Q., Li C., et al. Progress and future trends in PET/CT and PET/MRI molecular imaging approaches for breast cancer. Front Oncol. 2020;10. doi: 10.3389/fonc.2020.01301.

- 47. Lin Q., Li T., Cao C., Cao Y., Man Z., Wang H. Deep learning based automated diagnosis of bone metastases with SPECT thoracic bone images. Sci Rep. 2021;11. doi: 10.1038/s41598-021-83083-6.

- 48. Lindgren Belal S., Sadik M., Kaboteh R., Hasani N., Enqvist O., Svärm L., et al. 3D skeletal uptake of 18F sodium fluoride in PET/CT images is associated with overall survival in patients with prostate cancer. EJNMMI Res. 2017;7. doi: 10.1186/s13550-017-0264-5.

- 49. Lindgren Belal S., Sadik M., Kaboteh R., Enqvist O., Ulén J., Poulsen M.H., et al. Deep learning for segmentation of 49 selected bones in CT scans: first step in automated PET/CT-based 3D quantification of skeletal metastases. Eur J Radiol. 2019;113. doi: 10.1016/j.ejrad.2019.01.028.

- 50. Li X., Wu N., Zhang W., Liu Y., Ming Y. Differential diagnostic value of 18F-FDG PET/CT in osteolytic lesions. J Bone Oncol. 2020;24. doi: 10.1016/j.jbo.2020.100302.

- 51. Imbriaco M., Larson S.M., Yeung H.W., Mawlawi O.R., Erdi Y., Venkatraman E.S., et al. A new parameter for measuring metastatic bone involvement by prostate cancer: the bone scan index. Clin Cancer Res. 1998;4.

- 52. Harmon S.A., Perk T., Lin C., Eickhoff J., Choyke P.L., Dahut W.L., et al. Quantitative assessment of early [18F]sodium fluoride positron emission tomography/computed tomography response to treatment in men with metastatic prostate cancer to bone. J Clin Oncol. 2017;35. doi: 10.1200/JCO.2017.72.2348.

- 53. Ulmert D., Kaboteh R., Fox J.J., Savage C., Evans M.J., Lilja H., et al. A novel automated platform for quantifying the extent of skeletal tumour involvement in prostate cancer patients using the bone scan index. Eur Urol. 2012;62. doi: 10.1016/j.eururo.2012.01.037.

- 54. Lindgren Belal S., Larsson M., Holm J., Buch-Olsen K.M., Sörensen J., Bjartell A., et al. Automated quantification of PET/CT skeletal tumor burden in prostate cancer using artificial intelligence: the PET index. Eur J Nucl Med Mol Imaging. 2023. doi: 10.1007/s00259-023-06108-4.

- 55. Foster B., Bagci U., Mansoor A., Xu Z., Mollura D.J. A review on segmentation of positron emission tomography images. Comput Biol Med. 2014;50. doi: 10.1016/j.compbiomed.2014.04.014.

- 56. Moreau N., Rousseau C., Fourcade C., Santini G., Ferrer L., Lacombe M., et al. Deep learning approaches for bone and bone lesion segmentation on 18FDG PET/CT imaging in the context of metastatic breast cancer. Annu Int Conf IEEE Eng Med Biol Soc. 2020. doi: 10.1109/EMBC44109.2020.9175904.

- 57. Isensee F., Jaeger P.F., Kohl S.A.A., Petersen J., Maier-Hein K.H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. 2021;18. doi: 10.1038/s41592-020-01008-z.

- 58. Albaradei S., Thafar M., Alsaedi A., Van Neste C., Gojobori T., Essack M., et al. Machine learning and deep learning methods that use omics data for metastasis prediction. Comput Struct Biotechnol J. 2021;19. doi: 10.1016/j.csbj.2021.09.001.

- 59. Ming Y., Dong X., Zhao J., Chen Z., Wang H., Wu N. Deep learning-based multimodal image analysis for cervical cancer detection. Methods. 2022;205. doi: 10.1016/j.ymeth.2022.05.004.

- 60. Castelvecchi D. Can we open the black box of AI? Nature. 2016;538. doi: 10.1038/538020a.

- 61. Kuang C. Can A.I. be taught to explain itself? The New York Times Magazine. 2017.