Summary:

ChatGPT is a cutting-edge language model developed by OpenAI with the potential to impact all facets of plastic surgery from research to clinical practice. New applications for ChatGPT are emerging at a rapid pace in both the scientific literature and popular media. It is important for clinicians to understand the capabilities and limitations of these tools before patient-facing implementation. In this article, the authors explore some of the technical details behind ChatGPT: what it is, and what it is not. As with any emerging technology, attention should be given to the ethical and health equity implications of this technology on our plastic surgery patients. The authors explore these concerns within the framework of the foundational principles of biomedical ethics: patient autonomy, nonmaleficence, beneficence, and justice. ChatGPT and similar intelligent conversation agents have incredible promise in the field of plastic surgery but should be used cautiously and sparingly in their current form. To protect patients, it is imperative that societal guidelines for the safe use of this rapidly developing technology are developed.

Takeaways

Question: What are the ethical implications of using ChatGPT in plastic surgery clinical practice?

Findings: ChatGPT has impressive capabilities; however, it is not without limitations. The model may produce inaccurate, irrelevant, or frankly biased outputs. Ethical implementation must involve patient consent for all interactions, avoidance of chatbot use for information gathering or medical advice, and subjecting AI-generated content to clinician review before clinical deployment.

Meaning: ChatGPT and similar intelligent conversation agents have incredible promise in the field of plastic surgery but should be used cautiously and sparingly in their current form. Societal guidelines for the safe and ethical use of this rapidly developing technology are necessary.

INTRODUCTION

ChatGPT is a cutting-edge language model trained by OpenAI (San Francisco, Calif.). It has taken the internet by storm, with new applications emerging daily across a wide range of professional fields, including healthcare, and it has the potential to dramatically impact plastic surgery training, education, research, and clinical practice. The promise of artificial intelligence (AI) in plastic surgery is alluring; however, it is important to recognize the potential ethical landmines that exist. Ethical concerns around machine learning regarding informed consent, quality assurance of source data and algorithms, risk of bias, and the integrity of the physician-patient relationship have been raised in the plastic surgery literature.1–3 With the advent of “intelligent” chatbots like ChatGPT, it is critical that the ethical implications of these technologies are considered. Prior studies have addressed the ethical considerations of ChatGPT related to scientific publishing, authorship, and plagiarism as they relate to plastic surgery. It is imperative that the implications for plastic surgery clinical practice and surgical education are also addressed, including the ways this technology may be used and abused.

WHAT IS CHATGPT?

Studies related to ChatGPT are emerging rapidly in the medical literature, and there appears to be confusion about what exactly this technology is and what it is not. ChatGPT is the latest version of OpenAI’s generative pretrained transformer (GPT) language model that has undergone several iterations since its inception in 2018. Simply put, ChatGPT works by predicting the most likely next word in a sentence based on a prompt from a user. With iterative prediction, the model is then able to generate an entire coherent response. Although language models themselves are not new, what sets ChatGPT apart from earlier iterations is the massive amount of internet-derived text data that it has been trained on, as well as OpenAI’s implementation of “human annotators” to provide feedback and correct outputs of the model during training, a process known as reinforcement learning from human feedback. This has resulted in a powerful natural language processor with tremendous potential for plastic surgery research and clinical practice.
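To make the idea of iterative next-word prediction concrete, the following is a minimal sketch in Python. It is not a description of how ChatGPT is implemented: it assumes a toy word-pair (bigram) frequency table in place of a transformer, and the three-sentence corpus and the function names `train_bigram` and `generate` are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_new_words=10):
    """Extend the prompt one word at a time, always taking the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            # The last word was never followed by anything in training: stop.
            break
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


# Hypothetical toy training text (an assumption for illustration only).
corpus = [
    "the flap is well perfused",
    "the flap is viable",
    "the flap is well perfused today",
]
model = train_bigram(corpus)
print(generate(model, "the flap"))
```

With this toy corpus, the prompt "the flap" is extended to "the flap is well perfused today". The sketch produces fluent-looking text purely from word frequencies, without any representation of whether the statement is true for a given patient, which is the distinction the authors draw between generating language and possessing medical knowledge.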

Although ChatGPT has demonstrated impressive feats, such as achieving a passing score on the United States Medical Licensing Examination and writing convincing scientific abstracts, this does not imply the existence of general medical knowledge, or the ability to apply this knowledge in future tasks.4,5 The quality and accuracy of any model’s outputs are only as good as its training data. The large dataset used to train ChatGPT is derived from “internet dumps” obtained via web scraping from open source datasets such as Common Crawl and OpenWebText. These datasets are composed of data sourced from over 20 million URLs, including everything from PubMed and Google Scholar to Wikipedia, Reddit, and numerous others. Importantly, the majority of medical literature exists behind a paywall, meaning ChatGPT would likely have access only to the publicly available abstract or summary. These limitations must be considered before this tool is implemented in a clinical setting.

This technology in its current form certainly has its limitations; however, improvements and new iterations are emerging at a rapid pace. A tool like ChatGPT was nearly inconceivable just a few years ago, and now it seems that almost no application in the coming years is far-fetched. As these tools continue to gain momentum and popularity, it is imperative that the potential ethical implications are considered thoroughly.

ETHICAL IMPLICATIONS OF CHATGPT

The ethical implications of ChatGPT related to scientific publishing, authorship, and plagiarism in plastic surgery research have been previously addressed in the literature. Official statements from Plastic and Reconstructive Surgery and Plastic and Reconstructive Surgery Global Open have been released addressing ethical use guidelines for large language models when publishing in either of these journals.6,7 The ethical implications of ChatGPT related to clinical practice and surgical education in plastic surgery also must be considered.

The ethical implications of ChatGPT and its use in clinical practice can be viewed through the four foundational ethical principles: respect for autonomy, nonmaleficence, beneficence, and justice. Patient autonomy (the ability to make informed decisions regarding one’s healthcare) is a key bioethical principle. One potential implication of AI chatbots in this domain is whether patients will be able to make the decision to “opt out” of interacting with these tools. AI chatbots have already been deployed in patient-facing domains to assist with appointment scheduling, screening postoperative questions, and even providing mental health counseling and support.8–10 Advanced chatbots like ChatGPT could expand these applications significantly, and even offer our plastic surgery patients a more dynamic and “human-like” interaction. It is tempting, and not far-fetched, to imagine these tools fully offloading some of these more mundane clinical tasks in plastic surgery. However, not all patients will be comfortable interacting and sharing medical information with these chatbots, and without the ability to opt out, some may be less inclined to seek care.

The application of ChatGPT as a conversational patient-education tool is alluring. A patient seeking information regarding abdominoplasty or breast reduction could, in theory, interface with an advanced AI chatbot and “discuss” the basics of the procedure, including indications, postoperative expectations, potential complications, and outcomes. This may foster more well-informed and fruitful preoperative consultations, and potentially limit the number of preoperative visits necessary. However, can we be certain that the information our patients are receiving is reliable? Using ChatGPT in this way as a “search engine” often produces a large amount of incorrect information. If asked for a reference for a given response, ChatGPT may even confabulate a list of false citations. As the model continues to evolve, ChatGPT may ultimately be able to assign a “level of confidence” for a provided answer, or an accurate list of supporting references, but in its current form, it cannot be relied upon for accuracy or completeness.11 The principle of nonmaleficence requires that one does not intentionally inflict harm on a patient, including that inflicted through negligence or omission. For a patient to make a truly informed healthcare decision, the information they base this decision on must be reliable and factually accurate. In the absence of clinician supervision of our patients’ interactions with these tools, patients may be exposed to inaccurate, mischaracterized, and biased information that may detrimentally impact their healthcare decision-making capacity.

One of the most compelling aspects of ChatGPT is its ability to generate text that is nearly indistinguishable from human-generated content. Earlier generation chatbots have already been used to answer common treatment-related questions and provide emotional support for patients, with high rates of patient satisfaction.8–10 A similar approach could be used for patients undergoing breast reconstruction, providing answers to common postoperative questions and even emotional support throughout reconstruction. But what happens when tools like ChatGPT reach this near-human threshold and patients can no longer clearly distinguish whether they are interacting with the clinic staff, their plastic surgeon, or a machine? Emotional support web applications have recently experimented with replacing human counselors with intelligent chatbots, resulting in feelings of distrust and betrayal from their users.12,13 The bioethical principle of beneficence—acting in the best interest of the patient—is at the core of the physician-patient relationship. If these tools are to be deployed widely in the clinic setting, it is imperative that intelligent chatbots are not used as substitutes for this physician-patient relationship. Patients must interface with these tools without deception or coercion.

Concerns about fair and equitable use of tools like ChatGPT have raised several important considerations related to the bioethical principle of justice. ChatGPT is trained on a massive volume of internet data, which are inherently fraught with bias.14,15 The model is prone to producing answers that align with the most represented demographic or viewpoint expressed in this dataset. ChatGPT will tend to amplify what is frequent and dampen what is rare. The implications of this tendency are highly concerning from an ethical perspective. If individuals of certain ethnic backgrounds or socioeconomic statuses are underrepresented or biased against within the training dataset, plastic surgery patients from these groups interfacing with ChatGPT may receive answers that are inaccurate, irrelevant, or frankly biased. Just implementation of these tools requires a thorough understanding of training data and frequent monitoring to ensure our patients are receiving unbiased information.
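The tendency to amplify what is frequent and dampen what is rare can be illustrated with a deliberately simplified Python sketch. It assumes a predictor that always returns the single most common answer in its training data; real language models sample from a probability distribution, so the effect in practice is a skew rather than a complete erasure. The labels "guidance A" and "guidance B", the 70/30 split, and the function name `most_likely_answer` are hypothetical.

```python
from collections import Counter


def most_likely_answer(training_answers):
    """Return the single most frequent answer seen in training."""
    return Counter(training_answers).most_common(1)[0][0]


# Hypothetical training data: the minority-relevant answer is 30% of examples.
training_answers = ["guidance A"] * 70 + ["guidance B"] * 30

# Ask the predictor the same question 100 times.
outputs = [most_likely_answer(training_answers) for _ in range(100)]

share_in_training = training_answers.count("guidance B") / len(training_answers)
share_in_output = outputs.count("guidance B") / len(outputs)

print(f"Minority answer share in training data: {share_in_training:.0%}")
print(f"Minority answer share in model output:  {share_in_output:.0%}")
```

Under these assumptions, an answer that makes up 30% of the training data never appears in the output at all. This is the kind of gap that the monitoring recommended by the authors would need to detect.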

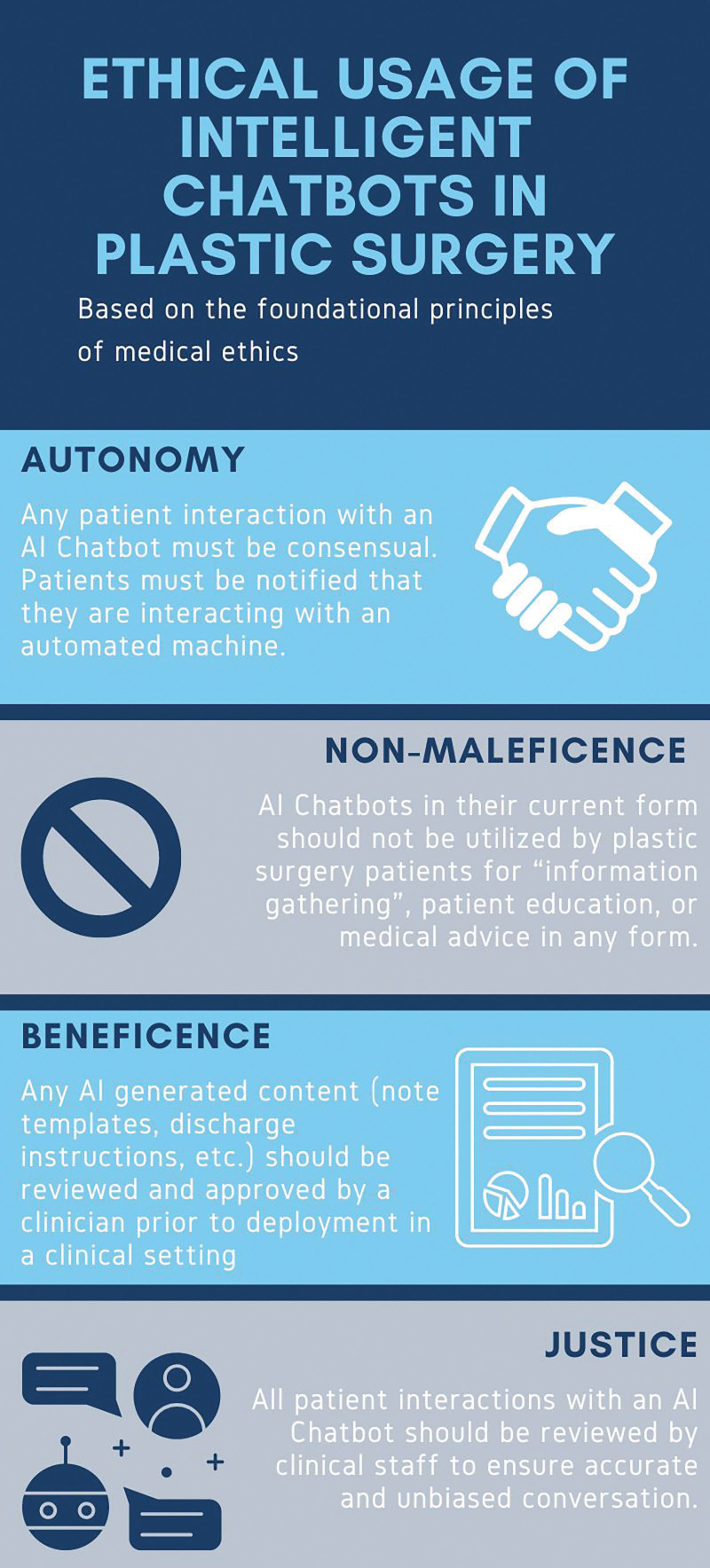

ChatGPT and similar intelligent conversation agents have incredible promise in the field of plastic surgery but should be used cautiously and sparingly in their current form. To protect our patients, it is incumbent on our specialty to develop societal guidelines for the safe and ethical use of this rapidly developing technology. In the authors’ view, four key principles should be followed (Fig. 1):

Fig. 1. Infographic displaying the authors’ proposed guidelines for ethical use of AI chatbots in plastic surgery clinical practice.

Autonomy: Any patient interaction with an AI chatbot must be consensual. Patients must be notified that they are interacting with an automated machine.

Nonmaleficence: AI chatbots in their current form should not be used by plastic surgery patients for “information gathering,” patient education, or medical advice in any form.

Beneficence: Any AI-generated content (note templates, discharge instructions, etc) should be reviewed and approved by a clinician before deployment in a clinical setting.

Justice: All patient interactions with an AI chatbot should be reviewed by clinical staff to ensure accurate and unbiased conversation.

POTENTIAL ETHICAL USES OF CHATGPT

Although ChatGPT must be used judiciously in its current form, the authors believe that several exciting, and ethical, uses are within reach. In the realm of plastic surgery education, its interactive capabilities make ChatGPT an ideal tool for didactic teaching sessions. As a language model, ChatGPT can simulate real-world conversations and guide trainees through different clinical scenarios. After being given a patient prompt, ChatGPT could present a case, ask follow-up questions, and challenge responses based on input from the trainee, just as an oral board examiner would. In this application, ChatGPT is used in its intended form as a conversational agent rather than relying on the model’s likely incomplete knowledge of our specialized field. The trainee or junior attending preparing for oral boards provides the surgical “expertise” during these practice sessions, while the model acts as a question generator.

ChatGPT could also be incredibly useful in developing plastic surgery practice website material and social media posts. A surgeon developing an internet presence could provide ChatGPT with an outline of the information they would like to convey on their website, and ChatGPT could generate and edit this text in seconds. After the draft has been carefully reviewed for inaccuracies, tone, formality, and reading level, ChatGPT could tailor and restructure the text accordingly. Similarly, ChatGPT could save young surgeons tremendous amounts of time by generating text for hundreds of unique social media posts in the tone and structure of their choosing.

Finally, if ChatGPT is to be integrated into our clinical workflow in any capacity, it is critical to consider patient privacy and compliance with HIPAA regulations. Patient information shared with chatbots must be safeguarded to prevent unauthorized access or disclosure. One way this may be addressed is by direct integration of AI chatbots into the electronic medical record. In 2020, a California-based company, Asparia, developed a chatbot that could be fully integrated with electronic medical records like Epic.16 This integration allows for seamless communication between the chatbot and the patient’s medical record, ensuring that patient information is securely transmitted and accessed only by authorized personnel. A similar integration could open the door for safe use of ChatGPT to generate clinic notes, discharge instructions, and operative report templates, provided that all materials are screened by a clinician before submission. Although this integration does not exist for ChatGPT currently, such an approach could help ensure robust security and privacy, maintaining patient confidentiality and trust.

CONCLUSIONS

ChatGPT is a powerful emerging tool with the potential to revolutionize various fields, including plastic surgery. As with any advanced technology, there are ethical implications that must be carefully considered. Patient autonomy, nonmaleficence, beneficence, and justice must be at the forefront of any application of ChatGPT in plastic surgery. Although caution is necessary, the potential benefits of this technology are vast, and with thoughtful implementation and continued oversight, ChatGPT has the potential to significantly impact the plastic surgery field in all of its domains.

DISCLOSURE

The authors have no financial interest to declare in relation to the content of this article.

ACKNOWLEDGMENT

This study is exempt from institutional review board evaluation due to the nature of the study design.

Footnotes

Disclosure statements are at the end of this article, following the correspondence information.

REFERENCES

1. Koimizu J, Numajiri T, Kato K. Machine learning and ethics in plastic surgery. Plast Reconstr Surg Glob Open. 2019;7:e2162.

2. Jarvis T, Thornburg D, Rebecca AM, et al. Artificial intelligence in plastic surgery: current applications, future directions, and ethical implications. Plast Reconstr Surg Glob Open. 2020;8:e3200.

3. Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. EBioMedicine. 2023;90:104512.

4. Wu K, Peng Y, Wu Y, et al. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2021;7:e32450.

5. Gao CA, Howard FM, Markov NS, et al. Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. bioRxiv. 1610;2022:23–52.

6. Weidman AA, Valentine L, Chung KC, et al. OpenAI’s ChatGPT and its role in plastic surgery research. Plast Reconstr Surg. 2023;151:1111–1113.

7. ElHawary H, Gorgy A, Janis JE. Large language models in academic plastic surgery: the way forward. Plast Reconstr Surg Glob Open. 2023;11:e4949.

8. Chaix B, Bibault J, Pienkowski A, et al. When chatbots meet patients: one-year prospective study of conversations between patients with breast cancer and a chatbot. JMIR Cancer. 2019;5:e12856.

9. Goldenthal SB, Portney D, Steppe E, et al. Assessing the feasibility of a chatbot after ureteroscopy. Mhealth. 2019;5:8.

10. Rasouli MR, Menendez ME, Sayadipour A, et al. A novel, automated text-messaging system is effective in patients undergoing total joint arthroplasty. J Arthroplasty. 2019;34:1180–1185.

11. Kadavath S, Conerly T, Askell A, et al. Language models (mostly) know what they know. arXiv preprint arXiv:2207.05221. 2022.

12. Haupt CE, Marks M. AI-generated medical advice—GPT and beyond. JAMA. 2023;329:1349.

13. Ingram D. A mental health tech company ran an AI experiment on real users. NBC News. January 14, 2023. Available at https://www.nbcnews.com/tech/internet/chatgpt-ai-experiment-mental-health-tech-app-koko-rcna65110

14. Kandpal N, Deng H, Roberts A, et al. Large language models struggle to learn long-tail knowledge. arXiv preprint arXiv:2211.08411. 2022.

15. Schramowski P, Turan C, Andersen N, et al. Large pre-trained language models contain human-like biases of what is right and wrong to do. Nat Mach Intell. 2022;4:258–268.

16. Hung C. Asparia chatbot integration with epic yields a seamless experience for patients and epic users. HealthcareITToday. September 22, 2020.