Abstract

This review critically examines state-of-the-art forest fire detection systems that are based on deep learning methods. Forest fire incidents have a significant negative impact on the economy, the environment, and society. One crucial mitigation measure is an effective forest fire detection system that can automatically notify the relevant parties of a forest fire as early as possible. This review examines in detail 39 research articles, published between January 2018 and 2023, that implemented deep learning (DL) models for forest fire detection. An in-depth analysis was performed to identify the quantity and type of data used, covering image and video datasets, as well as the data augmentation methods and the deep model architectures. The analysis is structured into five subsections, each of which focuses on a specific application of DL in the context of forest fire detection: 1) classification, 2) detection, 3) detection and classification, 4) segmentation, and 5) segmentation and classification. To compare model performance, the methods were evaluated using metrics such as accuracy, mean average precision (mAP), F1-score, and mean pixel accuracy (MPA). The findings show that DL models for forest fire surveillance systems have yielded favourable outcomes, with the majority of studies achieving accuracy rates that exceed 90%. To further enhance the efficacy of these models, future research can explore optimal fine-tuning of the hyper-parameters, integrate various satellite data, implement generative data augmentation techniques, and refine the DL model architecture. In conclusion, this paper highlights the potential of deep learning methods to enhance forest fire detection, which is crucial for forest fire management and mitigation.

Keywords: Forest preservation, Forest fire, Artificial intelligence and deep learning

1. Introduction

Forest fires are natural or man-made phenomena that occur in natural ecosystems and usually spread uncontrollably [1]. According to Arteaga et al. [2], the magnitude, intensity, and duration of forest fires have continually increased in recent years. Continued climate change is projected to raise the risk of forest fire in many parts of the world, mostly as a result of extended warm and dry periods coupled with increased lightning intensity [[3], [4], [5]]. With roughly 4 billion hectares of forest around the world, the negative impact of forest fires on the environment and the global community cannot be overstated [6]. From 2002 to 2016, an annual average of more than 420 million hectares of forest was reported to have burned globally [3,7]. Forest fires, which are also frequently referred to as wildfires, are a worldwide occurrence with significant implications for ecosystems, inhabitants, and assets [8]. Forests, meanwhile, are typically utilized for agriculture, logging, mining, and the establishment of infrastructure such as power plants, dams, and roads [9]. In addition, the loss of forest due to fire worsens the impact of global warming [10]. Furthermore, unpredictable and out-of-control forest fires can pose a serious hazard to the lives of communities [11].

Forest fires are typically regarded as inevitable calamities, particularly in the summer and during periods of drought [9]. Both natural and controlled forest fires significantly influence natural forest ecosystems [12]. There are three main categories of forest fires: crown fires, surface fires, and ground fires [13]. A comprehensive explanation of these three categories can be found in Bennett et al. [14]. Ground fires primarily burn the duff layer without producing any visible flame. This type of fire can smoulder for an extended period of time with very little smoke. Surface fires, in contrast, produce flaming fronts that consume various types of vegetation, including needles, moss, lichen, shrubs, and small trees. Of the three types of forest fire, the surface fire is the most common and is characterized by high-intensity flames, which can lead to the formation of crown fires [13]. Additionally, surface fires can transition into ground fires, while crown fires become surface fires upon reaching ground level [15]. Crown fires can be either passive or active, with passive fires involving the ignition of individual trees or groups of trees. The intensity of these fires is commonly high and depends on various factors, including topography, wind patterns, and the density of trees [14]. The classification of forest fires by size is commonly referred to as the size class, which facilitates the comprehension of fire attributes and of the resources necessary for their management. The size class of a forest fire is typically determined by the fire's area, and the precise definition may differ from one country to another. Table 1 shows the forest fire classification according to size class in the United States of America.

Table 1.

Forest fire classification according to the size class [16].

| Class | Size of Forest Fire (acres) |
|---|---|
| Class A | <0.25 |
| Class B | 0.25–9.9 |
| Class C | 10.0–99.9 |
| Class D | 100–299 |
| Class E | 300–999 |
| Class F | 1000 or more |

Accurate and reliable forest fire detection and surveillance systems are essential to minimize the negative impacts of forest fires. Consequently, many forest fire surveillance systems employ a wide range of technologies, such as satellite imaging, ground sensors, and drones, to identify, analyse, and respond to forest fire incidents in real time. The utilization of these sensors has led to significant advancements in forest fire detection technologies. Furthermore, the integration of deep learning (DL) models has enhanced the accuracy of these technologies. Harkat et al. and Yang et al. [17,18] stated that DL on its own may not perform well because of limited data and issues with generalization, interpretability, and features, but that integrating DL with other methods can increase performance. In the context of remote sensing-based applications, deep semantic segmentation models are typically developed for road network extraction, building detection, and land use classification [19]. In recent times, remote sensing imagery, whether spaceborne or airborne, has become a crucial tool for studying and detecting forest fires, and has proven to be a cost- and time-effective means of monitoring forest fires over large areas of interest [20]. Data from Landsat, the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER), Sentinel, the Moderate Resolution Imaging Spectroradiometer (MODIS), the Geostationary Operational Environmental Satellites (GOES-16), and the Visible Infrared Imaging Radiometer Suite (VIIRS) have gained widespread popularity as input modalities for detecting and monitoring forest fires. Thermal remote sensing has also made a noteworthy contribution to the identification of fire-related information such as fire risk, active fires, fire frequency, burn severity, and affected areas [[21], [22], [23], [24]]. The application of remote sensing (RS) has provided extensive prospects for both qualitative and quantitative analysis of forest fires across various spatial scales [23,24]. The main limitations associated with the use of satellites have been discussed in several studies [[25], [26], [27], [28]], which highlight that the resolution of satellite imagery is often inadequate: data are averaged over a given area, which makes small fires within a single pixel harder to detect. In addition, the coverage area of satellite imagery is large, so a lot of pre-processing time is required before the same region can be resurveyed. Furthermore, the lack of real-time capability and the inadequate precision of the imagery are deemed to be the main reasons for not using satellite data for continuous monitoring of forest fires.

According to Allison et al. [29], the input data for an intelligent forest fire application should match the spatial and temporal scale required for a precise decision-making system. To prevent a large number of false alarms in a video-based system, the deployed sensors must be highly resistant to various forms of interference, such as steam, fog, dust pollution, and condensing water [30]. High-altitude aerial and space sensors, including satellites, can offer a comprehensive view of large regional areas, integrated with georeferencing to locate fire positions [29]. For instance, Gao et al. [31] acquired data from the Canadian Forest Fire Weather Index (CFFWI) system to analyse the impact of forest fire under different weather conditions caused by changes in temperature, humidity, wind speed, and precipitation. Another type of sensor modality is the wireless sensor network (WSN), which utilizes wireless sensor nodes to achieve broad coverage of the designated regions [32]. According to Dampage et al. [32], a few peripherals, including microcontrollers, transceiver modules, and power supplies, need to be integrated to improve the usability of the sensors.

Another aerial modality is the unmanned aerial vehicle (UAV), which is also referred to as an Unmanned Aircraft System (UAS) and colloquially known as a drone. It is a flying unit that operates without an on-board human pilot, since it can be remotely controlled from a ground station [33,34]. The UAV has emerged as a highly effective instrument for mitigating and managing natural disasters, including forest fires, and was successfully incorporated as a crucial instrument for detecting fires in Maryam et al. [35]. Its well-documented capability to reach remote and hazardous locations enables effective environmental surveillance through the capture of high-resolution imagery [36]. Therefore, the UAV is an ideal sensor modality for forest fire mitigation and management, particularly in regions with limited road access, where safety precautions are imperative. However, several critical constraints on the performance, deployment, and design of the UAV, including autonomy, battery endurance, mobility, and limited flight time, need to be addressed for effective deployment [37]. Additionally, harsh weather conditions and environments can further degrade UAV performance.

It is anticipated that the incidence of global forest fires will keep increasing due to climate change [38,39]. As a result, a comprehensive review of the current state-of-the-art DL models for detection, mitigation, and management of forest fires is crucial, since the conventional approaches are more time-consuming, expensive, and labour-intensive. Currently, there is an increasing trend in using DL for forest fire detection. Mohnish et al. [40] combined satellite imagery, ground sensor datasets, and direct visual feeds from UAVs as the input to a DL model that identifies forest fire incidents. These digital image modalities require extensive analysis and processing steps [41], especially satellite imaging [42], which often demands heavy computational time and resources. In a DL model, the features of interest are learnt hierarchically to extract a set of complex patterns that represents the problem [43]. The training set is often enriched with augmented data to broaden the range of attributes and features seen by the model [44]. To avoid presenting the model with repeated inputs, the training data is modified through a series of image manipulations that include random erasing, rotating, flipping, cropping, and translation [45,46]. This augmentation process can enhance the efficiency of training a DL model [44] and reduce the likelihood of overfitting [39].
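As an illustration of the image manipulations listed above, the following is a minimal NumPy sketch, not the pipeline of any reviewed study. The function name `augment`, the shift and crop fractions, the restriction to 90-degree rotations, and the assumption of square input images are all illustrative choices.

```python
import numpy as np

def augment(image, rng):
    """Return one randomly augmented copy of a square H x W x C image."""
    out = image.copy()

    # Flipping: mirror left-right with probability 0.5.
    if rng.random() < 0.5:
        out = out[:, ::-1]

    # Rotating: a random multiple of 90 degrees keeps a square image's shape.
    out = np.rot90(out, k=int(rng.integers(0, 4)))

    h, w = out.shape[:2]

    # Translation: shift by up to 10% of each side, zero-filling exposed pixels.
    dy = int(rng.integers(-(h // 10), h // 10 + 1))
    dx = int(rng.integers(-(w // 10), w // 10 + 1))
    shifted = np.zeros_like(out)
    shifted[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        out[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    out = shifted

    # Cropping: take a random 7/8-size window, then resize back (nearest neighbour).
    ch, cw = h * 7 // 8, w * 7 // 8
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    crop = out[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    out = crop[rows][:, cols]

    # Random erasing: blank out one random rectangle (up to a quarter of each side).
    eh = int(rng.integers(1, h // 4 + 1))
    ew = int(rng.integers(1, w // 4 + 1))
    ey = int(rng.integers(0, h - eh + 1))
    ex = int(rng.integers(0, w - ew + 1))
    out[ey:ey + eh, ex:ex + ew] = 0
    return out

# Usage on a synthetic image (random pixels stand in for a real fire photo).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
augmented = [augment(image, rng) for _ in range(4)]
```

Each call produces a different variant of the same source image, which is how a small fire dataset can be stretched into a more varied training set.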

Recent advancements in the machine learning field have made DL the dominant method, outperforming conventional techniques in tasks such as object recognition, classification, and natural language processing [47]. For the semantic segmentation task as well, DL architectures offer better feature extraction, which allows them to retrieve contextual information at various scales and subsequently label the class of each pixel in an image [[48], [49], [50]]. A few examples of advanced semantic segmentation models are PSPNet, U-Net, DeepLab, SegNet, and FCN [51]. These models can automatically determine optimal segmentation thresholds because of their ability to learn high-level features of forest fires. This enables the models to effectively separate fires from the background and circumvents the complexity and subjectivity of selecting thresholds manually [52].

On the other hand, a simple forest fire detection system that makes a decision for an entire image can be built through image classification, which aims to recognize the semantic classes of a particular image [49] and assign the appropriate labels [53,54]. A few popular classification models are Inception Net, AlexNet, VGG, and DenseNet, which are frequently used for image classification problems in various applications. Apart from that, bounding boxes around the forest fire areas can be generated through object localization models [53,54]. When these two processes are combined, they form the basis of object detection, a powerful computer vision tool for determining both the class and the area of the object of interest [54,55]. In general, object detection is the process of predicting an object's location by identifying the class to which it belongs and reporting the bounding box that surrounds the object [56]. Object detection frameworks can be classified into two categories: one-stage and two-stage. Models such as R-CNN, FPN, and Faster R-CNN are examples of two-stage frameworks, while models such as YOLO, CenterNet, SSD, and EfficientDet are examples of one-stage frameworks. A large variety of applications, such as content-based image retrieval, autonomous driving, security, augmented reality, and intelligent video surveillance, are commonly equipped with object detection capability to produce effective computer vision applications [57].

Because the number of forest fires is expected to keep increasing due to climate change, forest fires need to be controlled: forests protect biodiversity by providing habitats for plants and animals [58]. Forest fires, or wildfires, pose a substantial danger, since they bring major and damaging impacts on nature, property, and humans [59]. To effectively manage and prevent forest fire incidents, it is essential to develop deep intelligent models with good precision and efficiency. This review highlights the methods and architectures of the DL models that have been applied, including the types of datasets used and their accompanying performance accuracy. It also discusses the impact of data augmentation methods on training the DL models, and focuses only on recent works (2018–2023) on DL methods and architectures for forest fire detection systems. These findings are meant to guide researchers and practitioners in addressing the limitations and issues of current forest fire detection systems. The methodology section presents the research questions, search engine databases, search terms, selection and rejection strategies, and other processes related to the literature on forest fires. The discussion section analyses the current DL methods in forest fire detection systems in depth. The conclusion section summarizes the review of forest fire detection using DL and provides several recommendations for future work to enhance forest fire detection capability.

2. Methodology

2.1. Review protocol

In this paper, the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) principles were used to conduct the survey, whereby a set of pre-planned questions was used to identify the related studies to be included [60]. First, a set of research questions was formulated to determine which manuscripts were suitable for the forest fire case. Then, the related manuscripts were searched for in prominent databases based on these research questions. The collected manuscripts were analysed, and the relevant data was extracted as guided by the research questions. The final step was to document the extracted data before analysing it as required by the research questions. The following items describe the search engine sources, the search terms, and the procedures for selection and rejection of the papers used in this work:

a) Search engine source

The search engine sources included in this review are the IEEE Xplore, Web of Science (WoS), and Scopus databases, all of which are highly respected sources of good-quality, peer-reviewed publications.

b) Search terms

The systematic search employed a combination of main keywords such as “deep learning,” “forest fire,” “wildfire,” and “detection” to ensure the inclusion of all relevant studies. The search was limited to publications from 2018 to 2023.

c) Selection

The selection criteria limit the included studies to those that utilise DL methods for the identification of forest fires. Only studies published in English that specifically address segmentation, detection, or classification of forest fires using DL models were included. The selected articles were published between 2018 and 2023 and comprise journal articles, conference proceedings, and book chapters related to the studied topics.

d) Rejection

The rejection criteria cover review papers, manuscripts in languages other than English, and studies that were not peer-reviewed or were published only as pre-prints or early works. Such studies were excluded to ensure the quality and reliability of the included studies.

2.2. Research questions

The number of DL projects focused on forest fire detection has increased significantly in recent years. The research questions for this growing body of work were formulated using the Population, Intervention, and Context (PICo) framework [[61], [62], [63]]. In this review, the population was defined as “deep learning,” while the intervention terms were reserved for the “classification”, “detection”, and “segmentation” techniques. The context, on the other hand, was specifically targeted towards forest fire and wildfire. By using the PICo tool, this review was able to narrow the research scope to a specific aspect of forest fire detection, namely papers that rely on DL methods.

The review is based on three key research questions in an effort to simplify the analysis of the selected studies. The first question aims to identify the deep learning architecture used in each study: “What deep architecture has been used in the study?” This step is crucial because different DL architectures vary in their effectiveness at detecting forest fires from various input data sources such as satellite imagery, video feeds, and sensor networks. The second question aims to determine the type of data used in each study, which could include satellite images (e.g., Sentinel-1, Landsat-8), web images, and UAV imaging: “What types of data have been utilised in the study?” The quality and quantity of the data used to train and test a deep model have a significant impact on model performance. Lastly, the third question focuses on the performance of the methods used in each study, measured by metrics such as precision, accuracy, F1-score, recall, and mAP: “How well does the selected method perform?” This analysis helps to determine which methods are most effective and provides insight into how to optimize DL models for forest fire identification.
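For reference, the accuracy, precision, recall, and F1-score named in the third question can all be computed from the four cells of a binary (fire / non-fire) confusion matrix. The sketch below uses the standard definitions. The function name and the counts in the usage example are hypothetical and do not come from any reviewed study. mAP is omitted because it additionally requires ranked detections and box overlaps.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary metrics from confusion-matrix counts.

    tp: fire predicted as fire        fp: non-fire predicted as fire
    fn: fire predicted as non-fire    tn: non-fire predicted as non-fire
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts for a 100-image test set.
metrics = classification_metrics(tp=45, fp=5, fn=3, tn=47)
```

Reporting precision and recall alongside accuracy matters for fire detection, because a missed fire (false negative) and a false alarm (false positive) carry very different costs.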

2.3. Literature collection

To perform the literature search, the following keywords were used: “deep learning”, “forest fire”, “wildfire”, “detection”, “segmentation”, and “classification”, together with their combined variations formed through the Boolean operators ‘AND’ and ‘OR’. The search was conducted on three databases: Scopus, Web of Science (WoS), and IEEE Xplore. A total of 117 manuscripts were obtained based on the searched keywords. These manuscripts were then processed through four phases, namely identification, screening, eligibility, and inclusion, as shown in Fig. 1. In the first screening phase, 18 manuscripts from the Scopus database and three manuscripts from the WoS database were removed. Then, 21 manuscripts were removed after being cross-checked using the desktop version of Mendeley, followed by the removal of an additional 12 manuscripts for which the full text was not available. After the inclusion and exclusion processes, a final set of 39 manuscripts was selected for the systematic review. Fig. 1 depicts the flow chart of manuscript selection using the PRISMA framework.
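One plausible way to combine these keywords with Boolean operators is sketched below. The exact query strings submitted to each database are not reported here, so the grouping into method, context, and task terms, and the helper name `build_query`, are assumptions. Field tags and syntax also differ between Scopus, WoS, and IEEE Xplore.

```python
def build_query(method_terms, context_terms, task_terms):
    """Join synonyms with OR inside a group, and join groups with AND."""
    def group(terms):
        return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"
    return " AND ".join(group(g) for g in (method_terms, context_terms, task_terms))

query = build_query(
    ["deep learning"],
    ["forest fire", "wildfire"],
    ["detection", "segmentation", "classification"],
)
print(query)
```

The resulting string requires the method term, at least one context term, and at least one task term to appear together.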

Fig. 1.

PRISMA framework method.

A total of 39 manuscripts were identified by the review process, covering the period from January 2018 until 2023. Only one journal article discussing a DL method for forest fire detection was found for 2018. In 2019, five studies were published, all of which were presented as conference papers. Six papers were released in 2020, of which four were presented as conference papers and two were published in journals. The list of publications chosen for the final review and analysis is presented in Table 2.

Table 2.

A list of articles that has been selected for the final review.

| Authors | Year | Title |
|---|---|---|
| Zhao et al. [11] | 2018 | Saliency detection and deep learning-based wildfire identification in UAV imagery |
| Wang et al. [64] | 2019 | Early Forest Fire Region Segmentation Based on Deep Learning |
| Toan et al. [65] | 2019 | A deep learning approach for early wildfire detection from hyperspectral satellite images |
| Priya et al. [66] | 2019 | Deep Learning Based Forest Fire Classification and Detection in Satellite Images |
| Jiao et al. [67] | 2019 | A Deep Learning Based Forest Fire Detection Approach Using UAV and YOLOv3 |
| Hung et al. [68] | 2019 | Wildfire Detection in Video Images Using Deep Learning and HMM for Early Fire Notification System |
| Ban et al. [69] | 2020 | Near Real-Time Wildfire Progression Monitoring with Sentinel-1 SAR Time Series and Deep Learning |
| Arteaga et al. [2] | 2020 | Deep Learning Applied to Forest Fire Detection |
| de Almeida et al. [43] | 2020 | Bee2Fire: A deep learning powered forest fire detection system |
| Rahul et al. [70] | 2020 | Early detection of forest fire using deep learning |
| Khryashchev and Larionov [42] | 2020 | Wildfire Segmentation on Satellite Images using Deep Learning |
| Benzekri et al. [71] | 2020 | Early forest fire detection system using wireless sensor network and deep learning |
| Li et al. [52] | 2021 | Early Forest Fire Segmentation Based on Deep Learning |
| Ciprián-Sánchez et al. [72] | 2021 | FIRe-GAN: a novel deep learning-based infrared-visible fusion method for wildfire imagery |
| Jiang et al. [73] | 2021 | Deep learning of qinling forest fire anomaly detection based on genetic algorithm optimization |
| Bai et al. [74] | 2021 | Research on Forest Fire Detection Technology Based on Deep Learning |
| Fan and Pei [75] | 2021 | Lightweight Forest Fire Detection Based on Deep Learning |
| Ciprián-Sánchez et al. [59] | 2021 | Assessing the impact of the loss function, architecture and image type for deep learning-based wildfire segmentation |
| Li et al. [76] | 2021 | Early Forest Fire Detection Based on Deep Learning |
| Mohnish et al. [40] | 2022 | Deep Learning based Forest Fire Detection and Alert System |
| Seydi et al. [6] | 2022 | Fire-Net: A Deep Learning Framework for Active Forest Fire Detection |
| Ghali et al. [77] | 2022 | Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation |
| Khan and Khan [78] | 2022 | FFireNet: Deep Learning Based Forest Fire Classification and Detection in Smart Cities |
| Sun [79] | 2022 | Analyzing Multispectral Satellite Imagery of South American Wildfires Using Deep Learning |
| Gayathri et al. [80] | 2022 | Prediction and Detection of Forest Fires based on Deep Learning Approach |
| Mohammed [39] | 2022 | A real-time forest fire and smoke detection system using deep learning |
| Mohammad et al. [81] | 2022 | Hardware Implementation of Forest Fire Detection System using Deep Learning Architectures |
| Kang et al. [82] | 2022 | A deep learning model using geostationary satellite data for forest fire detection with reduced detection latency |
| Ghosh and Kumar [83] | 2022 | A hybrid deep learning model by combining convolutional neural network and recurrent neural network to detect forest fire |
| Wang et al. [84] | 2022 | Forest Fire Detection Method Based on Deep Learning |
| Li et al. [85] | 2022 | A Deep Learning Method based on SRN-YOLO for Forest Fire Detection |
| Tahir et al. [86] | 2022 | Wildfire detection in aerial images using deep learning |
| Wei et al. [87] | 2022 | An Intelligent Wildfire Detection Approach through Cameras Based on Deep Learning |
| Peng and Wang [88] | 2022 | Automatic wildfire monitoring system based on deep learning |
| Tran et al. [89] | 2022 | Forest-Fire Response System Using Deep-Learning-Based Approaches with CCTV Images and Weather Data |
| Mashraqi et al. [90] | 2022 | Drone Imagery Forest Fire Detection and Classification Using Modified Deep Learning Model |
| Almasoud [91] | 2023 | Intelligent Deep Learning Enabled Wild Forest Fire Detection System |
| Alice et al. [92] | 2023 | Automated Forest Fire Detection using Atom Search Optimizer with Deep Transfer Learning Model |
| Xie and Huang [93] | 2023 | Aerial Forest Fire Detection based on Transfer Learning and Improved Faster RCNN |

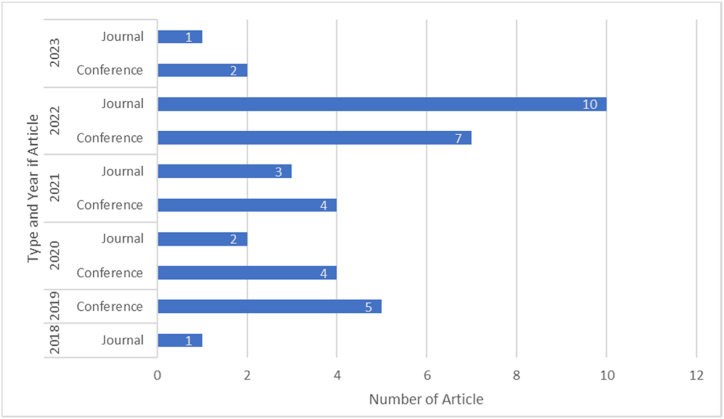

Fig. 2 shows the division of the retrieved studies according to the year and type of publication. The number of publications rose to seven in 2021, of which four were conference papers and the remaining three were journal articles. In 2022, there was a remarkable surge in publications on the reviewed topic, with a total of 17 publications, of which seven were conference papers and the remaining ten were journal articles. As of September 2023, only one journal paper and two conference papers on forest fire detection using DL techniques had been selected. Overall, most of the studies were presented as conference papers, accounting for 22 of the 39 studies. Nevertheless, there was a noticeable increase in the number of studies published in journals in the later years, indicating a growing interest in this field of research. Fig. 3 shows the percentage of journal and conference publications according to the publication year (2018–2023).

Fig. 2.

Journal and conference publications from January 2018 until 2023.

Fig. 3.

The distribution of the selected publications according to (a) the publication type and (b) publication year (2018–2023).

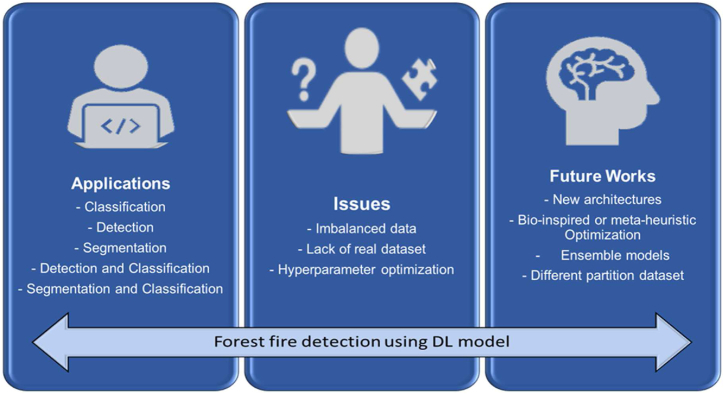
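The yearly counts reported in the text above can be tallied to confirm that they are consistent with the stated totals of 39 studies and 22 conference papers. The short sketch below uses only the per-year figures given in this section; the variable names are illustrative.

```python
# Journal and conference counts per year, as stated in the text.
counts = {
    2018: {"journal": 1, "conference": 0},
    2019: {"journal": 0, "conference": 5},
    2020: {"journal": 2, "conference": 4},
    2021: {"journal": 3, "conference": 4},
    2022: {"journal": 10, "conference": 7},
    2023: {"journal": 1, "conference": 2},
}

per_year = {year: c["journal"] + c["conference"] for year, c in counts.items()}
journal_total = sum(c["journal"] for c in counts.values())
conference_total = sum(c["conference"] for c in counts.values())
total = journal_total + conference_total
conference_share = 100 * conference_total / total

print(per_year)
print(f"journal={journal_total}, conference={conference_total}, total={total}")
print(f"conference share: {conference_share:.1f}%")
```

The tally gives 17 journal articles and 22 conference papers, so conference papers make up a little over half of the selected studies.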

3. Discussion

DL techniques have been widely applied to various computer vision tasks, including image classification, detection, and segmentation. Because forest fire surveillance involves several different tasks, this section is split into five subsections according to the type of task: classification, detection, detection and classification, segmentation, and segmentation and classification. For each manuscript, the DL models used, the type of input data, the use of data augmentation, and the DL model's performance are summarized in detail. Fig. 4 shows the overall types of DL model applications that have been used in forest fire detection studies.

Fig. 4.

DL model applications for forest fire surveillance system.

3.1. Classification

Classification is one of the earliest and most extensively researched topics in intelligent forest monitoring systems [94]. According to Shinozuka and Mansouri [95], image classification is the procedure of categorising and labelling sets of pixels or vectors inside an image in accordance with a set of predetermined criteria. They argued that the classification rule can be developed by using one or a combination of the spectral or textural properties of an image [95]. The main objective of image classification is to ensure that all images are assigned to their respective categories [96]. Among the reviewed papers, the work by Benzekri et al. [71] produced the greatest accuracy in classifying forest fire incidents. They compared the performance of three DL models: long short-term memory (LSTM), recurrent neural networks (RNN), and gated recurrent units (GRU). The experimental results show that GRU achieved the highest accuracy of the three models. In general, all three models attained an accuracy of more than 90%. Eight of the reviewed studies did not apply any data augmentation technique to their datasets; nevertheless, the resulting accuracy of these forest fire classification systems is still good.

3.1.1. InceptionV3

In a study by Priya and Vani [66], InceptionV3 was explored to improve the classification performance on forest fire satellite images. Their work was validated using 534 satellite images, consisting of 239 fire images and 295 non-fire images. For training, 481 satellite images were randomly chosen, while the remaining 53 were dedicated to testing. Notably, the authors classified the satellite data on forest fires using imbalanced data with a relatively small number of training samples, a setting that frequently leads to overfitting problems.
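The split sizes reported above can be reproduced with a simple random permutation, as in the NumPy sketch below. Only the counts (239 fire, 295 non-fire, 481 training, 53 testing) come from the study description. The seed and the use of a plain, non-stratified shuffle are assumptions, since the exact sampling procedure is not described.

```python
import numpy as np

# Labels for the 534 images: 1 = fire (239 images), 0 = non-fire (295 images).
labels = np.array([1] * 239 + [0] * 295)

rng = np.random.default_rng(42)          # seed chosen only for reproducibility
order = rng.permutation(len(labels))     # random ordering of image indices
train_idx, test_idx = order[:481], order[481:]

fire_share_all = labels.mean()
fire_share_train = labels[train_idx].mean()
fire_share_test = labels[test_idx].mean()
print(f"fire share: all={fire_share_all:.3f}, "
      f"train={fire_share_train:.3f}, test={fire_share_test:.3f}")
```

With only 53 test images, the fire share in the test set can drift noticeably from the overall share of about 45%, which is one reason a stratified split is often preferred for small, imbalanced datasets.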

3.1.2. ResNet + VGG

Rather than exploring a single ResNet model, Arteaga et al. [2] investigated multiple pre-trained CNN models for forest fire classification, which were deployed on a Raspberry Pi mobile platform. This study used a medium-sized database of 1800 images that were downloaded from secondary sources on the internet. The authors applied augmentation to the training dataset: each image was cropped to 224 pixels, flipped horizontally with a probability of 50%, and finally normalised using the mean and standard deviation values of the ImageNet database. The authors explored several variants of pre-trained VGG and ResNet models. The results showed that ResNet-18 achieved a good accuracy of 0.9950, with a processing time of less than 2.12 s. In addition, their study found that the ResNet-34, ResNet-101, ResNet-50, and ResNet-18 models are more suitable for mobile platform implementation than the VGG variants for detecting forest fires. However, the models still need to be evaluated on larger datasets to establish whether they hold up in real-world forest fire situations.
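The three preprocessing steps described above (cropping to 224 pixels, a horizontal flip with 50% probability, and ImageNet normalisation) can be sketched in NumPy as follows. This is an illustrative reconstruction and not the authors' code. The choice of a random rather than a centre crop and the function name `preprocess` are assumptions. The mean and standard deviation are the per-channel ImageNet statistics commonly used with pre-trained models.

```python
import numpy as np

# Widely used per-channel ImageNet statistics (RGB, pixel values scaled to [0, 1]).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def preprocess(image, rng, size=224):
    """Crop, randomly flip, and normalise an H x W x 3 uint8 image (H, W >= size)."""
    h, w = image.shape[:2]

    # Crop a size x size window at a random position (assumed; could be a centre crop).
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    patch = image[top:top + size, left:left + size]

    # Flip left-right with probability 0.5.
    if rng.random() < 0.5:
        patch = patch[:, ::-1]

    # Scale to [0, 1], then standardise each channel with the ImageNet statistics.
    scaled = patch.astype(np.float32) / 255.0
    return (scaled - IMAGENET_MEAN) / IMAGENET_STD

# Usage on a synthetic 256 x 320 image standing in for a downloaded fire photo.
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(256, 320, 3), dtype=np.uint8)
tensor = preprocess(raw, rng)
```

Normalising with the ImageNet statistics keeps the inputs in the range that the pre-trained VGG and ResNet weights were originally fitted to.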

3.1.3. Ban et al. architecture

Ban et al. [69] used a CNN model to automatically detect burned zones by combining Synthetic Aperture Radar (SAR) imagery acquired during wildfire incidents with SAR time-series data from before the incidents, in order to extract information on temporal backscatter changes. They also used Sentinel-2 imagery as an inventory map, consisting of 10,000 points of burned and unburnt areas, to verify and validate their findings. Furthermore, they supplemented the datasets with visual comparisons derived from the SAR-based progression maps and the burned area maps obtained from Sentinel-2. Using training images generated automatically from a coarse binary transition map, the CNN model is trained to improve burned area recognition by producing burned confidence maps. These confidence maps are then binarized using the Otsu thresholding technique, and the resultant maps are gradually merged to increase output reliability and certainty. However, the limitations of using Sentinel-1 SAR data, such as spatial resolution and signal degradation in specific environmental conditions, are not addressed.
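To illustrate the binarization step, the following sketch applies Otsu's method to a flat list of confidence values in [0, 1]. The histogram resolution of 256 bins and the helper names are assumptions for illustration; the study's own implementation details are not given here.

```python
def otsu_threshold(values, bins=256):
    """Return the threshold in (0, 1] that maximises between-class variance."""
    hist = [0] * bins
    for v in values:
        hist[min(int(v * bins), bins - 1)] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_bin, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(bins):
        w0 += hist[t]            # pixels at or below bin t
        sum0 += t * hist[t]
        w1 = total - w0          # pixels above bin t
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_bin = var_between, t
    return (best_bin + 1) / bins

def binarize(values, threshold):
    """Mark a pixel as burned (1) when its confidence reaches the threshold."""
    return [1 if v >= threshold else 0 for v in values]
```

For a clearly bimodal confidence map, the threshold falls between the two modes, so low-confidence pixels map to 0 and high-confidence pixels map to 1.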

3.1.4. RNN, LSTM, and GRU

Benzekri et al. [71] presented a novel DL model that uses two hidden layers of 50 neurons and an output layer with either RNN, LSTM, or GRU to predict the final label. The network used the Adam optimizer to minimise the loss function through backpropagation. The authors examined the three models using around 2000 sample data points. The LSTM model made four incorrect predictions, the simple RNN model made two incorrect predictions, and the GRU model made one incorrect prediction. The LSTM model achieved a loss of 0.0298 and an accuracy of 99.82% on the test data, while the simple RNN model achieved an accuracy of 99.77% and a loss of 0.0062. Overall, the GRU model is the most consistent and suitable for early forest fire detection. The authors claimed that the model was more precise than traditional surveillance approaches. However, these high accuracy results were obtained on a dataset that is small compared to real-world conditions, so the models still need to be tested on a larger dataset.

3.1.5. Bee2Fire

de Almeida et al. [43] developed a method named Bee2Fire to detect forest fires. The forest fire localization algorithm of Bee2Fire is based on ResNet-18, pre-trained with ImageNet data. Using a transfer learning approach, the authors fine-tuned the system for three output classes: cloudy, smoke, and clear samples. In addition to scaling each image to 224 × 224 pixels, data augmentation consisting of minor random transformations such as jitter, zoom, and rotation was applied to the main training dataset. The final training dataset comprises 1903 images, with 475 images reserved for validation purposes. The method attained an accuracy of 82.35% and a specificity of 99.99%. Using the raw sensor input, the sensitivity of Bee2Fire is 73.68%, which improves significantly to 93.33% when adapted sensor readings are used. However, the model showed low sensitivity and specificity in detecting smoke columns and fire during the testing period.

3.1.6. ResNet-50

A comparative study of three DL architectures was carried out by Rahul et al. [70]. The authors applied the ResNet-50, VGG-16, and DenseNet-121 models to forest fire detection. The input images are scaled to 224 pixels wide and then augmented using shearing, flipping, and similar operations. The general CNN layer configuration for image classification comprises a convolutional layer, a batch normalisation layer, a ReLU activation layer integrated with dropout, a pooling layer, and a SoftMax layer. The stochastic gradient descent (SGD) optimizer was found to be the best backpropagation update approach, reaching the best extremum. In conclusion, ResNet-50 performed better than VGG-16 and DenseNet-121. The findings also indicate that the SGD optimizer is more suitable for forest fire detection than the Adam optimizer. However, the specific dataset used for training and testing the model is not mentioned in the paper, making it challenging to assess the generalizability of the results.

3.1.7. Jiang et al. architecture

Jiang et al. [73] used a genetic algorithm (GA) to tune the hyperparameters of their CNN model for detecting fire incidents with excellent accuracy. The authors compared the back propagation (BP) neural network, support vector machine (SVM), GA-CNN, and CNN approaches. The development dataset, which combines the training and testing sets, comprises 1900 images. The images, most of which depict smoke and fire incidents, include both positive and negative samples. The GA-CNN method performs well in terms of true-positive rate, accuracy, and false-alarm rate across a wide range of evaluation conditions. The accuracy of the optimized GA-CNN method is 95%, which is better than that of the unoptimized CNN algorithm (85%), the BP neural network algorithm (73%), and the SVM algorithm (90%). The study also concluded that the GA-CNN method is suitable for use in forest fire detection. However, the imbalanced data could lead to overfitting; therefore, the authors should also report precision and recall for better interpretation of the results.

3.1.8. Gayathri et al. architecture

Instead of combining a CNN with a standard LSTM, Gayathri et al. [80] utilised an LSTM and a CNN in a hybrid bidirectional setting. The approach incorporated Google's Firebase, which can be linked to mobile or IoT devices to deliver alert notifications. The proposed model achieved 96% accuracy on the training dataset and 92% accuracy on the test dataset. The findings indicate that integrating two DL models for forest fire classification can yield more favourable outcomes. However, the results suggest that this study has an overfitting problem, because it obtained a high accuracy value but low precision and recall.

3.1.9. Ghosh and Kumar architecture

Rather than using a single model, Ghosh and Kumar [83] combined RNN and CNN networks to extract the features, which are then passed to two fully-connected layers for final classification. The Mivia dataset contains a total of 22,500 images, of which 12,000 contain fire or smoke sequences while the remaining 10,000 contain neither fire nor smoke. The Kaggle dataset contains a total of 1000 images, of which 755 belong to the fire class and the other 245 belong to the normal class. Ghosh and Kumar [83] achieved accuracy values of 99.62% and 99.10% for the Mivia lab and Kaggle fire datasets, respectively. The integration of CNN and RNN networks points to the possibility of improved forest fire detection performance through a more comprehensive feature extraction model. However, this work does not use data augmentation to balance the dataset. The authors applied preprocessing (augmentation) before training, which helped to avoid overfitting and obtain good classification accuracy.

3.1.10. FFireNet

In FFireNet, Khan and Khan [78] froze the original MobileNetV2 weights and added fully-connected layers on top of the feature extraction layers. They used an evenly partitioned dataset, in which 950 images were assigned to the fire class and the remaining 950 images to the no-fire class. Moreover, the authors applied augmentation techniques to the training dataset, in order to better represent the variety of images in the dataset, and reduced the size of the input images to 224 × 224 pixels. FFireNet achieved an accuracy of 98.42% with an error rate of 1.58%, a recall of 99.47%, and a precision of 97.42%. It outperformed several benchmarked CNN models such as Xception, NASNetMobile, ResNet152-V2, and Inception-V3. FFireNet has thus shown that adding fully connected layers to the MobileNetV2 model yields more favourable outcomes than the models without them. However, the limited training dataset could lead the model to classify dense fog as fire smoke, and the model will have low accuracy when the dataset contains low-quality images.
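The relationship between these four figures can be made concrete with a small worked example. The confusion-matrix counts below are hypothetical: they were chosen only because they reproduce the reported percentages on a balanced set of 380 images, and they are not taken from the FFireNet paper.

```python
def classification_metrics(tp, fp, fn, tn):
    """Derive the usual summary metrics from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "error_rate": 1 - accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical counts (190 fire and 190 no-fire test images), for illustration only.
m = classification_metrics(tp=189, fp=5, fn=1, tn=185)
for name, value in m.items():
    print(f"{name}: {value * 100:.2f}%")
```

Because accuracy alone hides how errors are distributed between the two classes, reporting precision and recall alongside it, as this study does, makes the result easier to interpret.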

3.1.11. Modified MobileNet-v2

Mashraqi et al. [90] explored drone images to detect and classify forest fires using a modified DL model called DIFFDC-MDL. In order to produce the optimal set of feature vectors, DIFFDC-MDL enhances the basic MobileNet-v2 architecture by integrating a hybrid LSTM-RNN layer. The shuffled frog leap algorithm (SFLA), which imitates the foraging behaviour of frog populations, is used to optimize the hyperparameters so that the model can achieve even higher classification performance. The authors evaluated the approach on the Fire Luminosity Airborne-based Machine Learning Evaluation (FLAME) dataset, which comprises 6000 samples divided into two balanced groups (3000 fire images and 3000 no-fire images). DIFFDC-MDL achieved an accuracy of 99.38%, which suggests that an optimized set of hyperparameters can enhance the efficacy of the DL model.

3.1.12. Inception-ResNet-V2

Mohammed [39] focuses on a transfer learning technique, in which a network pre-trained on the ImageNet dataset is used to extract features of smoke and forest fires. The compiled dataset, which contains 1102 images for each of the fire and smoke classes, was used as input to a pre-trained Inception-ResNet-V2 network. Data augmentation was also performed using scaling and flipping operations. The Inception-ResNet-V2 network was utilised to extract the optimal features from the dataset, whereby the ResNet layers were tasked with learning residual parameters to prevent the diminishing weights problem. The author utilised the Adam optimizer with the following configuration: a dropout rate, a batch size fixed at 55 images, a momentum update rate, an initial learning rate (LR) of 0.001, 10 backpropagation epochs, a categorical cross-entropy loss function, and an early stopping callback with a threshold of 2. The convolutional layer dimension is decreased using average pooling layers, while the likelihood of overfitting is reduced via dropout layers. The proposed model achieved 99.09% accuracy, 100% precision, 98.08% recall, a 98.09% F1-score, and 98.30% specificity for the forest fire classification task. The author also implemented a transfer learning method, which was reported to work well for the system, together with data augmentation to enlarge the dataset and dropout layers to avoid overfitting. However, the author does not show separate results for training and testing, which casts doubt on the results of this work.
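The interaction between the 10-epoch budget and the early stopping callback can be sketched as follows. The sketch assumes that the threshold of 2 is a patience value, meaning that training halts after two consecutive epochs without improvement in validation loss; the function name and the loss values in the usage example are hypothetical.

```python
def train_with_early_stopping(val_losses, max_epochs=10, patience=2):
    """Return (last epoch run, best validation loss) under patience-based stopping.

    val_losses stands in for the per-epoch validation loss that a real
    training loop would compute.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, wait = loss, 0      # improvement: reset the counter
        else:
            wait += 1                 # no improvement this epoch
            if wait >= patience:
                return epoch, best    # stop early
    return min(len(val_losses), max_epochs), best

# Illustrative loss curve that stops improving after the third epoch.
stopped_at, best_loss = train_with_early_stopping([0.9, 0.6, 0.5, 0.55, 0.52, 0.4])
```

With this illustrative curve, training stops at epoch 5 even though a later epoch would have improved, which shows the trade-off that a small patience value makes between training time and the risk of stopping too soon.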

3.1.13. AlexNet

Mohammad et al. [81] analysed CNN-9, ResNet-50, MobileNet V2, GoogleNet, AlexNet, SqueezeNet, and Inception V3 to establish the ideal model for standalone deployment on Raspberry Pi hardware. Two sources were utilised, namely the Kaggle wildfire detection and Mendeley datasets, which contain 275 fire images and 275 no-fire images. The authors further increased dataset variation by applying augmentation methods. Their findings indicate that the AlexNet architecture produced the best accuracy (99.42%), followed by GoogleNet, MobileNet, ResNet-50, CNN-9, and Inception V3. However, no information relay system, such as email or messaging services, has been deployed from the Raspberry Pi to report fire incidents. In addition, although the models worked well on the small dataset used, a real-world forest fire system needs a larger dataset to train for the different conditions of forest fire.

3.1.14. Kang et al. architecture

Owing to the high temporal resolution of geostationary satellite sensors, Kang et al. [82] found that forest fires can be spotted immediately if the data is used smartly. They utilised 91 forest fire incidents, seven of which were large fires that caused extensive damage. Using only basic data augmentation through rotation and flip operations, the model was trained until convergence. The input data comprised 9 × 9 window images with N input characteristics, and the output was a binary class representing whether or not the centre pixel of the window showed a forest fire incident. The simulation results produced precision, F1-score, accuracy, and recall values of 0.91, 0.74, 0.98, and 0.63, respectively. The effectiveness of their CNN model in detecting forest fires improved when data augmentation and spatial patterns were utilised during model fitting. However, the model predicted larger areas than the actual areas of forest fire. Table 3 shows a summary of classification applications used in forest fire detection studies.

Table 3.

The selected reviewed papers that applied classification algorithm for forest fire detection.

| Authors | Year | Method | Architecture | Reported performance | Application | Augmentation | Type of Data |
|---|---|---|---|---|---|---|---|
| Priya et al. [66] | 2019 | CNN | Inception V3 | Accuracy - 98% | Classification | No | Satellite Image |
| Arteaga et al. [2] | 2020 | CNN | ResNet + VGG | Accuracy - 99.5% | Classification | Yes | Image |
| Benzekri et al. [71] | 2020 | RNN, LSTM and GRU | RNN, LSTM, GRU | Accuracy - 99.89% | Classification | No | Image |
| de Almeida et al. [43] | 2020 | CNN | ResNet18 | Specificity - 99% | Classification | Yes | Image |
| Rahul et al. [70] | 2020 | CNN | ResNet-50, VGG-16, DenseNet-121 | Accuracy - 92.27% | Classification | Yes | Image |
| Ban et al. [69] | 2020 | CNN | CNN | Accuracy - 83.53% | Classification | No | Satellite Image |
| Jiang et al. [73] | 2021 | CNN | BP NN, GA, SVM, GA-BP | Accuracy - 95% | Classification | No | Image |
| Ghosh and Kumar [83] | 2022 | CNN | RNN | Accuracy - 99.62% | Classification | Yes | Image |
| Kang et al. [82] | 2022 | CNN | CNN & RF | Accuracy - 98% | Classification | Yes | Satellite Image |
| Khan and Khan [78] | 2022 | CNN | FFireNet, MobileNetV2 | Accuracy - 98.42% | Classification | Yes | Image |
| Mashraqi et al. [90] | 2022 | DIFFDC-MDL | Hybrid LSTM-RNN, MobileNet V2 | Accuracy - 99.38% | Classification | No | Image |
| Mohammad et al. [81] | 2022 | CNN | ResNet-50, GoogleNet, CNN-9 Layers, MobileNet, InceptionV3, AlexNet | Accuracy - 99.42% | Classification | Yes | Image |
| Mohammed [39] | 2022 | CNN | Inception-ResNet | Accuracy - 99.09% | Classification | Yes | Image |
| Gayathri et al. [80] | 2022 | CNN | CNN | Accuracy - 96% | Classification | No | Image |
| Alice et al. [92] | 2023 | Deep Transfer Learning | Quasi Recurrent Neural Network (QRNN) and ResNet50, with parameters optimized using Atom Search Optimizer | Accuracy - 97.33% | Classification | No | Image |

3.1.15. AFFD-ASODTL

The AFFD-ASODTL model automates forest fire detection using the Atom Search Optimizer with Deep Transfer Learning, with the aim of improving response times and reducing wildfire damage [92]. The authors used the DeepFire dataset to detect forest fires. The AFFD-ASODTL approach was tested on a dataset of 500 samples, consisting of 250 fire and 250 non-fire samples. The paper highlights the superior performance of the AFFD-ASODTL method compared to other models. However, additional information about the characteristics or sources of the dataset would greatly assist in evaluating its representativeness and the generalization of the results.

3.2. Detection

For the object detection task, the goal is to localize and label a particular object within an image or video. The process involves not only identifying the object category, but also predicting the location of each object through bounding box representations [49]. Zaidi et al. [97] described object detection as a logical progression from object classification, which originally focused solely on identifying objects within an image. Object detection creates an individual computational model for each object, which becomes an essential input for computer vision-based applications [98]. Previously, researchers used color-based and machine learning methods for forest fire detection. Color-based forest fire detection is a technique that utilizes the color properties of forest fire and smoke to identify pixels [99]. Meanwhile, object detection in machine learning detects and locates objects in images or videos (Rahul et al., 2023). This method is also known as the traditional method (Patkar et al., 2024). Nine of the selected studies applied object detection methods to detect forest fires. Four of these nine studies implemented data augmentation techniques to further enrich the training dataset. Apart from that, hyperparameter optimization was implemented in the work of Almasoud [91] to further improve accuracy. In general, one of the selected studies used a UAV-based image dataset, two used satellite image datasets, and the remaining studies utilised ground fire image datasets.
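Since detectors output bounding boxes, their localization quality is commonly scored by the overlap between a predicted box and the ground-truth box. The sketch below computes intersection over union (IoU), the overlap measure that typically underlies mAP; the `(x_min, y_min, x_max, y_max)` box format and the function name are assumptions for illustration and are not tied to any specific study reviewed here.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # zero when boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

A prediction is usually counted as a correct detection only when its IoU with a ground-truth box exceeds a chosen cut-off, and that cut-off varies between benchmarks.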

3.2.1. YOLOv3-tiny

Jiao et al. [67] used UAV-captured aerial imagery as the training dataset to fit their YOLOv3-tiny model. The backbones of the network are ResNet and Darknet-19, which are used to extract the optimal set of features. A multi-scale approach based on a feature pyramid network (FPN) is used to locate the best bounding box. The model was trained for 60,000 epochs with a batch size of 64 images. The results indicate a detection rate of 83% on a test set of 60 images. However, for the proposed model to be useful for small-scale detection, it needs to be further enhanced, since it is not capable of detecting early-stage fires before they become wildfires. The authors also found that data augmentation, when applied to a larger image dataset, can enhance the accuracy of a forest fire detection system. However, they do not mention the limitations of using small-scale UAVs for forest fire detection.

3.2.2. DNCNN + hidden Markov model

In order to reduce the number of false alarms, Hung et al. [68] developed a method that integrates a DL model with the Hidden Markov Model (HMM). The authors utilised a set of standard data augmentation techniques, including image rotation and flipping along the horizontal and vertical axes. A CNN model was used to determine the status of each video frame, with the deep normalisation CNN (DNCNN) architecture serving as the object detection algorithm. For buffer checking, a Faster R-CNN model was employed during the training phase. For the training of the HMM, the output of the DNCNN is used to identify the video frame class. The authors utilised 4555 test images and 5295 training images for the CNN analysis, while the video dataset consists of 613 testing frames and 827 training frames. The results show that the DNCNN outperformed AlexNet, ZF-Net, and GoogleNet in terms of prediction performance. The authors claimed that the suggested method reduces the number of false alarms from 288 to 33 incidents, an 88.54% reduction rate. However, the paper lacks a comparison between the proposed system and current fire detection methods or algorithms, which hinders the evaluation of its performance relative to other approaches.
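The idea of using an HMM to suppress isolated false alarms can be illustrated with a two-state Viterbi decoder over per-frame CNN labels. This is only a sketch of the general technique: the transition probability `p_stay` and the emission probability `p_correct` are hypothetical values, and the actual HMM configuration used by the authors is not reproduced here.

```python
import math

def viterbi_smooth(frame_labels, p_stay=0.9, p_correct=0.8):
    """Most likely sequence of hidden states (0 = no fire, 1 = fire)
    given noisy per-frame labels from a classifier."""
    states = (0, 1)

    def trans(a, b):
        return math.log(p_stay if a == b else 1.0 - p_stay)

    def emit(state, obs):
        return math.log(p_correct if state == obs else 1.0 - p_correct)

    # Uniform prior over the initial state.
    score = {s: math.log(0.5) + emit(s, frame_labels[0]) for s in states}
    back = []
    for obs in frame_labels[1:]:
        new_score, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda q: score[q] + trans(q, s))
            new_score[s] = score[prev] + trans(prev, s) + emit(s, obs)
            pointers[s] = prev
        score = new_score
        back.append(pointers)

    # Trace the best path backwards from the most likely final state.
    path = [max(states, key=lambda s: score[s])]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]
```

With these illustrative parameters, a single fire label surrounded by no-fire frames is smoothed away, whereas a sustained run of fire labels is preserved. The reported reduction rate follows directly from the counts: (288 − 33) / 288 ≈ 88.54%.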

3.2.3. H-EfficientDet

Li et al. [76] developed an object detection model called h-EfficientDet, which was adapted from the well-known DL algorithm EfficientDet. The revised model replaces the swish nonlinear activation function with its hard swish version and combines it with an effective feature fusion system known as BiFPN. The resultant detection accuracy reaches 98.35%. The suggested fire detection method was evaluated using a dataset of 4282 fire images and trained using the Adam optimizer with an adaptive learning rate strategy. Three performance measures, namely frame rate (FPS), mean average precision (mAP), and miss rate (MR), were utilised to validate the efficiency of the forest fire detection. The proposed system is very efficient at detecting tiny forest fire incidents, with a real-time detection accuracy of 97.73%. However, the authors do not compare the performance of h-EfficientDet with other algorithms, making it difficult to assess its superiority.
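The difference between the two activation functions can be seen from their standard definitions, sketched below for scalar inputs. Swish multiplies the input by a sigmoid, while hard swish replaces the sigmoid with a piecewise-linear approximation that avoids the exponential; the function names are illustrative and the code is not taken from the h-EfficientDet implementation.

```python
import math

def swish(x):
    """swish(x) = x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

def hard_swish(x):
    """hard_swish(x) = x * ReLU6(x + 3) / 6, a piecewise-linear approximation of swish."""
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0

# Compare the two functions on a few sample inputs.
for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  swish={swish(x):+.4f}  hard_swish={hard_swish(x):+.4f}")
```

Hard swish is exactly zero for inputs at or below -3 and equals the identity for inputs at or above 3, which is what makes it cheaper to evaluate on resource-constrained hardware.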

3.2.4. SRN-YOLO

Li et al. [85] proposed SRN-YOLO, an upgraded version of YOLO-V3 combined with a sparse residual network (SRN), in order to identify forest fires precisely using a more efficient network architecture. The dataset contains a total of 880 images, of which 704 are used for training and 176 for testing. The batch size is set to 64, the momentum to 0.9, the subdivision size to 8, and the decay to 0.005. In order to increase the convergence rate of the model during the early stages of training, the LR is initially fixed at 0.001; once the number of iterations reaches 2500, 5000, and 7500, the LR value is decreased by a factor of 10% compared to the prior value. The results indicate that the proposed approach produces a good balance of performance with a minimal missed detection rate and is more accurate than the other YOLO architectures, which demonstrates its usefulness in identifying forest fire incidents. However, the authors only used eight forest fire videos, which is quite a small number, and did not mention any non-forest-fire videos. Therefore, more videos covering different situations are needed to test the robustness of the model in real forest fire conditions.
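A step schedule of this kind can be sketched as below. The sketch assumes that the LR is multiplied by 0.1 at each of the three iteration milestones, which is the usual convention in YOLO-style training configurations; the wording of the source is ambiguous on this point, so the `gamma` value and the function name should be read as assumptions.

```python
def step_lr(iteration, base_lr=0.001, milestones=(2500, 5000, 7500), gamma=0.1):
    """Learning rate after applying one decay step per milestone already reached."""
    steps_passed = sum(1 for m in milestones if iteration >= m)
    return base_lr * gamma ** steps_passed

# Print the LR just before and at each milestone.
for it in (0, 2499, 2500, 5000, 7500):
    print(it, step_lr(it))
```

Under this assumption, the LR stays at 0.001 for the first 2499 iterations and ends three orders of magnitude lower after iteration 7500.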

3.2.5. Mohnish et al. architecture

Mohnish et al. [40] implemented another CNN-based object detection algorithm, deployed on a Raspberry Pi platform, to detect forest fires and send warnings. The developed system was trained and validated using a set of 2500 fire and 2500 non-fire images retrieved from an open-source website. The authors also used an image generator to augment the training dataset. Dropout is embedded into the architecture to reduce the likelihood of overfitting. However, the authors report only accuracy; other metrics are needed to reveal possible overfitting and to give more information about the results.

3.2.6. ResNet18-saliency

In order to develop a comprehensive forest fire detection system, Peng and Wang [88] combined several techniques and then deployed them in a real-time C++ environment. The system consists of three main components: motion detection, visual saliency detection (VSD), and classification of fire images using a transfer learning methodology. In order to effectively retrieve the relevant object of interest, the authors applied the VSD algorithm only to the maps that contained moving objects, using ResNet-18 as the backbone. They used a real-world dataset of 11 videos with fire incidents and 16 videos without fire incidents for validation. One frame is sampled from every eight frames of the video data to update the background model of the system. Their system produced 15 false alarms out of 1329 detections across the 16 non-fire videos, giving a false positive rate of 1.12% with an overall accuracy of 99.28%. The authors concluded that the DL-based classification strategy offers the benefits of rapid detection with good identification accuracy. However, the authors do not address the possible constraints of implementing the suggested approach on various platforms.

3.2.7. YOLOv5

Another object detection work that focuses on UAV imaging to map out the fire zone was designed by Tahir et al. [86]. They proposed a YOLOv5-based object detection system to detect fires based on the FireNet and FLAME aerial image datasets. These datasets were augmented using image processing operators such as brightness, exposure, noise, cropping, saturation, cut-out, hue, blur, and mosaic. These operators produced three additional outputs for the training dataset. The LR and batch size were fixed at 0.00001 and 16, respectively, and the model was trained for a maximum of 350 epochs. The results showed an average accuracy of 97.14%, a recall of 91.89%, and an F1-score of 94.44%. The box loss during training is 0.0168, while the object loss during training is 0.00738. Based on the results, the model is efficient in real-time fire detection with good accuracy. However, the authors also incorporated other types of wildfire images, not limited to UAV images, which makes the system require large input data, as illustrated in Fig. 5.

Fig. 5.

Three significant phases of YOLOv5 [86].

3.2.8. YOLO

Wang et al. [84] proposed an object detection model that can detect and identify forest fire incidents rapidly and precisely using minimal computation, low-level equipment, and a small DL model. The authors suggested that their approach has a good level of sensitivity and accuracy when tested on a fire dataset that contains 1442 training, 617 testing, and 617 validation images. The model is initialized with a transfer learning approach and then trained for 80 epochs with a batch size of 8 and an LR of 0.001, implemented in the PyTorch framework. The reported results indicate that the prediction accuracy of the proposed model is 83.9% and its recall rate is 96.9%. This model is useful for the development of lightweight forest fire monitoring products. Nevertheless, test images that contain forest fires are relatively scarce, which leads to a dataset imbalance problem that can be addressed using data augmentation techniques.

3.2.9. ACNN-BLSTM

An intelligent DL-based wild forest fire detection and warning system, IWFFDA-DL, was developed by Almasoud [91]. To identify the presence of a forest fire, an ACNN-BLSTM model, which is an attention-based convolutional neural network with BiLSTM, was used. The hyperparameters of the ACNN-BLSTM were tuned using the bacterial foraging optimization (BFO) method, which directly enhances the detection efficiency. When a fire incident is discovered, the authorities receive signals from a Global System for Mobile (GSM) modem, allowing them to take immediate and appropriate mitigation action. The model achieved an accuracy of 99.56%, a recall of 99.46%, and an F-score of 98.65, exceeding the performance of the other benchmarked methods. This paper is another example of work that utilizes hyperparameter optimization to achieve better performance outcomes, which directly validates the importance of model optimization. However, this work only focuses on three classes, namely normal, potential, and extreme, so it is difficult to determine how well the model performs for forest fire detection in general. The structure of the BLSTM model utilised in this forest fire warning and detection work is illustrated in Fig. 6.

Fig. 6.

BLSTM model structure [91].

3.2.10. Fire-GAN

Ciprián-Sánchez et al. [72] evaluated the effectiveness of the Generative Adversarial Network (GAN) method in enabling the DL model to adapt to various forest fire scenarios. First, the authors employed a VGG-19 network pre-trained on ImageNet to extract multi-layer features. A GAN-based network model was then proposed to integrate the infrared and visible channels. The authors used the Corsican Fire Dataset, which includes ground truth segmentation maps of the forest fire regions along with 640 sets of visible and near-infrared (NIR) fire images. In addition, 477 visible-NIR image combinations without fire incidents were added to the RGB-NIR data collection. After data augmentation, there are 128 image combinations in the validation set and 8192 images in the training set. The model developed by the authors can identify the best performing combination of these parameters. The efficiency of the model can be improved and overfitting can be reduced by collecting more image pairs. The authors stated that their study could improve wildfire fighting by using visible-NIR images to detect and segment wildfires accurately. Table 4 shows the summary of detection-based methods in forest fire detection studies.

Table 4.

Detection-based methods for forest fire monitoring and surveillance.

| Authors | Year | Method | Architecture | Reported performance | Application | Augmentation | Type of Data |
|---|---|---|---|---|---|---|---|
| Hung et al. [68] | 2019 | DN-CNN | Faster R-CNN, Hidden Markov Model (HMM) | Detection rate - 96% | Detection | Yes | Image & Video |
| Jiao et al. [67] | 2019 | CNN | YOLOv3 | Detection rate - 83% | Detection | No | UAV Image |
| Li et al. [76] | 2021 | h-EfficientDet | EfficientDet and h-EfficientDet | Accuracy - 98.35% | Detection | No | Image |
| Peng and Wang [88] | 2022 | CNN | SqueezeNet1.1, AlexNet, MobileNetV3 Large and Small, MobileNetV1 0.25 & 1.0, MobileNetV2 0.25 & 1.0, ResNet18, & VGG-16 | Accuracy - 99.28% | Detection | No | Image |
| Li et al. [85] | 2022 | CNN | YOLOv3, YOLO-LITE, Tinier-YOLO | mAP - 96.05% | Detection | No | Image |
| Mohnish et al. [40] | 2022 | CNN | CNN | Accuracy - 92.20% | Detection | Yes | Image |
| Tahir et al. [86] | 2022 | CNN | YOLOv5 | F1-score - 94.44% | Detection | Yes | UAV Image |
| Wang et al. [84] | 2022 | CNN | YOLO | Accuracy - 83.9% | Detection | No | Image |
| Almasoud [91] | 2023 | IWFFDA-DL, ACNN-BLSTM | ACNN-BLSTM optimized BFO & YOLO v3 | Accuracy - 99.56% | Detection | No | Image |
| Ciprián-Sánchez et al. [72] | 2021 | CNN | Fire-GAN, VGG-19 | Information entropy EN - 10 | Classification | Yes | Image |
| Xie and Huang [93] | 2023 | Transfer Learning and Improved Faster RCNN | ResNet50 network and Faster RCNN with feature fusion and attention | Accuracy - 93.7% | Detection | No | UAV Image |

3.2.11. Transfer learning and improved faster RCNN

Xie and Huang [93] proposed a method for forest fire detection using transfer learning and an improved Faster RCNN. The method integrates transfer learning from a pre-trained ResNet50 network with a Faster RCNN that includes feature fusion and attention. The ImageNet dataset was used for transfer learning to initialize the convolutional layers of the Faster RCNN. The method achieved 93.7% detection accuracy on aerial images. However, the authors did not provide a thorough analysis of the computational requirements or efficiency of the proposed method, which could pose a limitation in real-world applications.

3.3. Segmentation

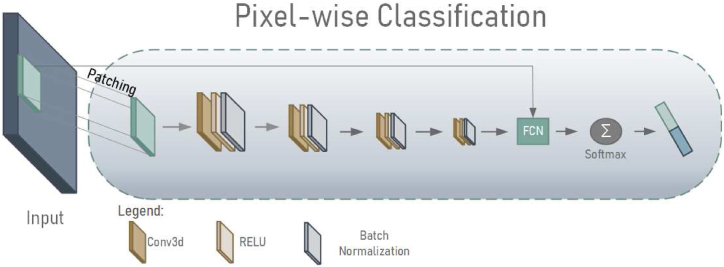

Apart from classification and detection tasks, image segmentation techniques have also been explored by many researchers to detect forest fire incidents. Segmentation can be defined as a technique employed to partition an image into multiple sections or segments [100]. It can be conceptualized as instructing an individual to delineate the boundaries surrounding objects in a given image. The resultant output of the segmentation process is a set of segmented areas that collectively cover the entire image, or a series of contours extracted from the image [101]. In this review, only five studies employed a segmentation algorithm for forest fire detection. Of these five papers, the work by Seydi et al. [6], which used a Deep CNN model, produced the highest level of precision. Three of the studies rely on satellite imagery, while the other two rely on ground forest fire imagery. The five proposed DL architectures are 3D CNN, SqueezeNet, F-Unet, Fire-Net, and Fully CNN, which have been fine-tuned for the segmentation application.
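Because a segmentation model assigns a label to every pixel, its output is usually scored per pixel rather than per image. The sketch below computes mean pixel accuracy (MPA), one of the metrics named in this review, as the average of the per-class pixel accuracies; the mask representation as nested lists of class labels and the function name are assumptions for illustration.

```python
def mean_pixel_accuracy(pred, truth, classes=(0, 1)):
    """Average, over classes, of the fraction of that class's pixels predicted correctly."""
    per_class = []
    for c in classes:
        total = sum(t == c for row in truth for t in row)
        if total == 0:
            continue  # class absent from the ground truth, so it is skipped
        correct = sum(
            p == c and t == c
            for pred_row, truth_row in zip(pred, truth)
            for p, t in zip(pred_row, truth_row)
        )
        per_class.append(correct / total)
    return sum(per_class) / len(per_class)

# Toy 2 x 4 masks: 1 marks fire pixels, 0 marks background.
truth_mask = [[0, 0, 1, 1],
              [0, 0, 1, 1]]
pred_mask = [[0, 0, 1, 0],
             [0, 0, 1, 1]]
mpa = mean_pixel_accuracy(pred_mask, truth_mask)
```

In this toy case all background pixels and three of the four fire pixels are correct, so the MPA is the mean of 1.0 and 0.75. Averaging per class keeps a small fire region from being swamped by a large background.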

3.3.1. Toan et al. architecture

Instead of using the popular Landsat satellite imagery, Toan et al. [65] used GOES-16 satellite imagery as the training data for their study. The multilayer structure of DL architectures, especially deep neural networks, allows the use of multispectral input in both the temporal and spatial dimensions, in which they used the VIIRS-AFP, MODIS-Terra, GOES-AFP, and AVHRR-FIMMA methods. The authors implemented a patch normalisation layer to improve the training potential of a relatively small dataset of 168 fire images and 48 non-fire images. Their results show that the proposed technique achieves 96.05% precision, 91.89% recall, and 94% F1-score using only random search hyperparameter optimization. The purpose-built model also has a low lag time, with only 2.6 h of training time compared to the other models. The authors used data augmentation to enlarge the dataset and avoid high errors in the proposed model. The spatio-spectral deep neural network was found to be useful for predicting early-stage forest fires, as shown in Fig. 7.

Fig. 7.

The utilization of a spatio-spectral deep neural network has been proposed as a means of predicting wildfires in their early stages [65].
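
The precision, recall, and F1-score reported for Toan et al. [65] are linked through the F-beta score, the weighted harmonic mean of precision and recall. The following is a minimal sketch of that relationship; the function name `f_beta` is illustrative and is not taken from the cited work.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (F-beta score)."""
    if precision < 0 or recall < 0:
        raise ValueError("precision and recall must be non-negative")
    b2 = beta * beta
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0  # both precision and recall are zero
    return (1 + b2) * precision * recall / denom


# Precision and recall as reported for Toan et al. [65], written as fractions.
toan_f1 = f_beta(0.9605, 0.9189)
print(f"F1 = {toan_f1:.4f}")
```

With `beta = 1` the score weights precision and recall equally, whereas `beta = 2` weights recall more heavily, which suits fire detection settings where a missed fire is costlier than a false alarm.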

3.3.2. SqueezeNet

To extract the fire maps, Wang et al. [64] employed SqueezeNet as the backbone and incorporated an additional framework to produce a precise forest fire segmentation model. The authors used the CIFAR-10 dataset, which contains 60,000 images split into 10 classes, resulting in 6000 images per class. Fifty thousand images are used for training, while ten thousand are used for testing. The improved SqueezeNet has a parameter size of 0.53 MB and produces a detection accuracy of 94.2%. The authors further tested the proposed model on two additional forest fire videos. They showed that the proposed methodology is capable of segmenting forest fire areas even when the image is partially obstructed by smoke noise. The outcomes indicate that the methodology is appropriate for forest fire monitoring, especially for detecting early breakout fires. However, the authors need to report other metrics to give more information about the model's performance.

3.3.3. F-Unet

In this study, Li et al. [52] utilised the F-Unet segmentation framework, which is modified from the U-Net model, for the identification of forest fires. The authors utilised the FLAME dataset, which consists of 2003 fire images. The study shows that adding a feature fusion network to the U-Net architecture enables the model to incorporate several feature maps of varying sizes effectively in an attempt to improve the model's segmentation accuracy. The results showed that F-Unet improves the mean pixel accuracy (MPA) of U-Net by 8.42% and the mean intersection over union (mIoU) of U-Net by 7.45%. The findings demonstrated that F-Unet is suitable as a forest fire segmentation model that greatly enhances the efficiency of the early detection system. The findings also indicated that incorporating the feature-fusion module can lead to a more efficient segmentation model of forest fires with a reduced FPS. However, the authors have not addressed possible challenges in implementing the proposed feature fusion network.
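
For reference, MPA and mIoU can both be derived from a pixel-level confusion matrix. The sketch below uses the commonly adopted definitions and a made-up two-class matrix (background and fire); the function names and pixel counts are illustrative assumptions and do not come from Li et al. [52].

```python
def mean_pixel_accuracy(conf):
    """Average of per-class pixel accuracies.

    conf[i][j] is the number of pixels of true class i predicted as class j.
    """
    scores = []
    for i, row in enumerate(conf):
        total = sum(row)
        if total:  # skip classes that do not occur in the ground truth
            scores.append(row[i] / total)
    return sum(scores) / len(scores) if scores else 0.0


def mean_iou(conf):
    """Average of per-class intersection over union."""
    n = len(conf)
    scores = []
    for i in range(n):
        tp = conf[i][i]
        fn = sum(conf[i]) - tp
        fp = sum(conf[r][i] for r in range(n)) - tp
        union = tp + fp + fn
        if union:  # skip classes absent from both prediction and truth
            scores.append(tp / union)
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical pixel counts: rows are true classes, columns are predictions.
example_conf = [[90, 10],
                [20, 80]]
mpa = mean_pixel_accuracy(example_conf)
miou = mean_iou(example_conf)
print(f"MPA = {mpa:.4f}, mIoU = {miou:.4f}")
```

Because mIoU also penalises false positive pixels, it is usually lower than MPA for the same prediction.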

3.3.4. Fire-Net

In this study, Seydi et al. [6] suggested the use of Landsat-8 RGB and thermal images as a training dataset for the development of a novel segmentation model, which they named Fire-Net. The authors prepared 722 patches of 256 × 256 pixels, which are divided into a training dataset of 469 patches, a validation dataset of 109 patches, and a testing dataset of 144 patches. For the hyperparameter configuration, the authors used a batch size of 7 patches, a LR of 0.0001, and a maximum of 250 epochs. Fire-Net works very well in segmenting both non-active fire and active fire regions according to the performance metrics of F1-score, overall accuracy (OA), miss detection (MD), precision, false positive rate (FPR), recall, and the kappa coefficient. It achieves an overall accuracy of 97.35% and can detect small active fires. The authors showed that the proposed Fire-Net model could be applied to segment forest fire regions accurately using satellite imagery input. However, the authors do not mention the possible difficulties or disadvantages of utilizing Landsat-8 imagery for identifying fires.
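
The metrics listed above can all be computed from the four counts of a binary (fire versus non-fire) confusion matrix. The sketch below uses standard definitions, with miss detection assumed to be FN / (TP + FN); the function name and the pixel counts are hypothetical and are not results from Seydi et al. [6].

```python
def binary_segmentation_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Common binary metrics from confusion-matrix counts."""
    n = tp + fp + fn + tn
    oa = (tp + tn) / n                      # overall accuracy
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    md = fn / (tp + fn)                     # miss detection (assumed definition)
    fpr = fp / (fp + tn)                    # false positive rate
    f1 = 2 * precision * recall / (precision + recall)
    # Cohen's kappa: agreement beyond what is expected by chance.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return {"OA": oa, "precision": precision, "recall": recall,
            "MD": md, "FPR": fpr, "F1": f1, "kappa": kappa}


# Hypothetical pixel counts for illustration only.
metrics = binary_segmentation_metrics(tp=80, fp=10, fn=20, tn=890)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because active fire pixels are typically a small fraction of a satellite scene, OA can remain high even when many fire pixels are missed, which is why kappa, MD, and FPR are reported alongside it.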

3.3.5. Fully CNN

Instead of using a single forest fire event location, Sun [79] trained a Fully CNN (FCNN) segmentation model using Landsat-8 images. The results are very promising, with F1 and F2 scores of 0.928 and 0.962, respectively, while the precision is 0.878 and the recall is 0.989. In summary, 14,274 of the sampled images contained active fires and 10,685 were non-fire cases. The model rarely missed active fire pixels, although it was sometimes excessively sensitive and misidentified non-fire pixels as fire. The author should have implemented data augmentation or transfer learning on the dataset to prevent overfitting. Table 5 summarises the segmentation architectures used in forest fire detection studies.

Table 5.

Segmentation-based methods for forest fire monitoring and surveillance.

| Authors | Year | Method | Architecture | Accuracy | Application | Augmentation | Type of Data |
|---|---|---|---|---|---|---|---|
| Toan et al. [65] | 2019 | CNN | 3D CNN | F1-score - 94% | Segmentation | Yes | Satellite Image |
| Wang et al. [64] | 2019 | CNN | SqueezeNet | Accuracy - 94.2% | Segmentation | No | Image |
| Li et al. [52] | 2021 | CNN | F-Unet, U-net | MPA - 94.77% | Segmentation | No | Image |
| Seydi et al. [6] | 2022 | CNN | Deep CNN | Accuracy - 99.98 | Segmentation | No | Satellite Image |
| Sun [79] | 2022 | CNN | Fully - CNN, U-Net, U-Net Light | F1-score - 0.928 | Segmentation | No | Satellite Image |

3.4. Detection and classification

Four studies have been selected that address forest fire monitoring systems using a combination of detection and classification tasks. Out of the four methods, only the work by Fan and Pei [75] did not implement any data augmentation method for forest fire detection. The highest accuracy among the four methods, 96.5%, is attained by Bai and Wang [74], who used a combination of YOLO and VGG architectures in their work.

3.4.1. YOLO + VGG

In this study, a combined YOLO + VGG model was used to produce a joint decision for a fire warning system. Transfer learning was used to initialize the models for identifying the presence of smoke and flames [74]. The top layers of the original VGG are removed, while the bottom feature extraction layers are retained. The decision-making layer of VGG was improved by adding a leaky ReLU activation function and dropout layers. The YOLO network was then configured with a LR of 0.001, a weight decay of 0.0005, and a momentum of 0.9. A total of 3500 images were included, consisting of 1600 forest fire images and 1900 non-forest fire images. The dataset was further expanded by a factor of 10 using data augmentation through random crop, translation, and scale operators. The detection speed is 30.9 frames per second with a mAP of 96.5%. These results show that data augmentation is important for preventing overfitting when an imbalanced dataset is used. This work is suitable for early forest fire detection systems that rely on low FPS input, using an optimized YOLO structure as shown in Fig. 8.

Fig. 8.

The network structure of YOLO that has been optimized and demonstrated by Bai and Wang [74].
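
The training settings reported for the YOLO network of Bai and Wang [74] (LR of 0.001, weight decay of 0.0005, momentum of 0.9) correspond to the usual hyperparameters of stochastic gradient descent with momentum. The sketch below shows one common formulation of a single update step, in which weight decay is added to the gradient as an L2 penalty. The exact update rule of the authors' framework is not stated in the source, so this formulation and the name `sgd_momentum_step` are assumptions.

```python
def sgd_momentum_step(weights, grads, velocity,
                      lr=0.001, momentum=0.9, weight_decay=0.0005):
    """One SGD step with momentum and L2 weight decay on plain lists.

    Assumed formulation: v <- momentum * v + (g + weight_decay * w)
                         w <- w - lr * v
    """
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g_total = g + weight_decay * w      # gradient plus L2 penalty term
        v_next = momentum * v + g_total     # accumulate velocity
        new_velocity.append(v_next)
        new_weights.append(w - lr * v_next)
    return new_weights, new_velocity


# Toy values for illustration: one weight, one gradient, zero initial velocity.
w1, v1 = sgd_momentum_step([1.0], [0.5], [0.0])
w2, v2 = sgd_momentum_step(w1, [0.5], v1)
print(w1, v1)
print(w2, v2)
```

With a constant gradient, the second step is larger than the first because the velocity term carries over part of the previous update.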

3.4.2. YOLOv4-light

The second method that combines detection and classification was proposed by Fan and Pei [75], who modified the lightweight YOLOv4-Light network structure to detect forest fires. In the feature extraction network, MobileNet replaces the standard YOLOv4 backbone, while PANet's original convolution is replaced with a depth-wise separable convolution, which increases the prediction performance and makes the model more appropriate for embedded system applications. The authors developed the FDRLS dataset, which contains over 6000 images; the background class is significantly enriched with various information, and the forest class covers a wide range of unique forest types from cold, tropical, and temperate zones. The authors also applied Mosaic for data enhancement, and they tested the false alarms of forest fire detection before and after the addition of red leaf recognition. They also achieved a good detection speed and model size, ensuring that the model complied with the system.
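
The suitability of depth-wise separable convolution for embedded systems follows from its much smaller parameter count. The sketch below compares the number of weights in a standard convolution with that of a depth-wise convolution followed by a 1 × 1 point-wise convolution. Biases are ignored, and the kernel size and channel counts are illustrative assumptions rather than the layer sizes used by Fan and Pei [75].

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depth-wise k x k convolution plus a 1 x 1 point-wise one."""
    depthwise = kernel * kernel * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out             # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Illustrative layer: 3 x 3 kernel, 256 input channels, 512 output channels.
std = standard_conv_params(3, 256, 512)
sep = depthwise_separable_params(3, 256, 512)
print(std, sep, round(sep / std, 4))
```

The ratio of the two counts equals 1/c_out + 1/k², so for a 3 × 3 kernel the separable form needs roughly one ninth of the weights when the number of output channels is large.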

3.4.3. YOLOV5S + MFEN

In this study, Wei et al. [87] adopted the YOLOV5S architecture, a recently introduced deep object detection model, for detecting forest fires. The authors set the ratio of training to testing images to 80:20, which results in a self-created dataset with 11,520 training and 2880 testing images. The authors utilised mosaic data augmentation techniques, including scaling, rotation, translation, and cropping operations, at both the image level and the pixel level. The SGD optimizer with a cosine annealing LR set to 0.01 was used to fit the model for a maximum of 900 epochs. The batch size was fixed at 32, while the momentum and weight decay coefficient were set to 0.937 and 0.005, respectively. In order to extract contextual information from multi-scale objects in complex visual scenes, the authors devised a model with a multi-scale feature extraction network (MFEN). This technique works particularly well for real-time forest fire monitoring, making it appropriate for deployment on edge devices with limited computing resources. However, compared to the DNCNN-based model, the model required a larger size to detect wild flame than to detect wild smoke.
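
A cosine annealing schedule lowers the learning rate smoothly from its initial value towards a minimum over the course of training. The sketch below uses the initial LR of 0.01 and the 900 epochs reported above; the minimum LR of 0.0 and the function name are assumptions, since the source does not state the final value or the exact variant used by Wei et al. [87].

```python
import math


def cosine_annealing_lr(epoch: int, total_epochs: int = 900,
                        lr_max: float = 0.01, lr_min: float = 0.0) -> float:
    """Learning rate at a given epoch under cosine annealing (no restarts)."""
    t = min(max(epoch, 0), total_epochs)  # clamp to the schedule range
    cosine = math.cos(math.pi * t / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + cosine)


# Learning rate at the start, midpoint, and end of training.
for e in (0, 450, 900):
    print(e, cosine_annealing_lr(e))
```

The schedule decays slowly at first, fastest around the midpoint, and slowly again near the end, which avoids the abrupt drops of a step schedule.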

3.4.4. DetNAS

The last algorithm that uses a combination of detection and classification models to identify forest fire incidents was introduced by Tran et al. [89] and is based on neural architecture search-based object detection (DetNAS). The authors deployed Faster R-CNN and tested it with various backbones, including ResNet, VoVNet, FBNetV3, and ShuffleNet V2. Furthermore, a part of the ShuffleNet V2 block was embedded in the network as a searchable element of the backbone. The batch size was set to 16 images, and the models were trained for a maximum of 10,000 epochs with an initial LR of 0.15. The authors obtained a forest fire detection performance of 27.9 mAP, supporting the use of a lightweight ShuffleNet V2 model. The model was trained using 349,774 combined CCTV images and weather data, and evaluated on 39,243 CCTV images. Simple data augmentation techniques were used to enrich the training dataset. The results were validated on a forest fire outbreak dataset with 2128 events. The RMSE for each test fold is about 2.6, which indicates that the model overfits the training data and generates subpar predictions on the test dataset. The models obtained a low mAP value due to the visual similarities of smoke as well as the many classes in the dataset. The modified Faster R-CNN architecture, as depicted in Fig. 9, was first introduced by Tran et al. [89]. Table 6 summarises the detection and classification applications in forest fire detection studies.

Fig. 9.

Architecture of Faster R-CNN illustrated by Tran et al. [89].

Table 6.

A combination of detection and classification-based methods for forest fire monitoring and surveillance.

| Authors | Year | Method | Architecture | Accuracy | Type | Augmentation | Type of Data |
|---|---|---|---|---|---|---|---|
| Bai and Wang [74] | 2021 | CNN | YOLO & VGG network | Accuracy - 96.5% | Detection & Classification | Yes | Image |
| Fan and Pei [75] | 2021 | CNN | YOLOv4 & MobileNet | mAP - 75.72 | Detection & Classification | No | Image |
| Wei et al. [87] | 2022 | CNN | YOLOv5S & Mobilenetv3 | Accuracy - 90.5% | Detection & Classification | Yes | Image |
| Tran et al. [89] | 2022 | DetNAS | ShuffleNetV2, Faster R-CNN model with VoVNet, ResNet, & FBNetV3 | mAP - 27.9 | Detection & Classification | Yes | Image |

3.5. Segmentation and classification

Among the selected papers, only four studies have used a combination of segmentation and classification methods. Interestingly, all of these studies implemented some form of data augmentation. Ghali et al. [77] attained the best forest fire detection score of 99.95% accuracy by deploying a combination of DenseNet-201 and EfficientNet-B5 models (TransUNet and TransFire) with the DCNN (EfficientSeg) architecture.

3.5.1. Fire_Net

In their study, Zhao et al. [11] utilised a 15-layered Deep Convolutional Neural Network (DCNN) called Fire_Net, which was modified from the 8-layered AlexNet model. The authors argued that a methodology integrating saliency identification and Deep Learning (DL) for forest fire recognition had not yet been made available. Thus, they utilised 1500 images taken from various modalities to explore the optimal model configuration for forest fire recognition. This combined dataset comprises 908 images without fire incidents and 632 images with fire incidents. The authors employed a saliency segmentation method to augment the training dataset to a total of more than 3500 images. The results indicate that the model achieved an accuracy of 98% with augmented data and 97.7% without. However, the proposed model is weak against mist noise during fire incidents. The authors suggested that IR sensors be incorporated as part of the decision-making layers to assess whether or not a fire has occurred.

3.5.2. U-net, ResNet34, and U-ResNet34