Abstract

Student study behaviors and metacognition are predictors of student academic success. However, students’ metacognitive evaluation of their own study behaviors has been largely unexplored. To address this gap, we gave students enrolled in three different Biology courses (n = 1140) a survey that asked them to identify the study behaviors used to prepare for their first and third exams and to appraise the effectiveness of each behavior. We observed that students used different numbers of active- and passive-study behaviors across courses. However, there were no differences in performance across courses, and the use of effective (i.e., active) study behaviors resulted in improved exam performance for all students, regardless of course, while the use of ineffective (i.e., passive) study behaviors had no significant impact on exam performance. Finally, our qualitative analysis revealed that students across all courses demonstrated similar ability in identifying effective-study behaviors, but students could not explain why those behaviors were effective. Taken together, our results demonstrate that students use various study behaviors to prepare for exams without understanding their effectiveness. We encourage instructors to structure their courses to promote the development of metacognitive evaluation and effective-study behaviors.

INTRODUCTION

The structure of Science, Technology, Engineering, and Mathematics (STEM) courses, and the previously documented assumptions instructors hold about the baseline knowledge of their students, benefit those who enter the classroom academically prepared (Razali and Yager, 1994; Daempfle, 2003; Salehi et al., 2019, 2020; Boesdorfer and Del Carlo, 2020). These “idealized students” already possess well-developed study behaviors from having taken equivalent high school coursework (Razali and Yager, 1994; Daempfle, 2003; Boesdorfer and Del Carlo, 2020). In reality, equally capable students who transition to college from high schools that did not offer those same learning resources enter the classroom at a serious disadvantage. Further, it is unclear when, how, and why students acquire effective study behaviors during their education.

Student approaches to learning (SAL) and self-regulated learning (SRL) have been identified as critical components of academic success (Tomanek and Montplaisir, 2004; Heikkilä and Lonka, 2006; Collier and Morgan, 2008; Horowitz et al., 2013; Park et al., 2019; Google et al., 2021). Briefly, SAL encompass the learning strategies that students use during self-directed study. Students can choose to implement deep approaches to learning (i.e., tasks that deepen student understanding of content such as self-testing) or surface approaches (i.e., tasks that promote rote memorization such as rereading textbooks; Tomanek and Montplaisir, 2004; Heikkilä and Lonka, 2006; Google et al., 2021). SAL are often studied in conjunction with SRL (Chin and Brown, 2000; Tomanek and Montplaisir, 2004; Heikkilä and Lonka, 2006; Stanton et al., 2015; Dye and Stanton, 2017; Sebesta and Speth, 2017; Google et al., 2021). Self-regulated learners exert control over their learning environments by planning academic schedules, regulating their social and physical environments, monitoring performance outcomes, and applying appropriate learning strategies (e.g., study behaviors) when necessary (Horowitz et al., 2013). A key feature of SRL is that it can be developed through practice at any stage in the educational journey (Horowitz et al., 2013).

This study focuses primarily on study behaviors of students enrolled in Biology coursework. Previous literature indicates that many instructors expect students to enter college with well-developed study behaviors, to prioritize studying, and to apply appropriate study behaviors when necessary (Razali and Yager, 1994; Daempfle, 2003; Collier and Morgan, 2008; Li and Rubie-Davies, 2018; Boesdorfer and Del Carlo, 2020; Naylor et al., 2021). Despite these expectations, students struggle with selecting and using effective study behaviors (McGuire, 2006; Hora and Oleson, 2017; Sebesta and Speth, 2017; Walck-Shannon et al., 2021). Effective (i.e., active) study behaviors are behaviors that promote the long-term retention and retrieval of information (Marton and Säljö, 1976; Tomes et al., 2011; Nunes and Karpicke, 2015; Karpicke and O’Day, 2020; Table 1). These behaviors include self-testing, summarizing, and self-explanation (Table 2). However, students often report using ineffective (i.e., passive) study behaviors such as rereading the textbook or rewriting notes (Tables 1 and 2). These behaviors are described as being ineffective because they promote surface-level understanding of the content and rote memorization (Marton and Säljö, 1976; Tomes et al., 2011). Furthermore, students persist in using these behaviors as they continue into graduate school and professional school (West and Sadoski, 2011; Mirghani et al., 2014; Brown, 2017; Lee et al., 2020; Patera, 2021). Given the persistent use of ineffective-study behaviors among Biology students, we wanted to understand what influences the selection and use of study behaviors. Specifically, we explored whether students selected study behaviors because of the material or demands inherent to a particular course, and whether they metacognitively evaluated different study behaviors and made decisions based on this evaluation. We also addressed whether students changed study behaviors over the course of a semester, and how these strategies impacted exam performance.

TABLE 1.

Definitions of active- and passive-study behaviors

| Behavior type | Definition |
|---|---|
| Active | Strategies that promote deeper understanding of content, the retention of information in long-term memory, and the successful retrieval of that information later |
| Passive | Strategies that emphasize verbal fluency and are used for the purpose of rote memorization |

TABLE 2.

Description of active- and passive-study behaviors used by students

| Behavior | Description | Examples | Active or Passive? |
|---|---|---|---|
| Self-testing | Retrieval of content knowledge using student-driven techniques | Flashcards, practice quizzes, practice problems/questions | Active |
| Summarizing | Students identify main points of readings or notes | Creating study guide, summarizing notes | Active |
| Self-explanation | Explanation of how new information is processed during learning | Teaching content to others, making diagrams | Active |
| Repeated reading | Texts are read repetitively to enhance recall | Rereading textbook, rereading notes | Passive |
| Rewriting notes | Rewriting notes taken during lecture | Rewriting lecture notes as is | Passive |
| Rewatching videos | Viewing videos related to content | Viewing YouTube videos or previously recorded lecture videos | Passive |

CONCEPTUAL FRAMEWORK

Our research investigates the study behaviors of students who are enrolled in several different Biology courses at a single, large institution. This study was guided by a conceptual framework that synthesizes ideas from the SAL framework (Marton and Säljö, 1976) and the social-cognitive model of SRL framework (Zimmerman, 2002; Pintrich, 2004).

SAL Framework

The SAL framework describes how students carry out specific learning tasks (Marton and Säljö, 1976; Google et al., 2021). Briefly, students can implement a deep approach or surface approach to a learning task. Deep approaches to learning are characterized by use of meaningful-learning approaches that are appropriately aligned to the demands of the task (Google et al., 2021). The selection and use of these tasks are driven by the student’s perceived need to understand the content (Tomanek and Montplaisir, 2004; Google et al., 2021). Conversely, students using a surface approach to learning tend to use approaches that are quick, promote rote memorization, and do not emphasize deep understanding (Tomanek and Montplaisir, 2004; Google et al., 2021). The adoption of a surface or deep approach to learning is influenced by individual factors. For example, students must have well-developed metacognitive skills in order to reflect on their own learning processes (Chin and Brown, 2000; Leung and Kember, 2003; Beccaria et al., 2014; Tuononen et al., 2020; Tuononen et al., 2023). Additionally, student decisions to use a particular approach to learning are also influenced by course-specific factors (e.g., instructional format, assessment; Feldt and Ray, 1989; Entwistle and Entwistle, 2003; Tomanek and Montplaisir, 2004; Kember et al., 2008; Momsen et al., 2010; Abd-El-Fattah, 2011; Google et al., 2021). As mentioned previously, undergraduate Biology students frequently report the use of ineffective-study strategies that promote fluency and storage in short-term memory, such as rereading the textbook or reading notes, instead of behaviors that promote retrieval (i.e., active behaviors; Ziegler and Montplaisir, 2014; Hora and Oleson, 2017; Sebesta and Speth, 2017; Walck-Shannon et al., 2021). Given the role of course-specific factors in influencing SAL, we wanted to investigate whether Biology students select their study behaviors in a course-dependent manner.

Social-Cognitive Model of SRL Framework

In the social-cognitive model of SRL framework, learners can employ effective-learning strategies (behavior) in the pursuit of their academic goals, monitor their own learning (metacognition), and have intrinsic interest in their studies (motivation; Zimmerman, 1989; Pintrich and De Groot, 1990; Wolters, 1998; Zimmerman, 2002; Wolters, 2003; Pintrich, 2004; Xu and Jaggars, 2014; DiFrancesca et al., 2016; Sebesta and Speth, 2017; Park et al., 2019; Dignath and Veenman, 2021; Cazan, 2022). Previous work has suggested that SRL benefits students in STEM contexts specifically (Vanderstoep et al., 1996; Schraw et al., 2006; Miller, 2015; Bene et al., 2021). For example, in a study that examined the SRL behaviors of college students enrolled in classes spanning different disciplines, Vanderstoep et al. (1996) found that high-achieving students enrolled in Natural Science courses used more learning strategies and engaged in metacognition, while low-achieving students used fewer learning strategies and did not engage in metacognition. Similarly, Miller (2015) demonstrated that high-achieving students enrolled in an introductory Chemistry course had better metacognitive skills and had access to optimal study environments.

Given the role of metacognition in SAL and student achievement in STEM (Vanderstoep et al., 1996; Tomanek and Montplaisir, 2004; Schraw et al., 2006; Miller, 2015; Bene et al., 2021), we specifically focused on how student metacognition impacted their selection and use of active or passive study strategies. Metacognition refers to our awareness of our own thinking and is composed of metacognitive knowledge and metacognitive regulation (Stanton et al., 2019). Metacognitive knowledge encompasses what students know about their own thinking and how they approach learning (Stanton et al., 2019). This type of knowledge is displayed when students can distinguish between the concepts they know and do not know. Metacognitive regulation refers to how learners regulate their thinking for learning and the actions taken in order to learn (Stanton et al., 2019). This involves three skills: 1) planning (selecting approaches for learning and determining when to study), 2) monitoring (implementing selected approaches and measuring their usefulness in real time), and 3) evaluating (determining effectiveness of individual-study behaviors and overall study plan; Schraw et al., 2006; Stanton et al., 2019). These skills are important components of learning because a student’s ability to learn and recall information is heavily dependent on their ability to gauge what they do and do not know (Fakcharoenphol et al., 2015; Sebesta and Speth, 2017). Thus, a student’s metacognitive regulation influences which study behaviors the student uses during exam preparation (Fakcharoenphol et al., 2015; Stanton et al., 2019).

LITERATURE REVIEW

What are effective study behaviors?

Previous literature suggests that Biology students may be unaware of specific study behaviors that promote deep understanding of course materials (Stanton et al., 2015; Sebesta and Speth, 2017). Effective study behaviors are those that promote the retention of information in long-term memory and the successful retrieval of that information at a later time (e.g., during a test; Marton and Säljö, 1976; Tomes et al., 2011; Nunes and Karpicke, 2015; Karpicke and O’Day, 2020). These behaviors are also referred to as retrieval-based learning or active retrieval. Numerous studies have demonstrated the effectiveness of retrieval-based practice, and two mechanisms have been proposed to explain its effectiveness: elaborative retrieval and episodic context (Karpicke and Roediger, 2008; Bjork and Bjork, 2011; Karpicke and Blunt, 2011; Dunlosky et al., 2013; Brown et al., 2014; Karpicke, 2017; Biwer et al., 2021; Walck-Shannon et al., 2021).

The elaborative-retrieval theory proposes that the benefits of retrieval practice are connected to elaboration. Specifically, this theory states that when learners attempt to retrieve information, they generate knowledge that is related to that information (Carpenter, 2009; Karpicke, 2017). As a result, the learner adds details that make the target knowledge distinctive and easy to retrieve (Carpenter, 2009; Karpicke and O’Day, 2020).

Alternatively, the episodic-context theory posits that learners encode not only newly learned information but also information about the learning context. When learners retrieve this information in a new context, they also retrieve information about the prior learning context. If the learner is able to successfully retrieve this information, they update the context to include both the context in which the information was learned and the context in which it was retrieved. When the information is retrieved during the test, these two contexts can be used to help the learner retrieve information (Nunes and Karpicke, 2015; Karpicke, 2017). It is important to note that these two theories are not mutually exclusive, and learners may use both elaboration and context to retrieve information (Karpicke, 2017).

Active study behaviors.

For this study, we refer to retrieval-based practices or learning strategies as active-study behaviors (Table 1). Common active-study behaviors include practices such as 1) self-testing, 2) summarizing, and 3) self-explanation (Table 2), which we describe here. Self-testing refers to any form of practice testing that students can complete on their own and involves retrieval across multiple sessions distributed over a longer period (Dunlosky et al., 2013). Thus, self-testing can include practicing recall using flashcards, completing practice quizzes/tests, and completing practice problems or questions (Dunlosky et al., 2013). Self-testing contributes to student learning because it can guide future studying, influence student motivation, enhance how students mentally organize information, and provide students with a measure of what concepts they have mastered (Dunlosky et al., 2013; Brown et al., 2014; Karpicke, 2017). However, students may avoid self-testing because it can feel frustrating when they have trouble recalling information. As a result, it does not feel as productive as rereading the textbook or notes (Brown et al., 2014).

Summarization (without the presence of text) is a learning behavior in which students identify the main points of their assigned readings or notes while excluding repetitive or unimportant information (Dunlosky et al., 2013). Self-explanation integrates newly learned content with prior knowledge by requiring students to explain their processing during learning (Dunlosky et al., 2013). These behaviors are effective because they provide students with a reliable measure of what concepts they have mastered and require learners to reconstruct concepts from long-term memory rather than repeating them from short-term memory. They also promote greater long-term learning than behaviors that emphasize passive consumption of lecture presentations or textbook readings, because they help students to identify gaps in their knowledge and strengthen the connections between prior knowledge and newly learned knowledge (Dunlosky et al., 2013; Brown et al., 2014; Hora and Oleson, 2017; Rodriguez et al., 2018; Osterhage et al., 2019; Walck-Shannon et al., 2021).

Passive study behaviors.

Alternatively, ineffective-study behaviors describe study strategies that emphasize surface-level understanding of content and are used for the purpose of rote memorization (Marton and Säljö, 1976; Tomes et al., 2011). Furthermore, these strategies emphasize verbal fluency and often result in students overestimating their understanding of content (Rawson and Dunlosky, 2002; Roediger and Karpicke, 2006). In this study, we refer to these strategies as passive-study behaviors (Table 1), and these include repeated reading, rewriting notes, and rewatching videos (Table 2). Several prior studies indicate that while students enrolled in Biology courses reported using primarily passive behaviors to study (e.g., rereading), they also reported using several active-study behaviors (Hora and Oleson, 2017; Sebesta and Speth, 2017; Walck-Shannon et al., 2021).

What influences the selection and use of specific-study behaviors?

The use of effective (active) study behaviors is not always necessary for success at the K-12 level, so students often enter college using ineffective (passive) study behaviors (McGuire, 2006). The continued use of these behaviors can be shaped by the anticipated format of Biology exams and course expectations. Prior studies have demonstrated that students select study behaviors based on exam format (Feldt and Ray, 1989; Entwistle and Entwistle, 2003; Kember et al., 2008; Momsen et al., 2010; Abd-El-Fattah, 2011). For example, when presented with a multiple-choice test format, students may opt to use memorization strategies because they were successfully used with previous multiple-choice exams (e.g., standardized tests; Feldt and Ray, 1989; Scouller, 1998; Martinez, 1999; Watters and Watters, 2007; Kember et al., 2008; Abd-El-Fattah, 2011; Stanger-Hall, 2012; Santangelo, 2021). Alternatively, students may select study behaviors based on the anticipated cognitive demands of an exam. Previous studies have demonstrated that if students are presented with exams that contain questions that emphasize memorization of facts, students will select study behaviors that accomplish this goal (Jensen et al., 2014).

In addition to exam expectations, students may also select their study behaviors based on their perceptions of the course (Wilson et al., 1997; Kember et al., 2008). For example, previous literature has demonstrated that courses that have a heavy workload, an excessive amount of course material, assessment practices that emphasize rote memorization, and surface coverage of content encourage students to adopt a surface (i.e., passive) approach to learning (Wilson et al., 1997; Kember et al., 2008). Additionally, students may select study behaviors based on their perceptions of the discipline associated with the course (Prosser et al., 1996; Tai et al., 2005; Cao and Nietfeld, 2007; Watters and Watters, 2007; Kember et al., 2008). As stated previously, students enter the college classroom with varied prior experiences related to studying. Specifically, students may have formed ideas on how to study Biology content based on their prior experience with the subject (Biggs, 1993; Minasian-Batmanian et al., 2006). For example, if students’ prior Biology courses emphasized rote memorization, students may perceive that memorization is essential for learning Biology and adopt study behaviors that emphasize this outcome (Chiou et al., 2012).

Finally, previous work also shows that students are advised by their instructors to use ineffective study behaviors (e.g., rereading the textbook is encouraged in the syllabus), and students may continue to use those behaviors as they progress through their coursework (Morehead et al., 2015; Hunter and Lloyd, 2018). Additionally, effective behaviors may cause students discomfort because they are more challenging (Dye and Stanton, 2017). As a result, students may refuse to change their approaches to studying and continue to use behaviors that are less challenging (Tomanek and Montplaisir, 2004; Dye and Stanton, 2017). Compounding these challenges, Biology students have underdeveloped metacognitive skills and struggle with self-evaluation, which prevents them from identifying ineffective study behaviors (Tomanek and Montplaisir, 2004; Stanton et al., 2015; Dye and Stanton, 2017; Sebesta and Speth, 2017; Walck-Shannon et al., 2021; Tracy et al., 2022). Taken together, these studies highlight the need to better understand the influence of context and metacognition in the selection of specific study behaviors in different Biology courses.

Current study rationale and research questions

In this study, we wanted to understand how students select and use specific-study behaviors. We also investigated the use of these study behaviors and the impact of these study behaviors on the exam performance of students enrolled in three different Biology courses. We then examined the metacognitive-evaluation skills of students in each course. We asked the following research questions:

Are there differences in the use of study behaviors between students in different Biology courses?

How do student study behaviors change over the course of the semester (i.e., Exam one and Exam three)?

What is the effect of each study behavior on exam performance?

How do students in different courses compare in their use of the metacognitive skill of evaluation?

METHODS

Participants and Context

We collected data for this study from undergraduate Biology students enrolled in two sections of Anatomy and Physiology (n = 498), two sections of Microbiology (n = 474), and one section of Genetics (n = 168), for a total enrollment of 1140 students. The courses were led by four different instructors during Fall 2021 at a research-intensive, land-grant institution in the southeast region of the United States (Table 3). The institutional population during the Fall 2021 semester comprised 49.5% women and 50% men, and 81.5% of students were White (National Center for Education Statistics, United States Department of Education, 2021). Furthermore, 13% of students received Pell grant funding. The students enrolled in each of these courses were similar in terms of race/ethnicity, first-generation status, Pell-grant eligibility, and gender (Table 4). Additionally, the majority of students in each of these courses were in their second or third year of college and were STEM majors. All procedures for this study were approved by the Auburn University Institutional Review Board (IRB ID: 18-349).

TABLE 3.

Course features of lower-level and upper-level Biology classes

| Feature | Lower-division Biology Course | Upper-division Biology Course #1 | Upper-division Biology Course #2 |
|---|---|---|---|
| Course | Anatomy and Physiology, the study of structure and function of the human body, including cells, tissues, and organs of the major body systems; three credit hours | Microbiology, an introduction to the science of microbiology with an emphasis on bacterial structure, function, growth, metabolism, genetics, and its role in human health; three credit hours | Genetics, an overview of theoretical and factual principles of transmission, cytological, molecular, and population genetics; three credit hours |
| Number of Students | 498 in two sections (258 + 240 = 498) | 474 in two sections (264 + 210 = 474) | 168 in one section |
| Course components | Lecture (3 × 50 min/wk) | Lecture (3 × 50 min/wk) | Lecture (3 × 50 min/wk) |
| Lecture style and features | Traditional lecture | Traditional lecture | Traditional lecture |
| Exams | Three section exams and one noncumulative final exam (section one); three section exams and one cumulative final exam (section two) | Three section exams and one cumulative final exam | Four section exams and one cumulative final exam |
| Exam style | Multiple choice, short answer, true/false, matching | Multiple choice | Short answer |
| Exam Question Bloom’s Level | Lower-level (1 and 2): 99.97%; Upper-level (3, 4, 5, and 6): 0.03% | Lower-level (1 and 2): 85.72%; Upper-level (3, 4, 5, and 6): 14.28% | Lower-level (1 and 2): 60%; Upper-level (3, 4, 5, and 6): 40% |
| Percentage of course grade from exams | 60% of course grade came from exams (section one); 75.7% of course grade came from exams (section two) | 70% of course grade came from exams | 80% of course grade came from exams |
| Textbook | Online textbook; not free | Published and online textbook; not free | Online textbook; not free |
| Homework | Weekly homework assignments through learning management system; 13–20% of course grade | Weekly homework assignments through external tool (Packback); 10% of course grade | Weekly homework assignments through external tool (Packback); 10% of course grade |

TABLE 4.

Demographic overview of students enrolled in Anatomy & Physiology, Microbiology, and Genetics during Fall 2021 for a) Exam one and b) Exam three

| | Anatomy & Physiology | Microbiology | Genetics |
|---|---|---|---|
| A) Exam one | | | |
| Gender | | | |
| Women | 349 (80.4%) | 300 (72.1%) | 81 (60.0%) |
| Men | 82 (18.9%) | 105 (25.2%) | 38 (28.1%) |
| Unknown | 2 (0.46%) | 1 (0.24%) | 7 (5.19%) |
| College Generation Status | | | |
| First | 45 (10.4%) | 40 (9.62%) | 12 (8.9%) |
| Continuing | 386 (88.9%) | 387 (88.2%) | 108 (80.0%) |
| Unknown | 3 (0.69%) | 9 (2.16%) | 7 (5.19%) |
| Pell Grant Status | | | |
| Yes | 42 (9.68%) | 50 (12.0%) | 2 (1.48%) |
| No | 322 (74.2%) | 358 (86.1%) | 118 (87.4%) |
| Unknown | 70 (16.1%) | 8 (1.92%) | 7 (5.19%) |
| PEER Status | | | |
| Yes | 43 (9.90%) | 48 (11.5%) | 14 (10.4%) |
| No | 385 (88.7%) | 356 (85.6%) | 106 (78.5%) |
| Unknown | 3 (0.69%) | 8 (1.92%) | 7 (5.19%) |
| B) Exam three | | | |
| Gender | | | |
| Women | 327 (81.9%) | 263 (77.8%) | 88 (65.2%) |
| Men | 63 (15.8%) | 66 (19.5%) | 38 (25.9%) |
| Unknown | 7 (1.75%) | 5 (3.70%) | 11 (8.15%) |
| College Generation Status | | | |
| First | 36 (9.02%) | 33 (9.76%) | 12 (8.9%) |
| Continuing | 356 (89.2%) | 299 (88.2%) | 111 (82.2%) |
| Unknown | 7 (1.75%) | 6 (1.77%) | 12 (8.89%) |
| Pell Grant Status | | | |
| Yes | 45 (11.3%) | 37 (10.9%) | 20 (14.8%) |
| No | 345 (86.5%) | 294 (86.7%) | 104 (77.0%) |
| Unknown | 9 (2.26%) | 7 (2.06%) | 11 (8.15%) |
| PEER Status | | | |
| Yes | 31 (7.77%) | 39 (11.5%) | 16 (11.9%) |
| No | 358 (89.7%) | 356 (85.5%) | 108 (80.0%) |
| Unknown | 7 (1.75%) | 6 (4.44%) | 11 (8.15%) |

Anatomy and Physiology is an introductory course, taught by two different instructors in two sections, that focuses on concepts related to the structure and function of the human body, including basic Biochemistry and Cell Biology. It is taken primarily by first-year and second-year students who are interested in Life Science majors and/or have prehealth aspirations. Students completed three individual examinations to assess content mastery. Exams in this course, across both sections, contained structured-response (multiple choice, matching, true/false) or short-answer questions. Each exam was worth ∼15–20% of the course grade (Table 3).

Microbiology is an upper-level course taught by a single instructor that provides students with an introduction to Microbiology, with a special emphasis on bacterial structure, function, growth, metabolism, and genetics. This course is primarily taken by third- and fourth-year students who are Life Science or prehealth majors. Like students enrolled in the lower-level course (i.e., Anatomy and Physiology), students met in person for three 50-min class periods per week. Each class period was lecture based, and student-content mastery was assessed through three individual examinations, each worth ∼20% of the course grade. Exams in this course consisted entirely of structured-response questions (i.e., multiple choice; Table 3).

Genetics is also classified as an upper-level course, is taught by a single instructor, and is taken primarily by third- and fourth-year students. This course provides students with an overview of the principles of transmission and cytological, molecular, and population genetics. Students enrolled in this course met in person for three 50-min class periods per week. Each class period was lecture based, and student-content mastery was assessed through four individual exams, each worth ∼20% of the course grade. Exams in this course consisted entirely of short-answer questions (Table 3).

DATA COLLECTION

Survey Development

To investigate study strategies used by students enrolled in different Biology courses, we constructed a survey that was modified from Walck-Shannon et al. (2021). Specifically, in our survey, students were asked “Which of the following did you do to prepare for Exam [X]?” Following this question (where [X] was replaced by the appropriate exam number), students were presented with a list of study strategies. They were prompted to select all the strategies they used to prepare for their exam. The list included six strategies classified as active-study behaviors and four strategies classified as passive-study behaviors. For this study, we characterized study strategies as either active or passive according to the classification in Walck-Shannon et al. (2021). Briefly, we define active strategies as those that prompt students to retrieve information from long-term memory through self-testing or through the generation of a product (i.e., self-quiz, explain concepts, synthesize notes, complete problem sets, complete old tests/quizzes, make diagrams; Table 1). We define passive strategies as those that promote fluency or storage of information in short-term memory (i.e., read notes, rewrite notes, watch lecture videos, read the textbook; Table 1). During the survey, students were not made aware of which strategies were active or passive.

To better understand students’ ability to evaluate the effectiveness of their individual study behaviors, we included two open-ended questions from a previously published metacognitive self-evaluation assignment (Stanton et al., 2015). Specifically, students were asked “Which study habits were effective for you? Why?” and “Which study habits were ineffective for you? Why?”

Survey Administration

During the Fall 2021 semester, instructors encouraged students to take a voluntary online survey via Qualtrics for a small amount of extra credit, which students received for opening the survey. Students were given access to the survey immediately following the completion of each exam, and researchers downloaded the results one week later. Of the 498 Anatomy and Physiology students invited to participate in this study, 87.14% and 80.12% completed the survey and consented to participate following exams one and three, respectively. Of the 474 Microbiology students invited, 87.76% and 71.30% completed the survey and consented to participate following exams one and three, respectively. Of the 168 Genetics students invited, 75.59% and 79.76% completed the survey and consented to participate following exams one and three, respectively.
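As a rough check on scale, the reported response rates can be converted back into approximate respondent counts. The sketch below uses only the enrollment figures and percentages stated above; rounding to the nearest whole student is an assumption, and the function and variable names are illustrative.

```python
# Back-calculate approximate respondent counts from the reported response rates.
# Enrollment figures and percentages come from the text; rounding to the nearest
# whole student is an assumption of this sketch.
ENROLLMENT = {
    "Anatomy and Physiology": 498,
    "Microbiology": 474,
    "Genetics": 168,
}

# (Exam one, Exam three) response rates in percent.
RESPONSE_RATE_PCT = {
    "Anatomy and Physiology": (87.14, 80.12),
    "Microbiology": (87.76, 71.30),
    "Genetics": (75.59, 79.76),
}


def approx_respondents(n_invited: int, pct: float) -> int:
    """Return the nearest whole number of respondents for a response rate."""
    return round(n_invited * pct / 100)


respondents = {
    course: tuple(approx_respondents(ENROLLMENT[course], pct) for pct in pcts)
    for course, pcts in RESPONSE_RATE_PCT.items()
}

for course, (exam_one, exam_three) in respondents.items():
    print(f"{course}: ~{exam_one} (Exam one), ~{exam_three} (Exam three)")
```

Because the published percentages are themselves rounded, these counts are estimates only.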

Data Coding and Analysis

We used preexisting codes derived from the metacognition framework, as previously described, to label the data and to indicate the level of evidence that students provided when evaluating the effectiveness and the ineffectiveness of individual-study strategies (Stanton et al., 2019). Briefly, because lack of written evidence of metacognition does not indicate absence of metacognition, we labeled participants’ answers as providing sufficient evidence, partial evidence, or insufficient evidence of the skill of evaluation. As described previously, these labels comprise a three-level magnitude code where codes indicate the level of content found in the data (Saldaña, 2015; Stanton and Dye, 2019). Additionally, we coded for the evaluation skills separately. Two of the authors (W.G. and Q.J.) conducted initial coding independently and then met to code to consensus, meaning that both coders agreed on code assignments for all responses. Before coding to consensus, percent agreement between the coders was 97.4%.
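Percent agreement between two coders is the share of responses to which both assigned the same code. The following is a minimal sketch of that calculation, assuming one code per response per coder; the example labels are hypothetical and are not study data.

```python
# Minimal sketch of percent agreement between two independent coders.
# The three code labels follow the magnitude code described in the text;
# the example responses below are hypothetical, not study data.
from typing import Sequence


def percent_agreement(codes_a: Sequence[str], codes_b: Sequence[str]) -> float:
    """Percentage of responses on which both coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same set of responses.")
    if not codes_a:
        raise ValueError("At least one coded response is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


coder_1 = ["sufficient", "partial", "insufficient", "sufficient", "partial"]
coder_2 = ["sufficient", "partial", "insufficient", "partial", "partial"]

agreement = percent_agreement(coder_1, coder_2)
print(f"Percent agreement: {agreement:.1f}%")
```

Percent agreement does not correct for chance agreement; it is shown here only because it is the statistic reported above.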

Exam Coding

Exams given in these courses contained structured-response (e.g., multiple choice, true/false, matching) or free-response (e.g., short answer) questions (Table 3). These exams were given in person or online and contained a mixture of lower-order cognitive level (i.e., recall and comprehension) and higher-order cognitive level (i.e., application, analysis, synthesis, or evaluation) questions (Table 3). To determine the cognitive level of exam questions, two independent coders (W.G. and Q.J.) qualitatively coded individual items on each assessment using the Blooming Biology rubric described by Crowe et al. (2008). Briefly, each coder independently assigned a Bloom’s Taxonomy level to each exam question according to the rubric, and the coders then met to code to consensus. This revealed that the exam items in both the lower-level and upper-level courses were majority Bloom’s levels one and two (>50%; Table 3).
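
The "majority Bloom’s levels one and two" criterion can be sketched as a simple proportion. In this hypothetical Python example, each exam item carries a consensus Bloom’s level from one to six, and the function reports the share of items at levels one and two. The item levels are invented for illustration and the function name is an assumption, not part of the study's materials.

```python
from collections import Counter


def lower_order_share(levels):
    """Fraction of exam items coded at Bloom's level one or two."""
    if not levels:
        raise ValueError("At least one coded item is required.")
    counts = Counter(levels)
    return (counts[1] + counts[2]) / len(levels)


# Hypothetical consensus codes for an eight-item exam (not study data).
item_levels = [1, 1, 2, 2, 2, 3, 4, 1]

share = lower_order_share(item_levels)
is_majority_lower_order = share > 0.5
print(share, is_majority_lower_order)
```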

Calculated Indices

Number of Active and Passive Behaviors Used.

To determine the number of active- and passive-study behaviors used, we used the classification previously described by Walck-Shannon et al. (2021). Briefly, to define which study behaviors were effective (active) and ineffective (passive), Walck-Shannon and colleagues reviewed the literature about study behaviors, categorized each behavior independently, and then met to agree upon each categorization. Using this categorization, we summed the number of active behaviors and passive behaviors that each student reported to yield the number of active behaviors variable and the number of passive behaviors variable, respectively.
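
The summation described above can be sketched in a few lines of Python. The behavior labels follow the "Behavior Type" column of Tables 6 and 7; treating the "Mixed" behavior (attending a review session) as counting toward neither index is an assumption made here because it matches the ceilings of six active and four passive behaviors. The function name and the example student are hypothetical.

```python
# Classification taken from the "Behavior Type" column of Tables 6 and 7.
ACTIVE = {
    "Self-quiz",
    "Explained concepts",
    "Synthesized notes",
    "Completed problem sets",
    "Completed old exams",
    "Made diagrams",
}
PASSIVE = {"Read notes", "Rewrote notes", "Watched lecture", "Read textbook"}
# Assumption: "Attended review session" (Mixed) is counted in neither index.


def count_behaviors(reported):
    """Return (active_count, passive_count) for one student's reported behaviors."""
    reported = set(reported)
    return len(reported & ACTIVE), len(reported & PASSIVE)


# Hypothetical survey response for one student (not study data).
student = ["Read notes", "Self-quiz", "Made diagrams", "Attended review session"]
active_count, passive_count = count_behaviors(student)
print(active_count, passive_count)
```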

Linear Mixed-Effect Model Analysis.

We used the nlme package (Pinheiro et al., 2022) in R Studio (R version 4.0.3; R Core Team, 2020) to create linear mixed-effects models, examining the following three questions: 1) Are there differences in the use of study behaviors between students in different Biology courses? 2) How do student–study behaviors change over the course of the semester (i.e., Exam one and Exam three)? 3) What is the effect of each study behavior on exam performance? We sampled individual students twice over the study period, so we used a repeated measures design to account for repeated sampling from a single student by including Student ID, nested under instructor, as a random effect in all our models (Table 5).

TABLE 5.

Final models to address each research question

| Research Question | Active Model | Passive Model |
|---|---|---|
| RQ1: Are there differences in the use of study behaviors between students in different Biology courses? | glmer(Active ~ Course + (1 \| instructoranon/Student), family = poisson) | glmer(Passive ~ Course + (1 \| instructoranon/Student), family = poisson) |
| RQ2: How do student–study behaviors change over the course of the semester? | glmer(Active ~ Exam # + Course + (1 \| instructoranon/Student), family = poisson) | glmer(Passive ~ Exam # + Course + (1 \| instructoranon/Student), family = poisson) |
| RQ3: What is the effect of each study behavior on student performance? | lme(Score ~ Active + Course, random = ~1 \| instructoranon/Student) | lme(Score ~ Passive + Course, random = ~1 \| instructoranon/Student) |

To answer our first question, we created one model for each type of study behavior (i.e., active and passive), with the count of behaviors as the dependent variable. The independent variable in each model was Course, to test for differences in the use of study behaviors between courses. Active- and passive-study behaviors were counts with ceilings of six and four, respectively, so we used generalized linear mixed-effects models with a Poisson distribution.
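
Because Poisson models are conventionally fit with a log link, their coefficients are on the log scale, and exponentiating a coefficient yields a multiplicative rate ratio (e.g., "1.16 times as many" behaviors). The Python sketch below shows that conversion with a Wald-type 95% confidence interval. It assumes the default log link and a normal approximation, and the coefficient and standard error are hypothetical values rather than estimates from this study.

```python
import math


def rate_ratio(beta, se, z=1.96):
    """Convert a log-scale Poisson coefficient to (ratio, lower, upper).

    beta: coefficient on the log scale; se: its standard error.
    The interval is a Wald interval, exp(beta +/- z * se).
    """
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


# Hypothetical coefficient and standard error (not estimates from this study).
ratio, lower, upper = rate_ratio(beta=0.15, se=0.03)
print(f"{ratio:.2f} ({lower:.2f}-{upper:.2f}; 95% C.I.)")
```

A ratio above one indicates more behaviors than the reference course, a ratio below one indicates fewer, and an interval that includes one corresponds to a non-significant difference on the ratio scale.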

To answer our second question, we again created one model for each type of study behavior, with the count of behaviors as the dependent variable. However, the independent variable was exam number (i.e., exam one or exam three), to test for differences in the use of study behaviors over the course of the semester. Additionally, we added Course as an independent variable, as well as the interaction between Course and Exam. If there was no significant interaction, then we removed the interaction of Course and Exam from the model. However, if there was a significant interaction between Course and Exam, then we analyzed the subsets of Course independently. Again, active- and passive-study behaviors were counts with ceilings of six and four, respectively, so we used generalized linear mixed-effects models with a Poisson distribution.

To answer our third question, we again created a model for each of the study behaviors, but this time the study behaviors were the independent variables and exam score was the dependent variable (Table 5). Additionally, we added Course as an independent variable as well as the interaction between the study behavior (i.e., active count or passive count) and Course. If there was no significant interaction, then we removed the interaction of Course and the study behavior from the model. However, if there was a significant interaction between Course and the study behavior, then we analyzed the subsets of Course independently. All models were linear mixed-effects models.

We based statistical significance on p < 0.05 and confidence intervals that exclude zero. For these analyses, we converted exam scores to z-scores for all students by instructor, so we could account for differences in grading structure and summative assessments. However, for ease of interpretation, we used raw exam scores to present our results. To visualize differences in the use of study behaviors or performance on exams between students in the three courses, we used the R package ggplot2 (Wickham, 2016). All RStudio code and data are available here: https://github.com/EmilyDriessen/A-Comparison-of-Study-Behaviors-and-Metacognitive-Evaluation-Used-by-Biology-Students-.git.
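
Standardizing exam scores within instructor can be sketched as follows. This Python example groups raw scores by instructor and subtracts each group's mean before dividing by its standard deviation. The use of the sample standard deviation, the record layout, and all values are assumptions for illustration; the study's own standardization was done in R and may differ in detail.

```python
from collections import defaultdict
from statistics import mean, stdev


def z_scores_by_instructor(records):
    """Standardize raw scores within each instructor.

    records: list of (student_id, instructor, raw_score) tuples.
    Returns a dict mapping student_id to its within-instructor z-score.
    Assumes each instructor has at least two distinct scores.
    """
    by_instructor = defaultdict(list)
    for _, instructor, score in records:
        by_instructor[instructor].append(score)
    stats = {k: (mean(v), stdev(v)) for k, v in by_instructor.items()}
    return {
        sid: (score - stats[inst][0]) / stats[inst][1]
        for sid, inst, score in records
    }


# Hypothetical records (not study data): two instructors, different grading scales.
records = [
    ("s1", "A", 70), ("s2", "A", 80), ("s3", "A", 90),
    ("s4", "B", 50), ("s5", "B", 60), ("s6", "B", 70),
]
z = z_scores_by_instructor(records)
print(z)
```

In this example, a raw score of 70 is one standard deviation below the mean for instructor A but one standard deviation above the mean for instructor B, which is the kind of grading difference that standardization is meant to absorb.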

RESULTS

RQ1: Are there differences in the use of study behaviors between students in different Biology courses?

Across courses, for each exam, students used a mix of active- and passive-study behaviors (see Table 6 for Exam one study behavior descriptive statistics; see Table 7 for Exam three study behavior descriptive statistics). Specifically, most students reported reading notes (88.94–93.75% of respondents across courses). The next most frequently reported strategies were active, including self-quizzing, explaining concepts, and synthesizing notes. Each of these strategies was used by most students across all three courses (53.22–82.21%) for both Exam one and Exam three. While students enrolled in Anatomy & Physiology and Microbiology reported attending review sessions to prepare for Exam one, few Genetics students attended review sessions. Furthermore, Anatomy & Physiology students and Microbiology students reported that they used old exams to prepare for Exams one and three, while Genetics students did not. Less frequently reported behaviors included rewriting notes, watching lectures, making diagrams, and reading the textbook.

TABLE 6.

Study behaviors used according to exam type for students enrolled in different Biology courses, listed in order of prevalence of use for Exam one

| Behavior Name | Behavior Type | Anatomy & Physiology (N) | Anatomy & Physiology (%) | Microbiology (N) | Microbiology (%) | Genetics (N) | Genetics (%) |
|---|---|---|---|---|---|---|---|
| Read notes | Passive | 386 | 88.94 | 390 | 93.75 | 113 | 88.97 |
| Self-Quiz | Active | 331 | 76.26 | 342 | 82.21 | 91 | 71.65 |
| Explained concepts | Active | 265 | 61.05 | 255 | 61.29 | 76 | 59.84 |
| Synthesized notes | Active | 231 | 53.22 | 243 | 58.41 | 82 | 60.74 |
| Attended-review session | Mixed | 215 | 49.53 | 266 | 63.94 | 16 | 11.85 |
| Completed-problem sets | Active | 195 | 44.93 | 182 | 43.75 | 40 | 29.62 |
| Completed old exams | Active | 191 | 44.00 | 213 | 51.20 | 10 | 7.40 |
| Rewrote notes | Passive | 169 | 38.94 | 141 | 33.89 | 42 | 31.11 |
| Watched lecture | Passive | 148 | 34.10 | 132 | 31.73 | 42 | 31.11 |
| Made diagrams | Active | 78 | 17.97 | 118 | 28.36 | 35 | 25.92 |
| Read textbook | Passive | 57 | 13.13 | 129 | 31.00 | 17 | 12.59 |

TABLE 7.

Study behaviors used according to exam type for students enrolled in different Biology courses, listed in order of prevalence of use for Exam three

| Behavior Name | Behavior Type | Anatomy & Physiology (N) | Anatomy & Physiology (%) | Microbiology (N) | Microbiology (%) | Genetics (N) | Genetics (%) |
|---|---|---|---|---|---|---|---|
| Read notes | Passive | 364 | 91.22 | 314 | 92.89 | 125 | 92.59 |
| Self-Quiz | Active | 286 | 71.67 | 248 | 73.37 | 91 | 67.40 |
| Explained concepts | Active | 240 | 60.15 | 156 | 46.15 | 86 | 63.70 |
| Synthesized notes | Active | 229 | 57.39 | 188 | 55.62 | 82 | 60.74 |
| Attended-review session | Mixed | 121 | 30.32 | 229 | 67.75 | 16 | 11.85 |
| Completed-problem sets | Active | 138 | 34.58 | 120 | 35.50 | 40 | 29.62 |
| Completed old exams | Active | 199 | 49.87 | 280 | 82.84 | 10 | 7.40 |
| Rewrote notes | Passive | 160 | 40.10 | 102 | 30.17 | 42 | 31.11 |
| Watched lecture | Passive | 163 | 40.85 | 149 | 44.08 | 42 | 31.11 |
| Made diagrams | Active | 96 | 24.06 | 106 | 31.36 | 35 | 25.92 |
| Read textbook | Passive | 22 | 5.51 | 67 | 19.82 | 17 | 12.59 |

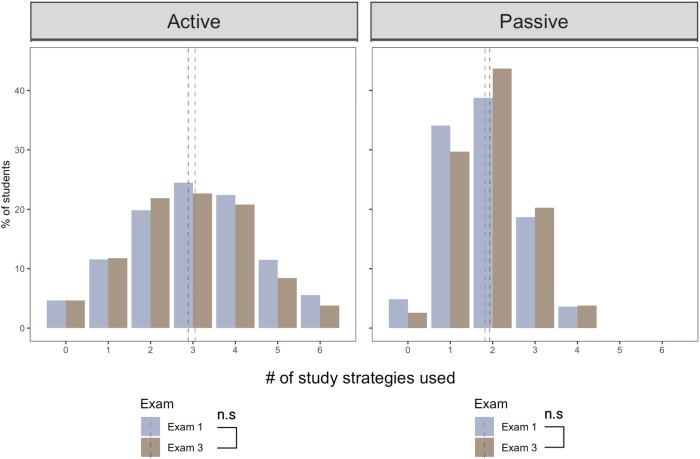

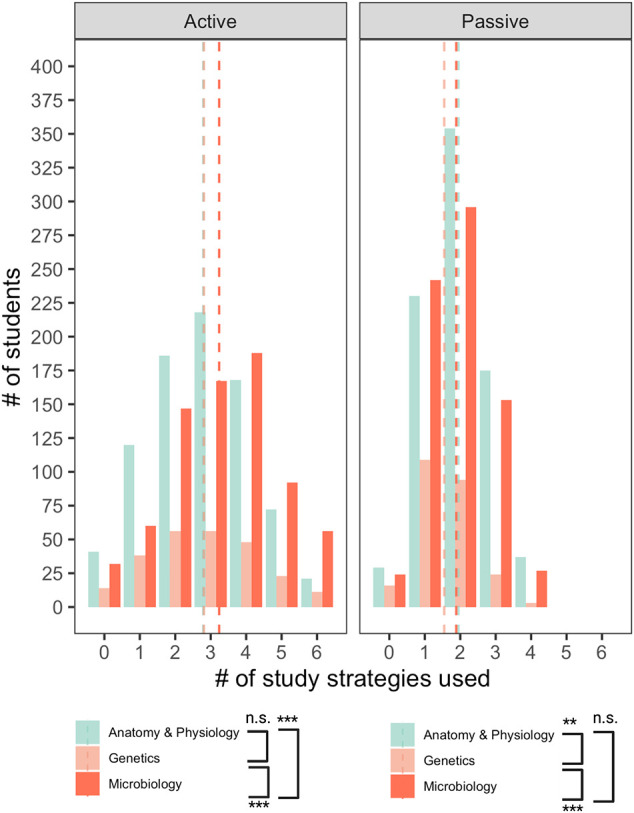

Our linear mixed-effects model analyses revealed significant differences in active- and passive-study behavior use between courses (Figure 1). Specifically, for active-study strategies, students in Microbiology used 1.16 (1.09–1.24; 95% C.I.) times as many active-study strategies as students in Anatomy and Physiology (p < 0.0001). Students in Genetics used 1.0070 (0.92–1.096; 95% C.I.) times as many active-study strategies as students in Anatomy and Physiology, which was not statistically significant (p = 0.87), and 0.87 (0.80–0.95; 95% C.I.) times as many active-study strategies as students in Microbiology (p < 0.001). For passive-study strategies, students in Microbiology used 0.97 (0.79–1.19; 95% C.I.) times as many passive-study strategies as students in Anatomy and Physiology, which was not statistically significant (p = 0.70). Students in Genetics used 0.80 (0.64–0.99; 95% C.I.) times as many passive-study strategies as students in Anatomy and Physiology (p = 0.01) and 0.82 (0.64–0.95; 95% C.I.) times as many passive-study strategies as students in Microbiology (p < 0.001).

FIGURE 1.

To answer the question, “Are there differences in the use of study behaviors between students in different Biology courses?”, we compared the count of active- and passive-study habits used by students in three different classes: 1) Anatomy and Physiology, 2) Genetics, and 3) Microbiology. *** represents statistical significance where p ≤ 0.001. ** represents statistical significance where p ≤ 0.01; ns indicates no statistical significance where p > 0.05.

RQ2: How do student–study behaviors change over the course of the semester (i.e., Exam one and Exam three)?

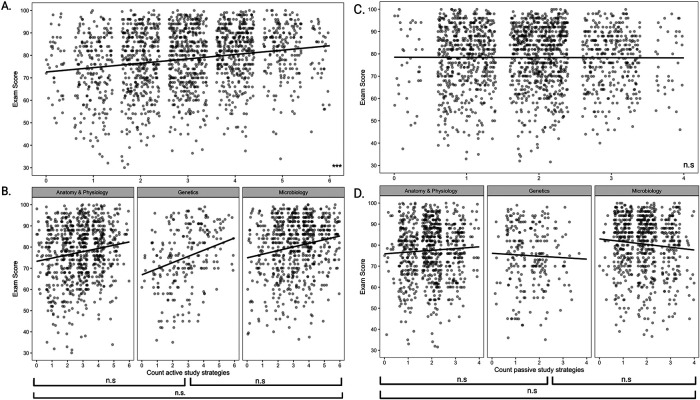

We found that students used 0.95 (0.90–1.00; 95% C.I.) times as many active-study strategies in preparation for Exam three as for Exam one; however, this difference was not statistically significant (p = 0.063). According to this model, students in Microbiology used 1.16 (1.09–1.24; 95% C.I.) times as many active-study strategies as Anatomy and Physiology students (p < 0.0001); students in Genetics used 1.01 (0.92–1.10; 95% C.I.) times as many active-study strategies as Anatomy and Physiology students, which was not statistically significant (p = 0.86), and 0.87 (0.80–0.95; 95% C.I.) times as many active-study strategies as students in Microbiology (p < 0.01).

We also found that students used 1.06 (0.99–1.13; 95% C.I.) times as many passive-study strategies in preparation for Exam three as for Exam one; however, this difference was not statistically significant (p = 0.088). According to this model, students in Microbiology used 0.97 (0.79–1.20; 95% C.I.) times as many passive-study strategies as the Anatomy and Physiology students, but this was not statistically significant (p = 0.72); students in Genetics used 0.80 (0.64–0.99; 95% C.I.) times as many passive-study strategies as the Anatomy and Physiology students (p = 0.011) and 0.82 (0.64–1.045; 95% C.I.) times as many passive-study strategies as students in Microbiology (p < 0.05).

Our linear mixed-effects model analyses revealed that for all students, regardless of course, there were no significant changes in the use of active- or passive strategies between exam one and exam three (Figure 2). Separately, these analyses further supported the significant differences in use of active- or passive strategies by course revealed by the analyses for RQ1.

FIGURE 2.

To answer the question, “How do student–study behaviors change over the course of the semester (i.e., Exam one and Exam three)?”, we compared the count of active- and passive-study behaviors used by students in three different classes (i.e., Anatomy and Physiology, Genetics, and Microbiology) over time (i.e., those used for exam one vs. exam three). ns indicates no statistical significance (i.e., p > 0.05).

RQ3: What effect does each study behavior have on exam performance?

Our linear mixed-effects model analyses revealed a significant effect of active-study strategies on exam score (Figure 3A and B). Specifically, for each one-count increase in active-study strategy use, students performed 1.92 percentage points better on their exam across courses (p < 0.0001). There were no differences in student performance between courses. According to this model, in which ‘Course’ was a fixed effect, students in the Microbiology class performed 2.16% (–8.46–12.78; 95% C.I.) higher on their exams than students in the Anatomy and Physiology class, but this difference was not statistically significant (p = 0.23). Students in the Genetics class performed 2.91% lower on their exams than students in the Anatomy and Physiology class (p = 0.24) and 5.075% (–20.08–9.93; 95% C.I.) lower than students in Microbiology (p = 0.15); neither difference was statistically significant.

FIGURE 3.

To answer the question, “What is the effect of each study behavior on student performance?”, we correlated the study behaviors of students (i.e., those used for Exam one and Exam three) with performance. A) Count of active-study strategies used by students across all classes compared with student-exam score. B) Count of active-study strategies used by students in each course (i.e., Anatomy and Physiology, Genetics, and Microbiology) compared with student-exam score. C) Count of passive-study strategies used by students across all classes compared with student-exam score. D) Count of passive-study strategies used by students in each course (i.e., Anatomy and Physiology, Genetics, and Microbiology) compared with student-exam score. *** represents statistical significance where p ≤ 0.001; ns indicates no statistical significance where p > 0.05.

On the other hand, our linear mixed-effects model analyses revealed no significant effect of passive-study strategies on exam score (Figure 3C and D). For each one-count increase in passive-study strategy use, students performed 0.27 percentage points (±0.71; 95% C.I.) worse on their exam, but this effect was not statistically significant (p = 0.45). There were no differences in student performance between courses. According to this model, in which ‘Course’ was a fixed effect, students in the Microbiology class performed 3.04% (±10.77; 95% C.I.) higher on their exams than students in the Anatomy and Physiology class, but this difference was not statistically significant (p = 0.17). Students in the Genetics class performed 2.97% (±9.36; 95% C.I.) lower on their exams than students in the Anatomy and Physiology class (p = 0.25) and 6.01% (–21.37–9.35; 95% C.I.) lower than students in Microbiology (p = 0.13); neither difference was statistically significant.

RQ4: How do students in different courses compare in their use of the metacognitive skill of evaluation?

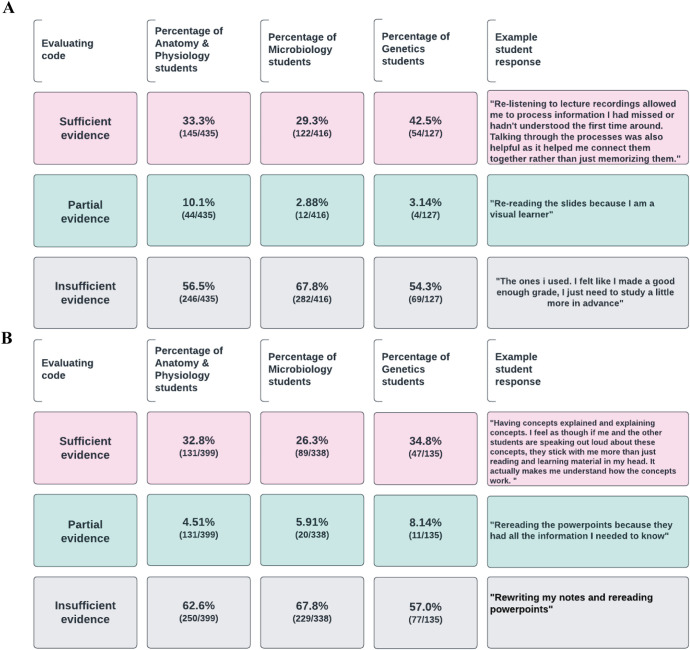

To determine why we observed significant differences in the use of study behaviors between students enrolled in different Biology courses, we investigated their ability to evaluate their study behaviors. For exam one, students enrolled in Anatomy & Physiology, Microbiology, and Genetics demonstrated similar ability to evaluate their study behaviors (Tables 8 and 9). Specifically, 33.3% of Anatomy & Physiology students, 29.3% of Microbiology students, and 42.5% of Genetics students provided sufficient evidence (i.e., students identify an effective-study behavior and explain why that behavior was effective) of their ability to evaluate which study behaviors were effective, while most students across all courses provided insufficient evidence (i.e., students identify an effective-study behavior but do not explain why it was effective; Table 8). For exam three, 32.8% of Anatomy & Physiology students, 26.3% of Microbiology students, and 35.1% of Genetics students provided sufficient evidence of their ability to evaluate which study behaviors were effective, while most students provided insufficient evidence.

TABLE 8.

Evaluating individual study behaviors: Which study behaviors were effective for you? Following exam one (A) and exam three (B), students were asked “Which study strategy was effective for you? Why?” Student responses were coded as providing sufficient, partial, or insufficient evidence of metacognitive evaluation using content analysis.

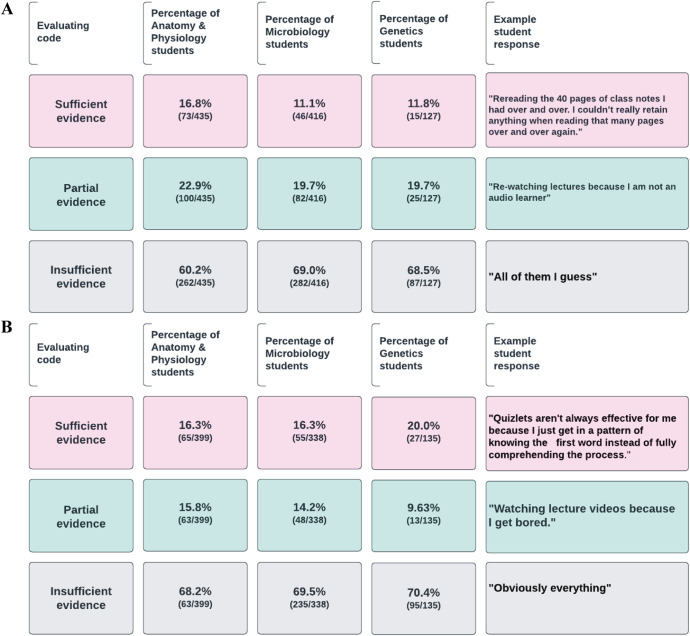

TABLE 9.

Evaluating individual study behaviors: Which study behaviors were ineffective for you? Following exam one (A) and exam three (B), students were asked “Which study strategy was ineffective for you? Why?” Student responses were coded as providing sufficient, partial, or insufficient evidence of metacognitive evaluation using content analysis.

Similarly, following exam one, 16.8% of Anatomy & Physiology students, 11.05% of Microbiology students, and 11.8% of Genetics students provided sufficient evidence (i.e., students identify an ineffective-study behavior and explain why that behavior was ineffective) of their ability to evaluate which behaviors were ineffective, while most students provided insufficient evidence (i.e., students identify an ineffective-study behavior but do not explain why it was ineffective; Table 9). For exam three, 16.3% of Anatomy & Physiology students, 16.3% of Microbiology students, and 20.0% of Genetics students provided sufficient evidence of their ability to evaluate which study behaviors were ineffective.

DISCUSSION

We found that students across several large Biology courses used variable counts of study behaviors to prepare for assessments over a semester. This strategy use did not change over time (i.e., for Exam one vs. Exam three). While the use of active-, effective-study behaviors resulted in improved exam performance, the use of passive-, ineffective-study behaviors had no significant impact on exam performance. Our qualitative analysis revealed that students demonstrated similar ability to evaluate the behaviors that were effective and those that were ineffective. In this section, we connect our main takeaways before explaining why students continue to use ineffective-study behaviors as they progress through Biology coursework. We conclude with recommendations for instructors to help students adopt better study behaviors.

Metacognition and study behaviors were similar across Biology courses

As students progress through undergraduate coursework, previous research showed instructors expect that students will use metacognitive awareness to select effective-study behaviors to learn course content (Persky and Robinson, 2017). Previous literature, along with the current study, has demonstrated that undergraduate students enrolled in various Biology courses report using ineffective-study behaviors (e.g., rereading notes; Hora and Oleson, 2017; Sebesta and Speth, 2017; Ziegler and Montplaisir, 2014; Walck-Shannon et al., 2021). Furthermore, students demonstrate difficulty with metacognitive-regulation skill of evaluation when prompting is not provided by an instructor (Stanton et al., 2015). While some students can automatically develop the metacognitive skills necessary for academic success through experience, the development of metacognition and use of effective-study behaviors do not always naturally improve as students advance in their coursework (Curley et al., 1987; Justice and Dornan, 2001; Pintrich, 2002; Veenman et al., 2004; Cao and Nietfeld, 2007; Cazan, 2022). Instead, the development of metacognition is influenced by course structure and instructor support (Curley et al., 1987; Justice and Dornan, 2001; Pintrich, 2002; Veenman et al., 2004; Cao and Nietfeld, 2007; Dye and Stanton, 2017).

Consistent with this, our results showed that students enrolled in different Biology classes used a mix of active- and passive-study behaviors and used the same behaviors throughout the semester. Additionally, we found that students show similar skill in their ability to evaluate the effectiveness or ineffectiveness of their study behaviors. Specifically, students could identify which behaviors did or did not work for them but had difficulty explaining why those behaviors were effective or ineffective. Taken together, these data suggest that students use a mix of study behaviors to prepare for exams but do so without considering what behaviors promote long-term learning and why.

Course demands drove study behaviors, but exam expectations did not

We explain our findings in the context of students’ perceptions of course demands and exam expectations (i.e., excessive content, multiple choice, low Bloom’s taxonomy) because previous work has demonstrated that students’ approaches to studying are influenced by these factors (Eley, 1992; Kember et al., 2008; Momsen et al., 2010). When students perceive that a course has a heavy workload and an excessive amount of content to learn, they are more likely to use ineffective-study behaviors, such as rereading the textbook, to prepare for exams (Cao and Nietfeld, 2007; Kember et al., 2008). In our study, across all classes, students reported challenges with the amount of content presented on each exam and a reliance on passive-study behaviors, as the following student statements illustrate:

“There is really no way to effectively study. There were over 10,000 words in my notes and my [a website that provides learning tools for students called] quizlets combined were over 1000 terms. The exam tested on maybe 1/10 of that…”

“Repetition is the only way to memorize the excess of information in this class.”

Literature shows that students select study behaviors that are consistent with the expected format of the exam (Feldt and Ray, 1989; Entwistle and Entwistle, 2003; Kember et al., 2008; Momsen et al., 2010; Abd-El-Fattah, 2011). Because courses are large, Biology instructors commonly use multiple-choice assessments, which are easier to grade and enable fast return of grades (Simkin and Kuechler, 2005). However, based on past experiences and success with using memorization to prepare for multiple-choice exams (e.g., Scholastic Achievement Test, advanced placement tests), students have learned to associate multiple-choice exams with memorization (Scouller, 1998; Zheng et al., 2008; Stanger-Hall, 2012). As a result, when students expect multiple-choice questions on exams, they select study behaviors that emphasize rote memorization (Feldt and Ray, 1989; Scouller, 1998; Martinez, 1999; Watters and Watters, 2007; Kember et al., 2008; Abd-El-Fattah, 2011; Stanger-Hall, 2012; Santangelo, 2021).

The cognitive level of exam questions can also impact study behaviors. If students are presented with exams that contain low-level Bloom’s questions that emphasize memorization of facts, students will tailor their study behaviors to focus on memorizing definitions (Jensen et al., 2014). Students perceive their Biology exams will contain questions that target the lower-cognitive levels of Bloom’s taxonomy (Bloom’s levels one and two; Cuseo, 2007; Momsen et al., 2010). These questions focus primarily on knowledge and comprehension rather than the higher-cognitive levels of application, analysis, and evaluation. Based on this previous research, we expected that students would tailor their study behaviors to the expected exam format and/or the anticipated-cognitive demands of the exam. Specifically, we anticipated that students taking exams with higher level Bloom’s questions or short answer exams would use more active-study behaviors to prepare for exams. After determining the format of each exam and using Bloom’s taxonomy tool (Crowe et al., 2008) to assign cognitive-learning levels to individual items on each assessment, we found that each course differed in exam format and that across all courses the exam items were majority Bloom’s levels one and two (>50%; Table 3). Interestingly, we found that compared with students enrolled in courses with short-answer exams (Anatomy and Physiology and Genetics) or exams with more high-level Bloom’s questions (Genetics), students enrolled in a course that had predominately multiple-choice exams comprised of mostly low-level Bloom’s questions (Microbiology) used more active-study behaviors to prepare for their exams. Furthermore, our data indicates that, despite impact on exam grade, students are using certain behaviors without understanding why they are effective or ineffective. 
Taken together, our data suggest that students consider factors other than exam format or anticipated cognitive demand when selecting study behaviors. Future work will examine how student motivation and perceptions of course demands influence their study behaviors.

Continued use of passive-study behaviors had no significant impact on exam performance

Previous research has demonstrated that use of passive-study behaviors to prepare for exams had no significant impact on or negatively impacted the exam performance of students enrolled in an Introductory Biology class (Walck-Shannon et al., 2021). Similarly, we found that increased use of passive-study behaviors had no significant impact on exam performance for students enrolled in three different Biology courses. Additionally, we found that increased use of active behaviors positively impacted exam performance, also reflecting the results of Walck-Shannon and colleagues (2021). In one scenario, students might use different types of only passive-study habits or only active-study habits. The ‘passive’ studiers who increase the use of those behaviors do not experience predictable gains or losses in their exam outcomes. However, the ‘active’ studiers who increase the use of those behaviors enjoy gains in performance. The more likely explanation, which reflects our observation that students continuously use a mix of passive and active behaviors to prepare for exams, is that students use passive behaviors and active behaviors in tandem while preparing for exams. For example, rereading text has been identified as a passive behavior that can be turned into an active behavior that results in long-term learning when paired with retrieval practice (e.g., self-testing; Miyatsu et al., 2018). Similarly, rewriting notes can be turned into an active behavior when paired with summarization or paraphrasing (Miyatsu et al., 2018). Future work will examine how students are implementing different study behaviors. Specifically, future work will investigate whether students are pairing passive-study behaviors with active-study behaviors.

The likely mixing of passive- and active-study behaviors may also explain why students are unable to explain why certain behaviors are effective or ineffective. Specifically, because active-behavior use is beneficial for exam performance and passive-behavior use has no impact, students cannot pinpoint which behaviors are truly effective. Taken together, our study supports previous research that demonstrates the effectiveness of active behaviors use to prepare for exams and provides a potential explanation for why students continue to use ineffective behaviors to prepare for exams.

Implications for Instructors

We propose several evidence-based strategies for instructors to use in combination to design their courses to foster development of effective-study behaviors.

‘Nudging’ students toward metacognitive skills and study behaviors.

Metacognitive regulation encompasses student ability to select appropriate strategies to meet a learning goal, monitor how well those strategies work, evaluate their strategies, and adjust future plans as needed (Dye and Stanton, 2017). Stanton et al. (2019) found students enrolled in various Biology classes displayed similar abilities to evaluate the effectiveness of individual-study behaviors. They showed how students had difficulty with evaluating which study behaviors are ineffective (Stanton et al., 2019). Across all courses, students can be ‘nudged’ to develop metacognitive-regulation skills and effective-study behaviors through metacognition interventions that are administered during class time (Stanton et al., 2015; Dye and Stanton, 2017; Cazan, 2022). For example, previous research has demonstrated that when students are implicitly taught metacognitive techniques (i.e., incorporating learning journals, error analysis tasks, concept maps, and peer-assessment tasks as course assignments), students display improved awareness of successful learning strategies (Cazan, 2022). Similarly, the inclusion of curricular activities that promote the development of metacognitive skills in a General Chemistry course and an Introductory Biology course resulted in improved self-evaluation skills and students reporting a shift from using study behaviors that emphasize rote memorization to those that promote conceptual understanding (Mynlieff et al., 2014; Sabel et al., 2017; Dang et al., 2018; Muteti et al., 2021; Santangelo et al., 2021). These interventions allow students to develop study behaviors that work best for their specific context (Pintrich, 2002; Cazan, 2020). Together, these data suggest that interventions aimed at developing metacognitive regulation skills can promote the development of effective-study behaviors in students.

Incorporate high-level Bloom’s questions on quizzes and exams.

The test expectancy effect suggests that students will adjust their study behaviors to match the anticipated demands of an exam (Thiede et al., 2011; Jensen et al., 2014). Our data suggest that students across different Biology courses are not selecting their study behaviors based on their exam expectations. However, higher-order questions lead to better learning. For example, Jensen and colleagues (2014) demonstrated that when Biology students were presented with multiple-choice quizzes and tests containing higher-order thinking questions throughout the semester, students demonstrated improved conceptual understanding and higher final-exam scores. These outcomes were attributed to student use of study approaches that promote understanding rather than memorization. Furthermore, the incorporation of high-level Bloom’s questions on formative assessments provides students with low-stakes opportunities to gauge what they know and what they do not know and to adjust their study behaviors accordingly (Na et al., 2021). Thus, gradually incorporating higher-order questions on assessments and nudging students toward effective-study behaviors will benefit student learning.

Limitations

We acknowledge that there are several limitations that can impact the interpretation of this study. In the current study, we collected data over the course of a single semester in two sections of a lower-level course and three sections of two upper-level courses at a research-intensive, public university located in the southeastern United States. The development of metacognition and study behaviors is dependent on the course context and instructional support (Curley et al., 1987; Justice and Dornan, 2001; Pintrich, 2002; Veenman et al., 2004; Cao and Nietfeld, 2007; Dye and Stanton, 2017). Thus, students who took different Biology courses, had different instructors, or were enrolled at a different institution may use different study behaviors and/or display different abilities in metacognitive regulation. For example, Justice and Dornan (2001) found that nontraditional age (i.e., 24–64 years) college students display developmental increases in metacognition and tend to use active-study behaviors that promote comprehension of course material rather than memorization. This suggests that students at colleges with a primarily nontraditional student population (e.g., community colleges) may use different study behaviors than those presented in the current study. Similarly, previous literature indicates that the structure of large-enrollment classes results in instructors adopting an instructor-centered approach to teaching that promotes passive learning (Eley, 1992; Cuseo, 2007; Kember et al., 2008; Hobbins et al., 2020). This suggests that students who are not enrolled in large-enrollment Biology classes may be taught by instructors who are able to employ a student-centered approach to teaching and may develop effective-study behaviors as a result. Taken together, our findings may not be applicable to all student populations, and future research should include a wider range of colleges and universities (Thompson et al., 2020).

Another caveat is that our data about study behaviors are self-reported. Thus, we cannot exclude the possibility that students entered responses they believed were desirable (social desirability bias; Gonyea, 2005). However, surveys generally have a small social desirability bias effect, and there is no strong evidence that students align their responses to what are considered to be effective-study behaviors (Gonyea, 2005; Walck-Shannon et al., 2021). For example, most students across all levels reported rereading notes as a study behavior even though it is an ineffective-study behavior. Nevertheless, we attempted to limit social desirability bias by having students submit their surveys directly to us as researchers instead of to their instructors. Additionally, all students were awarded extra credit for their participation in the study, regardless of their responses. Another limitation of self-reporting is that the survey asked students to recall their study behaviors in the time period leading up to the exam. Over time, students may take longer to remember how they studied or may forget how they studied altogether (Walentynowicz et al., 2018). We attempted to limit this confound by using the same well-defined time frames for each survey administration (Walentynowicz et al., 2018). Specifically, we opened the survey to students immediately following each exam and made it available for one week.

Self-reporting also prevented us from assessing how students implemented study behaviors. For example, two students may have categorically used flash cards, but they could have each used them in different ways (e.g., with a partner or by themselves). Alternatively, students may have indicated they used an active-study behavior but used that behavior in a passive manner. For example, studies have documented that methods of self-testing, such as using flash cards or completing old exams, are effective, active-study behaviors that help students to identify gaps in their knowledge. However, some students passively read through flash cards or old exam keys rather than using these strategies actively (Hartwig and Dunlosky, 2012; Bjork et al., 2013). The way in which we collected data did not allow us to capture inconsistent implementation of study behaviors. Future work will critically examine how students use different categories of study behaviors (and whether this changes over time).

Finally, while this study focuses on metacognition and the development of study behaviors, it is important to highlight that the influence of motivation cannot be separated out in our data. As stated previously, self-regulated learners are defined as learners who actively participate in their learning via metacognition, motivation, and behavior (Credé and Phillips, 2011). All three aspects impact academic performance, with metacognition and motivation serving as mediators that influence the use of specific learning behaviors (Duncan and McKeachie, 2005; Credé and Phillips, 2011). Motivation refers to any process that influences learning behavior (Palmer, 2005). Additionally, motivation is dynamic, influenced by context, and can vary across tasks (Duncan and McKeachie, 2005; Credé and Phillips, 2011; Gibbens, 2019). For example, student motivation can vary across courses (e.g., more interest in a major course vs. an elective) or across different tasks for the same class (e.g., studying for a multiple-choice exam vs. writing a paper; Duncan and McKeachie, 2005; Credé and Phillips, 2011). As a result, students may select varying study behaviors to prepare for exams based on their motivations related to the course or to assignments within the course. To date, studies have examined the motivation of Biology students and linked it to student achievement (Lin et al., 2003; Glynn et al., 2011; Partin et al., 2011; Hollowell et al., 2013; Gibbens, 2019). To our knowledge, there are no studies that investigate how motivation impacts the selection of specific study behaviors by students enrolled in undergraduate Biology classes. Therefore, future studies should investigate how course level and assessment type impact the motivation of undergraduate Biology students and the study behaviors they use to prepare for exams.

CONCLUSION

We demonstrate that while the number of study behaviors used by students varies depending on the course, students use similar study behaviors to prepare for exams, use those behaviors throughout the semester, and use similar metacognitive skills of evaluation. Taken together, this suggests that instructors must be cognizant of how students prepare for course assessments, regardless of course. Furthermore, by including in-class activities that foster the development of metacognition and restructuring assessments to include high-level Bloom’s questions, instructors can take an active role in helping all students develop effective-study behaviors that will serve them well through all stages of their educational journey.

Acknowledgments

We thank the Ballen Lab at Auburn University for their feedback on this manuscript and their support. We also thank Elise Walck-Shannon and Julie Stanton for their valuable feedback regarding study behaviors and metacognition. A Research Coordination Network grant from the National Science Foundation (DBI-1919462) supported the undergraduates who are co-authors on this paper.

REFERENCES

- Abd-El-Fattah, S. M. (2011). The effect of test expectations on study strategies and test performance: A metacognitive perspective. Educational Psychology, 31(4), 497–511. doi: 10.1080/01443410.2011.570250

- Beccaria, L., Kek, M., Huijser, H., Rose, J., Kimmins, L. (2014). The interrelationships between student approaches to learning and group work. Nurse Education Today, 34(7), 1094–1103. doi: 10.1016/j.nedt.2014.02.006

- Bene, K., Lapina, A., Birida, A., Ekore, J. O., Adan, S. (2021). A Comparative Study of Self-Regulation Levels and Academic Performance among STEM and Non-STEM University Students Using Multivariate Analysis of Variance. Journal of Turkish Science Education, 18(3), 320–337. doi: 10.36681/tused.2021.76

- Biggs, J. (1993). What do inventories of students’ learning processes really measure? A theoretical review and clarification. British Journal of Educational Psychology, 63(1), 3–19. doi: 10.1111/j.2044-8279.1993.tb01038.x

- Biwer, F., Wiradhany, W., oude Egbrink, M., Hospers, H., Wasenitz, S., Jansen, W., de Bruin, A. (2021). Changes and Adaptations: How University Students Self-Regulate Their Online Learning During the COVID-19 Pandemic. Frontiers in Psychology, 12, 642593. doi: 10.3389/fpsyg.2021.642593

- Bjork, E. L., Bjork, R. A. (2011). Making things hard on yourself, but in a good way: Creating desirable difficulties to enhance learning. In Gernsbacher, M. A., Pew, R. W., Hough, L. M., Pomerantz, J. R. (Eds.), FABBS Foundation, Psychology and the real world: Essays illustrating fundamental contributions to society (pp. 56–64). Duffield, UK: Worth Publishers.

- Bjork, R. A., Dunlosky, J., Kornell, N. (2013). Self-Regulated Learning: Beliefs, Techniques, and Illusions. Annual Review of Psychology, 64(1), 417–444. doi: 10.1146/ANNUREV-PSYCH-113011-143823

- Boesdorfer, S. B., Del Carlo, D. I. (2020). Refocusing outcome expectations for secondary and postsecondary chemistry classrooms. Journal of Chemical Education, 97(11), 3919–3922. doi: 10.1021/acs.jchemed.0c00689

- Brown, D. (2017). An evidence-based analysis of learning practices: The need for pharmacy students to employ more effective study strategies. Currents in Pharmacy Teaching & Learning, 9(2), 163–170. doi: 10.1016/J.CPTL.2016.11.003

- Brown, P., Roediger, H., McDaniel, M. (2014). Make It Stick: The Science of Successful Learning. Cambridge: The Belknap Press of Harvard University Press.

- Cao, L., Nietfeld, J. L. (2007). College students’ metacognitive awareness of difficulties in learning the class content does not automatically lead to adjustment of study strategies. Australian Journal of Educational & Developmental Psychology, 7, 31–46.

- Carpenter, S. K. (2009). Cue strength as a moderator of the testing effect: The benefits of elaborative retrieval. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(6), 1563–1569. doi: 10.1037/a0017021

- Cazan, A. M. (2022). An intervention study for the development of self-regulated learning skills. Current Psychology, 1–18. doi: 10.1007/s12144-020-01136-x

- Chin, C., Brown, D. E. (2000). Learning in science: A comparison of deep and surface approaches. Journal of Research in Science Teaching, 37(2), 109–138.

- Chiou, G. L., Liang, J. C., Tsai, C. C. (2012). Undergraduate Students’ Conceptions of and Approaches to Learning in Biology: A study of their structural models and gender differences. International Journal of Science Education, 34(2), 167–195. doi: 10.1080/09500693.2011.558131

- Collier, P. J., Morgan, D. L. (2008). “Is that paper really due today?”: Differences in first-generation and traditional college students’ understandings of faculty expectations. Higher Education, 55(4), 425–446. doi: 10.1007/s10734-007-9065-5

- R Core Team. (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. Retrieved May 6, 2022, from www.R-project.org/

- Credé, M., Phillips, L. A. (2011). A meta-analytic review of the Motivated Strategies for Learning Questionnaire. Learning and Individual Differences, 21(4), 337–346. doi: 10.1016/J.LINDIF.2011.03.002

- Crowe, A., Dirks, C., Wenderoth, M. P. (2008). Biology in Bloom: Implementing Bloom’s Taxonomy to enhance student learning in Biology. CBE—Life Sciences Education, 7, 368–381. doi: 10.1187/cbe.08-05-0024

- Curley, R. G., Trumbull Estrin, E., Thomas, J. W., Rohwer, W. D. (1987). Relationships between study activities and achievement as a function of grade level and course characteristics. Contemporary Educational Psychology, 12(4), 324–343. doi: 10.1016/S0361-476X(87)80004-8

- Cuseo, J. (2007). The empirical case against large class size: Adverse effects on the teaching, learning, and retention of first-year students. Journal of Faculty Development, 21(1), 5–21.

- Daempfle, P. A. (2003). An analysis of high attrition rates among first year college science, math, and engineering majors. Journal of College Student Retention, 5(1), 37–52. doi: 10.2190/DWQT-TYA4-T20W-RCWH

- Dang, N., Chiang, J. C., Brown, H. M., McDonald, K. K. (2018). Curricular Activities that Promote Metacognitive Skills Impact Lower-Performing Students in an Introductory Biology Course. Journal of Microbiology & Biology Education, 19(1). doi: 10.1128/jmbe.v19i1.1324

- DiFrancesca, D., Nietfeld, J. L., Cao, L. (2016). A comparison of high and low achieving students on self-regulated learning variables. Learning and Individual Differences, 45, 228–236. doi: 10.1016/J.LINDIF.2015.11.010

- Dignath, C., Veenman, M. V. J. (2021). The role of direct strategy instruction and indirect activation of self-regulated learning—evidence from classroom observation studies. Educational Psychology Review, 33(2), 489–533. doi: 10.1007/s10648-020-09534-0

- Duncan, T. G., McKeachie, W. J. (2005). The Making of the Motivated Strategies for Learning Questionnaire. Educational Psychologist, 40(2), 117–128. doi: 10.1207/s15326985ep4002_6