Abstract

Patients with axial spondyloarthritis (axSpA) suffer from one of the longest diagnostic delays among all rheumatic diseases. Telemedicine (TM) may reduce this diagnostic delay by providing easy access to care. Diagnostic rheumatology telehealth studies are scarce and largely limited to traditional synchronous approaches such as resource-intensive video and telephone consultations. The aim of this study was to investigate a stepwise asynchronous telemedicine-based diagnostic approach in patients with suspected axSpA. First, patients with suspected axSpA completed a fully automated digital symptom assessment using two symptom checkers (SC) (bechterew-check and Ada). Second, a hybrid stepwise asynchronous TM approach was investigated: three physicians and two medical students were given sequential access to SC symptom reports, laboratory results and imaging results. After each step, participants had to state whether axSpA was present (yes/no) and rate their perceived decision confidence. Results were compared with the final diagnosis of the treating rheumatologist. Of 36 included patients, 17 (47.2%) were diagnosed with axSpA. Diagnostic accuracy of bechterew-check, Ada, TM students and TM physicians was 47.2%, 58.3%, 76.4% and 88.9%, respectively. Access to imaging results significantly increased the sensitivity of TM physicians (p < 0.05). Median diagnostic confidence for false axSpA classifications was not significantly lower than for correct classifications, for either students or physicians. This study underpins the potential of asynchronous physician-based telemedicine for patients with suspected axSpA. The results also highlight the need for sufficient information, especially imaging results, to ensure a correct diagnosis. Further studies are needed to investigate other rheumatic diseases and telediagnostic approaches.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00296-023-05360-z.

Keywords: Symptom checker, Telemedicine, Telehealth, Diagnosis, Spondyloarthritis, Health service research

Introduction

Axial spondyloarthritis (axSpA) is a common inflammatory rheumatic disease with an estimated worldwide prevalence of 0.3–1.4% [1–3]. Diagnostic delay remains a major challenge in axSpA and is still unacceptably long, at around 7 years in Europe [4, 5]. Untreated disease worsens prognosis, decreases quality of life [6] and leads to functional disability and economic losses [7, 8]. The increasing shortage of rheumatologists, combined with a simultaneous rise in demand, is likely to prolong the diagnostic delay even further [9].

The European Alliance of Associations for Rheumatology (EULAR) recently highlighted the growing importance of telehealth for rheumatology [10] but also demonstrated the scarcity of evidence [11]. In the underlying systematic review [11], the authors identified only two published studies [12, 13] on remote diagnosis. Both investigated traditional, resource-intensive telemedicine strategies in which patients with different rheumatic diseases and additional health care professionals (HCP) communicated synchronously with rheumatologists. Encouragingly, both studies demonstrated high diagnostic accuracy and patient acceptance. Asynchronous telemedicine has been gaining popularity, in particular because of its greater flexibility and lower demand for human resources. Symptom checkers (SC) are an extreme example of asynchronous telehealth, as they attempt to detect a disease based on medical history alone, without any HCP review of the data. Compared with traditional face-to-face diagnosis, SC showed low diagnostic accuracy for several rheumatic conditions [14–16], including axSpA [17]. SC achieved a significantly higher accuracy than rheumatologists only when the rheumatologists were also restricted to medical history data [18]. Similarly, Ehrenstein et al. demonstrated that even experienced rheumatologists needed additional imaging and laboratory data to reach a satisfactory diagnostic accuracy [19]. We hypothesized that an accurate asynchronous telehealth diagnosis of patients with suspected axSpA is possible, provided that physicians have access to sufficient information, including medical history, laboratory parameters and imaging. Thus, our study investigated an asynchronous telediagnostic approach in patients with suspected axSpA.

Methods

Newly referred adult patients with suspected axSpA were included in this study. Exclusion criteria were a known diagnosis, a previous appointment with a rheumatologist, and unwillingness or inability to comply with the protocol. This prospective study was approved by the institutional review board (IRB) of the Medical Faculty of the University of Erlangen-Nürnberg (21-357-B) and conducted in compliance with the Declaration of Helsinki. All study patients provided written informed consent prior to study participation.

Symptom checker diagnosis

Prior to their rheumatology visit, patients completed two SC, bechterew-check (BC; www.bechterew-check.de) and Ada (www.ada.com). BC is an axSpA-specific online questionnaire based on the Assessment of SpondyloArthritis international Society (ASAS) criteria; it consists of 16 questions and classifies the answers as likely or unlikely for axSpA. Ada is a freely available medical app that is not limited to rheumatology. It is an artificial intelligence-driven chatbot whose questions are chosen dynamically, so their total number varies depending on the answers previously given. Ada provides a top diagnosis (D1) and up to five disease suggestions (D5), together with their respective probabilities and urgency advice. Disease suggestions were compared with the final diagnosis reported on the discharge summary report. Patient acceptance of symptom checkers was measured using the net promoter score (NPS) [20], which is based on an 11-point numeric rating scale (0–10). Answers between 0 and 6 are categorized as detractors, 7–8 as passives and 9–10 as promoters. The NPS equals the percentage of promoters minus the percentage of detractors.
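As an illustration of the NPS arithmetic described above, the following minimal Python sketch applies the stated cut-offs (detractors 0–6, passives 7–8, promoters 9–10). The function name `net_promoter_score` and the example ratings are hypothetical; they are neither the study's analysis code nor study data.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """Return the NPS (percentage points, -100 to +100) for 0-10 ratings.

    Promoters rate 9-10 and detractors 0-6; passives (7-8) enter only
    the denominator.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must lie on the 0-10 scale")
    n = len(ratings)
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # NPS = % promoters minus % detractors
    return 100.0 * (promoters - detractors) / n


# Hypothetical ratings, not study data: 2 promoters, 2 passives, 2 detractors
example = [10, 9, 8, 7, 6, 3]
print(net_promoter_score(example))  # 0.0
```

A negative score, such as the one reported for BC in the Results, simply means that detractors outnumber promoters; passives lower neither term but still count in the denominator.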

Healthcare professional-based telehealth diagnosis supported by symptom checkers

After the patient visit, two independent medical students (with 4 and 5 years of completed studies, respectively), who had received a brief 15-min presentation on axSpA diagnosis, and three physicians (1 resident, 2 board-certified rheumatologists) were consecutively presented with (1) symptom checker summaries from both BC and Ada, (2) CRP and HLA-B27 results (venous sampling; gold standard) and (3) radiology reports. After each step, participants had to state whether axSpA was present (yes/no), rate their perceived diagnostic confidence on an 11-point numeric rating scale (NRS 0–10) and record the time needed to complete the diagnostic step in seconds. Their decisions were compared with the final diagnosis reported on the discharge summary report.
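The stepwise assessment above yields one yes/no call per rater, patient and step, which can then be scored against the discharge diagnosis. The sketch below shows one way to compute sensitivity, specificity and accuracy per step; the `evaluate_step` helper, the step labels and the six-patient example are hypothetical illustrations, not study data or the authors' software (the study used Excel and GraphPad Prism).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DiagnosticMetrics:
    sensitivity: float  # detected axSpA cases / all reference axSpA cases
    specificity: float  # correctly excluded cases / all reference non-axSpA cases
    accuracy: float     # correct yes/no calls / all cases


def evaluate_step(calls: Sequence[bool], reference: Sequence[bool]) -> DiagnosticMetrics:
    """Score one rater's yes/no axSpA calls for one step against the reference diagnosis."""
    if len(calls) != len(reference):
        raise ValueError("calls and reference must have the same length")
    pairs = list(zip(calls, reference))
    tp = sum(1 for c, r in pairs if c and r)          # true positives
    tn = sum(1 for c, r in pairs if not c and not r)  # true negatives
    pos = sum(1 for r in reference if r)
    neg = len(reference) - pos
    if pos == 0 or neg == 0:
        raise ValueError("reference must contain axSpA and non-axSpA cases")
    return DiagnosticMetrics(
        sensitivity=tp / pos,
        specificity=tn / neg,
        accuracy=(tp + tn) / len(reference),
    )


# Hypothetical example: 6 patients, reference = discharge diagnosis (True = axSpA)
reference = [True, True, True, False, False, False]
steps = {  # one rater's calls after each cumulative information step
    "SC reports": [True, False, False, False, True, False],
    "+ CRP/HLA-B27": [True, True, False, False, True, False],
    "+ imaging": [True, True, True, False, False, False],
}
for name, calls in steps.items():
    m = evaluate_step(calls, reference)
    print(f"{name}: SE={m.sensitivity:.2f} SP={m.specificity:.2f} AC={m.accuracy:.2f}")
```

Keeping the calls per step, rather than only the final call, is what allows the paired between-step comparison described under Statistical analysis.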

Statistical analysis

Due to the exploratory character of the trial, no formal sample size calculation was performed. Following recommendations for pilot studies [21], the number of patients was set at 40. Statistical analysis was performed using Microsoft Excel 2019 and GraphPad Prism 8. P values are reported, and P values less than 0.05 were considered significant. Additionally, for continuous variables, the 95% CI of the difference between medians is reported, and for categorical variables, the 95% CI and odds ratio are indicated. Patient characteristics were summarized as median and interquartile range (IQR; 25th and 75th percentiles) for interval data and as absolute (n) and relative frequency (percent) for nominal data. Statistical differences were assessed by the Mann–Whitney U test, the Kruskal–Wallis test with Dunn's test for multiple comparisons, and Fisher's exact test for categorical variables. Results were reported following the STAndards for the Reporting of Diagnostic accuracy studies (STARD) guideline [22]. Diagnostic performance was evaluated in terms of sensitivity, specificity and overall accuracy. Sensitivity and specificity of the asynchronous TM-based diagnoses were compared statistically between successive diagnostic steps using McNemar's test.
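Because each rater judged the same patients before and after receiving additional information, the sensitivities compared between steps are paired proportions, which is why McNemar's test fits here: only the discordant pairs carry information. The section does not state which McNemar variant GraphPad Prism applied, so the following sketch assumes the exact (binomial) version, and the function name `mcnemar_exact` is hypothetical. As a worked example, the median physician sensitivity reported in the Results rises from 11/17 (64.71%) without imaging to 17/17 (100%) with imaging, which implies six axSpA patients detected only with imaging and none lost.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar P value.

    b: pairs negative before / positive after (e.g., axSpA missed without
       imaging but detected with imaging)
    c: pairs positive before / negative after
    Under H0 the discordant pairs split 50:50, so min(b, c) ~ Binomial(b + c, 0.5).
    """
    if b < 0 or c < 0:
        raise ValueError("counts must be non-negative")
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a change
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    return min(1.0, 2 * tail)


# Sensitivity 11/17 without imaging vs 17/17 with imaging:
# 6 patients detected only with imaging, none lost -> b = 6, c = 0
print(mcnemar_exact(6, 0))  # 0.03125
```

Under this assumed variant the example gives p = 0.03125, in line with the reported p < 0.05; a chi-squared McNemar test with continuity correction can give a somewhat different value for such small counts.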

Results

Baseline patient characteristics are shown in Table 1. Of the 36 included patients, 17 (47.2%) were diagnosed with axSpA. Three patients dropped out because of missed appointments, and one patient declined to participate. Median age was 37.2 years, and 21/36 (58.3%) were female. All patients had lower back pain for more than 3 months.

Table 1.

Patient characteristics

| Patient characteristics | All patients (n = 36) | axSpA (n = 17) | Non-axSpA (n = 19) | P value | 95% CI (odds ratio)* |
|---|---|---|---|---|---|
| Age, Mdn (IQR) | 37.19 (20.6) | 34.4 (15.2) | 39.9 (18.0) | 0.24 | -3.6 to 12 |
| BMI, Mdn (IQR) | 24.6 (5.1) | 25.8 (2.3) | 24.4 (8.2) | 0.59 | -3.4 to 2.5 |
| Active smoker status, N (%) | 5 (13.9) | 4 (21.1) | 1 (5.9) | 0.16 | 0.7 to 70.9 (5.5) |
| Female gender, N (%) | 21 (58.3) | 7 (41.2) | 14 (73.7) | 0.09 | 0.1 to 1.0 (0.3) |
| Chronic lower back pain, N (%) | 36 (100) | 17 (100) | 19 (100) | 1.00 | – |
| Peripheral enthesiopathy, N (%) | 10 (27.8) | 6 (35.3) | 4 (21.1) | 0.46 | 0.5 to 7.5 (2.0) |
| Peripheral arthralgia, N (%) | 15 (41.7) | 6 (35.3) | 9 (47.4) | 0.52 | 0.2 to 2.4 (0.6) |
| Elevated baseline CRP, N (%) | 7 (19.4) | 4 (23.5) | 3 (15.8) | 0.68 | 0.4 to 7.3 (1.6) |
| HLA-B27 positive, N (%) | 18 (50) | 10 (58.8) | 8 (42.1) | 0.51 | 0.6 to 6.5 (2.0) |
| History of uveitis, N (%) | 0 (0) | 0 (0) | 0 (0) | 1.00 | – |
| History of IBD, N (%) | 0 (0) | 0 (0) | 0 (0) | 1.00 | – |
| History of psoriasis, N (%) | 1 (2.8) | 1 (5.9) | 0 (0) | 0.48 | 0.1 to Inf (Inf) |
| NSAID response, N (%) | 27 (75) | 15 (88.2) | 12 (63.2) | 0.13 | 0.9 to 23.0 (4.4) |
| Family history of axSpA, N (%) | 7 (19.4) | 1 (5.9) | 6 (31.6) | 0.09 | 0.01 to 1.1 (0.14) |
| Family history of IBD, N (%) | 3 (8.3) | 0 (0) | 3 (15.8) | 0.23 | 0.0 to 1.2 (0.0) |
| Family history of psoriasis, N (%) | 4 (11.1) | 1 (5.9) | 3 (15.8) | 0.61 | 0.0 to 2.5 (0.3) |
| Baseline PtGA, Mdn (IQR) | 5 (3) | 4 (3) | 5 (3) | 0.36 | -2.0 to 1.0 |
| Baseline morning stiffness at T-1, Mdn (IQR) | 30 (51.3) | 30 (50) | 30 (37.5) | 0.86 | -27.0 to 20.0 |
| Baseline BASDAI, Mdn (IQR) | 4.2 (2.7) | 4 (2.3) | 4.3 (2.7) | 0.47 | -1.0 to 2.0 |
| BASMI > 0, N (%) | 13 (36.1) | 6 (35.3) | 7 (36.8) | 1.00 | 0.2 to 3.4 (0.8) |

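Mdn median, IQR interquartile range, BMI body mass index, CRP C-reactive protein, IBD inflammatory bowel disease, NSAID non-steroidal anti-inflammatory drug, PtGA patient global assessment, VAS visual analogue scale, BASDAI Bath Ankylosing Spondylitis Disease Activity Index, BASFI Bath Ankylosing Spondylitis Functional Index, BASMI Bath Ankylosing Spondylitis Metrology Index, CI confidence interval, Inf infinity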

P values for comparisons between axSpA and non-axSpA patients were determined by the Mann–Whitney U test for continuous variables and Fisher's exact test for categorical variables

*For continuous variables, the 95% CI of the difference between medians is reported. For categorical variables, the 95% CI and the odds ratio (in parentheses) are indicated
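The odds ratios and Fisher's exact P values in Table 1 come from 2 × 2 tables of group (axSpA vs. non-axSpA) by feature (present vs. absent). The sketch below, a hypothetical helper `fisher_exact_2x2` written with only the Python standard library, computes the sample odds ratio and the two-sided Fisher's exact P value by summing all tables with the observed margins that are no more likely than the observed one. Applied to the HLA-B27 row, it reproduces the reported odds ratio of 2.0 and P value of 0.51; the confidence-interval method used for Table 1 is not stated and is not reproduced here.

```python
from math import comb
from typing import Tuple


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Sample odds ratio and two-sided Fisher's exact P for the table [[a, b], [c, d]].

    Rows: axSpA, non-axSpA; columns: feature present, feature absent.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def weight(x: int) -> int:
        # Number of tables with x in the top-left cell and the observed margins
        # (numerator of the hypergeometric probability), kept as an exact integer.
        return comb(col1, x) * comb(n - col1, row1 - x)

    observed = weight(a)
    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    # Two-sided P: sum over all tables that are no more likely than the observed one
    extreme = sum(w for w in (weight(x) for x in range(lo, hi + 1)) if w <= observed)
    p_value = min(1.0, extreme / comb(n, row1))
    if b * c:
        odds_ratio = (a * d) / (b * c)
    else:
        odds_ratio = float("inf") if a * d else float("nan")
    return odds_ratio, p_value


# HLA-B27 row of Table 1: axSpA 10 positive / 7 negative, non-axSpA 8 / 11
odds_ratio, p_value = fisher_exact_2x2(10, 7, 8, 11)
print(f"OR = {odds_ratio:.1f}, P = {p_value:.2f}")  # OR = 2.0, P = 0.51
```

Comparing exact integer weights avoids floating-point ties between tables that are equally likely, which matters here because the HLA-B27 margins make the distribution symmetric.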

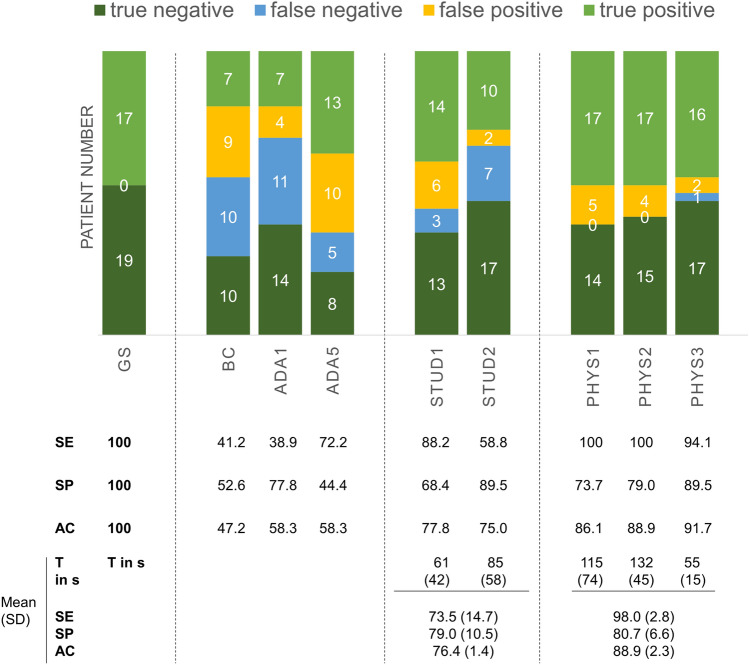

The diagnostic accuracy of both SC (BC: 47.2%; Ada: 58.3%) was lower than that of medical students and physicians (Fig. 1). Diagnostic accuracy increased with increasing information for both students and physicians (see online supplemental material S1). With access to SC reports only, physicians reached a mean diagnostic accuracy of 54.2 ± 4.2% (BC) vs. 62.5 ± 4.2% (Ada), and medical students 55.2 ± 3.7% (BC) vs. 58.5 ± 0.2% (Ada).

Fig. 1.

Diagnostic accuracy measures of symptom checkers (SC), students and physicians. The final diagnosis reported on the discharge summary report served as the gold standard (GS). Based on this, the sensitivity, specificity and diagnostic accuracy of the two SC bechterew-check (BC) and Ada (ADA1 = top 1 diagnosis, ADA5 = top 5 diagnoses) were determined. Students 1 and 2 (STUD1 + 2) and physicians 1–3 (PHYS1–3) decided asynchronously based on SC results, CRP and HLA-B27 results and imaging, without ever having seen the patient. The mean time (T) for telehealth diagnosis per patient case vignette is listed in seconds (s). Mean diagnostic accuracy values are listed in the three lower rows of the table. SE sensitivity, SP specificity, AC accuracy

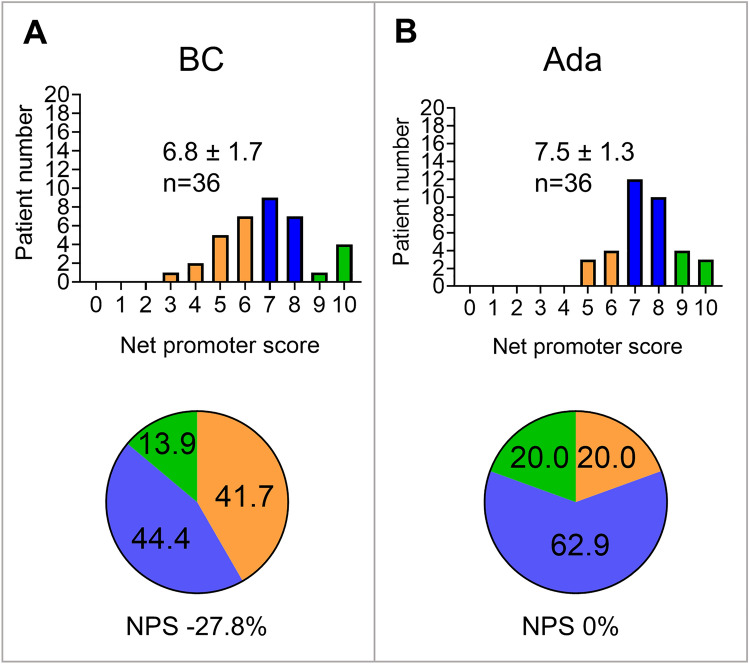

With access to all diagnostic information (SC reports, CRP and HLA-B27 results, and imaging), the students' telehealth diagnostic accuracy remained limited (76.4 ± 1.4%), whereas the three tele-rheumatologists achieved a high mean sensitivity (98.0 ± 2.8%) and overall diagnostic accuracy (88.9 ± 2.3%) (Fig. 1). Interestingly, the median diagnostic confidence for false axSpA classifications was not significantly lower than for correct classifications, for either students or physicians (online supplemental material S2). Similarly, the diagnostic probability reported by Ada did not differ significantly between correct and false diagnoses (median diagnostic probability 0.4 vs. 0.5, 95% CI of difference -0.1 to 0.2; p = 0.46), see online supplemental material S3. Imaging significantly increased the sensitivity of each of the three telehealth physicians and of one student (p < 0.05, McNemar's test; median physician sensitivity 64.71% without vs. 100% with imaging, 95% CI of the difference 22.7% to 52.9%), see online supplemental material S1. Mean time for asynchronous telehealth diagnosis ranged from 55 to 132 s per case (Fig. 1). Patient acceptance of symptom checkers was poor, with NPS ratings of 0% for Ada (mean ± SD 7.5 ± 1.3) and -27.8% for BC (6.8 ± 1.7), see Fig. 2.

Fig. 2.

Patient acceptance of symptom checkers is displayed as bar graphs and pie charts. The proportion of detractors (0–6) is shown in orange, the proportion of passives (7–8) in blue and the proportion of promoters (9–10) in green.

Discussion

In this cross-sectional study of patients with suspected axSpA, we demonstrated the feasibility of asynchronous telediagnosis for the majority of patients. Asynchronous telehealth physicians reached a mean diagnostic accuracy of 88.9% and a sensitivity of 98.0%, and needed an average of only 1–2 min per case. Previous remote rheumatology diagnosis studies used a resource-intensive synchronous video consultation approach involving additional personnel (junior doctor, nurse, general practitioner) and reported accuracies of 40% [23], 79% [13] and 97% [12]. In our study, the significant increase in sensitivity gained from access to imaging data highlights the importance of having all crucial information available, confirming a study by Ehrenstein et al. [19] that also examined the relative contributions of sequential diagnostic steps. When limited to medical history data, as symptom checkers are, even experienced rheumatologists have previously been shown to reach a diagnostic accuracy of only 27% [19]. The low diagnostic accuracy of symptom checkers in this study is similar to that in previous studies [16, 24]. However, machine learning could improve diagnostic accuracy [25]. As expected, a recent large video consultation diagnostic study found very high accuracy (100%) in disciplines that mainly rely on imaging and laboratory data, compared with disciplines that rely heavily on physical examination [26]. The limitations of the physical examination in video consultations restrict remote diagnostic accuracy [23]. The increasing availability of professional imaging and new smartphone-based techniques [27] are slowly reducing these restrictions. However, the substantial prevalence of inflammatory MRI lesions among healthy individuals [28] warrants careful interpretation. We previously reported high accuracy and patient acceptance of at-home capillary self-sampling for CRP and antibody analysis [29–31] to support telehealth diagnosis and monitoring.

Importantly, physicians perceive diagnostic uncertainty only partly, as shown by the small difference in perceived diagnostic confidence between false and correct diagnoses in this trial and in a previous one [18]. Therefore, in clinical routine, this diagnostic approach should currently be used to triage patients rather than to replace on-site visits.

To our knowledge, this is the first study investigating an asynchronous hybrid diagnostic telehealth approach in rheumatology. Despite its small size and monocentric design, this study adds important evidence on telemedicine in rheumatology, as requested by EULAR [10]. Preliminary data from this study were presented at the American College of Rheumatology congress 2022 [32]. Our results need to be confirmed in larger studies in axSpA and could then be extended to other diseases. Confirmation of cost-effectiveness will be crucial for wider implementation.

Conclusion

Given the persistently long diagnostic delay of patients with axial spondyloarthritis, new innovative strategies should be evaluated. This study underlines the potential of asynchronous physician-based telemedicine to diagnose patients with axSpA. Access to imaging results was crucial for a correct diagnosis. Further studies are needed to investigate other rheumatic diseases and different telediagnostic approaches.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplemental material S1. Diagnostic accuracy measures of students and physicians. SE, sensitivity; SP, specificity; AC, diagnostic accuracy; SD, standard deviation. file1 (TIF 1067 KB)

Supplemental material S2. Diagnostic confidence of telehealth physicians and students. Median diagnostic confidence of correct vs. false axSpA classification was 8.0 vs. 7.5 (95% CI of the difference between medians -2.0 to 0; p = 0.08) for physicians and 8.0 vs. 7.0 (95% CI of difference -2.0 to 0; p = 0.11) for students. file2 (TIF 300 KB)

Supplemental material S3. Ada's reported diagnostic probabilities. file3 (TIF 206 KB)

Acknowledgements

We thank all patients for their participation in this study. The present work was performed to fulfill the requirements for obtaining the degree “Dr. med.” for S. von Rohr and is part of the PhD thesis of the last author JK (AGEIS, Université Grenoble Alpes, Grenoble, France).

Author contributions

The manuscript draft was written by JK, HL and SR. Substantial contributions and revision comments were obtained from all coauthors who also oversaw the planning and development of the project. All authors take full responsibility for the integrity and accuracy of all aspects of the work.

Funding

Open Access funding enabled and organized by Projekt DEAL. The study was partially supported by Novartis Pharma GmbH, Nürnberg, Germany and the Deutsche Forschungsgemeinschaft (DFG—FOR 2886 “PANDORA”—Z to JK).

Availability of data and materials

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Declarations

Ethical approval

The study was approved on 3rd of November 2021 by the ethics committee of the University hospital Erlangen-Nürnberg (Reg no. 21-357-B). All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Informed consent was obtained from all individual respondents included in the study.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Sieper J, Poddubnyy D. Axial spondyloarthritis. Lancet. 2017;390(10089):73–84. doi: 10.1016/S0140-6736(16)31591-4.

2. Bohn R, Cooney M, Deodhar A, Curtis JR, Golembesky A. Incidence and prevalence of axial spondyloarthritis: methodologic challenges and gaps in the literature. Clin Exp Rheumatol. 2018;36(2):263–274.

3. Poddubnyy D, Sieper J, Akar S, Munoz-Fernandez S, Haibel H, Hojnik M, Ganz F, Inman RD. Characteristics of patients with axial spondyloarthritis by geographic regions: PROOF multicountry observational study baseline results. Rheumatology. 2022;61(8):3299–3308. doi: 10.1093/rheumatology/keab901.

4. Redeker I, Callhoff J, Hoffmann F, Haibel H, Sieper J, Zink A, Poddubnyy D. Determinants of diagnostic delay in axial spondyloarthritis: an analysis based on linked claims and patient-reported survey data. Rheumatology. 2019;58(9):1634–1638. doi: 10.1093/rheumatology/kez090.

5. Garrido-Cumbrera M, Navarro-Compan V, Bundy C, Mahapatra R, Makri S, Correa-Fernandez J, Christen L, Delgado-Dominguez CJ, Poddubnyy D, Group EW. Identifying parameters associated with delayed diagnosis in axial spondyloarthritis: data from the European map of axial spondyloarthritis. Rheumatology. 2022;61(2):705–712. doi: 10.1093/rheumatology/keab369.

6. Poddubnyy D, Sieper J. Diagnostic delay in axial spondyloarthritis - a past or current problem? Curr Opin Rheumatol. 2021;33(4):307–312. doi: 10.1097/BOR.0000000000000802.

7. Yi E, Ahuja A, Rajput T, George AT, Park Y. Clinical, economic, and humanistic burden associated with delayed diagnosis of axial spondyloarthritis: a systematic review. Rheumatol Ther. 2020;7(1):65–87. doi: 10.1007/s40744-020-00194-8.

8. Mennini FS, Viti R, Marcellusi A, Sciattella P, Viapiana O, Rossini M. Economic evaluation of spondyloarthritis: economic impact of diagnostic delay in Italy. Clinicoecon Outcomes Res. 2018;10:45–51. doi: 10.2147/CEOR.S144209.

9. Knitza J, Krusche M, Leipe J. Digital diagnostic support in rheumatology. Z Rheumatol. 2021;80(10):909–913. doi: 10.1007/s00393-021-01097-x.

10. de Thurah A, Bosch P, Marques A, Meissner Y, Mukhtyar CB, Knitza J, Najm A, Osteras N, Pelle T, Knudsen LR, Smucrova H, Berenbaum F, Jani M, Geenen R, Krusche M, Pchelnikova P, de Souza S, Badreh S, Wiek D, Piantoni S, Gwinnutt JM, Duftner C, Canhao HM, Quartuccio L, Stoilov N, Prior Y, Bijlsma JW, Zabotti A, Stamm TA, Dejaco C. 2022 EULAR points to consider for remote care in rheumatic and musculoskeletal diseases. Ann Rheum Dis. 2022;81(8):1065–1071. doi: 10.1136/annrheumdis-2022-222341.

11. Marques A, Bosch P, de Thurah A, Meissner Y, Falzon L, Mukhtyar C, Bijlsma JW, Dejaco C, Stamm TA. Effectiveness of remote care interventions: a systematic review informing the 2022 EULAR Points to Consider for remote care in rheumatic and musculoskeletal diseases. RMD Open. 2022. doi: 10.1136/rmdopen-2022-002290.

12. Leggett P, Graham L, Steele K, Gilliland A, Stevenson M, O'Reilly D, Wootton R, Taggart A. Telerheumatology–diagnostic accuracy and acceptability to patient, specialist, and general practitioner. Br J Gen Pract. 2001;51(470):746–748.

13. Nguyen-Oghalai TU, Hunter K, Lyon M. Telerheumatology: the VA experience. South Med J. 2018;111(6):359–362. doi: 10.14423/SMJ.0000000000000811.

14. Knevel R, Knitza J, Hensvold A, Circiumaru A, Bruce T, Evans S, Maarseveen T, Maurits M, Beaart-van de Voorde L, Simon D, Kleyer A, Johannesson M, Schett G, Huizinga T, Svanteson S, Lindfors A, Klareskog L, Catrina A. Rheumatic? A digital diagnostic decision support tool for individuals suspecting rheumatic diseases: a multicenter pilot validation study. Front Med. 2022;9:774945. doi: 10.3389/fmed.2022.774945.

15. Knitza J, Tascilar K, Gruber E, Kaletta H, Hagen M, Liphardt AM, Schenker H, Krusche M, Wacker J, Kleyer A, Simon D, Vuillerme N, Schett G, Hueber AJ. Accuracy and usability of a diagnostic decision support system in the diagnosis of three representative rheumatic diseases: a randomized controlled trial among medical students. Arthritis Res Ther. 2021;23(1):233. doi: 10.1186/s13075-021-02616-6.

16. Knitza J, Mohn J, Bergmann C, Kampylafka E, Hagen M, Bohr D, Morf H, Araujo E, Englbrecht M, Simon D, Kleyer A, Meinderink T, Vorbruggen W, von der Decken CB, Kleinert S, Ramming A, Distler JHW, Vuillerme N, Fricker A, Bartz-Bazzanella P, Schett G, Hueber AJ, Welcker M. Accuracy, patient-perceived usability, and acceptance of two symptom checkers (Ada and Rheport) in rheumatology: interim results from a randomized controlled crossover trial. Arthritis Res Ther. 2021;23(1):112. doi: 10.1186/s13075-021-02498-8.

17. Proft F, Spiller L, Redeker I, Protopopov M, Rodriguez VR, Muche B, Rademacher J, Weber AK, Luders S, Torgutalp M, Sieper J, Poddubnyy D. Comparison of an online self-referral tool with a physician-based referral strategy for early recognition of patients with a high probability of axial spa. Semin Arthritis Rheum. 2020;50(5):1015–1021. doi: 10.1016/j.semarthrit.2020.07.018.

18. Graf M, Knitza J, Leipe J, Krusche M, Welcker M, Kuhn S, Mucke J, Hueber AJ, Hornig J, Klemm P, Kleinert S, Aries P, Vuillerme N, Simon D, Kleyer A, Schett G, Callhoff J. Comparison of physician and artificial intelligence-based symptom checker diagnostic accuracy. Rheumatol Int. 2022;42(12):2167–2176. doi: 10.1007/s00296-022-05202-4.

19. Ehrenstein B, Pongratz G, Fleck M, Hartung W. The ability of rheumatologists blinded to prior workup to diagnose rheumatoid arthritis only by clinical assessment: a cross-sectional study. Rheumatology (Oxford). 2018;57(9):1592–1601. doi: 10.1093/rheumatology/key127.

20. Reichheld FF. The one number you need to grow. Harv Bus Rev. 2003;81(12):46–54, 124.

21. Browne RH. On the use of a pilot sample for sample size determination. Stat Med. 1995;14(17):1933–1940. doi: 10.1002/sim.4780141709.

22. Cohen JF, Korevaar DA, Altman DG, Bruns DE, Gatsonis CA, Hooft L, Irwig L, Levine D, Reitsma JB, de Vet HC, Bossuyt PM. STARD 2015 guidelines for reporting diagnostic accuracy studies: explanation and elaboration. BMJ Open. 2016;6(11):e012799. doi: 10.1136/bmjopen-2016-012799.

23. Graham LE, McGimpsey S, Wright S, McClean G, Carser J, Stevenson M, Wootton R, Taggart AJ. Could a low-cost audio-visual link be useful in rheumatology? J Telemed Telecare. 2000;6(Suppl 1):S35–37. doi: 10.1258/1357633001934078.

24. Powley L, McIlroy G, Simons G, Raza K. Are online symptoms checkers useful for patients with inflammatory arthritis? BMC Musculoskelet Disord. 2016;17(1):362. doi: 10.1186/s12891-016-1189-2.

25. Knitza J, Janousek L, Kluge F, von der Decken CB, Kleinert S, Vorbruggen W, Kleyer A, Simon D, Hueber AJ, Muehlensiepen F, Vuillerme N, Schett G, Eskofier BM, Welcker M, Bartz-Bazzanella P. Machine learning-based improvement of an online rheumatology referral and triage system. Front Med. 2022;9:954056. doi: 10.3389/fmed.2022.954056.

26. Demaerschalk BM, Pines A, Butterfield R, Haglin JM, Haddad TC, Yiannias J, Colby CE, TerKonda SP, Ommen SR, Bushman MS, Lokken TG, Blegen RN, Hoff MD, Coffey JD, Anthony GS, Zhang N, Diagnostic Accuracy of Telemedicine Utilized at Mayo Clinic Alix School of Medicine Study Group I. Assessment of clinician diagnostic concordance with video telemedicine in the integrated multispecialty practice at mayo clinic during the beginning of COVID-19 pandemic from March to June 2020. JAMA Netw Open. 2022;5(9):e2229958. doi: 10.1001/jamanetworkopen.2022.29958.

27. Hugle T, Caratsch L, Caorsi M, Maglione J, Dan D, Dumusc A, Blanchard M, Kalweit G, Kalweit M. Dorsal finger fold recognition by convolutional neural networks for the detection and monitoring of joint swelling in patients with rheumatoid arthritis. Digit Biomark. 2022;6(2):31–35. doi: 10.1159/000525061.

28. Baraliakos X, Richter A, Feldmann D, Ott A, Buelow R, Schmidt CO, Braun J. Frequency of MRI changes suggestive of axial spondyloarthritis in the axial skeleton in a large population-based cohort of individuals aged <45 years. Ann Rheum Dis. 2020;79(2):186–192. doi: 10.1136/annrheumdis-2019-215553.

29. Muehlensiepen F, May S, Zarbl J, Vogt E, Boy K, Heinze M, Boeltz S, Labinsky H, Bendzuck G, Korinth M, Elling-Audersch C, Vuillerme N, Schett G, Kronke G, Knitza J. At-home blood self-sampling in rheumatology: a qualitative study with patients and health care professionals. BMC Health Serv Res. 2022;22(1):1470. doi: 10.1186/s12913-022-08787-5.

30. Knitza J, Tascilar K, Vuillerme N, Eimer E, Matusewicz P, Corte G, Schuster L, Aubourg T, Bendzuck G, Korinth M, Elling-Audersch C, Kleyer A, Boeltz S, Hueber AJ, Kronke G, Schett G, Simon D. Accuracy and tolerability of self-sampling of capillary blood for analysis of inflammation and autoantibodies in rheumatoid arthritis patients-results from a randomized controlled trial. Arthritis Res Ther. 2022;24(1):125. doi: 10.1186/s13075-022-02809-7.

31. Zarbl J, Eimer E, Gigg C, Bendzuck G, Korinth M, Elling-Audersch C, Kleyer A, Simon D, Boeltz S, Krusche M, Mucke J, Muehlensiepen F, Vuillerme N, Kronke G, Schett G, Knitza J. Remote self-collection of capillary blood using upper arm devices for autoantibody analysis in patients with immune-mediated inflammatory rheumatic diseases. RMD Open. 2022. doi: 10.1136/rmdopen-2022-002641.

32. Labinsky H, von Rohr S, Raimondo M, Vogt E, Horstmann B, Gehring I, Rojas-Restrepo J, Proft F, Muehlensiepen F, Bohr D, Schett G, Ramming A, Knitza J. Accelerating AxSpA diagnosis: exploring at-home self-sampling, symptom checkers, medical student-visits and asynchronous report-based assessment [abstract]. Philadelphia: Arthritis Rheumatol; 2022.
