Abstract

Nature has likely sampled only a fraction of all protein sequences and structures allowed by the laws of biophysics. However, the combinatorial scale of amino-acid sequence-space has traditionally precluded substantive study of the full protein sequence-structure map. In particular, it remains unknown how much of the vast uncharted landscape of far-from-natural sequences consists of alternate ways to encode the familiar ensemble of natural folds; proteins in this category also represent an opportunity to diversify candidates for downstream applications. Here, we characterize sequence-structure mapping in far-from-natural regions of sequence-space guided by the capacity of protein language models (pLMs) to explore sequences outside their natural training data through generation. We demonstrate that pretrained generative pLMs sample a limited structural snapshot of the natural protein universe, including >350 common (sub)domain elements. Incorporating pLM, structure prediction, and structure-based search techniques, we surpass this limitation by developing a novel “foldtuning” strategy that pushes a pretrained pLM into a generative regime that maintains structural similarity to a target protein fold (e.g. TIM barrel, thioredoxin, etc) while maximizing dissimilarity to natural amino-acid sequences. We apply “foldtuning” to build a library of pLMs for >700 naturally-abundant folds in the SCOP database, accessing swaths of proteins that take familiar structures yet lie far from known sequences, spanning targets that include enzymes, immune ligands, and signaling proteins. By revealing protein sequence-structure information at scale outside of the context of evolution, we anticipate that this work will enable future systematic searches for wholly novel folds and facilitate more immediate protein design goals in catalysis and medicine.

1. Introduction

The collection of naturally occurring protein structural motifs (“protein folds”) cataloged to date cannot reflect exhaustive sampling of all possible sequence-structure pairs: there are $20^{100} \approx 10^{130}$ choices for a small domain of length 100, dwarfing even the exploratory capacity of a few billion years of evolutionary time. Faced with such a daunting scale, biophysicists have long contemplated what sequences and structures fill the unseen parts of protein-space. One pervasive question is which protein folds are most “designable,” that is, which structures tolerate the greatest sequence variation and, moreover, the most substantial departure from natural sequence space [Fontana, 2002, England and Shakhnovich, 2003]. The hidden degeneracy of the protein sequence-to-structure mapping (Figure 1a) holds implications for determining fundamental “rules” distinguishing stable well-folded proteins from gibberish amino-acid strings, accessing diverse candidates for protein design tasks, and even demystifying the roles of certain classes of proteins at the origins of life [Dupont et al., 2010, Alva et al., 2015].
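As a quick sanity check on the scale of sequence space for a 100-residue domain, the count can be computed directly. This is only an arithmetic sketch; the constant and variable names are illustrative.

```python
import math

ALPHABET_SIZE = 20   # proteinogenic amino acids
DOMAIN_LENGTH = 100  # residues in a small domain

# Exact number of distinct sequences (Python integers have arbitrary precision).
n_sequences = ALPHABET_SIZE ** DOMAIN_LENGTH

# Order of magnitude: log10(20^100) = 100 * log10(20).
log10_sequences = DOMAIN_LENGTH * math.log10(ALPHABET_SIZE)

print(f"20^100 has {len(str(n_sequences))} decimal digits")
print(f"log10(20^100) = {log10_sequences:.1f}")
```

The exponent of roughly 130 is what makes exhaustive enumeration, by evolution or by computation, infeasible.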

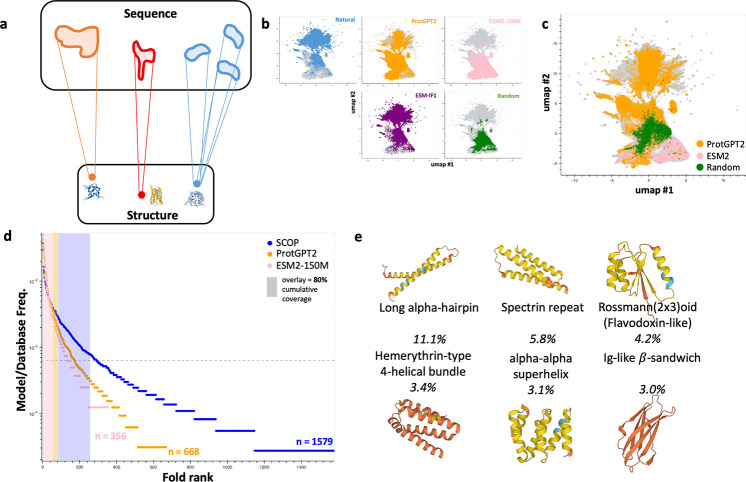

Figure 1: Structural ensembles generated by pretrained language models are imperfect reflections of natural protein-space.

a) Subview of the global protein sequence-structure map; a given structure is encoded by multiple sequences, possibly “connected” in some informative space. b) Dimension-reduced UMAP representation of ESM2–150M embeddings of natural, random, inverse-folded, and pLM-generated sequences. c) UMAP representation of random and pLM-generated sequences assignable to a SCOP fold. d) Rank-ordered fold abundance plots for natural and pLM-generated sequences. e) The 6 most-common SCOP folds among ProtGPT2 outputs; representative structures are of far-from-natural sequences (no pBLAST hit with E-value < 10)

Attempts to probe the designability question have historically been stymied by both the combinatorial complexity of sequence-space and the time-consuming nature of experimental protein structure determination. However, advances in deep learning methods for proteins now place characterizing the structural ensemble of far-from-natural sequences within reach. Transformer-based protein language models (pLMs) such as ProtGPT2, ProtT5-XL, and the ESM2 family can generalize to novel sequences and structures beyond their natural training data, suggesting that they might serve as guides into meaningful regions of far-from-natural sequence-space, skirting the high-dimensional sampling problem [Ferruz et al., 2022, Elnaggar et al., 2022, Lin et al., 2023, Verkuil et al., 2022]. Likewise, rapid structure prediction via models such as AlphaFold2 and ESMFold makes assaying the structure side of the sequence-structure map computationally tractable [Jumper et al., 2021, Lin et al., 2023]. Combining pLMs with rapid protein structure prediction (ESMFold), we show that “off-the-shelf,” pretrained pLMs possess a latent capacity to generate sequences beyond the natural protein universe that map onto roughly 350–650 known structural motifs; however, the resulting structure distributions are skewed relative to the natural case. Building on these findings, we introduce a new “foldtuning” algorithm that modifies a pLM to preserve generative fidelity to a target fold while moving progressively further into far-from-natural sequence-space; we apply this approach for >700 common folds, uncovering well-folded regions of sequence-space and offering preliminary insight into how designability varies between folds.

2. Results

2.1. Untuned pLMs access a subset of known protein structures

We initially assess the ability of two commonly-used pLMs, ProtGPT2 and ESM2–150M, to sample from the full global sequence-structure landscape [Ferruz et al., 2022, Lin et al., 2023]. We generate $\sim 10^6$ sequences of 100aa from each model, via L-to-R next-token prediction and Gibbs sampling for ProtGPT2 and ESM2–150M respectively. Generated sequences are fed into an ESMFold structure prediction step and predicted structures are queried against a custom Foldseek database comprising the 36,900 representative experimental structures (covering 1579 labeled protein folds, each a structurally conserved unit) of the Structural Classification Of Proteins (SCOP) dataset in TMalign mode [van Kempen et al., 2023, Andreeva et al., 2020]. We validate this structure prediction and assignment workflow by assessing the capacity of ESMFold to generalize to far-from-natural sequences with recent experimental structures deposited in the Protein Data Bank, finding a median backbone alignment RMSD of 0.92 ± 0.14 Å on sequences/structures satisfying basic quality control filters (Figure S1). Among ProtGPT2- and ESM2–150M-generated sequences, just 668 (42.3%) and 356 (22.5%) unique SCOP folds are represented respectively according to structure prediction and assignment (Figure 1d).

To determine where pLM-generated sequences lie with respect to natural sequence space, we extract the internal representations (“embeddings”) of these sequences with the ESM2–150M model, reduce dimensionality to 2D using UMAP, and apply a rule-of-thumb that the embeddings of qualitatively similar sequences should co-localize [McInnes et al., 2018]. We observe that a subpopulation of ProtGPT2-generated sequences and most ESM2–150M-generated sequences co-localize more substantially with random amino-acid sequences than with a set of $\sim 10^6$ natural proteins (sampled from the custom SCOP-UniRef50 database whose construction is described in Section 4.1.2) (Figure 1b–c). Furthermore, many of the pLM-generated sequences that are assignable to SCOP folds do not co-localize with natural sequences (Figure 1c). In contrast, $\sim 10^6$ sequences generated with a state-of-the-art inverse-folding model, ESM-IF1, achieve high predicted structural fidelity but remain ensconced in natural sequence space, co-localizing almost perfectly with natural sequences (Figure 1b) [Hsu et al., 2022]. Taken together, these results show that, unlike off-the-shelf inverse-folding models, both pLMs generate sequences that are appreciably distinct from natural sequences in a statistical sense yet are predicted to fold into many of the same 3D structures. Notably, the structural distributions emitted by the two pLMs indicate strong preferences for small subsets of folds at rates far exceeding their natural frequencies (Figure 1d–e). For ProtGPT2, which has a higher overall structural hit rate (32.7% vs. 5.5% for ESM2–150M), overrepresented folds include several flavors of α-helical bundles, Rossmann(2×3)oids, and all-β immunoglobulin-like domains (Figure 1e).

2.1.1. Loosening pLM sampling constraints increases sequence novelty at the cost of structure hit rates and diversity

Foundational results from natural language processing suggest that protein structure and sequence diversity might be unlocked by changing sampling hyperparameters to increase generative options at each next-token prediction step. To determine whether this hypothesis holds for pLMs, we systematically varied two key sampling hyperparameters of ProtGPT2, sampling temperature and top_k (the number of highest-probability tokens available to sample from at a given step), and repeated the generation, structure prediction, and structure assignment workflow from Section 2.1 for batches of $\sim 10^6$ sequences. Consistent with the notion that “flattening the energy landscape” of sequence generation should boost novelty, we find that the fraction of generated sequences lacking detectable homology to natural protein sequences grows as temperature and top_k are increased (Tables S1–S6, Figure S5). However, we concurrently observe that any gains in sequence novelty are offset by marked losses on the structure generation front. As sampling temperature increases, the generation frequency of fold-assignable structures falls by roughly a factor of 2, from 34.5% to 15.1% for the default top_k value of 950, and the number of unique SCOP folds identified drops by > 25% (Table S1, Figure S2). Additionally, high-temperature generation dramatically favors generation of proteins with an all-α global topology, i.e. α-helical bundles, at the notable expense of the functionally diverse class (Figures S2–S4) [Choi and Kim, 2006]. While obtaining far-from-natural sequences for α-helical bundle proteins is useful for protein design writ large, the extreme structural biases introduced by pushing sampling hyperparameters into the regime necessary for sequence novelty indicate that a more robust method is required to access far-from-natural sequence-spaces coding for structurally diverse fold classes.
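To make the roles of the two hyperparameters concrete, the following minimal sketch applies top_k truncation and temperature scaling to a vector of next-token logits. It is a generic illustration of the sampling scheme, not the ProtGPT2 implementation; the function names and the toy logits are hypothetical.

```python
import math
import random


def truncated_distribution(logits, temperature=1.0, top_k=950):
    """Return {token_index: probability} after top-k truncation and temperature scaling."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Keep only the top_k highest-scoring token indices.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    kept = ranked[:top_k]
    # Temperature > 1 flattens the distribution; temperature < 1 sharpens it.
    scaled = [logits[i] / temperature for i in kept]
    # Numerically stable softmax over the kept tokens.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return {i: w / total for i, w in zip(kept, weights)}


def sample_next_token(logits, temperature=1.0, top_k=950, rng=random):
    """Draw one token index from the truncated, temperature-scaled distribution."""
    dist = truncated_distribution(logits, temperature, top_k)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


toy_logits = [2.0, 1.0, 0.0, -1.0]  # toy values, not model output
cold = truncated_distribution(toy_logits, temperature=0.5, top_k=4)
hot = truncated_distribution(toy_logits, temperature=2.0, top_k=4)
print(f"P(top token): T=0.5 -> {cold[0]:.3f}, T=2.0 -> {hot[0]:.3f}")
```

Raising the temperature or top_k spreads probability mass onto lower-ranked tokens, which is the mechanism behind the novelty gains and the accompanying loss of structural fidelity reported above.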

2.2. “Foldtuning” of a pLM maintains a target structure while escaping natural sequence space

Finding the structural reach of pretrained pLMs to be distorted, particularly when sequence novelty is an overriding goal, we introduce a new approach to push pLMs into far-from-natural sequence space. In this approach, which we term “foldtuning,” a pLM undergoes initial finetuning on natural sequences corresponding to a given target fold, followed by several rounds of finetuning on self-generated batches of sequences that are predicted to adopt the target fold while differing maximally from the natural training sequences (Algorithm 1). We achieve this by selecting for finetuning those structurally-validated sequences that maximize semantic change, defined for a generated sequence $s$ as the smallest distance between the ESM2–650M embedding of $s$ and the embedding of any natural training sequence [Hie et al., 2021]. Thus, foldtuning drives a pLM along a trajectory that accesses progressively further-from-natural sequences while preserving the structural “grammar” of a fixed target fold.

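Algorithm 1. pLM “Foldtuning”

| Line | Step |
| --- | --- |
| 1 | given a pretrained base model $M_0$ and target fold $f$ |
| 2 | for round $r = 0, 1, \dots, R$ do |
| 3 | if $r = 0$ then |
| 4 | let training set $T_0$ contain $k$ (default: 100) natural examples of fold $f$ |
| 5 | else |
| 6 | let training set $T_r$ contain all $s_i$ s.t. $\Delta_i$ is among the $k$-largest values (highest semantic change) of the $(r-1)$-th round (see line 13) |
| 7 | end if |
| 8 | finetune $M_r$ on $T_r$ for 1 epoch, outputting updated model $M_{r+1}$ |
| 9 | generate $m$ (default: 1000) sequences $s_i$ from $M_{r+1}$ |
| 10 | fold generated sequences (ESMFold) |
| 11 | assign fold labels by structure-based search (Foldseek) |
| 12 | for all $s_i$ assigned to fold $f$ do |
| 13 | let semantic change $\Delta_i = \min_{n \in T_0} \lVert z(s_i) - z(n) \rVert$, where $z(\cdot)$ is obtained via embedding with ESM2–650M |
| 14 | end for |
| 15 | end for |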
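The control flow of Algorithm 1 can be sketched as a short driver loop. This is a schematic under stated assumptions rather than the implementation used here: the four callables (`finetune`, `generate`, `assign_fold`, `semantic_change`) are hypothetical stand-ins for finetuning, sampling, ESMFold plus Foldseek assignment, and embedding-based scoring. Taking the first `n_train` natural sequences (instead of a random draw) and reusing the previous training set when a round yields no structural hits are also assumptions of this sketch.

```python
def foldtune(base_model, natural_seqs, target_fold, finetune, generate,
             assign_fold, semantic_change, n_rounds=4, n_train=100, n_generate=1000):
    """Run initial finetuning (round 0) followed by n_rounds rounds of foldtuning.

    Hypothetical callables supplied by the caller:
      finetune(model, seqs) -> updated model
      generate(model, n) -> list of generated sequences
      assign_fold(seq) -> fold label or None
      semantic_change(seq, natural_seqs) -> float
    """
    natural_train = list(natural_seqs)[:n_train]  # round 0 uses natural examples
    training_set = natural_train
    model = base_model
    history = []
    for round_idx in range(n_rounds + 1):
        model = finetune(model, training_set)
        generated = generate(model, n_generate)
        # Keep only sequences whose predicted structure matches the target fold.
        hits = [s for s in generated if assign_fold(s) == target_fold]
        # Rank structural hits by semantic change, largest first.
        ranked = sorted(hits, key=lambda s: semantic_change(s, natural_train),
                        reverse=True)
        history.append({"round": round_idx,
                        "n_generated": len(generated),
                        "n_hits": len(hits)})
        if ranked:  # assumption: keep the old training set if there are no hits
            training_set = ranked[:n_train]
    return model, history
```

Passing the model-specific steps in as callables keeps the selection logic separate from any particular pLM, structure predictor, or search tool.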

2.2.1. “Foldtuned” models emit far-from-natural sequences for >700 target folds, including enzymes, cytokines, and signaling proteins

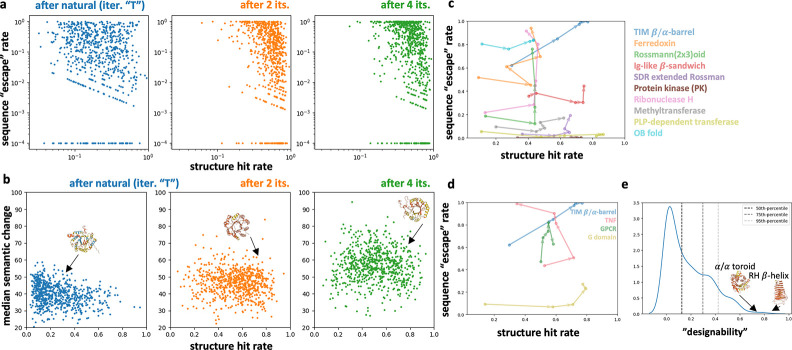

We “foldtune” ProtGPT2 (the best-performing model from Section 2.1) as described in Algorithm 1 for 727 total target folds: 708 SCOP folds (out of the top 850 ranked by natural abundance, for an 83.3% success rate), plus 19 cytokines/chemokines curated from InterPro. Model performance is assessed via two metrics: the fraction of generated sequences predicted to fold to the target structure, aka structure hit rate; and the fraction of structural hits with no sequence homology to any protein in the UniRef50 database (per MMseqs2 search under a maximum E-value cutoff), aka sequence “escape rate”. Considering all 727 models, two rounds of “foldtuning” increase the median structure hit rate to 0.565 from 0.203 after initial finetuning on the natural target fold; median sequence escape rate increases markedly after four “foldtuning” rounds, to 0.211 from 0.134 after the initial finetuning phase (Figure 2a). As a second measure of sequence novelty, semantic change w.r.t. natural fold members increases steadily with each “foldtuning” round (Figure 2b). With most models, exemplified by many of the top-10 most-abundant SCOP folds, maximizing sequence escape rate does not require any significant decline in structure hit rate (Figure 2c). Exceptions to this rule include the cytokine tumour necrosis factor (TNF) and G protein-coupled receptors (GPCRs), which exhibit a tradeoff between structural fidelity and sequence novelty (Figure 2d). Lastly, taking the product of structure hit and sequence escape rates as a proxy for global designability splits the 727 folds into at least three subpopulations, with the right-handed β-helix, ribbon-helix-helix domain, TIM barrel, pleckstrin homology domain, and toroid ranked as the most-designable folds (Figure 2e).

Figure 2: 727 “foldtuned” models achieve high structure hit and sequence escape rates.

a-b) Structure hit rates after initial (‘T’), 2, and 4 rounds of “foldtuning”, paired with a, sequence “escape” rates; b, sequence median semantic change w.r.t. natural fold members (embeddings extracted from ESM2–650M model). c-d) Hit/escape rate trajectories over 4 rounds of “foldtuning” for c, the 10 most-common natural folds; d, selected enzymatic, immune modulation, and signaling examples. e) Distribution of fold “designability” based on product of structure hit and sequence escape rates
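The two performance metrics and the designability proxy reduce to simple ratios of counts. The sketch below shows one way to compute them per model; the function name and the example counts are hypothetical and are not values from this study.

```python
def fold_metrics(n_generated, n_structure_hits, n_escaped_hits):
    """Compute structure hit rate, sequence escape rate, and their product.

    n_generated:      sequences sampled from the model
    n_structure_hits: sequences predicted to adopt the target fold
    n_escaped_hits:   structural hits with no detectable homolog in the reference DB
    """
    if not 0 <= n_escaped_hits <= n_structure_hits <= n_generated:
        raise ValueError("counts must satisfy escaped <= hits <= generated")
    hit_rate = n_structure_hits / n_generated if n_generated else 0.0
    escape_rate = n_escaped_hits / n_structure_hits if n_structure_hits else 0.0
    return {
        "hit_rate": hit_rate,
        "escape_rate": escape_rate,
        "designability": hit_rate * escape_rate,  # proxy used for ranking folds
    }


# Hypothetical counts for a single foldtuned model.
example = fold_metrics(n_generated=1000, n_structure_hits=500, n_escaped_hits=100)
print(example)
```

Because the escape rate is conditioned on structural hits, the product equals the fraction of all generated sequences that both adopt the target fold and lack detectable natural homologs.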

3. Discussion

Knowing the features of the global protein sequence-structure map would unlock virtually limitless possibilities for protein design. We demonstrated that protein language models trained on the natural portions of this map can access far-from-natural sequence space, albeit with biases in preferred structures. We developed and deployed a “foldtuning” strategy to systematically explore deep into the far-from-natural corners of this map for 727 diverse targets including enzyme scaffolds, cytokines, and signaling ligands/receptors. Beyond serving translational goals in protein design for health and catalysis, we expect that with tweaks to selection criteria, “foldtuning” will be readily repurposed to search for novel protein structures unseen in nature and complete the sequence-structure map.

4. Appendix

4.1. Methods

Except where otherwise noted, interfacing with all models was via the TRILL software package as described in Martinez et al. [2023]. Sections 4.1.1–4.1.4 provide further implementation details for the “foldtuning” steps described in Algorithm 1.

4.1.1. Sampling from Base Models

For ProtGPT2, we sampled 100,000 sequences by L-to-R next-token prediction with the default best-performing hyperparameters from Ferruz et al. [2022] (sampling temperature 1, top_k 950, top_p 1.0, repetition penalty 1.2), terminating after 40 tokens or the first STOP token, whichever came first, and truncating sequences to the first 100aa as necessary. Sequences containing rare or ambiguous amino acids (B, J, O, U, X, or Z) were filtered out, leaving 99,982 sequences for downstream analysis. For ESM2–150M, we sampled 148,500 sequences L-to-R using Gibbs sampling for next-token prediction with a default sampling temperature of 1, no repetition penalty, and allowing for sampling from all tokens, terminating after 100aa or the first STOP token, whichever came first.

The random-sequence control set was generated by position-independent sampling of 74,250 sequences of length 100aa from the 20 proteinogenic amino acids, with sampling probability for each amino acid proportional to its natural abundance (first-order statistics). The inverse-folding control set was constructed by generating three sequences from ESM-IF1 with each of the 36,900 representative structures in the SCOP database as a backbone template, for 110,700 sequences in total; pre- and post-processing for rare ligands in templates and rare/ambiguous amino acids in outputs, respectively, reduced coverage to 104,591 inverse-folded sequences in total. Default hyperparameters for sampling were taken as in Hsu et al. [2022].
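The random-sequence control amounts to drawing each position independently from a fixed residue distribution. A minimal sketch follows, assuming the abundances are estimated from a user-supplied reference set; the function names, the seed handling, and the toy reference sequences are illustrative choices and do not reproduce the frequencies used for the actual control set.

```python
import random
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 proteinogenic amino acids


def residue_frequencies(reference_seqs):
    """Estimate first-order (single-residue) frequencies from reference sequences."""
    counts = Counter(res for seq in reference_seqs for res in seq if res in AMINO_ACIDS)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("reference set contains no standard amino acids")
    return {aa: counts[aa] / total for aa in AMINO_ACIDS}


def sample_random_sequences(freqs, n_sequences, length=100, seed=0):
    """Draw sequences with each position sampled independently from freqs."""
    rng = random.Random(seed)
    residues = list(freqs)
    weights = [freqs[aa] for aa in residues]
    return ["".join(rng.choices(residues, weights=weights, k=length))
            for _ in range(n_sequences)]


# Toy reference set (hypothetical); a real run would use a large natural database.
freqs = residue_frequencies(["MKTAYIAKQR", "GSHMLEDPVA"])
controls = sample_random_sequences(freqs, n_sequences=3, length=100)
print(controls[0])
```

Such sequences match natural composition but carry no positional correlations, which is what makes them a useful baseline for the embedding comparisons in Figure 1b–c.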

4.1.2. Finetuning of ProtGPT2 and Sampling from Finetuned Models

All finetuning of ProtGPT2 was performed with the Adam optimizer using a learning rate of 0.0001 and next-token prediction as the causal language modeling task. For “foldtuning” on a target fold $f$, the base ProtGPT2 model was finetuned in the initial “T” round for 1–3 epochs on 100 natural sequences belonging to fold $f$, selected randomly among deduplicated hits from a Foldseek-TMalign search of the SCOP database of superfamily representative PDB structures against the AlphaFold-UniRef50 predicted structure database (this custom database is referred to as SCOP-UniRef50). Identical optimizer parameters were used for subsequent foldtuning rounds, finetuning for 1 epoch on 100 semantic-change-maximizing sequences assigned to fold $f$, as described further in Section 4.1.4.

Sampling from finetuned ProtGPT2 models followed the same general procedures, hyperparameters, and processing steps as in 4.1.1, with 1000 sequences generated per finetuned model (before filtering of rare/ambiguous amino-acid-containing sequences). Inference batch size on a single A100–80GB GPU ranged from 125–500 sequences per batch depending on target sequence length.

4.1.3. Structure Prediction and Assignment

Structures were predicted for all generated sequences (from control, base, and finetuned models) that passed a quality control check for absence of rare or ambiguous amino acid characters (B, J, O, U, X, Z). Sequences were truncated to a max length of 100aa (base or control models) or the median length of natural sequences for the target fold (finetuned models). All structures were predicted with ESMFold as described in Lin et al. [2023]. Inference batch size on a single A100–80GB GPU ranged from 10–500 sequences per batch depending on target sequence length.

If possible, each predicted structure was assigned a fold label by searching against the SCOP database of superfamily representative PDB structures with Foldseek in accelerated TMalign mode as described in van Kempen et al. [2023] and selecting the SCOP fold accounting for the most hits satisfying TM-score > 0.5 and max(query coverage, target coverage) > 0.8 (i.e. the “consensus hit”).
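The consensus-hit rule can be expressed as a filter followed by a majority vote. The sketch below assumes search hits have already been parsed into dictionaries; the key names (`fold`, `tm_score`, `qcov`, `tcov`) are hypothetical and are not Foldseek output column names, and ties are broken by first occurrence, which is an assumption of this sketch.

```python
from collections import Counter


def assign_consensus_fold(hits, min_tm=0.5, min_cov=0.8):
    """Return the fold label supported by the most qualifying hits, or None.

    hits: iterable of dicts with hypothetical keys
          'fold', 'tm_score', 'qcov' (query coverage), 'tcov' (target coverage).
    """
    passing = [
        h["fold"] for h in hits
        if h["tm_score"] > min_tm and max(h["qcov"], h["tcov"]) > min_cov
    ]
    if not passing:
        return None  # structure is left unassigned
    # most_common orders ties by first appearance in the hit list.
    return Counter(passing).most_common(1)[0][0]


# Hypothetical hits for one predicted structure.
example_hits = [
    {"fold": "TIM barrel", "tm_score": 0.72, "qcov": 0.95, "tcov": 0.60},
    {"fold": "TIM barrel", "tm_score": 0.61, "qcov": 0.70, "tcov": 0.85},
    {"fold": "Rossmann-like", "tm_score": 0.55, "qcov": 0.90, "tcov": 0.90},
    {"fold": "Rossmann-like", "tm_score": 0.45, "qcov": 0.99, "tcov": 0.99},
]
print(assign_consensus_fold(example_hits))
```

Voting over all qualifying hits, rather than taking the single best hit, makes the label less sensitive to one spurious high-scoring alignment.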

4.1.4. Sequence Selection for Foldtuning

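For each target fold $f$ and foldtuning round $r$, the semantic change $\Delta_i$ was calculated for all generated sequences $s_i$ assigned to fold $f$ (as described in Section 4.1.3) as $\Delta_i = \min_{n \in T_0} \lVert z(s_i) - z(n) \rVert$, where $T_0$ is the set of natural training sequences for fold $f$ and $z(\cdot)$ is obtained via embedding with ESM2–650M. The finetuning sequence set for the subsequent round $r+1$ was constructed by ranking the $s_i$ by their $\Delta_i$ in descending order and taking the top 100 corresponding $s_i$.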
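The selection step can be sketched as a nearest-natural-neighbor distance followed by a top-k ranking. This example assumes embeddings are already available as fixed-length numeric vectors and uses Euclidean distance as the metric, which is an assumption of the sketch; the function names and toy 2D vectors are hypothetical.

```python
import math


def semantic_change(embedding, natural_embeddings):
    """Smallest distance from one embedding to any natural training embedding."""
    return min(math.dist(embedding, nat) for nat in natural_embeddings)


def select_for_next_round(candidates, natural_embeddings, k=100):
    """Return the k sequences with the largest semantic change.

    candidates: list of (sequence, embedding) pairs already assigned to the target fold.
    """
    scored = [(semantic_change(emb, natural_embeddings), seq) for seq, emb in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [seq for _, seq in scored[:k]]


# Toy 2D embeddings (hypothetical); real embeddings are high-dimensional.
natural = [(0.0, 0.0), (1.0, 0.0)]
generated = [("s1", (0.0, 1.0)), ("s2", (5.0, 0.0)), ("s3", (1.0, 0.5))]
print(select_for_next_round(generated, natural, k=2))
```

Using the minimum over natural sequences means a generated sequence scores highly only if it is far from every natural training example, not merely far from their average.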

4.1.5. ESMFold Validation on Far-From-Natural Sequences

To assess the accuracy of ESMFold structural prediction on out-of-distribution samples, we evaluated model performance on de novo proteins with structures deposited in the Protein Data Bank (PDB) on or after the ESMFold training cutoff date of 05-01-2020. Mirroring the training set construction process described in Lin et al. [2023], we filtered out structures with resolution > 9 Å, length ≤ 20aa, rare or ambiguous amino acids (BJOUXZ), or > 20% sequence composition of any one amino acid, and clustered the remaining sequences at the 40% identity level, obtaining a validation set of 122 sequences. For each of the 122 sequences, the backbone RMSD was calculated between the ESMFold predicted structure and the ground-truth PDB experimental structure, with a median alignment RMSD of 0.92 ± 0.14 Å, indicating successful generalization of ESMFold beyond natural training data (Figure S1).
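The per-entry quality-control filters can be written as a single predicate. The sketch below covers only the resolution, length, ambiguous-residue, and composition checks; clustering at 40% identity and RMSD calculation are out of scope, and the function and parameter names are hypothetical.

```python
from collections import Counter

AMBIGUOUS = set("BJOUXZ")  # rare or ambiguous amino-acid codes


def passes_qc(sequence, resolution, max_resolution=9.0, max_excluded_length=20,
              max_fraction=0.20):
    """Return True if a PDB entry survives the basic quality-control filters."""
    if resolution > max_resolution:          # drop low-resolution structures
        return False
    if len(sequence) <= max_excluded_length:  # drop chains of 20 residues or fewer
        return False
    if AMBIGUOUS & set(sequence):             # drop rare/ambiguous residues
        return False
    # Drop low-complexity sequences dominated by a single amino acid.
    top_count = Counter(sequence).most_common(1)[0][1]
    return top_count / len(sequence) <= max_fraction


# Hypothetical entries: (sequence, resolution in angstroms).
entries = [
    ("ACDEFGHIKLMNPQRSTVWY" * 2, 2.1),  # balanced composition, passes
    ("A" * 30, 1.8),                     # fails the composition filter
    ("ACDEFGHIKLMNPQRSTVWYX", 2.0),     # fails the ambiguous-residue filter
]
print([passes_qc(seq, res) for seq, res in entries])
```

Ordering the cheap checks first means the composition count is only computed for entries that are already long enough and free of ambiguous codes.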

Supplementary Material

Acknowledgments and Disclosure of Funding

We thank Steve Mayo, Carl Pabo, Zach Martinez, Alec Lourenco, Lucas Schaus, Blade Olson, Joe Boktor, as well as all members of the Thomson Lab for helpful discussions.

This work was supported by the National Institutes of Health under award number R01GM150125, the Moore Foundation, the Packard Foundation, and the Heritage Medical Research Institute. The authors have no competing interests to disclose.

Contributor Information

Arjuna M. Subramanian, Division of Biology and Biological Engineering, California Institute of Technology, Pasadena, CA 91125

Matt Thomson, Division of Biology and Biological Engineering, California Institute of Technology, Pasadena, CA 91125.

References

- Alva V., Söding J., and Lupas A. N. A vocabulary of ancient peptides at the origin of folded proteins. eLife, 4:e09410, Dec. 2015. ISSN 2050-084X. doi: 10.7554/eLife.09410.

- Andreeva A., Kulesha E., Gough J., and Murzin A. G. The SCOP database in 2020: expanded classification of representative family and superfamily domains of known protein structures. Nucleic Acids Research, 48(D1):D376–D382, Jan. 2020. ISSN 0305-1048. doi: 10.1093/nar/gkz1064.

- Choi I.-G. and Kim S.-H. Evolution of protein structural classes and protein sequence families. Proceedings of the National Academy of Sciences, 103(38):14056–14061, Sept. 2006. doi: 10.1073/pnas.0606239103.

- Dupont C. L., Butcher A., Valas R. E., Bourne P. E., and Caetano-Anollés G. History of biological metal utilization inferred through phylogenomic analysis of protein structures. Proceedings of the National Academy of Sciences, 107(23):10567–10572, June 2010. doi: 10.1073/pnas.0912491107.

- Elnaggar A., Heinzinger M., Dallago C., Rehawi G., Wang Y., Jones L., Gibbs T., Feher T., Angerer C., Steinegger M., Bhowmik D., and Rost B. ProtTrans: Toward Understanding the Language of Life Through Self-Supervised Learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(10):7112–7127, Oct. 2022. ISSN 1939-3539. doi: 10.1109/TPAMI.2021.3095381.

- England J. L. and Shakhnovich E. I. Structural Determinant of Protein Designability. Physical Review Letters, 90(21):218101, May 2003. doi: 10.1103/PhysRevLett.90.218101.

- Ferruz N., Schmidt S., and Höcker B. ProtGPT2 is a deep unsupervised language model for protein design. Nature Communications, 13(1):4348, July 2022. ISSN 2041-1723. doi: 10.1038/s41467-022-32007-7. URL https://www.nature.com/articles/s41467-022-32007-7.

- Fontana W. Modelling ‘evo-devo’ with RNA. BioEssays, 24(12):1164–1177, 2002. ISSN 1521-1878. doi: 10.1002/bies.10190. URL https://onlinelibrary.wiley.com/doi/abs/10.1002/bies.10190.

- Hie B., Zhong E. D., Berger B., and Bryson B. Learning the language of viral evolution and escape. Science, 371(6526):284–288, Jan. 2021. doi: 10.1126/science.abd7331.

- Hsu C., Verkuil R., Liu J., Lin Z., Hie B., Sercu T., Lerer A., and Rives A. Learning inverse folding from millions of predicted structures. In Proceedings of the 39th International Conference on Machine Learning, pages 8946–8970. PMLR, June 2022. ISSN 2640-3498. URL https://proceedings.mlr.press/v162/hsu22a.html.

- Jumper J., Evans R., Pritzel A., Green T., Figurnov M., Ronneberger O., Tunyasuvunakool K., Bates R., Žídek A., Potapenko A., Bridgland A., Meyer C., Kohl S. A. A., Ballard A. J., Cowie A., Romera-Paredes B., Nikolov S., Jain R., Adler J., Back T., Petersen S., Reiman D., Clancy E., Zielinski M., Steinegger M., Pacholska M., Berghammer T., Bodenstein S., Silver D., Vinyals O., Senior A. W., Kavukcuoglu K., Kohli P., and Hassabis D. Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873):583–589, Aug. 2021. ISSN 1476-4687. doi: 10.1038/s41586-021-03819-2. URL https://www.nature.com/articles/s41586-021-03819-2.

- Lin Z., Akin H., Rao R., Hie B., Zhu Z., Lu W., Smetanin N., Verkuil R., Kabeli O., Shmueli Y., dos Santos Costa A., Fazel-Zarandi M., Sercu T., Candido S., and Rives A. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science, 379(6637):1123–1130, Mar. 2023. doi: 10.1126/science.ade2574.

- Martinez Z. A., Murray R. M., and Thomson M. W. TRILL: Orchestrating Modular Deep-Learning Workflows for Democratized, Scalable Protein Analysis and Engineering, Oct. 2023. doi: 10.1101/2023.10.24.563881.

- McInnes L., Healy J., and Melville J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction, Feb. 2018. URL https://arxiv.org/abs/1802.03426v3.

- van Kempen M., Kim S. S., Tumescheit C., Mirdita M., Lee J., Gilchrist C. L. M., Söding J., and Steinegger M. Fast and accurate protein structure search with Foldseek. Nature Biotechnology, pages 1–4, May 2023. ISSN 1546-1696. doi: 10.1038/s41587-023-01773-0. URL https://www.nature.com/articles/s41587-023-01773-0.

- Verkuil R., Kabeli O., Du Y., Wicky B. I. M., Milles L. F., Dauparas J., Baker D., Ovchinnikov S., Sercu T., and Rives A. Language models generalize beyond natural proteins. Technical report, bioRxiv, Dec. 2022. doi: 10.1101/2022.12.21.521521.
