Abstract

Previously, it has been shown that maximum-entropy models of immune-repertoire sequence can be used to determine a person’s vaccination status. However, this approach has the drawback of requiring a computationally intensive method to compute each model’s partition function , the normalization constant required for calculating the probability that the model will generate a given sequence. Specifically, the method required generating approximately 1010 sequences via Monte-Carlo simulations for each model. This is impractical for large numbers of models. Here we propose an alternative method that requires estimating this way for only a few models: it then uses these expensive estimates to estimate more efficiently for the remaining models. We demonstrate that this new method enables the generation of accurate estimates for 27 models using only three expensive estimates, thereby reducing the computational cost by an order of magnitude. Importantly, this gain in efficiency is achieved with only minimal impact on classification accuracy. Thus, this new method enables larger-scale investigations in computational immunology and represents a useful contribution to energy-based modeling more generally.

I. INTRODUCTION

Energy-based models (EBMs) generally—and maximum entropy (MaxEnt) models particularly—have a wide range of applications, including in statistical physics [1–5] (where the major statistical ensembles all take the form of EBMs), natural language processing [6, 7], finance [8], RNA [9] and protein [10] sequence motifs, ecology [11–13], modeling flocking behavior in birds [14], modeling voting behavior [15], describing patterns of activity in neurons [16, 17], modeling disease outbreaks [18], modeling the environmental preferences of plant pathogens [19], and modeling immune repertoires [20, 21], among many others [22]. As probabilistic models, EBMs can be used as generative models when coupled with Monte Carlo sampling methods. When properly normalized, they can, unlike most types of discriminative models, also be used for Bayesian inference. In nontrivial settings, however, normalizing EBMs (or indeed any probabilistic models based on initially unnormalized probabilities) can be computationally quite expensive.

In previous work, MaxEnt models were trained on antibody heavy-chain (IGH) and T-cell receptor -chain (TRB) repertoires’ third complementary-determining regions (CDR3s), using features based on the physicochemical properties of their constituent amino acids [21]. It was demonstrated that these models allowed for the classification of influenza vaccination status among 31 samples from 14 individuals. However, this classification required the estimation of partition functions: the normalization constants of the individual probability distributions. This was done using in-house Monte-Carlo (MC) based estimation software, which was computationally expensive. The partition function for a given model is usually abbreviated as .

To understand the computational difficulty of the problem, consider a model that represents the distribution of amino-acid sequences comprising some collection of proteins, such as TCRs or B-cell receptors (BCRs) (e.g. IGH). Consider specifically the set of all possible polypeptide chains 100 amino acids in length, which is approximately the length of a TRB or IGH variable region. There are 20100 ≈ 10130 such sequences. Thus an exact computation of the partition function would involve a sum of 10130 terms. Such a sum is infeasible with present-day computational resources, even before considering that we will likely want to normalize many such models (e.g. one per person per timepoint). In a few special instances, such as the one-dimensional and two-dimensional local Ising or Potts models, there are shortcuts to computing this sum. It is very unlikely such simplifications will exist in general, however, as the problem of computing partition functions has been shown to be #P-hard [23, 24]. Of course in practice, we do not need an exact result, and methods such as bridge sampling [25–27] exist precisely in order to approximate these kinds of sums more efficiently. Yet even in these cases, it may be necessary to generate an enormous Monte Carlo sample from the model in question. We asked whether we could improve the efficiency of estimating for each model without sacrificing classification accuracy, using immune repertoires as a test case.

A. Energy-Based Models

An EBM is a model that assigns an unnormalized probability to every potential state (meaning, in the context of CDR3 repertoires, every possible amino acid sequence up to some maximum length) based on a parameterized energy function according to

| (1) |

The models used in this paper are MaxEnt models, in which the energy takes the form

| (2) |

for a set of features . Such models were introduced in Refs. [4, 5] and are based on distributions long studied in statistical physics. The name “maximum entropy” comes from the fact that these models maximize the entropy of the resulting distribution subject only to constraints on the moments of the features. The parameters fix these moments and determine how the distribution is allowed to vary from the uniform distribution (which corresponds to ) [9, 20, 28].

MaxEnt models can be trained using, for example, gradient descent to maximize the likelihood of a training sample as estimated by the model. Gradients of the log likelihood turn out to depend only on the feature moments for the current model, which can be estimated using Monte Carlo methods, and the sample moments of the training sample.

B. Estimating Partition Functions

The problem of normalizing an initially unnormalized probability distribution shows up in a number of contexts and has an extensive literature going back several decades. A review of some of this work may be found in section 6 of Ref. [29]. In statistical mechanics, such a normalization constant shows up for the various (microcanonical, canonical, etc.) thermodynamic ensembles and is known as the partition function1, a term we shall use in most of this work, and is usually written . Knowing the partition function (as a function of the distribution parameters) allows one to compute all the macroscopic physical quantities that characterize the distribution, such as the mean values of the entropy, internal energy, and magnetization, as well as each of their fluctuations. In the context of Bayesian inference, the posterior distribution takes the form of an unnormalized distribution when the distribution of the evidence is unknown. In a few cases (for example, a multitude of models in one spatial dimension, or the Ising model with nearest-neighbor interactions in two dimensions), the partition functions may be computed analytically, but in the typical case an exact solution is intractable. Indeed, the general case has been shown to be #P-hard [24]. As a result, approximation schemes—either analytical or computational—typically need to be employed.

A large class of computational approximation schemes rely on Monte-Carlo methods for generating model samples; these schemes only estimate the ratio of the partition functions of two models. Alternatively, one can view them as estimating the partition function of one model based on the already known partition function of a second model. In the present work, we will refer to these as the target and the teammate, respectively. Such methods include bridge sampling [25] and the free energy perturbation method [30]—also known as simple importance sampling (SIS)—among others. The method used previously for immune repertoires by Arora et al. [21] is also in this class. Here we focus on estimating the partition functions themselves (though it should be noted that for the task of maximum likelihood inference, strictly speaking all that is needed is the ratio of the partition function of every model to some fixed reference model).

Given two unnormalized probability distributions whose densities are given by

| (3) |

and

| (4) |

the normalization constants of these (unnormalized) distributions—i.e. the partition functions of these models—are defined to be

| (5) |

and

| (6) |

In the following we consider as the target distribution and as the teammate.

The free energy perturbation method [29, 31] estimates from (or alternatively, estimates their ratio) according to

| (7) |

| (8) |

| (9) |

| (10) |

where is a Monte Carlo sample drawn from . This Monte Carlo procedure will typically provide a good estimate if every region with non-negligible probability under also has non-negligible probability under . Otherwise, it will tend to do poorly [32]. One way around this is to consider these distributions as part of a set of models , such that the interpolate between and . One may then estimate [32].

| (11) |

| (12) |

with for some . Bridge sampling was introduced in Ref. [25] as the “acceptance ratio method,” before being rediscovered in Ref. [26], whose authors coined the term “bridge sampling” [32]. Bridge sampling seeks to cure the weaknesses of the free energy perturbation method by using a single intermediate model . One then estimates

| (13) |

Both of these methods (and others in this general family) may not perform well (or alternatively may perform well only when the samples used to compute moments are taken to be very large) if the target and teammate distributions are very different (i.e. there is a large distance between them, for an appropriate choice of metric).

In the immune-repertoire example in Ref. [21], computing the partition functions required sampling ≥ 1010 amino acid sequences via Markov-chain Monte Carlo (MCMC) methods. Even on a highly-parallelized high-performance computing cluster, this requires a day or more of running time. This severely limits the practical feasibility of using this technique directly for Bayesian inference, especially in the case where many such models need to be normalized. We believe that the main reason this method is so high-cost is that this teammate distribution is very far from the model distribution. In fact, it is essentially a uniformly random distribution at each length, combined with a distribution on lengths that depends on the target. (Immune repertoires include amino-acid sequences of multiple lengths.) The advantage of this distribution is that its normalization factor can easily be calculated exactly. The disadvantage is, it has a much higher entropy than any of the target models. As such, it takes an extremely large sample to encounter most of the states which have a high-probability in the target model. In fact, many of the target models are much closer to one-another than they are to their respective teammate models.

The key observation of this paper is that we can use this proximity to our advantage. Once the first few models have been normalized using the expensive but proven method in [21], those few models can be used as alternative teammates for the remaining targets. This strategy can even be used iteratively, with the high-entropy teammates used to normalize the first targets, these targets used as teammates for a second round of targets, this second round of targets used as teammates for a third round, and so on. In the rest of this paper we will show empirically that this method works well, achieving high classification accuracy while significantly speeding up the process of (approximately) normalizing a fairly sizeable batch of IGH and TRB immune-repertoire models.

II. METHODS

A. Data

A total of 19 unique repertoires were studied, summarized in table I. Seventeen of these were IGH and TRB CDR3 repertoires representing diverse physiological and pathophysiological states, including infection, vaccination, and cancer, as well as repertoires from subjects that lacked these conditions. Two were artificial “repertoires” of randomized sequences created from TRB repertoires (see below). The TRB repertoires included three from subjects imputed to be positive for cytomegalovirus (CMV) (“infected”), four imputed to be CMV-negative (“baseline”) [33], and two from subjects with breast cancer (“cancer”) [34]. CMV infection status was imputed as in [35]. The IGH repertoires similarly include two repertoires from subjects who had received an influenza vaccine (“vaccinated”) and four from subjects that had not (“baseline”) [36], as well as two repertoires from subjects with chronic lymphocytic leukemia (“cancer”—although in this case the repertoire includes sequences from the cancerous clone itself, not just sequences elaborated in response to/in the context of the cancer) [37]. The random repertoires were created from TRB repertoires [33] by preserving the lengths of each sequence but randomizing the amino acids among all sequences. As such, these have the same length and single-amino-acid distributions as their source TRB repertoires, but with all correlations between different amino acids, or between amino acids and position in the sequence, randomized away.

TABLE I:

Summary of repertoires. For TRB repertoires, baseline and infected indicate imputed CMV infection status, whereas for IGH repertoires, baseline and vaccinated indicate influenza vaccination status. Additionally, the TRB cancer repertoires are from breast cancer, whereas the IGH cancer repertoires are from chronic lymphocytic leukemia.

| Repertoire Type | Status | Number of Repertoires |

|---|---|---|

| TRB | baseline infected cancer | 4 |

| 3 | ||

| 2 | ||

| IGH | baseline vaccinated cancer | 4 |

| 2 | ||

| 2 | ||

| Random | - | 2 |

B. Models

A total of 29 maximum entropy (MaxEnt) models were trained as described previously [21]. For reference, each model was named according to its cell type, disease state, feature set, and and a number that along with this other information uniquely identifies the model. Twenty of these models (one per repertoire, plus a replicate model for one of the random repertoires, as a control) were trained using a set of features consisting of lengths, frequencies of single amino acids in both an entire sequence and in the first and last four amino acids of a sequence (the canonical stems; IGH and TRB proteins adopt stem-loop structures), and sums of pairwise products of physio-chemical descriptors of amino acids between different locations (including nearest neighbors, next-to-nearest neighbors, and opposites (i.e. first with last, second with second from last, etc.)), and summed over both the entire sequence and just the first and last four amino acids, as well as products of physio-chemical descriptors of four consecutive amino acids. This was feature set 1. To test a second set of features, nine additional MaxEnt models were trained on a subset of the repertoires: two each on IGH baseline, IGH vaccinated, and TRB baseline repertoires, as well as three on TRB infected repertoires. These were trained using a different set of features that did not include products of four physiochemical descriptors, but which did include products between 3rd nearest neighbors. This was feature set 2. Of these 29 models, two fits failed to converge and were thus excluded from the remainder of the study. These were the models trained on the two IGH cancer repertoires, which as described above come from subjects with chronic lymphocytic leukemia. As such, the failure of these models to converge is perhaps unsurprising, given that these repertoires are dominated by a single large clone. Conversely, because these repertoires can be well described by the sequence clone, there is a diminished need for a compact generative model (e.g. a MaxEnt model) to describe them.

C. Partition Function Estimates Using Non-Repertoire Teammates (Previous Method)

For each model, we first used the previous method [21] to estimate the partition function for each model. This method uses the Metropolis-Hastings algorithm to sample from two distributions, the target distribution (one of the immune repertoire models) and a teammate distribution. The teammate distribution was such that the probability of a sequence depends only on its length.

From each of these samples, we estimated the density of states of the target distribution: the distributions of energies, i.e. (unnormalized) negative log probabilities. We describe the procedure graphically (Fig. 1). For the target sample (green in Fig. 1), this was done by binning the energies and counting the number of unique sequences in each bin. For the teammate distribution (yellow in Fig. 1) each sequence contributed a weight equal to its (unnormalized) probability in the target distribution divided by its (normalized) probability in the teammate distribution. Energies were then binned with the same binning as before, with the weight for each sequence added to the corresponding energy bin. This resulted in two histograms representing the density of states. The first of these was estimated based on a sample of 1010 Monte Carlo (MC)-generated sequences from the target distribution and represents an absolute estimate; that is, the entries directly estimate the number of unique sequences per bin. The second of these was estimated from a sample of 1011 MC-generated sequences drawn from the teammate distribution and represents only a relative estimate, in that the overall histogram differs from the (estimated) density of states by an overall multiplicative constant: a vertical shift in Fig. 1.

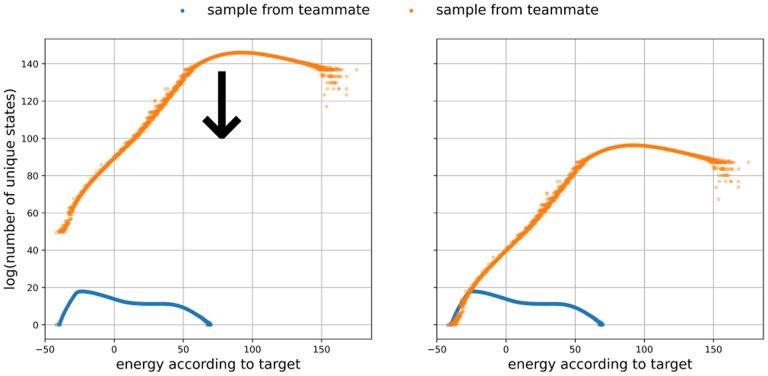

FIG. 1:

Left: the density of states estimates from both the target and teammate samples for model DCW4o. Right: the same plot, but with the estimate based on the teammate sample rescaled (downward arrow in left panel). The downward shift required to bring the upper distribution into alignment with the lower distribution is the ln .

We know that scaling the teammate by (the natural logarithm of) the partition function, ln , will make the absolute probabilities in each bin equal: it will shift the teammate distribution up or down until the high-confidence part of this curve coincides with the high-confidence part of the target distribution. The high-confidence part of the teammate distribution begins when bins contain enough sequences; it will fall off to the left of that (the lowest-energy sequences are unlikely to be sampled with sufficient density by the teammate’s random sampling). Meanwhile, the high-confidence part of the target distribution is the leftmost part; the distribution will fall off to the right (higher-energy sequences are unlikely to be sampled sufficiently densely by sampling from the target). The magnitude of the shift required gives the target’s ln .

Note this method requires a substantial amount of computational effort (typically a day or more on an academic supercomputing cluster) due to the large numbers of sequences sampled. This large sizes are necessary to ensure meaningful overlap between the two density-of-states histograms.

D. Partition Function Estimates with Immune-Repertoire Teammates (New Method)

Following the bridge-sampling partition function estimates described above, we performed a second analysis using the new method developed for this paper. For each of the 27 models, we computed 26 additional estimates using the free energy perturbation method, one using each of the other 26 models as a teammate. These estimates were computed using a 300,000-sequence sample generated from each model using MCMC methods. These samples were independent of those used to compute the bridge-sampling estimates.

For each of these 702 (= 27 targets × 26 teammates) free-energy-perturbation estimates, we found an estimated empirical log error, computed as the absolute value of the difference between the estimate in question for the ln and that found using the non-immune repertoire teammate. These error estimates ranged from 0.000281 to 8.81. We then trained a model to predict when these errors will fall below a threshold, which we chose, somewhat arbitrarily, to be that there should be less than a 30% error in the estimated value of . This threshold translated to empirical log errors of up to ln(1.3) ≈ 0.262. Since partition functions for different repertoires are observed to differ by many orders of magnitude, a 30% error indicates a quite accurate estimate of the relevant .

To this end, we divided the 27 models into two sets: a 15-model training set and a 12-model validation set. The details of this split are given in table II Since each individual model is designated as either a training model or a validation model, target-teammate pairs divide naturally into three sets: a training set, where both the target and teammate are from the model training set; a validation set, where both are from the model validation set; and a “crossover” set, consisting of the remaining mixed pairs. We trained a random-forest classifier on the training set, achieving a validation accuracy of 89%. The input features for this classifier were simple functions of the model parameters: the root-mean-squared difference between the bias vectors for five different types of biases (including two types of first-order bias, two types of second-order bias, and fourth-order biases), as well as a binary variable that flagged when one member of the pair was fit on IGH but the other was fit on TRB. On the set of all pairs (including validation pairs, training pairs, and crossover pairs), 75% of pairs classified by the model as “good” had log errors of 0.215 or lower (< 24%).

TABLE II:

Summary of models. Note that the first and second parts of the name (first only for randomer models) indicates cell type and disease state, the next part indicates the feature set used, and the last number (along with the other information) uniquely identifies that model.

| Name | Repertoire Type | Disease State | Feature Set | Test/Train | Converged |

|---|---|---|---|---|---|

| Random 1–1 | randomers | - | 1 | train | yes |

| Random 1–2 | randomers | - | 1 | train | yes |

| Random 1–3 | randomers | - | 1 | test | yes |

| TRB b.l. 1–2 | TRB | baseline | 1 | train | yes |

| TRB b.l. 1–1 | TRB | baseline | 1 | train | yes |

| TRB b.l. 1–3 | TRB | baseline | 1 | test | yes |

| TRB b.l. 1–4 | TRB | baseline | 1 | test | yes |

| TRB infex 1–3 | TRB | infected | 1 | train | yes |

| TRB infex 1–1 | TRB | infected | 1 | train | yes |

| TRB infex 1–2 | TRB | infected | 1 | test | yes |

| TRB can. 1–1 | TRB | cancer | 1 | train | yes |

| TRB can. 1–2 | TRB | cancer | 1 | test | yes |

| TRB b.l. 2–1 | TRB | baseline | 2 | train | yes |

| TRB b.l. 2–2 | TRB | baseline | 2 | test | yes |

| TRB infex 2–3 | TRB | infected | 2 | train | yes |

| TRB infex 2–1 | TRB | infected | 2 | train | yes |

| TRB infex 2–2 | TRB | infected | 2 | test | yes |

| IGH b.l. 1–1 | IGH | baseline | 1 | train | yes |

| IGH b.l. 1–4 | IGH | baseline | 1 | train | yes |

| IGH b.l. 1–2 | IGH | baseline | 1 | test | yes |

| IGH b.l. 1–3 | IGH | baseline | 1 | test | yes |

| IGH vax 1–2 | IGH | vaccinated | 1 | train | yes |

| IGH vax 1–1 | IGH | vaccinated | 1 | test | yes |

| IGH b.l. 2–1 | IGH | baseline | 2 | train | yes |

| IGH b.l. 2–2 | IGH | baseline | 2 | test | yes |

| IGH vax 2–1 | IGH | vaccinated | 2 | train | yes |

| IGH vax 2–2 | IGH | vaccinated | 2 | test | yes |

| IGH can. 1–1 | IGH | cancer | 1 | train | no |

| IGH can. 1–2 | IGH | cancer | 1 | test | no |

The methods described above consider every model as both a target and a teammate, and require previously-computed estimates for the partition functions of all models. However, this was only necessary for the purpose of training the classifier. In the remaining analysis, we simulated the scenario where a seed set of only a small number of models were initially identified as likely good teammates for the remaining models. The previous method outlined above was then used to generate estimates for these few models, and the resulting estimates allowed us to use those chosen models as teammates for a second batch of models, which were used as teammates for a third batch of models, and so on, until all partition functions had been estimated. Because all but the initial estimates are computationally inexpensive, this method is overall much more efficient. (See the description of the results in section III below.)

We chose the seed set as follows. For each of our 27 models , we listed each other model for which was a predicted good teammate for . For each , and for each in the list for , we then added to ’s list all models for which was also a predicted good teammate for (assuming was not already on the list). We did this iteratively until we reached a step where no additional models were added to the lists. The result of this is a list of “descendants” for each model. We chose the model with the most descendants as our first model in the seed set, breaking ties arbitrarily. We call this model . In our data, we found that there was no one model that had every other model as a descendant. Therefore, for all the models which were not descendants of , we listed the descendants of which were not descendants of , and the one with the most such descendants was chosen as the second model for the seed set, which we refer to as model b. We chose a third model for the seed set using a similar method. The three models chosen for the seed set in this way were those labeled yY7aq, J3AmH, and O8QGE. Collectively, these had all other models as descendants.

The seed-set models were assigned their partition function estimates computed using the previous method. We then iterated through the remaining models, iterating through direct descendants of the seed-set models first. For each model , we listed the models that had already been assigned a partition function that were predicted by the random forest classifier to be good teammates for . Using each of those models, we then used free energy perturbation to compute a partition function estimate for . The median of these estimates was then assigned as the partition function for , and was added to the list of models that had been assigned partition functions. Both these assigned partition function estimates and the partition function estimates computed using the non-immune repertoire teammate were then used to do maximum likelihood inference on sequences.

E. Quality Testing Via Inference on Sequences

For each model, we made an aggregate estimate of the partition function as follows. First we identified all other models which were classified by our random-forest classifier as giving good estimates as teammates for that model. Our aggregate estimate was then the median of all of these estimates. We refer to these estimates the median teammate estimates.

To test if the median teammate estimates were sufficiently accurate, we took 100 samples of 100 sequences from each model, each selected as an independent subsample of the 300,000-sequence sample used to compute expectation values in that code. For each of these 2,700 samples (27 models × 100 samples per model) we used maximum likelihood to guess which model it originated from. We did this using both the likelihood estimates from the bridge-sampled partition functions and the median teammate partition functions. We then compared the accuracies of both for identifying the correct source models, to see if there was any significant diminution in accuracy resulting from using the median teammate estimate partition functions rather than the bridge sampling partition functions.

III. RESULTS

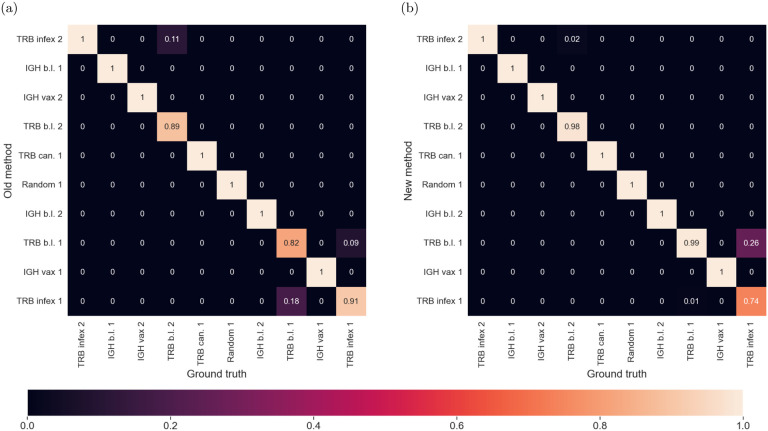

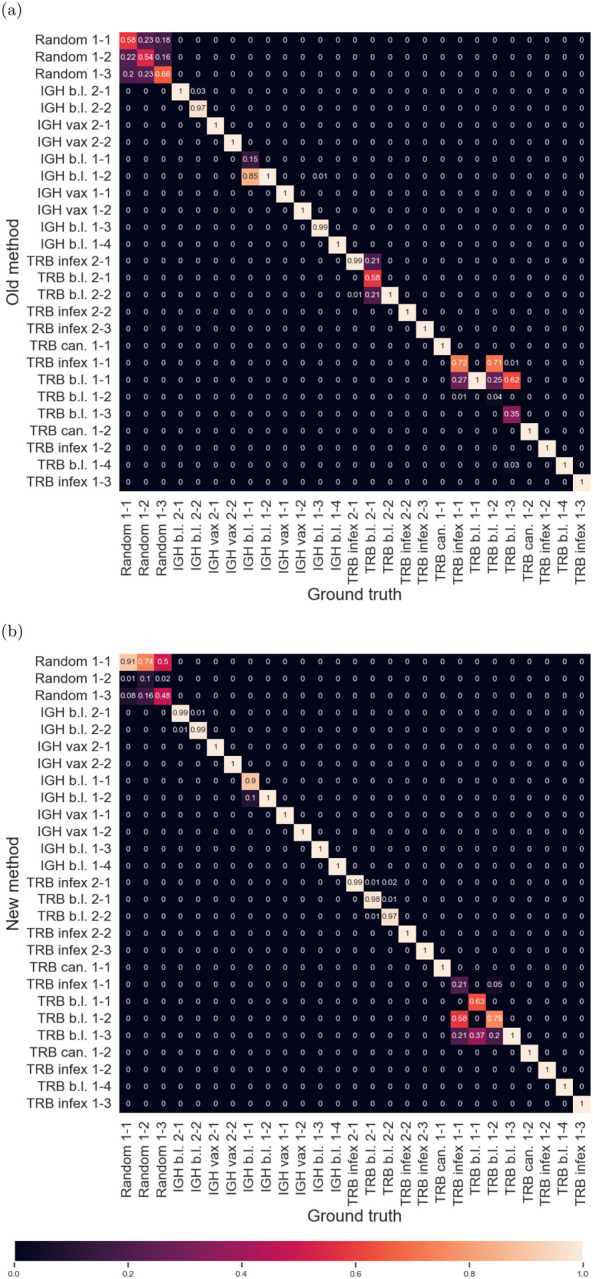

We compared the previous method[21] and the new method for both computational efficiency and performance of the resulting Bayesian classification. Figures 3 and 4 show the confusion matrices resulting from classifying 100 Monte-Carlo-generated sequences, both by the exact repertoire model they were sampled from (Fig. 3), as well as by sequence type (IGH vs. TRB) and disease or immunization state (Fig. 4). Comparisons of Figs. 3a–3b to Figs. 4a–4b show that the new method gives comparable classification performance.

FIG. 3:

(a) Confusion matrices between models using method (i). (b) Confusion matrices between models using method (ii).

FIG. 4:

(a) Confusion matrices between model parameter set/cell type/disease state labels using method (i). (b) Confusion matrices between model parameter set/cell type/disease state labels using method (ii). b.l. = baseline, vax = vaccinated, infex = infected, can. = cancer, and 1,2 refers to the feature set.

In addition, the new method had a much lower computational cost. Most of the cost came from the MC generation of sequence samples. Each estimate based on the non-immune repertoire teammates required about 1010 sequences. In contrast, the estimates using another immune repertoire model as a teammate only used 3 × 105 sequences per model, a savings of 99.997%. This translates to about 107 sequences in total across the 27 models—negligible compared to the previous method. As such, the computational cost essentially comes down to the number of more expensive estimates that need to be generated. In the previous method, each of the 27 estimates were of the expensive type. For the new method, we required only 3 of the expensive estimates, from which partition functions for the other 24 models were estimated much more efficiently. As a result, overall the new method leads to about an order of magnitude savings in computational cost.

IV. DISCUSSION

EBMs provide a way to summarize complex systems such as immune repertoires compactly and efficiently, based on aggregate features that are often human-interpretable. They also have the advantage of being generative models, which for immune repertoires means they can quickly and easily produce arbitrarily many de novo sequences that are representative of a given repertoire. Partition function estimation is important in EBMs because it allows calculation of the absolute probability of a given state—for example determining which of several immunological states, such as infection or cancer (each described by one or more models), a set of sequences is diagnostically most consistent with [38]. Together with methods for measuring immunological diversity, EBMs could become an important part of the diagnostic toolkit in next-generation immunology [35, 39], provided partition functions can be estimated efficiently. Here we have demonstrated a highly-efficient new method for estimating partition functions that performs as well as a previous method but much more efficiently, as assessed by correct classification of immune-repertoire sequences using models of real-world repertoires.

Although we have demonstrated substantial computational savings on this set of diverse IGH and TRB repertoires from a variety of states of health and disease, it should be noted that further work is necessary to precisely define how the computational cost of estimating partition functions will scale as the number of models requiring paritition-function estimation increases into the hundreds or thousands. The answer will likely depend in part on how closely related the models are, since we find closely related models tend to make good teammates. If the new method were to scale linearly, as the previous method does [21], then the advantage would be merely a (substantial) multiplicative factor. Based on our results, the new method likely scales sub-linearly, significantly improving the utility of this method in situations where many repertoires are modeled, e.g. representing precisely defined or multifaceted disease states across large clinical cohorts. Curating a database of previously fitted models with partition functions computed would maximize the cost savings of this method, by creating a bank of potential teammates for use in normalizing new models.

In the new method presented here, after partition functions for the seed models are estimated, additional estimates are found using free energy perturbation, arguably the simplest of the MCMC-based methods. In the future, it may be interesting to implement this idea using other MCMC-based methods, to test more broadly how those estimates compare in terms of accuracy and computational cost. It would also be interesting to explore replacing the initial teammate used in the old method, for which probabilities depended only on sequence length, with a better choice of teammate. A model trained on the same repertoire as the target of interest, but with features restricted to contain only couplings between nearest-neighbor pairs of amino acids2, would be formally the same as a 20-state 1D Potts model for each length, and as such the partition function for such a model could be found exactly using standard methods. This class of models could provide an improved teammate for the initial estimate, further reducing the cost to normalize an entire batch of models.

We conclude with a general note regarding obstacles to interdisciplinary adoption of EBMs. While the literature on MC methods for computing partition functions is extensive and goes back decades, it primarily traces its origins to statistics and statistical physics. Consequently, terminologies and concepts may not be readily accessible to researchers from diverse fields, including the biomedical sciences, whom they could otherwise benefit. Therefore, it may be useful to have a review that introduces these concepts specifically to biomedical researchers. Such a resource would facilitate the dissemination of knowledge, help avoid delays due to reinvention, and encourage the adoption of these powerful computational tools in various research domains. This is especially as the amount of data available in biology and related fields continues to increase, bringing ever-more-complex systems more fully into the realm of scientific study.

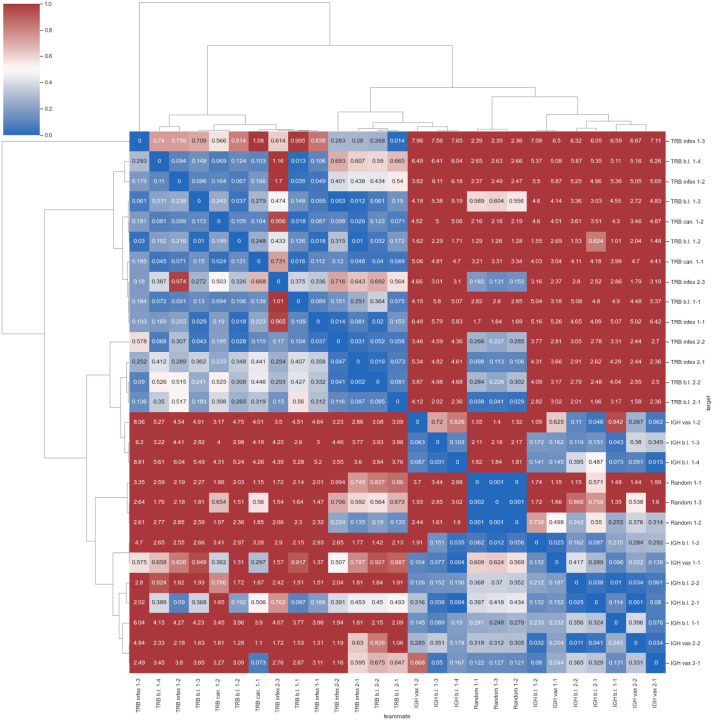

FIG. 2:

Differences in log Z estimates using methods (i) and (ii) for each pair (target, teammate) of immune repertoire models.

ACKNOWLEDGEMENTS

The authors would like to acknowledge that the Research Computing group in the Division of Information Technology at the University of South Carolina contributed to the results of this research by providing High Performance Computing resources and expertise. This work was supported by the NIH (R01AI148747-01).

Footnotes

Strictly speaking, the partition function in statistical mechanics should be understood as the function which, for a parameterized family of distributions, maps parameter values to the corresponding normalization constant, but that is not a distinction we will make here.

Longer range interactions up to th nearest neighbors can be included by grouping amino acids into a single variable with states, though the cost of the exact computation scales exponentially in .

References

- [1].Boltzmann L., Studien über das gleichgewicht der lebendigen kraft zwischen bewegten materiellen punkten, Wiener Berichte 58, 517 (1868). [Google Scholar]

- [2].Gibbs J. W., On the equilibrium of heterogeneous substances, Transactions of the Connecticut Academy of Arts and Sciences 3, 108–248 (October 1875 - May 1876). [Google Scholar]

- [3].Gibbs J. W., On the equilibrium of heterogeneous substances, Transactions of the Connecticut Academy of Arts and Sciences 3, 343–524 (May 1877 - July 1878). [Google Scholar]

- [4].Jaynes E. T., Information Theory and Statistical Mechanics, Physical Review 106, 620 (1957) [Google Scholar]

- [5].Jaynes E. T., Information Theory and Statistical Mechanics. II, Physical Review 108, 171 (1957). [Google Scholar]

- [6].Lafferty J. D. and Suhm B., Cluster expansions and iterative scaling for maximum entropy language models, in Maximum Entropy and Bayesian Methods, arXiv:cmplg/9509003, edited by Hanson K. M. and Silver R. N.(Springer; Netherlands, Dordrecht, 1996) pp. 195–202, cmp-lg/9509003 [Google Scholar]

- [7].Berger A. L., Della Pietra S. A., and Della Pietra V. J., A maximum entropy approach to natural language processing, Computational Linguistics 22, 39 (1996). [Google Scholar]

- [8].Molins J. and Vives E., Long range ising model for credit risk modeling in homogeneous portfolios (2004), condmat/0401378

- [9].Yeo G. and Burge C. B., Maximum entropy modeling of short sequence motifs with applications to RNA splicing signals, Journal of Computational Biology: A Journal of Computational Molecular Cell Biology 11, 377 (2004). [DOI] [PubMed] [Google Scholar]

- [10].Shimagaki K. and Weigt M., Selection of sequence motifs and generative hopfield-potts models for protein families, Phys. Rev. E 100, 032128 (2019), arXiv:1905.11848 [q-bio.BM] [DOI] [PubMed] [Google Scholar]

- [11].Shipley B., Vile D., and Garnier E., From plant traits to plant communities: A statistical mechanistic approach to biodiversity, Science 314, 812 (2006). [DOI] [PubMed] [Google Scholar]

- [12].Phillips S. J., Anderson R. P., and Schapire R. E., Maximum entropy modeling of species geographic distributions, Ecological Modelling 190, 231 (2006) [Google Scholar]

- [13].Williams R. J., Simple MaxEnt models for food web degree distributions (2009), 0901.0976 [q-bio].

- [14].Cavagna A., Giardina I., Ginelli F., Mora T., Piovani D., Tavarone R., and Walczak A. M., Dynamical maximum entropy approach to flocking, Physical Review E 89, 042707 (2014), 1310.3810 [cond-mat, physics:physics, q-bio] [DOI] [PubMed] [Google Scholar]

- [15].Lee E. D., Broedersz C. P., and Bialek W., Statistical mechanics of the US supreme court, Journal of Statistical Physics 160, 275 (2015), 1306.5004 [Google Scholar]

- [16].Ferrari U., Obuchi T., and Mora T., Random versus maximum entropy models of neural population activity, Physical Review E 95, 042321 (2017), 1612.02807 [cond-mat, q-bio]. [DOI] [PubMed] [Google Scholar]

- [17].Nghiem T.-A., Telenczuk B., Marre O., Destexhe A., and Ferrari U., Maximum-entropy models reveal the excitatory and inhibitory correlation structures in cortical neuronal activity, Phys. Rev. E 98, 012402 (2018), arXiv:1801.01853 [q-bio.NC]. [DOI] [PubMed] [Google Scholar]

- [18].Ansari M., Soriano-Paños D., Ghoshal G., and White A. D., Inferring spatial source of disease outbreaks using maximum entropy, Phys. Rev. E 106, 014306 (2022) arXiv:2110.03846 [physics.soc-ph]. [DOI] [PubMed] [Google Scholar]

- [19].Cohen S. D., Estimating the climate niche of sclerotinia sclerotiorum using maximum entropy modeling, Journal of Fungi (Basel, Switzerland) 9, 892 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Mora T., Walczak A. M., Bialek W., and Callan C. G., Maximum entropy models for antibody diversity, Proceedings of the National Academy of Sciences of the United States of America 107, 5405 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].Arora R., Kaplinsky J., Li A., and Arnaout R., Repertoire-based diagnostics using statistical biophysics, bioRxiv; 10.1101/519108 (2019), https://www.biorxiv.org/content/early/2019/01/13/519108. [DOI] [Google Scholar]

- [22].De Martino A. and De Martino D., An introduction to the maximum entropy approach and its application to inference problems in biology, Heliyon 4, e00596 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Agrawal D., Pote Y., and Meel K. S., Partition Function Estimation: A Quantitative Study (2021), arXiv:2105.11132 [cs.AI]. [Google Scholar]

- [24].Roth D., On the hardness of approximate reasoning, Artificial Intelligence 82, 273 (1996). [Google Scholar]

- [25].Bennett C. H., Efficient estimation of free energy differences from monte carlo data, Journal of Computational Physics 22, 245 (1976). [Google Scholar]

- [26].W. W. H. MENG X. L., Simulating ratios of normalizing constants via a simple identity: a theoretical exploration, Statistica Sinica 6, 831 (1996). [Google Scholar]

- [27].Gronau Q. F., Sarafoglou A., Matzke D., Ly A., Boehm U., Marsman M., Leslie D. S., Forster J. J., Wagenmakers E.-J., and Steingroever H., A Tutorial on Bridge Sampling (2017), arXiv:1703.05984 [stat.CO]. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Russ W. P., Lowery D. M., Mishra P., Yaffe M. B., and Ranganathan R., Natural-like function in artificial WW domains, Nature 437, 579 (2005). [DOI] [PubMed] [Google Scholar]

- [29].Neal R. M., Probabilistic Inference Using Markov Chain Monte Carlo Methods, Tech. Rep. (Dept. of Computer Science, University of Toronto,, 1993). [Google Scholar]

- [30].Zwanzig R. W., High-Temperature Equation of State by a Perturbation Method. I. Nonpolar Gases, The Journal of Chemical Physics 22, 1420 (1954). [Google Scholar]

- [31].Geyer C. J. and Thompson E. A., Constrained Monte Carlo Maximum Likelihood for Dependent Data, Journal of the Royal Statistical Society: Series B (Methodological) 54, 657 (1992). [Google Scholar]

- [32].Neal R. M., Estimating Ratios of Normalizing Constants Using Linked Importance Sampling (2005), arXiv:math/0511216 [math.ST]. [Google Scholar]

- [33].Britanova O. V., Putintseva E. V., Shugay M., Merzlyak E. M., Turchaninova M. A., Staroverov D. B., Bolotin D. A., Lukyanov S., Bogdanova E. A., Mamedov I. Z., Lebedev Y. B., and Chudakov D. M., Age-related decrease in TCR repertoire diversity measured with deep and normalized sequence profiling, Journal of Immunology (Baltimore, Md.: 1950) 192, 2689 (2014). [DOI] [PubMed] [Google Scholar]

- [34].Beausang J. F., Wheeler A. J., Chan N. H., Hanft V. R., Dirbas F. M., Jeffrey S. S., and Quake S. R., T cell receptor sequencing of early-stage breast cancer tumors identifies altered clonal structure of the t cell repertoire, Proceedings of the National Academy of Sciences 114, 10.1073/pnas.1713863114 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [35].Arora R. and Arnaout R., Repertoire-scale measures of antigen binding, Proceedings of the National Academy of Sciences of the United States of America 119, e2203505119 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Vollmers C., Sit R. V., Weinstein J. A., Dekker C. L., and Quake S. R., Genetic measurement of memory b-cell recall using antibody repertoire sequencing, Proceedings of the National Academy of Sciences of the United States of America 110, 13463 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [37].Bashford-Rogers R. J., Palser A. L., Huntly B. J., Rance R., Vassiliou G. S., Follows G. A., and Kellam P., Network properties derived from deep sequencing of human b-cell receptor repertoires delineate b-cell populations, Genome Research 23, 1874 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [38].Arnaout R. A., Prak E. T. L., Schwab N., Rubelt F., and the Adaptive Immune Receptor Repertoire Community, The future of blood testing is the immunome, Frontiers in Immunology 12, 626793 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [39].Kaplinsky J. and Arnaout R., Robust estimates of overall immune-repertoire diversity from high-throughput measurements on samples, Nature Communications 7, 11881 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]